Abstract

We show that the minimizers of regularized quadratic functions restricted to their natural Krylov spaces increase in Euclidean norm as the spaces expand.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given a real symmetric, possibly indefinite, matrix H and vector g, we are concerned with Krylov methods for approximating the global solution of the possibly nonconvex regularization problem

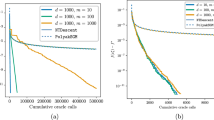

where \(\sigma > 0\), \(p > 2\) and \(\Vert \cdot \Vert \) is the Euclidean norm—note that Q is bounded below over \(\mathfrak {R}^n\), and all global minimizers have the same norm [7, §3]. Such methods have been advocated by a number of authors, e.g., [1,2,3, 8]. Here we are interested in how the norms of the estimates of the solution evolve as the Krylov process proceeds. The main utility is that these estimates provide useful predictions for the “multipliers” \(\sigma \Vert x\Vert ^{p-2}\) as the Krylov subspace expands [9]. Our result is an analogue of that obtained by Lukšan, Matonoha and Vlček [11] for the trust-region subproblem.

By way of motivation and explanation, the solution \(x_*\) to (1.1) necessarily satisfies the first-order criticality condition \(\nabla _x Q(x_*;\sigma ,p) = 0\), i.e.,

In addition, \(H + \mu _* I\) is positive semi-definite at any global minimizer, and if \(H + \mu _* I\) is positive definite, the minimizer is unique [3, Thm.3.1]. If \(\mu _*\) was known, the minimizer might be found simply by solving the linear system (1.2), and the skill is then in finding convergent estimates of \(\mu _*\) by iteration [5]. Briefly, this is achieved by seeking the rightmost root of the secular equation

where

while ensuring that \( H + \mu I\) is positive semi-definite. This is always possible so long as g intersects the subspace \(\mathcal{U}\) of eigenvectors of H corresponding to the leftmost eigenvalue \(\lambda _{\min }(H)\) of H, and in this case \(H + \mu _* I\) is positive definite. The rare possibility that the later does not happen is known colloquially as the “hard case” [12], and the solution to (1.1) in the hard case involves an addition component from \(\mathcal{U}\). We also make the connection between (1.1) and the regularized quadratic

namely that if \(\nu = \mu _*\) and the hard case does not occur then \(x_*\) minimizes \(Q_{\nu }(x)\).

This all presupposes that one can solve the linear system (1.3), and unfortunately in some applications matrix factorization is out of the question, indeed H may only be available indirectly via Hessian-vector products Hv for given v. An attractive alternative in such cases is to find an approximation to the solution of (1.1) by restricting the search domain to a subspace or sequence of subspaces. A particularly appealing set of nested subspaces is provided by the Krylov space defined by H and g that we will define formally in the next section. Crucially, the k-th Krylov subspace, \(\mathcal{K}_k(H,g)\), may be generated recursively through Hessian-vector products, and has an orthogonal basis \(V_k\), with the desirable property that if

then \(x_k = V_k \bar{x}_k\), where

the vector \(e_1 \in \mathfrak {R}^k\) is the first column of the identity matrix and \(T_k^{} = V_k^T H V_k^{} \in \mathfrak {R}^{k \times k}\) is tridiagonal. The latter implies that solving (1.5) is feasible via its optimality equations

even when the dimension is large, since factorizing shifted tridiagonal matrices and solving linear systems involving them may be achieved in a few multiples of k floating-point operations.

Of course, we need to judge when \(x_k\) is a meaningful approximation to \(x_*\) as the subspaces evolve, and furthermore to solve each successive subproblem (1.5) efficiently. The former is addressed in [2, 6] and requires estimates of \(\mu _k\), while the latter appeals to the ideas in [5] and relies on a good starting “guess” for \(\mu _k\). Thus generating a good starting guess provides motivation for our short paper.

In the next section we provide a set of lemmas leading to our main result, namely that so long as the evolving Krylov subspaces are of full dimensionality, the norms of the solution estimates \(\Vert s_k\Vert \) and the corresponding “multipliers” \(\mu _k\) increase monotonically. We summarize, extend and discuss implications and limitations of our results in the concluding Sect. 3.

2 The main result

We start with four vital lemmas that we use to prove our main result. The first shows a simple property of the conjugate gradient method. We use the generic notation \(H \succ 0\) (resp. \(H \succeq 0\)) to mean that the real, symmetric matrix H is positive definite (resp. positive semi-definite).

Lemma 1

Given a real symmetric matrix H and real vector g, let

\(k \ge 1\), be the k-th Krylov subspace generated by H and the vector g, and let the columns of \(V_k\) provide an orthonormal basis for \(\mathcal{K}_k(H,g)\). Letting \(\ell \ge k \ge 1\), suppose that

and define

Then

Proof

This follows from [4, Thm.7.5.1] as the requirement there, namely that \(p_k^T H p_k^{} > 0\) for specific vectors \(p_k \in \mathcal{K}_k(H,g)\), is implied by the more general assumption (2.1). \(\square \)

Note that this is a generalization of [13, Thm.2.1] that relaxes the requirement that H be everywhere positive definite to be so merely over the evolving Krylov subspaces of interest.

Our second lemma compares Krylov subspaces of the matrices H and \(H +\mu I\) for some \(\mu \in \mathfrak {R}\).

Lemma 2

[11, Lem.2.3]. Let H, g and \(\mathcal{K}_k\) be as in Lemma 1, and \(\mu \in \mathfrak {R}\). Then

Next, we state a crucial relation between the parameter \(\nu \) that defines \(Q_{\nu }(x)\) in (1.4) and the norm of the minimizer of \(Q_{\nu }(x)\) within the k-th Krylov space.

Lemma 3

[11, Lem.2.5]. Suppose that the columns of \(V_k\) provide an orthonormal basis for \(\mathcal{K}_k(H,g)\) for given real symmetric H and real g. Let \(V_k^T H V_k^{} + \nu _i^{} I\), \(\nu _i \in \mathfrak {R}\), \(i \in \{1, 2\}\), be symmetric and positive definite. Let

Then

We define the grade of H and g, \(\text{ grade }(H,g) \le n\), to be the maximum dimension of the evolving Krylov spaces \(\mathcal{K}_k(H,g)\), \(k = 1, \ldots , n\) [10]. Our final lemma indicates that the evolving minimizers are unique.

Lemma 4

Let H, g and \(V_k\) be as in Lemma 3 and let \(\mu _k\) by the rightmost root of the secular equation

Then \(V_k^T H V_k^{} + \mu _k^{} I \succ 0\) for all \(1 \le k \le m :=\text{ grade }(H,g)\).

Proof

Using the Lanczos orthonormal basis, we have that \(V_k^T H V_k^{} = T_k^{}\) for an irreducible tridiagonal matrix \(T_k\) for \(k = 1, \ldots , m\). It then follows [4, Thm.7.5.12] that \(\mathcal{K}_k(H,g)\) has a nontrivial intersection with the space of eigenvectors of \(T_k\) corresponding to the eigenvalue \(\lambda _{\min }(T_k)\) (i.e., the “hard case” cannot occur), and thus that the only permitted root \(\mu _k\) of the secular Eq. (2.3) for the problem satisfies \(\mu _k > - \lambda _{\min }(T_k)\), where \(\lambda _{\min }\) denotes the leftmost eigenvalue of its symmetric matrix argument [5, Sec.2.2]. \(\square \)

We are now in a position to state and prove our main theorem.

Theorem 1

Given a real symmetric matrix H, vector g and scalars \(\sigma > 0\) and \(p > 2\), let \(m = \text{ grade }(H,g)\),

and

for \(j \ge 1\). Then \(\mu _k \le \mu _{\ell }\) and \(\Vert x_k\Vert \le \Vert x_{\ell }\Vert \) for \(1 \le k \le \ell \le m\).

Proof

Let \(V_j\) be as in the statement of Lemma 3. The vector \(x_j = V_j y_j\) is a minimizer of the j-th regularization subproblem if and only if

and the minimizer is unique since \(V_j^T (H + \mu _j I) V_j \succ 0\) from Lemma 4 [5, Thm.2]. Consider two integers k and \(\ell \) for which \(1 \le k \le \ell \le m\).

Since we have \(V_k^T (H + \mu _k I)V_k \succ 0\) and \(V_{\ell }^T (H + \mu _{\ell } I)V_{\ell } \succ 0\), and as \(\mathcal{K}_k (H+\mu _kI,g) = \mathcal{K}_k(H,g)\) by Lemma 2, it follows from (2.5) that \(x_k\) is also the (unique) solution of the subspace minimization problem

Assume that \(\mu _k > \mu _{\ell }\), which implies that \(V_{\ell }^T (H + \mu _k I)V_{\ell } \succ 0\). Let

Then it follows from Lemma 1 that

But since \(\mu _{\ell } < \mu _k\), Lemma 3 gives that

Hence using the definition (2.4) and combining the inequalities (2.6) and (2.7)

which is a contradiction. Thus \(\mu _k \le \mu _{\ell }\) has to hold. It then follows from the definition (2.4) that \(\Vert x_k\Vert \le \Vert x_{\ell }\Vert \). \(\square \)

The monotonic behaviour of the multipliers \(\mu _k\) was predicted in [9, Lem.2.6] when \(p=3\), but the proof suggested there relied on [11, Thm.2.6], which appears to have a minor flaw—the proof depends on [13, Thm.2.1], but applies this at one point to an indefinite \(H+\mu I\). Lemma 1 avoids this issue, and the same result fixes the proof of [11, Thm.2.6] that applies in the trust-region case.

3 Comments and conclusions

We have shown that the norms of the approximations generated by well-known Krylov methods for solving the regularization problem (1.1) increase monotonically as the dimension of the Krylov spaces expands. This implies that the corresponding “multipliers” \(\mu _k\) also increase, and is useful as estimates of these multipliers are crucial when solving the Krylov subproblem; in particular, as the multiplier for the k-th problem is a lower bound for the \((k+1)\)-st, Newton-like iterations for the required root of the secular equation

will converge both globally and rapidly to \(\mu _{k+1}\) when started from \(\mu _{k}\) if additionally \(\mu _{k} > \lambda _{\min }(T_{k+1})\) [5, §3]. In particular, Newton’s method, the secant method or methods based upon certain higher (odd)-order Taylor approximations or nonlinear rescalings of the term \(\Vert \bar{x}( \mu )\Vert ^{p-2}\) all converge monotonically from such a starting \(\mu \). Knowledge of the monotonic nature of these quantities is also important when deriving convergence bounds [6] for such methods.

We warn readers that in exceptional circumstances, namely when g is orthogonal to the eigenspace corresponding to the leftmost eigenvalue of H and \(\sigma \) is not large enough, the global minimizer of (1.1) will not lie in \(\mathcal{K}_m(H,g)\), and \(\mu _m\) will underestimate the optimal multiplier. This (zero-probability) possibility is often referred to as the “hard case” ([3], §6,1, [12]), and, despite their popularity, might be viewed as an unavoidable defect of Krylov methods.

The main result here may trivially be extended for Krylov methods to

for given symmetric \(M \succ 0\), where \(\Vert x\Vert _M^2 :=x^T M x\), so long as we instead consider the Krylov spaces \(\mathcal{K}(M^{-1} H, M^{-1} g)\). It is well known that this may be achieved using the M-preconditioned Lanczos method [3, Sec.6.3]. In particular, if

it follows (using the transformation \(x \leftarrow M^{{\scriptstyle \frac{1}{2}}} x\)) that

for \(1 \le k \le \ell \le m\) just as in Theorem 1.

References

Bianconcini, T., Liuzzi, G., Morini, B., Sciandrone, M.: On the use of iterative methods in cubic regularization for unconstrained optimization. Comput. Optim. Appl. 60(1), 35–57 (2015)

Carmon, Y., Duchi, J.C.: Analysis of Krylov subspace solutions of regularized nonconvex quadratic problems. arXiv:1806.09222v1 (2018)

Cartis, C., Gould, N.I.M., Toint, PhL: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. Ser. A 127(2), 245–295 (2011)

Conn, A.R., Gould, N.I.M., Toint, PhL: Trust-Region Methods. SIAM, Philadelphia (2000)

Gould, N.I.M., Robinson, D.P., Thorne, H.S.: On solving trust-region and other regularised subproblems in optimization. Math. Program. Comput. 2(1), 21–57 (2010)

Gould, N.I.M., Simoncini, V.: Error estimates for iterative algorithms for minimizing regularized quadratic subproblems. Optim. Methods Softw. https://doi.org/10.1080/10556788.2019.1670177 (2019)

Griewank, A.: The modification of Newton’s method for unconstrained optimization by bounding cubic terms. Technical Report NA/12, Department of Applied Mathematics and Theoretical Physics, University of Cambridge, England (1981)

Kohler, J.M., Lucchi, A.: Sub-sampled cubic regularization for non-convex optimization. In: Precup, D., Teh, Y.W., (eds.) Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pp. 1895–1904 (2017)

Lange, M.: Subproblem solutions in cubic regularisation methods. M.Sc. thesis, University of Oxford, England (2017)

Liesen, J., Strakoš, Z.: Krylov Subspace Methods. Oxford University Press, Oxford (2013)

Lukšan, L., Matonoha, C., Vlček, J.: On Lagrange multipliers of trust-region subproblems. BIT 48(4), 763–768 (2008)

Moré, J.J., Sorensen, D.C.: Computing a trust region step. SIAM J. Sci. Stat. Comput. 4(3), 553–572 (1983)

Steihaug, T.: The conjugate gradient method and trust regions in large scale optimization. SIAM J. Numer. Anal. 20(3), 626–637 (1983)

Acknowledgements

The authors would like to thank two referees and the associate editor for their very helpful comments on the original draft of this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the EPSRC Grant EP/M025179/1 (NIMG), and both by a German research foundation (DFG) fellowship through the Graduate School of Quantitative Biosciences Munich (QBM) and by the Joachim Herz Stiftung (ML)

Rights and permissions

About this article

Cite this article

Cartis, C., Gould, N.I.M. & Lange, M. On monotonic estimates of the norm of the minimizers of regularized quadratic functions in Krylov spaces. Bit Numer Math 60, 583–589 (2020). https://doi.org/10.1007/s10543-019-00791-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-019-00791-2