Abstract

This paper explores the application of Probabilistic Neural Network (PNN), Support Vector Machine (SVM) and K-means clustering as tools for automated classification of massive stellar spectra. The data set consists of a set of stellar spectra associated with the Sloan Digital Sky Survey (SDSS) SEGUE-1 and SEGUE-2, which consists of 400,000 data from 3850 to 8900 Å with 3646 data points each. We investigate the application of principal components analysis (PCA) to reducing the dimensionality of data set to 280, 400 and 700 components. We show that PNN can give fairly accurate spectral type classifications \(\sigma _{\mathit{RMS}}=1.752\), \(\sigma_{\mathit{RMS}}=1.538\) and \(\sigma_{\mathit{RMS}}=1.391\) and K-means can classify these spectra with an accuracy of \(\sigma_{\mathit{RMS}}=1.812\), \(\sigma_{\mathit{RMS}}=1.731\) and \(\sigma_{\mathit{RMS}}=1.654\) and SVM with the accuracy of \(\sigma_{\mathit{RMS}}=1.795\), \(\sigma_{\mathit{RMS}}=1.674\) and \(\sigma_{\mathit{RMS}}=1.529\) across the 280, 400 and 700 components, respectively. By using K-means the classification of the spectra renders 38 major classes. Furthermore, by comparing the results we noticed that PNN is more successful than K-means and SVM in automated classification.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over the past decades, stellar population has increased because of large spectroscopic surveys such as RAdial Velocity Experiment (RAVE) (Steinmetz et al. 2006) or the Sloan Digital Sky Survey (SDSS) (Stoughton et al. 2002). Visual classification of flooded data stream by human experts is often subjective and needs extensive effort which is very time consuming. Automated classification using different statistical modeling can easily be implemented for very large numbers of these spectra.

Classification of stellar spectra requires a model which is based on stars information and detail of analysis. The MK classification of stellar spectra (Morgan et al. 1943; Morgan and Keenan 1973) has long been an important tool in astrophysics which is still in use today.

A reliable stellar spectral classification pipeline is necessary to automatically exploit these data sets. Developing an automated data analysis tool is of great challenge with the coming future instruments and the astronomers show fair attention towards these techniques which gives a very fast and reliable way of data analysis. There are many kinds of techniques for the classification of astronomical data such as Support Vector Machine (SVM), Probabilistic Neural Networks (PNN), Self-Organizing Map (SOM), Expert System and K-means. We choose PNN which is a kind of multilayer neural network model and K-means clustering for their fast and efficient implementation and also SVM algorithm.

The efficiency of artificial neural networks in spectral classification is addressed in previous works such as: Gulati et al. (1994a,b, 1995), Von Hipple et al. (1994), Weaver and Torres-Dodgen (1995), Bailer-Jones et al. (1998), Bazarghan and Gupta (2008), and Manteiga et al. (2009). Also the K-means classification of spectra is used for different astrophysical contexts in these papers: Balazs et al. (1996), Simpson et al. (2012), Galluccio et al. (2008), Sánchez Almeida et al. (2009, 2010), and Morales-Luis et al. (2011). The performance of SVM can be found in Zhang et al. (2004), Bailer-Jones et al. (2008) and Kim et al. (2011).

We also show how the use of principal component analysis (PCA) can greatly compress the spectra and reduce the dimensionality of the data. Moreover, using PCA leads to a faster processing of ANN algorithm, as the dimension of data (spectrum) is reduced, and it reduces the complexity of the neural network and hence improves the classification accuracy.

This paper is organized as follows: Sects. 2 and 3 describe the data sets used in this study, their preprocessing and reduction procedure, Sect. 4 presents different classification methods implemented in this work, while Sect. 5 discusses our results.

2 Data sets and pre-processing

2.1 Data selection

The spectra come from SEGUE-2 (Yanny et al. 2009) which is one of the four surveys (BOSS, SEGUE-2, APOGEE, and MARVELS) and SEGUE-1 of the SDSS III. SDSS has been in operation since 2000, using the 2.5 m telescope at Apache Point Observatory (APO) in the Sacramento Mountains in the southern New Mexico (Gunn et al. 2006). The initial surveys (York et al. 2000) carried our five broad bands filters (u, g, r, i, z) (Fukugita et al. 1996).

In this paper, data sets are from the SDSS data release 10 (DR10) and data release 12 (DR12). The data sets of stellar spectra from DR10 and DR12 associated with SEGUE-1 and SEGUE-2 with 100000 for training set and 300000 for test set are used. The members of training set are selected such that, it covers all the spectral types available in the downloaded data set. It is because, while training, the network must learn from the examples of all the spectral types. Hence, while testing the network can detect and recognize the given unknown spectra. We use catalogue Archive Server (CAS) to extract information of SEGUE-2 targets. The data set were just labeled as stars of classes of objects.

2.2 Spectra pre-processing

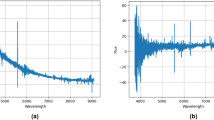

We processed the spectra to have the same starting and ending wavelength scale at 3850 to 8900 Å. The spectra have units of flux per unit wavelength which contain 3646 data points after having the same wavelength scale. The spectra were then normalized to have equal intensities in order to meet the requirements of our algorithms. The details of data sets are summarized in Fig. 1. We have \(100000\times 3646\) data array consisting of 100000 rows and 3646 columns for train set and \(300000\times 3646\) data array as a test set.

In MK classification spectral types are shown by alpha numeric model. To evaluate the performance of our algorithms we dedicated a continuous scale of numbers 1–38 for them (Table 1).

2.3 Principal Component Analysis

Principal Component Analysis (PCA) is a mathematical tool to emphasize variation and bring out strong patterns to reduce the dimensionality of data set. In particular, it allows us to identify the principal component direction in which the data varies. It often leads to make data set easy to classify. PCA has been used among others by Von Hipple et al. (1994) and Bailer-Jones et al. (1998) in stellar spectral classification, Francis et al. (1992) for quasar spectral classification, Connolly et al. (1995) and Lahav et al. (1996) for galaxy spectral classification.

The principal components are found by calculating the eigenvectors and eigenvalues of the data covariance matrix. This process is finding the axis system in which the covariance matrix is diagonal. The largest eigenvalues is the direction of greatest variation of eigenvectors, the one with the second largest eigenvalues is the orthogonal direction with the next highest variation and so on. Thus the most considerable principal component represent those features which vary the most between spectra ignoring those components that show the least variance in the data.

Wanting to get the spectra back is obviously of great concern in using PCA transform for data compression but it is considerable that if we took all the eigenvectors in our transformation, we will get exactly the original spectra back. We reduced the number of eigenvectors in the final transformation, then the retrieved spectra lost some redundant data and noise. Detail of these analysis is described by Bailer-Jones et al. (1998) and we refer to that work. Reconstructed spectra contain the most significant principal components which are strongly correlated in many of the spectra. The quality of spectral reconstruction by increasing the number of eigenvectors is shown in Fig. 2. We see that the quality of reconstruction grow significantly over the first 280 eigenvectors that 280 eigenvectors is sufficient to reconstruct 94.6 % of the variance in the data and it rise to 97.2 % in 700 principal component. We use 280, 400 and 700 eigenvector to classify our data set to compare the result of our reduction. The advantage of this large dimensionality reduction includes providing a simpler representation of the spectra and fast classification resulted by the reduced complexity of the network and hence confusion of the system.

3 Method of classification

3.1 Probabilistic neural networks

The probabilistic neural network (PNN) described by Specht (1990), is a special type of neural network based on Bayesian decision theory and the estimation of probability density function (PDF). PNN has a close relation with Parzen (1962) window PDF estimator which is a nonparametric procedure. Unlike other neural networks, PNN is easy to implement and have fast training process. Because of these advantages, it has become an effective tool for solving classification problems. The PNN algorithm have been used extensively for the astronomical data Bazarghan and Gupta (2008), Bazarghan et al. (2008).

The PNN architecture is composed of many interconnected neurons organized in four layer, input layer, pattern layer, summation layer and output layer as illustrated in Fig. 3.

The input layer does not perform any computation and passes the input vector \(X=(x_{1},x_{2},{\ldots },x_{n} ){\in }R^{n}\) to pattern layer. All connections in the network have a weight of 1, which means that the input vector is passed directly to each pattern node. In PNN, there is one pattern node for each training sample. Each pattern node has a center point, which is the input vector of sample. A pattern node also has a spread factor, which defines the size of its respective field. There are a diversity of ways to set this parameter. Spread factor is equal to the fraction f of the distance to the nearest neighbor of each sample. The value of f begins at 0.5. The neurons of the pattern layer compute its output using a Gaussian kernel,

where \(n\) denotes dimension of the pattern vector \(X\), \(\sigma \) is the smoothing parameter, and \(X_{K,i}\) is the center of the Kernel. The summation layer neurons compute its output as the PDF for all neurons that belong to the same class.

Then at the output layer pattern \(x\) is classified in accordance with the Bayes decision rule based on the output of all the summation layer neurons and the highest probability at the output of Summation layer will appear at the output layer c.

where \(K\) denotes the total number of classes in the training samples.

3.2 K-means

K-means was first used by MacQueen (1967). It is one of the simplest unsupervised learning algorithm that could solve the classification problems. The procedure follows a way to classify a given data set through a k number of clusters. Classification process begins by finding k number of clusters and their centers. Then each set of spectra is assigned to the nearest center that is closest in a least square sense. When all the spectra are loaded to the network, the cluster center gets refreshed (recalculated) as the average of the spectra in the cluster. And each point is replaced by the respective cluster center. These steps are repeated until no point moves cluster. Finally the algorithm provides a number of clusters with all the spectra assigned to one of them. Efficiency of K-means is closely related to the choice of k number of clusters. We consider 38 clusters as the number of spectral type in our data set.

3.3 Support Vector Machine

Support Vector machine (SVM) is a supervised machine learning algorithm which was introduced by Vapnik (1995) and is based on the foundation of statistical learning theory. SVM is widely used for classification, data analysis and pattern recognition (Zhang et al. 2004, Bailer-Jones et al. 2008, Kim et al. 2011). The main purpose of the SVM is to create a hyper plane or set of hyper planes in a high- or infinite-dimensional decision boundary between data sets to indicate which class it belongs to. The points on the boundaries are called support vectors. The problem of finding the optimal hyper plane is an optimization problem and can be solved by optimization techniques using Lagrange multipliers to get into a form that can be solved analytically. Whereas most of the other classifiers use all the data set to determine the boundary, SVM take the nearest points to the boundary. The challenge is to train the machine to provide the SVM with examples of the different classes of data set and dedicate right class label. Training a SVM model belongs to a quadratic programming problem involving inequality constraints. The best result corresponds to the hyper plane that has the largest distance to the nearest training data point of any class (the maximum margin). Total classification error contain those points which lie on the wrong side of the hyper plane.

4 Results and discussion

In the following discussion we use PNN, SVM and K-means three times, for 280, 400 and 700 principal components. Figure 4 shows PNN classification results. In Fig. 4a the classification scatter plot is shown, where 280 principal components of the spectra are used. In the subsequent panels result, Figs. 4b and 4c, classification result of the 400 and 700 principal components are shown. The diagonal line plotted to better representation of the quality of PNN determination. Because of uncertainty in the classification, even an excellent classifier could not give results exactly on this line.

In Fig. 4a it is clear that there is large scatter with the correlation coefficient of \(r=0.9314\), thus indicating that 280 principal components are not at all sufficient for the satisfactory classification. Although configuration of 400 components gives a good result in this analysis with \(r=0.9328\) but, the scattering was noticed to be better for 700 components with \(r=0.9356\). There is small scatter in Fig. 4c which convinced us that 700 principal components were enough to show the great quality of classification result. The right hand panels are histograms of the classification residual \((X^{p}-Y ^{p})\), where \(X^{p}\) is the classification result and the \(Y^{p}\) catalogue class of spectrum. As we can see from the histograms, a total of 198121, 213540 and 237156 spectra have been classified correctly out of the total sample of 300000 spectra from 280, 400 and 700 principal components which indicating a success rate of approximately 66 %, 72 % and 80 %.

Considering on our best result (700 pc), we have the most misclassification for F9 stars. Out of a total 40637 spectra for this class, 31844 spectra were classified correctly. From the 8793 that were misclassified, most of them were classified as F5 and G0. F9 stars have been put in these classes by the PNN probably because of the resemblance of these spectra to each other and be the largest sample of our data set. The second numerous misclassification is for K3 which of a total of 22123 spectra, 15325 were correctly classified, while 6798 spectra were misclassified. The spectra were incorrectly classified into K1 and G2. M2 stars has 8844 spectra in all. 5376 spectra have been correctly classified, 3468 spectra have been misclassified into the other subclasses of M stars. PNN classification accuracy of WD has the best compliance with the class. Out of 2681 spectra of WD stars, 2653 spectra were classified correctly, while 28 spectrum was misclassified into other classes.

Figure 5 gives sample plots for correctly classified spectra from various spectral and sub-spectral types. It shows \(4 \times 5\) windows with the pair spectra plotted in each window (result of classification Vs the catalogue class) to show the quality of classification and fitness. The catalogue spectrum is shown in dotted (green) line and the classification result in dashed (blue) line.

The K-means algorithm is used for 300000 spectra with 280, 400 and 700 principal components to classify them into 38 clusters. The spectra in their classes are alike. The number of stars in the classes are shown as histograms in Fig. 6, that Figs. 6a, 6b and 6c belong to 280, 400 and 700 principal components respectively. These figures are arranged in the reducing order, the class with the highest population (highest peak) plotted first and the class with the lowest peak comes last. As a result of classification using K-means for the 280, 400, and 700 principal components, the highest peak belongs to the class of F9 stars. But the results show some variation for other classes using different components.

The allocation of the stars to each class depends on how far the star is from the cluster center. The error of this kind of classification could be measured by allocating each member of cluster to the right class and not similar to any other cluster. If most of stars in each cluster are the same as each other, the efficiency of classification increases. We obtained the best classification using 700 principal components, same as the classification with PNN.

Finally, we classified our data set with 280, 400 and 700 principal component by SVM algorithm. We use LIBSVM software which is provided by Chang and Lin (2011) to implement the SVM algorithm. There are four basic kernels in SVM: linear, polynomial, radial basis function (RBF), and sigmoid. When the number of features are very large, linear kernel is good enough and we just need to find an optimal C parameter, which is 0.7 in this project. Another important part that improves the result is, scaling before applying SVM. We normalize and use PCA on our data set which is discussed in Sect. 2.2. After analyzing the SVM output we see that, some B type stars are misclassified as A type stars. Moreover, although the SVM classification works quite well for stars from O to A type, but F to K type stars have the highest completeness larger than 90 %. It means that more than 90 % F to K type stars are correctly classified by the SVM algorithm. The completeness of O to B and M type stars are about 82 % and 73 %, respectively, which are still acceptable. However, the completeness for other types of stars are only about 64 %. These error are probably because the spectral features of them are very similar and they are very difficult to be disentangled in SVM. Like PNN and K-means, the least error in SVM also belongs to 700 principal components.

Classification of spectra with 700 principal components by these algorithms needs more time than 280 and 400 principal components, but has better results. The most time consuming algorithm is SVM, then K-means and finally PNN has the least processing time. The personal computer of core i7 with 12 cores CPU, 32 GB RAM and 256 GB SSD hard drive is used in this project. Table 2 is a summary of the spectral classification results. We use RMS error to evaluate the performance of our algorithms. The most significant result is for the smallest error which is obtained using 700 principal components, as seen in Table 2.

5 Conclusion

We have classified a set of 400000 stellar spectra with PNN, SVM and K-means algorithm. The PCA showed that the efficient dimension of the spectra is about 700 components. We have shown that we can achieve classification errors of 1.391, 1.529 and 1.715 for our best principal component (700 pc) using PNN, SVM and K-means respectively. These algorithms have been able to correctly classify approximately 80 % of the data set. As is summarized in Table 2, PNN and SVM have a better performance, i.e. lower RMS error, than the K-means algorithm for all feature sizes. PNN is marginally better than SVM for a relatively small feature size of 280 but as the feature size grows to 700, PNN shows a better performance than SVM.

References

Bailer-Jones, C.A.L., Irwin, M., von Hippe, T.: Mon. Not. R. Astron. Soc. 298, 361–377 (1998)

Bailer-Jones, C.A.L., et al.: Mon. Not. R. Astron. Soc. 391, 1838 (2008)

Balazs, L.G., Garibjanyan, A.T., Mirzoyan, L.V., et al.: Astron. Astrophys. 311, 145 (1996)

Bazarghan, M., Gupta, R.: Astrophys. Space Sci. 315, 201–210 (2008)

Bazarghan, M., Safari, H., Innes, D.E., Karami, E., Solanki, S.K.: Astron. Astrophys. 492, L13–L16 (2008)

Chang, C.-C., Lin, C.-J.: ACM Trans. Intell. Syst. Technol. 2, 27 (2011)

Connolly, A.J., Szalay, A.S., Bershady, M.A., Kinney, A.L., Calzetti, D.: Astron. J. 110, 1071 (1995)

Francis, P.J., Hewett, P.C., Foltz, C.B., Chaffee, F.H.: Astrophys. J. 398, 476 (1992)

Fukugita, M., Ichikawa, T., Gunn, J.E., et al.: Astron. J. 111, 1748 (1996)

Galluccio, L., Michel, O., Bendjoya, P., Slezak, E.: In: Bailer-Jones, C.A.L. (ed.) Classification and Discovery in Large Astronomical Surveys. AIP Conf. Ser., vol. 1082, p. 165. University of Chicago Press, Chicago (2008)

Gulati, R.K., Gupta, R., Khobragade, S.: Astrophys. J. 426, 340 (1994a)

Gulati, R.K., Gupta, R., Khobragade, S.: Astrophys. J. 38, 293 (1994b)

Gulati, R.K., Gupta, R., Khobragade, S.: In: Shaw, R.A., Payne, H.E., Hayes, J.J.E. (eds.) Astronomica Data Analysis Software and Systems IV. ASP Conf. Ser., vol. 77, p. 253 (1995)

Gunn, J.E., Siegmund, W.A., Mannery, E.J., et al.: Astron. J. 131, 2332 (2006)

Kim, D., et al.: Astrophys. J. 735, 68 (2011)

Lahav, O., Naim, A., Sodre, L. Jr., Storrie-Lombardi, M.C.: Mon. Not. R. Astron. Soc. 283, 207 (1996)

MacQueen, J.: In: Le Cam, L.M., Neyman, J. (eds.) Fifth Berkeley Symposium on Mathematical Statistics and Probability, p. 281. University of Chicago Press, Berkeley (1967)

Manteiga, M., Carricajo, I., et al.: Astron. J. 137, 3245–3253 (2009)

Morales-Luis, A.B., Sánchez Almeida, J., Aguerri, J.A.L., Muñoz-Tu nón, C.: Astrophys. J. 743, 77 (2011)

Morgan, W.W., Keenan, P.C.: Annu. Rev. Astron. Astrophys. 11, 29 (1973)

Morgan, W.W., Keenan, P.C., Kellman, E.: An Atlas of Stellar Spectra, with an Outline of Spectral Classification. University of Chicago Press, Chicago (1943)

Parzen, E.: Ann. Math. Stat. 3, 1065 (1962)

Sánchez Almeida, J., Aguerri, J.A.L., Muñoz-Tuñón, C., de Vicente, A.: Astrophys. J. 698, 1497 (2009)

Sánchez Almeida, J., Aguerri, J.A.L., Muñoz-Tuñón, C., de Vicente, A.: Astrophys. J. 714, 487 (2010)

Simpson, J.D., Cottrell, P.L., Worley, C.C.: Mon. Not. R. Astron. Soc. 427, 1153 (2012)

Specht, D.F.: Neural Netw. 1(3), 109 (1990)

Steinmetz, M., Zwitter, T., Siebert, A., et al.: Astron. J. 132, 1645 (2006)

Stoughton, C., Lupton, R.H., Bernardi, M., et al.: Astron. J. 123, 485 (2002)

Vapnik, V.N.: The Nature of Statistical Learning Theory. Springer, New York (1995)

Von Hipple, T., Storrie-Lombardi, L.J., Storrie-Lombardi, M.C., Irwin, M.J.: Mon. Not. R. Astron. Soc. 269, 97 (1994)

Weaver, W.B., Torres-Dodgen, A.V.: Astrophys. J. 446, 300 (1995)

Yanny, B., Rockosi, C., Newberg, H.J., et al.: Astron. J. 137, 4377–4399 (2009)

York, D.G., Adelman, J., Anderson, J.E., et al.: Astron. J. 120, 1579 (2000)

Zhang, Y.X., et al.: Astron. Astrophys. 422, 1113 (2004)

Acknowledgement

We would like to thank Coryn Bailer Jones for his insightful suggestions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kheirdastan, S., Bazarghan, M. SDSS-DR12 bulk stellar spectral classification: Artificial neural networks approach. Astrophys Space Sci 361, 304 (2016). https://doi.org/10.1007/s10509-016-2880-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10509-016-2880-3