Abstract

An important challenge when creating automatically processable laws concerns open-textured terms. The ability to measure open-texture can assist in determining the feasibility of encoding regulation and in identifying where additional legal information is required to properly assess a legal issue or dispute. In this article, we propose a novel conceptualisation of open-texture with the aim of determining the extent of open-textured terms in legal documents. We conceptualise open-texture as a lever whose state is impacted by three types of forces: internal forces (the words within the text themselves), external forces (the resources brought to challenge the definition of words), and lateral forces (the merit of such challenges). We tested part of this conceptualisation with 26 participants by investigating agreement between paired annotators. Five key findings emerged. First, agreement on which words in a legal text are open-textured is possible and statistically significant. Second, this agreement is high, with an average inter-rater reliability of 0.7 (Cohen’s kappa). Third, when there is agreement on the words, agreement on the Open-Texture Value is also high. Fourth, there is a dependence between the Open-Texture Value and the reasons invoked for open-texture. Fifth, when it comes only to flagging clauses containing open-texture, involving only four annotators can yield results similar to involving twenty. We conclude the article by discussing the limitations of our experiment and the questions that remain outstanding for real-life cases.

1 Introduction

Rules state a determinate legal result that follows from one or more facts (Sullivan 1992). In contrast, an open-textured norm requires legal decision makers to apply one or more underlying principles to a set of facts in order to interpret the norm and draw a legal conclusion, depending on the cultural and social context (Buchholtz 2019; Sullivan 1992). Following these definitions, a 100 km/h speed limit is a rule, whereas a law stating that drivers must drive safely is an open-textured norm. The core characteristic of rules is that outcomes are determined by the presence or absence of triggering facts that can be specified ex ante. With open-textured norms, outcomes require situation-specific factual inquiries and/or the balancing of competing factors and values. Open-textured norms have an inherent semantic indeterminacy, meaning that different individuals might understand the same term differently (Vecht 2020). Consequently, encoding open-textured norms (or balancing norms) is inherently problematic (Cobbe 2020; Diver 2020; Hildebrandt 2020). Legal concepts such as “good faith” and “reasonableness” illustrate the trade-off that open-texture entails between legal certainty and other fundamental values such as justice and equality.

Knowing which and how many open-textured terms a law contains is relevant for determining how suitable the law is for being turned into an automatically processable regulation (APR), that is, “pieces of regulation that are possibly interconnected semantically and are expressed in a form that makes them accessible to be processed automatically, typically by a computer system that executes a specified algorithm, i.e., a finite series of unambiguous and uncontradictory computer-implementable instructions to solve a specific set of computable problems” (Guitton et al. 2022). Clear, unambiguous rules are preferred for creating APR: whenever open-textured terms arise, human input is required or relevant case law must be consulted to determine the outcome of a provision, and this interpretation brings back the transaction costs that APR aims to reduce. However, from a legislative perspective, open-textured terms can be useful and cost-effective in some instances (Huber and Shipan 2012), as permitting open-texture lowers the cost of creating legal texts (e.g. as a tool to create political consensus by pushing decisions of interpretation onto the judiciary). In certain instances, open-texture also allows legislators to deliberately encompass a wide range of scenarios that could not be specified individually in the law, either because the list would be too long and varied or because such scenarios are not yet fully imaginable at the time of legislating (Endicott 2011). Consequently, knowing how much of a law is open-textured can help determine whether APR is feasible and worthwhile for that law.

We contribute to the body of knowledge by providing a framework to determine how much of the law is likely to be open-textured. To this end, we start by reviewing prior research and the nature of open-textured terms (Sect. 2). We discuss an approach to classify terms as open-textured or non-open-textured and conceptualise open-texture in law as a lever whose state is impacted by three types of forces: internal forces (the words within the text themselves), external forces (the resources brought to challenge the definition of words), and lateral forces (the merit of such challenges). We apply our methodology to concrete pieces of legislation and test it with 26 participants (Sect. 3). In Sect. 4 we present our results, and in Sect. 5 we discuss the implications of our findings.

2 Open-textured terms

2.1 Prior research

Considering open-textured norms is an important part of answering the question of whether the law can or should be encoded. Research on encoding open-textured terms is, however, limited. It has been noted that when open-textured terms leave room for interpretation, this can be seen as a desirable feature of the law itself (Bonatti et al. 2020). Other research has tackled open-texture by proposing a “value-ontology” with two orthogonal axes (Benzmüller et al. 2020): security is opposed to freedom on one axis, and equality to utility on the other. Such an approach, while at times correct, is also at times too simplistic, as the relation between security and freedom can be complex, with many more nuances than one simply coming at the cost of the other. For instance, in the context of the COVID-19 threat, privacy-preserving technologies were suggested and deployed (Troncoso et al. 2020), even though an increase in technological surveillance had questionable effectiveness (Brakel et al. 2022). In yet another context, namely tackling terrorism, the impact of surveillance by intelligence services can be limited via proper control and oversight structures (Solove 2011).

The limitation of the approach by Benzmüller et al. highlights the pressing need for a much better understanding of how to approach open-textured terms, if only to be better able to leave them out at a later stage. Research does exist that has measured the complexity of legislation by means of computational analysis (Bourcier and Mazzega 2007; Katz and Bommarito 2014; Palmirani and Cervone 2013; Waltl and Matthes 2014), calculating aspects such as structure (e.g. paragraphs, sections, articles), language (e.g. number of tokens, word length), interdependence (e.g. citations), indeterminacy and vocabulary variety, and readability. Indeterminacy is the aspect most closely related to open-texture; Waltl and Matthes (2014) identified 62 legal terms that they classified under indeterminacy, but specified neither the methodology they used to create the list nor the list itself.

2.2 Defining open-texture

What qualifies as an open-textured term? Arguably, almost every term in law can be open-textured, as illustrated by Hart’s famous statement that “law is open-textured” (Hart 1994; Schauer 2013) and by multiple cases. One such case concerns the determination, under the General Data Protection Regulation, of who falls under the term Controller or Joint Controller: the ECJ broadened joint controllership to include entities that determine parameters of the processing without themselves processing personal data, e.g. in the case of the Wirtschaftsakademie Schleswig–Holstein GmbH (Unabhängiges Landeszentrum für Datenschutz Schleswig–Holstein v Wirtschaftsakademie Schleswig–Holstein GmbH 2018). Another such case is a decision by the Swiss Federal Supreme Court holding that unbuckling the seat belt and buckling it back up while stopped at a red light counts as “while driving” under Swiss traffic regulation (Beschwerde gegen den Entscheid des Obergerichts des Kantons Luzern 2011). The interpretation of terms, and consequently of rules, can also change over time and spill over into other domains. After the US Supreme Court overturned Roe v. Wade, a woman from Texas who was 34 weeks pregnant drove in a highway high-occupancy lane, which by law may only be used if there are at least two occupants in the car. She challenged the traffic ticket she received on the grounds that the Supreme Court’s recent reasoning on how to classify foetuses cannot hold only in the context of abortion but applies more generally, thus allowing her to use the high-occupancy lane with her unborn child in the car (Oladipo 2022).

This illustrates how changes in legal understanding (and the political shaping of this understanding), the application of terms across contexts, and the social plan that law creates (Shapiro 2011) make interpreting the law a highly contextual, social, and reflective issue.

Hence, open-texture encompasses different interpretations in different contexts, times, and geographical areas and, more generally, a wide range of ambiguity and vagueness. Ambiguity takes different forms: lexical (“duck” is both a verb and a noun, although the immediate context of the word should help with making the appropriate distinction), syntactic (where it is unclear what commas, pronouns, or quantifiers refer to), and pragmatic (“can you pass me the salt?”) (Sennet 2021). Ambiguity is further distinct from vagueness (“at some point in the future”), context sensitivity (e.g. “appropriate measures need to be taken”), under-specification (“to sanction” can mean both “to approve of” and “to lay a penalty upon”) (The Economist 2022), and sense or reference transfer (e.g. “he is parked” refers to a car rather than to a person, Sennet 2021). Lastly, essentially contested concepts (Gallie 1956) are “so value-laden that no amount of argument or evidence can ever lead to agreement on a single version as the 'correct or standard use'” (Baldwin 1995). When attempting to turn legislation into an automatically processable form, each of these (ambiguity of all types, vagueness, under-specification, reference transfer, and (essentially) contested concepts) is a hindrance. In this study, we cluster them under the overarching term “open-texture”. This definition extends the one given by Waismann (1945), who, in coining the term, distinguished between terms whose “equivocal usages can be avoided by more precision definitions” and those with “Porosität” (i.e., open-texture).

Scholars have put forward methods, at times empirical, to determine whether a term falls into one category or another (Farnsworth et al. 2010; Gallie 1956; Massey et al. 2014; Sennet 2021). These methods focused on certain aspects of open-texture (e.g. ambiguity but not concepts), and we built on this literature to create questions that annotators could follow to identify open-texture. Most notably, Massey et al. (2017, 2014) distinguished between three types of ambiguity (lexical, syntactic, semantic), vagueness, incompleteness, and referentiality (e.g. “The boy told his father about the damage. He was very upset”); as such, they did not account for concepts (e.g. fairness, justice), preferring to subsume them under vagueness in their context of analysing technical requirements. They did, however, provide definitions that enable other scholars to determine which category a word falls into. Further experimental studies on the ambiguity of technical requirements (e.g. Bhatia et al. 2016) reused this typology. Certain (legal) scholars have also theorised about vagueness, albeit without providing a practical methodology for delineating it (Keil and Poscher 2016). Regarding essentially contested concepts, Gallie’s (1956) seminal article did provide a list of seven features (later extended to 16 by other scholars), but we consider these difficult to apply for (legal) annotators tasked with classifying words in a statute, as they can be rather opaque and non-intuitive. For instance, consider the first two: “(I) it must be appraisive in the sense that it signifies or accredits some kind of valued achievement. (II) This achievement must be of an internally complex character, for all that its worth is attributed to it as a whole.” (Gallie 1956, pp. 171–172).

2.3 The open-texture model

We contend that a model of open-texture is necessary, given the lack of a comprehensive explanatory model for the concept. By modelling open-texture in a way that takes all its different aspects into account, it becomes easier to understand how to deal with the issues open-texture brings along, depending on the context. For instance, efforts to automatically turn legal clauses into automatically processable regulation are hampered by the lack of a shared understanding of what open-texture is (Guitton et al. 2024). Adding to the conceptualisation of open-texture in the literature, we posit that words in legal clauses may oscillate between two states, open-textured and not open-textured, tilting at times more towards the former and at times more towards the latter. We therefore propose to model open-texture using a lever (see Fig. 1). Applied to automatically processable regulation, this view of open-texture as an oscillating concept already hints that developers will need to keep track of open-textured terms and update their meaning as their status changes. The question that naturally follows is how open-texture should be identified. Our model goes further than positing an oscillation between states: it seeks to specify more precisely what is meant by open-texture, notably by introducing different levels, degrees, values, uncertainties, and forces. In the context of automatically processable regulation, we argue that all these factors are valuable for successfully processing open-texture in legal documents.

Open-texture is thus a state that needs to be specified at a certain level and degree (A) and that is under the influence of external forces (B), lateral forces (C), and internal forces (D). With the Open-Texture Value, we seek to capture the qualitative difference between, for instance, “periodically”, a term that is under-defined but for which bringing precision might be relatively easy, and “justice”, a term with many different facets. The Open-Texture Value is associated with a level of uncertainty, as it may be difficult to pin down a precise value.

Example

If a term or a clause is considered non-open-textured (e.g., ‘must not turn right’), the right end of the lever is at the bottom (Fig. 2, Tile A). A challenge (e.g., turning right because of a medical emergency) would exert a downward force, pushing the term towards a higher Open-Texture Value. While the challenge is ongoing (e.g., pending before a court), the term would keep a high Open-Texture Value (Fig. 2, Tile B). Depending on whether and how the challenge is settled, the final position could be anywhere on the spectrum.

2.3.1 Level and degree of open-texture (A)

Open-texture can only be established at a specific level and for a specific degree. The level relates to the information taken into account when determining open-texture, since definitions resolving a certain ambiguity, vagueness, or concept (e.g. related to “periodically”, or to “joint controllers”) could be found in other sources. More specifically, we distinguish three levels at which open-texture can be established: case, text, and general. The facts in many legal cases will often give rise to a challenge to a term, most vividly in criminal law (e.g. torture, intent, pornography). The legal outcome of the case will further define the term, but almost any new case will again have to be examined separately (Easterbrook 1994). Thus, at the conclusion of a case, a term may no longer be open-textured, but the definition it has acquired will only be useful within that very case, amidst a specific context and facts. The second level is the text level: a term might not be open-textured if we were to look for its meaning in other legal texts (case law or statutes) that refine it, but it is open-textured when looking only at the legal text in and of itself. Lastly, the most comprehensive level is the general one, where one would assume omniscient access to the law (all statutes and all possible case law), which would make it possible to determine whether a further, precise definition of the term exists.

Yet, even at such a general level, it makes sense to distinguish degrees of open-texture: while there may be agreement on one definition, to what extent does this definition itself contain open-textured terms? And to what extent does the definition of that definition contain open-textured terms (Coolidge et al. 2010)? And so on. To capture this, we propose to state that a term has been determined to be non-open-textured at a certain degree n.
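The recursive notion of degree can be made concrete with a small sketch. It assumes, hypothetically, that the definitions available at a given level are stored as a glossary mapping each term to the terms used in its definition, and that a set of terms already flagged as open-textured is given; the names `non_open_textured_at_degree`, `GLOSSARY`, and `FLAGGED` are illustrative and not part of the model itself. A term without a glossary entry is treated as having no further definition to inspect, which is one possible convention among others.

```python
from typing import Dict, List, Set

# Toy glossary (hypothetical, not taken from any statute):
# each term maps to the terms used in its definition.
GLOSSARY: Dict[str, List[str]] = {
    "inspection": ["vehicle", "check"],
    "check": ["appropriate", "standard"],
}
# Terms assumed to have been flagged as open-textured on their own.
FLAGGED: Set[str] = {"appropriate"}


def non_open_textured_at_degree(term: str, n: int,
                                glossary: Dict[str, List[str]],
                                flagged: Set[str]) -> bool:
    """Return True if `term` is non-open-textured at degree n.

    Degree 1 looks at the term alone; degree k > 1 additionally requires
    every term in its definition to be non-open-textured at degree k - 1.
    Terms without a glossary entry have nothing further to inspect.
    """
    if n < 1:
        raise ValueError("degree must be at least 1")
    if term in flagged:
        return False
    if n == 1:
        return True
    return all(non_open_textured_at_degree(t, n - 1, glossary, flagged)
               for t in glossary.get(term, []))


for degree in (1, 2, 3):
    print(degree, non_open_textured_at_degree("inspection", degree, GLOSSARY, FLAGGED))
```

In this toy glossary, “inspection” is non-open-textured at degrees 1 and 2 but not at degree 3, because the definition of one of its defining terms contains the flagged word “appropriate”. Since the recursion depth is bounded by n, circular definitions cannot cause infinite recursion.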

2.3.2 External forces (B)

External forces put upward and downward pressure on the lever, thus determining whether a term moves from open-textured to non-open-textured or vice versa.

A downward pressure means that a person or entity challenges the status quo by (attempting to) contest a term that is currently assumed to be established. A push from the ground upwards is also a challenge to the status quo of the term, whether the term tended to be open-textured or not. For instance, such a challenge could take the form of a case being brought to a court, as in the above examples of the specific meaning of “while driving” and of the high-occupancy lane. When such external upward pressure challenging a non-open-textured term will occur is, however, difficult to predict, as challenging legal constructs in court requires sufficient financial and time resources. Whether such resources are available to take on the challenge, and move the lever downwards from non-open-texture to open-texture, will depend on the case, and challenges will likely also be driven by other factors, such as psychological ones. Why a challenge occurs depends on a variety of factors, but the ability to bring a challenge also depends on the merit of the case itself, which also determines whether a court accepts the case or deems the legal grounds too spurious to do so. It is hence due neither entirely to internal nor entirely to external factors, but rather to a combination of both.

An open-textured term can also be influenced by external upward pressure, typically when an authority takes a decision that either brings the term closer to being open-textured, by abandoning a currently agreed definition, or brings it closer to being non-open-textured, by refining a definition. An example of the latter is government bodies issuing guidelines because courts have required them to do so.

2.3.3 Lateral forces (C)

Certain terms are more central to the interpretation of clauses than others, meaning that the way the balance tilts and reacts to external and internal forces also depends on where the fulcrum is located. The lateral forces hence represent the sensitivity of a term to change in response to internal and external forces, as the fulcrum is not necessarily centred. The position of the fulcrum depends on the societal relevance of the legal text (see Fig. 3). By societal relevance, we mean that societies put emphasis on different topics at different points in time, a reflection of which can be seen in agenda-setting in politics (Zahariadis 2016). Law, as a social plan (Shapiro 2011), hence also reflects changing emphasis within societies. For example, compared with previous decades, climate change litigation has become much more prominent (Setzer and Higham 2022), while at the same time, in many countries, a new non-gender-based meaning of “marriage” has emerged, settling a debate and decreasing the need to re-define the term (Pathak and Dev 2023). Thus, the societal relevance of a text dictates how important it is to clarify its terms and, in turn, how easy or difficult it is to challenge the status quo.

In order for a court to consider a challenge, either the court has to hear the case under the procedures in force (in which case the fulcrum is in a neutral position in the middle), or it needs to be convinced of the merit of the case (Gustafson 2023). To illustrate the latter, many companies have rebutted allegations of misconduct by stating that they were “without merit” (Indap 2023). In August 2023, a judge in the US accepted a lawsuit by shareholders claiming that they had been misled by a firm that had issued precisely such a rebuttal, stating that the lawsuit was “without merit”. In accepting the lawsuit, the judge therefore found that the shareholders’ case had sufficient merit to proceed (Indap 2023). The reverse is also common: judges can dismiss cases they deem to be without merit. Former President Donald Trump, for instance, has made headlines for having several of his cases dismissed, with a judge fining him for “frivolous lawsuits undermining the rule of law” (Colvin 2023).

Thus, when a court is considering whether to accept a challenge, four cases are possible, each determining the position of the fulcrum and, in turn, how difficult it will be to challenge the status quo (Table 1 and Fig. 3).

The position of the fulcrum thus affects how easily terms and clauses can change their Open-Texture Value. The more importance a term is given, the more likely different actors are to seek to challenge its definition and the more easily they can do so; hence, the more likely the lever is to react with an upward movement even to a small challenge. In that case, the fulcrum should be located not in the middle of the lever but on its left side.

2.3.4 Internal forces (D)

In our representation of open-texture as a lever, internal forces relate to the weight on the right-hand end of the lever: they consider the word on its own. To identify internal forces, those that are inherent to the text of the law, we propose a sequence of steps to follow. We first devised a manual algorithm, but because the categories of open-texture are not mutually exclusive, this turned out to be impractical. After several iterations, we instead devised a set of four questions (see Table 2) which, we argue, should capture the span of open-textured words across the aforementioned spectrum from ambiguity to referentiality. While the questions retain some overlap, this is much less problematic when they are treated not as steps in an algorithm but merely as triggering questions. We further argue that, because open-textured terms are inherently ill-defined, attempting to design mutually exclusive categories would be in vain: in specific contexts, categories will often overlap. In this sense, an approach in terms of states and of forces influencing these states is a much more coherent way to tackle open-texture. The questions correspond roughly to the following aforementioned categories:

These distinctions are especially relevant for automatically processable regulation, where such delineations become crucial. In the rest of this article, as we attempt to classify open-textured terms within the text of regulations, we do so only at degree 1 (i.e., we only look at the word per se, not at whether its definition also contains open-textured words) and at the text level, as this is the most practical level to consider when legal texts are turned into an automatically processable version. We present the empirical results of this classification, quantifying the resulting Open-Texture Value, that is, the extent to which a term is non-open-textured or open-textured.

3 Method

Our method focuses on investigating internal forces, that is, legal scholars’ reading and interpretation of words. The methodology would be substantially different if we were to focus on testing external and lateral forces: this would presumably involve, for instance, systematically surveying those who file and those who accept legal challenges, or classifying the challenges of cases that reach a court.

3.1 Hypotheses

Legal professionals are notorious for debating, holding opposing opinions, and arguing (Ackerman 1986; Kramer 2003). One highly relevant aspect of such debates that we can investigate is agreement regarding open-texture. In the context of our conceptualised model of open-texture, we formulate two hypotheses on two different aspects of open-texture, one related to the words themselves and one to the Open-Texture Value:

H1: Legal scholars are able to agree on which words are open-textured at the text-level.

Testing the text on its own merits, dissociated from any case, allows us to target the internal forces without adding further complexity related to the case itself (the challenge) and the merit of the case (lateral forces).

We can measure agreement between pairs of legal annotators using Cohen’s kappa. However, when annotators are asked whether they agree with their colleague, a high kappa score may not be sufficient: we need to control for the possibility that annotators arbitrarily validate words (because of bias, because the task becomes too lengthy, or for any other reason). To control for this, we propose the following check: when annotators are asked which trigger questions (reflecting internal forces) led them to classify a word as open-textured, the distribution of trigger questions should remain roughly constant between the round in which annotators flag words on their own and the round in which they review the list of words from their partner and decide whether they agree with each item.
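As an illustration of the agreement measure, the following Python sketch computes Cohen’s kappa from scratch. It assumes, as a simplification that the section does not specify, that each token position in a passage is one item and that each annotator’s label is whether they flagged that token; the function name `cohens_kappa` and the example flags are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each rater's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1:
        # Both raters used one and the same label for every item; kappa is
        # undefined here, and by convention we report perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical example: a 10-token passage; each set holds the positions
# of the tokens an annotator flagged as open-textured.
flags_a = {1, 4, 7}
flags_b = {1, 4, 8}
labels_a = [i in flags_a for i in range(10)]
labels_b = [i in flags_b for i in range(10)]
print(round(cohens_kappa(labels_a, labels_b), 3))  # prints 0.524
```

Kappa corrects the raw agreement (here 0.8) for the agreement expected by chance (here 0.58), which is why two annotators who agree on most unflagged tokens can still obtain only a moderate score.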

H2: Legal scholars are able to agree on the resulting Open-Texture Value of words at the text level.

As Fig. 1 shows, we conceptualised open-texture not in a binary way (a word is or is not open-textured), but rather as a spectrum. With H2, we seek to test whether legal scholars can agree not only on which words are open-textured but also on the resulting Open-Texture Value of these words. As for H1, two rounds are necessary (one in which annotators independently flag words, and one in which agreement is actually tested); if annotators do not attribute values to open-textured words arbitrarily, we should see only small differences between the values assigned within pairs of annotators across both rounds. To test this hypothesis, annotators were also given the option of selecting “uncertain”.
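One way to operationalise the comparison of values within a pair is sketched below. The scale of the Open-Texture Value is not fixed in this section, so the sketch assumes a numeric scale and encodes “uncertain” as `None`; the function name `mean_value_difference` and the example words and values are hypothetical. Computing the statistic for each pair in both rounds would then allow the differences across rounds to be compared.

```python
from statistics import mean
from typing import Dict, Optional, Tuple

# Hypothetical encoding: an Open-Texture Value is a number on an
# assumed scale, and None stands for "uncertain".
Values = Dict[str, Optional[float]]


def mean_value_difference(values_a: Values, values_b: Values) -> Tuple[Optional[float], int]:
    """Mean absolute difference in Open-Texture Values for words both
    annotators flagged, skipping words either of them marked uncertain.

    Returns (mean difference, number of words compared); the mean is
    None when no word can be compared.
    """
    diffs = [
        abs(values_a[w] - values_b[w])
        for w in sorted(values_a.keys() & values_b.keys())
        if values_a[w] is not None and values_b[w] is not None
    ]
    return (mean(diffs) if diffs else None), len(diffs)


annotator_a = {"appropriate": 4, "periodically": 2, "lawful": None}
annotator_b = {"appropriate": 5, "periodically": 2, "lawful": 3, "justice": 5}
print(mean_value_difference(annotator_a, annotator_b))  # prints (0.5, 2)
```

Words flagged by only one annotator (here “justice”) do not enter the value comparison, because agreement on which words are open-textured is what H1 already measures.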

In effect, confirming H1 and H2 would mean that the triggering questions are helpful in flagging open-textured words and that legal professionals can assign a value that they find meaningful to the flagged words. Confirming H1 and H2 would therefore provide supporting evidence for the usability of part of our model, namely the part regarding internal forces for open-texture, at the text level and degree 1.

3.2 Measurement

Our conceptual approach to open-texture forms a basis for establishing a list of terms that can serve as a heuristic to quickly detect the predicates that could make turning a text into APR more difficult. We only claim that the resulting lists are applicable to the pieces of legislation under consideration. Other authors have approached validation differently: in their influential study on ambiguity, Massey et al. (2014) asked 18 participants to validate their typology, whereas Waltl and Matthes (2014) do not appear to have sought to validate their list of words. We also sought confirmation from participants, but we depart from Massey et al. (2014) in two important ways: first, we sought to validate whether agreement on flagging open-texture was possible at all, and second, we included two rounds of review and validation to test agreement not only on words but also on Open-Texture Value and reasoning.

To validate the process first, Step 1 involved two law students from Maastricht University and two of the authors surveying four laws to extract only the stems of open-textured terms. By stems, we mean that, for instance, having the word “lawful” on the list would match as open-textured “lawfulness”, “unlawful”, “lawfully”, “unlawfully”, etc. We consistently followed a four-eyes principle, whereby a second annotator had to review all stems, but noticed that differences between annotators needed to be taken into account. At this stage, annotators had to identify open-texture without any guiding questions (see Table 3 for a breakdown of the size of the lists of words flagged as open-textured). We therefore used this first step in our empirical investigation of open-texture notably as a basis for developing the practical, empirical questions presented in our conceptualisation of open-texture, and for devising a more refined way of approaching agreement. However, to compare annotations between different annotators more precisely, we had to abandon the idea of focusing on stems and focus instead on each exact word identified.
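The stem-based matching described above can be sketched as follows. This is a minimal illustration assuming that a stem matches any word containing it as a case-insensitive substring; the regular expression used to split words, the function name `flag_by_stems`, and the sample sentence are assumptions for illustration, not the annotation tooling used in the study.

```python
import re
from typing import Iterable, List

# Words are runs of letters, optionally joined by hyphens (an assumption).
WORD = re.compile(r"[A-Za-z]+(?:-[A-Za-z]+)*")


def flag_by_stems(text: str, stems: Iterable[str]) -> List[str]:
    """Return every word in `text` that contains one of `stems`
    (case-insensitive substring match), in order of appearance."""
    lowered = [s.lower() for s in stems]
    return [w for w in WORD.findall(text) if any(s in w.lower() for s in lowered)]


sample = ("Processing shall be lawful. Unlawfully obtained data "
          "must be erased within a reasonable time.")
print(flag_by_stems(sample, ["lawful", "reasonable"]))
# prints ['lawful', 'Unlawfully', 'reasonable']
```

A substring match is what lets “lawful” catch “unlawful” as well as “lawfulness”, but it can also over-match: a short stem such as “law” would also flag “lawyer”.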

Step 2 involved the four authors drawing on the lessons of Step 1 to devise a way to measure agreement and to capture the reasoning and values behind each flagged open-textured word. In Step 3, we ran a test with 5 participants to validate the clarity of our instructions for Step 4 and corrected the instructions accordingly.

Step 4 involved 20 (different) law students from Maastricht University working in pairs, with each pair asked to survey the same four pieces of legislation as in Step 1, but only a few articles each time (totalling roughly 5,000 words per annotator, see Appendix 1 for the detailed breakdown). We divided participants into pairs because the alternative, having every participant go through all texts totalling over 53,000 words, would have been far too long for any single participant, and we assessed that this would have hampered their ability to flag open-texture. Before conducting the full-scale experiment, we carried out a trial with five people to verify the readability of our instructions (Step 3 in Fig. 4). In Step 4, the 20 students were also asked to indicate, for each open-textured word they wrote down, a value for open-texture on a spectrum (with the possibility of marking it as “uncertain”) and which of the four questions triggered it (see Appendix 2 for the instructions the students had to follow). Two rounds took place, the second asking pairs to try to resolve the differences between their list and their partner’s (see Table 4).

Lastly, in Step 5, we used list 1 (Step 1, Round 5) and list 2 (Step 4, Round 2) to detect open-texture at the clause level. We compared whether the two lists flag the same or different clauses as open-textured, and what proportion of clauses each list flags.
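The clause-level rule (a clause counts as open-textured if it contains at least one listed term) can be sketched as follows. This assumes whole-word, case-insensitive matching, which suits exact-word lists such as list 2; for a stem list such as list 1, substring matching would be the closer choice. The clauses and terms are illustrative, not the study’s data.

```python
import re


def clause_is_open_textured(clause: str, terms: list[str]) -> bool:
    """True if any listed term occurs in the clause as a whole word or phrase."""
    text = clause.lower()
    return any(re.search(r"\b" + re.escape(t.lower()) + r"\b", text) for t in terms)


def share_flagged(clauses: list[str], terms: list[str]) -> float:
    """Proportion of clauses containing at least one open-textured term."""
    flags = [clause_is_open_textured(c, terms) for c in clauses]
    return sum(flags) / len(flags)


# Illustrative clauses and terms, not the study's data.
clauses = [
    "Personal data shall be processed lawfully, fairly and in a transparent manner.",
    "The controller shall reply within one month.",
    "This Regulation shall enter into force on the twentieth day.",
]
terms = ["fairly", "transparent manner", "one month"]
print(share_flagged(clauses, terms))
# 0.6666666666666666 (2 of 3 clauses)
```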

Figure 4 provides an overview of how the different steps we followed fit together.

If one word is open-textured, the whole clause becomes more difficult to turn into an automatically processable form, which is why we also assess open-texture at the clause level. Given how much more difficult it is to find 20 annotators, as in Step 4, than only 4, as in Step 1, it also makes sense to compare the two steps.

3.3 Legal domain of application

We purposely picked pieces of legislation that we expected to contain many open-textured words, so that we could extract meaningful lists, but we also wanted these laws to contain open-textured words to varying degrees so that we could compare them with one another. The four laws from which articles were drawn for annotators to review at the text level, and our reasons for choosing them, were:

1. The General Data Protection Regulation (GDPR): notably because of the current strong interest in turning it into an automatically processable regulation (see e.g. Palmirani and Cervone 2013);

2. The Law Enforcement Directive (2016/680) (LED): because it parallels the GDPR, with the difference that the LED applies only for “law enforcement purposes”, which fall outside the scope of the GDPR;

3. The EU Charter of Fundamental Rights; and

4. The European Convention on Human Rights: because of the assumption, which also applies to (3), that they would contain many open-textured terms.

4 Results

H1: Legal scholars are able to agree on which words are open-textured at the text level.

Across the 10 pairs of participants in Step 4, the average Cohen’s kappa after the first round is only 0.24, but the second round shows that much higher agreement can be achieved: after this round, the average rises to 0.70, with a median of 0.76. Only two pairs score lower than 0.5 in the second round, and only one pair scores between 0.5 and 0.6 (\({\overline{\kappa }}_{round_2} >{\overline{\kappa }}_{round_1}\) with p-value < 0.01, see Fig. 5).
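The p-value for the Round 2 increase is reported without naming the test. As one stdlib-only possibility (an assumption, not necessarily the authors’ test), an exact paired sign-flip permutation test over the 10 pairs can be sketched as follows. The kappa values are invented for illustration and are not those behind Fig. 5.

```python
from itertools import product
from statistics import mean


def paired_sign_flip_p(after: list[float], before: list[float]) -> float:
    """Exact one-sided p-value for H1: mean(after - before) > 0.

    Under H0 each pair's difference is equally likely to be positive or
    negative, so every sign pattern is enumerated (2**n patterns).
    """
    diffs = [a - b for a, b in zip(after, before)]
    observed = mean(diffs)
    patterns = list(product((1, -1), repeat=len(diffs)))
    extreme = sum(
        1 for signs in patterns
        if mean(s * d for s, d in zip(signs, diffs)) >= observed - 1e-12
    )
    return extreme / len(patterns)


# Hypothetical per-pair kappas for 10 pairs; NOT the study's values.
kappa_r1 = [0.10, 0.20, 0.30, 0.25, 0.15, 0.30, 0.20, 0.35, 0.25, 0.30]
kappa_r2 = [0.80, 0.75, 0.70, 0.90, 0.45, 0.85, 0.60, 0.80, 0.40, 0.75]
print(paired_sign_flip_p(kappa_r2, kappa_r1))
# 0.0009765625 (= 1/1024, every pair improved)
```

With 10 pairs there are only 1,024 sign patterns, so the smallest attainable one-sided p-value is 1/1024; a threshold of 0.01 is therefore reachable even with this small sample.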

Because participants were asked in Round 2 whether they agreed with the words on their partner’s list, some participants may have agreed arbitrarily. We therefore sought to test how much such randomness could distort the results. We argue that, if annotators did not arbitrarily accept words between the two rounds, the average use of each triggering question should remain the same in Rounds 1 and 2 (Nround_1 = 1670, Nround_2 = 2020; see Table 5). For all questions, two-tailed t-tests return that the means are similar in Rounds 1 and 2 (\({\overline{x}}_{question_i, round_2} = {\overline{x}}_{question_i, round_1}\) for \(i \in \{1, 2, 3, 4\}\), p-values < 0.01), supporting the hypothesis that annotators picked the triggering questions with careful consideration.
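One way to operationalise “the average use of each triggering question” is a 0/1 indicator per answer, compared between rounds with Welch’s t statistic. The sketch below assumes this coding, which the article does not spell out; the answers are hypothetical, and a p-value could be obtained with `scipy.stats.ttest_ind(x, y, equal_var=False)`.

```python
from math import sqrt
from statistics import mean, variance


def usage_indicator(answers: list[set[int]], question: int) -> list[int]:
    """1 if `question` was among an answer's triggering questions, else 0."""
    return [1 if question in triggered else 0 for triggered in answers]


def welch_t(x: list[int], y: list[int]) -> float:
    """Welch's two-sample t statistic (no equal-variance assumption).

    Raises ZeroDivisionError if both samples have zero variance.
    """
    return (mean(x) - mean(y)) / sqrt(variance(x) / len(x) + variance(y) / len(y))


# Hypothetical answers: each set holds the questions (1-4) that triggered a word.
round1 = [{1}, {3}, {3, 4}, {4}]
round2 = [{1}, {3}, {4}, {3, 4}, {2}]
for q in (1, 2, 3, 4):
    print(q, welch_t(usage_indicator(round1, q), usage_indicator(round2, q)))
```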

H2: Legal scholars are able to agree on the resulting Open-Texture Value of words at the text level.

Each time annotators agree that a word is open-textured, we look at the difference between the Open-Texture Values they allocated: for instance, one reviewer may say that “justice” is open-textured with a value of “5-high”, while their partner agrees that it is open-textured but rates it “4-Medium high”. The spectrum was discrete, from 1 to 5, so the difference between two values could range from 0 to 4. The number of common words on which this was tested was Nround_1 = 134 and Nround_2 = 601 (these figures differ from those in Table 3 because words that recur across pairs are counted here once per pair). Figure 6 shows the distribution of the differences between values, and we argue that it is sufficiently small to validate H2. Lastly, annotators rarely chose the option “uncertain”: 5 times in Round 1 (3.7% of all answers) and 45 times in Round 2 (7.5% of all answers), which also shows that giving a value on the spectrum is practically feasible.
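Because the scale runs from 1 to 5, the largest possible difference is 4 (one annotator choosing “1-low”, the other “5-high”). A minimal sketch of tallying the differences for words both annotators flagged, assuming the label strings from the instructions are matched case-insensitively and that “Uncertain” answers are set aside; both are hypothetical choices, not documented steps of the study.

```python
from collections import Counter

# Scale labels from the instructions (Appendix 2), compared case-insensitively.
SCALE = {"1-low": 1, "2-medium low": 2, "3-medium": 3, "4-medium high": 4, "5-high": 5}


def value_differences(pairs: list[tuple[str, str]]) -> Counter:
    """Count absolute differences (0-4) between two annotators' Open-Texture Values.

    Assumption: pairs where either annotator chose "Uncertain" are skipped.
    """
    diffs = Counter()
    for a, b in pairs:
        a, b = a.lower(), b.lower()
        if "uncertain" in (a, b):
            continue
        diffs[abs(SCALE[a] - SCALE[b])] += 1
    return diffs


# Hypothetical agreed words; the first pair mirrors the "justice" example in the text.
pairs = [("5-high", "4-Medium high"), ("3-medium", "3-medium"), ("1-low", "Uncertain")]
print(value_differences(pairs))
# Counter({1: 1, 0: 1})
```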

Furthermore, we tested the relation between values and triggering questions. We hypothesised that certain questions, for instance on concepts, could be associated with higher Open-Texture Values (see Fig. 7 for Round 1 and Fig. 8 for Round 2). To test the relation, we performed for both rounds a chi-squared test of independence between the value and the question triggered (Nround_1 = 1161; Nround_2 = 1615), and we could reject the null hypothesis that there is no relationship between the variables (p-values < 0.01). The overall Pearson correlation is 0.1256 in Round 1 and 0.0883 in Round 2, and a linear regression yields very low p-values (< 0.01) but a low R² of 0.007 (see Table 6).
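The chi-squared test of independence compares the observed counts in a value-by-question table with the counts expected if the two variables were independent. Below is a stdlib-only sketch of the statistic, assuming each (value, triggering question) combination is tallied once per answer, which the article does not spell out; the counts are hypothetical. For a p-value, `scipy.stats.chi2_contingency` returns the statistic, p-value, degrees of freedom and expected counts; it applies Yates’ continuity correction by default when there is one degree of freedom, so its statistic differs from this sketch for 2 x 2 tables.

```python
def chi_squared(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-squared statistic and degrees of freedom for an r x c count table.

    Every row and column total must be positive, otherwise an expected count is zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


# Hypothetical counts: rows = Open-Texture Values 1-5, columns = Questions 1-4.
counts = [
    [12, 10, 11, 6],
    [15, 14, 13, 9],
    [20, 18, 25, 15],
    [18, 22, 17, 16],
    [10, 12, 14, 30],
]
print(chi_squared(counts))
```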

We interpret the results of the chi-squared test as meaning that, in our sample, values and questions are dependent. However, this dependence might not be linear: the correlation is weak, and the low R² points to a poor fit of the linear model.

We hence found that certain questions are more strongly associated with certain levels of open-texture than others, although this finding comes with a caveat regarding linearity. In Round 1, Question 4, on whether a word is value-laden, clearly stands out for its association with the Open-Texture Value “high” (14 percentage points higher than the next value), whereas the other questions are used roughly equally across values. In Round 2, this difference remains, although it shrinks to 9 percentage points. The main relations standing out in Round 2 are those between Question 3, on the absence of a single definition, and the value “medium” (8 percentage points higher than the next value), and between Question 1, on the absence of a single quantitative threshold, and the value “medium high” (8 percentage points higher than the next value). While we cannot draw conclusions on the specific relations between triggering questions and Open-Texture Values, the results of the chi-squared tests are conclusive enough to validate the hypothesis.

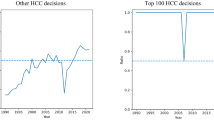

5 Comparing step 1 and step 4

Across the four pieces of legislation, the final list in Step 1 flagged 59.9% of clauses as open-textured, while the final list in Step 4 flagged 65.9% of clauses. When comparing the clauses flagged as open-textured by the two steps, they agree with a high Cohen’s kappa of 0.747 (see Table 7). This small difference lends credence to using only the very first step, which required far fewer resources, especially if the interest lies only in flagging clauses containing open-textured words. The drawback of this step, however, is that less is known about agreement, about the reasons for flagging open-texture, and about the Open-Texture Values of words.
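The clause-level comparison reduces to two sequences of binary flags, one per clause and list. A minimal from-scratch sketch of Cohen’s kappa on such flags follows; the flags are hypothetical, and `sklearn.metrics.cohen_kappa_score` offers an equivalent library implementation.

```python
def cohens_kappa(a: list, b: list) -> float:
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    # Chance agreement from each rater's own label frequencies.
    p_e = sum((a.count(c) / n) * (b.count(c) / n) for c in set(a) | set(b))
    if p_e == 1:
        # Both raters used one identical label throughout: kappa is 0/0.
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical clause flags (True = contains an open-textured word) for 8 clauses.
list1 = [True, True, False, True, False, False, True, True]
list2 = [True, True, False, True, True, False, True, True]
print(round(cohens_kappa(list1, list2), 3))
# 0.714
```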

6 Impacts of our findings

A major hindrance to automatically processable regulation is open-texture. The problem is not only that several ways of interpreting a clause could exist; if that were the only issue, different interpretations could be coded, with the choice left to the user. The issue lies further upstream, in identifying the clauses that contain open-textured words before encoding even starts. The different steps of the experiment indicate that it is, to some extent, possible to reach agreement on flagging open-textured words, but they also raise further questions and expose limitations of this study.

For a start, we only asked participants to look for open-texture at the text level and at degree 1 (see Appendices 1 and 2). In a real implementation, this is unlikely to suffice: checking at the general level would probably be more realistic, but would have been much more expensive to conduct. This means that in a real implementation, experts in the specific legal field would have to be surveyed rather than generalists with a legal background. One of our conclusions is reassuring in this regard: surveying only four people yielded results very similar to those obtained with twenty. Experts could, for instance, consider recitals, which we have not done so far. While recitals can give guidance on how to interpret certain ambiguous terms that we may currently classify as open-textured, this guidance will itself necessarily be subject to interpretation, and it is not legally binding either. At best, then, we may be able to say that certain open-textured terms have one likely interpretation rather than another, but this would not change the fact that the term remains open-textured. Future research might integrate recitals to further refine the classification of open-textured terms. This points, however, to a clear question about the degree of open-texture: which degree, if clearly > 1, would realistically need to be considered? At least two further questions would still require answers: how many people, say legal experts in their fields, would need to be brought together? And how many of them would need to agree in order to accept a word as open-textured? Our choice of pairing people up could be challenged as not being wide enough.

This leads to a further question: does our current methodology under- or over-assess open-texture? As there is no single source of ground truth, we cannot compute F1-scores, and even computing Cohen’s kappa between the two lists (as opposed to between the clauses flagged as containing open-texture) is not meaningful, as the lists work differently: one looks at stems, the other at full terms, including compound terms when both annotators agreed that the whole compound term should be considered open-textured. Arguably, because we seek agreement, we could be under-assessing open-texture: a case could be made that a single person suspecting that a word is open-textured could hypothetically be sufficient to launch a legal challenge tilting the balance of the open-texture lever. In that case, it would have been sufficient in Step 4 to create a single list combining all the words flagged by anyone, without looking for intersections. Had we done so, we would have obtained a list of 761 unique words (versus 106 when looking at the agreed words of Round 1), and for the GDPR alone, the share of open-textured clauses would shoot up to 74.8% (from 48% with Round 1). The low Cohen’s kappa values in Round 1 testify to how differently people read and process words, and would support creating such a list, with a word included as soon as at least one person writes it down. However, creating APR is not an endeavour carried out by one person alone. It involves large groups of people working in multidisciplinary environments, who need to be able to consider other options, which is what our two-round design seeking agreement tries to replicate.
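The effect of choosing a union (any annotator’s word counts) rather than an intersection (only agreed words) on the share of flagged clauses can be illustrated with a minimal sketch. It assumes whole-word matching; the word lists and clauses are hypothetical and unrelated to the actual lists discussed above.

```python
import re


def flagged_share(clauses: list[str], terms: set[str]) -> float:
    """Share of clauses containing at least one term as a whole word or phrase."""
    patterns = [re.compile(r"\b" + re.escape(t.lower()) + r"\b") for t in terms]
    hits = sum(any(p.search(c.lower()) for p in patterns) for c in clauses)
    return hits / len(clauses)


def union_vs_intersection(lists: list[set[str]], clauses: list[str]) -> tuple[float, float]:
    """Clause share flagged by any annotator's words vs. only the words all annotators share."""
    union = set().union(*lists)
    intersection = set.intersection(*lists)
    return flagged_share(clauses, union), flagged_share(clauses, intersection)


# Hypothetical word lists of one pair and four illustrative clauses.
lists = [{"reasonable", "fair"}, {"reasonable", "promptly"}]
clauses = [
    "The controller may charge a reasonable fee.",
    "The authority shall act promptly.",
    "Processing shall be fair.",
    "This Regulation shall apply from 25 May 2018.",
]
print(union_vs_intersection(lists, clauses))
# (0.75, 0.25)
```

The union can only flag as many clauses as the intersection or more, which is the sense in which an agreement-based list may under-assess open-texture.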

But, also arguably, asking a third reviewer for their opinion could have reduced the size of the intersection, which would indicate that we over-assessed open-texture. As our model shows, in the real world, internal forces alone are not sufficient to account for hypothetical scenarios: the resources (mostly time and money) of a challenger, as well as the merit of a case, play a role. Further studies could complement this one by integrating such real-world variables, for instance by looking at court cases. The lists established here could also serve as a basis for supervised learning combined with NLP techniques, a step that researchers studying ambiguity in software requirements have already attempted (Ferrari and Esuli 2019; Hosseini et al. 2021; Patwardhan et al. 2018).

Lastly, the statistics in Table 7, or rather what we can derive from them, are sobering: clauses without open-textured words are in the minority. When considering the feasibility of turning existing pieces of legislation into automatically processable versions, this point should weigh into considerations of whether the associated costs would be balanced by the benefits. Perhaps more importantly, albeit less practically, it also adds an important nuance for those offering often-valid criticisms of APRs, since the extent of the issues had so far been unknown. Such statistics make it possible to better gauge to what extent these criticisms apply.

7 Conclusion

The concept of open-texture, as combining different types of ambiguity, vagueness, and contested concepts, had so far remained under-theorised, despite being crucial to the emerging field of computational law. We have sought to remedy this by introducing a framework that combines the different forces (internal, external, lateral) establishing whether, and to what extent, a word is open-textured. We have further tested part of the model, concerning internal forces of open-texture at the text level and of degree 1, focusing most notably on the triggering questions. Taken together, the experiment yields five findings. First, it is possible to flag open-textured words. Second, agreement can be high and non-random. Third, agreement on open-textured words comes with a high level of agreement on the Open-Texture Values of those words. Fourth, there is a dependence between Open-Texture Values and the internal forces of open-textured words, although this dependence appears non-linear and would need further modelling. Fifth and lastly, the number of people involved need not be large: the marginal contribution of adding more people diminishes very rapidly. These conclusions come with a few caveats that offer avenues for future research, most notably on how to integrate external and lateral forces in addition to internal ones. Methodologies for doing so are not readily available and will probably involve trying different paths. This nonetheless represents an exciting prospect for making clear, tangible progress on current roadblocks to making automatically processable regulation more widespread.

Notes

Cohen’s Kappa (κ) is a statistical measure of inter-rater reliability, the degree of agreement between independent observers of a phenomenon. It is preferable to simple percent agreement because it takes into account the probability of chance agreement. The metric is equal to 1 if raters are in complete agreement and equal to 0 if there is no agreement other than what would be expected by chance.
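In its standard form, Cohen’s kappa compares the observed proportion of agreement \(p_o\) with the proportion \(p_e\) expected by chance from each rater’s own label frequencies, where \(p_{A,c}\) is the share of items rater A assigns to category \(c\). The worked numbers are purely illustrative and not taken from the study.

```latex
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad p_e = \sum_{c} p_{A,c}\, p_{B,c}

% Illustrative: 8 clauses; raters agree on 7; A flags 5 as open-textured, B flags 6
p_o = \tfrac{7}{8} = 0.875, \quad
p_e = \tfrac{5}{8}\cdot\tfrac{6}{8} + \tfrac{3}{8}\cdot\tfrac{2}{8} = 0.5625, \quad
\kappa = \frac{0.875 - 0.5625}{1 - 0.5625} = \tfrac{5}{7} \approx 0.714
```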

References

Ackerman BA (1986) Law, economics, and the problem of legal culture. Duke Law J 1986(6):929–947

Baldwin DA (1995) Security studies and the end of the cold war. World Politics 48:117–141

Benzmüller C, Fuenmayor D, Lomfeld B (2020) Encoding legal balancing: automating an abstract ethico-legal value ontology in preference logic. In: Freie Universität Empirical Legal Studies Center (FUELS) Working Paper, 5.

Beschwerde gegen den Entscheid des Obergerichts des Kantons Luzern, II. Kammer, vom 3. November 2010, (Bundesgericht 2011). https://www.bger.ch/ext/eurospider/live/de/php/aza/http/index.php?highlight_docid=aza%3A%2F%2F14-07-2011-6B_5-2011&lang=de&type=show_document&zoom=YES&

Bhatia J, Breaux TD, Reidenberg JR, Norton TB (2016) A theory of vagueness and privacy risk perception. In: 24th International Requirements Engineering Conference

Bonatti PA, Kirrane S, Petrova IM, Sauro L (2020) Machine understandable policies and GDPR compliance checking. KI-Künstliche Intelligenz 34:303–315

Bourcier D, Mazzega P (2007) Toward measures of complexity in legal systems. In: Eleventh International Conference on Artificial Intelligence and Law, New York

Brakel RV, Kudina O, Fonio C, Boersma K (2022) Bridging values: finding a balance between privacy and control. The case of Corona apps in Belgium and the Netherlands. J Conting. Crisis Manag 30(1):50–58

Buchholtz G (2019) Artificial intelligence and legal tech: challenges to the rule of law. In: Wischmeyer T, Rademacher T (eds) Regulating artificial intelligence. Springer, pp 175–198

Cobbe J (2020) Legal singularity and the reflexivity of law. In: Deakin S, Markou C (eds) Is law computable? Critical perspectives on law and artificial intelligence. Hart Publishing

Colvin J (2023) Judge fines Trump, lawyer for ‘frivolous’ Clinton lawsuit. AP News

Coolidge FL, Overmann KA, Wynn T (2010) Recursion: what is it, who has it, and how did it evolve? Wires Cognit Sci 2(5):547–554

Diver L (2020) Digisprudence: the design of legitimate code. Law Innov Technol 13(2):325–354

Easterbrook FH (1994) Text, history, and structure in statutory interpretation. Harvard J Law Public Policy 17:61–70

Endicott T (2011) The value of vagueness. In: Marmor A, Soames S (eds) Philosophical foundations of language in law. Oxford University Press, Oxford, pp 14–30

Farnsworth W, Guzior DF, Malani A (2010) Ambiguity about ambiguity: an empirical inquiry into legal interpretation. J Leg Anal 2(1):257–300

Ferrari A, Esuli A (2019) An NLP approach for cross-domain ambiguity detection in requirements engineering. Autom Softw Eng 26:559–598

Gallie WB (1956) Essentially contested concepts. Proc Aristot Soc 56:167–198

Guitton C, Tamò-Larrieux A, Mayer S (2022) A typology of automatically processable regulation. Law Innov Technol 14(2):267–304

Guitton C, Mayer S, Tamò-Larrieux A, Garcia K, Fornara N (2024) A proxy for assessing the automatic encodability of regulation. In: 3rd ACM Computer Science And Law Symposium. Boston, MA

Gustafson KA (2023) To hear or not to hear or not to hear?—Resolving a federal court’s obligation to hear a case involving both legal and declaratory judgment claims. Univ Baltimore Law Rev 52(3):2

Hart HLA (1994) The concept of law. Clarendon Press

Hildebrandt M (2020) Code-driven law: freezing the future and scaling the past. In: Deakin S, Markou C (eds) Is law computable? Critical perspectives on law and artificial intelligence. Hart Publishing

Hosseini MB, Heaps J, Slavin R, Niu J, Breaux T (2021) Ambiguity and Generality in Natural Language Privacy Policies. In: 29th International Requirements Engineering Conference (RE)

Huber JD, Shipan CR (2012) Deliberate discretion? The institutional foundations of bureaucratic autonomy. Cambridge University Press

Indap S (2023) Companies now need to be more careful in denials of wrongdoing. Financial Times

Katz D, Bommarito M (2014) Measuring the complexity of the law: The United States Code. Artif Intell Law 22:337–374

Keil G, Poscher R (2016) Vagueness and law: philosophical and legal perspectives. Oxford University Press, Oxford

Kramer LD (2003) When lawyers do history. George Washington Law Rev 72:387

Massey AK, Rutledge RL, Antón AI, Swire PP (2014) Identifying and classifying ambiguity for regulatory requirements. In: 22nd International Requirements Engineering Conference

Massey AK, Holtgrefe E, Ghanavati S (2017) Modeling regulatory ambiguities for requirements analysis. In: International Conference on Conceptual Modeling

Oladipo G (2022) Texas woman given traffic ticket says unborn child counts as second passenger. The Guardian. https://www.theguardian.com/us-news/2022/jul/09/texas-womanticket-abortion-roe-v-wade

Palmirani M, Cervone L (2013) Measuring the complexity of law over time. In: AI Approaches to the Complexity of Legal Systems: AICOL 2013 International Workshops. Springer Lecture Notes in AI

Pathak SK, Dev G (2023) The evolution of same-sex marriage laws and their impact on the traditional family structure. Indian J Law Legal Res 5(2):1

Patwardhan M, Sainani A, Sharma R, Karande S, Ghaisas S (2018) Towards automating disambiguation of regulations: using the wisdom of crowds. In: 33rd International Conference on Automated Software Engineering

Schauer F (2013) On the open texture of law. Grazer Philosophische Studien 87:197–215

Sennet A (2021) Ambiguity. Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/entries/ambiguity/

Setzer J, Higham C (2022) Global trends in climate change litigation: 2022 snapshot. Grantham Research Institute on Climate Change and the Environment and Centre for Climate Change Economics and Policy, London School of Economics and Political Science, London

Shapiro SJ (2011) Legality. The Belknap Press of Harvard University Press

Solove D (2011) Nothing to hide: the false tradeoff between privacy and security. Yale University Press

Sullivan KM (1992) The Justices of Rules and Standards. Harv Law Rev 106:22

The Economist (2022) Some words have two opposite meanings. Why? The Economist. https://www.economist.com/culture/2022/09/08/some-words-have-two-oppositemeanings-why

Troncoso C, Payer M, Hubaux J-P, Salathé M, Larus J, Bugnion E, Pereira J (2020) Decentralized privacy-preserving proximity tracing. Arxiv. https://doi.org/10.48550/arXiv.2005.12273

Unabhängiges Landeszentrum für Datenschutz Schleswig-Holstein v Wirtschaftsakademie Schleswig-Holstein GmbH, (Bundesverwaltungsgericht 2018). https://curia.europa.eu/juris/liste.jsf?num=C-210/16

Vecht JJ (2020) Open texture clarified. Inquiry

Waismann F (1945) Verifiability. Proc Aristot Soc 19:100–164

Waltl B, Matthes F (2014) Towards measures of complexity: Applying structural and linguistic metrics to German laws. In: JURIX 2014: The Twenty-seventh Annual Conference

Zahariadis N (2016) Handbook of public policy agenda setting. Edward Elgar Publishing Limited, Cheltenham

Funding

Open access funding provided by University of St.Gallen. Hasler Stiftung, 21089, Clement Guitton.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

See Table

Appendix 2

Instructions for open-textured test experiment

3.1 Overarching task

Determine whether words are open-textured or not following a given algorithm.

3.2 Scope

Human algorithm to determine open-textured (OT) words, degree 1, text-level. By that we mean:

-

Degree 1: We only look at the word per se, and not if further open-textured words are in the definition of the word. And so, if there is only one definition, this should suffice to answer no to questions 2 and 3.

-

Text-level: is further information to be found in the same statute where the word appears? If you would need to rely on other external legal sources for a more specific definition, then this is OT at the text-level.

3.3 Process

A) Take your personally assigned Articles only and determine whether a word is open-textured. To do so, go through the following 4 non-mutually-exclusive questions (see Table 9):

If you answer any of these with “yes”, then the word should be marked down as open-textured. As we are only going after unique values, you do not have to mark down the word—but can still do so—if it is already on your list.

If you consider the word as being non open-textured, then you should not write it down. We are also interested in how you perceive relatively open-textured terms on a spectrum, and ask you to choose between 5 scale points representing how much you think the term entails open-texture: 1-low, 2-medium low, 3-medium, 4-medium high, or 5-high (the option “Uncertain” also exists). For instance, you might consider that “month” is low open-textured (with 28, 29, 30, or 31 days), but that comparatively, “freedom” is high as it can have many more definitions and nuances.

B) Use the template/table provided to mark down the open-textured word. If you are uncertain but think that it could be OT, please mark that down accordingly in the column “OT-Value”: we are trying to compile the most comprehensive (but correct) list. If there is anything else you would like to mark down, use the “Note” column. If you feel that 2 questions were relevant, you can also mark this down accordingly (order irrelevant); again, we do not regard it as problematic that some of the 4 questions overlap to a degree.

C) Once you have sent us your list, we will follow up on potential inadvertent omissions. After both members of a pair have submitted their results, we will extract the different words in each list. We will then come back to you with a list containing only the difference between your list and your partner’s, and will ask you which words you would agree to add to your own list, with the corresponding value and the most appropriate leading questions.

3.4 Please write words into the list as follows:

-

Only write down the word as written in the law you are analysing

-

Do not use special characters in the column “Word” (no commas, no “&” symbol, no three periods/dots, etc.)

-

Write compound words together (e.g. freedom of expression)

-

If the words are separated by a comma or a logical conjunction (and/or), we leave it to your judgement to assess whether the words belong together or represent two instances of open-textured words (e.g. “rights and freedoms” vs. “rights” on one line and “freedoms” on another). This should reflect whether you think that the individual words are OT, or whether they are only OT when taken together.
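The decision rule and recording conventions above can be sketched as a small validation routine: a word is listed only if at least one of the four questions is answered “yes”, its OT-Value comes from the scale given in step A, and the Word column avoids special characters. This is a hypothetical Python sketch; the field names and the exact character rule are assumptions, not part of the experiment’s Excel template.

```python
import re
from dataclasses import dataclass, field

# Scale labels from the instructions, plus the "Uncertain" option (case-insensitive).
ALLOWED_VALUES = {"1-low", "2-medium low", "3-medium", "4-medium high", "5-high", "uncertain"}


@dataclass
class Row:
    """One row of the annotation table; field names are hypothetical."""
    word: str
    ot_value: str
    questions: set[int] = field(default_factory=set)  # questions (1-4) answered "yes"
    note: str = ""

    def problems(self) -> list[str]:
        issues = []
        if not self.questions:
            issues.append("no question answered yes: the word should not be listed")
        elif not self.questions <= {1, 2, 3, 4}:
            issues.append("questions must be drawn from 1-4")
        if self.ot_value.lower() not in ALLOWED_VALUES:
            issues.append("unknown OT-Value")
        # Letters, digits, spaces, hyphens and apostrophes only (no commas, "&", "...").
        if re.search(r"[^\w\s'-]", self.word):
            issues.append("special characters in Word column")
        return issues


print(Row("freedom of expression", "5-high", {3, 4}).problems())
# []
print(Row("rights & freedoms", "4-Medium high", {3}).problems())
# ['special characters in Word column']
```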

Allocated articles (skip recitals!)

See Table 10.

4.1 End goal

Go through your allocated articles and statutes and fill out the table in the provided Excel file. Do not complete the table in this file; it is only provided as an example (see Table 11):

Appendix 3

5.1 List of open-textured terms in step 1, round 5

Ability, activity, adequate, adequate level of protection, adequate time and facilities, appropriate, appropriate safeguards, automated means, available technology, clear and plain language, commonly used, communications, complexity, confidential information, confidentiality, consent, democratic principles, democratic society, direct marketing purposes, disproportionate, due account, duly justified, easily accessible, effective mechanisms, effectiveness, electronic means, emergency, essential interests, exceptional circumstances, expert knowledge, fair, fairly, filing system, free, free movement, fundamental freedoms, fundamental rights, fundamental rights and freedoms, high level, high risk, home, human dignity, human rights, impartially, independence, independent, inhuman, insufficiently, just satisfaction, justify, lawfully, legitimate interest, legitimate interests, liberty, likelihood, likely, limiting, maladministration, necessary, necessary facilities, normal, operation, partly, period, periodic, periodic review, periodically, personal data, prejudice, professional qualities, professional secrecy, promptly, properly, proportionate, protection, public health, public interest, public security, reasonable, reasonable doubts, reasonable fee, reasonable time, reasons of public interest, relevant, relevant and reasoned objection, right, rights and freedoms, satisfaction, serious, severity, significantly, social protection, speedily, substantial public interest, substantially, sufficient, suitable, technically feasible, threats, transparent procedure, undertakings, undue delay, unjustified, unlikely, vital interests, without delay.

5.2 List of open-textured terms step 4, round 2

A limited number, ability, absolutely necessary, accuracy, accurate, activity, acts of war, additional, additional information, adequate, adequate enforcement powers, adequate level of protection, adequate time and facilities, adverse, adverse legal effect, adversely, adversely affect, agreement, all relevant developments, amount, annual period, any, any action taken, any appropriate means, any available information, any communication made, any damage, applicable safeguards, appropriate level of expertise, appropriate means, appropriate, appropriate level, appropriate level of expertise, appropriate means, appropriate measure, appropriate measures, appropriate procedural safeguards, appropriate safeguards, appropriate security, appropriate technical and organisational measures, appropriate time limits, appropriate training, approximate, archiving purposes, armed forces, as far as possible, as far as practicable, at any time, authorisations, automated means, availability, available, available technology, awareness, awareness-raising, behaviour, check, civilised nations, clear, clear and plain language, clear distinction, clearly, clearly distinguishable, code of conduct, commonly used, communications, compelling legitimate grounds, compensation, competent, complexity, complexity of the subject matter, comprehensive rules, concise, concise transparent intelligible and easily accessible form, confidential information, confidentiality, confirmation, conflicts of interest, consent, consistent, consult, consumer protection, criminal offences, cultural or social identity, damage, decent existence, degrading, degree of accuracy, completeness and reliability, democratic principles, democratic society, derogating, destruction of any of the rights and freedoms, dignity, direct, direct marketing purposes, disclosed, disproportionate, disproportionate effort, dissuasive, due account, due respect, duly justified, duration, during, easily, easily accessible, economic or financial 
interest, effective, effective compensation, effective conduct, effective data subject rights, effective exercise of this right, effective functioning, effective judicial remedy, effective mechanisms, effective redress, effective remedy, effective rights, effectiveness, effects, electronic means, emergency, encourage, enforceable data subject rights, environmental protection, equal, equally effective, equivalent, essence of the fundamental rights and freedoms, essential interests, ethics, everyone, exceptional, exceptional circumstances, excessive, exchange, exigencies, experience, expert knowledge, expertise, explanation, explicitly brought to the attention, external influence, factual or legal reasons, fair, fair and just, fair and transparent, fair compensation, fairly, family, feasible, filing system, financial, fixed period of time, for one or more, found a family, free, free ballot, free movement, freedom, freedom of expression, freedom of expression and information, freedoms, freely, freely given, friendly settlement, friendly settlements, fully informed, fundamental freedoms, fundamental interests, fundamental rights, fundamental rights and freedoms, further information, gainful, general, general principles, general principles recognized by the community of nations, generally, genuinely, good administration, good time, greater extent, grounds of public interest, guilty, high level, high moral character, high risk, high risk to the rights and freedoms, high standards of quality, high standards of safety, hindrance, home, human dignity, human rights, human rights and fundamental freedoms, identifiable, immediate and serious threat, immediately, impact, impartially, important reasons, improvement of quality, in a timely manner, in good time, in private, in public, in relation, in the course, inaccurate, incompatible, independence, independent, indirect, indirectly, individual's intervention, informed, inhuman, insufficiently, integrity, international cooperation, interpretation, interpretation of the convention, investigations, joint operations, journalistic purposes, judicial independence, judicial remedy, just satisfaction, justify, knowledge, large scale, lawful, lawful and fair, lawfulness, lawfully, legal economic and social protection, legal questions, legitimate, legitimate aim, legitimate basis, legitimate interest, legitimate interests, legitimate purposes, liberty, likelihood, likely, limited, limited storage periods, limiting, machine-readable format, making available, maladministration, manifestly, manifestly ill-founded, manifestly made public, manifestly unfounded or excessive character, manner, marriageable age, matter, measures, media, monitored, monitoring, month, more favourable, national security, nature, necessary, necessary and genuinely, necessary and proportionate measure, necessary and proportionate measure in a democratic society, necessary facilities, necessary for the general interest, necessity, negligent character, new technologies, normal, not excessive, obligations, obstructing official or legal inquiries, old age, one month, ongoing basis, operation, opinion, other, other means, other opinion, part, partly, period, periodic, periodic review, periodically, periods, personal data, physical mental moral or social development, pluralism, poverty, precisely, precisely specific, prejudice, prejudicial, prejudicing, presumed, principle of subsidiarity, principle of sustainable development, principles, prior, prior to, private, private life, professional qualities, professional secrecy, prohibited, promptly, proper, proper administration of justice, proper application, proper assessment, properly, proportionality, proportionate, protection, public emergency, public health, public interest, public security, publicly, purely, purely household, purely personal, qualifications, quality, reasonable, reasonable doubts, reasonable efforts, reasonable fee, reasonable period, reasonable steps, reasonable suspicion, reasonable time, reasonably, reasoned, reasons of important public interest, reasons of public interest, regular, regularly, relate, related, related safeguards, relevant, relevant and reasoned objection, relevant information, relevant legislation, reservations of a general character, resilience, respect, respect for human rights, restricted, right, right of establishment, right to social and housing assistance, rights, rights and freedoms, rights and legitimate interests, rights and respective duties of data subjects, risks of varying likelihood and severity, rule of law, rules, safeguards, same, satisfaction, scientific or historical research, secrecy, secret ballot, secure, security, serious, serious grounds, serious misconduct, serious risk, seriously impair, services of general economic interest, several, severity, sex, significant, significant disadvantage, significantly, significantly affects, skills, social advantages, social origin, social protection, social security, society, special, specific criteria, specific features, specific purposes, specified purposes, speedily, state, statistical purposes, strictly necessary, strictly required, structured, substantial public interest, substantially, sufficient, sufficient resources, sufficient safeguards, suitable, suitable guarantees, suitable measures, technical, technical specifications, technically feasible, the essence of the fundamental rights, the life of the nation, their opinion, threatening, threats, threats to public security, timely, timely manner, to the extent necessary, transparent, transparent manner, transparent procedure, unambiguous, undertakings, undue, undue delay, unique, unique identification, unique information, unjustified, unlawful, unlikely, unlikely to result in a risk, urgent, urgent need, urgent need to act, urgently, use, utmost, utmost account, varying likelihood, virtue, vital interests, well-established, where appropriate, where relevant, within, within a reasonable time, without delay, without interference, without undue delay, without undue further delay, years, young.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guitton, C., Tamò-Larrieux, A., Mayer, S. et al. The challenge of open-texture in law. Artif Intell Law (2024). https://doi.org/10.1007/s10506-024-09390-1

DOI: https://doi.org/10.1007/s10506-024-09390-1