Abstract

In this paper, we address the task of facial aesthetics enhancement (FAE). Existing methods have made great progress, however, beautified images generated by existing methods are extremely prone to over-beautification, which limits the application of existing aesthetic enhancement methods in real scenes. To solve this problem, we propose a new method called aesthetic enhanced perception generative adversarial network (AEP-GAN). We builds three blocks to complete facial beautification guided by facial aesthetic landmarks: an aesthetic deformation perception block (ADP), an aesthetic synthesis and removal block (ASR), and a dual-agent aesthetic identification block (DAI). The ADP learns the implicit aesthetic transformation between the landmarks of the source image and enhanced image. ASR ensures the consistency of image identity before and after beautification. The DAI distinguishes between the source images and generated images. At the same time, to prevent over-beautification, we constructed a real-world facial wedding photography dataset to enable the model to learn human aesthetics. To evaluate the effectiveness of the AEP-GAN, this paper adopted the wedding photography dataset for training, the SCUT-FBP5500 dataset, and the high-resolution Asian face dataset for testing. Experiments showed that the AEP-GAN addresses the over-beautification problem and achieves excellent results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Facial aesthetic enhancement (or facial beautification) is a subtheme derived from the study of facial aesthetics [1], which aims to rearrange the facial geometric structure and modify the facial texture to maximize the similarity with the original face while improving the aesthetics. This task has broad demand in many real scenes [2, 3]. Examples include medical cosmetic surgery, journalism and advertising, and wedding photography.

Early research on facial aesthetic enhancement (FAE) was mainly divided into two aspects: facial geometric deformation and appearance beautification. The main idea of facial geometric deformation is that the faces of celebrities are widely recognized and attractive. Therefore, many researchers have built aesthetic prototype datasets consisting of stars or models and adjusted the geometric structure, texture and color information of the face using machine learning and image processing technology to make the facial features closer to the features of the aesthetic prototype datasets [4,5,6]. Furthermore, some researchers have changed the geometric structure of the face according to classical aesthetic theory [7,8,9]. The latest method is to use an unsupervised learning method to conduct subtle deformation of the face [10]. Facial appearance beautification mainly uses interactive methods to process facial defects or skin color using image processing technology [11,12,13].

Although the above methods can achieve good beautification effects, they are still separate beautification methods that do not combine geometric deformation and appearance. Moreover, the beautification mode depends on the artificial prototype datasets and does not take into account the differences in individual faces to carry out different degrees of beautification.

With the development of deep learning, some researchers have also explored and researched facial beautification using GANs [14,15,16,17,18].

In contrast to traditional facial beautification methods, deep learning methods can achieve end-to-end beautification and simultaneously beautify the geometry and appearance, with a better beautification effect. However, the deep learning method is more focused on facial editing, and the maintenance of identity attributes and the lack of paired beautification datasets for learning must be solved. In addition, the above deep learning method easily overbeautifies, that is, leads to excessive face thinning, whitening and peeling, and changes the background content of the picture, resulting in a huge difference between the face and background of the beautified image and the original image.

Although FAE has been applied in many studies, most are aimed at people in Europe and America. With the rise of social media culture and cosmetic surgery in Asia, there is an urgent need for facial enhancement technology for Asian people. However, the facial features of people in Asia are not as deep as those in Europe and the United States, and the relative distance between the facial features is larger [19]. Therefore, when applying the current FAE research results to the faces of people in Asia, the results are not necessarily applicable. At present, the existing commercial tools can beautify Asian people, but most beautify the face by removing dark and smooth skin or by using interactive actions according to the user’s preferences, which may cause excessive beautification and cannot automatically generate images that meet the user’s beautification needs [20, 21].

In this paper, we focus on the application needs in the real world to solve the problems of facial over-beautification and identity consistency. Inspired by Cyclegan [22], we propose a new method called the aesthetic enhanced perception generative adversarial network (AEP-GAN).

Specifically, we designed an aesthetic deformation perception block (ADP), aesthetics synthesis and removal block (ASR), dual-agent aesthetic identification block (DAI), and FBP block. The four blocks are combined to perform facial aesthetic enhancement through deformation feature guidance and aesthetic evaluation assistance judgment.

ADP maps the source facial landmarks to the representations of facial geometric features and learns the implicit aesthetic transformation between the landmarks of the source image and augmented image. To ensure aesthetic and identity information, the presentation features are decomposed via dimensionality reduction, and the reconstruction is then optimized to match the enhanced facial geometric features, thereby achieving aesthetic enhancement of the source facial geometric features.

ASR is a bidirectional recurrent network constructed with landmarks as aesthetic guidance that aims to generate and restore aesthetic facial images from landmarks and source facial images to ensure identity consistency. In other words, landmarks and bidirectional recurrent networks play different roles in the face editing process: controlling aesthetic synthesis and maintaining identity consistency. During aesthetic synthesis, both are used to specify the target aesthetics so that aesthetic synthesis can transform the source face into the desired beautified face. In the aesthetic removal process, both are responsible for indicating the enhanced face state, which facilitates the restoration of the source image.

DAI is a pair of discriminators related to ASR to differentiate between source and generated images, enabling ASR to generate high-quality aesthetic images.

FBP is primarily used to perform aesthetic auxiliary judgment on the generated image and then optimize the enhanced image.

The AEP-GAN method presented in combination with the above modules can not only maintain the identity attribute but also automatically beautify the face to avoid over-beautification. Its characteristics are as follows: (1) learn aesthetics to enhance knowledge, (2) automatically perform aesthetic enhancement and maintain identity consistency, (3) use facial beauty prediction (FBP) to assist aesthetic judgment, and (4) avoid excessive beautification. The facial aesthetic enhancement results of AEP-GAN are shown in Fig. 1.

Facial aesthetic enhancement results of AEP-GAN on the SCUT-FBP5500 dataset. The first column shows the source images, the second column shows the beautified images corresponding to the first column, the third column shows the source images, and the fourth column shows the beautified images corresponding to the third column

In general, compared with previous research methods, the innovation points of this paper are as follows:

First, AEP-GAN skillfully combines ADP, ASR, DAI and FBP to achieve facial beautification according to the characteristics of each module. Specifically, the ADP module is used to generate enhanced facial landmarks as a guide for aesthetic deformation. Utilizing the characteristics of ASR (a two-way recursive network guided by landmarks) to generate and restore beautiful facial images from enhanced landmarks and source facial images to achieve end-to-end automatic facial enhancement and ensure identity consistency. In addition, DAI and FBP could ensure that the generated aesthetic images are higher quality and more aesthetic.

Second, the self-built real scene matching dataset can be used for supervision training to learn aesthetic enhancement knowledge and prevent over-beautification.

Third, AEP-GAN can independently learn each individual’s facial aesthetic knowledge using the wedding photography dataset and ensure that the enhanced image conforms to aesthetics.

This method can be applied to automatic image beautification of social network media and can provide a reference for users and doctors in cosmetic surgery.

To evaluate the effectiveness of our method, we constructed a dataset of real wedding photography scenes containing 2147 pairs of face images (e.g., male/female, Asian) for AEP-GAN training, collected the SCUT-FBP5500 dataset [23] and constructed a high-resolution Asian face dataset for testing. The SCUT-FBP5500 dataset contains 4000 Asian face images (female/male). The high-resolution Asian face dataset contains 4000 high-resolution Asian face images of nonreal identities generated based on StyleGAN. Extensive results on the two datasets demonstrated the superiority of the proposed AEP-GAN.

The contributions of this paper are as follows:

-

We constructed a real-world Asian face wedding photography dataset, including unaugmented and augmented face images, to address the aesthetic overfitting problem.

-

We combined facial aesthetics enhancement and evaluation to construct an automatic facial aesthetic enhancement framework based on aesthetic auxiliary judgments.

-

We built the ADP to learn the implicit aesthetic transformation between the source facial landmarks and the enhanced facial landmarks.

-

We built a bidirectional recurrent network to synthesize and eliminate beautification while maintaining identity consistency.

-

AEP-GAN can enhance facial aesthetics automatically, which has practical application significance in medical aesthetics and plastic surgery scenarios.

The remainder of this paper is organized as follows. Section 2 reviews related work on the FAE, and Section 3 presents the details of the architecture of the AEP-GAN. Section 4 presents the wedding photography scene dataset, SCUT-FBP dataset, high-resolution Asian face dataset, and the implementation details. Section 5 provides the results obtained on the three datasets, together with a discussion. Finally, we conclude the paper in Section 6.

2 Related work

2.1 Traditional facial image enhancement

Generally, the methods of facial beautification can be divided into two categories: geometry-based and appearance-based. Geometry-based methods focus on adjusting the geometric shape of the face; appearance-based methods remove facial defects, such as spots and wrinkles [11, 24], correct skin color [12] and facial makeup [25, 26], and enhance facial color contrast [13].

2.1.1 Geometry-based

Geometry-based methods adjust the original face shape to make it closer to that of a more attractive face [4,5,6,7, 27].

Early studies are represented by [4]. Tommer et al. [4] trained an automatic facial attractiveness rating system, extracted a set of distances between facial feature positions, mapped them to a point in the high-dimensional ”face space”, and searched for the near points with high attractiveness ratings in the face space and mapped the original facial features to their adjusted positions using 2D distortion fields. This method can improve the attractiveness of most faces. However, this method is limited by the facial attractiveness rating system, and the performance of the facial attractiveness rating system directly affects the specific adjustment position of the face.

Most later studies are based on using the established aesthetic prototype dataset to adjust the facial geometry. For example: Melacci et al. [27] designed a system that can automatically enhance male and female frontal facial images using a celebrity database as a beautification reference. Given the input image, its landmarks are compared to those of the known celebrity face template and adjusted to the nearest template through 2D image distortion. This method can make most faces more attractive. However, the enhancement mode depends on the celebrity face template and the diversity of celebrity face template data determines the beautification result.

Seo et al. [5] proposed a beautification method of automatic face reduction based on fast B-spline approximation. The author uses the active appearance model to automatically extract the landmarks on the face contour, transforms these boundary landmarks into corresponding target points, and then reduces the face shape based on the B-spline approximation. This method can quickly reduce face shape and improve face attractiveness. However, this method considers only the influence of face shape on facial attractiveness and does not consider the influence of other facial features (such as eyes and eyebrows). At the same time, excessive reduction of face shape will cause over-beautification.

Sun et al. [6] proposed a quantitative method to beautify the face: a group of beautiful face prototypes are used as the clustering center of beautiful faces while learning a beauty decision function as an aesthetic classifier. Then, the nearest beautiful prototype can be found based on the original face, using facial deformation to make the original face close to the prototype until the beauty decision function determines that the beautified face is beautiful. In this way, the face is beautified, and the difference between the beautified face and the original face is minimized. However, this method is limited by the selection of beautiful face prototypes. The beautification degree and beautification part of different faces are different.

In addition to beautifying faces by learning aesthetic prototypes, some classic aesthetic theories have been applied to enhancement technology. Roy et al. [7] modified facial features based on the neoclassical beauty theorem and the golden ratio. This method can significantly beautify the face. However, this method does not consider the consistency of facial identity after beautification. Inspired by the Galton experiment [28], the idea of the ”average face is very attractive” is seen as another way to improve facial attractiveness. By using image deformation and warping technology, the face can be averaged and synthesized to generate a beautified version [8, 9]. However, the average face is always generated by deforming different faces. Although the final beautified face may be more attractive, it may not be similar to any part of the original face. This method violates the constraint of keeping the beautified face similar to the original face while enhancing the beauty, so this paper will not describe this method in detail.

At present, the most advanced method based on geometry is [10]. Hu et al. [10] proposed an unsupervised beautification method in the operator space of faces, where an input face is iteratively pulled toward a local neighboring density mode with improved attractiveness. This method improves the facial shape attractiveness for a large range of poses and expressions. Although this framework is a state-of-the-art method, it makes small modifications to the shape of a given face and cannot adaptively adjust the modification intensity for facial images.

2.1.2 Appearance-based

Appearance-based methods generally modify facial texture and color through image processing technology. Arakawa et al. [11] proposed an image processing system that uses interactive computing to beautify face images. The author proposes a nonlinear digital filter bank system that removes unwanted skin components, such as wrinkles and spots, from the face image to make the face look more beautiful. The system is very effective, but many parameters must be adjusted manually.

Guo et al. [25] proposed a method to migrate facial makeup on a facial image based on an image as a style example. The author first divides the two images into three layers: facial structure layer, skin detail layer and color layer. Then, the author transfers information from each layer of one image to the corresponding layer of another image. The main advantage of this method is that only one sample image is needed. This makes facial makeup very convenient and practical. However, this method is only applicable for the makeup transfer of the front portrait.

Hara et al. [12] proposed a facial beautification method for video communication. This method detects the distribution of skin color and brightness of the face and transforms the color distribution of the whole image through histogram transformation. This method can beautify the color of the face and achieve the whitening effect, but it does not include the effect of peeling and removing defects.

Lee et al. [24] proposed a method to beautify portraits through facial smoothing. This method is based on the existing face detection and feature alignment algorithms. First, the face and neck regions to be smoothed are automatically segmented. The smoothing filter is then applied to these areas to beautify them. This method can improve specific parts of the skin; however, this method has no whitening effect.

Liu et al. [26] proposed a fully automatic system for hair and facial makeup recommendation and synthesis. The author proposes a multitree structure super graph model to explore the complex relationship between high-level beauty attributes, intermediate beauty attributes and low-level beauty attributes, and then based on this model, the most compatible aesthetic attributes of a given facial image can be effectively deduced. In addition, the author designed an effective facial image synthesis module to seamlessly integrate the recommended hairstyle and makeup into the facial image. This method can not only recommend the most suitable hair style and makeup but also show the synthetic effect. However, this method produces partial distortion in the uncovered area after the hair style and makeup migrate.

Ohchi et al. [13] proposed a nonlinear image processing system that uses contrast enhancement technology to beautify face images. Based on human subjective standards and tastes, the system uses interactive evolutionary computing (IEC) for optimization design. The purpose of facial beautification is achieved by enhancing the contrast of the basic components of the facial image extracted by the nonlinear filter bank. The system can remove unwanted skin characteristics, such as wrinkles and spots, and make the skin look smooth and beautiful. However, some parameters of the system contrast must be adjusted manually.

In general, based on traditional facial beautification methods, image processing technology is used to adjust the geometric structure of the face, and texture and color information achieve a better beautification effect. Nevertheless, facial deformation depends on the aesthetic prototype dataset and does not take into account the differences in individual faces to beautify to different degrees. Furthermore, most facial whitening and skin-polishing operations smooth or enhance the contrast of the facial region without taking into account the problem of excessive smoothing and whitening.

2.2 Deep learning facial image enhancement

In recent years, with the rapid development of deep learning, the field of images has received extensive attention [29,30,31]. Zhang et al. [29] proposed an end-to-end deep residual convolutional dehazing network based on convolutional neural networks for single image dehazing. This framework is used to dehaze images using the two subnetworks to restore clear images and optimize images. Si et al. [30] proposed a hybrid contrastive model, which uses the idea of contrastive learning to perform identity-level contrastive learning and image-level contrastive learning. Tang et al. [31] proposed a novel image fusion method that uses a dynamic converter module designed to obtain local features and important context information to comprehensively maintain the thermal radiation information from infrared images and scene details from visible images.

Moreover, image generation technology based on GANs [32] has also been extensively studied, such as WGAN [33], WGAN-GP [34], and PGGAN [35], to realize more diverse and novel extended GANs, such as StyleGAN [36] and StyleGAN2 [37], automatic and unsupervised separation of high-level facial attributes and random variations and generation of highly realist images, network-mapped edge mapping to color images [38], and image-to-image translation [22, 39, 40].

Compared with traditional facial beautification methods, facial beautification using deep learning technology has received less research attention [10]. The main reason is that the labor cost of collecting paired data before and after beautification is high. Therefore, at present, more attention is given to makeup transfer. However, due to the difference between makeup transfer and facial beautification, this article only briefly introduces this content.

2.2.1 Makeup transfer

Makeup transfer is used to transfer the facial makeup of a reference image to a source image using deep learning technology while maintaining the original facial features of the source image. BeautyGAN [41] and BeautyGlow [42] are two classic deep learning makeup transfer frameworks.

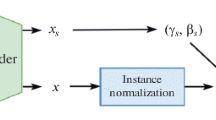

BeautyGAN [41] uses one encoder to extract the identity information of the character and the other encoder to extract the makeup information of the character. The two types of information are fused and then input into the decoder to generate the makeup migration result. Additionally, three kinds of loss are applied to constrain the generated image.

BeautyGlow [42] designed a glow model that can obtain the late vectors of the source image and the reference image. Then, the late vectors are decomposed into facial features and makeup features. Then, the makeup feature of the reference image and the false feature of the source image are added to obtain the hidden space of the target image with makeup. Finally, the glow model is inversely transformed into the RGB target image with makeup.

2.2.2 Facial beautification

Although there are few studies on facial beautification based on deep learning technology, some researchers have explored facial beautification using GANs or GAN variants. Diamant et al. [14] introduced Beholder-GAN, which builds upon previous work on GAN learning from low- to high-resolution images and Conditional GAN (CGAN) [43] for generating image conditioning based on certain attributes, class labels, and feature vectors. Beholder-GAN uses a variant of PG-GAN conditioned on beauty scores to generate realistic facial images. Beholder-GAN elucidates the biology and some deep-rooted rationale for ensuring the consistency of high beauty ratings across race, social class, gender, and age. In the results of Beholder-GAN, the higher the beauty score, the more likely a person is to tend toward femininity, rejuvenation, and whitening. However, Beholder-GAN does not take into account the consistency of facial identity attributes after beautification and before beautification and is prone to over-beautification.

Liu et al. [15] proposed a method that combines style-based beauty expression and beauty scores for facial beautification. This method is based on the GAN architecture, which can transform the face into a beautiful image with a reference facial style and facial score. However, this method modifies image information in addition to the face, and the beautification result is more inclined to makeup migration than geometric deformation, whitening and peeling.

Huang et al. [16] proposed a framework consisting of CGAN, FBP, and a face recognition network (FRN) for identity-preserving facial beauty transformation. The trained generator converts the aesthetic scale of the input image to the target scale indicated by the input condition of the real-valued aesthetic score. To train such a framework, researchers introduced conditional instance normalization and self-consistent loss. The framework adjusts only for skin texture and brightness and not for facial geometry.

Zhou et al. [17] proposed an enhanced version of Beholder-GAN, that is, taking a portrait image as the input, using the gradient descent method to generate the corresponding hidden vector, using the hidden vector to synthesize an image similar to the input image, and then using the beauty semantic editing processing ability of InterFaceGAN to beautify the face image. However, as their paper shows (and explains), this enhanced version addresses the weakness of identity protection only to some extent. However, the beautified image generated by this method modifies the background content to a certain extent, and there is still an excessive beautification problem.

He et al. [18] proposed a framework that beautifies the geometry and appearance of the face simultaneously. The frame designs two branches of geometric deformation and appearance beautification, which beautify the face. Although this method can enhance the geometry and appearance of the face at the same time, it depends on an aesthetic prototype dataset. In terms of facial geometry, the face is transformed into small faces and large eyes, and in terms of appearance, the face is transformed into clean cheeks, red lips and black eyes. It does not take into account the differences between the face parts to beautify different degrees. Furthermore, this method is limited to strict frontal images.

Since the [18] has not been published and no pretraining model has been provided, the experimental results in this paper do not compare this method.

In general, compared with the traditional facial beautification method, the deep learning method can achieve end-to-end beautification and simultaneously beautify the geometry and appearance, but some problems remain, such as the maintenance of identity attributes, over-beautification and the lack of paired beautification datasets for learning.

3 Proposed methods

3.1 Task definition

Let S and R denote the set of source images and reference images, and let LS and LR denote the landmarks corresponding to S and R, respectively. s is sampled from S according to the distribution \(\mathcal {P}_{S}\), ls is obtained using the landmarks positioning function ls = L(s), ls ∈ LS. r is sampled from R according to the distribution \(\mathcal {P}_{R}\), and lr is obtained using lr = L(r), lr ∈ LR.

The AEP-GAN learns a facial aesthetic enhancement function \(\widetilde {s}=G_{e}(s, ADP(l_{s}))\), where ADP(⋅) indicates the ADP module and the enhancement image \(\widetilde {s}\) is as close to r as possible. In addition, it learns a removal function \(\widetilde {r}=G_{r}(r, ADP(l_{s}))\), which removes beauty from r to recover the debeauty image s.

3.2 Framework

3.2.1 Overall

The overall framework of AEP-GAN is shown in Fig. 2. The AEP-GAN combines ADP, ASR, DAI and FBP to address the facial aesthetic enhancement task. ADP learns the implicit aesthetic transformation between the original facial landmarks ls and the enhanced facial landmarks lr, that is, uses the source image landmark ls as input to map to enhanced image landmark lr. The ASR takes lr as the aesthetic guide to generate beauty image and remove the aesthetic feeling of the enhanced image. In addition, DAI and FBP ensure that the generated aesthetic images are higher quality and more attractive.

3.2.2 Aesthetic Deformation Perception block (ADP)

ADP was used to learn the mapping from ls to lr, as shown in Fig. 3. According to [10], we used ls of the source image to perform matrix transformation to extract the facial feature representation fs and obtain the landmarks lr of the enhanced image by performing dimensionality reduction and mapping.

Framework of the aesthetic deformation perception block: ls denotes the landmarks of the source image, fs represents the features of the source image, \(\mathcal {V}\) denotes the principal components in descending order of the associated variance, λ denotes the projection component coefficient, fr is the enhanced facial features, lr denotes the landmarks of the enhanced image

Specifically, fs is expressed as follows [10].

where T is the matrix transpose operator, \(P_{s}=(l_{s\_x},l_{s\_y},1)\) denotes the homogeneous coordinates.

To illustrate that feature representation is related only to facial geometry, we visualized the feature representations for different faces in Fig. 4.

Visualization of operator representation of facial landmarks: two different faces and one face in a different position; the rendered images of the three corresponding operator representations, with white, red, and black representing low, medium, and high values, respectively; the black lines separate different facial parts for clearer visualization. These parts include the jawline (JL), left eyebrow (LB), right eyebrow (RB), nose bridge (NB), nostril (NO), left eye (LE), right eye (RE), outer lip (OL), and inner lip (IL); (c) difference of the operator representations, for contrast, pixel values less than 10− 15 are set to 0

Figure 4 shows that the face feature representations of different face geometries are different, and for the same face geometry, the change in face position hardly affects the face feature representation (the maximum difference is on the order of 10− 16 ). Facial feature representations are geometrically unique, with an order of magnitude greater than 10− 15.

To preserve the major features of the face, we used principal component analysis (PCA) to reduce the dimensionality of fs by preserving 95% of the dataset variance, as shown in the following formula:

where \(\overline {f}_{s}\) is the mean of fs, \(\mathcal {V}_{k}\) is the principal components in descending order of the associated variance, λk denotes the projection coefficient of fs on the components, and K is the number of components that preserve 95% of variance.

Further, we mapped λk through a nonlinear function FC(⋅) and learned the enhanced facial feature representation fr, namely:

where FC(⋅) denotes the fully connected layer.

The enhanced image landmarks lr is the projection of the source landmarks onto the new optimized operator:

3.2.3 Aesthetics synthesis and removal module (ASR)

ASR is a bidirectional recurrent network that consists of a pair of generators Ge, Gr, as shown in Fig. 2. ASR introduces facial landmarks through the ADP module and guides facial deformation through dense U-Net connections. Simultaneously, a bidirectional structure is adopted to obtain the original image by decoupling the facial landmarks and the beautified image, thereby ensuring identity consistency. The generator structure is illustrated in Fig. 5.

3.2.4 Dual-agent Aesthetic Identification module (DAI)

Corresponding to the two generators Ge, Gr is a pair of discriminators De and Dr, which distinguish real images and generate images. De and Dr act as supervisors to narrow the gap between the distributions of the real and synthetic faces, as shown in Fig. 2.

3.3 Objective function

3.3.1 Adversarial loss

Generators and discriminators were trained alternatively toward adversarial goals, following the pioneering work of [32]. The adversarial losses for the generators and discriminators are given by Eq. (5) and Eq. (6), respectively.

3.3.2 Cycle consistency loss

Generators Ge and Gr construct a complete mapping cycle between the source and enhancement images. If we convert a face image from the source to beauty and then convert it back to the source, we should obtain the same face image under ideal circumstances. According to [16], we introduce the cyclic consistency loss \(L_{G\_cyc}\) to ensure consistency between the source and reconstructed images. \(L_{G\_cyc}\) is formulated as

3.3.3 Perceptual loss

The basic principle of facial aesthetic enhancement is that facial identity should be retained after aesthetic enhancement and deletion. Therefore, we utilized a VGG-16 model pretrained on ImageNet to compare the activations of the source images and generated images in the hidden layer. The perceptual loss \(L_{G\_perc}\) is given by

where Fl(⋅) denotes the output of the l-th layer of the pretrained VGG-16 model.

3.3.4 Beauty score loss

To constrain the aesthetic evaluation of the generated face to be consistent with the target aesthetic evaluation, we introduced the beauty score loss \(L_{G\_beauty}\):

where FBP(⋅) denotes a pretrained facial beauty prediction model. With \(L_{G\_beauty}\), the AEP-GAN can obtain the necessary information related to beauty from the FBP to generate faces that satisfy the beauty scoring requirements.

3.3.5 Total loss

The losses LG and LD for the generator and discriminator in our approach can be expressed as

where λadv, λcyc, λperc, and λbeauty are the weights used to balance the multiple objectives.

4 Experimental setting

4.1 Datasets

One newly collected dataset, one public dataset, and the constructed high-resolution Asian face dataset were used in our experiments. The wedding photography dataset is a newly created dataset of Asian facial wedding photography in real scenes, which contains 2147 pairs of facial images (e.g., without background, unenhanced/enhanced, male/female, Asian). The unenhanced images were all taken in real scenes, and the enhanced images were repeatedly modified by technicians with professional aesthetic knowledge according to the aesthetics of most people. This subset is illustrated in Fig. 6. This dataset can be used to study the aesthetics of Asian people. SCUT-FBP5500 [23] is a benchmark dataset that contains 5500 frontal face images. Most of the images were taken from the internet. The SCUT-FBP5500 subset is illustrated in Fig. 7. The high-resolution Asian face dataset contains 4000 high-resolution images of nonreal identities generated based on Stylegan [36]. This subset is shown in Fig. 8.

4.2 Implementation details

To learn Asian aesthetics, we used the wedding photography dataset to train AEP-GAN. To further demonstrate that our AEP-GAN can perform automatic aesthetic enhancement, our network was tested on the SCUT-FBP5500 dataset, which contains only Asian faces, and a high-resolution Asian face dataset. When computing the perceptual loss, we used the pool 3 feature layer of VGG-16. We trained the network for 100 epochs using the Adam optimizer [14] with a learning rate of 0.0002 and a batch size of 5. For DAI, we used an architecture similar to that of the patch discriminator in [38].

Considering that \(L_{G\_cyc}\) and \(L_{G\_perc}\) are more important than \(L_{G\_adv}\) and \(L_{G\_beauty}\), this paper sets λadv and λbeauty as 1 by default, sets different λcyc and λperc, and selects the best λcyc and λperc based on PSNR, SSIM, and FID, as shown in Fig. 9.

Figure 9 shows that when λcyc and λperc are 5, the PSNR, SSIM, and FID of image generation are the best, so this paper sets the λadv and λbeauty to 1, λcyc and λperc to 5.

5 Results and discussion

5.1 Ablation experiment

Because the training dataset contains face images without backgrounds, to ensure the consistency of the dataset, the ablation experiment in this study uses background-free face images for verification.

5.1.1 Aesthetic Deformation Perception Block

In AEP-GAN, ADP is designed to learn the implicit aesthetic transformation between the landmarks of the original and enhanced images. The concept of ADP is based on [10]. However, it is necessary to note that [10] must provide parameters manually for deformation, whereas ADP is applied in an automated manner. Moreover, in the beautification process of [10], the whole face changes simultaneously, while ADP can adaptively beautify single or multiple regions to different degrees according to different faces through learning. Furthermore, this study verifies that the same face has the same feature representation in different positions through the ADP module, which solves the problem of overfitting of marker learning caused by different face positions before and after training data beautification. Therefore, the design of ADP is innovative and necessary for AEP-GAN. Figure 10 shows that the landmarks of the original image obtain new landmarks through ADP and the difference between the two.

Figure 10 shows the enhanced landmarks learned from the source landmarks of the four samples using ADP. By comparing the feature representations, it can be seen that Sample 1 involves mainly aesthetic enhancement of eyebrows and eyes, and Sample 2 involves mainly aesthetic enhancement of eyebrows. Sample 3 involves mainly aesthetic enhancement of the eyebrows and jaw, and Sample 4 involves mainly aesthetic enhancement of the eyebrows, jaw, and nose. In summary, ADP can independently determine the parts that need to be enhanced and beautify the aesthetics according to the learned aesthetic experience.

5.1.2 Generator block of ASR

ASR combines the original image and landmarks and uses the U-Net network structure to generate an enhanced image or restore an enhanced image. To ensure that the U-Net can learn the features of landmarks and then deform the image, the landmarks must be connected to the U-Net downsampling layer. To verify that the dense connection method proposed in this study is satisfactory, we conducted ablation experiments on three datasets with different connection methods, as shown in Figs. 11,12, 13, 14, 15 and 16.

Figures 11–16 show that when landmarks and U-Net are combined more deeply, the facial image becomes clearer, and the contours and facial features become softer. Specifically, without using landmarks, the wedding dataset could still obtain a clear facial image after enhancement. This is because the wedding dataset was used for training. As the number of training epochs increased, the network gradually overfit. At this time, the network could not learn the aesthetic transformations for different faces, which led to blurred test results for the SCUT-FBP5500 dataset and high-resolution Asian face dataset. Upon gradually increasing the connection, the network learned more complete aesthetic transformations, and the results were better.

Simultaneously, we also quantitatively evaluated different connections on the test set, as shown in Table 1.

The TIME in Table 1 represents the time spent for one training epoch. Table 1 shows that as the connection deepens, the peak signal-to-noise ratio (PSNR) and structure similarity index measure (SSIM) of the enhanced image increase, and the Fréchet inception distance (FID) decreases, indicating that the quality of the generated image is higher. Furthermore, although the dense connection contains the largest number of parameters (21.07M), compared with the number of parameters of only one connection (21.04M), it only increases the number of parameters by 0.03M. Moreover, the training time is increased by only 2 minutes. Therefore, the dense connection method proposed in this study is optimal in terms of qualitative and quantitative results, as well as parametric results.

5.1.3 Impact of AEP-GAN modules

To further reflect the novelty of the combination of ADP, ASR and DAI, we compare the results of Original–GAN, Original–GAN+ADP, ASR+DAI and AEP–GAN, as shown in Figs. 17, 18, 19 and 20 (FBP is added to all module combinations).

Examples of enhanced female images with combination of different modules on the high-resolution Asian face dataset (background-free): Original-GAN uses U-Net as the generation framework. ADP+Original-GAN means that the original GAN network adds ADP modules, and ASR+DAI means the combination of ASR and DAI

Examples of enhanced male images with combination of different modules on the high-resolution Asian face dataset (background-free): Original-GAN uses U-Net as the generation framework. ADP+Original-GAN means that the original GAN network adds ADP modules, and ASR+DAI means the combination of ASR and DAI

Figures 17–20 show that each combination of modules applies whitening and peeling operations to the image due to the addition of FBP. Compared with that of the original image, the facial geometric structure of the image generated by the Original-GAN has almost no change, and the whole image has been slightly whitened and skinned, because the Original-GAN have not learned how to beautify the face. After adding the ADP module, the effect is significantly improved, because the ADP module can generate facial aesthetic marker points that guide geometric deformation. However, due to the lack of identity attribute constraints, unexpected geometric deformations may occur, such as shown in the third row and third column of Fig. 18. The images generated by ASR+DAI are whitened and peeled and maintain identity consistency, but there are obvious contour shadows around the face, because there is no facial aesthetic marker point generated by the ADP module as a guide for geometric deformation. The above results show that ADP guides geometric deformation and that ASR maintains identity attributes. The results of combining these components to form AEP-GAN show that AEP-GAN skillfully combines ADP, ASR, DAI and FBP to generate enhanced facial geometric landmarks through the ADP module and uses the characteristics of ASR (a two-way recursive network guided by landmarks) to generate and restore beautiful facial images from landmarks and source facial images to achieve end-to-end automatic facial enhancement and ensure identity consistency.

To further illustrate that ASR+DAI can improve the quality of generated images compared with Original-GAN, we performed a quantitative evaluation, as shown in Table 2.

The TIME in Table 2 represents the time spent for a training epoch. The results show that the quality of images generated by ASR+DAI is better than that of Original-GAN. ADP+Original-GAN is better than ASR+DAI because the quality of the facial shadow contour is reduced, but the combination of ADP, ASR and DAI has the best image generation quality. From the perspective of model complexity, the number of parameters and training time of the ADP+Original-GAN module is significantly less than that of ASR+DAI, which is equivalent to that of Original-GAN, indicating that the complexity of the ADP module is the smallest. Although the parameter quantity of AEP-GAN is increased by 10M and the training time is increased by 4 minutes compared with those of Original-GAN, AEP-GAN is better in terms of the combination of the effect and quality of beautifying images. Compared with ADP+Original-GAN, AEP-GAN has more parameters (difference: 10M) and a longer training time (difference: 3 minutes), but PSNR, SSIM and FID are better. Therefore, AEP-GAN is the best in terms of the evaluation indicators and model complexity.

5.2 Results

To evaluate the generated images qualitatively and quantitatively, this study validates the wedding dataset (train), SCUT-FBP5500 dataset (test), and high-resolution Asian face dataset (test). The enhanced results of the wedding dataset (background-free), SCUT-FBP5500 dataset (background-free), and high-resolution Asian face dataset (background-free) are shown in Figs. 21, 22, 23, 24, 25 and 26.

Figures 21–26 show that the augmented image is more aesthetically pleasing than the original image while preserving information and identity consistency other than the face. Specifically, for women, compared with those of the original image, the color saturation and contrast are higher, and there are obvious brightening, smoothing, and face-lifting effects; the contours and facial features of the beautified image are softer. For men, most facial contours and features are slightly altered, enhancing color saturation and contrast. Furthermore, the AEP-GAN can beautify the modified face map twice. For example, in Fig. 23, the red circle-marked image represents an artificially retouched image. Compared to this type of image, the color and lines of the beautified image are more in line with human aesthetics.

To further verify the beautification performance of AEP-GAN, this study describes the test datasets (with background), as shown in Figs. 27, 28, 29 and 30.

Figures 27–30 show that AEP-GAN performs whitening and deformation beautification operations for images with backgrounds and can achieve similar beautification effects as images without backgrounds. Therefore, AEP-GAN is suitable for both background-free and background facial image beautification. Although the whitening operation of AEP-GAN affects the color of the background, it does not change the content.

Furthermore, this study verifies whether Asians’ aesthetic evaluation of beautified images is improved. Specifically, for comparison, this study collected aesthetic evaluation scores from 20 people in Asia. However, owing to the high cost of artificial aesthetic scoring, this study validated only the SCUT-FBP5500, as shown in Fig. 31.

Figure 31(a) shows that the mean, median, upper quartile, and lower quartile of the evaluation scores of the beautified images were improved; therefore, the images beautified by AEP-GAN had higher aesthetic evaluation. Figure 31(b) shows the specific differences in beauty scores before and after beautification. Therefore, the images identified by the AEP-GAN were effective.

5.3 Comparison with SOTA

AEP-GAN performs both whitening and deformation functions. We compared this approach with the state-of-the-art FAE methods [10, 14, 15, 17, 37]. We also added two algorithms (Meitu and Face++) [20, 21] of the most widely used commercial facial beauty apps in Asia for comparison, as shown in Figs. 32 and 33.

Figures 32 and 33 show that AEP-GAN can generate more beautiful images than the original image. Specifically, for different source female images, AEP-GAN enhances different parts to different degrees to satisfy esthetics.

For women, compared with the most advanced traditional algorithm [10], the face thinning operation is stronger, and the identity of the face is more consistent with the original face, as shown in the second line of Fig. 32. Moreover, the beautified image of AEP-GAN retains some original facial details, such as in the third line of Fig. 32 (smiling expression) and the fourth line of Fig. 33 (tooth exposure). Compared with the deep learning methods [14, 17, 37], AEP-GAN maintains identity consistency while improving the face, effectively avoiding over-beautification and maximizing the consistency of information other than the face. Intuitively, [14, 17, 37] make the image more beautiful and young, but it changes the facial attributes expression), identity attributes and background content of the image. In contrast to [15], AEP-GAN performs face thinning, whitening and peeling operations simultaneously, and [15] is more inclined to make up on the face. Compared with commercial tools [20, 21], AEP-GAN not only performs skin improvement and whitening operations but also performs face thinning and contrast enhancement operations.

For men, the beautification operation implemented by AEP-GAN is related to the aesthetic enhancement knowledge of professionals in wedding photography data collection, most of which are skin grinding operations. Compared with the existing methods and tools, AEP-GAN maintains the consistency of identity and background to the greatest extent while performing the beautification operation, avoiding excessive beautification.

In general, AEP-GAN, trained on the wedding photography dataset, has learned the aesthetic enhancement knowledge of professionals and has a good beautification effect on the real face dataset (SCUT-FBP5500) and the virtually generated face dataset (high resolution Asian face). Although AEP-GAN tries to maintain the consistency of information other than the face, the whitening operation still affects the color of the background, which is a small flaw in the generated results. In the next step, we will consider beautifying the face separately and then fusing it with the background to generate a beautified image.

Furthermore, this paper conducts a quantitative evaluation of AEP-GAN and other deep learning methods [14, 15, 17, 37], as shown in Table 3.

Table 3 shows that the image performance index generated by AEP-GAN is better than the existing deep learning facial beautification framework. Additionally, we use the model parameter quantity and FLOPs to represent the space complexity and time complexity. Compared with other deep learning models, AEP-GAN and Beholder-GAN [14] have the similar complexity (PARAM: 21.07M vs 23.09M, FLOPs: 370.65M vs 387.42M), and the model of [17] has the highest complexity (PARAM: 52.51M, FLOPs: 16.64G). This is because compared with Beholder-GAN, facial coding and body attribute retention modules are added. Therefore, considering the quality of images generated by AEP-GAN and the complexity of the model, AEP-GAN has the best performance.

6 Conclusion and future work

In this paper, we mainly study the automatic beautification of face, maintain identity consistency and avoid over-beautification. To this end, we propose AEP-GAN, and build a wedding photography dataset to achieve end-to-end automatic beautification of the face.

Specifically, the source landmarks are mapped to enhanced landmarks through ADP, the aesthetic enhancement knowledge of each individual in the wedding photography dataset is learned independently, the characteristics of ASR are used to beautify the image and keep the identity attribute consistent, and DAI and FBP are used to constrain the generated image, making the beautified image higher quality and more aesthetic.

A large number of experiments on two test datasets show that our method solves the problems of overbeautification and identity consistency and can obtain the most advanced results. In addition, we plan to apply our new framework to other issues, such as aesthetic enhancement detection and restoration.

In the future, we plan to study 3D-FAE, which can be applied to 3D cosmetic surgery. In addition, we plan to study the FAE of all races, which will further bring new technologies to real-world applications.

References

Thakerar JN, Saburo I (1979) Cross-cultural comparisons in interpersonal attraction of females toward males. J Soc Psychol 108(1):121–122

Kim WH, Choi JH, Lee JS (2020) Objectivity and subjectivity in aesthetic quality assessment of digital photographs. IEEE Trans Affect Comput 11(3):493–506

Chen Y, Hu Y, Zhang L, Li P, Zhang C (2018) Engineering deep representations for modeling aesthetic perception. IEEE Trans Cybern 48(11):3092–3104

Tommer L, Daniel CO, Gideon D, Dani L (2008) Data-driven enhancement of facial attractiveness. ACM Trans Graph 27(3):38

Seo M, Chen Y-W, Aoki H (2011) Automatic transformation of ”kogao” (small face) based on fast b-spline approximation. J Inf Hiding Multimed Sig Process 2:192–203

Sun M, Zhang D, Yang J (2011) Face attractiveness improvement using beauty prototypes and decision. In: The First Asian Conference on Pattern Recognition, pp 283–287

Roy H, Dhar S, Dey K, Acharjee S, Ghosh D (2018) An automatic face attractiveness improvement using the golden ratio. In: Advanced Computational and Communication Paradigms, pp 755–763

Grammer K, Thornhill R (1994) Human (homo sapiens) facial attractiveness and sexual selection: The role of symmetry and averageness, vol 108, pp 233–42

Langlois JH, Roggman LA (1990) Attractive faces are only average. Psychol Sci 1(2):115–121

Hu S, Shum HPH, Liang X, Li FWB, Aslam N (2021) Facial reshaping operator for controllable face beautification. Expert Syst Appl 167:114067

Arakawa K, Nomoto K (2005) A system for beautifying face images using interactive evolutionary computing. 2005

Hara K, Maeda A, Inagaki H, Kobayashi M, Abe M (2009) Preferred color reproduction based on personal histogram transformation. IEEE Trans Consum Electron 55(2):855–863

Ohchi S, Sumi S, Arakawa K (2010) A nonlinear filter system for beautifying facial images with contrast enhancement. In: 2010 10th International Symposium on Communications and Information Technologies, pp 13–17

Diamant N, Zadok D, Baskin C, Schwartz E, Bronstein AM (2019) Beholder-gan: Generation and beautification of facial images with conditioning on their beauty level. In: Proceedings of the 2019 IEEE International Conference on Image Processing. IEEE, Taipei, Taiwan, pp 739–743

Liu X, Wang R, Chen C-F, Yin M, Peng H, Ng S, Li X (2019) Face beautification: Beyond makeup transfer. In: Frontiers in Computer Science

Huang Z, Suen CY (2021) Identity-preserved face beauty transformation with conditional generative adversarial networks. In: Proceedings of the 25th International Conference on Pattern Recognition. IEEE, Virtual Event / Milan, Italy, pp 7273–7280

Zhou Y, Xiao Q (2020) Gan-based facial attractiveness enhancement. CoRR. arXiv:2006.02766

He J, Wang C, Zhang Y, Guo J, Guo Y (2020) Fa-gans: Facial attractiveness enhancement with generative adversarial networks on frontal faces. CoRR. arXiv:2005.08168

Zhang M, Wu S, Siyuan D, Qian W, Jieyi C, Qiao L, Yang Y, Tan J, Yuan Z, Peng Q, Yu L, Navarro N, Tang K, Ruiz-Linares A, Wang J-C, Claes P, Jin L, Li J, Wang S (2022) Genetic variants underlying differences in facial morphology in east asian and european populations. Nat Genet 54:1–9

Meitu Meitu AI Open Platform. https://ai.meitu.com/index,

MEGVII Face++ Open Platform. https://www.faceplusplus.com,

Zhu J, Park T, Isola P, Efros AA (2017) Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the 2017 IEEE International Conference on Computer Vision. IEEE Computer Society, Venice, Italy, pp 2242–2251

Liang L, Lin L, Jin L, Xie D, Li M (2018) SCUT-FBP5500: A diverse benchmark dataset for multi-paradigm facial beauty prediction. In: Proceedings of the 24th International Conference on Pattern Recognition. IEEE Computer Society, Beijing, China, pp 1598–1603

Lee C, Schramm MT, Boutin M, Allebach JP (2009) An algorithm for automatic skin smoothing in digital portraits. In: Proceedings of the 16th IEEE International Conference on Image Processing. ICIP’09, pp 3113–3116

Guo D, Sim T (2009) Digital face makeup by example. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp 73–79

Liu L, Xing J, Liu S, Xu H, Zhou X, Yan S (2014) ”wow! you are so beautiful today!”, vol 11

Melacci S, Sarti L, Maggini M, Gori M (2010) A template-based approach to automatic face enhancement. Pattern Anal Appl 13(3):289–300

Galton, Francis (1879) Composite portraits. J Anthropol Inst 8:132–144

Zhang S, He F (2020) DRCDN: learning deep residual convolutional dehazing networks. Vis Comput 36(9):1797–1808

Si T, He F, Zhang Z, Duan Y (2022) Hybrid contrastive learning for unsupervised person re-identification, vol 1–1

Tang W, He F, Liu Y (2022) Ydtr: Infrared and visible image fusion via y-shape dynamic transformer

Goodfellow IJ, Jean PA, Mirza M, Xu B, David WF, Ozair S, Courville AC, Bengio Y (2014) Generative adversarial networks. CoRR. arXiv:1406.2661

Arjovsky M, Chintala S, Bottou L (2017) Wasserstein GAN. CoRR. arXiv:1701.07875

Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville AC (2017) Improved training of wasserstein gans. CoRR. arXiv:1704.00028

Karras T, Aila T, Laine S, Lehtinen J (2018) Progressive growing of gans for improved quality, stability, and variation. In: Proceedings of the 6th International Conference on Learning Representations. OpenReview.net, Vancouver, BC, Canada

Karras T, Laine S, Aila T (2021) A style-based generator architecture for generative adversarial networks. IEEE Trans Pattern Anal Mach Intell 43(12):4217–4228

Karras T, Laine S, Aittala M, Hellsten J, Lehtinen J, Aila T (2020) Analyzing and improving the image quality of StyleGAN. In: Proc. CVPR

Isola P, Zhu J, Zhou T, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. IEEE Computer Society, Honolulu, HI, USA, pp 5967–5976

Choi Y, Choi MJ, Kim M, Ha JW, Kim S, Choo J (2017) Stargan: Unified generative adversarial networks for multi-domain. image-to-image translation. CoRR. arXiv:1711.09020

Li M, Huang H, Ma L, Liu W, Zhang T, Jiang Y (2018) Unsupervised image-to-image translation with stacked cycle-consistent adversarial networks. In: Proceedings of The15th European Conference, 11213. Springer, Munich, Germany, pp 186–201

Li T, Qian R, Dong C, Liu S, Yan Q, Zhu W, Lin L (2018) Beautygan: Instance-level facial makeup transfer with deep generative adversarial network. In: Proceedings of the 26th ACM International Conference on Multimedia. Association for Computing Machinery, New York, NY, USA, pp 645–653

Chen H-J, Hui K-M, Wang S-Y, Tsao L-W, Shuai H-H, Cheng W-H (2019) Beautyglow: On-demand makeup transfer framework with reversible generative network. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 10034–10042

Mirza M, Osindero S (2014) Conditional generative adversarial nets. CoRR. arXiv:1411.1784

Acknowledgements

This work was supported by the National Natural Science Foundation of China [Nos. 61972060, 62027827 and 62221005], National Key Research and Development Program of China (Nos. 2019YFE0110800), Natural Science Foundation of Chongqing [cstc2020jcyj-zdxmX0025, cstc2019cxcyljrc-td0270].

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

We declare that we have no financial and personal relationships with other people or organizations that can inappropriately influence our work, there is no professional or other personal interest of any nature or kind in any product, service and/or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

All authors contributed equally to this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, H., Li, W., Gao, X. et al. AEP-GAN: Aesthetic Enhanced Perception Generative Adversarial Network for Asian facial beauty synthesis. Appl Intell 53, 20441–20468 (2023). https://doi.org/10.1007/s10489-023-04576-7

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-023-04576-7