Abstract

Existing multi-label learning classification algorithms ignore that class labels may be determined by some features in the original feature space. And only a partial label of each instance can be obtained for some real applications. Therefore, we propose a novel algorithm named joint Label-Specific features and Label Correlation for multi-label learning with Missing Label (LSLC-ML) and its optimized version to solve the above-mentioned problems. First, a missing label can be recovered by the learned positive and negative label correlations from the incomplete training data sets, then the label-specific features can be selected, finally the multi-label classification task can be modeled by combining the labelspecific feature selections, missing labels and positive and negative label correlations. The experimental results show that our algorithm LSLC-ML has strong competitiveness compared with some state-of-the-art algorithms in evaluation matrices when tested on benchmark multi-label data sets.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

As an important branch of Artificial Intelligence, machine learning has been widely used in many practical applications, especially in the field of smart applied approaches. Many scholars have proposed many algorithms one after another, such as Zhou et al. [1], Bencherif et al. [2], Souza et al. [3], and Bae et al. [4]. These algorithms mainly use fuzzy neural networks to solve related problems. Traditional supervised learning methods are often only aimed at a single label, but in reality, an object often has multiple classification labels. This type of supervised learning is called multi-label classification. At present, multi-label learning has been widely used in web page classification [5], image annotation [6], biological analysis [7] and other fields.

There are two ways to solve multi-label classification algorithm: problem transformation and algorithm adaptation. The idea of problem transformation is to transform multi-label classification into a series of single-label classifications, such as MLSVM [6] (Multi-label Support Vector Mechine). However, MLSVM does not take the label correlations into account. Different from the problem transformation, the idea of algorithm adaptation is to adapt the existing single-label classification algorithm to solve the multi-label classification, such as ML-KNN [8](Multi-Label K Nearest Neighbor), it introduces MAP(Maximum A Posteriori Probability Estimate) based on the traditional KNN algorithm, but the ML-KNN ignores the label correlations. Therefore, many algorithms related to label correlations have been proposed one after another, such as [9,10,11,12,13,14] etc. In fact, there is also a mutual exclusion phenomenon between labels, which are called negative correlations. For example, both Huang et al. [15] and Wu et al. [16] are linking the positive and negative label correlations. There are also some scholars who put forward some corresponding methods to solve the problem of class imbalance in multi-label learning, such as [17,18,19].

In the classification task, sometimes the curse of dimensionality often occurs because the data features are too large and feature selection is a method to solve the dimension problem. Up to now, a lot of algorithms have been proposed, which based on the assumption that selected features have something to do with all class labels, such as [20,21,22,23,24] etc. However, each class label might be determined by some specific feature subsets. For example, in some text classification scenarios, if there are a series of vocabulary such as” basketball” and” ping-pong ball” in the layout and then there will be a greater probability that the layout will be judged as” sport”. These words are called label-specific features. In order to solve the problems mentioned above, many new algorithms are proposed to select label-specific features [25,26,27].

Unfortunately, many state-of-the-art methods have a poor performance when the labels are missing in some applications. According to previous research, it is inappropriate that many multi-label learning classification algorithms based on the assumption that all class labels are observed. But in many practical application, it is difficult to obtain complete labels.

To solve missing label problem in classification tasks, there are many algorithm have been proposed, such as [28,29,30,31,32,33], and it is feasible to deal with missing label. However, these algorithms use identical data representation composed of all the features in the discrimination of all the class labels. Therefore, how to recover missing label by the learned positive and negative label correlations from the incomplete training data sets of each different class label and select label-specific features with it remains a hot spots of multi-label learning with missing labels. The motivation of this article is summarized as follows.

-

The class labels may be determined by some features in the original feature space.

-

Many algorithms only deal with the missing labels by the positive label correlations and ignore the negative label correlations.

To solve the aforesaid two problems, we proposed a novel algorithm which jointly label-Specific features selections and recover missing labels for multi-label learning by using label correlation in a unified model. Compared with other algorithms, the innovation of the algorithm proposed in this paper is that when completing the missing label matrix, we not only use the positive correlation of labels, but also use the negative correlation of labels. The main contributions are summarized as follows.

-

This is the first model that combines label-specific features selections, missing label and label correlations of positive and negative.

-

The positive and negative label correlations can be learned directly from the incomplete training datasets, and used to complete the matrix and multi-label classification model can be modeled by selecting label-specific features iteratively.

-

Our proposed algorithm performs competitive performance against existing state-of-the-art algorithm from the experiment in this paper.

The rest of this paper is organized as follows. Section 2 reviews the related work of labelspecific features, missing labels and label correlations. In Section 3, we propose two versions of the algorithm. Section 4 gives the optimization of the proposed algorithm. The experimental setup and conclusion are shown in Section 5 and Section 6 respectively.

2 Related work

2.1 Multi-label learning with label-specific feature

In multi-label learning, it is useful to the classification results if the label-specific feature can be found. Zhang et al. [25] proposed LIFT, and it calculates the distance between the original data and the cluster center by clustering of positive and negative instances of each class label respectively, and takes the distance as part of the original data features. Similarity, LSDM [34] utilizes clustering algorithm by setting different ratio parameter for positive and negative instances class label respectively, and finds label-specific feature, which consists of distance information and spatial topology information. However, they does not consider label correlations. Comparatively speaking, LSF-CI [35] selects label-specific feature for each class label by modeling label correlations in label space and feature space simultaneously.

Huang et al. [26] and Weng et al. [36] proposed LLSF-DL and LF-LPLC respectively, the former joints label-specific features and class-dependent labels by stacking sparesly, while the latter joints label-specific features and local pairwise label correlations. Therefore, although these algorithms can deal with label-specific feature, they do not perform particularly well when the label is missing. There are also some scholars who consider this issue from other angles, such as [37, 38]. NSLSF [37] translates the logic labels to the numerical ones and utilize the numerical labels for extracting label specific features. FSSL [38] selects labelspecific features for each newly-arrived label with designing inter-class discrimination and intra-class neighbor recognition.

2.2 Multi-label learning with missing labels

It is inappropriate to assume that all labels are observed. Therefore, there are many algorithms for missing labels, such as [28,29,30]. Wu et al. [28] proposed a new model for multi-label with missing label and it has been proved that this model can solve the problem of label missing. Maxide [29] speedups matrix completion by exploring the side information and it is assumed that the matrix to be supplemented and the side information matrix are shared the latent information. SVMNN [30] is an algorithm based on the SVM method, it constructs two graph to explore sample smoothness and class smoothness respectively, and then the loss function is modeled which combines these two factors. The algorithm mentioned just now is aimed at missing labels for each instance is unknown, so [32, 33] are proposed based on which partial missing labels are known. SSWL [33] is a semi-supervised learning to supplement missing label by both instance similarity and label similarity are considered. iMVML [32] explores a shared subspace that handles multi-view multi-label learning, and the subspace can be learned from incomplete views with weak labels, label correlations and a predictor in this subspace simultaneously. Besides, there are some frameworks that can handle label correlations and missing label or others simultaneously, such as [31, 39]. LSML [39] can handle multi-label task with missing label, label correlations and label-specific feature selection and it is similar to our method in this paper that select label-specific feature by adding l1 regularization. MLMLFS [31] jointly deals with feature selections with missing label and models classifier in a unified framework. It is modeled by l2,p regularization to select the most discriminative features and a manifold regularization to explore label correlations. That is to say, how to find the label correlations is the most critical issue.

2.3 Multi-label learning with label correlations

Whatever it is missing labels or feature selections, the label correlation plays an important role in it and it has been proved to improve the classification ability of the multi-label learning algorithm. Examples are ML-LOC [10], Glocal [13] etc. ML-LOC can obtain local label correlations by clustering and it is taken into account in the feature space, while Glocal can obtain global and local label correlation based on ML-LOC. However, these label correlations are only positive label correlations. There is also a mutual exclusion between labels, called negative correlations, such as RAkEL [12], LPLC [15] and CPNL [16]. LPLC can explore positive and negative label correlations matrix during the training process by calculating the max posterior probability of each class label given by another one on nearest neighbors sets. Similarity, RAkEL is an improved version of [14], it adds positive and negative correlations into k-labelsets by calculating the cosine similarity of the two matrices. Nevertheless, CPNL does not obtain positive and negative label correlation through calculation, but is modeled in a unified framework. In conclusion, it is proposed to joint label-specific features selections and label Correlation for Multi-label Learning with missing label in this paper.

3 The proposed model

3.1 Notations

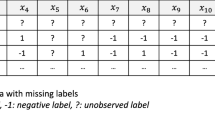

For an arbitrary matrix W, Wi and Wj denote the i-th row and j-th column of W, WT denote the deposition of W. In multi-label learning, given dataset \( D={\left\{\left({\mathbf{x}}_i,{\mathbf{y}}_i\right)\right\}}_{i=1}^n \), where we denote the feature vector of the i-th instance by xi ∈ Rm, and denote the i-th ground-truth label vector by yi ∈ {0, 1}l, and m represents the number of features of the instance and l represents the number of class labels associated with an instance. For simplicity, the training data is expressed as matrix X = [x1, x2, …, xn]T ∈ Rn × m; Y = [y1, y2, …, yn]T ∈ {0, 1}n × l, where yil = 1 represents the i-th instance is associated with the l-th label, and yil = 0 represents the i-th instance is nothing to do with the l-th label or the l-th label is missing.

3.2 Label-specific feature selection

Given datasets, we propose the assumption that each class label is only determined by a certain subset of specific features from the original feature space. In order to select this feature, that is, label–specific feature, l1 regularization is used in the modeling of a linear model. Naturally, label–specific feature is selected by no-zero values of each wi ∈ Rm, the optimization problem is defined as

where W ∈ Rm × l is the regression coefficient and W = [w1, w2, …, wl], and l1 is the tradeoff parameter. \( {\left\Vert \ast \right\Vert}_F^2 \) is the summing the squares of each element in matrix *.

3.3 Learning multi-label with missing label

In previous studies, we assume that the labels of the given training data sets are complete and all are observable. However, this assumption is often not true. Considering that labeling is a huge project, missing labels have become a hot spot in multi-label learning. In order to solve such problems, it is proposed to a method that recovers incomplete label matrix to complete label matrix by making full use of the label correlations. First of all, we assume that any unobserved, that is, missing labels can be explored by the relationship between observed labels. Let P ∈ Rl × l be label correlations, where pij represents the degree of correlation between the i-th label and the j-th label. Following the idea of Huang et al. [39], we assume that one class label may be correlated with only a subset of class labels, thus we add the l1 –norm regularizer over P. The optimization problem can be rewritten as

3.4 Learning positive label correlations

In the multi-label classification task, the accuracy of classification can be greatly improved if the relationship between labels can be considered. Therefore, we incorporate the label pairwise correlation by calculating the Euclidean distance between any pair of coefficient vectors which are constrained by the learned label correlation matrix P. Here, for two label yi and yj have positive correlations, that is, pij or pji is large, and their label-specific features have more probability to be same. That is to say, the corresponding regression coefficient wi and wj will be more similar, and vice versa. According to what has been mentioned above, we can add the regularizer by the Euclidean distance of wi and wj as follows,

Where Lp = Dp − P ∈ Rl × l is the laplacian matrix and Dp is the diagonal matrix with P1, where 1 is a matrix with all values one. Thus, the optimization problem can be rewritten as,

3.5 Learning negative label correlations

In fact, the label relation mentioned above be actually a positive label correlation. There is also a mutual exclusion between labels, called negative label correlations [16]. For example, for” aquarium”, the relationship between” seal” and” aquarium” will be relatively close, but the relationship with” tiger” will be relatively weak. Thus, similar to the idea of positive correlation of labels, we can learned negative label correlation matrix N, for two label yi and yj have negative correlations, that is, nij or nji is large, and their label-specific features have more probability to be same. That is to say, the corresponding regression coefficient wi and wj will be more similar, and vice versa. Note, the Euclidean distance of wi and wj should be large. Therefore, we can add the regularizer as follows,

where Ln = Dn + N ∈ Rl × l and Dn is the diagonal matrix with N1, where 1 is a matrix with all values one. Note that we propose a hypothesis that the relationship between labels is a combination of positive label correlation and negative label correlation. For simplicity, we adopt linear combination here, that is, P + N. Thus, the final optimization problem can be rewritten as,

4 Model optimization and prediction

In this section, considering that object function is non-smooth, the accelerated proximal gradient method is applied in this issue according to paper [39]. For simplicity, let Φ be model parameters, that is, Φ = (W, P, N). Thus, object function(6) can be written as,

Where

Note, although function f(Φ) and g(Φ) are convex, the latter is not smooth. First of all, we use the following function defined in paper [40].

Here, for any L ≥ Lf, QL(Φ, Φi) ≥ F(Φ) will always be found, where Lf is the Lipschitz constant. Next, minimizing a sequence of separable quadratic approximations to F(Φ) by the proximal algorithm. Similar to paper [40], we can solve Φ by minimizing QL(Φ), the calculation formula is as follows,

where

It is effective that speed up the convergence by set \( {\boldsymbol{\Phi}}^i=\boldsymbol{\Phi} +\frac{\alpha_{i-1}}{\alpha_i}\left({\boldsymbol{\Phi}}_i-{\boldsymbol{\Phi}}_{i-1}\right) \) for sequence αi, where αi satisfies \( {\alpha}_{i+1}^2-{\alpha}_{i+1}\le {\alpha}_i^2 \), and Φiis the i-th iteration of Φ .

4.1 Update W

Firstly, Updating W with fixed P and N, the derivation of function (8) can be calculated as follows,

Then, according to Eq. (11), W can be updated by

Where, ε is step size. Here, because W in g(Φ) is regularization term with l1-norm, the element–wise soft–threshold operator is adopted in solving this value. The calculation formula is as follows,

where (⋅)+ = max (⋅, 0).

4.2 Update P

Similarly, Updating P with fixed W and N, the derivation of function (8) can be calculated as follows,

Thus, P can be updated by

Where, ε is step size. Here, because P in g(Φ) is nonnegative regularization term with l1-norm, the element–wise soft–threshold operator is adopted in solving this value. The calculation formula is as follows,

where (⋅)+ = max (⋅, 0).

4.3 Update N

Similarly, Updating N with fixed W and P, the derivation of function (8) can be calculated as follows,

Thus, N can be updated by

where, ε is step size. Here, because N in g(Φ) is nonnegative regularization term with l1-norm, the element–wise soft–threshold operator is adopted in solving this value. The calculation formula is as follows,

where (⋅)+ = max (⋅, 0). Note, the derivative of the function(8) with respect to P is the same as the derivative of N, because we assume that the label relationship is a simplest linear combination of P and N, that is, P + N. All optimization steps are summarized in algorithm1, called LSLC for simplicity.

4.4 Lipschitz constant

Given model parameters Φ1 = (W1, P1, N1) and Φ2 = (W2, P2, N2), then, we have

Thus, the Lipschitz constant of optimization problem (6) can be calculated by

where \( {\mathbf{L}}_{{\mathbf{p}}^{\prime }}={\mathbf{L}}_{\mathbf{p}}+{\mathbf{L}}_{\mathbf{p}}^{\mathbf{T}} \) and \( {\mathbf{L}}_{{\mathbf{n}}^{\prime }}={\mathbf{L}}_{\mathbf{n}}+{\mathbf{L}}_{\mathbf{n}}^{\mathbf{T}} \).

4.5 Time complex

In this part, let us briefly analyze the time complexity of the optimization algorithm. For matrix X ∈ Rn × m, Y ∈ {0, 1}n × l, W ∈ Rm × l, P ∈ Rl × l, N ∈ Rl × l, L ∈ Ll × l, where n denotes the number of instances, l denotes the number of labels for corresponding instances, and m denotes the dimensions of instances. In LSLC-1, the positive and negative label correlations Lp and Ln need O(l2) in step 3, and the other key steps of the algorithm lie in the calculation of the Lipschitz constant and the derivation of the matrix. In step 4, the calculation of the Lipschitz constant needs O(m3 + l3 + mnl). The derivation of the matrix focuses on 6, 9 and 12 steps, the derivation of the matrix W needs O(nm2 + ml2 + lm2 + mnl), note that the derivation of the matrix P and N are the similar, so the time complexity of them needs O(nl2 + l3 + ml2 + mnl). As mentioned above, the time complexity of the calculating the Lipschitz constant can be reduced by finding a appropriate L ≥ Lf [40] and it needs O(ml2 + m2 n + m2 l + nl2 + mnl + nl + l2 + l3). These are summarized in algorithm 2, called LSLCnew for simplicity.

4.6 Model prediction

In this section, we mainly use two optimization algorithms mentioned above to obtain matrix W. For unlabeled test data sets Dts, we can predict the label of Dts by calculating the score matrix Sc and then using Sign(Sc - τ) with a given threshold τ, where Sc = W Dts and τ is set to be 0.5 in this experiment. The process of prediction is summarized in algorithm 3.

5 Experiment

5.1 Data sets

The algorithms proposed in this paper are all simulated on benchmark multi-label data sets. The specific statistical information of multi-label data sets are shown in Table 1. During the simulation, for each data set, we randomly selected 80% of the data as training data and the rest as test data. The experiment is repeated ten times and a five-fold cross test is used.

5.2 Evaluation measures

In order to evaluate the performance of the experimental results, we use seven evaluation metrics. For simplicity, let t denote the number of test data, Yi and \( {\overset{\sim }{\mathbf{Y}}}_i \) denote the sets of ground-truth labels and predicted labels of the i-th instance, respectively; \( {Z}_j^{\mathbf{P}} \) and \( {Z}_j^{\mathbf{N}} \) denote the sets of positive and negative instance of j-th class label. \( {C}_{{\mathrm{X}}_i}\left(\mathbf{Y}\right) \) is confidence score of xi associated with label y. Thus, evaluation metrics can be computed as follows,

Hamming Loss(HL) evaluates error matching between the ground-truth labels Yi and the predicted labels \( {\overset{\sim }{\mathbf{Y}}}_i \).

where △ represents the difference between the two data sets.

Subset Accuracy(SA) evaluates the accuracy between the ground-truth labels Yi and the predicted labels \( {\overset{\sim }{\mathbf{Y}}}_i \) in a more rigorous way.

where ‖·‖ is an indication function.

Micro F1(MF1) is an extended version of the F1 Measure, and it takes each entry of the label vector as a single instance regardless of the distance between labels.

Average Precision(AP) evaluates the average score of related labels that are higher than the specific labels

Ranking Loss(RL) evaluates the fraction that the ranking of unrelated labels of the instance is lower than that of related labels.

One Error(OE) evaluates the fraction that the highest ranking label of the instance is not correctly labeled.

where ‖·‖ is an indication function.

Average AUC(AUC) evaluates the average fraction that the ranking of positive instance of the all class labels is higher than that of negative instance.

For these evaluation metrics, for HL, RL and OE, the smaller the better; whereas for SA, MF1, AP and AUC, the bigger the better.

5.3 Compared algorithms

The two versions of algorithm are proposed in this paper, which are compared with the following state-of-the-art for multi-label learning algorithm. For simplicity and efficiency, all configuration for parameters in the compared algorithms are suggested in the original paper.

MLKNN [8] It introduces maximum posteriori probability (MAP) to deal with multi-label classification based on traditional KNN algorithm. The parameter k is found in {3, 5, …, 21}.

LPLC [15] It explores local positive and negative pairwise label correlations based on traditional KNN algorithm. The parameter k is found in {3, 5, …, 21} and the tradeoff parameter α is found in {0.1, 0.2, …, 1}.

LIFT [25] It learns label-specific features through the idea of clustering algorithm. Here, base learner is linear kernel SVM, r = 0.1.

LLSF [26] It learns label-specific features. The regulation parameters λ is found in {2−10, …, 210}.

Glocal [13] It explores both global and local label correlations when recover missing labels. The paramter λ = 1,from λ1 to λ5 are found in {2−5, …, 21}, k is found in {0.1l, …, 0.6l}, and g is found in {2−10, …, 210}.

LSML [39] It directly learns label-specific features with missing labels. All paramters are found in {2−5, …, 21}.

LSLC and LSLCnew It is two versions of algorithm are proposed in this paper. It directly learns the label-specific features that missing labels while considering the positive and negative correlation of labels. All paramters are found in {2−10, …, 21}.

5.4 Comparison experiments

In the comparison experiments, we control the missing rate of class labels to be in the range of 10% to 60% with a step size of 10% and all data is normalized for fairness. Thus, for every comparing algoritm, the average result(mean std) on eleven data sets in terms of evaluation metrics are consulted on Tables 2, 3, 4, 5, 6, 7, and 8. Considering the limitation of table size, the experimental results here only give the average value, and the complete experimental results(mean ± std) are recorded in the uploaded zip file containing 11 Execl files. In order to better evaluate the performance of the algorithm, Friedman test [41] and Holm test [42] are used in here. Table 10 summarizes the Friedman statistics FF and the corresponding critical value in terms of every metric. As shown in Table 10, at significance level α = 0.05, the full hypothesis that the compared algorithm has performance of equivalent is rejected in terms of all the evaluation metric. As a result, the Nemenyi test [41] is employed to test whether our method LSLC can perform competitiveness against comparing algorithm. The performance of two algorithms will be significantly different if their averge ranks differ by at least one critical difference \( CD={q}_{\alpha}\sqrt{\frac{k\left(k+1\right)}{6N}} \) . Here, qα = 3.031 at significance level α = 0.05, therefore CD = 1.2924(k = 8, N = 66). The detailed CD diagrams are shown in Fig. 1. Holm test is based on the ranking of multiple classifiers. Firstly, the statistic \( Z=\frac{Rank_i-{Rank}_j}{SE} \) obeying the standard normal distribution is calculated, where \( SE=\frac{k\left(k+1\right)}{6N} \), Ranki is the average ranking of the i-th classifier on all data sets. Then the corresponding probability p can be obtained according to Z and sorted in ascending order. Finally, the probability p and α/(k − i) are compared to judge whether the original assumption (the two classifiers have the same performance) is rejected. Table 9 summarizes the Holm test result.

Based on the results of comparison experiments, the following observations can be gained:

-

LSLC can outperform other state-of-the-art for multi-label learning algorithms in some case of different label missing rates. According to the Tables 2, 3, 4, 5, 6, 7, and 8, we mainly analyze the experimental results from two directions. From the horizontal direction, we can see clearly that among all the evaluation matrices, the performance of LSLC and LSLCnew perform slightly better on most data sets than other comparison algorithms, and an analysis of Fig. 1 reveals that LPLC and LPLCnew are relatively left-sided in the evaluation matrices compared with other algorithms. From the vertical direction, the experimental results of MLKNN, LPLC, LIFT, LLSF and Glocal have obvious fluctuation with the increase of label missing rate. But the experimental results of algorithm LSML and LSLC show change relatively smooth and only rises slowly in a small range. The main cause of phenomenon is attributed to the algorithm itself. LPLC only considers positive label correlations. Both LIFT and LLSF consider only label-specific features. For MLKNN, LPLC and LLSF, they cannot handle missing labels. Although Glocal takes into account the fact that label correlations and missing labels, it cannot select label-specific features.

-

It can be seen from Table 9 that the performance of LSLC is different from that of MLKNN, LPLC, LIFT, LLSF and Glocal classifiers. Although the performance of LSLC and LSML is the same in holm test, it can be seen from the results in Fig. 1 that the performance of LSLC is better than that of LSML. LSML can handle missing labels, label correlations and label-specific features, but it only uses positive label correlations. In LSLC, first of all, the positive and negative label correlations can be learned from the incomplete training data sets, and then missing label will be recovered by learned label correlations, finally multi-label classification task will be modeled by selecting label-specific features iteratively.

-

LSLCnew still shows better performance compared with LSLC and it is faster than LSLC according to experimental results of all data sets.

In conclusion, our proposed algorithm shows a competitive performance compared to other exist state-of-the-art algorithm for multi-label classification task with missing label (Table 10).

5.5 Convergence

In our proposed algorithm, the accelerated proximal gradient(APG) is used to quickly solve the objective loss function. The loss function value w.r.t the number of iterations is shown in Fig. 2, and we conduct it over ‘medical’ data set. An analysis of Fig. 2 reveals that the ordinate value drops rapidly in the early stage over these two data sets and tends to be stable. Similarly, this phenomenon also occurs in other data sets.

5.6 Analysis of model parameters

In this section, we mainly analyze model parameters, that is, label-specific features and label correlations. Firstly, we conduct LSLC over ‘medical’ dataset with 60% label missing rate and visualize matrix W, which is shown in Fig. 3. Here, the horizontal axis and the vertical axis represent feature index and label index respectively. As can be seen from Fig. 3, we can select label-specific features represented by white color, and each class label is only determined by a certain subset of specific features from original feature space. At the same time, label-specific feature selection is based on the assumption that the label matrix is complete. Thus, the label correlations play an important role in dealing with missing label task and it can be obtained by the learned model parameter P and N according to 3.4 and 3.5 respectively. Similarity, we conduct LSLC over ‘medical’ dataset and visualize P and N two matrices are shown in Figs. 4 and 5. Here, the horizontal axis and the vertical axis represent label index. Note, the points with white color indicate the nozero entries of P and N. After observing the Fig. 4, we can arrive at the following conclusion that each label is only positive label correlation with some other labels. Likewise, this phenomenon also occurs in the Fig. 5.

To intuitively see the impact of positive and negative label correlations on classification results. We degenerate the algorithm of LSLC into two algorithms of LSLC-P and LSLC-N respectively. The former only positive label correlations are considered, while the latter only negative label correlations are considered. Simplicity, we conduct these algorithms over ‘medical’, ‘stack-chemistry’ and ‘rcv1subsets1’ datasets with 60% missing rate of class label. As shown in Table 11, it is effective to strengthen the classification ability if the positive and negative label correlations are considered.

5.7 Sensitivity to parameters

In this section, we mainly analyze the three parameters λ1, λ5, and λ6, where λ1 controls the sparsity of label-specific, λ5 controls the positive label relationship and λ6 controls the negative label relationship. We conduct the experiment on” medical” data set with 60% missing label, and dynamically changing two of parameters by fixing other four parameters with the optimal value respectively. We randomly selected 80% of the data as training data and the rest as test data. The experiment is repeated ten times and a five-fold cross test is used. The experimental results for evaluation metrics of MF1 and AUC are summarized in Fig. 6. An analysis of these Figures reveals that LSLC is sensitive obviously to these tradeoff parameters λ1, λ5, and λ6 in the set rang. When any one of the three parameters λ1, λ5, and λ6 exceeds a certain range, the performance of algorithm LSLC will be weakened.

6 Conclusion

In this paper, we have proposed a novel algorithm named LSLC, which can deal with labelspecific for multi-label learning with missing labels by taking both positive label correlations and negative label correlations into consideration. For missing labels, LSLC can complete the missing label matrix through the positive and negative label correlations and then completion label matrix drives label-specific features selection. Besides, the APG is applied in our proposed algorithm to quickly solve the objective function iteratively. Futhermore, the experimental results show that both positive and negative correlation of labels plays a certain role in the final classification effect of the classifier. After a series of experiments in eleven benchmark data sets, our algorithm shows better performance and can be better select special label features from multi-label classification tasks with missing labels.

References

Zhou H, Zhao H, Zhang Y (2020) Nonlinear system modeling using self-organizing fuzzy neural networks for industrial applications[J]. Appl Intell:1–16

Bencherif A, Chouireb F (2019) A recurrent TSK interval type-2 fuzzy neural networks control with online structure and parameter learning for mobile robot trajectory tracking[J]. Appl Intell 49(11):3881–3893

de Campos SPV, Guimaraes AJ, Araujo VS et al (2019) Incremental regularized Data Density-Based Clustering neural networks to aid in the construction of effort forecasting systems in software development[J]. Appl Intell 49(9):3221–3234

Bae JS, Oh SK, Pedrycz W et al (2019) Design of fuzzy radial basis function neural network classifier based on information data preprocessing for recycling black plastic wastes: comparative studies of ATR FT-IR and Raman spectroscopy[J]. Appl Intell 49(3):929–949

Ciarelli PM, Oliveira E, Salles EOT (2014) Multi-label incremental learning applied to web page categorization. Neural Comput Appl 24(6):1403–1419

Boutell MR, Luo J, Shen X et al (2014) Learning multi-label scene classification. Pattern Recognit 37(9):1757–1771

Alves RT, Delgado MR, Freitas AA (2008) Multi-label hierarchical classification of protein functions with artificial immune systems. In: Brazilian Symposium on Bioinformatics. Springer, Berlin, Heidelberg, pp 1–12

Zhang ML, Zhou ZH (2007) ML-KNN:A lazy learning approach to multi-label learning. Pattern Recognit 40(7):2038–2048

Read J, Pfahringer B, Holmes G et al (2011) Classifier chains for multi-label classification. Mach Learn 85(3):333–359

Huang S-J, Zhou Z-H (2012) Multi-label learning by exploiting label correlations locally. In: Proceedings of the 26th AAAI Conference on Artificial Intelligence, pp 949–955

Charte F, Rivera AJ, Del Jesus MJ et al (2014) LI-MLC: a label inference methodology for addressing high dimensionality in the label space for multilabel classification. IEEE Trans Neural Netw Learn Syst 25(10):1842–1854

Nan G, Li Q, Dou R et al (2018) Local positive and negative correlation-based k-labelsets for multi-label classification. Neurocomputing 318:90–101

Zhu Y, Kwok JT, Zhou ZH (2017) Multi-label learning with global and local label correlation. IEEE Trans Knowl Data Eng 30(6):1081–1094

Tsoumakas G, Katakis I, Vlahavas I (2010) Random k-labelsets for multilabel classification. IEEE Trans Knowl Data Eng 23(7):1079–1089

Huang J, Li G, Wang S et al (2017) Multi-label classification by exploiting local positive and negative pairwise label correlation. Neurocomputing 257:164–174

Wu G, Tian Y, Liu D (2018) Cost-sensitive multi-label learning with positive and negative label pairwise correlations. Neural Netw 108:411–423

Zhang C, Bi J, Xu S, Ramentol E, Fan G, Qiao B, Fujita H (2019) Multi-Imbalance: an open-source software for multi-class imbalance learning[J]. Knowl-Based Syst 174

Sun J, Li H, Fujita H, Binbin F, Ai W (2020) Class-imbalanced dynamic financial distress prediction based on Adaboost-SVM ensemble combined with SMOTE and time weighting[J]. Inform Fusion 54

Sun J, Lang J, Fujita H, Li H (2018) Imbalanced enterprise credit evaluation with DTE-SBD: decision tree ensemble based on SMOTE and bagging with differentiated sampling rates[J]. Inform Sci 425

Doquire G, Verleysen M (2013) Mutual information-based feature selection for multilabel classification. Neurocomputing 122:148–155

Lee J, Kim DW (2015) Mutual information-based multi-label feature selection using interaction information. Expert Syst Appl 42(4):2013–2025

Wang C, Lin Y, Liu J (2019) Feature selection for multi-label learning with missing labels. Appl Intell:1–16

Jorge G-L, Ventura S, Cano A (2019) Distributed selection of continuous features in multilabel classification using mutual information[J]. IEEE Trans Neural Netw Learn Syst

Jorge G-L, Ventura S, Cano A (2020) Distributed multi-label feature selection using individual mutual information measures[J]. Knowl-Based Syst 188:105052

Zhang ML, Wu L (2014) Lift: Multi-label learning with label-specific features. IEEE Trans Pattern Anal Mach Intell 37(1):107–120

Huang J, Li G, Huang Q et al (2016) Learning label-specific features and class-dependent labels for multi-label classification. IEEE Trans Knowl Data Eng 28(12):3309–3323

Xu S, Yang X, Yu H et al (2016) Multi-label learning with label-specific feature reduction. Knowl-Based Syst 104:52–61

Wu B, Lyu S, Hu BG et al (2015) Multi-label learning with missing labels for image annotation and facial action unit recognition. Pattern Recognit 48(7):2279–2289

Xu M, Jin R, Zhou ZH (2013) Speedup matrix completion with side information: Application to multi-label learning. In: Advances in neural information processing systems, pp 2301–2309

Liu Y, Wen K, Gao Q et al (2018) SVM based multi-label learning with missing labels for image annotation. Pattern Recogn 78:307–317

Zhu P, Xu Q, Hu Q, Zhang C, Zhao H (2018) Multi-label feature selection with missing labels. Pattern Recogn 74:488–502

Tan Q, Yu G, Domeniconi C, Wang J, Zhang Z (2018) Incomplete multi-view weak-label learning. In IJCAI

Dong HC, Li YF, Zhou ZH (2018) Learning from semi-supervised weak-label data. In: AAAI

Guo Y, Chung F, Li G, et al (2019) Leveraging label-specific discriminant mapping features for multi-label learning[J]. ACM Transactions on Knowledge Discovery from Data (TKDD) 13(2):1–23

Han H, Huang M, Zhang Y et al (2019) Multi-label learning with label specific features using correlation information. IEEE Access 7:11474–11484

Weng W, Lin Y, Wu S et al (2018) Multi-label learning based on label-specific features and local pairwise label correlation. Neurocomputing 273:385–394

Weng W, Chen Y-N, Chen C-L, Wu S-X, Liu J-H (2020) Non-sparse label specific features selection for multi-label classification[J]. Neurocomputing 377

Liu J, Li Y, Weng W et al (2020) Feature selection for multi-label learning with streaming label[J]. Neurocomputing

Huang J, Qin F, Zheng X et al (2019) Improving multi-label classification with missing labels by learning label-specific features. Inf Sci 492:124–146

Beck A, Teboulle M (2009) A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM Journal on Imaging Sciences 2(1):183–202

Demsar J (2006) Statistical comparisons of classifiers over multiple data sets[J]. J Mach Learn Res 7(Jan):1–30

Hühn JC, Hüllermeier E (2008) FR3: A fuzzy rule learner for inducing reliable classifiers[J]. IEEE Transactions on Fuzzy Systems 17(1):138–149

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Cheng, Z., Zeng, Z. Joint label-specific features and label correlation for multi-label learning with missing label. Appl Intell 50, 4029–4049 (2020). https://doi.org/10.1007/s10489-020-01715-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-01715-2