Abstract

Valuation adjustments, collectively named XVA, play an important role in modern derivatives pricing to take into account additional price components such as counterparty and funding risk premia. They are an exotic price component carrying a significant model risk and computational effort even for vanilla trades. We adopt an industry-standard realistic and complete XVA modelling framework, typically used by XVA trading desks, based on multi-curve time-dependent volatility G2++ stochastic dynamics calibrated on real market data, and a multi-step Monte Carlo simulation including both variation and initial margins. We apply this framework to the most common linear and non-linear interest rate derivatives, also comparing the MC results with XVA analytical formulas. Within this framework, we identify the most relevant model risk sources affecting the precision of XVA figures and we measure the corresponding computational effort. In particular, we show how to build a parsimonious and efficient MC time simulation grid able to capture the spikes arising in collateralized exposure during the margin period of risk. As a consequence, we also show how to tune accuracy versus performance, leading to sufficiently robust XVA figures in a reasonable time, a very important feature for practical applications. Furthermore, we provide a quantification of the XVA model risk stemming from the existence of a range of different parameterizations according to the EU prudent valuation regulation. Finally, this work also serves as a handbook containing step-by-step instructions for the implementation of a complete, realistic and robust modelling framework of collateralized exposure and XVA.


1 Introduction

The credit crunch crisis that started in August 2007 forced market practitioners and academics to review the methodologies used to price over-the-counter (OTC) derivatives consistently with the available market quotations. In particular, basis spreads between interest rate instruments characterised by different underlying rate tenors (e.g. IBOR 3 M, IBOR 6 M, overnight, etc.) exploded from a few to hundreds of basis points. This change of regime led to the adoption of a “multi-curve” valuation framework, based on distinct yield curves to compute forward rates with different tenors, and discounting curves consistent with the collateral remuneration rate. The multi-curve framework is extensively discussed in the literature; a non-exhaustive list of references includes (Ametrano & Bianchetti, 2009; Bianchetti, 2010; Henrard, 2007, 2009; Kenyon, 2010; Mercurio, 2009; Piterbarg, 2012). This framework was recently simplified by the interest rate benchmark reform and the progressive replacement of many IBOR rates with the corresponding overnight rates. We refer to e.g. Scaringi and Bianchetti (2020) and references therein for a discussion.

The credit crunch crisis also forced market participants to extend the multi-curve valuation framework to include additional risk factors, such as counterparty and funding risk, leading to a set of valuation adjustments collectively named XVA, for which we refer to the wide existing literature (see e.g. Brigo et al., 2013; Kjaer, 2018; Gregory, 2020). In particular, Credit and Debt Valuation Adjustments (CVA and DVA, respectively) take into account the bilateral counterparty default risk premium affecting derivative transactions, and are also required by international accounting standards (in EU since the introduction of IFRS13 in 2013, see IASB, 2011).

After the crisis, regulators pushed to mitigate counterparty default risk for OTC derivatives. With regard to non-cleared OTC derivatives, in 2015 the Basel Committee on Banking Supervision (BCBS) and the International Organization of Securities Commissions (IOSCO) finalized a framework (BCBS-IOSCO, 2015), introduced progressively from 2016, which requires derivatives counterparties to bilaterally post Variation Margin (VM) and Initial Margin (IM) on a daily basis at netting set level. VM aims at covering the current exposure stemming from changes in the value of the portfolio by reflecting its current size, while IM aims at covering the potential future exposure that could arise, in the event of default of the counterparty, from changes in the value of the portfolio in the period between the last VM exchange and the close-out of the position. In particular, in 2016 ISDA published the Standard Initial Margin Model (SIMM) (see ISDA, 2013, 2018), with the aim of providing market participants with a uniform risk-sensitive IM model, and of preventing both potential disputes and the overestimation of IM requirements due to the use of the BCBS-IOSCO non-risk-sensitive standardized model. The ISDA-SIMM is a parametric VaR model based on Delta, Vega and Curvature (i.e. “pseudo” Gamma) sensitivities, defined across risk factors by asset class, tenor and expiry, and computed according to specific definitions.

In general, XVA pricing is subject to a significant model risk, since it depends on the many assumptions made for modelling and calculating the relevant quantities. Since computational constraints force a reduction in the number of floating-point calculations, model risk arises principally from the need to find an acceptable compromise between accuracy of the XVA figures and computational performance. In the EU, pricing model risk is envisaged in EU (2013) (art. 105.10) and EC (2016) (art. 11), and refers precisely to the valuation uncertainty of fair-valued positions linked to the “potential existence of a range of different models or model calibrations used by market participants”. This may occur when a unique model recognized as a clear market standard for computing the price of a certain financial instrument does not exist, or when a model allows for different parameterizations or numerical solution algorithms leading to different model prices. We stress that this measure of model risk does not refer to the universe of possible pricing models and model parameterizations, which is virtually unlimited; on the contrary, it intentionally restricts the range to those alternatives effectively used by market participants. While the pricing models and their parameterizations used by market participants are not, in general, easily observable, the situation for XVA pricing is slightly different, since market participants may occasionally infer some information from the XVA prices observed in the case of competitive corporate auctions, novations, negotiations of collateral agreements, and also systematically from consensus pricing services (e.g. Totem).

In light of the considerations above, our paper is intended to answer the following three interconnected Research Questions:

- Q1: Which are the most critical model risk factors, to which exposure modelling and thus XVA are most sensitive?

- Q2: How to set the XVA calculation parameters in order to achieve an acceptable compromise between accuracy and performance?

- Q3: How to quantify the model risk affecting XVA figures?

We address these questions as follows. We identify all the relevant calculation parameters involved in the Monte Carlo simulation used to compute the exposure and the XVA figures under different collateralization schemes. For each parameter we quantify its relevance in terms of impacts on XVA figures and computational effort required. Putting all these results together, we may identify the parametrization which allows a compromise between accuracy and performance, i.e. leading to sufficiently robust XVA figures in a reasonable time, a very important feature for practical applications. As a consequence, we are also able to provide a quantification of the XVA model risk stemming from the existence of a range of different pricing model calibrations, numerical methods and their related parameterizations according to the EU provisions.

For these purposes we adopt an industry-standard realistic and complete modelling framework, typically adopted by XVA trading desks, including both VM and ISDA-SIMM dynamic IM, based on real market data, i.e. distinct discounting and forwarding yield curves, CDS spread curves, and swaption volatility cube. We apply this framework to the most widespread derivative financial instruments, i.e. interest rate Swaps and physically settled European Swaptions, both with different maturities and moneyness. The stochastic dynamics of the underlying risk factors is modelled with a multi-curve two-factor G2++ short rate model with time-dependent volatility, which, including 19 parameters (see Table 15), allows a richer yield curve dynamics and a better calibration of the market swaption cube. Our XVA numerical implementation is based on a multi-step Monte Carlo simulation with nested exposures calculated by means of analytical formulas for Swaps and semi-analytical formulas for Swaptions. Since the G2++ model allows for an analytical expression for the transition probability under the T-forward measure, we may use a parsimonious time simulation grid able to capture the spikes arising in collateralized exposure during the margin period of risk. The Monte Carlo XVA figures are also compared against the results obtained through analytical XVA formulas available for Swaps. The latter require the valuation of a strip of co-terminal European Swaptions for which we used both the G2++ and the SABR models, calibrated to the same market swaption cube.

Regarding collateral modelling, since VM and IM determine important mitigations of XVA figures, it is crucial to correctly model their dynamics taking into account the most important collateral parameters, i.e. the margin threshold, the minimum transfer amount, and the margin period of risk. On the one hand, extensive literature exists regarding dynamic VM modelling (see e.g. Brigo et al., 2013, 2018, 2019). On the other hand, dynamic IM modelling of the ISDA-SIMM involves the simulation of several forward sensitivities and their aggregation according to a set of predefined rules, posing difficult implementation and computational challenges. Different methods have been proposed to overcome such challenges: approximations based on normal distribution assumptions (see Gregory, 2016; Andersen et al., 2017), approximated pricing formulas to speed up the calculation (see e.g. Zeron & Ruiz, 2018; Maran et al., 2021), adjoint algorithmic differentiation (AAD) for fast sensitivities calculation (see e.g. Capriotti & Giles, 2012; Huge & Savine, 2020) and regression techniques (see e.g. Anfuso et al., 2017; Caspers et al., 2017; Crépey & Dixon, 2020). We focus on the implementation of ISDA-SIMM avoiding as much as possible any approximation, computing forward sensitivities by a classic finite-difference (“bump-and-run”) approach which, although computationally intensive, may be used when performance is not critical.

The choice of the risk factors dynamics is a crucial aspect and should be based on a careful balance between the model sophistication and the corresponding unavoidable calibration and computational constraints. Even though our G2++ model does not embed advanced features like stochastic volatility (see Bormetti et al., 2018), stochastic basis (see Konikov & McClelland, 2019) or stochastic credit process (see Glasserman & Xu, 2014), it is commonly preferred by financial institutions for several reasons, as extensively argued in Green (2015) (Sects. 16.1.3, 16.3, 19.1.2) and Gregory (2020) (Sect. 15.4.2), which we summarize here: (i) more sophisticated models require the calibration of additional model parameters which are difficult to manage, particularly if one takes into account the complex covariance structure associated with multiple stochastic risk factors; (ii) more sophisticated models are typically much more computationally demanding and easily become unsustainable, e.g. because they do not allow for analytical formulas for transition probabilities and/or for the price of plain vanilla instruments; (iii) simpler models, like the G2++ adopted in this work, allow (semi-)analytical pricing formulas for the most widespread plain vanilla instruments, like Swaps and Swaptions; (iv) since we deal with trades under collateral, which reduces and possibly neutralizes the corresponding exposures, the sophistication of the stochastic dynamics chosen for risk factors simulation is dominated in importance by the modelling choices adopted for the collateral dynamics, which we extensively discuss in this work; (v) in particular, for interest rate derivatives, the adoption of a stochastic basis between discounting and forward rates is not crucial since the sensitivity w.r.t. discounting rates is much smaller, and, historically, the volatility of the basis is typically much smaller than the volatility of the corresponding rates.
A stochastic basis would play a role only in the case of basis swaps, as discussed in Konikov and McClelland (2019), which are typically traded on the OTC interbank market for hedging purposes. Moreover, the ongoing financial benchmark reform is gradually reducing the importance of such instruments due to the IBOR rates cessation.

Finally, it is worth noting that, while the multi-curve single-factor G1++ model is commonly used, and may also be found in commercial software packages, the multi-curve two-factor G2++ model with time-dependent volatility parameters is less straightforward and, to the best of our knowledge, less widespread. For this reason, we report all the relevant G2++ equations in “Appendix A.3”. As a consequence, this work also serves as a handbook containing step-by-step instructions for the implementation of a complete, realistic and robust modelling framework of collateralized exposure and XVA.

The aforementioned considerations led us to the choice of our G2++ framework, in order to provide an XVA model risk investigation based on a realistic XVA pricing architecture, typically adopted by XVA trading desks, and consistent with the prescriptions of the EU prudent valuation framework.

The paper is organized as follows. In Sect. 2 we briefly recall the XVA framework and the numerical steps involved in the calculation. In Sect. 3 we show the results both in terms of counterparty exposure and XVA figures for the selected financial instruments and collateralization schemes using the target parameterization of the framework, allowing an acceptable compromise between accuracy and performance. In Sect. 4 we report the analyses conducted on model parameters in order to answer the research questions n. 1 and n. 2 above. In Sect. 5 we describe the calculation of the AVA MoRi, answering research question n. 3 above. In Sect. 6 we draw the conclusions. Finally, Appendices A to D report many details related to the corresponding main sections.

2 XVA pricing framework

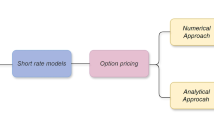

The framework required for XVA calculation including Variation and Initial Margins is a complex combination of many theoretical and numerical approaches that we summarize in the following list.

1. The general no-arbitrage pricing formulas for financial instruments subject to XVA, discussed in “Appendix A.1”.

2. The description of the financial instruments that we wish to test in our XVA calculations, i.e. interest rate Swaps and European Swaptions, discussed in “Appendix A.2”.

3. The G2++ model adopted to describe the evolution of forward and discount curves, discussed in “Appendix A.3”, including: (i) the multi-curve, time-dependent volatility G2++ stochastic dynamics (“Appendix A.3.1”), (ii) the corresponding G2++ pricing formulas for Swaps and European Swaptions (“Appendix A.3.2”), (iii) the calibration procedure of G2++ model parameters to the available market data (“Appendix A.3.3”), and (iv) the G2++ dynamics under the forward measure, suitable for efficient Monte Carlo simulation (“Appendix A.3.4”).

4. The model adopted to describe the XVA, discussed in “Appendix A.4”, including: (i) the XVA definition and pricing formulas (“Appendix A.4.1”), (ii) the discretized XVA formulas suitable for Monte Carlo simulation (“Appendix A.4.2”), and (iii) the analytical XVA formulas applicable to single, uncollateralized linear derivatives (“Appendix A.4.3”).

5. The model adopted to describe the collateral dynamical evolution, discussed in “Appendix B”, including: (i) the formulas for the collateralised exposure with both VM and IM (“Appendix B.1”), (ii) the formulas to dynamically compute VM (“Appendix B.2”), and (iii) the formulas to dynamically compute IM (“Appendix B.3”) according to the ISDA Standard Initial Margin Model formulas (“Appendix B.3.2”).

6. The market data set used to calibrate the G2++ model parameters and to compute the XVA, discussed in “Appendix D”.

The framework described above requires a precise sequence of calculation steps to compute XVA for the selected instruments, that can be summarized as follows.

1. Calibration of the G2++ model parameters to market data (see Sect. A.3.3).

2. Construction of the parsimonious time grid \(\left\{ t_i\right\} _{i=0}^N\) for MC simulation, which includes both the primary time grid \(\left\{ {\bar{t}}_i\right\} \) and the collateral time grid \(\left\{ {\hat{t}}_i\right\} \) (see Sect. 4.2.2).

3. Simulation of the processes \([x_m(t_i), y_m(t_i)]\) for each time step \(t_i, i=1,\ldots ,N\) and Monte Carlo path \(m = 1,\dots ,N_{MC}\) according to the G2++ dynamics under the forward measure (see “Appendix A.3.4”).

4. Calculation of the collateralized exposure \(H_m(t_i)\) for each time step \(t_i\) and simulated path m (see Eq. B1). This requires the following sub-steps:

   a. compute the instrument’s future mark-to-market values \(V_{0,m}({\bar{t}}_i)\) at time \({\bar{t}}_i\) on the primary time grid using the G2++ pricing formulas (see “Appendix A.3.2”);

   b. compute the Variation Margin \(\text {VM}_m({\bar{t}}_i)\) available at time \({\bar{t}}_i\) on the primary time grid, which is a function of \(V_{0,m}({\hat{t}}_i)\) at the previous time \({\hat{t}}_i = {\bar{t}}_i-l\) on the collateral time grid (see Eq. B2);

   c. compute the ISDA-SIMM dynamic Initial Margin \(\text {IM}_m({\bar{t}}_i)\) available at time \({\bar{t}}_i\) on the primary time grid, which is a function of the instrument Delta \(\Delta _m^c({\hat{t}}_i)\) and Vega \(\nu _m({\hat{t}}_i)\) sensitivities at the previous time \({\hat{t}}_i = {\bar{t}}_i-l\) on the collateral time grid (see Eq. B8).

5. Calculation of EPE \({\mathcal {H}}^{+} (t;t_i)\) and ENE \({\mathcal {H}}^{-} (t;t_i)\) for each time step \(t_i\) (see Eqs. A60 and A61).

6. Calculation of survival probabilities for each time step \(t_i\) from default curves built from market CDS quotes.

- 7.

As discussed in the introduction, our multi-curve, time-dependent volatility G2++ model has a number of important characteristics for XVA calculation: (i) it allows perfect calibration of the market term structures of both discount and forward curves, (ii) it allows a better calibration of the market term structure of volatility, (iii) it allows a volatility skew that can be fitted, at least partially, to the market volatility skews, (iv) it allows, under the forward probability measure, an efficient Monte Carlo simulation, (v) it allows analytical pricing formulas for European Options (Caps/Floors/Swaptions).
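To give a feel for why exact Gaussian transitions make a non-uniform simulation grid cheap, the following is a minimal sketch of the simulation of the two G2++ factors on an arbitrary time grid. It is a deliberately simplified illustration and not the scheme of “Appendix A.3.4”: it assumes constant (not time-dependent) volatilities, works under the risk-neutral measure rather than the forward measure, and the function name `simulate_g2pp_factors` and all parameter values are hypothetical.

```python
import numpy as np

def simulate_g2pp_factors(times, a, sigma, b, eta, rho, n_paths, seed=0):
    """Simulate the G2++ factors (x, y) with exact Gaussian transitions.

    Simplifying assumptions (not the paper's setup): constant parameters,
    risk-neutral measure (zero drift besides mean reversion), x(0) = y(0) = 0.
    Returns two arrays of shape (len(times), n_paths).
    """
    rng = np.random.default_rng(seed)
    times = np.asarray(times, dtype=float)
    x = np.zeros((len(times), n_paths))
    y = np.zeros((len(times), n_paths))
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]  # the step may differ from one interval to the next
        # Exact conditional variances and covariance of the two OU factors over dt
        var_x = sigma**2 * (1.0 - np.exp(-2.0 * a * dt)) / (2.0 * a)
        var_y = eta**2 * (1.0 - np.exp(-2.0 * b * dt)) / (2.0 * b)
        cov_xy = rho * sigma * eta * (1.0 - np.exp(-(a + b) * dt)) / (a + b)
        chol = np.linalg.cholesky(np.array([[var_x, cov_xy], [cov_xy, var_y]]))
        shocks = chol @ rng.standard_normal((2, n_paths))
        # Mean reversion towards zero plus correlated Gaussian shocks
        x[i] = x[i - 1] * np.exp(-a * dt) + shocks[0]
        y[i] = y[i - 1] * np.exp(-b * dt) + shocks[1]
    return x, y
```

Because each transition is sampled from its exact distribution, no time-discretization bias is introduced by taking large or uneven steps, which is the property that a parsimonious grid relies on.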

3 XVA numerical calculations

In this section we report the results for exposure profiles and XVA figures for the financial instruments and the collateralization schemes considered in this work. In order to do so, we use the XVA pricing framework discussed in the previous Sect. 2, and the set of parameters meeting our acceptable compromise between accuracy and performance, discussed in the next Sect. 4 and summarized in Sect. 4.7.

We consider the most widespread derivative instrument, i.e. interest rate Swaps, which are typically traded in at least two very common situations: (i) between banks and their corporate clients, frequently without collateralization, and (ii) between banks for hedging purposes, collateralized with variation margin and (frequently) with initial margin. We include both spot and forward starting Swaps with different maturities and moneyness. Besides linear derivatives, we also consider another common interest rate option, i.e. the physically settled European Swaption, with different moneyness. All the instruments considered are listed in “Appendix A.2.1” (Table 10). Accordingly, we consider three different collateralization schemes: without collateral, with Variation Margin (VM) only, and with both VM and Initial Margin (IM). The case with IM only is not analyzed since IM is typically associated with VM.

Overall, we consider 36 different cases, as shown in the following section reporting the XVA figures (Table 1).

3.1 Exposure results

We show in Fig. 1 the most general and complex case of exposures with VM and IM, and we report in “Appendix C.1” the complete set of results for the instruments listed in Table 10 and the three collateralization schemes mentioned above, along with the corresponding detailed comments.

Fig. 1 EPE and ENE profiles (blue and red solid lines respectively) for the 12 instruments in Table 10, full collateralization scheme with both VM and IM. To enhance plots readability, we excluded the initial time step \(t_0\) showing a very high exposure not yet mitigated by the collateral exchanged MPoR days later. All the quantities expressed as a percentage of the nominal amount. Letters P and R distinguish between payer and receiver instruments, respectively. Model setup as in Sect. 4.7

Overall, we observe that the expected exposure profiles are broadly consistent with those found in the existing literature (see e.g. Brigo et al., 2013; Gregory, 2020). A closer inspection reveals that our approach is able to capture the detailed and complex shape of the exposure mitigated by VM and IM, an important feature for XVA calculation. In particular we notice in Fig. 1, for all the instruments and moneynesses considered, that EPE/ENE profiles display short and medium term flat shapes with spikes appearing with increasing magnitude close to maturity, suggesting that IM turns out to be inadequate to fully suppress the exposure because of its decreasing profile. These spikes originate from the semi-annual jumps in Swaps’ future simulated mark-to-market values at cash flow dates, due to the different frequency of the two legs, captured by VM with a delay equal to the length of the margin period of risk (MPoR, see Sect. 4.2.1 for details).

3.2 XVA results

We report in Table 1 the XVA figures for the full set of 36 cases considered in this work. Notice that we compute XVA from the point of view of the instruments’ holder (i.e. with positive nominal amount N). Accordingly, uncollateralized physically settled Swaptions show non-zero DVA figures.

Regarding uncollateralized XVA, we observe that Swaps display larger CVA (DVA) figures for ITM (OTM) instruments due to the greater probability of observing positive (negative) future simulated mark-to-market values, also reflected in lower Monte Carlo errors. Moreover, CVA is larger than DVA except for the OTM 15Y Swap due to the simulated forward rates structure which causes expected floating leg values greater than those of the fixed leg. Finally, analysing the results for different maturities, the higher risk of 30-year Swaps leads to larger adjustments compared to those maturing in 15 years. Analogous results are obtained for forward Swaps. In this case, the asymmetric effect of simulated forward rates on opposite transactions causes larger CVA and smaller DVA for the OTM payer forward Swap with respect to the OTM receiver one. Slightly lower (absolute) CVA values are observed for the corresponding physically settled European Swaptions, since OTM paths are excluded after the exercise, while DVA values are considerably lower as negative exposure exists only after the expiry.

Regarding XVA with VM only, we observe that the adjustments are reduced on average by approx. two orders of magnitude with respect to the uncollateralized case, and are largely driven by spikes in exposure profiles. In general, DVA figures are greater than CVA ones, since for payer instruments the magnitude of the spikes in ENE becomes larger as maturity approaches, where the default probability increases.

Finally, regarding XVA with VM and IM, we observe that the adjustments are reduced on average by approx. four orders of magnitude with respect to the uncollateralized case. Here, XVA figures are entirely driven by the spikes closest to maturity which are not fully suppressed by IM, thus confirming the importance of a detailed simulation of the collateralized exposure.

4 XVA model validation

The previous Sects. 2 and 3 suggest that XVA calculation depends on a number of assumptions which affect the results in different ways. We can look at these assumptions as sources of model risk, which need to be properly addressed.

The purpose of this section is threefold: (i) we want to identify and analyze in detail the most significant sources of model risk; (ii) we want to validate our XVA framework by assessing its robustness and tuning the corresponding calculation parameters; (iii) we look for a strategy to set the acceptable compromise between accuracy and performance, a very important feature for practical applications. These analyses will also lead to a distribution of XVA values which will be the basis to compute a model risk measure in the following Sect. 5.

In order to ease the presentation we report the results only for a subset of the instruments listed in Table 10, mainly the 15Y ATM payer Swap and the 5x10Y ATM physically settled European payer Swaption. Analogous results are obtained for the other instruments and are reported in the corresponding sections of “Appendix C”.

4.1 G2++ model calibration

Our XVA framework is based on the G2++ model, whose parameters p are calibrated on market ATM Swaption prices using the procedure described in “Appendix A.3.3”. We will refer to this calibration as the baseline calibration.

Calibrating the model to ATM swaption quotes is a standard approach for at least two reasons: (i) ATM quotes are the most liquid, in particular for the most frequently traded expiries/tenors, (ii) there is less interest in smile risk when one has to deal with many counterparties and large netting sets dominated by linear interest rate derivatives, a typical situation for XVA trading desks. Nevertheless, this choice is not unique, and different approaches can be adopted according to market conditions, specific trades and the relevance of smile risk. For example, a specific calibration approach could be adopted when structuring a new trade for a client, especially in the case of a competitive auction.

Since XVA figures depend on the G2++ model parameters, the G2++ model calibration is a source of model risk, and we address it by considering different alternative calibrations, also including the Swaption smile risk. In particular, we compare the baseline calibration (parameters denoted with p) with six alternative calibrations (parameters denoted with \(p_i\), with \(i=1,\dots ,6\)), which we define by tuning the following features: (i) the maximum expiry of market points used for calibration, (ii) the maximum/minimum strikes of market points used for calibration, and (iii) imposing flat volatility G2++ parameters.

We report in “Appendix C.2” all the details about the 7 different calibrations. We show here in Table 2 the XVA figures obtained with the seven different calibrations for the 15Y ATM payer Swap and the 5x10Y ATM physically settled European payer Swaption, both without collateral. Similar results are obtained for the other collateralization schemes. We observe that the range of values obtained using different model calibrations is always lower than the \(3\sigma \) statistical uncertainty due to MC simulation. Therefore we can conclude that the XVA model risk stemming from different G2++ model calibrations is limited, and that our choice of the baseline calibration on market ATM Swaptions is sufficiently robust for our purposes.

4.2 Time simulation grid

The numerical calculation of XVA in Eqs. A55 and A56 requires the discretization of the integral on a time grid in correspondence of which the exposure is computed using the Monte Carlo simulation, as shown in “Appendix A.4.2”.
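As a rough illustration of what such a discretization looks like in practice, the sketch below uses a common textbook unilateral form, in which the expected positive exposure at each grid point is weighted by the default probability of the counterparty over the preceding interval. It is not meant to reproduce Eqs. A55 and A56: the flat hazard rate, the negative sign convention, the omission of own-survival weighting, the assumption that `epe` is already discounted, and the names `expected_exposures` and `discretized_cva` are all assumptions introduced here.

```python
import numpy as np

def expected_exposures(exposure_paths):
    """EPE and ENE per time step from simulated exposures of shape (n_times, n_paths)."""
    exposure_paths = np.asarray(exposure_paths, dtype=float)
    epe = np.maximum(exposure_paths, 0.0).mean(axis=1)
    ene = np.minimum(exposure_paths, 0.0).mean(axis=1)
    return epe, ene

def discretized_cva(times, epe, hazard_rate, lgd):
    """Unilateral CVA as a sum over the time grid (illustrative convention).

    Assumptions: `epe` is already discounted to today, the counterparty has a
    flat hazard rate, and CVA is reported as a negative adjustment.
    """
    times = np.asarray(times, dtype=float)
    epe = np.asarray(epe, dtype=float)
    survival = np.exp(-hazard_rate * times)
    default_prob = survival[:-1] - survival[1:]  # probability of default in (t_{i-1}, t_i]
    return -lgd * np.sum(epe[1:] * default_prob)
```

With this form, a grid that misses a spike in `epe` simply drops the corresponding term of the sum, which is why the placement of the grid points matters as much as their number.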

The construction of this MC time simulation grid is a crucial step to find an acceptable compromise between accuracy and performance for at least two reasons: (i) a high granularity reduces the discretization error but increases the computational time, leading to poor performance, and (ii) the presence of the margin period of risk requires a careful distribution of the time simulation points in order to capture the spikes in collateralized exposures, which have material impact on XVA. As a consequence, both the granularity and the distribution of the time simulation grid are important model risk factors in XVA calculation.

In order to identify the optimal construction of the time simulation grid we proceed as follows: (i) we analyse the spikes in collateralized exposure using the most accurate choice, i.e. a daily grid; (ii) we propose a workaround which allows all the spikes to be captured using lower granularities; and (iii) we perform a convergence analysis looking for the granularity which ensures an acceptable compromise between accuracy and performance.

4.2.1 Spikes analysis

Spikes arising in collateralized exposure are due to the MPoR, since it implies that the collateral available at time step \(t_i\) depends on instrument’s simulated mark-to-market values at time step \({\hat{t}}_i = t_i - l\), assumed to be the last date at which VM and IM are fully exchanged (see “Appendix B.1”). Clearly, the best possible choice in terms of accuracy is a daily time simulation grid, that both reduces the discretization error in the integrals in Eqs. A55 and A56, and automatically captures all the details of the exposure, including the spikes.

We show in Fig. 2 the EPE/ENE profiles obtained with a daily grid for the 15Y Swap (left-hand side panel) and the 5x10Y Swaption (right-hand side panel) for the three collateralization schemes considered.

Fig. 2 EPE and ENE profiles (blue solid lines) for 15Y ATM payer Swap (left-hand side) and 5x10Y ATM physically settled European payer Swaption (right-hand side), EUR 100 Mio nominal amount, on a daily grid for the three collateralization schemes considered (top: no collateral, mid: VM, bottom: VM and IM). Black crosses: floating leg cash flow dates (semi-annual frequency); red circles: fixed cash flow dates (annual frequency); green triangles: Swaption’s expiry. To enhance Swaption’s plots readability, we omit the collateralized EPE at time steps \(t_0\) and \(t_1\) where the exposure spikes since collateral is exchanged MPoR days later (mid and bottom right-hand panels). In particular EPE(\(t_0\)) is equal to present Swaption’s price (5,030,423 EUR, approx. \(5\%\) of the nominal amount). Other model parameters as in Table 7. Quantities expressed as a percentage of the nominal amount

As can be seen, when only VM is considered, spikes emerge at inception as no collateral is posted, and at cash flow dates as sudden changes in future simulated mark-to-market values are captured by VM with a delay due to MPoR. When also IM is considered, spikes closest to maturity persist due to the downward profile of IM. Further investigations on the nature of the exposure’s spikes are reported in “Appendix C.3”.
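The mechanism behind these spikes can be reproduced with a toy, fully deterministic example. The sketch below assumes a perfect VM with zero threshold and zero minimum transfer amount, so that the collateral held at each step is simply the mark-to-market observed `lag_steps` steps earlier; the function name `residual_after_vm` and the mark-to-market path used in the usage example are hypothetical and unrelated to the instruments analysed in the paper.

```python
import numpy as np

def residual_after_vm(mtm, lag_steps):
    """Residual value mtm(t) - VM(t), with VM(t) = mtm(t - lag).

    Toy assumptions: zero threshold, zero minimum transfer amount, no IM,
    and no collateral held before the first margin call settles.
    """
    mtm = np.asarray(mtm, dtype=float)
    vm = np.zeros_like(mtm)
    if lag_steps == 0:
        vm[:] = mtm
    else:
        vm[lag_steps:] = mtm[:-lag_steps]
    return mtm - vm

# Daily mark-to-market: flat at 1.0, then a jump to 1.5 at day 30 (a cash flow date)
mtm = np.where(np.arange(60) < 30, 1.0, 1.5)
residual = residual_after_vm(mtm, lag_steps=10)
positive_exposure = np.maximum(residual, 0.0)
```

In this toy path the positive exposure is non-zero only during the first 10 days, when no collateral has been received yet, and during the 10 days following the jump, until the lagged VM catches up.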

The impact of these spikes on XVA figures is significant: with VM only the contribution is \(+7\%\) and \(+6\%\), respectively, for the 15Y Swap and \(+3\%\) and \(+6\%\) for the 5x10Y Swaption. When IM is also included, the exposure between spikes is suppressed, so CVA and DVA are completely attributable to spikes. In other words, neglecting the spikes would significantly underestimate the (absolute) XVA figures. On the other hand, since using a daily grid is unfeasible in practice, in the next section we look for a possible solution.

4.2.2 Parsimonious time grid

Although a daily grid, as discussed in the previous Sect. 4.2.1, clearly represents the best discrete approximation to compute the XVA integrals in Eqs. A55 and A56, this choice is often unfeasible in practice because of the poor computational performance, as can be observed in the last column of Table 3. Notice that the most time consuming component is the IM, which involves the calculation of several forward sensitivities for each path (see “Appendix B.3.3”). Another bottleneck is the numerical integration of the semi-analytical G2++ pricing formula for Swaptions (see Eq. A29). On the other hand, the adoption of simple, less granular, evenly spaced time grids would be inadequate to capture the spikes in collateralised exposure and could produce biased XVA figures.

In order to overcome these issues we build a parsimonious time simulation grid \(\left\{ t_i\right\} _{i=0}^N\), which is obtained by joining an initial time grid \(\left\{ {\bar{t}}_i\right\} \), evenly-spaced with time step \(\Delta t\), a cash flow grid, including the trade (or portfolio) cash flow dates, and a collateral time grid, where each previous date is shifted by the MPoR. The resulting final joint time grid depends on the initial time step \(\Delta t\) but is no longer evenly spaced. See “Appendix C.3” for more details.
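The construction of the joint grid can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `build_joint_grid`, the use of integer day counts, and the direction of the MPoR shift (here each date is shifted backwards, controlled by the hypothetical `shift_sign` argument) are assumptions, not details taken from the paper.

```python
def build_joint_grid(maturity_days, step_days, cash_flow_days, mpor_days, shift_sign=-1):
    """Sorted union of initial, cash flow and collateral grids, in days from t_0.

    Assumption: dates are integer day offsets and the collateral grid is
    obtained by shifting every primary date by shift_sign * mpor_days.
    """
    # Initial grid: evenly spaced with step step_days, always including maturity.
    initial = set(range(0, maturity_days + 1, step_days)) | {maturity_days}
    # Cash flow grid: trade (or portfolio) cash flow dates up to maturity.
    cash_flows = {d for d in cash_flow_days if 0 <= d <= maturity_days}
    primary = initial | cash_flows
    # Collateral grid: each previous date shifted by the MPoR, kept inside [0, maturity].
    collateral = {d + shift_sign * mpor_days for d in primary}
    collateral = {d for d in collateral if 0 <= d <= maturity_days}
    # The joint grid depends on step_days but is no longer evenly spaced.
    return sorted(primary | collateral)


# Toy 15Y Swap on a 360-day year: semi-annual cash flows, 12M initial step, 2-day MPoR.
semi_annual = range(180, 5401, 180)
joint = build_joint_grid(5400, 360, semi_annual, 2)
standard = build_joint_grid(5400, 360, [], 2)  # no cash flow grid
print(len(joint), len(standard))
```

In this toy setting the grid without cash flow dates never samples the semi-annual dates falling between two annual steps, which is where the exposure spikes are expected to appear.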

We show in Fig. 3 the exposure profiles for the 15Y Swap obtained with different grids for the three collateralization schemes considered. For testing purposes we compare the joint time grid with a standard time grid, obtained by joining the primary time grid with its corresponding collateral time grid, and which does not include the cash flow time grid.

We observe that uncollateralized exposures are similar for both grids (top panels), but the standard grid fails to capture the spikes in exposures with VM (panel c vs d), because of the lack of the cash flow time grid. Adding the IM with the standard grid completely suppresses the residual exposure (panel e), thus leading to null XVA figures. Instead, the joint time grid correctly captures all spikes in the collateralized exposure (panel f) with considerable computational benefits with respect to the daily grid discussed in the previous section. In fact, the joint grid \(\Delta t =1M\) exposures in Fig. 3 (right-hand side) are very similar to the corresponding \(\Delta t =1D\) exposures in Fig. 2 (left-hand side). Similar results are obtained for the 5x10Y Swaption (see “Appendix C.3”).

The parsimonious time simulation grid discussed above is governed by two parameters: the constant granularity \(\Delta t\) used in the initial time grid and the number n of cash flows in the cash flow grid. Since the number of cash flows is fixed exogenously according to the trade or portfolio under analysis, the other parameter \(\Delta t\) can be used to tune the compromise between accuracy and computational performance in the XVA calculation.

We show in Table 3 the XVA results obtained for the 15Y Swap and the 5x10Y Swaption using the joint time grid with different granularities \(\Delta t\), taking the results obtained with the daily time grid as benchmark. We observe that uncollateralized XVA, without spikes, show a good convergence already for low granularities, i.e. \(\Delta t = 6\)M. In fact, the relative grid error is smaller than the MC \(3\sigma \) error. Instead, collateralized XVA require higher granularities, up to \(\Delta t = 1\)M, due to the exposure spikes. In particular, XVA with VM are dominated by the grid error for the Swap (except for DVA with \(\Delta t = 1M\)), and by the MC error for the Swaption (except for DVA with \(\Delta t = 12M,1M\)). XVA with both VM and IM, very small and highly spike dependent, are mainly dominated by the grid error, except for Swaption’s CVA. The differences between collateralized Swaps and Swaptions are not surprising, since in the collateralized exposure for physical Swaptions (i) cash flows and spikes appear only after the Swaptions’ expiry and (ii) many MC paths after the Swaptions’ expiry date go OTM and give zero prices (e.g. the 5x10Y Swaption goes OTM for 45.2% of the MC paths). This is clearly visible in Fig. 10, where the spikes for the 5x10Y collateralized Swaptions (panels d-i) are much smaller w.r.t. the corresponding collateralized Swaps in Fig. 9.

Fig. 3. EPE/ENE profiles for 15Y ATM payer Swap, EUR 100 Mio nominal amount, obtained with standard grid (left-hand side) and joint grid (right-hand side) with monthly granularity. The standard time grid is built by primary + collateral time grids (does not include the cash flow time grid). Other parameters as in Fig. 2. The joint grid \(\Delta t =1M\) exposures are very similar to \(\Delta t =1D\) exposures in Fig. 2 (left-hand side)

Looking at the computational performance (last column), we observe that, overall, the computational time is roughly proportional to the number of time simulation steps \(N_S\). In particular, the monthly grid is approx. 26–27 times faster than the daily grid and 2.3–2.5 times slower than the quarterly grid. Regarding the instruments, the Swaption is approx. 10–12 times slower than the Swap. Regarding the collateral, adding VM costs approx. a factor of 2, and adding also IM costs another factor of 14–15, in total approx. 28–30 times slower than the uncollateralized case, both for the Swap and the Swaption.

In light of this analysis we may confirm that the construction of the time simulation grid is a relevant source of model risk. For the purposes of the present work, we identify \(\Delta t = 1M\) as an acceptable compromise between accuracy and performance.

4.3 Monte Carlo convergence

The Monte Carlo simulation used in this work for XVA calculation (Eq. A60), although computationally intensive, makes it possible to manage the complexities inherent in XVA calculation, such as collateralization. Obviously, the most important parameter for MC is the number of MC scenarios, which has to be tuned to find an acceptable compromise between precision and computational effort.

Accordingly, we investigate the XVA convergence with respect to the number of Monte Carlo scenarios \(N_{MC}\). In order to do so, we assume the XVA figures calculated with a large number of MC scenarios (i.e. \(N_{MC} = 10^6\)) as proxies for the “exact” XVA figures, and we use them as benchmarks to assess the XVA convergence for smaller numbers of scenarios (always using the same seed in the pseudo-random number generator). Furthermore, in order to investigate the XVA Monte Carlo error, we use the upper and lower bounds on EPE/ENE in Eq. A64.
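The convergence test described above can be illustrated on a toy problem. The sketch below is not the paper's Eq. A64: it assumes a standard normal "mark-to-market" value, estimates the expected positive exposure \({\mathbb {E}}[\max (X,0)]\) by plain Monte Carlo, and reports a \(3\sigma \) band built from the sample standard error. The function name `epe_estimate` and the toy distribution are hypothetical. Because the same seed is reused, smaller runs are prefixes of larger ones, mirroring the fixed-seed setup.

```python
import math
import random


def epe_estimate(n_paths, seed=42):
    """Monte Carlo estimate of E[max(X, 0)], X ~ N(0, 1), with a 3-sigma band."""
    rng = random.Random(seed)  # same seed for every run, as in the convergence test
    payoffs = [max(rng.gauss(0.0, 1.0), 0.0) for _ in range(n_paths)]
    mean = sum(payoffs) / n_paths
    variance = sum((p - mean) ** 2 for p in payoffs) / (n_paths - 1)
    std_err = math.sqrt(variance / n_paths)
    return mean, mean - 3.0 * std_err, mean + 3.0 * std_err


# In this toy case the exact value is known in closed form: 1 / sqrt(2 * pi).
exact = 1.0 / math.sqrt(2.0 * math.pi)
for n in (1000, 5000, 20000):
    mean, lower, upper = epe_estimate(n)
    rel_diff = abs(mean / exact - 1.0)
    print(n, round(mean, 4), round(upper - lower, 4), round(100.0 * rel_diff, 2))
```

The width of the band shrinks like \(1/\sqrt{N_{MC}}\), so quadrupling the number of scenarios only halves the MC error while the cost grows linearly.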

In order to clarify the MC convergence, we show in Fig. 4 the XVA convergence diagrams for the 5x10Y Swaption, for the three collateralization schemes considered.

Fig. 4. CVA (l.h.s) and DVA (r.h.s) convergence diagrams versus number of MC scenarios for the 5x10Y ATM physically settled European payer Swaption, EUR 100 Mio nominal amount, and the three collateralization schemes considered (top: no collateral, mid: VM, bottom: VM and IM). Left-hand scale, black line: simulated XVA; grey area: \(3\sigma \) confidence interval; dashed red line: “exact” value proxies (we omit their small confidence interval). Right-hand scale, blue line: convergence rate in terms of absolute percentage difference w.r.t. “exact” values. Model parameters other than \(N_{MC}\) as in Table 7

We observe that XVA converge, for all collateralization schemes, to “exact” values with small absolute percentage differences already for few paths (i.e. \(N_{MC} = 1000\)). As expected, higher differences can be observed for IM (bottom panels) due to small XVA values; nevertheless, \(N_{MC} \ge 5000\) ensures an absolute percentage difference below 5%. Similar results are obtained for the 15Y Swap (see “Appendix C.4”). Regarding the computational effort, since it scales linearly with the number of simulated paths, we observe that beyond \(N_{MC} = 5000\) the benefits in terms of accuracy would be exceeded by the computational costs, particularly for IM.

In light of this analysis we may identify \(N_{MC} = 5000\) as an acceptable compromise between accuracy and performance for the purposes of the present work.

4.4 Forward vega sensitivity calculation

In this section we report the analyses conducted to validate the approach adopted to calculate the Vega sensitivity when simulating ISDA-SIMM dynamic IM (see “Appendix B.2”), which is a source of model risk for the Swaption’s XVA.

4.4.1 Sensitivity to G2++ parameters

ISDA-SIMM defines Vega sensitivity as the price change with respect to a 1% shift up in ATM shifted-Black implied volatility. Since the G2++ pricing formula for European Swaption does not depend explicitly on the Black implied volatility (see Eq. A29), in our framework Vega for Swaptions cannot be calculated at future time steps according to ISDA prescriptions. In “Appendix B.3.3” we propose an approximation scheme to calculate forward Vega by shifting up the G2++ model parameters governing the underlying process volatility. In order to validate this approach, we compare the Vega obtained at valuation date \(t_0\) through Eq. B31 with a “market” Vega and a “model” Vega, both consistent with ISDA prescriptions. Specifically, for a given combination of expiry and tenor, we computed the following three Vega sensitivities,

where \(\nu _{1}\) denotes the “market” Vega obtained by shifting the ATM Black implied volatility by \(+1\%\) and re-pricing the Swaption via Black pricing formula; \(\nu _{2}\) denotes the “model” Vega obtained by shifting the ATM Black implied volatility matrix by \(+1\%\), re-pricing market Swaptions via Black pricing formula, re-calibrating the G2++ parameters p on these prices, and computing the Swaption price using the re-calibrated parameters \({\hat{p}}\); \(\nu _{3}\) denotes the Vega obtained according to the approximation outlined in “Appendix B.3.3”, i.e. by applying the shocks \(\epsilon _{\sigma }\) and \(\epsilon _{\eta }\) on the G2++ parameters \(\sigma \) and \(\eta \) governing the underlying process volatility, recomputing the G2++ Swaptions’ prices and the corresponding Black implied volatilities.
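As an illustration of the “market” Vega \(\nu _{1}\), the following sketch bumps the shifted-Black implied volatility and re-prices a payer Swaption. It is a minimal sketch under stated assumptions: the 1% shift is applied additively (0.01 in volatility units), the annuity is passed in as a given number, and all function names and numerical inputs are hypothetical.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def shifted_black_payer(forward, strike, vol, expiry, annuity, shift):
    """Shifted-Black price of a European payer Swaption."""
    f, k = forward + shift, strike + shift
    if f <= 0.0 or k <= 0.0:
        raise ValueError("shifted forward and strike must be strictly positive")
    std = vol * math.sqrt(expiry)
    d1 = (math.log(f / k) + 0.5 * std * std) / std
    d2 = d1 - std
    return annuity * (f * norm_cdf(d1) - k * norm_cdf(d2))


def market_vega(forward, strike, vol, expiry, annuity, shift, bump=0.01):
    """Price change for an additive bump of the implied volatility (assumed +1%)."""
    bumped = shifted_black_payer(forward, strike, vol + bump, expiry, annuity, shift)
    base = shifted_black_payer(forward, strike, vol, expiry, annuity, shift)
    return bumped - base


# Toy ATM Swaption: 1% forward and strike, 30% shifted-Black volatility,
# 5Y expiry, annuity 9, Black shift 1% (all illustrative values).
nu_1 = market_vega(0.01, 0.01, 0.30, 5.0, 9.0, 0.01)
print(nu_1)
```

The same bump-and-reprice pattern applies to \(\nu _{2}\) and \(\nu _{3}\), with the Black re-pricing replaced by a G2++ re-calibration or by a shock to the G2++ volatility parameters, which are not sketched here.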

In addition, we also tested Eq. 3 against different values of the shocks \(\epsilon _{\sigma }, \epsilon _{\eta }\), considering both \(\epsilon _{\sigma } = \epsilon _{\eta }\) and \(\epsilon _{\sigma } \ne \epsilon _{\eta }\). In the latter case, we recovered the values for the shocks from the re-calibrated parameters \({\hat{\sigma }}\) and \({\hat{\eta }}\) of Eq. 2, i.e. \(\epsilon _{\sigma } = 1\%\) and \( \epsilon _{\eta } = 4\%\). The results of the comparison are reported in Table 4.

We observe that Vega sensitivities are fairly aligned among the three approaches and the different shock values examined. Hence, at the initial time step \(t_0\) our approximation produces Vega sensitivity and Vega Risk values consistent with those obtained by applying the ISDA definition. Therefore, we assume that this approach can be adopted also for future time steps. As regards the choice of shock sizes, in order to avoid any arbitrary element, we decide to compute forward Vega by using the re-calibrated \({\hat{\sigma }}\) and \({\hat{\eta }}\), corresponding to \(\epsilon _{\sigma } = 1\%\) and \( \epsilon _{\eta } = 4\%\).

4.4.2 Implied volatility calculation

Looking closely at the Monte Carlo simulation of forward swap rates, we find that some paths exhibit deeply negative rates, exceeding (in absolute terms) the value of the Black shift \(\lambda _{x}(t_0)\) used at the initial time step \(t_0\) in the calibration of the model parameters. This feature prevents the calculation of Black implied volatilities at future time steps, needed to compute Vega sensitivity according to Eq. 3. In Fig. 5 we show the MC simulation of the 5x10Y forward swap rate, where in 2538 paths out of 5000 (51% of the total) the rate falls below \(-\lambda _{6\text {m}}(t_0)=-1\%\) for at least one time step.
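The path count quoted above amounts to checking, for each simulated path, whether the shifted forward rate stops being strictly positive at some time step. A minimal sketch follows; the function name `breach_count` and the three toy paths are hypothetical, and the rates are plain decimals.

```python
def breach_count(paths, shift):
    """Number and fraction of paths where rate + shift <= 0 at some time step."""
    breaching = sum(1 for path in paths if min(path) <= -shift)
    return breaching, breaching / len(paths)


# Three toy forward swap rate paths, Black shift of 1%.
toy_paths = [
    [0.010, 0.005, -0.002],   # stays above -1%
    [0.010, -0.012, 0.000],   # breaches -1% once
    [0.010, -0.020, -0.030],  # breaches -1% twice
]
print(breach_count(toy_paths, 0.01))
```

On any breaching path the logarithm in the shifted-Black formula is undefined at the offending time step, which is why the implied volatility cannot be computed there.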

In light of this fact, in order to ensure Vega sensitivity calculation for each time step and path, we are forced to use Black shift values larger than those necessary and sufficient at time step \(t_0\). To this end, we analysed the impact of different Black shifts on shifted-Black implied volatility, Vega sensitivity and Vega Risk at \(t_0\). The results for the 5x10Y ATM Swaption are reported in Table 5. We observe that shifted-Black implied volatility and Vega sensitivity are highly impacted by the different Black shifts, but the Vega Risk, given by the product of the two quantities (see Eq. B16), is fairly stable, differing up to a maximum of 2% w.r.t. the case \(\lambda _{6\text {m}}=1\%\).
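The stability of the Vega Risk under a change of Black shift can be reproduced qualitatively on a toy ATM Swaption. The sketch below keeps the price fixed, inverts the shifted-Black formula by bisection for two shifts, and multiplies the implied volatility by a bump-and-reprice Vega. The inputs, the additive 1% bump and the function names are assumptions, and the numbers are not those of Table 5.

```python
import math


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def shifted_black_payer(forward, strike, vol, expiry, annuity, shift):
    """Shifted-Black price of a European payer Swaption."""
    f, k = forward + shift, strike + shift
    std = vol * math.sqrt(expiry)
    d1 = (math.log(f / k) + 0.5 * std * std) / std
    return annuity * (f * norm_cdf(d1) - k * norm_cdf(d1 - std))


def implied_vol(price, forward, strike, expiry, annuity, shift, lo=1e-6, hi=5.0):
    """Invert the shifted-Black formula by bisection (price is increasing in vol)."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if shifted_black_payer(forward, strike, mid, expiry, annuity, shift) < price:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def vega_risk(price, forward, strike, expiry, annuity, shift, bump=0.01):
    """Return (implied vol, Vega for an additive bump, their product)."""
    vol = implied_vol(price, forward, strike, expiry, annuity, shift)
    bumped = shifted_black_payer(forward, strike, vol + bump, expiry, annuity, shift)
    vega = bumped - shifted_black_payer(forward, strike, vol, expiry, annuity, shift)
    return vol, vega, vol * vega


# One fixed price, read through two different Black shifts (1% and 6%).
price = shifted_black_payer(0.01, 0.01, 0.30, 5.0, 9.0, 0.01)
for shift in (0.01, 0.06):
    print(shift, vega_risk(price, 0.01, 0.01, 5.0, 9.0, shift))
```

In this toy case the implied volatility and the Vega each change by more than a factor of three between the two shifts, while their product moves by only a few percent, since for an ATM option the volatility scales roughly with the inverse of the shifted forward and the Vega scales roughly with the shifted forward itself.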

We conclude that Black shift values larger than those typically used at \(t_0\) ensure the inversion of the Black formula for each path with an acceptable accuracy in Vega Risk. For this reason we set \(\lambda _{6\text {m}} = 6\%\) in our calculations.

4.5 XVA sensitivities to CSA parameters

In order to establish the most relevant CSA parameters driving the exposure and the XVA, we analysed the corresponding sensitivities with respect to the most important CSA parameters, i.e. the margin threshold K, the minimum transfer amount MTA, and the length of margin period of risk (MPoR), keeping the other model parameters as in Table 7. In carrying out this analysis we distinguished between the following three collateralization schemes for both Swaps and Swaptions: (i) XVA with VM only; (ii) XVA with VM and IM, with K and MTA applied on VM only; (iii) XVA with VM and IM, with K and MTA applied on both VM and IM.

We report all the results in “Appendix C.5”. Overall, we found that MPoR does not contribute significantly with respect to K and MTA parameters. In particular, the threshold K is the most important parameter. As expected, for increasing values of K and MTA, the collateralized XVA converges to the uncollateralized value. We notice that this analysis does not identify XVA model risk factors, but it is very useful in practical situations, in particular when collateral agreements are negotiated, as happened widely during the financial benchmarks reform.
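The role of K and MTA can be made concrete with a generic variation margin update rule. This is a textbook-style sketch and not the paper's Appendix B formula: it assumes a bilateral CSA with the same threshold and minimum transfer amount on both sides, and the function names are hypothetical. In a full simulation the value passed in would be the mark-to-market observed at the lagged date, i.e. MPoR days before the exposure date.

```python
def target_collateral(value, threshold):
    """Collateral called on the part of the value beyond the threshold, either way."""
    return max(value - threshold, 0.0) + min(value + threshold, 0.0)


def update_vm(current_vm, value, threshold, mta):
    """New VM balance: move to the target only if the transfer reaches the MTA."""
    target = target_collateral(value, threshold)
    transfer = target - current_vm
    return target if abs(transfer) >= mta else current_vm


print(update_vm(0.0, 10.0, 0.0, 0.0))    # K = MTA = 0: fully collateralized
print(update_vm(0.0, 10.0, 100.0, 0.0))  # large K: no collateral is called
print(update_vm(0.0, 10.0, 0.0, 20.0))   # large MTA: the transfer is blocked
```

With a large enough threshold or minimum transfer amount no collateral ever moves, which is consistent with the collateralized XVA converging to the uncollateralized value.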

4.6 Monte Carlo versus analytical XVA

As shown in “Appendix A.4.3”, in the case of uncollateralized Swaps, there exist analytical XVA formulas in terms of an integral over the values of co-terminal European Swaptions (see Eqs. A65, A66).

The corresponding numerical solution requires the discretization of the integrals on a time grid (see Eqs. A67, A68), whose granularity clearly introduces a model risk in the XVA figures. Therefore, we tested these formulas for different time grids with different frequencies. Moreover, given the model independent nature of this approach, we calculated co-terminal Swaptions’ prices according to two different approaches:

1. using our G2++ model, Eq. A29, with G2++ parameters in Table 7;

2. using the shifted-SABR model, using shifted-Black formulas and shifted-lognormal SABR volatilities (see Hagan et al., 2002; Obloj, 2007) calibrated on the market swaption cube for each available smile section. We stress that this approach is not straightforward, since typically only a few co-terminal Swaptions entering into the XVA analytical formula correspond to quoted smile sections, where the SABR formula can be directly used. All the remaining Swaptions insisting on non-quoted smile sections require delicate interpolation/extrapolation of the calibrated SABR parameters (see “Appendix A.4.3” for further details).

The purpose of this analysis is twofold: on the one hand, we want to test the results of the Monte Carlo approach against analytical formulas; on the other hand, we want to quantify the model risk stemming from the use of alternative pricing models.

The results for the 15Y ATM and OTM payer Swaps are shown in Table 6. We observe that, with respect to the Monte Carlo approach (last column), analytical formulas generally underestimate CVA and DVA values. The G2++ results (third column) are always consistent with the \(3\sigma \) Monte Carlo error (marked with an asterisk), with only one exception (the DVA of the OTM Swap with annual time grid granularity). This evidence confirms the robustness of the Monte Carlo simulation parameterized as discussed in the previous Sect. 4.3. Instead, the SABR results (fourth column) show considerable differences, particularly for the CVA, which is consistent with the \(3\sigma \) Monte Carlo error only in one case. This is not surprising, since we are using two completely different dynamics of the underlying risk factors (G2++ for MC vs SABR for analytical). In terms of computational performance, the analytical approach is obviously much faster than the Monte Carlo approach: even with a daily time grid robust results can be obtained almost immediately, meaning that, for an uncollateralized Swap, the analytical approach can replace the Monte Carlo approach where performance is critical.
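The discretized analytical formula has the general shape of a default-probability-weighted sum over the strip of co-terminal Swaptions. The sketch below shows that shape only, under simplifying assumptions that may differ from Eqs. A67 and A68: it is unilateral (no own-survival weighting), it evaluates each Swaption at the end of its interval, and it returns a magnitude, so the sign convention is left to the caller. The function name and the toy inputs are hypothetical; the Swaption prices may come from any pricing model.

```python
def analytical_cva(swaption_prices, survival_probs, lgd):
    """LGD * sum_i [S(t_{i-1}) - S(t_i)] * Swaption(t_0; t_i), as a positive magnitude.

    swaption_prices: co-terminal Swaption prices at t_0 for expiries t_1..t_N
    survival_probs:  counterparty survival probabilities S(t_0)..S(t_N)
    """
    if len(survival_probs) != len(swaption_prices) + 1:
        raise ValueError("need one survival probability per grid date, t_0 included")
    total = 0.0
    for i, price in enumerate(swaption_prices, start=1):
        default_prob = survival_probs[i - 1] - survival_probs[i]
        total += default_prob * price
    return lgd * total


# Toy strip with two expiries (illustrative numbers only).
print(analytical_cva([1.0, 2.0], [1.0, 0.9, 0.8], 0.6))
```

Refining the time grid adds more terms to the sum, which is how the grid granularity enters the analytical XVA figures.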

4.7 Tuning accuracy versus performance

The model validations performed in the previous sections allowed us to identify the most important model risk factors and the corresponding calculation parameters governing the XVA framework and affecting the XVA figures, both in terms of accuracy and computational performance, which we summarize in Table 7.

In the last column we report the parameter values identified in our model validation analyses which set our acceptable compromise between accuracy and performance. Regarding the CSA parameters, we considered bilateral CSA with \(\text {K}=\text {MTA}=0\) both for VM and IM, with \(l=2\) days, which is common practice and also gets close to the perfect collateralization case.

Essentially, the most important parameters are the number of time steps \(N_S\) in the time simulation grid and the number \(N_{MC}\) of Monte Carlo scenarios. Their product \(N_C = N_S \times N_{MC}\) is proportional to the computational time \(T_C\) required for the XVA calculation, i.e. \(T_C = \alpha N_C\), where the proportionality coefficient \(\alpha \) depends on the hardware available, all else being equal. Hence, given a computational budget \(\Delta T\), i.e. the maximum time that one is willing to wait to compute the XVA figures, tuning precision vs performance roughly amounts to setting \(N_S\) and \(N_{MC}\) such that \(T_C \le \Delta T\).
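The budget rule \(T_C = \alpha N_S N_{MC} \le \Delta T\) can be turned into a small helper. This is a sketch under the linear cost assumption stated above: \(\alpha \) is calibrated from a single timed run, and the function names and the timings in the example are hypothetical. In practice \(\alpha \) would have to be re-estimated for each instrument, collateral scheme and machine.

```python
def calibrate_alpha(elapsed_seconds, n_steps, n_scenarios):
    """Estimate alpha from one timed run, assuming T_C = alpha * N_S * N_MC."""
    return elapsed_seconds / (n_steps * n_scenarios)


def max_scenarios(budget_seconds, alpha, n_steps):
    """Largest N_MC such that alpha * N_S * N_MC stays within the budget."""
    return int(budget_seconds // (alpha * n_steps))


# Toy numbers: a pilot run with 361 time steps and 1000 scenarios took 361 seconds.
alpha = calibrate_alpha(361.0, 361, 1000)
print(max_scenarios(3600.0, alpha, 361))  # scenarios affordable in one hour
```

The same helper can be inverted to find the finest grid affordable for a fixed number of scenarios, since \(N_S\) and \(N_{MC}\) enter the cost symmetrically.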

We stress that this choice is not unique, since it depends on the specific context, in particular: (i) the trades or portfolio under analysis, (ii) the presence of collateral, in particular the IM, (iii) the hardware available, (iv) the calculation time constraints, (v) the desired level of accuracy, (vi) the purpose of the XVA calculation, e.g. either structuring a single trade for a client, or end of day XVA revaluation, or end of quarter accounting fair value measurement, (vii) the purpose of the model validation, e.g. either for the Front Office quants developing the XVA engine for the XVA trading desk, or for the Model Validation quants challenging the Front Office framework.

The considerations above answer our first and second research questions reported in Sect. 1.

5 XVA model risk

According to the EU regulation (see EU, 2013; EC, 2016), financial institutions are required to apply prudent valuation to fair-valued positions in order to mitigate their valuation risk, i.e. the risk of losses deriving from the valuation uncertainty in the exit price of financial instruments. The prudent value has to be computed on top of the fair value, including possible fair valuation adjustments accounted in the income statement, considering 9 different valuation risk factors at the \(90\%\) confidence level from a distribution of exit prices. The corresponding 9 differences between the prudent value and the fair value, called Additional Valuation Adjustments (AVA), are aggregated and finally deducted from the Common Equity Tier 1 (CET1) capital. In particular, the Model Risk (MoRi) AVA, envisaged in art. 11 of EC (2016), comprises the valuation uncertainty linked to the “potential existence of a range of different models or model calibrations used by market participants”. Accordingly, for MoRi AVA the prudent value at a 90% confidence level corresponds to the \(10{\textrm{th}}\) percentile of the distribution of the plausible prices obtained from different models/parameterizations.Footnote 7

Hence, we compute a MoRi AVA based on the analyses described in the previous Sect. 4. In particular, we build the distribution of XVA exit prices by considering the following four sources of model risk: (i) G2++ model calibration approach (see Sect. 4.1), (ii) time grid construction approach and related granularity \(\Delta t\) (see Sect. 4.2), (iii) number of MC scenarios \(N_{MC}\) (see Sect. 4.3), and (iv) the fast analytical XVA formulas for uncollateralized Swaps with different time grid granularities and SABR pricing formulas for the strip of co-terminal Swaptions (see Sect. 4.6).

According to the ranges of parameter values examined in Sect. 4 for the four sources of model risk above, we would obtain a distribution of XVA exit prices including 1440 points for the uncollateralized SwapsFootnote 8 and 1400 points for the uncollateralized Swaption.Footnote 9 Since the production of such a high number of XVA exit prices is computationally prohibitive, we restrict our analysis by considering 236 pointsFootnote 10 for the Swap, and 196 pointsFootnote 11 for the Swaption. Therefore, we compute MoRi AVA at time \(t_0\) asFootnote 12

where:

- \(V(t_0;M) = V_0(t_0) + \text {XVA} \left( t_0; M \right) \) is the fair-value of the instrument, intended as the price obtained from our XVA framework, denoted here with M (see Table 7);

- \(\text {PV}(t_0;M^{*}) = V_0(t_0) + \text {XVA}(t_0;M^{*})\) is the prudent value obtained from the prudent XVA framework, denoted by \(M^{*}\), determined as the \(10{\textrm{th}}\) percentile of the XVA exit price distribution. In other words, \(M^{*}\) ensures that one can exit the XVA at a price equal to or larger than \(\text {PV}(t_0;M^{*})\) with a degree of certainty equal to or larger than 90%Footnote 13;

- the final formula reduces to the CVA only since the EU regulation expressly excludes any own credit risk component, as the DVA, which is filtered out from the CET1 capital; furthermore, we are not considering the valuation uncertainty related to the base value \(V_0(t_0)\).
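Reading the definitions above together, the MoRi AVA presumably amounts to the difference between the CVA of the reference framework M and the CVA at the \(10{\textrm{th}}\) percentile of the distribution, since the base value cancels out and the DVA is excluded. The sketch below implements that reading; the percentile interpolation rule, the function names and the toy CVA distribution are assumptions, with CVA expressed as a negative number.

```python
def percentile(values, q):
    """q-th percentile (0 <= q <= 100) with linear interpolation between order statistics."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(xs) - 1)
    return xs[lower] + (xs[upper] - xs[lower]) * (pos - lower)


def mori_ava(cva_reference, cva_distribution):
    """AVA = CVA(M) - CVA(M*), with CVA(M*) the 10th percentile of the distribution."""
    cva_prudent = percentile(cva_distribution, 10.0)
    return cva_reference - cva_prudent


# Toy CVA distribution (negative numbers, as a percentage of the nominal amount).
cva_points = [-1.10, -1.05, -1.02, -1.01, -1.00, -1.00, -0.99, -0.98, -0.95, -0.90, -0.80]
print(mori_ava(-1.00, cva_points))
```

The closer the reference CVA sits to the conservative tail of the distribution, the smaller the resulting AVA.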

We report in Fig. 6 the CVA distributions for the Swap and the Swaption. We observe that both distributions have a positive skew, with many points concentrated around the left tail. The less conservative points falling in the right tail are attributable to the analytical formulas and to MC simulations with a low number of scenarios and/or less granular time grids for both instruments, as visible in Tables 17 and 18 which detail these distributions.

In the following Tables 8 and 9 we show a summary of the full Tables 17 and 18. Looking at the 15Y Swap, the MoRi AVA, corresponding to the prudent XVA framework \({M_{24}}\) (calibration \(p_2\), joint grid, \(\Delta t = 1\)M and \(N_{MC} = 18{,}000\)), is equal to \(0.20\%\) of the CVA obtained from our XVA framework. For the 5x10Y Swaption, the MoRi AVA, corresponding to \({M_{20}}\) (calibration \(p_1\), joint grid, \(\Delta t = 1\)M and \(N_{MC} = 14{,}000\)), is equal to \(0.66\%\) of the CVA.

In conclusion, we observe that the small relative AVA values are due to the fact that our XVA framework already produces conservative CVA figures, and most of the mass of the XVA distribution is concentrated in the left tail. A different compromise between accuracy and performance, e.g. faster but less accurate, may produce more significant relative AVA values, leading to non-negligible CET1 reductions in the case of large financial institutions with important XVA figures.

The considerations above answer our third research question reported in Sect. 1.

6 Conclusions

In this work we investigated the XVA model risk. To this end we focused on an industry-standard realistic and complete XVA modelling framework, typically used by XVA trading desks, based on multi-curve time-dependent volatility G2++ stochastic dynamics calibrated on real market data, i.e. distinct discounting and forwarding yield curves, CDS spread curves, and swaption volatility cube. The numerical XVA calculation is based on a multi-step Monte Carlo simulation including both dynamic variation margin and initial margins under the ISDA Standard Initial Margin Model. We applied this framework to the most common linear and non-linear interest rates derivatives, i.e. Swaps and European Swaptions with different maturities and strikes. Within this context, we formulated in Sect. 1 three research questions, to which we report the corresponding answers below.

A1. Within the XVA modelling framework above, we were able to identify and investigate the most important model risk factors to which XVA exposure modelling and thus XVA are most sensitive, and to measure the associated computational effort. In particular, we showed that a crucial model risk factor is the construction of a MC time simulation grid able to capture the spikes arising in collateralized exposure during the margin period of risk, which have a material impact on XVA figures. To this end, we proposed a strategy to build a parsimonious and efficient grid which captures all the spikes while reducing the computational effort. Regarding the MC simulation, we observed a convergence of XVA figures even for a limited number of MC scenarios, leaving room for further saving of computational time if necessary. Regarding the simulation of the initial margin, further assumptions are required to compute G2++ forward vega sensitivities according to the ISDA-SIMM prescriptions. The related model risk has been addressed by ensuring that our calculation strategy is aligned with two alternative approaches, both consistent with the ISDA-SIMM definition at time \(t_0\) (i.e. valuation date), and by avoiding any arbitrary elements in the choice of the associated parameters. Finally, we showed that XVA analytical formulas for uncollateralized Swaps represent a useful tool for validating the MC results and for speeding up XVA calculations. In this case we found that model risk arises from the discrete time grid used in the XVA analytical formula and from the model used to price the corresponding strip of co-terminal European Swaptions. XVA figures obtained with the G2++ pricing formulas, consistent with the G2++ dynamics of the underlying risk factors, proved superior to those obtained with the SABR model, which assumes different dynamics.

A2. The model risk analyses above allowed us to identify a parametrization of the XVA modelling framework that strikes a compromise between accuracy and performance, i.e. leading to sufficiently robust XVA figures in a reasonable time, a very important feature for practical applications. Obviously, this choice is not unique, and our analyses allow the parameters to be adapted to different contexts and purposes of the XVA calculation.

A3. Finally, based on the large number of different parameterizations considered in the analyses above, we were able to estimate the XVA model risk using the Additional Valuation Adjustment (AVA) envisaged by the EU regulation as the 10th percentile of the XVA distribution, corresponding to the 90% confidence level for XVA.

Our framework is general and could be extended to include other valuation adjustments, e.g. Funding Valuation Adjustment (FVA) and Margin Valuation Adjustment (MVA), other financial instruments, and XVA calculation at portfolio level. The computational performance could be enhanced by using last generation high dimensional scrambled Sobol sequences generators, which make it possible to reduce the number of scenarios while keeping the MC error under control (see e.g. Atanassov & Kucherenko, 2020; Scoleri et al., 2021). Adjoint algorithmic differentiation (Capriotti & Giles, 2012; Huge & Savine, 2020) or Chebyshev decomposition (Maran et al., 2021) could be used to speed up and stabilize sensitivities calculation for initial margin modelling.

Availability of data and materials

Input market data available in “Appendix D”.

Code Availability

Available on demand.

Notes

IBOR denotes a generic Interbank Offered Rate, such as EURIBOR. 3 M, 6 M, etc. denote the rate tenor, i.e. the time period used to compute the interest amount. Overnight rates have a 1-day tenor.

i.e. yield curves built from market quotations of homogeneous interest rate instruments with the same underlying rate tenor.

Collateral agreements are used to mitigate the counterparty default risk of derivatives transactions. They are typically based on the Credit Support Annex (CSA), a section of the International Swaps and Derivatives Association (ISDA) master agreement used to contractualise OTC derivatives. Collateral remuneration rates are typically overnight rates. Discounting curves are typically built from Overnight Indexed Swaps, based on (compounded) overnight rates. Hence the names “CSA discounting” or “OIS discounting”. This post-crisis valuation framework is different from the previous “single-curve framework”, characterized by a single yield curve, used to compute both forward and discount rates, built from inhomogeneous market instruments with mixed tenors, e.g. Deposits, Futures, Forward Rate Agreements, Swaps, etc.

A novation occurs when a bank A is called to step in an existing trade between another bank B and a client C. Since typically the two banks A and B have a collateral agreement, while the client trade is not collateralized, the XVA exit price is observed in the transaction.

Our Monte Carlo simulation framework depends neither on the specific stochastic dynamics of the risk factors nor on the length of the time simulation steps, and could be used with more complex stochastic dynamics requiring short time simulation steps. Obviously, the corresponding pricing formulas should be plugged into the framework.

From the point of view of the holder, uncollateralised cash-settled Swaptions have zero ENE and DVA. In the presence of collateral, small ENE and DVA figures may appear because of MC scenarios where the received collateral exceeds the Swaption’s price.

Notice that we conventionally adopt positive/negative prices for assets/liabilities.

1440 = 7 G2++ calibrations x 2 time grids x 5 values of \(\Delta t\) x 20 values of \(N_{MC}\) + 40 analytical XVA, where 40 = 7 G2++ calibrations x 5 \(\Delta t\) for G2++ model + 5 \(\Delta t\) for SABR model.

\(1400 = 1440 - 40\), since for the Swaption there are no XVA analytical formulas.

236 = 7 G2++ calibrations x (2 time grids + 5 \(\Delta _t\) + 20 \(N_{MC}\) + 1 baseline) + 40 analytical XVA.

\(196 = 236 - 40\).

EC (2016) prescribes an aggregation coefficient equal to 0.5 to take into account diversification benefit, which we do not consider here.

Notice that, according to our conventions, \(\text {PV}(t_0) \le V(t_0)\) and \(\text {AVA}(t_0)\ge 0\)

In order to ease the notation, in the following sections we omit subscript 0 unless clearly necessary, denoting the base value simply with V.

An ideal Credit Support Annex (CSA) ensuring a perfect match between the price \(V_{0}(t)\) and the corresponding collateral at any time t. This condition is realised in practice with a real CSA minimizing any friction between the price and the collateral, i.e. with daily margination, cash collateral in the same currency of the trade, flat overnight collateral rate, zero threshold and minimum transfer amount.

Namely an issuer with a credit risk equal to the average credit risk of the IBOR panel, see e.g. Morini (2009).

Since \( {\mathbb {E}}^{Q} \left[ D(t;T) V(T) \vert {\mathcal {F}}_t\right] = A(t;{{\textbf {S}}}) {\mathbb {E}}^{Q_{S}} \left[ \frac{V(T)}{A(T;{{\textbf {S}}})} \vert {\mathcal {F}}_t \right] \).

More sophisticated pricing models are also used, typically based on the SABR stochastic volatility model, see Hagan et al. (2016).

Here we assume “risk free” close-out at the mark to market, without any further adjustment.

We neglect here the wrong way risk arising when the exposure with the counterparty is inversely related to the creditworthiness of the counterparty itself.

We interpolated and extrapolated linearly.

Notice that when VM only is considered the collateralized exposure simply reduces to \(H_m(t_i)=V_{0,m} \left( t_i \right) -\text {VM}_m \left( t_i;V_{0,m} ({\hat{t}}_i),\text {K}_\text {VM},\text {MTA}_\text {VM} \right) \).

For the Credit (Qualifying) Risk Class, which includes instruments whose price is sensitive to the correlation between the defaults of different credits within an index or basket (e.g. CDO tranches), an additional margin component, i.e. the BaseCorrMargin, shall be calculated (see ISDA, 2018).

Version 2.1 was effective from 1 December 2018 to 30 November 2019 when Version 2.2 was published. In particular, the values reported in this appendix refer to Version 2.1.

Low Volatility currencies: JPY; Regular Volatility currencies: USD, EUR, GBP, CHF, AUD, NZD, CAD, SEK, NOK, DKK, HKD, KRW, SGD and TWD; High Volatility currencies: all other currencies.

Low Volatility currencies: JPY; Regular Volatility well-traded currencies: USD, EUR, GBP; Regular Volatility less well-traded currencies: CHF, AUD, NZD, CAD, SEK, NOK, DKK, HKD, KRW, SGD, TWD; High Volatility currencies: all other currencies.

See footnote 27.

ISDA defines, for both OIS and IBOR curves, the following 12 tenors at which Delta shall be computed: 2 weeks, 1 month, 3 months, 6 months, 1 year, 2 years, 3 years, 5 years, 10 years, 15 years, 20 years, 30 years.

The Expected Exposure (EE) is defined as: \({\mathcal {H}}(t,t_i) = P(t;t_i) \frac{1}{N_{MC}} \sum _{m=1}^{N_{MC}} H_{m}(t_i)\).
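The EE definition above is a discounted Monte Carlo average, which can be sketched with NumPy as follows. The function name, argument names and array layout (one row per MC path, one column per grid date) are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def expected_exposure(H, discount):
    """Discounted MC average of simulated exposures.

    H        : array of shape (N_MC, n_dates), H[m, i] = H_m(t_i)
    discount : array of shape (n_dates,), discount[i] = P(t; t_i)
    Returns an array of shape (n_dates,) with EE at each t_i.
    """
    H = np.asarray(H, dtype=float)
    discount = np.asarray(discount, dtype=float)
    # (1 / N_MC) * sum over paths m, then multiply by P(t; t_i)
    return discount * H.mean(axis=0)

# Toy usage: two paths, two grid dates
ee = expected_exposure([[1.0, 2.0], [3.0, 4.0]], [1.0, 0.5])
```

In this toy case the path averages are 2.0 and 3.0, so the discounted values are 2.0 and 1.5.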

For the instruments considered in this paper, the fixed cash flow dates are a subset of the floating ones, otherwise both fixed and floating cash flow dates should be added to the time grid.

We abuse the notation naming as \(t_i\) the points of both the initial and the joint grids, since the initial grid is only the starting point of our construction and is never used.

References

Ametrano, F. M., & Bianchetti, M. (2009). Smooth yield curves bootstrapping for forward libor rate estimation and pricing interest rate derivatives. In Modelling interest rates: Latest advances for derivatives pricing. Risk Books.

Ametrano, F. M., & Bianchetti, M. (2013). Everything you always wanted to know about multiple interest rate curve bootstrapping but were afraid to ask. Available at SSRN 2219548.

Andersen, L. B., Pykhtin, M., & Sokol, A. (2016). Credit exposure in the presence of initial margin. Available at SSRN 2806156.

Andersen, L. B., Pykhtin, M., & Sokol, A. (2017). Rethinking the margin period of risk. Journal of Credit Risk 13(1).

Anfuso, F., Aziz, D., & Giltinan, P., et al. (2017). A sound modelling and backtesting framework for forecasting initial margin requirements. Available at SSRN 2716279.

Atanassov, E., & Kucherenko, S. (2020). Implementation of Owen’s scrambling with additional permutations for Sobol’ sequences.

Basel Committee on Banking Supervision and Board of the International Organization of Securities Commissions. (2013). Second consultative document, margin requirements for non-centrally cleared derivatives. Bank for International Settlements.

Basel Committee on Banking Supervision and Board of the International Organization of Securities Commissions. (2015). Margin requirements for non-centrally cleared derivatives. Bank for International Settlements.

Bianchetti, M. (2010). Two curves, one price. Risk, 23(8), 66.

Bielecki, T., & Rutkowski, M. (2004). Credit risk: Modeling, valuation and hedging. Berlin: Springer.

Bormetti, G., Brigo, D., Francischello, M., & Pallavicini, A. (2018). Impact of multiple curve dynamics in credit valuation adjustments under collateralization. Quantitative Finance, 18(1), 31–44.

Brigo, D., Buescu, C., & Francischello, M., et al. (2018). Risk-neutral valuation under differential funding costs, defaults and collateralization (February 28, 2018).

Brigo, D., Capponi, A., & Pallavicini, A. (2014). Arbitrage-free bilateral counterparty risk valuation under collateralization and application to credit default swaps. Mathematical Finance: An International Journal of Mathematics, Statistics and Financial Economics, 24(1), 125–146.

Brigo, D., Francischello, M., & Pallavicini, A. (2019). Nonlinear valuation under credit, funding, and margins: Existence, uniqueness, invariance, and disentanglement. European Journal of Operational Research, 274(2), 788–805.

Brigo, D., & Masetti, M. (2005). Risk neutral pricing of counterparty risk.

Brigo, D., & Mercurio, F. (2007). Interest rate models-theory and practice: With smile, inflation and credit. Berlin: Springer.

Brigo, D., Morini, M., & Pallavicini, A. (2013). Counterparty credit risk, collateral and funding: With pricing cases for all asset classes (Vol. 478). Hoboken: Wiley.

Brigo, D., Pallavicini, A., & Papatheodorou, V. (2011). Arbitrage-free valuation of bilateral counterparty risk for interest-rate products: Impact of volatilities and correlations. International Journal of Theoretical and Applied Finance, 14(06), 773–802.

Burgard, C., & Kjaer, M. (2011). In the balance. Risk, November, 72–75.

Capriotti, L., & Giles, M. (2012). Adjoint Greeks made easy. Risk, 25(9), 92.

Caspers, P., Giltinan, P., Lichters, R., et al. (2017). Forecasting initial margin requirements: A model evaluation. Journal of Risk Management in Financial Institutions, 10(4), 365–394.

Crépey, S., & Dixon, M. (2020). Gaussian process regression for derivative portfolio modeling and application to CVA computations. Journal of Computational Finance, 24, 47–81.

European Commission. (2016). Commission Delegated Regulation (EU) 2016/101 of 26 October 2015 supplementing Regulation (EU) No 575/2013 of the European Parliament and of the Council with regard to regulatory technical standards for prudent valuation under Article 105(14). Official Journal of the European Union.

European Parliament and Council of the European Union. (2013). Regulation (EU) No 575/2013 of the European Parliament and of the Council of 26 June 2013 on prudential requirements for credit institutions and investment firms and amending Regulation (EU) No 648/2012. Official Journal of the European Union.

Glasserman, P., & Xu, X. (2014). Robust risk measurement and model risk. Quantitative Finance, 14(1), 29–58.

Green, A. (2015). XVA: Credit, funding and capital valuation adjustments. Hoboken: Wiley.

Green, A., & Kenyon, C. (2015). MVA: Initial margin valuation adjustment by replication and regression. Available at SSRN 2432281.

Green, A., Kenyon, C., & Dennis, C. (2014). KVA: Capital valuation adjustment. Risk.

Gregory, J. (2016). The impact of initial margin. Available at SSRN 2790227.

Gregory, J. (2020). The XVA challenge: Counterparty risk, funding, collateral, capital and initial margin. Hoboken: Wiley.

Hagan, P. S., Kumar, D., Woodward, A. S., & Lesniewski, D. E. (2002). Managing smile risk. The Best of Wilmott, 1, 249–296.

Hagan, P. S., Kumar, D., Woodward, A. S., & Lesniewski, D. E. (2016). Universal smiles. Wilmott, 84, 40–55.

Henrard, M. (2007). The irony in the derivatives discounting. Wilmott 92–98.

Henrard, M. (2009). The irony in the derivatives discounting part II: The crisis. Wilmott, 2(6), 301–316.

Huge, B., & Savine, A. (2020). Differential machine learning: The shape of things to come. Risk (10).

International Accounting Standards Board. (2011). International financial reporting standard 13—Fair value measurement.

International Swaps and Derivatives Association. (2013). Standard initial margin model for non-cleared derivatives.

International Swaps and Derivatives Association. (2016). ISDA SIMM: From Principles to Model Specification.

International Swaps and Derivatives Association. (2018). ISDA SIMM. Methodology, version 2.1.

Kenyon, C. (2010). Short-rate pricing after the liquidity and credit shocks: including the basis. Risk, November.

Kjaer, M. (2018). KVA Unmasked. Available at SSRN 3143875.

Konikov, M., & McClelland, A. (2019). Multi-curve Cheyette-style models with lower bounds on tenor basis spreads. Available at SSRN 3524703.

Maran, A., Pallavicini, A., & Scoleri, S. (2022). Chebyshev Greeks: Smoothing gamma without bias. Risk, November.

Mercurio, F. (2009). Post credit crunch interest rates: Formulas and market models. Bloomberg portfolio research paper 2010-01.

Morini, M. (2009). Solving the puzzle in the interest rate market. Available at SSRN 1506046.

Morini, M., & Prampolini, A. (2011). Risky funding with counterparty and liquidity charges. Risk, 24(3), 70.

Obloj, D. (2007). Fine-tune your smile: Correction to Hagan et al. Arxiv.

Pallavicini, A., Perini, D., & Brigo, D. (2012). Funding, collateral and hedging: Uncovering the mechanics and the subtleties of funding valuation adjustments. arXiv:1210.3811.

Piterbarg, V. V. (2012). Cooking with collateral. Risk, 25(8), 46.

Scaringi, M., & Bianchetti, M. (2020). No fear of discounting-how to manage the transition from EONIA to €STR. Available at SSRN 3674249.

Scoleri, S., Bianchetti, M., & Kucherenko, S. (2021). Application of quasi Monte Carlo and global sensitivity analysis to option pricing and Greeks: Finite differences vs. AAD. Wilmott, 2021, 66–83.

Zeron, M., & Ruiz, I. (2018). Dynamic initial margin via Chebyshev spectral decomposition. Working paper (24 August).

Acknowledgements

The authors acknowledge fruitful discussions with many colleagues in Intesa Sanpaolo Risk Management and Front Office Departments. A. Principe and M. Terraneo contributed to the early stages of this work. The views and opinions expressed here are those of the authors and do not represent the opinions of their employers, who are not responsible for any use that may be made of these contents.

Funding

The authors did not receive support from any organization for the submitted work.

Ethics declarations

Ethical Approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Appendices

Appendix A: Theoretical framework

In this appendix we detail the theoretical framework used in this work.

1.1 Pricing with collateral and XVA

We describe here the general no-arbitrage, additive pricing formulas for financial instruments subject to XVA.

Assuming no arbitrage and the usual probabilistic framework (\(\Omega ,{\mathcal {F}},{\mathcal {F}}_t,Q\)) with market filtration \({\mathcal {F}}_{t}\) and risk-neutral probability measure Q, the general pricing formula of a financial instrument with payoff V(T) paid at time \(T>t\) is

where the base value (or mark to market),Footnote 14 \(V_{0}(t)\) in Eq. A2, is interpreted as the price of the financial instrument under perfect collateralization,Footnote 15 the discount (short) rate r(t) in Eq. A3 is the corresponding collateral rate, B(t) is the collateral bank account growing at rate r(t), D(t; T) is the stochastic collateral discount factor, P(t; T) is the perfectly collateralized Zero Coupon Bond (ZCB) price, and \(Q^T\) is the T-forward probability measure associated with the numeraire P(t; T).
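As a reading aid, the relations referred to as Eqs. A1–A3 can be sketched as follows. This is a reconstruction from the definitions given in the text (additive adjustment, collateral discounting, change to the T-forward measure), not a verbatim copy of the original display; in particular, the sign convention of the XVA term and the grouping of terms under each equation number are assumptions.

```latex
\begin{align}
V(t) &= V_0(t) + \text{XVA}(t), \tag{A1} \\
V_0(t) &= \mathbb{E}^{Q}\left[ D(t;T)\, V(T) \,\vert\, \mathcal{F}_t \right]
        = P(t;T)\, \mathbb{E}^{Q^T}\left[ V(T) \,\vert\, \mathcal{F}_t \right], \tag{A2} \\
D(t;T) &= \frac{B(t)}{B(T)} = \exp\left( -\int_t^T r(u)\, du \right), \qquad
P(t;T) = \mathbb{E}^{Q}\left[ D(t;T) \,\vert\, \mathcal{F}_t \right]. \tag{A3}
\end{align}
```

The second equality in A2 is the usual change of numeraire from the bank account B(t) to the ZCB P(t; T).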

Valuation adjustments in Eq. A1, collectively named XVA, are a crucial and well-established component of modern derivatives pricing, accounting for additional risk factors not included in the base value \(V_0\) in Eq. A2. These risk factors are typically related to counterparty default, funding, and capital, leading, respectively, to Credit/Debt Valuation Adjustment (CVA/DVA, see e.g. Brigo and Masetti (2005); Brigo et al. (2011, 2014)), Funding Valuation Adjustment (FVA, see e.g. Burgard and Kjaer (2011); Morini and Prampolini (2011); Pallavicini et al. (2012)), often split into Funding Cost/Benefit Adjustment (FCA/FBA), Margin Valuation Adjustment (MVA, see e.g. Green and Kenyon (2015)), and Capital Valuation Adjustment (KVA, see e.g. Green et al. (2014)). A complete discussion of XVA may be found e.g. in Gregory (2020). For XVA pricing we must consider the enlarged filtration \( {\mathcal {G}}_{t}= {\mathcal {F}}_{t}\vee {\mathcal {H}}_{t}\supseteq {\mathcal {F}}_{t}\) where \( {\mathcal {H}}_{t}=\sigma (\{\tau \le u\}:u\le t)\) is the filtration generated by default events. More details can be found in a number of papers, see e.g. Brigo et al. (2013, 2018, 2019) and references therein.

1.2 Financial instruments

We describe here the detailed list of financial instruments considered in this work and their corresponding pricing formulas.

1.2.1 Instruments’ list

According to the discussion in Sect. 3, we show in the following Table 10 the complete list of financial instruments considered in this work.

1.2.2 Interest rate swap

A Swap is a contract which allows the exchange of a fixed rate K against a floating rate, characterised by the following time schedules

and by the following payoffs for the fixed and floating cash flows, respectively,

where \(\tau _{K}\) and \(\tau _{R}\) are the year fractions for fixed and floating rate conventions, respectively, and \(R_x(T_{j-1},T_j)\) is the underlying spot floating rate with tenor x, consistent with the time interval \(\left[ T_{j-1}, T_{j} \right] \) (e.g. \(x=6M\) for EURIBOR 6 M and semi-annual coupons).
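For orientation, the schedules and cash flows described above can be sketched as follows. This is a reconstruction under stated assumptions rather than the original display: the notional N, the common start date \(S_0 = T_0\), and the omission of a payer/receiver sign are illustrative choices, while the fixed schedule S, the floating schedule T and the common maturity \(T_n = S_m\) follow the notation used in the text.

```latex
\begin{align}
\mathbf{S} &= \{ S_0, \dots, S_m \} \ \text{(fixed leg)}, \qquad
\mathbf{T} = \{ T_0, \dots, T_n \} \ \text{(floating leg)}, \qquad
S_0 = T_0, \quad S_m = T_n, \\
\text{fixed cash flow paid at } S_i &: \quad N\, K\, \tau_K(S_{i-1}, S_i),
  \qquad i = 1, \dots, m, \\
\text{floating cash flow paid at } T_j &: \quad N\, R_x(T_{j-1}, T_j)\, \tau_R(T_{j-1}, T_j),
  \qquad j = 1, \dots, n.
\end{align}
```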

The price of the Swap at time \(t\le T_n = S_m\) is given by the sum of the prices of fixed and floating cash flows occurring after t,