Abstract

We compare the performance of various advanced forecasting techniques, namely artificial neural networks, k-nearest neighbors, logistic regression, Naïve Bayes, random forest classifier, support vector machine, and extreme gradient boosting classifier to predict stock price movements based on past prices. We apply these methods with the high frequency data of 27 blue-chip stocks traded in the Istanbul Stock Exchange. Our findings reveal that among the selected methodologies, random forest and support vector machine are able to capture both future price directions and percentage changes at a satisfactory level. Moreover, consistent ranking of the methodologies across different time frequencies and train/test set partitions prove the robustness of our empirical findings.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Accurate forecasting of stock prices provide valuable information for investment decision-making process and economic planning of many economic agents. In particular, firms could plan their business activities and development strategies more efficiently given the tendency of their stock price movements. Investors, for their parts, would be able to design optimal portfolios that offer superior performance than stock markets do. The rationale behind forecasting accuracy is that stock market metrics such as prices, trading volumes, transaction costs and timing hide the predictive and complex patterns of stock price movements. To date, several time-series techniques have been extensively used to forecast stock prices. They include, among others, ARIMA, GARCH-based volatility models, and regime-switching models [see, Rapach and Zhou (2013) for a detailed literature review on this topic]. Past studies also make use of more complex algorithms such as the Bayesian network (Zuo and Kita 2012), the jump-diffusion models (Christensen et al. 2012), the fuzzy time-series models (Cheng and Yang 2018), the variational mode decomposition (Lahmiri 2016), and the data analytical prediction models (Oztekin et al. 2016).

Another important premise for stock price forecasting is whether or not the efficient market hypothesis (EMH) is a reliable paradigm. This cornerstone of financial economics has many implications for modern finance theories and asset pricing models at both national and international levels. As recalled by Ross (2005), the EMH is an neoclassical economic perspective of financial markets. The seminal paper by Fama (1970) defines informational efficiency as the state of the market, when all relevant information is embedded into current asset prices. It then becomes evident that the main element in this definition is the determination of the information set.Footnote 1 Two decades later of his seminal paper, Fama (1991) reexamines the abundant evidence collected during this lapse. The availability of data and the development of the information and communication technologies helped to emerge a bulk of papers aimed at testing different ways of outsmarting the market, creating supposedly profitable trading rules. Fama (1991) thus includes the tests for return predictability in the sense of weak-form efficiency. These tests are of diverse nature: economic, computer or biologically inspired. Ultimately, if the EMH is an adequate description of market behavior, return forecasts (whether they are based on past returns or an addition of other economic and financial variables) should be ruled out.

Our paper contributes to the related literature by testing the weak-form of the EMH for the Istanbul Stock Exchange (also known as Borsa Istanbul), which is the only official stock exchange in Turkey.Footnote 2 It considers high frequency data of individual stocks at different sampling frequency and makes return forecasts by means of a six different methodologies: artificial neural network (ANN), k-nearest neighbors (kNN), Logistic Regression (logistic), Naive Bayes (NB), random forest classifier (RF), support vector Machine (SVM), and extreme gradient boosting (XGBoost).

With these methodologies, we attempt to predict the direction of the future prices (upward or downward movement). This prediction allows for setting a trading rule that triggers a buy (sell) signal in case of an upward (a downward) forecast. Our main results indicate that trading strategies based on price direction change could help investors earn additional returns which should not be the case under the EMH. In particular, we show that among the selected methodologies RF and SVM provide the highest average accuracy rates and ideal profit ratios across different time scales and train/test set partitions. Moreover, kNN also performs equally well especially for the 60-min time frequency. We also observe that logistic regression works well especially at 10-min sample frequency in terms of the ideal profit ratios.

The rest of the paper is organized as follows. Section 2 presents a brief review of recent advanced forecasting methods applied in economics and finance. Section 3 describes our main methodologies. Section 4 explains the sample data whereas Sect. 5 presents the empirical results. Finally, Sect. 6 draws the main conclusions of our research.

2 Stock market forecasts: recent approaches

The usage of advanced forecasting techniques has been quite popular for some time in the context of financial market prediction. McGroarty et al. (2019) introduce an agent based model that can replicate clustered volatility, autocorrelation of returns, long memory in order flow, concave price impact and the presence of extreme price events. Their model allows for profitable high frequency trading strategies. Shang et al. (2019), using functional time series methods with dynamic updating techniques, successfully produce 1-day-ahead forecasts of the VIX index. Fernandes et al. (2019) investigate the predictability of European long-term government bond spreads through the application of heuristic and meta-heuristic support vector regression hybrid structures. Authors show that the sine-cosine LSVR is outperforming its counterparts in terms of statistical accuracy. Hudson and Urquhart (2021) use data from two Bitcoin markets and three other popular cryptocurrencies and find significant predictability and profitability in each cryptocurrency from testing almost 15,000 technical trading rules .

D’Ecclesia and Clementi (2021) estimate the time varying implied volatility of equity returns using the E-GARCH approach, Heston model and a novel ANN framework and show that ANN approach results the most accurate. Göçken et al. (2016) propose a hybrid model consisting in a harmony search and a genetic algorithm along with an ANN, in order to enhance the ability of return forecasts in the Turkish stock market. A hybrid approach was also selected by Qiu et al. (2016). They forecast Nikkei 225 index using an ANN with 18 explanatory variables. They combine the ANN gradient search with a genetic algorithm and simulated annealing to avoid bias in optimization. However, the drawback of this study is that the inclusion of so many input variables could produce multicollinearity among them. Recently, Iglesias Caride et al. (2018) found that ANN could be used to forecast stock price movements in the Brazilian market. This forecast is better for stocks with low market capitalization rather than with large capitalization stocks.

With regards to other machine learning approaches, several expert systems and decision support systems have been introduced. Kyriakou et al. (2021) apply a machine learning technique, namely, a fully nonparametric smoother with the covariates and the smoothing parameter chosen by cross-validation, to forecast stock returns in excess of different benchmarks. Kim and Won (2018) design a hybrid expert system that uses a machine-learning approach. Nadkarni and Neves (2018) emphasize the importance of isolating the most important factors first in algorithmic trading. Some systems focus on predicting the future direction of asset prices instead of the returns themselves (Malagrino et al. 2018; Karhunen 2019; Jeong and Kim 2019). Jeong and Kim (2019) provide an advanced machine-learning applications in the context of expert systems for quantitative trading. Brasileiro et al. (2017) introduce a piece-wise aggregate approximation approach that outperforms the competing methods for US stocks. The model by Feuerriegel and Gordon (2018) successfully reduces the forecast errors below baseline predictions from historic lags at a statistically significant level. Nam and Seong (2019) propose a novel machine learning model to forecast Korean stock price movements using financial news, and show that their approach outperforms traditional algorithms.Footnote 3 Avci et al. (2019) design a model that empirically tests the impact of agents’ attitudes on their price expectation through their trading behaviour and show that their hypothesis holds in forecasting day-ahead electricity price in Turkey.

3 Methodologies

3.1 Artificial neural network

As mentioned earlier, we aim to predict future stock price directions within an intraday (high-frequency) framework. Given that the existence of automatic trading (i.e., computer-triggered sells and buys) in several markets, we particularly aim at testing if ANNs are suitable to forecast changes in prices 10-, 30-, and 60-min ahead. This paper could thus be of interest not only for high frequency traders but also for dealers, designated market-makers, retail day-traders and other market participants as a whole.

In our setup, the forecast is implemented using a resilient propagation ANN (Isasi Viñuela and Galván León 2004). Neurons are organized into layers according to their function: the input layer provides information to the network, the output layer provides the answer and the hidden layers are responsible for carrying out the mapping between input and output (Freeman and Skapura 1991).

In addition, we use a multilayered neural network. It is a feed-forward network, totally connected, organized in three layers: 10 input neurons, six neurons in a single hidden layer and one output neuron. This architecture is equivalent to the one used by Gencay (1999), Lanzarini et al. (2011) and Fernández-Rodríguez et al. (2000). Differently, we introduce a learning algorithm changing from the more common back-propagation to a resilient propagation algorithm. The training is supervised. The resilient propagation updates each weight independently, and is not influenced by the magnitude of the derivative but only by behavior of its sign (Riedmiller and Braun 1993). The network is designed to forecast the market return.

Let \(P=(p_{1}, p_{2}, \ldots , p_{L})\) be the sequence of stock quotes, then the instantaneous return at the moment \(t+1\) is computed as:

where \(p_{t+1}\) and \(p_{t}\) are stock quotes at times \(t+1\) and t, and \(r_{t+1}\) is the continuously compounded return in the period \(t+1\).

In order to improve network performance, the trend of last nine returns which are used as inputs for the networks is calculated using ordinary least squares and used as the 10th input for the model. The supervised training implies knowing the expected value for each of the examples that will be used in the training.

Thus, it is required a set of ordered pairs \(\{(X_{1}, Y_{1}), (X_{2}, Y_{2}), \ldots , (X_{j}, Y_{j}), \ldots , (X_{M}, Y_{M})\}\) being \(X_{j}=(x_{j,1}, x_{j,2}, \ldots , x_{j,10})\) the input vector, from which \(x_{j,10}\) is the trend of last nine returns and \(Y_{j}\) is the answer value that the network expected to learn from the input vector. In this case:

The maximum number of pairs \((X_{j}, Y_{j})\) that can be formed with L stock quotes is \(M = L - 9\). We use 70% (also 80% and 90%) of them to train the network and the remaining data to verify its performance. Once the network is trained, its answer for vector \(X_{j}\) is computed as indicated in equation Eq. (4).

where \(x_{j,k}\) is the value corresponding to the k-th input defined in Eq. (2), \(b_{k,i}\) is the weight of the arch that links the k-th neuron of the input with the i-th hidden neuron and \(a_{i}\) is the weight of the arch that links the i-th hidden neuron with the single output neuron of the network. We should note that each hidden neuron has an additional arch, whose value is indicated in \(b_{0,i}\). This value is known as bias or trend term. A similar behavior happens to the output neuron with the weight \(a_{0}\).

We perform 30 independent runs for each stock in the sample described in Sect. 4. The maximum number of iterations is capped at 3000. The initial weights are randomly distributed between \(-1\) and 1. Initial update value is set to 0.01, while the updated values are limited within \(e^{-6}\) and 50. Increase factor and decrease factor for weight updates are set to 1.2 and 0.5, respectively. The functions F and G used by the neural network are both sigmoidal functions and defined in Eqs. (5) and (6), respectively. F is bounded between 0 and 1, and G is bounded between \(-1\) and 1. In this way, F allows hidden neurons to produce small values (between 0 and 1), and G permits the net to split between negative (expecting negative returns) and positive (expecting positive returns).

3.2 k-Nearest neighbors algorithm

k-nearest neighbors algorithm (KNN) is a non-parametric and lazy learning type of machine learning method used for classification and regression. It is non-parametric because it does not make any assumption about the data such as normality. It is lazy or instance-based learning because the function is only approximated locally and all computation is deferred until classification. KNN algorithm works as follows: For a given value of k, it computes the distance between the test data and each row of the training data by using a distance metric like Euclidean metric (some of the other metrics that can also be used are cityblock, Chebychev, correlation, and cosine etc.). The distance values are sorted in an ascending order and then top k elements are extracted from the sorted array. It finds the most frequent class among these k elements and returns as the predicted class.

In our application of KNN, we again use 70% (also 80% and 90%) of the whole data set as the training set and the remaining as the test set. We label the log-returns as up and down to create the output variable and we use nine lags of the returns as the input variables. We apply the algorithm for k= 1,...,20 together with the Euclidean metric and we choose the one with the highest accuracy rate.

3.3 Logistic regression

One of the widely used machine learning algorithms to make classification is the logistic regression which divides observations to a discrete set of classes. Logistic regression outputs a probability value by using the logistic sigmoid function and then this probability value is mapped to two or more discrete classes. In our case, we have a binary classification problem of identifying the next return as up or down. The logistic regression assigns probabilities to each row of the features matrix X. Let’s denote the sample size of the data set with N and thus we have N rows of the input vector. Given the set of d features, i.e. \(x_i=(x_{1,i},\ldots ,x_{d,i})\), and parameter vector w, the logistic regression minimizes the following optimization problem:

where \(f(x) = w^Tx+b\). Logistic regression model has some advantages compared to some other methods because of its parsimony and speed of implementation. For instance, it is less probable to expose to the over-fitting problem because of the less number of parameters to estimate compared to the artificial neural networks.

In our application of logistic regression, we again take 70% (also 80% and 90%) of the data set as the training set and the rest as the test set. In the training set we first label the returns as \(+1\) and \(-1\) to produce the output variable y and then we use nine lags of the returns as inputs in the algorithm in order to make it comparable with ANN methodology. Finally, we use the estimated parameters from the training set together with the lagged returns in the test set to predict the price directions in the test set.

3.4 Naïve Bayes classifier

Naïve Bayes classifier is another widely used machine learning algorithm which is based on the Bayes’ theorem in probability theory hence it is listed under probabilistic machine learning algorithms. The name “Naïve” comes from the independence assumption that the input features are conditionally independent of each other given the classification. Although this assumption is usually violated in practice, Naïve Bayes classifiers have worked quite well in many complex real-world situations. The Naïve Bayes algorithm assigns observations to the most probable class by first estimating the densities of the predictors within each class. As a second step, it computes the posterior probabilities according to Bayes rule:

where Y is the random variable corresponding to the class index of an observation, \(X_1,\ldots ,X_P\) are the random predictors of an observation, and \(\pi (Y = k)\) is the prior probability that a class index is k. Finally, it classifies an observation by estimating the posterior probability for each class, and then assigns the observation to the class yielding the maximum posterior probability.

In our case, Y takes the value of either \(+1\) for up price direction and \(-1\) for the other case. We use the notation \(X_1,..,X_9\) to denote the first lagged up to ninth lagged value of log returns for each of the stock series. We again use the ratio of 0.7/0.3 (also 0.8/0.2 and 0.9/0.1 ) for the training and test set division.

3.5 Random forest classifier

The Random Forest Classifier is an ensemble algorithm such that it combines more than one algorithm of the same or different kind for classifying objects. Decision trees are the building blocks of the random forest model. In other words, the random forest consists of a large number of individual decision trees that function as an ensemble. Random forest classifier creates a set of decision trees from a randomly selected subset of the training set, and each individual tree makes a class prediction. It then sums the votes from different decision trees to decide the final class of the test object. For instance, assume that there are 5 points in our training set that is \((x_1,x_2,\ldots , x_5)\) with corresponding labels \((y_1,y_2,\ldots ,y_5)\) then random forest may create four decision trees taking the input of subset such as \((x_1,x_2,x_3,x_4)\), \((x_1,x_2,x_3,x_5)\), \((x_1,x_2,x_4,x_5)\), \((x_2,x_3,x_4,x_5)\). If three of the decision trees vote for “up” against “down” then random forest predicts “up”. This works efficiently because a single decision tree may produce noise but a large number of relatively uncorrelated trees operating as a choir will reduce the effect of noise, resulting in more accurate results.

More generally, in the random forest method as proposed by (Breiman 2001), a random vector \(\theta _k\) is generated, independent of the past random vectors \(\theta _1,\ldots ,\theta _{k-1}\) but with the same distribution; and a tree is grown using the training set and \(\theta _k\) resulting in a classifier \(h(x,\theta _k)\) where x is an input vector. In random selection, \(\theta \) consists of a number of independent random integers between 1 and K. The nature and dimension of \(\theta \) depend on its use in tree construction. After a large number of trees are generated, they vote for the most popular class. This procedure is called random forest. A random forest is a classifier consisting of a collection of tree structured classifiers \({h(x,\theta _k ), k=1, \ldots }\) where the \(\theta _k\)’s are independent identically distributed random vectors and each tree casts a unit vote for the most popular class at input x.

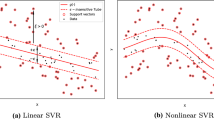

3.6 Support vector machine classifier

The support vector machine (SVM) is a supervised machine learning algorithm used for both regression and classification tasks. The support vector machine algorithm’s objective is to find a hyperplane in an N-dimensional space where N is the number of features that distinctly classify the data points. Hyperplanes can be thought of as decision boundaries that classify the data points. Data points falling on different sides of the hyperplane can be assigned to different classes. Support vectors are described as the data points that are closer to the hyperplane and influence the position and orientation of the hyperplane. The margin of the classifier is maximized using these support vectors. In more technical terms, the above process can be summarized as follows. Given the training vectors \(x_i\) for \(i=1,2,\ldots ,N\) with a sample size of N observations, the support vector machine classification algorithm solves the following problem given by

subject to \(y_i(w^T \phi (x_i) ) \ge 1-\xi _i\) and \(\xi _i \ge 0,i=1,2,\ldots ,N\). The dual of the above problem is given by

subject to \(y^T\alpha = 0\) and \(0\le \alpha _i \le C\) for \(i=1,2,\ldots ,N\), where e is the vector of all ones, \(C>0\) is the upper bound. Q is an n by n positive semi-definite matrix. \(Q_{ij} = y_i y_j K(x_i,x_j)\), where \(K(x_i,x_j) = \phi (x_i)^T \phi (x)\) is the kernel. Here training vectors are implicitly mapped into higher dimensional space by the function \(\phi \). The decision function in the support vector machines classification is given by

The optimization problem in Eq. 9 can be solved globally using the Karush–Kuhn–Tucker (KKT) conditions. Clearly, this optimization problem depends on the choice of the Kernel functions. Our study employs the Gaussian (rbf) kernel, which is denoted by \(\exp (-\gamma \Vert x-x'\Vert ^2)\) where \(\gamma \) must be greater than 0. When SVM is implemented, we try to find an optimal value of C and \(\gamma \) for each stock by using a grid search for each of these parameters.

3.7 Extreme gradient boosting classifier

The extreme gradient boosting (XGBoost) is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework. As we said before, an ensemble method is a machine learning technique that combines several base models in order to produce one optimal predictive model. An algorithm is called boosting if it works by adding models on top of each other iteratively, the errors of the previous model are corrected by the next predictor until the training data is accurately predicted or reproduced by the model. A method is called gradient boosting if, instead of assigning different weights to the classifiers after every iteration, it fits the new model to new residuals of the previous prediction and then minimizes the loss when adding the latest prediction. Namely, if a model is updated using gradient descent, then it is called gradient boosting. XGBoost improves upon the base gradient boosting framework through systems optimization and algorithmic enhancements. Some of these enhancements can be listed as parallelised tree building, tree pruning using depth-first approach, cache awareness and out-of-core computing, regularisation for avoiding over-fitting, efficient handling of missing data, and in-built cross validation capability.

3.8 Profitability measures

In order to measure the profitability of our selected forecasting techniques, we benchmark it with a Naïve flipping toss model and with a perfect forecast model. In the first case, we try to verify if selected methodologies are better at forecasting the price change direction than an simple up-or-down fair random process. In the second case, we aim at testing the ability of the selected forecasting techniques to capture a substantial share of a perfect forecast in price change. The profitability measures used on pairs \((X_{j}, Y_{j})\) of the training set are the following:

-

Sign prediction ratio Correctly predicted price direction change is assigned 1, and \(-1\) otherwise.

$$\begin{aligned}&SPR = \frac{\sum _{j=1+M/2}^{M} matches \big (Y_{j},Y^{\prime }_{j}\big )}{M/2} \end{aligned}$$(12)$$\begin{aligned}&matches\big (Y_{j}, Y^{\prime }_{j}\big ) = \left\{ \begin{array} {ll} 1, &{}\quad if \ sign(Y_{j})=sign\big (Y^{\prime }_{j}\big ), \\ 0, &{}\quad otherwise \end{array} \right. \end{aligned}$$(13)where M denotes the size of the set for which the sign prediction ratio is measured and sign is the sign function that maps \(+1\) when the argument is positive and \(-1\) when the argument is negative.

-

The maximum return is obtained by adding all the expected values in absolute value

$$\begin{aligned} MaxReturn = \sum _{j=1}^{N} abs(Y_{j}), \end{aligned}$$(14)where N denotes the size of the set for which maximum return is computed.

-

The Total Return is computed in the following way

$$\begin{aligned} TotalReturn = \sum _{j=1}^{N}sign(Y^{\prime }_{j}) * Y_{j}, \end{aligned}$$(15)where N denotes the size of the set for which total return is computed. Notice that the better the prediction method, the larger the total return is.

-

Ideal profit ratio is the ratio between the total return in Eq. (15) and the maximum return in Eq. (14).

$$\begin{aligned} IPR = \frac{TotalReturn}{MaxReturn}. \end{aligned}$$(16)

4 Data

We employ tick-by-tick prices (stamped to millisecond precision) for a sample of 27 blue-chip stocks of the Istanbul Stock exchange (ISE), from 30 November 2015 to 29 April 2016.Footnote 4 The benchmark index of the exchange is ISE30 which is composed of 30 blue-chip stocks and updated quarterly. The selected 27 stocks are the ones included in the benchmark index for the whole sample period. We excluded the stocks that joined or left the benchmark index in the sample period as we did not want to be affected by index inclusion–exclusion. We label all the trades which occur in the continuous session during the day as “continuous trades and we construct “all trades” by adding trades executed during opening and closing sessions to the “continuous trades”. The details of the number of observations for continuous and all trades and ISIN code of each stock are available in Table 1. In order to prevent asynchronous trading, we sample our data at 10, 30 and 60 min. There are 763 (872) time intervals for 60 min, 1417 (1635) time intervals for 30 min and 4251 (4637) time intervals for 10 min during the sample period for continuous (all) trades. We have at least one trade for each stock for 30 and 60 min time intervals as the selected assets are highly liquid stocks. Being the least liquid one in terms of the number of 10-min time intervals in which there is at least one trade, even ENKAI has 4228 (4614) 10-min time intervals which correspond to 99.5% of the total number of 10-min time intervals for continuous (all) trades.

The sample period and the selected stocks are important for two main reasons. First, the initial day of the sample period refers to the introduction of the new equity market trading and settlement system (Genium INET) together with the new surveillance, risk management and other surrounding systems (SMARTS) provided by NASDAQ. Second, with the new trading system, high frequency traders joined the Turkish stock market through co-location services. These traders mostly focus on the stocks listed in Table 1 due to their liquidity and market capitalization. Therefore, the findings in this paper are particularly important for this specific group of traders.

5 Empirical results

We sample our original tick data at every 10-, 30-, and 60-min time intervals and then apply five different methodologies described in Sect. 3. The training sample size for all the daily prediction time horizons is set as 50% of the total sample size, whereas the remaining 50% is utilized as the out-of-sample dataset. We show the results obtained for each stock at different time scales and for only continuous session and then all realized trades in different tables. We present two key metrics regarding the performance of the methods. The first one is the sign prediction rate which reflects the proportion of times the related methodology guesses correctly the future price direction (upward or downward). The fundamental benchmark model to compare our methods is Naïve flipping coin model. Clearly, if the underlying process is fully random, we should expect the correct sign prediction ratio to be 0.5. On the other hand, the correct guess of the future price change does not guarantee that we obtain better results. It is possible that the forecasting method can only correctly guess future price changes of small size and fail to guess big price changes. Consequently, a given forecasting method would generate a poor performance. Hence, we also have to compare the performance of different forecasting methods with a perfect foresight of the price changes. For this purpose, we compute the ideal profit ratio which is the ratio of the return generated by a given methodology to a perfect sign forecast. Furthermore, mean values, standard deviations, maximum and minimum values of each performance metrics are presented for a particular machine learning methodology in the related table.

It is also important to note that the results for the ANN method are the average of the 30 runs of the methodology for each stock. As a robustness check we also apply the procedure described above for all trades in the sample period. We observe that the outcomes are almost exactly the same as the results for the continuous session trades which are explained below. Hence results for the ‘all trades’ scenario are provided in Appendix A through Tables 11, 12, 13, 14, 15, 16, 17, 18 and 19 without any further explanation.

The columns in Table 2, 3, 4, 5, 6, 7, 8, 9 and 10 show the sign prediction accuracy ratios of the continuous trades for different machine learning algorithms across the selected stocks under three different train and test sets divisions. These tables also show the ideal profit ratios as an alternative method of comparison. From Table 2, we observe that at 10-min frequency by using 70% of the data as the training set, both RF and SVM yield the highest value of average success ratio which is 60%. Although XGboost has a slightly better average accuracy rate (57%), it is also very close to that of kNN, logistic, and NB algorithms (56%). ANN is the worst performing method with eight of the stocks having accuracy rates less than 50%. On the other hand, the sign prediction ratio can be as high as 73% for the PETKM returns with the SVM algorithm. From the same table we also observe that as a linear classification algorithm, Logistic regression provides significantly high ideal profit ratios with respect to other non-linear methods. Ideal profit ratios from the logistic regression can be as high as 39% with an average value of 13%. RF and SVM also have the second highest average ideal profit ratio which is 10%.

Table 3 shows the sign prediction ratio and ideal profit ratios for the 0.7/0.3 train/test set division at 30 min time scale. Similar to the results observed in the Table 2, the highest values of average sign prediction ratios are obtained by RF and SVM algorithms which are 56% and 55%, respectively. They are followed by kNN (54%) as the third best performing model. Similar to the previous results, ANN is the worst performing model with an average value of 50%. Hence we can say than there is no difference between random walk and ANN algorithm in this framework. As opposed to the results for the ideal profit ratios in the previous table, the highest average ideal profit ratio is attained by RF which is also the case for the sign prediction ratio. Moreover, kNN is the second best model (0.06) which is followed by SVM algorithm (0.05). In the same framework, Table 4 presents the results for the same train/test set division at 60-min frequency. We observe similar results to the previous cases but in this case kNN performs equally well as the RF algorithm in terms of the success ratios and even slightly better than RF by the ideal profit ratios. Furthermore, as it is expected as the time frequency gets smaller from 60 to 10-min the accuracy rates increase because of the predictive ability of the algorithms for the shorter terms with respect to the longer horizons.

The sign prediction rates and ideal profit ratios for the 0.8/0.2 train and test set partitions at 10-, 30-, 60-min time scales are given by Tables 5, 6 and 7. As it is the case for the 0.7/0.3 train and test set partition, while the best sign prediction rate (60%) is obtained by RF and SVM, the highest ideal profit ratio is achieved by the logistic regression. Again we observe that RF and kNN are the best performing models if we consider both success rates and ideal profit ratios. Similar to the previous cases, ANN is the worst performing algorithm in terms of the both evaluation criteria. On the other hand it is interesting to observe that as the time frequency gets coarser from 30 to 60-min, both sign prediction ratio and ideal profit ratios become slightly better for RF and kNN which is not the case for the other algorithms.

Table 8, 9 and 10 present the sign prediction and ideal profit ratios for the 0.9/0.1 train/test set division for the considered time intervals. We observe that the results are robust across different train and test set partitions as RF and SVM provide the highest accuracy rates and logistic regression yields the greatest ideal profit ratio values. Furthermore, the largest success ratio of 74% across all different partitions and methodologies is obtained for the KRDMD returns with this partition and RF algorithm. Similarly, the highest ideal profit ratio of 54% among all the possible cases is attained for HALKB at 60-min frequency with 0.9/0.1 partition and RF methodology.

By considering the time series of continues trades at 10, 30, and 60 min, we compare the forecasting ability of different machine learning algorithms. We also uncover the influence of the different train/test set partitions on the forecasting power of the particular algorithm together with the sampling frequency. In general we observe that ANN is the worst performing method with an overall average successful forecast ratio of around 50% and ideal profit ratio of 0.03. On the other hand, in terms of the sign prediction ratios RF and SVM provide the highest average accuracy rates across different time scales. Moreover, kNN also performs equally well especially for the 60-min time frequency. We also have the same observations for the ideal profit ratios with the addition that logistic regression works well especially at 10-min time scale.

6 Conclusions

The Efficient Market Hypothesis (EMH) in its weak form postulates that the current price of an asset incorporates all the information of past prices. Consequently, it rules out the possibility of obtaining superior returns based on price history. In this paper, we show that alternative modern forecasting techniques could capture some imperfections in the stock market at the high-frequency level such that trading based on these techniques’ recommendations could constitute a good day-trading strategy to earn extra returns. According to our results, random forest and support vector machine algorithms are able to capture both future price directions and percentage changes at a satisfactory level. These methods can, on the average, predict the signs of future price changes with a success rate significantly higher than 0.50 for any sampling frequency we consider. In the case of individual stocks, we observe considerable amount of cases where this ratio exceeds 0.70. These methods are also fairly successful in capturing the possible future potential returns with an average lying around 10%.Footnote 5 Another important finding is that forecast degree is not significantly related to firm size, probably due to the fact that all sample firms are quite large in terms of market capitalization.

Overall, regarding the Turkish stock market, the EMH is an approximate theory of the underlying dynamics of a stock market. However, there are some arbitrage opportunities not fully offset by market forces and our results reveal that it is possible to construct consistently profitable trading strategies with the proper usage of advanced forecasting techniques. It is however important to note that the “one-size-fits-all” does not apply in general, and therefore, given the sampling frequency and aim of the strategy, a combination of alternative methodologies should be taken into account.

Notes

Consequently, Fama (1970) classifies informative efficiency into three categories: (i) Weak efficiency, when the current price contains information from the past series of prices; (ii) Semi-strong efficiency, when the past price contains all the public information associated with that asset; and (iii) Strong efficiency, when the price reflects all public and private information relating to that asset.

Being world’s 17th largest economy, Turkey has been a destination of extensive capital flows in the last few years and its stock market has attracted a lot of attention. With 614 TL bn. market capitalization and more than 1 TL tn. traded value at the end of 2016, equity market of Istanbul Stock Exchange is ranked 6th in traded value among all emerging markets in the world. Moreover, it is ranked 3rd in the whole world with a share turnover velocity of more than 200% in the same year. These statistics show that there is a high level of trading activity at a global scale in Istanbul Stock Exchange and makes it a perfect ground to test our trading strategies.

It was not possible to obtain this tick-by-tick data from data providers so the data comes directly from the database of the stock exchange. Since this is a unique data set, it was not possible the extend it beyond 2016.

It is important to note that portfolios managed by financial institutions are very large and small abnormal returns (forecasting signs above 50% and profit ratio of 10%) could represent extra earnings worth millions of dollars. Similarly, trading thousands of times in a day with small abnormal returns would accumulate up to considerable amount of excess dollar gain in the market.

References

Avci, E., Bunn, D., Ketter, W., & van Heck, E. (2019). Agent-level determinants of price expectation formation in online double-sided auctions. Decision Support Systems, 124, 113068.

Brasileiro, R., Souza, V. L. F., & Oliviera, A. L. I. (2017). Automatic trading method based on piecewise aggregate approximation and multi-swarm of improved self-adaptive particle swarm optimization with validation. Decision Support Systems, 104, 79–91.

Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32.

Cheng, C.-H., & Yang, J.-H. (2018). Fuzzy time-series model based on rough set rule induction for forecasting stock price. Neurocomputing, 302, 33–45.

Christensen, H. L., Murphy, J., & Godsill, S. J. (2012). Forecasting high-frequency futures returns using online Langevin dynamics. IEEE Journal of Selected Topics in Signal Processing, 6, 366–380.

D’Ecclesia, R. L., & Clementi, D. (2021). Volatility in the stock market: ANN versus parametric models. Annals of Operations Research, 299(1), 1101–1127.

Fama, E. F. (1970). Efficient capital markets: A review of theory and empirical work. Journal of Finance, 25, 383–417.

Fama, E. F. (1991). Efficient capital markets: II. Journal of Finance, 46, 1575–1617.

Fernandes, F. D. S., Stasinakis, C., & Zekaite, Z. (2019). Forecasting government bond spreads with heuristic models: Evidence from the Eurozone periphery. Annals of Operations Research, 282, 87–118.

Fernández-Rodríguez, F., González-Martel, C., & Sosvilla-Rivero, S. (2000). On the profitability of technical trading rules based on artificial neural networks: Evidence from the Madrid stock market. Economics Letters, 69, 89–94.

Feuerriegel, S., & Gordon, J. (2018). Long-term stock index forecasting based on text mining of regulatory disclosures. Decision Support Systems, 112, 88–97.

Fornaciari, M., & Grillenzoni, C. (2017). Evaluation of on-line trading systems: Markov-switching vs time-varying parameter models. Decision Support Systems, 93, 51–61.

Freeman, J. A., & Skapura, D. M. (1991). Neural networks, algorithms, applications, and programming techniques. Boston: Addison-Wesley Publishing Company.

Gencay, R. (1999). Linear, non-linear and essential foreign exchange rate prediction with simple technical trading rules. Journal of International Economics, 47, 91–107.

Göçken, M., Özçalici, M., Boru, A., & Dosdogru, A. T. (2016). Integrating metaheuristics and artificial neural networks for improved stock price prediction. Expert Systems with Applications, 44, 320–331.

Ho, C.-S., Damien, P., Gu, B., & Konana, P. (2017). The time-varying nature of social media sentiments in modeling stock returns. Decision Support Systems, 101, 69–81.

Hudson, R., & Urquhart, A. (2021). Technical trading and cryptocurrencies. Annals of Operations Research, 297(1), 191–220.

Iglesias Caride, M., Bariviera, A. F., & Lanzarini, L. (2018). Stock returns forecast: An examination by means of artificial neural networks. In C. Berger-Vachon, A. M. Gil Lafuente, J. Kacprzyk, Y. Kondratenko, J. M. Merigó, & C. F. Morabito (Eds.), Complex systems: Solutions and challenges in economics, management and engineering: Dedicated to Professor Jaime Gil Aluja (pp. 399–410). Cham: Springer.

Isasi Viñuela, P., & Galván León, I. M. (2004). Redes de neuronas artificiales. Un enfoque práctico. Prentice Hall: Pearson.

Jeong, G., & Kim, H. Y. (2019). Improving financial trading decisions using deep Q-learning: Predicting the number of shares, action strategies, and transfer learning. Expert Systems with Applications, 117, 125–138.

Karhunen, M. (2019). Algorithmic sign prediction and covariate selection across eleven international stock markets. Expert Systems with Applications, 115, 256–263.

Kim, H. Y., & Won, C. H. (2018). Forecasting the volatility of stock price index: A hybrid model integrating LSTM with multiple GARCH-type models. Expert Systems with Applications, 103, 25–37.

Kyriakou, I., Mousavi, P., Nielsen, J. P., & Scholz, M. (2021). Forecasting benchmarks of long-term stock returns via machine learning. Annals of Operations Research, 297(1), 221–240.

Lahmiri, S. (2016). Intraday stock price forecasting based on variational mode decomposition. Journal of Computational Science, 12, 23–27.

Lanzarini, L., Iglesias Caride, J. M., & Bariviera, A. F. (2011). Are technical trading rules useful to beat the market? Evidence from the Brazilian stock market. In World congress of international fuzzy systems association 2011 and Asia fuzzy systems society international conference 2011 (pp. 21–25).

Malagrino, L. S., Roman, N. T., & Monteiro, A. M. (2018). Forecasting stock market index daily direction: A Bayesian network approach. Expert Systems with Applications, 105, 11–22.

McGroarty, F., Booth, A., Gerding, E., & Chinthalapati, V. L. R. (2019). High frequency trading strategies, market fragility and price spikes: An agent based model perspective. Annals of Operations Research, 282, 217–244.

Nadkarni, J., & Neves, R. F. (2018). Combining neuro-evolution and principal component analysis to trade in the financial markets. Expert Systems with Applications, 103, 184–195.

Nam, K., & Seong, N. Y. (2019). Financial news-based stock movement prediction using causality analysis of influence in the Korean stock market. Decision Support Systems, 117, 100–112.

Oztekin, A., Kizilaslan, R., Freund, S., & Iseri, A. (2016). A data analytic approach to forecasting daily stock returns in an emerging market. European Journal of Operational Research, 253, 697–710.

Qiu, M., Song, Y., & Akagi, F. (2016). Application of artificial neural network for the prediction of stock market returns: The case of the Japanese stock market. Chaos, Solitons & Fractals, 85, 1–7.

Rapach, D. E., & Zhou, G. (2013). Forecasting stock returns. In G. Elliott & A. Timmermann (Eds.), Handbook of economic forecasting (Vol. 6, pp. 328–383). Amsterdam: Elsevier.

Riedmiller, M., & Braun, H. (1993). A direct adaptive method for faster backpropagation learning: The RPROP algorithm. In A. Anu (Ed.), IEEE international conference on neural networks (pp. 586–591). IEEE.

Ross, S. A. (2005). Neoclassical finance. Princeton: Princeton University Press.

Shang, H. L., Yang, Y., & Kearney, F. (2019). Intraday forecasts of a volatility index: Functional time series methods with dynamic updating. Annals of Operations Research, 282, 331–354.

Zuo, Y., & Kita, E. (2012). Stock price forecast using Bayesian network. Expert Systems with Applications, 39, 6729–6737.

Acknowledgements

Ahmet Sensoy gratefully acknowledges support from the Turkish Academy of Sciences under its Outstanding Young Scientist Program (TUBA-GEBIP).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A. Results for the ‘all trades’ scenario

Appendix A. Results for the ‘all trades’ scenario

This appendix presents some descriptive statistics and the results for our main forecasting techniques applied to ‘all trades’ instead of those occurring only in continuous matching session (Tables 2, 3, 4, 5, 6, 7, 8, 9 and 10).

Rights and permissions

About this article

Cite this article

Akyildirim, E., Bariviera, A.F., Nguyen, D.K. et al. Forecasting high-frequency stock returns: a comparison of alternative methods. Ann Oper Res 313, 639–690 (2022). https://doi.org/10.1007/s10479-021-04464-8

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10479-021-04464-8