Abstract

The use of the iris and periocular region as biometric traits has been extensively investigated, mainly due to the singularity of the iris features and the use of the periocular region when the image resolution is not sufficient to extract iris information. In addition to providing information about an individual’s identity, features extracted from these traits can also be explored to obtain other information such as the individual’s gender, the influence of drug use, the use of contact lenses, spoofing, among others. This work presents a survey of the databases created for ocular recognition, detailing their protocols and how their images were acquired. We also describe and discuss the most popular ocular recognition competitions (contests), highlighting the submitted algorithms that achieved the best results using only iris trait and also fusing iris and periocular region information. Finally, we describe some relevant works applying deep learning techniques to ocular recognition and point out new challenges and future directions. Considering that there are a large number of ocular databases, and each one is usually designed for a specific problem, we believe this survey can provide a broad overview of the challenges in ocular biometrics.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Several corporations and governments fund biometrics research due to various applications such as combating terrorism and the use of social networks, showing that this is a strategically important research area (Daugman 2006; Phillips et al. 2009). A biometric system exploits pattern recognition techniques to extract distinctive information/signatures of a person. Such signatures are stored and used to compare and determine the identity of a person sample within a population. As biometric systems require robustness against acquisition and/or preprocessing fails, as well as high accuracy, the challenges and the methodologies for identifying individuals are constantly developing.

Methods that identify a person based on their physical or behavioral features are particularly important since such characteristics cannot be lost or forget, as may occur with passwords or identity cards (Bowyer et al. 2008). In this context, the use of ocular information as a biometric trait is interesting regarding a noninvasive technology and also because the biomedical literature indicates that irises are one of the most distinct biometric sources (Wildes 1997).

The most common task in ocular biometrics is recognition, which can be divided into verification (1:1 comparison) and identification (1:n comparison). Also, recognition can be performed in two distinct protocols called closed-world and open-world. In the closed-world protocol, samples of an individual are present in the training and test set. The open-world protocol must have samples from different subjects both in the training and test sets. The identification process generally is performed on the closed-world protocol (except the open-set scenario, which has imposters that are only in the test set, i.e., individuals who should not match any subject in the gallery set), while verification can be performed in both, being the open-world most common protocol adopted in this setup. In addition to identification and verification, there are other tasks in ocular biometrics such as gender classification (Tapia and Aravena 2017), spoofing (Menotti et al. 2015) and liveness (He et al. 2016) detection, recognition of left and right iris images (Du et al. 2016), ocular region detection (Severo et al. 2018; Lucio et al. 2019), iris/sclera segmentation (Lucio et al. 2018; Bezerra et al. 2018), and sensor model identification (Marra et al. 2018).

Iris recognition under controlled environments at near-infrared wavelength (NIR) demonstrates impressive results, and as reported in several works (Bowyer et al. 2008; Phillips et al. 2008, 2010; Proença and Neves 2017, 2019) can be considered a mature technology. The use of ocular images captured in uncontrolled environments is currently one of the greatest challenges (Proença and Alexandre 2012; Rattani et al. 2016). As shown in Fig. 1, such images usually present noise caused by illumination, occlusion, reflection, motion blur, among others. Therefore, to improve the biometric systems performance in these scenarios, recent approaches have used information extracted only from the periocular region (Padole and Proença 2012; Proença and Neves 2018; Luz et al. 2018) or fusing them with iris features (Tan et al. 2012; Tan and Kumar 2013; Ahmed et al. 2016, 2017).

The term periocular is associated with the region around the eye, composed of eyebrows, eyelashes, and eyelids (Park et al. 2009, 2011; Uzair et al. 2015), as illustrated in Fig. 2. Usually, the periocular region is used when there is poor quality in the iris region, commonly in visible wavelength (VIS) images or part of the face is occluded (in face images) (Park et al. 2009; Luz et al. 2018). In the literature, regarding the periocular region, there are works that kept the iris and sclera regions (Luz et al. 2018; Proença and Alexandre 2012; De Marsico et al. 2017) and others that removed them Sequeira et al. (2016), Sequeira et al. (2017), Proença and Neves (2018).

Although there are several surveys in the literature describing ocular recognition methodologies (Wildes 1997; Bowyer et al. 2008; Ross 2010; Hake and Patil 2015; Nigam et al. 2015; Alonso-Fernandez and Bigun 2016b, a; De Marsico et al. 2016; Nguyen et al. 2017; Rattani and Derakhshani 2017; Lumini and Nanni 2017), such surveys do not specifically focus on databases and competitions. Table 1 summarizes the number of ocular databases/competitions described in these surveys.

One of the first surveys on iris recognition was presented by Wildes (1997), who examined iris recognition biometric systems as well as issues in the design and operation of such systems. Bowyer et al. (2008) described both the historical and the state-of-the-art development in iris biometrics focusing on segmentation and recognition methodologies. Addressing long-range iris recognition, the literature review described in Nguyen et al. (2017) presents and describes iris recognition methods at a distance system. Alonso-Fernandez and Bigun (2016b), Alonso-Fernandez and Bigun (2016a) surveyed methodologies focusing only on periocular biometrics, while Rattani and Derakhshani (2017) described state-of-the-art methods applied to periocular region, iris, and conjunctival vasculature recognition using VIS images. Nigam et al. (2015) described in detail methodologies for specific topics such as iris acquisition, preprocessing techniques, segmentation approaches, in addition to feature extraction, matching and indexing methods. Lastly, Omelina et al. (2021) recently performed an extensive survey regarding iris databases, describing properties of popular databases and recommendations to create a good iris database. The authors also made a brief description of some ocular competitions.

This work describes ocular databases and competitions (or contests) on biometric recognition using iris and/or periocular traits. We present the databases according to the images that compose them, i.e., NIR, VIS and Cross-Spectral, and multimodal databases. We also detailed information such as image wavelength, capture environment, cross-sensor, database size and ocular modalities employed, as well as the protocol used for image acquisition and database construction.

The main contributions of this paper are the following: (1) we survey and describe the types of existing ocular images databases and image acquisition protocols; (2) a detailed description of the applications and goals in creating these databases; (3) a discussion and description of the main and most popular ocular recognition competitions in order to illustrate the methodology strategies in each challenge; and (4) we drawn new challenging tasks and scenarios in ocular biometrics.

To the best of our knowledge, this is the first survey specifically focused on ocular databases and competitions. Thus, we believe that it can provide a general overview of the challenges in ocular recognition over the years, the databases used in the literature, as well as the best performance methodologies in competitions for different scenarios.

The remainder of this work is organized as follows. In Sect. 2, we detail the ocular databases separating them into three categories: NIR, VIS and cross-spectral, and multimodal databases. In Sect. 3, we present a survey and discussion of ocular recognition competitions using iris and periocular region information and describe the top-ranked methodologies. Sect. 4 presents recent works applying deep learning frameworks focusing on encoding and matching to iris/periocular recognition and other tasks regarding ocular biometrics (ocular preprocessing methods are beyond the scope of our review). Finally, future challenges and directions are pointed out in Sect. 5 and conclusions are given in Sect. 6.

2 Ocular databases

Currently, there are various databases of ocular images, constructed in different scenarios and for different purposes. These databases can be classified by VIS and NIR images and separated into controlled (cooperatives) and uncontrolled (non-cooperatives) environments, according to the process of image acquisition. Controlled databases contain images captured in environments with controlled conditions, such as lighting, distance, and focus. On the other hand, uncontrolled databases are composed of images obtained in uncontrolled environments and usually present problems such as defocus, occlusion, reflection, off-angle, to cite a few. A database containing images captured at different wavelengths is referred to as cross-spectral, while a database with images acquired by different sensors is referred to as cross-sensor. The summary of all databases cited in this paper as well as links to find more information about how they are available can be found at [www.inf.ufpr.br/vri/publications/ocularDatabases.html].

In this Section, the ocular databases are presented and organized into three subsections. First, we describe databases that contain only NIR images, as well as synthetic iris databases. Then, we present databases composed of images captured at both VIS and cross-spectral scenarios (i.e., VIS and NIR images from the same subjects). Finally, we describe multimodal databases, which contain data from different biometric traits, including iris and/or periocular.

2.1 Near-infrared ocular images databases

Ocular images captured at NIR wavelength are generally used to study the features present in the iris (CASIA 2010; Phillips et al. 2008, 2010). As even darker pigmentation irises reveal rich and complex features (Daugman 2004), most of the visible light is absorbed by the melanin pigment while longer wavelengths of light are reflected (Bowyer et al. 2008). Other studies can also be performed with this kind of databases, such as methodologies to create synthetic irises (Shah and Ross 2006; Zuo et al. 2007), vulnerabilities in iris recognition and liveness detection (Ruiz-Albacete et al. 2008; Czajka 2013; Gupta et al. 2014; Kohli et al. 2016), impact of contact lenses in iris recognition (Baker et al. 2010; Kohli et al. 2013; Doyle et al. 2013; Doyle and Bowyer 2015), template aging (Fenker and Bowyer 2012; Baker et al. 2013), influence of alcohol consumption (Arora et al. 2012) and study of gender recognition through the iris (Tapia et al. 2016). The databases used for these and other studies are described in Table 2 and detailed in this session. Some samples of ocular images from NIR databases are shown in Fig. 3.

One of the first iris databases found in the literature was created and made available by CASIA (Chinese Academy of Science). The first version, called CASIA-IrisV1, was made available in 2002. The CASIA-IrisV1 database has 756 images of 108 eyes with a size of \(320\times 280\) pixels. The NIR images were captured in two sections with a homemade iris camera (CASIA 2010). In a second version (CASIA-IrisV2), made available in 2004, the authors included two subsets captured by an OKI IRISPASS-h and CASIA-IrisCamV2 sensors. Each subset has 1200 images belonging to 60 classes with a resolution of \(640\times 480\) pixels (CASIA 2010). The third version of the database (CASIA-IrisV3), made available in 2010, has a total of 22, 034 images from more than 700 individuals, arranged among its three subsets: CASIA-Iris-Interval, CASIA-Iris-Lamp and CASIA-Iris-Twins. Finally, CASIA-IrisV4, an extension of CASIA-IrisV3 and also made available in 2010, is composed of six subsets: three from the previous version and three new ones: CASIA-Iris-Distance, CASIA-Iris-Thousand and CASIA-Iris-Syn. All six subsets together contain 54, 601 ocular images belonging to more than 1800 real subjects and 1000 synthetic ones. Each subset will be detailed below, according to the specifications described in CASIA (2010).

The CASIA-Iris-Interval database has images captured under a near-infrared LED illumination. In this way, these images are used to study the texture information contained in the iris traits. The database is composed of 2639 images, obtained in two sections, from 249 subjects and 395 classes with a resolution of \(320\times 280\) pixels.

The images from the CASIA-Iris-Lamp database were acquire by a non-fixed sensor (OKI IRISPASS-h) and thus the individual collected the iris image with the sensor in their own hands. During the acquisition, a lamp was switched on and off to produce more intra-class variations due to contraction and expansion of the pupil, creating a non-linear deformation. Therefore, this database can be used to study problems such as iris normalization and robust iris feature representation. A total of 16, 212 images, from 411 subjects, with a resolution of \(640\times 480\) pixels were collected in a single section.

During an annual twin festival in Beijing, iris images from 100 pairs of twins were collected to form the CASIA-Iris-Twins database, enabling the study of similarity between iris patterns of twins. This database contains 3183 images (400 classes from 200 subjects) captured in a single section with the OKI IRISPASS-h camera at a resolution of \(640\times 480\) pixels.

The CASIA-Iris-Thousand database is composed of 20, 000 ocular images from 1000 subjects, with a resolution of \(640\times 480\) pixels, collected in a single section by an IKEMB-100 IrisKing camera (IRISKING 2017). Due to a large number of subjects, this database can be used to study the uniqueness of iris features. The main source of intra-class variations that occur in this database is due to specular reflections and eyeglasses.

The last subset of CASIA-IrisV4, called CASIA-IRIS-Syn, is composed of iris images generated with iris textures automatically synthesized from the CASIA-IrisV1 subset. The generation process applied the segmentation approach proposed by Tan et al. (2010). Factors such as blurring, deformation, and rotation were introduced to create some intra-class variations. In total, this database has 10, 000 images belonging to 1000 classes.

The images from the ND-IRIS-0405 (Phillips et al. 2010) database were captured with the LG2200 imaging system using NIR illumination. The database contains 64, 980 images from 356 subjects and there are several images with subjects wearing contact lenses. Even the images being captured under a controlled environment, some conditions such as blur, occlusion of part of the iris region, and problems like off-angle may occur. The ND-IRIS-0405 is a superset of the databases used in the ICE 2005 (Phillips et al. 2008) and ICE 2006 (Phillips et al. 2010) competitions.

The ICE 2005 database was created for the Iris Challenge Evaluation 2005 competition (Phillips et al. 2008). This database contains a total of 2953 iris images from 132 subjects. The images were captured under NIR illumination using a complete LG EOU 2200 acquisition system with a resolution of \(640\times 480\) pixels. Images that did not pass through the automatic quality control of the acquisition system were also added to the database. Experiments were performed independently for the left and right eyes. The results of the competition can be seen in Phillips et al. (2008).

The ICE 2006 database has images collected using the LG EOU 2200 acquisition system with a resolution of \(640\times 480\) pixels. For each subject, two ‘shots’ of 3 images of each eye were performed per session, totaling 12 images. The imaging sessions were held in three academic semesters between 2004 and 2005. The database has a total of 59, 558 iris images from 240 subjects (Phillips et al. 2010).

The WVU Synthetic Iris Texture Based database, created at West Virginia University, has 1000 classes with 7 grayscale images each. It consists exclusively of synthetic data, with the irises being generated in two phases. First, a Markov Random Field model was used to generate the overall iris appearance texture. Then, a variety of features were generated (e.g., radial and concentric furrows, crypts and collarette) and incorporated into the iris texture. This database was created to evaluate iris recognition algorithms since, at the time of publication, there were few available iris databases and they had a small number of individuals (Shah and Ross 2006).

The WVU Synthetic Iris Model Based database also consists of synthetically generated iris images. This database contains 10, 000 classes from 5000 individuals, with degenerated images by a combination of several effects such as specular reflection, noise, blur, rotation, and low contrast. The image gallery was created in five steps using a model and anatomy-based approach (Zuo et al. 2007), which contains 40 randomized and controlled parameters. The evaluation of their synthetic iris generation methodology was performed using a traditional Gabor filter-based iris recognition system. This database provides a large amount of data that can be used to evaluate ocular biometric systems.

The Fake Iris Database was created using images from 50 subjects belonging to the BioSec baseline database (Fierrez et al. 2007) and has 800 fake iris images (Ruiz-Albacete et al. 2008). The process for creating new images is divided into three steps. The original images were first reprocessed to improve quality using techniques such as noise filtering, histogram equalization, opening/closing, and top hat. Then, the images were printed on paper using two commercial printers: an HP Deskjet 970cxi and an HP LaserJet 4200L, with six distinct types of papers: white paper, recycled paper, photographic paper, high-resolution paper, butter paper, and cardboard (Ruiz-Albacete et al. 2008). Finally, the printed images were recaptured by an LG IrisAccess EOU3000 camera.

The IIT Delhi Iris database consists of 1120 images, with a resolution of \(320\times 240\) pixels, from 224 subjects captured with the JIRIS JPC1000 digital CMOS camera. This database was created to provide a large-scale database of iris images of Indian users. In Kumar and Passi (2010), Kumar and Passi employed these images to compare the performance of different approaches for iris identification (e.g., Discrete Cosine Transform, Fast Fourier Transform, Haar wavelet, and Log-Gabor filter) and to investigate the impact in recognition performance using a score-level combination.

The images from the ND Iris Contact Lenses 2010 database were captured using the LG 2200 iris imaging system. Visual inspections were performed to reject low-quality images or those with poor results in segmentation and matching. To compose the database, the authors captured 9697 images from 124 subjects that were not wearing contact lenses and 12003 images from 87 subjects that were wearing contact lenses. More specifically, the images were acquired from 92 subjects not wearing lenses, 52 subjects wearing the same lens type in all acquisitions, 32 subjects who wore lenses only in some acquisitions and 3 subjects that changed the lens type between acquisitions (Baker et al. 2010). According to Baker et al. (2010), the purpose of this database is to verify the degradation of iris recognition performance due to non-cosmetic prescription contact lenses.

The ND Iris Template Aging database, described and used by Fenker and Bowyer (2012), was created to analyze the template aging in iris biometrics. The images were collected from 2008 to 2011 using an LG 4000 sensor, which captures images at NIR. This database has 22156 images, being 2312 from 2008, 5859 from 2009, 6215 from 2010 and 7770 from 2011, corresponding to 644 irises from 322 subjects. The ND-Iris-Template-Aging-2008-2010 subset belongs to this database.

All images from the ND TimeLapseIris database (Baker et al. 2013) were taken with the LG 2200 iris imaging system, without hardware or software modifications throughout 4 years. Imaging sessions were held at each academic semester over 4 years, with 6 images of each eye being captured per individual in each session. From 2004 to 2008, a total of 6797 images were obtained from 23 subjects who were not wearing eyeglasses, 5 subjects who were wearing contact lenses, and 18 subjects who were not wearing eyeglasses or contact lenses in any session. This database was created to investigate template aging in iris biometrics.

To investigate the effect of alcohol consumption on iris recognition, Arora et al. (2012) created the Iris Under Alcohol Influence (IIITD IUAI) database, which contains 440 images from 55 subjects, with 220 images being acquired before alcohol consumption and 220 after it. The subjects consumed approximately 200 ml of alcohol in approximately 15 min, and the second half of the images were taken between 15 and 20 min after consumption. Due to alcohol consumption, there is a deformation in iris patterns caused by the dilation of the pupil, affecting iris recognition performance (Arora et al. 2012). The images were captured using the Vista IRIS scanner at NIR wavelength.

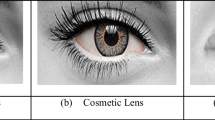

The IIITD Contact Lens Iris (IIITD CLI) database is composed of 6570 iris images belonging to 101 subjects. The images were captured by two different sensors: Cogent CIS 202 dual iris sensor and VistaFA2E single iris sensor with each subject (1) not wearing contact lenses, (2) wearing color cosmetic lenses, and (3) wearing transparent lenses. Four lens colors were used: blue, gray, hazel and green. At least 5 images of each iris were collected in each lens category for each sensor (Kohli et al. 2013).

The images from the ND Cosmetic Contact Lenses database (Doyle and Bowyer 2014) were captured by two iris cameras, an LG4000 and an IrisGuard AD100, in a controlled environment under NIR illumination with a resolution of \(640\times 480\) pixels. These images are divided into four classes, (1) no contact lenses, (2) soft, (3) non-textured and (4) textured contact lenses. Also, this database is organized into two subsets: Subset1 (LG4000) and Subset2 (AD100). Subset1 has 3000 images in the training set and 1200 images in the validation set. Subset2 contains 600 and 300 images for training and validation, respectively (Doyle et al. 2013; Yadav et al. 2014; Severo et al. 2018). Both subsets have 10 equal folds of training images for testing purposes.

The ND Cross-Sensor-Iris-2013 database (Notre Dame 2013) is composed of 146550 NIR images belonging to 676 unique subjects, being 29986 images captured using an LG4000 and 116564 taken by an LG2200 iris sensor with \(640\times 480\) pixels of resolution. The images were captured in 27 sessions over three years, from 2008 to 2010, and in at least two sessions there are images of the same subject. The purpose of this database is to investigate the effect of cross-sensor images on iris recognition. Initially, this database was released for a competition to be held at the BTAS 2013 Conference, but the competition did not have enough submission.

The Database of Iris Printouts was created for liveness detection in iris images and contains 729 printout images of 243 eyes, and 1274 images of imitations from genuine eyes. The database was constructed as follows. First, the iris images were obtained with an IrisGuard AD100 camera. Then, they were printed using the HP LaserJet 1320 and Lexmark c534dn printers. To check the print quality, the printed images were captured by the Panasonic ET-100 camera using an iris recognition software, and the images that were successfully recognized were recaptured by an AD100 camera with a resolution of \(640\times 480\) pixels to create the imitation subset. Initially, images from 426 distinct eyes belonging to 237 subjects were collected. After the process of recognizing the printed images, 243 eyes images (which compose the database) were successfully verified (Czajka 2013).

The IIITD Iris Spoofing (IIS) database was created to study spoofing methods. To this end, printed images from the IIITD CLI (Kohli et al. 2013) database were used. Spoofing was simulated in two ways. In the first, the printed images were captured by a specific iris scanner (Cogent CIS 202 dual eye), while in the second, the printed images were scanned using an HP flatbed optical scanner. The database contains 4848 images from 101 individuals (Gupta et al. 2014).

The Notre Dame Contact Lenses 2015 (NDCLD15) database contains 7300 iris images. The images were obtained under consistent lighting conditions by an LG4000 and an IrisGuard AD100 sensor. All images have \(640\times 480\) pixels of resolution and are divided into three classes based on the lens type: no lens, soft, and textured. This database was created to investigate methods to classify iris images based on types of contact lenses (Doyle and Bowyer 2015).

The IIITD Combined Spoofing database was proposed to simulate a real-world scenario of attacks against iris recognition systems. This database consists of joining the following databases: IIITD CLI (Kohli et al. 2013), IIITD IIS (Gupta et al. 2014), SDB (Shah and Ross 2006), IIT Delhi Iris (Kumar and Passi 2010) and, to represent genuine classes, iris images from 547 subjects were collected. The CSD database has a total of 1872 subjects, with 9325 normal image samples and 11368 samples of impostor images (Kohli et al. 2016).

The Gender from Iris (ND-GFI) database was created to study the recognition of the subject’s gender through the iris, specifically using the binary iris code (which is normally used in iris recognition systems) (Tapia et al. 2016). The images were obtained at NIR wavelength by an LG4000 sensor and labeled by gender. The ND-GFI database contains a single image of each eye (left and right) from 750 men and 750 women, totaling 3000 images. About a quarter of the images were captured with the subjects wearing clear contact lenses. This database has another set of images that can be used for validation, called UND_V, containing 1944 images, being 3 images of each eye from 175 men and 149 women. In this subset, there are also images using clear contact lenses and some cosmetics (Tapia et al. 2016).

According to ISO (2011), an iris image has good quality if the iris diameter is larger than 200 pixels, and if the diameter is between 150 and 200 pixels, the image is classified as adequate quality. In this context, the images from the BERC mobile-iris database have irises with a diameter between 170 and 200 pixels, obtained at NIR wavelength with \(1280\times 960\) pixels of resolution. Using a mobile iris recognition system, the images were taken in sequences of 90 shots (Kim et al. 2016) moving the device at three distances: 15 to 25 cm, 25–15 cm, and 40–15 cm. In total, the database has 500 images from 100 subjects, which were the best ones selected by the authors of each sequence.

Raghavendra et al. (2016) created the Cataract Surgery on Iris database to analyze the impact of cataract surgery on the verification performance of iris recognition systems. The database contains 504 images belonging to 84 subjects who were affected by cataracts. The subjects’ ages vary from 50 to 80 years, being 34 males and 49 females. Three eye samples of each subject were collected before (24 h) and after (36–42 h) the surgery to remove the cataractous lens. The images were captured using a commercial dual-iris NIR device with a resolution of \(640\times 480\) pixels.

The Oak Ridge National Laboratory (ORNL) Off-angle database was created to study how the gaze angle affects the performance of iris biometrics (Karakaya et al. 2013; Karakaya 2016, 2018). This database encompasses 1100 NIR iris images from 50 subjects varying the angle acquisition from \(- 50^{\circ }\) to \(+ 50^{\circ }\) with a step-size of \(10^{\circ }\). The gender distribution consists of \(56\%\) male and \(44\%\) female subjects, and iris color of \(64\%\) with dark colors and \(36\%\) with light-colors. The images were collected by a Toshiba Teli CleverDragon series camera and have a resolution of \(4096\times 3072\) pixels.

The Meliksah University Iris Database (MUID) was collected to investigate the off-angle iris recognition. The authors developed an iris image capture system composed of two cameras to simultaneously capture frontal and off-angle samples. Thus, it is possible to isolate the effect of the gaze angle from pupil dilation and accommodation (Kurtuncu et al. 2016). In total, the database has 24360 NIR images from 111 subjects, 64 males and 57 females, with an average age of 26 years. The images were captured by two infrared-sensitive IDS-UI-3240ML-NIR cameras varying from \(- 50^{\circ }\) to \(+ 50^{\circ }\) angles with a step-size of \(10^{\circ }\) and have a resolution of \(1280\times 1024\) pixels. More details about the iris image acquisition platform are described in Kurtuncu et al. (2016).

The CASIA-Iris-Mobile-V1.0 database is composed of 11000 NIR images belonging to 630 subjects, divided into three subsets: CASIA-Iris-M1-S1 (Zhang et al. 2015), CASIA-Iris-M1-S2 (Zhang et al. 2016) and a new one called CASIA-IRIS-M1-S3. The images were captured simultaneously from the left and right eyes and stored in 8 bits gray-level JPG files. The CASIA-Iris-M1-S1 subset has 1400 images from 70 subjects with a resolution of \(1920\times 1080\) pixels, acquired using a NIR imaging module attached to a mobile phone. The CASIA-Iris-M1-S2 subset has images captured using a similar device. In total, this subset contains 6000 images from 200 subjects with a resolution of \(1968\times 1024\) pixels, collected at three distances: 20, 25 and 30 cm. At last, the CASIA-Iris-M1-S3 subset is composed of 3600 images belonging to 360 subjects with a resolution of \(1920\times 1920\) pixels, which were taken with a NIR iris-scanning technology equipped on a mobile phone.

The Open Eye Dataset (OpenEDS) was created to investigate the semantic segmentation of eyes components, and background (Garbin et al. 2019). This database is composed of 356649 eye images, being 12, 759 images with pixel-level annotations, 252, 690 unlabeled ones, and 91, 200 images from video sequences belonging from 152 subjects. The images were captured with a head-mounted display with two synchronized cameras under controlled NIR illumination with a resolution of \(640\times 400\) pixels.

2.2 Visible and cross-spectral ocular images databases

Iris recognition using images taken at controlled NIR wavelength environments is a mature technology, proving to be effective in different scenarios (Bowyer et al. 2008; Phillips et al. 2008, 2010; Proença and Alexandre 2012; Proença and Neves 2017, 2019). Databases captured under controlled environments have few or no noise factors in the images. However, these conditions are not easy to achieve and require a high degree of collaboration from subjects. In a more challenging/realistic scenario, investigations on biometric recognition employing iris images obtained in uncontrolled environments and at VIS wavelength have begun to be conducted (Proença and Alexandre 2005; Proença et al. 2010). There is also research on biometric recognition using cross-spectral databases, i.e., databases with ocular images from the same individual obtained at both NIR and VIS wavelengths (Hosseini et al. 2010; Sharma et al. 2014; Nalla and Kumar 2017; Algashaam et al. 2017; Wang and Kumar 2019a). Currently, many types of research have been performed on biometric recognition using iris and periocular region with images obtained from mobile devices, obtained in an uncontrolled environment and by different types of sensors (De Marsico et al. 2015; Raja et al. 2015; Rattani et al. 2016). In this subsection, we describe databases with these characteristics. Table 3 summarize these databases. Some samples of ocular images from VIS and Cross-spectral databases are shown in Fig. 4.

From top to bottom: VIS and Cross-spectral ocular image samples from the VISOB (Rattani et al. 2016), MICHE-I (De Marsico et al. 2015), UBIPr (Padole and Proença 2012), UFPR-Periocular (Zanlorensi et al. 2020a), CROSS-EYED (Sequeira et al. 2016, 2017), PolyU Cross-Spectral (Nalla and Kumar 2017) databases

The UPOL (University of Palackeho and Olomouc) database has high-quality iris images obtained at VIS wavelength using the optometric framework (TOPCON TRC501A) and the Sony DXC-950P 3CCD camera. In total, 384 images of the left and right eyes were obtained from 64 subjects at a distance of approximately 0.15 cm with a resolution of \(768\times 576\) pixels, stored in 24 bits (RGB) (Dobeš et al. 2004).

The UBIRIS.v1 database (Proença and Alexandre 2005) was created to provide images with different types of noise, simulating image capture with minimal collaboration from the users. This database has 1877 images belonging to 241 subjects, obtained in two sections by a Nikon E5700 camera. For the first section (enrollment), some noise factors such as reflection, lighting, and contrast were minimized. However, in the second section, natural lighting factors were introduced by changing the location to simulate an image capture with minimal or without active collaboration from the subjects. The database is available in three formats: color with a resolution of \(800\times 600\) pixels, color with \(200\times 150\) pixels, and \(200\times 150\) pixels in grayscale (Proença and Alexandre 2005).

The UTIRIS is one of the first databases containing iris images captured at two different wavelengths (cross-spectral) (Hosseini et al. 2010). The database is composed of 1540 images of the left and right eyes from 79 subjects, resulting in 158 classes. The VIS images were obtained by a Canon EOS 10D camera with \(2048\times 1360\) pixels of resolution. To capture the NIR images, the ISW Lightwise LW camera was used, obtaining iris images with a resolution of \(1000\times 776\) pixels. As the melanin pigment provides a rich source of features at the VIS spectrum, which is not available at NIR, this database can be used to investigate the impact of the fusion of iris image features extracted at both wavelengths.

The UBIRIS.v2 database was built representing the most realistic noise factors. For this reason, the images that constitute the database were obtained at VIS without restrictions such as distance, angles, light, and movement. The main purpose of this database is to provide a tool for the research on the use of VIS images for iris recognition in an environment with adverse conditions. This database contains images captured by a Canon EOS 5D camera, with a resolution of \(400\times 300\) pixels, in RGB from 261 subjects containing 522 irises and 11, 102 images taken in two sessions (Proença et al. 2010).

The UBIPr (University of Beira Interior Periocular) database (Padole and Proença 2012) was created to investigate periocular recognition using images taken under uncontrolled environments and setups. The images from this database were captured by a Canon EOS 5D camera with a 400mm focal length. Five different distances and resolutions were configured: \(501\times 401\) pixels (8m), \(561\times 541\) pixels (7m), \(651\times 501\) pixels (6m), \(801\times 651\) pixels (5m), and \(1001\times 801\) pixels (4m). In total, the database has 10, 950 images from 261 subjects (the images from 104 subjects were obtained in 2 sessions). Several variability factors were introduced in the images, for example, different distances between the subject and the camera, as well as different illumination, poses and occlusions levels.

The BDCP (Biometrics Development Challenge Problem) database (Siena et al. 2012) contains images from two different sensors: an LG4000 sensor that captures images in gray levels, and a Honeywell Combined Face and Iris Recognition System (CFAIRS) camera (Siena et al. 2012), which captures VIS images. The resolutions of the images are \(640\times 480\) pixels for the LG4000 sensor and \(750\times 600\) pixels for the CFAIRS camera. To compose the database, 2577 images from 82 subjects were acquired by the CFAIRS sensor and 1737 images belonging to 99 subjects were taken by an LG4000 sensor. Images of the same subject were obtained for both sensors (Smereka et al. 2015). The main objective of this database is the cross-sensor evaluation, matching NIR against VIS images (Rattani and Derakhshani 2017). It should be noted that this database was used only in Smereka et al. (2015) and no availability information is reported.

Sequeira et al. (2014b) built the MobBIOfake database to investigate iris liveliness detection using images taken from mobile devices under an uncontrolled environment. It consists of 1600 fake iris images obtained from a subset of the MobBIO database (Sequeira et al. 2014a). The fake images were generated by printing the original images using a professional printer in a high-quality photo paper and recapturing the image with the same device and environmental conditions used in the construction of MobBIO.

The images that compose the IIITD Multi-spectral Periocular database were obtained under a controlled environment at NIR, VIS, and night-vision spectra. The NIR images were captured by a Cogent iris Scanner sensor at a distance of 6 inches from the subject, while the night vision subset was created using the Sony Handycam camera in night vision mode at a distance of 1.3 meters. The VIS images were captured with the Nikon SLR camera, also at a distance of 1.3 meters. The database contains 1240 images belonging to 62 subjects, being 310 images, 5 from each subject, at VIS and night vision spectra, and 620 images, 10 from each subject, at NIR spectrum (Sharma et al. 2014).

Nalla and Kumar (2017) developed the PolyU Cross-Spectral database to study iris recognition in the cross-spectral scenario. The images were obtained simultaneously under VIS and NIR illumination, totaling 12, 540 images from 209 subjects with \(640\times 480\) pixels of resolution, being 15 images from each eye in each spectrum.

To evaluate the state of the art on iris recognition using images acquired by mobile devices, the Mobile Iris Challenge Evaluation (MICHE) competition (Part I) was created (De Marsico et al. 2015). The MICHE-I (or MICHEDB) database consists of 3732 VIS images obtained by mobile devices from 92 subjects. To simulate a real application, the iris images were obtained by the users themselves, indoors and outdoors, with and without glasses. Images of only one eye of each individual were captured. The mobile devices used and their respective resolutions are iPhone5 (\(1536\times 2048\)), Galaxy S4 (\(2322\times 4128\)) and Galaxy Tablet II (\(640\times 480\)). Due to the acquisition mode and the purpose of the database, several noises are found in images such as specular reflections, focus, motion blur, lighting variations, occlusion due to eyelids, among others. The authors also proposed a subset, called MICHE FAKE, containing 80 printed iris images. Such images were created as follows. First, they were captured using the iPhone5 the Samsung Galaxy S4 mobile devices. Then, using a LaserJet printer, the images were printed and captured again by a Samsung Galaxy S4 smartphone. There is still another subset, called MICHE Video, containing videos of irises from 10 subjects obtained indoor and outdoor. A Samsung Galaxy S4 and a Samsung Galaxy Tab 2 mobile devices were used to capture these videos. In total, this subset has 120 videos of approximately 15 seconds each.

The VSSIRIS database, proposed by Raja et al. (2015), has a total of 560 images captured in a single session under an uncontrolled environment from 28 subjects. The purpose of this database is to investigate the mixed lighting effect (natural daylight and artificial indoor) for iris recognition at the VIS spectrum with images obtained by mobile devices (Raja et al. 2015). More specifically, the images were acquired by the rear camera of two smartphones: an iPhone 5S, with a resolution of \(3264\times 2448\) pixels, and a Nokia Lumia 1020, with a resolution of \(7712\times 5360\) pixels.

Santos et al. (2015) created the CSIP (Cross-Sensor Iris and Periocular) database simulating mobile application scenarios. This database has images captured by four different device models: Xperia Arc S (Sony Ericsson), iPhone 4 (Apple), w200 (THL) and U8510 (Huawei). The resolutions of the images taken with these devices are as follows: Xperia Arc S (Rear \(3264\times 2448\)), iPhone 4 (Front \(640\times 480\), Rear \(2592\times 1936\)), W200 (Front \(2592\times 1936\), Rear \(3264\times 2448\)) and U8510 (Front \(640\times 480\), Rear \(2048\times 1536\)). Combining the models with front and rear cameras, as well as flash, 10 different setups were created with the images obtained. In order to simulate noise variation, the image capture sessions were carried out in different sites with the following lighting conditions: artificial, natural and mixed. Several noise factors are presented in these images, such as different scales, off-angle, defocus, gaze, occlusion, reflection, rotation and distortions (Santos et al. 2015). The database has 2004 images from 50 subjects and the binary iris segmentation masks were obtained using the method described by Tan et al. (2010) (winners of the NICE I contest).

The VISOB database was created for the ICIP 2016 Competition on mobile ocular biometric recognition, whose main objective was to evaluate methods for mobile ocular recognition using images taken at the visible spectrum (Rattani et al. 2016). The front cameras of 3 mobile devices were used to obtain the images: iPhone 5S at 720p resolution, Samsung Note 4 at 1080p resolution and Oppo N1 at 1080p resolution. The images were captured in 2 sessions for each one of the 2 visits, which occurred between 2 and 4 weeks, counting in the total 158, 136 images from 550 subjects. At each visit, it was required that each volunteer (subject) capture their face using each one of the three mobile devices at a distance between 8 and 12 inches from the face. For each image capture session, 3 light conditions settings were applied: regular office light, dim light, and natural daylight. The collected images were preprocessed using the Viola-Jones eye detector and the region of the image containing the eyes was cropped to a size of \(240\times 160\) pixels.

Sequeira et al. (2016), Sequeira et al. (2017) created the Cross-Spectral Iris/Periocular (CROSS-EYED) database to investigate iris and periocular region recognition in cross-spectral scenarios. CROSS-EYED is composed of VIS and NIR spectrum images obtained simultaneously with 2K\(\times 2\)K pixel resolution cameras. The database is organized into three subsets: ocular, periocular (without iris and sclera regions) and iris. There are 3840 images from 120 subjects (240 classes), being 8 samples from each of the classes for every spectrum. The periocular/ocular images have dimensions of \(900\times 800\) pixels, while the iris images have dimensions of \(400\times 300\) pixels. All images were obtained at a distance of 1.5 meters, under uncontrolled indoor environment, with a wide variation of ethnicity and eye colors, and lightning reflexes.

The Post-mortem Human Iris database was collected to investigate the post-mortem human iris recognition. Due to the difficulty and restriction in collecting such images, this database has only 104 images from 6 subjects. The images were acquired in three sessions with an interval of approximately 11 hours using the IriShield M2120U NIR and Olympus TG-3 VIS cameras.

The QUT Multispectral Periocular database was developed and used by Algashaam et al. (2017) to study multi-spectral periocular recognition. In total, 212 images belonging to 53 subjects were captured at VIS, NIR and night vision spectrum with \(800\times 600\) pixels of resolution. The VIS and NIR images were taken using a Sony DCR-DVD653E camera, while the night vision images were acquired with an IP2M-842B camera.

Regarding some ocular biometrics problems caused by substantial degradation due to variations on illumination, distance, noise, and blur when using single-frame mobile captures, Nguyen et al. (2020) created the VISOB 2.0 database. This database comprises multi-frame captures and has stacks of eye images acquired using the burst mode of two mobile devices: Samsung Note 4 and Oppo N1. It is the second version of the VISOB database and was used in the 2020 IEEE WCCI competition (Nguyen et al. 2020). The images were collected in two visits. At each visit, the subjects collected their own images under three lighting conditions in two sessions. The available subset of the VISOB 2.0 database (competition training set) has 75, 428 images of left and right eyes belonging to 150 subjects. The VISOB 2.0 can also be employed to investigate the probing fairness of ocular biometrics across gender (Krishnan et al. 2020).

The Iris Social Database (I-SOCIAL-DB) has 3286 VIS images from 400 subjects, being \(43.75\%\) male and \(56.25\%\) female. It is composed of images of public persons such as artists and athletes. This database was created by collecting 1643 high-resolution portrait images using Google Image Search. Then, the ocular regions were cropped as rectangles of \(350\times 300\) pixels. The binary masks for the iris region (created by a human expert) are also available. This database can be employed to evaluate iris segmentation and recognition under unconstrained scenarios.

The UFPR-Periocular database has VIS images acquired in unconstrained environments by mobile devices. These images were captured by the subjects themselves using their own smartphone models through a mobile application (app) developed by the authors (Zanlorensi et al. 2020a). In total, this database contains 33, 660 samples from 1122 subjects acquired during 3 sessions by 196 different mobile devices. The image resolutions vary from \(360\times 160\) to \(1862\times 1008\) pixels. The main intra- and inter-class variability are caused by occlusion, blur, motion blur, specular reflection, eyeglasses, off-angle, eye-gaze, makeup, facial expression, and variations in lighting, distance, and angles. The authors manually annotated the eye corners and used them to normalize the periocular images regarding scale and rotation. This database can also be employed to investigate gender recognition, age estimation, and the effect of intra-class variability in biometric systems. The UFPR-Periocular database, which includes the manual annotations of the eye corners, as well as information on the subjects’ age and gender, is publicly available for the research community.

Zanlorensi et al. (2020b) created the UFPR-Eyeglasses database to investigate intra-class variability and also the effect of the occlusion by eyeglasses in periocular recognition under uncontrolled environments. This database has 2270 images captured by mobile devices from 83 subjects with a resolution of \(256\times 256\) pixels. The subjects captured the images using the same mobile app used to collect the UFPR-Periocular database. This database can be considered a subset of the UFPR-Periocular database containing some additional images. The authors manually annotated the iris’s bounding box in each image and used it to perform scale and rotation normalization. The intra-class variations in this database are mainly caused by illumination, occlusions, distances, reflection, eyeglasses, and image quality. The UFPR-Eyeglasses database, which includes the authors’ manual annotations, is publicly available to the research community.

2.3 Multimodal databases

In addition to the databases proposed specifically to assist the development and evaluation of new methodologies for iris/periocular recognition, some multimodal databases can also be used for this purpose. Table 4 show these databases. As described in this subsection, most of these databases consist of iris images obtained at NIR wavelength. Figure 5 shows samples of ocular images from some multimodal databases.

The BioSec baseline database, proposed by Fierrez et al. (2007), has biometric data of fingerprint, face, iris and voice. Data were acquired from 200 subjects in two acquisition sessions, with environmental conditions (e.g., lighting and background noise) not being controlled to simulate a real situation. There are 3200 NIR iris images, being 4 images of each eye for each session, captured by an LG IrisAccess EOU3000 camera (Fierrez et al. 2007).

The BiosecurID multimodal database consists of 8 unimodal biometric traits: iris, face, speech, signature, fingerprints, hand, handwriting, and keystroking (Fierrez 2010). The authors collected data from 400 subjects in four acquisition sessions through 4 months at six Spanish institutions. The iris images were captured at NIR by an LG Iris Access EOU 3000 camera with a resolution of \(640\times 480\) pixels. Four images of each eye were obtained in each of the 4 sessions, totaling 32 images per individual and a final set of 12, 800 iris images.

The BMDB (multienvironment multiscale BioSecure Multimodal Database) (Ortega-Garcia 2010) has biometric data from more than 600 subjects, obtained from 11 European institutions participating in the BioSecure Network of Excellence (Ortega-Garcia 2010). This database contains biometric data of iris, face, speech, signature, fingerprint and hand, and is organized into three subsets: DS1, which has data collected from the Internet under unsupervised conditions; DS2, with data obtained in an office environment under supervision; and DS3, in which mobile hardware was used to take data indoor and outdoor. The iris images belong to the DS2 subset and were obtained in 2 sessions at NIR wavelength in an indoor environment with supervision. For the acquisition, the use of contact lenses was accepted, but glasses needed to be removed. Four images (2 of each eye) were obtained in each session for each of the 667 subjects, totaling 5336 images. These images have a resolution of \(640\times 480\) pixels and were acquired by an LG Iris Access EOU3000 sensor.

The goal of the Multiple Biometrics Grand Challenge (MBGC) (NIST 2010b) was the evaluation of iris and face recognition methods using data obtained from still images and videos under unconstrained conditions (Phillips et al. 2009). The MBGC is divided into three problems: the portal challenge problem, the still face challenge problem, and the video challenge problem (NIST 2010b). This competition has two versions. The first one was held to introduce the problems and protocol, whereas version 2 was released to evaluate the approaches in large databases (Phillips et al. 2009). The iris images were obtained from videos captured at NIR by an Iridian LG EOU 2200 camera (Hollingsworth et al. 2009). The videos present variations such as pose, illumination, and camera angle. The MBGC database has 986 iris videos from 268 eyes collected in 2008 (Hollingsworth et al. 2009).

The Q-FIRE database (Quality in Face and Iris Research Ensemble) has iris and face images from 195 subjects, obtained through videos at different distances (Johnson et al. 2010). This database has 28 and 27 videos of face and iris, respectively, captured in 2 sections, with varying camera distance between 5, 7, 11, 15 and 25 feet. The videos have approximately 6 seconds each and were captured at approximately 25 frames per second. A Dalsa 4M30 infrared camera equipped with a Tamron AF 70–300 mm 1:4.5–5.6 LD DI lens were used to capture iris videos. For distances of 15 and 25 feet, a Sigma APO 300-800mm F5.6 EX DG HSM lens was used. The most attractive distance of capture for iris is 5 (\(300\times 280\) pixels), 7 (\(220 \times \)200 pixels), and 11 (\(120\times 100\) pixels) feet since they respectively represent high, medium and low resolution, based on the number of pixels in the iris diameter. The images also have variations of illumination, defocus, blur, eye angles, motion blur, and occlusions (Johnson et al. 2010).

The NIR images from the ocular region (iris and periocular) of the FOCS database (NIST 2010a) were extracted from the MBGC database (NIST 2010b) videos, which were collected from moving subjects (Matey et al. 2006). These videos were captured in an uncontrolled environment presenting some variations such as noise, gaze, occlusion and lighting. The database has 9581 images (4792 left, 4789 right) with a resolution of \(750\times 600\) pixels from 136 subjects (Smereka et al. 2015).

Their system can recognize users from up to 3 meters (10 feet) using a system with an active search for iris, face or palmprint patterns. The images were taken using a camera with high resolution so that a single image includes regions of interest for both eyes and face traits. Information from the face trait such as skin pattern can also be used for multi-modal fusion. The database has 2567 images from 142 individuals and 284 classes with a resolution of \(2352\times 1728\) pixels.

The SDUMLA-HMT multimodal database contains biometric traits of iris, face, finger vein, gait, and fingerprint (Yin et al. 2011). All data belong to 106 subjects and were collected at Shandong University in China. The iris images were collected at NIR and under a controlled environment at a distance of 6 cm to 32 cm between the camera and the subject. In total, the authors collected 1060 iris images with \(768\times 576\) pixels of resolution, being 10 images (5 of each eye) from each subject (Yin et al. 2011).

Sequeira et al. (2014a) created the MobBIO database due to the growing interest in mobile biometric applications, as well as the growing interest and application of multimodal biometrics. This database has data from iris, face, and voice belonging to 105 subjects. The data were obtained using an Asus TPad TF 300T mobile device, and the images were captured using the rear camera of this device in 8 MP of resolution. The iris images were obtained at VIS and in two different illumination conditions varying eye orientations and occlusion levels. For each subject, 16 images (8 of each eye, cropped from an image of both eyes) were captured. The cropped images have a resolution of \(300\times 200\) pixels. Manual annotations of the iris and pupil contours are provided along with the database, but iris illumination noises are not identified.

The gb2s\(\mu \)MOD database is composed of 8160 iris, face and hand videos belonging to 60 subjects and captured in three sessions with environment condition variation (Ríos-Sánchez et al. 2016). Sessions 1 and 2 were obtained in a controlled environment, while session 3 was acquired in an uncontrolled environment. The iris videos were recorded only in sessions 1 and 2 with a NIR camera (850 nm) held by the subject himself as close to the face as possible capturing both eyes. The diameter of the iris in such videos is approximately 60 pixels. Ten iris videos were collected in two (5 in each session) for each one of the 60 subjects. Along with the videos, information such as name, ID card number, age, gender, and handedness are also available.

All databases described in this subsection contain iris and/or periocular subsets, however, some databases that do not have such subsets can also be employed for iris/periocular recognition. For example, the FRGC (Phillips et al. 2005) database, which is a database of face images, has already been used for iris (Tan and Kumar 2013) and periocular (Woodard et al. 2010; Park et al. 2011; Smereka et al. 2015) recognition in the literature.

3 Ocular recognition competitions

In this section, we describe the major recent competitions and the algorithms that achieved the best results in iris and/or periocular region information. Through these competitions, it is possible to demonstrate the advancement in terms of methodologies for ocular biometrics and also the current challenges in this research area.

The competitions usually provide a database in which the competitors must perform their experiments and submit their algorithms. Once submitted, the algorithms are evaluated with another subset of the database, according to the metrics established by the competition protocol. In this way, it is possible to fairly assess the performance of different methodologies for specific objectives.

In ocular biometrics including iris and periocular recognition, there are several competitions aimed at evaluating different situations, such as recognition in images captured at NIR and/or VIS wavelengths, images captured in an uncontrolled environment, images obtained with mobile devices, among others. For each competition, we describe the approaches that achieved the best results using fused information from iris and periocular region, and also the best performing methodologies using only iris information. Table 5 presents the main competitions held in recent years and the best results achieved, while Table 6 details the methodologies that obtained the best results in these competitions.

3.1 NICE: noisy iris challenge evaluation

The Noisy Iris Challenge Evaluation (NICE) competition contains two different contests. In the first one (NICE.I), held in 2008, the goal was the evaluation of methods for iris segmentation to remove noise factors such as specular reflections and occlusions. Regarding the evaluation of encoding and matching methods, the second competition (NICE.II), was carried out in 2010. The databases used in both competitions are subsets of UBIRIS.v2 (Proença et al. 2010), which contains VIS ocular images captured under uncontrolled environments.

Described by Proença and Alexandre (2012), the first competition aimed to answer: “is it possible to automatically segment a small target as the iris in unconstrained data (obtained in a non-cooperative environment)?” In total, 97 research laboratories from 22 countries participate in the competition. The training set consisted of 500 images, and their respective manually generated binary iris masks. The committee evaluated the proposed approaches using another 500 images through a pixel-to-pixel comparison between the original and the generated segmentation masks. As a metric, the organizers choose the following error rate based on pixel-level:

where n refers to the number of test images, w and h are respectively the width and height of these images, \(P_{i}(r,c)\) means the intensity of the pixel on row r and column c of the ith segmentation mask, \(G_{i}(r,c)\) is the actual pixel value and \(\otimes \) is the or-exclusive operator.

According to the values of \(E_{j}\), NICE.I’s best results are the following: 0.0131 (Tan et al. 2010), 0.0162 (Sankowski et al. 2010), 0.0180 (De Almeida 2010), 0.0224 (Li et al. 2010), 0.0282 (Jeong et al. 2010), 0.0297 (Chen et al. 2010), 0.0301 (Donida Labati and Scotti 2010), 0.0305 (Luengo-Oroz et al. 2010).

The second competition (NICE.II) evaluated only the feature extraction and matching results. Therefore, all the participants used the same segmented images, which were generated by the winner methodology in the NICE.I contest (Proença and Alexandre 2012), proposed by Tan et al. (2010). The main goal was to investigate the impact of noise presented inside the iris region in the biometric recognition process. As described in both competitions (Proença and Alexandre 2012), these noise factors have different sources, e.g., specular reflection and occlusion, caused by the uncontrolled environment where the images were taken. This competition received algorithms sent by 67 participants from 30 countries. The training set consists of 1000 images and their respective binary masks. The proposed methods had to receive a pair of images followed by their masks as input and generate an output file containing the dissimilarity scores (d) of which pairwise comparison with the following conditions:

-

1.

\(d(I, I) = 0\)

-

2.

\(d(I_{1}, I_{2}) = 0 \Rightarrow I_{1} = I_{2}\)

-

3.

\(d(I_{1}, I_{2}) + d(I_{2}, I_{3}) \ge d(I_{1}, I_{3})\).

The submitted approaches were evaluated using a new set of 1000 images with their binary masks. Consider \(IM = \{I_{1},...,I_{n}\}\) as a collection of iris images, \(MA= \{M_{1},...,M_{n}\}\) as their respective masks, and id(.) representing a function that identifies an image. The comparison protocol one-against-all returns a match set \(D^{I} = \{d^{i}_{1},...,d_{im}\}\) and a non-match set \(D^{E} = \ d^{e}_{1},...,d_{ek}\}\) of dissimilarity scores, where \(id(I_{i}) = id(I_{j})\) and \(id(I_{i}) \ne id(I_{j})\), respectively. The algorithms were evaluated using the decidability scores \(d'\) (Daugman 2004), which measure the separation level of two distributions. The following overlap area gives this decidability scores \(d'\):

where the means of the two distributions are given by \(\mu _{I}\) and \(\mu _{E}\), and the standard deviations are represented by \(\sigma _{I}\) and \(\sigma _{I}\).

The best results of NICE.II ranked by their \(d'\) scores are as follows: 2.5748 (Tan et al. 2012), 1.8213 (Wang et al. 2012), 1.7786 (Santos and Hoyle 2012), 1.6398 (Shin et al. 2012), 1.4758 (Li et al. 2012), 1.2565 (De Marsico et al. 2012), 1.1892 (Li and Ma 2012), 1.0931 (Szewczyk et al. 2012).

The winner method, proposed by Tan et al. (2012), achieved a decidability value of 2.5748 by fusing iris and periocular features. The fusion process was performed at the score level by the sum rule method. Therefore, for iris and periocular images, different features and matching techniques were used. The iris features were extracted with ordinal measures and color histogram and for the periocular ones, texton histogram, and semantic information. To compute the matching scores, the authors employed the following metrics: SOBoost learning, diffusion distance, chi-square distance, and exclusive OR operator.

Wang et al. (2012) proposed a method using only iris information. Their approach was ranked second in the competition, achieving a decidability value of 1.8213. The algorithm performed the segmentation and normalization of iris using the Daugman technique (Daugman 1993). Features were extracted by applying the Gabor filters from different patches generated from the normalized image. The AdaBoost algorithm computed a selection of features and the similarity.

The main contribution of NICE competitions was the evaluation of iris segmentation and recognition methods independently, as several iris segmentation methodologies were evaluated in the first competition and the best one was applied to generate the binary masks used in the second one, in which the recognition task was evaluated. Hence, the approaches described in both competitions can be fairly compared since they employed the same images for training and testing.

Although NICE.II was intended to evaluate iris recognition systems, some approaches using information from the periocular region were also included in the final ranking. The winning method fused iris and periocular information, however, it should be noted that some approaches that also fused these two traits achieved lower results than methodologies that used only iris features. Moreover, it would be interesting to analyze the best performing approaches in the NICE.II competition in larger databases to verify the scalability of the proposed methodologies, as the database used in these competitions was not composed of a large number of images/classes.

Some recent works applying deep Convolutional Neural Network (CNN) models have achieved state-of-the-art results in the NICE.II database using information from the iris (Zanlorensi et al. 2018), periocular region (Luz et al. 2018) and fusing iris/periocular traits (Silva et al. 2018) with decidability values of 2.25, 3.47, 3.45, respectively.

3.2 MICHE: mobile iris challenge evaluation

In order to assess the performance that can be reached in iris recognition without the use of special equipment, the Mobile Iris CHallenge Evaluation II, or simply MICHE-II competition, was held (De Marsico et al. 2017). The MICHE-I database, introduced by De Marsico et al. (2015) has 3732 images taken by mobile devices and was made available to the participants to train their algorithms, while other images obtained in the same way were employed for the evaluation.

Similarly to NICE.I and NICE.II, MICHE is also divided into two phases. MICHE.I and MICHE.II focused on iris segmentation and recognition, respectively. Ensuring a fair assessment and targeting only the recognition step, all MICHE.II participants used the segmentation algorithm proposed by Haindl and Krupicka (2015), which achieved the best performance on MICHE.I.

The performance of each algorithm was evaluated through dissimilarity. Assuming I as a set of the MICHE.II database and that \(I_{a}, I_{b} \in I\), the dissimilarity function D is defined by:

satisfying the following properties:

-

1.

\(D(I_{a}, I_{a}) = 0\)

-

2.

\(D(I_{a}, I_{b}) = 0 \Rightarrow I_{a} = I_{b}\)

-

3.

\(D(I_{a}, I_{b}) = D(I_{b}, I_{a})\).

Two metrics were employed to assess the algorithms. The first, called Recognition Rate (RR), was used to evaluate the performance in the identification problem (1:n), while the second, called Area Under the Curve (AUC), was applied to evaluate the performance in the verification problem (1:1). In addition, the methodologies were evaluated in two different setups: first comparing only images acquired by the same device and then using images obtained by two different devices (cross-sensor). The algorithms were ranked by the average performance of RR and AUC. The best results are listed in Table 7.

Ahmed et al. (2016), Ahmed et al. (2017) proposed the algorithm that achieved the best result. Their methodology performs the matching of the iris and the periocular region separately and combines the final score values of each approach. For the iris, they used the rubber sheet model normalization proposed by Daugman (1993). Then, the iris codes were generated from the normalized images with the 1-D Log-Gabor filter. The matching was computed with the Hamming distance. Using only iris information, an Equal Error Rate (EER) of 2.12% was reached. Features from the periocular region were extracted with Multi-Block Transitional Local Binary Patterns and the matching was computed with the chi-square distance. With features from the periocular region, an EER of 2.74% was reported. The outputs of both modalities (iris and periocular) were normalized with z-score and combined with weighted scores. The weights used for the fusion were 0.55 for the iris and 0.45 for the periocular region, yielding an EER of 1.22% and an average between RR and AUC of 1.00.

The best performing approach using only iris information was proposed by Raja et al. (2017). In their method, the iris region was first located through a segmentation method proposed by Raja et al. (2015) and then normalized using the rubber sheet expansion model (Daugman 2004). Each image band (red, green and blue) was divided into several blocks. The features were extracted from these blocks, as well as from the entire image, using a set of deep sparse filters, resulting in deep sparse histograms. The histograms of each block and each band were concatenated with the histogram of the entire image, forming the vector of iris features. The features extracted were used to learn a collaborative subspace, which was employed for matching. This algorithm achieved the third place in the competition, with an average between RR and AUC of 0.86 and with EER values of 0% in the images obtained by the iPhone 5S and 6.55% in the images obtained by Samsung S4.

This competition was the first to evaluate iris recognition using images captured by mobile devices and also to evaluate methodologies applied to the cross-sensor problem, i.e., to recognize images acquired by different sensors.

As in the NICE.II competition, one issue is the scalability evaluation of the evaluated approaches. Although the reported results are very promising, we have to consider them as preliminary since the test set used for the assessment is very small, containing only 120 images. As expected, the best results were attained using iris and periocular region information, however, some approaches that used only iris information achieved better results than others that fused iris and periocular region information.

3.3 MIR: competition on mobile iris recognition

The BTAS Competition on Mobile Iris Recognition (MIR2016) was proposed to raise the state of the art of iris recognition algorithms on mobile devices under NIR illumination (Zhang et al. 2016). Five algorithms, submitted by two participants, were eligible for the evaluation.

A database (MIR-Train) was made available for training the algorithms and a second database (MIR-Test) was used for the evaluation. Both databases were collected under NIR illumination. The images of the two irises were collected simultaneously under an indoor environment. Three sets of images were obtained, with distances of 20 cm, 25 cm, and 30 cm, and 10 images for each distance. The images from both databases were collected in the same session. The MIR-Train database is composed of 4500 images from 150 subjects, while MIR-Test has 12, 000 images from 400 subjects. All images are grayscale with a resolution of \(1968\times 1024\) pixels. The main sources of intra-class variation in the images are due to variations in lighting, eyeglasses and specular reflections, defocus, distance changes, and others. Differently from NICE.II, the segmentation masks were not provided in MIR2016, thus, the methodologies submitted included iris detection, segmentation, feature extraction, and matching.

For the evaluation, the organizing committee considered that the left and right irises belong to the same class; thus, a fusion of the matching scores of both irises was performed. All possible intra-class comparisons (i.e., irises from the same subjects) were implemented to compute the False Non-Match Rate (FNMR). From each iris class, two samples were randomly selected to calculate the False Match Rate (FMR). In total, 174, 000 intra-class and 319, 200 inter-class matches were used. In cases where intra- or inter-class comparisons could not be performed due to failure enrollment or failure match, a random value between 0 and 1 was assigned to the score. The classification of the participants was performed using the FNMR4 metric, but the EER and DI metrics were also reported. The FNMR4 metric reports the FNMR value when the FMR equals to 0.0001. The EER is the value when FNMR is equal to the FMR, and the DI value is the decidability index, as explained previously.

The best result was from the Beijing Bata Technology Co. Ltd. reporting FNMR4 = 2.24%, EER = 1.41% and DI = 3.33. The methodology, described in Zhang et al. (2016), includes four steps: iris detection, preprocessing, feature extraction, and matching. For iris detection, the face is found using the AdaBoost algorithm (Viola and Jones 2004) and eye positions are found by using Support Vector Machines (SVM). Next, to lessen the effect of light reflections, the irises and pupils are detected by the modified Daugmans Integro-Differential operator (Daugman 2004). In pre-processing, reflection regions are located and then removed using a threshold and shape information. Afterward, the iris region is normalized using the method proposed by Daugman (1993). Eyelashes are also detected and removed using a threshold. An improvement in image quality is achieved through histogram equalization. The features were extracted with Gabor wavelet, while Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) were applied for dimensionality reduction. The matching was performed using the cosine and Hamming distances, and the results combined.

The second place was achieved by TigerIT Bangladesh Ltd. with FNMR4 = 7.07%, EER = 1.29% and DI = 3.94. The proposed approach also made improvements in image quality through histogram equalization and smoothing. After pre-processing, the iris was normalized using the rubber sheet model (Daugman 2007). Features were then extracted with 2D Gabor wavelets, while the matching was performed employing the Hamming distance. This methodology was classified in second place since it obtained a higher FNMR4 value than the first one, but the EER and DI values were better than those reported by the winning algorithm of the competition.

The MIR2016’s main contribution is to be the first competition using NIR images acquired by mobile modules, in addition to the construction of a new database containing images from both eyes of each individual. Unfortunately, the competition did not have many participants and the proposed methodologies consist only of classical literature techniques.

3.4 VISOB 1.0 and VISOB 2.0 competitions on mobile ocular biometric recognition

The VISOB database was created for the VISOB 1.0 - ICIP 2016 Competition on mobile ocular biometric recognition, whose main objective was to evaluate the progress of research in the area of mobile ocular biometrics at the visible spectrum (Rattani et al. 2016). The front cameras of 3 mobile devices were used to obtain the images: iPhone 5S at 720p resolution, Samsung Note 4 at 1080p resolution and Oppo N1 at 1080p resolution. The images were captured in 2 sessions for each one of the 2 visits, which occurred between 2 and 4 weeks, counting in the total 158, 136 images from 550 subjects. At each visit, it was required that each volunteer (subject) capture their own face using each one of the three mobile devices at a distance between 8 and 12 inches from the face. For each session, images were captured under 3 light conditions: regular office light, offices lights off but dim ambient lighting still present (dim light) and next to sunlit windows (natural daylight settings). The collected database was preprocessed using the Viola-Jones eye detector and the region of the image containing the eyes was cropped to a size of \(240\times 160\) pixels.

The VISOB 1.0 competition was designed to evaluate ocular biometric recognition methodologies using images obtained from mobile devices in visible light on a large-scale database. The database created and used for the competition was VISOB (VISOB Database ICIP2016 Challenge Version) (Rattani et al. 2016). This database has 158, 136 images from 550 subjects, and is the database of images obtained from mobile devices with the largest number of subjects. The images were captured by 3 different devices (iPhone 5S, Oppo N1 and Samsung Note 4) under 3 different lighting classes: ‘daylight’, ‘office’, and ‘dim light’. Four different research groups participated in the competition and 5 algorithms were submitted. The metric used to assess the performance of the algorithms was EER.

In almost all competitions, participants submit an algorithm already trained and the evaluation is performed on an unknown portion of the database. On the other hand, VISOB 1.0 competitors submitted an algorithm that was trained and tested on an unknown portion of the database. Two different evaluations were carried out. In the first one (see Table 8), the algorithms were trained (enrollment) and tested for each device and type of illumination.

In the second evaluation, the algorithms were trained only with the images from the ‘office’ lighting class for each of the 3 devices. To assess the effect of illumination on ocular recognition, the tests were performed with the 3 types of illumination for each device. The results are shown in Table 9.

Raghavendra and Busch (2016) achieved an EER between 0.06% and 0.20% in all assessments, obtaining the best result of the competition. The proposed approach extracted periocular features using Maximum Response (MR) filters from a bank containing 38 filters, and a deep neural network learned with a regularized stacked autoencoders (Raghavendra and Busch 2016). For noise removal, the authors applied a Gaussian filter and performed histogram equalization and image resizing. Finally, the classification was performed through a deep neural network based on deeply coupled autoencoders.

All participants explored features based on the texture of the eye images, extracted from the periocular region. None of the submitted algorithms extracted features only from the iris. The organizing committee compared the performance of the algorithms using images obtained only by the same devices, that is, the algorithms were not trained and tested on images from different devices (cross-sensor). Thus, the main contributions of this competition were a large database containing images from different sensors and environments, along with the assessments on these different setups.

The second edition of this competition, called VISOB 2.0, was carried out at IEEE WCCI in 2020 (Nguyen et al. 2020). A new VISOB ’s subset with eye images from 250 subjects captured by two mobile devices: Samsung Note 4 and Oppo N1, was employed to compare the submitted approaches. This competition evaluated ocular biometrics recognition methods using stacks of five images in the open-world (subject-independent) protocol in different lighting conditions: Dark, Office, and Daylight. In the development (training) stage, the competitors were provided with stacks of images from 150 subjects. Regarding the subject-independent evaluation, the comparison of the submitted methods was performed employing samples from other 100 subjects that were not available in the training stage. The main idea of using multi-frame (stacks) captures for ocular biometrics is to avoid degradation in the images caused by variations in illumination, noise, blur, and user to camera distance. Two participants submitted algorithms based on deep representations and one based on hand-crafted features. Table 10 presents the results.