Abstract

The Somatic Mutation Theory (SMT) has been challenged on its fundamentals by the Tissue Organization Field Theory of Carcinogenesis (TOFT). However, a recent publication has questioned whether TOFT could be a valid alternative theory of carcinogenesis to that presented by SMT. Herein we critically review arguments supporting the irreducible opposition between the two theoretical approaches by highlighting differences regarding the philosophical, methodological and experimental approaches on which they respectively rely. We conclude that SMT has not explained carcinogenesis due to severe epistemological and empirical shortcomings, while TOFT is gaining momentum. The main issue is actually to submit SMT to rigorous testing. This concern includes the imperatives to seek evidence for disproving one’s hypothesis, and to consider the whole, and not just selective evidence.

1 Introduction

The Somatic Mutation Theory (SMT) (Hanahan and Weinberg 2000) has been recently challenged on its fundamentals by the Tissue Organization Field Theory of carcinogenesis (TOFT) (Sonnenschein and Soto 1999). TOFT is part of an old research tradition dating back to the nineteenth century (Triolo 1964; Vineis et al. 2010), updated and modified over 15 years ago by Sonnenschein and Soto (1999). According to TOFT, cancer is a disease occurring at the tissue level of biological organization, arising as a consequence of the disruption of the morphogenetic field (MF) that orchestrates histogenesis and organogenesis from fertilization to senescence. TOFT has challenged the hegemony of SMT, which, starting with Theodor Boveri (1929), posits that cancer arises from a single cell, due to an accumulation of genomic somatic mutations (Hanahan and Weinberg 2000).

A recent paper from Bedessem and Ruphy (2015) denies that TOFT could be an alternative hypothesis to SMT by claiming that both theories suffer from a “lack of non-ambiguous experimental proofs”, and because the “level of argumentation (is) insufficient to irrevocably choose one of the two theories”. However, Bedessem and Ruphy mainly criticize TOFT and deny that a true ‘crisis’ exists in this field that would justify a so-called ‘paradigm shift’. In addition they assert that only narrow differences exist between SMT and TOFT and that those divergences arise mainly from the ‘metaphysical ground’, because “philosophical arguments” are used to “compensate the deficiency in the empirical demonstration”. Bedessem and Ruphy go on to minimize the consequences of the shortcomings hitherto gathered within the SMT framework. Consequently their proposal suggests an “integration” between SMT and TOFT in order to overcome the hypothesized irreconcilability.

Herein we rebut Bedessem and Ruphy’s views. To do so, we consider: a) the current shortcomings of the SMT; b) the different experimental and philosophical premises on which SMT and TOFT respectively rely; and finally c) whether a crisis is taking place in the current framework, and whether this would justify a paradigmatic shift.

2 Evidence of the SMT Failure

2.1 The Role of Mutations

SMT entirely relies on the “causative role” of somatic mutations during carcinogenesis. Bedessem and Ruphy (2015) state that “cancer cells often exhibit large scale genetic perturbations, with a high number of local mutations and chromosomal anomalies. This is contradictory with the classical version of SMT, which considers that tumorigenesis is due to punctual genetic mutations”. While this is a worthy argument, the narrative ignores several lines of evidence that stand against the plausibility of the ‘causative role’ of mutations in cancer initiation. To begin with, it has been acknowledged that mutations have been detected only in 30–40 % of tumor samples (Chanock and Thomas 2007). It is then relevant to ask to what factor(s) other than mutations the remaining 60–70 % of tumors are due. Also, somatic mutations have now been detected in normal as well as in inflamed tissues (Washington et al. 2000; Zhang et al. 1997; Lupski 2013; Yamanishi et al. 2002). Moreover, deep-sequencing analysis has revealed that non-malignant skin cells in healthy volunteers harbor many more so-called cancer-driving mutations than expected (Martincorena et al. 2015).

Evidence arguing for the irrelevance of mutations as a target for therapeutic management comes from studies performed on chronic myelogenous leukemia. It has been claimed that the abnormal fusion tyrosine kinase BCR-ABL acts as an “oncogene” and is deemed the key-initiating factor in myelogenous neoplastic transformation. Inhibition of the corresponding oncoprotein by means of tyrosine kinase inhibitors (TKIs) has indeed led to significant short-term beneficial responses, yet without achieving any benefit in terms of long-term survival. This latter failure has been ascribed to the fact that a reservoir of cancer stem cells still proliferates because these cells lack the alleged targeted-mutated gene and are therefore insensitive to TKIs (Pellicano et al. 2014; Jiang et al. 2007). Thus, according to this rationale, myeloid cells would become transformed by an oncogene that curiously is absent among the cancer stem cell population from which cancer is thought to arise. Recently, it has been shown that the genome signature of three different ependymoma tumors lacks tumor-driving mutations (while displaying epigenetic modifications), whereas others show neither genomic mutations nor epigenetic aberrations (Mack et al. 2014). This finding implies that cancer may arise even in the absence of any ‘genomic deregulation’ or ‘mutation’ (Greenman et al. 2007; Imielinski et al. 2012; Lawrence et al. 2013). Indeed, an additional challenge to SMT comes from recent sequencing studies in which zero mutations were found in the DNA of some tumors. Remarkably, sequencing studies dealing with that subject made little mention of the fact that some tumors had zero mutations (Kan et al. 2010; Baker 2015).

Therefore, as emphasized by a Nature editorialist, “it urge(s) us to revisit the role of gene mutations in cancer”, and to address, “if not gene mutations, what else could cause cancer?” (Versteeg 2014). In summary, on the one hand, those data challenge the presumptive causative role played by somatic mutations in cancer onset, while on the other, it is puzzling that they are so rarely quoted and appropriately discussed.

Another source of controversy is represented by cancer arising in transgenic animals or in humans as ‘hereditary tumors’. Mice “engineered” to harbor several oncogenic driver mutations develop cancer, lending apparent support to the mutation-driven cancer model (Van Dyke and Jacks 2002). However, in transgenic experiments, as well as in hereditary cancers, these models are not concerned with a single ‘renegade’ cell committed to becoming a cancer, but with a whole organism in which all the cells, and hence all tissues and organs are ‘mutated’. Additionally, some of these transgenic systems could be considered as ‘conditional’ models, given that transgene expression is highly dependent on the ‘permissive’ effect of some unknown microenvironmental factors. Indeed, addition of doxycycline is required to either induce or repress (according to the experimental system used) the transgene expression (Baron and Bujard 2000). This finding reveals a very relevant difference regarding mutation activity in hereditary cancers and further stresses the fictitious character of such models.

Both hereditary cancers and tumors arising in transgenic animals may indeed be viewed as a consequence of inborn inherited errors of development, that is, as the result of a process initiated by a germ-line mutation(s) in the genome of one or both gametes (sperm and/or ovum), as opposed to sporadic cancers (Sonnenschein et al. 2014a). The mutated genome of the resulting zygote will endow all the cells in the morphogenetic fields of the developing organism with such a genomic mutation(s). Examples of this variety of inborn errors of development include retinoblastoma, Gorlin syndrome, xeroderma pigmentosum, BRCA-1 and -2 neoplasia, and many others (Garber and Offit 2005). Therefore, cancers arising from inborn errors of development should be considered as separate pathogenetic entities when compared to sporadic cancers, given that they ‘emerge’ from very different morphogenetic fields (Sonnenschein et al. 2014a).

Future refinement of analytical and bioinformatics techniques may allow the identification of unexpected so-called cancer-driver mutations (Raphael et al. 2014). However, those newly uncovered mutations may be mutually exclusive. The effect of one mutation may indeed nullify that of another. This happens, for example, when a mutation fosters the expression of a molecular factor while the concomitant mutation inhibits the synthesis of the same factor (Vandin et al. 2012). Alternatively, as the number of mutations increases, their respective ‘causal power’ becomes significantly reduced; that is, if carcinogenesis requires a hundred, or even thousands of mutated genes, the pathogenetic ‘weight’ of each single mutation decreases in a proportional manner.

2.2 Are Current Targeted Therapies Valid Arguments Favoring the SMT?

Bedessem and Ruphy claim that “some targeted therapies have provided successful results” and that these results provide a “strong experimental argument” that favors the SMT. However, the references quoted by the authors are limited to a few instances, and ignore that even SMT followers acknowledge “that targeted therapies are generally not curative or even enduringly effective” (Hanahan 2014). Therefore, the “promise of molecularly targeted therapies remains elusive” (Kamb 2010). Others have shared this conclusion (Seymour and Mothersill 2013; Wheatley 2014). Admittedly, some limited progress has been noticed for a few specific cancers (Bailar and Gornik 1997; AACR Cancer Progress Report 2012). Mortality changes reflect declining incidence (mostly due to reduced tobacco smoking) or early detection, whereas drug innovation is likely to have lowered the cancer mortality rate by only 4 % (Lichtenberg 2010). Unambiguously, cancer remains a major worldwide public health problem. Thus, the War on Cancer is far from being won (Ness 2010). One can conclude that the most promising approach in cancer management still relies on prevention (reduced carcinogen exposure and vaccination against hepatitis viruses and human papillomaviruses).

3 Epistemological Bases of Current Cancer Theories

Experimental and clinical evidence has provided a reliable ground on which TOFT has been built (Clark 1995; Potter 2001; Arnold et al. 2002; McCullough et al. 1997). Currently, SMT and TOFT are recognized to be different paradigms by independent researchers (Wolkenhauer and Green 2013; D’Anselmi et al. 2011; Sonnenschein et al. 2014b; Satgé and Bénard 2008; Prehn 2005; Schwartz et al. 2002; Baker et al. 2010; Laforge et al. 2005; Longo and Montévil 2014; Levin 2012; Smythies 2015; Tarin 2011).

The SMT and the TOFT primarily diverge on their different epistemological premises. For the sake of simplicity, SMT is actually seen as a representative example of reductionism, while TOFT adopts an ‘organicism’ framework (Marcum 2010). Both approaches arise as an attempt to capture the complexity underpinning biology (Brigandt and Love 2015). The search for ‘intelligibility’ of the ‘natural world’ has historically been dominated, since Descartes and De la Forge (1664), by the search for a ‘fundamental invariant’, thought to represent the ‘key factor’ from which every process originates and develops according to a few simple rules (Miquel 2008). By analogy with what happened in physics, in biology the key factor was surmised to be placed at the lowest level (i.e., the molecular one), which was assumed to represent a ‘privileged level of causality’ to which every other level and issuing complexity can be ‘reduced’. Reductionism means the concept that every phenomenon can be explained by principles governing the smallest components participating in the observed phenomenon (Nagel 1998). According to the Stanford Encyclopedia of Philosophy (2015), “ontological reduction is the idea that each particular biological system (e.g., an organism) is constituted by nothing but molecules and their interactions. In metaphysics, this idea is often called physicalism”. Namely, current prevailing conceptions of physicalism reject downward causation because it is not compatible with the claim of physicalism that “all biological principles should be underived law about physical systems” (Soto et al. 2008a). Instead, biological systems are truly characterized by downward causation and diachronic emergence, which the physicalist reductionist framework cannot accommodate.
Indeed, in the context of complex systems, physical forces and constraints acquire new properties (emergence) that are not anticipated or fixed at the beginning of a process: mechanical force may acquire novel properties, such as that of inducing gene expression, which cannot be predicted from our knowledge of the physical world. In this sense, physicalism is a true ‘reductionist’ approach, as it is unable to accommodate emergent properties of the living (Soto et al. 2008a).

3.1 SMT and Reductionism

Genetic reductionism (frequently regarded as synonymous with ‘genetic determinism’) is the belief that human phenotypes and even complex traits of living beings may be explained solely by the activity of genes, to which every biological feature may be ‘reduced’. The inappropriate use of metaphors borrowed from information theory has even reinforced the ‘causal’ power with which genes are credited (Longo et al. 2012). Thus, genetic reductionism may be viewed as an even more radical stance than ‘generic’ reductionism, given that for genetic reductionism only ‘genes’ matter, with the exclusion of any other sort of molecules or mechanisms (Mazzocchi 2008). Genetic reductionism underlying SMT may be sketched as follows: a) the genome is the ‘ontological’ hardcore of an organism: it defines both the phenotype and its dynamical responses to environmental stimuli, according to a ‘program’ hidden in the DNA and dictating, for better or worse, a cell’s fate. b) In this way, the gene has replaced the Aristotelian concept of deus ex machina, becoming a ‘deus in machina’. As a consequence, the causality principle must be found in the DNA. The causality chain is believed to proceed from genes to proteins, ultimately determining every cell’s function and structure (horizontal unidirectional causality), and from cells to tissues, organs and even the whole organism along a vertical tree (bottom-up causality flow). c) Dynamic interactions across this endless chain of causality are viewed as behaving in a linear, predictive manner. d) A further corollary, hastily borrowed from information theory, implies that biological functions are entirely governed by the DNA-based ‘program’. Hence, by analogy with computers, modulation of cell activities is put in an on/off state by ‘molecular signals’ emanating from genes.
These assumptions—each of them belonging to a sort of “genomic metaphysics” (Mauron 2002)—are no longer supported by experimental data (Weatherall 2001; Longo et al. 2012; Shapiro 2009). Yet, these concepts persist in shaping the mainstream as well as the methodological activity of scholars, despite being criticized over the past years (Strohman 2002; Noble 2008a, b). It is by now recognized that biological causation takes place at different and entrenched levels. Namely, the non-linear dynamics occurring at lower (molecular) levels is shaped by higher-level constraints that are superimposed on the intrinsic stochasticity of gene expression (Dinicola et al. 2011; Pasqualato et al. 2012; Kupiec 1983, 1997; Dokukin et al. 2011, 2015).

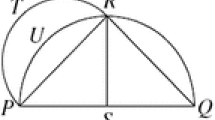

To accommodate such disturbing results, ‘tough’ reductionism has been subject to extensive critical reappraisal attempting to reframe the classical ‘vision’ upon which molecular biology has relied since its beginnings (Cornish-Bowden 2011). This endeavor has involved collecting heterogeneous disciplines in an effort to refine the understanding of gene-regulatory processes. Omics disciplines have added some useful insights into the intricate networks in which genes, proteins and other macromolecular components are entangled, yet without questioning the very basic fundamentals of molecular biology (Kitano 2002). According to an ‘omic’ perspective, causality does not depend on a single or on a discrete number of genes. Instead, it depends on supra-genomic wide regulatory rules, constraining the genome to function as a ‘whole’ (Tsuchiya et al. 2009). This is indisputably a step forward, and it allows one to grasp how the genome acts as a ‘coherent system’, characterized by non-linear dynamics, hysteresis and bistability (Bizzarri and Giuliani 2011). With few exceptions, such approaches are still framed according to a gene-centered paradigm, where the biological causality flows from genes to cells. Those models do not take into consideration how such processes are actually shaped by supra-cellular levels (tissue microenvironment) (Müller and Newman 2003). On the contrary, an integrated approach, spanning from molecules to cell-stroma interactions, may instead explain ‘emergent’ properties of living complex systems (Bizzarri et al. 2013). Reductionism is unable to deal with this complexity. This impossibility must be viewed as an ontological unfeasibility, because reductionism cannot explain emergent properties, not due to methodological inadequacies, but because of its absolute impracticality. An explanatory case in point is evidenced by the Rayleigh–Bénard convection, a widely recognized paradigmatic example for studying inter-levels causation (Bishop 2008). 
Classical philosophical accounts of causation (e.g., counterfactual, logical, probabilistic process, regularity, structural) have indeed been heavily influenced by a nearly exclusive focus on linear models (Pearl 2000). However, in the context of complex systems many additional channels and levels of interaction not envisioned in linear-based models are usually observed. Dealing with these additional forms of interaction seems possible only within a new, non-reductionist paradigm (Longo and Montévil 2014). In conclusion, cancer, being an ‘emergent’ phenomenon, cannot be ‘reduced’ to its lowest components, whatever its dynamics.

3.2 TOFT and Organicism

TOFT has been shaped according to an open-ended organicism (Gilbert and Sarkar 2000). Organicism, more commonly known as “systems theory”, focuses on systems rather than on single components, putting the emphasis on both bottom-up and top-down causation (Soto et al. 2008a). TOFT posits that ‘causative’ processes take place within, and are driven by, the ‘morphogenetic field’ (MF) (Sonnenschein and Soto 1999). Originally introduced by Weiss (1939), the MF concept has received renewed appreciation in the last few years (Gilbert et al. 1996). The MF acts within a living system by ‘driving’ the dynamics of all components (genes, cytoskeleton, enzymes and so on), constraining them into a coherent behavior. Undeniably, those processes are intrinsically ‘bonded’ by the very nature of raw components, the past history of the system, the physical-electromagnetic constraints, and chemical gradients.

Within MFs, a specific class of molecules (morphostats), mostly produced by stromal cells (fibroblasts) and non-epithelial cells (macrophages), has been credited with modulating cell proliferation. Morphostats and morphogens perform a wide array of functions in maintaining tissue architecture and appropriate development (Potter 2001). In turn, both of them are tightly regulated by tissue constraints. Therefore, any disruption in tissue architecture may lead to a deregulation in the morphostats/morphogens balance, leading to further abnormalities in cell proliferation and tissue organization. According to this framework “loss of normal tissue microarchitecture is a (perhaps the) fundamental step in carcinogenesis” (Potter 2007), a conclusion compatible with the TOFT. Moreover, pre-neoplastic lesions triggered by alterations in the MF may in turn contribute to further deregulating the surrounding milieu architecture and, “once this ‘pathological’ new niche is formed it set(s) the stage for tumor progression to occur” (Laconi 2007). The integrated sum of all of these factors determines the MF’s intrinsic ‘strength’, which allows cells travelling across an attractor’s landscape to ultimately find the most appropriate ‘location’ (phenotypic determination) (Nicolis and Prigogine 1989; Huang and Ingber 2006–2007).

A meaningful case in point is constituted by a very specific situation in which the MF is influenced by gravitational forces. Remarkably, living cells exposed to microgravity spontaneously acquire two distinct phenotypes, despite the absence of changes in their genome expression pattern (Testa et al. 2014; Masiello et al. 2014; Pisanu et al. 2014). The non-equilibrium theory (Kondepudi and Prigogine 1983) provides a convincing explanation of such counterintuitive phenomena. A far-from-equilibrium open system can form spatial stationary patterns after experiencing a phase transition, leading to new asymmetric configurations. These states are equally accessible, as there exists a complete symmetry between the emerging configurations as reflected in the symmetry of the bifurcation diagram. However, the superimposition of an external constraint may break the system’s symmetry, bestowing a preferential directionality according to which the system evolves by occupying a selected state (phenotypic determination). On the contrary, when constraints are removed, as occurs in microgravity, the driving control on the phenotypic switch is lost, and the system acquires additional degrees of freedom. In this way the system will display more than one phenotype, in principle ‘incompatible’ with its native genetic ‘commitment’ (Bizzarri et al. 2014). Those investigations may also explain how determinant a ‘weak’ force could be in deflecting a normal development path, thus shaping morphologies and functions (Bravi and Longo 2015).
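The symmetry-breaking argument above can be illustrated with a deliberately simple toy model. The sketch below is not taken from the cited studies: it assumes a textbook one-variable pitchfork system, dx/dt = r·x − x³ + h, in which the two stable states stand in for two phenotypes and the hypothetical parameter `h` stands in for a weak external constraint such as gravity. The names `potential`, `stable_states`, `r` and `h` are illustrative choices only.

```python
import numpy as np

def potential(x, r=1.0, h=0.0):
    """Toy potential V(x) = -r*x**2/2 + x**4/4 - h*x.

    Its minima are the stable states of dx/dt = r*x - x**3 + h.
    h is a hypothetical 'external constraint' (assumption, not from the source).
    """
    return -0.5 * r * x**2 + 0.25 * x**4 - h * x

def stable_states(r=1.0, h=0.0):
    """Return the stable equilibria of dx/dt = r*x - x**3 + h, in ascending order."""
    # Equilibria are the real roots of -x**3 + r*x + h = 0.
    roots = np.roots([-1.0, 0.0, r, h])
    real = np.sort(roots[np.abs(roots.imag) < 1e-9].real)
    # An equilibrium is stable when the derivative of the right-hand side is negative.
    return [float(x) for x in real if r - 3.0 * x**2 < 0]

# Without a constraint (h = 0) the two states are mirror images and equally deep.
low, high = stable_states(r=1.0, h=0.0)
print(low, high, potential(low), potential(high))

# With a weak constraint (h = 0.1) the symmetry is broken: one state is favored.
low_c, high_c = stable_states(r=1.0, h=0.1)
print(potential(low_c, h=0.1), potential(high_c, h=0.1))
```

In this sketch, setting `h` to zero plays the role of removing the constraint: both states become equally accessible, which is the qualitative behavior the paragraph attributes to cells in microgravity. The model says nothing about real cell populations; it only makes the bifurcation logic concrete.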

By analogy, something similar happens within an embryonic MF or normal 3D-environment. Results from studies in which cancer cells have been cultured in specific morphogenetic fields (3D, embryonic or maternal) reinforce the TOFT narrative by showing how microenvironments overcome the activity of mutated genes, hence promoting tumor ‘reversion’. By placing cancer cells into “normal” microenvironments—i.e., by restoring a normal, strong morphogenetic field—the tumor phenotype can be reverted into a normal one. Cancer cells exposed to embryonic morphogenetic fields (Bizzarri et al. 2011; D’Anselmi et al. 2013; Pierce and Wallace 1971), or cultured in 3D-reconstructed biological microenvironments mimicking the normal tissue architecture (Willhauck et al. 2007; Maffini et al. 2004) undergo apoptosis and differentiation, eventually ending in the reprogramming of a “normal” phenotype (Mintz and Illmensee 1975; Hendrix et al. 2007; Kenny and Bissell 2003).

Namely, the fate of transplanted tumors is highly dependent on the characteristics of the microenvironment. Indeed, even when transplanting highly metastatic cancer cells, no tumors arise when they are introduced into an embryo field (Lee et al. 2005). Additionally, several studies have highlighted that the likelihood of successful tumor transplant is highly dependent on the age of microenvironmental stroma (Maffini et al. 2005; Marongiu et al. 2014). Usually, this evidence is either dismissed by SMT supporters, or else it is ignored, being considered an “odd” exception. Instead, within the TOFT framework, such paradoxical data constitute a pivotal proof of concept (Bizzarri and Cucina 2014).

Analogous cases have been provided by studies stressing the importance of endogenous, weak electro-magnetic fields in regulating morphogenesis, left–right patterning and many other developmental processes (Pai et al. 2015; Tosenberger et al. 2015). Moreover, a study concluded that depolarization of a glycine receptor-expressing channel in Xenopus laevis neural crest may induce a full metastatic melanoma without any involvement of a mutation, carcinogen, or DNA damage (Blackiston et al. 2011). Deregulation of the bioelectric environment may rewire protein interaction networks (Taylor et al. 2009). Comprehensive reviews of the role of bioelectric processes in cancer have also been published, highlighting how bioelectric activity is a constitutive partner of the morphogenetic field involved during cancer transformation (Chernet and Levin 2013).

Overall these data point out that: a) MF may counteract the supposedly carcinogenic effects of point mutations. Indeed, despite many ‘biochemical mistakes’ occurring within cells as a consequence of ‘altered’ gene activity, MF may efficiently restore a normal phenotype by inhibiting/reverting the malignant phenotype. b) Disturbed interactions among cells and their microenvironment lead to a ‘deregulated’ MF, and ultimately to the emergence of cancer, even in the absence of any mutation (Baker and Kramer 2007). Another example of these interactions became evident when undifferentiated embryonic stem cells were transplanted into the rat brain at the hemisphere opposite to an ischemic injury; transplanted cells migrated along the corpus callosum towards the damaged tissue and differentiated into neurons in the border zone of the lesion (Erdö et al. 2003). In the homologous mouse brain, the same murine embryonic stem cells did not migrate, but they produced highly malignant teratocarcinomas at the site of implantation. The authors concluded that this study “demonstrated that the interaction of embryonic cells with different microenvironments determines whether regeneration or tumorigenesis is promoted”. This is precisely what TOFT posits: cancer development is strongly reliant on the dynamic interactions occurring among cells and their microenvironment. Additionally, those results further support the notion that carcinogenesis must be viewed as ‘development gone awry’ (Soto et al. 2008b).

4 Basic Premises

Quiescence or proliferation as the default state? From the experimental point of view, SMT posits that cancer is a “cell-based disease”, whereby a normal cell over time accumulates mutations that affect the control of its proliferation, thus becoming a ‘cancer cell’ (Weinberg 1998). According to this model, the ‘default state’ of a cell is quiescence, and proliferation must therefore be actively promoted (through ‘signaling molecules’, like ‘growth factors’ and ‘oncogenes’). The second SMT premise assumes that mammalian cells are in a resting state. Hence, motility should also be actively triggered (Varmus and Weinberg 1993).

From an evolutionary point of view, SMT premises are counterintuitive given that proliferation is the default state in both prokaryotes and unicellular eukaryotes (Luria 1975). Why would metazoan cells have changed their default state? No cogent rationale is advanced to explain this alleged change in strategy. Many experimental data support the hypothesis of proliferation as the default state of mammalian cells, including findings from hormone-dependent cancer cells (Sonnenschein et al. 1996), lymphocytes (Yusuf and Fruman 2003), hematopoietic cells (Passegué and Wagers 2006), and especially stem cells (Ying et al. 2008).

An analysis of the default state concept has recently been performed by Rosenfeld (2013), who acknowledges the relevance of the default state concept in biology while noting that “there has been no attempt in the literature to provide a more or less crisp definition” of this notion. Rosenfeld points out that any attempt to provide a compelling definition should be framed within the context to which the cell belongs. Given that “the phenotypic traits of individual cells are shaped by interactions within their respective communities”, the “default states of the cells freed from the restraints of tissue structure may not be identical, or even similar, to those that are densely packed and immobilized in tissue”. Consequently the search for a univocal definition of the default state “is elusive”, as the default state is “governed by some external layer of control or by supervisory authority”. In accordance with this perspective the ‘default state’ of a cell would change according to the context in which cells are positioned. Admittedly, many (external) constraints can effectively modify the proliferation status of a cell population. For instance, the usual milieu in which cells are cultured and studied (i.e., in vitro conditions) is an artificial one, and it can be inferred that the alleged mandatory requirement for ‘growth factors’ to sustain proliferation in vitro is a consequence of the above mentioned context (Sonnenschein and Soto 1999). But this argument is unrelated to the concept of default state, as this notion refers to “the state that needs not to be actively maintained” (Huang 2009). This definition establishes a conceptual equivalence between the default state and the physical concept of inertia: a cell does not modify its proliferative state until external forces (constraints) supervene to change it. This is precisely what happens when we are referring to mammalian cells located in their ‘natural’ environment, i.e. a tissue.
Indeed, experimental data show that proliferation is physiologically under the control of negative feedback regulators. The hypothesis of tissue control of proliferation (chalones) goes back to the 1950s, and it gained some acceptability in the 1990s (Elgjo and Reichelt 2004) with the discovery of myostatin and its role as a feedback controller of muscle growth (Lee and McPherron 1999). Since then, several other chalones have been identified in various tissues, many of which are claimed to be members of the TGFβ family (Gamer et al. 2003).
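The inertia-like reading of the default state, combined with negative feedback control, can be made concrete with a minimal numerical sketch. It is a toy model under stated assumptions, not a model proposed in the cited literature: cell number N follows dN/dt = r·N − k·N², where `r` is a hypothetical intrinsic proliferation rate that needs no stimulation and `k` is a hypothetical strength of tissue-level inhibitory feedback (a chalone-like constraint). All names and parameter values are illustrative.

```python
def simulate(r=0.5, k=0.0, n0=1.0, dt=0.01, steps=2000):
    """Forward-Euler integration of dN/dt = r*N - k*N**2.

    r  : intrinsic proliferation rate (default state, no stimulus required)
    k  : strength of inhibitory feedback from the tissue (hypothetical parameter)
    n0 : initial cell number (arbitrary units)
    Returns the cell number after steps*dt time units.
    """
    n = n0
    for _ in range(steps):
        n += dt * (r * n - k * n * n)
    return n

# No inhibitory constraint: proliferation continues unchecked.
unconstrained = simulate(k=0.0)

# With feedback inhibition the population settles near r/k = 100.
constrained = simulate(k=0.005)

print(unconstrained, constrained)
```

In this sketch quiescence is not a state that has to be switched off by a growth signal; it appears only when the inhibitory term balances the intrinsic tendency to proliferate. Setting `k` back to zero removes the constraint and growth resumes, which mirrors the interpretation of growth factors as agents that lift an inhibition rather than supply a stimulus.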

Compelling evidence showing that proliferation of estrogen-sensitive breast cancer cells is under negative control was provided in the 1980s when estradiol was shown to increase the proliferation rate of estradiol-sensitive cells by neutralizing a specific serum-borne inhibitor (Soto et al. 1986; Soule and McGrath 1980). Similar results have been obtained in several other tissues, including prostate cancer, hematopoietic cells, liver, leukemia (Sonnenschein et al. 1989, 1996; Mallucci and Wells 1998; Passegué and Wagers 2006; Yusuf and Fruman 2003; Wang et al. 2004; Lacorazza et al. 2006). Furthermore, cell proliferation has been called the “ground state” in the context of embryonic cells, because it is inherent to the system, and does not require stimulation (Wray et al. 2010; Ying et al. 2008).

Overall, this evidence prompted E. Parr (2012) to state that “a key difference between these models (TOFT vs. SMT) is that quiescence is postulated to be the default state of ‘normal cells’ in SMT, whereas proliferation is assumed as the default state of cells in TOFT”. Parr also mentioned that “it seems highly unlikely that complex, ligand-dependent signaling pathways emerged de novo as a requirement for growth”. Accordingly, mammals are “systems in which growth factors can be withheld to control growth. Thus, the quiescence of cultured metazoan cells in the absence of growth factors would not reflect a passive lack of growth stimulation but rather an active process of growth inhibition”. As a consequence, this conclusion “further suggests that gene products that appear to ‘promote’ growth actually act to reveal the cell’s innate tendency to grow.”

Similar considerations apply to motility. The default state of both prokaryotes and eukaryotes is motility. Why would cells in multicellular organisms escape this property? Indeed, embryonic cells, as well as somatic adult cells, display motility in different settings: development, connective adjustments, apoptotic processes and wound repair (Zajicek et al. 1985; Worbs and Förster 2009). Therefore, motility displayed by cancer cells represents only the recovery of an intrinsic cellular function.

5 Is SMT Facing a Crisis? The Need for a Paradigm Shift

Bedessem and Ruphy (2015) are reluctant to admit that SMT is experiencing an existential crisis. However, the unreliability of the premises adopted by SMT has been consistently criticized since the 1970s (Pierce et al. 1978; Coleman et al. 1993; Clark 1995; Wigle and Jurisica 2007).

Moreover, the SMT paradigm is perceived as inadequate by an increasing number of scientists (Barcellos-Hoff and Rafani 2000; Barclay et al. 2005; Arnold et al. 2002; Baker 2014). In fact, even Robert A. Weinberg, a long-term advocate of the gene-centric paradigm in cancer research, has recently acknowledged that the evidence expected to vindicate the explanations provided by SMT has been disappointing. Quoting him, “half a century of cancer research had generated an enormous body of observations […] but there were essentially no insights into how the disease begins and progresses” (Weinberg 2014). Yet, two years after this explicit admission of failure, the search for mutated oncogenes and/or tumor suppressor genes continues unabated. Weinberg added “…But even this (the gene-centric view) was an illusion, as only became apparent years later […] the identities of mutant cancer-causing genes varied dramatically from one type of tumor to the next […] Each tumor seemed to represent a unique experiment of nature”. Indeed, experimental data cast doubts on the role of gene mutations in cancer, “suggesting that mechanisms for cancer initiation are broader than is typically thought” (Weinberg 2014). This candid assessment by a thought-leader who sided with SMT for the last four decades favors discarding SMT and adopting a different model of explanation, thus generating an opportunity to explore alternatives that might lead to a genuine “paradigm shift” (Strohman 1997; Sonnenschein and Soto 2000; Baker 2015).

According to Kuhn (1962), a paradigm shift has several properties. The first one is incommensurability, where the scientists on either side of the paradigm have great difficulty in understanding the other’s point of view or reasons for adopting the premises of the competing side. Yet, speaking of ‘irreconcilability’ regarding the two competing theories (SMT and TOFT) might be a more appropriate characterization of the current situation. This irreconcilability depends on the radical divergence among the basic premises on which different paradigms rely. Copernican theory was irreconcilably different from the Ptolemaic one, given that the central place in the solar system was occupied by the Earth in the latter and the Sun in the former. It is obviously impossible to support these two opposing hypotheses at the same time by constraining them into a ‘unified’ cosmological model. By analogy, SMT and TOFT cannot be merged because the premises on which those frameworks rely are incompatible: the default state of the cell can be considered either quiescence (according to SMT) or proliferation (according to TOFT). The two default states cannot be operational at the same time. Thereby, according to Kuhn’s perspective, the two theories should be considered mutually irreconcilable.

The second property of a paradigm shift is the accumulation of contradictory results, where the current hegemonic paradigm ultimately generates a body of observations that not only fails to support that paradigm, but also points to obvious weaknesses in its method and theoretical outlook. Indeed, as previously seen, SMT cannot provide reliable explanations for troubling and contradictory results. Examples of such results include non-genotoxic carcinogenesis, the presence of mutated genes in normal tissues, the lack of mutations in a significant fraction of tumors, the genomic heterogeneity of cancer cells derived from the same tumor sample, and tumor reversion after exposure to embryonic or otherwise modified morphogenetic fields (Baker 2015).

The above-mentioned arguments solidify the conclusion that TOFT and SMT are in irreducible competition, recognizable at the experimental, epistemological and philosophical levels.

(a) Experimental. An increasing number of inconsistencies have accumulated within the SMT paradigm. In order to accommodate those contradictory results, concepts and results borrowed from experiments centered on cell-microenvironment models have been introduced with the aim of correcting SMT rather than overtly rejecting it (Bissell and Radisky 2001; Laconi 2007). Yet, even these attempts have failed to provide a rigorous explanation. It is then time to abandon the ‘oncogene paradigm’ and move on (Bizzarri et al. 2008; Sonnenschein and Soto 2000).

(b) Epistemological. Scientific theories need to be tested and, if falsified, discarded (Ayala 1968). Yet merging two distinct frameworks would result in an epistemological cul-de-sac, given that such an approach would impede the identification of either useless or useful data.

(c) Philosophical. From a philosophical point of view, SMT and TOFT conceive the causality principle in opposite ways. For SMT, causality resides only within the genome. TOFT, instead, posits that causality resides in non-linear dynamics involving several components and different levels of causality, spanning from the molecular to the tissue level. These differences between TOFT and SMT are indeed a specific case of the opposition existing between a ‘reductionist’ and an ‘organicist’ approach. Consequently, “the projection of the controversy on a metaphysical ground”, rather than being “specious and incoherent” as claimed by Bedessem and Ruphy (2015), becomes wholly justified.

Obviously, adhering to one of the paradigms does not imply that ‘raw’ data gathered by experimental studies based on the faulty one (in this case, SMT) must be discarded. Instead, those results can be re-interpreted according to the new paradigm, within which they are likely to acquire a ‘different meaning’ (Baker 2014). Yet, the chasm between the two theories cannot be bridged by following a strategy that insists on expanding the search for elusive ‘oncogenes’ and/or ‘regulatory pathways’ (Huang 2004). Addressing cancer complexity does not imply asking for more sophisticated mathematical models or futuristic technologies. Instead, as happened at the birth of thermodynamics, a more ‘coarse grain’ attitude may have a better chance of providing a reliable comprehension, by integrating observations at different levels and providing new insight regarding the principles by which biological organization is dynamically shaped (Bizzarri et al. 2013; Longo and Montévil 2014).

Finally, these two concurring factors—irreconcilability and accumulation of contradictory results—may explain why paradigm shifts encounter resistance to change from the old guard.

6 Conclusions

Whereas SMT faces irresolvable conundrums, TOFT is gaining momentum, as testified by the growing interest shown by scientists worldwide (Baker 2015; Cooper 2009). The main issue on the agenda, as repeatedly requested by Soto and Sonnenschein (2011), is to submit SMT to rigorous testing. There is, after all, an ethical issue embedded in the structure of science itself, one that is often ignored by the governmental and corporate structures that fund research. This issue includes the imperatives to seek evidence for disproving one’s hypothesis (Popper 2002), and to consider the whole, and not just selective evidence (Whitehead 1925). Meeting this challenge will require steady support with adequate resources, a realistic management of the hype that has surrounded the cancer field, and a humble attitude toward the years spent following false leads.

References

AACR Cancer Progress Report (2012) www.cancerprogressreport.org

Arnold JT, Lessey BA, Seppälä M, Kaufman DG (2002) Effect of normal endometrial stroma on growth and differentiation in Ishikawa endometrial adenocarcinoma cells. Cancer Res 62:79–88

Ayala FJ (1968) Biology as an autonomous science. Am Sci 56:207–221

Bailar JC 3rd, Gornik HL (1997) Cancer undefeated. N Engl J Med 336:1569–1574. doi:10.1056/NEJM199705293362206

Baker SG (2014) Recognizing paradigm instability in theories of carcinogenesis. Br J Med Med Res 4:1149–1163. doi:10.9734/BJMMR/2014/6855

Baker SG (2015) A cancer theory kerfuffle can lead to new lines of research. J Natl Cancer Inst 107(2):dju405. doi:10.1093/jnci/dju405

Baker SG, Kramer BS (2007) Paradoxes in carcinogenesis: new opportunities for research directions. BMC Cancer 7:151. doi:10.1186/1471-2407-7-151

Baker SG, Cappuccio A, Potter JD (2010) Research on early-stage carcinogenesis: are we approaching paradigm instability? J Clin Oncol 28:3215–3218. doi:10.1200/JCO.2010.28.5460

Barcellos-Hoff MH, Rafani SA (2000) Irradiated mammary gland stroma promotes the expression of tumorigenic potential by unirradiated epithelial cells. Cancer Res 60:1254–1260

Barclay WW, Woodruff RD, Hall MC, Cramer SD (2005) A system for studying epithelial-stromal interactions reveals distinct inductive abilities of stromal cells from benign prostatic hyperplasia and prostate cancer. Endocrinology 146:13–18. doi:10.1210/en.2004-1123

Baron U, Bujard H (2000) Tet repressor-based system for regulated gene expression in eukaryotic cells: principles and advances. Methods Enzymol 327:401–421. doi:10.1016/S0076-6879(00)27292-3

Bedessem B, Ruphy S (2015) SMT or TOFT? How the two main theories of carcinogenesis are made (artificially) incompatible. Acta Biotheor 63:257–267. doi:10.1007/s10441-015-9252-1

Bishop RC (2008) Downward causation in fluid convection. Synthese 160:229–248. doi:10.1007/s11229-006-9112-2

Bissell MJ, Radisky D (2001) Putting tumours in context. Nat Rev Cancer 1:46–54. doi:10.1038/35094059

Bizzarri M, Cucina A (2014) Tumor and the microenvironment: a chance to reframe the paradigm of carcinogenesis? Biomed Res Int 2014:934038. doi:10.1155/2014/934038

Bizzarri M, Giuliani A (2011) Representing cancer cell trajectories in a phase-space diagram: switching cellular states by biological phase transitions. In: Dehmer M, Emmert-Streib F, Graber A, Salvador A (eds) Applied statistics for network biology: methods in systems biology. Wiley-Blackwell, Hoboken, pp 377–403

Bizzarri M, Cucina A, Conti F, D’Anselmi F (2008) Beyond the oncogenic paradigm: understanding complexity in cancerogenesis. Acta Biotheor 56:173–196. doi:10.1007/s10441-008-9047-8

Bizzarri M, Cucina A, Biava PM, Proietti S, D’Anselmi F, Dinicola S, Pasqualato A, Lisi E (2011) Embryonic morphogenetic field induces phenotypic reversion in cancer cells. Review article. Curr Pharm Biotechnol 12:243–253. doi:10.2174/138920111794295701

Bizzarri M, Palombo A, Cucina A (2013) Theoretical aspects of systems biology. Prog Biophys Mol Biol 112:33–43. doi:10.1016/j.pbiomolbio.2013.03.019

Bizzarri M, Cucina A, Palombo A, Masiello MG (2014) Gravity sensing by cells: mechanisms and theoretical grounds. Rend Lincei-Sci Fis Nat 25:S29–S38. doi:10.1007/s12210-013-0281-x

Blackiston D, Adams DS, Lemire JM, Lobikin M, Levin M (2011) Transmembrane potential of GlyCl-expressing instructor cells induces a neoplastic-like conversion of melanocytes via a serotonergic pathway. Dis Model Mech 4:67–85. doi:10.1242/dmm.005561

Boveri T (1929) The origin of malignant tumors. Williams & Wilkins, Baltimore

Bravi B, Longo G (2015) The unconventionality of nature: biology, from noise to functional randomness. Springer, Berlin

Brigandt I, Love A (2015) Reductionism in biology. The Stanford encyclopedia of philosophy. http://plato.stanford.edu/archives/fall2015/entries/reduction-biology

Chanock SJ, Thomas G (2007) The devil is in the DNA. Nat Genet 39:283–284. doi:10.1038/ng0307-283

Chernet B, Levin M (2013) Endogenous Voltage Potentials and the Microenvironment: bioelectric signals that reveal, induce and normalize cancer. J Clin Exp Oncol Suppl 1:S1-002. doi:10.4172/2324-9110.S1-002

Clark WH Jr (1995) The nature of cancer: morphogenesis and progressive (self)-disorganization in neoplastic development and progression. Acta Oncol 34:3–21

Coleman WB, Wennerberg AE, Smith GJ, Grisham JW (1993) Regulation of the differentiation of diploid and some aneuploid rat liver epithelial (stem like) cells by the hepatic microenvironment. Am J Pathol 142:1373–1382

Cooper M (2009) Regenerative pathologies: stem cells, teratomas and theories of cancer. Med Stud 1:55–66. doi:10.1007/s12376-008-0002-4

Cornish-Bowden A (2011) Systems biology—How far has it come? Biochemist 33:16–18

D’Anselmi F, Valerio M, Cucina A, Galli L, Proietti S, Dinicola S, Pasqualato A, Manetti C, Ricci G, Giuliani A, Bizzarri M (2011) Metabolism and cell shape in cancer: a fractal analysis. Int J Biochem Cell Biol 43:1052–1058. doi:10.1016/j.biocel.2010.05.002

D’Anselmi F, Masiello MG, Cucina A, Proietti S, Dinicola S, Pasqualato A, Ricci G, Dobrowolny G, Catizone A, Palombo A, Bizzarri M (2013) Microenvironment promotes tumor cell reprogramming in human breast cancer cell lines. PLoS ONE 8:e83770. doi:10.1371/journal.pone.0083770

Descartes R, De la Forge L (1664) L’Homme et un traité de la formation du Fœtus. Charles Angot, Paris

Dinicola S, D’Anselmi F, Pasqualato A, Proietti S, Lisi E, Cucina A, Bizzarri M (2011) A systems biology approach to cancer: fractals, attractors, and nonlinear dynamics. OMICS 15:93–104. doi:10.1089/omi.2010.0091

Dokukin ME, Guz NV, Gaikwad RM, Woodworth CD, Sokolov I (2011) Cell surface as a fractal: normal and cancerous cervical cells demonstrate different fractal behavior of surface adhesion maps at the nanoscale. Phys Rev Lett 107:028101. doi:10.1103/PhysRevLett.107.028101

Dokukin ME, Guz NV, Woodworth CD, Sokolov I (2015) Emergence of fractal geometry on the surface of human cervical epithelial cells during progression towards cancer. New J Phys 17:033019. doi:10.1088/1367-2630/17/3/033019

Elgjo K, Reichelt KL (2004) Chalones: from aqueous extracts to oligopeptides. Cell Cycle 3:1208–1211

Erdö F, Bührle C, Blunk J, Hoehn M, Xia Y, Fleischmann B, Föcking M, Küstermann E, Kolossov E, Hescheler J, Hossmann KA, Trapp T (2003) Host-dependent tumorigenesis of embryonic stem cell transplantation in experimental stroke. J Cereb Blood Flow Metab 23:780–785. doi:10.1097/01.WCB.0000071886.63724.FB

Gamer LW, Nove J, Rosen V (2003) Return of the Chalones. Dev Cell 4:143–144. doi:10.1016/S1534-5807(03)00027-3

Garber JE, Offit K (2005) Hereditary cancer predisposition syndromes. J Clin Oncol 23:276–292. doi:10.1200/JCO.2005.10.042

Gilbert SF, Sarkar S (2000) Embracing complexity: organicism for the 21st century. Dev Dyn 219:1–9. doi:10.1002/1097-0177(2000)9999

Gilbert SF, Opitz J, Raff RA (1996) Resynthesizing evolutionary and developmental biology. Dev Biol 173:357–372. doi:10.1006/dbio.1996.0032

Greenman C, Stephens P, Smith R et al (2007) Patterns of somatic mutation in human cancer genomes. Nature 446:153–158. doi:10.1038/nature05610

Hanahan D (2014) Rethinking the war on cancer. Lancet 383:558–563. doi:10.1016/S0140-6736(13)62226-6

Hanahan D, Weinberg RA (2000) The hallmarks of cancer. Cell 100:57–70. doi:10.1016/S0092-8674(00)81683-9

Hendrix MJ, Seftor EA, Seftor RE, Kasemeier-Kulesa J, Kulesa PM, Postovit LM (2007) Reprogramming metastatic tumour cells with embryonic microenvironments. Nat Rev Cancer 7:246–255. doi:10.1038/nrc2108

Huang S (2004) Back to the biology in systems biology: what can we learn from biomolecular networks? Brief Funct Genomic Proteomic 2:279–297. doi:10.1093/bfgp/2.4.279

Huang S (2009) Reprogramming cell fates: reconciling rarity with robustness. BioEssays 31:546–560. doi:10.1002/bies.200800189

Huang S, Ingber D (2006–2007) A non-genetic basis for cancer progression and metastasis: self-organizing attractors in cell regulatory networks. Breast Dis 26:27–54

Imielinski M, Berger AH, Hammerman PS et al (2012) Mapping the hallmarks of lung adenocarcinoma with massively parallel sequencing. Cell 150:1107–1120. doi:10.1016/j.cell.2012.08.029

Jiang X, Saw KM, Eaves A, Eaves C (2007) Instability of BCR-ABL gene in primary and cultured chronic myeloid leukemia stem cells. J Natl Cancer Inst 99:680–693. doi:10.1093/jnci/djk150

Kamb A (2010) At a crossroads in oncology. Curr Opin Pharmacol 10:356–361. doi:10.1016/j.coph.2010.05.006

Kan Z, Jaiswal BS, Stinson J et al (2010) Diverse somatic mutation patterns and pathway alterations in human cancers. Nature 466:869–873. doi:10.1038/nature09208

Kenny PA, Bissell MJ (2003) Tumor reversion: correction of malignant behavior by microenvironmental cues. Int J Cancer 107:688–695. doi:10.1002/ijc.11491

Kitano H (2002) Systems biology: a brief overview. Science 295:1662–1664. doi:10.1126/science.1069492

Kondepudi DK, Prigogine I (1983) Sensitivity of non-equilibrium chemical systems to gravitational field. Adv Space Res 3:171–176. doi:10.1016/0273-1177(83)90242-9

Kuhn TS (1962) The structure of scientific revolutions. University of Chicago Press, Chicago

Kupiec JJ (1983) A probabilistic theory for cell differentiation, embryonic mortality and DNA C-value paradox. Specul Sci Technol 6:471–478

Kupiec JJ (1997) A Darwinian theory for the origin of cellular differentiation. Mol Gen Genet 255:201–208

Laconi E (2007) The evolving concept of tumor microenvironments. BioEssays 29:738–744. doi:10.1002/bies.20606

Lacorazza HD, Yamada T, Liu Y, Miyata Y, Sivina M, Nunes J, Nimer SD (2006) The transcription factor MEF/ELF4 regulates the quiescence of primitive hematopoietic cells. Cancer Cell 9:175–187. doi:10.1016/j.ccr.2006.02.017

Laforge B, Guez D, Martinez M, Kupiec JJ (2005) Modeling embryogenesis and cancer an approach based on an equilibrium between the autostabilization of stochastic gene expression and the interdependence of cells for proliferation. Prog Biophys Mol Biol 89:93–120. doi:10.1016/j.pbiomolbio.2004.11.004

Lawrence MS, Stojanov P, Polak P et al (2013) Mutational heterogeneity in cancer and the search for new cancer-associated genes. Nature 499:214–218. doi:10.1038/nature12213

Lee SJ, McPherron AC (1999) Myostatin and the control of skeletal muscle mass. Curr Opin Genet Dev 9:604–607. doi:10.1016/S0959-437X(99)00004-0

Lee LM, Seftor EA, Bonde G, Cornell RA, Hendrix MJ (2005) The fate of human malignant melanoma cells transplanted into zebrafish embryos: assessment of migration and cell division in the absence of tumor formation. Dev Dyn 233:1560–1570. doi:10.1002/dvdy.20471

Levin M (2012) Morphogenetic fields in embryogenesis, regeneration, and cancer: non-local control of complex patterning. Biosystems 109:243–261. doi:10.1016/j.biosystems.2012.04.005

Lichtenberg FR (2010) Has medical innovation reduced cancer mortality? www.nber.org/papers/w15880. doi:10.3386/w15880

Longo G, Montévil M (2014) Perspectives on Organisms: Biological time, Symmetries and Singularities. Springer, Berlin

Longo G, Miquel PA, Sonnenschein C, Soto AM (2012) Is information a proper observable for biological organization? Prog Biophys Mol Biol 109:108–114. doi:10.1016/j.pbiomolbio.2012.06.004

Lupski JR (2013) Genetics. Genome mosaicism–one human, multiple genomes. Science 341:358–359. doi:10.1126/science.1239503

Luria SE (1975) 36 lectures in biology. MIT Press, Cambridge

Mack SC, Witt H, Piro RM et al (2014) Epigenomic alterations define lethal CIMP-positive ependymomas of infancy. Nature 506:445–450. doi:10.1038/nature13108

Maffini MV, Soto AM, Calabro JM, Ucci AA, Sonnenschein C (2004) The stroma as a crucial target in rat mammary gland carcinogenesis. J Cell Sci 117:1495–1502. doi:10.1242/jcs.01000

Maffini MV, Calabro JM, Soto AM, Sonnenschein C (2005) Stromal regulation of neoplastic development: age-dependent normalization of neoplastic mammary cells by mammary stroma. Am J Pathol 167:1405–1410. doi:10.1016/S0002-9440(10)61227-8

Mallucci L, Wells V (1998) Negative control of cell proliferation. growth arrest versus apoptosis. Role of βGBP. J Theor Med 3:169–173. doi:10.1080/10273669808833017

Marcum JA (2010) Cancer: complexity, causation, and systems biology. Matière Première, Revue d’épistémologie 1:171

Marongiu F, Serra MP, Sini M, Angius F, Laconi E (2014) Clearance of senescent hepatocytes in a neoplastic-prone microenvironment delays the emergence of hepatocellular carcinoma. Aging (Albany NY) 6:26–34

Martincorena I, Roshan A, Gerstung M, Ellis P, Van Loo P, McLaren S, Wedge DC, Fullam A, Alexandrov LB, Tubio JM, Stebbings L, Menzies A, Widaa S, Stratton MR, Jones PH, Campbell PJ (2015) Tumor evolution. High burden and pervasive positive selection of somatic mutations in normal human skin. Science 348:880–886. doi:10.1126/science.aaa6806

Masiello MG, Cucina A, Proietti S, Palombo A, Coluccia P, D’Anselmi F, Dinicola S, Pasqualato A, Morini V, Bizzarri M (2014) Phenotypic switch induced by simulated microgravity on MDA-MB-231 breast cancer cells. Biomed Res Int 2014:652434. doi:10.1155/2014/652434

Mauron A (2002) Genome metaphysics. J Mol Biol 319:957–962. doi:10.1016/S0022-2836(02)00348-0

Mazzocchi F (2008) Complexity in biology. Exceeding the limits of reductionism and determinism using complexity theory. EMBO Rep 9:10–14. doi:10.1038/sj.embor.7401147

McCullough KD, Coleman WB, Smith GJ, Grisham JW (1997) Age-dependent induction of hepatic tumor regression by the tissue microenvironment after transplantation of neoplastically transformed rat liver epithelial cells into the liver. Cancer Res 57:1807–1813

Mintz B, Illmensee K (1975) Normal genetically mosaic mice produced from malignant teratocarcinomas cells. Proc Natl Acad Sci USA 72:3585–3589

Miquel P-A (2008) Biologie du XXIe siècle. Evolution des concepts fondateurs, De Boeck Supérieur éditeur

Müller GB, Newman SA (2003) Origination of Organismal Form. MIT Press, Cambridge

Nagel T (1998) Reductionism and anti-reductionism. In: Bock GR, Goode JA (eds) The limits of reductionism in biology. Novartis Foundation Symposium, vol 213. Wiley, New York, pp 3–10

Ness RB (2010) Fear of failure: why American science is not winning the war on cancer. Ann Epidemiol 20:89–91. doi:10.1016/j.annepidem.2009.12.001

Nicolis G, Prigogine I (1989) Exploring complexity. Freeman, New York

Noble D (2008a) Claude Bernard, the first systems biologist, and the future of physiology. Exp Physiol 93(1):16–26. doi:10.1113/expphysiol.2007.038695

Noble D (2008b) Genes and causation. Philos Trans A Math Phys Eng Sci 366:3001–3015. doi:10.1098/rsta.2008.0086

Pai VP, Lemire JM, Chen Y, Lin G, Levin M (2015) Local and long-range endogenous resting potential gradients antagonistically regulate apoptosis and proliferation in the embryonic CNS. Int J Dev Biol 59:327–340. doi:10.1387/ijdb.150197m

Parr E (2012) The default state of the cell: quiescence or proliferation? BioEssays 34:36–37. doi:10.1002/bies.201100138

Pasqualato A, Palombo A, Cucina A, Mariggiò MA, Galli L, Passaro D, Dinicola S, Proietti S, D’Anselmi F, Coluccia P, Bizzarri M (2012) Quantitative shape analysis of chemoresistant colon cancer cells: correlation between morphotype and phenotype. Exp Cell Res 318:835–846. doi:10.1016/j.yexcr.2012.01.022

Passegué E, Wagers AJ (2006) Regulating quiescence: new insights into hematopoietic stem cell biology. Dev Cell 10:415–417. doi:10.1016/j.devcel.2006.03.002

Pearl J (2000) Causality: models, reasoning, and inference. Cambridge University Press, Cambridge

Pellicano F, Mukherjee L, Holyoake TL (2014) Concise review: cancer cells escape from oncogene addiction: understanding the mechanisms behind treatment failure for more effective targeting. Stem Cells 32:1373–1379. doi:10.1002/stem.1678

Pierce GB, Wallace C (1971) Differentiation of malignant to benign cells. Cancer Res 31:127–134

Pierce GB, Shikes R, Fink LM (1978) Cancer: a problem of developmental biology. Prentice-Hall, Upper Saddle River

Pisanu ME, Noto A, De Vitis C, Masiello MG, Coluccia P, Proietti S, Giovagnoli MR, Ricci A, Giarnieri E, Cucina A, Ciliberto G, Bizzarri M, Mancini R (2014) Lung cancer stem cell lose their stemness default state after exposure to microgravity. Biomed Res Int 2014:470253. doi:10.1155/2014/470253

Popper K (2002) The logic of scientific discovery. Routledge, London

Potter JD (2001) Morphostats: a missing concept in cancer biology. Cancer Epidemiol Biomarkers Prev 10:161–170

Potter JD (2007) Morphogens, morphostats, microarchitecture and malignancy. Nat Rev Cancer 7:464–474. doi:10.1038/nrc2146

Prehn RT (2005) The role of mutation in the new cancer paradigm. Cancer Cell Int 5:9. doi:10.1186/1475-2867-5-9

Raphael BJ, Dobson JR, Oesper L, Vandin F (2014) Identifying driver mutations in sequenced cancer genomes: computational approaches to enable precision medicine. Genome Med 6:5. doi:10.1186/gm524

Rosenfeld S (2013) Are the somatic mutation and tissue organization field theories of carcinogenesis incompatible? Cancer Inform 12:221–229. doi:10.4137/CIN.S13013

Satgé D, Bénard J (2008) Carcinogenesis in Down syndrome: what can be learned from trisomy 21? Semin Cancer Biol 18:365–371. doi:10.1016/j.semcancer.2008.03.020

Schwartz L, Balosso J, Baillet F, Brun B, Amman JP, Sasco AJ (2002) Cancer: the role of extracellular disease. Med Hypotheses 58:340–346. doi:10.1054/mehy.2001.1539

Seymour CB, Mothersill C (2013) Breast cancer causes and treatment: where are we going wrong? Breast Cancer (Dove Med Press) 5:111–119. doi:10.2147/BCTT.S44399

Shapiro JA (2009) Revisiting the central dogma in the 21st century. Ann N Y Acad Sci 1178:6–28. doi:10.1111/j.1749-6632.2009.04990.x

Smythies J (2015) Intercellular signaling in cancer—the SMT and TOFT hypotheses, exosomes, telocytes and metastases: is the messenger in the message? J Cancer 6:604–609. doi:10.7150/jca.12372

Sonnenschein C, Soto AM (1999) The society of cells: cancer and control of cell proliferation. Springer, New York

Sonnenschein C, Soto AM (2000) Somatic mutation theory of carcinogenesis: why it should be dropped and replaced. Mol Carcinog 29:205–211. doi:10.1002/1098-2744(200012)29:4<205:AID-MC1002>3.0.CO;2-W

Sonnenschein C, Olea N, Pasanen ME, Soto AM (1989) Negative controls of cell proliferation: human prostate cancer cells and androgens. Cancer Res 49:3474–3481

Sonnenschein C, Soto AM, Michaelson CL (1996) Human serum albumin shares the properties of estrocolyone-I, the inhibitor of the proliferation of estrogen-target cells. J Steroid Biochem Mol Biol 59:147–154. doi:10.1016/S0960-0760(96)00112-4

Sonnenschein C, Davis B, Soto AM (2014a) A novel pathogenic classification of cancers. Cancer Cell Int 14:113. doi:10.1186/s12935-014-0113-9

Sonnenschein C, Soto AM, Rangarajan A, Kulkarni P (2014b) Competing views on cancer. J Biosci 39:281–302. doi:10.1007/s12038-013-9403-y

Soto AM, Sonnenschein C (2011) The tissue organization field theory of cancer: a testable replacement for the somatic mutation theory. BioEssays 33:332–340. doi:10.1002/bies.201100025

Soto AM, Murai JT, Siiteri PK, Sonnenschein C (1986) Control of Cell Proliferation: evidence for negative control on Estrogen-sensitive T47D human breast cancer cells. Cancer Res 46:2271–2275

Soto AM, Sonnenschein C, Miquel PA (2008a) On physicalism and downward causation in developmental and cancer biology. Acta Biotheor 56:257–274. doi:10.1007/s10441-008-9052-y

Soto AM, Maffini MV, Sonnenschein C (2008b) Neoplasia as development gone awry: the role of endocrine disruptors. Int J Androl 31:288–293. doi:10.1111/j.1365-2605.2007.00834.x

Soule HD, McGrath CM (1980) Estrogen responsive proliferation of clonal human breast carcinoma cells in athymic mice. Cancer Lett 10:177–189

Stanford Encyclopedia of Philosophy (2015) http://plato.stanford.edu/entries/reduction-biology/

Strohman RC (1997) The coming Kuhnian revolution in biology. Nat Biotechnol 15:194–200. doi:10.1038/nbt0397-194

Strohman R (2002) Maneuvering in the complex path from genotype to phenotype. Science 296:701–703. doi:10.1126/science.1070534

Tarin D (2011) Cell and tissue interaction in carcinogenesis and metastasis and their clinical significance. Semin Cancer Biol 21:72–82. doi:10.1016/j.semcancer.2010.12.006

Taylor IW, Linding R, Warde-Farley D, Liu Y, Pesquita C, Faria D, Bull S, Pawson T, Morris Q, Wrana JL (2009) Dynamic modularity in protein interaction networks predicts breast cancer outcome. Nat Biotechnol 27:199–204. doi:10.1038/nbt.1522

Testa F, Palombo A, Dinicola S, D’Anselmi F, Proietti S, Pasqualato A, Masiello MG, Coluccia P, Cucina A, Bizzarri M (2014) Fractal analysis of shape changes in murine osteoblasts cultured under simulated microgravity. Rend Lincei-Sci Fis Nat 25:S39–S47. doi:10.1007/s12210-014-0291-3

Tosenberger A, Bessonov N, Levin M, Reinberg N, Volpert V, Morozova N (2015) A conceptual model of morphogenesis and regeneration. Acta Biotheor 63:283–294. doi:10.1007/s10441-015-9249-9

Triolo VA (1964) Nineteenth century foundations of cancer research origins of experimental research. Cancer Res 24:4–27

Tsuchiya M, Piras V, Choi S, Akira S, Tomita M, Giuliani A, Selvarajoo K (2009) Emergent genome-wide control in wildtype and genetically mutated lipopolysaccarides-stimulated macrophages. PLoS ONE 4:e4905. doi:10.1371/journal.pone.0004905

Van Dyke T, Jacks T (2002) Cancer modeling in the modern era: progress and challenges. Cell 108:135–144. doi:10.1016/S0092-8674(02)00621-9

Vandin F, Upfal E, Raphael BJ (2012) De novo discovery of mutated driver pathways in cancer. Genome Res 22:375–385. doi:10.1101/gr.120477.111

Varmus H, Weinberg RA (1993) Genes and the biology of cancer. Scientific American Library, New York

Versteeg R (2014) Cancer: tumours outside the mutation box. Nature 506:438–439. doi:10.1038/nature13061

Vineis P, Schatzkin A, Potter JD (2010) Models of carcinogenesis: an overview. Carcinogenesis 31:1703–1709. doi:10.1093/carcin/bgq087

Wang GL, Iakova P, Wilde M, Awad S, Timchenko NA (2004) Liver tumors escape negative control of proliferation via PI3K/Akt-mediated block of C/EBPα growth inhibitory activity. Genes Dev 18:912–925. doi:10.1101/gad.1183304

Washington C, Dalbègue F, Abreo F, Taubenberger JK, Lichy JH (2000) Loss of heterozygosity in fibrocystic change of the breast: genetic relationship between benign proliferative lesions and associated carcinomas. Am J Pathol 157:323–329. doi:10.1016/S0002-9440(10)64543-9

Weatherall DJ (2001) Phenotype-genotype relationships in monogenic disease: lessons from the thalassaemias. Nat Rev Genet 2:245–255. doi:10.1038/35066048

Weinberg RA (1998) One renegade cell: how cancer begins. Basic Books, New York

Weinberg RA (2014) Coming full circle-from endless complexity to simplicity and back again. Cell 157:267–271. doi:10.1016/j.cell.2014.03.004

Weiss P (1939) Principles of development: a text in experimental embryology. Henry Holt, New York

Wheatley D (2014) Cancer research: quo vadis—to war? Ecancermedicalscience 8:ed45. doi:10.3332/ecancer.2014.ed45

Whitehead AN (1925) Science and the modern world. Macmillan Company, New York

Wigle D, Jurisica I (2007) Cancer as a system failure. Cancer Inform 5:10–18

Willhauck MJ, Mirancea N, Vosseler S (2007) Reversion of tumor phenotype in surface transplants of skin SCC cells by scaffold-induced stroma modulation. Carcinogenesis 28:595–610. doi:10.1093/carcin/bgl188

Wolkenhauer O, Green S (2013) The search for organizing principles as a cure against reductionism in systems medicine. FEBS J 280:5938–5948. doi:10.1111/febs.12311

Worbs T, Förster R (2009) T cell migration dynamics within lymph nodes during steady state: an overview of extracellular and intracellular factors influencing the basal intranodal T cell motility. Curr Top Microbiol Immunol 334:71–105. doi:10.1007/978-3-540-93864-4_4

Wray J, Kalkan T, Smith AG (2010) The ground state of pluripotency. Biochem Soc Trans 38:1027–1032. doi:10.1042/BST0381027

Yamanishi Y, Boyle DL, Rosengren S, Green DR, Zvaifler NJ, Firestein GS (2002) Regional analysis of p53 mutations in rheumatoid arthritis synovium. Proc Natl Acad Sci USA 99:10025–10030. doi:10.1073/pnas.152333199

Ying QL, Wray J, Nichols J, Batlle-Morera L, Doble B, Woodgett J, Cohen P, Smith A (2008) The ground state of embryonic stem cell self-renewal. Nature 453:519–523. doi:10.1038/nature06968

Yusuf I, Fruman DA (2003) Regulation of quiescence in lymphocytes. Trends Immunol 24:380–386. doi:10.1016/S1471-4906(03)00141-8

Zajicek G, Oren R, Weinreb M (1985) The streaming liver. Liver 5:293–300

Zhang L, Zhou W, Velculescu VE, Kern SE, Hruban RH, Hamilton SR, Vogelstein B, Kinzler KW (1997) Gene expression profiles in normal and cancer cells. Science 276:1268–1272. doi:10.1126/science.276.5316.1268

Bizzarri, M., Cucina, A. SMT and TOFT: Why and How They are Opposite and Incompatible Paradigms. Acta Biotheor 64, 221–239 (2016). https://doi.org/10.1007/s10441-016-9281-4
