Abstract

We use a feature-based association model to fit group-level and individual-level category learning and transfer data. The model assumes that people use corrective feedback to learn the correlation between each individual feature and the categorization criterion, and that they combine those correlations additively to produce classifications. The model is an Adaptive Linear Filter (ALF) with a logistic output function and the Least Mean Squares learning algorithm; categorization probabilities are computed by the logistic function. Our data comprise 31 published data sets. At both the grouped and the individual levels of analysis, the model performs remarkably well, accounting for a large proportion of the variance. When fitted to grouped data, it outperforms alternative models. When fitted to individual-level data, it captures learning and transfer performance with high explained variance. Notably, the model achieves these fits with very few free parameters. We discuss the ALF’s advantages as a model of procedural categorization in terms of its simplicity, its ability to capture empirical trends, and its ability to address challenges to other associative models. In particular, we discuss why the model is not equivalent to a prototype model, as previously thought.

Introduction

Categorization is critical for the survival of organisms, be they worms or human beings. Category learning is the process of building a memory trace that allows perceived entities to be assigned to groups (e.g., edible or non-edible, happy or sad) (Murphy 2002). Learning to categorize is rarely an end in itself; categorization supports a range of other cognitive processes. By categorizing a previously unknown entity, one can infer its unobserved features (e.g., whether an unknown animal is likely to have a heart), predict its future behavior (e.g., whether an unknown fruit is likely to produce nausea when ingested), and combine and communicate knowledge (e.g., calling an unknown animal a striped horse) (Markman and Ross 2003).

Theories of the cognitive mechanisms that underlie the ability to learn and use categorical knowledge can be classified into two main types. Categories may be learned with the involvement of an explicit system that tests hypotheses and depends on executive functions (e.g., learning to classify an infection as being of bacterial origin by noting that high fever is a common symptom of bacterial infections), or with the involvement of an implicit processing system (Ashby et al. 1998; Ashby and Maddox 2005; Maddox and Ashby 2004; Worthy et al. 2013).

Regarding implicit processing, two alternatives have been proposed. The first is the view that people learn to categorize by creating an internal representation of the category and then classify old and new entities by computing their similarity to the stored category representation. In prototype models, the category representation stored in memory is an average of the category members, and new entities are classified according to their similarity to that average representation (Reed 1972; Smith and Minda 1999). In exemplar models, an individual memory trace is assumed to be stored for every experienced category exemplar, and new entities are classified according to their similarity to all stored traces (Medin and Schaffer 1978; Nosofsky 1986).

A second view of implicit category learning appeals to an associative mechanism rather than to similarity. An associative theory holds that people learn categories by forming associations directly between the individual features that describe stimuli and the category label, and later combine those association strengths to decide whether or not to respond to new entities with the same category label. Adaptive Linear Filters (ALFs) instantiate the assumptions of the associative theory and have been proposed as models of procedural categorization (Amari 1977; Clapper and Bower 2002; Gluck and Bower 1988). In the current work, we propose an ALF with a logistic output function and the Widrow and Hoff (1960) Least Mean Squares (LMS) learning algorithm as a model of procedural category learning and generalization.

ALFs have in their favor the extreme simplicity of the underlying cognitive machinery: they adjust feature-to-category association weights according to a feedback signal and combine them additively to reach a decision. However, many researchers have favored similarity-based over associative accounts, largely because of influential criticisms by Robert Nosofsky, who has argued that ALFs are equivalent to prototype models (i.e., both additively combine a set of weighted features and compare the sum with a threshold) and thus inherit some of their known limitations (Nosofsky et al. 1992; Nosofsky 1992). ALFs have also been criticized as too simple to have sufficient explanatory power for some category learning phenomena (Kruschke and Johansen 1999).

In contrast to those criticisms, the current work provides evidence that the ALF model can fit category learning and generalization data extremely well, at both the grouped and the individual level, and in two well-known category learning procedures: the 5–4 category learning task (Medin and Schaffer 1978) and the prototype distortion task (Posner and Keele 1968; Reed 1972). We also discuss why the model is equivalent to a prototype model only under specific assumptions, which we argue are unrealistic. In what follows, we describe the model, present two sets of modeling results showing the model’s potential to account for human data, and discuss the model’s theoretical contribution and potential uses.

The model

Consistent with the idea that the computations necessary for procedural category learning may be implemented in subcortical areas such as the basal ganglia (Ashby and Ennis 2006), most category learning procedures can be viewed as requiring participants to provide one of two alternative responses (e.g., category A or category B), which are reinforced according to how features are combined. During training, rather than creating a similarity space in which instances are represented (Nosofsky 1984; 1986; 2011), the model assumes that people update a coefficient for each discrete feature (f1, f2, f3, …, fk) so that classification errors relative to feedback are minimized. These coefficients are formally the Least Mean Squares (LMS) correlation coefficients relating each feature to the experimenter-defined correct response for each exemplar experienced during training (i.e., the classification criterion). Widrow and Hoff (1960; see also Widrow and Kamenetsky 2003) showed that a simple algorithm (Eq. 1) can converge asymptotically to the LMS correlation coefficients relating each feature to the classification criterion. These correlation coefficients represent association strengths and, being correlation coefficients, are naturally bounded in the −1 to +1 range. Gluck and Bower (1988) reported data consistent with people’s error-correcting mechanism converging toward an LMS solution, such that the best possible classification performance is approached. Given sufficient training, the coefficients relating features to categories should reflect the LMS correlations between the predictors (f1, f2, f3, …, fk) and the categories (A, B). Eq. (1) gives the LMS learning algorithm:

$$w_{j+1} = w_j + n\,(d - r)\,x \tag{1}$$

where $w_{j+1}$ is the weight for feature $f_i$ after adjustment for performance on the preceding trial, $w_j$ is the weight for that same feature on the preceding trial, $n$ is a learning rate (constant across trials), $d$ is the desired response on the preceding trial (i.e., the one given by experimenter-defined feedback), $r$ is the actual response the subject gave to the exemplar presented on the preceding trial, and $x$ is the state of feature $f_i$ on the preceding trial.
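A minimal Python sketch of one application of Eq. (1) follows. It assumes that d and r are coded 1 for category A and 0 for category B, so that (d – r) is 1, 0 or −1, and that feature states are +1 or −1; the function name `lms_update` is hypothetical.

```python
def lms_update(weights, features, desired, response, rate):
    """Apply Eq. (1) to every feature: w_{j+1} = w_j + n * (d - r) * x.

    weights  : current weights w_j, one per feature
    features : feature states x on the preceding trial (+1 or -1)
    desired  : feedback d (assumed coding: 1 = category A, 0 = category B)
    response : response r, coded the same way as d
    rate     : learning rate n, constant across trials
    """
    error = desired - response  # 0 after a correct response, +1 or -1 after an error
    return [w + rate * error * x for w, x in zip(weights, features)]


# A miss (d = 1, r = 0) moves each weight toward that feature's state.
print(lms_update([0.0, 0.0, 0.0, 0.0], [1, -1, 1, -1], desired=1, response=0, rate=0.25))
# [0.25, -0.25, 0.25, -0.25]

# A correct response (d = r) leaves the weights unchanged.
print(lms_update([0.5, -0.5], [1, 1], desired=1, response=1, rate=0.25))
# [0.5, -0.5]
```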

As a result of learning, subjects’ responses will be a function of a linear combination of feature states and their corresponding weights, as shown in the parenthetical term in Eq. (2). That linear combination is deterministic. As discussed in Gluck and Bower (1988), a simple model that relates the coefficients to categorization probabilities is the logistic probability function in Eq. (2):

$$p(A) = \frac{1}{1 + e^{-c\left(w_0 + \sum_{i=1}^{k} w_i f_i\right)}} \tag{2}$$

where the w weights are the asymptotic approximations of the correlation coefficients, the f’s are the features that describe exemplars, each in a discrete state of 1 or −1, and c is a parameter that allows different sensitivities with which the linear term is transformed into a categorization probability (i.e., the slope of the sigmoid function). As an anonymous reviewer pointed out to us, c regulates the steepness and width of the window in which the logistic curve rises quickly from 0 to 1.
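The following sketch evaluates Eq. (2), assuming it has the standard logistic form p(A) = 1/(1 + exp(−c(w0 + Σ wi fi))) with features coded +1/−1. It shows two properties discussed in the text: zero weights give p(A) = 0.5, and a larger c pushes the same linear term toward a more extreme probability. The function name is hypothetical.

```python
import math


def p_category_a(weights, features, c=1.0, w0=0.0):
    """Eq. (2), assumed logistic form: p(A) = 1 / (1 + exp(-c * (w0 + sum_i w_i f_i)))."""
    linear = w0 + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-c * linear))


# With all weights at zero (and w0 = 0), p(A) = p(B) = 0.5: no learning yet.
print(p_category_a([0.0, 0.0], [1, -1]))  # 0.5

# A larger sensitivity c maps the same linear term (here 1.0) onto a steeper curve.
print(round(p_category_a([0.5, 0.5], [1, 1], c=1.0), 3),
      round(p_category_a([0.5, 0.5], [1, 1], c=3.0), 3))  # 0.731 0.953
```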

Importantly, the precision with which the algorithm in Eq. (1) can converge to the LMS correlation coefficients linking each feature with the categorization criterion depends on the (d – r) error term and on the size of the learning rate. If responses and feedback were provided on a quasi-continuous scale (e.g., the model predicts p(A) = 0.62 and feedback signals p(A) = 0.70), the precision of the approximation would depend only on the learning rate (which would need to be suitably small to achieve a desired level of precision). In that case, the model would be equivalent to a prototype model (as argued in Nosofsky 1992).

However, people in a category learning experiment receive a discrete feedback signal (e.g., “incorrect answer” or “the correct answer is category B”). Because responses and feedback are typically discrete, the (d – r) term can take only the values 0, 1 or −1. Thus, the direction of change in the coefficients depends on the (d – r) term, and the size of the change is limited by the learning rate. If the feedback signal is discrete, individual subjects’ coefficients will converge to values that may differ from the true LMS correlation coefficients. Furthermore, because the algorithm stops changing the coefficients once it stops making errors (i.e., when d = r), the model may settle not on optimal coefficients (i.e., precisely the LMS correlation coefficients) but on coefficients that are merely sufficient to solve the problem. For these reasons, discrete feedback allows the ALF model to behave qualitatively differently from a prototype model. We use this idea of discrete feedback when modeling category learning in Sect. 3.
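The sketch below illustrates this point with a hypothetical deterministic learner trained on the 5–4 training items. It assumes the binary coding of those items as commonly tabulated (e.g., by Smith and Minda 2000), features mapped to +1/−1, w0 = 0, a fixed presentation order, and a response rule of our own choosing (respond A only when the linear term is positive). Under these assumptions, learning stops after an error-free pass with weights in the ratio 3:1:3:1, whereas the Phi coefficients computed from the same coding are 0.55, 0.10, 0.55 and 0.35. Items B6 and B7 end exactly on the decision boundary, which suffices for error-free responding under this rule but is not the LMS solution.

```python
# Assumed binary codes of the nine 5-4 training items (as commonly tabulated,
# e.g., Smith and Minda 2000); category 1 = A, 0 = B. Check against Table 1.
TRAINING = {
    "A1": ("1110", 1), "A2": ("1010", 1), "A3": ("1011", 1),
    "A4": ("1101", 1), "A5": ("0111", 1),
    "B6": ("1100", 0), "B7": ("0110", 0), "B8": ("0001", 0), "B9": ("0000", 0),
}


def code(bits):
    """Map a binary string to +1 / -1 feature states."""
    return [1 if b == "1" else -1 for b in bits]


def respond(w, x):
    """Hypothetical deterministic learner: A (1) if the linear term is positive, else B (0)."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0


def train_discrete(rate=0.25, max_epochs=1000):
    """Apply Eq. (1) with discrete responses, in a fixed order, until a pass with no errors.

    rate = 0.25 is chosen only so that the arithmetic is exact in binary floating point.
    """
    w = [0.0, 0.0, 0.0, 0.0]
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for bits, d in TRAINING.values():
            x = code(bits)
            r = respond(w, x)
            if r != d:  # (d - r) is +1 or -1 only after an error
                errors += 1
                w = [wi + rate * (d - r) * xi for wi, xi in zip(w, x)]
        if errors == 0:  # d == r on every item, so the weights stop changing
            return w, epoch
    return w, max_epochs


weights, epochs = train_discrete()
print(weights, epochs)  # [0.75, 0.25, 0.75, 0.25] 3
```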

Importantly, including the discrete feedback condition to derive model predictions makes the model more closely reflect real experimental conditions. In a real experiment, responses are discrete (e.g., A or B), there is a limited number of learning trials, learning needs to be only sufficiently close to a solution, and most importantly, the feedback signal is discrete.

The sensitivity parameter c in Eq. (2) produces a sharper or a more gradual separation in the model’s predicted probabilities. As discussed in Smith and Minda (2000), greater sensitivity can be attributed to a series of factors: fluency, priming, association strength, familiarity, memory, and explicit recall. An increase in any of them implies an increase in sensitivity. We remain agnostic about which of these factors corresponds to parameter c. Our only claim regarding this parameter is that training increases sensitivity for the trained exemplars, and the c parameter can be used to model this. Finally, note that in all our computations using Eq. (2), w0 = 0, so that if all other coefficients in Eq. (2) equal zero, p(A) = p(B) = 0.5; that is, no learning has taken place and subjects are responding at random.

Modeling classification judgments with the logistic response output function: group level data

Because the data used in this section pertain only to classification and not to category learning, we use only Eq. (2) for modeling. In Sect. 3, we use both equations combined in the full ALF model. To provide evidence for Eq. (2), we used data from 30 experiments that employed the 5–4 task (Medin and Schaffer 1978). Starting with Medin and Schaffer’s work, many studies have used the 5–4 task, in part because it makes contrasting predictions for prototype and exemplar theories (Blair and Homa 2003; Minda and Smith 2002; Smith and Minda 2000). Because prototype and exemplar theories can be expressed mathematically, the general strategy in many of these studies is to fit the models to participants’ responses by adjusting the models’ parameters to achieve the best possible fit. An important parameter of those models is the attentional weight assigned to the features that characterize the trained categories (Kruschke 1992; Nosofsky 1986). Because instances in the 5–4 task are characterized by four binary features, the similarity-based models used in Smith and Minda (2000) involve at least four free parameters. Other parameters may be added to reflect participants’ sensitivity (e.g., the stretching of the similarity space; Nosofsky 1986; 1989; Cohen et al. 2001), their use of the response scale (Ashby and Maddox 1993; Nosofsky and Zaki 2002), and guessing (Minda and Smith 2002).

In Smith and Minda (2000), data from 30 studies using the 5–4 task were summarized (see Smith and Minda 2000, Appendix Fig. 2 A). As shown in Table 1, exemplars in the 5–4 task are characterized by four binary features (f1, f2, f3, f4). The 5–4 task is set up such that the LMS coefficients implied by the task can be computed directly from the structure of the training set (i.e., the first nine exemplars in Table 1), without recourse to subject data. Specifically, a correlation coefficient can be computed directly from the codings in Table 1. For a contingency table such as the one shown in Fig. 1, the Phi correlation coefficient (Eq. 3) is the slope that minimizes the mean squared error (for standardized variables). The contingency table in Fig. 1 corresponds to feature 1; analogous tables can be set up for the other three features. Using the Phi coefficient, a weight can be computed for each of the four features, as shown in Table 2. The task’s structure alone provides this information.

Values a, b, c and d are frequencies with which the different binary features (f1, f2, f3, f4) provide evidence for category A or B. In this example, the numbers correspond to frequencies for feature f1 in Table 1. Analogous contingency tables can be set up for the other three features.

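Equation (3) gives the LMS correlation coefficient for categorical data such as those shown in Table 1 and Fig. 1:

$$\phi = \frac{ad - bc}{\sqrt{(a + b)(c + d)(a + c)(b + d)}} \tag{3}$$

where a, b, c and d are the frequencies shown in Fig. 1, here yielding the LMS coefficient for f1. Note that c in Eq. (3) denotes a cell frequency, not the sensitivity parameter c of Eq. (2).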
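The sketch below computes Eq. (3) for each feature of the 5–4 training items. It assumes the commonly tabulated binary coding of those items (to be checked against Table 1) and the cell layout a = feature value 1 in category A, b = value 1 in B, c = value 0 in A, d = value 0 in B. Under these assumptions it gives 0.55, 0.10, 0.55 and 0.35; the helper names are hypothetical.

```python
import math


def phi(a, b, c, d):
    """Eq. (3). Here c is a cell frequency, not the sensitivity parameter of Eq. (2).

    Assumed layout: a = value 1 & category A, b = value 1 & category B,
    c = value 0 & category A, d = value 0 & category B.
    """
    return (a * d - b * c) / math.sqrt((a + b) * (c + d) * (a + c) * (b + d))


# Assumed binary codes of the 5-4 training items (check against Table 1).
TRAINING = [("1110", "A"), ("1010", "A"), ("1011", "A"), ("1101", "A"),
            ("0111", "A"), ("1100", "B"), ("0110", "B"), ("0001", "B"),
            ("0000", "B")]


def cell(items, i, value, category):
    """Number of items whose feature i equals value and that belong to category."""
    return sum(1 for bits, cat in items if bits[i] == value and cat == category)


def feature_weights(items):
    """One Phi coefficient per feature, each from that feature's 2 x 2 table."""
    return [phi(cell(items, i, "1", "A"), cell(items, i, "1", "B"),
                cell(items, i, "0", "A"), cell(items, i, "0", "B"))
            for i in range(len(items[0][0]))]


print([round(w, 2) for w in feature_weights(TRAINING)])  # [0.55, 0.1, 0.55, 0.35]
```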

In contrast to other modeling efforts discussed in Smith and Minda (2000), which involve multiple parameters, the only parameter allowed to vary freely in our model was c. As Table 2 shows, the w weights relating each feature (f1, f2, f3, f4) to categorization probabilities through Eq. (2) can be computed with a simple Phi coefficient. By inserting these values as the w coefficients, the probability of any feature combination at test can be predicted (see Table 1).
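As a sketch of this step, the code below inserts Phi weights into Eq. (2) to predict p(A) for the seven transfer items. The weights (0.55, 0.10, 0.55, 0.35) and item codes follow the commonly tabulated 5–4 coding and should be checked against Tables 1 and 2; c = 1.0 is only a placeholder, and Eq. (2) is assumed to be the standard logistic with w0 = 0.

```python
import math

# Phi weights for f1..f4 computed from the assumed 5-4 coding (compare Table 2).
WEIGHTS = [0.55, 0.10, 0.55, 0.35]

# Assumed binary codes of the seven transfer items (check against Table 1).
TRANSFER = {"T10": "1001", "T11": "1000", "T12": "1111", "T13": "0010",
            "T14": "0101", "T15": "0011", "T16": "0100"}


def p_a(bits, c):
    """Eq. (2) with w0 = 0; bit 1 -> feature state +1, bit 0 -> feature state -1."""
    linear = sum(w * (1 if b == "1" else -1) for w, b in zip(WEIGHTS, bits))
    return 1.0 / (1.0 + math.exp(-c * linear))


for name, bits in TRANSFER.items():
    print(name, round(p_a(bits, c=1.0), 3))
```

With these assumed weights, T12 (which matches the category A prototype on every feature) receives the highest p(A) and T16 the lowest.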

We estimated two different values for parameter c, so our model effectively has only two free parameters: one for “old” items (trained exemplars A1 through B9) and one for “new” items (T10 through T16). We made this decision because, as discussed in Smith and Minda (2000), part of the exemplar model’s advantage in fitting 5–4 data is that it allows a parameter representing memory strength, which is always set higher for training than for transfer items (Nosofsky 2011). As already discussed, the c parameter can represent factors that work precisely by increasing sensitivity to “old” more than to “new” items. This is the same logic used by Smith and Minda (2000) in their “Twin sensitivity” model (see Table 3).

Using the GRG (Generalized Reduced Gradient) algorithm to minimize the mean squared error between empirical and predicted percent correct classifications, we found c = 2.21 for “old” exemplars (i.e., those used in training) and c = 1.14 for “new” exemplars (i.e., those not used during training). As expected, the estimated value was higher for “old” than for “new” exemplars. Note that these values of c are deterministically estimated point estimates; we therefore cannot currently perform statistical significance tests on them.
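Because the “old” and “new” c values act on disjoint sets of items, each can be fitted separately. The sketch below does so by minimizing mean squared error over p(A). Two assumptions apply. First, a golden-section search stands in for the GRG algorithm reported above. Second, the “observed” values are synthetic, generated from the model with c = 2.21 and c = 1.14 rather than taken from the Smith and Minda (2000) averages, so the search should simply recover those inputs. This checks the procedure, not the fit. Item codes and weights are the assumed 5–4 coding used earlier.

```python
import math

WEIGHTS = [0.55, 0.10, 0.55, 0.35]  # Phi weights from the assumed 5-4 coding
OLD = ["1110", "1010", "1011", "1101", "0111", "1100", "0110", "0001", "0000"]
NEW = ["1001", "1000", "1111", "0010", "0101", "0011", "0100"]


def p_a(bits, c):
    """Eq. (2) with w0 = 0 and features coded +1 (bit 1) / -1 (bit 0)."""
    linear = sum(w * (1 if b == "1" else -1) for w, b in zip(WEIGHTS, bits))
    return 1.0 / (1.0 + math.exp(-c * linear))


def mse(c, items, observed):
    """Mean squared error over p(A). For B items, p(correct) = 1 - p(A),
    so the squared error is the same as on the percent-correct scale."""
    return sum((p_a(b, c) - o) ** 2 for b, o in zip(items, observed)) / len(items)


def golden_section(f, lo, hi, tol=1e-8):
    """Minimize a unimodal function f on [lo, hi] (a stand-in for GRG)."""
    inv_phi = (math.sqrt(5) - 1) / 2
    x1, x2 = hi - inv_phi * (hi - lo), lo + inv_phi * (hi - lo)
    f1, f2 = f(x1), f(x2)
    while hi - lo > tol:
        if f1 < f2:  # minimum lies in [lo, x2]
            hi, x2, f2 = x2, x1, f1
            x1 = hi - inv_phi * (hi - lo)
            f1 = f(x1)
        else:        # minimum lies in [x1, hi]
            lo, x1, f1 = x1, x2, f2
            x2 = lo + inv_phi * (hi - lo)
            f2 = f(x2)
    return (lo + hi) / 2


# Synthetic "observed" p(A) generated from the model itself (NOT real data).
obs_old = [p_a(b, 2.21) for b in OLD]
obs_new = [p_a(b, 1.14) for b in NEW]

c_old = golden_section(lambda c: mse(c, OLD, obs_old), 0.01, 10.0)
c_new = golden_section(lambda c: mse(c, NEW, obs_new), 0.01, 10.0)
print(round(c_old, 2), round(c_new, 2))  # 2.21 1.14
```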

Fits for each of the 30 categorization experiments summarized in Smith and Minda (2000) range from R2 = 0.35 to R2 = 0.98, with an average R2 = 0.84 (a summary of the fits can be found in Supplementary Material SM Table 1). Figure 2 shows the overall fit for probabilities averaged across the 30 experiments. When categorization probabilities for “old” training and “new” transfer items are averaged across the 30 experiments, thus effectively controlling for differences in procedures, the explained variance achieved by the adaptive model is 0.96. Table 3 compares the current model’s fit with those of all models considered in Smith and Minda (2000). The current model fits nominally better than any of the other models in Table 3. The most conservative interpretation of this fact is that the current model has at least as much explanatory power (in terms of explained variance) as competing models while avoiding the luxury of many free parameters.

The current model’s fit to the average categorization probabilities reported in Smith and Minda (2000). Instances are those shown in Table 1. A = category A; B = category B; T = transfer items. Dashed line = model predictions. Solid line = data. Error bars are 95% CIs for the mean probability across the 30 experiments.

Limitations and grouped data conclusion

From the analyses above, we conclude that an associative model of category learning can account for performance in the 5–4 task better than the similarity-based models traditionally thought to capture the cognitive processes involved in this task. Not only is ours a simpler theoretical account (i.e., it does not require the similarity construct), it is also a more parsimonious model. There is a consensus that simpler models with fewer free parameters are preferred for explaining cognitive phenomena (see Farrell and Lewandowsky 2018). Although our model could also be compared with other categorization models (e.g., ALCOVE, Kruschke 1992; the Set of Rules Model, SRM, Johansen and Kruschke 2005), this is beyond the scope of this study.

We believe the most important shortcoming of our results so far is that they apply to grouped data. Because averaging across subjects removes individual differences, the ALF model may not be representative of any individual subject (cf. Ashby et al. 1994). Put differently, being a simple model, it may lack the means to capture individual differences. To address this limitation, we applied the full model to individual-level data and used it to make predictions for individual subjects, and for item-level and condition-level performance during transfer, as described next.

Modeling category learning and classification: individual level data

To model individual-level data during learning and classification, we used a dataset reported in Bowman and Zeithamova (2020). The data are publicly available from the original authors. Bowman and Zeithamova trained subjects (n = 163) with stimuli varying along 10 binary dimensions. For each subject, one stimulus was chosen at random to serve as the prototype of category A, and the stimulus that shared no features with that prototype served as the category B prototype (a procedure similar to a prototype distortion task design; Posner and Keele 1968; Reed 1972). Stimuli belonged to the category with which they shared more features, and category membership did not depend on any particular feature combination (i.e., subjects were trained with polymorphous concepts; Hampton 1979).

Six conditions were constructed, in which exemplars shared on average 6, 6.7, 7, 7.2, 7.5, or 8 features with their corresponding prototypes (i.e., the condition’s coherence), and each training exemplar differed from all other training items by at least two features. In all conditions, training sets were built so that all individual features were equally predictive of category membership (i.e., all features were equally correlated with the classification criterion). Bowman and Zeithamova also manipulated the number of exemplars in the training set (5, 6, 7, 8 or 10 training items) as an additional independent variable, but they did not find that training set size had any impact on their results. We include set size in our condition labels only to make comparisons with the Bowman and Zeithamova (2020) analyses easier for the reader. Thus, for example, condition 5–6 denotes that 5 exemplars were used during training and that exemplars shared on average 6 features with their category prototype. The same labeling convention applies to the other five conditions.

Participants were trained with corrective feedback for a total of 8 training blocks. In addition to the training items, the transfer phase included 42 new exemplars, on which subjects were tested without feedback. Importantly for our goals in the current work, the Bowman and Zeithamova dataset contains individual-level performance (i.e., hits by trial and percent correct by block) during training and transfer.

To model these data, we used the full ALF model with a sigmoid output function and the LMS learning algorithm. Our modeling strategy was to use each participant’s individual learning experience (i.e., trial-by-trial hits and misses) to train the LMS algorithm in Eq. (1), allowing it to converge to individualized feature coefficients (as described shortly), and then to use those coefficients as inputs to Eq. (2) to predict performance during transfer. Thus, predictions were made for each participant separately (with only two free parameters per subject), and the individual estimates were then combined to compute fits. This contrasts with our modeling in Sect. 2, where data were averaged across individuals and then modeled.

Describing performance in the Bowman and Zeithamova (2020) dataset

During training, average performance in the 6 conditions varied with coherence (see SM Fig. 1, which shows average performance by training block). Essentially, the more coherent the training set, the easier it was to learn the corresponding categories. Consistent with this, harder conditions (i.e., those with lower coherence) produced more participants with chance or below-chance performance in the 8th (last) training block. We did not include these non-learners in our modeling, because participants performing at chance are probably better modeled by a random choice model than by any more complex model (see Bowman and Zeithamova 2020, Fig. 1A, where the same argument is offered). In other words, non-learners can be considered participants who failed to perform the task, and they were therefore excluded from the analyses.

Also consistent with ease of learning, easier conditions produced more participants with perfect performance (i.e., 100% correct in the 8th training block). Perfect learners ranged from 0 to 24% of the participants in each of the six conditions and amounted to only 9% of the 163 participants in the Bowman and Zeithamova study (a summary is given in SM Table 2). The main reason for excluding these perfect performers from our modeling was that, to fit their performance data, the algorithm in Eq. (1) had to converge toward extremely high coefficient values that violated the assumption that coefficients are correlation values ranging from −1 to 1, as explained shortly. Excluding non-learners and perfect classifiers, our modeling was carried out with 129 (79%) of the original 163 participants.

Modeling category learning with the LMS learning algorithm

For each subject in each condition, we modeled training performance as follows. Our goal was to obtain the individualized feature w coefficients to which Eq. (1) converged, which reflected category learning and could be used as inputs to Eq. (2) to predict performance in the transfer categorization phase. To this end, we implemented Eq. (1) and used trial-by-trial responses and desired outcomes (r and d in Eq. (1), respectively) to train the algorithm to reflect individual performance. Using subject data continues our strategy of modeling discrete feedback, and for modeling purposes feature coefficients were not constrained to remain in the −1 to 1 range. An alternative strategy would have been to renormalize the coefficients, forcing them to remain in the desired range. We did not adopt this strategy because we wanted to test the model in its simplest form.

For each participant, all coefficients were initialized to 0, meaning that classification was at chance level at the start of training (we assumed no prior knowledge). Also for each subject, the learning rate (n) was allowed to vary freely, and this was our only free parameter during training. Parameter c in Eq. (2) was held constant at c = 1 during learning. This is likely unrealistic, because people should become increasingly sensitive as learning progresses. However, keeping c constant avoided redundant parameters, which would have led the fitting algorithm to multiple solutions (i.e., learning could be represented by changes in the feature coefficients but also by an increase in the sensitivity parameter). Our modeling criterion here was that redundant parameters should be reduced to a single parameter.
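A minimal sketch of this replay step follows, under stated assumptions. Features are coded +1/−1, d and r are coded 1 for category A and 0 for category B, c = 1, and w0 = 0. The predicted probability of a correct response on each trial is computed with the weights held before that trial’s update, which is our reading of the procedure. Block means correspond to the predicted percent correct per block described below. The trial sequence and function names are hypothetical.

```python
import math


def replay_subject(trials, rate, c=1.0):
    """Replay one subject's training trials through Eq. (1).

    trials : list of (features, d, r); features are +1/-1, and d (feedback) and
             r (the subject's response) are 1 for category A, 0 for category B.
    Returns the final weights and, for each trial, the Eq. (2) probability of
    the correct response computed with the weights held before that trial.
    """
    w = [0.0] * len(trials[0][0])  # seeded at 0: chance performance, no prior knowledge
    p_correct = []
    for x, d, r in trials:
        linear = sum(wi * xi for wi, xi in zip(w, x))  # w0 = 0
        p_a = 1.0 / (1.0 + math.exp(-c * linear))      # Eq. (2), c held fixed
        p_correct.append(p_a if d == 1 else 1.0 - p_a)
        w = [wi + rate * (d - r) * xi for wi, xi in zip(w, x)]  # Eq. (1)
    return w, p_correct


def block_means(values, block_size):
    """Predicted percent correct per block: the mean predicted p(correct)."""
    return [sum(values[i:i + block_size]) / len(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


# Hypothetical subject with two blocks of two trials (1 = category A, 0 = B).
trials = [([1, 1, -1], 1, 0), ([-1, 1, 1], 0, 1),
          ([1, -1, -1], 1, 1), ([-1, -1, 1], 0, 0)]
w, p = replay_subject(trials, rate=0.5)
print(w)                                         # [1.0, 0.0, -1.0]
print([round(m, 3) for m in block_means(p, 2)])  # [0.561, 0.881]
```

Only the two errors in the first block change the weights; the correct responses in the second block (d = r) leave them untouched.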

The n parameter was allowed to converge to the value that minimized the squared error between predicted and empirical percent correct in training block 8. An alternative modeling strategy would have been to find the n that minimized the sum of squared errors across blocks 1 through 8. However, this alternative fails to take into account the temporal aspect of training, because it makes all blocks equally relevant when computing errors. Recall that we wanted to obtain the individualized feature coefficients to which Eq. (1) converged, to be used as inputs to Eq. (2). Had all 8 training blocks been taken into account, these coefficient estimates would have been influenced by noise present mainly in the initial blocks, leading to over- or undershooting of the predicted performance in the last block. To illustrate, imagine a training phase with 3 blocks in which percent correct was 50% in the first block, 87% in the second, and 63% in the third. Under these conditions, the fitting procedure is bound to overshoot its prediction for the last block, because the model will also try to fit the second block. The same overshooting and undershooting problem would affect the feature coefficient estimates. In contrast, using performance on block 8 to fix the parameter yields a learning rate that increments performance at a constant rate, starting from complete ignorance (as Fig. 3 illustrates).

Within each block, predicted percent correct was calculated as the average predicted probability of a correct response across all trials in that block. Notably, although we imposed no restrictions on the values toward which coefficients could converge (i.e., they were free to grow as large as necessary to achieve a fit), feature coefficients converged to values between −1 and 1 for all participants except those with perfect or near-perfect performance in block 8. This is precisely why perfect performers were not included. It is not that Eqs. (1) and (2) could not handle those cases; the problem is theoretical. Under discrete feedback, accounting for perfect performance leads the model to extremely high feature coefficients that violate the assumption that association coefficients are correlation coefficients bounded in the −1 to 1 range (i.e., coefficients grew much larger than +1). The alternative strategy of renormalizing coefficients to keep them within the desired range might have eliminated this problem, though somewhat artificially.
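The sketch below fits n to the last block only. It assumes the same codings as before, c = 1 and w0 = 0, and substitutes a grid search for the unspecified optimizer. The trial sequence is hypothetical: a short first block and a five-trial last block with 4 of 5 responses correct, so the observed value is 0.8. For this toy sequence, the predicted last-block score works out to (4σ(4n) + 0.5)/5, where σ is the logistic function, so the best grid value is n = 0.486. Because the weights start at zero and every update is a multiple of n, the linear term on each training trial is n times a quantity fixed by the subject’s responses. Under these assumptions, n and c therefore enter only through their product, which is consistent with fixing c = 1 during learning.

```python
import math


def replay(trials, rate, c=1.0):
    """Drive Eq. (1) with the subject's own d and r; return the Eq. (2)
    probability of a correct response on each trial, computed before that
    trial's update (weights start at 0, w0 = 0)."""
    w = [0.0] * len(trials[0][0])
    p_correct = []
    for x, d, r in trials:
        p_a = 1.0 / (1.0 + math.exp(-c * sum(wi * xi for wi, xi in zip(w, x))))
        p_correct.append(p_a if d == 1 else 1.0 - p_a)
        w = [wi + rate * (d - r) * xi for wi, xi in zip(w, x)]
    return p_correct


def fit_rate(blocks, grid=None):
    """Grid-search the learning rate n that minimizes the squared error between
    predicted and observed percent correct in the last block only."""
    trials = [t for block in blocks for t in block]
    last = blocks[-1]
    observed = sum(d == r for _, d, r in last) / len(last)

    def squared_error(n):
        predicted = sum(replay(trials, n)[-len(last):]) / len(last)
        return (predicted - observed) ** 2

    grid = grid or [i / 1000 for i in range(1, 1001)]
    return min(grid, key=squared_error)


# Hypothetical subject: (features, d, r), with 1 = category A and 0 = category B.
blocks = [
    [([1, 1, -1], 1, 0), ([-1, 1, 1], 0, 1)],
    [([1, -1, -1], 1, 1), ([-1, -1, 1], 0, 0), ([1, -1, -1], 1, 1),
     ([-1, -1, 1], 0, 0), ([1, 1, 1], 1, 0)],
]
print(fit_rate(blocks))  # 0.486
```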

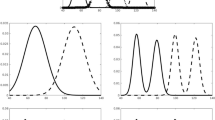

Figure 3 shows percent correct by training block as predicted by the model versus the empirically obtained values. Predicted percent correct values were almost always within the 95% CIs computed from subject data. The deviations in the first two blocks of the easy conditions (i.e., conditions 5–8 and 8–7.5) reflect the substantial learning that occurred within those blocks. Because our modeling used the same learning rate across all learning blocks and trials for a given subject, this fast initial learning could not be captured. This problem does not arise in the hard and medium-difficulty conditions, where learning is much more gradual.

In Fig. 4, we plotted, for each training block, the average prediction error (predicted minus observed) across participants for each of the six conditions. Negative values indicate that predicted performance was lower than observed performance. Interestingly, errors become centered around zero at about the fourth block. This is noteworthy because we used only performance in the last block as the fitting criterion and did not minimize errors across all eight blocks (which would have forced errors to be as small as possible for each training block). That the model nonetheless captures performance from the fourth block onward suggests that our assumption of incremental learning is sound.

Average prediction errors (predicted minus observed) for each condition in the Bowman and Zeithamova (2020) dataset. Negative values indicate that predicted performance was lower than empirical performance. Error bars are standard errors.

Modeling classification judgments with the ALF model with logistic response function

During transfer, participants in the Bowman and Zeithamova (2020) study classified 42 new exemplars (i.e., not previously seen during training). To test the current model, we computed, for each participant, the predicted probability of a hit on each transfer trial (i.e., the predicted probability of whichever response was correct on that trial). To this end, we took the individualized coefficients to which Eq. (1) converged during training and entered them directly into Eq. (2) to obtain the predicted probability of a correct answer on each transfer trial for each participant. The predicted percent correct for transfer was simply the average of these predictions. The sensitivity parameter c was adjusted individually for each of the 129 participants. More information on individual fits can be found in the Appendix (Figs. 1A and 2A), which shows, for each of the six conditions, a sample of individual fits selected to illustrate one bad fit and one good fit.
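The sketch below mirrors this step for one participant. It assumes that c is chosen to match the participant’s observed transfer percent correct (our reading; the text does not name the fitting criterion) and uses a grid search as a stand-in optimizer. The weights, items and “observed” score are hypothetical, with the score generated from the model at c = 1.5, so the search should return that value.

```python
import math


def mean_p_correct(weights, items, c):
    """Average Eq. (2) probability of the correct response over transfer items.

    items : (features, category) pairs; features are +1/-1, category 1 = A, 0 = B.
    """
    total = 0.0
    for x, cat in items:
        linear = sum(w * xi for w, xi in zip(weights, x))  # w0 = 0
        p_a = 1.0 / (1.0 + math.exp(-c * linear))
        total += p_a if cat == 1 else 1.0 - p_a
    return total / len(items)


def fit_c(weights, items, observed, grid=None):
    """Grid search for the participant's sensitivity c (hypothetical helper)."""
    grid = grid or [i / 100 for i in range(1, 1001)]
    return min(grid, key=lambda c: (mean_p_correct(weights, items, c) - observed) ** 2)


weights = [0.4, 0.3, -0.2]  # hypothetical weights to which Eq. (1) converged
transfer = [([1, 1, -1], 1), ([1, -1, -1], 1), ([-1, -1, 1], 0), ([-1, 1, 1], 0)]
target = mean_p_correct(weights, transfer, 1.5)  # synthetic "observed" score
print(fit_c(weights, transfer, target))  # 1.5
```

Because these hypothetical weights classify every transfer item correctly, mean p(correct) increases monotonically with c, so the fitted value is unique.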

To perform grouped analyses of the transfer phase, we averaged the individually fitted c parameters across all participants in each comparison. Because exemplars in the Bowman and Zeithamova dataset are organized according to their “distances” to the category prototype, we used those groupings to perform comparisons. During transfer, several trials included equivalent exemplars, that is, exemplars at the same distance: distance = 0 means that all of an exemplar’s features were the same as in the category prototype, distance = 1 means that one feature differed from the prototype, …, and distance = 4 means that four features differed from the prototype. We also made comparisons for each of the coherence conditions. Thus, for each group of equivalent exemplars, we computed the predicted mean probability of a hit and compared it with the empirical probability at that distance across participants in each condition.
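The grouping by distance can be sketched as below, assuming that each transfer item is compared with the prototype of its own category and that distance is the number of mismatching binary features. The function names are hypothetical.

```python
from collections import defaultdict


def distance_to_prototype(features, prototype):
    """Number of features that differ from the category prototype."""
    return sum(f != p for f, p in zip(features, prototype))


def mean_by_distance(items, hit_probs, prototype):
    """Mean predicted hit probability for each group of equivalent exemplars."""
    groups = defaultdict(list)
    for features, p in zip(items, hit_probs):
        groups[distance_to_prototype(features, prototype)].append(p)
    # Sorted by distance so the result reads 0, 1, 2, ...
    return {d: sum(ps) / len(ps) for d, ps in sorted(groups.items())}
```

The same function applied to empirical hit proportions gives the observed values per distance, so predicted and empirical means can be compared distance by distance within each condition.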

Regarding the “distance” manipulation, Fig. 5 shows that the model fit extremely well in every condition except the one that produced the worst training performance (i.e., condition 5–6). Recall that condition 5–6 produced performance closest to chance. For the other five conditions, predicted hit probabilities at different distances consistently fall inside the 95% CIs of the empirical percent correct data. In relative terms (i.e., correlations), the model also performs notably well across distances. Again with the exception of condition 5–6, for which the correlation was not significant (r = -0.081, R2 = 0.006, p = 0.90), the Pearson correlation between predicted and empirical percent correct is extremely high for all conditions (condition 6–6.7, r = 0.984, R2 = 0.968, p = 0.002; condition 10–7, r = 0.962, R2 = 0.926, p = 0.009; condition 7–7.2, r = 0.997, R2 = 0.994, p < 0.001; condition 8–7.5, r = 0.987, R2 = 0.973, p = 0.002; condition 5–8, r = 0.952, R2 = 0.905, p = 0.013). (Where necessary, we report values to three decimal places to avoid confusion due to rounding, e.g., reporting 0.997 as 1.0.) Finally, even in terms of absolute errors (i.e., the difference between empirical and predicted percent correct regardless of sign), the model achieves impressive fits (see SM Table 3). Apart from coherence condition 5–6, errors are always lower than 10% on the probability scale and are as low as 0.3%. It is worth stressing that these fits are achieved with only two free parameters per participant: the learning rate (n) for the learning phase and c for the transfer phase.
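The two kinds of fit statistic reported above (Pearson r, with R2 = r², and mean absolute error) can be computed as in the following sketch. The inputs would be predicted and empirical percent correct at each distance for one condition; the function names are illustrative, and p-values (which require the t distribution) are not computed here.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between predicted and empirical values."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def mean_absolute_error(predicted, observed):
    """Average unsigned difference on the probability scale."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)
```

Because r is insensitive to a constant offset between predictions and data, a high r alone does not rule out systematic bias; reporting absolute errors alongside it addresses that gap.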

We also compared model predictions across conditions. To this end, we computed the mean hit probability per participant in each condition and compared it with model predictions. As Fig. 6 shows, predicted and empirical CIs always overlapped, and predicted percent correct values were always inside the empirical 95% CIs (excluding condition 5–6). As in our previous analyses, we believe this relative lack of fit for condition 5–6 is explained by participants’ classification in that condition being closer to random. When all conditions except condition 5–6 are considered, predicted mean percent correct closely tracks actual transfer performance across conditions (r = 0.996, R2 = 0.93, p < 0.001; see Fig. 6).

General discussion

Our results suggest that the ALF model can account for associative procedural learning, and that this type of learning may underlie performance in a wide variety of category learning tasks. The experiments we reanalyzed included many participants and experimental variations. Interestingly, both tasks used in the current work (i.e., the 5–4 task used in our group level fits and the Bowman and Zeithamova prototype distortion task used in our individual level fits) have been thought to call for implicit similarity-based processing (Bozoki et al. 2006; Kéri et al. 2001; Reed et al. 1999) and also have the potential to be solved via explicit memory systems (Blair and Homa 2003; Wills et al. 2020). This implies that we did not select tasks that are, from a theoretical point of view, biased towards producing associative processing (Little and Thulborn 2006; Zeithamova et al. 2008) or that are too similar to the information integration tasks thought to be solved implicitly (Ashby et al. 2003); nonetheless, our model performs remarkably well. Our work suggests that models of associative mechanisms may have greater potential for explaining human category learning than previously thought.

Several aspects of the fits are worth highlighting. First, the high explained variances in our grouped and individual level analyses indicate that there is little room for improvement (in terms of unexplained variance). This poses a challenge to alternative models (prototype, exemplar, or models that add explicit memory systems), particularly given the low number of free parameters used in our modeling. For grouped results, the ALF model outperformed other models traditionally used in the literature. In the individual level analyses, the model’s predictions closely followed participants’ performance. However, we did not compare the model with alternative models at this level of analysis. Work in our laboratory is currently oriented towards this goal.

Model limitations

Our results also expose some model limitations. The ALF model has difficulty accounting for behavior close to chance (e.g., in Bowman and Zeithamova’s condition 5–6). However, this condition might prove challenging for any model, as Bowman and Zeithamova (2020) acknowledge. The ALF model with a discrete feedback signal, at least as currently implemented, also has difficulty explaining perfect performance. To fit such data, the model needs to violate assumptions regarding the meaning of the linear coefficients (i.e., the association weights). Imposing greater restrictions on the model (e.g., renormalizing the w coefficients so that they remain in the −1 to 1 range) might help solve this problem. This is not unlike the restriction imposed on attentional weights in similarity-based models, where all attentional weights are constrained to sum to one (Farrell and Lewandowsky 2018). However, such a modeling decision raises the question of whether the gain in explanatory power outweighs the loss of parsimony. We hope to answer this question in future work.
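One possible way to impose the restriction on the w coefficients mentioned above is sketched below, next to the sum-to-one constraint on attentional weights it is compared with. Dividing by the largest absolute weight is our assumption, not a procedure the authors report; it would be applied after each learning update, and the function names are hypothetical.

```python
def renormalize_to_unit_range(weights):
    """Hypothetical restriction: rescale weights so that each lies in [-1, 1].

    Dividing by the largest absolute weight keeps the sign and the relative
    size of every association; weights already inside the range are unchanged.
    """
    largest = max(abs(w) for w in weights)
    if largest <= 1.0:
        return list(weights)
    return [w / largest for w in weights]


def normalize_to_sum_one(attention):
    """Analogous constraint on attentional weights in similarity-based models."""
    total = sum(attention)
    return [a / total for a in attention]
```

Because the rescaling preserves the ratios between weights, the response ordering is unchanged; what it removes is the unbounded growth of the weighted sum that would otherwise be needed to push predicted probabilities toward 1.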

Regarding the poorer fits for conditions that produced fast learning (i.e., a rapid increase in performance during the first blocks of the easy conditions), note that this is not a model limitation; rather, it reflects our inability to measure, with the desired precision, changes occurring within an individual learning block. Theoretically, fast learning can be explained by implicit associative processing. Factors such as priming (prior learning can facilitate subsequent learning), number of features (stimuli with fewer features are easier to learn) and salience (salient stimuli might aid learning) could all be brought to bear to explain fast learning. These factors are not considered in the current model and could be a fruitful line for future development.

A related concern is whether our model might be overfitting the data. This is clearly a potential problem in Sect. 2, where we fit the model to averaged data. However, our main conclusion in Sect. 2 is only relative to other models: the ALF model achieves better fits than other models, and does so with fewer free parameters. Furthermore, our decision to perform individual subject fits in Sect. 3 was partly intended to ease concerns about overfitting. In Sect. 3, our individual level fits were achieved with a minimal number of free parameters, which suggests that overfitting should not be a problem. Because the ratio of free parameters to data points is very low, we believe underfitting was more likely than overfitting. Recall that for training we estimated only the learning rate and used it to fit percent correct in the 8 training blocks, and for transfer we estimated only the c sensitivity parameter and used it to fit 5 “distances”.

Future work and relation to other models

The ALF model discussed here can be adapted to study category learning in multiple tasks. Essentially, any task that uses binary features can be effect coded (as shown in Table 1). Association weights can be computed directly from task structure (as shown in Table 2) or estimated from individual participants’ learning performance (as described in Sect. 3.2). Using Eq. (2) with the coded features and their corresponding coefficients, classification probabilities can be predicted and the model can be fitted. Furthermore, computational modeling with the ALF need not be limited to binary features. By discretizing continuous features into n levels (where n > 2), the same procedures described here can be applied straightforwardly, the only limitation being the increase in the total number of training exemplars needed to cover all possible feature combinations (Ashby and Valentin 2018).
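Table 1 illustrates effect coding for binary features. The sketch below shows that step together with one possible way of handling continuous features: equal-width discretization into n levels followed by effect (deviation) coding with n − 1 columns. The binning scheme and the multi-level coding are assumptions for illustration, not procedures reported by the authors, and the function names are hypothetical.

```python
def effect_code_binary(values):
    """Map binary features (0/1) to effect codes (-1/+1)."""
    return [1 if v == 1 else -1 for v in values]


def discretize(value, low, high, n_levels):
    """Equal-width binning of a continuous value into levels 0..n_levels-1."""
    if value >= high:
        return n_levels - 1
    width = (high - low) / n_levels
    # Values below `low` fall into the first level.
    return max(0, int((value - low) // width))


def effect_code_level(level, n_levels):
    """Effect (deviation) coding of one n-level feature into n_levels - 1 columns.

    The last level is the reference and is coded -1 in every column.
    """
    if level == n_levels - 1:
        return [-1] * (n_levels - 1)
    return [1 if j == level else 0 for j in range(n_levels - 1)]
```

Each continuous feature then contributes n − 1 coded inputs (and weights) instead of one, and the number of distinct exemplars grows as nᵏ for k features, which is the training-set cost noted above.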

The model we report here can be implemented as a neural net: a single layer neural net (i.e., a perceptron) with a logistic output function and connection weights that are updated via feedback. This description makes it easier to relate the model to other models.
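The single layer net described above can be sketched as follows, under stated assumptions: weights start at zero and are updated trial by trial with the Widrow-Hoff (LMS) delta rule computed on the linear output, using the category label (+1/−1) as corrective feedback, and responses are generated by an assumed logistic form of Eq. (2) with sensitivity c. The exact form of Eq. (1) in the paper may differ, and all names are hypothetical.

```python
import math
import random


def train_alf(items, rate, n_blocks, seed=0):
    """Train a single-layer net with the Widrow-Hoff (LMS) delta rule.

    items: list of (features, label) pairs with effect-coded features and a
    label of +1 or -1 that serves as the corrective feedback (target).
    Returns the weights after n_blocks passes through the training items.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(items[0][0])
    for _ in range(n_blocks):
        order = items[:]
        rng.shuffle(order)  # random trial order within each block
        for features, target in order:
            output = sum(w * x for w, x in zip(weights, features))
            error = target - output  # feedback minus linear output
            weights = [w + rate * error * x for w, x in zip(weights, features)]
    return weights


def logistic_response(weights, features, c):
    """Assumed Eq. (2): probability of the +1 category response."""
    activation = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-c * activation))
```

For example, training on four effect-coded exemplars whose category is determined by the first two features drives those two weights toward equal positive values, while the weight of an uninformative third feature stays near zero.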

As in the current model, Ashby et al. (1998) assume in COVIS that procedural category learning is performed by linear integration (i.e., the parenthetical term in Eq. 2) in a perceptron neural net. Despite that similarity, our work extends work on COVIS in at least two ways. First, Ashby et al. (1998) do not make precise assumptions about learning mechanisms, whereas the current work shows that the LMS algorithm (Widrow and Hoff 1960) can adequately model the convergence of feature association weights. Second, the COVIS procedural system is thought to account for tasks and stimuli that require information integration and are unlikely to be solved by explicit rules (e.g., discriminating between sets of Gabor patches described by continuous dimensions; Roeder and Ashby 2016). In contrast, we show here that stimuli that are not of the information integration type (i.e., stimuli with binary valued features) and that have been used to study explicit categorization (e.g., the 5–4 task and the prototype distortion task) can be modeled successfully with a simple procedural associationist model.

ALCOVE is another model implemented as a neural net (Kruschke 1992). However, unlike the current model, ALCOVE is an exemplar model related to the Generalized Context Model (Nosofsky 1986), in which the net’s hidden layer corresponds to the trained exemplars. Because our model is a single layer neural net, it is much simpler, not only structurally but also in the number of free parameters. Although ALCOVE’s theoretical foundations differ from those discussed in the current work, some of its mathematical formulations are the same. ALCOVE computes distances and generalization gradients (i.e., similarities, not association strengths) and obtains response probabilities from them, yet the learning rule it implements is Widrow and Hoff’s (1960) LMS algorithm (Kruschke 1992; Lee and Navarro 2002).

Conclusion

Our modeling shows that the ALF model, with a logistic output function and the LMS learning algorithm with discrete feedback, accounts extremely well for category learning and classification data in tasks that use stimuli characterized by binary features. At both the grouped and individual subject levels, the model achieves its fits with few free parameters. Notwithstanding its extreme simplicity, the model’s predictions closely track individual level performance during training and transfer. The model has sufficient explanatory power to account for item level and condition level performance. Compared to the other models discussed throughout this work, its simplicity is an advantage. Importantly, our work suggests that the field’s early rejection of associationist models of category learning may have been hasty.

Open science

Researchers wishing to use our modeling procedures or to download our data can download all of our materials from the OSF (https://osf.io/2r9ny/). We suggest carefully reading both ReadMe files, which contain detailed information on folders and files as well as a detailed step-by-step tutorial on how to implement the ALF model in both simulations.

References

Amari SI (1977) Neural theory of association and concept-formation. Biol Cybern 26:175–185. https://doi.org/10.1007/BF00365229

Ashby FG, Alfonso-Reese LA, Turken AU, Waldron EM (1998) A neuropsychological theory of multiple systems in category learning. Psychol Rev 105(3):442–481. https://doi.org/10.1037/0033-295X.105.3.442

Ashby FG, Ennis JM (2006) The role of the basal ganglia in category learning. Psychol Learn Motiv 46:1–36. https://doi.org/10.1016/S0079-7421(06)46001-1

Ashby FG, Ell SW, Waldron EM (2003) Procedural learning in perceptual categorization. Mem Cognit 31(7):1114–1125. https://doi.org/10.3758/BF03196132

Ashby FG, Maddox WT (1993) Relations between prototype, exemplar, and decision bound models of categorization. J Math Psychol 37(3):372–400. https://doi.org/10.1006/jmps.1993.1023

Ashby FG, Maddox WT (2005) Human category learning. Annu Rev Psychol 56(1):149–178. https://doi.org/10.1146/annurev.psych.56.091103.070217

Ashby FG, Maddox WT, Lee WW (1994) On the dangers of averaging across subjects when using multidimensional scaling or the similarity-choice model. Psychol Sci 5(3):144–151. https://doi.org/10.1111/j.1467-9280.1994.tb00651.x

Ashby FG, Valentin VV (2018) The categorization experiment: experimental design and data analysis. In: Wixted JT (ed) Stevens’ handbook of experimental psychology and cognitive neuroscience, 4th edn, vol 5: Methodology. Wiley, New York, pp 307–333

Blair M, Homa D (2003) As easy to memorize as they are to classify: the 5–4 categories and the category advantage. Mem Cognit 31(8):1293–1301. https://doi.org/10.3758/BF03195812

Bowman CR, Zeithamova D (2020) Training set coherence and set size effects on concept generalization and recognition. J Exp Psychol Learn Mem Cogn 46(8):1442–1464. https://doi.org/10.1037/xlm0000824

Bozoki A, Grossman M, Smith EE (2006) Can patients with Alzheimer’s disease learn a category implicitly? Neuropsychologia 44:816–827

Clapper JP, Bower GH (2002) Adaptive categorization in unsupervised learning. J Exp Psychol Learn Mem Cogn 28(5):908–923

Cohen AL, Nosofsky RM, Zaki SR (2001) Category variability, exemplar similarity, and perceptual classification. Mem Cognit 29(8):1165–1175

Farrell S, Lewandowsky S (2018) Computational modeling of cognition and behavior. Cambridge University Press, UK

Gluck MA, Bower GH (1988) From conditioning to category learning: an adaptive network model. J Exp Psychol Gen 117(3):227–247. https://doi.org/10.1037/0096-3445.117.3.227

Hampton JA (1979) Polymorphous concepts in semantic memory. J Verbal Learning Verbal Behav 18(4):441–461

Johansen MK, Kruschke JK (2005) Category representation for classification and feature inference. J Exp Psychol Learn Mem Cogn 31(6):1433–1458. https://doi.org/10.1037/0278-7393.31.6.1433

Kéri S, Kálmán J, Kelemen O, Benedek G, Janka Z (2001) Are Alzheimer’s disease patients able to learn visual prototypes? Neuropsychologia 39:1218–1223

Kruschke JK (1992) ALCOVE: an exemplar-based connectionist model of category learning. Psychol Rev 99(1):22–44. https://doi.org/10.1037/0033-295X.99.1.22

Kruschke JK, Johansen MK (1999) A model of probabilistic category learning. J Exp Psychol Learn Mem Cogn 25(5):1083–1119. https://doi.org/10.1037/0278-7393.25.5.1083

Lee MD, Navarro DJ (2002) Extending the ALCOVE model of category learning to featural stimulus domains. Psychon Bull Rev 9:43–58. https://doi.org/10.3758/BF03196256

Little DM, Thulborn KR (2006) Prototype-distortion category learning: a two-phase learning process across a distributed network. Brain Cogn 60(3):233–243. https://doi.org/10.1016/j.bandc.2005.06.004

Maddox WT, Ashby FG (2004) Dissociating explicit and procedural-learning based systems of perceptual category learning. Behav Proc 66(3):309–332. https://doi.org/10.1016/j.beproc.2004.03.011

Markman AB, Ross BH (2003) Category use and category learning. Psychol Bull 129(4):592–613. https://doi.org/10.1037/0033-2909.129.4.592

Medin DL, Schaffer MM (1978) Context theory of classification learning. Psychol Rev 85:207–238

Minda JP, Smith JD (2002) Comparing prototype-based and exemplar-based accounts of category learning and attentional allocation. J Exp Psychol Learn Mem Cogn 28(2):275–292. https://doi.org/10.1037/0278-7393.28.2.275

Murphy GL (2002) The big book of concepts. MIT Press, Cambridge, Mass

Nosofsky RM (1984) Choice, similarity, and the context theory of classification. J Exp Psychol Learn Mem Cogn 10(1):104–114. https://doi.org/10.1037/0278-7393.10.1.104

Nosofsky RM (1986) Attention, similarity, and the identification–categorization relationship. J Exp Psychol Gen 115(1):39–57. https://doi.org/10.1037/0096-3445.115.1.39

Nosofsky RM (1989) Further tests of an exemplar-similarity approach to relating identification and categorization. Percept Psychophys 45(4):279–290. https://doi.org/10.3758/BF03204942

Nosofsky RM (1992) Exemplars, prototypes, and similarity rules. In: Healy AF, Kosslyn SM, Shiffrin RM (eds) From learning theory to connectionist theory: essays in honor of William K. Estes. Lawrence Erlbaum Associates, New Jersey

Nosofsky RM (2011) The generalized context model: an exemplar model of classification. In: Pothos EM, Wills AJ (eds) Formal approaches in categorization. Cambridge University Press, Cambridge, pp 18–39. https://doi.org/10.1017/CBO9780511921322.002

Nosofsky RM, Kruschke JK, McKinley SC (1992) Combining exemplar-based category representations and connectionist learning rules. J Exp Psychol Learn Mem Cogn 18(2):211–233

Nosofsky RM, Zaki SR (2002) Exemplar and prototype models revisited: response strategies, selective attention, and stimulus generalization. J Exp Psychol Learn Mem Cogn 28(5):924–940. https://doi.org/10.1037/0278-7393.28.5.924

Posner MI, Keele SW (1968) On the genesis of abstract ideas. J Exp Psychol 77:353–363. https://doi.org/10.1037/h0025953

Reed SK (1972) Pattern recognition and categorization. Cogn Psychol 3(3):382–407. https://doi.org/10.1016/0010-0285(72)90014-X

Reed JM, Squire LR, Patalano AL, Smith EE, Jonides J (1999) Learning about categories that are defined by object-like stimuli despite impaired declarative memory. Behav Neurosci 113:411–419

Roeder JL, Ashby FG (2016) What is automatized during perceptual categorization? Cognition 154:22–33. https://doi.org/10.1016/j.cognition.2016.04.005

Smith JD, Minda JP (1999) Prototypes in the mist: the early epochs of category learning: correction to Smith and Minda (1998). J Exp Psychol Learn Mem Cogn 25(1):69–69. https://doi.org/10.1037/h0090333

Smith JD, Minda JP (2000) Thirty categorization results in search of a model. J Exp Psychol Learn Mem Cogn 26(1):3–27. https://doi.org/10.1037/0278-7393.26.1.3

Widrow B, Hoff ME (1960) Adaptive switching circuits. Inst Radio Eng, West Electron Show Conv, Conv Rec 4:96–104

Widrow B, Kamenetsky M (2003) Statistical efficiency of adaptive algorithms. Neural Netw 16(5–6):735–744

Wills AJ, Ellett L, Milton F, Croft G, Beesley T (2020) A dimensional summation account of polymorphous category learning. Learn Behav 48(1):66–83. https://doi.org/10.3758/s13420-020-00409-6

Worthy DA, Markman AB, Maddox WT (2013) Feedback and stimulus-offset timing effects in perceptual category learning. Brain Cogn 81(2):283–293. https://doi.org/10.1016/j.bandc.2012.11.006

Zeithamova D, Maddox WT, Schnyer DM (2008) Dissociable prototype learning systems: evidence from brain imaging and behavior. J Neurosci 28(49):13194–13201. https://doi.org/10.1523/JNEUROSCI.2915-08.2008

Funding

The current work was supported by ANID Fondecyt grant 1190006 to the third author and by a graduate scholarship from Universidad Adolfo Ibáñez to the first author.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human or animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Handling editors: Moreno Cocco (East London University), Antonio Calcagni (University of Padova); Reviewers: a researcher who prefers to remain anonymous.

Supplementary Information

Below is the link to the electronic supplementary material.

About this article

Cite this article

Marchant, N., Canessa, E. & Chaigneau, S.E. An adaptive linear filter model of procedural category learning. Cogn Process 23, 393–405 (2022). https://doi.org/10.1007/s10339-022-01094-1

DOI: https://doi.org/10.1007/s10339-022-01094-1