Abstract

Recognizing emotional face expressions in others is a valuable non-verbal communication skill. It is particularly relevant throughout childhood, when children’s language skills are not yet fully developed but their first interactions with peers have just started. This study aims to investigate developmental markers of emotional face expression recognition in children and the effects of age and sex on it. A total of 90 children, split into three age groups (6–7 years old, n = 30; 8–9 years old, n = 30; 10–11 years old, n = 30), took part in the study. Participants were shown 38 photos, at two exposure times (500 ms and 1000 ms), of children expressing happiness, sadness, anger, disgust, fear and surprise at three intensities, plus images of neutral faces. Happiness was the easiest expression to recognize, followed by disgust and surprise. As expected, the 10–11-year-old group showed the highest mean accuracy, whereas the 6–7-year-old group had the lowest. The data do not support a female advantage.

Introduction

Face expressions are well recognized as a valuable strategy of non-verbal communication throughout the lifespan. Recognizing emotional face expressions in others is a core ability for an adaptive social life (Batty and Taylor 2006). This ability is particularly relevant throughout childhood, given that children’s language skills are not yet fully developed and the first interactions with peers have just started (Cheal and Rutherford 2011). Despite that, studies of healthy children that look for biases in the recognition of specific emotional face expressions are scarce, and it is not yet possible to determine developmental markers of recognition of emotional faces (Cassia et al. 2012; Ebner et al. 2013; Garcia and Tully 2020; Halberstadt et al. 2020; Scherf and Scott 2012; Segal et al. 2019).

Previous research has shown that emotional face expression recognition follows a slow developmental pathway (Batty and Taylor 2006; De Sonneville et al. 2002; Durand et al. 2007; Ewing et al. 2017; Gao and Maurer 2010; Herba and Phillips 2004; Lawrence et al. 2015; Leime et al. 2013; Meinhardt-Injac et al. 2020; Segal et al. 2019; Widen and Russell 2008). This improvement relies on social learning and on the maturation or improvement of fundamental processes such as perceptual processes (categorization and automation), memory, and attention, as well as of the cerebral areas involved in processing emotional faces: the fusiform gyrus, prefrontal cortex, insula, and amygdala (Adolphs 2002; Dennis et al. 2009; Hills 2012; Pollak et al. 2009; Thomas et al. 2007).

Furthermore, studies have suggested that factors such as sex and age may influence emotional face expression recognition (Dalrymple et al. 2013; Lawrence et al. 2015; McClure 2000; Poenitz and Román 2020; Rhodes and Anastasi 2012). Across all ages, when significant sex effects are observed, females show an advantage in facial expression recognition. A meta-analysis by McClure (2000) reviewed 117 studies on sex differences in emotional face expression recognition from infancy through adolescence. A small but statistically significant female advantage was found among children and adolescents. However, McClure highlights that many studies used small samples; thus, results may not accurately reflect the full population distribution. Also, only a small number of studies provided effect sizes, and only 50% of the available effect sizes were statistically significant, which leaves room for biased and overrepresented effect sizes (McClure 2000). Nonetheless, other studies also indicate this female advantage, especially for negative emotions (Rehnman and Herlitz 2007; Williams et al. 2009), as well as an own-sex bias in females but not in males (Wright and Sladden 2003).

On the other hand, research has also aimed to understand how the recognition of basic emotions develops at each age. Data indicate that by six years old children recognize happiness at all intensities (Gao and Maurer 2009, 2010; Herba et al. 2008; Richards et al. 2007). At seven years old, children can recognize angry faces, although accuracy varies with intensity (Kessels et al. 2013; Richards et al. 2007). Sadness shows a similar development, with good levels of accuracy at 10 years old (Durand et al. 2007; Gao and Maurer 2009, 2010; Herba et al. 2008; Naruse et al. 2013). Fear and disgust present mixed results, with good levels of accuracy by 10 years old (Durand et al. 2007; Herba et al. 2008; Mancini et al. 2013). Surprise is considered a confounding factor because of its similarity to fear; it is often left out of experiments, or its results are treated with doubt (Gao and Maurer 2010; Kessels et al. 2013; Naruse et al. 2013). In this sense, the development of emotional face expression recognition seems to follow the chronological course of childhood. At six years old, accuracy is lower, while at age 11 children present enhanced skills (Durand et al. 2007; Ewing et al. 2017; Herba and Phillips 2004; Herba et al. 2008; Lawrence et al. 2015; Naruse et al. 2013; Poenitz and Román 2020; Segal et al. 2019).

However, these findings are not consistent. Stimulus durations and experimental methods vary, which makes results harder to compare. Beyond that, few studies assess all six basic emotions as well as neutral face expressions (Batty and Taylor 2006), and the great majority use only prototypical expressions (Batty et al. 2011; Chen et al. 2014) or adult image datasets (Ewing et al. 2017; Gao and Maurer 2010; Kessels et al. 2013; Lawrence et al. 2015; Mancini et al. 2013; Naruse et al. 2013; Segal et al. 2019). Furthermore, few studies on emotion recognition use stimuli depicting emotional faces of various intensities (Gao and Maurer 2010; Herba et al. 2008). Ekman et al. (1987) proposed that the assessment of faces includes both identifying which emotion is shown and identifying the intensity of that emotion. That intensity is mainly determined by how many of the key muscles of each expressed emotion are contracted and by how intense that contraction is. Interpreting the intensity of a face is crucial for determining one’s actions following emotion identification and therefore affects the quality of interpersonal relationships (Kommattam et al. 2019). Thus, it is relevant to investigate both the accuracy and the intensity of emotion recognition.

Therefore, the goal of the current study was to determine developmental markers of recognition of emotional faces in children aged between six and 11 years old. To our knowledge, this is the first study to investigate developmental markers of recognition of the six basic emotional faces presented at three intensities, plus neutral faces, at two different exposure times in children aged between six and 11 years old, together with the effects of age, sex, and length of stimulus presentation on this ability. We hypothesized that (1) children aged 10–11 years are more accurate in identifying emotional faces than children aged 8–9 years and children aged 6–7 years, with the latter having the worst performance scores; (2) pictures depicting faces of higher intensities are recognized at earlier ages and more accurately than pictures depicting emotions of low and medium intensity; (3) girls outperform boys in emotion identification (accuracy of emotion and attributed intensity); (4) emotions are better recognized at 1000 ms of exposure; and (5) happiness is the first emotion to be identified at all ages, followed in sequence by anger, sadness, fear, disgust and surprise.

Methods

Participants

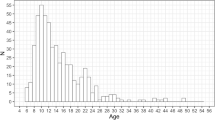

Participants were recruited in four schools from two cities in southern Brazil. A total of 520 children were approached, and parents gave informed consent for 136 children. From this sample we excluded: (a) 43 children who fell within the borderline/clinical range (T-score ≥ 60) on the Child Behavior Check-List (CBCL); (b) two children who showed poor attention during the experiment; and (c) one child whose experimental data could not be recovered. All remaining children scored > 11 points on the Raven’s Matrices and were therefore above the cognitive impairment threshold (Raven percentile M = 71.6, SD = 22.9). Thus, the results reported in this paper concern 90 children (mean age = 108.04 months, SD = 18.76; 48.9% boys, 51.1% girls), split into three age groups: 6–7 years old (n = 30); 8–9 years old (n = 30); 10–11 years old (n = 30) (see Table 1 for details). This study was approved by the Ethics Research Committee of Pontifical Catholic University of Rio Grande do Sul (CAAE: 26068114.0.0000.5336). All guardians and participants gave consent.
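The participant flow described above can be checked with a few lines of arithmetic. The sketch below only restates the counts given in the text; the variable and key names are illustrative and not taken from the study materials.

```python
# Participant flow as reported in the text (names are illustrative).
consented = 136

excluded = {
    "cbcl_borderline_or_clinical": 43,  # CBCL T-score >= 60
    "poor_attention": 2,
    "unrecoverable_data": 1,
}

final_sample = consented - sum(excluded.values())

# Reported age-group sizes.
group_sizes = {"6-7": 30, "8-9": 30, "10-11": 30}

print(final_sample)               # 90
print(sum(group_sizes.values()))  # 90
```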

Instruments

Child Behavior Check-List (CBCL). Inventory answered by parents about their children aged between six and 18 years old in order to identify behavioral and emotional aspects of children and possible psychopathological disorders. The inventory comprises 118 problem items that parents rate as 0 = not true, 1 = somewhat or sometimes true, or 2 = very true or often true, based on the past 6 months, with higher scores corresponding to greater problems. Eight syndromes, three broadband scales (Internalizing, Externalizing, and Total Problems), and six DSM-oriented scales are evaluated (Achenbach and Dumenci 2001). The CBCL was adapted and validated in Brazil by Bordin et al. (1995), and a high level of sensitivity (87%) was identified. Raw scores are converted into T-scores, and thresholds are as follows: normal (< 60), borderline (≥ 60 and ≤ 63), and clinical (> 63).
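As a minimal sketch of how these thresholds translate into a screening rule, the function below maps a T-score to one of the three bands. The function names are hypothetical, and the eligibility rule simply mirrors the exclusion criterion reported under Participants (T-score ≥ 60); it is not the authors' scoring software.

```python
def classify_cbcl(t_score: float) -> str:
    """Map a CBCL T-score to the band given in the text."""
    if t_score < 60:
        return "normal"
    if t_score <= 63:
        return "borderline"
    return "clinical"


def is_eligible(t_score: float) -> bool:
    """Children in the borderline or clinical band were excluded."""
    return classify_cbcl(t_score) == "normal"


for score in (59, 60, 63, 64):
    print(score, classify_cbcl(score), is_eligible(score))
```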

Raven’s Progressive Matrices (Colored scale). Nonverbal test for assessing the level of intelligence of individuals between 5 and 11 years of age. The test is organized as a series of matrices or drawings, beginning with an introductory problem whose solution is clear and provides a standard for the task, which then becomes progressively more difficult. Results are expressed in percentiles and grades range from 1 to 5, with grade 1 indicating intellectually superior (≥ 21 points) and grade 5 intellectually deficient (≤ 11 points). The Brazilian version was developed by Angelini et al. (1999) and standards for regional samples were reported by Bandeira et al. (2004).

Emotional Face Expression Recognition Task. A total of 38 photos of 15 children (seven girls and eight boys) were used in the task. Of these, two images (one boy and one girl) depicted neutral facial expressions and 36 photos depicted emotional expressions of happiness, sadness, anger, disgust, fear, and surprise. Emotional images were also balanced by sex, so that there were photos of one boy and one girl for every emotion at each stimulus intensity. For each emotion six images were presented: two at each stimulus intensity (low, medium, and high). All images were selected from the CEPS database (Romani-Sponchiado et al. 2015) and were presented in black and white, with a 300 × 300 resolution. Images were presented at two exposure times (500 ms and 1000 ms), adding up to 76 trials, plus two training trials conducted prior to the experimental trials.
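The stimulus counts above follow from crossing the design factors, which a short sketch can make explicit. The code below is only an illustration: the field names are hypothetical, and shuffling all 76 trials together is an assumption, since the text does not say whether the two exposure times were blocked or interleaved.

```python
import itertools
import random

EMOTIONS = ["happiness", "sadness", "anger", "disgust", "fear", "surprise"]
INTENSITIES = ["low", "medium", "high"]
MODEL_SEX = ["girl", "boy"]
EXPOSURES_MS = [500, 1000]


def build_stimuli():
    """6 emotions x 3 intensities x 2 model sexes, plus 2 neutral faces."""
    stimuli = [
        {"emotion": e, "intensity": i, "model_sex": s}
        for e, i, s in itertools.product(EMOTIONS, INTENSITIES, MODEL_SEX)
    ]
    stimuli += [
        {"emotion": "neutral", "intensity": None, "model_sex": s}
        for s in MODEL_SEX
    ]
    return stimuli


def build_trials(seed=0):
    """Pair every stimulus with both exposure times and shuffle (assumed order)."""
    rng = random.Random(seed)
    trials = [
        dict(stim, exposure_ms=ms)
        for stim in build_stimuli()
        for ms in EXPOSURES_MS
    ]
    rng.shuffle(trials)
    return trials


print(len(build_stimuli()))  # 38
print(len(build_trials()))   # 76
```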

Procedures

Parents gave written informed consent and completed the CBCL and a clinical interview covering social and health information about the children. Participants were tested individually in a quiet, well-lit room at school, seated approximately 60 cm from the 14.7-in. monitor of a Dell Inspiron computer. Using E-Prime software, the 38 images were shown to the children in random order with durations of 500 ms and 1000 ms. After each photo, the child classified it according to emotion (sad, neutral, happy, angry, surprise, disgust and fear). To help children at the emotion classification stage, schematic faces were shown (see Fig. 1). Either a verbal response or pointing to the corresponding schematic face was accepted, and the experimenter registered the child’s response in the software. Following the experimental task, the Raven’s Progressive Matrices were administered. Data collection lasted between 45 and 60 min.

Data analysis

Participants were assigned 1 point for each correct identification of the face emotion (happy, sad, fear, disgust, anger, surprise, plus neutral). The accuracy score was a proportion, computed as the sum of correct identifications of the target emotion divided by the number of trials of that emotion. Therefore, accuracy scores range from 0 or 0% (if none of the stimuli was correctly identified) to 1 or 100% (if all the stimuli were correctly identified). Accuracy scores were computed for each emotion overall (i.e. the average accuracy across all stimuli of that emotion regardless of intensity) as well as for each emotion at each intensity (e.g. accuracy for images depicting high happiness, medium happiness, low happiness, etc.). We conducted the analysis in three steps. First, with the overall scores of each emotion as dependent variables, we conducted repeated measures ANOVAs separately for 500 ms and 1000 ms in order to investigate the effects of emotion (happy, sad, fear, disgust, anger, surprise, neutral), sex (male/female) and age (6–7, 8–9 and 10–11 years old). Next, also using repeated measures ANOVA, we investigated the effects of length of exposure (500 ms and 1000 ms) by comparing the average accuracy scores of each emotion at both presentation times. Finally, we used a similar strategy to investigate differences in accuracy between stimuli of the same emotion at different intensities (low, medium, high), as well as the effects of sex and age on accuracy scores for stimuli of a given emotion presented at different intensities. These analyses were also conducted separately for the 500 ms and 1000 ms presentations. Neutral images do not vary in intensity and therefore were not used in the latter set of analyses. Bonferroni correction was applied in all analyses, and data were analysed using Jamovi Stats.
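The accuracy score defined above (correct identifications divided by the number of trials of that emotion) can be sketched as follows. This is an illustration only: the trial format and function name are hypothetical, and the example responses are made up, not study data.

```python
from collections import defaultdict


def accuracy_scores(trials, by_intensity=False):
    """Proportion of correct responses per emotion, or per (emotion, intensity).

    Each trial is a dict with keys 'target', 'intensity' and 'response'.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for t in trials:
        key = (t["target"], t["intensity"]) if by_intensity else t["target"]
        total[key] += 1
        correct[key] += int(t["response"] == t["target"])  # 1 point if correct
    return {key: correct[key] / total[key] for key in total}


# Made-up responses from one hypothetical child.
example = [
    {"target": "happiness", "intensity": "high", "response": "happiness"},
    {"target": "happiness", "intensity": "low", "response": "neutral"},
    {"target": "fear", "intensity": "high", "response": "surprise"},
    {"target": "fear", "intensity": "medium", "response": "fear"},
    {"target": "fear", "intensity": "low", "response": "surprise"},
    {"target": "fear", "intensity": "low", "response": "fear"},
]

overall = accuracy_scores(example)
per_intensity = accuracy_scores(example, by_intensity=True)
print(overall)
print(per_intensity)
```

Scores computed this way stay between 0 and 1 by construction, and the per-intensity variant corresponds to the third analysis step described above.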
Finally, as a complementary analysis, a full model was also investigated through a repeated measures ANOVA including Emotion (6 levels) × Intensity (3 levels) × Presentation time (2 levels) as within-subjects factors, and Age (3 levels) and Gender (2 levels) as between-subjects factors.
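The Bonferroni correction mentioned in this section multiplies each p-value by the number of comparisons in its family and caps the result at 1. The sketch below illustrates that rule under one assumption: that the family for the emotion effect is the full set of pairwise comparisons among the seven face categories. The text does not state how families were defined, and the p-values shown are made up.

```python
from itertools import combinations

FACE_CATEGORIES = [
    "happiness", "sadness", "anger", "disgust", "fear", "surprise", "neutral",
]


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# All unordered pairs of the seven categories (assumed comparison family).
pairs = list(combinations(FACE_CATEGORIES, 2))
print(len(pairs))  # 21

# Made-up unadjusted p-values for a family of three comparisons.
adjusted = bonferroni([0.001, 0.01, 0.4])
print(adjusted)
```

Statistical packages typically report the adjusted p-values directly, so a sketch like this is mainly useful for checking how strict the correction is for a given family size.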

Results

Effects of emotion, sex, age, face intensity and length of presentation in accuracy scores

Tables 2 and 3 depict average accuracy scores for all emotions at 500 ms and 1000 ms presentation times.

Accuracy scores presented a similar pattern at the 500 ms and 1000 ms exposure times. For both exposure times, mean accuracy scores were highest for happiness, followed by neutral, disgust, surprise, anger, fear and sadness (see Fig. 2). A repeated-measures ANOVA conducted on accuracy scores for the 500 ms presentations yielded a main effect of emotion [F(6, 504) = 59.49, p < 0.001, η2 = 0.25], and most pairwise comparisons were significant. As seen in Fig. 2, participants were significantly more accurate in recognizing happy faces than faces of all other emotions (all p’s < 0.001), except for neutral faces. Disgust was the third easiest emotion to recognize, with all pairwise comparisons yielding significant differences (all p’s < 0.001) except for the disgust-surprise comparison. Fearful faces showed lower accuracy rates than faces of all other emotions (all p’s < 0.001) except for sad and angry faces. Finally, children were significantly less accurate in identifying sad faces than in identifying all other emotions (all p’s < 0.001), except for fear.

A significant age effect was also observed in accuracy scores at 500 ms [F(2, 84) = 10.16, p < 0.001, η2 = 0.19], with 6–7-year-old children showing lower accuracy scores than 8–9-year-old children (t = 3.41, p = 0.003) and than 10–11-year-old children (t = 4.21, p < 0.001). As seen in Table 2, when specific effects of age on each emotion were examined, results revealed significant effects of age on happy, neutral, disgust and surprise faces. Angry, fearful and sad faces showed a pattern of low accuracy (rates of correct identification below 50%) in all age groups. There was no significant difference between 8–9- and 10–11-year-old children, and there was also no significant sex effect or age*sex effect. Significant differences were observed between stimuli of the same emotion presented at different intensities, with faces presented at lower intensities being harder to identify than faces presented at medium and/or high intensities (see Table 4).

Effects of emotion, age and sex were also examined for the 1000 ms presentations. A significant emotion effect [F(6, 504) = 60.85, p < 0.001, η2 = 0.22] was observed, and the pattern of pairwise comparisons was identical to the one observed at 500 ms, except that at 1000 ms accuracy for disgust was not significantly different from accuracy for anger. Finally, a significant effect of age was observed for the 1000 ms presentations [F(2, 84) = 14.83, p < 0.001, η2 = 0.04], with 6–7-year-old children showing lower accuracy scores than 8–9-year-old children (t = 2.99, p = 0.01) and than 10–11-year-old children (t = 5.44, p < 0.001). Accuracy scores of 8–9-year-old children were not significantly different from those of 10–11-year-old children, and no significant effect of sex or age*sex was observed. As at 500 ms, at the 1000 ms exposure time a significant effect of stimulus intensity was observed for all emotions (see Table 4), with pairwise comparisons showing that, by and large, stimuli of lower intensities were harder to identify than stimuli of medium and high intensities.

Effects of length of presentation were examined for all emotions, and the only significant effect observed was for anger [F(1, 84) = 21.751, p < 0.001, η2 = 0.21], with children being less accurate in recognizing angry faces at 500 ms than at 1000 ms.

Discussion

This article aimed to investigate the development of the ability to recognize emotional face expressions in children between six and 11 years old. We expected happiness to be the most accurately identified emotion at all ages, followed in sequence by anger, sadness, fear, disgust, and surprise. The data partially corroborated previously published studies, although comparisons are scarce given that, to the best of our knowledge, this is the first study to include analysis of all six basic emotions at low, medium and high intensity levels plus neutral faces. We also aimed to investigate the effects of age, sex and length of exposure to the stimuli on this ability, expecting the 10–11-year-old group to have the best results, followed by the 8–9-year-old and 6–7-year-old groups. As expected, the development of emotional face expression recognition followed the chronological course of childhood, since the 10–11-year-old group showed higher accuracy scores than the 8–9- and 6–7-year-old groups. Lastly, we expected a female advantage and generally better accuracy at 1000 ms. However, the results corroborated neither a female advantage nor better accuracy at 1000 ms of exposure.

Development of emotional face expressions recognition

Happiness was the easiest expression for children to recognize, being highly recognized at all ages and at all intensities. This result corroborates previous studies (Garcia and Tully 2020; Gao and Maurer 2009; Ewing et al. 2017; Herba et al. 2008; Lawrence et al. 2015; Mancini et al. 2013; Poenitz and Román 2020; Segal et al. 2019). The ease of recognizing happy expressions is explained by happiness being the most distinct of the six basic emotional expressions (Ekman et al. 2002) and by the frequent exposure to happy faces that children experience from birth (Batty and Taylor 2006). Additionally, identifying happiness seems to be key for establishing bonding (Marsh et al. 2010) and for eliciting motivation in social interaction (Nikitin and Freund 2019) and, therefore, it is highly adaptive to develop this skill early in childhood.

Disgust also had high accuracy scores, in particular when presented at medium and high intensities, which is consistent with previous studies (Garcia and Tully 2020; Kessels et al. 2013; Poenitz and Román 2020; Richards et al. 2007). This emotion has strong evolutionary survival value, as disgust prevents the ingestion of rotten or poisonous foods and signals potential contamination. Recent studies suggest that the development of disgust is directly linked to identifying disgust in others’ faces (Widen and Russell 2013), meaning that early identification of a disgusted face would be key to feeling this emotion. Thus, it is reasonable that disgust showed good rates of accuracy in this sample.

Accuracy for fear was below 50%, with higher accuracy scores when stimuli were presented at medium and high intensities, corroborating previous studies (Gao and Maurer 2009, 2010; Kessels et al. 2013). Children’s poor performance in identifying fear may be explained by frequent misidentification as surprise (Gagnon et al. 2010), especially when stimuli are shown at medium and high intensity, because fear and surprise share the open-mouth feature. This effect may have been inflated by the schematic faces used as anchors: the schematic fear face has a half-closed mouth with teeth showing, whereas the schematic surprise face has an open mouth. Thus, participants may have compared the fear photos with the schematic faces and misidentified them as surprise. In this sense, surprise was the third most easily recognized of the six basic emotions, contradicting our hypothesized order. This may be explained by the same issue discussed with regard to fear faces: comparison with the schematic faces made surprise the only plausible answer, as it was the only schematic face with an open mouth.

Anger was hypothesized to be one of the easiest emotions to recognize. However, this was not confirmed in our sample. Accuracy rates for anger were below those for disgust and surprise, even for stimuli of high intensity. Most studies that investigated recognition of facial expressions in children used adult faces (Ewing et al. 2017; Gao and Maurer 2010; Kessels et al. 2013; Lawrence et al. 2015; Mancini et al. 2013; Naruse et al. 2013; Segal et al. 2019). Moreover, recognition of anger in childhood seems to be related to the amount of exposure to violence or threat (Pollak and Sinha 2002), which typically comes from an adult face. In our study we used images of children. Thus, two potential explanations arise. First, the children in our sample may come from a background of low exposure to threat and therefore may not be socially trained to identify anger. Second, the angry faces in our images meet all the muscle-contraction requirements of an angry face but may not be threatening enough for children to identify them as angry, meaning that the images might not resemble real-life angry adults.

The pattern of identification of sadness was in line with our expectation and corroborated previous studies (De Sonneville et al. 2002; Durand et al. 2007; Segal et al. 2019), with low mean accuracy at all ages. Previous studies have also found similar results, with better accuracy for sad faces at high intensity than at medium and low intensities (Gao and Maurer 2009, 2010; Garcia and Tully 2020; Herba et al. 2008; Kessels et al. 2013; Mancini et al. 2013; Naruse et al. 2013; Poenitz and Román 2020). Results from the current study can be explained by frequent misidentification as all other emotions, a pattern reported in previous studies (Gao and Maurer 2010; Kessels et al. 2013), or may also indicate that the images selected for this experiment were ambiguous or not of extremely high intensity (e.g. none of the pictures depicted a sad face with tears).

Age, sex, and length of presentation effect

Participants aged 10–11 years showed the highest mean accuracy and, although children aged 8–9 years showed lower means than older children, the difference between these two age groups was not significant. Notably, the 6–7-year-old group presented significantly lower accuracy rates than the two other age groups, even for happiness, the easiest emotion to recognize. This result corroborates several studies that demonstrated a direct correlation with neural maturation and the improvement of cognitive processes (Batty and Taylor 2006; Durand et al. 2007; Gao and Maurer 2010; Lawrence et al. 2015; Mancini et al. 2013; Naruse et al. 2013; Poenitz and Román 2020; Segal et al. 2019), supporting a chronological pattern of development in emotional face processing. Interestingly, a large change in the ability to recognize faces seems to happen in the early school years, whereas after that improvements continue at a steady pace.

Despite studies showing sex effects, data from the current article do not support a female advantage. This finding differs from our original hypothesis, which was based on published studies (Dalrymple et al. 2013; Lawrence et al. 2015; McClure 2000; Poenitz and Román 2020; Rehnman and Herlitz 2007; Wright and Sladden 2003) and assumed a female advantage in recognizing emotional faces. However, other authors have reported results that corroborate our findings (Calvo and Lundqvist 2008; De Sonneville et al. 2002; Gao and Maurer 2009, 2010; Herba et al. 2006, 2008; Mancini et al. 2013; Vicari et al. 2000), arguing that methodological variability may affect the comparison of results. Additionally, due to neural maturation, a female advantage may appear only after puberty (Kessels et al. 2013; McClure 2000).

There is no consensus about the length of stimulus presentation in this kind of experiment. Each study uses a different stimulus presentation time; therefore, it is not yet possible to postulate appropriate presentation lengths or their effects (Batty et al. 2011; Deeley et al. 2008). However, children were expected to be more accurate at 1000 ms, as longer exposure times would allow them to process the information better. Despite this, results showed no overall difference between the two tested lengths and, surprisingly, the only significant difference denotes better accuracy for angry faces shown at 500 ms. This may be explained by children overthinking at longer exposure times and becoming confused about the right answer.

The developmental patterns and accuracy results presented in this article may be affected by the forced-choice procedure. This method possibly affected children’s responses by raising the rate of random choices. A free labelling procedure, allowing children to provide their own label for the expression, is recommended for future research and replications. Another limitation is the use of schematic faces to help younger children see the answer options. Although this method has been used in studies such as Gao and Maurer (2010), experimenters noticed that a significant number of participants, especially from the 6–7-year-old group, clearly answered based on comparisons between the database image and the schematic faces. It is also recommended that future studies include a larger sample and adult images, as well as an adult group, to investigate and control for a possible own-age bias (Rhodes and Anastasi 2012) and own-sex bias. Despite its limitations, to the best of our knowledge this study is the first investigation of developmental markers of emotional face expression recognition and of the effects of age and sex in children between six and 11 years old through an experiment using images of children expressing the six basic emotions at three intensities, plus neutrality, presented at two different exposure times. We hope that the results can be used in applied studies, for example to help develop training programs focused on emotion recognition.

Implication for the hypotheses

Regarding the study's first hypothesis (older children being more accurate and having better intensity attribution than younger children), significant age effects (younger children being less accurate than older ones) were observed in happiness, fear, disgust, surprise and neutral faces. Our second hypothesis was that emotions of higher intensities would be more easily recognized. Indeed, higher intensities were better recognized. The third hypothesis concerned girls outperforming boys, which was not supported. Our fourth hypothesis assumed better performance at a longer exposure time (1000 ms), which was only corroborated for anger faces. Our final hypothesis predicted happiness being the first emotion to be identified, followed by anger, sadness, fear, disgust and surprise. In general, results indicated happiness as the first emotion to be recognized, but it was followed respectively by disgust, surprise, anger, fear and sadness.

Conclusions

Our findings provide novel data on emotion recognition skills throughout childhood. Results indicated that happiness is the first emotion to be recognized, followed by neutral faces and by disgust, a crucial emotion for survival. Next come surprise, anger and fear and, lastly, sadness. This development also follows a chronological course, as younger children (the 6–7-year-old group) presented low mean accuracy, while children close to puberty (the 10–11-year-old group) showed higher levels of accuracy. The data do not support a female advantage. In addition, further studies are needed that focus on neural mechanisms and on the effects of familiarity, race, age, sex and, above all, psychopathologies.

Availability of data and material

Data that support the findings of this study are available on request from the corresponding author due to privacy/ethical restrictions.

References

Achenbach T, Dumenci L (2001) Advances in empirically based assessment: revised cross-informant syndromes and new DSM-oriented scales for the CBCL, YSR, and TRF: Comment on Lengua, Sadowksi, Friedrich, and Fisher (2001). J Consult Clin Psychol 69(4):699–702. https://doi.org/10.1037//0022-006X.69.4.699

Adolphs R (2002) Neural systems for recognizing emotion. Curr Opin Neurobiol 12(2):169–177. https://doi.org/10.1016/S0959-4388(02)00301-X

Angelini AL, Alves IC, Custódio EM, Duarte WF, Duarte JL (1999) Matrizes Progressivas Coloridas de Raven: Escala Especial. Centro Editor de Testes e Pesquisa em Psicologia, São Paulo

Bandeira DR, Alves ICB, Giacomel AE, Lorenzatto L (2004) Matrizes progressivas coloridas de Raven—escala especial: normas para Porto Alegre, RS. Psicol Estud [online] 9(3):479–486. https://doi.org/10.1590/s1413-73722004000300016

Batty M, Taylor MJ (2006) The development of emotional face processing during childhood. Dev Sci 9(2):207–220. https://doi.org/10.1111/j.1467-7687.2006.00480.x

Batty M, Meaux E, Wittemeyer K, Rogé B, Taylor MJ (2011) Early processing of emotional faces in children with autism: an event-related potential study. J Exp Child Psychol 109:430–444. https://doi.org/10.1016/j.jecp.2011.02.001

Bordin IA, Mari JJ, Caeiro MF (1995) Validação da versão brasileira do "Child Behavior Checklist" (CBCL) (Inventário de Comportamentos da Infância e Adolescência): dados preliminares. Revista ABP-APAL, 55–66. https://www.researchgate.net/profile/Isabel-Bordin/publication/285968522_Validation_of_the_Brazilian_version_of_the_Child_Behavior_Checklist_CBCL/links/59f8c278a6fdcc075ec99697/Validation-of-the-Brazilian-version-of-the-Child-Behavior-Checklist-CBCL.pdf

Calvo MG, Lundqvist D (2008) Facial expressions of emotion (KDEF): identification under different display-duration conditions. Behav Res Methods 40(1):109–115. https://doi.org/10.3758/BRM.40.1.109

Cassia V, Pisacane A, Gava L (2012) No own-age bias in 3-year-old children: more evidence for the role of early experience in building face-processing biases. J Exp Child Psychol 113(3):372–382. https://doi.org/10.1016/j.jecp.2012.06.014

Cheal JL, Rutherford MD (2011) Categorical perception of emotional facial expressions in preschoolers. J Exp Child Psychol 110(3):434–443. https://doi.org/10.1016/j.jecp.2011.03.007

Chen FS, Schmitz J, Domes G, Tuschen-Caffier B, Heinrichs M (2014) Effects of acute social stress on emotion processing in children. Psychoneuroendocrinology 40:91–95. https://doi.org/10.1016/j.psyneuen.2013.11.003

Dalrymple KA, Gomez J, Duchaine B (2013) The Dartmouth database of children’s faces: acquisition and validation of a new face stimulus set. PLoS ONE 8(11):1–7. https://doi.org/10.1371/journal.pone.0079131

De Sonneville L, Verschoor C, Njiokiktjien C, Op het Veld V, Toorenaar N, Vranken M (2002) Facial identity and facial emotions: speed, accuracy, and processing strategies in children and adults. J Clin Exp Neuropsychol 24(2):200–213. https://doi.org/10.1076/jcen.24.2.200.989

Deeley Q, Daly EM, Azuma R et al (2008) Changes in male brain responses to emotional faces from adolescence to middle age. Neuroimage 40:389–397. https://doi.org/10.1016/j.neuroimage.2007.11.023

Dennis TA, Malone MM, Chen CC (2009) Emotional face processing and emotion regulation in children: an ERP study. Dev Neuropsychol 34(1):85–102. https://doi.org/10.1080/87565640802564887

Durand K, Gallay M, Seigneuric A, Robichon F, Baudouin J (2007) The development of facial emotion recognition: the role of configural information. J Exp Child Psychol 97(1):14–27. https://doi.org/10.1016/j.jecp.2006.12.001

Ebner NC, Johnson MR, Rieckmann A, Durbin KA, Johnson MK, Fischer H (2013) Processing own-age vs. other-age faces: neuro-behavioral correlates and effects of emotion. Neuroimage 78:363–371. https://doi.org/10.1016/j.neuroimage.2013.04.029

Ekman P, Friesen WV, O’Sullivan M, Chan A, Diacoyanni-Tarlatzis I, Heider K, Krause R, LeCompte WA, Pitcairn T, Ricci-Bitti PE, Scherer K, Tomita M, Tzavaras A (1987) Universals and cultural differences in the judgments of facial expressions of emotion. J Pers Soc Psychol 53(4):712–717. https://doi.org/10.1037/0022-3514.53.4.712

Ekman P, Friesen WV, Hager JC (2002) Facial action coding system: the manual on CD ROM. A Human Face, Salt Lake City

Ewing L, Karmiloff-Smith A, Farran EK, Smith ML (2017) Developmental changes in the critical information used for facial expression processing. Cognition 166:56–66. https://doi.org/10.1016/j.cognition.2017.05.017

Gagnon M, Gosselin P, Hudon-ven der Buhs I et al (2010) Children’s recognition and discrimination of fear and disgust facial expressions. J Nonverbal Behav 34:27–42. https://doi.org/10.1007/s10919-009-0076-z

Gao X, Maurer D (2009) Influence of intensity on children’s sensitivity to happy, sad, and fearful facial expressions. J Exp Child Psychol 102(4):503–521. https://doi.org/10.1016/j.jecp.2008.11.002

Gao X, Maurer D (2010) A happy story: Developmental changes in children’s sensitivity to facial expressions of varying intensities. J Exp Child Psychol 107(2):67–86. https://doi.org/10.1016/j.jecp.2010.05.003

Garcia SE, Tully EC (2020) Children’s recognition of happy, sad, and angry facial expressions across emotive intensities. J Exp Child Psychol 197:1–17. https://doi.org/10.1016/j.jecp.2020.104881

Halberstadt AG, Cooke AN, Garner PW, Hughes SA, Oertwig D, Neupert SD (2020) Racialized emotion recognition accuracy and anger bias of children’s faces. Emotion. https://doi.org/10.1037/emo0000756

Herba C, Phillips M (2004) Annotation: Development of facial expression recognition from childhood to adolescence: behavioural and neurological perspectives. J Child Psychol Psychiatry 45(7):1185–1198. https://doi.org/10.1111/j.1469-7610.2004.00316.x

Herba C, Landau S, Russell T, Ecker C, Phillips M (2006) The development of emotion-processing in children: effects of age, emotion, and intensity. J Child Psychol Psychiatry 47(11):1098–1106. https://doi.org/10.1111/j.1469-7610.2006.01652.x

Herba C, Benson P, Landau S et al (2008) Impact of familiarity upon children’s developing facial expression recognition. J Child Psychol Psychiatry 49(2):201–210. https://doi.org/10.1111/j.1469-7610.2007.01835.x

Hills PJ (2012) A developmental study of the own-age face recognition bias in children. Dev Psychol 48(2):499–508. https://doi.org/10.1037/a0026524

Kessels RPC, Montagne B, Hendriks AW, Perrett DI, Haan EHF (2013) Assessment of perception of morphed facial expressions using the emotion recognition task: normative data from healthy participants aged 8–75. J Neuropsychol 8(1):75–93. https://doi.org/10.1111/jnp.12009

Kommattam P, Jonas KJ, Fischer AH (2019) Perceived to feel less: Intensity bias in interethnic emotion perception. J Exp Soc Psychol 84:103809. https://doi.org/10.1016/j.jesp.2019.04.007

Lawrence K, Campbell R, Skuse D (2015) Age, gender, and puberty influence the development of facial emotion recognition. Front Psychol 6(761):1–14. https://doi.org/10.3389/fpsyg.2015.00761

Leime JL, Neto JR, Alves SM, Torro-Alves N (2013) Recognition of facial expressions in children, young adults and elderly people. Estud Psicol (Campinas) 30(2):161–167. https://doi.org/10.1590/s0103-166x2013000200002

Mancini G, Agnoli S, Baldaro B, Bitti PE, Surcinelli P (2013) Facial expressions of emotions: recognition accuracy and affective reactions during late childhood. J Psychol 147(6):599–617. https://doi.org/10.1080/00223980.2012.727891

Marsh AA, Yu HH, Pine DS et al (2010) Oxytocin improves specific recognition of positive facial expressions. Psychopharmacology 209:225–232. https://doi.org/10.1007/s00213-010-1780-4

McClure E (2000) A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychol Bull 126(3):424–453. https://doi.org/10.1037/0033-2909.126.3.424

Meinhardt-Injac B, Kurbel D, Meinhardt G (2020) The coupling between face and emotion recognition from early adolescence to young adulthood. Cogn Dev 53:1–10. https://doi.org/10.1016/j.cogdev.2020.100851

Naruse S, Hashimoto T, Mori K, Tsuda Y, Takahara M, Kagami S (2013) Developmental changes in facial expression recognition in Japanese school-age children. J Med Invest 60(1–2):114–120. https://doi.org/10.2152/jmi.60.114

Nikitin J, Freund AM (2019) The motivational power of the happy face. Brain Sci 9(1):6. https://doi.org/10.3390/brainsci9010006

Poenitz V, Román F (2020) Trajectory of the recognition of basic emotions in the neurodevelopment of children and its evaluation through the “Recognition of Basic Emotions in Childhood” Test (REBEC). Front Edu 5:1–15. https://doi.org/10.3389/feduc.2020.00110

Pollak SD, Sinha P (2002) Effects of early experience on children’s recognition of facial displays of emotion. Dev Psychol 38(5):784–791. https://doi.org/10.1037/0012-1649.38.5.784

Pollak SD, Messner M, Kistler DJ, Cohn JF (2009) Development of perceptual expertise in emotion recognition. Cognition 110(2):242–247. https://doi.org/10.1016/j.cognition.2008.10.010

Rehnman J, Herlitz A (2007) Women remember more faces than men do. Acta Psychol (Amst) 124(3):344–355. https://doi.org/10.1016/j.actpsy.2006.04.004

Rhodes MG, Anastasi JS (2012) The own-age bias in face recognition: a meta-analytic and theoretical review. Psychol Bull 138(1):146–174. https://doi.org/10.1037/a0025750

Richards A, French CC, Nash G, Hadwin JA, Donnelly N (2007) A comparison of selective attention and facial processing biases in typically developing children who are high and low in self-reported trait anxiety. Dev Psychopathol 19(2):481–495. https://doi.org/10.1017/S095457940707023X

Romani-Sponchiado A, Sanvicente-Vieira B, Mottin C, Hertzog-Fonini D, Arteche A (2015) Child Emotions Picture Set (CEPS): development of a database of children’s emotional expressions. Psychol Neurosci 8(4):467–478. https://doi.org/10.1037/h0101430

Scherf K, Scott L (2012) Connecting developmental trajectories: Biases in face processing from infancy to adulthood. Dev Psychobiol 54(6):643–663. https://doi.org/10.1002/dev.21013

Segal SC, Reyes BN, Gobin KC, Moulson MC (2019) Children’s recognition of emotion expressed by own-race versus other-race faces. J Exp Child Psychol 182:102–113. https://doi.org/10.1016/j.jecp.2019.01.009

Thomas LA, De Bellis MD, Graham R, LaBar KS (2007) Development of emotional facial recognition in late childhood and adolescence. Dev Sci 10(5):547–558. https://doi.org/10.1111/j.1467-7687.2007.00614.x

Vicari S, Reilly J, Pasqualetti P, Vizzotto A, Caltagirone C (2000) Recognition of facial expressions of emotions in school-age children: the intersection of perceptual and semantic categories. Acta Paediatr 89(7):836–845. https://doi.org/10.1080/080352500750043756

Widen S, Russell J (2008) Children acquire emotion categories gradually. Cogn Dev 23(2):291–312. https://doi.org/10.1016/j.cogdev.2008.01.002

Widen SC, Russell JA (2013) Children’s recognition of disgust in others. Psychol Bull 139:271–299. https://doi.org/10.1037/a0031640

Williams LM, Mathersul D, Palmer DM, Gur RC, Gur RE, Gordon E (2009) Explicit identification and implicit recognition of facial emotions: I. Age effects in males and females across 10 decades. J Clin Exp Neuropsychol 31(3):257–277. https://doi.org/10.1080/13803390802255635

Wright DB, Sladden B (2003) An own gender bias and the importance of hair in face recognition. Acta Psychol (Amst) 114(1):101–114. https://doi.org/10.1016/s0001-6918(03)00052-0

Acknowledgements

The research reported was supported by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES). The authors report no conflicts of interest.

Author information

Contributions

All authors whose names appear on the submission made substantial contributions to the article. Contributions by author are detailed below according to CRediT taxonomy. Conceptualization: Aline Romani-Sponchiado, Adriane Xavier Arteche; Data curation: Aline Romani-Sponchiado, Cíntia Pacheco Maia, Carol Nunes Torres, Adriane Xavier Arteche; Formal analysis: Aline Romani-Sponchiado, Cíntia Pacheco Maia; Funding acquisition: Aline Romani-Sponchiado; Investigation: Aline Romani-Sponchiado, Cíntia Pacheco Maia, Carol Nunes Torres; Methodology: Aline Romani-Sponchiado, Adriane Xavier Arteche; Project administration: Aline Romani-Sponchiado, Adriane Xavier Arteche; Supervision: Carol Nunes Torres, Adriane Xavier Arteche; Resources: Aline Romani-Sponchiado, Carol Nunes Torres; Visualization: Aline Romani-Sponchiado, Cíntia Pacheco Maia, Carol Nunes Torres, Inajá Tavares; Writing – original draft: Aline Romani-Sponchiado, Cíntia Pacheco Maia, Carol Nunes Torres, Inajá Tavares, Adriane Xavier Arteche; Writing – review & editing: Aline Romani-Sponchiado, Cíntia Pacheco Maia, Carol Nunes Torres, Inajá Tavares, Adriane Xavier Arteche.

Ethics declarations

Conflict of interest

The authors report no conflicts of interest.

Ethics approval

The questionnaire and methodology for this study were approved by the Human Research Ethics Committee of the Pontifical Catholic University of Rio Grande do Sul (Ethics approval number: 26068114000005336).

Consent to participate

Informed consent was obtained from all legal guardians.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Editor: Massimiliano Palmiero (University of Bergamo); Reviewers: Giulia Prete (University of Chieti and Pescara) and a second researcher who prefers to remain anonymous.

Romani-Sponchiado and Maia are currently affiliated with the Federal University of Rio Grande do Sul (UFRGS).

About this article

Cite this article

Romani-Sponchiado, A., Maia, C.P., Torres, C.N. et al. Emotional face expressions recognition in childhood: developmental markers, age and sex effect. Cogn Process 23, 467–477 (2022). https://doi.org/10.1007/s10339-022-01086-1

DOI: https://doi.org/10.1007/s10339-022-01086-1