Abstract

A decision maker often holds various viewpoints regarding the priorities of criteria, which complicates the decision-making process. To address this concern, a diversified AHP-tree approach is proposed in this study. In the proposed approach, the judgement matrix of a decision maker is decomposed into several subjudgement matrices, which are more consistent than the original judgement matrix and represent diverse viewpoints on the relative priorities of criteria. To this end, a nonlinear programming model was established, and a genetic algorithm was designed to optimize it. To assess the effectiveness of the diversified AHP-tree approach, it was applied to a supplier selection problem. The experimental results showed that the diversified AHP-tree approach enabled the selection of multiple diversified suppliers from a single judgement matrix. Furthermore, each supplier selected using the diversified AHP-tree approach was optimal from at least one viewpoint.


1 Introduction

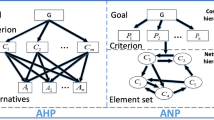

The analytic hierarchy process (AHP) is a well-established technique in multiple-criteria decision-making (MCDM) (Akgün and Erdal 2019; Chen et al. 2019; Dilawar et al. 2019; Wang et al. 2019). AHP is based on a decision maker's judgement matrix, which summarizes the results of pairwise comparisons of the relative priorities of criteria (Saaty 2008). However, since only two criteria are compared at a time, it is not easy to make all pairwise comparison results fully consistent (Benítez et al. 2011; Ami et al. 2018). An intuitive remedy is to ask the decision maker to modify his/her pairwise comparison results (Lin and Yang 1996), but such a treatment may go against his/her wishes. The more pairwise comparisons are made, the more difficult it is to maintain consistency. For this reason, Wedley (1993) recommended that a decision maker make only some of the pairwise comparisons and that the results of the others be estimated. Soliciting opinions from multiple decision makers is another viable way to enhance consistency (Moreno-Jiménez et al. 2008; Krivulin 2020). Xu (2000) and Escobar et al. (2004) applied the weighted geometric mean method to aggregate multiple decision makers' judgement matrices, and their experimental results showed that consistency improved after aggregation. Benítez et al. (2011) proposed an algorithm that automatically modifies a judgement matrix slightly to make it more consistent. Some studies suggested measuring consistency (or inconsistency) with different indicators (e.g., the geometric consistency index, the index of determinants, and the harmonic consistency index) to increase the likelihood that a judgement matrix is deemed consistent (Wedley 1993; Wang and Chen 2008; Business Performance Management Singapore 2013; Franek and Kresta 2014; Liu et al. 2017; Peláez et al. 2018). However, these treatments change the original rules of AHP, which may be unacceptable.
Therefore, there is still a need for a way to improve consistency without asking a decision maker to modify his/her pairwise comparison results, compromise with others, or change the method of measuring consistency (Lin and Chen 2019; Chen et al. 2020). To this end, a diversified AHP-tree approach is proposed in this study.

In the diversified AHP-tree approach, a decision maker is assumed to hold various views on a decision-making problem at the same time (Shen et al. 2019; Lin and Chen 2020), which leads to inconsistency among the pairwise comparison results. To address this issue, the decision maker's judgement matrix is decomposed into several subjudgement matrices, each representing a single viewpoint of the decision maker when comparing criteria. A subjudgement matrix should be more consistent than the original judgement matrix because there is no need to trade off various points of view. In addition, a subjudgement matrix can itself be further decomposed into subjudgement matrices. Ultimately, a judgement matrix is decomposed into a tree comprising numerous subjudgement matrices, each of which yields a different set of criterion priorities.

One possible concern about this study is that AHP has been extensively studied and is mature, which seems to leave little room for novelty. However, the diversified AHP-tree approach is novel in the following aspects:

(1) This study provides a novel interpretation of a decision maker's judgement matrix.

(2) Since different subjudgement matrices yield different priorities of criteria, it is easy to select multiple alternatives that are simultaneously optimal, each from a different viewpoint.

(3) In addition, the optimal alternatives selected using the diversified AHP-tree approach are diversified, which is also novel.

The rest of this article is organized as follows. Section 2 provides a preliminary introduction to the conventional AHP approach and details the proposed diversified AHP-tree approach. Section 3 describes the application of the diversified AHP-tree approach to a supplier selection problem to evaluate its effectiveness; several existing methods are also applied to this case for comparison. Section 4 concludes this study and suggests some topics for future research.

2 Diversified AHP-tree approach

The diversified AHP-tree approach proposed in this study decomposes a judgement matrix into several subjudgement matrices that represent diverse viewpoints on the relative priorities of criteria (Fig. 1). The decomposition process is repeated until a sufficient number of viewpoints have been manifested. The result is a tree-like structure of subjudgement matrices, termed a subjudgement tree or an AHP tree. The priorities of criteria are derived from each subjudgement matrix, and the best alternative is then selected based on these priorities. As a result, multiple alternatives that are simultaneously optimal from various viewpoints can be chosen. For example, Fig. 1 shows nine judgement and subjudgement matrices, from which up to nine best alternatives can be chosen.

The diversified AHP-tree approach includes the following steps:

Step 1 Construct a judgement matrix.

Step 2 Evaluate the consistency of the judgement matrix in terms of the consistency ratio (CR).

Step 3 Derive the priorities of criteria from the judgement matrix.

Step 4 If consistency is sufficiently high (i.e., CR < 0.1), go to Step 8; otherwise, go to Step 5.

Step 5 If the number of viewpoints is sufficient, go to Step 8; otherwise, decompose the least consistent judgement or subjudgement matrix.

Step 6 Evaluate the consistency of each subjudgement matrix.

Step 7 Derive the priorities of criteria from each subjudgement matrix. Return to Step 5.

Step 8 End.

Figure 2 shows a flowchart illustrating the procedure for implementing the diversified AHP-tree approach.
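The loop in Steps 1 to 8 can be sketched in Python as follows. This is a minimal sketch, not the MATLAB implementation used in this study: `cr` and `decompose` are hypothetical callbacks standing in for the consistency evaluation (Steps 2 and 6) and for solving Model NLP II, and reading Step 5 as "decompose the least consistent matrix that has not yet been decomposed" is an assumption.

```python
from typing import Callable, Hashable, List, Optional, Sequence


def grow_ahp_tree(
    root: Hashable,
    cr: Callable[[Hashable], float],
    decompose: Callable[[Hashable], Optional[Sequence[Hashable]]],
    max_views: int,
    threshold: float = 0.1,
) -> List[Hashable]:
    """Grow an AHP tree (Steps 1-8) and return its matrices, most consistent first."""
    nodes = [root]        # Steps 1-3: the original judgement matrix
    leaves = [root]       # matrices that may still be decomposed
    # Step 4: a sufficiently consistent judgement matrix needs no decomposition.
    if cr(root) < threshold:
        return nodes
    # Step 5: stop once enough viewpoints exist or nothing is left to decompose.
    while len(nodes) < max_views and leaves:
        worst = max(leaves, key=cr)      # least consistent undecomposed matrix
        leaves.remove(worst)
        children = decompose(worst)
        if not children:                 # no feasible decomposition; try another leaf
            continue
        # Steps 6-7: add the subjudgement matrices; inconsistent ones stay decomposable.
        nodes.extend(children)
        leaves.extend(c for c in children if cr(c) >= threshold)
    # Priority sets with lower CR are applied first.
    return sorted(nodes, key=cr)
```

With the CR values reported in Section 3 used as toy inputs and `max_views=7`, the sketch returns the seven matrices of the AHP tree ordered from most to least consistent.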

2.1 Preliminary: analytic hierarchy process

In AHP, a decision maker compares the relative priority (or weight) of a criterion over that of another using linguistic terms, such as “as equal as,” “weakly more important than,” “strongly more important than,” “very strongly more important than,” and “absolutely more important than.” These linguistic terms are generally mapped to integers within [1, 9] (Saaty 2008; Chen 2020):

L1 “As equal as” = 1.

L2 “Weakly more important than” = 3.

L3 “Strongly more important than” = 5.

L4 “Very strongly more important than” = 7.

L5 “Absolutely more important than” = 9.

If the relative priority lies between two successive linguistic terms, the intermediate values 2, 4, 6, and 8 can be used. Based on the pairwise comparison results provided by the decision maker, the judgement matrix A is created as follows:

where n is the number of criteria.

\(a_{ij}\) is the relative priority of criterion i over criterion j, selected using the aforementioned linguistic terms; \(a_{ij}\) is a positive comparison if \(a_{ij} \ge 1\). The eigenvalue and eigenvector of A, denoted by λ and x, respectively, satisfy the following:

and

where I is the identity matrix. The maximum eigenvalue of A and the priority of each criterion are derived as follows:

On the basis of \(\lambda_{\max}\), the consistency among pairwise comparison results can be evaluated in terms of the following indexes (Wu et al. 2020):

where RI is the random index (Saaty 2013) (Table 1). CI and CR should be less than 0.1 for a small AHP problem; for a large AHP problem, the requirement can be relaxed to less than 0.3.
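The quantities above can be computed with a short Python sketch. It assumes the standard relations CI = (λmax − n)/(n − 1) and CR = CI/RI, uses power iteration (which converges for positive matrices) in place of MATLAB's eig(), and assumes that Table 1 contains Saaty's commonly cited random index values; the function names are illustrative.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]

# Saaty's commonly cited random index (RI) values; assumed to match Table 1.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def judgement_matrix(n: int, comparisons: Dict[Tuple[int, int], float]) -> Matrix:
    """Build a reciprocal judgement matrix from a_ij values (0-based indices)."""
    a = [[1.0] * n for _ in range(n)]
    for (i, j), value in comparisons.items():
        if not 1 / 9 <= value <= 9:
            raise ValueError(f"a[{i}][{j}] = {value} is outside the 1/9..9 scale")
        a[i][j] = float(value)
        a[j][i] = 1.0 / value          # reciprocal property: a_ji = 1 / a_ij
    return a


def principal_eigen(a: Matrix, max_iter: int = 1000,
                    tol: float = 1e-12) -> Tuple[float, List[float]]:
    """Power iteration: for a positive matrix it converges to lambda_max and its eigenvector."""
    n = len(a)
    w = [1.0 / n] * n                  # start from equal priorities
    lam = float(n)
    for _ in range(max_iter):
        aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        lam = sum(aw)                  # sum(w) == 1, so sum(A w) estimates lambda_max
        new_w = [x / lam for x in aw]  # renormalize so the priorities sum to 1
        converged = max(abs(x - y) for x, y in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    return lam, w


def consistency(a: Matrix) -> Tuple[float, List[float], float, float]:
    """Return (lambda_max, priorities, CI, CR) for an n x n judgement matrix (n >= 2)."""
    n = len(a)
    lam, w = principal_eigen(a)
    ci = (lam - n) / (n - 1)
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0    # 2 x 2 reciprocal matrices are always consistent
    return lam, w, ci, cr
```

Applied to the three-criterion matrix of the encoding example in Section 2.2 (\(a_{12} = 2\), \(a_{13} = 5\), \(a_{32} = 3\)), the sketch gives λmax ≈ 3.468 and CR ≈ 0.40, matching the CR reported there.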

The arithmetic average method can be applied to estimate the values of priorities as follows (Mousavi et al. 2013):

Moreover, according to Eq. (4),

Thus, the maximal eigenvalue can be estimated as follows:

By substituting Eq. (11) into Eq. (7), CI can be estimated as follows:

In addition, the estimated CR is obtained by dividing the estimated CI by RI.
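A sketch of the arithmetic average approximation follows. Because the estimation equations are not reproduced here, it assumes the common variant (normalize each column of A, then average each row) and estimates λmax as the mean of \((\mathbf{A}w)_i / w_i\); the helper names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def approximate_priorities(a: Matrix) -> List[float]:
    """Arithmetic average method: normalize each column, then average each row."""
    n = len(a)
    col_sums = [sum(a[i][j] for i in range(n)) for j in range(n)]
    return [sum(a[i][j] / col_sums[j] for j in range(n)) / n for i in range(n)]


def approximate_consistency(a: Matrix, ri: float) -> Tuple[List[float], float, float, float]:
    """Estimate (priorities, lambda_max, CI, CR) without an eigen-decomposition."""
    n = len(a)
    w = approximate_priorities(a)
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(aw[i] / w[i] for i in range(n)) / n   # A w = lambda w, averaged over rows
    ci = (lam - n) / (n - 1)
    cr = ci / ri if ri > 0 else 0.0
    return w, lam, ci, cr
```

For a perfectly consistent matrix the estimates are exact (CI = 0). For the three-criterion example of Section 2.2, the estimated CR is about 0.42, slightly above the eigenvector-based value of about 0.40.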

2.2 The diversified AHP-tree approach

In the diversified AHP-tree approach, judgement matrix A is decomposed into several subjudgement matrices {A(k)|k = 1 ~ K} by applying the arithmetic average operator as follows:

where A(k) is the kth subjudgement matrix; K is the number of subjudgement matrices. Equation (14) is equivalent to

All subjudgement matrices satisfy the following basic requirements for a judgement matrix:

where det() returns the determinant of a matrix. Because it considers a single viewpoint at a time, a subjudgement matrix should be more consistent than the judgement matrix:

Applying Eq. (13) to Inequality (18) gives

or

Equation (20) is not an assumption but a constraint imposed when generating subjudgement matrices. In addition, although a subjudgement matrix represents a single viewpoint, each decomposition generates at least two subjudgement matrices, as illustrated in Fig. 1.
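For K = 2, the arithmetic-average decomposition leaves only one subjudgement matrix free. The Python sketch below assumes that Eq. (14) averages the positive comparisons (\(a_{ij} \ge 1\)) element by element and that reciprocals are filled in afterwards, so that \(a_{ij}(2) = 2a_{ij} - a_{ij}(1)\); the dictionary layout keyed by 0-based (i, j) pairs is an illustrative choice.

```python
from typing import Dict, List, Optional, Tuple

Pair = Tuple[int, int]          # (i, j) with 0-based indices and a_ij >= 1


def second_subjudgement(a: Dict[Pair, int], a1: Dict[Pair, int]) -> Optional[Dict[Pair, int]]:
    """For K = 2, derive A(2) from A(1) so that each a_ij is the mean of a_ij(1) and a_ij(2)."""
    a2 = {}
    for pair, value in a.items():
        v2 = 2 * value - a1[pair]       # (a_ij(1) + a_ij(2)) / 2 = a_ij
        if not 1 <= v2 <= 9:            # must remain a positive comparison on the 1-9 scale
            return None
        a2[pair] = v2
    return a2


def to_matrix(n: int, positive: Dict[Pair, int]) -> List[List[float]]:
    """Expand positive comparisons into a full reciprocal (sub)judgement matrix."""
    m = [[1.0] * n for _ in range(n)]
    for (i, j), v in positive.items():
        m[i][j] = float(v)
        m[j][i] = 1.0 / v
    return m
```

With the values from the encoding example in Section 2.2 (\(a_{12}(1) = 1\), \(a_{13}(1) = 7\), \(a_{32}(1) = 1\)), it yields \(a_{12}(2) = 3\), \(a_{13}(2) = 3\), and \(a_{32}(2) = 5\).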

However, the number of possible subjudgement matrices is large. In the proposed diversified AHP-tree approach, the subjudgement matrices that are farthest apart are generated first. For this purpose, the sum of the distances between any two subjudgement matrices is maximized:

where d() is the distance function. In this study, the Frobenius distance (Golub and Van Loan 1996) is adopted to measure the distance between two matrices as follows:

where

and

The aforementioned equation defines the conjugate transpose. When all elements of X are real values,

Thus,

Subjudgement matrices that are far from each other represent diverse viewpoints (Lin et al. 2019; Zhou and Bridges 2019).
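The Frobenius distance and the objective of Model NLP I can be sketched as follows for real-valued matrices, in which case the conjugate transpose reduces to the ordinary transpose. Summing over unordered pairs k < l is an assumption about how "any two" subjudgement matrices are counted.

```python
import math
from itertools import combinations
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def frobenius_distance(x: Matrix, y: Matrix) -> float:
    """d(X, Y) = ||X - Y||_F = sqrt(sum over i, j of (x_ij - y_ij)^2) for real matrices."""
    return math.sqrt(sum((xij - yij) ** 2
                         for xrow, yrow in zip(x, y)
                         for xij, yij in zip(xrow, yrow)))


def total_pairwise_distance(matrices: List[Matrix]) -> float:
    """Objective of Model NLP I: sum of d(A(k), A(l)) over all pairs k < l."""
    return sum(frobenius_distance(p, q) for p, q in combinations(matrices, 2))
```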

Subsequently, the following nonlinear programming (NLP) model is optimized to select the most diverse subjudgement matrices:

(Model NLP I)

subject to

The objective function is to maximize the sum of distances between any two subjudgement matrices. Constraint (28) is used to decompose the judgement matrix into K subjudgement matrices. Constraint (29) is used to satisfy the reciprocal property of a subjudgement matrix. In Constraint (30), the consistency of each subjudgement matrix should be higher than that of the original judgement matrix. Constraint (31) estimates the values of weights from a subjudgement matrix.

To facilitate the optimization of Model NLP I, it is converted into a more tractable model (Tsai and Chen 2013; Lin et al. 2018; Chen and Wang 2019; Hübner et al. 2020; Wang et al. 2020). First, the objective function involving square roots is replaced with the following linear and quadratic equations (Tsai and Chen 2014):

Subsequently, let

Moving the denominator to the left-hand side gives

In addition, Constraint (29) is equivalent to

Moreover, Constraint (30) is equivalent to

Finally, the following NLP problem is solved instead:

(Model NLP II)

subject to

A genetic algorithm (GA) is designed to solve Model NLP II. The encoding of a chromosome is illustrated in Fig. 3. In the original judgement matrix A, \(a_{12} = 2\), \(a_{13} = 5\), \(a_{32} = 3\), and CR = 0.40. A can be decomposed into two subjudgement matrices A(1) and A(2), in which \(a_{12}(1) = 1\), \(a_{13}(1) = 7\), and \(a_{32}(1) = 1\), while A(2) can be derived from A(1) according to Eq. (15).

Therefore, a single chromosome with three integer genes within [1, 9] suffices to represent this decomposition; A(1) is represented as 171. Constraint (40) is incorporated into the objective function as a penalty term to form the fitness function:

where M is a large positive value. Each population consists of 10 chromosomes. The roulette wheel method is applied to select parent chromosomes for pairing based on their fitness values. A single crossover point is chosen at random, and offspring chromosomes are generated by exchanging the parents' genes up to the crossover point. The crossover probability is 0.5. A gene is mutated by slightly incrementing or decrementing its value:

The mutation rate is 0.1. The stopping criteria are as follows:

(1) 100 generations have been produced.

(2) The improvement in the average population fitness has been less than 0.5.

(3) The improvement in the fitness of the best individual at a given generation has been less than 0.1.

The GA is implemented in MATLAB, whose eig() function is convenient for deriving the eigenvalues and eigenvectors of a judgement (or subjudgement) matrix.
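The GA operators described above can be sketched in Python. This is an illustrative sketch rather than the MATLAB code used in this study: genes are the positive comparisons of A(1) in the digit encoding of Fig. 3; validity requires both A(1) and the implied A(2) = 2A − A(1) to stay within [1, 9] (this K = 2 reading of Eq. (15) is an assumption); roulette weights are shifted so that penalized, possibly negative fitness values remain usable; and choosing uniformly among valid ±1 moves is an assumed way to keep mutants feasible.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def decode(chromosome: str) -> List[int]:
    """Fig. 3 digit encoding: "171" -> [a12(1), a13(1), a32(1)] = [1, 7, 1]."""
    return [int(ch) for ch in chromosome]


def is_valid(genes: Sequence[int], original: Sequence[int]) -> bool:
    """A(1) and the implied A(2) = 2A - A(1) must both stay within [1, 9]."""
    return all(1 <= g <= 9 and 1 <= 2 * a - g <= 9 for g, a in zip(genes, original))


def roulette_select(population: Sequence[T], fitness: Sequence[float],
                    rng: random.Random) -> T:
    """Roulette wheel selection on fitness shifted to be non-negative."""
    low = min(fitness)
    weights = [f - low + 1e-9 for f in fitness]   # penalized fitness can be negative
    return rng.choices(population, weights=weights, k=1)[0]


def crossover(p1: List[int], p2: List[int], point: int):
    """Single-point crossover: the parents exchange their genes before `point`."""
    return p2[:point] + p1[point:], p1[:point] + p2[point:]


def mutate(genes: List[int], original: Sequence[int], rng: random.Random) -> List[int]:
    """Add or subtract 1 from one gene, choosing only among moves that stay valid."""
    moves = [(i, step) for i in range(len(genes)) for step in (-1, 1)
             if is_valid(genes[:i] + [genes[i] + step] + genes[i + 1:], original)]
    child = list(genes)
    if moves:
        i, step = rng.choice(moves)
        child[i] += step
    return child
```

Because each gene position is validated independently, single-point crossover of two valid parents always produces valid offspring.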

Model NLP II can be applied to decompose the original judgement matrix or any subjudgement matrix. The decomposition process terminates when either of the following conditions is met:

(1) No further decomposition is possible, e.g., all subjudgement matrices are completely consistent, or no feasible solution can be found for Model NLP II.

(2) A sufficient number of priority sets have been generated.

The priority sets with higher consistency (i.e., lower values of CR) will be applied earlier.

Model NLP II is not a convex problem, so determining its global optimal solution is not always easy. However, since \(a_{ij}(k)\) takes positive integer values from 1 to 9 for a positive comparison, the number of feasible solutions to Model NLP II is finite. An enumeration procedure can therefore be performed to obtain the global optimal solution of Model NLP II when the problem scale is not extremely large.
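A brute-force version of the enumeration procedure for K = 2 is sketched below. It assumes the reading of Eq. (15) as \(a_{ij}(2) = 2a_{ij} - a_{ij}(1)\), Saaty's random index values for RI, power iteration for λmax, and Constraint (30) as CR(A(k)) < CR(A); the function names are hypothetical.

```python
import math
from itertools import product
from typing import Dict, List, Optional, Tuple

Pair = Tuple[int, int]
Matrix = List[List[float]]
Solution = Tuple[float, Dict[Pair, int], Dict[Pair, int], float, float]
RI = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24}   # Saaty's random index (assumed Table 1 values)


def to_matrix(n: int, positive: Dict[Pair, int]) -> Matrix:
    """Expand positive comparisons into a full reciprocal matrix."""
    m = [[1.0] * n for _ in range(n)]
    for (i, j), v in positive.items():
        m[i][j], m[j][i] = float(v), 1.0 / v
    return m


def cr(m: Matrix, iterations: int = 500) -> float:
    """Consistency ratio, with lambda_max obtained by power iteration (n >= 3)."""
    n = len(m)
    w = [1.0 / n] * n
    lam = float(n)
    for _ in range(iterations):
        aw = [sum(m[i][j] * w[j] for j in range(n)) for i in range(n)]
        lam = sum(aw)
        w = [x / lam for x in aw]
    return (lam - n) / (n - 1) / RI[n]


def distance(x: Matrix, y: Matrix) -> float:
    """Frobenius distance between two real matrices."""
    return math.sqrt(sum((p - q) ** 2 for rx, ry in zip(x, y) for p, q in zip(rx, ry)))


def enumerate_two_way(n: int, a: Dict[Pair, int]) -> Optional[Solution]:
    """Return (Z, A(1), A(2), CR(A(1)), CR(A(2))) maximizing d(A(1), A(2)), or None."""
    pairs = list(a)
    base_cr = cr(to_matrix(n, a))
    # Values of a_ij(1) that keep a_ij(2) = 2 a_ij - a_ij(1) on the 1-9 scale.
    ranges = [[g for g in range(1, 10) if 1 <= 2 * a[p] - g <= 9] for p in pairs]
    best = None
    for genes in product(*ranges):
        a1 = dict(zip(pairs, genes))
        a2 = {p: 2 * a[p] - a1[p] for p in pairs}
        m1, m2 = to_matrix(n, a1), to_matrix(n, a2)
        cr1, cr2 = cr(m1), cr(m2)
        if cr1 < base_cr and cr2 < base_cr:      # Constraint (30): both more consistent
            z = distance(m1, m2)
            if best is None or z > best[0]:
                best = (z, a1, a2, cr1, cr2)
    return best
```

For the three-criterion example of Section 2.2, only 135 candidate A(1) matrices need to be checked. The decomposition of Fig. 3 is among the feasible ones, so the optimum found is at least as far apart.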

3 Application to a supplier selection problem

The supplier selection problem discussed in Lima Junior et al. (2014) was used to illustrate the applicability of the proposed diversified AHP-tree approach. According to de Boer et al. (2001), existing supplier selection methods can be classified into five categories: linear weighting methods, total cost of ownership methods, mathematical programming methods, statistical methods, and artificial intelligence methods. AHP and its variants are linear weighting methods and are among the most widely used methods for supplier selection (Kahraman et al. 2003; Chan et al. 2008; Kilincci and Onal 2011; Deng et al. 2014; Dweiri et al. 2016; Sirisawat and Kiatcharoenpol 2018; Wang and Chen 2019; Chen et al. 2021).

In the supplier selection problem, the performance of a supplier was assessed in terms of five criteria, namely quality, price, delivery, supplier profile, and supplier relationship. Lima Junior et al. (2014) employed a fuzzy extent analysis (FEA)-based fuzzy AHP approach to solve this problem, in which all pairwise comparison results were given in fuzzy numbers.

In this problem, a decision maker constructed the following judgement matrix:

The CR of A was 0.154, indicating that A was inconsistent. The priorities of criteria were {0.451, 0.037, 0.099, 0.314, 0.099}. To decompose the judgement matrix into two subjudgement matrices, Model NLP II of the problem was solved using the GA in MATLAB on a personal computer (PC) with a 3.6 GHz Intel i7-7700 central processing unit and 8 GB of random access memory, and the optimal solution was obtained as follows:

\(Z^{*} = 10.630\); \(CR(\mathbf{A}^{*}(1)) = 0.147\); and \(CR(\mathbf{A}^{*}(2)) = 0.151\). The execution time was 12.14 s. In the GA, each population consisted of 10 chromosomes, each encoding a possible value of \(\mathbf{A}^{*}(1)\). For each chromosome, the corresponding value of \(\mathbf{A}^{*}(2)\) could be derived according to Eq. (15); therefore, \(\mathbf{A}^{*}(2)\) was not included in the chromosomes. The initial population was randomly generated, as illustrated in Table 2. For example, the first chromosome of the initial population corresponded to the following subjudgement matrix:

Then, according to Eq. (15), the corresponding \( {\mathbf{A}}^{*} (2)\) was derived as

The values of the GA parameters were determined using the parameter tuning approach (Boyabatli and Sabuncuoglu 2004; Hassanat et al. 2019); i.e., various values of a parameter were tried, and the one giving the best result was chosen before the final run of the GA. For example, Fig. 4 shows the effect of the crossover probability on the best fitness when the other parameters were fixed. In this experiment, when the crossover probability was set to 0.4, the GA achieved the highest fitness very quickly; therefore, the crossover probability was set to this value.

In addition, the mutation rate was set to 0.1, meaning that the probability of mutating a chromosome (subjudgement matrix) was 0.1. If a chromosome was selected for mutation, one of its genes was incremented or decremented by one; otherwise, the chromosome was unchanged (see Fig. 5).

Although such a small-scale mutation seemed to slow progress toward the global optimal solution, it helped prevent a subjudgement matrix from becoming invalid. For example, subtracting 2 from a gene with a value of 2 would have made it invalid, since all genes had to be within [1, 9]; likewise, adding 2 to a gene with a value of 8 would have made it invalid.

The evolution process was repeated 50 times, each with at most 100 generations, as illustrated in Fig. 6. In most evolution processes, the best fitness did not change after 20 generations, while the average fitness continued to improve, although the improvement became negligible (i.e., less than 0.5) after about 30 generations.

The subjudgement matrices were more consistent than the original judgement matrix. The priorities of criteria determined using the two subjudgement matrices were {0.279, 0.056, 0.101, 0.459, 0.105} and {0.555, 0.028, 0.098, 0.222, 0.097}, respectively.

Since \(\mathbf{A}^{*}(1)\) was not sufficiently consistent, it was further decomposed as follows:

\(Z^{*} = 10.670 \); \(CR({\mathbf{A}}^{*} (1 - 1)) = 0.135 \); and \( CR({\mathbf{A}}^{*} (1 - 2)) = 0.143\). The priorities of criteria determined were {0.275, 0.106, 0.100, 0.456, 0.063} and {0.233, 0.039, 0.100, 0.494, 0.134}, respectively. Similarly, \( {\mathbf{A}}^{*} (2)\) was further decomposed as follows:

\(Z^{*} = 13.047\); \(CR(\mathbf{A}^{*}(2 - 1)) = 0.150\); and \(CR(\mathbf{A}^{*}(2 - 2)) = 0.140\). The priorities of criteria determined were {0.362, 0.027, 0.122, 0.393, 0.096} and {0.641, 0.031, 0.088, 0.120, 0.120}, respectively. Figure 7 summarizes the resulting AHP tree. The decomposition process stopped at the third level because seven judgement (or subjudgement) matrices were considered sufficient, although further decompositions were still possible. These seven matrices generated seven sets of priorities, enabling the selection of multiple suppliers that were simultaneously optimal from different viewpoints.

Table 3 summarizes the performances of six possible suppliers on the various criteria. All performances were scored on an integer scale from 1 to 10, and two of these suppliers were to be selected. Table 4 summarizes the selections based on the various viewpoints; Suppliers #1 and #2 were the optimal choices.

Table 5 shows the results of the conventional AHP approach. Suppliers #2 and #6 were selected, which differed from those selected using the diversified AHP-tree approach. Moreover, of the two suppliers selected using the conventional AHP approach, only Supplier #2 was optimal. By contrast, the diversified AHP-tree approach selected Suppliers #2 and #1, each of which was optimal from a different viewpoint.

Another existing method, the ordered weighted average (OWA) method (Yager and Kacprzyk 2012; Chiu and Chen 2021), was applied to this problem for comparison. In OWA, the performances of a supplier on the various criteria are sorted before aggregation, and the weights assigned to the sorted performances depend on the decision strategy (Table 6). Table 7 summarizes the results obtained using the OWA method. When the pessimistic decision strategy was adopted, the result obtained using the OWA method was close to that obtained using the diversified AHP-tree approach. However, the OWA method yielded only a single optimal supplier, i.e., Supplier #2.
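For reference, Yager's OWA operator can be sketched as follows: performances are sorted in descending order, and the j-th weight is applied to the j-th largest value, so weights concentrated at the front reflect an optimistic strategy and weights at the back a pessimistic one. The function does not encode the specific weights of Table 6; any weight vector passed to it is the caller's choice.

```python
from typing import Sequence


def owa(performances: Sequence[float], weights: Sequence[float]) -> float:
    """Ordered weighted average: weights apply to positions after sorting, largest first."""
    if len(performances) != len(weights):
        raise ValueError("one weight per criterion is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("OWA weights should sum to 1")
    ordered = sorted(performances, reverse=True)
    return sum(w * p for w, p in zip(weights, ordered))
```

For example, with weights (1, 0, 0) the OWA score is a supplier's best performance, and with (0, 0, 1) it is the worst.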

The third existing method compared was the measuring attractiveness by a categorical based evaluation technique (MACBETH) (Bana e Costa et al. 2005). MACBETH is similar to AHP in that both methods are based on pairwise comparisons made by a decision maker. However, MACBETH uses an interval scale, whereas AHP adopts a ratio scale, and the calculation process of MACBETH differs from that of AHP. For the supplier selection problem, MACBETH solved the quadratic programming (QP) problem in Fig. 8 to derive the priorities of criteria. The results were \(w_{i}^{*}\) = {0.359, 0.000, 0.186, 0.309, 0.145}. The top-performing supplier was Supplier #6, followed by Supplier #3. These results were similar to those obtained using OWA with the optimistic strategy but differed from those obtained using the conventional AHP approach or the diversified AHP-tree approach.

The experimental results indicated the following:

(1) Although the results of several methods were compared, determining the best method was not easy; nevertheless, the differences among their results were evident. The selection of diversified optimal suppliers was possible only when the diversified AHP-tree approach was applied. This capability stems not from numerous ties among supplier performances but from the various viewpoints considered by the decision maker.

(2) Selecting suppliers based on the priority sets with higher consistency (i.e., lower values of CI) was preferred.

(3) Continuous decomposition of a judgement (or subjudgement) matrix is not always possible. For example, if the decomposition results include a completely consistent matrix, where CR is 0, further decomposition may aggravate CR. Furthermore, an element with an extreme value (i.e., 1 or 9) cannot be decomposed, so a judgement (or subjudgement) matrix whose elements all take such values cannot be decomposed.

(4) If three priority sets were to be generated, decomposing the judgement matrix into two subjudgement matrices twice, thereby generating four subjudgement matrices, may be more efficient than decomposing it directly into three subjudgement matrices (Fig. 9). For example, there are only two ways to decompose a matrix element \(a_{ij} = 3\) into two subjudgement values, i.e., {1, 5} and {2, 4}, and applying such a two-way decomposition twice yields four matrices. By contrast, there are six ways to decompose the same element into three subjudgement values, i.e., {1, 1, 7}, {1, 2, 6}, {1, 3, 5}, {1, 4, 4}, {2, 2, 5}, and {2, 3, 4}. Table 8 summarizes the results for different values of \(a_{ij}\). The concern becomes severe when all matrix elements are considered, because the numbers of ways multiply. For example, assume

$$\mathbf{A} = \left[\begin{array}{ccc} 1 & 2 & 5 \\ 1/2 & 1 & 1/3 \\ 1/5 & 3 & 1 \end{array}\right]$$

The elements \(a_{12} = 2\), \(a_{13} = 5\), and \(a_{32} = 3\) can be decomposed into three values in 2, 12, and 6 ways, respectively. Then 2 × 12 × 6 = 144, and thus there are 144 ways to decompose A into three subjudgement matrices.

Table 8 The number of possible ways to decompose a judgement matrix by considering a single matrix element

(5) The effectiveness and efficiency of the GA were compared with those of an enumeration procedure that evaluated all feasible solutions. The comparison results are summarized in Table 9. In most decompositions, the GA maximized the distance between subjudgement matrices efficiently. The enumeration procedure achieved the same purpose in all decompositions, but its efficiency could be very low when the number of feasible solutions was large.

Table 9 Comparison between the GA algorithm and an enumeration procedure

(6) To further demonstrate the effectiveness of the diversified AHP-tree approach, it was also applied to another case (Chen et al. 2020), in which six factors critical to the robustness of a factory amid the COVID-19 pandemic were compared: COVID-19 containment performance, pandemic severity, vaccine acquisition speed, demand shrinkage, supplier impact, and infection risk. A decision maker constructed the following judgement matrix:

$$\mathbf{A} = \left[\begin{array}{cccccc} 1 & 1/3 & 1/4 & 4 & 5 & 5 \\ 3 & 1 & 1/5 & 1/3 & 4 & 2 \\ 4 & 5 & 1 & 3 & 5 & 5 \\ 1/4 & 3 & 1/3 & 1 & 5 & 5 \\ 1/5 & 1/4 & 1/5 & 1/5 & 1 & 3 \\ 1/5 & 1/2 & 1/5 & 1/5 & 1/3 & 1 \end{array}\right]$$

Here, \(CR(\mathbf{A}) = 0.223\), which was somewhat inconsistent. To enhance consistency, the diversified AHP-tree approach was applied to decompose A into at most five more consistent subjudgement matrices. The results are shown in Fig. 10. The AHP tree had several implications; for example, the priority of “vaccine acquisition speed” ranged from 0.203 to 0.511, depending on the viewpoint of the decision maker.
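The counts in item (4) can be checked by enumeration. The sketch below assumes, as the examples in item (4) suggest, that a positive comparison \(a_{ij}\) is split into k integer values within [1, 9] whose arithmetic mean is \(a_{ij}\), counted as unordered sets and excluding the trivial split in which every value equals \(a_{ij}\); the function name is hypothetical.

```python
from itertools import combinations_with_replacement
from math import prod
from typing import List, Tuple


def decompositions(a: int, k: int) -> List[Tuple[int, ...]]:
    """Multisets of k integers in [1, 9] whose mean is a, excluding the all-a split."""
    return [c for c in combinations_with_replacement(range(1, 10), k)
            if sum(c) == k * a and c != (a,) * k]


# Three-way splits of the elements a12 = 2, a13 = 5, a32 = 3 multiply across elements.
ways = prod(len(decompositions(a, 3)) for a in (2, 5, 3))
```

It returns {1, 5} and {2, 4} for \(a_{ij} = 3\) and k = 2, finds 2, 12, and 6 three-way splits for the elements 2, 5, and 3 of the example matrix (hence 144 in total), and finds no splits for the extreme values 1 and 9, consistent with item (3).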

4 Conclusions

A decision maker must make many tradeoffs when comparing criteria in pairs. Because such tradeoffs are subjective and unavoidably conflicting, the judgement matrix is often inconsistent. To overcome this problem, the proposed diversified AHP-tree approach decomposes an inconsistent judgement matrix into several subjudgement matrices that are more consistent than the original judgement matrix. These subjudgement matrices represent multiple viewpoints of the decision maker during the pairwise comparison process, and the best-performing alternative from each viewpoint can be selected. Therefore, the decision maker can simultaneously select multiple alternatives, each of which is optimal from a different viewpoint; this is the novelty of the diversified AHP-tree approach.

The diversified AHP-tree approach was applied to a supplier selection problem to demonstrate its applicability and effectiveness. According to the experimental results,

(1) Generating multiple viewpoints from a single judgement matrix of a single decision maker was possible using the diversified AHP-tree approach.

(2) The subjudgement matrices were more consistent than the original judgement matrix.

(3) Two suppliers, each optimal from a different viewpoint, were selected using the proposed diversified AHP-tree approach. By contrast, the conventional methods could select only a single optimal supplier.

Numerous variants of the diversified AHP-tree approach can be developed in the future. For example, a judgement matrix can be modeled as the geometric mean, rather than the arithmetic mean, of its subjudgement matrices. Furthermore, a more efficient approach to decomposing a judgement matrix can be proposed. The problem also becomes more complicated when multiple decision makers are involved. These directions can be explored in future studies.

References

Akgün İ, Erdal H (2019) Solving an ammunition distribution network design problem using multi-objective mathematical modeling, combined AHP-TOPSIS, and GIS. Comput Ind Eng 129:512–528

Ami D, Aprahamian F, Chanel O, Luchini S (2018) When do social cues and scientific information affect stated preferences? Insights from an experiment on air pollution. J Choice Model 29:33–46

Bana e Costa C, Corte JM, Vansnick JC (2005) On the mathematical foundation of MACBETH. In: Figueira J, Greco S, Ehrgott M (eds) Multiple criteria decision analysis: state of the art surveys. Springer, New York, pp 409–437

Benítez J, Delgado-Galván X, Gutiérrez JA, Izquierdo J (2011) Balancing consistency and expert judgment in AHP. Math Comput Model 54(7–8):1785–1790

Boyabatli O, Sabuncuoglu I (2004) Parameter selection in genetic algorithms. J Syst Cybern Inform 4(2):78

Business Performance Management Singapore (2013) AHP—high consistency ratio. https://bpmsg.com/ahp-high-consistency-ratio/

Chan FT, Kumar N, Tiwari MK, Lau HC, Choy KL (2008) Global supplier selection: a fuzzy-AHP approach. Int J Prod Res 46(14):3825–3857

Chen TCT (2020) Guaranteed-consensus posterior-aggregation fuzzy analytic hierarchy process method. Neural Comput Appl 32:7057–7068

Chen TCT, Wang YC (2019) An incremental learning and integer-nonlinear programming approach to mining users’ unknown preferences for ubiquitous hotel recommendation. J Ambient Intell Humaniz Comput 10(7):2771–2780

Chen TCT, Wang YC, Lin YC, Wu HC, Lin HF (2019) A fuzzy collaborative approach for evaluating the suitability of a smart health practice. Mathematics 7(12):1180

Chen T, Wang YC, Chiu MC (2020) Assessing the robustness of a factory amid the COVID-19 pandemic: a fuzzy collaborative intelligence approach. Healthcare 8(4):481

Chen T, Wang Y-C, Wu H-C (2021) Analyzing the impact of vaccine availability on alternative supplier selection amid the COVID-19 pandemic: a cFGM-FTOPSIS-FWI approach. Healthcare 9(1):71

Chiu M-C, Chen T (2021) Assessing mobile and smart technology applications to active and healthy ageing using a fuzzy collaborative intelligence approach. Cogn Comput 13:431–446

de Boer L, Labro E, Morlacchi P (2001) A review of methods supporting supplier selection. Eur J Purch Supply Manag 7(2):75–89

Deng X, Hu Y, Deng Y, Mahadevan S (2014) Supplier selection using AHP methodology extended by D numbers. Expert Syst Appl 41(1):156–167

Dilawar SM, Durrani DK, Li X, Anjum MA (2019) Decision-making in highly stressful emergencies: the interactive effects of trait emotional intelligence. Curr Psychol. https://doi.org/10.1007/s12144-019-00231-y

Dweiri F, Kumar S, Khan SA, Jain V (2016) Designing an integrated AHP based decision support system for supplier selection in automotive industry. Expert Syst Appl 62:273–283

Escobar MT, Aguarón J, Moreno-Jiménez JM (2004) A note on AHP group consistency for the row geometric mean priorization procedure. Eur J Oper Res 153(2):318–322

Franek J, Kresta A (2014) Judgment scales and consistency measure in AHP. Proc Econ Finance 12:164–173

Golub GH, Van Loan CF (1996) Matrix computations. Johns Hopkins University Press, Baltimore

Hassanat A, Almohammadi K, Alkafaween E, Abunawas E, Hammouri A, Prasath VB (2019) Choosing mutation and crossover ratios for genetic algorithms—a review with a new dynamic approach. Information 10(12):390

Hübner J, Schmidt M, Steinbach MC (2020) Optimization techniques for tree-structured nonlinear problems. Comput Manag Sci 17:1–28

Kahraman C, Cebeci U, Ulukan Z (2003) Multi-criteria supplier selection using fuzzy AHP. Logist Inf Manag 16(6):382–394

Kilincci O, Onal SA (2011) Fuzzy AHP approach for supplier selection in a washing machine company. Expert Syst Appl 38(8):9656–9664

Krivulin N (2020) Using tropical optimization techniques in bi-criteria decision problems. Comput Manag Sci 17(1):79–104

Lima Junior FR, Osiro L, Carpinetti LCR (2014) A comparison between fuzzy AHP and fuzzy TOPSIS methods to supplier selection. Appl Soft Comput 21:194–209

Lin YC, Chen T (2019) An advanced fuzzy collaborative intelligence approach for fitting the uncertain unit cost learning process. Complex Intell Syst 5(3):303–313

Lin YC, Chen T (2020) A multibelief analytic hierarchy process and nonlinear programming approach for diversifying product designs: smart backpack design as an example. Proc Inst Mech Eng Part B J Eng Manuf 234(6–7):1044–1056

Lin ZC, Yang CB (1996) Evaluation of machine selection by the AHP method. J Mater Process Technol 57(3–4):253–258

Lin YC, Chen T, Wang LC (2018) Integer nonlinear programming and optimized weighted-average approach for mobile hotel recommendation by considering travelers’ unknown preferences. Oper Res Int J 18(3):625–643

Lin YC, Wang YC, Chen TCT, Lin HF (2019) Evaluating the suitability of a smart technology application for fall detection using a fuzzy collaborative intelligence approach. Mathematics 7(11):1097

Liu F, Peng Y, Zhang W, Pedrycz W (2017) On consistency in AHP and fuzzy AHP. J Syst Sci Inf 5(2):128–147

Moreno-Jiménez JM, Aguarón J, Escobar MT (2008) The core of consistency in AHP-group decision making. Group Decis Negot 17(3):249–265

Mousavi SM, Tavakkoli-Moghaddam R, Heydar M, Ebrahimnejad S (2013) Multi-criteria decision making for plant location selection: an integrated Delphi–AHP–PROMETHEE methodology. Arab J Sci Eng 38(5):1255–1268

Peláez JI, Martínez EA, Vargas LG (2018) Consistency in positive reciprocal matrices: an improvement in measurement methods. IEEE Access 6:25600–25609

Saaty TL (2008) Decision making with the analytic hierarchy process. Int J Serv Sci 1(1):83–98

Saaty TL (2013) Analytic network process. Springer, Berlin

Shen W, Yuan Y, Yi B, Liu C, Zhan H (2019) A theoretical and critical examination on the relationship between creativity and morality. Curr Psychol 38(2):469–485

Sirisawat P, Kiatcharoenpol T (2018) Fuzzy AHP-TOPSIS approaches to prioritizing solutions for reverse logistics barriers. Comput Ind Eng 117:303–318

Tsai HR, Chen T (2013) A fuzzy nonlinear programming approach for optimizing the performance of a four-objective fluctuation smoothing rule in a wafer fabrication factory. J Appl Math 2013:1–15

Tsai HR, Chen T (2014) Enhancing the sustainability of a location-aware service through optimization. Sustainability 6(12):9441–9455

Wang TC, Chen YH (2008) Applying fuzzy linguistic preference relations to the improvement of consistency of fuzzy AHP. Inf Sci 178(19):3755–3765

Wang YC, Chen TCT (2019) A partial-consensus posterior-aggregation FAHP method—supplier selection problem as an example. Mathematics 7(2):179

Wang YC, Chen T, Yeh YL (2019) Advanced 3D printing technologies for the aircraft industry: a fuzzy systematic approach for assessing the critical factors. Int J Adv Manuf Technol 105(10):4059–4069

Wang YC, Chiu MC, Chen T (2020) A fuzzy nonlinear programming approach for planning energy-efficient wafer fabrication factories. Appl Soft Comput 95:106506

Wedley WC (1993) Consistency prediction for incomplete AHP matrices. Math Comput Model 17(4–5):151–161

Wu HC, Chen T, Huang CH (2020) A piecewise linear FGM approach for efficient and accurate FAHP analysis: smart backpack design as an example. Mathematics 8(8):1319

Xu Z (2000) On consistency of the weighted geometric mean complex judgement matrix in AHP. Eur J Oper Res 126(3):683–687

Yager RR, Kacprzyk J (2012) The ordered weighted averaging operators: theory and applications. Springer, Berlin

Zhou M, Bridges JF (2019) Explore preference heterogeneity for treatment among people with type 2 diabetes: a comparison of random-parameters and latent-class estimation techniques. J Choice Model 30:38–49

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Not required.

About this article

Cite this article

Chen, T. A diversified AHP-tree approach for multiple-criteria supplier selection. Comput Manag Sci 18, 431–453 (2021). https://doi.org/10.1007/s10287-021-00397-6

DOI: https://doi.org/10.1007/s10287-021-00397-6