Abstract

Cancerous skin lesions are among the deadliest diseases because they can spread to other body parts and organs. Conventionally, visual inspection and biopsy are widely used to detect skin cancers. However, these methods have drawbacks, and their predictions are not always highly accurate. This motivates a dependable automatic recognition system for skin cancers. Given the extensive use of deep learning in many areas of medical health, this work proposes a computer-aided dermatology tool for the accurate identification and classification of skin lesions. The tool is built on a novel deep convolutional neural network (DCNN) model that combines global average pooling with preprocessing to discern skin lesions. The proposed model is trained and tested on the HAM10000 dataset, which contains seven classes of skin lesions as targets. In the preprocessing stage, black hat filtering is applied to remove artifacts, and resampling techniques are used to balance the data. The proposed model is evaluated against transfer learning models such as ResNet50, VGG-16, MobileNetV2, and DenseNet121. It achieves an accuracy of 97.20%, the highest among previous state-of-the-art models for multi-class skin lesion classification. The efficacy of the proposed model is also validated by visualizing its results in a graphical user interface (GUI).


Introduction

Cancer is one of the deadliest diseases. It arises from the uncontrolled growth of body cells in the affected region, which can spread and damage other parts of the body by replacing normal cells with cancerous cells. According to the World Health Organization (WHO), cancer is a leading cause of death worldwide, accounting for nearly 10 million deaths in 2020, or about one in six deaths [1]. Skin cancer is one of the most common cancers worldwide. Skin cancer begins in the epidermis, the top layer of the skin [2], which consists of cells such as basal cells, squamous cells, and melanocytes. Cancer that forms in the squamous cells of the skin is called squamous cell carcinoma (SCC). Most squamous cell carcinomas of the skin are caused by prolonged exposure to ultraviolet radiation. Although it is usually not life-threatening, squamous cell carcinoma can cause serious complications if left untreated [3].

Basal cell carcinoma (BCC) occurs when mutations in the basal cells, located in the base layer of the epidermis, cause them to grow rapidly and keep multiplying. This may result in a tumor that appears as a lesion on the skin; BCC is caused by prolonged exposure to ultraviolet (UV) radiation from sunlight [4]. BCCs often appear as a slightly transparent bump or a sore that does not heal, but they may also take other forms, such as blue, brown, or black lesions, flat scaly patches, and waxy white scar-like lesions. Complications of this cancer include an increased risk of other types of cancer, spread of the cancer beyond the skin, and a risk of recurrence [5].

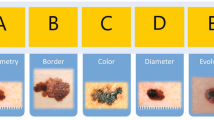

Melanoma, the deadliest type of skin cancer, arises when something goes wrong in melanocytes, the melanin-producing cells of the skin. Melanomas, also called black tumors, can develop anywhere on the body but most commonly develop in areas that are frequently exposed to the sun. Approximately 30% of melanomas begin in moles, and delayed treatment can sometimes lead to death. Besides these, there are less common skin cancers such as Merkel cell carcinoma, Kaposi sarcoma, and sebaceous gland carcinoma [6]. BCC and SCC, the two most common types of skin cancer, together make up non-melanoma skin cancer. According to the Skin Cancer Foundation, around 5400 people die of non-melanoma skin cancer worldwide every month. The foundation also estimates that 3.6 million cases of basal cell carcinoma and 1.8 million cases of squamous cell carcinoma are diagnosed each year in the USA, and it expects a 6.5% increase in melanoma deaths. When these cancers are detected early, most of them can be cured completely; even melanoma has a 5-year survival rate of up to 99% when detected early. Biopsy and imaging tests are the two most common tests used in diagnosing skin cancer. Although biopsy has a comparatively high success rate, some constraints must be considered during and after the procedure [7].

With advances in computer-aided intelligent diagnosis tools and the availability of large amounts of labeled data, it has become easier to understand and interpret various types of skin cancer. Many machine learning (ML) and deep learning (DL) models can be deployed to extract features such as color, size, shape, and texture to predict cancers [8, 9]. To avoid manual, hand-crafted feature extraction, many DL algorithms, including CNNs, transfer learning models, LSTMs, and RNNs, have been employed in skin cancer detection. In this work, we apply a deep CNN model with global average pooling and preprocessing to enable better multi-class classification of skin lesions. The key contributions of this study are:

- The design of a novel deep convolutional neural network (DCNN) model that detects and classifies skin cancer more precisely at an early stage.
- A comparison of the outcomes of the proposed DCNN model with previous state-of-the-art models.
- An evaluation of the performance of the proposed model against existing transfer learning models such as VGG-16, ResNet50, DenseNet121, and MobileNetV2.
- The development of a user interface to assess the efficacy of the DCNN model in obtaining the best classification accuracy.

Related Works

Over the years, deep learning (DL)–based models have proven their efficiency in the medical field, especially in predicting and classifying diseases from medical images using convolutional neural networks (CNNs). Calderon et al. [10] proposed a bilinear CNN composed of the ResNet50 and VGG16 architectures to perform skin lesion classification on the HAM10000 dataset with comparatively high accuracy and low computational cost. This framework combines techniques such as data augmentation, transfer learning, and fine-tuning to increase performance. The proposed work achieved an accuracy of 0.9321, 2.7% higher than state-of-the-art methods, with good results on the other metrics as well. In [11], the authors developed a lightweight model to classify skin cancers in support of medical care. To enable remote diagnosis, they deployed their model on mobile devices as well as cloud platforms. On the KCGMH (Kaohsiung Chang Gung Memorial Hospital) dataset, their model achieved a binary classification accuracy of 89.5% and a five-class classification accuracy of 72.1%, while the same model gave a seven-class classification accuracy of 85.8% on the HAM10000 dataset. A deep CNN model for accurately classifying benign and malignant lesions was proposed in [12] and compared with transfer learning models such as ResNet, MobileNet, AlexNet, DenseNet, and VGG16. Preprocessing techniques such as noise and artifact removal, normalization of the input data, feature extraction, and data resampling were performed on the HAM10000 dataset. The proposed model obtained a comparatively high training accuracy of 93.16% and a testing accuracy of 91.93%.

In [13], existing state-of-the-art models such as MobileNetV2, DenseNet201, GoogleNet, Inception-ResNetV2, and InceptionV3 were used to create plain as well as hierarchical classifiers. The results showed that the plain model performed better than the hierarchical model. In [14], CNNs were combined with hyperparameter tuning, and a detailed comparative analysis of the ResNet50 and MobileNet architectures was performed. Another CNN architecture was proposed in [15] for classifying the HAM10000 dataset and achieved a classification accuracy of 91.51%. This model was integrated into a web-based application and evaluated in two phases, with validation by seven expert dermatologists.

A fully automated computer-aided deep learning framework was proposed in [16]. It uses a decorrelation formulation for data preprocessing, Mask R-CNN for segmentation, and a DenseNet model for feature extraction; the resulting feature vector is then passed to an entropy-controlled least squares SVM (LS-SVM). In [17], the authors built their model in Deep Learning Studio (DLS), a model-driven architecture tool that provides neural network modeling components which can be dragged and dropped as required. They built their own CNN model in DLS, obtaining an accuracy of 71.17% in training and 71.41% in validation, and compared it with ResNet, SqueezeNet, DenseNet, and InceptionV3.

An automated computer-aided system was proposed in [18]. The authors compared the performance of transfer learning models such as InceptionV3, NASNetLarge, Xception, ResNeXt101, and InceptionResNetV2, as well as ensemble models such as InceptionV3 + Xception, InceptionResNetV2 + ResNeXt101, InceptionResNetV2 + Xception, and InceptionResNetV2 + Xception + ResNeXt101. Among these ensemble models, InceptionResNetV2 + ResNeXt101 gave the highest classification accuracy, 92.83%. ResNeXt101, from the transfer learning category, gave the best accuracy among all the compared models, 93.20%.

Rahman et al. [19] proposed a weighted average ensemble model whose base models are SE-ResNeXt, Xception, ResNeXt, DenseNet, and ResNet. They used a combination of the HAM10000 and ISIC 2019 datasets to test their models. A multi-CNN approach (MSM-CNN) was proposed in [20] as a three-level fusion scheme, in which the authors trained three state-of-the-art models (EfficientNetB0, EfficientNetB1, and SE-ResNeXt-50) and obtained a final accuracy of 96.3%.

A fully automated approach for the segmentation and classification of multi-class skin lesions was proposed in [21]. First, the input images are enhanced using the LCcHIV technique, and saliency is then estimated with a novel method called Deep Saliency Segmentation, which consists of a 10-layer CNN. Features are then extracted from the segmented color lesions with the help of a thresholding function. An IMFO (Improved Moth Flame Optimization) algorithm is used for dimensionality reduction. The resulting features are fused using multiset maximum correlation analysis and then classified with a KELM (Kernel Extreme Learning Machine) classifier. Segmentation performance was evaluated on several datasets, yielding segmentation accuracies of 98.70%, 95.79%, 95.38%, and 92.69% on the PH2, ISBI2017, ISBI2016, and ISIC2018 datasets, respectively. Finally, a classification accuracy of 90.67% was obtained when the proposed model was evaluated on the HAM10000 dataset.

Kassem et al. [22] used a pre-trained GoogleNet model and transfer learning on the ISIC 2019 dataset to test the model's capacity to classify eight skin lesion classes, namely melanoma, melanocytic nevus, squamous cell carcinoma, benign keratosis, basal cell carcinoma, dermatofibroma, actinic keratosis, and vascular lesion. Classification specificity, precision, accuracy, and sensitivity of 94.92%, 79.8%, 80.36%, and 97%, respectively, were achieved. In [23], a boundary segmentation technique using a full-resolution convolutional network (FrCN) was applied to skin lesion images and compared with the InceptionV3, Inception-ResNetV2, ResNet50, and DenseNet201 models.

Karthick et al. [24] proposed a CNN-based skin disease identification system named Eff2Net, which combines EfficientNetV2 with an Efficient Channel Attention (ECA) block. It significantly reduced the total number of parameters and achieved a testing accuracy of 84.70%. A DermoExpert (Dermoscopic Expert) classification architecture, which combines preprocessing with a hybrid CNN, was implemented in [25]. This hybrid CNN consists of three feature extractor modules whose outputs are fused to obtain better feature maps, and the system was deployed as a web application. DermoExpert was trained and tested on the ISIC2016, ISIC2017, and ISIC2018 datasets and achieved AUCs of 0.96, 0.95, and 0.97, respectively. Recently, two novel hybrid deep learning models with an SVM classifier (which provided an accuracy of 88%) were proposed for classifying melanoma and benign lesions by concatenating the features extracted from two CNN models [26]. The same authors also proposed a deep threshold prediction network that learns to accurately predict the threshold separating the lesion from the background [27], and they extended this work with an EfficientNet-based modified sigmoid transform to improve the accuracy of skin lesion segmentation [28].

The studies above show that many complex models, together with optimization methods for hyperparameter tuning, feature selection, and so on, have been used for skin lesion classification. This motivated the novel and simple DCNN model proposed in this work. The recommended model identifies and classifies cancerous skin lesion images comprising 10,015 samples from seven classes. Its performance is evaluated in terms of accuracy, training time, trainable parameters, precision, recall, and F1 score. A graphical user interface (GUI) is developed to confirm the efficacy of the proposed model. Table 1 summarizes the literature review along with the performance metrics obtained by the existing models.

Methodology Adopted

Several preprocessing techniques, including black hat filtering, histogram equalization, contrast enhancement followed by sharpening, and data augmentation, were applied to the skin lesion images to help the proposed model learn the information they contain. To reduce the computational burden, a simple novel model is proposed that consumes less memory than the models discussed in the literature. After trying the above preprocessing techniques, the authors concluded that image resizing, black hat filtering, and data augmentation improve the accuracy of the proposed simple DCNN model. The flowchart in Fig. 1 gives an overview of the steps adopted in the methodology. The proposed model is compared with existing transfer learning models, namely MobileNetV2, VGG16, ResNet50, and DenseNet121, and the results are reported using various metrics.

Description of Dataset

The available datasets for skin lesion classification include HAM10000, ISIC2018, ISIC2019, and the ISBI datasets. Among them, we found the Human Against Machine with 10,015 training images (HAM10000) dataset to be reliable: it consists of 10,015 skin lesion images with high diversity among the skin lesion classes (available at https://doi.org/10.7910/DVN/DBW86T). HAM10000 is a seven-class skin lesion dataset acquired from different populations using different modalities, and several cleaning and acquisition steps, such as manual cropping and histogram corrections for color and visual contrast enhancement, were performed on it. Another major reason to use HAM10000 is that it includes all important diagnostic categories in the realm of pigmented lesions, and more than half of its lesions are verified by pathologists [29], while the remaining lesions were confirmed by follow-up examination, expert consensus, or in vivo confocal microscopy. Therefore, the HAM10000 dataset is used here for skin lesion classification.

In general, skin cancer is classified into two types: melanoma and non-melanoma. Non-melanoma skin cancer is further divided into two main types, BCC (basal cell carcinoma) [6] and SCC (squamous cell carcinoma). The HAM10000 dataset also includes akiec (actinic keratosis) [30], which is related to squamous cell carcinoma [31], and bkl (benign keratosis). The remaining lesion types in the dataset are vasc (vascular skin lesions), nv (melanocytic nevi), and df (dermatofibroma), which may be tumorous or non-tumorous [32,33,34,35]. The HAM10000 dataset therefore consists of seven classes of skin lesions: nv, akiec, mel, bcc, vasc, bkl, and df. The overall and per-class data distribution is displayed in Table 2.

The HAM10000 dataset is available in the Harvard Dataverse repository. It includes 10,015 images of size 600 × 450, provided in two parts, and an overall HAM10000_metadata file [29]. The metadata file contains the fields lesion_id, image_id, dx, dx_type, age, sex, and localization: lesion_id identifies the lesion, image_id identifies the image, dx gives the class to which the skin lesion image belongs, dx_type gives the method used to confirm the diagnosis of that lesion (histopathology, consensus, confocal microscopy, or follow-up), and localization indicates the affected body area.
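As an illustration of how the metadata file can be used, the minimal Python sketch below counts images per dx class with the standard library only. It assumes the metadata is a CSV file whose header row names the columns listed above; the function name class_distribution is hypothetical and is not part of the dataset or the authors' code.

```python
import csv
from collections import Counter
from typing import Dict, Iterable

# Diagnostic codes that appear in the `dx` column of the HAM10000 metadata.
HAM10000_CLASSES = ("akiec", "bcc", "bkl", "df", "mel", "nv", "vasc")


def class_distribution(csv_lines: Iterable[str]) -> Dict[str, int]:
    """Count images per `dx` class.

    `csv_lines` may be an open text file or any iterable of CSV lines whose
    first line is the header (lesion_id, image_id, dx, dx_type, age, sex,
    localization).
    """
    reader = csv.DictReader(csv_lines)
    counts = Counter(row["dx"] for row in reader)
    # Report every known class, including classes absent from the input.
    return {cls: counts.get(cls, 0) for cls in HAM10000_CLASSES}
```

For the downloaded file, an open handle such as `open(path, newline="")` can be passed directly, where `path` is the local location of the metadata CSV.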

Data Preprocessing Techniques

The images in the HAM10000 dataset are RGB images of size 600 × 450, and the following preprocessing techniques were applied to them.

Histogram Equalization

There are different types of histogram equalization, such as CLAHE (contrast limited adaptive histogram equalization), MBOBHE (multipurpose beta optimized bihistogram equalization), adaptive histogram equalization, and MPHE (multipeak histogram equalization). CLAHE is used in this study because, compared with the other types of histogram equalization, it operates on small regions of the image rather than on the whole image and reduces the problem of noise amplification [36]. The original image and the image after histogram equalization are shown in Fig. 2.

Conventional Contrast Enhancement and Sharpening

The pillow ("PIL") library provides conventional contrast enhancement through its "ImageEnhance" module, which can produce better results. The module offers enhancement classes for adjusting brightness, contrast, sharpness, and color balance (a color factor of 0.0 yields a black-and-white image).

We applied the contrast function of the ImageEnhance module with a factor of 0.5 to the skin lesion images in the dataset and found that additionally sharpening the result gives better output. For sharpening, we used the "filter" method of Pillow's Image class, which accepts many predefined filter types, to sharpen the contrast-adjusted image [37]. The original image and the image after conventional contrast enhancement and sharpening are shown in Fig. 3.
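The two Pillow steps described above can be sketched as follows. Using ImageFilter.SHARPEN as the sharpening filter is an assumption, since the specific filter is not named. Note that in Pillow's ImageEnhance.Contrast, a factor of 1.0 returns the original image, values above 1.0 increase contrast, and values below 1.0 reduce it, so the stated factor of 0.5 lowers global contrast before sharpening.

```python
from PIL import Image, ImageEnhance, ImageFilter


def contrast_and_sharpen(img: Image.Image,
                         contrast_factor: float = 0.5) -> Image.Image:
    """Adjust contrast with ImageEnhance.Contrast, then sharpen the result.

    contrast_factor: 1.0 keeps the image unchanged, > 1.0 increases
    contrast, < 1.0 decreases it.
    """
    adjusted = ImageEnhance.Contrast(img).enhance(contrast_factor)
    return adjusted.filter(ImageFilter.SHARPEN)
```

A factor such as 1.5 would be the corresponding choice if a stronger global contrast were the goal.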

Black Hat Filtering

Many of the skin lesion images in the HAM10000 dataset contain black hairs, which may overlap the lesion and lead to misdiagnosis. It is therefore important to remove artifacts such as hair, and we chose black hat filtering for this task. Black hat filtering is one of the morphological transformations. Such operations are usually carried out on binary images and require two inputs: the original image and a kernel, or structuring element, which determines the nature of the operation. Here, the "MORPH_BLACKHAT" operation of the OpenCV library's morphologyEx function was used for this task.

In the black hat filtering process, a threshold is first used to convert the RGB images into black-and-white images. Each such image is then converted into its complement, in which the skin is black and the hair is white. The complemented image acts as a mask and is multiplied with the original image. The resulting image then undergoes inpainting, in which the regions occupied by black hair are filled with image information taken from neighboring pixels. Finally, the image is converted back into a color image. Applying this process to the lesion images in Fig. 4a gives the hair-free lesion images in Fig. 4b [38].

Data Augmentation

As noted earlier, the images in the HAM10000 dataset are unevenly distributed across the classes. The dataset has an imbalance problem: the single class "nv" contains 6705 images, more than 50% of all images in HAM10000, while the remaining six classes together contain only 3310 images. To prevent the model from being biased toward the majority class, the data must be balanced across all classes before training, so resampling techniques were used for this purpose. Many resampling techniques are available in Python. ImageDataGenerator is a class in the "preprocessing" module of "Keras", the minimalist deep learning API that is part of the "TensorFlow" library (https://www.tensorflow.org/resources/libraries-extensions and https://keras.io/). The main reason for using ImageDataGenerator is that it offers real-time image augmentation with many techniques, such as flipping the image, rescaling the image, shifting the image by up to 20% along both height and width, and rotating the image by 0–10 degrees. The "fill_mode" option is also used to fill the pixels left empty by these transformations with the values of their nearest pixels [39]. The parameter values used for augmentation are displayed in Table 3.
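To make the balancing step concrete, the following pure-Python sketch computes how many augmented copies to generate from each original image so that every class reaches a common target size. It uses the per-class counts of the full HAM10000 dataset purely for illustration; in this study, augmentation was applied to the training split only, whose balanced counts are given in Table 4. The function augmentation_plan and the chosen target are hypothetical.

```python
import math
from typing import Dict

# Per-class image counts of the full HAM10000 dataset (10,015 images in total).
HAM10000_COUNTS = {
    "nv": 6705, "mel": 1113, "bkl": 1099, "bcc": 514,
    "akiec": 327, "vasc": 142, "df": 115,
}


def augmentation_plan(counts: Dict[str, int], target: int) -> Dict[str, int]:
    """Augmented copies to generate per original image so that each class
    reaches at least `target` images (originals included).

    Classes already at or above the target receive 0 extra copies.
    """
    return {cls: max(0, math.ceil(target / n) - 1) for cls, n in counts.items()}
```

With a target equal to the size of the nv class, each df image would need 58 augmented copies and each mel image 6; each copy would be drawn from a generator configured with the Table 3 parameters (for example, ImageDataGenerator's rotation_range, width_shift_range, height_shift_range, and fill_mode="nearest" arguments).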

The original skin lesion image and its augmented versions are shown in Fig. 5. No augmentation was performed on the test set, so that the models are tested on original images only. The balanced dataset is shown in Table 4, and the distribution of the original images before augmentation and the distribution of the training images after augmentation are shown in Figs. 6 and 7 in the form of a pie chart and a bar chart.

Figs. 8, 9, and 10 show the three combinations of preprocessing techniques that were evaluated:

i) Black hat filtering + histogram equalization + data augmentation

ii) Black hat filtering + contrast enhancement and sharpening + data augmentation

iii) Black hat filtering + data augmentation

To identify the best of these combinations, we tested all three on the models described in the next section.

Transfer Learning Models and the Proposed Model

CNNs work very well for image and object classification and recognition. Their main advantage is automatic feature extraction: learned filters are convolved with the image, and this process continues from layer to layer. Transfer learning transfers the learned features of a pre-trained neural network and adapts the network to a different, new dataset to perform a similar task. The transfer learning models deployed in this study are VGG16, MobileNetV2, ResNet50, and DenseNet121. For the learning rate, we tried three values, 0.01, 0.001, and 0.0001, and found that 0.001 worked well for all the models. The Adam optimizer was used because it helps the models converge faster. Since the dataset has multiple classes, a softmax classifier and the categorical cross-entropy loss function were chosen.
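For reference, the softmax output and the categorical cross-entropy loss named above can be written in a few lines of NumPy. This is an explanatory sketch of the standard definitions, not the Keras implementation that the models actually use.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row maximum avoids overflow in exp."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def categorical_cross_entropy(y_true: np.ndarray, probs: np.ndarray,
                              eps: float = 1e-7) -> float:
    """Batch mean of -sum_k y_k * log(p_k) for one-hot targets y_true."""
    probs = np.clip(probs, eps, 1.0)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(probs), axis=-1)))
```

With uniform logits, each of the seven classes receives probability 1/7 and the loss equals ln 7 ≈ 1.95; a confident correct prediction drives the loss toward 0.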

The Proposed DCNN Model

The proposed DCNN model is inspired by the VGG16 architecture and by the basic CNN pattern of a convolution layer followed by a max-pooling layer. A convolution layer together with the max-pooling layer that follows it is treated as one block. The number of blocks in the network was finalized by running the training procedure with different numbers of blocks and different filter sizes.

Five such blocks, each consisting of a convolution layer followed by a max-pooling layer, were implemented. These blocks are followed by one global average pooling layer, then a dense layer and the output layer. Global average pooling reduces overfitting by reducing the number of parameters. It also gives a better vector representation than a flattening layer, which can only convert a multi-dimensional tensor into a one-dimensional vector, and it correlates feature maps with categories better than fully connected layers do. Next, a dense layer with 64 units and the ReLU activation function is used to keep the computational cost of the network low. The rectified linear unit (ReLU) is a simple and fast piecewise-linear function that passes positive inputs through unchanged and sets negative inputs to zero. This layer is followed by an output dense layer with seven nodes and a softmax activation function, which is the recommended activation when categorical cross-entropy is used as the loss function for multi-class classification. The overall proposed architecture is shown in detail in Fig. 11.
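The parameter saving of global average pooling can be checked with a short calculation. The NumPy sketch below assumes a hypothetical final feature map of 3 × 3 × 256 (a 100 × 100 input halved by five 2 × 2 max-pooling layers, with 256 filters in the last block); the actual filter counts are given in Table 5 and may differ.

```python
import numpy as np


def global_average_pool(feature_maps: np.ndarray) -> np.ndarray:
    """(batch, H, W, C) -> (batch, C): average each channel over its H x W grid."""
    return feature_maps.mean(axis=(1, 2))


def dense_params(n_inputs: int, n_units: int) -> int:
    """Number of weights plus biases in a fully connected layer."""
    return n_inputs * n_units + n_units


# Hypothetical final feature map: 3 x 3 spatial grid with 256 channels.
H, W, C = 3, 3, 256
params_after_flatten = dense_params(H * W * C, 64)  # Flatten -> Dense(64)
params_after_gap = dense_params(C, 64)              # GAP -> Dense(64)
```

Under these assumptions, flattening feeds 2304 values into the 64-unit dense layer (147,520 parameters), whereas global average pooling feeds 256 values (16,448 parameters), roughly nine times fewer.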

Fig. 12 shows another visual representation of the proposed model, and the model summary is given in Table 5.
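A minimal Keras sketch of the architecture described above is given below. The filter counts (16 to 256), the 3 × 3 kernels, "same" padding, and ReLU activations in the convolution layers are assumptions for illustration; the exact configuration is listed in Table 5. The optimizer, learning rate, and loss follow the settings stated earlier (Adam, learning rate 0.001, categorical cross-entropy).

```python
from tensorflow import keras


def build_dcnn(input_shape=(100, 100, 3), n_classes=7,
               filters=(16, 32, 64, 128, 256)):
    """Five Conv2D + MaxPooling2D blocks, global average pooling,
    a 64-unit ReLU dense layer and a softmax output layer."""
    model = keras.Sequential(name="dcnn_sketch")
    model.add(keras.Input(shape=input_shape))
    for n_filters in filters:
        # One block: a convolution layer followed by 2 x 2 max pooling.
        model.add(keras.layers.Conv2D(n_filters, (3, 3), padding="same",
                                      activation="relu"))
        model.add(keras.layers.MaxPooling2D((2, 2)))
    # With the defaults, a 3 x 3 x 256 feature map becomes a 256-vector.
    model.add(keras.layers.GlobalAveragePooling2D())
    model.add(keras.layers.Dense(64, activation="relu"))
    model.add(keras.layers.Dense(n_classes, activation="softmax"))
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=0.001),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_dcnn().summary()` prints a layer-by-layer summary that can be compared against Table 5.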

Experimental Results and Discussion

The proposed DCNN model and all the compared models were implemented in Python and executed in Jupyter Notebook on a system with an Intel Core i5-8250U CPU, 8 GB of RAM, and an NVIDIA GeForce MX130 GPU. Input images were resized from 600 × 450 pixels to 100 × 100 pixels to avoid high computational costs. The data were split into 80% for training (8012 images, augmented to 45,756 images) and 20% for testing (2003 images). To finalize the preprocessing combination, we tested all three combinations on VGG16; the results are shown in Table 6.
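The 80/20 split can be sketched with the standard library as below. Shuffling with a fixed seed is an assumption, since the paper does not state whether the split was random or stratified. With 10,015 images, the sketch yields 8012 training and 2003 test indices, matching the counts above; augmentation would then be applied to the training indices only.

```python
import random
from typing import List, Tuple


def split_indices(n_images: int, test_fraction: float = 0.2,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle image indices reproducibly and split them into train/test lists."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    n_test = round(n_images * test_fraction)
    return indices[n_test:], indices[:n_test]
```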

As Table 6 shows, the third combination, black hat filtering with data augmentation, gives better results than the other two. Because the HAM10000 images already underwent preprocessing, including color and contrast enhancement, when the dataset was prepared, a further contrast enhancement step may be unnecessary, and adding it may introduce additional noise. The same combinations were applied to the proposed model, and the results are displayed in Table 7.

We therefore conclude that the combination of black hat filtering and data augmentation works well in improving accuracy. Black hat filtering and augmentation were performed only on the training data before it was presented to the model, increasing the number of training images to 45,756. To avoid overfitting, we used callbacks that reduce the learning rate when training reaches a plateau and that stop training early. All models were trained for 50 epochs with a batch size of 64.
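The two callbacks can be configured in Keras roughly as follows. Monitoring val_loss, and the factor, patience, and min_lr values, are assumptions because the paper does not report them; monitoring a validation metric also requires a validation split, which is not described above.

```python
from tensorflow import keras

EPOCHS = 50       # as reported above
BATCH_SIZE = 64   # as reported above


def build_callbacks():
    """Lower the learning rate when val_loss plateaus; stop early if it stalls."""
    reduce_lr = keras.callbacks.ReduceLROnPlateau(
        monitor="val_loss", factor=0.5, patience=3, min_lr=1e-6)
    early_stop = keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=8, restore_best_weights=True)
    return [reduce_lr, early_stop]


# Hypothetical usage, with x_train, y_train, x_val, y_val prepared beforehand:
# model.fit(x_train, y_train, validation_data=(x_val, y_val),
#           epochs=EPOCHS, batch_size=BATCH_SIZE, callbacks=build_callbacks())
```

Setting restore_best_weights=True keeps the weights from the best epoch rather than the last one when early stopping triggers.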

Training Accuracy and Training Loss

Performance metrics were obtained for all the models. As shown in Fig. 13a–e, the proposed model achieved higher training accuracy and lower training loss than the compared state-of-the-art models.

Testing Results

Some models perform well during training but achieve poor testing accuracy, which means that they do not generalize well. Testing accuracy therefore plays a crucial role in selecting a model, since good generalization is what a good model needs. Equations (1)–(4) give the formulae for the performance metrics.

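Accuracy: the ratio of correctly predicted cases to the total number of cases.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Sensitivity/Recall: the ratio of correctly predicted positive cases to the total number of actual positive cases.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

Precision: the ratio of correctly predicted positive cases to the total number of cases predicted as positive.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

F1 score: the harmonic mean of precision and recall.

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

Here, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives; in the multi-class setting, they are counted for each class against all the others.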
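The following NumPy sketch computes these metrics per class from a multi-class confusion matrix (such as the one in Fig. 14), treating each class in turn as the positive class. For multi-class data, overall accuracy reduces to the sum of the diagonal divided by the total count. Whether the single precision, recall, and F1 values reported later are macro-averaged or support-weighted is not stated, so the sketch returns the per-class values only.

```python
import numpy as np


def per_class_metrics(cm):
    """Accuracy plus per-class precision, recall and F1 from a confusion
    matrix whose rows are true classes and columns are predicted classes."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k but belonging elsewhere
    fn = cm.sum(axis=1) - tp  # belonging to class k but predicted elsewhere
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = tp.sum() / cm.sum()  # multi-class accuracy: trace / total
    return accuracy, precision, recall, f1
```

Averaging the per-class arrays with np.mean gives macro averages; weighting them by the row sums of the matrix gives support-weighted averages.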

Table 8 lists the testing accuracy, precision, recall, and F1 score obtained on the test data by each of the compared models for every class, together with the results of the proposed model. Fig. 14 displays the confusion matrix of the proposed model.

ROC Curves

The receiver operating characteristic (ROC) curves of the compared models and the proposed model are shown in Fig. 15a–e.

Class-wise ROC Curves for Each Model

The class-wise ROC curves show that the proposed model attains an AUC close to 1 for every class, and it performs especially well on nv, mel, and bkl. For all classes other than nv, mel, and bkl, the curves of all models nearly overlap, indicating that every model performs well on those classes; the differences between the models are most visible for nv, mel, and bkl. The proposed model outperforms all the other models across the classes. This can be seen in Fig. 16, and Table 9 compares the class-wise AUC-ROC scores.
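Class-wise AUC values of this kind are commonly computed in a one-vs-rest manner. The NumPy sketch below computes the AUC as the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one (ties count one half), which equals the area under the ROC curve. It compares all positive-negative pairs, which is adequate for a test set of about 2000 images; libraries such as scikit-learn provide equivalent, more scalable functions.

```python
import numpy as np


def binary_auc(y_true, scores) -> float:
    """Area under the ROC curve, computed as the probability that a randomly
    chosen positive scores higher than a randomly chosen negative (ties = 1/2)."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")
    # Compare every positive score with every negative score.
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return float(greater + 0.5 * ties)


def one_vs_rest_auc(labels, probs) -> np.ndarray:
    """Per-class AUC: class k is positive, every other class is negative."""
    labels = np.asarray(labels)
    probs = np.asarray(probs, dtype=float)
    return np.array([binary_auc(labels == k, probs[:, k])
                     for k in range(probs.shape[1])])
```

Here, labels holds the integer class index of each test image and probs holds the softmax outputs, one column per class.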

Graphical User Interface

For the proposed model, we also built a graphical user interface (GUI) using Gradio, an open-source Python library for building interfaces to machine learning models.

Gradio is easy to work with, and its interfaces can be embedded in Python notebooks as well as in web pages through an automatically generated link. We used this interface to review how the proposed model functions, and the corresponding results can be seen in Fig. 17a–e. Such an interface can serve as an aid for evaluating and visualizing lesions and the output classes predicted by the DCNN.
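A minimal sketch of such an interface is given below. It assumes a trained Keras model that takes 100 × 100 RGB inputs scaled to [0, 1] and outputs probabilities in alphabetical order of the HAM10000 class codes; the scaling, the label order, and the helper names to_label_dict and build_interface are hypothetical. Gradio is imported inside build_interface so that the label-formatting helper can be used without it.

```python
from typing import Dict, Sequence

import numpy as np

# Assumed output order: alphabetical HAM10000 class codes.
CLASS_NAMES = ("akiec", "bcc", "bkl", "df", "mel", "nv", "vasc")


def to_label_dict(probs: Sequence[float],
                  class_names: Sequence[str] = CLASS_NAMES) -> Dict[str, float]:
    """Map a softmax vector to the {label: confidence} dict shown by gr.Label."""
    return {name: float(p) for name, p in zip(class_names, probs)}


def build_interface(model, image_size=(100, 100)):
    """Wrap a trained Keras classifier in a simple Gradio image-upload app."""
    import gradio as gr  # imported lazily; only needed to build the app

    def predict(image):
        # `image` is a PIL image (gr.Image(type="pil")); resize and scale to [0, 1].
        x = np.asarray(image.convert("RGB").resize(image_size),
                       dtype="float32") / 255.0
        probs = model.predict(x[None, ...], verbose=0)[0]
        return to_label_dict(probs)

    return gr.Interface(
        fn=predict,
        inputs=gr.Image(type="pil"),
        outputs=gr.Label(num_top_classes=3),
    )
```

Calling `build_interface(model).launch()` starts the app locally; passing `share=True` to `launch` requests a temporary public link.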

Comparing Results of All the Metrics for All Models

The statistics in Table 10 show that the proposed model outperforms the other state-of-the-art DL models, VGG16, DenseNet121, ResNet50, and MobileNetV2, on evaluation metrics including training and testing accuracy, recall, precision, F1 score, ROC-AUC score, total trainable parameters, and total training time. The proposed model reaches training and testing accuracies of 99.96% and 97.204%, a recall, precision, and F1 score of 0.97 each, and a ROC-AUC score of 0.9969, which is higher than those of the other state-of-the-art DL models. It has fewer than 5% of the trainable parameters of any compared model, and its training time per epoch, 112 s, is notably lower than theirs. This plays a major role in reducing the computational complexity of the model. These results indicate that the proposed model is more efficient, reliable, and less complex than the compared state-of-the-art models.

Conclusion and Future Scope

Thus, the research objective of designing a novel, simple DCNN model with a low computational burden that predicts skin cancer from images of skin lesions at an early stage has been accomplished. The main motivation for working in this domain is that detecting skin cancer at an early stage can make a complete cure possible. The proposed model uses global average pooling to reduce the overfitting associated with conventional deep CNN models, and careful preprocessing and data augmentation further improve its accuracy relative to other current state-of-the-art deep learning models for skin lesion classification. The results show that the proposed model performs well on every metric, achieving a testing accuracy of 97.2%, a precision of 0.97, a recall of 0.97, an F1 score of 0.97, and a ROC-AUC score of 0.997, which exceed those of the transfer learning–based deep learning models and the other, more complex models discussed in the literature. The graphical user interface built on the proposed model could lead to the development of a clinical decision support system for experts. The study has some limitations. Although data augmentation was used, the available data are still limited, and the model has been trained only on the HAM10000 dataset; to make it more robust, it should also be trained on other skin lesion datasets. For real-world application, this work could be extended by evaluating the model on clinical images from experienced physicians, which are difficult to acquire. Performance could be further improved through hyperparameter optimization, attention mechanisms, and a deeper network, making the model more versatile across different datasets, including real-world clinical data.
The experimental results and the user interface tool can support healthcare professionals in obtaining early predictions and making early, reliable decisions when diagnosing skin cancer.
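Global average pooling, credited above with reducing overfitting, replaces a flattened fully connected input with a single average per channel. The NumPy sketch below shows the operation and counts the weights of one softmax layer placed on each variant. The 7 × 7 × 512 feature-map shape is a hypothetical example (the output shape of VGG16's final convolutional block for a 224 × 224 input), not the proposed model's architecture, and the function names are illustrative.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Average each channel over its spatial positions: (batch, H, W, C) -> (batch, C)."""
    return np.asarray(feature_maps, dtype=float).mean(axis=(1, 2))


def softmax_head_params(height, width, channels, n_classes, use_gap):
    """Weights plus biases of one Dense softmax layer placed on the feature maps.

    With Flatten, every spatial position of every channel feeds the layer;
    with global average pooling, only one value per channel does.
    GAP itself has no trainable parameters.
    """
    n_inputs = channels if use_gap else height * width * channels
    return n_inputs * n_classes + n_classes


# Hypothetical 7 x 7 x 512 feature maps feeding a 7-class HAM10000 head.
flatten_params = softmax_head_params(7, 7, 512, 7, use_gap=False)
gap_params = softmax_head_params(7, 7, 512, 7, use_gap=True)
print(flatten_params, gap_params)  # 175623 3591
```

In this example the GAP head needs about 2% of the weights of the Flatten head (3,591 versus 175,623). Having far fewer parameters in the classifier head to fit to the training set is the usual argument for GAP reducing overfitting.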

Availability of Data and Material

The dataset used in the current study, HAM10000, is a publicly available standard dataset.

References

US Department of Health and Human Services, The Surgeon General’s Call to Action to Prevent Skin Cancer. Washington, DC: Office of the Surgeon General (US), 2014.

H. Yousef, M. Alhajj, and S. Sharma, “Anatomy, Skin (Integument), Epidermis,” in StatPearls [Internet]. Treasure Island, FL: StatPearls Publishing, 2022 (updated Nov. 19, 2021). Available: https://www.ncbi.nlm.nih.gov/books/NBK470464/.

J. Y. Howell and M. L. Ramsey, “Squamous Cell Skin Cancer,” in StatPearls [Internet]. Treasure Island, FL: StatPearls Publishing, 2022 (updated Aug. 1, 2022). Available: https://www.ncbi.nlm.nih.gov/books/NBK441939/.

Mayo Clinic. Available: https://www.mayoclinic.org/diseases-conditions/cancer/symptoms-causes/syc-20370588.

B. McDaniel, T. Badri, and R. B. Steele, “Basal Cell Carcinoma,” in StatPearls [Internet]. Treasure Island, FL: StatPearls Publishing, 2022 (updated Sep. 19, 2022). Available: https://www.ncbi.nlm.nih.gov/books/NBK482439/.

Cleveland Clinic, “Melanoma.” Available: https://my.clevelandclinic.org/health/diseases/14391-melanoma.

R. Skaggs and B. Coldiron, “Skin biopsy and skin cancer treatment use in the Medicare population, 1993 to 2016,” J. Am. Acad. Dermatol., vol. 84, no. 1, pp. 53–59, Jan. 2021. https://doi.org/10.1016/j.jaad.2020.06.030.

O. Reiter, V. Rotemberg, K. Kose, and A. C. Halpern, “Artificial Intelligence in Skin Cancer,” Curr. Dermatol. Rep., vol. 8, no. 3, pp. 133–140, 2019. https://doi.org/10.1007/s13671-019-00267-0.

M. Goyal, T. Knackstedt, S. Yan, and S. Hassanpour, “Artificial intelligence-based image classification methods for diagnosis of skin cancer: Challenges and opportunities,” Comput. Biol. Med., vol. 127, p. 104065, 2020. https://doi.org/10.1016/j.compbiomed.2020.104065.

C. Calderón, K. Sanchez, S. Castillo, and H. Arguello, “BILSK: A bilinear convolutional neural network approach for skin lesion classification,” Comput. Methods Programs Biomed. Updat., vol. 1, p. 100036, 2021. https://doi.org/10.1016/j.cmpbup.2021.100036.

B. W.-Y. Hsu and V. S. Tseng, “Hierarchy-aware contrastive learning with late fusion for skin lesion classification,” Comput. Methods Programs Biomed., vol. 216, p. 106666, 2022. https://doi.org/10.1016/j.cmpb.2022.106666.

M. S. Ali, M. S. Miah, J. Haque, M. M. Rahman, and M. K. Islam, “An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models,” Mach. Learn. with Appl., vol. 5, p. 100036, 2021. https://doi.org/10.1016/j.mlwa.2021.100036.

K. Thurnhofer-Hemsi and E. Domínguez, “A Convolutional Neural Network Framework for Accurate Skin Cancer Detection,” Neural Process. Lett., vol. 53, no. 5, pp. 3073–3093, 2021. https://doi.org/10.1007/s11063-020-10364-y.

S. Mohapatra, N. V. S. Abhishek, D. Bardhan, A. A. Ghosh, and S. Mohanty, “Comparison of MobileNet and ResNet CNN Architectures in the CNN-Based Skin Cancer Classifier Model,” in Machine Learning for Healthcare Applications, John Wiley & Sons, Ltd, 2021, pp. 169–186.

O. Sevli, “A deep convolutional neural network-based pigmented skin lesion classification application and experts evaluation,” Neural Comput. Appl., vol. 33, no. 18, pp. 12039–12050, 2021. https://doi.org/10.1007/s00521-021-05929-4.

M. A. Khan, T. Akram, Y.-D. Zhang, and M. Sharif, “Attributes based skin lesion detection and recognition: A mask RCNN and transfer learning-based deep learning framework,” Pattern Recognit. Lett., vol. 143, pp. 58–66, 2021. https://doi.org/10.1016/j.patrec.2020.12.015.

M. A. Kadampur and S. Al Riyaee, “Skin cancer detection: Applying a deep learning based model driven architecture in the cloud for classifying dermal cell images,” Informatics Med. Unlocked, vol. 18, p. 100282, 2020. https://doi.org/10.1016/j.imu.2019.100282.

S. S. Chaturvedi, J. V Tembhurne, and T. Diwan, “A multi-class skin Cancer classification using deep convolutional neural networks,” Multimed. Tools Appl., vol. 79, no. 39, pp. 28477–28498, 2020. https://doi.org/10.1007/s11042-020-09388-2.

Z. Rahman, M. S. Hossain, M. R. Islam, M. M. Hasan, and R. A. Hridhee, “An approach for multiclass skin lesion classification based on ensemble learning,” Informatics Med. Unlocked, vol. 25, p. 100659, 2021. https://doi.org/10.1016/j.imu.2021.100659.

A. Mahbod, G. Schaefer, C. Wang, G. Dorffner, R. Ecker, and I. Ellinger, “Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification,” Comput. Methods Programs Biomed., vol. 193, p. 105475, 2020. https://doi.org/10.1016/j.cmpb.2020.105475.

M. A. Khan, M. Sharif, T. Akram, R. Damaševičius, and R. Maskeliūnas, “Skin Lesion Segmentation and Multiclass Classification Using Deep Learning Features and Improved Moth Flame Optimization,” Diagnostics, vol. 11, no. 5, 2021. https://doi.org/10.3390/diagnostics11050811.

M. A. Kassem, K. M. Hosny, and M. M. Fouad, “Skin Lesions Classification Into Eight Classes for ISIC 2019 Using Deep Convolutional Neural Network and Transfer Learning,” IEEE Access, vol. 8, pp. 114822–114832, 2020. https://doi.org/10.1109/ACCESS.2020.3003890.

M. A. Al-masni, D.-H. Kim, and T.-S. Kim, “Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification,” Comput. Methods Programs Biomed., vol. 190, p. 105351, 2020. https://doi.org/10.1016/j.cmpb.2020.105351.

R. Karthik, T. S. Vaichole, S. K. Kulkarni, O. Yadav, and F. Khan, “Eff2Net: An efficient channel attention-based convolutional neural network for skin disease classification,” Biomed. Signal Process. Control, vol. 73, p. 103406, 2022. https://doi.org/10.1016/j.bspc.2021.103406.

N. I. Hasan and A. Bhattacharjee, “Deep Learning Approach to Cardiovascular Disease Classification Employing Modified ECG Signal from Empirical Mode Decomposition,” Biomed. Signal Process. Control, vol. 52, pp. 128–140, 2019. https://doi.org/10.1016/j.bspc.2019.04.005.

D. Keerthana, V. Venugopal, M. K. Nath, and M. Mishra, “Hybrid convolutional neural networks with SVM classifier for classification of skin cancer,” Biomed. Eng. Adv., vol. 5, p. 100069, 2023. https://doi.org/10.1016/j.bea.2022.100069.

V. Venugopal, J. Joseph, M. V. Das, and M. K. Nath, “DTP-Net: A convolutional neural network model to predict threshold for localizing the lesions on dermatological macro-images,” Comput. Biol. Med., vol. 148, p. 105852, 2022. https://doi.org/10.1016/j.compbiomed.2022.105852.

V. Venugopal, J. Joseph, M. Vipin Das, and M. Kumar Nath, “An EfficientNet-based modified sigmoid transform for enhancing dermatological macro-images of melanoma and nevi skin lesions,” Comput. Methods Programs Biomed., vol. 222, p. 106935, 2022. https://doi.org/10.1016/j.cmpb.2022.106935.

P. Tschandl, C. Rosendahl, and H. Kittler, “The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions,” Sci. Data, vol. 5, no. 1, p. 180161, 2018. https://doi.org/10.1038/sdata.2018.161.

E. Stockfleth, “Actinic Keratoses,” in Skin Cancer after Organ Transplantation, E. Stockfleth and C. Ulrich, Eds. Boston, MA: Springer US, 2009, pp. 227–239.

T. G. Berger, J. H. Graham, and D. K. Goette, “Lichenoid benign keratosis,” J. Am. Acad. Dermatol., vol. 11, no. 4, Part 1, pp. 635–638, 1984. https://doi.org/10.1016/S0190-9622(84)70220-9.

D. J. Myers and E. P. Fillman, “Dermatofibroma,” in StatPearls [Internet]. Treasure Island, FL: StatPearls Publishing, 2022 (updated Apr. 30, 2022). Available: https://www.ncbi.nlm.nih.gov/books/NBK470538/.

A. I. Riker, N. Zea, and T. Trinh, “The Epidemiology, Prevention, and Detection of Melanoma,” Ochsner J., vol. 10, no. 2, pp. 56–65, 2010. Available: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3096196/.

A. C. Viana, B. Gontijo, and F. V. Bittencourt, “Giant congenital melanocytic nevus,” An. Bras. Dermatol., vol. 88, no. 6, pp. 863–878, 2013. https://doi.org/10.1590/abd1806-4841.20132233.

A. Vijayakumar, A. Srinivas, B. M. Chandrashekar, and A. Vijayakumar, “Uterine vascular lesions,” Rev. Obstet. Gynecol., vol. 6, no. 2, pp. 69–79, 2013.

S. Joseph and O. O. Olugbara, “Preprocessing Effects on Performance of Skin Lesion Saliency Segmentation,” Diagnostics, vol. 12, no. 2, p. 344, 2022. https://doi.org/10.3390/diagnostics12020344.

A. N. Hoshyar, A. Al-Jumaily, and A. N. Hoshyar, “The Beneficial Techniques in Preprocessing Step of Skin Cancer Detection System Comparing,” Procedia Comput. Sci., vol. 42, pp. 25–31, 2014. https://doi.org/10.1016/j.procs.2014.11.029.

A. Khan, D. Iskandar, J. Al-Asad, and S. Elnakla, “Classification of Skin Lesion with Hair and Artifacts Removal using Black-hat Morphology and Total Variation,” International Journal of Computing and Digital Systems, 2020.

K. Maharana, S. Mondal, and B. Nemade, “A review: Data pre-processing and data augmentation techniques,” Glob. Transitions Proc., vol. 3, no. 1, pp. 91–99, 2022. https://doi.org/10.1016/j.gltp.2022.04.020.

Acknowledgements

The authors thank the Simulation Lab at the School of Electrical & Electronics Engineering, SASTRA Deemed University, for providing infrastructural support to carry out this research work.

Author information

Contributions

All authors discussed the results and contributed equally to the final manuscript that is submitted for consideration.

Ethics declarations

Ethics Approval

This study was conducted using HAM10000, a publicly available dataset.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Raghavendra, P.V.S.P., Charitha, C., Begum, K.G. et al. Deep Learning–Based Skin Lesion Multi-class Classification with Global Average Pooling Improvement. J Digit Imaging 36, 2227–2248 (2023). https://doi.org/10.1007/s10278-023-00862-5

DOI: https://doi.org/10.1007/s10278-023-00862-5