Abstract

The main problem in content-based image retrieval (CBIR) systems is the semantic gap which needs to be reduced for efficient retrieval. The common imaging signs (CISs) which appear in the patient’s lung CT scan play a significant role in the identification of cancerous lung nodules and many other lung diseases. In this paper, we propose a new combination of descriptors for the effective retrieval of these imaging signs. First, we construct a feature database by combining local ternary pattern (LTP), local phase quantization (LPQ), and discrete wavelet transform. Next, joint mutual information (JMI)–based feature selection is deployed to reduce the redundancy and to select an optimal feature set for CISs retrieval. To this end, similarity measurement is performed by combining visual and semantic information in equal proportion to construct a balanced graph and the shortest path is computed for learning contextual similarity to obtain final similarity between each query and database image. The proposed system is evaluated on a publicly available database of lung CT imaging signs (LISS), and results are retrieved based on visual feature similarity comparison and graph-based similarity comparison. The proposed system achieves a mean average precision (MAP) of 60% and 0.48 AUC of precision-recall (P-R) graph using only visual features similarity comparison. These results further improve on graph-based similarity measure with a MAP of 70% and 0.58 AUC which shows the superiority of our proposed scheme.


Introduction

In the medical field, imaging modalities such as X-ray, computed tomography (CT), and magnetic resonance imaging (MRI) produce large numbers of images that can help in diagnosis and therapy. For example, by analyzing a CT scan of the lungs, a radiologist can decide whether the lung tissue or lesion is normal or abnormal. This decision is normally based on common imaging signs (CISs). These signs can be categorized into different types without being tied to a particular disease, because the same sign can appear in different diseases [1]. To date, almost 50 categories of CISs have been observed and reported by medical experts. Among them, the nine categories that appear most frequently in lung CT images are air bronchogram (AB), bronchial mucus plugs (BMP), calcification, cavity and vacuolus (CV), ground-glass opacity (GGO), lobulation, obstructive pneumonia (OP), pleural dragging (PI), and spiculation [2]. Sample images in which these signs appear are shown in Fig. 1. Content-based image retrieval (CBIR) systems can help in identifying the category of these CISs. They can also assist medical practitioners and radiologists by providing relevant past cases and supporting the decision-making process, which can improve diagnostic accuracy. Radiomics is also emerging as a new field that increases the power of clinical decision support systems. In contrast to the visual interpretation of digital images provided by CBIR systems and other traditional practices, radiomics converts a large number of quantitative features extracted from digital images into mineable data and then associates them with biological and clinical endpoints useful for identifying prognostic biomarkers or treatment response in an individual patient [3,4,5]. However, radiomics is still in its earliest stages, and before it can improve the predictive power of decision support systems it has to overcome challenges such as the formation of large shared databases, data accessibility, and the availability of experienced radiologists to participate actively in data curation [5].

Existing CBIR systems mostly perform image retrieval using three fundamental units, namely low-level features, similarity measure, and semantic gap reduction [6]. The performance of a CBIR system generally depends on the feature extraction [7, 8] and similarity measurement technique [9]. Feature extraction algorithms extract low-level visual information such as shape [10,11,12], texture [13,14,15,16,17], and color [18, 19] from the images to form an index feature vector. The dimension of the feature vector plays an important role in determining the storage space required for the vector, the retrieval accuracy, and the computational complexity [20]. High-dimensional data are often hard to interpret and contain redundant and irrelevant features which can reduce system performance. When selecting the number of features for a given problem, the main objectives are to retrieve results that effectively represent the semantics of the images while decreasing the complexity of the retrieval process and improving overall system efficiency [11, 21, 22].

Feature selection can be broadly categorized into three types: filter, wrapper, and embedded methods [20, 23]. In filter methods, features are ranked according to their individual scores based on some evaluation criterion, without using any classifier model. The highest-ranked features are adopted to evaluate the system, and the lowest-ranked are discarded as irrelevant or redundant. In wrapper methods, a subset of features is selected collectively by defining an evaluation and search algorithm, and a classification model is used to assess its predictive power. Methods that combine feature selection and classifier learning into a single process are known as embedded methods. Conventional CBIR systems such as [24, 25] used low-level information to compute the similarity between two instances using common distance metrics [26]. However, visual information cannot capture high-level semantic concepts, and in general there is no direct connection between them [27]. Object ontology [28] and supervised [29] and unsupervised [30] learning methods were introduced to define high-level semantic information. In addition, [31, 32] used visual and semantic outputs together to improve the performance of the retrieval system and to find consistencies between them.

Another important consideration in CBIR systems is the ranking and order of the relevant cases retrieved for a specific query. Previously, many systems such as [33,34,35,36,37] have been proposed for similarity learning, either by learning distance functions or by exploiting contextual information, which led to enhanced performance. The images in the database are associated with each other, and the information that exists among a group of images is often called contextual information. Additionally, the distinction between more relevant and less relevant images must be addressed if we want to obtain human-like performance [38]. Recently, graph-based data manifolds [39, 40], in addition to the fusion of visual and semantic similarity [41], have led to effective retrieval systems.

Table 1 presents the relevant work in summarized form, emphasizing the challenges, which is the primary motivation behind our effort. It can be seen that reported methods either consider only low-level visual information to compute relevancy or consider both semantic and low-level visual information except [41] which also used contextual information. We would like to note that classifiers are not mentioned for those techniques which used retrieval as their first step for classification task. The classifiers have been mentioned only for those techniques which classified query sample and then retrieved results.

In a nutshell, despite the fact that several CBIR systems have been proposed in recent times, the semantic gap poses a serious challenge to these systems and still remains an unresolved issue [49, 50]. In addition, different strategies on the basis of feature descriptors, feature selection, and similarity measurement were adopted previously. However, none of them focused on the effectiveness of combining all three processes. In this paper, we have proposed a combination of descriptors rather than relying on a single descriptor. Texture features such as LPQ [51], LTP [52], DWT [53] are used to form our feature vector. Next, the JMI-based [54] feature selection method is used to deal with high dimensionality problem. We also combined visual similarity and semantic similarity in equal proportion to construct a balanced graph. Finally, the shortest path was computed to acquire contextual information and similarity criteria for our retrieval system were obtained. Though our method of similarity measurement has resemblance to the one in [41], the novelty of our work lies in the fact that we have used balanced graph in contrast to an unbalanced graph in [41] which combined visual and semantic information in unequal proportion. The main contributions of this paper are as follows:

1. Local phase quantization (LPQ) blur invariance property is explored for the first time in CISs.

2. The proposed feature combination with JMI feature selection and balanced graph similarity strategy led to an effective retrieval system.

3. The contribution of multiple descriptors along with feature selection and its impact on overall retrieval performance is thoroughly analyzed and explained.

4. In contrast to the previous works for retrieval, rather than focusing on improving individual processes such as feature extraction scheme, feature selection, and similarity measurement criteria, we evaluated each of them and verified experimentally that all these processes when used collectively can produce better results.

The rest of the paper is organized as follows. The Proposed Methodology section gives an overview of our proposed framework. Results and the experimental setup are discussed in the Results section. Finally, the conclusion and future work are presented in the Conclusion section.

Proposed Methodology

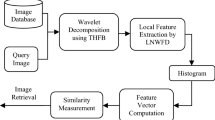

We have proposed a CBIR system based on visual, semantic, and contextual information of lung CT images. The whole framework of the proposed retrieval system is divided into two phases, as shown in Fig. 2. In the offline phase, we developed a feature database by extracting different texture features from the training data, which are stored as low-level visual information. We have chosen a feature selection method to obtain a set of features that contributes towards better retrieval performance and reduces the dimension of the feature vector. The high-level semantic information is acquired by training a support vector machine (SVM) model. In the online phase, visual similarity is computed between the query image feature vector and the feature database, while semantic similarity is computed in two steps. In the first step, the query image is classified, and in the next step the semantic database is created by computing the semantic similarity with reference to each database image. Visual and semantic similarities are then combined to construct a weighted balanced graph, and the final similarity is found using a shortest path algorithm. A detailed description of each component of our proposed work is given in the subsequent sections.

Dataset

The dataset used in our proposed methodology was obtained from the publicly available LISS database [2]. LIDC/IDRI is also a very popular database that is used for the classification and retrieval of lung nodules. However, its main focus is on whether a lesion is a nodule or a non-nodule, based on internal characteristics such as signs of calcification, spiculation, and lobulation appearing in nodules. Most of the signs that are not part of nodules and belong to different lung diseases are not covered by that database. It was therefore important to use a dataset that covers a variety of imaging sign annotations. Furthermore, the main purpose of the LISS database is to categorize the nine common imaging signs present in CT images rather than to assign them to different nodule types, such as malignant and benign nodules, which are formed from combinations of some of these signs. All the images in the LISS dataset are in DICOM format with a resolution of 512 × 512. The database comprises 271 scans, with 252 scans for 2D images and 19 scans for 3D images. The total number of images that contain lesions or CISs is 511 in the 2D image set and 166 in the 3D image set. In addition, annotated text files are provided for the identification of ROIs. The ROIs were cropped from the images according to the information given as, e.g., “PA19 IM208 144 254 170 275,” which means that the 208th slice image of the 19th scan or patient has a lesion surrounded by a rectangle whose top-left coordinates are (144,254) and bottom-right coordinates are (170,275) [2]. All the 2D sample ROI images (23 in the AB category, 81 in the BMP category, 47 in the calcification category, 147 in the CV category, 45 in the GGO category, 41 in the lobulation category, 18 in the OP category, 80 in the PI category, and 29 in the spiculation category) were selected to form the ROI image dataset. This dataset was divided into training and testing sets of approximately 50% each using a hold-out technique. The tuning parameters were obtained by validation on the training data. The feature database was formed from the training examples, both for visual similarity comparison and for training the SVM classifier. It should be noted that the only semantic interpretation/feature provided by the LISS dataset is the labeling of each ROI with its particular CIS category; no other semantic features (e.g., gender, age, lesion margin) are included.
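To make the annotation format concrete, the following is a minimal sketch of how one annotation line could be parsed and used to crop an ROI. The function names `parse_roi_annotation` and `crop_roi` are hypothetical, and the code assumes that the four numbers are (x, y) pixel coordinates with x as the column index, y as the row index, and both corners inclusive; the LISS documentation should be consulted for the exact convention.

```python
import re

def parse_roi_annotation(line):
    """Parse one annotation line such as 'PA19 IM208 144 254 170 275'."""
    pattern = r"PA(\d+)\s+IM(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s+(\d+)"
    match = re.fullmatch(pattern, line.strip())
    if match is None:
        raise ValueError(f"unrecognized annotation line: {line!r}")
    scan, slice_no, x1, y1, x2, y2 = map(int, match.groups())
    return {
        "scan": scan,
        "slice": slice_no,
        "top_left": (x1, y1),
        "bottom_right": (x2, y2),
    }

def crop_roi(image_rows, roi):
    """Crop the rectangle from an image stored as a list of rows.

    Assumption: x indexes columns, y indexes rows, corners are inclusive.
    """
    (x1, y1), (x2, y2) = roi["top_left"], roi["bottom_right"]
    return [row[x1:x2 + 1] for row in image_rows[y1:y2 + 1]]
```

Under these assumptions, the example line from the text yields a crop of 22 rows by 27 columns from a 512 × 512 slice.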

Feature Extraction

Feature extraction is one of the basic building blocks of many machine learning and computer vision problems, and the choice of feature descriptor plays a crucial part in system performance. The features are extracted from the ROIs of lung CT images. We have used three descriptors, namely LPQ, LTP, and DWT, for the proposed work. All the resulting features are normalized to zero mean and unit variance and then concatenated to form a feature combination. High-dimensional data are often hard to interpret and contain redundant and irrelevant features which can affect the system’s performance, so we use feature selection to reduce the number of features without compromising system performance. A brief explanation of each descriptor follows:

Local Phase Quantization

Local phase quantization is a texture descriptor that operates in the frequency domain by computing the phase locally for all pixels covered by a selected window. LPQ has shown promising results for texture classification due to its blur invariance property [51]. The features are extracted from the ROI by applying the short-term Fourier transform (STFT) to the input image \(f(x)\). A rectangular uniform window of size m × m is defined, over which the local phase is computed at each pixel location \(x =\left [x_{1} \ x_{2}\right ]^{T}\) with neighborhood \(N_{x}\). The 2-D discrete-time STFT is then given by [51]

where \(u =\left [u_{1} \ u_{2}\right ]^{T}\) is the 2-D frequency and \(w_{u}(x)\) is a window function which is defined as

The phases at the four frequencies \(u_{1} = [a,0]^{T}\), \(u_{2} = [0,a]^{T}\), \(u_{3} = [a,a]^{T}\), and \(u_{4} = [a,-a]^{T}\) are considered, which results in a vector [51]

The phase information is further quantized to form an 8-bit binary codeword using sign of real and imaginary parts as follows [51]:

and

where \(q_{j}\) is the jth component of \(G(p)\), which yields the quantized version \(Q=\left [q_{1},q_{2},q_{3},\ldots ,q_{8}\right ]\) of G. The resultant feature vector is obtained by converting Q to decimal integers and then creating a histogram with 256 bins.
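The LPQ steps described above (local Fourier coefficients at four frequencies, sign quantization of real and imaginary parts, and a 256-bin histogram) can be sketched as follows. This is an illustrative implementation under assumptions, not the authors' code: the function name `lpq_histogram` is hypothetical, the frequency parameter is taken as a = 1/m, the window size m must be odd, the sign test uses "greater than or equal to zero", border pixels without a full neighborhood are skipped, and the decorrelation step of the original LPQ paper is omitted. It requires NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def lpq_histogram(roi, m=3):
    """Return a 256-bin LPQ histogram for a 2-D grayscale ROI (m odd)."""
    img = np.asarray(roi, dtype=np.float64)
    a = 1.0 / m                          # assumed lowest non-zero frequency
    r = (m - 1) // 2
    offsets = np.arange(-r, r + 1)
    d1, d2 = np.meshgrid(offsets, offsets, indexing="ij")
    # One m x m neighborhood per interior pixel: shape (H-m+1, W-m+1, m, m).
    windows = sliding_window_view(img, (m, m))
    codes = np.zeros(windows.shape[:2], dtype=np.int64)
    bit = 0
    for u1, u2 in [(a, 0.0), (0.0, a), (a, a), (a, -a)]:
        # Local Fourier coefficient at frequency u for every neighborhood.
        basis = np.exp(-2j * np.pi * (u1 * d1 + u2 * d2))
        coeff = (windows * basis).sum(axis=(-2, -1))
        # Quantize the signs of the real and imaginary parts into two bits.
        for part in (coeff.real, coeff.imag):
            codes |= (part >= 0).astype(np.int64) << bit
            bit += 1
    # Each pixel now carries an 8-bit code in 0..255.
    return np.bincount(codes.ravel(), minlength=256)
```

Because only the signs of the coefficients are kept, the code depends on phase alone, which is the property the blur invariance argument relies on.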

Local Ternary Pattern

Local ternary pattern is a texture descriptor that is less sensitive to illumination changes and some other image degradations [52]. It divides an image into small cells, where the cell size is adjusted depending on the ROI size. A circularly symmetric neighborhood of P points with radius R around the center pixel \((x_{c},y_{c})\) is defined for each cell. The center pixel value \(i_{c}\) is then compared with the surrounding pixels \(i_{p}\) using a predefined threshold \(t\) to obtain a three-valued code as follows [52]:

where thresholding function s(u) is defined as

The code obtained is further decomposed into upper and lower binary patterns, each of which is converted to decimal integers separately to obtain a histogram. The histograms of both patterns are concatenated, which yields a 512-dimensional feature vector.
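The ternary coding and its split into upper and lower binary patterns can be illustrated with a short sketch. Several simplifications are assumed here and are not taken from the paper: the function name `ltp_histogram` is hypothetical, the circular neighborhood with P = 8 and R = 1 is approximated by the 3 × 3 square neighborhood, the whole ROI is treated as a single cell, the threshold value t = 5 is arbitrary, and the ternary code follows the usual definition in [52] (+1 if the neighbor exceeds the center by at least t, −1 if it is lower by at least t, 0 otherwise).

```python
import numpy as np

def ltp_histogram(roi, t=5):
    """Return a 512-bin LTP feature (upper + lower pattern histograms)."""
    img = np.asarray(roi, dtype=np.int64)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Eight neighbors of the 3 x 3 window, visited clockwise.
    shifts = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]
    upper = np.zeros_like(center)
    lower = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(shifts):
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        diff = neighbor - center
        upper |= (diff >= t).astype(np.int64) << bit    # ternary value +1
        lower |= (diff <= -t).astype(np.int64) << bit   # ternary value -1
    hist_upper = np.bincount(upper.ravel(), minlength=256)
    hist_lower = np.bincount(lower.ravel(), minlength=256)
    return np.concatenate([hist_upper, hist_lower])
```

Each of the two binary patterns contributes 256 bins, which gives the 512 dimensions stated in the text.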

Discrete Wavelet Transform

Discrete wavelet transform provides multi-resolution analysis at much finer scales by decomposing a signal into low-frequency components and their associated high-frequency components. A large number of wavelet families exist to define the mother wavelet function for a given problem. The most popular wavelet families are Haar and Daubechies [8]. Due to their high regularity and symmetry, Daubechies wavelets have achieved success in lung nodule classification [53]. We have used a 2-D Daubechies wavelet with four levels of decomposition to represent the ROI. For each level of decomposition, four frequency sub-bands are obtained. The low-frequency content (approximation coefficients) of an image is represented by the LL sub-band, whereas the high-frequency content (detail coefficients) is represented by the LH, HL, and HH sub-bands, which give the vertical, horizontal, and diagonal details of the image. The feature vector is constructed from the approximation and detail coefficients at each level of decomposition using commonly used statistical measures, namely mean, standard deviation, and entropy. Hence, a 48-dimensional feature vector is obtained (4 sub-bands × 3 statistics × n levels = 48, where n = 4 is the number of levels).
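The 48-dimensional count can be checked with a small self-contained sketch. The paper does not state which Daubechies order was used, so this example assumes the Haar wavelet (Daubechies-1) purely because it can be written in a few lines without an external wavelet library. The entropy is assumed to be the Shannon entropy of a 16-bin histogram of the coefficients, the sub-band naming follows one common convention, and the function names are hypothetical. The ROI must be at least 16 pixels in each dimension for four levels.

```python
import numpy as np

def haar_dwt2(x):
    """One level of the 2-D Haar (db1) transform; returns LL, LH, HL, HH."""
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]  # drop odd row/column
    s = np.sqrt(2.0)
    lo = (x[0::2, :] + x[1::2, :]) / s   # low-pass across adjacent rows
    hi = (x[0::2, :] - x[1::2, :]) / s   # high-pass across adjacent rows
    ll = (lo[:, 0::2] + lo[:, 1::2]) / s
    lh = (lo[:, 0::2] - lo[:, 1::2]) / s
    hl = (hi[:, 0::2] + hi[:, 1::2]) / s
    hh = (hi[:, 0::2] - hi[:, 1::2]) / s
    return ll, lh, hl, hh

def band_stats(band, bins=16):
    """Mean, standard deviation, and histogram entropy (bits) of a sub-band."""
    counts, _ = np.histogram(band, bins=bins)
    p = counts[counts > 0] / counts.sum()
    entropy = float(-(p * np.log2(p)).sum())
    return [float(band.mean()), float(band.std()), entropy]

def dwt_features(roi, levels=4):
    """4 sub-bands x 3 statistics per level; 48 values for 4 levels."""
    current = np.asarray(roi, dtype=np.float64)
    features = []
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(current)
        for band in (ll, lh, hl, hh):
            features.extend(band_stats(band))
        current = ll                     # next level decomposes LL again
    return np.array(features)
```

A higher-order Daubechies filter would change the coefficient values but not the feature count, since the count depends only on the number of sub-bands, statistics, and levels.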

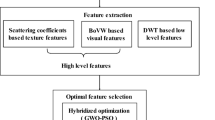

Hybrid Approach and Selection Criteria

The feature descriptors for the proposed system were selected based on correlation criteria. So as not to limit our study to texture descriptors, we initially also explored shape features such as area [55], centroid [56], perimeter [57], circularity [57], roundness [57], and eccentricity [58], and intensity features, i.e., mean [56], variance [56], maximum value inside [55], minimum value inside [55], skewness [56], and kurtosis [56], for CIS representation in order to obtain more diversity in the selection of feature combinations. The aim was to select features that show high intraclass correlation, since such features best match images of the same category and show strong reliability, and low interclass correlation, which is desirable for images of different categories in order to obtain better discrimination ability. In this approach, we selected two different categories from our dataset. The intraclass correlation is first computed between features of the same category, and the interclass correlation is then computed between features of different categories. Figure 3 provides the correlation comparison between the selected feature descriptors as well as the shape and intensity features. Since the LPQ, LTP, and DWT results are encouraging and better than those of the shape and intensity features for both correlation measures, we concatenate them (256 + 512 + 48) to form a single 816-dimensional feature vector.
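As a sketch of the normalization and concatenation step, the following code standardizes each descriptor block column by column and stacks the blocks side by side. It assumes that normalization is applied per feature dimension across the images of the feature database, which the text does not state explicitly; the function names are hypothetical.

```python
import numpy as np

def zscore_fit(block):
    """Column-wise mean and standard deviation of one descriptor block."""
    mean = block.mean(axis=0)
    std = block.std(axis=0)
    std[std == 0] = 1.0   # constant columns (e.g. empty bins) map to zero
    return mean, std

def build_feature_matrix(lpq, ltp, dwt):
    """Standardize each block, then concatenate to 256 + 512 + 48 columns."""
    blocks = []
    for block in (lpq, ltp, dwt):
        block = np.asarray(block, dtype=np.float64)
        mean, std = zscore_fit(block)
        blocks.append((block - mean) / std)
    return np.hstack(blocks)
```

In a retrieval setting, the mean and standard deviation estimated on the training images would normally be stored and reused to normalize query images, so that both live in the same feature space.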

Feature Selection

Feature ranking methods have low computational cost and avoid overfitting [59, 60]. The choice of feature selection (FS) method should be based on its accuracy, computational cost, and feature reduction ability. JMI offers a better trade-off between stability and accuracy than many other information theoretic feature selection methods over a variety of datasets [61]. We have ranked features using JMI for our proposed system, and to the best of our knowledge, it has not been explored previously for CBIR systems with medical applications. Furthermore, the feature selection process is carried out only on the training set, and the obtained feature indices are then also applied to the testing set. A detailed explanation of our selected feature selection technique is as follows:

Mutual Information

Mutual information (MI) measures the dependency between two random variables X and Y. According to Shannon’s definition, the entropy \(H\), which is the measure of uncertainty present in the distribution of X, is given by

If the variable Y (class) is observed for a given feature vector X, then the conditional entropy is denoted as [61]

Equation 9 indicates the remaining uncertainty present in X after we observe Y. Hence, the amount of uncertainty that is being reduced is given by [61]:

which is called the MI between X and Y. If the two variables X and Y are statistically independent, i.e., \(p(x,y) = p(x)\,p(y)\), then the MI is zero; otherwise, its value is greater than zero. The limitation of MI is that each feature is tested independently, without taking into account its relationship with other features, which can leave redundant features in the selected set.
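For reference, the three quantities discussed in this subsection have the following standard information-theoretic forms. This is a reconstruction in the notation of the surrounding text, assuming the usual definitions used in [61]; the equation numbers are inferred from the references to Eqs. 9 and 10 elsewhere in the text.

```latex
\begin{align}
H(X) &= -\sum_{x \in X} p(x)\,\log p(x) \tag{8}\\
H(X \mid Y) &= -\sum_{y \in Y} p(y) \sum_{x \in X} p(x \mid y)\,\log p(x \mid y) \tag{9}\\
I(X;Y) &= H(X) - H(X \mid Y)
        = \sum_{x \in X}\sum_{y \in Y} p(x,y)\,\log \frac{p(x,y)}{p(x)\,p(y)} \tag{10}
\end{align}
```

The second form of Eq. 10 makes the independence statement explicit: when \(p(x,y) = p(x)\,p(y)\), every logarithm equals \(\log 1 = 0\), so \(I(X;Y) = 0\).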

Joint Mutual Information

Joint mutual information ranks the relevance of input variables by considering MI and conditional MI. The main aim is to discard the features that are dependent on each other and obtain a feature set that has collectively higher predictive power because sometimes features are individually redundant but jointly relevant. According to [54], JMI is defined as

where the MI and conditional MI on the right side of the equation are maximized using Eqs. 9 and 10 to obtain the feature pair with maximum JMI. To get more insight into this, let our feature vector be n-dimensional, \((X_{i},\ldots ,X_{n})\), and let the number of features selected be k < n; then we can obtain all feature input pairs (i,j) that yield maximum JMI. The advantage of using conditional MI is that it removes redundant features by identifying inputs that are dependent on each other using conditional independence, denoted as

Thus, the conditional MI is zero if the feature \(X_{j}\) does not contribute to the information already given by \([X_{i},\ldots ,X_{n-1}]\). Finally, the optimal set of k features is obtained by sorting all feature scores and ranking them in descending order. It follows from the above explanation that, if there is any correlation among the selected descriptors, JMI discards the redundant features and selects a feature combination that provides complementary information and an effective representation of CISs.
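The effect of JMI on redundant features can be illustrated with a small sketch. The code below uses the greedy forward formulation of JMI that is common in the literature (for example, as reviewed in [61]): the first feature is the one with the highest MI with the class, and each subsequent feature maximizes the sum of \(I(X_{j}, X_{s}; Y)\) over the already selected features \(X_{s}\). Whether the authors used exactly this search is an assumption, as are the function names. Features are assumed to be discrete; continuous descriptors would need to be discretized first.

```python
from collections import Counter
from math import log2

def entropy(values):
    """Shannon entropy (bits) of a sequence of hashable values."""
    n = len(values)
    return -sum((c / n) * log2(c / n) for c in Counter(values).values())

def mutual_information(x, y):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for two discrete sequences."""
    return entropy(list(x)) + entropy(list(y)) - entropy(list(zip(x, y)))

def jmi_rank(columns, labels, k):
    """Greedy forward selection of k feature indices by the JMI score."""
    k = min(k, len(columns))
    relevance = [mutual_information(col, labels) for col in columns]
    selected = [max(range(len(columns)), key=lambda j: relevance[j])]
    while len(selected) < k:
        best_j, best_score = None, float("-inf")
        for j in range(len(columns)):
            if j in selected:
                continue
            # Joint relevance of candidate j paired with each selected feature.
            score = sum(
                mutual_information(list(zip(columns[j], columns[s])), labels)
                for s in selected
            )
            if score > best_score:
                best_j, best_score = j, score
        selected.append(best_j)
    return selected
```

In a toy case where the class is determined by two binary factors, feature 0 and feature 1 both copy the first factor, and feature 2 copies the second, all three features have the same individual MI with the class. JMI nevertheless picks feature 2 after feature 0, because the duplicate adds no joint information.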

Similarity Measurements

Visual Information Retrieval

The visual comparison of images in our work can be divided into two steps. First, visual similarity is computed between all database images using the feature database; next, it is computed between the query and all database images. All computations are performed with commonly used distance metrics, namely Euclidean, correlation, city block, Spearman, and cosine. Let \(X_{1}\) and \(X_{2}\) be two observations of dimension N; then the visual similarity \(D_{v}\) is obtained using the mathematical formulations given in Table 2. For the Spearman distance, rank vectors \(r_{1}\) and \(r_{2}\) are computed from the observations \(X_{1}\) and \(X_{2}\) and then used to compute the distance. Two observations are visually similar if the distance between them is small.
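The five distance metrics named above can be sketched as follows. The definitions used here are the conventional ones (correlation distance as one minus the Pearson correlation, Spearman distance as one minus the Pearson correlation of the rank vectors, with tied values sharing their average rank); they are assumed to match Table 2, which is not reproduced in this text.

```python
import numpy as np

def _ranks(x):
    """Ranks starting at 1, with tied values sharing their average rank."""
    x = np.asarray(x, dtype=np.float64)
    order = np.argsort(x, kind="stable")
    ranks = np.empty(len(x), dtype=np.float64)
    ranks[order] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        tied = x == value
        ranks[tied] = ranks[tied].mean()
    return ranks

def euclidean(x1, x2):
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    return float(np.sqrt(np.sum((x1 - x2) ** 2)))

def cityblock(x1, x2):
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    return float(np.sum(np.abs(x1 - x2)))

def cosine(x1, x2):
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    return float(1.0 - x1 @ x2 / (np.linalg.norm(x1) * np.linalg.norm(x2)))

def correlation(x1, x2):
    x1, x2 = np.asarray(x1, float), np.asarray(x2, float)
    return cosine(x1 - x1.mean(), x2 - x2.mean())

def spearman(x1, x2):
    # Correlation distance applied to the rank vectors r1 and r2.
    return correlation(_ranks(x1), _ranks(x2))
```

Because the Spearman distance depends only on the ordering of the feature values, it is unaffected by any monotonic rescaling of an observation, which may be one reason it behaves differently from the Euclidean distance on histogram-type features.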

Semantic Resemblance

Image analysis at the semantic level is mostly performed through classifiers, which capture the semantic information present in an image such that it is close to human perception. SVM is one such classifier; it is quite effective in capturing the semantics of an image and remains effective when the feature dimension is greater than the number of data points. SVM linearly separates data points by drawing a decision boundary (a line or hyperplane) between different classes. This problem is often characterized as a binary classification problem. However, kernel functions such as the radial basis function and the polynomial function can be used when the data are not linearly separable, and the approach can be extended to multi-class classification. The hyperplane function is given as

where the two learning parameters are \(w_{i}\) (weight) and b (bias), N is the number of training examples of data X, \(x_{i}\) is the ith training example, \(y_{i}\) is the label of the ith sample, and k is the kernel function. We have classified the feature database through an SVM [62] classifier with the Gaussian radial basis function (RBF) as the kernel to get semantic information in the form of probabilities. The Gaussian radial basis function uses nonlinear decision boundaries to classify the nine classes of CISs. This kernel function can be represented as

We have used one vs all strategy which fits K classifiers equal to number of classes C. When training the k th classifier, the examples of that class are considered positive and examples of remaining C − 1 classes are considered as negative. In query phase, label of the highest scoring (probability) class is assigned to query image. Finally, the semantic database is constructed by storing the semantic similarities between query and each database image given as [41]

The probability measure \(P(C_{I_{d}} |I_{q})\) shows the similarity between the query image Iq and database image Id, i.e., the more similar two images are, the higher the \(P(C_{I_{d}} |I_{q})\) and \(C_{I_{d}}\) show the true class of database image. It should be noted that higher probability should be mapped to lower distance and vice versa which is achieved by taking log.
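The mapping from class probabilities to semantic distances can be sketched in a few lines. The text only says that the mapping is "achieved by taking log", so the negative logarithm used here is an assumption, as are the function name and the small constant `eps` that guards against a probability of exactly zero.

```python
from math import log

def semantic_distances(query_class_probs, database_labels, eps=1e-12):
    """Semantic distance from the query to each database image.

    query_class_probs: mapping from class name to the classifier's
        probability that the query belongs to that class.
    database_labels: true class of each database image.
    Assumption: distance = -log(probability of the database image's class).
    """
    return [
        -log(max(query_class_probs[label], eps))
        for label in database_labels
    ]
```

With this mapping, database images whose class the classifier considers likely for the query receive a small distance, and all database images of the same class receive the same semantic distance.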

Balanced Graph Construction and Retrieval

Once we achieve visual and semantic comparisons, the next step is to combine both measures to obtain a weighted undirected graph G = (V,E) with a set of nodes V and edge weights for query image as shown in Fig. 4. The graph consists of different category ROI images which are considered as graph nodes for better illustration purposes. All the nodes are numbered from 1 to 5. The root node is a query image, and the leaf nodes represent different database images while edges represent the cost from one node to another. The cost \(w^{c}_{iq}\) between database images \(I=\left [i_{1},i_{2},i_{3},\ldots ,i_{n} \right ]\) is determined by using only visual similarity Dv whereas the cost between query and all database images is computed by using both visual and semantic similarity Ds with weights a and b as [41]

Since visual and semantic information is less effective when used in unequal proportions, we weigh them equally, in contrast to [41], which used unequal weights, to avoid any bias towards a single source of information. We call this a balanced graph. In the graph shown, the costs are marked with green lines, and the shortest path is computed by executing Dijkstra's algorithm [63] using the query node as the starting vertex and computing the distance to each leaf node. The graph is represented by its adjacency matrix, which is always symmetric. The final cost in the graph is shown by blue lines and is the final similarity score between the query and each database image. Furthermore, the illustration of the shortest path computation also describes the extraction of contextual information and depicts the relationship between database images. For example, the initial distance between query node 1 and node 4 was high, and there is a chance that the query may find a smaller distance to a node of some other class than to a node of its own class; however, by propagating similarity through node 2, a path with a lower distance than the direct one was chosen, which clearly shows the effectiveness of considering contextual information in retrieval problems. The final similarity scores determined through the shortest path are sorted, and the images are returned in descending order of their similarities (i.e., in ascending order of final distance).
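The graph construction and shortest path step can be sketched as follows. The code assumes that the query-to-database cost is the weighted sum a·D_v + b·D_s with a = b = 0.5 for the balanced graph, that database-to-database edges use visual distance only, and that all distances have already been scaled to comparable ranges; the function name and argument layout are hypothetical.

```python
import heapq

def graph_retrieval(dv_query, ds_query, dv_database, a=0.5, b=0.5):
    """Final distances from the query to each database image, plus ranking.

    dv_query[i], ds_query[i]: visual / semantic distance query -> image i.
    dv_database[i][j]: visual distance between database images i and j.
    """
    n = len(dv_query)
    # Node 0 is the query; nodes 1..n are the database images.
    direct = [a * dv_query[i] + b * ds_query[i] for i in range(n)]
    dist = [float("inf")] * (n + 1)
    dist[0] = 0.0
    heap = [(0.0, 0)]
    while heap:                                   # Dijkstra's algorithm
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue                              # stale heap entry
        for other in range(1, n + 1):
            if other == node:
                continue
            if node == 0:
                w = direct[other - 1]
            else:
                w = dv_database[node - 1][other - 1]
            if d + w < dist[other]:
                dist[other] = d + w
                heapq.heappush(heap, (d + w, other))
    final = dist[1:]
    ranking = sorted(range(n), key=lambda i: final[i])  # most similar first
    return final, ranking
```

In a two-image example where the second image has a direct cost of 1.0 to the query but lies at a visual distance of 0.1 from the first image (direct cost 0.2), the shortest path assigns it a final distance of about 0.3, which mirrors the propagation through an intermediate node described above.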

Results

Performance Metrics

We have evaluated our proposed method using standard performance metrics, namely precision at position k (p@k), average precision (AP), mean average precision (MAP), and the precision-recall (P-R) graph. The mathematical representations of these performance metrics are given below:

where N is number of retrieved images and rel(k) is an indicator function for relevancy; if item at position k is relevant, rel(k) is 1, otherwise 0.

AP is calculated by using p@k as follows:

\(N_{R}\) in Eq. 18 denotes the number of relevant images. MAP for a set of queries \(Q\) is the mean of the average precision scores computed for each query. It is given by

The retrieved images are considered relevant if the label of these images matches with the query image. The effectiveness of our retrieval process is mainly dependent upon four factors, i.e., the number of features selected, distance metric, classifier tuning, and determination of weight parameters a and b for graph construction. Since the weight parameters are already determined by balancing the graph, the number of features, distance metric, and classifier tuning parameters are decided based on the validation results. As shown in Table 3, the Spearman distance yields the highest value of 0.5912 MAP among all distance metrics using a subset of 200 features. It can also be seen from the results that the performance of the system tends to decrease when we alter the number of features from the optimal feature set. Hence, both these parameters are further used in the testing process for our visual similarity and graph-based similarity computation. Furthermore, to prove the effectiveness of SVM-Gaussian for semantic interpretations as well as determining their tuning parameters, we experimented with some other variants of SVM as well as linear (naive Bayes) and non-linear (Adaboost M2, KNN, Bagging) classifiers using hyperparameter optimization. The comparison of these classifiers in terms of classification accuracy (CAR) along with tuning parameters is given in Table 4. The SVM-Gaussian outperformed the other implemented classifiers by yielding 81.75 CAR, and its obtained tuned parameters are used in the testing process of our proposed system.
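The performance metrics defined in this subsection can be computed with a few lines of code. The sketch below assumes the conventional definitions: p@k is the fraction of relevant items among the first k results, AP averages p@k over the positions of relevant items, and the number of relevant images is counted within the retrieved list. The paper may instead normalize by the number of relevant images in the database, which would change AP when not all relevant images are retrieved.

```python
def precision_at_k(rel, k):
    """Fraction of relevant items among the first k results (rel is 0/1)."""
    return sum(rel[:k]) / k

def average_precision(rel):
    """Mean of p@k over the ranks k at which a relevant item appears."""
    n_relevant = sum(rel)
    if n_relevant == 0:
        return 0.0
    total = sum(
        precision_at_k(rel, k)
        for k in range(1, len(rel) + 1)
        if rel[k - 1]
    )
    return total / n_relevant

def mean_average_precision(rel_lists):
    """Mean of the AP scores over a set of queries."""
    return sum(average_precision(rel) for rel in rel_lists) / len(rel_lists)
```

For a ranked list with relevance [1, 0, 1, 0], the relevant items sit at ranks 1 and 3, so AP = (1/1 + 2/3) / 2 ≈ 0.83.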

Query Results and Comparisons

We have demonstrated the results of our proposed system using two different approaches. In the first, we used only visual features; in the second, we used a balanced graph that combines visual, semantic, and contextual similarity. The reason for this is that these two approaches describe the impact on the results of the previously reported schemes. Furthermore, in order to check the effectiveness of our proposed feature extraction and selection processes on retrieval, we first evaluated our proposed method based on visual information only using a single descriptor, then combined multiple descriptors, and finally performed feature selection over the combination. The results of these stages are shown in Table 5. The LPQ descriptor performed better than each of the other selected descriptors as well as the combination of all of them. LPQ’s better performance is due to its blur invariance property. Pairs of CIS images from three different categories are shown in Fig. 5 to explain this phenomenon. All the images are cropped to focus on the point of interest. If we compare the two images in each class visually, the image on the left appears slightly blurred compared with the other. Since LPQ is insensitive to blur and its phase does not change [51], it can capture the essential information present in the image without deteriorating the results. It can also be seen in Table 5 that the LPQ results, on average, for the Top 10–, 20–, 30–, and 50–ranked images are very close to those of the feature selection method based on the Fisher criterion and genetic algorithm [41], which makes it a strong candidate to describe CISs. Furthermore, the reduction in performance when all descriptors are combined is expected because of the very high dimensionality. 
Hence, with the application of feature selection, we combined the discriminative capabilities of all descriptors and obtained a feature set that finally led to better performance in terms of visual similarity. This feature set is then further employed for our proposed balanced graph-based retrieval. To check the effectiveness of our deployed feature selection technique, we experimented with other information theoretic feature selection techniques such as conditional mutual info maximization (CMIM)[64], max-relevance min-redundancy (MRMR)[65], interaction capping (ICAP)[66], conditional infomax feature extraction (CIFE)[67], and double input symmetrical relevance (DISR)[68] to compare the effectiveness of each of them for CISs retrieval. The results shown in Table 6 are retrieved based on balanced graph similarity for the Top 10–ranked images. It is obvious from these results that JMI is the optimal choice for feature selection among all feature selection approaches. More importantly, if we compare the results of Table 5 and Table 6, it can be inferred that at first place, the combination of feature descriptors and feature selection improved the performance of CBIR system and then the inclusion of semantic and contextual information in similarity measurement criteria further yielded better results. Hence, none of them can be separated in order to establish an effective retrieval system.

Discussion

We have made a detailed comparison of our proposed method with [41], whose performance is closest to that of our system. Recently, [69] proposed a CBIR system for bone tumor applications that combines visual and semantic information. However, in contrast to [41] and our work, they did not include contextual information, and they employed a relevance feedback mechanism, which requires user intervention and suffers from inter-reader variability. Furthermore, we believe that rather than evaluating the system on a few query images as done in [69], a more general approach is to evaluate the system on the entire testing set of query images and then compute its performance measures. Hence, we exclude [69] from the comparison. We start by comparing the results for the Top 10, 20, 30, 50, and 100 retrieved images in terms of AP and MAP based on visual and graph similarity, as shown in Table 7 and Table 8, respectively. The results show that our proposed method outperforms [41] for both similarity methods. Our method performs better in terms of AP for most classes and in terms of MAP by approximately 7% for the whole retrieval process. In general, as more images are retrieved in order to find more relevant ones, precision decreases because the additional results contain more irrelevant images than relevant ones. The robustness of our method lies in the fact that, as the number of retrieved images grows, its performance does not decrease significantly, in contrast to [41], as can be seen in the results. In addition, the effect of different classification accuracies on the overall retrieval process was discussed through P-R curves in [41], where a few examples were used to construct different query subsets.
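
As a reminder of how Top-k precision, AP, and MAP are typically computed for ranked retrieval, a minimal sketch follows. It uses one common definition of AP (the mean of the precision values at the ranks where a relevant image appears); the exact definition used for Tables 7 and 8 may differ. The function names and toy rankings are hypothetical, and an image is assumed relevant when its CIS label matches the query label.

```python
import numpy as np

def precision_at_k(retrieved_labels, query_label, k):
    """Fraction of the top-k retrieved images that share the query's label."""
    top = np.asarray(retrieved_labels[:k])
    return float(np.mean(top == query_label))

def average_precision_at_k(retrieved_labels, query_label, k):
    """Mean of precision@i over the ranks i <= k at which a relevant image appears."""
    hits = np.asarray(retrieved_labels[:k]) == query_label
    if not hits.any():
        return 0.0
    ranks = np.arange(1, len(hits) + 1)
    precisions = np.cumsum(hits) / ranks
    return float(precisions[hits].mean())

def mean_average_precision(rankings, query_labels, k):
    """Mean of the per-query AP values."""
    return float(np.mean([average_precision_at_k(r, q, k)
                          for r, q in zip(rankings, query_labels)]))

# Toy rankings (assumption): labels of retrieved images, best match first.
rankings = [["GGO", "AB", "GGO", "GGO", "CV"], ["AB", "AB", "GGO"]]
query_labels = ["GGO", "AB"]

p5 = precision_at_k(rankings[0], "GGO", k=5)
ap5 = average_precision_at_k(rankings[0], "GGO", k=5)
map5 = mean_average_precision(rankings, query_labels, k=5)
print(p5, ap5, map5)
```

Because irrelevant images become more frequent further down the ranking, precision at larger k tends to fall, which is the effect described above for larger retrieval sizes.
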
We extended the idea of [41] using a slightly different approach: instead of using multiple classification accuracies, we unbalance the weights in our graph by assigning 0.7 to the visual similarity and 0.3 to the semantic similarity. As a result, an unbalanced graph is created. Next, we used the entire query set to create a more general retrieval model. The main purpose is to find a relation between the semantic weight and classification accuracy. Assigning less weight to the semantic term implies a smaller contribution from semantic information. Hence, even if a query sample is classified correctly, a noticeable number of irrelevant results can still be retrieved on the basis of visual information, as shown in Fig. 6. This problem is fixed by balancing the graph. Classification accuracy is therefore directly linked to the choice of semantic weight, which in turn affects retrieval performance. The P-R curves of all three methods are shown in Fig. 7. The red, green, and blue curves correspond to retrieval based on visual similarity, balanced graph similarity, and unbalanced graph similarity, respectively. The area under the P-R curve (AUPRC) is shown in the top right corner of these curves. The AUPRC of our proposed balanced graph is higher than that of the other two methods. Lastly, [41] reported four retrieval methods, and the highest reported AUPRC was 0.4854 for the FCSS method, which is lower than that of both our balanced graph (0.5871) and our unbalanced graph (0.5347). Since the unbalanced graph method is similar to FCSS, we attribute its higher AUPRC to our feature combination and feature selection. The above discussion and the quantitative results indicate the robustness of our proposed method compared with [41].
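
The effect of the weighting can be sketched on a toy graph. The code below is an illustration under stated assumptions, not the graph construction used in this work: the semantic similarity is taken as a 0/1 same-class indicator, the edge cost is taken as 1 minus the combined similarity, the graph is fully connected, and the similarity values are invented. The names `combine_similarities`, `shortest_path_costs`, and `rank_from_costs` are hypothetical.

```python
import heapq
import numpy as np

def combine_similarities(visual, semantic, w_visual):
    """Weighted sum of visual and semantic similarity (0.5 gives the balanced graph)."""
    return w_visual * visual + (1.0 - w_visual) * semantic

def shortest_path_costs(similarity, source):
    """Dijkstra on a dense graph with edge cost 1 - similarity (an assumption)."""
    n = similarity.shape[0]
    cost = 1.0 - similarity
    dist = np.full(n, np.inf)
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v in range(n):
            if v != u and d + cost[u, v] < dist[v]:
                dist[v] = d + cost[u, v]
                heapq.heappush(heap, (dist[v], v))
    return dist

def rank_from_costs(costs, query):
    """Database indices ordered from most to least similar, excluding the query."""
    return [int(i) for i in np.argsort(costs) if i != query]

# Toy data (assumption): node 0 is the query; node 3 belongs to a different class.
visual = np.array([[1.0, 0.9, 0.2, 0.8, 0.3],
                   [0.9, 1.0, 0.9, 0.1, 0.3],
                   [0.2, 0.9, 1.0, 0.1, 0.3],
                   [0.8, 0.1, 0.1, 1.0, 0.1],
                   [0.3, 0.3, 0.3, 0.1, 1.0]])
labels = np.array([0, 0, 0, 1, 0])
semantic = (labels[:, None] == labels[None, :]).astype(float)

rank_visual = rank_from_costs(1.0 - visual[0], query=0)
rank_balanced = rank_from_costs(
    shortest_path_costs(combine_similarities(visual, semantic, 0.5), 0), query=0)
rank_unbalanced = rank_from_costs(
    shortest_path_costs(combine_similarities(visual, semantic, 0.7), 0), query=0)
print(rank_visual, rank_balanced, rank_unbalanced)
```

In this toy example, the visually similar but wrong-class node 3 is ranked last by the balanced graph, while with the 0.7/0.3 weighting it moves ahead of the relevant node 4. Node 2, which is visually far from the query, is reached through node 1 in both graphs, which is the role of the contextual shortest path.
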

Although many existing CBIR systems for medical applications (e.g., [44], [45], [11], [48]) achieved encouraging results, they mostly focused on improving the ranking of retrieved results or employed classifiers and their combinations to reduce the semantic gap; the impact of feature combinations alongside similarity learning mechanisms on the retrieved results has not been studied in detail. Our work addressed some of these issues through extensive experimentation with a diverse set of feature extraction and selection schemes. At the same time, a balanced graph strategy for similarity measurement was introduced, which led to an efficient retrieval system. We incorporated domain-specific knowledge by exploring the blur invariance property in CISs through LPQ. This exploration can be helpful in designing CAD systems for lung nodule classification and retrieval, since some of these signs are found in benign and malignant nodules. The selection of feature descriptors for the current problem was mainly based on intraclass and interclass correlation, which was further enhanced by the JMI criterion. The representation of CISs obtained through this mechanism yielded promising results and can therefore serve as a guide when designing and selecting feature descriptors for other application areas, since the performance of systems designed for machine learning and computer vision problems relies heavily on feature extraction schemes.

Our proposed system also exhibits certain limitations. First, it is evaluated on a single, limited dataset (LISS), because this is the only publicly available dataset that covers a variety of CIS categories. It can be tested on other existing datasets (e.g., LIDC) if the CISs present in lung nodules are annotated and categorized by an experienced radiologist. We plan to do this in future work to assess how well the proposed scheme generalizes.

The second limitation of our proposed work is the time-consuming process of graph construction for similarity measurement [41]. Traditional CBIR systems that employ only visual comparisons return less accurate results but are faster than our proposed work. Hence, our system achieves high accuracy at the expense of time. Furthermore, for each query image, it searches the whole database to compute the shortest path to similar instances, even though some database images may not belong to the same CIS category as the query image. This can be challenging and computationally intensive for the large image collections often used in deep learning. However, it can be mitigated by a search-space shrinking technique such as the one proposed in [70].

Finally, the AP of a few CISs is slightly lower than that of the rest, which in turn affects the overall MAP score. This may be due to the class imbalance created by the small number of examples in the class with the lowest AP. In the future, class balancing strategies and data augmentation techniques can be explored with the same retrieval process to further improve the retrieval results.

Conclusion

In this paper, a new combination of multiple descriptors is proposed. Local phase quantization, local ternary pattern, and discrete wavelet transform features were combined to form a complementary set of features. An optimal feature set is then obtained from the candidate features using a joint mutual information-based feature selection scheme, which offered a significant advantage over other information theoretic feature selection techniques in reducing the semantic gap and improving the performance of the CBIR system. We also explored the blur invariance property of LPQ and its impact on overall retrieval performance. The best similarity measure was obtained by combining visual and semantic similarities in equal proportion to yield a balanced graph and then learning contextual similarity through the shortest path to complete the retrieval process. Our proposed scheme achieved the highest MAP of 0.7027 and an AUC of 0.5871 for CBIR systems on the LISS dataset. The improvement in the overall retrieval system indicates that the choice of feature descriptors, feature selection, and similarity metric are key factors in the effectiveness of a CBIR system, and none of them can be ignored if a better and more stable retrieval system is the goal. Despite the limited dataset, the proposed strategy can be extended to deep learning through transfer learning, in which networks pre-trained on large databases are used. Deep models can also be trained from scratch on large databases from the same domain and their learned weights then transferred. We plan to explore this approach in future work. The fusion of handcrafted and deep features can also be investigated to improve performance. Modeling the neighborhood structure of the data with multiple graphs instead of a single graph for the similarity criterion is another direction for future work.

References

Liu X., Ma L., Song L., Zhao Y., Zhao X., Zhou C.: Recognizing common ct imaging signs of lung diseases through a new feature selection method based on fisher criterion and genetic optimization. IEEE journal of biomedical and health informatics 19 (2): 635–647, 2014

Han G., Liu X., Han F., Santika I. N. T., Zhao Y., Zhao X., Zhou C.: The liss —a public database of common imaging signs of lung diseases for computer-aided detection and diagnosis research and medical education. IEEE Transactions on Biomedical Engineering 62 (2): 648–656, 2014

Rizzo S., Botta F., Raimondi S., Origgi D., Fanciullo C., Morganti A. G., Bellomi M.: Radiomics: the facts and the challenges of image analysis. European radiology experimental 2 (1): 1–8, 2018

Peeken J. C., Bernhofer M., Wiestler B., Goldberg T., Cremers D., Rost B., Wilkens J. J., Combs S. E., Nüsslin F.: Radiomics in radiooncology–challenging the medical physicist. Physica Medica 48: 27–36, 2018

Gillies R. J., Kinahan P. E., Hricak H.: Radiomics: images are more than pictures, they are data. Radiology 278 (2): 563–577, 2016

Liu Y., Zhang D., Lu G., Ma W. Y.: A survey of content-based image retrieval with high-level semantics. Pattern recognition 40 (1): 262–282, 2007

Dubey S. R., Singh S. K., Singh R. K.: A multi-channel based illumination compensation mechanism for brightness invariant image retrieval. Multimedia Tools and Applications 74 (24): 11223–11253, 2015

Rashedi E., Nezamabadi-Pour H., Saryazdi S.: A simultaneous feature adaptation and feature selection method for content-based image retrieval systems. Knowledge-Based Systems 39: 85–94, 2013

ElAlami M. E.: A new matching strategy for content based image retrieval system. Applied Soft Computing 14: 407–418, 2014

Alajlan N., Kamel M. S., Freeman G. H.: Geometry-based image retrieval in binary image databases. IEEE Transactions on Pattern Analysis and Machine Intelligence 30 (6): 1003–1013, 2008

Behnam M., Pourghassem H.: Optimal query-based relevance feedback in medical image retrieval using score fusion-based classification. Journal of digital imaging 28 (2): 160–178, 2015

Larsen A. B. L., Vestergaard J. S., Larsen R.: Hep-2 cell classification using shape index histograms with donut-shaped spatial pooling. IEEE transactions on medical imaging 33 (7): 1573–1580, 2014

Dubey S. R., Singh S. K., Singh R. K.: Local wavelet pattern: a new feature descriptor for image retrieval in medical ct databases. IEEE Transactions on Image Processing 24 (12): 5892–5903, 2015

Chun Y. D., Kim N. C., Jang I. H.: Content-based image retrieval using multiresolution color and texture features. IEEE Transactions on multimedia 10 (6): 1073–1084, 2008

Ojala T., Pietikäinen M., Mäenpää T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis & Machine Intelligence 7: 971–987, 2002

Dubey S. R., Singh S. K., Singh R. K.: Local diagonal extrema pattern: a new and efficient feature descriptor for ct image retrieval. IEEE Signal Processing Letters 22 (9): 1215–1219, 2015

Quellec G., Lamard M., Cazuguel G., Cochener B., Roux C.: Wavelet optimization for content-based image retrieval in medical databases. Medical image analysis 14 (2): 227–241, 2010

Liu G. H., Yang J. Y.: Content-based image retrieval using color difference histogram. Pattern recognition 46 (1): 188–198, 2013

Chatzichristofis S. A., Zagoris K., Boutalis Y. S., Papamarkos N.: Accurate image retrieval based on compact composite descriptors and relevance feedback information. International Journal of Pattern Recognition and Artificial Intelligence 24 (02): 207–244, 2010

Guyon I., Elisseeff A.: An introduction to variable and feature selection. Journal of machine learning research 3: 1157–1182, 2003

Guldogan E., Gabbouj M.: Feature selection for content-based image retrieval. Signal, Image and Video Processing 2 (3): 241–250, 2008

Chun Y. D., Seo S. Y., Kim N. C.: Image retrieval using bdip and bvlc moments. IEEE transactions on circuits and systems for video technology 13 (9): 951–957, 2003

Saeys Y., Inza I., Larrañaga P.: A review of feature selection techniques in bioinformatics. bioinformatics 23 (19): 2507–2517, 2007

Cho H.C., Hadjiiski L., Sahiner B., Chan H.P., Helvie M., Paramagul C., Nees A.V.: Similarity evaluation in a content-based image retrieval (cbir) cadx system for characterization of breast masses on ultrasound images. Medical physics 38 (4): 1820–1831, 2011

Yue J., Li Z., Liu L., Fu Z.: Content-based image retrieval using color and texture fused features. Mathematical and Computer Modelling 54 (3-4): 1121–1127, 2011

Yu J., Amores J., Sebe N., Radeva P., Tian Q.: Distance learning for similarity estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence 30 (3): 451–462, 2008

Sethi I. K., Coman I. L., Stan D.: Mining association rules between low-level image features and high-level concepts. In: Data mining and knowledge discovery: theory, tools, and technology III, vol. 4384, pp. 279–290. International Society for Optics and Photonics, 2001

Mezaris V., Kompatsiaris I., Strintzis M. G.: An ontology approach to object-based image retrieval. In: Proceedings 2003 International Conference on Image Processing (Cat. No. 03CH37429), vol. 2, pp. II–511. IEEE, 2003

Vailaya A., Figueiredo M. A., Jain A. K., Zhang H. J.: Image classification for content-based indexing. IEEE transactions on image processing 10 (1): 117–130, 2001

Chen Y., Wang J. Z., Krovetz R.: An unsupervised learning approach to content-based image retrieval. In: Seventh international symposium on signal processing and its applications, 2003. Proceedings, vol. 1, pp. 197–200. IEEE, 2003

André B., Vercauteren T., Buchner A. M., Wallace M. B., Ayache N.: Learning semantic and visual similarity for endomicroscopy video retrieval. IEEE Transactions on Medical Imaging 31 (6): 1276–1288, 2012

Zhu L., Shen J., Xie L., Cheng Z.: Unsupervised visual hashing with semantic assistant for content-based image retrieval. IEEE Transactions on Knowledge and Data Engineering 29 (2): 472–486, 2016

Pedronette D. C. G., Torres R.d.S.: Exploiting contextual information for image re-ranking and rank aggregation. International Journal of Multimedia Information Retrieval 1 (2): 115–128, 2012

Perronnin F., Liu Y., Renders J. M.: A family of contextual measures of similarity between distributions with application to image retrieval. In: 2009 IEEE Conference on computer vision and pattern recognition, pp. 2358–2365. IEEE, 2009

Schwander O., Nielsen F.: Reranking with contextual dissimilarity measures from representational bregman k-means. In: VISAPP (1), pp. 118–123, 2010

El-Naqa I., Yang Y., Galatsanos N. P., Nishikawa R. M., Wernick M. N.: A similarity learning approach to content-based image retrieval: application to digital mammography. IEEE transactions on medical imaging 23 (10): 1233–1244, 2004

Bai S., Bai X.: Sparse contextual activation for efficient visual re-ranking. IEEE Transactions on Image Processing 25 (3): 1056–1069, 2016

Bai X., Yang X., Latecki L. J., Liu W., Tu Z.: Learning context-sensitive shape similarity by graph transduction. IEEE Transactions on Pattern Analysis and Machine Intelligence 32 (5): 861–874, 2009

Bai S., Sun S., Bai X., Zhang Z., Tian Q.: Improving context-sensitive similarity via smooth neighborhood for object retrieval. Pattern Recognition 83: 353–364, 2018

Bai S., Sun S., Bai X., Zhang Z., Tian Q.: Smooth neighborhood structure mining on multiple affinity graphs with applications to context-sensitive similarity. In: European conference on computer vision, pp. 592–608. Springer, 2016

Ma L., Liu X., Gao Y., Zhao Y., Zhao X., Zhou C.: A new method of content based medical image retrieval and its applications to ct imaging sign retrieval. Journal of biomedical informatics 66: 148–158, 2017

Rahman M. M., Desai B. C., Bhattacharya P.: Image retrieval-based decision support system for dermatoscopic images. In: 19th IEEE symposium on computer-based medical systems (CBMS’06), pp. 285–290. IEEE, 2006

Ballerini L., Li X., Fisher R. B., Rees J.: A query-by-example content-based image retrieval system of non-melanoma skin lesions. In: MICCAI International workshop on medical content-based retrieval for clinical decision support, pp. 31–38. Springer, 2009

Dhara A. K., Mukhopadhyay S., Dutta A., Garg M., Khandelwal N.: Content-based image retrieval system for pulmonary nodules: Assisting radiologists in self-learning and diagnosis of lung cancer. Journal of digital imaging 30 (1): 63–77, 2017

Wei G., Cao H., Ma H., Qi S., Qian W., Ma Z.: Content-based image retrieval for lung nodule classification using texture features and learned distance metric. Journal of medical systems 42 (1): 13, 2018

Suganya R., Rajaram S.: Content based image retrieval of ultrasound liver diseases based on hybrid approach. American Journal of Applied Sciences 9 (6): 938, 2012

Akakin H. C., Gurcan M. N.: Content-based microscopic image retrieval system for multi-image queries. IEEE transactions on information technology in biomedicine 16 (4): 758–769, 2012

Qayyum A., Anwar S. M., Awais M., Majid M.: Medical image retrieval using deep convolutional neural network. Neurocomputing 266: 8–20, 2017

Deserno T. M., Antani S., Long R.: Ontology of gaps in content-based image retrieval. Journal of digital imaging 22 (2): 202–215, 2009

Smeulders A. W., Worring M., Santini S., Gupta A., Jain R.: Content-based image retrieval at the end of the early years. IEEE Transactions on Pattern Analysis & Machine Intelligence 12: 1349–1380, 2000

Ojansivu V., Heikkilä J.: Blur insensitive texture classification using local phase quantization. In: International conference on image and signal processing, pp. 236–243. Springer, 2008

Tan X., Triggs W.: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE transactions on image processing 19 (6): 1635–1650, 2010

Orozco H. M., Villegas O. O. V., Sánchez V. G. C., Domínguez H.d.J.O., Alfaro M.d.J.N.: Automated system for lung nodules classification based on wavelet feature descriptor and support vector machine. Biomedical engineering online 14 (1): 9, 2015

Yang H., Moody J.: Feature selection based on joint mutual information. In: Proceedings of international ICSC symposium on advances in intelligent data analysis, pp. 22–25. Citeseer, 1999

Messay T., Hardie R. C., Rogers S. K.: A new computationally efficient cad system for pulmonary nodule detection in ct imagery. Medical image analysis 14 (3): 390–406, 2010

Akram S., Javed M. Y., Akram M. U., Qamar U., Hassan A.: Pulmonary nodules detection and classification using hybrid features from computerized tomographic images. Journal of Medical Imaging and Health Informatics 6 (1): 252–259, 2016

Tartar A., Kilic N., Akan A.: Classification of pulmonary nodules by using hybrid features. Computational and Mathematical Methods in Medicine, 2013

Wang Z., Chi Z., Feng D.: Shape based leaf image retrieval. IEE Proceedings - Vision, Image and Signal Processing 150 (1): 34–43, 2003

Chandrashekar G., Sahin F.: A survey on feature selection methods. Computers & Electrical Engineering 40 (1): 16–28, 2014

Xue B., Zhang M., Browne W. N., Yao X.: A survey on evolutionary computation approaches to feature selection. IEEE Transactions on Evolutionary Computation 20 (4): 606–626, 2015

Brown G., Pocock A., Zhao M. J., Luján M.: Conditional likelihood maximisation: a unifying framework for information theoretic feature selection. Journal of machine learning research 13: 27–66, 2012

Platt J., et al.: Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Advances in large margin classifiers 10 (3): 61–74, 1999

Shaffer C. A.: Data structures and algorithm analysis. Update 3: 0–10, 2013

Fleuret F.: Fast binary feature selection with conditional mutual information. Journal of Machine learning research 5: 1531–1555, 2004

Peng H., Long F., Ding C.: Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Transactions on Pattern Analysis & Machine Intelligence 8: 1226–1238, 2005

Jakulin A.: Machine learning based on attribute interactions. Ph.D. thesis, Univerza v Ljubljani, Fakulteta za računalništvo in informatiko, 2005

Lin D., Tang X.: Conditional infomax learning: an integrated framework for feature extraction and fusion. In: European conference on computer vision, pp. 68–82. Springer, 2006

Meyer P. E., Bontempi G.: On the use of variable complementarity for feature selection in cancer classification. In: Workshops on applications of evolutionary computation, pp. 91–102. Springer, 2006

Banerjee I., Kurtz C., Devorah A. E., Do B., Rubin D. L., Beaulieu C. F.: Relevance feedback for enhancing content based image retrieval and automatic prediction of semantic image features: Application to bone tumor radiographs. Journal of biomedical informatics 84: 123–135, 2018

Khatami A., Babaie M., Tizhoosh H. R., Khosravi A., Nguyen T., Nahavandi S.: A sequential search-space shrinking using cnn transfer learning and a radon projection pool for medical image retrieval. Expert Systems with Applications 100: 224–233, 2018

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

About this article

Cite this article

Kashif, M., Raja, G. & Shaukat, F. An Efficient Content-Based Image Retrieval System for the Diagnosis of Lung Diseases. J Digit Imaging 33, 971–987 (2020). https://doi.org/10.1007/s10278-020-00338-w

DOI: https://doi.org/10.1007/s10278-020-00338-w