Abstract

We consider kernel methods to construct nonparametric estimators of a regression function based on incomplete data. To tackle the presence of incomplete covariates, we employ Horvitz–Thompson-type inverse weighting techniques, where the weights are the selection probabilities. The unknown selection probabilities are themselves estimated using (1) kernel regression, when the functional form of these probabilities are completely unknown, and (2) the least-squares method, when the selection probabilities belong to a known class of candidate functions. To assess the overall performance of the proposed estimators, we establish exponential upper bounds on the \(L_p\) norms, \(1\le p<\infty \), of our estimators; these bounds immediately yield various strong convergence results. We also apply our results to deal with the important problem of statistical classification with partially observed covariates.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \((\varvec{Z},Y)\) be a \(\mathbb {R}^d\times \mathbb {R}\)-valued random vector with distribution function \(F_{{\varvec{Z}}, {\mathrm{Y}}}\) and consider the problem of estimating the regression function

based on the data \(\mathbb {D}_n = \{(\varvec{Z}_1,Y_1), \ldots ,(\varvec{Z}_n,Y_n)\}\), where \((\varvec{Z}_i, Y_i)\)’s are independently and identically distributed (i.i.d) random vectors from \(F_{{\varvec{Z}},{\mathrm{Y}}}\). In practice the data \(\mathbb {D}_n\) may be incomplete. More specifically, we are concerned with the important problem of estimating the regression function \(m(\varvec{z})\) when \(\mathbb {D}_n\) has incomplete covariates. The literature contains a large number of results on parametric and semi-parametric techniques for estimating a regression function in the presence of missing covariates. Robins et al. (1994) developed a class of locally and globally adaptive semi-parametric estimators using inverse probability weighted estimating equations. Lipsitz and Ibrahim (1996) examined the use of the EM algorithm to obtain parameter estimates for a general parametric regression model in the case of incomplete categorical covariates. Ibrahim et al. (1999) extended the results of Lipsitz and Ibrahim (1996) to general linear models for the case of mixed continuous and categorical covariates for non-ignorable missing data, in which the probability of observing the missing covariate is dependent on the possible missing value itself. Chen (2004) developed consistent maximum likelihood estimates for the parameters of the regression function by modeling the covariate distribution semi-parametrically. Liang et al. (2004) proposed estimators of the unknown components of a partially linear model and extend their results to longitudinal data. Zhang and Rockette (2005) constructed semi-parametric maximum likelihood estimates for the parameters of the regression function, without specifying the distribution for either the selection probabilities or the covariates, provided that the always-observable covariates are discrete or can be discretized. Sinha et al. (2014) proposed semi-parametric estimators for the model parameters using an estimated score approach, whereas Guo et al. (2014) considered the estimation of a semi-parametric multi-index model based on a weighted estimating equation approach. Bravo (2015) considered an iterative estimator based on inverse probability weighting and local linear estimation.

The literature on nonparametric regression function estimation with incomplete covariates is not as extensive as that of parametric and semi-parametric models. Results along these lines include the imputation based estimators of a distribution function proposed and studied by Cheng and Chu (1996). Mojirsheibani (2007) extended the approach of Cheng and Chu (1996) to the estimation of set- and function-indexed parameters, which are then used to study the uniform performance of certain nonparametric estimators of regression and density functions in the presence of incomplete covariates. Efromovich (2012) constructed adaptive orthogonal series estimators and studies the minimax rate of the MISE of these estimators when the regression function belongs to a Sobolev class. Faes et al. (2011) developed variational Bayes algorithms using penalized splines with a mixed model representation, whereas Hu et al. (2014) proposed a two-stage multiple imputation method for nonparametric estimation in quantile regression models.

This article focuses on kernel estimation of a regression function when there may be incomplete covariates in the data \(\mathbb {D}_n\). We recall that when \(\mathbb {D}_n\) is fully observable (i.e., there are no missing variables), the popular kernel regression estimator of \(m(\varvec{z})\) is given by

where \(\mathcal {K}: \mathbb {R}^d\rightarrow \mathbb {R}_+\) is an integrable function, called the kernel, and \(h_n>0\) is the corresponding smoothing parameter of the kernel. Devroye and Wagner (1980), and Spiegelman and Sacks (1980), established the \(L_1\) convergence \(\int |m_n(\varvec{z})-m(\varvec{z})|\mu (d\varvec{z})\overset{p}{\rightarrow } 0\), as \(n\rightarrow \infty \), where \(\mu \) is the probability measure of the random vector \(\varvec{Z}\). Devroye (1981) established the strong convergence of (2) in \(L_1\), whereas Devroye and Krzyz̀ak (1989) derived the following nonparametric exponential upper bound under the assumption that \(|Y|\le M\), for some \(M<\infty \). This classical result may be summarized as follows: for every \(\epsilon >0\) and n large enough

with \(c={\min }^2\left\{ \epsilon ^2/[128M^2(1+c_1)]~,~ \epsilon /[32L(1+c_1)]\right\} \), where \(c_1\) is a constant that depends on the kernel \(\mathcal {K}\) only (see Lemma 1). Clearly, this bound together with the Borel-Cantelli lemma yield the complete convergence (and thus the almost-sure convergence) of the \(L_p\) norm of \(m_n\). For more on such results, one may also refer to Kohler et al. (2003), Walk (2002a), and Walk (2002b). The problem can be substantially more complicated when the data contains covariate vectors that are not necessarily fully observable, and this will be the focus of this paper.

In the rest of this paper we develop kernel based estimators of the regression function (1), when a subset of the covariate vector is Missing At Random (MAR). In Sect. 2 we proceed to develop results similar to that of Devroye and Krzyz̀ak (1989), and derive exponential upper bounds on the general \(L_p\) norms of our estimators for \(1\le p<\infty \). In Sect. 3 we apply our results to the problem of statistical classification in the presence of partially observed covariates.

2 Main results

Our goal in this section is to construct kernel estimators of the regression function \(m(\varvec{z})=E[Y|\varvec{Z}=\varvec{z}]\) when some of the components of the covariate vector \(\varvec{Z}\) may be missing. Let \((\varvec{Z},Y)\) be an \(\mathbb {R}^{d+s}\times \mathbb {R}\)-valued random vector where \(\varvec{Z}=(\varvec{X}',\varvec{V}')'\), with \(\varvec{X}\in \mathbb {R}^d\), \(\varvec{V}\in \mathbb {R}^s\), and \(d,s\ge 1\). Here \(\varvec{X}\) is always observable but \(\varvec{V}\) may be missing at random. Define the Bernoulli random variable \(\Delta \) according to \(\Delta =1\) if \(\varvec{V}\) is observable (and \(\Delta =0\), otherwise). Therefore, the data may be represented as \(\mathbb {D}_n= \{(\varvec{Z}_1,Y_1,\Delta _1), \ldots ,(\varvec{Z}_n,Y_n,\Delta _n)\}\). We also define the selection probability (also called the missing probability mechanism) according to

If the right hand side of (4) is equal to a constant, then the missing probability mechanism is said to be Missing Completely At Random (MCAR). The MCAR assumption is unrealistic in practice. Therefore, in what follows, we will use the more commonly used Missing At Random (MAR) assumption. Under the MAR assumption, the probability that \(\varvec{V}\) is missing depends only on the variables which are always available. More specifically, under the MAR assumption, the selection probability in (4) becomes

For more on these and other missingness patterns one may refer to the monograph by Little and Rubin (2002). To motivate our proposed regression estimators, first consider the hypothetical situation where the selection probability \(\eta ^*\) is completely known. In this case, we propose to work with the modified kernel regression estimator given by

Observe that the estimator in (6) works by weighting the complete cases by the inverse of the selection probabilities, which is in the spirit of the classical estimator of Horvitz and Thompson (1952). In fact, this approach has been used by many authors in the literature; see, for example, Robins et al. (1994), Hirano and Ridder (2003), and Wang et al. (2010). As for the usefulness of \(\widehat{m}_{\eta ^*}(\varvec{z})\) as an estimator of \(m(\varvec{z})\), observe that (6) can be rewritten as

which is a ratio of kernel estimators for the following ratio of two conditional expectations

Therefore when the missing probability mechanism \(\eta ^*\) is known, (6) can be seen as a kernel regression estimator of the regression function \(E[Y|\varvec{Z}]=m(\varvec{Z})\). To study the performance of the estimator in (6), we examine its convergence properties in \(L_p\) norms. In what follows we shall assume that the selected kernel \(\mathcal {K}\) is regular: A nonnegative kernel \(\mathcal {K}\) is said to be regular if there are positive constants \(b>0\) and \(r>0\) for which \(\mathcal {K}({\varvec{z}})\ge b\, I\{{\varvec{z}}\in S_{0,r}\}\) and \(\int \sup \nolimits _{{\varvec{y}}\in {\varvec{z}}+S_{0,r}} \mathcal {K}({\varvec{y}})d{\varvec{z}} < \infty \), where \(S_{0,r}\) is the ball of radius r centered at the origin. This is also the type of kernel used by Devroye and Krzyz̀ak (1989). In fact, many of the kernels used in practice are regular kernels; the popular Gaussian kernel is a regular kernel. For more on regular kernels, one may also refer to Györfi et al. (2002). Before going any further, we will state a condition that amounts to requiring \(\varvec{V}\) to be observable with a nonzero probability:

Condition A1

\(\inf \nolimits _{\varvec{x}\in \mathbb {R}^d,y\in \mathbb {R}} \, P(\Delta =1|\varvec{X} = \varvec{x},Y=y) :=\eta _0>0\) for some \(\eta _0\).

Theorem 1

Let \(\widehat{m}_{\eta ^*}(\varvec{z})\) be the kernel regression estimator defined in (6), where \(\mathcal {K}\) is a regular kernel, and suppose that condition A1 holds. If \(|Y|\le M<\infty \) and \(h_n\rightarrow 0\) and \(nh_n^{d+s}\rightarrow \infty \), as \(n\rightarrow \infty \), then for every \(\epsilon >0\) and n large enough

where \(a\equiv a(\epsilon )={\min }^2(\epsilon ^2 \eta _0^{2}/[2^{2p+7}M^{2p}(1+c_1)],\epsilon \eta _0/[2^{p+5}M^{p}(1+c_1)])\), and \(c_1\) is the positive constant of Lemma 1 in the Appendix.

The proof of Theorem 1 is rather straightforward and follows from the upper bound in (3) and the fact that for any \(\varvec{z}\in \mathbb {R}^{d+s}\) and \(p\in [1,\infty )\) one has \(\left| \widehat{m}_{\eta ^*}(\varvec{z})-m(\varvec{z})\right| ^p\le \left( \left| \widehat{m}_{\eta ^*} (\varvec{z})\right| + \left| m(\varvec{z}) \right| \right) ^{p-1}\left| \widehat{m}_{\eta ^*}(\varvec{z})-m(\varvec{z}) \right| \le (2M)^{p-1} \left| \widehat{m}_{\eta ^*} (\varvec{z})-m(\varvec{z})\right| \,.\) In passing, we also note that the bound in Theorem 1 together with the Borel-Cantelli lemma immediately yield \(E[|\widehat{m}_{\eta ^*} (\varvec{Z})-m(\varvec{Z})|^p|\mathbb {D}_n] \overset{\text {a.s.}}{\rightarrow }0\), as \(n\rightarrow \infty \).

Clearly, the kernel estimator in (6) is useful only if the missing probability mechanism \(\eta ^*(\varvec{X},Y)=E[\Delta |\varvec{X},Y]\) is known (an unrealistic case). If \(\eta ^*\) is unknown, it must be replaced by some sample-based estimator. We consider two different estimators of \(\eta ^*\) and study the performance of the corresponding revised versions of (6). Our first estimator of \(\eta ^*(\varvec{X},Y)\) is itself a kernel estimator whereas the second one is based on the least-squares method.

2.1 The first estimator

Here we consider replacing the unknown selection probability \(\eta ^*(\varvec{X}_i,Y_i)\) in (6) by the following estimator

with the convention that \(0/0=0\), where \(\mathcal {H}{: }\mathbb {R}^d\rightarrow \mathbb {R}_+\) and \(\mathcal {J}{: }\mathbb {R}^{d+1}\rightarrow \mathbb {R}_+\) are the kernels used, and the smoothing parameter \(\lambda _n\) satisfies \(\lambda _n\rightarrow 0\) as \(n\rightarrow \infty \). The top estimator in (7) will be used if Y is a discrete random variable, otherwise the second estimator will be considered. Our modified kernel-type estimator of \(m(\varvec{z})=E[Y|\varvec{Z}=\varvec{z}]\) is given by

To study the regression function estimator (8), first we state a number of assumptions.

Condition A2

The kernel \(\mathcal {H}\) in (7) satisfies \(\int _{\mathbb {R}^d} \mathcal {H}({\varvec{w}})d{\varvec{w}}=1\) and \(\int _{\mathbb {R}^d} |w_i| \mathcal {H}({\varvec{w}})d{\varvec{w}}<\infty ,\) for \(i=1,\ldots , d,\) where \(\varvec{w}=(w_1,\ldots ,w_d)'\). Furthermore, the smoothing parameter \(\lambda _n\) satisfies \(\lambda _n\rightarrow 0\) and \(n \lambda _n^d\rightarrow \infty \), as \(n\rightarrow \infty \)

Condition A3

The random vector \({\varvec{X}}\) has a compactly supported probability density function, \(f({\varvec{x}})=\sum _{y\in \mathcal {Y}} f_y(\varvec{x})P(Y=y)\), which is bounded away from zero on its support, where \(f_y(\varvec{x})\) is the conditional density of \(\varvec{X}\) given \(Y=y\). Additionally, both f and its first-order partial derivatives are uniformly bounded on its support.

Condition A4

The partial derivatives \(\frac{\partial }{\partial x_i} \eta ^*(\varvec{x},y)\), exist for \(i=1,\ldots , d\) and are bounded uniformly, in \(\varvec{x}\), on the compact support of f.

Condition A2 is not restrictive since the choice of the kernel \(\mathcal {H}\) is at our discretion. Condition A3 is usually imposed in nonparametric regression in order to avoid having unstable estimates (in the tail of the p.d.f of \(\varvec{X}\)). Condition A4 is technical and has already been used in the literature; see, for example, Cheng and Chu (1996).

Theorem 2

Let \(\widehat{m}_{\widehat{\eta }}(\varvec{z})\) be as in (8), where \(\widehat{\eta }\) is the top estimator in (7). Suppose that \(|Y|\le M<\infty \) and that \(\mathcal {K}\) is a regular kernel. If \(h_n\rightarrow 0\) and \(nh_n^{d+s}\rightarrow \infty \), as \(n\rightarrow \infty \), then under conditions A1, A2, A3, and A4, for every \(\epsilon >0\) and every \(p\in [1,\infty )\), and n large enough,

where \(a_1,~a_2,\) and \(a_3\) are positive constants not depending on n or \(\epsilon \), and \(b\equiv b(\epsilon )>0\) does not depend on n.

Proof

See the Appendix. \(\square \)

Remarks

Theorem 2 has been stated (and proved) for the case where \(\widehat{\eta }\) is the top estimator in (7) which corresponds to the case where Y is a discrete random variable. This is particularly useful when Y is the class variable in a classification problem. However, Theorem 2 continues to hold, with different values of the constants \(a_1, a_2, a_3,\) and b, even if Y is a continuous random variable (in which case the bottom estimator in (7) will be used for \(\widehat{\eta }\)). The proof of this result is similar to (and, in fact, easier than) that of Theorem 2 and will not be given here. In this case \(\mathcal {H}\) will be replaced by the kernel \(\mathcal {J}\) and d will be replaced by \(d+1\) in condition A2, and f will be the pdf of \({\varvec{U}}=({\varvec{X}'},Y)'\) in condition A3. Furthermore, condition A4 will be expressed in terms of the partial derivatives \(\frac{\partial }{\partial u_i} \eta ^*({\varvec{u}})\), \(i=1,\ldots , d+1\), where \({\varvec{u}}=({\varvec{x}}', y)'\). In passing, we also note that in view of Theorem 2 and the Borel-Cantelli lemma, if \(\log n/(n\lambda _n^d)\rightarrow 0\) as \(n\rightarrow \infty \), then \(E[|\widehat{m}_{\widehat{\eta }} (\varvec{z})-m(\varvec{z})|^p|\mathbb {D}_n]\overset{\text {a.s.}}{\rightarrow }0\).

2.2 The second estimator

Another method to estimate the selection probability \(\eta ^*\) (under the MAR assumption) is the logistic regression model; this model’s flexibility and convenience has made it one of the most popular regression estimators for such cases. In addition to this, here we also discuss the least-squares method to estimate \(\eta ^*\). Suppose that it is known in advance that the regression function belongs to a given (known) class of functions. In this case the least-squares estimator is an alternative to the kernel estimator of \(\eta ^*\) and works as follows. Let \(\eta ^*\) belong to a known class of functions \(\mathcal {M}\) of the form \(\eta :\mathbb {R}^d\times \mathbb {R}\rightarrow [\eta _0,1]\), where \(\eta _0=\inf \limits _{\varvec{x},y}\, P(\Delta =1|\varvec{X}=\varvec{x},Y=y)>0\), as described in assumption A1. The least-squares estimator of the function \(\eta ^*\) is

Replacing \(\eta ^*(\varvec{X}_i,Y_i)\) in (6) with the least-squares estimator (9), we arrive at the revised regression estimator

To study the performance of \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}} (\varvec{z})\), we employ results from the empirical process theory [see, for example, van der Vaart and Wellner (1996, p. 83) and Pollard (1984, p. 25); also see Györfi, et al. (2002, p. 134)]. We say \(\mathcal {M}\) is totally bounded with respect to the \(L_1\) empirical norm if for every \(\epsilon >0\), there exists a subclass of functions \(\mathcal {M}_{\epsilon } = \{\mathcal {M}_1,\ldots ,\mathcal {M}_{\varvec{N}_{\epsilon }}\}\) such that for every \(\eta \in \mathcal {M}\) there exists a \(\eta ^{\dagger }\in \mathcal {M}_{\epsilon }\) with the property that for the fixed points \((\varvec{x}_1,y_1),\ldots ,(\varvec{x}_n,y_n)\) we have \(\frac{1}{n} \sum _{i=1}^n\left| \eta (\varvec{x}_i,y_i) - \eta ^{\dagger }(\varvec{x}_i,y_i)\right| <\epsilon \). The subclass \(\mathcal {M}_{\epsilon }\) is called an \(\epsilon \)-cover of \(\mathcal {M}\). The cardinality of the smallest such cover is called the \(\epsilon \)-covering number of \(\mathcal {M}\) and is denoted by \(\mathcal {N}_1(\epsilon ,\mathcal {M},\mathbb {D}_n)\). The following result summarizes the performance of the \(L_p\) norms of \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\).

Theorem 3

Let \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\) be as defined via (10) and (9) and suppose that \(\mathcal {M}\) is totally bounded with respect to the empirical \(L_1\) norm. Let \(\mathcal {K}\) in (10) be a regular kernel and suppose that condition A1 holds and that \(|Y|\le M<\infty \). Then, provided that \(h_n\rightarrow 0\) and \(nh_n^{d+s}\rightarrow \infty \), as \(n\rightarrow \infty \), one has for every \(\epsilon >0\), every \(p\in [1,\infty )\), and n large enough

with \(b\equiv b(\epsilon )\) as in Theorem 2, and \(b_1,\ldots ,b_5>0\) are constants not depending on n or \(\epsilon \).

We also note that \(E[|\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}} (\varvec{z})-m(\varvec{z})|^p| \mathbb {D}_n]\overset{\text {a.s}}{\rightarrow }0\), whenever \(\log (E\big [\mathcal {N}_1\big (b_3\wedge b_5,\mathcal {M},\mathbb {D}_n\big )\big ])/n\rightarrow 0\) as \(n\rightarrow \infty \).

Proof

See the Appendix. \(\square \)

2.3 Applications to classification with missing covariates

In this section we consider an application of our main results to the problem of statistical classification. Let \((\varvec{Z},Y)\) be an \(\mathbb {R}^{d+s}\times \{1,\ldots ,N\}\)-valued random vector, where Y is the class label and is to be predicted from the explanatory variables \(\varvec{Z}=(Z_1,\ldots ,Z_{d+s})\); here \(N\ge 2\) is an integer. In classification one searches for a function \(\phi :\mathbb {R}^{d+s}\rightarrow \{1,\ldots ,N\}\) such that the missclassification error probability \(L(\phi )=P\{\phi (\varvec{Z})\ne Y\}\) is as small as possible. Let \(\pi _k(\varvec{z}) = P\left\{ Y=k|\varvec{Z} = \varvec{z}\right\} ,~\varvec{z}\in \mathbb {R}^{d+s},~1\le k\le N\,,\) be the conditional class probabilities. Then, the best classifier called the Bayes classifier (i.e., the one that minimizes \(L(\phi )\)) is given by [see, for example, Devroye and Györfi (1985, pp. 253–254)]

We note that the Bayes classifier satisfies \(\max \nolimits _{1\le k\le N} \pi _k(\varvec{z})=\pi _{\phi _{B}(\varvec{z})}(\varvec{z})\,.\) In practice, the Bayes classifier is unavailable and one has to use a random sample \(\mathbb {D}_n=\{(\varvec{Z}_1,Y_1),\ldots ,(\varvec{Z}_n,Y_n)\}\) from the distribution \((\varvec{Z},Y)\) to construct estimates of \(\phi _B\). Now, let \(\widehat{\phi }_n(\varvec{Z})\) be any sample-based classifier to predict Y from the data \(\mathbb {D}_n\) and \(\varvec{Z}\). The missclassification error for this sample-based classifier is given by

Here we are interested in sample-based classifiers whose error rates converge to that of the optimal classifier. It can be shown [see, for example, Devroye and Györfi (1985, pp. 254)] that

Therefore, to show \(L_n(\widehat{\phi }_n) - L(\phi _B)\overset{\text {a.s.}}{\longrightarrow }0\,,\) it is sufficient to show that \(E[|\widehat{\pi }_k(\varvec{Z})-\pi _k(\varvec{Z})||\mathbb {D}_n] \overset{\text {a.s.}}{\longrightarrow }0\), as \(n\rightarrow \infty \), for \(k=1,\ldots ,N\).

In what follows, we consider the problem of classification with missing covariates. More specifically, we consider the situation where a subset of the covariate vector \(\varvec{Z}\) may be missing at random. For some relevant results along these lines see, for example, Mojirsheibani (2012). Our notation below is as before, i.e., for all \(i\ge 1\), the Bernoulli random variable \(\Delta _i\) will be 1 if the corresponding \(\varvec{Z}_i\) is fully observable. To construct our classifiers in the presence of missing covariates, first let

be the estimated class conditional probabilities, where \(\breve{\eta }\) is any estimator of the selection probability \(\eta \). Our proposed sample-based classifier is the plug-in type estimator of (11) given by

The following result summarizes the performance of \(\widehat{\phi }_n\).

Theorem 4

Let \(\widehat{\phi }_n\) be the classifier defined via (14) and (13).

-

(i)

If \(\breve{\eta }\) in (13) is taken to be the kernel estimator \(\widehat{\eta }\) defined in (7) then, under the conditions of Theorem 2, \(\widehat{\phi }_n\) is strongly consistent i.e., \(L_n(\widehat{\phi }_n)-L(\phi _B)\overset{\text {a.s.}}{\rightarrow }0\), as \(n\rightarrow \infty \).

-

(ii)

If \(\breve{\eta }\) in (13) is taken to be the least-squares estimator \(\widehat{\eta }_{{LS}}\) defined in (9) then, under the conditions of Theorem 3, \(\widehat{\phi }_n\) is strongly consistent i.e., \(L_n(\widehat{\phi }_n) - L(\phi _B)\overset{\text {a.s.}}{\rightarrow }0\), as \(n\rightarrow \infty \).

Proof of Theorem 4

-

(i)

Let \(\breve{\eta }\) be the kernel estimator \(\widehat{\eta }\) defined by the top estimator in (7). Then it is straightforward to show that the following counterpart of (12) holds

$$\begin{aligned} P\{L_n(\widehat{\phi }_n)-L(\phi _B)>\epsilon \} \le \displaystyle \sum _{i=1}^N P\left\{ \int \left| \widehat{\pi }_k(\varvec{z}) - \pi _k(\varvec{z})\right| \mu (d\varvec{z})>\frac{\epsilon }{N}\right\} \,. \end{aligned}$$The proof of the theorem now follows from an application of Theorem 2 and the Borel Cantelli lemma.

-

(ii)

The proof of part (ii) is similar and will not be given.\(\square \)

2.4 Some numerical examples

In this section we give some numerical examples to illustrate the performance of our proposed estimators. We will also compare our estimators with the one based on complete case analysis (i.e., the one that ignores the incomplete covariates and uses the fully observed cases only).

Example 1

Here we consider the performance of the estimator \(\widehat{m}_{\widehat{\eta }}\), as given in (8), the estimator \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\), as defined via (10), and the complete case estimator which uses the fully observed data only, i.e., the estimator \(\widehat{m}_{\mathrm{cc}}(\varvec{z}) := \left[ \sum _{i=1}^n \Delta _i Y_i \mathcal {K}\left( (\varvec{z}-\varvec{Z}_i)/h_n)\right) \right] \div \left[ \sum _{i=1}^n \Delta _i\mathcal {K}\left( (\varvec{z}-\varvec{Z}_i)/h_n)\right) \right] \,. \) To carry out our numerical studies, we generated \(n=150\) observations from each of the following three models:

- Model A: :

-

\(\varvec{Z}\in \mathbb {R}^2,~\hbox {and}~~~~Y= Z_1Z_2+Z_2^2+N(0,0.5)\)

- Model B: :

-

\(\varvec{Z}\in \mathbb {R}^4,~\hbox {and}~~~~Y= -\sin (2Z_1)+Z_2^2+Z_3-\exp (-Z_4)+N(0,0.5)\)

- Model C: :

-

\(\begin{array}{l}\varvec{Z}\in \mathbb {R}^4,~\hbox {and}~~~~ Y= Z_1+(2Z_2-1)^2+\frac{\sin (2\pi Z_3)}{2-\sin (2\pi Z_3)}+\sin (2\pi Z_4)\\ \qquad \qquad \qquad \qquad \qquad \quad +2\cos (2\pi Z_4) + 3\sin ^2(2\pi Z_4)\\ \qquad \qquad \qquad \qquad \qquad \quad +4\cos ^2(2\pi Z_4)+N(0,0.5) \end{array}\),

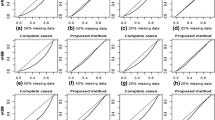

where the vector \(\varvec{Z}\) has a Gaussian distribution with mean \(\mathbf{0}\) and the covariance matrix \({\varvec{\Sigma }}=(\sigma _{ij})_{i,j\ge 1},\) where \(\sigma _{ij}= 2^{-|i-j|}\,.\) Here, model A is a toy example whereas models B and C are as in Meier et al. (2009). For model A, we allowed \(Z_2\) to be missing at random (MAR) based on the logistic missing probability mechanism \(P\{\Delta =1|Z_1,Y\}=\exp (1+0.2Z_1-0.5Y)/[1+\exp (1+0.2Z_1-0.5Y)]\,,\) where the coefficients (1, 0.2, \(-0.5\)) in this model were chosen to produce approximately 50 % missing values. For models B and C, we allowed \(Z_3\) and \(Z_4\) to be missing, but not \(Z_1\) and \(Z_2\). The missing probability mechanism for models B and C was taken to be \(P\{\Delta =1|Z_1,Z_2,Y\}=\exp (a+bZ_1+cZ_2+dY)/[1+\exp (a+bZ_1+cZ_2+dY)]\), where (a, b, c, d) is (\(0.1,-0.2,1,0.2\)) for model B and (\(0.8, 0.2, 0.2, -0.1\)) for model C. These choices result in approximately 50 % missing values in the data. For our kernel estimators and their smoothing parameters we used the cross-validation method of Racine and Li (2004) in the R package called “np” (Racine and Hayfield 2008). The parameters of the logistic missing probability mechanism were estimated using nonlinear least squares regression (based on the R package “nls2”). Next, to access the performance of the three estimators, we computed the empirical \(L_1\) and \(L_2\) errors of each estimator based on the observed data. The entire above process was repeated a total of 300 times (each time using a sample of \(n=150\) observations) and the average \(L_1\) and \(L_2\) error were computed. The numerical results appear in Table 1. The numbers appearing in brackets are the standard errors computed over 300 Monte Carlo runs. The last row of Table 1 correspond to the case where there are no missing data, i.e., \(P\{\Delta =1\}=1\). As Table 1 shows, the estimators \(\widehat{m}_{\widehat{\eta }}\) and \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\) have the ability to outperform the complete case estimator \(\widehat{m}_{\mathrm{cc}}\). We also note that the method based on the least squares estimator of the missing probability mechanism performs better than the one based on kernel regression, which is not surprising because we are assuming that we know that the true underlying missing probability mechanism follows a logistic model. The standard errors of \(\widehat{m}_{\widehat{\eta }}\) and \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\) are slightly higher than that of the complete case estimator; this is primarily due to the presence of the terms \(\widehat{\eta }\) and \(\widehat{\eta }_{{\mathrm{LS}}}\) that appear in (8) and (10). We have produced the boxplots of the 300 empirical \(L_1\) errors; these appear in the top row of Fig. 1. The boxplots for the 300 empirical \(L_2\) errors appear in Fig. 2 (top row).

Boxplots of the \(L_1\) errors of various estimators under different models and different missing patterns. In each of these 9 plots, boxplot 1 corresponds to \(\widehat{m}_{\mathrm{cc}}\), 2 corresponds to \(\widehat{m}_{\widehat{\eta }}\), 3 corresponds to \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\), and 4 correspond to the case with no missing data

Boxplots of the \(L_2\) errors of various estimators under different models and different missing patterns. In each of these 9 plots, boxplot 1 corresponds to \(\widehat{m}_{\mathrm{cc}}\), 2 corresponds to \(\widehat{m}_{\widehat{\eta }}\), 3 corresponds to \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\), and 4 correspond to the case with no missing data

Next, we consider some different missing probability mechanisms as follows. For the data corresponding to Model A, once again we allowed \(Z_2\) to be missing at random (MAR) based on the missing probability mechanism \(P\{\Delta =1|Z_1,Y\}=\left| \cos \big (e^{0.6 Y}-0.1 \sin (-2Z_1Y+Y^2)\big )\right| \). For models B and C, again we allowed \(Z_3\) and \(Z_4\) to be missing at random (but not \(Z_1\) and \(Z_2\)), and the MAR missing probability mechanism is taken to be \(P\{\Delta =1|Z_1,Z_2,Y\}=0.8\times \big |\cos \big (Z_1+Z_2-Y-2 \sin (Z_1Z_2Y)\big )\big |\). These choices result in approximately 50 % missing data in each of the 3 models. The results based on 300 Monte Carlo runs (with n=150 as before) appear in Table 2. The last row of Table 2 gives the results when there are no missing data, i.e., \(P\{\Delta =1\}=1\). Table 2 shows that \(\widehat{m}_{\widehat{\eta }}\) continues to perform better than the complete case estimator \(\widehat{m}_{\mathrm{cc}}\), but \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}\) is no longer a good estimator. This is not surprising because we are pretending, as many do, that the underlying MAR probabilities still follow logistic models (when in fact they are given by the more complicated trigonometric function defined earlier), and are using nonlinear least squares to estimate the parameters of the incorrectly assumed logistic model. The boxplots of the 300 empirical \(L_1\) errors corresponding to the left panel of Table 2 appear in the middle row of Fig. 1, whereas those of the empirical \(L_2\) errors of Table 2 appear in the middle row of Fig. 2.

Finally, we consider the MCAR setup where \(P\{\Delta =1|\varvec{Z}=\varvec{z},Y=y\}=P\{\Delta =1\}=0.5\). The results, based on 300 Monte Carlo runs (with n=150 for each run), appear in Table 3.

As this table shows, the complete case estimator \(\widehat{m}_{\mathrm{cc}}\) is the best estimator. This is no fluke; in fact, in view of Theorem 1, under the MACR assumption the estimator \(\widehat{m}_{\mathrm{cc}}\) is already strongly consistent in the \(L_p\) norm, \(p\ge 1\). We have also produced the boxplots of the empirical \(L_1\) and \(L_2\) errors of various estimators; these appear in bottom rows of Figs. 1 and 2.

Example 2

(Pima Indians Diabetes Data) In this example we consider the problem of kernel classification with missing covariates, as described in Sect. 2.3, and apply our results to the above data. This real data set involves 768 patients, 268 of whom have “tested positive” for diabetes (class 1), whereas the remaining 500 patients had negative test results (class 0). A complete description of this data set is available from the UCI Repository of machine learning databases at: http://www.icu.uci.edu/~mlearn/MLRepository.html. All of the covariates are numerical, but there are missing values among them. We will focus on one dominant missing pattern where the covariates ‘Triceps skin fold thickness’ and ‘2-Hour serum insulin’ are jointly missing for 227 patients. Here, we are considering the classification of a patient’s diabetes status (class 0 or class 1) based on the covariates \(Z_1=\) ‘Number of times pregnant’, \(Z_2=\) ‘Plasma glucose concentration’, \(Z_3=\) ‘Diastolic blood pressure’, \(Z_4=\) ‘Triceps skin fold thickness’, and \(Z_5=\) ‘2-Hour serum insulin’. The classifier works by assigning a patient to class 1 if her observed covariate vector \(\varvec{z}\) satisfies \(\widehat{\pi }_1(\varvec{z})>\widehat{\pi }_0(\varvec{z})\), otherwise she is assigned to class 0, where \(\widehat{\pi }_k(\varvec{z}),~k=0,1\), is the estimated class conditional probability given by (13). Once again, we consider three versions of \(\widehat{\pi }_k(\varvec{z})\): (i) the version that takes \(\breve{\eta }\) in (13) to be the kernel estimator \(\widehat{\eta }\) defined in (7), (ii) the version that takes \(\breve{\eta }\) in (13) to be the estimator \(\widehat{\eta }_{{LS}}\) based on logistic regression, and (iii) the complete case estimator that ignores the incomplete covariates and takes \(\breve{\eta }= 1\) in (13). Table 4 gives the misclassification error rates of the three classifiers based on the resubstitution method, i.e., the error committed by each classifier on the data. As Table 4 shows, both kernel and regression based estimators of the missing probability mechanism can produce better results than the naïve complete case estimator.

3 Discussion

In this paper we have proposed kernel methods to construct nonparametric estimators of a regression function with incomplete data. The presence of missing covariates is handled using a Horvitz–Thompson inverse weighting approach, where the weights are themselves estimates of the unknown selection probabilities. When weights are kernel estimators, our proposed nonparametric estimator of the regression function \(m(\varvec{z})=E[Y|\varvec{Z}=\varvec{z}]\) is given by \(\widehat{m}_{\widehat{\eta }}(\varvec{z})\), as defined by (8), and the least squares estimator is given by \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\), defined by (10). Using numerical studies we have also compared the performance of these two estimators with that of the complete case estimator that deletes the incomplete observations and only uses the fully observed data, i.e. the estimator

In fact, our numerical results in Sect. 2.4 show that, in general, both \(\widehat{m}_{\widehat{\eta }}(\varvec{z})\) and \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\) have the ability to outperform \(\widehat{m}_{\mathrm{cc}}(\varvec{z})\) (except when the missing pattern is MCAR). This is not surprising since the complete case estimator in (15) is, in general, an incorrect estimator. To appreciate this, observe that (15) is equal to

which is the kernel estimator of the ratio \(E[\Delta Y|\varvec{Z}=\varvec{z}]\div E[\Delta |\varvec{Z}=\varvec{z}]\) which is, in general, not equal to \(E[Y|\varvec{Z}=\varvec{z}]=m(\varvec{z})\), [not even under the MAR assumption (5)]. Therefore, the results in Theorems 2 and 3 on the \(L_p\) norms of \(\widehat{m}_{\widehat{\eta }}(\varvec{z})\) and \(\widehat{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\) fail to hold for the estimator \(\widehat{m}_{\mathrm{cc}}(\varvec{z})\).

A main issue with any kernel type estimator is the choice of the bandwidth. In the case of kernel regression estimators, a popular choice of the bandwidth is the one that minimizes the Integrated Squared Error (ISE) of the corresponding kernel regression estimator. Since ISE depends on the underlying unknown regression and density functions, Hardle and Marron (1985) replace them with “leave-one-out” estimators which are then used to define their cross-validation bandwidth selection rule. A more recent approach is based on the cross-validation method of Racine and Li (2004), which is implemented in the ‘R’ package called “np” (see Racine and Hayfield (2008)); in fact, we have utilized this method in our numerical studies. Better results may be achievable for the first estimator [which is defined via (8) and (7)] if the two bandwidths were selected simultaneously to minimize the cross-validation estimate of an error criterion such as ISE, but we have not pursued that path in this paper.

4 Appendix

We first state a number of technical lemmas that will be used in the proofs of out main results.

Lemma 1

Let K be a regular kernel, and let \(\mu \) be any probability measure on the Borel sets of \(\mathbb {R}^{d+s}\). Then, there exists a finite positive constant \(c_1\), only depending on the kernel K, such that for all \(h_n>0\)

This lemma and its proof is given in Devroye and Krzyz̀ak (1989, Lemma 1). Also, see Devroye and Wagner (1980) as well as Spiegelman and Sacks (1980).

Lemma 2

Let K be a regular kernel, and put \(m_n^*(\varvec{z})=\sum _{i=1}^n Y_i K(\frac{\varvec{z}-\varvec{Z}_i}{h_n})/(nE[K(\frac{\varvec{z}-\varvec{Z}}{h_n})])\), where \((\varvec{Z}_i,Y_i)\)’s are i.i.d. \(\mathbb {R}^{d+s}\times [-M,M]\)-valued random vectors. Then, for every \(\epsilon >0\) and n large enough,

Here, \(\mu \) is the probability measure of \(\varvec{Z}\), \(m(\varvec{z})=E[Y|\varvec{Z}=\varvec{z}]\), and \(c_1\) is as in Lemma 1.

For a proof of this result see, for example, Györfi, et al. (2002, Lemma 23.9).

Lemma 3

Let \(\phi (\varvec{X}_i,Y_i)=\eta ^*(\varvec{X}_i,Y_i)f(\varvec{X}_i)P(Y=Y_i|Y_i)\) where \(\eta ^*(\varvec{X}_i,Y_i)\) is as in (5), and put \(\widehat{\phi }(\varvec{X}_i,Y_i)=\lambda _n^{-d} n^{-1}\sum _{j=1}^n\Delta _j I\{Y_j=Y_i\}\mathcal {H}((\varvec{X}_i-\varvec{X}_j)/\lambda _n)\) where \(\lambda _n\) and the kernel \(\mathcal {H}\) are as in (7). Suppose that conditions A2, A3, and A4 hold.

Then,

where \(c_2\) is a positive constant not depending on n.

Proof of lemma 3

The proof is similar to that of Mojirsheibani (2012, Lemma 3) and goes as follows. First note that

Using a one-term Taylor expansion, we can bound \(\Delta _{n,i}(1)\) as follows

where \(X_{1,k}\) and \(X_{i,k}\) are the kth components of \(\varvec{X}_1\) and \(\varvec{X}_i\), respectively, and \(\varvec{X}^{\dagger }\) is on the interior of the line segment joining \(\varvec{X}_1\) and \(\varvec{X}_i\). Therefore,

To bound the term \(\Delta _{n,i}(2)\), first note that

Now, let \(f_y(\varvec{x})\) be the conditional pdf of \(\varvec{X}\), given \(Y=y\), and observe that

Therefore, by condition A2 and the fact that \(P(Y = Y_i|Y_i) = \sum _{y\in \mathcal {Y}} P(Y=y)I\{Y_i=y\}\), one finds

Now, a one-term Taylor expansion and the fact that \(|\eta ^*(\varvec{X}_i,Y_i)|\le 1\) yield

This completes the proof of Lemma 3 \(\square \)

Proof of theorem 2

To prove Theorem 2, we first define the following kernel-type estimators:

Using the expressions in (17), and upon observing that \(|\tilde{m}_{\widehat{\eta }}(\varvec{z})/\overline{m}_{\widehat{\eta }} (\varvec{z})|\le M\), one obtains

Therefore, with \(\tilde{m}_{\widehat{\eta }}(\varvec{z})\) and \(\overline{m}_{\widehat{\eta }}(\varvec{z})\) as in (17), and \(\tilde{m}_{\eta ^*}(\varvec{z})\) and \(\overline{m}_{\eta *}(\varvec{z})\) as in (16), we have that for every \(\epsilon >0\)

But, by (3), in view of the result of Devroye and Krzyz̀ak (1989),

where \(b\equiv b(\epsilon )={\min }^2\big (\epsilon ^2 \eta _0^{2p}/[2^{4p+7}M^{2p}(1+\eta _0)^{2p-2}(1+c_1)]\,,\epsilon \eta _0^{p}/[2^{2p+5}M^{p}(1+\eta _0)^{p-1}(1+c_1)]\big ).\) To deal with the term \(P_{n,1}\), first observe that

However, we can bound \(\int S_{n,1}(\varvec{z})\mu (d\varvec{z})\) as follows

where the last line follows from Lemma 1 in the Appendix. To bound \(\int S_{n,2}(\varvec{z})\mu (d\varvec{z})\), we have

Therefore, using (19) and (20) we have

The bound for the term \(P_{n,3}\) follows in a similar fashion as in \(P_{n,1}\). More specifically, we first note that

The integral \(\int S'_{n,1}(\varvec{z})\mu (d\varvec{z})\) can be upper-bounded similar to the term \(\int S_{n,1}(\varvec{z})\mu (d\varvec{z})\) in (19); in fact we have

For the term \(\int S'_{n,2}(\varvec{z})\mu (d\varvec{z})\), we have

Therefore, \(P_{n,3}\) can be bounded as follows

Thus, in view of (21), (22), (23), (24), (25), and (26), we find

First note that upon taking \(m(\varvec{z})=1\) in Lemma 2 (in the Appendix) and \(Y_i\overset{\text {a.s.}}{=}1\) for all \(i=1,\ldots , n\), we have

for n large enough, where \(a_1=\eta _0^{2p}/(2^{4p+8}\, 3^{2p}c_1^2 M^{2p})\). To deal with the term \(T_{n,1}\), define the terms

where \(\lambda _n\) and \(\mathcal {H}\) are as in (7), and note that

Also, note that \(|\widehat{\Phi }\left( \varvec{X}_i,Y_i\right) /\widehat{\Omega } \left( \varvec{X}_i,Y_i\right) |\le 1\). Using the above notation, observe that

Now, let \(0<f_0:= \inf \limits _{\varvec{x}\in \mathbb {R}^d} f(\varvec{x})\); see condition A3. Then we have

Therefore,

where \(t=\epsilon \eta _0^{p+1}\omega _0/[2^{2p+3}\, 3^{p-1} M^p c_1]>0\), with \(\omega _0\) as in (33). Now, for \(i=1,\ldots ,n\) and \(j=1\ldots ,n,\,j\ne i\), put

and observe that

But, conditional on \((\varvec{X}_i,Y_i)\), the terms \(\Gamma _j(\varvec{X}_i,Y_i)\,, j=1,\ldots ,n\,,j\ne i\), are independent zero-mean random variables, bounded by \(-\lambda _n^{-d} \Vert \mathcal {H}\Vert _{\infty }\) and \(+\lambda _n^{-d} \Vert \mathcal {H}\Vert _{\infty }\). Furthermore, conditional on \((\varvec{X}_i,Y_i)\), we have \(\text {Var}(\Gamma _j(\varvec{X}_i,Y_i)|\varvec{X}_i,Y_i) = E[\Gamma _j^2(\varvec{X}_i,Y_i)|\varvec{X}_i,Y_i]\le \lambda _n^{-d} \Vert \mathcal {H}\Vert _{\infty } \Vert f \Vert _{\infty }\). Therefore by an application of Bernstein’s Inequality (Bernstein 1946), we find

for n large enough; here the last line follows by the fact that in bounding \(P\{|\widehat{\eta }(\varvec{X}_i,Y_i)-\eta ^*(\varvec{X}_i,Y_i)|>\epsilon \eta _0^{p+1}/[2^{2p+2}\, 3^{p-1} c_1 M^p]\}\), we only need to consider \(\epsilon \in (0,(2^{2p+2}\, 3^{p-1} c_1 M^p)/\eta _0^{p+1})\), because \(|\widehat{\eta }(\varvec{X}_i,Y_i)-\eta ^*(\varvec{X}_i,Y_i)|\le 1\). Similarly, since \(\widehat{\Omega }(\varvec{X}_i,Y_i)\) is a special case of \(\widehat{\Phi }(\varvec{X}_i,Y_i)\), with \(\Delta _j=1\) for \(j=1,\ldots ,n\), one finds an upper bound for (34) as such

for n large enough. Combining (36) and (37), we obtain

where \(a_2= (\eta _0^{2p+2}\omega _0^2)/(2^{4p+9}\,3^{2p-2}\, c_1^2 M^{2p}\Vert H\Vert _{\infty }[\Vert f\Vert _{\infty }+\omega _0/12])\). Finally, to deal with the term \(T_{n,3}\), first note that \(P\{\widehat{\eta }(\varvec{X}_i,Y_i)<\eta _0/2\} \le P\{|\widehat{\eta }(\varvec{X}_i,Y_i)-\eta ^*(\varvec{X}_i,Y_i)|\ge \eta _0/2\}\). Therefore, by the arguments that lead to the derivation of the bound on \(T_{n,1}\), we have

where \(a_3 = (\eta _0^2 \omega _0^2)/(2^7\Vert H\Vert _{\infty }[\Vert f\Vert _{\infty }+\eta _0/48])\), which does not depend on \(\epsilon \) or n. Consequently, in view of (27), (28), (38), and (39), one finds

This completes the proof of Theorem 2. \(\square \)

Proof of Theorem 3

Start by defining the quantities

Since \(|\tilde{m}_{\widehat{\eta }_{{\mathrm{LS}}}} (\varvec{z})/\overline{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})|\le M\), one obtains

Therefore, with \(\tilde{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\) and \(\overline{m}_{\widehat{\eta }_{{\mathrm{LS}}}}(\varvec{z})\) as in (40), and \(\tilde{m}_{\eta ^*}(\varvec{z})\) and \(\overline{m}_{\eta *}(\varvec{z})\) as in (16), we can use simple algebra to show that for every \(\epsilon >0\)

But, as an immediate consequence of the main result of Devroye and Krzyz̀ak (1989) (see (3)), we have

where b is as in Theorem 2. To deal with the term \(Q_{n,1}\), first note that

Now, using the arguments that lead to (19), we find

Furthermore, the term \(\int \pi _{n,2}(\varvec{z})\mu (d\varvec{z})\) can be bounded as in (20), i.e., we have

Now, put \(\epsilon _1=\epsilon \eta _0^{p-1}/(2^{3p-1}M^p)\) and observe that combining (42) and (43) one finds

Finally, to bound \(Q_{n,3}\), first note that

But, once again, the arguments that lead to (19) and (20) yield

and

Next, with \(\epsilon _1=\epsilon \eta _0^{p-1}/(2^{3p-1}M^p)\), it is straightforward to see that

Thus, from (44), (45), (46), and (47), it follows that

Now, upon taking \(Y_i\overset{\text {a.s.}}{=}1~, i=1,\ldots ,n\), and \(m(\varvec{z})=1\) in lemma 2, one obtains

where \(b_1 = \eta _0^{2p}/(2^{6p+8} M^{2p} c_1^2)\). To deal with the term \(U_{n,1}\), observe that

To handle the term (50), first put \(\epsilon _2=\epsilon _1\eta _0^2/(2^5 c_1)>0\) and let the class of functions \(\mathcal {G}\) be defined as \(\mathcal {G}=\{g_{\eta }~|~g_{\eta }(\varvec{x},y)=|\eta (\varvec{x},y)-\eta ^*(\varvec{x},y)|\,,~\eta \in \mathcal {M}\}\). Now, observe that for any \(g_{\eta },g_{\eta ^{\dagger }}\in \mathcal {G}\) one has

Therefore, if \(\mathcal {M}_{\epsilon _2}=\{\eta _1,\ldots ,\eta _{N_{\epsilon _2}}\}\) is a minimal \(\epsilon _2\)-cover of \(\mathcal {M}\) with respect to the empirical \(L_1\) norm, then \(\mathcal {G}_{\epsilon _2}=\{g_{\eta _1},\ldots ,g_{\eta _{N_{\epsilon _2}}}\}\) will be an \(\epsilon _2\)-cover of \(\mathcal {G}\). Futhermore, the \(\epsilon _2\)-covering numbers for \(\mathcal {G}\) and \(\mathcal {M}\) satisfy \(\mathcal {N}_1(\epsilon _2,\mathcal {G},\mathbb {D}_n)\le \mathcal {N}_1(\epsilon _2,\mathcal {M},\mathbb {D}_n)\). Now, using standard results from the empirical process theory [see Pollard (1984, p. 25), or Theorem 9.1 of Györfi, et al. (2002, p. 136 )], one finds

where \(b_2 =\eta _0^{p+1}/(2^{3p+4} M^p c_1)\) and \(b_3 = \eta _0^{2p+2}/(2^{6p+9} M^{2p} c_1^2 )\). Next, to deal with the term (51), note that by the Cauchy-Schwartz inequality we have

Since

one can write

Therefore, in view of (55),

Now, let \(\Psi = \{\psi _{\eta }~|~\psi _{\eta }(\varvec{x},y,\delta ) = |\eta (\varvec{x},y)-\delta |^2\,,~~\eta \in \mathcal {M}\}\) and put \(\epsilon _3=\epsilon _1^2\eta _0^4/(2^8 c_1^2)\). Then for any \(\psi _{\eta },\psi _{\eta '}\in \Psi \) (where \(\eta , \eta '\in \mathcal {M}\)), one has

In other words, if \(\mathcal {M}_{\epsilon _3/2}=\{\eta _1,\ldots ,\eta _{N_{\epsilon _3/2}}\}\) is a minimal \(L_1\) empirical \(\epsilon _3/2\)-cover of \(\mathcal {M}\), then \(\Psi _{\epsilon _3}=\{\psi _{\eta _1},\ldots ,\psi _{\eta _{N_{\epsilon _3}}}\}\) is an \(\epsilon _3\)-cover of \(\Psi \). Additionally, one has that \(\mathcal {N}_1(\epsilon _3,\Psi ,\mathbb {D}_n)\le \mathcal {N}_1(\epsilon _3/2,\mathcal {M},\mathbb {D}_n)\). Thus, one obtains

where \(b_4 = \eta _0^{2p+2}/(2^{6p+6} M^{2p} c_1^2 )\) and \(b_5 = \eta _0^{4p+4}/(2^{12p+6} M^{4p} c_1^4)\). Therefore, in view of (48), (49), (52), and (57), we have

This completes the proof of Theorem 3. \(\square \)

References

Bernstein S (1946) The theory of probabilities. Gastehizdat Publishing House, Moscow

Bravo F (2015) Semiparametric estimation with missing covariates. J Multivar Anal 139:329–346

Chen HY (2004) Nonparametric and semiparametric models for missing covariates in parametric regression. J Am Stat Assoc 99(468):1176–1189

Cheng PE, Chu CK (1996) Kernel estimation of distribution functions and quantiles with missing data. Stat Sin 6:63–78

Devroye L (1981) On the almost everywhere convergence of nonparametric regression function estimates. Ann Stat 9:1310–1319

Devroye L, Györfi L, Lugosi G (1985) Nonparametric density estimation: the L1 view. Wiley, New York

Devroye L, Krzyz̀ak A (1989) An equivalence theorem for \(L_1\) convergence of kernel regression estimate. J Stat Plan Inference 23:71–82

Devroye L, Wagner T (1980) On the \(L_1\) convergence of kernel estimators of regression functions with applications in discrimination. Z. Wahrsch. Verw. Gebiete 51:15–25

Efromovich S (2012) Nonparametric regression with predictors missing at random. J Am Stat Assoc 106:306–319

Faes C, Ormerod JT, Wand MP (2011) Variational Bayesian inference for parametric and nonparametric regression with missing data. J Am Stat Assoc 106(495):959–971

Guo X, Xu W, Zhu L (2014) Multi-index regression models with missing covariates at random. J Multivar Anal 123:345–363

Györfi L, Kohler M, Krzyz̀ak A, Walk H (2002) A distribution-free theory of nonparametric regression. Springer, New York

Hardle W, Marron J (1985) Optimal bandwidth selection in nonparametric regression function estimation. Ann Stat 13:1465–1481

Hirano KI, Ridder G (2003) Efficient estimation of average treatment effects using the estimated propensity score. Econometrica 71:1161–1189

Horvitz DG, Thompson DJ (1952) A generalization of sampling without replacement from a finite universe. J Am Stat Assoc 47:663–685

Hu Y, Zhu Q, Tian M (2014) An efficient technique of multiple imputation in nonparametric quantile regression. J Math Stat 10:30–44

Ibrahim JG, Lipsitz SR, Chen MH (1999) Missing covariates in generalized linear models when the missing data mechanism is non-ignorable. J R Stat Soc Ser B (Statistical Methodology) 61(1):173–190

Kohler M, Krzyz̀ak A, Walk H (2003) Strong consistency of automatic kernel regression estimates. Ann. Inst. Stat. Math. 55:287–308

Liang H, Wang S, Robins J, Carroll R (2004) Estimation in partially linear models with missing covariates. J Am Stat Assoc 99(466):357–367

Lipsitz SR, Ibrahim JG (1996) A conditional model for incomplete covariates in parametric regression models. Biometrika 83(4):916–922

Little RJA, Rubin DB (2002) Statistical analysis with missing data. Wiley, New York

Meier L, van de Geer S, Bühlmann P (2009) High-dimensional additive modeling. Ann Stat 37:3779–3821

Mojirsheibani M (2007) Nonparametric curve estimation with missing data: a general empirical process approach. J Stat Plan Inference 137:2733–2758

Mojirsheibani M (2012) Some results on classifier selection with missing covariates. Metrika 75:521–539

Pollard D (1984) Convergence of stochastic processes. Springer, New York

Racine J, Hayfield T (2008) Nonparametric econometrics: the np package. J Stat Softw 27:1–32

Racine J, Li Q (2004) Nonparametric estimation of regression functions with both categorical and continuous data. J Econom 119:99–130

Robins JM, Rotnitzky A, Zhao LP (1994) Estimation of regression coefficients when some regressors are not always observed. J Am Stat Assoc 89(427):846–866

Sinha S, Saha KK, Wang S (2014) Semiparametric approach for non-monotone missing covariates in a parametric regression model. Biometrics 70(2):299–311

Spiegelman C, Sacks J (1980) Consistent window estimation in nonparametric regression. Ann Stat 8:240–246

van Der Vaart AW, Wellner JA (1996) Weak convergence and empirical processes with applications to statistics. Springer, New York

Walk H (2002a) On cross-validation in kernel and partitioning regression estimation. Stat Probab Lett 59:113–123

Walk H (2002b) Almost sure convergence properties of Nadaraya–Watson regression estimates. In: Modeling uncertainty. International Series of Operational Research and Management Science, vol 46. Kluwer Academic Publishing, Boston

Wang L, Rotnitzky A, Lin X (2010) Nonparametric regression with missing outcomes using weighted kernel estimating equations. J Am Stat Assoc 105:1135–1146

Zhang Z, Rockette HE (2005) On maximum likelihood estimation in parametric regression with missing covariates. J Stat Plan Inference 134:206–223

Acknowledgments

This work is supported by the National Science Foundation Grant DMS-1407400 of Majid Mojirsheibani.

Author information

Authors and Affiliations

Corresponding author

Additional information

This work is supported by the NSF Grant DMS-1407400 of Majid Mojirsheibani.

Rights and permissions

About this article

Cite this article

Reese, T., Mojirsheibani, M. On the \(L_p\) norms of kernel regression estimators for incomplete data with applications to classification. Stat Methods Appl 26, 81–112 (2017). https://doi.org/10.1007/s10260-016-0359-6

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10260-016-0359-6