Abstract

In simulation of cardiovascular processes and diseases patient-specific model parameters, such as constitutive properties, are usually not easy to obtain. Instead of using population mean values to perform “patient-specific” simulations, thereby neglecting the inter- and intra-patient variations present in these parameters, these uncertainties have to be considered in the computational assessment. However, due to limited computational resources and several shortcomings of traditional uncertainty quantification approaches, parametric uncertainties, modeled as random fields, have not yet been considered in patient-specific, nonlinear, large-scale, and complex biomechanical applications. Hence, the purpose of this study is twofold. First, we present an uncertainty quantification framework based on multi-fidelity sampling and Bayesian formulations. The key feature of the presented method is the ability to rigorously exploit and incorporate information from an approximate, low fidelity model. Most importantly, response statistics of the corresponding high fidelity model can be computed accurately even if the low fidelity model provides only a very poor approximation. The approach merely requires that the low fidelity model and the corresponding high fidelity model share a similar stochastic structure, i.e., dependence on the random input. This results in a tremendous flexibility in choice of the approximate model. The flexibility and capabilities of the framework are demonstrated by performing uncertainty quantification using two patient-specific, large-scale, nonlinear finite element models of abdominal aortic aneurysms. One constitutive parameter of the aneurysmatic arterial wall is modeled as a univariate three-dimensional, non-Gaussian random field, thereby taking into account inter-patient as well as intra-patient variations of this parameter. We use direct Monte Carlo to evaluate the proposed method and found excellent agreement with this reference solution. Additionally, the employed approach results in a tremendous reduction of computational costs, rendering uncertainty quantification with complex patient-specific nonlinear biomechanical models practical for the first time. Second, we also analyze the impact of the uncertainty in the input parameter on mechanical quantities typically related to abdominal aortic aneurysm rupture potential such as von Mises stress, von Mises strain and strain energy. Thus, providing first estimates on the variability of these mechanical quantities due to an uncertain constitutive parameter, and revealing the potential error made by assuming population averaged mean values in patient-specific simulations of abdominal aortic aneurysms. Moreover, the influence of correlation length of the random field is investigated in a parameter study using MC.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The assessment of biomechanical factors of cardiovascular and other diseases using computational tools has led to tremendous progress in the understanding of the underlying processes and causes leading to severe medical conditions and often death. Furthermore, these computational models can offer new, unprecedented diagnostic and predictive possibilities, presenting a promising avenue to further advance in medical health care. Hence, the improvements achieved in the last decade have fueled the desire for the application of these tools in clinical settings and medical research.

However, most computational models require a multitude of patient-specific data and input parameters to accurately predict the relevant patient-specific biomechanical factors. Many of these aforementioned input parameters are unavailable or can only be estimated in some way. Often when truly patient-specific data are inaccessible assumptions about these parameters are made, or population-averaged values are used. The difference between the actual patient-specific values and any assumed or averaged values in the input parameters in a deterministic model of course translates to uncertainty or potential error in the computed quantities.

For instance, in the context of computational models for biomechanical problems, already the process to get from medical image data to a discretized model is a potential source for uncertainty and error in the computational geometry. Additionally, this task is often also dependent on the operator performing the segmentation and meshing process and thus not objective and reproducible. The differences in model geometry of course translate into differences in numerical results (Moore et al. 1997). Furthermore, the resolution of state-of-the-art clinical CT or MR scanners often is not high enough to determine certain important geometrical features. An even more severe problem is that patient-specific physical parameters, such as constitutive properties, almost never can be measured accurately. Many of these parameters intrinsically vary within and between patients and are also significantly affected by lifestyle, age, and diseases, which lead to further substantial variations. Moreover, as pointed out previously by Chen et al. (2013), uncertainty is introduced also by the often ambiguous choice of mathematical models and applied boundary conditions, which will affect the simulation results.

Let us consider, e.g., 3D models of the human vasculature in the following. As the wall thickness of blood vessels can generally not be obtained from medical images, assumptions about the wall thickness and its regional distribution are made when creating structural models of the vessel wall. Especially in cases where the patient suffers from a cardiovascular disease like arteriosclerosis or abdominal aortic aneurysm (AAA), vessel walls are subject to pathological changes resulting in a wall thickness deviating strongly from population mean values and exhibiting large spatial intra-patient variations (Reeps et al. 2012; Raghavan et al. 2006, 2011).

Another source of uncertainty are the unknown patient-specific constitutive properties of the vessel wall. As in the case of the wall thickness, the constitutive properties of the vessel wall are severely affected by advancing cardiovascular diseases. As shown in many ex vivo studies, the nonlinear material properties of an aneurysmatic arterial wall are subject to large inter- and intra-patient variations, and constitutive parameters determined by tensile tests vary significantly within a patient, patient cohort, and experimental setup in the various published studies (Reeps et al. 2012; Marini et al. 2011; Raghavan et al. 2006, 2011; Vande Geest et al. 2006b, c; Raghavan et al. 1996; Vallabhaneni et al. 2004; Thubrikar et al. 2001). Even in non-arteriosclerotic human aorta, the stiffness parameters reported in the literature easily span one order of magnitude (Roccabianca et al. 2014). Despite the progress that has been made regarding in vivo characterization of constitutive properties based on pulse wave velocity or local changes in diameter of the aorta (Schulze-Bauer and Holzapfel 2003; Astrand et al. 2011; Vappou et al. 2010), current in vivo approaches are not yet able to detect short-scale spatial variations. Furthermore, none of the above-mentioned approaches has been successfully applied and validated for, e.g., AAA or human aorta by tensile tests.

While the exact knowledge of patient-specific values for wall thickness and constitutive parameters may be irrelevant for pure computational fluid models, this is not the case for solid or fluid–solid interaction models. Therefore, in absence of truly patient-specific parameters, it is extremely important to be aware of the uncertainty in population averaged or assumed patient-specific model parameters. In lack of true patient-specific values, these uncertainties should be incorporated into the computational model to quantify the impact of the uncertainty on the computed results and to obtain more reliable predictions or worst-case scenarios. The identification and quantification of the uncertainties in the computational results will help to access the margin of error of simulation results due to uncertain model parameters, thus allowing estimates on the accuracy of deterministic models. This is crucial if computational models are, ultimately, to be used for diagnosis of cardiovascular diseases, or to obtain recommendations for prevention and treatment of individual patients. A prominent example is the patient-specific rupture risk stratification of AAA using finite element models to determine whether the patient should undergo elective surgery. Additionally, the results could help to improve computational models and provide directions for future research, as the major contributors to the output uncertainty are identified.

In order to achieve such a quantification of uncertainties, two major steps are required. First, a suitable probabilistic description of the uncertain model parameters must be found, and second, an appropriate approach to propagate the uncertainties through the model is needed. As far as uncertain material properties and arterial wall thickness are considered, two options exist to model these uncertainties in a probabilistic fashion. The simpler approach models the constitutive parameters as a spatially constant random variable, which follows a prescribed probability distribution, while thus neglecting spatial variability. The other option, which also accounts for spatial variations, are so-called random fields (Vanmarcke 2010; Shinozuka and Jan 1972). An essential feature of random fields is the ability to capture the spatial correlation, encoded in the autocorrelation function (ACF), of a varying property at different locations.

In either case, the resulting computational model is no longer deterministic, and hence, a stochastic problem must be solved. Various approaches to solve this kind of stochastic boundary value problem have been proposed in recent decades (Spanos and Zeldin 1998; Stefanou 2009; Panayirci and Schuëller 2011; Xiu 2010; Eldred 2009). However, only few approaches scale to large nonlinear problems with moderate to high stochastic dimension. The majority of the proposed approaches fall into one of the following two categories. The first is based on some form of Polynomial Chaos Expansion (PCE) (Xiu 2010; Ghanem and Spanos 2003; Xiu 2009). The major disadvantage of solution schemes based on PCE is the curse of dimensionality, which renders these approaches infeasible for problems with high stochastic dimension as is the case when considering random fields. The second broad class of algorithms is based on sampling approaches like Monte Carlo (MC) and all its variants (Metropolis and Ulam 1949; McKay et al. 1979; Bourgund and Bucher 1986; Cliffe et al. 2011; Pellissetti and Schuëller 2009; Pradlwarter et al. 2007; Olsson and Sandberg 2002; Neal 2001; Hurtado and Barbat 1998). As reported by Stefanou (2009), direct MC might still be the only universal approach for uncertainty quantification (UQ) in complex systems, because it only requires a deterministic solver and the ability to draw samples from the distributions of the uncertain input parameters. However, while the stochastic problem at hand can in principle be solved using direct MC, the computational costs associated with standard MC render this approach infeasible for realistic, nonlinear and hence quite complex biomechanical models, as MC requires typically several thousand model evaluations.

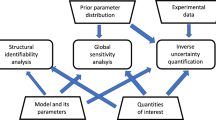

In this study, we present a framework which incorporates a probabilistic description of spatially varying model parameters, based on experimental data. Moreover, this framework allows for the efficient solution of the resulting stochastic boundary value problem using approximate models in combination with nonparametric Bayesian regression (Koutsourelakis 2009). We demonstrate that this approach is able to reproduce the MC reference solution at a fraction of the computational costs and delivers accurate results even if strongly simplified approximate models are used. Thus, the computational costs of UQ can be reduced to an acceptable level even for large-scale, nonlinear, and complex biomechanical problems with uncertainties modeled as random fields.

As a first step toward full UQ incorporating all relevant uncertain model parameters in patient-specific biomechanics, we exemplarily consider two patient-specific AAA geometries and the uncertainty in one of the constitutive parameters of the aneurysm wall. The constitutive parameter is modeled as random field, since our experimental data suggest that the chosen parameter exhibits high inter- and intra-patient variations. We present the method and demonstrate, through a proof of concept study, the impact of uncertainties present in the constitutive parameter in the arterial wall on mechanical quantities usually related to AAA rupture potential, such as von Mises stress/strain or strain energy density. In addition, we perform a parameter study to investigate the influence of the correlation length of the random field using direct MC.

Thus, the purpose of this work is twofold. On the one hand, a novel UQ approach is presented, and its efficiency and accuracy are shown for large-scale nonlinear models. On the other hand, in addition to the methodology presented in this paper, the uncertainties in the simulation results of patient-specific AAA models are quantified, and the ramifications for patient-specific computational assessment of AAA rupture potential are discussed.

To the knowledge of the authors, this is the first time that UQ with respect to a spatially correlated random quantity is performed employing nonlinear patient-specific finite element models. Hitherto, research regarding UQ in cardiovascular mechanics was limited to either idealized geometries and uncertainties modeled as independent random variables rather than correlated stochastic processes. For instance, Sankaran and Marsden (2011) investigated idealized geometries of AAAs considering parametric uncertainties such as the radius, while Chen et al. (2013) and Xiu and Sherwin (2007) studied the effect of uncertain parameters in one-dimensional models of the arterial network. Although we consider patient-specific models of AAA, we stress the fact that our approach is very general and can be applied to a wide range of problems and is by no means limited to this particular application.

The remainder of this paper is structured as follows. In Sect. 2, the stochastic boundary value problem and the probabilistic model used to describe the random and spatial variations of the constitutive parameter are provided. Section 3 summarizes the multi-fidelity MC approach utilized here and highlights important aspects. The described methodology is applied to two large, nonlinear, patient-specific finite element models of AAAs, and the results of these model problems are discussed in Sect. 4. By means of a simple proof concept example, we show in Sect. 5 that geometric uncertainties can be considered in addition to the constitutive uncertainty as well. Conclusions are drawn in Sect. 6.

2 Stochastic problem formulation

The material properties of AAAs are subject to large inter- and intra-patient variations in all of the material properties (Reeps et al. 2012; Raghavan et al. 2011). Hence, we use the random field approach to formulate a stochastic material law for the aneurysmatic arterial wall. These random fields are constructed based on our own experimental data from tensile tests. Reliable information about the spatial distribution and correlation of constitutive properties in AAA is scarce; hence, some auxiliary assumptions have to be made for the characterization of the field. Nevertheless, the assumptions regarding the probabilistic characteristics of the random field are far less restrictive than those typically made in a deterministic problem setting where the variations or uncertainties in the model parameters are commonly ignored entirely. In addition, the sensitivity of assumptions on the results can also be tested by the proposed approach.

2.1 Probabilistic constitutive model

A homogenous, univariate, lognormal three-dimensional random field is used to describe the inter- and intra-patient variations of one constitutive parameter of a hyperelastic constitutive law for the AAA wall. More specifically, we use the hyperelastic constitutive model proposed in (Raghavan and Vorp 2000) as a starting point for our probabilistic description of the arterial wall:

where \(\bar{I_1}\) is the first invariant of the isochoric Right-Cauchy-Green strain tensor \(\varvec{\bar{C}}\) and \(J=\det \varvec{F}\) is the determinant of the deformation gradient \(\varvec{F}\). The parameters of the isochoric part \(\alpha \) and \(\beta \) can be determined by fitting the strain energy function given above to experimentally measured stress-stretch curves as we precisely described in (Reeps et al. 2012). A fixed bulk modulus of \(\kappa = \frac{2\alpha }{1-2\nu }\) with \(\nu = 0.49 \) was used throughout this work and \(\eta = -2\).

The parameters \(\alpha \) and \(\beta \) were fitted for \(n=142\) tensile test specimens harvested in open surgery using the methodology described in (Reeps et al. 2012). Figure 1 depicts the histograms of the constitutive parameters \(\alpha \) and \(\beta \) and reveals that the intra-patient variations are of the same order of magnitude as the inter-patient variations. It was found that \(\beta \) exhibits a larger variance as well as a greater variation between individual patients as compared to \(\alpha \). Hence, as a first step towards a fully probabilistic constitutive framework, we choose to model only the parameter \(\beta \) as a random field leaving the remaining constitutive parameters \(\alpha = 0.059\) and \(\kappa = 5.9\) MPa deterministic population-averaged mean values.

2.1.1 Generation of non-Gaussian random fields using spectral representation and translation process theory

Solving stochastic finite element problems with uncertainties modeled as random fields using a sampling-based approach requires the computation of discretized sample functions, often referred to as realizations, of the random field. The spectral representation method in combination with translation process theory is a widely used approach to generate these sample functions based on a large number of trigonometric functions with independent random phase angles (Shinozuka and Jan 1972). First, a Gaussian random field is created with the spectral representation method, which is than mapped into a non-Gaussian field using translation process theory (Grigoriu 1995).

In order to use the spectral representation method, two probabilistic characteristics of the random field, the first-order probability distribution and the autocorrelation function (ACF), need to be specified. Experimental data from tensile tests was utilized to obtain an estimate for the probability distribution using 142 tested specimens from 49 patients, which provides empirical information on the range and relative frequency of \(\beta \), see Fig. 1b. A lognormal probability distribution for the parameter \(\beta \), which is visualized in Fig. 1b, was obtained by applying the lognfit function in matlab to this data.

As usual in stochastic mechanics (Charmpis et al. 2007), our experimental data did not contain sufficient information to fully determine the second characteristic of the random field, the ACF. Based on the reasonable assumption that the constitutive parameters vary smoothly in space, we chose the squared exponential ACF

The ACF describes the decay of covariance, which, for homogenous fields, is solely based on the distance between two points \(\varvec{\tau }= \varvec{x}-\varvec{x'}\) and a parameter called correlation length \(d\). The correlation length controls the distance between two points above which the correlation between the values at these points approaches practically zero. The parameter \(\sigma _{\beta }^2\) denotes the variance of the lognormal distribution in (2). The ACF can be transformed into the power spectral density (PSD) of the random field using the Wiener-Khintchine relationship

where \(\varvec{\kappa }\) denotes the frequency vector. The PSD is needed to create sample functions of the random field using the spectral representation method (Shinozuka and Jan 1972). The formula to generate sample functions of a univariate three-dimensional Gaussian random field is given in (Shinozuka and Deodatis 1996):

with \(\varDelta \kappa _i = \frac{\kappa _{i_u}}{N_i}\) and \(\kappa _{in_i}=n_i \varDelta \kappa _i \). Herein, \(\kappa _{i_u}\) represents the cutoff frequency above which the PSD is of insignificant magnitude and can assumed to be zero. This threshold depends on the correlation length of the field and has to be adjusted accordingly. The \(\xi _{n_1 n_2 n_3}^{(i)}\) denote the independent random phase angles which are uniformly distributed between \([0,2\pi ]\).

Sample functions generated with (5) are asymptotically Gaussian for \(N \rightarrow \infty \) by virtue of the central limit theorem. However, a value of \(N_i=64\) yielded sufficiently accurate results and hence was used throughout this work for the generation of sample functions. Using translation process theory, the sample of the Gaussian random field can be transformed into a sample of a non-Gaussian random field, the first-order probability density of which obeys the desired lognormal probability density function (PDF) (Grigoriu 1995)

where \(F_{\mathrm {N\!G}}^{-1} \) is the inverse cumulative distribution function (CDF) of the desired lognormal distribution as given in (2) and \(\varPhi \) is the normal CDF of the underlying Gaussian process. The nonlinear transform described above leads to a correlation distortion in the sense that (6) not only changes the first-order distribution of the stochastic process but also affects the ACF. In order to account for this distortion as well as any potentially arising incompatibilities between prescribed CDF and ACF (Grigoriu 1995), we implemented a framework based on (Shields et al. 2011), which allows to efficiently generate sample functions of random fields that match a prescribed PDF as well as the desired ACF. For a more detailed in-depth overview over algorithms for generating non-Gaussian random fields, the reader is referred to (Bocchini and Deodatis 2008) and (Stefanou and Papadrakakis 2007).

With the non-Gaussian random field described above, we can extend the constitutive law for the aneurysmatic arterial wall to incorporate the uncertainty in the parameter \(\beta =\beta (\varvec{x}, \varvec{\xi })\). Thus, \(\beta \) becomes a function of the location and the random phase angles \(\xi _{n_1 n_2 n_3}^{(i)}\), which are summarized in the vector \(\varvec{\xi }\) for notational convenience. Once a sample of random phase angles is drawn, a realization of the three-dimensional random field is computed using (5) and (6). The constitutive parameter \(\beta (\varvec{x},\varvec{\xi })\) can then be evaluated, e.g., at the midpoint of each element in the AAA wall. This value is then assigned to the element as local constitutive parameter. The full stochastic version of the strain energy function, which now depends on the location \(\varvec{x}\) as well as on the vector of random phase angles \( \varvec{\xi }\), is

Note that the methodology to create sample functions of random fields described above can also be used to consider models with any other uncertain quantities, e.g., uncertain wall thickness as later also used in one numerical example. In case of multiple uncertain model parameters, each of those can be modeled individually as random field using the formulas given above, if the parameters are uncorrelated. However, if these parameters are correlated, cross-correlated random vector fields should be used as a probabilistic model. The generation of non-Gaussian cross-correlated random vector fields is more involved and an area of ongoing and vivid research. Several methods for the generation of such cross-correlated sample functions have for example been proposed in (Popescu et al. 1998; Field and Grigoriu 2012; Vořechovský 2008; Cho et al. 2013). In this work, we restrict ourselves to random fields, which are not cross-correlated for ease of exposition, and because at this stage, we can only speculate about potential cross-correlations between different model parameters. But while sample generation will be more involved in the cross-correlated random vector field case, we do not see a reason why the proposed Bayesian multi-fidelity approach should not work as well.

2.2 Nonlinear stochastic boundary value problem

Having established a probabilistic description of the material properties, the boundary value problem that has to be solved becomes stochastic as well. For a formal description of the problem, a probability space \((\varOmega ,\mathcal {F},\mathcal {P})\) is defined, where \(\varOmega \) is the event space, \(\mathcal {F}\) a \(\sigma \)-algebra and \(\mathcal {P}\) a probability measure. The random phase angles now define a mapping \(\varvec{\xi }: \varOmega \rightarrow [0,2\pi ]^d\), where \(d\) corresponds to the total number of phase angles used to generate sample functions of the random field. Additionally, we ask that the random phase angles obey a uniform distribution \(\pi _{\xi _i} = \mathcal {U}(0,2\pi )\). The stochastic boundary value problem can then be written in the most general form as

with the nonlinear stochastic differential operator \(\mathcal {L}\) depending on the vector \(\varvec{\xi } \in \mathbb {R}^d\) of real random variables describing, e.g., the uncertainty in the material properties or the wall thickness. Only deterministic boundary conditions and body loads are considered in this work. The solution \(\varvec{u}(\varvec{x},\varvec{\xi })\) of the problem, the displacement, thus becomes a stochastic process as well, so do all quantities derived from the displacement field or functions thereof, such as the von Mises stress \(\sigma _\mathrm{vM }\), von Mises strain \(e_\mathrm{vM }\) or strain energy density \(\varPsi \). For the sake of brevity, we will refer to all quantities of interest as \(y(\varvec{x},\varvec{\xi })\) in the following, and in addition, we will omit the dependence on the location and random phase angles for notational convenience. Apart from the expected value \(\mathbb {E}[y]\) or the coefficient of variation (COV) of the quantity of interest, we are interested in obtaining the density \(\pi _y(y)\) or a response statistic like

where \(\mathcal {A}\) is a \(\pi _{\varvec{\xi }}\)-measurable subset and \(1_{\mathcal {A}}\) is the corresponding indicator function. The case where \(\mathcal {A}=\left\{ y | y>y_0\right\} \) is of particular interest if we want to know the probability that, e.g., the von Mises stress exceeds a certain threshold \(y_0\); thus, (9) can be used to define a global or local failure probability.

3 Solution of the nonlinear stochastic boundary value problem

Solving the stochastic boundary value problem using sampling-based approaches like Monte Carlo (MC) only requires a deterministic solver and the ability to draw samples from the distribution \(\pi _{\xi }(\varvec{\xi })\). The distribution of the quantity of interest \(\pi _y(y)\) can then be approximated by computing a number (\(N_\mathrm{SAM }\) ) of random samples \(y_{i}=y(\varvec{\xi }_{i})\), with \(\varvec{\xi }_{i} \sim \pi _{\xi }(\varvec{\xi })\); which are sometimes also referred to as particles. These particles are equipped with a weight \(W^i\) according to their weight or probability, respectively. With slight abuse of notation, the approximation of \({\pi }_y(y)\) can be computed by:

where \(\delta _{y^{(i)}}\) is the delta-Dirac mass. In case of direct MC the weight of all samples is the same and equal to \(1/N_\mathrm{SAM }\). Hence:

The accuracy of the MC approximation depends on the number of independent samples \({N_\mathrm{SAM }}\) with a probabilistic error of \(O(1/\sqrt{N_\mathrm{SAM }})\). In contrast to Polynomial Chaos Expansion (PCE)-based approaches, the accuracy does not depend on the stochastic dimension of the problem per se. However, for an accurate computation of the response statistics, the number of required samples can be prohibitively large, especially if one is interested in the tails of the distribution to evaluate failure probabilities. With a solution time of current state-of-the-art models in computational biomechanics of the order of several CPU hours, direct MC with several thousand samples is impractical for the application to patient-specific models and large patient cohorts. The use of advanced MC schemes like subset simulation, importance sampling or sequential Monte Carlo (SMC) methods can alleviate the computational burden (Neal 2001; Au and Beck 2001; Del Moral et al. 2006). However, the aforementioned advanced sampling schemes are not applicable if one is interested in uncertainty quantification with respect to multiple response quantities simultaneously, e.g., stresses and strains.

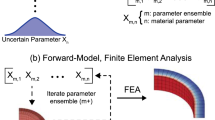

3.1 Bayesian multi-fidelity Monte Carlo

To reduce the computational effort, we implemented a framework based on a multi-fidelity sampling strategy employing the concepts proposed in (Koutsourelakis 2009; Kennedy and O’Hagan 2000). The general idea is to incorporate models with different levels of sophistication or fidelity in a sampling-based UQ approach. Roughly speaking, the sampling is done on an inexpensive, approximate, low fidelity model. Then, to account for the discrepancy between the low fidelity and high fidelity solution, a probabilistic correction factor is used, for the calculation of which only very few evaluations of the expensive high fidelity model are required.

We start, given a particular system of interest, by assuming that we have two computational models for this system, an accurate, expensive high fidelity model and a cheap approximate version. The latter could be obtained, for instance, by creating a much coarser discretization. Suppose that we are interested in computing a particular quantity \(y(\varvec{\xi })\) or rather its probability density \(\pi _y(y)\). Analogous to \(y(\varvec{\xi })\), the quantities of interest computed with the high fidelity model, we will refer to the corresponding quantity and its density in the approximate model as \(x(\varvec{\xi })\) and \(\pi _x(x)\) from here on. For example, if \(y\) is the von Mises stress at a particular location in the accurate model, \(x\) denotes the von Mises stress evaluated at the same spatial location in the low fidelity model. Since the approximate model is cheap to evaluate, the density \(\pi _x(x)\) can be readily approximated using any kind of sampling algorithm such as direct MC or SMC.

If the low fidelity model and the high fidelity model have the same stochastic structure, that is, they show a similar dependence on the random input parameters, then there is a statistical correlation between \(x\) and \(y\), as shown in Fig. 2a. This correlation can be exploited to accurately predict \(\pi _y(y)\) and does not at all depend on how good the low fidelity model approximates a value in a deterministic sense. Let us first consider the most extreme case, the one where we have a direct one-to-one relationship between the two model outputs \(x\) and \(y\), e.g., as shown in Fig. 2b. It is obvious that it would be easy to come up with a regression model that can reproduce the interrelation between \(x\) and \(y\). In combination with \(\pi _x(x)\) obtained from sampling, the calculation of \(\pi _y(y)\) is trivial. However, the relationship between \(x\) and \(y\) can also be exploited if no strict deterministic relationship exists. A noisy correlation as shown in Fig. 2a can also be exploited. If the interrelation between the two model outputs is captured as conditional probability density \(p(y|x)\), \(\pi _y(y)\) can be readily computed using

Hence, the methodology has two major requirements. First, the approach of course requires an approximate model that, while being computationally cheap, still provides sufficient stochastic information about the quantity of interest. Second, an efficient way to infer the conditional density \(p(y|x)\) is needed. As proposed by Koutsourelakis (2009), we use a Bayesian regression model to estimate the conditional density \(p(y|x)\). Therefore, we need a set of training samples \(\left\{ (x_j,y_j)\right\} _{j=1}^n\), i.e., solutions of the low and high fidelity model based on the same realizations of the random field or the same set of random phase angles \(\varvec{\xi }_j\), respectively. The Bayesian regression model then establishes a probabilistic link between the low and high fidelity model, thus allowing us to compute \(\pi _y(y)\) using (12) or \(Pr\left[ y\in \mathcal {A}\right] \) if we rewrite (9):

As a consequence, the regression model, in combination with the information from the low fidelity model, serves as a data fit surrogate for the high fidelity model. Another interpretation is that the regression model provides a probabilistic correction factor to account for the discrepancy between high and low fidelity solution. In order to facilitate the understanding for the reader, we want to provide a sketch of the basic steps of the proposed approach before explaining the details of the Bayesian regression and the computation of \(\pi _y(y)\) in the following subsections. Furthermore, we want to highlight some advantages of the proposed approach at this point.

Given an existing accurate, high fidelity model of our system, an approximate low fidelity model has to be constructed. This approximate model should have a similar stochastic structure as the high fidelity model. However, it is not necessary that the approximate model provides an accurate approximation in a deterministic sense, as needed in deterministic multi-level/grid schemes. The only prerequisite is a mere statistical correlation between the high fidelity and the approximate model, meaning that the approximate model and the high fidelity model have a similar dependence on the random input parameters. This very weak requirement offers a tremendous flexibility in the choice of approximate models. One way to create an approximate model is to simply use a coarser discretization. Additionally, model reduction techniques beyond coarsening the spatial and temporal discretization can be used. As shown in one of our numerical examples in Sect. 4, the multi-fidelity MC approach also works if a simpler physical model is used. In principle, also model reduction techniques such as proper orthogonal decomposition could provide suitable low fidelity models.

In the second step of the proposed approach, the density \(\pi _x(x)\) of our quantity of interest \(x\) has to be determined using the low fidelity model. Thereby, the sampling can be carried out using any kind of sampling algorithm and is by no means limited to direct MC. Independent of the particular choice of the sampling algorithm computing \(\pi _x(x)\) by sampling is much cheaper as compared to sampling of the high fidelity model. We used approximate models which are up to a factor of 120 cheaper to compute than the corresponding high fidelity model. In some cases, a factor of 1,000 can be achieved (Koutsourelakis 2009). Then, a small subset \(\left\{ x_j\right\} _{j=1}^n\) of all computed samples is selected. For an accurate computation of \(\pi _y(y)\), these samples should fully cover the support of \(\pi _x(x)\), i.e., cover the whole range of values that \(x\) can take. Since usually only a particulate approximation of \(\pi _x(x)\) is available, this range is approximated by the samples with the smallest and largest \(x\) value, respectively. In our experience, 100–200 evenly distributed samples which cover the range of all possible \(x\) values yield excellent results. Now the high fidelity solution \(\left\{ y_j\right\} _{j=1}^n\) corresponding to the \(\left\{ x_j\right\} _{j=1}^n\) is computed. The dataset \(\left\{ (x_j,y_j)\right\} _{j=1}^n\) is then used to fit a Bayesian regression model, which provides probabilistic information on \(y\) given \(x\). More specifically, the Bayesian regression provides the desired conditional probability distribution \(p(y|x)\). Compared to the costs associated with the computation of the high fidelity solution for the \(n\) samples, the costs of fitting the regression model are negligible. While we adopt the regression model used in (Koutsourelakis 2009) with minor modifications, the multi-fidelity MC approach is, of course, not limited to this particular choice of regression model. Once the conditional density \(p(y|x)\) is obtained, we can use standard probability theory to compute \(\pi _y(y)\). Overall, the proposed multi-fidelity MC approach can be summarized in six steps, which are given in Table 1.

3.1.1 Bayesian regression model

Here, we will briefly outline the main concepts of the Bayesian regression model proposed by Koutsourelakis (2009), which we adopt and modify by the addition of a linear term. As mentioned before, one key aspect of the proposed procedure lies in the efficient determination of the conditional density \(p(y|x)\) or conditional probability \(Pr\left[ y\in \mathcal {A}|x \right] \). We start by assuming an a priori unknown functional relationship between \(x\) and \(y\) plus some additive Gaussian noise:

with \(f(x,\varvec{\theta })\) being a combination of a first-order polynomial and a sum of Gaussian kernel functions

where the vector \(\varvec{\theta }\) contains all parameters of the model

Thus, given the model parameters \(\varvec{\theta }\) the conditional distribution \(p(y|x,\varvec{\theta },\sigma )\) is Gaussian:

where \(\phi (y-f(x,\varvec{\theta }),\sigma )\) is the Gaussian PDF defined as

Following a fully Bayesian approach, all unknown parameters are treated as random variables that are equipped with a probability distribution. Hence, instead of trying to obtain point estimates, the goal is to infer a probability distributions for each of the model parameters in \(\varvec{\theta }\) and the variance of the noise \(\sigma ^2\). This of course means that the conditional distribution \(p(y|x)\), where the parameters \(\varvec{\theta }\) are integrated out, is not necessarily Gaussian, and more complex distributions can be obtained. Exploiting the statistical correlation between the two quantities \(x\) and \(y\), we can use the training samples \(\left\{ (x_j,y_j)\right\} _{j=1}^n\) to infer the parameters \(\varvec{\theta }\) of the regression model as well as the variance of the Gaussian noise \(\sigma ^{2}\) using Bayes’ rule

After specifying the prior distributions \(p(\varvec{\theta })\) and \(p(\sigma ^2)\), Bayes’ rule modifies these prior distributions based on additional available information. In our case, this information is provided by the training samples \(\left\{ (x_j, y_j)\right\} _{j=1}^n\). Using these training samples, it is possible to evaluate how probable it is to observe the data given a particular choice of \(\varvec{\theta }\) and \(\sigma ^2\) using the likelihood \( p((x_{1:n}, y_{1:n})|\varvec{\theta },\sigma ^2)\). The combination of the prior and the likelihood gives rise to the posterior distribution of the parameters \(p(\varvec{\theta },\sigma ^2 |(x_{1:n}, y_{1:n}))\) given the data. The Bayes’ rule adjusts the distribution of the model parameters such that they are both probable under the prior and compatible with the observed evidence or data.

3.1.2 Prior distribution

We adopted the choice of priors given in (Koutsourelakis 2009) for the model parameters. Algebraic rearrangement and integration yield the following prior for the model parameters:

where \(s=1.0, a_{\tau }=1.0, a_{\mu }=0.01, a_0 =1.0 , b_0 = 1.0\) were used throughout this work. Additionally, a \(Inv-Gamma(a,b)\) prior was chosen for the variance \(\sigma ^2\) of the Gaussian noise in (14). The hyperparameters \(a=2\) and \(b=10^{-6}\) where used throughout this work. As additive Gaussian noise was assumed, the likelihood of the parameters \(p((x_{1:n}, y_{1:n})|\varvec{\theta },\sigma )\) for n training samples \(\left\{ (x_j, y_j)\right\} _{j=1}^n\) is given by

One major advantage of this approach is that the complexity of the Bayesian regression model, that is the number of Gaussian kernels \(k\) in (15), is also a model parameter that is inferred from the data. Hence, also highly irregular or nonlinear interrelations between \(x\) and \(y\) can be captured. Moreover, as the chosen Poisson prior for \(k\) ensures that sparse regression models are favored, over-fitting is avoided and the simplest regression model explaining the data is chosen (Koutsourelakis 2009).

3.1.3 Computing the posterior

After generating the training samples, Bayes’ rule is applied in an iterative manner inserting one pair of training samples \((x_j,y_j)\) at a time, using the resulting posterior distribution as a prior for the next pair. Naturally, as more training samples are used, the influence of the prior diminishes. The posterior density \(\pi _{\varvec{\theta },\sigma ^2}(\varvec{\theta },\sigma ^2)\) cannot be obtained as an analytic expression; hence, it is approximated using \(N_\mathrm{particles }\) discrete particles equipped with a weight proportional to their probability.

The position and weights of these particles can be computed using , e.g., advanced SMC schemes (Doucet and de Freitas 2001; Kemp 2003). In this work, we use the SMC algorithm proposed in (Koutsourelakis 2009).

3.1.4 Computing solution statistics of the high fidelity model

Having computed a particulate approximation of the posterior density \(\pi _{\varvec{\theta },\sigma ^2}(\varvec{\theta },\sigma ^2)\), we can evaluate the conditional distribution \(p(y|x)\). With the established quantitative probabilistic relationship between \(x\) and \(y\), the computation of an estimate of \(\pi _y(y)\) or \(Pr\left[ y\in \mathcal {A}\right] \) is now straightforward. A frequently used point estimate in Bayesian models is the posterior mean. The posterior mean \(\hat{\pi }_y(y)\) can be computed as:

Using particulate approximations of \(\pi _x(x)\) and the joint posterior density \(\pi _{\varvec{\theta },\sigma ^{2}}(\varvec{\theta },\sigma ^{2})\)

the posterior mean \(\hat{\pi }_y(y)\) can be readily approximated with

where \(\phi \) denotes the Gaussian PDF as in (18). Obviously, we can also evaluate the probability of \(y\) exceeding a specific threshold \(y_0\). Given the model parameters \(\varvec{\theta }\) and the variance \(\sigma ^2\) the probability of \(y\) exceeding a specific threshold \(y_0\) can be computed by

with \( q_{\mathcal {A}}(x;\varvec{\theta },\sigma )\) being defined as

where \(\varPhi (x)\) is the standard normal CDF. The posterior mean approximation of \( q_{\mathcal {A}}\), computed using the particulate approximation of \(\pi _{\varvec{\theta },\sigma ^2}(\varvec{\theta },\sigma ^2)\), can be used to produce an estimate of the exceedance/failure probability for a specific value of \(x\)

Substituting into (26) and using (24) yields an estimate for the failure probability in the sense that on average, given the training samples, the probability of \(y\) exceeding a threshold \(y_0\) is given by:

Another advantage of the Bayesian approach is that in addition to point estimates like the posterior mean, confidence intervals can be estimated from the posterior, quantifying the uncertainties in the inferred parameters and hence in the regression model. P-quantiles \(q_{\mathcal {A},p}(x)\) for the failure probability can be readily estimated using:

where \(H\) denotes the heavy side function. We use the \(p = 1\) and \(p=99\,\%\) quantiles \(q_{\mathcal {A},0.01}\) and \(q_{\mathcal {A},0.99}\) to provide confidence intervals for exceedance probabilities in the following sections.

4 Patient-specific examples

It is important to emphasize that the proposed approach is very general and can be readily applied to a wide range of problems. To demonstrate the capabilities of our approach, we perform UQ using two realistic AAA models with patient-specific geometries from our database, referred to as male67 and male71, as example for a large-scale nonlinear solid mechanics problem with uncertain constitutive properties. The geometry of the lumen and, if present, the intraluminal thrombus (ILT) were reconstructed from CT data. The model male71 exhibits ILT while male67 does not. The wall thickness cannot not be determined from the CT data and was assumed to be a constant 1.57 mm throughout the model. While this assumption is made here to restrict ourselves to uncertain material parameters for ease of discussion, we stress that the presented method can also be applied to consider other uncertainties, like uncertain regional variations in wall thickness. To demonstrate this capability, we consider a simpler generic example in a proof of concept study in the next section. In this third example, we study an uncertain constitutive property together with an uncertain wall thickness, thereby both of those quantities are modeled as random fields.

For the patient-specific examples, we use our existing workflow, which is described in detail for the deterministic case in (Reeps et al. 2010; Maier et al. 2010) and obtain high fidelity finite element models with a mesh size of roughly 1 mm, which are shown in Fig. 3a, c. These hybrid finite element discretizations consist of 15,228 (male67) and 169,791 (male71) linear hexahedra-, wedge- and tetrahedra-shaped elements, resulting in a problem size of 61,674 and 292,044 degrees of freedom, respectively. For the aneurysm male67, an approximate model which is shown in Fig. 3b is constructed by coarsening the discretization. Using an element size of approximately 3 mm, the resulting coarser model has 2,670 elements and 8,679 degrees of freedom. For the aneurysm male71, two approximate models were created. For the first approximate model, a coarser discretization and a truncated geometry were used as depicted in Fig. 3d, e, respectively, which results in 19,579 elements and 25,875 degrees of freedom. Secondly, to further reduce the computational effort, another approximate model was created using model reduction in the sense that the ILT was omitted entirely in addition to a coarser discretization and geometric truncation, see Fig. 3d, f, respectively. Therein, the size reduces to 3,164 elements and 10,320 degrees of freedom. The reduction in model size and complexity yields a tremendous reduction in computational costs. For the aneurysms male67 and male71, coarsening of the discretization and model reduction yield an approximate finite element model that is between 10 and 50 times cheaper than the original high fidelity model, respectively. The CPU time required to compute one sample of the different models along with the numbers of degrees of freedom is summarized in Table 2. We used a 6 core Intel Xeon 3.2 GHz workstation with 12 GB memory to establish the solution times of the different models. The simulations were computed on one core, except the high fidelity model of the male71 aneurysm, for the solution of which four cores were used. We applied simple clamped boundary conditions at all in- and outlets of the aneurysms and imposed an orthogonal pressure follower load, to mimic the load imposed by the blood pressure on the luminal surface of the ILT. If no ILT is present, pressure is applied on the luminal surface of the vessel wall. The shear stresses, induced by the blood flow, are negligible as compared to the pressure load. Hence, a full fluid–structure interaction simulation is not required. Since the geometries of the aneurysms were obtained from CT images, the in vivo imaged configuration is not stress free, but represents a loaded spatial configuration. In order to obtain meaningful results, this “prestressed” state has to be accounted for. To imprint the stresses from an assumed diastolic pressure of 87 mmHg in the in vivo imaged state, we used a modified updated Lagrangian formulation (MULF) (Gee et al. 2009, 2010). The obtained prestrained/prestressed state implicitly defines a stress-free reference geometry, while neglecting growth and remodeling processes in the wall. This implicitly defined geometry of course depends on the constitutive properties of the arterial wall and the ILT and hence varies from patient to patient and from sample to sample in an UQ analysis.

AAA finite element models based on patient-specific geometries. a Male67 high fidelity model. b Male67 approximate model. c Male71 high fidelity model. d Male71 approximate model. e Cross-sectional view of male71 approximate model with ILT. f Cross-sectional view of male71 approximate model without ILT

After the prestressing phase, the luminal pressure is increased to 121 mmHg, taking into account the imprinted stresses and strains acting on the imaged configuration. For the constitutive behavior of the ILT in the male71 aneurysm, the model proposed by Gasser et al. (2008) was chosen, using the parameters provided in his work. Furthermore, in all cases, the stochastic material law described above in (7) is used to model the aneurysmatic arterial wall.

To study the sensitivity with respect to the unknown correlation length \(d\) from (3), we investigated three random field models with different correlation lengths \(d = 12.5\), \(d = 25\) and \(d = 50\) mm, respectively. The chosen range covers random fields which exhibit rather short-scale fluctuations as well as highly correlated random fields with a correlation length in the range of the diameter of a typical aneurysm. All finite element calculations were done using our in-house research code BACI (Wall and Gee 2014). In order to obtain a reference solution for comparison with the proposed approach, we performed direct MC with all high fidelity finite element models for all three correlation lengths with a sample size of \(N_\mathrm{SAM }=50{,}000\). First, the impact of the uncertain constitutive parameter \(\beta \) on the relevant mechanical quantities such as Cauchy von Mises stress (\(\sigma _{vM}\)), Euler-Almansi von Mises strain (\(e_{vM}\)), and strain energy \(\varPsi \) is discussed on the basis of these MC simulations, and the influence of the correlation length on the distributions of these quantities is investigated. Then, in Sect. 4.2, we will compare the results of the employed Bayesian multi-fidelity MC approach to the MC reference solution and the accuracy and efficiency of the proposed multi-fidelity approach is examined.

4.1 Monte Carlo reference solution

As expected, in spite of drastic variations in the wall stiffness, the overall spatial pattern of the von Mises stress is still largely determined by the geometry of the aneurysm and rather insensitive to those variations. However, a slight dependency on the correlation length was found, where a smaller correlation length leads to greater variations in the overall stress pattern. Figure 4 shows the von Mises stress and strain resulting from five different showcase realizations of the stochastic constitutive law for the male71 aneurysm. The samples in Fig. 4 were computed with a correlation length of \(d=12.5\) mm for which the resulting stress pattern exhibits the largest variations. Nevertheless, the overall spatial pattern of the stress remains remarkably similar for all depicted realizations of the random field. Moreover, when the correlation length of the random field is increased, the variations in the spatial stress pattern become even less significant. This is in agreement with theoretical mechanical considerations, as the stress state in the aneurysm wall is dominated by in plane membrane stress, which is determined mostly by the overall geometry of the aneurysm and the traction boundary conditions.

In contrast to the spatial stress pattern, the spatial strain pattern of the samples very significantly indicate a strong dependency between local stiffness of the wall and local strain. Unlike in the stress pattern, regions with high strain are not determined by the overall shape or the geometry of the aneurysm but rather by regions with low wall stiffness as shown in Fig. 4c. The male67 aneurysm shows similar behavior and is hence not shown here.

In addition to this qualitative assessment, we examined the empirical probability distributions of the stress obtained from MC at several locations across the aneurysms, see Fig. 5. The mechanical quantities of interest were evaluated at single elements at these specific locations. Figure 6 shows the empirical probability densities of the von Mises stress, von Mises strain and strain energy at two locations on the male67 aneurysm. Location 1, shown in Fig. 5a, is located in the neck of the aneurysm above the sack. Location 2 is at the right lateral, distal end of the AAA sack, see Fig. 5a. Both locations are in regions that exhibit high wall stress. For the other aneurysm male71, Fig. 7 shows the probability densities evaluated at location 3, located at the center of the dorsal side of the aneurysm sack in the high stress region, and at location 4, located at the ventral side of the aneurysm sack in a low stress region with thick ILT underneath the AAA wall, see Fig. 5b, c. All empirical densities are plotted for the different correlation lengths of the random field in the stochastic constitutive model. In addition to the mean and COV, the 95 % quantiles are provided for each of the distributions in Figs. 6 and 7, respectively.

The obtained distributions for the stresses confirm the qualitative assessment that the stresses are only mildly affected by the local stiffness of the wall. The COVs of the stress distributions are below 0.12. If only the locations 1, 2, and 3, which all lie in high stress regions of the respective AAAs, are considered, the maximum COV of the stress distribution is 0.07. Also for these three elements, the COVs of the stress become even smaller with increasing correlation length of the random field. In contrast to this, the COV of the stress at location 4, which lies in a region with thick ILT and low wall stress, is less influenced by the correlation length. The 95 % quantile of the stress distributions, as a “worst-case” estimate, is approximately 2–19 % higher than the respective mean value, see Figs. 6a, b and 7a, b. This provides further evidence that uncertainties in the constitutive parameter \(\beta \) result in only moderate uncertainty in the computed stresses.

While this appears to be consistent with the findings from Raghavan and Vorp (2000), it should be stressed that Raghavan and Vorp only considered mild parameter variations within the 95 % confidence interval of their estimated mean value and not within the complete range of measured values. Furthermore, they did not consider spatial intra-patient variations of the constitutive parameters nor did they consider the prestressed state of the aneurysm or ILT. Findings similar to ours have also been reported for intracranial aneurysms by Ma et al. (2007) and Miller and Lu (2013). These two studies also indicate only a mild dependence of the wall stresses on the constitutive parameters; however, only spatially homogenous parameters were considered therein as well.

Our results show that the wall stresses are only mildly sensitive to variations in the constitutive parameter \(\beta \). This holds especially for the rather long-scale spatial heterogeneity obtained with the longer correlations lengths of the random field, see Fig. 6a. The sensitivity of the stress state on the material properties typically increases as the random field exhibits strong spatial gradients in the parameter field as one moves to smaller correlation lengths, indicating that only strongly localized variations in this parameter have a significant effect on the stresses. We argue that the reason for this insensitivity, in spite of strongly localized variations in \(\beta \), can be ascribed to the prestressed state of the imaged geometry. The prestress in the in vivo imaged configuration of cardiovascular structures has to be accounted for in order to obtain meaningful simulation results, as has been pointed out in numerous publications (Gee et al. 2009, 2010; Speelman et al. 2009; Miller and Lu 2013). All techniques to account for this essentially approximate a stress state that equilibrates with the external load given the spatial configuration. The boundary conditions in a typical cardiovascular problem resemble those termed statically determinate. Dominated by traction boundary condition emulating luminal blood pressure, the Dirichlet boundary conditions applied far away from the region of interest have only minor influence. Thus, in prestressed cardiovascular structures which are predominantly loaded by traction boundary conditions, the overall stress state, which is largely determined by the prestress, is governed by simple equilibrium of internal stress with the external loading and therefore is relatively insensitive to variations in the constitutive parameters.

In contrast to the stresses, the strain and strain energy exhibit large COVs and are therefore drastically affected by the constitutive parameter \(\beta \). The probability distributions depicted in the Figs. 6c–f and 7c–f reveal the large variations in local strain and strain energy. With COVs up to 0.28 and 0.6, respectively, the strains and strain energy exhibit significantly larger COVs than the stresses, rendering a statement about the true strain state of the aneurysm difficult when facing uncertain constitutive parameters. Whereas the distribution of the stresses are symmetric, both strains and strain energy exhibit skewed distributions. The contour plots in Fig. 4c reveal a close dependence between low \(\beta \) value and high local strain and vice versa, and the overall spatial pattern of the strain depends mostly on the realization of the random field and not on the geometry of the aneurysm. Since the local stress state is mostly dictated by the given spatial configuration and external load, local strains depend predominantly on the local stiffness. The softer the wall, the higher the strains need to be in order to reach a certain “predetermined” stress level.

Comparing the probability densities of the strain and strain energy at different correlation lengths in Figs. 6c–f and 7c–f, respectively, there is no noticeable difference between the three assumed correlation lengths. The variance of these probability distributions is virtually independent from the chosen correlation length for both, strains and strain energy. If the 95 % quantiles are used as an estimate for a worst-case scenario and compared to the mean values, we see that strain is potentially 60–70 % higher than the mean value and thus could be dramatically underestimated in deterministic models with population-averaged constitutive parameters. In case of the strain energy, the margin of uncertainty is even larger with a COV of typically more than 0.5, which results in a 95 % quantile that is more than twice as high as the mean value.

The explanation for these very large COVs is once again the non-stress-free in vivo imaged configuration of the aneurysm. The combination of a known deformed configuration and the load case leading to this configuration results in a computed stress state, which is only mildly sensitive to variations and uncertainties in the constitutive parameter \(\beta \). In a sense not knowing the stress-free reference configuration but the deformed configuration is an advantage for the prediction of wall stress. This is not the case for the computation of the strain state. With a stress state that is to a large extend determined by the geometry of the aneurysm, the strains become very sensitive to the local stiffness of the AAA wall. Assuming that the local stress state is dictated by the geometry and hence more or less fixed, the strain state that corresponds to this stress state depends on \(\beta \). Low local stiffness will result in very high local strains and vice versa. Thus, any uncertainty in \(\beta \) directly translates to uncertainty in the strain state, inhibiting an accurate prediction of the strains if the stress-free geometry and the constitutive properties are unknown or uncertain. The same argument holds if the strain energy is considered as quantity of interest. As a result, the probability distributions of the strains and strain energy evaluated at specific locations in the wall, exhibit very high COVs.

4.2 UQ using Bayesian multi-fidelity MC

Of course, conducting direct MC for large patient cohorts in a clinical setting is impractical. Hence, we will demonstrate the capabilities of the aforementioned UQ approach, which reduces the computational cost to an acceptable level for studies with a larger patient cohort. To demonstrate the efficiency and accuracy of the proposed framework, we chose a subset of the results from the previous chapter. Four examples which are summarized in Table 3 are considered, examining von Mises stress and strain at distinct locations of the two AAA models as quantities of interest. Moreover, we take into account different correlation lengths of the random field as well as different approximation schemes for the approximate models. After computing 50,000 MC samples using the approximate models, 200 of those were selected and the corresponding high fidelity samples were taken from the MC reference solution. The 200 samples were selected such that they evenly cover the entire support of the particulate approximation of \(\pi _x\). This can be achieved by sorting all samples into equally wide bins and then selecting a fixed number of particles from each bin. Then, this set of training samples was used to determine the posterior density \(\pi _{\varvec{\theta },\sigma ^{2}}(\varvec{\theta },\sigma ^{2})\) and subsequently to compute the conditional probability distribution \(p(y|x)\). Figure 8 shows a comparison between the solution of the approximate model and the high fidelity solution for all four examples. The color-coded 2D histograms, based on all 50,000 MC samples, show the interrelation between the quantity of interest computed on the approximate and the high fidelity model, respectively. The training samples that were used to infer the parameters of the regression model are shown in Fig. 8 as black dots. The obtained posterior mean as well as the 1 and 99 % quantiles of \(p(y|x)\) are also depicted in Fig. 8. In all four cases, the posterior mean of \(p(y|x)\) captures the interrelation between the approximate and high fidelity solution very well. Furthermore, the computed quantiles readily provide confidence intervals, which contain virtually all MC samples with very few exceptions. The reason for the noisy relationship between \(x\) and \(y\) is that the coarsening of the discretization yields larger discretization errors, and therefore, spurious effects due to the coarser discretization appear. Moreover, the coarser mesh is unable to resolve the finer details of the random field, which results in a smeared less detailed solution. We found that these effects are more distinct if the quantity of interest is the von Mises stress as compared to von Mises strain.

Comparison between approximate solution and high fidelity solution. In addition to the posterior mean and the 1 and 99 % quantiles of \(p(y|x)\), the figures show the used training samples (black dots) as well as all 50,000 samples as color-coded 2d histogram. a Approximate versus high fidelity solution, male67 aneurysm, \(d = 25\) mm, \(\sigma _{vM}\) at location 2. b Approximate versus high fidelity solution, male71 aneurysm, \(d = 25\) mm, \(\sigma _{vM}\) at location 3. c Approximate versus high fidelity solution, male71 aneurysm, \(d = 12.5\) mm, \(\sigma _{vM}\) at location 3. d Approximate versus high fidelity solution, male71 aneurysm, \(d = 25\) mm, \(e_{vM}\) at location 4

While the first two examples show an almost linear relation between approximate and high fidelity solution, example three and particularly example four exhibit a distinct nonlinear dependency. The Bayesian regression model is able to capture these nonlinear interrelations as well, without any modification of the formulation. It also can be seen in Fig. 8, especially in the latter two examples, that the lack of ILT in the approximate model leads to significant differences in the overall magnitude between the stresses computed with approximate and the high fidelity model, respectively. In fact, the stress and strain are considerably higher in the approximate model, due to the lack of ILT. By comparing the two densities \(\pi _x(x)\) and \(\pi _y(y)\), as shown in Fig. 9, this difference becomes even more apparent.

Exemplary comparison of empirical densities from approximate model \(\pi _x(x)\), high fidelity model \(\pi _y(y)\) and posterior mean approximation \(\hat{\pi }_y(y)\). a Example 1: male67, \(d = 25\), \(\sigma _{vM}\) at location 2. b Example 2: male71, \(d = 25\), \(\sigma _{vM}\) at location 3. c Example 3: male71 \(d = 12.5\), \(\sigma _{vM}\) at location 3. d Example 4: male71 \(d = 25\), \(e_{vM}\) at location 4

The posterior mean approximation \(\hat{\pi }_y(y)\) is computed for all examples using (23) and depicted in Fig. 9 together with \(\pi _x(x)\) and the MC reference solutions of the high fidelity model \(\pi _y(y)\). Despite the differences between \(\pi _x(x)\) and \(\pi _y(y)\), the posterior mean approximation \(\hat{\pi }_y(y)\) is in excellent agreement with the MC reference solution for all examples.

Table 4 lists the mean, COV and 95 % quantile for both, the MC reference \(\pi _y(y)\) and the posterior mean approximation \(\hat{\pi }_y(y)\). Additionally, the relative error between the MC reference and the Bayesian approach is given, thus allowing a quantitative assessment of the accuracy. For the mean value, a relative error below 1 % is achieved for all examples. The error of the estimation of the COV and the 95 % quantile is slightly higher. However, considering the crude approximate models and the gain in computational efficiency, the accuracy is more than sufficient for biomedical applications.

The ability to obtain an accurate estimate for the complete PDF, rather than just the first moments is a major advantage, because we can compute the probability that the quantity of interest exceeds a certain threshold \(y_0\). Although the exact definition of a failure threshold can be difficult in biomechanical systems, such a failure probability is a valuable tool in many applications including rupture risk stratification of AAAs. Under the assumption that a suitable failure threshold \(y_0\) can be defined, the probability that a threshold \(y_0\) is exceeded is computed using (29). Figure 10 depicts the posterior mean failure probability computed for a range of failure thresholds \(y_0\) for the chosen examples. Again an excellent agreement with the MC reference solution is achieved, at a fraction of the computational cost, see Table 5. In addition, credible intervals which are also shown in Fig. 10 can be computed using (30). The intervals are an indicator for the accuracy of the computed failure probability estimate based on the training data. As depicted in Fig. 10, the provided bounds contain the MC reference solution.

Probability of exceeding a local failure threshold \(y_0\) for a given quantity of interest. Comparison of MC reference solution to posterior mean approximation and posterior quantiles. a Example 1: male67, \(d = 25\), \(\sigma _{vM}\) at location 2. b Example 2: male71, \(d= 25\), \(\sigma _{vM}\) at location 3. c Example 3: male71 \(d=12.5\), \(\sigma _{vM}\) at location 3. d Example 4: male71 \(d= 25\), \(e_{vM}\) at location 4

The computational efficiency of the method depends on the ability to create cheap approximate models, which still provide “enough” relevant information. In our examples, the ratio of computational effort required to compute one sample of the accurate and the approximate model, respectively, is between 10.2 and 50. Whereas Koutsourelakis (2009) reports ratios of over 1,000, we believe that in general biomedical engineering problems such ratios can usually not be achieved. However, a ratio of 50, accomplished by using a coarser discretization and omitting the ILT in the approximate model, results in a tremendous reduction of the computational effort, see Table 5. Compared to direct MC using the high fidelity model, the total computational effort is reduced by a factor of 42. As the male67 patient does not exhibit ILT and merely a coarser discretization was used to create the approximate model, the computational cost for the male67 aneurysm is reduced by a factor of 10 compared to direct MC. However, the vast majority of AAAs in our patient database exhibit ILT and especially for large AAA, which are computationally expensive, being able to omit the ILT in the model will result in even greater savings. However, we would like to point out that omitting the ILT in the approximate model is obviously only a viable option as long as no uncertainties in the ILT properties itself are considered in the UQ analysis. By using direct MC as a sampling strategy for the approximate model, as compared to importance sampling or SMC, we are able to access additional quantities of interest by computing few extra samples in the high fidelity model and reuse all samples of the approximate model. We would like to stress the fact that the approximate model does not have to be accurate, as in deterministic multi-level/grid schemes. As shown in Fig. 9, approximate models without ILT yield significantly higher values for strain and stress due to the missing support of ILT for the AAA wall. Additionally, no restrictions to the interrelation between the approximate and high fidelity model apply. Even highly nonlinear interrelations are detected and accurately reproduced by the Bayesian regression model, the complexity of which is determined by the information provided by the training samples. Whereas inaccurate approximate models and nonlinear interrelations do not adversely affect the accuracy of the approach per se, coarsening of the discretization yields less detailed smeared out results due to the coarser representation of the random field, a larger discretization error, and potentially aggravates spurious numerical effects such as volumetric locking. This results in a noisier relationship between the approximate and the accurate model, i.e., a higher variance of the conditional probability distribution \(p(y|x)\). As the variance increases, the credible intervals will become larger too. Furthermore, the accurate prediction of the quantity of interest on the high fidelity model becomes more difficult, especially with regard to the tails of the distribution and the assessment of small failure probabilities.

While we used a set of 200 training samples and achieved an excellent agreement with the MC reference solution, the amount of training data needed depends on a number of factors. To be specific, the number of necessary training samples and hence runs of the high fidelity model, depend on the required accuracy, the employed approximate model and on the quantity of interest as well as the statistic to be estimated. Hence, it is difficult to provide an a priori estimates of how many samples are needed to achieve a given accuracy. However, the variance of the conditional distribution \(p(x|y)\) and the confidence intervals computed using (30) provide us with a good indicator whether additional data will improve the accuracy. If the variance can no longer be decreased by adding more training data, the low fidelity model has to be changed if a more accurate assessment is required. We note that more elaborate schemes to select the training samples could further reduce the number of evaluations of the high fidelity model. This could be achieved for instance by exploiting the confidence intervals; in the sense that additional samples are selected in regions of \(x\) which contribute more to the uncertainty in the estimate of \(Pr\left[ y\in \mathcal {A}\right] \) and where these intervals are relatively large (Koutsourelakis 2009). However, this is beyond the scope of this work and subject of ongoing research.

4.3 Implications for computational rupture risk assessment of AAAs

Considering these results with regard to AAA rupture risk assessment using deterministic computational models, it seems that stress-based failure or rupture models (Vande Geest et al. 2006a; Gasser et al. 2010; Reeps et al. 2012) are well suited for rupture risk assessment, since wall stress is a rather insensitive measure with regard to constitutive uncertainties in the AAA wall. However, while we considered uncertainty in the constitutive parameter \(\beta \), it is important to keep in mind that various other sources of uncertainty exist. Most importantly, uncertainties in the wall thickness are likely to have a profound affect on the stresses in the arterial wall. While we considered only the uncertainty in one constitutive parameter, the methodology presented here can readily be extended to include other uncertain model parameters. This is demonstrated for a simple example in the following section. The application to realistic patient-specific geometries with multiple random fields is beyond the scope of this paper, but can be done with the proposed method, and we are planning to do this in the future. We believe that rupture potential stratification employing stress-based measures can be improved by rigorous UQ and that population-averaged constitutive parameters and deterministic models are not sufficient for the accurate patient-specific assessment of AAA rupture potential.

Furthermore, while many of the conducted studies assess rupture potential by computing wall stresses based on an elastic model, it has been pointed out previously that the damage and failure process of soft tissue is more involved and cannot be captured with purely elastic models employing a von Mises stress-based failure criterion (Marini et al. 2011; Rodriguez et al. 2008; Peña et al. 2008; Volokh and Vorp 2008; Volokh 2010; Gasser and Holzapfel 2002). In addition, our results indicate that the von Mises stresses, while being only mildly sensitive to variations in \(\beta \), do not contain or reveal all information about the mechanical state of the tissue. With the stresses being determined mostly by the geometry of the aneurysm and the applied transmural pressure, the strains or strain energy is almost decoupled from the stresses as shown in Fig. 4. Advanced tissue damage and failure models, e.g., based on the evolution of the isochoric stored strain energy of the material, as proposed by Simo (1987) and applied to AAAs by Marini et al. (2011) require accurate prediction of the prevailing strain and strain energy. In lack of truly patient-specific parameters, detailed statistical and probabilistic models based on empirical data and rigorous UQ are the only way to obtain meaningful and reliable results.

However, it is well known that several growth and remodeling processes constantly rebuild and transform the tissue in the arterial wall; hence, cardiovascular systems cannot be considered to exhibit purely elastic behavior. Localized areas of high strains are likely to be remodeled such that elastic strain peaks in the tissue are reduced. Therefore, we believe that 95 % quantiles of both strains and strain energy are unrealistically high, as growth and remodeling processes would diminish such high strains.

To further reduce the uncertainty in the input parameters, the use of regression techniques to create statistical models which relate, e.g., the constitutive parameters to noninvasively measurable variables could help to reduce the uncertainty in patient-specific material properties or other model parameters. Since this empirical approach has been successfully applied to estimate the spatial distribution of AAA wall strength (Reeps et al. 2012; Vande Geest et al. 2006a), we believe that this approach could help to refine the probabilistic description of other uncertain model parameters as well.

5 Proof of concept: multiple sources of uncertainties