Abstract

Predetermination of the background error covariance matrix B is challenging in existing ocean data assimilation schemes such as optimal interpolation (OI). An optimal spectral decomposition (OSD) has been developed to overcome this difficulty without using the B matrix. The basis functions are eigenvectors of the horizontal Laplacian operator, pre-calculated on the basis of ocean topography, and independent of any observational data and background fields. Minimization of analysis error variance is achieved by optimal selection of the spectral coefficients. Optimal mode truncation depends on the observational data and observational error variance and is determined using the steep-descending method. Analytical 2D fields of large and small mesoscale eddies with white Gaussian noise inside a domain with four rigid and curved boundaries are used to demonstrate the capability of the OSD method. The overall error reduction using the OSD is evident in comparison to the OI scheme. Synoptic monthly gridded world ocean temperature, salinity, and absolute geostrophic velocity datasets produced with the OSD method and quality controlled by the NOAA National Centers for Environmental Information (NCEI) are also presented.


1 Introduction

In ocean data assimilation (or analysis), the coordinates (x, y, z) are usually represented by the position vector r with grid points represented by r n , n = 1, 2, …, N, and observational locations represented by r (m), m = 1, 2, …, M. Here, N is the total number of grid points, and M is the total number of observational points. A single variable or multiple variables c = (u, v, T, S, …), whether two- or three-dimensional, can be ordered by grid point and by variable, forming a single vector of length NP, where P is the number of variables. For multiple variables, non-dimensionalization is conducted before forming the single vector c (Chu et al. 2015). The “true”, analysis, and background fields (c t , c a , c b ) are represented by N-dimensional vectors and the observational data (c o ) by an M-dimensional vector,

where the superscript ‘T’ means transpose. The innovation (also called the observational increment),

\( \mathbf{d}={\mathbf{c}}_o-\mathbf{H}{\mathbf{c}}_b, \) (2)

represents the difference between the observational and background data at the observational points r (m). Here, H = [h mn ] is an M × N linear observation operator matrix converting the background field c b (at the grid points r n ) into “first guess observations” at the observational points r (m) (Fig. 1).
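The role of H in forming the innovation can be illustrated with a minimal Python sketch. It assumes a regular rectangular grid and bilinear interpolation weights (the paper states later that bilinear interpolation is used for H, but the details of Appendix B are not reproduced here). The function names `bilinear_row` and `innovation` and the flat index convention n = j * nx + i are illustrative choices, not taken from the paper.

```python
import math

def bilinear_row(x, y, xs, ys):
    """Return {grid_index: weight}, i.e. one sparse row of H, for an observation at (x, y).

    xs, ys are ascending coordinate lists of a regular grid; grid point (i, j)
    has flat index n = j * len(xs) + i (an illustrative convention).
    """
    dx, dy = xs[1] - xs[0], ys[1] - ys[0]
    # Lower-left corner of the enclosing cell, clamped to stay inside the grid.
    i = min(max(int(math.floor((x - xs[0]) / dx)), 0), len(xs) - 2)
    j = min(max(int(math.floor((y - ys[0]) / dy)), 0), len(ys) - 2)
    tx = (x - xs[i]) / dx
    ty = (y - ys[j]) / dy
    nx = len(xs)
    return {
        j * nx + i: (1 - tx) * (1 - ty),
        j * nx + i + 1: tx * (1 - ty),
        (j + 1) * nx + i: (1 - tx) * ty,
        (j + 1) * nx + i + 1: tx * ty,
    }

def innovation(obs_xy, c_o, c_b, xs, ys):
    """d = c_o - H c_b, with H applied row by row."""
    d = []
    for (x, y), obs in zip(obs_xy, c_o):
        row = bilinear_row(x, y, xs, ys)
        first_guess = sum(w * c_b[n] for n, w in row.items())
        d.append(obs - first_guess)
    return d
```

Each row of H has four non-zero weights that sum to one, so a background field that is linear in x and y is reproduced exactly at the observational points.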

The analysis error (ε a ) and observational error (ε o ) are defined by

which are evaluated at the grid points. The two errors are usually independent of each other,

Minimization of the analysis error variance

gives the optimal analysis field c a for the “true” field c t .

A common practice in ocean data assimilation (or analysis) is to use an N × M weight matrix W = [w nm ] to blend c b (at the grid points r n ) with the innovation d (at the observational points r (m)) (Evensen 2003; Tang and Kleeman 2004; Chu et al. 2004a; 2015; Galanis et al. 2006; Oke et al. 2008; Han et al. 2013; Yan et al. 2015)

Minimization of the analysis error variance with respect to weights,

determines the weight matrix

Here, B is the N × N background error covariance matrix; R is the M × M observational error covariance matrix and is usually simplified as a product of an observational error variance (\( {e}_o^2 \)) and an identity matrix I,

Substitution of (7) into (5) leads to the optimal interpolation (OI) equation,

which produces the analysis field c a from the innovation d. The challenge for the OI method is the determination of the background error covariance matrix B.
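In its standard textbook form, the OI update reads c a = c b + B H^T (H B H^T + e o ^2 I)^(-1) d. The sketch below applies that form on a 1-D grid in plain Python. Several simplifications are assumptions made for illustration only: observations sit exactly on grid points (so H merely selects entries), B is a Gaussian function of distance with a hypothetical `length_scale` (the paper's own B matrix, defined in its Appendix E, is not reproduced here), and the small linear system is solved by Gaussian elimination.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def oi_analysis(c_b, grid, obs_idx, c_o, e_o, length_scale):
    """Standard OI update on a 1-D grid with observations located at grid points."""
    def cov(i, j):
        # Assumed Gaussian background error covariance (unit variance).
        return math.exp(-((grid[i] - grid[j]) / length_scale) ** 2)

    # Innovation d = c_o - H c_b (H selects the observed grid points).
    d = [c_o[m] - c_b[n] for m, n in enumerate(obs_idx)]
    # S = H B H^T + e_o^2 I, an M x M matrix.
    S = [[cov(ni, nj) + (e_o ** 2 if a == b else 0.0)
          for b, nj in enumerate(obs_idx)]
         for a, ni in enumerate(obs_idx)]
    w = solve(S, d)
    # c_a = c_b + B H^T w at every grid point.
    return [c_b[n] + sum(cov(n, nm) * w[m] for m, nm in enumerate(obs_idx))
            for n in range(len(grid))]
```

With e o = 0 the analysis reproduces the observations at the observed points, and with a large e o it stays close to the background. The result depends directly on the assumed covariance shape and length scale, which is the difficulty the text attributes to OI.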

An alternative approach is to use a spectral method with lateral boundary (Г) information to decompose the variable anomaly at the grid points [c(r n ) − c b (r n )] into (Chu et al. 2015),

where {ϕ k } are the basis functions and K is the mode truncation. The eigenvectors of the Laplace operator with the same lateral boundary condition as (c − c b ) can be used as the set of basis functions {ϕ k } and written in matrix form (Chu et al. 2015)

For a given mode truncation K, minimization of the analysis error variance (4) with respect to the spectral coefficients

gives the spectral ocean data assimilation equation (Chu et al. 2004b, 2015),

where F is an N × N (diagonal) observational contribution matrix

Here, the matrices Φ, F, and H are all given in comparison to the OI Eq. (9) where the background error covariance matrix B needs to be determined.
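The idea of choosing spectral coefficients by minimizing a misfit can be sketched with an ordinary least-squares fit. This is a simplified stand-in, not the paper's Eq. (13): it ignores the observational contribution matrix F, evaluates the basis functions directly at the observation locations instead of applying H, and uses 1-D sine functions (the Dirichlet eigenfunctions of the Laplacian on an interval) as the basis. All function names are hypothetical.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def sine_basis(k, x, length):
    """k-th Dirichlet eigenfunction of the Laplacian on [0, length]."""
    return math.sin(k * math.pi * x / length)

def fit_coefficients(obs_x, d, K, length):
    """Least-squares coefficients a_1..a_K with sum_k a_k phi_k(x_m) ~ d_m."""
    M = len(obs_x)
    G = [[sine_basis(k, x, length) for k in range(1, K + 1)] for x in obs_x]
    # Normal equations (G^T G) a = G^T d.
    GtG = [[sum(G[m][p] * G[m][q] for m in range(M)) for q in range(K)]
           for p in range(K)]
    Gtd = [sum(G[m][p] * d[m] for m in range(M)) for p in range(K)]
    return solve(GtG, Gtd)

def evaluate(a, x, length):
    """Truncated spectral sum at position x."""
    return sum(a_k * sine_basis(k, x, length)
               for k, a_k in enumerate(a, start=1))
```

In this sketch no background error covariance enters: once the basis and the truncation K are fixed, the coefficients follow from the innovations alone.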

This spectral method has proven effective for ocean data analysis. Chu et al. (2003a, b) named it the optimal spectral decomposition (OSD). With it, several new ocean phenomena have been identified from observational data, such as a bi-modal structure of chlorophyll-a with winter/spring (February–March) and fall (September–October) blooms in the Black Sea (Chu et al. 2005a), fall–winter recurrence of current reversal from westward to eastward on the Texas–Louisiana continental shelf from current-meter and near-surface drifting buoy data (Chu et al. 2005b), propagation of long Rossby waves at mid-depths (around 1000 m) in the tropical North Atlantic from the Argo float data (Chu et al. 2007), and temporal and spatial variability of the global upper ocean heat content (Chu 2011) from the data of the Global Temperature and Salinity Profile Program (GTSPP, Sun et al. 2009).

The spectral mode truncation is the key for the success of the OSD method. It acts as a spatial low pass filter for the fields to allow the highest wave numbers corresponding to the highest spectral eigenvalues without aliasing due to the information provided from the observational network.

Questions arise: Can a simple and effective mode truncation method be developed that takes model resolution (i.e., the total number of model grid points) into account? What are the major differences between OI and OSD? What are the quality and uncertainty of the OSD method? The purpose of this paper is to answer these questions. The remainder of the paper is organized as follows. Section 2 describes error analysis. Section 3 presents the steep-descending mode truncation method. Section 4 shows idealized “truth” and “observational” fields. Section 5 compares analysis fields between OSD and OI. Section 6 introduces three synoptic monthly gridded world ocean temperature, salinity, and absolute geostrophic velocity datasets produced with the OSD method and quality controlled by the NOAA National Centers for Environmental Information (NCEI). Conclusions are given in Section 7. Appendices A and B briefly describe several methods to determine the H matrix. Appendix C shows the determination of basis functions. Appendix D presents the Vapnik-Chervonenkis dimension for mode truncation. Appendix E depicts a special B matrix for this study.

2 Error analysis

Low mode truncation does not represent the reality well, while high mode truncation may contain too much noise. Let the truncated spectral representation s K in (10) at the grid points form an N-dimensional vector,

The M-dimensional innovation vector [see (2)]

at observational points can be transformed into the grid points

where D(r n) represents the observational innovation at the grid points,

From Eq. (3a), observations at the grid points are computed using c o (r n ) = H T c o (r (m)). The original background state, c b (r n ), remains in grid space. The matrix form of (16) is

where f n denotes the contribution of all observational data to the grid point r n . The larger the value of f n , the larger the observational influence on that grid point (r n ). D is an N-dimensional vector at the grid points,

The analysis error (i.e., analysis c a versus “truth” c t ) in the spectral data assimilation [see (10)] is given by

Here, (10) and (17) are used. The analysis error is decomposed into two parts

with the truncation error given by

and the observational error given by

3 Steep-descending mode truncation

The Vapnik-Chervonenkis dimension (Vapnik 1983; Chu et al. 2003a, 2015) was used to determine the optimal mode truncation K OPT. As depicted in Appendix D, it depends only on the ratio of the total number of observational points (M) versus spectral truncation (K) and does not depend on the total number of model grid points (N). This method neglects observational error and ignores the model resolution. In fact, the analysis error variance over the whole domain is given by

where \( {e}_o^2 \) is the observational error variance [see (8)]. Here, the observational error is assumed to be the same at the grid points as at the observational points. This is due to the simplification of the error covariance matrix R = \( {e}_o^2 \) I. The Cauchy-Schwarz inequality shows that

The relative analysis error reduction at the mode-K can be expressed by the ratio

Both E K and E K-1 are large for small K (low-mode truncation), which may lead to a small value of γ K . Both E K and E K-1 are small for large K (high-mode truncation), which also leads to a small value of γ K . An optimal truncation should lie between the low-mode and high-mode truncations, where γ K takes a larger value (over a threshold). This procedure is illustrated as follows. The values (γ 2, γ 3, …, γ KB ) are calculated using (25) up to a large K B (say 250). The mean and standard deviation of γ can be computed as,

Suppose that the relative error reductions (γ 2, γ 3, …, γ KB ) satisfy the Gaussian distribution. A 100(1 − α) % upper one-sided confidence bound on γ is given by

which is used as the threshold for the mode truncation. Here, z is the random variable satisfying the Gaussian distribution with zero mean and standard deviation of 1. If several γ values exceed the threshold, the highest mode

is selected for mode truncation. After the mode truncation K OPT is determined, the spectral coefficients (a k , k = 1, 2, …, K OPT) can be calculated, as can the truncation error variance \( {E}_{K_{\mathrm{OPT}}}^2 \).
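The selection procedure described above can be sketched in a few lines of Python. Two points are assumptions because the corresponding displayed formulas are not available here: the relative error reduction is taken as γ K = (E K-1 ^2 − E K ^2) / E K-1 ^2, and the spread of γ is measured with the sample standard deviation. The threshold is the usual one-sided bound, mean plus z α times the standard deviation, with z α obtained from the standard normal distribution. The function name `select_truncation` is hypothetical.

```python
from statistics import NormalDist, mean, stdev

def select_truncation(E2, alpha=0.05):
    """Pick the highest mode whose relative error reduction exceeds the threshold.

    E2[i] is the analysis error variance obtained with truncation K = i + 1,
    for K = 1..K_B. Returns (K_opt, threshold); K_opt is None if no gamma_K
    exceeds the threshold.
    """
    # gamma_K for K = 2..K_B: relative reduction gained by adding mode K
    # (assumed form, see the lead-in).
    gamma = {K: (E2[K - 2] - E2[K - 1]) / E2[K - 2]
             for K in range(2, len(E2) + 1)}
    values = list(gamma.values())
    g_mean = mean(values)
    g_std = stdev(values)  # sample standard deviation (assumption)
    # Standard normal quantile for a one-sided 100(1 - alpha) % bound.
    z_alpha = NormalDist().inv_cdf(1.0 - alpha)
    threshold = g_mean + z_alpha * g_std
    above = [K for K, g in gamma.items() if g > threshold]
    return (max(above) if above else None), threshold
```

A smaller α raises the threshold, so fewer modes qualify and the selected truncation can only stay the same or decrease.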

3.1 Multi-platform observations

Let observations be conducted by L instruments with different observational errors \( {e}_o^{(l)} \) deployed at \( {\mathbf{r}}_l^{\left({m}_l\right)} \) (m l = 1, 2, …, M l ; l = 1, 2, …, L). The total number of observations is \( M={\displaystyle \sum_{l=1}^L{M}_l} \). The M-dimensional observational vector is represented by

The observational error variance is given by

The relative error reduction γ K for mode truncation (25) is replaced by

After the mode truncation is determined, the OSD Eq. (13) is used to get the analysis field.

4 “Truth,” “background,” and “observational” fields

Consider an artificial non-dimensional horizontal domain (−19 < x < 19, −15 < y < 15) with the four curved rigid boundaries (Fig. 2):

Horizontal non-dimensional domain with four curved rigid boundaries with each boundary given by Eq. (32)

The domain is discretized with Δx = Δy = 0.5. The total number of the grid points inside the domain (N) is 3569. Figure 3 shows the first 12 basis functions {ϕ k }, which are the eigenvectors of the Laplacian operator with the Dirichlet boundary condition, i.e., b 1 = 0 in (61) of Appendix C.

Basis functions from ϕ1 to ϕ12 for the domain depicted by Eq. (32)

The first basis function ϕ 1(x n ) shows a one-gyre structure. The second and third basis functions ϕ 2(x n ) and ϕ 3(x n ) show the east-west and north-south dual-eddies. The fourth basis function ϕ 4(x n ) shows the east-west slanted dipole-pattern with opposite signs in the northeastern region (positive) and the southwestern region (negative). The fifth basis function ϕ 5(x n ) shows the tripole-pattern with negative values in the western and eastern regions and positive values in between. The higher order basis functions have more complicated variability structures.
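The structure of the leading modes can be illustrated on a simpler geometry. The sketch below assumes a plain rectangle instead of the curved-boundary domain of Eq. (32), so that the Dirichlet eigenvectors of the five-point discrete Laplacian are known analytically as products of sines. It only verifies that such a mode satisfies the eigenvalue relation on the grid. The paper's basis functions for the curved domain are computed numerically and are not reproduced here, and all function names are hypothetical.

```python
import math

def sine_mode(p, q, nx, ny):
    """Mode (p, q) on the nx x ny interior points of a rectangle (zero on the boundary)."""
    return [[math.sin(p * math.pi * (i + 1) / (nx + 1)) *
             math.sin(q * math.pi * (j + 1) / (ny + 1))
             for i in range(nx)] for j in range(ny)]

def laplacian(phi, h):
    """Five-point Laplacian with Dirichlet (zero) values outside the interior."""
    ny, nx = len(phi), len(phi[0])

    def at(j, i):
        return phi[j][i] if 0 <= j < ny and 0 <= i < nx else 0.0

    return [[(at(j, i - 1) + at(j, i + 1) + at(j - 1, i) + at(j + 1, i)
              - 4.0 * phi[j][i]) / h ** 2
             for i in range(nx)] for j in range(ny)]

def eigenvalue(p, q, nx, ny, h):
    """Analytic eigenvalue lam with (discrete Laplacian) phi = -lam * phi."""
    return (4.0 / h ** 2) * (math.sin(p * math.pi / (2 * (nx + 1))) ** 2 +
                             math.sin(q * math.pi / (2 * (ny + 1))) ** 2)

def max_residual(p, q, nx, ny, h):
    """Largest absolute value of (Laplacian phi + lam * phi) over the grid."""
    phi = sine_mode(p, q, nx, ny)
    lap = laplacian(phi, h)
    lam = eigenvalue(p, q, nx, ny, h)
    return max(abs(lap[j][i] + lam * phi[j][i])
               for j in range(ny) for i in range(nx))
```

On the rectangle, mode (1, 1) has a single sign (one gyre), while modes (2, 1) and (1, 2) each split into two cells of opposite sign, which loosely mirrors the patterns described for the first three basis functions.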

Two “truth” fields for the non-dimensional domain with 4 rigid and curved boundaries (Fig. 2) contain multiple mesoscale eddies (treated as “truth”) given by

for the large-eddy field (Fig. 4a) and given by

for the small-eddy field (Fig. 4b). The background field is given by

The “observational” points {r (m)} are randomly selected inside the domain (Fig. 5) with the total number (M) of 300. The same “observational” points {r (m)} are used for all the sensitivity studies.

Sixteen sets of “observations” (c o ) are constructed from Fig. 4a, b using the analytical values plus white Gaussian noise (ε o ) of zero mean and various standard deviations (σ) from 0 (no noise) to 2.0, with an increment of 0.1 from 0 to 1.0 and of 0.2 from 1.0 to 2.0, generated in MATLAB.

Figure 6a, b show 6 out of the 16 constructed sets with σ = (0, 0.2, 0.5, 1.0, 1.6, 2.0). Both the OSD and OI methods are used to get the analysis field c a (r n ) from these “observations”. The bilinear interpolation (see Appendix B) is used for the observation operator H in this study.
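The construction of the noisy “observations” can be sketched as follows. The paper generates the noise in MATLAB; this Python version is only an illustration of the same idea (truth plus zero-mean Gaussian noise of standard deviation σ), and the function names and the fixed seed are assumptions.

```python
import random

def noise_levels():
    """Sixteen sigma values: 0 to 1.0 in steps of 0.1, then 1.2 to 2.0 in steps of 0.2."""
    first = [round(0.1 * i, 1) for i in range(11)]
    second = [round(1.0 + 0.2 * i, 1) for i in range(1, 6)]
    return first + second

def make_observations(truth_at_obs, sigma, seed=0):
    """Add white Gaussian noise of zero mean and standard deviation sigma."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    return [c + rng.gauss(0.0, sigma) for c in truth_at_obs]
```

Keeping the observation locations and the seed fixed while varying σ isolates the effect of the noise level in the sensitivity studies.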

a “Observational” data (c o ) from Fig. 4a with added white Gaussian noises of zero mean and various standard deviations: a 0 (i.e., no noise), b 0.2, c 0.5, d 1.0, e 1.6, and f 2.0. b “Observational” data (c o ) from Fig. 4b with added white Gaussian noises of zero mean and various standard deviations: a 0 (i.e., no noise), b 0.2, c 0.5, d 1.0, e 1.6, and f 2.0

5 Comparison between OSD and OI

a. OSD analysis fields

The steep-descending mode truncation K OPT depends on the user-input parameter e o [see (25)] and the observational noise σ. \( {E}_a^2 \) and γ K are computed from the “observational” data in Fig. 6a, b. The threshold of mode truncation (27) varies with the significance level α. In this study, (e o , σ) vary between 0 and 2; α has two levels of (0.05, 0.10) with z 0.05 = 1.645, z 0.10 = 1.287 in (27). For given values of e o (= 0.2) and σ (= 0.8), the optimal mode truncation depends on the significance level α with K OPT = 58 for α = 0.05 (Fig. 7a) and K OPT = 67 for α = 0.10 (Fig. 7b). Most results shown in this section are for α = 0.05 since it is a commonly used significance level.

Dependence of \( {E}_a^2 \) and γ K on K for the “observational” data for the small-scale eddy field with σ = 0.8 and e o = 0.2 at two significance levels of a α = 0.05 (z 0.05 = 1.645) and b α = 0.10 (z 0.10 = 1.291) as the threshold of mode truncation [see Eq. (27)]. The optimal mode truncation is 58 for α = 0.05 and 67 for α = 0.10

For the large-eddy field, K OPT is not sensitive to the values of σ and e o . It is 7 in the upper-left portion and 6 in the lower-right portion of Table 1. For the small-eddy field, K OPT takes (58, 67) in most cases, 178 for the high noise levels (σ ≥ 1.8) and low e o values (e o ≤ 1.0), and 82 for the low noise levels (σ ≤ 0.1) and low e o values (e o ≤ 0.3) (Table 2).

The analysis field using the OSD data assimilation (13) for a particular user-input parameter e o and noise level σ, \( {c}_a^{OSD}\left({\mathbf{r}}_n,{e}_o,\sigma \right) \), is represented in Fig. 8a (the large-eddy field) using “observations” in Fig. 6a (with various σ), and in Fig. 8b (the small-eddy field) using “observations” in Fig. 6b (with various σ). Comparison between Figs. 8a, b and 4a, b demonstrates the capability of the OSD method with the analysis fields \( {c}_a^{OSD}\left({\mathbf{r}}_n,\sigma, {e}_o\right) \) fully reconstructed for all occasions.

b. OI analysis fields

With the assumption that the c field is statistically stationary and homogeneous, the OI Eq. (9) with the R and B matrices represented by (8) and (65) [see Appendix E] is used to analyze the “observational” data with three user-defined parameters: (r a , r b , e o ). Here, r a and r b are the decorrelation scale and zero crossing (r b > r a ); e o is the standard deviation of the observational error. Let these parameters take discrete values with total number of P a for r a , P b for r b , and P e for e o . In this study, we set P a = P b = P e = 5. e o has five values (0.2, 0.5, 1.0, 1.5, 2.0). Considering the horizontal domain from −15 to 15 in both (x, y) directions, r a takes 5 values (2, 3, 4, 5, 6); (r b − r a ) takes 5 values (0.5, 1.0, 1.5, 2.0, 2.5). There are 125 combinations of (r a , r b , e o ) for the test.

a The analysis field c a obtained by the spectral data assimilation [see Eq. (13)] using the steep-descending mode truncation with the significance level of α = 0.05 from the “observations” shown in Fig. 6a with six noise (σ) levels (0, 0.2, 0.5, 1.0, 1.6, 2.0) and four values of e o : a 0.2, b 0.5, c 1.0, and d 2.0. b The analysis field c a obtained by the spectral data assimilation [see Eq. (13)] using the steep-descending mode truncation with the significance level of α = 0.05 from the “observations” shown in Fig. 6b with six noise (σ) levels (0, 0.2, 0.5, 1.0, 1.6, 2.0) and four values of e o : a 0.2, b 0.5, c 1.0, and d 2.0

The analysis field from the OI data assimilation (9), \( {c}_a^{OI}\left({\mathbf{r}}_n,\sigma, {r}_a,{r}_b,{e}_o\right) \), with four different sets of user-input parameters (r a , r b , e o ): (2, 2.5, 1), (4, 5.5, 1), (6, 8.5, 1), and (6, 8.5, 2), is presented in Fig. 9a (the large-eddy field) using “observations” in Fig. 6a, and in Fig. 9b (the small-eddy field) using “observations” in Fig. 6b. Comparison between Figs. 9a, b and 4a, b demonstrates strong dependence of the OI output on the selection of the parameters (r a , r b , e o ). For the large-scale eddies (Fig. 9a), the analysis fields c a are very different from the “truth” field c t for r a = 2, r b = 2.5, e o = 1 for all “observations” (Fig. 6a); the difference between the reconstructed and “truth” fields decreases as r a and r b increase; the two fields are quite similar when r a = 6, r b = 8.5 for both e o = 1 and 2. Such similarity decreases with increasing e o . For the small-scale eddies (Fig. 9b), the analysis fields c a are totally different from the “truth” field c t for r a = 6, r b = 8.5, e o = 1 and 2 for all “observations” (Fig. 6b), become less different as r a and r b decrease, and are quite similar to c t when r a = 2, r b = 2.5, e o = 1.

c. Root mean square error

The analysis field from OSD, \( {c}_a^{OSD} \), depends only on the observational error variance \( {e}_o^2 \) and its uncertainty is represented by the root mean square error R OSD,

Average over all the values of e o leads to the overall uncertainty

The analysis field using OI (\( {c}_a^{OI} \)) depends on three user-defined parameters (r a , r b , e o ). Its uncertainty due to a particular parameter is represented by

which are compared to \( {\overline{R}}^{OSD}\left(\sigma \right) \) and R OSD(σ, e o ).

a The analysis field c a obtained by the OI data assimilation [see Eq. (9)] for “observations” shown in Fig. 6a with various noise levels and various combinations of user-defined parameters (r a , r b , e o ): (2, 2.5, 1), (4, 5.5, 1), (6, 8.5, 1), and (6, 8.5, 2). b The analysis field c a obtained by the OI data assimilation [see Eq. (9)] for “observations” shown in Fig. 6b with various noise levels and various combinations of user-defined parameters (r a , r b , e o ): (2, 2.5, 1), (4, 5.5, 1), (6, 8.5, 1), and (6, 8.5, 2)

Figure 10 shows the comparison between R OI(σ, r a ) and \( {\overline{R}}^{OSD}\left(\sigma \right) \) for 5 different r a values: (2, 3, 4, 5, 6) and two types (the large-scale and small-scale) of the “observational” field. R OI(σ, r a ) monotonically increases with σ and is generally larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \). For the “observations” representing the large-scale eddy fields (L x = 2, L y = 3, see Fig. 6a), \( {\overline{R}}^{OSD}\left(\sigma \right) \) increases slightly from 0.32 for σ = 0 to 0.34 for σ = 2.0. However, R OI(σ, r a = 2) is always larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \) and increases from 0.37 for σ = 0 to 1.13 for σ = 2.0; R OI(σ, r a ≥ 3) is smaller than \( {\overline{R}}^{OSD}\left(\sigma \right) \) for small σ, equals \( {\overline{R}}^{OSD}\left(\sigma \right) \) at a certain σ 0, and is larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \) for σ > σ 0. The value of σ 0 increases with r a from 0.4 for r a = 3 to 1.0 for r a = 6. R OI(σ, r a = 6) increases from 0.13 for σ = 0 to 0.62 for σ = 2.0. For the “observations” representing the small-scale eddy field (L x = 5, L y = 7, see Fig. 6b), \( {\overline{R}}^{OSD}\left(\sigma \right) \) increases slightly from 0.22 for σ = 0 to 0.27 for σ = 0.4; evidently from 0.27 for σ = 0.4 to 0.40 for σ = 0.5; and slowly from 0.40 for σ = 0.5 to 0.71 for σ = 2.0. However, R OI(σ, r a ) is much larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \) for any r a . For example, R OI(σ, r a = 2) increases from 0.43 for σ = 0 to 1.14 for σ = 2.0; …, R OI(σ, r a = 6) increases from 0.89 for σ = 0 to 1.06 for σ = 2.0.

Comparison between R OI(σ, r a ) and \( {\overline{R}}^{OSD}\left(\sigma \right) \) of the analysis fields from the same “observations” with different noise levels with varying parameter r a = (2, 3, 4, 5, 6) from top to bottom with the left panels using “observations” shown in Fig. 6a and the right panels using “observations” in Fig. 6b. The solid curves represent the OSD with the significance level of α = 0.05; and the dotted curves refer to the OI

Figure 11 shows the comparison between R OI(σ, r b ) and \( {\overline{R}}^{OSD}\left(\sigma \right) \) for 5 different (r b − r a ) values: (0.5, 1.0, 1.5, 2.0, 2.5) and two types (large-scale and small-scale) of the “observational” fields. R OI(σ, r b ) monotonically increases with σ and is generally larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \). For the “observations” representing the large-scale eddy fields (L x = 2, L y = 3, see Fig. 6a), R OI(σ, r b − r a ) monotonically increases with σ from around 0.2 for σ = 0 to around 0.78 for σ = 2.0 for all the values of (r b − r a ), with σ 0 ranging from 0.4 for (r b − r a ) = 0.5 to 0.6 for (r b − r a ) = 2.5. For the “observations” representing the small-scale eddy fields (L x = 5, L y = 7, see Fig. 6b), R OI(σ, r b − r a ) is much larger than \( {\overline{R}}^{OSD}\left(\sigma \right) \) for any (r b − r a ) and σ. For example, R OI(σ, r b − r a = 0.5) increases from 0.53 for σ = 0 to 1.00 for σ = 2.0; …, R OI(σ, r b − r a = 2.5) increases from 0.58 for σ = 0 to 1.00 for σ = 2.0.

Comparison between R OI(σ, r b ) and \( {\overline{R}}^{OSD}\left(\sigma \right) \) of the analysis fields from the same “observations” with different noise levels with different (r b − r a ) = (0.5, 1.0, 1.5, 2.0, 2.5) with the left panels using “observations” shown in Fig. 6a and the right panels using “observations” in Fig. 6b. The solid curves represent the OSD with the significance level of α = 0.05; and the dotted curves refer to the OI

Figure 12 shows the comparison between R OI(σ, e o ) and R OSD(σ, e o ) for 5 different e o values: (0.2, 0.5, 1.0, 1.5, 2.0) and two types (large-scale and small-scale) of the “observational” fields. First, R OI(σ, e o ) monotonically increases with σ and is evidently larger than R OSD(σ, e o ) for all σ and e o . Second, the dependence of R OSD(σ, e o ) on σ is insensitive to the change of e o . For the “observations” representing the large-scale eddy fields (L x = 2, L y = 3, see Fig. 6a), R OI(σ, e o ) is close to R OSD(σ, e o ) for σ < 1.2, and much larger than R OSD(σ, e o ) for σ > 1.2 with e o = 0.2 and 0.5; and vice versa with e o = 1.0, 1.5, and 2.0. R OI(σ, e o = 2.0) increases slightly from 0.98 at σ = 0 to 1.08 at σ = 2.0 and is almost twice R OSD(σ, e o ) for all σ. For the “observations” representing the small-scale eddy fields (L x = 5, L y = 7, see Fig. 6b), R OI(σ, e o ) is also larger than R OSD(σ, e o ). For example, R OI(σ, e o = 2.0) increases slightly from 1.37 at σ = 0 to 1.42 at σ = 2.0, which is two to three times R OSD(σ, e o = 2.0) for σ < 1.0.

Comparison between R OI(σ, e o ) and R OSD(σ, e o ) of the analysis fields from the same “observations” with different noise levels with varying parameter e o = (0.2, 0.5, 1.0, 1.5, 2.0) from top to bottom with the left panels using “observations” shown in Fig. 6a and the right panels using “observations” in Fig. 6b. The solid curves represent the OSD with the significance level of α = 0.05; and the dotted curves refer to the OI

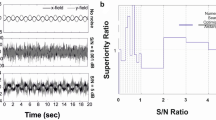

The overall performance between OI and OSD with various noise levels (σ) can be estimated by the error ratio,

Figure 13 shows the dependence of κ(σ) (mostly less than 1) on σ for the two types (large-scale and small-scale eddies) of the “observational” fields represented by Fig. 6a and b with two different significance levels (α = 0.05, 0.10) for the threshold of mode truncation in the OSD method (27). At α = 0.05 (Fig. 13a), for the large-scale eddy field, κ(σ) takes 0.71 at σ = 0; fluctuates with σ; and decreases to 0.57 at σ = 2.0. For the small-scale eddy field, κ(σ) increases monotonically with σ from 0.43 at σ = 0 to 0.67 at σ = 2.0. At α = 0.10 (Fig. 13b), for the large-scale eddy field, κ(σ) takes 1.17 at σ = 0 and decreases monotonically with σ to 0.40 at σ = 2.0. For the small-scale eddy field, κ(σ) increases monotonically with σ from 0.36 at σ = 0 to 0.70 at σ = 2.0. This means that the OSD performs better overall for the test cases. Integration of κ(σ) over the whole interval of the noise level [0, 2.0] yields

which means that the overall error for the OSD is 76 % (51 %) of the OI error for the large-scale (small-scale) eddy field for α = 0.05. The overall performance of the OSD method is relatively insensitive to the selection of the significance level α.
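The error measures used in this comparison can be sketched in Python. The sketch assumes that the root mean square error is taken over all grid points, that κ(σ) is the ratio of the OSD error to the OI error, and that the integral over the noise interval is normalized by the interval length and evaluated with the trapezoidal rule (which handles the uneven σ spacing). These forms are inferred from the surrounding text, not copied from the paper's equations, and the function names are hypothetical.

```python
import math

def rmse(analysis, truth):
    """Root mean square difference between an analysis field and the truth field."""
    n = len(truth)
    return math.sqrt(sum((a - t) ** 2 for a, t in zip(analysis, truth)) / n)

def error_ratio(r_osd, r_oi):
    """kappa(sigma) = R_OSD / R_OI at each noise level."""
    return [o / i for o, i in zip(r_osd, r_oi)]

def mean_ratio(sigmas, r_osd, r_oi):
    """Trapezoidal average of kappa over [sigmas[0], sigmas[-1]]."""
    kappa = error_ratio(r_osd, r_oi)
    area = sum(0.5 * (kappa[n] + kappa[n + 1]) * (sigmas[n + 1] - sigmas[n])
               for n in range(len(sigmas) - 1))
    return area / (sigmas[-1] - sigmas[0])
```

A mean ratio below 1 indicates that, averaged over the noise levels, the OSD error is smaller than the OI error.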

The computational cost of the OSD and OI methods is comparable in the test cases. In the OSD method, the steep-descending method for mode truncation requires (a) the computation of a large number K B [see Eq. (26)] of eigenvectors and (b) the construction and solution of the OSD Eq. (13); these can be done once and for all. In the OI method, however, the construction and solution of the OI Eq. (9) must be repeated each time the background or observations change.

6 Synoptic monthly gridded temperature and salinity fields

The OSD method is used to produce the synoptic monthly gridded (SMG) temperature (T) and salinity (S) datasets (Chu and Fan 2016a; Chu et al. 2016) from the two world ocean observational (T, S) profile datasets [the NOAA National Centers for Environmental Information (NCEI)’s World Ocean Database (WOD) and the Global Temperature and Salinity Profile Program (GTSPP)]. The synoptic monthly gridded absolute geostrophic velocity dataset (Chu and Fan 2016b) is also established from the SMG-WOD (T, S) fields using the P vector method (Chu 1995; Chu and Wang 2003). These datasets have been quality controlled by the NCEI professionals and are openly available for public download at http://data.nodc.noaa.gov/geoportal/rest/find/document?searchText=synoptic+monthly+gridded&f=searchPage. The duration is January 1945 to December 2014 for the synoptic monthly gridded WOD (T, S) and absolute geostrophic velocity fields and January 1990 to December 2009 for the synoptic monthly gridded GTSPP (T, S) fields.

7 Conclusions

Ocean spectral data assimilation has been developed on the basis of the classic theory of the generalized Fourier series expansion such that any ocean field can be represented by a linear combination of the products of basis functions (also called modes) and corresponding spectral coefficients. The basis functions are the eigenvectors of the Laplace operator, determined only by the topography with the same lateral boundary condition as the assimilated variable anomaly. They are pre-calculated and independent of any observational data and background fields. The mode truncation K depends on the observational data and a user input parameter \( {e}_o^2 \) (i.e., observational error variance), and is determined via the steep-descending method.

The OSD completely changes the common ocean data assimilation procedures such as OI, the Kalman filter (KF), and variational methods, where the background error covariance matrix B needs to be pre-determined since the weight matrix W is used. In contrast, the OSD uses the spectral form to represent the observational innovation at the grid points [see (17)]. Minimization of the truncation error variance leads to the optimal selection of the spectral coefficients. Thus, the background error covariance matrix B does not appear in the OSD procedure since the weight matrix W is not used. This is in contrast to the existing OI method, where the B matrix is often assumed to be stationary and homogeneous with user-defined parameters.

The capability of the OSD method is demonstrated through its comparison to OI using analytical 2D fields of large and small mesoscale eddies inside a domain with four rigid and curved boundaries as “truth”. The “observations” are obtained at randomly selected locations by adding to the “truth” white Gaussian noise with zero mean and standard deviations (σ) varying from 0 (no noise) to 2.0 at 16 levels. A simple covariance function (Bretherton et al. 1976) was used for the OI procedure with three user-defined parameters (r a , r b , e o ) taking 5 possible values each. The OSD uses the same value of e o . The performance of OSD and OI is compared by (1) patterns for each set of 125 combinations of parameters, (2) root mean square errors for varying parameters, and (3) overall root mean square errors. The results show that the overall error reduction using the OSD is evident: the overall OSD error is 76 % (51 %) of the OI error for the large-scale (small-scale) eddy field at the significance level α = 0.05, and 72 % (59 %) at α = 0.10. In the context of practical application, synoptic monthly gridded world ocean temperature, salinity, and absolute geostrophic velocity datasets have been produced with the OSD method and quality controlled by the NOAA National Centers for Environmental Information (NCEI).

Two issues need to be addressed concerning the covariance matrix. First, the comparison between the OSD and OI is at one particular instant in time. The B matrix used in the OI is based only on distance. Second, in covariance matrix-based methods, when the covariance matrix is fixed once and for all, it is well known that the very first data assimilation cycle performs well, but subsequent cycles are less effective because the remaining error tends to be orthogonal to the directions of the covariance matrix. In the OSD method, the correction is based on spectral functions (i.e., basis functions) chosen once and for all. A more sophisticated, flow-based covariance matrix would allow OI to perform much better. Further verification and validation under real-time ocean conditions are needed to assess the quality of OSD over successive time cycles and to compare the OSD and OI methods.

In the two test cases (large and small eddy fields), it is clear that the optimal mode truncation K OPT (around 6 for the large eddy field and around 60 for the small eddy field) is very close to the number of eigenvectors required to represent the truth field (Fig. 4). This shows the capability of the steep-descending mode truncation. However, the performance of the method when the truth field is a mixture of large and small scales in different parts of the domain needs to be further investigated.

References

Bretherton FP, Davis RE, Fandry CB (1976) A technique for objective analysis and design of oceanographic experiments applied to MODE-73. Deep-Sea Res Oceanogr Abstr 23:559–582. doi:10.1016/0011-7471(76)90001-2

Chu PC (1995) P-vector method for determining absolute velocity from hydrographic data

Chu PC (2008) Probability distribution function of the upper equatorial Pacific current speeds. Geophys Res Lett 35. doi:10.1029/2008GL033669

Chu PC (2009) Statistical characteristics of the global surface current speeds obtained from satellite altimeter and scatterometer data. IEEE J Sel Topics Earth Obs Remote Sensing 2(1):27–32

Chu PC (2011) Global upper ocean heat content and climate variability. Ocean Dyn 61(8):1189–1204

Chu PC, Fan CW (2016a) Synoptic monthly gridded three dimensional (3D) World Ocean Database temperature and salinity from January 1945 to December 2014 (NCEI Accession 0140938). NOAA National Centers for Environmental Information (NCEI), http://data.nodc.noaa.gov/cgi-bin/iso?id=gov.noaa.nodc:0140938.

Chu PC, Fan CW (2016b) Synoptic Monthly Gridded WOD Absolute Geostrophic Velocity (SMG-WOD-V) (January 1945–December 2014) with the P-vector method (NCEI Accession 0146195). NOAA National Centers for Environmental Information (NCEI), http://data.nodc.noaa.gov/cgi-bin/iso?id=gov.noaa.nodc:0146195

Chu PC, Wang GH (2003) Seasonal variability of thermohaline front in the central South China Sea. J Oceanogr 59:65–78

Chu PC, Ivanov LM, Margolina TM (2005b) Seasonal variability of the Black Sea chlorophyll-a concentration. J Mar Syst 56:243–261

Chu PC, Ivanov LM, Melnichenko OM (2005a) Fall-winter current reversals on the Texas-Louisiana continental shelf. J Phys Oceanogr 35:902–910

Chu PC, Fan CW, Sun LC (2016) Synoptic monthly gridded Global Temperature and Salinity Profile Programme (GTSPP) water temperature and salinity from January 1990 to December 2009 (NCEI Accession 0138647). NOAA National Centers for Environmental Information (NCEI), http://data.nodc.noaa.gov/cgi-bin/iso?id=gov.noaa.nodc:0138647.

Chu PC, Ivanov LM, Korzhova TP, Margolina TM, Melnichenko OM (2003a) Analysis of sparse and noisy ocean current data using flow decomposition. Part 1: theory. J Atmos Ocean Technol 20:478–491

Chu PC, Ivanov LM, Korzhova TP, Margolina TM, Melnichenko OM (2003b) Analysis of sparse and noisy ocean current data using flow decomposition. Part 2: application to Eulerian and Lagrangian data. J Atmos Ocean Technol 20:492–512

Chu PC, Ivanov LM, Margolina TM (2004b) Rotation method for reconstructing process and field from imperfect data. Int J Bifur Chaos 14:2991–2997

Chu PC, Ivanov LM, Melnichenko OV, Wells NC (2007) Long baroclinic Rossby waves in the tropical North Atlantic observed from profiling floats. J Geophys Res 112:C05032. doi:10.1029/2006JC003698

Chu PC, Tokmakian RT, Fan CW, Sun LC (2015) Optimal spectral decomposition (OSD) for ocean data assimilation. J Atmos Ocean Technol 32:828–841

Chu PC, Wang GH, Chen YC (2002) Japan/East Sea (JES) circulation and thermohaline structure, part 3, autocorrelation functions. J Phys Oceanogr 32:3596–3615

Chu PC, Wang GH, Fan CW (2004a) Evaluation of the U.S. Navy’s Modular Ocean Data Assimilation System (MODAS) using the South China Sea Monsoon Experiment (SCSMEX) data. J Oceanogr 60:1007–1021

Chu PC, Wells SK, Haeger SD, Szczechowski C, Carron M (1997) Temporal and spatial scales of the Yellow Sea thermal variability. J Geophys Res (Oceans) 102:5655–5668

Evensen G (2003) The ensemble Kalman filter: theoretical formulation and practical implementation. Ocean Dyn 53:343–367

Franke R, Nielson G (1991) Scattered data interpolation and application: a tutorial and survey. In: Hagen H, Roller D (eds) Geometric modelling, methods and applications. Springer, Berlin, pp. 131–160

Galanis G, Louka P, Katsafados P, Pytharoulis I, Kallos G (2006) Applications of Kalman filters based on non-linear functions to numerical weather predictions. Ann Geophys 24:2451–2460

Han GJ, Wu XR, Zhang SQ, Liu ZY, Li W (2013) Error covariance estimation for coupled data assimilation using a Lorenz atmosphere and a simple pycnocline ocean model. J Clim 26:10218–10231

Oke PR, Brassington GB, Griffin DA, Schiller A (2008) The Bluelink Ocean Data Assimilation System (BODAS). Ocean Model 21:46–70

Shepard D (1968) A two-dimensional interpolation function for irregularly spaced data. Proc 23rd Nat Conf ACM, 517–523

Sun LC, Thresher A, Keeley R, et al. (2009) The data management system for the Global Temperature and Salinity Profile Program (GTSPP). In Proceedings of the “OceanObs’09: Sustained Ocean Observations and Information for Society” Conference (Vol. 2), Venice, Italy, 21–25 September 2009, Hall, J, Harrison D.E. and Stammer, D., Eds., ESA Publication WPP-306

Tang Y, Kleeman R (2004) SST assimilation experiments in a tropical Pacific Ocean model. J Phys Oceanogr 34:623–642

Vapnik VN (1983) Reconstruction of Empirical Laws from Observations (in Russian). Nauka, p 447

Yan CX, Zhu J, Xie JP (2015) An ocean data assimilation system in the Indian Ocean and West Pacific Ocean. Adv Atmos Sci. doi:10.1007/s00376-015-4121-z

Acknowledgments

The Office of Naval Research, the Naval Oceanographic Office, and the Naval Postgraduate School supported this study.

Additional information

Responsible Editor: Jean-Marie Beckers

This article is part of the Topical Collection on the 47th International Liège Colloquium on Ocean Dynamics, Liège, Belgium, 4–8 May 2015

Appendices

Appendix A. Determination of H-matrix using all grid points

Inverse distance weighting (IDW) interpolation using all grid points is one of the most commonly used interpolation techniques. It is based on the assumption that the value of h mn in the H-matrix is influenced more by nearby points and less by more distant points. Let

\[ d_{mn} = \sqrt{\left(x_i - x^{(m)}\right)^2 + \left(y_j - y^{(m)}\right)^2} \tag{41} \]

be the distance between the grid point (x i , y j ) and the observational point (x (m), y (m)). The influence of the grid point x n on the observational point x (m) is given by (Shepard 1968)

\[ h_{mn} = \frac{d_{mn}^{-q}}{\sum_{n'=1}^{N} d_{mn'}^{-q}} \tag{42} \]

where q is an arbitrary positive real number called the power parameter (typically, q = 2). Another form of h mn is given by (Franke and Nielson 1991)

\[ h_{mn} = \frac{\left[\left(D^{(m)} - d_{mn}\right) / \left(D^{(m)} d_{mn}\right)\right]^2}{\sum_{n'=1}^{N} \left[\left(D^{(m)} - d_{mn'}\right) / \left(D^{(m)} d_{mn'}\right)\right]^2} \tag{43} \]

where D (m) is the distance from the observational point x (m) to the most distant grid point. Equation (43) has been found to give better results than (42). As a result, c b (x (m), t) is somewhat symmetric about each grid point.
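The row-normalized inverse-distance weighting described in this appendix can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: it uses the Shepard-type weights with power parameter q, the function name `idw_h_matrix` and the tolerance `tol` (a guard for observations that coincide with a grid point) are hypothetical, and the grid is flattened with the x index varying fastest.

```python
import numpy as np


def idw_h_matrix(grid_xy, obs_xy, q=2.0, tol=1e-12):
    """Build an M x N matrix of normalized inverse-distance weights.

    grid_xy: (N, 2) grid-point coordinates; obs_xy: (M, 2) observation
    coordinates; q: power parameter. `tol` is a hypothetical guard for
    observations that coincide with a grid point.
    """
    grid_xy = np.asarray(grid_xy, dtype=float)
    obs_xy = np.asarray(obs_xy, dtype=float)
    # d[m, n] = distance between observational point m and grid point n
    d = np.linalg.norm(obs_xy[:, None, :] - grid_xy[None, :, :], axis=2)
    H = np.zeros_like(d)
    for m in range(d.shape[0]):
        coincident = d[m] < tol
        if coincident.any():
            # Avoid division by zero: put all weight on the coincident point(s)
            H[m, coincident] = 1.0 / coincident.sum()
        else:
            w = d[m] ** (-q)
            H[m] = w / w.sum()
    return H


# Usage: a 3 x 3 unit-spaced grid and two observational points
gx, gy = np.meshgrid(np.arange(3.0), np.arange(3.0))
grid = np.column_stack([gx.ravel(), gy.ravel()])
H = idw_h_matrix(grid, [[0.25, 0.25], [1.0, 1.0]])
```

Each row of `H` sums to one, so `H @ c_b` is a weighted average of the gridded background values at each observational point. Because every grid point contributes, the matrix is dense, in contrast to the neighboring-point schemes of Appendix B.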

Appendix B. Determination of H-matrix using neighboring grid points

Consider the position vector x = (x, y) located inside the grid cell (Fig. 14),

\( x_i \le x < x_{i+1}, \quad y_j \le y < y_{j+1}. \)

Mathematically, the variable c b at r (inside the grid cell) can be represented approximately by a polynomial,

where L = 1 refers to the bilinear interpolation, and L = 3 leads to the bicubic interpolation. For the bilinear interpolation, Eq. (44) becomes

or in matrix notation,

Since c b at the four neighboring grid points, c b (x i , y j ), c b (x i+1, y j ), c b (x i , y j+1), and c b (x i+1, y j+1), are given, substitution of the four values into (45) leads to the determination of the four coefficients A 00, A 10, A 01, A 11. Using these coefficients, the bilinear interpolation (45) becomes

Let the observational point r (m) be located in the grid cell,

Evaluation of c b at the observational point r (m) using (46) leads to

where the proportional coefficients {\( {p}_{i,j}^{(m)},{p}_{i+1,j}^{(m)},{p}_{i,j+1}^{(m)},{p}_{i+1,j+1}^{(m)} \)} are defined by

It is noted that the proportionality coefficients {\( {p}_{i,j}^{(m)},{p}_{i+1,j}^{(m)},{p}_{i,j+1}^{(m)},{p}_{i+1,j+1}^{(m)} \)} depend solely on the locations of the observational points (r (m)), and

Setting L = 3 in (44) leads to the bicubic spline interpolation,

or in matrix notation,

which is rewritten by

Determination of the 10 coefficients (A 00, A 01, A 02, A 03, A 10, A 11, A 12, A 20, A 21, A 30) requires not only the values,

but also the derivatives at the neighboring grid points

The solution of the above set of 10 linear algebraic Eqs. (54) and (55) leads to the determination of the 10 coefficients (A 00, A 01, A 02, A 03, A 10, A 11, A 12, A 20, A 21, A 30). It is noted that the values of c b at the 10 neighboring grid points (x i , y j ), (x i+1, y j ), (x i , y j+1), (x i+1, y j+1), (x i-1, y j ), (x i , y j-1), (x i+2, y j ), (x i-1, y j+1), (x i+1, y j-1), (x i , y j+2) are used to solve (54) and (55). Following (53), interpolation of c b from the 10 neighboring grid points to the observational point r (m) = (x (m), y (m)) using the bicubic interpolation is given by

Thus, an equation similar to (48) can be written for evaluating c b at the observational point r (m) with the known 10 coefficients (A 00, A 01, A 02, A 03, A 10, A 11, A 12, A 20, A 21, A 30),

where the 10 corresponding coefficients {\( {p}_{i,j}^{(m)},{p}_{i+1,j}^{(m)},{p}_{i,j+1}^{(m)},{p}_{i+1,j+1}^{(m)},{p}_{i-1,j}^{(m)},{p}_{i,j-1}^{(m)},{p}_{i+2,j}^{(m)},{p}_{i-1,j+1}^{(m)},{p}_{i+1,j-1}^{(m)},{p}_{i,j+2}^{(m)} \)} are analytically determined and depend solely on the locations of the observational points (r (m)), and

Since only 10 neighboring grid points are used to interpolate at the observational point r (m) using the bicubic interpolation, the matrix H has only 10 non-zero values in each row. However, it is too tedious to write it out.
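For the simpler bilinear case of this appendix, each row of H has only four non-zero entries, and these can be written down directly. The sketch below is an illustration rather than the authors' implementation: it assumes a rectilinear grid with strictly increasing coordinates, the standard bilinear weights, and a hypothetical flattening n = j·Nx + i of the grid indices; the function names are invented for this example.

```python
import numpy as np


def bilinear_weights(x, y, xg, yg):
    """Return {(i, j): weight} for the four grid points surrounding (x, y)."""
    xg = np.asarray(xg, dtype=float)
    yg = np.asarray(yg, dtype=float)
    # Locate the cell x_i <= x < x_{i+1}, y_j <= y < y_{j+1} (clipped to the grid)
    i = int(np.clip(np.searchsorted(xg, x, side="right") - 1, 0, len(xg) - 2))
    j = int(np.clip(np.searchsorted(yg, y, side="right") - 1, 0, len(yg) - 2))
    s = (x - xg[i]) / (xg[i + 1] - xg[i])  # fractional position in x
    t = (y - yg[j]) / (yg[j + 1] - yg[j])  # fractional position in y
    return {
        (i, j): (1 - s) * (1 - t),
        (i + 1, j): s * (1 - t),
        (i, j + 1): (1 - s) * t,
        (i + 1, j + 1): s * t,
    }


def bilinear_h_row(x, y, xg, yg):
    """One row of H for an observation at (x, y); ASSUMES n = j * Nx + i."""
    row = np.zeros(len(xg) * len(yg))
    for (i, j), w in bilinear_weights(x, y, xg, yg).items():
        row[j * len(xg) + i] = w
    return row
```

The weights depend only on the observation location and the grid, not on the field being interpolated, so the rows can be computed once and reused for every variable and every assimilation time.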

Appendix C. Basis functions

As pointed out by Chu et al. (2015), three necessary conditions should be satisfied in the selection of the basis functions {ϕ k (r)}: (i) satisfaction of the same homogeneous boundary condition as the assimilated variable anomaly, (ii) orthonormality, and (iii) independence of the assimilated variables. The first necessary condition requires the same boundary condition for (c − c b ) and the basis functions {ϕ k }. The second necessary condition is given by

where δ kk′ is the Kronecker delta,

Due to their independence of the assimilated variable (the third necessary condition), the basis functions are available prior to the data assimilation.

The basis functions are the eigenvectors {ϕ k } of the Laplacian operator with the same boundary condition as the variable anomaly (c − c b ),

Here, {λ k } are the eigenvalues; e is the unit vector normal to the boundary; τ denotes a moving point along the boundary; and [b 1(τ), b 2(τ)] are parameters varying with τ. The boundary condition in (61) becomes the Dirichlet boundary condition when b 1 = 0 and the Neumann boundary condition when b 2 = 0. As pointed out by Chu et al. (2015), different variable anomalies have different [b 1(τ), b 2(τ)]. For example, the temperature, salinity, and velocity potential anomalies have b 2 = 0 for the rigid boundary and b 1 = 0 for the open boundary, whereas the streamfunction anomaly has b 1 = 0 for the rigid boundary and b 2 = 0 for the open boundary. The eigenvectors {ϕ k } are orthonormal and independent of the assimilated variables.
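To make the construction of such basis functions concrete, the following sketch computes eigenvectors of a discrete Laplacian. It is a deliberately simplified illustration, not the procedure used for realistic ocean topography: it assumes a flat rectangular domain, a 5-point finite-difference Laplacian on interior points, the Dirichlet case (b 1 = 0) on all four sides, and the sign convention ∇²ϕ = −λϕ with λ > 0. The function name `laplacian_basis` is hypothetical.

```python
import numpy as np


def laplacian_basis(nx, ny, dx=1.0, dy=1.0, n_modes=10):
    """Leading eigenpairs of the negative discrete Laplacian (Dirichlet case).

    nx, ny count interior grid points; the field is ASSUMED to vanish on
    the surrounding boundary. Points are flattened as n = j * nx + i.
    """
    def second_difference(n, h):
        # 1D second-derivative matrix with zero values outside the interior
        off = np.ones(n - 1)
        return (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / h**2

    lap = (np.kron(np.eye(ny), second_difference(nx, dx))
           + np.kron(second_difference(ny, dy), np.eye(nx)))
    # -lap is symmetric positive definite; eigh returns ascending eigenvalues
    # and eigenvectors that are orthonormal in the Euclidean (grid-sum) sense
    lam, phi = np.linalg.eigh(-lap)
    return lam[:n_modes], phi[:, :n_modes]


# Usage: the five gravest modes on an 8 x 6 interior grid
lam, phi = laplacian_basis(8, 6, n_modes=5)
```

The columns of `phi` depend only on the grid geometry and boundary condition, which mirrors the statement that the basis functions are available before any data are assimilated. Curved boundaries, land masks, or mixed boundary conditions would require a different discretization.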

Appendix D. Vapnik-Chervonenkis dimension for mode truncation

The Vapnik-Chervonenkis dimension (Vapnik 1983; Chu et al. 2003a, 2015) is used to seek the optimal mode truncation on the basis of the first term of the analysis error (23),

with the cost function

Here, α (≪1) is the significance level, and J * is the upper bound of J tr . For a given M, J tr decreases monotonically with K, whereas μ increases with K if α is given. The optimal mode truncation is obtained through the minimization of the cost function,

This method neglects the observational error [only the first term of (23) is considered] and ignores the model resolution (represented by the total number of grid points N). The ratio of the number of observational points (M) to the spectral truncation (K) is the key to determining the optimal mode truncation K OPT.

Appendix E. B matrix

The B matrix is often established based on the assumption of statistical stationarity and homogeneity of the reconstructed field with a simple covariance function. For example, Bretherton et al. (1976) proposed

depending on distances only. Here, r ij is the distance between the two grid points r i and r j ; r a and r b are the decorrelation scale and the zero crossing, respectively. To conduct the OI data assimilation, the three parameters (e o , r a , r b ) need to be defined by the user. Chu et al. (1997, 2002) compute autocorrelation functions from historical observational data to fit the Gaussian function and obtain decorrelation scales for the B matrix. Recent studies show that some variables, such as the upper ocean current speed, do not follow the normal distribution but rather the Weibull distribution (Chu 2008, 2009).
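A distance-only B matrix of this kind is straightforward to assemble once a covariance function is chosen. The sketch below is illustrative only: the functional form, a Gaussian with decorrelation scale r a multiplied by a factor that vanishes at the zero crossing r b , is an assumption made for this example and should not be read as the exact expression used in the paper. The function name is hypothetical, and the third parameter e o is not modeled.

```python
import numpy as np


def distance_only_b_matrix(points, r_a, r_b):
    """Homogeneous, isotropic correlation matrix built from distances only.

    points: (N, 2) grid-point coordinates; r_a: decorrelation scale;
    r_b: zero-crossing distance. The functional form is an ASSUMPTION.
    """
    points = np.asarray(points, dtype=float)
    # r[i, j] = distance between grid points i and j
    r = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    # Assumed form: equals 1 at r = 0 and changes sign at r = r_b
    return (1.0 - (r / r_b) ** 2) * np.exp(-r**2 / (2.0 * r_a**2))


# Usage: three grid points on a line, one unit apart
B = distance_only_b_matrix([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]], r_a=1.0, r_b=2.0)
```

Because every entry depends only on the separation between two points, the matrix carries no information about the flow or the topography, and the user must supply r a and r b in advance. This is the predetermination burden that the OSD method is designed to avoid.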

Cite this article

Chu, P.C., Fan, C. & Margolina, T. Ocean spectral data assimilation without background error covariance matrix. Ocean Dynamics 66, 1143–1163 (2016). https://doi.org/10.1007/s10236-016-0971-x

DOI: https://doi.org/10.1007/s10236-016-0971-x