Abstract

Skills enabling and ensuring universal access to information have been investigated intensively during the past few years. The research results provide knowledge on the differences and digital divides. When examining ICT skill levels, the accuracy of assessment is a key issue for interpreting the results and obtaining applicable data for appropriate interventions to enhance digital inclusion. To date, ICT skill assessment has been based mainly on self-reports and subjective evaluations. However, as previous studies have shown, people tend to overrate or underrate their own levels of competence. Thus, a novel performance-based approach for assessing ICT skills is presented in this paper. The ICT skill test contains 42 tasks grouped into 17 ICT fields. The study was conducted with upper comprehensive and upper secondary level school students (n = 3159) and their teachers (n = 626) during the years 2014–2016 in Finland. Using factor analysis, three ICT skills factors were created: basic digital skills, advanced technical skills, and professional ICT skills. Performance in the ICT skill test also differed by gender, as the male students and teachers outperformed the female students and teachers. Performance also differed by educational level, as both upper secondary level students and teachers were found to possess higher-level ICT skills than students and teachers at the comprehensive level. We thus argue that to compare ICT skills and ensure the validity of the assessments, consistent assessment is needed for both students and teachers. In addition, in order to diminish the ICT skill gap among students, interventions using formal education are urgently needed, and in particular, more attention should be given to both teacher training and in-service training.


1 Introduction

During the past few years, universal access to digital information has received a lot of attention, as sufficient ICT skills can enable citizens to utilise certain key services in digitalised societies [1,2,3,4]. Consequently, ICT skills are important for educational systems and strategies that aim to avoid digital gaps and citizens’ exclusion from basic services in society and to ensure universal access to information, see [5, 6]. However, what these essential skills in digital societies actually are remains an open question. In the DIGCOMP framework project funded by the European Commission to develop and understand digital competence, five competence areas were categorised, namely information, communication, content creation, safety, and problem-solving competencies [6]. In turn, Binkley et al. [7] defined twenty-first century skills as ways of thinking, working, and living in a digitalised world. Further still, Fraillon et al. [8] defined computer and information literacy as the ‘individual’s ability to use computers to investigate, create, and communicate in order to participate effectively at home, at school, in the workplace, and in the society’ (p. 17). Consequently, the skills related to information and communication technology seem to be ever more important for citizens to have in their lives. Quite often, research on universal access to information turns into discussions on digital divides. Research on the digital divide and digital inclusion has moved during the past few decades from issues of availability of and access to technology to research on skills, and further yet to the actual outcomes of Internet and digital technology use [9, 10].

To analyse how to enhance digital inclusion using education, accurate knowledge of the level of current digital skills and competencies is needed. However, there are several challenges for the instruments currently available for assessing ICT skills, namely a conceptual ambiguity in the definitions and the operations of the assessment methods and the tendency of many of the instruments used to oversimplify ICT skills [11,12,13]. To date, ICT assessments are often based on self-reporting and subjective evaluations [11]. Yet, as previous studies have shown, when people self-report their skills, they too easily overrate or underrate their levels of competence, see [12–14]. Therefore, an alternative approach for investigating the current levels of ICT competencies was employed here.

This paper reports the results of a performance-based ICT skills assessment for both students and teachers. In order to improve education and ICT competencies in schools, assessments of both students’ and teachers’ skills are needed. Hence, we investigate the differences between the three factors of ICT competence regarding basic, advanced, and professional ICT skills. The ICT skills test used contained 42 performance-based items, which were then categorised into 17 fields of ICT skills (see Appendix 1). These 17 fields are based on the Finnish National Core Curriculum [15], the content of the eSkills certification programmes of the Finnish Information Society Development Centre, and the requirements of information and communication studies in Finnish universities of applied sciences.

We thus addressed the following research question:

How do students and teachers perform in the ICT skill test by gender and educational level, and what kinds of differences are there between the ICT skills of students and teachers?

This paper contributes to universal access research by providing empirical results on how ICT skills assessment can be constructed and conducted for both students and teachers. In this study, we evaluated ICT skills using three factors, and the results identify the differences among and between the ICT skills of students and teachers. Because the same performance-based test was used for assessing the ICT skills of students and teachers, the results and conclusions offered on how to enhance digital inclusion through education are more accurate than results from self-reported evaluation alone.

2 Digital divides compared to digital skills

In recent studies aiming to ensure universal access to information and thereby diminish the digital divide, the focus has shifted from limitations in access and availability of technology to differences in technology use and actual skills [1, 16], and even further to the differences in the benefits gained from Internet and technology use across all life realms [9]. In the 1990s, primary attention was paid to inequalities in the availability of technology and Internet access in various countries. These considerations were strongly related to such issues as socio-economic background, race, and residence [10, 16]. This phase was called the first level of the digital divide.

Then, at the beginning of the 2000s, the focus of digital divide research shifted to examining actual differences in skills and usage. When more people gained access to the technology infrastructure, their skills and usage habits became the focus of research, and insufficient skills were noticed as playing a major role in actual digital inclusion [17, 18]. This phase became known as the second-level digital divide [17].

In their study, van Deursen and van Dijk [18] pointed out that even though people with a low level of education spent more hours online and visited the Internet more frequently than people with medium and high levels of education, they did not benefit as much from being online as the more educated people did. Less educated people tended to engage mainly in social interaction and gaming, which are quite time-consuming activities. More highly educated users tended to spend less time on the Internet, though they used it in more beneficial ways. For example, they used the Internet for personal development, information seeking, and following the news. Consequently, highly educated people tended to use the Internet in ways that helped their knowledge and skills increase, while less educated users did not. It was then argued that Internet usage habits can reflect known socio-economic differences, and thereby, Internet use could tend to reinforce rather than decrease social inequality, see, e.g. [9, 17].

The third level of the digital divide concerns the differences in tangible outputs that users gain from Internet use within certain populations that have broadly similar usage profiles and relatively autonomous and unlimited access to both the Internet and ICT infrastructures. In other words, the issue relates to gaps in users’ capacity to translate their time online into favourable offline outcomes. Research on this third-level divide examines who benefits and in what ways from Internet use [9]. In a societal context where Internet access is nearly universal, researchers have now detected various feedback effects. For example, outcomes achieved from Internet use provide useful feedback on people’s offline status, which in turn influences digital inclusion factors such as access, skills, use, and motivation, see, e.g. [1, 9]. When van Deursen and Helsper [9] examined the outcomes of digital engagement, their results showed that most of the digital divide indicators related first of all to skills and then to Internet usage patterns. Further still, highly educated individuals benefited more from being online than did less educated people, particularly in the domains of commerce, institutional government, and educational outcomes. The overall conclusion offered by van Deursen and Helsper [9] was that, although more and more people have gained access to the Internet, it offers the most to the higher socio-economic groups. Therefore, when information and services are increasingly offered online, the number of potential outcomes of such digitalisation increases. As people from higher socio-economic groups achieve better offline benefits from their digital engagement than people of lower status, inequalities in society may also increase.

According to van Deursen and Helsper [9] in societies with near-universal Internet access, such as the Netherlands (or the Scandinavian countries, including Finland), the third-level digital divides have become crucial. This issue is a challenge for formal education practices as well. As Hatlevik et al. [19] pointed out, schools should identify their students’ level of competence and make distinct plans to equalise these observed ability differences. Further, in terms of the school’s role in developing their students’ ICT skills, Kivinen et al. [3] stated:

“According to policy-makers, school should prepare would-be citizens for the knowledge society by teaching ICT-literacy, knowledge-assessment, and skills of digital communication and cooperation. Yet at the moment not all teachers have proficiency in ICT themselves, pupils’ skills vary greatly, and many of the established school practices may not be smoothly compatible with the new objectives of the professed information society” (p. 377).

According to Goode [20], educational institutions have thus far also offered digital learning opportunities quite heterogeneously.

3 ICT skills evaluation

3.1 Concepts

According to Siddiq et al. [11], the concepts and constructs for ICT skill assessment should be conducted from either a domain perspective (ICT, digital, or twenty-first century) or a knowledge perspective (literacy, competence, and related skills). In their systematic review, Siddiq et al. [11] examined 38 assessment instruments for primary and secondary school students’ ICT literacy from the years 2001 to 2014. Their review condensed the main dimensions from different studies to those related to information, communication, content creation, safety, problem-solving, and technical operational skills. The information dimension contains issues such as browsing, searching, filtering, evaluating, storing, and retrieving information. Communication refers to interacting through digital technologies, sharing information and content, engaging in online citizenship, and collaborating through digital technologies. Content creation includes developing content, integrating and re-elaborating it, copyright and licences, and computer programming. Safety involves protecting devices, managing and protecting personal digital data, protecting health, protecting the environment, and ‘net’ etiquette. Problem-solving in turn involves resolving tasks by using digital tools, collaborative problem-solving, innovating and creatively using technology, and identifying digital competence gaps. Finally, technical operational skills include the ability to solve technical problems, identify needs and technological responses, and use basic technical skills [11].

Ferrari’s [6] DIGCOMP framework quite similarly divides digital competence into information skills, communication skills, content creation skills, safety skills, and problem-solving skills. Information skills include browsing, searching, filtering, evaluating, storing, and retrieving information. Communication skills include interacting, sharing, engaging, collaborating, net etiquette or ‘netiquette’, and managing digital identity. Content creation skills in DIGCOMP are identical to the review of Siddiq et al. [11] already mentioned here. Safety skills in DIGCOMP refer to protecting devices, data and digital identity, health, and the environment. Problem-solving skills in turn include solving technical problems, identifying needs and technological responses, innovation and creative technology use, and identifying digital competence gaps [6]. Van Deursen et al. [14] indeed remarked that Ferrari’s definitions are technically oriented and based on the number of devices used for online communication.

Helsper and Eynon [21] identified four distinct types of digital skills: creative, social, technical, and critical. Their goal was to bring two important but usually separate areas of study together: research on digital skills and literacy and research on the effective use of ICTs under the digital inclusion agenda. In their approach, creative skills consist of uploading photographs, downloading music, and learning to use a new technology. Social skills in turn include participating in discussions online, making new friends, and uploading photographs. Technical skills involve cleaning up viruses, participating in discussions online, and learning to use a new technology. Finally, critical skills involve judging the reliability of a source and gathering information. Helsper and Eynon [21] also pointed out that social and creative skills are more activity-related than skill-related. Van Deursen et al. [14] commented on Helsper and Eynon’s classification by stating that it was based on media literacy research, which suggested that skills should be evaluated beyond the mere technical level, in terms of the ability to work with technologies for specific social purposes.

The Internet Skills Scale (ISS) designed by van Deursen et al. [14] measures five dimensions of Internet skills: operational, information navigation, social, creative, and mobile. Van Deursen et al. [14] argued that Internet skills should be considered distinct from computer skills since the use of the Internet requires more skills than the use of a computer. For example, information searching or content creation requires more than just the skills to use a computer. The operational factor of ISS contains, for example, abilities to download and save files, use shortcut keys, connect to a Wi-Fi network, and adjust privacy settings. Information navigation skills include the abilities to decide the best keywords for online searches or the skills to navigate through web pages. The social factor of ISS includes the ability to know which information to share online or how to limit the audience on social media. The creative factor contains skills such as how to create something new from existing online content, how to design a web page, and how to apply online content licences. The mobile factor in turn includes the ability to install or download apps and keep track of the costs of actual mobile app use.

When these assessments are conducted in the context of formal education, the results of ICT skill assessment are often intended to be applicable to national curriculum development [22] or to school-level planning [5]. In addition, most of the research is conducted with student samples, such as secondary or high school students [23, 19], university students [24], or teenagers in general [2]. Relatively few studies (see, e.g. [5, 13]) have assessed teachers’ ICT skills.

3.2 Assessment methods

A variety of methods are used to conduct and evaluate ICT skill assessments, though surveys and self-reports seem to be the most dominant methods. In her review of Internet skill measurements and their assessments, Litt [25] classified Internet skill assessment methods into: (1) survey and self-reported measures, (2) performance/observation measures, and (3) combined and unique assessments. In surveys and self-reports, the dominant methods in the field of Internet and technology skill assessment, participants usually respond to a question or a set of questions about their own competence levels (e.g. [25, 2]) or evaluate their ability to perform specific tasks on the Internet (e.g. [18, 26]). In the second type of assessment method (according to Litt’s classification [25]), qualitative studies prefer observation-based measures or interviews, which incorporate ethnographic practices. These types of studies have focused on, for example, observing a person’s actions during information search tasks [27, 28]. Interviews in their turn typically consist of open-ended questions like ‘What are you strong in?’ [3]. Litt [25] also argued that the majority of observation and performance Internet skills studies have a structure that resembles laboratory-like settings.

In the evaluation of ICT skills, task-based assessments are less common than assessment methods based on self-evaluations. Some studies have conducted a task-based assessment in which participants performed, for example, Internet tasks related to a holiday trip abroad [29] or government issues [28]. Claro et al. [22] developed a task-based assessment in their own virtual environment by simulating an actual environment where ICT skills are typically used. This virtual environment simulated common ICT applications, and the tasks were designed to emulate real-life school work situations. The problems and tasks the participants solved in this assessment environment related to information, communication, ethics, and their social impacts. Moreover, Ainley et al. [5] used a similar task-based test design in their assessment instrument: the questions and tasks were based on a realistic theme and followed a linear narrative structure. However, as Litt [25] summarised, performance- and observation-based measures do have challenges due to their time-consuming nature and the difficulties of cross-comparison and replication. Self-reports, in turn, were criticised for their validity because the evaluations are subjective. Further, Van Deursen et al. [14] argued that because performance measurements are time-consuming and expensive, there is an urgent need for more accurate and reliable large-scale assessment instruments and more valid, updated, and nuanced self-assessments to have more generalisable and diverse samples.

To summarise, when comparing the assessment methods, the majority of assessment instruments use self-reporting. Most are online tests with multiple choice questions. Some have also included dynamic formats that require participants to interact with the tasks. A few comprised a dynamic task design that required some interaction with the test environment. The majority of tests are evaluated using quantitative methodologies [11]. In addition, Siddiq et al. [11] argued that the majority of the tests assess digital information search, retrieval, evaluation, and technical skills, though aspects like problem-solving with ICT, digital communication, and online collaboration are not covered satisfactorily. They also argued that there is a lack of documentation of the psychometric properties for many of the assessment instruments [11]. In the context of our study, Finnish basic education and secondary education level teachers’ ICT skills and practices have previously been investigated using a self-report questionnaire [30] and a teacher online survey called OPEKA [31]. The OPEKA group has currently developed a corresponding online survey (OPPIKA) for students. Therefore, we argue that performance-based assessments are indeed needed.

4 Research method, procedure, and data

In our study, student data were collected in Finland during the years 2014 and 2015. Altogether, 41 upper comprehensive and upper secondary level schools were selected through convenience sampling, i.e. these schools were able to choose whether they wanted to be involved in the study or not. The students from the basic education level (grades 7–9/9) were tested one class at a time, so that any bias caused by self-selected participation was as slight as possible. Altogether, 3159 youths aged 12 to 22 were tested, and of those, 51.6% were boys and 48.4% were girls. The mean age of the participants was 15.85 years. Further, 40% (N = 1261) of the students came from the basic education level (upper comprehensive schools, grades 7–9), and 60% (N = 1898) from the upper secondary level. In the Finnish education system, the upper secondary level is divided into general (leading to the matriculation examination) and vocational (leading to a vocational examination). Commonly, admission to higher education is based on the results of the matriculation examination and/or entrance tests, although both of the upper secondary level choices provide an opportunity to continue to the tertiary education level [32]. Of the upper secondary level students in this study, 54% came from general upper secondary schools, and 46% from vocational institutions.

Teacher data were collected in Finland during the years 2014–2016. The participating educational institutions could choose whether to test only students or also test teachers. The acceptable response rate among teachers from those organisations that elected to join was 70%. All organisations for which the response rate remained below that level were removed from the final data. Altogether, 626 teachers from the basic education level (comprehensive schools) and the upper secondary education level (general upper secondary schools) were tested, and of that total, 29.5% were male teachers, and 70.5% were female teachers. The mean age of these participants was 45.04 years (min 25, max 65). Among the teacher data, 78% (N = 490) of teachers came from basic education level schools, and 22% (N = 136) came from general upper secondary schools.

ICT skills were measured using the digital, performance-based ICT skill test. The test was the same for both the students and the teachers. Altogether, the test consisted of 42 task-based items, grouped into 17 fields of ICT skills (see Appendix 1). Tasks were implemented in such a way that the context (user interface, graphics) simulated common ICT applications and hence mirrored real-life settings. Each field contained 2–5 items, and the participants could achieve 4 points in each field, which could result in a maximum total score of 68 for the ICT skill test.

The tested competence areas (17 fields) were chosen based on the Finnish National Core Curriculum [15] current at the time of test development, the content of the eSkills certification programmes (intended for working adults) of the Finnish Information Society Development Centre, and the requirements in the information and communications technology field at the universities of applied sciences. To ensure that these items were well understood by all potential respondents, usability testing was conducted with upper comprehensive school students during the software development process in the fall of 2013.

5 Dimension of ICT skills of students and teachers

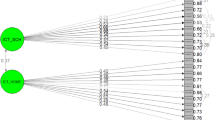

As a preliminary analysis, we conducted exploratory factor analyses to assess the construct validity of the scales used in our study. We used the principal components factor analysis with a varimax rotation to assess the factor loadings and dimensionality of our scales as well as to refine the measures. The factor analysis was conducted using the student data.

Before the exploratory factor analysis, we performed Bartlett’s test of sphericity to investigate factorability of the data, and Kaiser–Meyer–Olkin’s (KMO) test to measure sampling adequacy. These results indicated a significant test statistic for Bartlett’s test of sphericity, Chi-square 14,397.196, df 136, p ≤ .001, and a KMO value of .927, meaning that the data were suitable for structure detection.
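As a rough check on the reported degrees of freedom, the following minimal sketch uses the standard textbook form of Bartlett's test of sphericity. It is not the authors' analysis code: the function name is hypothetical, and the log-determinant passed in is a placeholder, since the correlation matrix itself is not reported.

```python
def bartlett_sphericity(n_obs, n_vars, log_det_corr):
    """Return (chi_square, df) for Bartlett's test of sphericity.

    log_det_corr is the natural log of the determinant of the
    correlation matrix of the n_vars variables.
    """
    chi_square = -(n_obs - 1 - (2 * n_vars + 5) / 6) * log_det_corr
    df = n_vars * (n_vars - 1) // 2
    return chi_square, df


# 17 ICT fields and 3159 students are taken from the text;
# the log-determinant of -1.0 is a placeholder, not a reported value.
chi_square, df = bartlett_sphericity(n_obs=3159, n_vars=17, log_det_corr=-1.0)
print(df)  # 136
```

With 17 variables the test has 17 × 16 / 2 = 136 degrees of freedom, which agrees with the df reported above.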

The exploratory factor analysis of the data, using the principal component extraction method and a varimax rotation of all the 17 items, revealed three factors with eigenvalues over 2.0 (see Table 1). The first factor, ‘basic digital skills’, with an eigenvalue of 3.35, included eight items (Cronbach’s alpha = .81). The second factor, ‘advanced technical skills’, with an eigenvalue of 2.74, included five items (Cronbach’s alpha = .75). The third factor, ‘professional ICT skills’, with an eigenvalue of 2.22, included four items (Cronbach’s alpha = .66).
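For readers unfamiliar with the reliability coefficient quoted for each factor, here is a minimal sketch of how Cronbach's alpha is usually computed from item-level scores. The data are hypothetical (five respondents, four items scored 0–4) and the function name is an assumption; the study's raw scores are not available here.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores, not data from the study.
scores = [
    [1, 0, 1, 0],
    [2, 1, 1, 1],
    [3, 2, 2, 1],
    [4, 3, 2, 2],
    [2, 2, 3, 1],
]
print(round(cronbach_alpha(scores), 2))
```

Alpha rises when the items vary together, so a factor with few items or loosely related items tends to score lower.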

This three-factor solution explained 49% of the total variance, and the Cronbach’s alpha of the entire scale was .86. This outcome provided support for conceptualising the total ICT skills as a construct with the presented three subscales. However, because values of .7 or .75 are often used as the ‘cut-off’ value for Cronbach’s alpha, see [33], at least the third factor should be revised in future work in order to raise its reliability estimate. Finally, all the item loadings in the exploratory factor analysis were .49 or greater, which was a sign of their practical significance.
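The share of explained variance can be reproduced from the reported eigenvalues. The sketch below assumes, as is usual for a principal components analysis on a correlation matrix, that the total variance equals the number of analysed variables (17).

```python
# Eigenvalues of the three retained factors, as reported in the text.
eigenvalues = [3.35, 2.74, 2.22]
n_variables = 17  # the 17 ICT skill fields entered into the analysis

explained_share = sum(eigenvalues) / n_variables
print(f"{explained_share:.1%}")  # 48.9%
```

The result rounds to the 49% stated above.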

The factor scores were normalised by dividing the factor sums by the number of factor items to enable clear comparisons between the factors. After normalisation, the range for each factor was 0–4 points. For the student data, the average score on the basic digital skill factor was 1.995 out of 4 points, with a standard deviation of .787. The average score on the advanced technical skill factor was notably lower at 1.432, with a standard deviation of .867. The most difficult factor for students was professional ICT skills, for which the average score was .657, with a standard deviation of .53. The means and standard deviations for the average scores for each ICT skill factor are shown in Table 2. Likewise, for the teachers, the average score on the basic digital skill factor was 2.331 out of 4 points, with a standard deviation of .812. The average score for the advanced technical skill factor was 1.595, with a standard deviation of 1.063. The professional ICT skills had an average score of .371, with a standard deviation of .530, which was even lower than the students’ score for the same factor.
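The scoring and normalisation described above can be illustrated with a short sketch. The function name and the example field scores are hypothetical; only the 0–4 range per field, the 17 fields, and the eight items of the first factor come from the text.

```python
MAX_POINTS_PER_FIELD = 4
N_FIELDS = 17


def normalised_factor_score(field_scores):
    """Divide the factor sum by the number of fields in the factor (range 0-4)."""
    if any(not 0 <= score <= MAX_POINTS_PER_FIELD for score in field_scores):
        raise ValueError("each field score must lie between 0 and 4")
    return sum(field_scores) / len(field_scores)


# Maximum total score of the whole test.
print(N_FIELDS * MAX_POINTS_PER_FIELD)  # 68

# Hypothetical scores of one participant on the eight basic digital skill fields.
basic_fields = [3, 2, 4, 1, 2, 3, 2, 1]
print(normalised_factor_score(basic_fields))  # 2.25
```

Because every factor is rescaled to the same 0–4 range, means can be compared across factors even though they contain different numbers of fields.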

To examine the differences between the students and the teachers for the three factors of the ICT skill test, analyses of variance (ANOVA) were used (see Table 2). In basic digital skills and advanced technical skills, teachers performed significantly better than the students (basic digital skills: F = 95.729, df = 1, p ≤ .001, advanced technical skills: F = 17.951, df = 1, p ≤ .001). For professional ICT skills, the students in their turn outperformed the teachers, and this difference was found to be significant (F = 30.325, df = 1, p ≤ .001).
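A one-way ANOVA F statistic can be approximated from group sizes, means, and standard deviations alone. The sketch below applies this to the basic digital skills factor using the summary values reported in the text; the function name is hypothetical and this is not the authors' analysis script. The result lands close to, but not exactly on, the reported F for this factor, presumably because the published means and standard deviations are rounded.

```python
def one_way_anova_f(groups):
    """groups: list of (n, mean, sd) tuples; returns (F, df_between, df_within)."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from the sample standard deviations.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Basic digital skills: (n, mean, sd) as reported for students and teachers.
students = (3159, 1.995, 0.787)
teachers = (626, 2.331, 0.812)
f_stat, df_b, df_w = one_way_anova_f([students, teachers])
print(round(f_stat, 1), df_b, df_w)
```

With two groups, df between is 1, matching the df reported for each comparison.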

5.1 ICT skills by gender

To examine whether there were any gender differences for the three factors of the ICT skill test, an analysis of variance (ANOVA) was conducted comparing the factor scores between the male and the female students (see Table 3) and also between the male and the female teachers (see Table 4). Among the students, the average scores of male students were higher than the average scores of female students, though for basic digital skills, the difference was not significant (F = 5.694, df = 1, p > .01). In contrast, significant gender differences were found in advanced technical skills (F = 245.111, df = 1, p ≤ .001) and professional ICT skills (F = 234.537, df = 1, p ≤ .001). Among the teachers, we found significant differences in all ICT skill factors (basic digital skills F = 19.243, df = 1, p ≤ .001, advanced technical skills F = 109.592, df = 1, p ≤ .001, professional ICT skills F = 58.941, df = 1, p ≤ .001), with male teachers being more skilled in all three factors than female teachers were.

5.2 ICT skills by educational level

Analysis of variance (ANOVA) was used to analyse educational level differences for the three factors of ICT skills. Because there were three different education levels contained in the student data, the p values from ANOVA were adjusted using the Bonferroni correction for multiple comparisons. Among these students, there were significant differences between educational levels in all factors of the ICT skill test (basic digital skills F = 232.871, df = 1, p ≤ .001, advanced technical skills F = 86.217, df = 1, p ≤ .001, and professional ICT skills F = 17.268, df = 1, p ≤ .001) (see Table 5). The paired comparison indicated that in the case of basic digital skills, all educational levels differed significantly from one another, with the comprehensive school students being the weakest and the general upper secondary school students performing the best. For advanced technical skills, the basic education students performed significantly weaker than the upper secondary level students did, and in professional ICT skills, students from the vocational institutions significantly outperformed students from the other education levels.
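The Bonferroni correction mentioned above multiplies each p value by the number of comparisons and caps the result at 1. The following sketch shows the idea for the three pairwise comparisons between educational levels. The p values are hypothetical placeholders, since the unadjusted pairwise values are not reported, and the function name is an assumption.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p values (each multiplied by the number of tests)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted p values for the three pairwise comparisons.
comparisons = [
    "comprehensive vs general upper secondary",
    "comprehensive vs vocational",
    "general upper secondary vs vocational",
]
raw_p = [0.0004, 0.012, 0.40]

for label, p_adj in zip(comparisons, bonferroni(raw_p)):
    print(f"{label}: adjusted p = {p_adj:.4f}")
```

The adjustment is conservative: a comparison stays significant only if its raw p value is below the chosen threshold divided by the number of comparisons.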

Similarly, in the teacher data (see Table 6), upper secondary level teachers were found to perform significantly better than basic education level teachers for all ICT skill factors (basic digital skills F = 9.650, df = 1, p ≤ .01, advanced technical skills F = 18.459, df = 1, p ≤ .001, professional ICT skills F = 11.712, df = 1, p ≤ .01).

6 Conclusions and discussion

Research in digital inclusion has developed further and shifted its focus during the last decade. Digital skills have been recognised as an important aspect of success in highly digitalised societies, and correspondingly the need to assess those skills has increased. Available instruments for ICT skill evaluation, however, have generally been insufficient. This paper reports a study on performance-based ICT skill assessment in the context of formal education for 3159 students and 626 teachers during the years 2014–2016.

After a preliminary factor analysis, three ICT skill factors were formed. The first factor, basic digital skills, consists of basic operational, content creation, and information searching skills. As expected, both students and teachers performed best in basic digital skills. The second factor, advanced technical skills, consisted of more technical issues such as software and operating system installation and initialisation, maintenance and updating, and information networks and security. Both students and teachers tended to gain notably fewer points from tasks belonging to this factor than from those belonging to the previous factor. It is worth noting that male teachers performed better than students on the first and second factors, whereas female teachers performed better than students on the first factor but weaker on the second. The third factor, professional ICT skills, included those skills needed for tertiary educational level studies (in the ICT field) in Finland. For both students and teachers, this factor was clearly the most difficult, and only a few participants solved the tasks for this factor with high scores. It is also worth noting that for this factor students performed noticeably better than both female and male teachers.

A previous study by Gui and Argentin [23], which investigated the digital skills of Italian high school students (aged 15–20) in theoretical, operational, and evaluational domains, found that the students performed best on basic operational skills. In a study of 15-year-old Chilean students, Claro et al. [22] found that students were able to solve tasks related to the use of information as consumers (i.e. searching, organising, or managing information), but struggled with tasks related to the use of information as producers (i.e. developing their own ideas in a digital environment, refining digital information, or creating representations). Calvani et al. [34] argued, based on their results, that students’ (aged 14–16) knowledge and competences were inadequate, especially when shifting from strictly technical aspects to the critical cognitive and socio-ethical dimensions involved in the use of technologies. Van Deursen et al. [9] reported similar results among the Dutch aged 16 to over 60: these people tended to manage basic operational (and communication) tasks but had shortcomings, especially in their ability to use technology for creative purposes as information producers.

Our results show that among the students studied, gender was not related to significant differences in basic digital skills. For the tasks related to advanced technical skills and professional ICT skills, male students performed better than female students. The gender difference observed among students in ICT skill test performance is in line with previous research [35–37]. Overall, performance in the ICT skill test was strongly divided, both by gender and more generally among all students. Our results confirm the previous findings that young people’s technology skills are rather heterogeneous (see, e.g. [34, 38]), and the hypothesis that young people are ‘digital natives’ is not supported [21, 38]. Male teachers significantly outperformed female teachers in every ICT skill factor [37]. The observed gender differences among the teachers in our analysis are in line with Ilomäki’s [37] research, which found that male teachers estimate their ICT skills as being higher than female teachers do. Furthermore, comparing the gender differences between teachers and students, we found that the basic digital skills of Finnish students are more equally distributed by gender than those of their teachers.

As expected, educational level was found to be related to ICT skills. Based on our data, the students in general upper secondary schools and in vocational institutions had better test results than comprehensive school students. This finding is mainly due to the simple fact that upper secondary level students are older than comprehensive school students. However, there were also differences among upper secondary level students, even though these students were of the same age. General upper secondary school students outperformed students from vocational institutions in basic digital skills and advanced technical skills. In contrast, students from vocational institutions performed better on professional ICT skills. Therefore, not only age but also the field of education and differences in students’ interests affected students’ ICT skills. Similarly, among teachers, upper secondary school teachers gained better results on all three ICT skill factors than the other teachers.

With respect to gender and educational level differences among the teachers, it should be noted that in Finland all teachers, at both the basic education level and the upper secondary level, are required to have a Master’s degree. Teachers in grades 1–6 at the basic education level are called class teachers (teaching de facto all subjects) and are required to have a Master of Education degree. Teachers in grades 7–9 at the basic level and all teachers at the upper secondary level are required to have a Master’s degree in the field of the subject(s) they teach as well as pedagogical studies. In our teacher data, the effect size of the gender difference was the same among teachers of grades 7–9 and the upper secondary level, whose teaching areas differ, as among teachers of grades 1–6 (class teachers), who all have the same educational background and the same teaching area. Thus, among the teachers, the differences in ICT skills were more likely the consequence of differences in motivation, (leisure time) digital technology usage experience, and the professional requirements of institutions at different educational levels than of other issues such as academic background or teaching area.

These findings also relate to in-service training and to the interventions and staff development efforts needed for all teachers to improve their ICT skills and thus ensure optimal digital skill learning opportunities for every student in Finnish schools. According to PISA 2012, the share of teachers in Finland who report a high level of need to develop their ICT skills for teaching is 17.5%, which is below the OECD average [39]. Weaknesses in teachers’ pedagogical ICT skills may have been noticed, but the extent of the problem is still not fully realised.

We should also note that in a rapidly digitalising world, digital services will be used and digital information accessed everywhere in society. ICT skills can be learned by using social media, playing mobile games, seeking information, and running daily errands on the Internet. Therefore, in addition to informal learning, formal education should help ensure the ability to survive in a digital society and let every citizen benefit from digital engagement in the future. A likely challenge is that computer science or information technology is not currently an independent subject in comprehensive or upper secondary level education in Finland. Consequently, there are no teachers in the schools whose main responsibility is the teaching of ICT skills. Instead, it becomes the responsibility of every teacher. That is why strengthening all teachers’ ICT skills and narrowing the existing skill gaps between teachers is essential when seeking equal digital learning opportunities for every student at all educational levels.

References

van Deursen, A.J.A.M., Courtois, C., van Dijk, J.A.G.M.: Internet skills, sources of support, and benefiting from internet use. Int. J. Hum.-Comput. Interact. 30(4), 278–290 (2014)

Livingstone, S., Helsper, E.J.: Gradations in digital inclusion: children, young people, and the digital divide. New Media Soc. 9(4), 671–696 (2007)

Kivinen, O., Piiroinen, T., Saikkonen, L.: Two viewpoints on the challenges of ICT in education: knowledge-building theory vs. a pragmatist conception of learning in social action. Oxf. Rev. Educ. (2016). doi:10.1080/03054985.2016.1194263

Hargittai, E., Walejko, G.: The participation divide: content creation and sharing in the digital age. Inf. Commun. Soc. 11(2), 239–256 (2008)

Ainley, J., Fraillon, J., Schulz, W., Gebhardt, E.: Conceptualizing and measuring computer and information literacy in cross-national contexts. Appl. Meas. Educ. (2016). doi:10.1080/08957347.2016.1209205

Ferrari, A.: DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe. Publications Office of the European Union, Luxembourg (2013). http://jrc.es/EURdoc/JRC83167.pdf

Binkley, M., Erstad, E., Herman, J., Raizen, S., Ripley, M., Miller-Ricci, M., Rumble, M.: Defining 21st century skills. In: Griffin, P., McGaw, B., Care, E. (eds.) Assessment and Teaching of 21st Century Skills, pp. 17–66. Springer, Dordrecht (2012). doi:10.1007/978-94-007-2324-5

Fraillon, J., Schulz, W., Ainley, J.: International Computer and Information Literacy Study: Assessment Framework. IEA, Amsterdam (2013)

van Deursen, A.J.A.M., Helsper, E.J.: The third level digital divide: who benefits most from being online? Commun. Inf. Technol. Annu. 10, 29–52 (2015). doi:10.1108/S2050-206020150000010002

van Dijk, J.A.G.M.: The Deepening Divide: Inequality in the Information Society. Sage, London (2005)

Siddiq, F., Hatlevik, O., Olsen, E., Throndsen, R.V., Scherer, R.: Taking a future perspective by learning from the past—a systematic review of assessment instruments that aim to measure primary and secondary school students’ ICT literacy. Educ. Res. Rev. 19, 58–84 (2016)

Merrit, K., Smith, D., Renzo, J.C.D.: An investigation of self-reported computer literacy: is it reliable. Issues Inf. Syst. 6(1), 289–295 (2005)

Umar, I., Yusoff, T.: A study of Malaysian teacher’s level of ICT skills and practices, and its impact on teaching and learning. Soc. Behav. Sci. 116, 979–984 (2014)

van Deursen, A.J.A.M., Helsper, E.J., Eynon, R.: Development and validation of the Internet Skills Scale (ISS). Inf. Commun. Soc. 19(6), 804–823 (2016). doi:10.1080/1369118X.2015.1078834

FNBE: National Core Curriculum 2004. Finnish National Board of Education, Helsinki (2004). http://www.oph.fi/english/curricula_and_qualifications/basic_education/curricula_2004

van Dijk, J.A.G.M., Hacker, K.: The digital divide as a complex and dynamic phenomenon. In: Annual Conference of the International Communication Association, Acapulco, 1–5 June 2000

Hargittai, E., Hsieh, Y.P.: Digital inequality. In: Dutton, W.H. (ed.) Oxford Handbook of Internet Studies, pp. 129–150. Oxford University Press, Oxford (2013)

van Deursen, A.J.A.M., van Dijk, J.A.G.M.: Internet skills and the digital divide. New Media Soc. 13(6), 893–911 (2011)

Hatlevik, O.E., Guðmundsdóttir, G.B., Loi, M.: Digital diversity among upper secondary students. Comput. Educ. 81, 345–353 (2015). doi:10.1016/j.compedu.2014.10.019

Goode, J.: The digital identity divide: how technology knowledge impacts college students. New Media Soc. 12(3), 497–513 (2010)

Helsper, E., Eynon, R.: Digital natives: where is the evidence? Br. Educ. Res. J. 36(3), 503–520 (2010)

Claro, M., Preiss, D., San Martin, E., Jara, I., Hinostroza, J.E., Valenzuela, S., Cortes, F., Nussbaum, M.: Assessment of 21st century ICT skills in Chile: test design and results from high school level students. Comput. Educ. 59, 1042–1053 (2012)

Gui, M., Argentin, G.: Digital skills of internet natives: different forms of internet literacy in a random sample of northern Italian high school students. New Media Soc. 13(6), 963–980 (2011)

Thompson, P.: The digital natives as learners: technology use patterns and approaches to learning. Comput. Educ. 65(1), 12–33 (2013)

Litt, E.: Measuring users’ internet skills: a review of past assessments and a look toward the future. New Media Soc. 15(4), 612–630 (2013)

Livingstone, S., Helsper, E.: Balancing opportunities and risks in teenagers’ use of the internet: the role of online skills and internet self-efficacy. New Media Soc. 12(2), 309–329 (2010). doi:10.1177/1461444809342697

Hargittai, E.: Beyond logs and surveys: in-depth measures of people’s web use skills. J. Am. Soc. Inf. Sci. Technol. 53(14), 1239–1244 (2002)

van Deursen, A.J.A.M., van Dijk, J.A.G.M.: Improving digital skills for the use of online public information and services. Gov. Inf. Q. 26(2), 333–340 (2009)

Eshet-Alkalai, Y., Amichai-Hamburger, Y.: Experiments in digital literacy. CyberPsychol. Behav. 7(4), 421–429 (2004)

Hakkarainen, K., Ilomäki, L., Lipponen, L., Muukkonen, H., Rahikainen, M., Tuominen, T., Lakkala, M., Lehtinen, E.: Students’ skills and practices of using ICT: results of a national assessment in Finland. Comput. Educ. 34(2), 103–117 (2000). doi:10.1016/S0360-1315(00)00007-5

Viteli, J.: Teachers and use of ICT in education: pilot study and testing of the Opeka system. In: Herrington, J., et al. (eds.) Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2013, pp. 2326–2346. AACE, Chesapeake (2013)

FNBE: Finnish Education in a Nutshell. Education in Finland. FNBE, Helsinki (2012). http://www.oph.fi/download/146428_Finnish_Education_in_a_Nutshell.pdf

Christmann, A., Van Aelst, S.: Robust estimation of Cronbach’s alpha. J. Multivar. Analysis 97, 1660–1674 (2006)

Calvani, A., Fini, A., Ranieri, M., Picci, P.: Are young generations in secondary school digitally competent? A study on Italian teenagers. Comput. Educ. 58, 797–807 (2012)

OECD: PISA 2009 Results: Students On Line. Digital Technologies and Performance, vol. VI. OECD, Paris (2011)

Tømte, C., Hatlevik, O.E.: Gender-differences in Self-efficacy ICT related to various ICT-user profiles in Finland and Norway. How do self-efficacy, gender and ICT-user profiles relate to findings from PISA 2006. Comput. Educ. 57(1), 1416–1424 (2011)

Ilomäki, L.: Does gender have a role in ICT among Finnish teachers and students? Scand. J. Educ. Res. 55(3), 325–340 (2011)

Hargittai, E.: Digital na(t)ives? Variation in internet skills and uses among members of the ‘net generation’. Sociol. Inq. 80(1), 92–113 (2010)

OECD: The 2012 Programme for International Student Assessment (PISA), Computers, Education and Skills. OECD Publishing, Paris (2012). doi:10.1787/9789264239555-en

Acknowledgements

The authors would like to thank all the students and teachers who participated in the ICT assessments. The work was supported by the University of Turku.

Appendix 1

| Field | No. of items | Type of item | Description of items |
|---|---|---|---|
| Basic operational skills | 2 | Multiple choice | There were two tasks. In the first, participants had to choose the right answers (2) from seven alternatives to the question: ‘How do you type special characters, which are not included in the QWERTY keyboard (i.e. Θ, ¾, ¥, ∉)’. In the second, participants had to choose the correct answers from seven alternatives to the question: ‘Which two statements about the clipboard are correct?’ |
| Information seeking | 5 | Multiple choice and open-ended questions | Participants had to choose between four alternatives for the best information sources for the given situations (three tasks altogether), form a search query for a ‘web search engine’ (Task 1), and evaluate and choose relevant and reliable outcomes for a given problem from the ‘search engine results page’ (Task 2) |
| Word processing | 2 | Multiple choice (matching) | In the first task, participants had to choose between five alternatives for what modifications (paragraph and page formatting, header and footer) were presented for the text documents. Similarly, in the second task, they had to choose between five alternatives for the desired modifications to implement (indexing, page numbering, and page break) |
| Spreadsheets | 3 | Multiple choice (matching) | Participants had to choose between four alternatives for the right formula for the spreadsheet cell and, similarly, between four alternatives for the right function to solve the presented task. In addition, they needed to choose between five alternatives for the appropriate formatting actions to implement (formatting and ordering of cell content) |
| Presentations | 2 | Multiple choice (matching) | Participants had to choose between four alternatives for the best implemented actions to take on the presented slide shows (inserting background and bullets/numbering, formatting charts and graphics) (two tasks in total) |
| Image processing | 2 | Multiple choice (matching) | Participants had to choose between five alternatives for the best implemented formatting actions for the presented images (formatting brightness and colours, cropping the picture, and/or removing elements from the image) |
| Social networking | 4 | Multiple choice (case examples) | Participants had to choose between four alternatives for the most appropriate and secure option for the social networking case (four tasks) |
| Web content creation | 2 | Multiple choice (matching) | In the first task, participants had to choose from five HTML outputs the correct match for the given HTML code (a simple example containing text, link, input field, and font colours). In the second task, participants had to choose between seven alternatives for the correct answer to the question: ‘Which two statements about the (Finnish) exercise of freedom of expression in the mass media are correct?’ |
| Software installation and initialisation | 2 | Multiple choice | Participants had to choose between seven alternatives for the right answers to the question: ‘Which two statements about (options for installation, and installation of media in) software installation are correct?’ and between four alternatives for the question: ‘Which statement about (operations needed during the) software installation is correct?’ |
| Operating system installation and initialisation | 2 | Multiple choice | Participants had to choose between four alternatives for the right answers to the questions: ‘Which statement about operating system installation and initialisation is correct?’ (two tasks) |
| Maintenance and updating | 2 | Multiple choice | Participants had to choose between seven and four alternatives for the right answers to the question: ‘Which statement(s) about maintenance and updating are/is correct?’ (two tasks) |
| Information security | 2 | Multiple choice | In the first task, participants had to choose between four alternatives for the correct action/conclusion in the case where a web service stores user passwords in a clear text format. In the second task, they had to choose between seven alternatives for which two options were not proper information security methods |
| Programming | 4 | Multiple choice | In the programming tasks, participants needed to choose between four alternatives to answer what the given code examples (2 pseudocode examples) do, and what the values of the variables were after running them (2 questions) |
| Database operations | 2 | Multiple choice (matching) | Participants had to choose between four alternatives for the correct SQL query for a given situation. In addition, they had to choose between four alternatives for the correct description of the presented database schema |
| Information networks | 2 | Multiple choice (matching) | In the first task, participants had to choose between four alternatives for the right answer to the question: ‘Which statement about denial-of-service attack is correct?’ In the second task, participants had to recognise information network techniques and then choose the correct match from four alternatives for the presented network graph |
| Server environments | 2 | Multiple choice | Participants had to choose between five and four alternatives for the correct statements regarding logical volume management (Task 1) and hot-swapping (Task 2) |
| Digital technology | 2 | Multiple choice (matching) | In the first task, participants had to choose between six alternatives for the best match for the presented graph on logic gates. In the second task, they had to choose between five alternatives for the correct answer to the question: ‘On which branch of mathematics is digital technology based?’ |

Cite this article

Kaarakainen, MT., Kivinen, O. & Vainio, T. Performance-based testing for ICT skills assessing: a case study of students and teachers’ ICT skills in Finnish schools. Univ Access Inf Soc 17, 349–360 (2018). https://doi.org/10.1007/s10209-017-0553-9

DOI: https://doi.org/10.1007/s10209-017-0553-9