Abstract

Unilateral hearing loss (UHL) leads to an imbalanced input to the brain and results in cortical reorganization. In listeners with unilateral impairments, while the perceptual deficits associated with the impaired ear are well documented, less is known regarding the auditory processing in the unimpaired, clinically normal ear. It is commonly accepted that perceptual consequences are unlikely to occur in the normal ear for listeners with UHL. This study investigated whether the temporal resolution in the normal-hearing (NH) ear of listeners with long-standing UHL is similar to that in listeners with NH. Temporal resolution was assayed by measuring gap detection thresholds (GDTs) in within- and between-channel paradigms. GDTs were assessed in the normal ear of adults with long-standing, severe-to-profound UHL (N = 13) and age-matched NH listeners (N = 22) at two presentation levels (30 and 55 dB sensation level). Analysis indicated that within-channel GDTs for listeners with UHL were not significantly different from those for the NH subject group, but the between-channel GDTs for listeners with UHL were poorer (by greater than a factor of 2) than those for the listeners with NH. The hearing thresholds in the normal or impaired ears were not associated with the elevated between-channel GDTs for listeners with UHL. Contrary to the common assumption that auditory processing capabilities are preserved for the normal ear in listeners with UHL, the current study demonstrated that a long-standing unilateral hearing impairment may adversely affect auditory perception, specifically temporal resolution, in the clinically normal ear. From a translational perspective, these findings imply that the temporal processing deficits in the unimpaired ear of listeners with unilateral hearing impairments may contribute to their overall auditory perceptual difficulties.

INTRODUCTION

Hearing with two ears is better than one (e.g., Dirks and Wilson 1969; Bronkhorst and Plomp 1989; Middlebrooks and Green 1991). Unilateral hearing loss (UHL) leads to an imbalanced input to the brain and results in cortical reorganization. Several animal studies have demonstrated that UHL alters neuronal activation and binaural interactions in the central auditory pathways (Kitzes 1984; Reale et al. 1987). Human experiments also provide evidence of modified activation of the central auditory pathways following UHL (Scheffler et al. 1998; Bilecen et al. 2000; Ponton et al. 2001; Langers et al. 2005). However, the perceptual consequences of such alterations remain unknown. While the perceptual difficulties associated with the impaired ear in listeners with UHL are well documented, less is known regarding auditory processing abilities of the unimpaired ear in these listeners. It is commonly accepted that in individuals with UHL, perceptual consequences are unlikely to occur in the normal ear and that auditory processing in the normal ear in listeners with UHL is similar to the corresponding ear of individuals with normal hearing (NH). However, limited data exist to corroborate this assumption. The present study addresses this gap in knowledge.

Of interest here is the temporal resolution of the normal ear in listeners with UHL. One of the psychoacoustic approaches widely used to assess temporal resolution is gap detection. The gap detection threshold (GDT) represents sensitivity to the presence of a silent temporal gap flanked by two markers. The GDT can be measured in a within-channel task when the two markers flanking the gap are spectrally similar or in a between-channel task when the two markers are spectrally dissimilar (Phillips et al. 1997; Phillips and Smith 2004). To date, only three studies have measured within-channel GDTs in listeners with UHL (Glasberg et al. 1987; Moore and Glasberg 1988; Sininger and de Bode 2008). Two of these reports (Glasberg et al. 1987; Moore and Glasberg 1988) indirectly compared GDTs measured from the normal ears of listeners with primarily acquired UHL (age range 18 to 72 years for Glasberg et al. (1987); age range 45 to 72 years for Moore and Glasberg (1988)) with normative data from the same laboratory (Shailer and Moore 1983). They suggested that GDTs for the normal ears in UHL listeners are slightly smaller than those previously reported. They noted, however, that these differences could be due to the higher overall level or to the slightly higher marker-to-background ratio used in studies that included unilaterally deaf subjects (Glasberg et al. 1987; Moore and Glasberg 1988). In contrast, Sininger and de Bode (2008) reported no significant difference between the normal ears of listeners with UHL and NH on GDTs, measured using broadband noise (BBN) and tonal gap markers. Their data appear to be associated with a floor effect, and their findings in NH listeners could not be replicated by another study using a nearly identical procedure (Grose 2008). Importantly, within-channel gap detection, used in the three studies on unilaterally deaf listeners (Glasberg et al. 1987; Moore and Glasberg 1988; Sininger and de Bode 2008), combines the analysis of temporal aspects of the acoustic stimulus with the analysis of dynamic amplitude changes (Shailer and Moore 1985; Moore and Glasberg 1988). Consequently, performance on a within-channel gap detection task may reflect limits in intensity processing rather than efficiency of temporal processing per se.

The objective of the present study was to determine whether the temporal resolution of the NH ear of listeners with long-standing UHL is similar to that of one ear of listeners with NH. Temporal resolution was assayed by measuring GDTs in within- and between-channel conditions. These two gap detection tasks are influenced by fundamentally different perceptual mechanisms (Phillips et al. 1997; Phillips and Smith 2004). Between-channel gap detection requires a more “central” operation or occurs in the central auditory system (Eggermont 2000), wherein the relative timing of activity in different perceptual channels is compared. Further, compared to within-channel gap detection, between-channel measurements are more relevant to categorical speech perception (Elangovan and Stuart 2008). Our working hypothesis was that the temporal resolution of the normal ears of UHL and NH listeners would be similar. We presumed this because the hearing thresholds are normal and because of a lack of any obvious clinical neurological deficits in the UHL listeners.

METHODS

Subjects

A total of 35 right-handed and healthy subjects participated in this study. The NH and UHL groups were age matched (two sided, t(33) = 1.25, P = 0.21). Twenty-two NH subjects (11 males and 11 females) were included. Twenty-one NH subjects were between the ages of 16 and 33 years (mean = 22 years), and one subject was 53 years old. The 53-year-old was included to better match the ages of listeners within the experimental group. All listeners had hearing thresholds of 20 dB hearing level (HL) or better at octave frequencies from 250 through 8000 Hz. None of the subjects had any formal training in music. For the NH group, data were collected from 11 right and 11 left ears in random order.

Thirteen subjects with UHL (nine males and four females), 16 to 36 years of age with one middle-aged (55 years) subject (mean = 26.08 years excluding the 55-year-old), with no confirmed etiology were enrolled. The pure-tone average for thresholds at 500, 1000, and 2000 Hz for the normal ear was similar to the NH group (two sided, t(33) = 1.63, P = 0.11). Relevant demographic and basic auditory characteristics are presented in Table 1. The onset of hearing loss was congenital in all but two cases, whose hearing loss was acquired during early childhood. The two cases also reported tinnitus in their impaired ears. One of these subjects (#13; Table 1) had mixed hearing loss. Individuals with UHL did not use a hearing aid, nor were they using any formal sign language.

GDT Stimuli and Measurement Procedures

All procedures were conducted inside a double-walled sound booth. The measurement of GDTs was performed using a customized version of Adaptive Tests of Temporal Resolution written in Visual Basic™ v. 6.0 (Lister et al. 2011a, b). This software used stored sound files with a 44,100-Hz sampling frequency and 16-bit resolution, and was run using a standard laptop with a Conexant CXT5047 sound card (Conexant, Irvine, CA, USA). Stimuli were delivered using ER-3A insert earphones (Etymotic Research, Elk Grove Village, IL, USA) via a calibrated audiometer.

The GDTs were measured in both within- and between-channel tasks at two presentation levels: 30 and 55 dB sensation level (SL). The order of testing was randomized and counter-balanced across subjects. For the within-channel condition, the stimuli comprised two narrowband noise (NBN) markers with quarter-octave bandwidths geometrically centered on 2000 Hz. The markers were shaped with a cosine-squared ramp to give 1-ms rise–fall times on the offset of the leading marker and the onset of the trailing marker. The onset of the leading marker and the offset of the trailing marker were also shaped with a cosine-squared ramp to create 10-ms rise–fall times. For the between-channel condition, the center frequencies of the NBN markers flanking the gap were 2000 Hz for the leading marker and 1000 Hz for the trailing marker. The leading marker was 300 ms in duration, while the trailing marker duration randomly varied between 250 and 350 ms. The temporal gap in both conditions was defined as the time interval between the offset of the leading marker and the onset of the trailing marker, and represented a zero-voltage period.
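The marker parameters described above can be made concrete with a short sketch. It computes the edges of a quarter-octave band geometrically centered on a given frequency and samples a rising cosine-squared ramp at the 44,100-Hz rate of the stored sound files. The function names and the exact sampling of the ramp are illustrative assumptions, not the implementation used in the test software.

```python
import math

def quarter_octave_band(center_hz, octaves=0.25):
    """Return (low, high) band edges geometrically centered on center_hz."""
    half = 2 ** (octaves / 2)          # half the bandwidth, as a frequency ratio
    return center_hz / half, center_hz * half

def cos2_ramp(duration_ms, fs=44100):
    """Rising cosine-squared (raised-cosine) ramp; reverse it for a fall.

    Assumption: samples are taken at the midpoints of each sample period.
    """
    n = max(1, round(duration_ms * fs / 1000))
    return [math.sin(0.5 * math.pi * (i + 0.5) / n) ** 2 for i in range(n)]

# Leading marker of both conditions is centered on 2000 Hz.
low, high = quarter_octave_band(2000)

# 1-ms ramps bound the gap; 10-ms ramps shape the outer edges of the markers.
ramp_1ms = cos2_ramp(1)
ramp_10ms = cos2_ramp(10)
```

The geometric centering means that the center frequency is the geometric mean of the two band edges, so the band spans an eighth of an octave on either side of 2000 Hz.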

GDTs were measured using an adaptive two-interval, two-alternative, forced-choice method, targeting 70.7 % correct responses (Levitt 1971). In the standard interval, the leading and trailing markers separated by a fixed 1-ms gap were presented. This 1-ms gap ensured similar gating transients in both standard and target intervals (Phillips et al. 1997). In the target interval, two markers separated by a gap of adaptively varying duration were presented. The standard and target intervals were presented in random order. The listeners were instructed to select the target interval. The initial gap duration was set at 20 ms for within-channel and 100 ms for between-channel tasks. Two consecutive correct responses led to a decrease in the gap size by a factor of 1.2, and an incorrect response led to an increase. The threshold was defined as the geometric mean of the final six reversals of the total eight reversals.
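The adaptive rule described above can be sketched as a two-down, one-up staircase. This is a minimal illustration under stated assumptions: the step factor for increases is taken to equal the 1.2 used for decreases, a reversal is recorded at the gap duration on which the track changed direction, and `responds_correctly` is a hypothetical callback standing in for a listener's response on one trial. The `max_trials` guard is also an addition for safety, not part of the described procedure.

```python
import math

def run_staircase(responds_correctly, start_ms, factor=1.2,
                  n_reversals=8, n_keep=6, max_trials=500):
    """Two-down, one-up track; returns the geometric mean of the last n_keep reversals."""
    gap, streak, last_dir, reversals = start_ms, 0, 0, []
    for _ in range(max_trials):
        if responds_correctly(gap):
            streak += 1
            if streak < 2:
                continue               # first correct response: repeat the same gap
            direction = -1             # two consecutive correct: make the gap shorter
        else:
            direction = +1             # any incorrect response: make the gap longer
        streak = 0
        if last_dir and direction != last_dir:
            reversals.append(gap)      # the track changed direction at this gap
            if len(reversals) == n_reversals:
                kept = reversals[-n_keep:]
                return math.exp(sum(math.log(g) for g in kept) / len(kept))
        last_dir = direction
        gap = gap / factor if direction < 0 else gap * factor
    raise RuntimeError("staircase did not reach the required number of reversals")

# Idealized listener (assumption): always correct when the gap is at least 5 ms.
threshold_ms = run_staircase(lambda gap: gap >= 5.0, start_ms=20.0)
```

With this idealized listener the track oscillates between the two step values that bracket 5 ms, and the returned threshold is their geometric mean. A real listener responds probabilistically, which is why the rule converges on the 70.7 % point rather than a sharp boundary.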

All listeners received one complete run for practice and familiarization prior to the actual test runs. On average, each listener received 160–200 trials (40–50 trials/condition) prior to test runs. While obtaining a stable measurement is important, excessive practice (~500 trials) may induce early and rapid auditory learning (Hawkey et al. 2004). Two test runs were conducted in the same experimental session, separated by a brief interval (~20 min) that involved earphone removal and re-insertion in order to assess the stability of the GDT estimates. Data were accepted if the first GDT estimate was within a factor of 2 of the second GDT estimate. If this data acceptance criterion was not met, the subject was re-instructed and the practice runs were repeated. The order of within- and between-channel measurements and presentation levels was randomized and counter-balanced across listeners.
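The data acceptance criterion amounts to a ratio check between the two test runs. The sketch below is one reading of it, assuming that "within a factor of 2" is symmetric and includes the boundary; the function name is hypothetical.

```python
def runs_agree(gdt_first_ms, gdt_second_ms, max_ratio=2.0):
    """True if the two GDT estimates differ by no more than a factor of max_ratio."""
    larger = max(gdt_first_ms, gdt_second_ms)
    smaller = min(gdt_first_ms, gdt_second_ms)
    return larger / smaller <= max_ratio

# Hypothetical estimates in ms, for illustration only.
accepted = runs_agree(4.0, 7.9)
rejected = runs_agree(10.0, 4.0)
```

Expressing the criterion as a ratio rather than a difference keeps it equally strict for the short within-channel thresholds and the much longer between-channel ones.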

RESULTS

GDT estimates for each of the two test runs at each level in within-and between-channel conditions in both subject groups showed a normal distribution (Shapiro–Wilk test; P > 0.05). All statistical procedures were therefore conducted on the measured GDT estimates rather than using logarithmic transformations. GDTs in two middle-aged subjects (one NH and the other UHL) were not the highest among the participants. The intra-subject repeatability was assessed using the Bland and Altman method (Bland and Altman 1999). This approach has been argued to be an efficient way to assess repeatability (McMillan 2014).

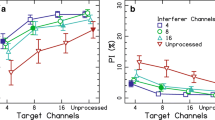

Figure 1 plots the test–retest difference in the within-channel condition for every subject against subject-specific test–retest averages for both subject groups, at two presentation levels. It shows the distribution of differences between the two sets of measurements. The average discrepancy between test sessions ranged from −0.44 to 0.48 ms across presentation levels and subject groups (see Fig. 1 for specific values). Overall, this Bland and Altman plot shows acceptable intra-subject repeatability for within-channel GDTs. The two GDT estimates were therefore averaged to obtain a single stable threshold estimate for answering experimental questions. The within-channel GDTs at two presentation levels for NH and UHL subjects are plotted in Figure 2, which also shows the between-channel GDTs. The within-channel GDTs were similar between the normal ears of UHL listeners (mean = 4.94 ms, SE = 0.53 for 30 dB SL; mean = 5.69 ms, SE = 0.80 for 55 dB SL) and NH listeners (mean = 5.01 ms, SE = 0.27 for 30 dB SL; mean = 4.68 ms, SE = 0.50 for 55 dB SL). One-way repeated measures analysis of variance of within-channel GDTs, with hearing status as a between-group factor, showed no main effect of level (F(1, 33) = 0.45, P = 0.51, ηp² = 0.13), group (F(1, 33) = 0.35, P = 0.56, ηp² = 0.01), or interaction between level and group (F(1, 33) = 2.72, P = 0.12, ηp² = 0.07).

Fig. 1. Bland–Altman plots for within-channel GDTs: A 30 dB SL and B 55 dB SL. The difference in two within-channel GDT estimates is plotted as a function of the mean GDTs of two test runs for each NH (circle) and UHL listener (multiplication sign). The mean test–retest difference (bias; solid line), 95 % limits of agreement (±1.96 SDs of the test–retest differences; dashed lines), and 95 % CIs of the bias (dotted lines) are shown for the UHL subjects. For brevity, only the bias (gray) line for NH listeners is shown. The line of equality (0 in the y-axis; a line not drawn) lying within 95 % CIs of bias suggests no significant systematic error between the two test runs; the limits of agreement represent the range of values in which agreement between two test runs may lie for ~95 % of the sample. GDTs for normal ears of listeners with UHL and with NH are shown.
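The quantities drawn in a Bland–Altman plot (bias, limits of agreement, and the confidence interval of the bias) can be computed as in the sketch below. The data are made-up values for illustration, not the study's measurements. The critical t value is passed in by the caller because the Python standard library has no t-distribution quantile function; 3.182 is the two-sided 95 % value for 3 degrees of freedom.

```python
import math
import statistics

def bland_altman(run1, run2, t_crit):
    """Summarize test-retest agreement between two paired sets of estimates."""
    diffs = [a - b for a, b in zip(run1, run2)]
    n = len(diffs)
    bias = statistics.mean(diffs)              # mean test-retest difference
    sd = statistics.stdev(diffs)               # sample SD of the differences
    half_ci = t_crit * sd / math.sqrt(n)       # half-width of the 95 % CI of the bias
    return {
        "means": [(a + b) / 2 for a, b in zip(run1, run2)],   # x-axis values
        "diffs": diffs,                                       # y-axis values
        "bias": bias,
        "limits_of_agreement": (bias - 1.96 * sd, bias + 1.96 * sd),
        "bias_ci95": (bias - half_ci, bias + half_ci),
    }

# Hypothetical GDTs in ms from two runs of four listeners.
run1 = [5.0, 4.2, 6.1, 3.9]
run2 = [4.6, 4.8, 5.5, 4.1]
summary = bland_altman(run1, run2, t_crit=3.182)
ci_low, ci_high = summary["bias_ci95"]
no_systematic_error = ci_low <= 0.0 <= ci_high   # zero inside the CI of the bias
```

The final check mirrors the interpretation given in the caption: if zero lies within the confidence interval of the bias, there is no evidence of a systematic shift between the two runs.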

Figure 3 displays the Bland and Altman plots for between-channel GDTs measured at 30 and 55 dB SL for NH and UHL listeners. The mean test–retest difference ranged from −1.91 to 1.98 ms across presentation levels and subject groups (see Fig. 3 for specific values). This suggests acceptable intra-subject repeatability for between-channel GDT estimates. To obtain a single stable measure, the between-channel GDTs from two test runs were averaged. Figure 2 depicts the mean between-channel GDTs at two levels for the two groups of listeners. It is apparent that between-channel GDTs in the normal ear of the UHL subjects (mean = 60.75 ms, SE = 7.59 for 30 dB SL; mean = 56.97 ms, SE = 7.14 for 55 dB SL) were larger (by greater than a factor of 2) compared to NH subjects (mean = 27.51 ms, SE = 2.36 for 30 dB SL; mean = 24.48 ms, SE = 1.57 for 55 dB SL). The assumption of homogeneity of variance was not met for between-channel GDT data at either level, as revealed by the Levene test (F(1, 33) = 12.34, P < 0.01 for 30 dB SL; F(1, 33) = 19.23, P < 0.01 for 55 dB SL). The Welch adjusted F ratio showed that the two subject groups differed significantly on their mean between-channel GDTs (Welch’s F(1, 14.35) = 17.89, P < 0.01, ω² = 0.33 for 30 dB SL; Welch’s F(1, 13.17) = 19.73, P < 0.01, ω² = 0.35 for 55 dB SL). Effect sizes greater than 0.138 can be interpreted as large (Kirk 1996).
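For two groups, the Welch adjusted F ratio equals the square of Welch's t statistic, and its denominator degrees of freedom follow the Welch–Satterthwaite approximation. The sketch below recomputes both from the group means, standard errors, and sample sizes reported above for 30 dB SL. Because those summary values are rounded, the result should be close to, but not necessarily identical with, the reported statistics. The function name is hypothetical.

```python
import math

def welch_from_summary(mean1, se1, n1, mean2, se2, n2):
    """Return (F, df2) for a two-group Welch test computed from summary statistics."""
    var_sum = se1 ** 2 + se2 ** 2                      # variance of the mean difference
    t = (mean1 - mean2) / math.sqrt(var_sum)           # Welch's t
    df2 = var_sum ** 2 / (se1 ** 4 / (n1 - 1) + se2 ** 4 / (n2 - 1))
    return t ** 2, df2                                 # F = t^2 when there are two groups

# Between-channel GDTs at 30 dB SL: UHL (N = 13) versus NH (N = 22).
f_ratio, df2 = welch_from_summary(60.75, 7.59, 13, 27.51, 2.36, 22)
```

The degrees of freedom fall well below 33 because the UHL group has both the smaller sample and the much larger variance, which is the situation the Welch adjustment is designed for.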

Fig. 3. Bland–Altman plots for between-channel GDTs: A 30 dB SL and B 55 dB SL. The test–retest difference for between-channel GDTs is plotted against the average of test–retest GDTs for each subject with NH (circle) and UHL (multiplication sign). The mean test–retest difference (bias; solid line), 95 % limits of agreement (±1.96 SDs of the test–retest differences; dashed lines), and 95 % CIs of the bias (dotted lines) are shown for the UHL subjects. For graphical simplicity, only the bias line for subjects with NH is shown. The line of equality (0 in the y-axis; a line not drawn) lying within 95 % CIs of bias suggests no significant systematic error between the two test runs; the limits of agreement represent the range of values in which agreement between two test runs may lie for ~95 % of the sample. GDTs for the normal ear of listeners with UHL and with NH are shown.

The hearing thresholds, averaged across 1000 and 2000 Hz, for the normal ear of listeners with UHL (mean = 16.54 dB HL, SE = 1.25) were slightly higher than those for the test ear of the NH listeners (mean = 12.61 dB HL, SE = 0.92). The thresholds were averaged across these two frequencies because the NBNs for the leading and trailing markers were centered on them. Pearson’s product moment correlation coefficients were therefore computed to determine the association between the hearing thresholds and between-channel GDTs. Correlations, performed on data pooled across groups, were not significant (r = 0.07, two-tailed P = 0.67, n = 35 for 30 dB SL; r = 0.05, two-tailed P = 0.75, n = 35 for 55 dB SL). Correlations were also not significant for the UHL group (r = −0.04, two-tailed P = 0.17, n = 13 for 30 dB SL; r = −0.08, two-tailed P = 0.81, n = 13 for 55 dB SL). Likewise, Pearson’s product moment correlation showed no association between the pure-tone average for 1000 and 2000 Hz in the impaired ear and between-channel GDTs in the normal ear (r = 0.15, two-tailed P = 0.63, n = 13 for 30 dB SL; r = 0.11, two-tailed P = 0.73, n = 13 for 55 dB SL).

DISCUSSION

The primary objective of this study was to determine whether the temporal resolution of the NH ear in individuals with UHL is similar to that of NH individuals. Temporal resolution was assessed using within- and between-channel GDTs. The principal findings are (1) the GDTs for both NH and UHL listeners showed acceptable intra-subject repeatability; (2) the within-channel GDTs for listeners with UHL were not significantly different than those for the NH listeners; (3) the between-channel GDTs for the normal ear of listeners with UHL were poorer (greater than a factor of 2) than those for listeners with NH; and (4) the hearing thresholds in the impaired or normal ears of UHL listeners cannot account for the elevated between-channel GDTs for these listeners. These results are important because they show significant perceptual consequences in the clinically normal ear for listeners with long-standing UHL. Specifically, this study demonstrated that the temporal resolution, as measured by between-channel GDTs, of the normal ear of listeners with UHL is reduced. This may suggest that listeners with long-term and early-onset UHL perceptually do not show the same pattern of response as the corresponding ear of listeners with NH.

Within-Channel Gap Detection

GDTs for listeners with NH are in general agreement with other studies. Despite presentation level differences, the within-channel GDTs measured in NH listeners were similar to those reported in previous studies (~4.5 ms; Lister et al. 2011a; Mishra and Panda 2014; Hess et al. 2012). The finding that within-channel GDTs are similar across normal ears of listeners with UHL and NH is consistent with the results of Sininger and de Bode (2008), even though they used tonal and broadband noise markers and their data appeared to have floor effects (Grose 2008). Associations between hearing thresholds and within-channel GDTs were not sought because no group difference was observed. It is important to consider the nature of the within-channel gap processing mechanism before interpreting this result. The relevant operation in a within-channel gap detection task involves a discontinuity detection, most likely the detection of the onset of the trailing marker, executed on the activity in the channel triggered by the stimulus (Oxenham 1997). Physiologically, within-channel gap information is available peripherally at the auditory nerve (Zhang et al. 1990).

A potential confound in making inferences from a within-channel GDT is that it may reflect both temporal and intensity resolution (Shailer and Moore 1985; Moore and Glasberg 1988). Regardless of what within-channel GDTs may reflect, “low-level” processes primarily determine the performance in this task. Studies in patients with brain damage lend support to this notion by showing that the performance of these patients was comparable with that of age-matched or normal controls (Divenyi and Robinson 1989; Sidiropoulos et al. 2010). Not all of the evidence is consistent, however. Poorer than normal within-channel gap detection performance has been reported in ferrets (Kelly et al. 1996) and humans (Musiek et al. 2005; Stefanatos et al. 2007) with cortical damage. However, some of these studies used BBN markers to test gap detection (Divenyi and Robinson 1989; Kelly et al. 1996; Musiek et al. 2005). Since BBN stimulates a wide range of frequency channels, the detection of gaps is determined by the presence of temporal discontinuities across multiple channels instead of in a specific channel, as in the case of an NBN marker. Studies that used NBN markers indicate that certain aspects of the within-channel detection process are independent of cortical damage. For example, Stefanatos et al. (2007) reported that individuals with aphasia show normal within-channel gap detection performance at short gap durations (10 and 20 ms), conditions that are comparable to those in the present study. This suggests a lack of effect of brain damage for these specific conditions.

In light of the mechanisms of the within-channel gap detection, it is possible that UHL does not alter peripheral auditory processing or organization in the normal side. Further confirmation of this inference could be obtained by measuring within-channel GDTs with relatively-short-duration stimuli, as short-duration stimuli could reveal subtle effects that were not obvious with longer duration stimuli. For instance, aging effects appear only when marker durations are short (Moore et al. 1992; Schneider et al. 1994; Schneider and Hamstra 1999).

Between-Channel Gap Detection

The between-channel GDTs in NH listeners reported here are slightly smaller than those reported previously (~40 ms; Lister et al. 2011a; Hess et al. 2012), but they are in close agreement with others (~30 ms; Phillips et al. 1997; Phillips and Smith 2004). The striking finding, to our surprise and contrary to the working hypothesis, is that the between-channel GDTs were elevated in UHL listeners compared to their NH counterparts. This effect was very robust, as it was observed at two stimulus levels with good intra-subject repeatability. Poor temporal resolution despite clinically normal audiograms has been previously reported for other populations, such as elderly listeners (Lister et al. 2002; Heinrich and Schneider 2006; Pichora-Fuller et al. 2006). However, elderly listeners show generalized deficits in both within- and between-channel GDTs, unlike listeners with UHL who presented a differential effect on between-channel GDTs. While we do not have a clear or complete explanation for this finding, several speculations are examined.

1. Hearing thresholds: We labeled the unimpaired ear of UHL listeners as audiometrically or clinically normal (mean pure-tone average based on thresholds at 500, 1000, and 2000 Hz = 17.44 dB HL); however, some may categorize this as a slight/minimal hearing impairment (Clark 1981). Regardless of clinical classification, an important question to address is whether the differences in hearing thresholds between the unimpaired ears of the UHL listeners and the test ear of the NH listeners contributed to the observed difference between their between-channel GDTs. The hearing thresholds, averaged across 1000 and 2000 Hz, in the normal ear of listeners with UHL were 3.93 dB greater than those for the test ear of NH listeners. This minimal difference is unlikely to have influenced the present experimental outcomes because between-group comparisons were made at equal SLs and at a high SL (55 dB). Further, Lister et al. (2000) have shown that hearing status does not significantly influence between-channel GDTs, provided optimal audibility of the signal is ensured. Consistent with this, we did not find a relationship between hearing thresholds and between-channel GDTs. Despite this, one may argue that the group differences in between-channel GDT may be related to age and/or hearing threshold, because the UHL group was, on average, 4 years older than the NH group and had slightly higher hearing thresholds. However, if these factors played a role, then a difference across groups for within-channel GDTs likely would have been evident as well.

2. Stimulus-related variables: Extraneous cues in the target interval, such as spectral splatter associated with short rise/fall times, may be audible, particularly for listeners with better auditory thresholds. The results would then depend on the ability to discriminate subtle changes in the spectral splatter and will not reflect across-channel gap sensitivity. Using a fixed 1-ms gap between the leading and trailing markers in the standard interval ensured similar gating transients between the two intervals (Phillips et al. 1997) and controlled the extraneous spectral splatter cue.

3. Underlying mechanism: The relevant perceptual process in a between-channel gap detection task is the relative timing of the offset of activity in the channel representing the leading marker and the onset of activity in the channel representing the trailing marker (Phillips et al. 1997). The temporal markers are distributed across different frequency channels. The between-channel GDTs reflect higher, or central, auditory processes and are determined by neural responses in the primary auditory cortex (Eggermont 2000). Consistent with this, in a case with left-hemisphere temporo-parietal ischemic infarction and contralateral parieto-occipital cerebrovascular lesion, Sidiropoulos et al. (2010) reported unimpaired temporal resolution in a within-channel task that involved a single perceptual channel, but compromised encoding of the temporal structure in a between-channel task. On the other hand, Stefanatos et al. (2007) found that individuals with aphasia demonstrate poorer accuracy in detecting longer gap durations in both within- and between-channel conditions. Note that they measured the percentage of correct responses at fixed gap durations rather than the lowest detectable gap (the GDT).

4. Cognitive factors: Moore et al. (2010) showed a relationship between performance in auditory processing tests and cognitive abilities in children. There is no doubt that any perceptual test performed by an individual requires cognitive resources. However, it is currently unclear whether different perceptual tasks, for example, within- versus between-channel tasks, require significantly different degrees of cognitive loads. Phillips et al. (1997) conjectured that the between-channel task requires more cognitive resources, particularly auditory attention, compared to the within-channel task. Although it is unknown whether individuals with unilateral versus bilateral hearing impairment have different cognitive profiles, hearing loss has been generally found to be associated with lower scores on tests of memory and executive functions (Arlinger 2003; Lin et al. 2011). Thus, it is possible that listeners with UHL may have reduced attentional or other cognitive resources to fine-tune the between-channel mechanism compared to their NH counterparts. Unfortunately, direct cognitive measures on the current participants are not available. Intra-subject repeatability, a crude index of fluctuating attention, was found to be good across test conditions and listeners. However, this does not negate that listeners with UHL may have had subtle test paradigm-specific cognitive deficits.

5. Effect of the impaired ear: Long-standing UHL could potentially cause alterations in central auditory processes underlying between-channel GDTs in the NH ear. If so, one would predict a greater effect size or worsening of GDTs with increasing severity of hearing loss in the impaired ear. However, correlation analysis results failed to support this notion. The hearing thresholds in the impaired ear were unrelated to the between-channel GDTs measured in the normal ear. Nevertheless, it is generally known that hearing thresholds are not a good indicator of central auditory function.

6. Other factors: Gap detection deficits in the NH ear and tinnitus in the impaired ear in listeners with UHL are consistent with the reduced gap–startle reflex data from animal experiments, where one ear was deafened and the GDT has been reported to be reduced, supposedly due to tinnitus (e.g., Turner et al. 2006; Lobarinas et al. 2013). There are two issues with this explanation. First, the animal gap–startle reflex studies primarily used BBN gap markers in a within-channel paradigm; in contrast, the within-channel GDTs reported here were within normal range. Second, only two subjects reported tinnitus in their impaired ears.

It is possible that the etiology that caused the unilateral hearing impairment also induced subtle and sub-clinical auditory deficits in the normal ear. However, it is unlikely that all subjects have had the same pathology, even though the etiology is unknown.

Translational Significance

Many perceptually relevant tasks resemble the between-channel gap detection paradigm, for example, detecting voice-onset time to discriminate voiced /b/ and voiceless stop /p/ consonants (Phillips 1999; Elangovan and Stuart 2008). Elevated between-channel GDTs in normal ears of listeners with UHL may reflect subtle deficits in the processing of temporal cues of speech in these listeners, which may lead to poor speech-in-noise recognition as reported by others (Bess and Tharpe 1984; Bess et al. 1986; Lieu et al. 2010). While it is natural to identify the impaired ear as the source of perceptual deficits experienced by listeners with UHL, the current findings suggest that the processing deficits, gap detection in this case, in the normal ear may also contribute to the overall auditory difficulties in these listeners.

CONCLUSIONS

Contrary to the commonly accepted assumption that auditory processing is preserved in the NH ear of listeners with UHL, this study demonstrated for the first time that auditory processing—particularly temporal resolution as measured by between-channel gap detection—in the unimpaired (clinically normal-hearing) ear is compromised. Whether this impaired temporal processing was due to central alterations associated with a long-term unilateral hearing impairment or due to other factors requires further investigation. Future studies may also be directed towards investigating other psychoacoustic aspects, for example, frequency discrimination, in the normal ear of listeners with UHL to better understand the perceptual problems experienced by these listeners.

References

Arlinger S (2003) Negative consequences of uncorrected hearing loss—a review. Int J Audiol 42(Suppl 2):2S17–2S20

Bess FH, Tharpe AM (1984) Unilateral hearing impairment in children. Pediatrics 74:206–216

Bess FH, Tharpe AM, Gibler AM (1986) Auditory performance of children with unilateral sensorineural hearing loss. Ear Hear 7:20–26

Bilecen D, Seifritz E, Radü EW et al (2000) Cortical reorganization after acute unilateral hearing loss traced by fMRI. Neurology 54:765–767

Bland JM, Altman DG (1999) Measuring agreement in method comparison studies. Stat Methods Med Res 8:135–160

Bronkhorst AW, Plomp R (1989) Binaural speech intelligibility in noise for hearing-impaired listeners. J Acoust Soc Am 86:1374–1383

Clark JG (1981) Uses and abuses of hearing loss classification. ASHA 23:493–500

Dirks DD, Wilson RH (1969) The effect of spatially separated sound sources on speech intelligibility. J Speech Lang Hear Res 12:5–38

Divenyi PL, Robinson AJ (1989) Nonlinguistic auditory capabilities in aphasia. Brain Lang 37:290–326

Eggermont JJ (2000) Neural responses in primary auditory cortex mimic psychophysical, across-frequency-channel, gap-detection thresholds. J Neurophysiol 84:1453–1463

Elangovan S, Stuart A (2008) Natural boundaries in gap detection are related to categorical perception of stop consonants. Ear Hear 29:761–774

Glasberg BR, Moore BC, Bacon SP (1987) Gap detection and masking in hearing-impaired and normal-hearing subjects. J Acoust Soc Am 81:1546–1556

Grose J (2008) Gap detection and ear of presentation: examination of disparate findings: re: Sininger Y.S., & de Bode, S. (2008). Asymmetry of temporal processing in listeners with normal hearing and unilaterally deaf subjects. Ear Hear 29, 228-238. Ear Hear 29:973–976

Hawkey DJC, Amitay S, Moore DR (2004) Early and rapid perceptual learning. Nat Neurosci 7:1055–1056

Heinrich A, Schneider B (2006) Age-related changes in within- and between-channel gap detection using sinusoidal stimuli. J Acoust Soc Am 119:2316–2326

Hess BA, Blumsack JT, Ross ME, Brock RE (2012) Performance at different stimulus intensities with the within- and across-channel adaptive tests of temporal resolution. Int J Audiol 51:900–905

Kelly JB, Rooney BJ, Phillips DP (1996) Effects of bilateral auditory cortical lesions on gap-detection thresholds in the ferret (Mustela putorius). Behav Neurosci 110:542–550

Kirk RE (1996) Practical significance: a concept whose time has come. Educ Psychol Meas 56:746–759

Kitzes LM (1984) Some physiological consequences of neonatal cochlear destruction in the inferior colliculus of the gerbil, Meriones unguiculatus. Brain Res 306:171–178

Langers DRM, Van Dijk P, Backes WH (2005) Lateralization, connectivity and plasticity in the human central auditory system. Neuroimage 28:490–499

Levitt H (1971) Transformed up-down methods in psychophysics. J Acoust Soc Am 49:467–477

Lieu JEC, Tye-Murray N, Karzon RK, Piccirillo JF (2010) Unilateral hearing loss is associated with worse speech-language scores in children. Pediatrics 125:e1348–e1355

Lin FR, Ferrucci L, Metter EJ et al (2011) Hearing loss and cognition in the Baltimore Longitudinal Study of Aging. Neuropsychology 25:763–770

Lister JJ, Koehnke JD, Besing JM (2000) Binaural gap duration discrimination in listeners with impaired hearing and normal hearing. Ear Hear 21:141–150

Lister J, Besing J, Koehnke J (2002) Effects of age and frequency disparity on gap discrimination. J Acoust Soc Am 111:2793–2800

Lister JJ, Roberts RA, Lister FL (2011a) An adaptive clinical test of temporal resolution: age effects. Int J Audiol 50:367–374

Lister JJ, Roberts RA, Krause JC et al (2011b) An adaptive clinical test of temporal resolution: within-channel and across-channel gap detection. Int J Audiol 50:375–384

Lobarinas E, Hayes SH, Allman BL (2013) The gap-startle paradigm for tinnitus screening in animal models: limitations and optimization. Hear Res 295:150–160

McMillan GP (2014) On reliability. Ear Hear 35:589–590

Middlebrooks JC, Green DM (1991) Sound localization by human listeners. Annu Rev Psychol 42:135–159

Mishra SK, Panda MR (2014) Experience-dependent learning of auditory temporal resolution: evidence from Carnatic-trained musicians. Neuroreport 25:134–137

Moore BCJ, Glasberg BR (1988) Gap detection with sinusoids and noise in normal, impaired and electrically stimulated ears. J Acoust Soc Am 83:1093–1101

Moore BCJ, Peters RW, Glasberg BR (1992) Detection of temporal gaps in sinusoids by elderly subjects with and without hearing loss. J Acoust Soc Am 92:1923–1932

Moore DR, Ferguson MA, Edmondson-Jones AM et al (2010) Nature of auditory processing disorder in children. Pediatrics 126:e382–e390

Musiek FE, Shinn JB, Jirsa R et al (2005) GIN (Gaps-In-Noise) test performance in subjects with confirmed central auditory nervous system involvement. Ear Hear 26:608–618

Oxenham AJ (1997) Increment and decrement detection in sinusoids as a measure of temporal resolution. J Acoust Soc Am 102:1779–1790

Phillips DP (1999) Auditory gap detection, perceptual channels, and temporal resolution in speech perception. J Am Acad Audiol 10:343–354

Phillips DP, Smith JC (2004) Correlations among within-channel and between-channel auditory gap-detection thresholds in normal listeners. Perception 33:371–378

Phillips DP, Taylor TL, Hall SE et al (1997) Detection of silent intervals between noises activating different perceptual channels: some properties of “central” auditory gap detection. J Acoust Soc Am 101:3694–3705

Pichora-Fuller MK, Schneider BA, Benson NJ et al (2006) Effect of age on detection of gaps in speech and nonspeech markers varying in duration and spectral symmetry. J Acoust Soc Am 119:1143–1155

Ponton CW, Vasama JP, Tremblay K et al (2001) Plasticity in the adult human central auditory system: evidence from late-onset profound unilateral deafness. Hear Res 154:32–44

Reale RA, Brugge JF, Chan JC (1987) Maps of auditory cortex in cats reared after unilateral cochlear ablation in the neonatal period. Brain Res 431:281–290

Scheffler K, Bilecen D, Schmid N et al (1998) Auditory cortical responses in hearing subjects and unilateral deaf patients as detected by functional magnetic resonance imaging. Cereb Cortex 8:156–163

Schneider BA, Hamstra SJ (1999) Gap detection thresholds as a function of tonal duration for younger and older listeners. J Acoust Soc Am 106:371–380

Schneider BA, Pichora-Fuller MK, Kowalchuk D, Lamb M (1994) Gap detection and the precedence effect in young and old adults. J Acoust Soc Am 95:980–991

Shailer MJ, Moore BC (1983) Gap detection as a function of frequency, bandwidth, and level. J Acoust Soc Am 74:467–473

Shailer MJ, Moore BC (1985) Detection of temporal gaps in bandlimited noise: effects of variations in bandwidth and signal-to-masker ratio. J Acoust Soc Am 77:635–639

Sidiropoulos K, Ackermann H, Wannke M, Hertrich I (2010) Temporal processing capabilities in repetition conduction aphasia. Brain Cogn 73:194–202

Sininger YS, de Bode S (2008) Asymmetry of temporal processing in listeners with normal hearing and unilaterally deaf subjects. Ear Hear 29:228–238

Stefanatos GA, Braitman LE, Madigan S (2007) Fine grain temporal analysis in aphasia: evidence from auditory gap detection. Neuropsychologia 45:1127–1133

Turner JG, Brozoski TJ, Bauer CA et al (2006) Gap detection deficits in rats with tinnitus: a potential novel screening tool. Behav Neurosci 120:188–195

Zhang W, Salvi RJ, Saunders SS (1990) Neural correlates of gap detection in auditory nerve fibers of the chinchilla. Hear Res 46:181–200

Acknowledgments

The authors thank Jennifer Lister for providing the gap detection test software (ATTR©).

Conflict of Interest

The authors declare that they have no competing interests.

Cite this article

Mishra, S.K., Dey, R. & Davessar, J.L. Temporal Resolution of the Normal Ear in Listeners with Unilateral Hearing Impairment. JARO 16, 773–782 (2015). https://doi.org/10.1007/s10162-015-0536-6

DOI: https://doi.org/10.1007/s10162-015-0536-6