Abstract

Stochastic (sub)gradient methods require step size schedule tuning to perform well in practice. Classical tuning strategies decay the step size polynomially and lead to optimal sublinear rates on (strongly) convex problems. An alternative schedule, popular in nonconvex optimization, is called geometric step decay and proceeds by halving the step size after every few epochs. In recent work, geometric step decay was shown to improve exponentially upon classical sublinear rates for the class of sharp convex functions. In this work, we ask whether geometric step decay similarly improves stochastic algorithms for the class of sharp weakly convex problems. Such losses feature in modern statistical recovery problems and lead to a new challenge not present in the convex setting: the region of convergence is local, so one must bound the probability of escape. Our main result shows that for a large class of stochastic, sharp, nonsmooth, and nonconvex problems a geometric step decay schedule endows well-known algorithms with a local linear (or nearly linear) rate of convergence to global minimizers. This guarantee applies to the stochastic projected subgradient, proximal point, and prox-linear algorithms. As an application of our main result, we analyze two statistical recovery tasks—phase retrieval and blind deconvolution—and match the best known guarantees under Gaussian measurement models and establish new guarantees under heavy-tailed distributions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Stochastic (sub)gradient methods form the algorithmic core of much of modern statistical and machine learning. Such algorithms are typically sensitive to algorithm parameters, and require extensive step size tuning to achieve adequate performance. Classical tuning strategies decay the step size polynomially and lead to optimal sublinear rates of convergence on convex and strongly convex problems

where the loss functions \(f(\cdot ,z)\) are convex and \(\mathcal {X}\) is a closed convex set [45]. An alternative schedule, called geometric step decay, decreases the step size geometrically by halving it after every few epochs. In recent work [66], geometric step decay was shown to improve exponentially upon classical sublinear rates under the sharpness assumption:

where \(\mu >0\) is some constant and \(\mathcal {X}^*=\hbox {argmin}_{x\in \mathcal {X}} f(x)\) is the solution set. This result complements early works of Goffin [27] and Shor [62], which show that deterministic subgradient methods converge linearly on sharp convex functions if their step sizes decay geometrically. The work [66] also reveals a departure from the smooth strongly convex setting, where deterministic linear rates degrade to sublinear rates when the gradient is corrupted by noise [45].

Beyond the convex setting, sharp problems appear often in nonconvex statistical recovery problems, for example, in robust matrix sensing [40], phase retrieval [18, 20], blind deconvolution [8], and quadratic/bilinear sensing and matrix completion [7]. For such problems, sharpness is surprisingly common and corresponds to strong identifiability of the statistical model. In such settings, sharpness endows standard deterministic first-order algorithms with rapid local convergence guarantees, enabling the recovery of signals at optimal or near-optimal sample complexity in a variety of nonconvex problems. Despite this, we do not know whether stochastic algorithms equipped with geometric step decay—or any other step size schedule—linearly converge on sharp nonconvex problems.Footnote 1 Such algorithms, if available, could pave the way for new sample efficient strategies for these and other statistical recovery problems.

The main result of this work shows that for a large class of stochastic, sharp, nonsmooth, and nonconvex problems

a geometric step decay schedule endows well-known algorithms

with a local nearly linearFootnote 2 rate of convergence to minimizers.

This guarantee takes hold as soon as the algorithms are initialized in a small neighborhood around the set of minimizers and applies, for example, to the stochastic projected subgradient, proximal point, and prox-linear algorithms. Beyond sharp growth (1.1), we also analyze losses that grow sharply away from some closed set \(\mathcal {S}\), which is strictly larger than \(\mathcal {X}^*\). Such sets \(\mathcal {S}\) are akin to “active manifolds" in the sense of Lewis [38] and Wright [64]. For example, the loss \(f(x,y)=x^2+|y|\) is not sharp relative to its minimizer, but is sharp relative to the x-axis. For these problems, our algorithms converge nearly linearly to the set \(\mathcal {S}\). Finally, we illustrate the result with two statistical recovery problems: phase retrieval and blind deconvolution. For these recovery tasks, our results match the best known computational and sample complexity guarantees under Gaussian measurement models and establish new guarantees under heavy-tailed distributions.

1.1 Related work

Our paper is closely related to a number of influential techniques in stochastic, convex, and nonlinear optimization. We now survey these related topics.

Stochastic model-based methods. In this work, we use algorithms that iteratively sample and minimize simple stochastic convex models of the loss function. Throughout, we call these methods model-based algorithms. Such algorithms include the stochastic projected subgradient, prox-linear, and proximal point methods. Stochastic model-based algorithms are known to converge globally to stationary points at a sublinear rate on a large class of nonsmooth and nonconvex problems [11, 19]. Some model-based algorithms also possess superior stability properties and can be less sensitive to step size choice than traditional stochastic subgradient methods [3, 4].

Geometrically decaying learning rate in deterministic optimization. polynomially decaying step-sizes are common in stochastic optimization [35, 53, 55]. In contrast, we develop algorithms with step sizes that decay geometrically. Geometrically decaying step sizes were first analyzed in convex optimization by Shor [62, Thm 2.7, Sec. 2.3] and Goffin [27]. This step size schedule is also closely related to the step size rules of Eremin [21] and Polyak [52]. Similar schedules are known to accelerate convex subgradient methods under Hölder growth as shown in [32, 67]. Geometrically decaying step sizes for deterministic subgradient methods under weak convexity were systematically studied in [12]. The method of this work succeeds under similar assumptions on the population objective as in [12]: sharpness , Lipschitz continuity , and weak convexity , . In contrast to [12] which analyzes a deterministic subgradient method (based on linear approximations of the objective), the method of this work requires only stochastic estimates of the objective and converges under a wider family of nonlinear approximations of the objective (see Example 2.1). Surprisingly, the method only incurs an additional cost of \(\log ^2(1/\varepsilon )\) (stochastic) oracle evaluations, which arises due to our restart scheme. It is an intriguing open question whether one can remove this additional dependence on \(\log ^2(1/\varepsilon )\).

Geometric step decay in stochastic optimization. The geometric step decay schedule is common in practice: for example, see Krizhevsky et. al. [33] and He et al. [29]. It is a standard option in the popular deep learning libraries, such as Pytorch [49] and TensorFlow [1]. Geometric step decay has been analyzed in a number of recent papers in stochastic convex optimization, including [5, 25, 26, 34, 66, 68]. Among these papers, the work [66] relates most to ours. There, the authors propose two geometric step decay strategies that converge linearly on convex functions that are sharp and have bounded stochastic subgradients. These algorithms either use a moving ball constraint or follow a proximal-point type procedure. We follow the latter strategy, too, at least to obtain high-probability guarantees. The paper [66] differs from our work in that they assume convexity and a uniform bound on the stochastic subgradients. In contrast, we do not assume convexity and only assume that stochastic subgradients have a finite second moment. We are aware of only one subgradient method for stochastic nonconvex problems that converges linearly [3] under favorable assumptions. In [3], the authors develop a “clipped" subgradient method, which resembles a safeguarded stochastic Polyak step. In contrast to our work, their algorithms converge linearly only under “perfect interpolation," meaning that all terms in the expectation share a minimizer. Formally, the interpolation condition therein stipulates that, almost surely over z,

We do not make this assumption here. Indeed, the statistical recovery problems from Sect. 4 do not satisfy the interpolation condition in the presence of corruptions.

Restarts in deterministic optimization. Restart techniques have a long history in nonlinear programming, such as for conjugate gradient and limited memory quasi-Newton methods. They have also been used more recently to improve the complexity of algorithms in deterministic convex optimization. For example, restart schemes can accelerate sublinear convergence rates for convex problems that satisfy growth conditions as shown by Nesterov [47] and Nemirovskii and Nesterov [44], Renegar [54], O’Donoghue and Candes [48], Roulet and d’Aspremont [58], Freund and Lu [24], and Fercoq and Qu [22, 23] and others. In the nonconvex setting, stochastic restart methods are challenging to analyze, since the region of linear convergence is local. To overcome this challenge, one must bound the probability that the iterates leave this region. One of our main technical contributions is a technique for bounding this probability.

Finite sums. For finite sums, stochastic algorithms that converge linearly are more common. For example, for finite sums that are sharp and convex, Bertsekas and Nedić [43] prove that an incremental Polyak-type algorithm converges linearly. For finite sums that are smooth and strongly convex, variance reduced methods, such as SAG [59], SAGA [14], SDCA [60], SVRG [31], MISO/Finito [15, 41], SMART [10] and their proximal extensions converge linearly. The algorithms we develop here do not assume a finite sum structure.

Verifying sharpness. Sharp growth is a central assumption in this work. This property is surprisingly common in statistical recovery problems. For example, sharp growth has been established for robust matrix sensing [40], phase retrieval [18, 20], blind deconvolution [8], quadratic and bilinear sensing and matrix completion [7] problems. Consequently, the results of this paper apply in these settings.

1.2 Notation

We will mostly follow standard notation used in convex analysis and stochastic optimization. Throughout, the symbol \(\mathbb {R}^d\) will denote a d-dimensional Euclidean space with the inner product \(\langle \cdot ,\cdot \rangle \) and the induced norm \(\Vert x\Vert =\sqrt{\langle x,x\rangle }\). We denote the open ball of radius \(\varepsilon >0\) around a point \(x\in \mathbb {R}^d\) by the symbol \(B_{\varepsilon }(x)\); moreover, we write \(\mathbb {S}^{d-1}\) for the unit sphere in \(\mathbb {R}^d\). Given an event A, we write \(A^c\) for its complement. We also let \(1_{A}\) denote the indicator function of A, meaning a function that evaluates to one on A and zero off it. For any set \(Q\subset \mathbb {R}^d\), the distance function and the projection map are defined by

respectively. Consider a function \(f:\mathbb {R}^d\rightarrow \mathbb {R}\cup \{\pm \infty \}\) and a point x, with f(x) finite. The Fréchet subdifferential of f at x, denoted by \(\partial f(x)\), consists of all vectors \(v\in \mathbb {R}^d\) satisfying

A function f is called \(\rho \)-weakly convex on an open convex set U if the perturbed function \(f+\frac{\rho }{2}\Vert \cdot \Vert ^2\) is convex on U. The subgradients of such functions automatically satisfy the uniform approximation property (see [56]):

2 Algorithms, assumptions, and main results

In this section, we formalize our target problem and introduce algorithms to solve it. We then outline our main results. The complete theorem statements and proofs appear in Sect. 3. Throughout, we consider the minimization problem

for some function \(f:\mathbb {R}^d\rightarrow \mathbb {R}\) and a closed convex set \(\mathcal {X}\subseteq \mathbb {R}^d\). We define the set \(\mathcal {X}^*:=\hbox {argmin}_{x\in \mathcal {X}} f(x)\) and assume it to be nonempty. We also fix a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) and equip \(\mathbb {R}^d\) with the Borel \(\sigma \)-algebra and make the following assumption.

-

(A1)

(Sampling) It is possible to generate i.i.d. realizations \(z_1,z_2, \ldots \sim \mathbb {P}\).

The algorithms we develop rely on a stochastic oracle model for Problem (\({\mathcal{S}\mathcal{O}}\)) that was recently introduced in [11]. These algorithms assume access to a family of functions \({f_x(\cdot ,z)}\)—called stochastic models—indexed by basepoints \(x\in \mathbb {R}^d\) and random elements \(z\sim \mathbb {P}\). Given these models, the generic stochastic model-based algorithm of [11] iterates the steps:

In this work, model-based algorithms form the core of the following restart strategy: given inner and outer loop sizes \(K\) and \(T\), respectively, as well as initial stepsize \(\alpha _0 > 0 \), perform:

This restart strategy is common in machine learning practice and is called geometric step decay. Restart schemes date back to the fundamental work of Nesterov [47] and Nemirovskii and Nesterov [44] and more recently appear in [22, 23, 25, 48, 54, 58, 66]. These strategies often improve the convergence guarantees of the algorithm they restart under growth assumptions, for example, by boosting an algorithm that converges sublinearly to one that converges linearly. In this work, we will show that restart scheme (2.3) similarly improves (2.2) for a large class of weakly convex stochastic optimization problems.

2.1 Assumptions

In this section, we formalize our assumptions on sharp growth of (2.1) as well as on accuracy, regularity, and Lipschitz continuity of the models.

2.1.1 Sharp Growth

We assume that \(f(\cdot )\) grows sharply as x moves in the direction normal to a closed set \(\mathcal {S}\).

-

(A2)

(Sharpness) There exists a constant \(\mu > 0\) and a closed set \(\mathcal {S}\subseteq \mathcal {X}\) satisfying \( \mathcal {X}^*\subseteq \mathcal {S}\) such that the following bound holds:

$$\begin{aligned} f(x) - \inf _{z \in \textrm{proj}_{\mathcal {S}}(x)}f(z) \ge \mu \cdot \textrm{dist}(x, \mathcal {S}) \qquad \forall x \in \mathcal {X}. \end{aligned}$$(2.4)

This property generalizes the classical sharp growth condition (1.1), where \(\mathcal {S}=\mathcal {X}^*\). The setting \(\mathcal {S}= \mathcal {X}^*\) is well-studied in nonlinear programming and often underlies rapid convergence guarantees for deterministic local search algorithms. Beyond the classical setting, \(\mathcal {S}\) could be a sublevel set of f or an “active manifold” in the sense of Lewis [38]; see Sect. D. When \(\mathcal {S}\ne \mathcal {X}^*\), we design stochastic algorithms that do not necessarily converge to global minimizers, but instead nearly linearly converge to \(\mathcal {S}\) with high probability. Finally, we note that when \(\mathcal {S}= \mathcal {X}^{*}\) the results of this paper remain valid if (2.4) only holds in a neighborhood of the solution set.Footnote 3

2.1.2 Accuracy and Regularity

We assume that the models are convex and under-approximate f up to quadratic error.

-

(A3)

(One-sided accuracy) There exists \(\eta > 0\) and an open convex set U containing \(\mathcal {X}\) and a measurable function \((x,y,z)\mapsto f_x(y,z)\), defined on \(U\times U\times \Omega \), satisfying

$$\begin{aligned} \mathbb {E}_{z}\left[ f_x(x,z)\right] =f(x) \qquad \forall x\in U, \end{aligned}$$and

$$\begin{aligned} \mathbb {E}_{z}\left[ f_x(y,z)-f(y)\right] \le \frac{\eta }{2}\Vert y-x\Vert ^2\qquad \forall x,y\in U. \end{aligned}$$ -

(A4)

(Convex models) The function \(f_x(\cdot ,z)\) is convex \(\forall x\in U\) and a.e. \(z\in \Omega \).

Models satisfying and , and their algorithmic implications, were analyzed in [11, Assumption B]; a closely related family of models was investigated in [3, 4]. Assumptions and imply that f is \(\eta \)-weakly convex on U, meaning that the assignment \(x \mapsto f(x) + \frac{\eta }{2}\Vert x\Vert ^2\) is convex [11, Lemma 4.1]. While we assume throughout the paper, we show in Remark 2 that our results hold under an even weaker assumption. For certain losses, models that satisfy and are easy to construct, as the following example shows.

Example 2.1

(Convex Composite Class) Stochastic convex composite losses take the form

where \(h(\cdot , z)\) are Lipschitz and convex and the nonlinear maps \(c(\cdot ,z)\) are \(C^1\)-smooth with Lipschitz Jacobian. Such losses appear often in data science and signal processing (see [7, 16, 19] and references therein). For this problem class, natural models include

-

(subgradient) \(f_x(y,z)=f(x,z)+\langle \nabla c(x, z)^\top v,y-x\rangle \) for any \(v\in \partial h(c(x, z), z)\).

-

(prox-linear) \(f_x(y,z)=h(c(x,z)+\nabla c(x,z)(y-x),z).\)

-

(proximal point) \(f_x(y,z)=f(y,z) + \frac{\eta }{2} \Vert x - y \Vert ^2\),

where \(\eta \) is large enough to guarantee the proximal model is convex.Footnote 4 If a lower bound \(\ell (z)\) on \(\inf f(\cdot ,z)\) is known, one can also choose a clipped model

-

(clipped) \(\tilde{f}_x(y, z) = \max \{ f_x(y,z), \ell (z)\} \),

for any of the models \( f_x(\cdot ,z)\) above, as was suggested by [3]. Intuitively, models that better approximate f(x, z) are likely to perform better in practice; see [3] for theoretical evidence supporting this claim. Fig. 1 contains a graphical illustration of one-sided models. \(\square \)

Illustration of one-sided models: \(f(x)=|x^2-1|\), \(f_{0.5}(y)=|1.25-y|\) (figure adapted from [11])

2.1.3 Lipschitz continuity

We assume that the models are Lipschitz on a tube surrounding \(\mathcal {S}\).

-

(A5)

(Lipschitz property) Define the tube

$$\begin{aligned} \mathcal {T}_{\gamma }:= \left\{ x \in \mathcal {X}\mid \textrm{dist}(x, \mathcal {S}) \le \frac{\gamma \mu }{\eta }\right\} \qquad \forall \gamma > 0. \end{aligned}$$We assume that there exists a measurable function \(L :\mathbb {R}^d \times \Omega \rightarrow \mathbb {R}_+\) such that

$$\begin{aligned} \min _{v\in \partial f_x(x,z)}\Vert v\Vert \le L(x,z) \end{aligned}$$(2.5)for all \(x\in \mathcal {T}_2\) and a.e. \(z\in \Omega \). Moreover, we assume there exists \(\textsf{L}> 0\) such that

$$\begin{aligned} \sup _{x \in \mathcal {T}_2} \sqrt{\mathbb {E}_{z}\left[ L(x, z)^2\right] } \le \textsf{L}. \end{aligned}$$

This Lipschitz property is local and differs from the global assumption of [11, Assumption B4]. The property holds only in \(\mathcal {T}_2\) since our algorithms will be initialized in this tube and will never leave it with high probability.Footnote 5 The local nature of this property is crucial to signal recovery applications, for example, blind deconvolution and phase retrieval. In these problems, global Lipschitz continuity does not hold; see Sect. 4.

Remark 1

(Finite-sums) While our results are stated in terms of the streaming model, they are also applicable to the finite-sum setting, where our loss function is in the form

Indeed, with \(\mathbb {P}= \textrm{Unif}(\{1, \dots , m\}) \implies \mathbb {P}(z_k = i) = \frac{1}{m}\), for any \(k \in \mathbb {N}\), \(i \in [m]\), we recover

2.2 Algorithms and results

Stochastic model-based algorithms (Algorithm 1) iteratively sample and minimize stochastic convex models of the loss function.Footnote 6 When equipped with models satisfying , and a global Lipschitz condition, these algorithms converge to stationary points of (2.1) at a sublinear rate [11, 19]. In this section, we show that such sublinear rates can be improved to local linear (or nearly linear) rates by using the restart strategy outlined in (2.2)–(2.3). We introduce two such strategies that succeed with probability \(1-\delta \), for any \(\delta > 0\). The first (Algorithm 2) allows for arbitrary sets \(\mathcal {S}\) in Assumption , but its sample complexity and initialization region scale poorly in \(\delta \). The second (Algorithm 5) assumes \(\mathcal {S}= \mathcal {X}^*\), but has much better dependence on \(\delta \).

Given assumptions –, the following theorem shows that the first restart strategy (Algorithm 2—Restarted Model Based Algorithm (RMBA)) converges nearly linearly to \(\mathcal {S}\) with high probability.

Theorem 2.1

(Informal) Fix a target accuracy \(\varepsilon >0\), failure probability \(\delta \in (0,\frac{1}{4})\), and a point \(x_0\in \mathcal {T}_{\gamma \sqrt{\delta }}\) for some \(\gamma \in (0,1)\). Then with appropriate parameter settings, the point \(x=\textrm{RMBA}(x_0, \alpha _0, K, T, \texttt {false})\) will satisfy \(\textrm{dist}(x,\mathcal {S})<\varepsilon \) with probability at least \(1-4\delta \). Moreover, the number of samples \(z_i\sim \mathbb {P}\) generated by the algorithm is at most

Theorem 2.1 has interesting consequences not only for convergence to global minimizers, but also for “active manifold identification." For example, when \(\mathcal {S}= \mathcal {X}^*\), Theorem 2.1 shows that with constant probability, Algorithm 2 converges nearly linearly to the true solution set. When \(\mathcal {S}\ne \mathcal {X}^*\) and is instead an “active manifold” in the sense of Lewis [38], Algorithm 2 nearly linearly converges to the active manifold. In our numerical evaluation, we illustrate this phenomenon for a sparse logistic regression problem. We empirically observe that the method converges linearly to the support of the solution, even though the overall convergence to the true solution may be sublinear.

In our numerical experiments, we find that Algorithm 2 succeeds for a wide variety of parameter settings, which may not necessarily satisfy the conditions set forth by the theory. Theorem 2.1, on the other hand, only guarantees Algorithm 2 succeeds with high probability when we greatly increase its sample complexity and initialize it close to \(\mathcal {S}\). We would like to boost Algorithm 2 into a new algorithm whose sample complexity and initialization requirements scale only polylogarithmicaly in \(1/\delta \). As a first attempt, we discuss the following two probabilistic techniques, both of which have limitations:

-

(Markov) One approach is to call Algorithm 2 multiple times for a moderately small value \(\delta \) and pick out the “best" iterate from the batch. This approach is flawed since, even in the convex setting, there is no procedure to test which iterate is “best" without increasing sample complexity.

-

(Ensemble) An alternative approach is based on a well-known resampling trick, which applies when \(\mathcal {S}=\{\bar{x}\}\) is a singleton set [45, p. 243], [30, 63, Algorithm 1]: Run m trials of Algorithm 2 with any fixed \(\delta <1/4\), and denote the returned points by \(\{ x_i\}_{i=1}^m\). Then with high probability, the majority of the points \(\{ x_i\}_{i=1}^m\) will be close to \(\bar{x}\). Finally, to find an estimate near \(\bar{x}\), choose any point that has at least m/2 other points close to it.

The ensemble technique is promising, but it requires \(\mathcal {S}\) to be a singleton. This limits its applicability since many low-rank recovery problems (e.g. blind deconvolution, matrix completion, robust PCA) have uncountably many solutions. We overcome this issue by embedding Algorithm 2 and the ensemble method within a proximal-point method. At each stage of this algorithm, we run multiple copies of a stochastic-model based method on a quadratically regularized problem that has a unique solution. Among those copies, we use the ensemble technique to pick out a “successful" iterate. We summarize the resulting nested procedure in Algorithms 3–5: Algorithm 3 (Proximal Model-Based Algorithm—PMBA) is a generic model-based algorithm applied on a quadratically regularized problem; Algorithm 4 (Ensemble PMBA—EPMBA) calls Algorithm 3 as suggested by the ensemble technique; finally, Algorithm 5 (Restarted PMBA (RPMBA)) updates the regularization term, in the style of a proximal point method.

We will establish the following guarantee. In the theorem, we assume \(\mathcal {S}=\mathcal {X}^*\).

Theorem 2.2

(Informal) Fix a target accuracy \(\varepsilon >0\), failure probability \(\delta \in (0,1)\), and a point \(x_0\in \mathcal {T}_{\gamma }\) for some \(\gamma \in (0,\frac{1}{4})\). Then with appropriate parameter settings, the point \(x=\textrm{RPMBA}(x_0, \rho _0, \alpha _0, K,\varepsilon _0, M, T)\) will satisfy \(\textrm{dist}(x,\mathcal {X}^*)<\varepsilon \) with probability at least \(1-\delta \). Moreover the total number of samples \(z_i\sim \mathbb {P}\) generated by the algorithm is at most

Theorem 2.2 resolves the initialization and sample complexity issues of Theorem 2.1. Incidentally, its claimed sample complexity also depends more favorably on \(\varepsilon \) and on the problem parameters \(\mu \) and \(\eta \). Theorem 2.2 is new in the weakly convex setting and also improves on prior work by Xu et al. [66] for convex problems. There, the results require stochastic subgradients to be almost surely bounded, hence, sub-Gaussian. In contrast, Theorem 2.2 guarantees that \(\textrm{dist}(x, \mathcal {X}^*) <\varepsilon \) with high-probability assuming only the local second moment bound .

3 Proofs of main results

In this section, we establish high-probability nearly linear convergence guarantees for Algorithm 2 and linear convergence guarantees for Algorithm 5 —the main contributions of this work. Throughout this section, we assume that Assumptions – hold.

3.1 Warm-up: convex setting

We begin with a short proof of nearly linear convergence for Algorithm 2 in the convex setting. We use this simplified case to explain the general proof strategy and point out the difficulty of extending the argument to the weakly convex setting. Since we restrict ourselves to the convex setting, throughout this section (Sect. 3.1) we suppose:

-

Assumption holds with \(\eta = 0\) and Assumption holds with \(\mathcal {S}= \mathcal {X}^*\).

-

The models \(f_x(\cdot , z)\) are \(L(z)\)-Lipschitz on \(\mathbb {R}^d\) for all x, where \(L:\Omega \rightarrow \mathbb {R}\) is a measurable function satisfying \(\sqrt{\mathbb {E}_{z}\left[ L( z)^2\right] } \le \textsf{L}\).

In particular, the tube \(\mathcal {T}_2\) is the entire space \(\mathcal {T}_2 = \mathbb {R}^d\), which alleviates the main difficulty of the weakly convex setting. The proof of convergence relies on the following known sublinear convergence guarantee for Algorithm 1.

Theorem 3.1

([11, Theorem 4.1]). Fix an initial point \(y_0 \in \mathbb {R}^d\) and let \(\alpha = \frac{C}{\sqrt{K+1}}\) for some \(C>0\). Then for any index \(K\in \mathbb {N}\), the point \(y=\textrm{MBA}(y_0, \alpha , K, \texttt {true})\) satisfies

The proof of nearly linear convergence now follows by iteratively applying Theorem 3.1 with a carefully chosen parameter \(C > 0\). The key idea of the proof is much the same as in the deterministic setting [44, 47, 58]. The proof proceeds by induction on the outer iteration counter \(t\). At the start of each inner iteration, we choose C to minimize the ratio in Equation (3.1), taking into account an inductive estimate on the initial square error \(\textrm{dist}^2(y_0, \mathcal {X}^*)\). We then run the inner loop until the estimate decreases by a fixed fraction. This strategy differs from deterministic setting in only one way: since the output of the inner loop is random, we extract a bound on the initial distance using Markov’s inequality.

Theorem 3.2

(Nearly linear convergence under convexity). Fix an initial point \(x_0 \in \mathbb {R}^d\), real \( \varepsilon >0\), \( \delta \in (0, 1)\), and an upper bound \(R_0 \ge \textrm{dist}(x_0, \mathcal {X}^*)\). Define parameters

Then with probability at least \( 1 - \delta \), the point \(x_{T} = \textrm{RMBA}(x_0, \alpha _0, K, T, \texttt {true})\) satisfies \( \textrm{dist}(x_T, \mathcal {X}^*) \le \varepsilon . \) Moreover, the total number of samples \(z_k\sim \mathbb {P}\) generated by the algorithm is bounded by

Proof

In what follows, set \(C_t=\frac{R_0}{\textsf{L}\cdot 2^{t+\frac{1}{2}} }\) and note the equality \(\alpha _t=\frac{C_t}{\sqrt{K+1}}\) for every index t in Algorithm 2. Let \( E_t\) denote the event that \(\textrm{dist}(x_t, \mathcal {S}) \le 2^{-t} \cdot R_0\). We wish to show the inequality

for all \(t\in \{0, \ldots , T\}\). To that end, observe

To lower bound the right-hand-side, observe by Markov’s inequality the estimate

Combining assumption and Theorem 3.1, we deduce

Therefore, combining (3.3), (3.4), and (3.5) we arrive at the claimed estimate

Iterating (3.2) and using the definition of T and K, we conclude that with probability

the estimate

holds as claimed. This completes the proof. \(\square \)

3.2 Weakly convex setting

We now present the convergence guarantees for Algorithm 2 in the weakly convex setting under Assumptions –. The proof of nearly linear convergence proceeds by inductively applying the following Lemma, which is similar to Lemma 3.1. Compared to the convex setting, the weakly convex setting presents a new challenge: the region of nearly linear convergence, \(\mathcal {T}_\gamma \), is local. The iterates of Algorithm 2 must therefore be shown to never leave \(\mathcal {T}_\gamma \) with high probability. We show this through a stopping time argument in the proof of the following Lemma (see Sect. A.1).

Lemma 3.3

Fix real numbers \(\delta \in (0, 1)\), \(\gamma \in (0,2)\), \(K \in \mathbb {N}\), and \(\alpha >0\). Let \(y_0\) be a random vector and let B denote the event \(\{ y_0 \in \mathcal {T}_{\gamma \sqrt{\delta }}\}\). Define

Then for any \(\varepsilon > 0\), the estimate \(\textrm{dist}(y_{K^*}, \mathcal {S}) \le \varepsilon \) holds with probability at least

The proof of nearly linear convergence of Algorithm 2 in the weakly convex setting now follows by inductively applying Lemma 3.3.

Theorem 3.4

(Nearly linear convergence under weak convexity) Fix real numbers \( \varepsilon >0\), \( \delta _2\in (0, 1)\), \(\gamma \in (0, 2)\). Let \(R_0\) denote the initial distance estimate satisfying \(\textrm{dist}(x_0, \mathcal {S}) \le R_0\le \frac{\gamma \mu }{\eta }\). Furthermore, define algorithm parameters

Then with probability at least \( 1 - (8/3)R_0^2\left( \tfrac{\eta }{\gamma \mu }\right) ^2 - \delta _2\), the point \(x_{T} = \textrm{RMBA}(x_0, \alpha _0, K, T, \texttt {false})\) satisfies \( \textrm{dist}(x_T, \mathcal {S}) \le \varepsilon . \) Moreover, the total number of samples \(z_k\sim \mathbb {P}\) generated by the algorithm is bounded by

Proof

For all \(t\), let \(E_t\) be the event \(\{\textrm{dist}(x_t, \mathcal {S}) \le 2^{-t}\cdot R_0\}\). In addition, define \(\delta _1:= R_0^2\left( \tfrac{\eta }{\gamma \mu }\right) ^2\). We claim that the inequality

holds for all \(t\in \{0,1\ldots , T\}\). To see this, apply Lemma 3.3 with \(y_0 = x_t\), \(\varepsilon =2^{-(t+1)}R_0\), \(\delta :=\delta _12^{-2t}\), \(\alpha = 2^{-t} \alpha _0\), thereby yielding

where the last inequality follows from the definitions of \(\alpha _0\) and \(R_0\). Iterating the inequality, we conclude

This completes the proof. \(\square \)

Observe that the probability of success \( 1 - (8/3)R_0^2\left( \tfrac{\eta }{\gamma \mu }\right) ^2 - \delta _2\) in Theorem 3.4 depends both on the initialization quality \(R_0\) and on \(\delta _2\). Moreover, \(\delta _2\) also appears inversely in the sample complexity \(\widetilde{O}\left( \frac{ \textsf{L}^2}{\delta _2^2 \mu ^2} \right) \). In the next section, we introduce an algorithm with probability of success independent of \(R_0\) and with sample complexity that depends only logarithmically on its success probability.

We close this section with the following remark, which shows that the results of Theorem 3.4 extend beyond the weakly convex setting.

Remark 2

(Beyond weakly convex problems). Assumptions and imply that f is \(\eta \)-weakly convex on U, meaning that the assignment \(x \mapsto f(x) + \frac{\eta }{2}\Vert x\Vert ^2\) is convex [11, Lemma 4.1]. Revisiting the proof of Lemma 3.3, however, we see that may be replaced by the following weaker assumption:

- \({\overline{\mathrm {(A3)}}}\):

-

(Two-point accuracy) There exists \(\eta > 0\) and an open convex set U containing \(\mathcal {X}\) and a measurable function \((x,y,z)\mapsto f_x(y,z)\), defined on \(U\times U\times \Omega \), satisfying

$$\begin{aligned} \mathbb {E}_{z}\left[ f_x(x,z)\right] =f(x) \qquad \forall x\in U, \end{aligned}$$and

$$\begin{aligned} \mathbb {E}_{z}\left[ f_x(y,z)-f(y)\right] \le \frac{\eta }{2}\Vert y-x\Vert ^2\qquad \forall x \in U, y \in \hbox {argmin}_{z \in \textrm{proj}_{\mathcal {S}}(x)} f(z). \end{aligned}$$

In the case \(\mathcal {S}= \mathcal {X}^*\), this assumption requires the model to touch the function at x and to lower bound it, up to quadratic error, at its nearest minimizer. This condition does not imply that f is weakly convex.

3.3 Convergence with high probability

In this section, we show that Algorithm 5 succeeds with high probability. Throughout this section (Sect. 3.3), we impose Assumptions – with \(\mathcal {S}=\mathcal {X}^*\).

The following lemma guarantees that with appropriate step size, the proximal point of the problem (2.1) at \(y\in \mathcal {T}_{\gamma }\) lies in \(\textrm{proj}_{\mathcal {X}^*}(y)\). We present the proof in Sect. B.2.

Lemma 3.5

Fix \(\gamma \in (0, 2)\), \(\rho >\eta \), and a point \(y \in \mathcal {T}_\gamma \). Then the proximal subproblem

is strongly convex and therefore has a unique minimizer \(\bar{y}\). Moreover, if \( \rho < \left( \tfrac{2-\gamma }{2\gamma }\right) \eta \), then the inclusion \(\bar{y}\in \textrm{proj}_{\mathcal {X}^*} (y)\) holds.

Lemma 3.5 shows that, unlike Algorithm 1, we can expect the output of Algorithm 3 to be near the minimizer \(\bar{y}_0 \in \mathcal {X}^*\) of the proximal subproblem \(f(y) + \frac{\rho }{2} \Vert y - y_0\Vert ^2\), at least with constant probability. This lemma underlies the validity of Lemma 3.6. We present its proof in Sect. B.3.

Lemma 3.6

Fix real numbers \(\delta \in (0, 1)\), \(\gamma \in (0, 2)\), \(\alpha >0\) \(K \in \mathbb {N}\), and \(\rho \) satisfying \(\eta< \rho < \left( \frac{2-\gamma \sqrt{\delta } }{2\gamma \sqrt{\delta }}\right) \eta \). Choose any point \(y_0 \in \mathcal {T}_{\gamma \sqrt{\delta }}\) and set \( \bar{y}_0:= \hbox {argmin}_{x \in \mathcal {X}} \left\{ f(x) + \frac{\rho }{2} \Vert x - y_0\Vert ^2\right\} . \) Define

Then for all \(\varepsilon > 0\), with probability at least

we have \(\Vert y_{K^*} - \bar{y}_0\Vert \le \varepsilon \).

The next lemma shows that we can boost the success probability of the inner loop arbitrarily high at only a logarithmic cost. This is an immediate application of Lemma 3.6 and the ensemble technique described in Sect. 2.2, which is formally stated in Lemma B.1.

Corollary 3.7

Assume the setting of Lemma 3.6 and suppose \(P(\varepsilon /3) \ge 2/3\). Then for any \(\delta ' > 0\), in the regime \(M> 48 \log (1/\delta ')\), the point

satisfies

Finally, we are ready to establish high probability convergence guarantees of Algorithm 5.

Theorem 3.8

(Linear convergence with high probability) Fix constants \(\gamma \in (0, 2)\), \(\varepsilon >0\), and \(\delta ' \in (0, 1)\). Let \(R_0\) denote the initial distance estimate satisfying \(\textrm{dist}(x_0, \mathcal {X}^*) \le R_0\le \frac{\gamma \mu }{4\eta }\). Furthermore, define algorithm parameters

and

Then with probability at least \( 1 - \delta '\), the point

satisfies \( \textrm{dist}(x_T, \mathcal {X}^*) \le \varepsilon . \) Moreover, the total number of samples \(z_k\sim \mathbb {P}\) generated by the algorithm is bounded by

Proof

For all \(t\), let \(E_t\) be the event \(\{\textrm{dist}(x_t, \mathcal {X}^*) \le 2^{-t}\cdot R_0\}\). Our goal is to show for all \(t\in \{0, \ldots , T\}\) the estimate \(\mathbb {P}(E_t) \ge 1 - t\delta '/T\) holds.

We proceed by induction. The base case follows since \(\mathbb {P}(E_0) = 1\) by definition of \(R_0\). Now suppose that the claimed estimate \(\mathbb {P}(E_t) \ge 1 - t\delta '/T\) is true for index \(t\). We will show it remains true with t replaced by \(t+1\). We will apply Lemma 3.6 conditionally with \(y_0 = x_t\) and error tolerance \(\epsilon _t\) in the event \(E_t\). To this end, we define \(\delta _1:= R_0^2\left( \tfrac{\eta }{\gamma \mu }\right) ^2\) and set \(\rho = \rho _t\), \(\delta :=\delta _12^{-2t}\), \(\alpha = \alpha _t\). Before we apply the Lemma, we verify that \(\rho \) meets the conditions of Lemma 3.6, namely that \(\eta< \rho < \left( \frac{2- \gamma \sqrt{\delta _1}2^{-t}}{2\gamma \sqrt{\delta _1} 2^{-t}}\right) \eta \). Indeed, given that \(\rho = \frac{2^t}{2\gamma \sqrt{\delta _1}}\cdot \eta \) (by definition of \(\delta _1\)), the bounds follow immediately from the restrictions \(\delta _1<1/16\) and \(\gamma \le 2\). In particular, it is straightforward to verify the bound

Now Lemma 3.6 yields that the random vector \( y_{K^*} = \textrm{PMBA}(x_t, \rho _t, \alpha _t, K_t)\) satisfies

where the second inequality follows from (3.8), while the third inequality uses the definition of K and the bound \(\delta _1 < 1/16\) and \(\textsf{L}\ge \mu \). Therefore, since \(M \ge 48\log (T/\delta ')\), we may apply Corollary 3.7 (conditionally) to deduce

Consequently,

as desired. This completes the proof. \(\square \)

4 Consequences for statistical recovery problems

Recent work has shown that a variety of statistical recovery problems are both sharp and weakly convex. Prominent examples include robust matrix sensing [40], phase retrieval [18], blind deconvolution [8], quadratic and bilinear sensing and matrix completion [7]. In this section, we briefly comment on how our current work leads to linearly convergent streaming algorithms for robust phase retrieval and blind deconvolution problems.

4.1 Robust Phase retrieval

Phase retrieval is a common task in computational science, with numerous applications including imaging, X-ray crystallography, and speech processing. In this section, we consider the real counterpart of this problem. For details and a historical account of the phase retrieval problem, see for example [6, 18, 28, 61]. Throughout this section, we fix a signal \(\bar{x} \in \mathbb {R}^d\) and consider the following measurement model.

Assumption A

(Robust Phase Retrieval) Consider random \(a \in \mathbb {R}^d\), \(\xi \in \mathbb {R}\), and \(u\in \{0, 1\}\) and the measurement model

We make the following assumptions on the random data.

-

1.

The variable \(u\) is independent of \(\xi \) and a. The failure probability \(p_{\textrm{fail}}\) satisfies

$$\begin{aligned} p_{\textrm{fail}}:= \mathbb {P}(u\ne 0) < 1/2. \end{aligned}$$ -

2.

The first absolute moment of \(\xi \) is finite, \(\mathbb {E}\left[ |\xi | \right] < \infty \).

-

3.

There exist constants \(\tilde{\eta }, \tilde{\mu }, \tilde{\textsf{L}} > 0\) such that for all \(v, w \in \mathbb {S}^{d-1}\), we have

$$\begin{aligned} \tilde{\mu } \le \mathbb {E}\left[ |\langle a, v\rangle \langle a, w\rangle |\right] , \qquad \sqrt{\mathbb {E}\left[ \langle a, v\rangle ^2\Vert a\Vert ^2\right] } \le \tilde{\textsf{L}}, \qquad \mathbb {E}\left[ \langle a, v\rangle ^2\right] \le \tilde{\eta }. \end{aligned}$$

Based on the above assumptions, the following theorem develops three models for the robust phase retrieval problem. We defer the proof to Sect. C.1.

Theorem 4.1

(Phase retrieval parameters). Consider the population data \(z = (a, u, \xi )\) and form the optimization problem

Then the sharpness property holds with \(\mathcal {S}=\mathcal {X}^*=\{\pm \bar{x}\}\) and \(\mu =(1-2p_{\textrm{fail}})\tilde{\mu } \Vert \bar{x}\Vert \). Moreover, given a measurable selection \(G(x, z) \in \partial f(x, z)\), the models

-

(subgradient) \(f^s_x(y, z) = f(x, z) + \langle G(x, z), y - x\rangle \),

-

(clipped subgradient) \(f^{cl}_x(y, z) = \max \{ f(x, z) + \langle G(x, z), y - x\rangle , 0\}\),

-

(prox-linear) \(f^{pl}_x(y, z) = | \langle a, x\rangle ^2 - b + 2\langle a, x\rangle \langle a, y - x\rangle | \)

satisfy Assumptions – with

With this theorem in hand, we deduce that on the phase retrieval problem, Algorithm 5 with subgradient, clipped subgradient, and prox-linear models converges linearly to \(\mathcal {X}^*\) with high probability, whenever the method is initialized within constant relative error of the optimal solution.

Theorem 4.2

Fix constants \(\gamma \in (0,2)\), \(\varepsilon >0\), and \(\delta '\in (0, 1)\). Consider the subgradient, clipped subgradient, and prox-linear oracles developed in Theorem 4.1. Suppose we are given a point \(x_0\) satisfying

Set parameters \( \rho _0, \alpha _0, K,\epsilon _0, M, T\) as in Theorem 3.8. In addition, define the iterate \(x_T= \textrm{RPMBA}(x_0, \rho _0, \alpha _0, K,\epsilon _0, M, T)\). Then with probability \(1-\delta '\), we have \(\textrm{dist}(x_T, \{\pm \bar{x}\}) \le \varepsilon \Vert \bar{x}\Vert \) after

stochastic subgradient, stochastic clipped subgradient, or stochastic prox-linear iterations.

We now examine Theorem 4.2 in the setting where the measurement vectors a follow a Gaussian distribution. We note, however, that the results of this section extend far beyond the Gaussian setting to heavy tailed distributions.

Example 4.1

(Gaussian setting) Let us analyze the population setting where \(a \sim N(0, I_{d\times d})\). In this case, it is straightforward to show by direct computation that

Consequently, if \(x_0 \in \mathbb {R}^d\) has error \( \textrm{dist}(x_0, \{\pm \bar{x}\}) \le c(1-2p_{\textrm{fail}}) \cdot \Vert \bar{x}\Vert , \) for some numerical constant c, then with probability \(1-\delta \), Algorithm 5 will produce a point \(x_T\) satisfying \(\textrm{dist}(x_T, \{\pm x\}) \le \varepsilon \Vert \bar{x}\Vert \) using only

samples. We note that the spectral initialization of Duchi and Ruan [18, Proposition 3] produces such a point \(x_0\) with sample complexity \(O(d(1-2p_{\textrm{fail}})^{-2})\) with high probability. Therefore, when taken together, combining this spectral initialization with Algorithm 5 produces a point \(x_T\) satisfying \(\textrm{dist}(x_T, \{\pm \bar{x}\}) \le \varepsilon \Vert \bar{x}\Vert \) with \(O\left( \frac{d}{(1-2p_{\textrm{fail}})^2} \log \left( \frac{1}{\varepsilon }\right) \log \left( \log \left( \frac{1}{\varepsilon }\right) /\delta \right) \right) \) samples, which is the best known sample complexity for Gaussian robust phase retrieval, up to logarithmic factors. We note that by leveraging standard concentration results, it is possible to prove similar results for empirical average minimization \(\min _x \frac{1}{m}\sum _{i=1}^m f(x,z_i)\), provided \(z_i\) are i.i.d samples of z and the number of samples satisfies \(m > rsim d (1-2p_{\textrm{fail}})^{-2}\).

4.2 Robust blind deconvolution

We next apply the proposed algorithms to the blind deconvolution problem. For a detailed discussion of the the problem, see for example the papers [2, 39]. Henceforth, fix integers \(d_1, d_2 \in \mathbb {N}\) and an underlying signal \((\bar{x}, \bar{y}) \in \mathbb {R}^{d_1}\times \mathbb {R}^{d_2}\). Define the quantity

Without loss of generality, we will assume \(\Vert \bar{x}\Vert = \Vert \bar{y}\Vert \). We consider the following measurement model:

Assumption B

(Robust Blind Deconvolution). Consider random \(\ell \in \mathbb {R}^{d_1}\), \(r \in \mathbb {R}^{d_2}\), \(\xi \in \mathbb {R}\), and \(u\in \{0, 1\}\) and the measurement model

We make the following assumptions on the random data.

-

1.

The variable \(u\) is independent of \(\xi \), \(\ell \), and r. The failure probability \(p_{\textrm{fail}}\) satisfies

$$\begin{aligned} p_{\textrm{fail}}:= \mathbb {P}(u\ne 0) < 1/2. \end{aligned}$$ -

2.

We have \(\mathbb {E}\left[ |\xi | \right] < \infty \).

-

3.

There exists constants \(\tilde{\eta }, \tilde{\mu }, \tilde{\textsf{L}} > 0\) such that for all \(M\in \mathbb {R}^{d_1 \times d_2}\) with \(\Vert M\Vert _F = 1\) and \(\textrm{rank}(M) \le 2\), we have

$$\begin{aligned} \tilde{\mu }\le \mathbb {E}\left[ |\langle \ell , M r\rangle | \right] \le \tilde{\eta }\end{aligned}$$ -

4.

There exists constants \(\tilde{\textsf{L}} > 0\) such that for all \(v \in \mathbb {S}^{d_1-1}, w \in \mathbb {S}^{d_2-1}\), we have

$$\begin{aligned} \sqrt{\mathbb {E}\left[ \left( |\langle \ell , v\rangle | \Vert r\Vert + |\langle r, w\rangle |\Vert \ell \Vert \right) ^2\right] } \le \tilde{\textsf{L}}. \end{aligned}$$

Based on the above assumptions, the following theorem develops three models for the robust blind deconvolution problem. We defer the proof to Sect. C.2.

Theorem 4.3

(Blind deconvolution parameters). Fix a real \(\nu > 1\) and define the set:

Consider the population data \(z = (a, u, \xi )\) and the form the optimization problem

Then the optimal solution set is \(\mathcal {X}^*= \{(\alpha \bar{x}, (1/\alpha )\bar{y}) \mid (1/\nu ) \le |\alpha | \le \nu \}\) and f satisfies the sharpness assumption with \(\mathcal {S}=\mathcal {X}^*\) and

Moreover, given a measurable selection \(G((x,y), z) \in \partial f((x,y), z)\), the models

-

(subgradient) \(f^s_{(x,y)}((\hat{x}, \hat{y}), z) = f((x, y), z) + \langle G((x, y), z), (\hat{x}, \hat{y}) - (x, y)\rangle \),

-

(clipped subgradient)

$$\begin{aligned} f^{cl}_{(x, y)}((\hat{x}, \hat{y}), z) = \max \{ f((x, y), z) + \langle G((x, y), z), (\hat{x}, \hat{y}) - (x, y)\rangle , 0\}, \end{aligned}$$ -

(prox-linear)

$$\begin{aligned} f^{pl}_{(x, y)}((\hat{x}, \hat{y}), z)= & {} | \langle \ell , x\rangle \langle r, y\rangle - (\langle \ell , \bar{x}\rangle \langle r, \bar{y}\rangle + u\cdot \xi )\\{} & {} + \langle \ell , x\rangle \langle r, \hat{y} - y\rangle + \langle r, y\rangle \langle \ell , \hat{x} -x\rangle | \end{aligned}$$

satisfy Assumptions – with

With this theorem in hand, we deduce that on the blind deconvolution problem, Algorithm 5 with subgradient, clipped subgradient, and prox-linear models converges linearly to \(\mathcal {X}^*\) with high probability, whenever the method is initialized within constant relative error of the solution set.

Theorem 4.4

Fix constants \(\gamma \in (0,2)\), \(\varepsilon >0\), and \(\delta '\in (0, 1)\). Consider the subgradient, clipped subgradient, and prox-linear oracles developed in Theorem 4.1. Suppose we are given a pair \((x_0,y_0) \in \mathbb {R}^{d_1 + d_2}\) satisfying

Set parameters \(\rho _0, \alpha _0, K,\epsilon _0, M, T\) as in Theorem 3.8. In addition, we define the iterate \((x_T,y_T)= \textrm{RPMBA}((x_0, y_0), \rho _0, \alpha _0, K,\epsilon _0, M, T)\). Then with probability \(1-\delta '\), we have \(\textrm{dist}((x_T,y_T), \mathcal {X}) \le \varepsilon \sqrt{D}\) after

stochastic subgradient, stochastic clipped subgradient, or stochastic prox-linear iterations.

We now examine Theorem 4.4 in the setting where the measurement vectors \(\ell , r\) follow a Gaussian distribution. We note, however, that the results of this section extend far beyond the Gaussian setting to heavy tailed distributions.

Example 4.2

(Gaussian setting). Let us analyze the population setting where \((\ell , r) \sim N(0, I_{(d_1 + d_2) \times (d_1 + d_2)})\). In this case, one can show by direct computation that

Consequently, if \((x_0, y_0) \in \mathbb {R}^{d_1 + d_2}\) has error \( \textrm{dist}((x_0, y_0), \mathcal {X}^*) \le c(1-2p_{\textrm{fail}}) \cdot \sqrt{D}/\nu , \) for some numerical constant \(c>0\), then with probability \(1-\delta \), Algorithm 5 will produce a pair \((x_T, y_T)\) satisfying \(\textrm{dist}((x_T, y_T), \mathcal {X}^*) \le \varepsilon \sqrt{D}\) using only

samples. We note that the spectral initialization of Charisopoulos et al. [8, Theorem 5.4 and Corollary 5.5] can produce such a pair \((x_0, y_0)\) with sample complexity \(O(\nu ^2(d_1 + d_2) (1-2p_{\textrm{fail}})^{-2})\) with high probability with \(\nu \le \sqrt{3}\). Therefore, when taken together, combining this spectral initialization with Algorithm 5 produces a pair \((x_T, y_T)\) satisfying \(\textrm{dist}((x_T, y_T), \mathcal {X}^*) \le \varepsilon \Vert \bar{x}\Vert \) with \(O\left( \frac{d_1 + d_2}{(1-2p_{\textrm{fail}})^2} \log \left( \frac{1}{\varepsilon }\right) \log \left( \log \left( \frac{1}{\varepsilon }\right) /\delta \right) \right) \) samples, which is the best known sample complexity for Gaussian robust blind deconvolution, up to logarithmic factors. We note by leveraging standard concentration results, it is possible to prove similar results for empirical average minimization \(\min _{(x,y)\in \mathcal {X}} \frac{1}{m}\sum _{i=1}^m f((x,y),z_i)\), provided \(z_i\) are i.i.d samples of z and the number of samples satisfies \(m > rsim (d_1 + d_2) (1-2p_{\textrm{fail}})^{-2}\).

5 Numerical Experiments

We now evaluate how Algorithm 2 performs both on the statistical recovery problems of Sect. 4 and on a sparse logistic regression problem. We test the convergence behavior, sensitivity to step size, and convergence to an active manifold. While testing the algorithms, we found that Algorithms 2 and 5 perform similarly, despite Algorithm 5 having superior theoretical guarantees. Thus, we do not evaluate Algorithm 5. The problems of Sect. 4 are both convex composite losses of the form in Example 2.1. For these problems, we therefore implement all four models from Example 2.1, using the closed-form solutions developed in [11, Section 5]. For the sparse logistic regression problem, we implement the stochastic proximal gradient method and measure convergence to the support set of the optimal solution. We provide a reference implementation [9] of the methods in Julia.

5.1 Convergence behavior

In this section, we demonstrate that Algorithm 2 converges nearly linearly on the Gaussian robust phase retrieval and blind deconvolution problems of Sect. 4 for a particular dimension, noise distribution, corruption frequency, and initialization quality. In phase retrieval, we set \(d = 100\) and in blind deconvolution, we set \(d_1 = d_2 = d. \) The measurements are corrupted independently with probability \(p_{\textrm{fail}}\): for phase retrieval, the corruption obeys \(\xi = \left| g \right| , \; g \sim N(0, 100)\), while for blind deconvolution, it obeys \(\xi \sim N(0, 100)\). The algorithms are all randomly initialized at a fixed distance \(R_0 > 0\) from the ground truth. The ground truth is normalized in all cases. We use Examples 4.1 and 4.2 to estimate \(\textsf{L}\), \(\eta \), and \(\mu \), and we set \(\gamma = 1\), \(R_0 = 0.25\), \(\delta _2 = \frac{1}{\sqrt{10}}\), and target accuracy \(\varepsilon = 10^{-5}\) to obtain T, K and \(\alpha _0\) parameters as in Theorem 3.4

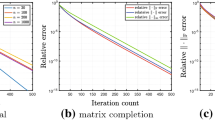

Figures 2 and 3 depict the convergence behavior of Algorithm 2 on robust phase retrieval and blind deconvolution problems in finite sample and streaming settings, respectively. In these plots, solid lines with markers show the mean behavior over 10 runs, while the transparent overlays show one sample standard deviation above and below the mean. In the finite-sample instances, we use \(m = 8 \cdot d\) measurements and corrupt a fixed fraction \(p_{\textrm{fail}}\) with large magnitude sparse noise; see Fig. 2. In the streaming instances, we draw a new i.i.d. sample at each iteration and corrupt it independently with probability \(p_{\textrm{fail}}\); see Fig. 3. In both figures, we plot in red the rate guaranteed by Theorem 3.4 and observe that the algorithms behave consistently with these guarantees. In presence of noise, the algorithms all converge nearly linearly at the rate predicted by Theorem 3.4, while in the noiseless case, all except the subgradient method converge to an exact solution (modulo numerical accuracy) within far fewer iterations.

5.1.1 Experiments on large-scale problems

We next demonstrate the performance of Algorithm 2 using the subgradient model on large-scale synthetic instances of phase retrieval and blind deconvolution in the finite sample setting with \(d = 512 \times 512\). At each step, we sample a random subset of measurements of size \(S \in \{32, \sqrt{d}, d\}\). The measurement matrices are sampled from the randomized Hadamard ensemble which consists of k vertically stacked \(d \times d\) Hadamard matrices composed with random binary masks:

and \(H_d\) is the \(d \times d\) Walsh-Hadamard matrix. We fix \(k = 8\), \(p_{\textrm{fail}}= 0\), \(\gamma = 1\) and \(\delta _2 = 1/3\) and initialize our algorithm randomly at distance \(R_0 = 0.25\) from the ground truth. We estimate \(\textsf{L}\), \(\eta \) and \(\mu \) using Examples 4.1 and 4.2, adjusted to take into account the batch size S.

We plot the distance from the solution as a function of the number of passes over the dataset in Fig. 4. The plots verify that Algorithm 2 converges nearly linearly to a solution at a dimension-independent rate. Moreover, they indicate that using a larger batch size S does not lead to a reduction over the number of passes required to reach a fixed accuracy.

5.2 Sensitivity to step size

We next explore how Algorithm 2 performs when \(\alpha _0\) is misspecified. Throughout, we scale \(\alpha _0\) by \(\lambda := 2^p\) for integers p between \(-10\) and 10. We run 25 trials of the algorithm and for each model and scalar \(\lambda \), we report two different metrics:

-

We report the sample mean and standard deviation of the number of “inner” loop iterations, or samples, needed to reach accuracy \(\varepsilon = 10^{-5}\). We use the parameters of Sect. 5.1 to cap the number of total iterations by

as Theorem 3.4 prescribes. This number is depicted as a dotted line in the figure.

-

We report the sample mean and standard deviation of the distance of the final iterate to the solution set.

Figures 5 and 6 show the results for phase retrieval and blind deconvolution problems with \(d = 100\) and \(p_{\textrm{fail}}\in \{0, 0.2\}\). In these plots, solid lines with markers show the mean behavior over 25 runs, while the transparent overlays show one sample standard deviation above and below the mean. The plots show that Algorithm 2 continues to perform as predicted by Theorem 3.4 even if \(\alpha _0\) is misspecified by a few orders of magnitude.

The prox-linear, proximal point, and clipped models perform similarly in all plots. As reported in [4], the prox-linear and clipped methods produce the same iterates. The iterates produced by the stochastic proximal point method and stochastic prox-linear are not identical, but they are practically indistinguishable. This is due to two factors: the proximal and prox-linear models agree up to an error that increases quadratically as we move from the basepoint, and the proximal subproblems force iterates to remain near the basepoint. Running the proximal point method for a much larger stepsize produces different iterates than the prox-linear method, though then the method fails to converge within the specified level of accuracy.

5.3 Activity identification

In this section, we demonstrate that Algorithm 2 nearly linearly converges to the active set of nonzero components in a sparse logistic regression problem. We model our experiment on [37, Section 6.2]. There, the authors find a sparse classifier for distinguishing between digits 6 and 7 from the MNIST dataset of handwritten digits [36]. In this problem, we are given a set of \(N = 12183\) samples \((x_i, y_i) \in \mathbb {R}^d \times \{-1, 1\}\), representing \(28\times 28\) dimensional images of digits and their labels, and we seek a target vector \(z:= (w, b) \in \mathbb {R}^{d+1}\), so that w is sparse and \(\textrm{sign}(\langle w, x_i \rangle + b) = \textrm{sign}(y_i)\) for most i. To find z, we minimize the function

where each component is a logistic loss:

We let \((\tilde{w}, \tilde{b})\) denote the minimizer of the logistic loss, which we find using the standard proximal gradient algorithm. Given \(\tilde{w}\), we denote its support set by \(S:= \{ i \in [d]: \left| \tilde{w}_i \right| > \epsilon \}\), where \(\epsilon \) accounts for the numerical precision of the system.

Our goal is to converge nearly linearly to the set

To that end, we will apply Algorithm 2 with the stochastic proximal gradient model:

Algorithm 2 equipped with this model results in the standard stochastic proximal gradient method. To apply the algorithm, we set parameters using Theorem 3.4. We set \(\gamma = 1\), \(\delta _2 = 1/\sqrt{10}\), \(\varepsilon = 10^{-5}\). We initialize \(w_0 = 0\), \(b_0 = 0\) and set \(R_0 = \Vert (\tilde{w}, \tilde{b}) - (w_0, b_0)\Vert \). We estimate \(\textsf{L}\) by the formula \( \textsf{L}:= \sqrt{\frac{1}{m} \sum _{i=1}^m \left\| x_i \right\| _2^2}. \) Finally, we estimate \(\mu \) by grid search over \((\tau , p)\) using the formula: \(\mu = \tau \cdot \sqrt{d} \cdot 2^{-p}\).

5.3.1 Evaluation

We compare the performance of Algorithm 2 with the Regularized Dual Averaging method (RDA) [46, 65], which was shown to have favorable manifold identification properties in [37]. In our setting, the latter method solves the following subproblem:

where \(\bar{g}_t\) in (5.2) is the running average over all stochastic gradients \(g_k:= \nabla f(z; i_k)\) sampled up to step t, and \(\gamma \) is a tunable parameter which is again determined by a simple grid search. Following the discussion in [37], RDA is initialized at \((w_0, b_0) = 0\); therefore we choose the same initial point \((w_0, b_0)\) for both methods. In addition to RDA, we also performed a comparison with the standard stochastic proximal gradient method, equipped with a range of polynomially decaying step sizes of the form

We found that the stochastic proximal gradient method performed comparably with RDA in all metrics, and therefore chose to omit it below.

The convergence plots in Fig. 7 confirm that the iterates of Algorithm 2 converge to the set \(\mathcal {S}\) at a nearly linear rate, while the function values converge at a sublinear rate. In contrast, the iterates generated by RDA converge sublinearly in both metrics.

Notes

Here, the term “nearly linear” signifies that the oracle / sample complexity of the method is \(O(\log ^3(1 / \varepsilon ))\) – i.e., it depends polylogarithmically on \(1 / \varepsilon \), in contrast to standard “linear” convergence where in the oracle complexity scales as \(O(\log (1 / \varepsilon ))\).

In particular, in the tube \(\mathcal {T}_{2}\) described in Assumption .

One could choose the product of the Lipschitz constants of h and \(\nabla c\).

Note that Algorithm 1 can be implemented using O(d) memory by maintaining a running average (in the convex case) or by terminating the iteration after \(K^{*} \sim \textrm{Unif}\left\{ 0, \dots , K\right\} \) steps (in the nonconvex case).

References

Abadi, M., Agarwal, A., Barham, P., Brevdo, E., Chen, Z., Citro, C., Corrado, G.S., Davis, A., Dean, J., Devin, M. et al.: Tensorflow: large-scale machine learning on heterogeneous systems, 2015. Software available from tensorflow.org 1(2) (2015)

Ahmed, A., Recht, B., Romberg, J.: Blind deconvolution using convex programming. IEEE Trans. Inf. Theory 60(3), 1711–1732 (2014)

Asi, H., Duchi, J.C.: Stochastic (approximate) proximal point methods: Convergence, optimality, and adaptivity. arXiv preprint arXiv:1810.05633 (2018)

Asi, H., Duchi, J.C.: The importance of better models in stochastic optimization. arXiv preprint arXiv:1903.08619 (2019)

Aybat, N.S., Fallah, A., Gurbuzbalaban, M., Ozdaglar, A.: A universally optimal multistage accelerated stochastic gradient method. arXiv preprint arXiv:1901.08022 (2019)

Candès, E.J., Strohmer, T., Voroninski, V.: PhaseLift: exact and stable signal recovery from magnitude measurements via convex programming. Comm. Pure Appl. Math. 66(8), 1241–1274 (2013)

Charisopoulos, V., Chen, Y., Davis, D., Díaz, M., Ding, L., Drusvyatskiy, D.: Low-rank matrix recovery with composite optimization: good conditioning and rapid convergence. arXiv preprint arXiv:1904.10020 (2019)

Charisopoulos, V., Davis, D., Díaz, M., Drusvyatskiy, D.: Composite optimization for robust blind deconvolution. arXiv:1901.01624 (2019)

COR-OPT. Geometric step decay: reference implementation. https://github.com/COR-OPT/GeomStepDecay (2019)

Davis, D.: SMART: The stochastic monotone aggregated root-finding algorithm. arXiv preprint arXiv:1601.00698 (2016)

Davis, D., Drusvyatskiy, D.: Stochastic model-based minimization of weakly convex functions. SIAM J. Optim. 29(1), 207–239 (2019)

Davis, D., Drusvyatskiy, D., MacPhee, K.J., Paquette, C.: Subgradient methods for sharp weakly convex functions. J. Optim. Theory Appl. 179(3), 962–982 (2018)

Davis, D., Drusvyatskiy, D., Paquette, C.: The nonsmooth landscape of phase retrieval. To appear in IMA J. Numer. Anal., arXiv:1711.03247 (2017)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. In: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems, vol. 27, pp. 1646–1654. Curran Associates Inc, New York (2014)

Defazio, A., Domke, J., Caetano, T.S.: Finito: a faster, permutable incremental gradient method for big data problems. In: Xing, E.P., Jebara, T. (eds). Proceedings of the 31st International Conference on Machine Learning, volume 32 of Proceedings of Machine Learning Research, pp. 1125–1133. Bejing, China (2014). PMLR

Drusvyatskiy, D.: The proximal point method revisited. SIAG/OPT Views and News, 26(1) (2018)

Drusvyatskiy, D., Lewis, A.S.: Optimality, identifiablity, and sensitivity. arXiv preprint arXiv:1207.6628 (2012)

Duchi, J.C., Ruan, F.: Solving (most) of a set of quadratic equalities: composite optimization for robust phase retrieval. IMA J. Inf. Inference (2018). https://doi.org/10.1093/imaiai/iay015

Duchi, J.C., Ruan, F.: Stochastic methods for composite and weakly convex optimization problems. SIAM J. Optim. 28(4), 3229–3259 (2018)

Eldar, Y.C., Mendelson, S.: Phase retrieval: stability and recovery guarantees. Appl. Comput. Harmon. Anal. 36(3), 473–494 (2014)

Eremin, I.I.: The relaxation method of solving systems of inequalities with convex functions on the left-hand side. Dokl. Akad. Nauk SSSR 160, 994–996 (1965)

Fercoq, O., Qu, Z.: Restarting accelerated gradient methods with a rough strong convexity estimate. arXiv preprint arXiv:1609.07358 (2016)

Fercoq, O., Qu, Z.: Adaptive restart of accelerated gradient methods under local quadratic growth condition. arXiv preprint arXiv:1709.02300 (2017)

Freund, R.M., Haihao, Lu.: New computational guarantees for solving convex optimization problems with first order methods, via a function growth condition measure. Math. Program. 170(2), 445–477 (2018)

Ge, R., Kakade, S.M., Kidambi, R., Netrapalli, P.: The step decay schedule: a near optimal, geometrically decaying learning rate procedure. arXiv preprint arXiv:1904.12838 (2019)

Ghadimi, S., Lan, G.: Optimal stochastic approximation algorithms for strongly convex stochastic composite optimization, ii: shrinking procedures and optimal algorithms. SIAM J. Optim. 23(4), 2061–2089 (2013)

Goffin, J.L.: On convergence rates of subgradient optimization methods. Math. Program. 13(3), 329–347 (1977)

Goldstein, T., Studer, C.: Phasemax: convex phase retrieval via basis pursuit. IEEE Trans. Inf. Theory 64(4), 2675–2689 (2018)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hsu, D., Sabato, S.: Loss minimization and parameter estimation with heavy tails. J. Mach. Learn. Res. 17(1), 543–582 (2016)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Proceedings of the 26th International Conference on Neural Information Processing Systems, NIPS’13, pp. 315–323, Curran Associates Inc, USA (2013)

Johnstone, P.R., Moulin, P.R.: Faster subgradient methods for functions with hölderian growth. Math. Program. 180, 417–450 (2019)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

Kulunchakov, A., Mairal, J.: A generic acceleration framework for stochastic composite optimization. arXiv preprint arXiv:1906.01164 (2019)

Kushner, H.J., Yin, G.G.: Stochastic Approximation and Recursive Algorithms and Applications, volume 35 of Applications of Mathematics (New York). Stochastic Modelling and Applied Probability, 2nd edn. Springer-Verlag, New York (2003)

Lecun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 Nov (1998)

Lee, S., Wright, S.J.: Manifold identification in dual averaging for regularized stochastic online learning. J. Mach. Learn. Res. 13(JUN), 1705–1744 (2012)

Lewis, A.S.: Active sets, nonsmoothness, and sensitivity. SIAM J. Optim. 13(3), 702–725 (2002)

Li, X., Ling, S., Strohmer, T., Wei, K.: Rapid, robust, and reliable blind deconvolution via nonconvex optimization. Appl. Comput. Harmon. Anal. 47(3), 893–934 (2018)

Li, Y., Ma, C., Chen, Y., Chi, Y.: Nonconvex matrix factorization from rank-one measurements. arXiv:1802.06286, 2018

Mairal, J.: Incremental majorization-minimization optimization with application to large-scale machine learning. SIAM J. Optim. 25(2), 829–855 (2015)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation I: Basic Theory. Grundlehren der mathematischen Wissenschaften, vol. 330. Springer, Berlin (2006)

Nedić, A., Bertsekas, D.: Convergence rate of incremental subgradient algorithms. In: Stochastic Optimization: Algorithms and Applications, pp. 223–264. Springer (2001)

Nemirovskii, A.S., Nesterov, Yu.E.: Optimal methods of smooth convex minimization. USSR Comput. Math. Math. Phys. 25(2), 21–30 (1985)

Nemirovsky, A.S., Yudin, D.B.: Problem Complexity and Method Efficiency in Optimization. A Wiley-Interscience Publication. John Wiley & Sons Inc, New York (1983). Translated from the Russian and with a preface by E. R. Dawson, Wiley-Interscience Series in Discrete Mathematics

Nesterov, Y.: Primal-dual subgradient methods for convex problems. Math. Program. 120(1), 221–259 (2009)

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(1/k^{2})\). Dokl. Akad. Nauk SSSR 269(3), 543–547 (1983)

O’Donoghue, B., Candès, E.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 15(3), 715–732 (2015)

Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., Lerer, A.: Automatic differentiation in pytorch. In: NIPS-W (2017)

Penot, J.-P.: Calculus Without Derivatives. Graduate Texts in Mathematics, vol. 266. Springer, New York (2013)

Poliquin, R.A., Rockafellar, R.T.: Prox-regular functions in variational analysis. Trans. Am. Math. Soc. 348, 1805–1838 (1996)

Poljak, B.T.: Minimization of nonsmooth functionals. Ž. Vyčisl. Mat. i Mat. Fiz. 9, 509–521 (1969)

Polyak, B.T., Juditsky, A.B.: Acceleration of stochastic approximation by averaging. SIAM J. Control Optim. 30(4), 838–855 (1992)

Renegar, J., Grimmer, B.: A simple nearly-optimal restart scheme for speeding-up first order methods. arXiv preprint arXiv:1803.00151 (2018)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Rockafellar, R.T.: Favorable classes of Lipschitz-continuous functions in subgradient optimization. In: Progress in Nondifferentiable Optimization, Volume 8 of IIASA Collaborative Proc. Ser. CP-82, pp. 125–143. Int. Inst. Appl. Sys. Anal., Laxenburg (1982)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Grundlehren der mathematischen Wissenschaften, vol. 317. Springer, Berlin (1998)

Roulet, V., d’Aspremont, A.: Sharpness, restart and acceleration. In: Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 30, pp. 1119–1129. Curran Associates Inc, New York (2017)

Schmidt, M., Le Roux, N., Bach, F.: Minimizing finite sums with the stochastic average gradient. Math. Program. 162(1–2), 83–112 (2017)

Shalev-Shwartz, S., Zhang, T.: Proximal stochastic dual coordinate ascent. arXiv:1211.2717 (2012)

Shechtman, Y., Eldar, Y.C., Cohen, O., Chapman, H.N., Miao, J., Segev, M.: Phase retrieval with application to optical imaging: a contemporary overview. IEEE Signal Process. Mag. 32(3), 87–109 (2015)

Shor, N.Z.: Minimization Methods for Non-differentiable Functions, vol. 3. Springer Science & Business Media, New York (2012)

Tan, Y.S., Vershynin, R.: Phase retrieval via randomized Kaczmarz: Theoretical guarantees. Inf. Inference J. IMA 8(1), 97–123 (2018)

Wright, S.J.: Identifiable surfaces in constrained optimization. SIAM J. Control Optim. 31(4), 1063–1079 (1993)

Xiao, L.: Dual averaging methods for regularized stochastic learning and online optimization. J. Mach. Learn. Res. 11(OCT), 2543–2596 (2010)

Xu, Y., Lin, Q., Yang, T.: Accelerated stochastic subgradient methods under local error bound condition. arXiv preprint arXiv:1607.01027 (2016)

Yang, T., Lin, Q.: RSG: beating subgradient method without smoothness and strong convexity. J. Mach. Learn. Res. 19(1), 236–268 (2018)

Yang, T., Yan, Y., Yuan, Z., Jin, R.: Why does stagewise training accelerate convergence of testing error over SGD? arXiv preprint arXiv:1812.03934 (2018)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Research of Drusvyatskiy was supported by the NSF DMS 1651851 and CCF 1740551 awards. Research of Damek Davis supported by an Alfred P. Sloan research fellowship and NSF-DMS award 2047637.

Appendices

A Proofs from Sect. 3.2

We will need the following elementary lemma.

Lemma A.1

Suppose Assumptions and hold. Fix an arbitrary \(\gamma \in (0, 2)\) and consider two points \(y \in \mathcal {T}_\gamma \) and \(x \in \mathbb {R}^d\). Then the estimate holds:

Proof

Let \(v \in \partial f_y(y, z)\) be the minimal norm subgradient. Then, by definition \( f_y(y, z) \le f_y(x, z) + \langle v, y-x\rangle \). Applying (2.5) and Cauchy-Schwarz completes the proof. \(\square \)

1.1 A.1 Proof of Lemma 3.3

Throughout the proof, we suppose that Assumptions – hold. We let \(\mathcal {F}_k\) denote the \(\sigma \)-algebra generated by the history of the algorithm up to iteration \(k\) and define the shorthand for conditional expectation \(\mathbb {E}_k\left[ \cdot \right] := \mathbb {E}\left[ \cdot \mid \mathcal {F}_k\right] \). Define the stopping time

and the sequence of events

Define also for all indices k, the quantity:

Recall that our goal is to lower bound \(\mathbb {P}(D_{K^*}\le \varepsilon )\). To this end, we successively compute

where (A.1) follows from Markov’s inequality. The result will now follow immediately from the following two propositions, which establish an upper bound on \(\mathbb {E}\left[ D_{K^*} 1_{A_K} \right] \) and a lower bound on \(\mathbb {P}(A_K)\), respectively. We note that the first proposition is a quick modification of [11, Lemma 4.2]. We include a proof for completeness.

Proposition A.2

The following bounds hold:

Proof

The loss function \(y\mapsto f_{y_k}(y, z_k)+\frac{1}{2\alpha }\Vert y-y_k\Vert ^2\) is strongly convex on \(\mathcal {X}\) with constant \(1/\alpha \) and \(y_{k+1}\) is its minimizer. Hence for any \(y\in \mathcal {X}\), the inequality holds:

Rearranging and taking expectations, we successively deduce that provided \(y_k\in \mathcal {T}_\gamma \), we have

where (A.4) follows from Lemma A.1 while inequality (A.5) follows from Cauchy-Schwarz and Assumption .

Define \(c:=\sqrt{\mathbb {E}_k[\Vert y_{k+1}-y_k\Vert ^2]}\) and notice \(c\ge \mathbb {E}_k\Vert y_k- y_{k+1}\Vert \). Then, rearranging (A.5), we immediately deduce that if \(y_k \in \mathcal {T}_\gamma \), we have

where the third inequality follows from assumption , and the fourth inequality follows by maximizing the right-hand-side in \(c \in \mathbb {R}\). Then, dividing through by \(\frac{1}{2\alpha }\) and multiplying by \(1_{A_k}\), we arrive at

where the first inequality follows since \(A_{k+1} \subseteq A_{k}\), the second inequality follows since \(A_{k}\) is \(\mathcal {F}_k\) measurable, and the fourth inequality follows since on the event \(A_k\), we have \(y_k \in \mathcal {T}_{\gamma }\). This completes the proof of (A.2). Next, applying the law of total expectation, we obtain

Iterating the inequality and rearranging, we deduce

Dividing through by \((K+1) (2-\gamma )\alpha \mu \), we recognize the left-hand side as \(\mathbb {E}\left[ D_{K^*}1_{A_{K^*}}\right] \), and therefore

Finally, note \(\mathbb {E}\left[ D_{K^*}1_{A_{K}}\right] \le \mathbb {E}\left[ D_{K^*}1_{A_{K^*}}\right] \) since \(A_K\subseteq A_{K^*}\). This completes the proof of the proposition. \(\square \)

Now we will estimate the probability of the event \(A_{K}\).

Proposition A.3

The estimate holds:

Proof

Observe the decomposition

and therefore

We aim to upper bound \(\mathbb {P}(B \text { and } \tau \le K)\). To this end, let \(Y_k= D^2_{k\wedge \tau }1_{B}\) denote the stopped process. We successively compute

where the last estimate uses Markov’s inequality. Next, observe

We next upper bound \(\mathbb {E}[Y_K]\). To this end, observe

where (A.9) follows from (A.2). We now use the law of total expectation to iterate the above inequality:

Combining the estimates (A.6), (A.7), (A.8), and (A.10) completes the proof. \(\square \)

B Proofs from Sect. 3.3

1.1 B.1 The ensemble method

Lemma B.1

(Ensemble method). Let \(\{x_i\}_{i=1}^m\) be i.i.d. realizations of a random vector \(x\in \mathbb {R}^d\). Suppose that the estimate holds:

where \(p\in (\tfrac{1}{2},1)\) and \(\varepsilon >0\) are some real numbers and \(\bar{x}\in \mathbb {R}^d\) is a vector. Then with probability at least \(1-\exp (-\frac{1}{2p}m(p-\frac{1}{2})^2)\), there exists an index \(i^*\) satisfying

and for any index \(i^*\) satisfying (B.1) it must be that \(\Vert x_{i^*}-\bar{x}\Vert \le 3\varepsilon \).

Proof

By Chernoff’s bound, with probability at least \(1-\exp (-\frac{1}{2p}m(p-\frac{1}{2})^2)\), the estimate holds:

In particular, there exists an index \(i^*\) satisfying (B.1) Fix such an index \(i^*\). Clearly, there must exist another index j satisfying \(x_j\in B_{\varepsilon }(\bar{x})\cap B_{2\varepsilon }(x_{i^*})\). We therefore conlude \(\Vert x_{i^*}-\bar{x}\Vert \le \Vert x_{i^*}-x_j\Vert +\Vert x_j-\bar{x}\Vert \le 3\varepsilon \). This completes the proof. \(\square \)

1.2 B.2 Proof of Lemma 3.5

Fix \(\gamma \in (0, 2)\) and a point \(y \in \mathcal {T}_\gamma \). Recall that f is \(\eta \)-weakly convex on an open convex set containing \(\mathcal {X}\) [11, Lemma 4.1]. Consequently, the proximal subproblem (3.7) is \((\rho -\eta )\)-strongly convex. Before proving the remaining portion of the lemma, we first show that subgradients of the extended valued function \(f + \delta _\mathcal {X}\) are bounded below. This was essentially already observed in [12, Lemma 2.1]. We provide a quick proof for completeness.

Lemma B.2

The estimate:

Proof

Fix any \(x \in \mathcal {T}_\gamma \backslash \mathcal {X}^*\) and \(v \in \partial f(x) + N_{\mathcal {X}}(x)\), and let \(\bar{x} \in \textrm{proj}_{\mathcal {X}^*}(x)\). We successively compute

where the first inequality follows from sharpness , and the second from weak convexity of f and convexity of \(\mathcal {X}\). Rearranging and using the Cauchy-Schwarz inequality, we deduce

Dividing both sides by \(\textrm{dist}(x, \mathcal {X}^*)\) yields the result. \(\square \)

Now, let \(\bar{y}\) be any minimizer of the proximal problem (3.7) and suppose \( \rho < \left( \tfrac{2-\gamma }{2\gamma }\right) \eta \). Clearly, to establish Lemma 3.5, it suffices to argue the inclusion \(\bar{y}\in \mathcal {X}^{*}\). To this end, choose any \(\hat{y} \in \textrm{proj}_{\mathcal {X}^*}(y)\). Observe

where the first inequality follows from the definition of \(\bar{y}\), the second uses the definition of \(\hat{y}\), and the third follows from the assumption \(y\in \mathcal {T}_{\gamma }\). Thus, we deduce \(\Vert \bar{y}-y \Vert \le \frac{\gamma \mu }{\eta }\). Consequently, using sharpness we conclude

where the last inequality follows from the assumption \(\rho < \left( \tfrac{2-\gamma }{2\gamma }\right) \eta \). Consequently, \(\bar{y}\) lies in the tube \(\mathcal {T}_\gamma \). Now, define \(v:= \rho (y - \bar{y}) \in \partial f(\bar{y}) + N_{\mathcal {X}}(\bar{y})\). Appealing to Lemma B.2, we deduce in the case \(\bar{y} \notin \mathcal {X}^*\), the contradiction \((1-\tfrac{\gamma }{2}) \mu / \rho \le \Vert v\Vert /\rho = \Vert y - \bar{y}\Vert \le \frac{\gamma \mu }{\eta }\). Therefore, \(\bar{y}\) lies in \( \mathcal {X}^*\), as we had to show.

1.3 B.3 Proof of Lemma 3.6