Abstract

We present new results for the Frank–Wolfe method (also known as the conditional gradient method). We derive computational guarantees for arbitrary step-size sequences, which are then applied to various step-size rules, including simple averaging and constant step-sizes. We also develop step-size rules and computational guarantees that depend naturally on the warm-start quality of the initial (and subsequent) iterates. Our results include computational guarantees for both duality/bound gaps and the so-called FW gaps. Lastly, we present complexity bounds in the presence of approximate computation of gradients and/or linear optimization subproblem solutions.

1 Introduction

The use and analysis of first-order methods in convex optimization have gained considerable attention in recent years. For many applications—such as LASSO regression, boosting/classification, matrix completion, and other machine learning problems—first-order methods are appealing for a number of reasons. First, these problems are often very high-dimensional and thus, without any special structural knowledge, interior-point methods or other polynomial-time methods are unappealing. Second, optimization models in many settings are dependent on data that can be noisy or otherwise limited, and it is therefore not necessary or even sensible to require very high-accuracy solutions. Thus the weaker rates of convergence of first-order methods are typically satisfactory for such applications. Finally, first-order methods are appealing in many applications due to the lower computational burden per iteration, and the structural implications thereof. Indeed, most first-order methods require, at each iteration, the computation of an exact, approximate, or stochastic (sub)gradient and the computation of a solution to a particular “simple” subproblem. These computations typically scale well with the dimension of the problem and are often amenable to parallelization, distributed architectures, efficient management of sparse data-structures, and the like.

Our interest herein is the Frank–Wolfe method, which is also referred to as the conditional gradient method. The original Frank–Wolfe method, developed for smooth convex optimization on a polytope, dates back to Frank and Wolfe [9], and was later generalized to smooth convex objective functions over bounded convex feasible regions; see for example Demyanov and Rubinov [3], Dunn and Harshbarger [8], Dunn [6, 7], also Levitin and Polyak [19] and Polyak [24]. More recently there has been renewed interest in the Frank–Wolfe method due to some of its properties that we will shortly discuss, see for example Clarkson [1], Hazan [13], Jaggi [15], Giesen et al. [11], and most recently Harchaoui et al. [12], Lan [18] and Temlyakov [25]. The Frank–Wolfe method is premised on being able to easily solve (at each iteration) linear optimization problems over the feasible region of interest. This is in contrast to other first-order methods, such as the accelerated methods of Nesterov [21, 22], which are premised on being able to easily solve (at each iteration) certain projection problems defined by a strongly convex prox function. In many applications, solving a linear optimization subproblem is much simpler than solving the relevant projection subproblem. Moreover, in many applications the solutions to the linear optimization subproblems are often highly structured and exhibit particular sparsity and/or low-rank properties, which the Frank–Wolfe method is able to take advantage of as follows. The Frank–Wolfe method solves one subproblem at each iteration and produces a sequence of feasible solutions that are each a convex combination of all previous subproblem solutions, for which one can derive an \(O(\frac{1}{k})\) rate of convergence for appropriately chosen step-sizes.
Due to the structure of the subproblem solutions and the fact that iterates are convex combinations of subproblem solutions, the feasible solutions returned by the Frank–Wolfe method are typically highly structured as well. For example, when the feasible region is the unit simplex \(\Delta _n := \{\lambda \in \mathbb {R}^n : e^T\lambda = 1, \lambda \ge 0\}\) and the linear optimization oracle always returns an extreme point, then the Frank–Wolfe method has the following sparsity property: the solution that the method produces at iteration \(k\) has at most \(k\) non-zero entries. (This observation generalizes to the matrix optimization setting: if the feasible region is a ball induced by the nuclear norm, then at iteration \(k\) the rank of the matrix produced by the method is at most \(k\)). In many applications, such structural properties are highly desirable, and in such cases the Frank–Wolfe method may be more attractive than the faster accelerated methods, even though the Frank–Wolfe method has a slower rate of convergence.

The first set of contributions in this paper concerns computational guarantees for arbitrary step-size sequences. In Sect. 2, we present a new complexity analysis of the Frank–Wolfe method wherein we derive an exact functional dependence of the complexity bound at iteration \(k\) on the step-size sequence \(\{\bar{\alpha }_k\}\). We derive bounds on the deviation from the optimal objective function value (and on the duality gap in the presence of minmax structure), and on the so-called FW gaps, which may be interpreted as specially structured duality gaps. In Sect. 3, we use the technical theorems developed in Sect. 2 to derive computational guarantees for a variety of simple step-size rules including the well-studied step-size rule \(\bar{\alpha }_k := \frac{2}{k+2}\), simple averaging, and constant step-sizes. Our analysis retains the well-known optimal \(O(\frac{1}{k})\) rate (optimal for linear optimization oracle-based methods [18], following also from [15]) when the step-size is either given by the rule \(\bar{\alpha }_k := \frac{2}{k+2}\) or is determined by a line-search. We also derive an \(O(\frac{\ln (k)}{k})\) rate both when the step-size is given by simple averaging and when the step-size is a suitably chosen constant.

The second set of contributions in this paper concerns “warm-start” step-size rules and associated computational guarantees that reflect the quality of the given initial iterate. The \(O(\frac{1}{k})\) computational guarantees associated with the step-size sequence \(\bar{\alpha }_k := \frac{2}{k+2}\) are independent of the quality of the initial iterate. This is good if the objective function value of the initial iterate is very far from the optimal value, as the computational guarantee is then unaffected by the poor quality of the initial iterate. But if the objective function value of the initial iterate is moderately close to the optimal value, one would want the Frank–Wolfe method, with an appropriate step-size sequence, to have computational guarantees that reflect the closeness to optimality of the initial objective function value. In Sect. 4, we introduce a modification of the \(\bar{\alpha }_k := \frac{2}{k+2}\) step-size rule that incorporates the quality of the initial iterate. Our new step-size rule maintains the \(O(\frac{1}{k})\) complexity bound but now the bound is enhanced by the quality of the initial iterate. We also introduce a dynamic version of this warm start step-size rule, which dynamically incorporates all new bound information at each iteration. For the dynamic step-size rule, we also derive an \(O(\frac{1}{k})\) complexity bound that depends naturally on all of the bound information obtained throughout the course of the algorithm.

The third set of contributions concerns computational guarantees in the presence of approximate computation of gradients and linear optimization subproblem solutions. In Sect. 5, we first consider a variation of the Frank–Wolfe method where the linear optimization subproblem at iteration \(k\) is solved approximately to an (additive) absolute accuracy of \(\delta _k\). We show that, independent of the choice of step-size sequence \(\{\bar{\alpha }_k\}\), the Frank–Wolfe method does not suffer from an accumulation of errors in the presence of approximate subproblem solutions. We extend the “technical” complexity theorems of Sect. 2; these extensions imply, for instance, that when an optimal step-size such as \(\bar{\alpha }_k := \frac{2}{k+2}\) is used and the \(\{\delta _k\}\) accuracy sequence is a constant \(\delta \), then a solution with accuracy \(O(\frac{1}{k} + \delta )\) can be achieved in \(k\) iterations. We next examine variations of the Frank–Wolfe method where exact gradient computations are replaced with inexact gradient computations, under two different models of inexact gradient computations. We show that all of the complexity results under the previously examined approximate subproblem solution case (including, for instance, the non-accumulation of errors) directly apply to the case where exact gradient computations are replaced with the \(\delta \)-oracle approximate gradient model introduced by d’Aspremont [2]. We also examine replacing exact gradient computations with the \((\delta , L)\)-oracle model introduced by Devolder et al. [4]. In this case the Frank–Wolfe method suffers from an accumulation of errors under essentially any step-size sequence \(\{\bar{\alpha }_k\}\). These results provide some insight into the inherent tradeoffs faced in choosing among several first-order methods.

1.1 Notation

Let \(E\) be a finite-dimensional real vector space with dual vector space \(E^*\). For a given \(s \in E^*\) and a given \(\lambda \in E\), let \(s^T\lambda \) denote the evaluation of the linear functional \(s\) at \(\lambda \). For a norm \(\Vert \cdot \Vert \) on \(E\), let \(B(c,r) = \{\lambda \in E : \Vert \lambda - c\Vert \le r\}\). The dual norm \(\Vert \cdot \Vert _*\) on the space \(E^*\) is defined by \(\Vert s\Vert _*:= \max \nolimits _{\lambda \in B(0,1)} \{s^T\lambda \} \) for a given \(s \in E^*\). The notation “\(\tilde{v} \leftarrow \arg \max \nolimits _{v \in S} \{f(v)\}\)” denotes assigning \(\tilde{v}\) to be any optimal solution of the problem \(\max \nolimits _{v \in S} \{f(v)\}\).

2 The Frank–Wolfe method

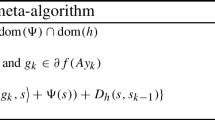

We recall the Frank–Wolfe method for convex optimization, see Frank and Wolfe [9], also Demyanov and Rubinov [3], Levitin and Polyak [19], and Polyak [24], stated here for maximization problems:

\[ \max_{\lambda} \ h(\lambda) \quad \text{s.t.} \quad \lambda \in Q, \tag{1} \]

where \(Q \subset E\) is convex and compact, and \(h(\cdot ) : Q \rightarrow \mathbb {R}\) is concave and differentiable on \(Q\). Let \(h^*\) denote the optimal objective function value of (1). The basic Frank–Wolfe method is presented in Method 1, where the main computational requirement at each iteration is to solve a linear optimization problem over \(Q\) in step (2) of the method. The step-size \(\bar{\alpha }_k\) in step (4) could be chosen by inexact or exact line-search, or by a pre-determined or dynamically determined step-size sequence \(\{\bar{\alpha }_k\}\). Also note that the version of the Frank–Wolfe method in Method 1 does not allow a (full) step-size \(\bar{\alpha }_k = 1\), the reasons for which will become apparent below.
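Method 1 can be sketched in a few lines of Python for the special case where \(Q\) is the unit simplex \(\Delta_n\). In that case the linear optimization subproblem in step (2) is solved by returning a vertex \(e_j\) with the largest gradient entry. This is a minimal sketch under stated assumptions, not the authors' implementation. The names `frank_wolfe_simplex`, `h` and `grad_h`, the concave quadratic test objective \(h(\lambda) = -\frac{1}{2}\Vert b - A\lambda\Vert^2\), and the data `A`, `b` are hypothetical illustrations. Step (3) uses only the Wolfe bound, with no optional \(B^o_k\).

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def frank_wolfe_simplex(
    h: Callable[[Vector], float],
    grad_h: Callable[[Vector], Vector],
    lam1: Vector,
    step: Callable[[int], float],
    iters: int,
    B0: float = float("inf"),
) -> Tuple[Vector, List[Tuple[float, float, float, float]]]:
    """Sketch of Method 1 for max h(lam) over the unit simplex (hypothetical helper).

    Returns lam_{iters+1} and, for each k, the tuple (h(lam_k), Bw_k, G_k, B_k).
    """
    lam = list(lam1)
    B = B0
    history = []
    for k in range(1, iters + 1):
        g = grad_h(lam)                               # step (1): gradient at lam_k
        j = max(range(len(g)), key=lambda i: g[i])    # step (2): LMO returns vertex e_j
        G = g[j] - dot(g, lam)                        # FW gap: grad^T (e_j - lam_k)
        hk = h(lam)
        Bw = hk + G                                   # Wolfe bound B^w_k
        B = min(B, Bw)                                # step (3), without B^o_k
        history.append((hk, Bw, G, B))
        a = step(k)                                   # step (4): a must lie in [0, 1)
        lam = [(1.0 - a) * x for x in lam]
        lam[j] += a                                   # lam_{k+1} = lam_k + a (e_j - lam_k)
    return lam, history


# Hypothetical test problem: h(lam) = -0.5 * ||b - A lam||^2, concave on the simplex.
A = [[1.0, 0.0, 0.5, 0.2, 0.8, 0.1],
     [0.0, 1.0, 0.5, 0.9, 0.3, 0.4]]
b = [0.4, 0.7]


def residual(lam: Vector) -> Vector:
    return [bi - dot(row, lam) for row, bi in zip(A, b)]


def h(lam: Vector) -> float:
    r = residual(lam)
    return -0.5 * dot(r, r)


def grad_h(lam: Vector) -> Vector:
    r = residual(lam)  # gradient is A^T (b - A lam)
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(lam))]


e1 = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
lam_final, hist = frank_wolfe_simplex(h, grad_h, e1, lambda k: 2.0 / (k + 2), 50)
lam_short, _ = frank_wolfe_simplex(h, grad_h, e1, lambda k: 2.0 / (k + 2), 2)
```

The method starts from a vertex, and each iteration adds at most one new vertex. After two iterations, `lam_short` therefore has at most three non-zero entries, which is the simplex sparsity property mentioned in the introduction.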

As a consequence of solving the linear optimization problem in step (2) of the method, one conveniently obtains the following upper bound on the optimal value \(h^*\) of (1):

\[ B^w_k := h(\lambda_k) + \nabla h(\lambda_k)^T(\tilde{\lambda}_k - \lambda_k), \tag{2} \]

and, since \(h(\cdot )\) is concave, its linearization at \(\lambda _k\) dominates \(h(\cdot )\) on \(Q\), so \(B^w_k\) is a valid upper bound on \(h^*\). We also study the quantity \(G_k\):

\[ G_k := B^w_k - h(\lambda_k) = \nabla h(\lambda_k)^T(\tilde{\lambda}_k - \lambda_k), \tag{3} \]

which we refer to as the “FW gap” at iteration \(k\) for convenience. Note that \(G_k \ge h^*- h(\lambda _k) \ge 0\). The use of the upper bound \(B^w_k\) dates to the original 1956 paper of Frank and Wolfe [9]. As early as 1970, Demyanov and Rubinov [3] used the FW gap quantities extensively in their convergence proofs of the Frank–Wolfe method, and perhaps this quantity was used even earlier. In certain contexts, \(G_k\) is an important quantity by itself, see for example Hearn [14], Khachiyan [16] and Giesen et al. [11]. Indeed, Hearn [14] studies basic properties of the FW gaps independent of their use in any algorithmic schemes. For results concerning upper bound guarantees on \(G_k\) for specific and general problems see Khachiyan [16], Clarkson [1], Hazan [13], Jaggi [15], Giesen et al. [11], and Harchaoui et al. [12]. Both \(B^w_k\) and \(G_k\) are computed directly from the solution of the linear optimization problem in step (2) and are recorded therein for convenience.

In some of our analysis of the Frank–Wolfe method, the computational guarantees will depend on the quality of upper bounds on \(h^*\). In addition to the Wolfe bound \(B^w_k\), step (3) allows for an “optional other upper bound \(B^o_k\)” that also might be computed at iteration \(k\). Sometimes there is structural knowledge of an upper bound as a consequence of a dual problem associated with (1), as when \(h(\cdot )\) is conveyed with minmax structure, namely:

\[ h(\lambda) := \min_{x \in P} \ \phi(x,\lambda), \tag{4} \]

where \(P\) is a closed convex set and \(\phi (\cdot ,\cdot ) : P \times Q \rightarrow \mathbb {R}\) is a continuous function that is convex in the first variable \(x\) and concave in the second variable \(\lambda \). In this case define the convex function \(f(\cdot ): P \rightarrow \mathbb {R}\) given by \(f(x) := \max \nolimits _{\lambda \in Q} \ \phi (x,\lambda )\) and consider the following duality paired problems:

\[ (\text{Primal}): \ \min_{x \in P} \ f(x) \qquad\qquad (\text{Dual}): \ \max_{\lambda \in Q} \ h(\lambda), \tag{5} \]

where the dual problem corresponds to our problem of interest (1). Weak duality holds, namely \(h(\lambda ) \le h^* \le f(x)\) for all \(x \in P, \lambda \in Q\). At any iterate \(\lambda _k \in Q\) of the Frank–Wolfe method one can construct a “minmax” upper bound on \(h^*\) by considering the variable \(x\) in that structure:

\[ x_k \leftarrow \arg\min_{x \in P} \{\phi(x,\lambda_k)\}, \qquad B^m_k := f(x_k) = \max_{\lambda \in Q} \{\phi(x_k,\lambda)\}, \tag{6} \]

and it follows from weak duality that \(B^o_k := B^m_k\) is a valid upper bound for all \(k\). Notice that \(x_k\) defined above is the “optimal response” to \(\lambda _k\) in a minmax sense and hence is a natural choice of duality-paired variable associated with the variable \(\lambda _k\). Under certain regularity conditions, for instance when \(h(\cdot )\) is globally differentiable on \(E\), one can show that \(B^m_k\) is at least as tight a bound as Wolfe’s bound, namely \(B^m_k \le B^w_k\) for all \(k\) (see Proposition 7.1), and therefore the FW gap \(G_k\) conveniently bounds this minmax duality gap: \(B^m_k - h(\lambda _k) \le B^w_k - h(\lambda _k) = G_k\).

(Indeed, in the minmax setting notice that the optimal response \(x_k\) in (6) is a function of the current iterate \(\lambda _k\) and hence \(f(x_k) - h(\lambda _k)=B^m_k - h(\lambda _k)\) is not just any duality gap but rather is determined completely by the current iterate \(\lambda _k\). This special feature of the duality gap \(B^m_k - h(\lambda _k)\) is exploited in the application of the Frank–Wolfe method to rounding of polytopes [16], parametric optimization on the spectrahedron [11], and to regularized regression [10] (and perhaps elsewhere as well), where bounds on the FW gap \(G_k\) are used to bound \(B^m_k - h(\lambda _k)\) directly).
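Here is a concrete instance of the minmax bound (6). Take \(P = \mathbb{R}^m\), \(Q = \Delta_n\) and the hypothetical saddle function \(\phi(x,\lambda) = \frac{1}{2}\Vert x\Vert^2 - x^T(b - A\lambda)\). For this choice:

- \(h(\lambda) = -\frac{1}{2}\Vert b - A\lambda\Vert^2\);
- the optimal response is \(x_k = b - A\lambda_k\);
- \(f(x) = \frac{1}{2}\Vert x\Vert^2 - x^Tb + \max_j (A^Tx)_j\).

The sketch below computes \(B^m_k\) and \(B^w_k\) for made-up data `A`, `b` and checks weak duality against a few feasible points. Because this \(\phi\) is affine in \(\lambda\), the two bounds coincide in this example. That is consistent with \(B^m_k \le B^w_k\), but it should not be read as typical.

```python
from typing import List, Sequence

Vector = List[float]

# Hypothetical data: Q is the unit simplex in R^4, P = R^2.
A = [[1.0, 0.0, 0.5, 0.2],
     [0.0, 1.0, 0.5, 0.9]]
b = [0.4, 0.7]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def residual(lam: Vector) -> Vector:
    """b - A lam, which is also the optimal response x_k = argmin_x phi(x, lam)."""
    return [bi - dot(row, lam) for row, bi in zip(A, b)]


def AT(x: Vector) -> Vector:
    return [sum(A[i][j] * x[i] for i in range(len(A))) for j in range(len(A[0]))]


def h(lam: Vector) -> float:
    r = residual(lam)
    return -0.5 * dot(r, r)


def f(x: Vector) -> float:
    """max of phi(x, lam) over the simplex: the linear term is maximized at a vertex."""
    return 0.5 * dot(x, x) - dot(x, b) + max(AT(x))


def minmax_bound(lam: Vector) -> float:
    return f(residual(lam))          # B^m_k = f(x_k)


def wolfe_bound(lam: Vector) -> float:
    g = AT(residual(lam))            # gradient of h at lam
    return h(lam) + max(g) - dot(g, lam)


lam_k = [0.1, 0.2, 0.3, 0.4]
test_points = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.25] * 4, [0.0, 0.5, 0.0, 0.5]]
```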

We also mention that in some applications there might be exact knowledge of the optimal value \(h^*\), such as in certain linear regression and/or machine learning applications where one knows a priori that the optimal value of the loss function is zero. In these situations one can set \(B^o_k \leftarrow h^*\).

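Towards stating and proving complexity bounds for the Frank–Wolfe method, we use the following curvature constant \(C_{h, Q}\), which is defined to be the minimal value of \(C\) satisfying:

\[ h(\lambda + \alpha(\bar{\lambda} - \lambda)) \ \ge \ h(\lambda) + \nabla h(\lambda)^T(\alpha(\bar{\lambda} - \lambda)) - \tfrac{1}{2}C\alpha^2 \quad \text{for all } \lambda, \bar{\lambda} \in Q \text{ and all } \alpha \in [0,1]. \tag{7} \]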

(This notion of curvature was introduced by Clarkson [1] and extended in Jaggi [15]). For any choice of norm \(\Vert \cdot \Vert \) on \(E\), let \(\mathrm {Diam}_Q\) denote the diameter of \(Q\) measured with the norm \(\Vert \cdot \Vert \), namely \(\mathrm {Diam}_Q:=\max \nolimits _{\lambda , \bar{\lambda }\in Q} \{\Vert \lambda - \bar{\lambda }\Vert \}\) and let \(L_{h,Q}\) be the Lipschitz constant for \(\nabla h(\cdot )\) on \(Q\), namely \(L_{h,Q}\) is the smallest constant \(L\) for which it holds that:

\[ \Vert \nabla h(\lambda) - \nabla h(\bar{\lambda}) \Vert_* \le L \Vert \lambda - \bar{\lambda} \Vert \quad \text{for all } \lambda, \bar{\lambda} \in Q. \]

It is straightforward to show that \(C_{h, Q}\) is bounded above in terms of the more classical quantities \(\mathrm {Diam}_Q\) and \(L_{h,Q}\), namely

\[ C_{h,Q} \le L_{h,Q} \, (\mathrm{Diam}_Q)^2, \tag{8} \]

see [15]; we present a short proof of this inequality in Proposition 7.2 for completeness. In contrast to other (proximal) first-order methods, the Frank–Wolfe method does not depend on a choice of norm. The norm invariant definition of \(C_{h,Q}\) and the fact that (8) holds for any norm are therefore particularly appealing properties of \(C_{h,Q}\) as a behavioral measure for the Frank–Wolfe method.
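As a sanity check of (8) and its norm invariance, consider a concave quadratic \(h(\lambda) = -\frac{1}{2}\lambda^TM\lambda + c^T\lambda\) with \(M\) symmetric positive semidefinite, on \(Q = \Delta_n\). Here (7) reduces to \((\bar{\lambda} - \lambda)^TM(\bar{\lambda} - \lambda) \le C\) for all \(\lambda, \bar{\lambda} \in Q\). This is a convex function of \((\lambda, \bar{\lambda})\), so its maximum is attained at a pair of vertices, which gives \(C_{h,Q} = \max_{i,j}(M_{ii} + M_{jj} - 2M_{ij})\).

The sketch below compares this value with the right-hand side of (8) for two norms, using easily computed upper bounds on \(L_{h,Q}\):

- for \(\Vert\cdot\Vert_1\), the bound is \(\max_{ij}|M_{ij}|\), and \(\mathrm{Diam}_Q = 2\);
- for \(\Vert\cdot\Vert_2\), the bound is the Frobenius norm, and \(\mathrm{Diam}_Q = \sqrt{2}\).

The matrix and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def curvature_quadratic_simplex(M: Matrix) -> float:
    """C_{h,Q} for h(lam) = -0.5 lam^T M lam + c^T lam on the unit simplex (M symmetric PSD)."""
    n = len(M)
    return max(M[i][i] + M[j][j] - 2.0 * M[i][j] for i in range(n) for j in range(n))


def lipschitz_upper_l1(M: Matrix) -> float:
    """Upper bound on L_{h,Q} for the l1 norm (dual norm l_inf): max_ij |M_ij|."""
    return max(abs(v) for row in M for v in row)


def lipschitz_upper_l2(M: Matrix) -> float:
    """Upper bound on L_{h,Q} for the l2 norm: the Frobenius norm dominates the spectral norm."""
    return math.sqrt(sum(v * v for row in M for v in row))


# Hypothetical example: M = A^T A, so h(lam) = -0.5 ||b - A lam||^2 up to a constant.
A = [[1.0, 0.0, 0.5, 0.2],
     [0.0, 1.0, 0.5, 0.9]]
n = len(A[0])
M = [[sum(A[r][i] * A[r][j] for r in range(len(A))) for j in range(n)] for i in range(n)]

C = curvature_quadratic_simplex(M)
bound_l1 = lipschitz_upper_l1(M) * 2.0 ** 2              # Diam_Q = 2 in the l1 norm
bound_l2 = lipschitz_upper_l2(M) * math.sqrt(2.0) ** 2   # Diam_Q = sqrt(2) in the l2 norm
```

Both bounds dominate the same norm-free quantity `C`. The choice of norm affects only how tight (8) is, never the value of \(C_{h,Q}\) itself.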

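As a prelude to stating our main technical results, we define the following two auxiliary sequences, where \(\alpha _k\) and \(\beta _k\) are functions of the first \(k\) step-size sequence values, \(\bar{\alpha }_1, \ldots , \bar{\alpha }_k\), from the Frank–Wolfe method:

\[ \beta_k = \frac{1}{\prod_{j=1}^{k-1}(1 - \bar{\alpha}_j)}, \qquad \alpha_k = \frac{\beta_k \bar{\alpha}_k}{1 - \bar{\alpha}_k}, \qquad k \ge 1. \tag{9} \]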

(Here and in what follows we use the conventions: \(\prod _{j = 1}^0 \cdot = 1\) and \(\sum _{i = 1}^0 \cdot = 0\)).
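The dual averages sequences (9) are easy to tabulate. The sketch below uses exact rational arithmetic with the formulas \(\beta_k = 1/\prod_{j=1}^{k-1}(1-\bar{\alpha}_j)\) and \(\alpha_k = \beta_k\bar{\alpha}_k/(1-\bar{\alpha}_k)\). It checks the identities \(\beta_{k+1} - \beta_k = \alpha_k\) and \(\bar{\alpha}_k = \alpha_k/\beta_{k+1}\), both of which follow directly from these formulas. It also checks the closed forms used later in Sect. 3 for \(\bar{\alpha}_k = \frac{2}{k+2}\) (\(\beta_k = \frac{k(k+1)}{2}\), \(\alpha_k = k+1\)) and for \(\bar{\alpha}_k = \frac{1}{k+1}\) (\(\beta_k = k\), \(\alpha_k = 1\)). The function name `dual_averages` is hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence, Tuple


def dual_averages(steps: Sequence[Fraction]) -> Tuple[List[Fraction], List[Fraction]]:
    """Given steps[k-1] = bar_alpha_k for k = 1..K (each in [0, 1)), return
    alpha = [alpha_1, ..., alpha_K] and beta = [beta_1, ..., beta_{K+1}], where
    beta_k = 1 / prod_{j=1}^{k-1} (1 - bar_alpha_j) and
    alpha_k = beta_k * bar_alpha_k / (1 - bar_alpha_k)."""
    beta = [Fraction(1)]  # beta_1 = 1 (empty product)
    alpha: List[Fraction] = []
    for a in steps:
        if not 0 <= a < 1:
            raise ValueError("step-sizes must lie in [0, 1)")
        alpha.append(beta[-1] * a / (1 - a))
        beta.append(beta[-1] / (1 - a))
    return alpha, beta


K = 12
alpha_fw, beta_fw = dual_averages([Fraction(2, k + 2) for k in range(1, K + 1)])
alpha_avg, beta_avg = dual_averages([Fraction(1, k + 1) for k in range(1, K + 1)])
```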

The following two theorems are our main technical constructs that will be used to develop the results herein. The first theorem concerns optimality gap bounds.

Theorem 2.1

Consider the iterate sequences of the Frank–Wolfe method (Method 1) \(\{\lambda _k\}\) and \(\{\tilde{\lambda }_k\}\) and the sequence of upper bounds \(\{B_k\}\) on \(h^*\), using the step-size sequence \(\{\bar{\alpha }_k\}\). For the auxiliary sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9), and for any \(k \ge 0\), the following inequality holds:

\[ B_k - h(\lambda_{k+1}) \le \frac{B_k - h(\lambda_1)}{\beta_{k+1}} + \frac{\tfrac{1}{2}C_{h,Q}\sum_{i=1}^k \frac{\alpha_i^2}{\beta_{i+1}}}{\beta_{k+1}}. \tag{10} \]

\(\square \)

(The summation expression in the rightmost term above appears also in the bound given for the dual averaging method of Nesterov [23]. Indeed, this is no coincidence as the sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9) arise precisely from a connection between the Frank–Wolfe method and the dual averaging method. If we define \(s_k := \lambda _0 + \sum _{i = 0}^{k-1}\alpha _i\tilde{\lambda }_i\), then one can interpret the sequence \(\{s_k\}\) as the sequence of dual variables in a particular instance of the dual averaging method. This connection underlies the proof of Theorem 2.1, and the careful reader will notice the similarities between the proof of Theorem 2.1 and the proof of Theorem 1 in [23]. For this reason we will henceforth refer to the sequences (9) as the “dual averages” sequences associated with \(\{\bar{\alpha }_k\}\)).

The second theorem concerns the FW gap values \(G_k\) from step (2) in particular.

Theorem 2.2

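Consider the iterate sequences of the Frank–Wolfe method (Method 1) \(\{\lambda _k\}\) and \(\{\tilde{\lambda }_k\}\), the sequence of upper bounds \(\{B_k\}\) on \(h^*\), and the sequence of FW gaps \(\{G_k\}\) from step (2), using the step-size sequence \(\{\bar{\alpha }_k\}\). For the auxiliary sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9), and for any \(\ell \ge 0\) and \(k \ge \ell + 1\), the following inequality holds:

\[ \min_{i \in \{\ell+1,\ldots,k\}} G_i \le \frac{1}{\sum_{i=\ell+1}^k \bar{\alpha}_i}\left[ \frac{B_\ell - h(\lambda_1)}{\beta_{\ell+1}} + \frac{\tfrac{1}{2}C_{h,Q}\sum_{i=1}^{\ell} \frac{\alpha_i^2}{\beta_{i+1}}}{\beta_{\ell+1}} + \tfrac{1}{2}C_{h,Q}\sum_{i=\ell+1}^k \bar{\alpha}_i^2 \right]. \tag{11} \]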

\(\square \)

Theorems 2.1 and 2.2 can be applied to yield specific complexity results for any specific step-size sequence \(\{\bar{\alpha }_k\}\) (satisfying the mild assumption that \(\bar{\alpha }_k < 1\)) through the use of the implied \(\{\alpha _k\}\) and \(\{\beta _k\}\) dual averages sequences. This is shown for several useful step-size sequences in the next section.
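To apply Theorems 2.1 and 2.2 to a specific step-size sequence, it suffices to evaluate their right-hand sides, whose formulas are written out in the docstrings below. In the sketch, `gap` stands for an upper bound on \(B_k - h(\lambda_1)\) (respectively \(B_\ell - h(\lambda_1)\)), and the function names are hypothetical. The example uses \(\bar{\alpha}_k = \frac{2}{k+2}\), \(C_{h,Q} = 1\) and `gap` \(= \frac{1}{2}\), the value allowed by Proposition 3.1 after the pre-start step. With these inputs, the Theorem 2.2 evaluator reproduces the constant \(\frac{13}{12}\) used in the proof of Bound 3.1.

```python
from fractions import Fraction
from typing import List, Sequence, Tuple


def dual_averages(steps: Sequence[Fraction]) -> Tuple[List[Fraction], List[Fraction]]:
    """alpha_k and beta_k of (9); steps[k-1] = bar_alpha_k."""
    beta, alpha = [Fraction(1)], []
    for a in steps:
        alpha.append(beta[-1] * a / (1 - a))
        beta.append(beta[-1] / (1 - a))
    return alpha, beta


def thm21_rhs(steps: Sequence[Fraction], k: int, gap: Fraction, C: Fraction) -> Fraction:
    """(gap + C/2 * sum_{i=1}^k alpha_i^2 / beta_{i+1}) / beta_{k+1}, with gap >= B_k - h(lam_1)."""
    alpha, beta = dual_averages(steps[:k])
    s = sum((alpha[i - 1] ** 2 / beta[i] for i in range(1, k + 1)), Fraction(0))
    return (gap + C * s / 2) / beta[k]


def thm22_rhs(steps: Sequence[Fraction], l: int, k: int, gap: Fraction, C: Fraction) -> Fraction:
    """Bound on min_{l < i <= k} G_i, with gap >= B_l - h(lam_1); requires 0 <= l < k."""
    tail = steps[l:k]  # bar_alpha_{l+1}, ..., bar_alpha_k
    num = thm21_rhs(steps, l, gap, C) + C * sum((a * a for a in tail), Fraction(0)) / 2
    return num / sum(tail, Fraction(0))


steps = [Fraction(2, i + 2) for i in range(1, 21)]  # bar_alpha_i = 2/(i+2), i = 1..20
one, half = Fraction(1), Fraction(1, 2)
```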

Proof of Theorem 2.1

We will show the slightly more general result for \(k \ge 0\):

from which (10) follows by substituting \(B=B_k\) above.

For \(k=0\) the result follows trivially since \(\beta _1=1\) and the summation term on the right side of (12) is zero by the conventions for null products and summations stated earlier. For \(k \ge 1\), we begin by observing that the following equalities hold for the dual averages sequences (9):

and

We then have for \(i \ge 1\):

The inequality in the first line above follows from the definition of \(C_{h,Q}\) in (7) and \(\lambda _{i+1} -\lambda _i = \bar{\alpha }_i(\tilde{\lambda }_i - \lambda _i)\). The second equality above uses the identities (13), and the fourth equality uses the definition of the Wolfe upper bound (2). Rearranging and summing the above over \(i\), it follows that for any scalar \(B\):

Therefore

where the first equality above uses identity (14), the first inequality uses the fact that \(B_k \le B^w_i\) for \(i \le k\), and the second inequality uses (15) and the fact that \(\beta _1=1\). The result then follows by dividing by \(\beta _{k+1}\) and rearranging terms. \(\square \)

Proof of Theorem 2.2

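For \(i\ge 1\) we have:

\[ h(\lambda_{i+1}) \ge h(\lambda_i) + \bar{\alpha}_i \nabla h(\lambda_i)^T(\tilde{\lambda}_i - \lambda_i) - \tfrac{1}{2}\bar{\alpha}_i^2 C_{h,Q} = h(\lambda_i) + \bar{\alpha}_i G_i - \tfrac{1}{2}\bar{\alpha}_i^2 C_{h,Q}, \]

where the inequality follows from the definition of the curvature constant in (7), and the equality follows from the definition of the FW gap in (3). Summing the above over \(i \in \{\ell + 1, \ldots , k\}\) and rearranging yields:

\[ \sum_{i=\ell+1}^k \bar{\alpha}_i G_i \le h(\lambda_{k+1}) - h(\lambda_{\ell+1}) + \tfrac{1}{2}C_{h,Q}\sum_{i=\ell+1}^k \bar{\alpha}_i^2. \tag{17} \]

Combining (17) with Theorem 2.1 we obtain:

\[ \sum_{i=\ell+1}^k \bar{\alpha}_i G_i \le h(\lambda_{k+1}) - B_\ell + \frac{B_\ell - h(\lambda_1)}{\beta_{\ell+1}} + \frac{\tfrac{1}{2}C_{h,Q}\sum_{i=1}^{\ell} \frac{\alpha_i^2}{\beta_{i+1}}}{\beta_{\ell+1}} + \tfrac{1}{2}C_{h,Q}\sum_{i=\ell+1}^k \bar{\alpha}_i^2, \]

and since \(B_\ell \ge h^*\ge h(\lambda _{k+1})\) we obtain:

\[ \left(\sum_{i=\ell+1}^k \bar{\alpha}_i\right) \min_{i \in \{\ell+1,\ldots,k\}} G_i \le \sum_{i=\ell+1}^k \bar{\alpha}_i G_i \le \frac{B_\ell - h(\lambda_1)}{\beta_{\ell+1}} + \frac{\tfrac{1}{2}C_{h,Q}\sum_{i=1}^{\ell} \frac{\alpha_i^2}{\beta_{i+1}}}{\beta_{\ell+1}} + \tfrac{1}{2}C_{h,Q}\sum_{i=\ell+1}^k \bar{\alpha}_i^2, \]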

and dividing by \(\sum _{i=\ell +1}^k \bar{\alpha }_{i}\) yields the result. \(\square \)

3 Computational guarantees for specific step-size sequences

Herein we use Theorems 2.1 and 2.2 to derive computational guarantees for a variety of specific step-size sequences.

It will be useful to consider a version of the Frank–Wolfe method wherein there is a single “pre-start” step. In this case we are given some \(\lambda _0 \in Q\) and some upper bound \(B_{-1}\) on \(h^*\) (one can use \(B_{-1}=+\infty \) if no information is available) and we proceed as in any other iteration except that in step (4) we set \(\lambda _{1} \leftarrow \tilde{\lambda }_0\), which is equivalent to setting \(\bar{\alpha }_0 := 1\). This is shown formally in the Pre-start Procedure 2.

Before developing computational guarantees for specific step-sizes, we present a property of the pre-start step (Procedure 2) that has implications for such computational guarantees.

Proposition 3.1

Let \(\lambda _1\) and \(B_0\) be computed by the pre-start step Procedure 2. Then \(B_0 - h(\lambda _1) \le \frac{1}{2}C_{h,Q}\).

Proof

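We have \(\lambda _1 = \tilde{\lambda }_0\) and \(B_0 \le B^w_0\), whereby from the definition of \(C_{h,Q}\) using \(\alpha = 1\) we have:

\[ h(\lambda_1) = h(\lambda_0 + 1\cdot(\tilde{\lambda}_0 - \lambda_0)) \ge h(\lambda_0) + \nabla h(\lambda_0)^T(\tilde{\lambda}_0 - \lambda_0) - \tfrac{1}{2}C_{h,Q} = B^w_0 - \tfrac{1}{2}C_{h,Q} \ge B_0 - \tfrac{1}{2}C_{h,Q}, \]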

and the result follows by rearranging terms.\(\square \)
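For a concave quadratic \(h(\lambda) = -\frac{1}{2}\lambda^TM\lambda + c^T\lambda\) on the unit simplex, Proposition 3.1 can be checked directly. Write \(d = \tilde{\lambda}_0 - \lambda_0\). Then \(B^w_0 - h(\lambda_1) = \frac{1}{2}d^TMd\) exactly, and \(d^TMd \le C_{h,Q}\). The sketch below uses a made-up positive definite `M`, a made-up `c`, and the hypothetical helper name `pre_start_gap`. It verifies \(B^w_0 - h(\lambda_1) \le \frac{1}{2}C_{h,Q}\) from several starting points \(\lambda_0\). Since \(B_0 \le B^w_0\), the same bound then holds for \(B_0 - h(\lambda_1)\).

```python
from typing import List, Sequence

Vector = List[float]

# Hypothetical data: M is symmetric and diagonally dominant, hence PSD, so h is concave.
M = [[2.0, 0.5, 0.0],
     [0.5, 1.0, 0.2],
     [0.0, 0.2, 1.5]]
c = [0.3, -0.1, 0.4]
n = len(M)


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def h(lam: Vector) -> float:
    return -0.5 * dot(lam, [dot(row, lam) for row in M]) + dot(c, lam)


def grad_h(lam: Vector) -> Vector:
    return [ci - dot(row, lam) for ci, row in zip(c, M)]


def pre_start_gap(lam0: Vector) -> float:
    """B^w_0 - h(lam_1) for the pre-start step, where lam_1 = tilde_lam_0 = e_j."""
    g = grad_h(lam0)
    j = max(range(n), key=lambda i: g[i])    # linear optimization over the simplex
    lam1 = [1.0 if i == j else 0.0 for i in range(n)]
    Bw0 = h(lam0) + g[j] - dot(g, lam0)      # Wolfe bound computed at lam_0
    return Bw0 - h(lam1)


C = max(M[i][i] + M[j][j] - 2.0 * M[i][j] for i in range(n) for j in range(n))
starts = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1 / 3, 1 / 3, 1 / 3], [0.6, 0.1, 0.3]]
gaps = [pre_start_gap(lam0) for lam0 in starts]
```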

3.1 A well-studied step-size sequence

Suppose we initiate the Frank–Wolfe method with the pre-start step Procedure 2 from a given value \(\lambda _0 \in Q\) (which by definition assigns the step-size \(\bar{\alpha }_0=1\) as discussed earlier), and then use the step-size \(\bar{\alpha }_i = 2/(i+2)\) for \(i \ge 1\). This can be written equivalently as:

\[ \bar{\alpha}_i = \frac{2}{i+2} \quad \text{for } i \ge 0. \tag{18} \]

Computational guarantees for this sequence appeared in Clarkson [1], Hazan [13] (with a corrected proof in Giesen et al. [11]), and Jaggi [15]. In unpublished correspondence with the first author in 2007, Nemirovski [20] presented a short inductive proof of convergence of the Frank–Wolfe method using this step-size rule.

We use the phrase “bound gap” to generically refer to the difference between an upper bound \(B\) on \(h^*\) and the value \(h(\lambda )\), namely \(B-h(\lambda )\). The following result gives guarantees on the bound gap \(B_k - h(\lambda _{k+1})\) and on the FW gap \(G_k\) under the step-size sequence (18). These guarantees are applications of Theorems 2.1 and 2.2, and they are very minor improvements of existing results, as discussed below.

Bound 3.1

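Under the step-size sequence (18), the following inequalities hold for all \(k \ge 1\):

\[ B_k - h(\lambda_{k+1}) \le \frac{2C_{h,Q}}{k+4} \tag{19} \]

and

\[ \min_{i \in \{1,\ldots,k\}} G_i \le \frac{4.5\,C_{h,Q}}{k}. \tag{20} \]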

The bound (19) is a very minor improvement over that in Hazan [13], Giesen et al. [11], Jaggi [15], and Harchaoui et al. [12], as the denominator is additively larger by \(1\) (after accounting for the pre-start step and the different indexing conventions). The bound (20) is a modification of the original bound in Jaggi [15], and is also a slight improvement of the bound in Harchaoui et al. [12] inasmuch as the denominator is additively larger by \(1\) and the bound is valid for all \(k \ge 1\).

Proof of Bound 3.1

Using (18) it is easy to show that the dual averages sequences (9) satisfy \(\beta _k = \frac{k(k+1)}{2}\) and \(\alpha _k = k+1\) for \(k\ge 1\). Utilizing Theorem 2.1, we have for \(k\ge 1\):

where the second inequality uses \(B_k \le B_0\), the third inequality uses Proposition 3.1, the first equality substitutes the dual averages sequence values, and the final inequality follows from Proposition 7.3. This proves (19).
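The chain of inequalities behind (19) can also be checked numerically. Substitute \(\beta_{k+1} = \frac{(k+1)(k+2)}{2}\) and \(\frac{\alpha_i^2}{\beta_{i+1}} = \frac{2(i+1)}{i+2}\) into the right-hand side of (10), and use \(B_k - h(\lambda_1) \le \frac{1}{2}C_{h,Q}\) from Proposition 3.1. With \(C_{h,Q} = 1\), this gives the quantity computed below. The sketch confirms in exact arithmetic that this quantity never exceeds \(\frac{2C_{h,Q}}{k+4}\) for the first 300 iterations. It is a numerical illustration only, not a substitute for Proposition 7.3, and the function name is hypothetical.

```python
from fractions import Fraction
from typing import List


def bound_19_chain(K: int) -> List[Fraction]:
    """For k = 1..K: (1/2 + 1/2 * sum_{i=1}^k 2(i+1)/(i+2)) / ((k+1)(k+2)/2),
    i.e. the right-hand side of (10) under bar_alpha_i = 2/(i+2) with C = 1 and gap 1/2."""
    values, s = [], Fraction(0)
    for k in range(1, K + 1):
        s += Fraction(2 * (k + 1), k + 2)   # alpha_k^2 / beta_{k+1}
        values.append((Fraction(1, 2) + s / 2) / Fraction((k + 1) * (k + 2), 2))
    return values


vals = bound_19_chain(300)
```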

To prove (20) we proceed as follows. First apply Theorem 2.2 with \(\ell = 0\) and \(k = 1\) to obtain:

where the second inequality uses Proposition 3.1. Since \(\frac{13}{12} \le 4.5\) and \(\frac{13}{12} \le \frac{4.5}{2}\), this proves (20) for \(k = 1, 2\). Assume now that \(k \ge 3\). Let \(\ell = \lceil \frac{k}{2}\rceil - 2\) so that \(\ell \ge 0\). We have:

where the first inequality uses Proposition 7.5 and the second inequality uses \(\lceil \frac{k}{2}\rceil \le \frac{k}{2} + \frac{1}{2}\). We also have:

where the first inequality uses Proposition 7.5 and the second inequality uses \(\lceil \frac{k}{2}\rceil \ge \frac{k}{2}\). Applying Theorem 2.2 and using (21) and (22) yields:

where the second inequality uses the chain of inequalities used to prove (19), the third inequality uses \(\ell + 4 \ge \frac{k}{2} + 2\), and the fourth inequality uses \(k^2 + 4k + \frac{16}{3} \le k^2 + 6k + 8 = (k+4)(k+2)\).\(\square \)

3.2 Simple averaging

Consider the following step-size sequence:

\[ \bar{\alpha}_i = \frac{1}{1+i} \quad \text{for } i \ge 0, \tag{23} \]

where, as with the step-size sequence (18), we write \(\bar{\alpha }_0 = 1\) to indicate the use of the pre-start step Procedure 2. It follows from a simple inductive argument that, under the step-size sequence (23), \(\lambda _{k+1}\) is the simple average of \(\tilde{\lambda }_0, \tilde{\lambda }_1, \ldots , \tilde{\lambda }_k\), i.e., we have

\[ \lambda_{k+1} = \frac{1}{k+1}\sum_{i=0}^k \tilde{\lambda}_i . \]

Bound 3.2

Under the step-size sequence (23), the following inequality holds for all \(k \ge 0\):

\[ B_k - h(\lambda_{k+1}) \le \frac{C_{h,Q}\,(1 + \ln(k+1))}{2(k+1)}, \tag{24} \]

and the following inequality holds for all \(k \ge 2\):

Proof of Bound 3.2

Using (23) it is easy to show that the dual averages sequences (9) are given by \(\beta _k = k\) and \(\alpha _k = 1\) for \(k\ge 1\). Utilizing Theorem 2.1 and Proposition 3.1, we have for \(k\ge 1\):

where the first equality substitutes the dual averages sequence values and the second inequality uses Proposition 7.5. This proves (24). To prove (25), we proceed as follows. Let \(\ell = \lfloor \frac{k}{2}\rfloor - 1\), whereby \(\ell \ge 0\) since \(k \ge 2\). We have:

where the first inequality uses Proposition 7.5 and the second inequality uses \(\ell \le \frac{k}{2} - 1\). We also have:

where the first inequality uses Proposition 7.5 and the second inequality uses \(\ell \ge \frac{k}{2} - 1.5\). Applying Theorem 2.2 and using (26) and (27) yields:

where the second inequality uses the bound that proves (24), the third inequality uses \(\frac{k}{2} - 1.5 \le \ell \le \frac{k}{2} - 1\) and the fourth inequality uses \(\frac{k+3}{k+1} \le \frac{5}{3}\) for \(k \ge 2\).\(\square \)
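Two facts used in this subsection can be illustrated in a few lines. First, under (23), \(\lambda_{k+1}\) is the simple average of \(\tilde{\lambda}_0, \ldots, \tilde{\lambda}_k\). Second, once \(B_k - h(\lambda_1) \le \frac{1}{2}C_{h,Q}\) is used, the right-hand side of (10) equals \(\frac{1}{2}C_{h,Q}H_{k+1}/(k+1)\), where \(H_{k+1} = \sum_{i=1}^{k+1}\frac{1}{i}\). The closed form \(\frac{C_{h,Q}(1+\ln(k+1))}{2(k+1)}\) then follows from \(H_{k+1} \le 1 + \ln(k+1)\). In the sketch, the vertex sequence is a made-up stand-in for linear optimization oracle outputs, and the helper names are hypothetical.

```python
import math
from fractions import Fraction
from typing import List, Sequence, Tuple


def fw_updates(tildes: Sequence[List[Fraction]], steps: Sequence[Fraction]) -> List[Fraction]:
    """lam_1 = tilde_0 (pre-start step), then lam_{i+1} = (1 - a_i) lam_i + a_i tilde_i."""
    lam = list(tildes[0])
    for t, a in zip(tildes[1:], steps):
        lam = [(1 - a) * x + a * y for x, y in zip(lam, t)]
    return lam


# Hypothetical oracle outputs tilde_0, ..., tilde_4 (vertices of the simplex in R^2).
e1, e2 = [Fraction(1), Fraction(0)], [Fraction(0), Fraction(1)]
tildes = [e1, e2, e1, e2, e2]
steps = [Fraction(1, i + 1) for i in range(1, len(tildes))]   # bar_alpha_i = 1/(i+1), i >= 1
lam_last = fw_updates(tildes, steps)
mean = [sum(col) / len(tildes) for col in zip(*tildes)]


def bound_24_terms(k: int, C: float = 1.0) -> Tuple[float, float]:
    """(right-hand side of (10) under (23) with gap C/2, closed form with the logarithm)."""
    harmonic = sum(1.0 / i for i in range(1, k + 2))           # H_{k+1}
    return C * harmonic / (2 * (k + 1)), C * (1 + math.log(k + 1)) / (2 * (k + 1))
```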

3.3 Constant step-size

Given \(\bar{\alpha }\in (0,1)\), consider using the following constant step-size rule:

\[ \bar{\alpha}_i = \bar{\alpha} \quad \text{for } i \ge 1. \tag{28} \]

This step-size rule arises in the analysis of the Incremental Forward Stagewise Regression algorithm (\(\mathrm {FS}_\varepsilon \)), see [10], and perhaps elsewhere as well.

Bound 3.3

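Under the step-size sequence (28), the following inequality holds for all \(k \ge 1\):

\[ B_k - h(\lambda_{k+1}) \le (1-\bar{\alpha})^k \left(B_k - h(\lambda_1)\right) + \tfrac{1}{2}C_{h,Q}\,\bar{\alpha}\left[1 - (1-\bar{\alpha})^k\right]. \tag{29} \]

If the pre-start step Procedure 2 is used, then:

\[ B_k - h(\lambda_{k+1}) \le \tfrac{1}{2}C_{h,Q}\left[(1-\bar{\alpha})^{k+1} + \bar{\alpha}\right]. \tag{30} \]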

If we decide a priori to run the Frank–Wolfe method for \(k\) iterations after the pre-start step Procedure 2, then we can optimize the bound (30) with respect to \(\bar{\alpha }\). The optimized value of \(\bar{\alpha }\) in the bound (30) is easily derived to be:

\[ \bar{\alpha} = 1 - \frac{1}{\sqrt[k]{k+1}}. \tag{31} \]

With \(\bar{\alpha }\) determined by (31), we obtain a simplified bound from (30) and also a guarantee for the FW gap sequence \(\{G_k\}\) if the method is continued with the same constant step-size (31) for an additional \(k + 1\) iterations.
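Assume the bound (30) has the form \(\frac{1}{2}C_{h,Q}[(1-\bar{\alpha})^{k+1} + \bar{\alpha}]\), which is what Theorem 2.1 gives under (28) after the pre-start step. Then the optimal constant step-size comes from setting the derivative of the convex function \(g(\bar{\alpha}) = (1-\bar{\alpha})^{k+1} + \bar{\alpha}\) to zero, which gives \(\bar{\alpha} = 1 - (k+1)^{-1/k}\). The sketch below, with hypothetical function names and \(C_{h,Q} = 1\), checks this value against a grid search. It also checks that \(\frac{1}{2}g(\bar{\alpha}) \le \frac{1+\ln(k+1)}{2(k+1)}\).

```python
import math


def g(a: float, k: int) -> float:
    """Bracketed factor of (30): (1 - a)^(k+1) + a."""
    return (1.0 - a) ** (k + 1) + a


def optimal_constant_step(k: int) -> float:
    """Stationary point of g: (k+1)(1-a)^k = 1, i.e. a = 1 - (k+1)^(-1/k)."""
    return 1.0 - (k + 1) ** (-1.0 / k)


grid = [i / 1000 for i in range(1000)]  # candidate constant steps in [0, 1)
results = {}
for k in (1, 2, 5, 10, 100):
    a_star = optimal_constant_step(k)
    results[k] = (a_star, g(a_star, k), min(g(a, k) for a in grid))
```

At \(\bar{\alpha}^*\) one has \((1-\bar{\alpha}^*)^{k+1} = (1-\bar{\alpha}^*)/(k+1)\), so \(g(\bar{\alpha}^*) = (1 + k\bar{\alpha}^*)/(k+1)\). The logarithmic bound then follows from \(1 - e^{-x} \le x\) applied with \(x = \ln(k+1)/k\).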

Bound 3.4

If we use the pre-start step Procedure 2 and the constant step-size sequence (31) for all iterations, then after \(k\) iterations the following inequality holds:

Furthermore, after \(2k + 1\) iterations the following inequality holds:

It is curious to note that the bounds (24) and (32) are almost identical, although (32) requires fixing a priori the number of iterations \(k\).

Proof of Bound 3.3

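Under the step-size rule (28) it is straightforward to show that the dual averages sequences (9) are for \(i \ge 1\):

\[ \beta_i = (1-\bar{\alpha})^{-(i-1)}, \qquad \alpha_i = \bar{\alpha}\,(1-\bar{\alpha})^{-i}, \]

whereby

\[ \beta_{k+1} = (1-\bar{\alpha})^{-k} \qquad \text{and} \qquad \sum_{i=1}^k \frac{\alpha_i^2}{\beta_{i+1}} = \sum_{i=1}^k \bar{\alpha}^2 (1-\bar{\alpha})^{-i} = \bar{\alpha}\left[(1-\bar{\alpha})^{-k} - 1\right]. \]

It therefore follows from Theorem 2.1 that:

\[ B_k - h(\lambda_{k+1}) \le (1-\bar{\alpha})^k \left(B_k - h(\lambda_1)\right) + \tfrac{1}{2}C_{h,Q}\,\bar{\alpha}\left[1 - (1-\bar{\alpha})^k\right], \]

which proves (29). If the pre-start step Procedure 2 is used, then using Proposition 3.1 it follows that \(B_k - h(\lambda _1) \le B_0 - h(\lambda _1) \le \frac{1}{2}C_{h,Q}\), whereby from (29) we obtain:

\[ B_k - h(\lambda_{k+1}) \le \tfrac{1}{2}C_{h,Q}(1-\bar{\alpha})^k + \tfrac{1}{2}C_{h,Q}\,\bar{\alpha}\left[1 - (1-\bar{\alpha})^k\right] = \tfrac{1}{2}C_{h,Q}\left[(1-\bar{\alpha})^{k+1} + \bar{\alpha}\right], \]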

completing the proof. \(\square \)
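The closed forms in this proof can be confirmed in exact arithmetic. For a constant step-size \(\bar{\alpha}\), the right-hand side of (10) equals \((1-\bar{\alpha})^k(B_k - h(\lambda_1)) + \frac{1}{2}C_{h,Q}\bar{\alpha}[1 - (1-\bar{\alpha})^k]\). With \(B_k - h(\lambda_1)\) replaced by \(\frac{1}{2}C_{h,Q}\), it equals \(\frac{1}{2}C_{h,Q}[(1-\bar{\alpha})^{k+1} + \bar{\alpha}]\). The sketch below rebuilds (9) and (10) incrementally and compares them with these expressions. The helper names are hypothetical.

```python
from fractions import Fraction
from typing import Sequence


def thm21_rhs(steps: Sequence[Fraction], k: int, gap: Fraction, C: Fraction) -> Fraction:
    """Right-hand side of (10): (gap + C/2 * sum alpha_i^2 / beta_{i+1}) / beta_{k+1}."""
    beta, s = Fraction(1), Fraction(0)   # beta_1 = 1
    for a in steps[:k]:
        alpha = beta * a / (1 - a)       # alpha_i, computed from beta_i
        beta = beta / (1 - a)            # beta_{i+1}
        s += alpha ** 2 / beta
    return (gap + C * s / 2) / beta


def closed_form(a: Fraction, k: int, gap: Fraction, C: Fraction) -> Fraction:
    """(1 - a)^k * gap + (C/2) * a * (1 - (1 - a)^k)."""
    return (1 - a) ** k * gap + C * a * (1 - (1 - a) ** k) / 2
```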

Proof of Bound 3.4

Substituting the step-size (31) into (30) we obtain:

where the second inequality follows from (i) of Proposition 7.4. This proves (32). To prove (33), notice that inequality (34) together with the subsequent chain of inequalities in the proofs of (29), (30), and (32) show that:

Using (35) and the substitution \(\sum _{i=k+1}^{2k+1}\bar{\alpha }_i = (k+1)\bar{\alpha }\) and \(\sum _{i=k+1}^{2k+1}\bar{\alpha }_i^2 = (k+1)\bar{\alpha }^2\) in Theorem 2.2 yields:

where the second inequality uses (ii) of Proposition 7.4 and the third inequality uses (i) of Proposition 7.4.\(\square \)

3.4 Extensions using line-searches

The original method of Frank and Wolfe [9] utilized an exact line-search to determine the next iterate \(\lambda _{k+1}\) by assigning \(\hat{\alpha }_k \leftarrow \arg \max \nolimits _{\alpha \in [0,1]}\{h(\lambda _k + \alpha (\tilde{\lambda }_k - \lambda _k)) \}\) and \(\lambda _{k+1} \leftarrow \lambda _k + \hat{\alpha }_k(\tilde{\lambda }_k - \lambda _k)\). When \(h(\cdot )\) is a quadratic function and the dimension of the space \(E\) of variables \(\lambda \) is not huge, an exact line-search is easy to compute analytically. It is a straightforward extension of Theorem 2.1 to show that if an exact line-search is utilized at every iteration, then the bound (10) holds for any choice of step-size sequence \(\{\bar{\alpha }_k\}\), and not just the sequence \(\{\hat{\alpha }_k\}\) of line-search step-sizes. In particular, the \(O(\frac{1}{k})\) computational guarantee (19) holds, as does (24) and (29), as well as the bound (38) to be developed in Sect. 4. This observation generalizes as follows. At iteration \(k\) of the Frank–Wolfe method, let \(A_k \subseteq [0,1)\) be a closed set of potential step-sizes and suppose we select the next iterate \(\lambda _{k+1}\) using the exact line-search assignment \(\hat{\alpha }_k \leftarrow \arg \max \nolimits _{\alpha \in A_k}\{h(\lambda _k + \alpha (\tilde{\lambda }_k - \lambda _k)) \}\) and \(\lambda _{k+1} \leftarrow \lambda _k + \hat{\alpha }_k(\tilde{\lambda }_k - \lambda _k)\). Then after \(k\) iterations of the Frank–Wolfe method, we can apply the bound (10) for any choice of step-size sequence \(\{\bar{\alpha }_i\}_{i = 1}^k\) in the cross-product \(A_1 \times \cdots \times A_k\).
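For a concave quadratic \(h(\lambda) = -\frac{1}{2}\lambda^TM\lambda + c^T\lambda\), the exact line-search over \(\alpha \in [0,1]\) has a closed form. Along \(d = \tilde{\lambda}_k - \lambda_k\) the objective is \(h(\lambda_k) + \alpha\nabla h(\lambda_k)^Td - \frac{1}{2}\alpha^2 d^TMd\). This is maximized at \(\alpha = \nabla h(\lambda_k)^Td / d^TMd\), clipped to \([0,1]\). The sketch below (hypothetical data and names) implements this and checks it against a grid search. When \(\tilde{\lambda}_k\) solves step (2), \(\nabla h(\lambda_k)^Td\) is the FW gap \(G_k \ge 0\), so the unclipped step is never negative.

```python
from typing import List, Sequence

Vector = List[float]

M = [[2.0, 0.5, 0.0],
     [0.5, 1.0, 0.2],
     [0.0, 0.2, 1.5]]   # hypothetical symmetric PSD matrix
c = [0.3, -0.1, 0.4]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def matvec(v: Vector) -> Vector:
    return [dot(row, v) for row in M]


def h(lam: Vector) -> float:
    return -0.5 * dot(lam, matvec(lam)) + dot(c, lam)


def exact_line_search(lam: Vector, lam_tilde: Vector) -> float:
    """argmax of h(lam + a (lam_tilde - lam)) over a in [0, 1] for the quadratic h."""
    d = [t - x for t, x in zip(lam_tilde, lam)]
    grad = [ci - mi for ci, mi in zip(c, matvec(lam))]
    slope = dot(grad, d)          # derivative at a = 0 (the FW gap if lam_tilde solves step (2))
    curv = dot(d, matvec(d))      # d^T M d >= 0
    if curv <= 0.0:
        return 1.0 if slope > 0.0 else 0.0
    return min(1.0, max(0.0, slope / curv))


def along(lam: Vector, lam_tilde: Vector, a: float) -> Vector:
    return [x + a * (t - x) for x, t in zip(lam, lam_tilde)]


lam = [1 / 3, 1 / 3, 1 / 3]
grad = [ci - mi for ci, mi in zip(c, matvec(lam))]
j = max(range(3), key=lambda i: grad[i])
lam_tilde = [1.0 if i == j else 0.0 for i in range(3)]
a_hat = exact_line_search(lam, lam_tilde)
```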

For inexact line-search methods, Dunn [7] analyzes versions of the Frank–Wolfe method with an Armijo line-search and also a Goldstein line-search rule. In addition to convergence and computational guarantees for convex problems, [7] also contains results for the case when the objective function is non-concave. In earlier work, Dunn [6] presents convergence and computational guarantees for the case when the step-size \(\bar{\alpha }_k\) is determined from the structure of the lower quadratic approximation of \(h(\cdot )\) in (7), if the curvature constant \(C_{h,Q}\) is known or upper-bounded. When no prior information about \(C_{h,Q}\) is given, [6] provides a clever recursion for determining a step-size that still accounts for the lower quadratic approximation without estimating \(C_{h,Q}\).

4 Computational guarantees for a warm start

In the framework of this study, the well-studied step-size sequence (18) and its associated computational guarantees (Bound 3.1) correspond to running the Frank–Wolfe method initiated with the pre-start step from the initial point \(\lambda _0\). One feature of the main computational guarantees, as presented in the bounds (19) and (20), is their insensitivity to the quality of the initial point \(\lambda _0\). This is good if \(h(\lambda _0)\) is very far from the optimal value \(h^*\), as the poor quality of the initial point does not affect the computational guarantee. But if \(h(\lambda _0)\) is moderately close to the optimal value, one would want the Frank–Wolfe method, with an appropriate step-size sequence, to have computational guarantees that reflect the closeness to optimality of the initial objective function value \(h(\lambda _0)\). Let us see how this can be done.

We now consider running the Frank–Wolfe method without the pre-start step, starting from an initial point \(\lambda _1\), and we let \(C_1\) be a given estimate of the curvature constant \(C_{h,Q}\). Consider the following step-size sequence:

\[\bar{\alpha }_k := \frac{2}{\frac{2C_1}{B_1 - h(\lambda _1)} + k + 1} \quad \text{for } k \ge 1 . \tag{36}\]

Comparing (36) to the well-studied step-size rule (18), one can think of the above step-size rule as acting “as if” the Frank–Wolfe method had run for \(\frac{2C_1}{B_1-h(\lambda _1)} \) iterations before arriving at \(\lambda _1\). The next result presents a computational guarantee associated with this step-size rule.
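
The “as if” interpretation can be checked directly. In the sketch below the helper names are hypothetical and `gap1` stands for \(B_1 - h(\lambda _1)\). When \(s = \frac{2C_1}{B_1-h(\lambda _1)}\) happens to be an integer, the warm-start step at iteration \(k\) coincides with the step \(\frac{2}{j+2}\) of rule (18) at iteration \(j = s + k - 1\), i.e., as if iterations \(0, \ldots , s-1\) of (18) had already been performed.

```python
def warm_start_step(k, C1, gap1):
    """Static warm-start step-size (36) at iteration k >= 1; gap1 = B_1 - h(lambda_1) > 0."""
    s = 2.0 * C1 / gap1            # "virtual" number of iterations already performed
    return 2.0 / (s + k + 1.0)

def standard_step(j):
    """Well-studied step-size (18) at iteration j >= 0."""
    return 2.0 / (j + 2.0)

# C1 = 3 and gap1 = 1 give s = 6, so warm iteration k plays the role of iteration 5 + k of (18).
pairs = [(warm_start_step(k, 3.0, 1.0), standard_step(5 + k)) for k in range(1, 6)]
```

A small initial gap makes \(s\) large and hence every step small, which is the dampening effect discussed below.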

Bound 4.1

Under the step-size sequence (36), the following inequality holds for all \(k \ge 1\):

Notice that in the case when \(C_1=C_{h,Q}\), the bound in (37) simplifies conveniently to:

Also, as a function of the estimate \(C_1\) of the curvature constant, it is easily verified that the bound in (37) is optimized at \(C_1=C_{h,Q}\).

We remark that the bound (37) (or (38)) is small to the extent that the initial bound gap \(B_1-h(\lambda _1)\) is small, as one would want. However, to the extent that \(B_1-h(\lambda _1)\) is small, the incremental decrease in the bound due to an additional iteration is also smaller. In other words, while the bound (37) is nicely sensitive to the initial bound gap, there is no longer a rapid decrease in the bound in the early iterations. It is as if the algorithm had already run for \(\frac{2C_1}{B_1 - h(\lambda _1)}\) iterations to arrive at the initial iterate \(\lambda _1\), with a corresponding dampening in the marginal value of each subsequent iteration. This is a structural feature of the Frank–Wolfe method that distinguishes it from first-order methods that use prox functions and/or projections.

Proof of Bound 4.1

Define \(s = \frac{2C_1}{B_1 - h(\lambda _1)}\), whereby \(\bar{\alpha }_i = \frac{2}{s+1+i}\) for \(i \ge 1\). It is then straightforward to show that the dual averages sequences (9) are given for \(i \ge 1\) by:

and

Furthermore, we have:

Utilizing Theorem 2.1 and (39), we have for \(k\ge 1\):

which completes the proof. \(\square \)

4.1 A dynamic version of the warm-start step-size strategy

The step-size sequence (36) determines all step-sizes for the Frank–Wolfe method based on two pieces of information at the initial point \(\lambda _1\): (i) the initial bound gap \(B_1 - h(\lambda _1)\), and (ii) the given estimate \(C_1\) of the curvature constant. The step-size sequence (36) is a static warm-start strategy in that all step-sizes are determined by information that is available or computed at the first iterate. Let us see how we can improve the computational guarantee by treating every iterate as if it were the initial iterate, and hence dynamically determine the step-size sequence as a function of accumulated information about the bound gap and the curvature constant.

At the start of a given iteration \(k\) of the Frank–Wolfe method, we have the iterate value \(\lambda _k \in Q\) and an upper bound \(B_{k-1}\) on \(h^*\) from the previous iteration. We now also assume that we have an estimate \(C_{k-1}\) of the curvature constant from the previous iteration. Steps (2) and (3) of the Frank–Wolfe method then compute \(\tilde{\lambda }_k\), \(B_k\) and \(G_k\). Instead of using a pre-set formula for the step-size \(\bar{\alpha }_k\), we determine the value of \(\bar{\alpha }_k\) based on the current bound gap \(B_k-h(\lambda _k)\) as well as on a new estimate \(C_{k}\) of the curvature constant. (We will shortly discuss how \(C_k\) is computed.) Assuming \(C_k\) has been computed, and mimicking the structure of the static warm-start step-size rule (36), we compute the current step-size as follows:

\[\bar{\alpha }_k := \frac{2}{\frac{2C_k}{B_k - h(\lambda _k)} + 2}, \tag{40}\]

where we note that \(\bar{\alpha }_k\) depends explicitly on the value of \(C_k\). Comparing \(\bar{\alpha }_k\) in (40) with (18), we interpret \(\frac{2C_k}{B_k-h(\lambda _k)}\) to mean that it is “as if” the current iteration \(k\) were preceded by \(\frac{2C_k}{B_k-h(\lambda _k)}\) iterations of the Frank–Wolfe method using the standard step-size (18). This interpretation is consistent with that of the static warm-start step-size rule (36).

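We now discuss how we propose to compute the new estimate \(C_k\) of the curvature constant \(C_{h,Q}\) at iteration \(k\). Because \(C_k\) will only be an estimate of \(C_{h,Q}\), we require that \(C_k\) (and the step-size \(\bar{\alpha }_k\) in (40), which depends explicitly on \(C_k\)) satisfy:

\[h(\lambda _k + \bar{\alpha }_k(\tilde{\lambda }_k - \lambda _k)) \ge h(\lambda _k) + \bar{\alpha }_k(B_k - h(\lambda _k)) - \tfrac{1}{2}C_k\bar{\alpha }_k^2 . \tag{41}\]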

In order to find a value \(C_k \ge C_{k-1}\) for which (41) is satisfied, we first test if \(C_k := C_{k-1}\) satisfies (41), and if so we set \(C_k \leftarrow C_{k-1}\). If not, one can perform a standard doubling strategy, testing values \(C_k \leftarrow 2C_{k-1}, 4C_{k-1}, 8C_{k-1}, \ldots \), until (41) is satisfied. Since (41) will be satisfied whenever \(C_k \ge C_{h,Q}\) from the definition of \(C_{h,Q}\) in (7) and the inequality \(B_k-h(\lambda _k) \le B^w_k-h(\lambda _k)=\nabla h(\lambda _k)^T(\tilde{\lambda }_k - \lambda _k)\), it follows that the doubling strategy will guarantee \(C_k \le \max \{C_0, 2C_{h,Q}\}\). Of course, if an upper bound \(\bar{C} \ge C_{h,Q}\) is known, then \(C_k \leftarrow \bar{C}\) is a valid assignment for all \(k \ge 1\). Moreover, the structure of \(h(\cdot )\) may be sufficiently simple so that a value of \(C_k \ge C_{k-1}\) satisfying (41) can be determined analytically via closed-form calculation, as is the case if \(h(\cdot )\) is a quadratic function for example. The formal description of the Frank–Wolfe method with dynamic step-size strategy is presented in Method 3.
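
A minimal Python sketch of one way to implement the dynamic strategy with doubling follows. It is not the paper's Method 3 verbatim: the function names are hypothetical, \(B_0\) is taken to be \(+\infty \), and the test (41) is assumed to take the sufficient-increase form \(h(\lambda _k + \bar{\alpha }_k(\tilde{\lambda }_k - \lambda _k)) \ge h(\lambda _k) + \bar{\alpha }_k(B_k - h(\lambda _k)) - \frac{1}{2}C_k\bar{\alpha }_k^2\), which is consistent with the discussion above.

```python
import numpy as np

def simplex_lmo(g):
    """Exact linear subproblem over the unit simplex: the vertex maximizing g^T x."""
    v = np.zeros_like(g)
    v[np.argmax(g)] = 1.0
    return v

def dynamic_fw(h, grad, lmo, lam1, C0, iters):
    """Frank-Wolfe with the dynamic warm-start step-size (40) and a doubling search
    for the curvature estimate C_k. Returns the last iterate, the bound gaps
    B_k - h(lambda_{k+1}) and the estimates C_k."""
    lam, B, C = np.asarray(lam1, dtype=float), np.inf, float(C0)
    gaps, Cs = [], []
    for _ in range(iters):
        g = grad(lam)
        tilde = lmo(g)
        hk = h(lam)
        B = min(B, hk + g @ (tilde - lam))       # B_k = min{B_{k-1}, Wolfe bound}
        gap = B - hk
        if gap <= 0.0:
            break                                # lam is optimal
        while True:                              # doubling: C_k in {C_{k-1}, 2C_{k-1}, ...}
            alpha = 2.0 / (2.0 * C / gap + 2.0)  # step-size rule (40)
            new = lam + alpha * (tilde - lam)
            # assumed form of test (41): sufficient increase relative to the bound gap
            if h(new) >= hk + alpha * gap - 0.5 * C * alpha ** 2:
                break
            C *= 2.0
        lam = new
        gaps.append(B - h(lam))
        Cs.append(C)
    return lam, gaps, Cs
```

On \(h(\lambda ) = -\Vert \lambda - c\Vert ^2\) over the unit simplex (with \(c\) in the simplex), every \(C_k \ge 4\) passes the assumed test because \(\Vert \tilde{\lambda }_k - \lambda _k\Vert ^2 \le 2\), so a doubling search started at \(C_0 = 0.1\) never exceeds \(6.4\).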

We have the following computational guarantees for the Frank–Wolfe method with dynamic step-sizes (Method 3):

Bound 4.2

The iterates of the Frank–Wolfe method with dynamic step-sizes (Method 3) satisfy the following for any \(k\ge 1\):

Furthermore, if the doubling strategy is used to update the estimates \(\{C_k\}\) of \(C_{h,Q}\), it holds that \(C_{k} \le \max \{C_0, 2C_{h,Q}\}\).

Notice that (42) naturally generalizes the static warm-start bound (37) (or (38)) to this more general dynamic case. Consider, for simplicity, the case where \(C_k = C_{h,Q}\) is the known curvature constant. In this case, (42) says that we may apply the bound (38) with any \(\ell \in \{1, \ldots , k\}\) as the starting iteration. That is, the computational guarantee for the dynamic case is at least as good as the computational guarantee for the static warm-start step-size (36) initialized at any iteration \(\ell \in \{1, \ldots , k\}\).

Proof of Bound 4.2

Let \(i \ge 1\). For convenience define \(A_i = \frac{2C_i}{B_i - h(\lambda _i)}\), and in this notation (40) is \(\bar{\alpha }_i = \frac{2}{A_i + 2}\). Applying (ii) in step (4) of Method 3 we have:

where the last inequality follows from the fact that \((a+2)^2 > a^2 + 4a + 3 = (a+1)(a+3)\) for \(a \ge 0\). Therefore

We now show by reverse induction that for any \(\ell \in \{1, \ldots , k\}\) the following inequality is true:

Clearly (44) holds for \(\ell =k\), so let us suppose (44) holds for some \(\ell + 1 \in \{2, \ldots , k\}\). Then

where the first inequality is the induction hypothesis, the second inequality uses (43), and the third inequality uses the monotonicity of the \(\{C_k\}\) sequence. This proves (44). Now for any \(\ell \in \{1, \ldots , k\}\) we have from (44) that:

proving the result.\(\square \)
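
The one-step computation used at the beginning of the proof can be sketched as follows. This is our reconstruction, assuming that test (ii) in step (4) of Method 3 amounts to the sufficient-increase condition \(h(\lambda _{i+1}) \ge h(\lambda _i) + \bar{\alpha }_i(B_i - h(\lambda _i)) - \frac{1}{2}C_i\bar{\alpha }_i^2\) and that \(B_i - h(\lambda _{i+1}) > 0\). The first line is that condition rearranged; the rest substitutes \(\bar{\alpha }_i = \frac{2}{A_i+2}\) and \(C_i = \frac{A_i}{2}(B_i - h(\lambda _i))\), which is the definition of \(A_i\).

```latex
\begin{aligned}
B_i - h(\lambda_{i+1})
  &\le \bigl(1-\bar{\alpha}_i\bigr)\bigl(B_i - h(\lambda_i)\bigr) + \tfrac{1}{2} C_i \bar{\alpha}_i^2 \\
  &= \bigl(B_i - h(\lambda_i)\bigr)\left[\frac{A_i}{A_i+2} + \frac{A_i}{(A_i+2)^2}\right]
   = \bigl(B_i - h(\lambda_i)\bigr)\,\frac{A_i(A_i+3)}{(A_i+2)^2}, \\
\frac{2C_i}{B_i - h(\lambda_{i+1})}
  &\ge \frac{2C_i}{B_i - h(\lambda_i)}\cdot\frac{(A_i+2)^2}{A_i(A_i+3)}
   = \frac{(A_i+2)^2}{A_i+3}
   > \frac{(A_i+1)(A_i+3)}{A_i+3} = A_i + 1 .
\end{aligned}
```

The final inequality is the fact \((a+2)^2 > (a+1)(a+3)\) quoted in the proof. Combined with \(B_{i+1} \le B_i\) and \(C_{i+1} \ge C_i\), it gives \(A_{i+1} \ge \frac{2C_i}{B_i - h(\lambda _{i+1})} > A_i + 1\).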

5 Analysis of the Frank–Wolfe method with inexact gradient computations and/or subproblem solutions

In this section we present and analyze extensions of the Frank–Wolfe method in the presence of inexact computation of gradients and/or subproblem solutions. We first consider the case when the linear optimization subproblem is solved approximately.

5.1 Frank–Wolfe method with inexact linear optimization subproblem solutions

Here we consider the case when the linear optimization subproblem is solved approximately, which arises especially in optimization over matrix variables. For example, consider instances of (1) where \(Q\) is the spectrahedron of symmetric matrices, namely \(Q=\{ \Lambda \in \mathbb {S}^{n \times n} : \Lambda \succeq 0, \ I \bullet \Lambda = 1\}\), where \(\mathbb {S}^{n \times n}\) is the space of symmetric matrices of order \(n\), “\(\succeq \)” is the Löwner ordering thereon, and “\(\cdot \bullet \cdot \)” denotes the trace inner product. For these instances, solving the linear optimization subproblem corresponds to computing a leading eigenvector of a symmetric matrix, which for large \(n\) is typically computed inexactly using iterative methods.
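
As an illustration of this inexactness, the sketch below approximates the subproblem \(\max _{\Lambda \in Q} S \bullet \Lambda \) over the spectrahedron, whose exact solution is \(vv^T\) for a unit leading eigenvector \(v\) of the symmetric matrix \(S\), by running a fixed number of power iterations. The shift by \(\Vert S\Vert _F + 1\) is our own choice: it makes the matrix positive definite, so that power iteration targets the algebraically largest eigenvalue. The function names are hypothetical. The returned matrix is always feasible, and its suboptimality \(\lambda _{\max }(S) - S \bullet \Lambda \) plays the role of \(\delta \).

```python
import numpy as np

def spectrahedron_lmo_power(S, iters=50, seed=0):
    """Approximate maximizer of S . Lambda over {Lambda PSD, trace(Lambda) = 1}.
    The exact maximizer is v v^T for a unit leading eigenvector v of S; here v comes
    from a fixed number of power iterations, so the answer is inexact."""
    n = S.shape[0]
    shifted = S + (np.linalg.norm(S, "fro") + 1.0) * np.eye(n)  # positive definite, same eigenvectors
    v = np.random.default_rng(seed).standard_normal(n)
    for _ in range(iters):
        v = shifted @ v
        v /= np.linalg.norm(v)
    return np.outer(v, v)            # feasible: PSD with trace ||v||^2 = 1

def subproblem_error(S, Lam):
    """delta = lambda_max(S) - S . Lambda, using the trace inner product."""
    return np.linalg.eigvalsh(S)[-1] - np.sum(S * Lam)
```

For a positive definite matrix, the Rayleigh quotient does not decrease along power iterations, so with the same starting vector, running more iterations can only shrink this \(\delta \).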

For \(\delta \ge 0\), an (absolute) \(\delta \)-approximate solution to the linear optimization subproblem \(\max \nolimits _{\lambda \in Q}\left\{ c^T\lambda \right\} \) is a vector \(\tilde{\lambda } \in Q\) satisfying:

\[c^T\tilde{\lambda } \ge \max _{\lambda \in Q}\left\{ c^T\lambda \right\} - \delta , \tag{45}\]

and we use the notation \(\tilde{\lambda } \leftarrow \text {approx}(\delta )_{\lambda \in Q}\left\{ c^T\lambda \right\} \) to denote assigning to \(\tilde{\lambda }\) any such \(\delta \)-approximate solution. The same additive linear optimization subproblem approximation model is considered in Dunn and Harshbarger [8] and Jaggi [15], and a multiplicative linear optimization subproblem approximation model is considered in Lacoste-Julien et al. [17]; a related approximation model is used in connection with a greedy coordinate descent method in Dudík et al. [5]. In Method 4 we present a version of the Frank–Wolfe algorithm that uses approximate linear optimization subproblem solutions. Note that Method 4 allows for the approximation quality \(\delta = \delta _k\) to be a function of the iteration index \(k\). Note also that the definitions of the Wolfe upper bound \(B^w_k\) and the FW gap \(G_k\) in step (2.) are amended from the original Frank–Wolfe algorithm (Method 1) by an additional term \(\delta _k\). It follows from (45) that:

\[B^w_k = h(\lambda _k) + \nabla h(\lambda _k)^T(\tilde{\lambda }_k - \lambda _k) + \delta _k \ge h(\lambda _k) + \max _{\lambda \in Q}\left\{ \nabla h(\lambda _k)^T(\lambda - \lambda _k)\right\} \ge h^* ,\]

which shows that \(B^w_k\) is a valid upper bound on \(h^*\), with similar properties for \(G_k\). The following two theorems extend Theorems 2.1 and 2.2 to the case of approximate subproblem solutions. Analogous to the case of exact subproblem solutions, these two theorems can easily be used to derive suitable bounds for specific step-size rules such as those in Sects. 3 and 4.

Theorem 5.1

Consider the iterate sequences of the Frank–Wolfe method with approximate subproblem solutions (Method 4) \(\{\lambda _k\}\) and \(\{\tilde{\lambda }_k\}\) and the sequence of upper bounds \(\{B_k\}\) on \(h^*\), using the step-size sequence \(\{\bar{\alpha }_k\}\). For the auxiliary sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9), and for any \(k \ge 0\), the following inequality holds:

\(\square \)

Theorem 5.2

Consider the iterate sequences of the Frank–Wolfe method with approximate subproblem solutions (Method 4) \(\{\lambda _k\}\) and \(\{\tilde{\lambda }_k\}\), the sequence of upper bounds \(\{B_k\}\) on \(h^*\), and the sequence of FW gaps \(\{G_k\}\) from step (2.), using the step-size sequence \(\{\bar{\alpha }_k\}\). For the auxiliary sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9), and for any \(\ell \ge 0\) and \(k \ge \ell + 1\), the following inequality holds:

\(\square \)

Remark 5.1

The pre-start step (Procedure 2) can also be generalized to the case of approximate solution of the linear optimization subproblem. Let \(\lambda _1\) and \(B_0\) be computed by the pre-start step with a \(\delta _0\)-approximate subproblem solution. Then Proposition 3.1 generalizes to:

and hence, if the pre-start step is used, (46) implies that:

where \(\alpha _0 := 1\).

Let us now discuss implications of Theorems 5.1 and 5.2, and Remark 5.1. Observe that the bounds on the right-hand sides of (46) and (47) are composed of the exact terms which appear on the right-hand sides of (10) and (11), plus additional terms involving the solution accuracy sequence \(\delta _1, \ldots , \delta _k\). It follows from (14) that these latter terms are particular convex combinations of the \(\delta _i\) values and zero, and in (48) the last term is a convex combination of the \(\delta _i\) values, whereby they are trivially bounded above by \(\max \{\delta _1, \ldots , \delta _k\}\). When \(\delta _i := \delta \) is a constant, then this bound is simply \(\delta \), and we see that the errors due to the approximate computation of linear optimization subproblem solutions do not accumulate, independent of the choice of step-size sequence \(\{\bar{\alpha }_k\}\). In other words, Theorem 5.1 implies that if we are able to solve the linear optimization subproblems to an accuracy of \(\delta \), then the Frank–Wolfe method can solve (1) to an accuracy of \(\delta \) plus a function of the step-size sequence \(\{\bar{\alpha }_k\}\), the latter of which can be made to go to zero at an appropriate rate depending on the choice of step-sizes. Similar observations hold for the terms depending on \(\delta _1, \ldots , \delta _k\) that appear on the right-hand side of (47).
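
The non-accumulation can be observed numerically. The sketch below (hypothetical names) maximizes \(h(\lambda ) = -\Vert \lambda - c\Vert ^2\) over the unit simplex with step-sizes \(\frac{2}{k+2}\), where \(c\) lies in the simplex so that \(h^* = 0\). At every iteration it uses a deliberately poor \(\delta \)-approximate subproblem solution in the sense of (45): among the vertices whose objective value is within \(\delta \) of the best, it returns the worst one.

```python
import numpy as np

def approx_simplex_lmo(g, delta):
    """A delta-approximate maximizer of g^T x over the unit simplex, in the sense of (45):
    deliberately return the worst vertex whose value is within delta of the best."""
    ok = np.flatnonzero(g >= g.max() - delta)
    v = np.zeros_like(g)
    v[ok[np.argmin(g[ok])]] = 1.0
    return v

def fw_inexact_gap(c, delta, iters):
    """Maximize h(lam) = -||lam - c||^2 over the simplex (c in the simplex, so h* = 0)
    with step-sizes 2/(k+2) and a delta-approximate subproblem solution at every
    iteration; return the final optimality gap h* - h(lam)."""
    lam = np.zeros_like(c)
    lam[0] = 1.0
    for k in range(iters):
        tilde = approx_simplex_lmo(-2.0 * (lam - c), delta)
        lam = lam + 2.0 / (k + 2) * (tilde - lam)
    return float(np.sum((lam - c) ** 2))
```

For this particular instance, a standard one-step argument (our derivation, using \(\Vert \tilde{\lambda } - \lambda \Vert ^2 \le 2\)) gives \(h^* - h(\lambda _K) \le \delta + \frac{8}{K+2}\) after \(K\) iterations, so the error stays at the level \(\delta \) instead of growing with \(K\).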

Note that Jaggi [15] considers the case where \(\delta _i := \frac{1}{2}\delta \bar{\alpha }_iC_{h,Q}\) (for some fixed \(\delta \ge 0\)) and \(\bar{\alpha }_i := \frac{2}{i+2}\) for \(i \ge 0\) (or \(\bar{\alpha }_i\) is determined by a line-search), and shows that in this case Method 4 achieves \(O\left( \frac{1}{k}\right) \) convergence in terms of both the optimality gap and the FW gaps. These results can be recovered as a particular instantiation of Theorems 5.1 and 5.2 using similar logic as in the proof of Bound 3.1.

Proof of Theorem 5.1

First recall the identities (13) and (14) for the dual averages sequences (9). Following the proof of Theorem 2.1, we then have for \(i \ge 1\):

where the third equality above uses the definition of the Wolfe upper bound (2) in Method 4. The rest of the proof follows exactly as in the proof of Theorem 2.1. \(\square \)

Proof of Theorem 5.2

For \(i\ge 1\) we have:

where the equality above follows from the definition of the FW gap in Method 4. Summing the above over \(i \in \{\ell + 1, \ldots , k\}\) and rearranging yields:

The rest of the proof follows by combining (49) with Theorem 5.1 and proceeding as in the proof of Theorem 2.2. \(\square \)

5.2 Frank–Wolfe method with inexact gradient computations

We now consider a version of the Frank–Wolfe method where the exact gradient computation is replaced with the computation of an approximate gradient, as was explored in Sect. 3 of Jaggi [15]. We analyze two different models of approximate gradients and derive computational guarantees for each model. We first consider the \(\delta \)-oracle model of d’Aspremont [2], which was developed in the context of accelerated first-order methods. For \(\delta \ge 0\), a \(\delta \)-oracle is a (possibly non-unique) mapping \(g_\delta (\cdot ): Q \rightarrow E^*\) that satisfies:

Note that the definition of the \(\delta \)-oracle does not consider inexact computation of function values. Depending on the choice of step-size sequence \(\{\bar{\alpha }_k\}\), this can be acceptable, since the Frank–Wolfe method may not need to compute function values at all. (The warm-start step-size rule (40) requires computing function values, as does the computation of the upper bounds \(\{B_k^w\}\); in these cases a definition analogous to (50) for function values can be utilized.)

The next proposition states the following: suppose that the linear optimization subproblem is solved exactly, but using the output of the \(\delta \)-oracle in place of the exact gradient. Then the absolute suboptimality of the computed solution, measured with respect to the exact gradient, is at most \(2\delta \).

Proposition 5.1

For any \(\bar{\lambda } \in Q\) and any \(\delta \ge 0\), if \(\tilde{\lambda } \in \arg \max \nolimits _{\lambda \in Q}\left\{ g_\delta (\bar{\lambda })^T\lambda \right\} \), then \(\tilde{\lambda }\) is a \(2\delta \)-approximate solution to the linear optimization subproblem \(\max \nolimits _{\lambda \in Q}\left\{ \nabla h(\bar{\lambda })^T\lambda \right\} \).

Proof

Let \(\hat{\lambda } \in \arg \max \limits _{\lambda \in Q}\left\{ \nabla h(\bar{\lambda })^T\lambda \right\} \). Then we have:

where the first and third inequalities use (50), the second inequality follows since \(\tilde{\lambda } \!\in \! \arg \max \nolimits _{\lambda \in Q}\left\{ g_\delta (\bar{\lambda })^T\lambda \right\} \), and the final equality follows since \(\hat{\lambda } \in \arg \max \nolimits _{\lambda \in Q}\left\{ \nabla h(\bar{\lambda })^T\lambda \right\} \). Rearranging terms then yields the result.\(\square \)
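
A small numerical illustration of Proposition 5.1 on the unit simplex follows. The oracle is hypothetical: it adds to the exact gradient a perturbation \(e\) with \(\Vert e\Vert _\infty \le \delta /2\). Since \(\Vert \lambda - \lambda '\Vert _1 \le 2\) for any two points of the simplex, this guarantees \(|e^T(\lambda - \lambda ')| \le \delta \), which we take to be the content of (50) on this feasible region. The subproblem is then solved exactly with the perturbed gradient, and the suboptimality is measured with respect to the exact gradient.

```python
import numpy as np

def oracle_suboptimality(grad, delta, rng):
    """Solve max_{lam in simplex} g^T lam exactly with a perturbed gradient g = grad + e,
    ||e||_inf <= delta/2 (a hypothetical delta-oracle), and return the suboptimality
    max_i grad_i - grad_j of the chosen vertex j with respect to the exact gradient."""
    g = grad + rng.uniform(-delta / 2.0, delta / 2.0, size=grad.shape)
    j = int(np.argmax(g))
    return float(grad.max() - grad[j])
```

On this example the observed suboptimality is in fact at most \(\delta \); Proposition 5.1 guarantees \(2\delta \) in general.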

Now consider a version of the Frank–Wolfe method where the computation of \(\nabla h(\lambda _k)\) at step (1) is replaced with the computation of \(g_{\delta _k}(\lambda _k)\). Then Proposition 5.1 implies that such a version can be viewed simply as a special case of the version of the Frank–Wolfe method with approximate subproblem solutions (Method 4) of Sect. 5.1 with \(\delta _k\) replaced by \(2\delta _k\). Thus, we may readily apply Theorems 5.1 and 5.2 and Proposition 5.1 to this case. In particular, similar to the results in [2] regarding error non-accumulation for an accelerated first-order method, the results herein imply that there is no accumulation of errors for a version of the Frank–Wolfe method that computes approximate gradients with a \(\delta \)-oracle at each iteration. Furthermore, it is a simple extension to consider a version of the Frank–Wolfe method that computes both (i) approximate gradients with a \(\delta \)-oracle, and (ii) approximate linear optimization subproblem solutions.

5.2.1 Inexact gradient computation model via the \((\delta ,L)\)-oracle

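The premise (50) underlying the \(\delta \)-oracle is quite strong and can be restrictive in many cases. For this reason among others, Devolder et al. [4] introduce the less restrictive \((\delta , L)\)-oracle model. For scalars \(\delta , L \ge 0\), the \((\delta , L)\)-oracle is defined as a (possibly non-unique) mapping \(Q \rightarrow \mathbb {R} \times E^*\) that maps \(\bar{\lambda } \rightarrow (h_{(\delta ,L)}(\bar{\lambda }), g_{(\delta , L)}(\bar{\lambda }))\) and satisfies, for all \(\lambda , \bar{\lambda } \in Q\):

\[h(\lambda ) \le h_{(\delta ,L)}(\bar{\lambda }) + g_{(\delta ,L)}(\bar{\lambda })^T(\lambda - \bar{\lambda }) , \tag{51}\]

\[h(\lambda ) \ge h_{(\delta ,L)}(\bar{\lambda }) + g_{(\delta ,L)}(\bar{\lambda })^T(\lambda - \bar{\lambda }) - \frac{L}{2}\Vert \lambda - \bar{\lambda }\Vert ^2 - \delta , \tag{52}\]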

where \(\Vert \cdot \Vert \) is a choice of norm on \(E\). Note that in contrast to the \(\delta \)-oracle model, the \((\delta , L)\)-oracle model does not assume that the function \(h(\cdot )\) is smooth or even concave—it simply assumes that there is an oracle returning the pair \((h_{(\delta ,L)}(\bar{\lambda }), g_{(\delta , L)}(\bar{\lambda }))\) satisfying (51) and (52).

In Method 5 we present a version of the Frank–Wolfe method that utilizes the \((\delta , L)\)-oracle. Note that we allow the parameters \(\delta \) and \(L\) of the \((\delta , L)\)-oracle to be a function of the iteration index \(k\). Inequality (51) in the definition of the \((\delta , L)\)-oracle immediately implies that \(B^w_k \ge h^*\). We now state the main technical complexity bound for Method 5, in terms of the sequence of bound gaps \(\{B_k - h(\lambda _{k+1})\}\). Recall from Sect. 2 the definition \(\mathrm {Diam}_Q := \max \nolimits _{\lambda , \bar{\lambda }\in Q} \{\Vert \lambda - \bar{\lambda }\Vert \}\), where the norm \(\Vert \cdot \Vert \) is the norm used in the definition of the \((\delta , L)\)-oracle (52).

Theorem 5.3

Consider the iterate sequences of the Frank–Wolfe method with the \((\delta , L)\)-oracle (Method 5) \(\{\lambda _k\}\) and \(\{\tilde{\lambda }_k\}\) and the sequence of upper bounds \(\{B_k\}\) on \(h^*\), using the step-size sequence \(\{\bar{\alpha }_k\}\). For the auxiliary sequences \(\{\alpha _k\}\) and \(\{\beta _k\}\) given by (9), and for any \(k \ge 0\), the following inequality holds:

\(\square \)

As with Theorem 5.1, observe that the terms on the right-hand side of (53) are composed of the exact terms which appear on the right-hand side of (10), plus an additional term that is a function of \(\delta _1, \ldots , \delta _k\). Unfortunately, Theorem 5.3 implies an accumulation of errors for Method 5 under essentially any choice of step-size sequence \(\{\bar{\alpha }_k\}\). Indeed, suppose that \(\beta _i = O(i^\gamma )\) for some \(\gamma \ge 0\); then \(\sum _{i = 1}^k\beta _{i+1} = O(k^{\gamma + 1})\), and in the constant case where \(\delta _i := \delta \), we have \(\frac{\sum _{i = 1}^k\beta _{i+1}\delta _i}{\beta _{k+1}} = O(k\delta )\). Therefore, in order to achieve an \(O\left( \frac{1}{k}\right) \) rate of convergence (for example with the step-size sequence (18)), we need \(\delta = O\left( \frac{1}{k^2}\right) \). This negative result nevertheless contributes to the understanding of the merits and demerits of different first-order methods, as follows. Note that in [4] it is shown that the “classical” gradient methods (both primal and dual), which require solving a proximal projection problem at each iteration, achieve an \(O\left( \frac{1}{k} + \delta \right) \) accuracy under the \((\delta , L)\)-oracle model for constant \((\delta , L)\). On the other hand, it is also shown in [4] that all accelerated first-order methods (which also solve proximal projection problems at each iteration) generically achieve an \(O\left( \frac{1}{k^2}+ k\delta \right) \) accuracy and thus suffer from an accumulation of errors under the \((\delta , L)\)-oracle model. As discussed in the Introduction herein, the Frank–Wolfe method offers two possible advantages over these proximal methods: (i) the possibility that solving the linear optimization subproblem is easier than the projection-type problem in an iteration of a proximal method, and/or (ii) the possibility of greater structure (sparsity, low rank) of the iterates.
In Table 1 we summarize the cogent properties of these three methods (or classes of methods) under exact gradient computation as well as with the \((\delta , L)\)-oracle model. As can be seen from Table 1, no single method dominates in the three categories of properties shown in the table; thus there are inherent tradeoffs among these methods/classes.
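
The arithmetic behind the accumulation claim is easy to reproduce. Using the model sequence \(\beta _i = i^\gamma \) from the discussion above (an assumption for illustration, not the \(\beta _i\) generated by any particular step-size rule) and a constant \(\delta _i := \delta \), the sketch computes the error term \(\frac{\sum _{i=1}^k \beta _{i+1}\delta _i}{\beta _{k+1}}\), which grows like \(\frac{k\delta }{\gamma + 1}\). The function name is hypothetical.

```python
def accumulated_error(k, gamma, delta):
    """Error term (sum_{i=1}^k beta_{i+1} * delta_i) / beta_{k+1} for the model
    sequence beta_i = i**gamma and a constant delta_i = delta."""
    numerator = sum((i + 1) ** gamma for i in range(1, k + 1)) * delta
    return numerator / (k + 1) ** gamma

# Doubling k roughly doubles the error term, whatever gamma is.
ratio = accumulated_error(2000, 2, 1e-3) / accumulated_error(1000, 2, 1e-3)
```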

Proof of Theorem 5.3

Note that (51) and (52) with \(\bar{\lambda } = \lambda \) imply that:

Recall properties (13) and (14) of the dual averages sequences (9). Following the proof of Theorem 2.1, we then have for \(i \ge 1\):

where the first inequality uses (52), and the second inequality uses (54) and the definition of the Wolfe upper bound in Method 5. The rest of the proof follows as in the proof of Theorem 2.1. \(\square \)

6 Summary/conclusions

The Frank–Wolfe method is the subject of substantial renewed interest due to the relevance of applications (e.g., regularized regression, boosting/classification, matrix completion, image construction, other machine learning problems), the need in many applications for only moderately high accuracy solutions, the applicability of the method on truly large-scale problems, and the appeal of structural implications (sparsity, low-rank) induced by the method itself. The method requires (at each iteration) the solution of a linear optimization subproblem over the feasible region of interest, in contrast to most other first-order methods which require (at each iteration) the solution of a certain projection subproblem over the feasible region defined by a strongly convex prox function. As such, the Frank–Wolfe method is particularly efficient in various important application settings including matrix completion.

In this paper we have developed new analysis and results for the Frank–Wolfe method. Virtually all of our results are consequences and applications of Theorems 2.1 and 2.2, which present computational guarantees for optimality gaps (Theorem 2.1) and the “FW gaps” (Theorem 2.2) for arbitrary step-size sequences \(\{\bar{\alpha }_k\}\) of the Frank–Wolfe method. These technical theorems are applied to yield computational guarantees for the well-studied step-size rule \(\bar{\alpha }_k := \frac{2}{k+2}\) (Sect. 3.1), simple averaging (Sect. 3.2), and constant step-size rules (Sect. 3.3). The second set of contributions in the paper concerns “warm start” step-size rules and computational guarantees that reflect the quality of the given initial iterate (Sect. 4) as well as the accumulated information about the optimality gap and the curvature constant over a sequence of iterations (Sect. 4.1). The third set of contributions concerns computational guarantees in the presence of an approximate solution of the linear optimization subproblem (Sect. 5.1) and approximate computation of gradients (Sect. 5.2).

We end with the following observation: the well-studied step-size rule \(\bar{\alpha }_k := \frac{2}{k+2}\) does not require any estimation of the curvature constant \(C_{h,Q}\) (which is generically not known). Therefore this rule is, in essence, automatically scaled with respect to the curvature \(C_{h,Q}\). In contrast, the dynamic warm-start step-size rule (40), which incorporates accumulated information over a sequence of iterates, requires updating estimates of the curvature constant \(C_{h,Q}\) that satisfy certain conditions. It is an open challenge to develop a dynamic warm-start step-size strategy that is automatically scaled and so does not require computing or updating estimates of \(C_{h,Q}\).

References

Clarkson, K.L.: Coresets, sparse greedy approximation, and the Frank–Wolfe algorithm. In: 19th ACM-SIAM Symposium on Discrete Algorithms, pp. 922–931 (2008)

d’Aspremont, A.: Smooth optimization with approximate gradient. SIAM J. Optim. 19(3), 1171–1183 (2008)

Demyanov, V., Rubinov, A.: Approximate Methods in Optimization Problems. American Elsevier Publishing, New York (1970)

Devolder, O., Glineur, F., Nesterov, Y.E.: First-order methods of smooth convex optimization with inexact oracle. Technical Report, CORE, Louvain-la-Neuve, Belgium (2013)

Dudík, M., Harchaoui, Z., Malick, J.: Lifted coordinate descent for learning with trace-norm regularization. In: AISTATS (2012)

Dunn, J.: Rates of convergence for conditional gradient algorithms near singular and nonsingular extremals. SIAM J. Control Optim. 17(2), 187–211 (1979)

Dunn, J.: Convergence rates for conditional gradient sequences generated by implicit step length rules. SIAM J. Control Optim. 18(5), 473–487 (1980)

Dunn, J., Harshbarger, S.: Conditional gradient algorithms with open loop step size rules. J. Math. Anal. Appl. 62, 432–444 (1978)

Frank, M., Wolfe, P.: An algorithm for quadratic programming. Naval Res. Logist. Q. 3, 95–110 (1956)

Freund, R.M., Grigas, P., Mazumder, R.: Boosting methods in regression: computational guarantees and regularization via subgradient optimization. Technical Report, MIT Operations Research Center (2014)

Giesen, J., Jaggi, M., Laue, S.: Optimizing over the growing spectrahedron. In: ESA 2012: 20th Annual European Symposium on Algorithms (2012)

Harchaoui, Z., Juditsky, A., Nemirovski, A.: Conditional gradient algorithms for norm-regularized smooth convex optimization. Math. Program. (2014). doi:10.1007/s10107-014-0778-9

Hazan, E.L.: Sparse approximate solutions to semidefinite programs. In: Proceedings of Theoretical Informatics, 8th Latin American Symposium (LATIN), pp. 306–316 (2008)

Hearn, D.: The gap function of a convex program. Oper. Res. Lett. 1(2), 67–71 (1982)

Jaggi, M.: Revisiting Frank–Wolfe: projection-free sparse convex optimization. In: Proceedings of the 30th International Conference on Machine Learning (ICML-13), pp. 427–435 (2013)

Khachiyan, L.: Rounding of polytopes in the real number model of computation. Math. Oper. Res. 21(2), 307–320 (1996)

Lacoste-Julien, S., Jaggi, M., Schmidt, M., Pletscher, P.: Block-coordinate Frank–Wolfe optimization for structural SVMs. In: Proceedings of the 30th International Conference on Machine Learning (ICML-13) (2013)

Lan, G.: The complexity of large-scale convex programming under a linear optimization oracle. Department of Industrial and Systems Engineering, University of Florida, Gainesville, Florida. Technical Report (2013)

Levitin, E., Polyak, B.: Constrained minimization methods. USSR Comput. Math. Math. Phys. 6, 1 (1966)

Nemirovski, A.: Private communication (2007)

Nesterov, Y.E.: Introductory Lectures on Convex Optimization: A Basic Course, Applied Optimization, vol. 87. Kluwer Academic Publishers, Boston (2003)

Nesterov, Y.E.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.E.: Primal-dual subgradient methods for convex problems. Math. Program. 120, 221–259 (2009)

Polyak, B.: Introduction to Optimization. Optimization Software, Inc., New York (1987)

Temlyakov, V.: Greedy approximation in convex optimization. University of South Carolina. Technical report (2012)

Additional information

R. M. Freund: This author’s research is supported by AFOSR Grant No. FA9550-11-1-0141 and the MIT-Chile-Pontificia Universidad Católica de Chile Seed Fund.

P. Grigas: This author’s research has been partially supported through NSF Graduate Research Fellowship No. 1122374 and the MIT-Chile-Pontificia Universidad Católica de Chile Seed Fund.

Appendix

Proposition 7.1

Let \(B_k^w\) and \(B_k^m\) be as defined in Sect. 2. Suppose that there exists an open set \(\hat{Q} \subseteq E\) containing \(Q\) such that \(\phi (x,\cdot )\) is differentiable on \(\hat{Q}\) for each fixed \(x \in P\), and that \(h(\cdot )\) has the minmax structure (4) on \(\hat{Q}\) and is differentiable on \(\hat{Q}\). Then it holds that:

Furthermore, it holds that \(B_k^w = B_k^m\) in the case when \(\phi (x,\cdot )\) is linear in the variable \(\lambda \).

Proof

It is simple to show that \(B_k^m \ge h^*\). At the current iterate \(\lambda _k \in Q\), define \(x_k \in \arg \min \limits _{x \in P}\phi (x, \lambda _k)\). Then from the definition of \(h(\lambda )\) and the concavity of \(\phi (x_k, \cdot )\) we have:

whereby \(\nabla _\lambda \phi (x_k, \lambda _k)\) is a subgradient of \(h(\cdot )\) at \(\lambda _k\). It then follows from the differentiability of \(h(\cdot )\) that \(\nabla h(\lambda _k) = \nabla _\lambda \phi (x_k, \lambda _k)\), and this implies from (55) that:

Therefore we have:

If \(\phi (x,\lambda )\) is linear in \(\lambda \), then the second inequality in (55) is an equality, as is (56).

Proposition 7.2

Let \(C_{h, Q}, \mathrm {Diam}_Q\), and \(L_{h,Q}\) be as defined in Sect. 2. Then it holds that \(C_{h, Q} \le L_{h,Q}(\mathrm {Diam}_Q)^2 \).

Proof

Since \(Q\) is convex, we have \(\lambda + \alpha (\tilde{\lambda }- \lambda ) \in Q\) for all \(\lambda , \tilde{\lambda } \in Q\) and for all \(\alpha \in [0,1]\). Since the gradient of \(h(\cdot )\) is Lipschitz, from the fundamental theorem of calculus we have:

whereby it follows that \(C_{h, Q} \le L_{h,Q}(\mathrm {Diam}_Q)^2 \).
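
A quick sampling check of Proposition 7.2 for the concave quadratic \(h(\lambda ) = -\frac{1}{2}\lambda ^TP\lambda \) over the unit simplex with the Euclidean norm, so that \(L_{h,Q} = \lambda _{\max }(P)\) and \(\mathrm {Diam}_Q = \sqrt{2}\). The sketch assumes the normalization \(\frac{2}{\alpha ^2}\left[ h(\lambda ) + \alpha \nabla h(\lambda )^T(\tilde{\lambda } - \lambda ) - h(\lambda + \alpha (\tilde{\lambda } - \lambda ))\right] \) for the quantity maximized in (7); with a \(\frac{1}{\alpha ^2}\) normalization the sampled values would only be smaller. Sampling gives only a lower estimate of \(C_{h,Q}\), so this illustrates rather than proves the inequality. The function name is hypothetical.

```python
import numpy as np

def sampled_curvature(P, samples, rng):
    """Largest sampled value of (2/alpha^2)[h(lam) + alpha grad^T d - h(lam + alpha d)],
    d = tilde - lam, for h(lam) = -0.5 lam^T P lam with lam, tilde in the unit simplex."""
    n = P.shape[0]
    h = lambda x: -0.5 * x @ P @ x
    best = 0.0
    for _ in range(samples):
        lam, tilde = rng.dirichlet(np.ones(n)), rng.dirichlet(np.ones(n))
        alpha = rng.uniform(0.01, 1.0)
        d = tilde - lam
        val = 2.0 / alpha ** 2 * (h(lam) + alpha * (-P @ lam) @ d - h(lam + alpha * d))
        best = max(best, val)
    return best
```

For this quadratic each sampled value equals \(d^TPd \le \lambda _{\max }(P)\Vert d\Vert ^2 \le 2\lambda _{\max }(P)\), which is exactly the bound \(L_{h,Q}(\mathrm {Diam}_Q)^2\).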

Proposition 7.3

For \(k\ge 0\) the following inequality holds:

Proof

The inequality above holds with equality for \(k=0\). Proceeding by induction, suppose the inequality is true for some given \(k\ge 0\); then

Now notice that

which combined with (57) completes the induction.\(\square \)

Proposition 7.4

For \(k\ge 1\) let \(\bar{\alpha }:= 1-\frac{1}{\sqrt[k]{k+1}}\). Then the following inequalities hold:

(i) \(\displaystyle \frac{\ln (k+1)}{k} \ge \bar{\alpha }\), and

(ii) \((k+1)\bar{\alpha }\ge 1 \).

Proof

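To prove (i), define \(f(t):= 1-e^{-t}\). Since \(f(\cdot )\) is a concave function, the gradient inequality for \(f(\cdot )\) at \(t=0\) is

\[1 - e^{-t} \le t \quad \text{for all } t \in \mathbb {R}.\]

Substituting \(t=\frac{\ln (k+1)}{k} \) yields

\[\bar{\alpha } = 1 - \frac{1}{\sqrt[k]{k+1}} \le \frac{\ln (k+1)}{k},\]

which is (i). Note that (ii) holds for \(k = 1\), since then \(\bar{\alpha } = \frac{1}{2}\); so assume now that \(k \ge 2\). To prove (ii) for \(k \ge 2\), substitute \(t = -\frac{\ln (k+1)}{k}\) into the gradient inequality above to obtain \(-\frac{\ln (k+1)}{k} \ge 1 - (k+1)^{\frac{1}{k}}\), which can be rearranged to:

\[(k+1)^{\frac{1}{k}} \ge 1 + \frac{\ln (k+1)}{k} = \frac{k + \ln (k+1)}{k}. \tag{58}\]

Inverting (58) yields:

\[\frac{1}{\sqrt[k]{k+1}} \le \frac{k}{k + \ln (k+1)}. \tag{59}\]

Finally, rearranging (59) gives \(\bar{\alpha } \ge \frac{\ln (k+1)}{k + \ln (k+1)}\), and multiplying by \(k+1\) yields \((k+1)\bar{\alpha } \ge \frac{(k+1)\ln (k+1)}{k + \ln (k+1)} \ge 1\), where the last inequality is equivalent to \(\ln (k+1) \ge 1\), which holds for \(k \ge 2\). This proves (ii).\(\square \)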
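
Both inequalities of Proposition 7.4 are easy to spot-check numerically. The sketch computes \(\bar{\alpha }\) as \(-\mathrm{expm1}(-\ln (k+1)/k)\), which equals \(1 - (k+1)^{-1/k}\) but avoids cancellation for large \(k\); the function name is hypothetical.

```python
import math

def prop_7_4_margins(k):
    """Margins of Proposition 7.4 for k >= 1: (ln(k+1)/k - alpha_bar, (k+1)*alpha_bar - 1),
    where alpha_bar = 1 - (k+1)**(-1/k); both should be nonnegative."""
    alpha_bar = -math.expm1(-math.log(k + 1) / k)
    return math.log(k + 1) / k - alpha_bar, (k + 1) * alpha_bar - 1.0
```

Inequality (ii) is tight at \(k = 1\), where \(\bar{\alpha } = \frac{1}{2}\), so tiny negative values there can only come from floating-point rounding.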

Proposition 7.5

For any integers \(\ell , k\) with \(2 \le \ell \le k\), the following inequalities hold:

and

Proof

Inequalities (60) and (61) are specific instances of the following more general fact: if \(f(\cdot ): [1, \infty ) \rightarrow \mathbb {R}_+\) is a monotonically decreasing continuous function, then

It is easy to verify that the integral expressions in (62) match the bounds in (60) and (61) for the specific choices of \(f(t) = \frac{1}{t}\) and \(f(t) = \frac{1}{t^2}\), respectively.\(\square \)
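
One standard form of such a fact is the two-sided comparison \(\int _\ell ^{k+1} f(t)\,dt \le \sum _{i=\ell }^k f(i) \le \int _{\ell -1}^{k} f(t)\,dt\), valid for decreasing \(f(\cdot )\) and integers \(2 \le \ell \le k\). The sketch below checks it for \(f(t) = \frac{1}{t}\) and \(f(t) = \frac{1}{t^2}\); whether (62) takes exactly this form is an assumption, and the function name is hypothetical.

```python
import math

def integral_sandwich(f, F, l, k):
    """For a decreasing f >= 0 with antiderivative F and integers 2 <= l <= k, return
    (int_l^{k+1} f, sum_{i=l}^{k} f(i), int_{l-1}^{k} f)."""
    total = sum(f(i) for i in range(l, k + 1))
    return F(k + 1) - F(l), total, F(k) - F(l - 1)

# f(t) = 1/t has antiderivative log(t); f(t) = 1/t^2 has antiderivative -1/t.
cases = [(lambda t: 1.0 / t, math.log), (lambda t: 1.0 / t ** 2, lambda t: -1.0 / t)]
```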

About this article

Cite this article

Freund, R.M., Grigas, P. New analysis and results for the Frank–Wolfe method. Math. Program. 155, 199–230 (2016). https://doi.org/10.1007/s10107-014-0841-6

DOI: https://doi.org/10.1007/s10107-014-0841-6