Abstract

Most interactive user interfaces (UIs) for virtual reality (VR) applications are based on the traditional eye-centred UI design principle, which primarily considers the user’s visual searching efficiency and comfort, but the hand operation performance and ergonomics are relatively less considered. As a result, the hand interaction in VR is often criticized as being less efficient and precise. In this paper, the user’s arm movement features, such as the choice of the hand being used and hand interaction position, are hypothesized to influence the interaction results derived from a VR study. To verify this, we conducted a free hand target selection experiment with 24 participants. The results showed that (a) the hand choice had a significant effect on the target selection results: for a left hand interaction, the targets located in spaces to the left were selected more efficiently and accurately than those in spaces to the right; however, in a right hand interaction, the result was reversed, and (b) the free hand interactions at lower positions were more efficient and accurate than those at higher positions. Based on the above findings, this paper proposes a hand-adaptive UI technique to improve free hand interaction performance in VR. A comprehensive comparison between the hand-adaptive UI and traditional eye-centred UI was also conducted. It was shown that the hand-adaptive UI resulted in a higher interaction efficiency and a lower physical exertion and perceived task difficulty than the traditional UI.

1 Introduction

The rapid development of virtual reality (VR) technology has benefited a variety of domains, such as entertainment, education, and sports training (Adamovich et al. 2009; Bacca et al. 2014; Ohta and Tamura 2014). In VR applications, the user typically wears a head-mounted display (HMD), such as an Oculus Rift, an HTC VIVE or a HoloLens, views three-dimensional images through a user interface positioned in front of the eyes, and interacts with the VR world through hand movements. The hand operations are conducted either through hand-held devices, such as a Wii controller, or by bare hand gestures tracked by sensors such as Microsoft Kinect and Leap Motion. Natural interaction through bare hand movements without touching any medium is often called free hand interaction (Vogel and Balakrishnan 2005). Compared to operations performed through tangible controllers, free hand interaction has advantages in intuitiveness and flexibility and thus is recommended in VR applications (Fikkert 2010; Haque et al. 2015).

In current VR applications, the interactive UIs are most often designed based on the traditional eye-centred UI principle, which is also called the head-centred UI design (Mackinlay et al. 1991). According to this principle, the user’s eyes or head is treated as the reference frame centre, and the interactive objects are placed to benefit the visual perception effect with less consideration on hand interaction efficiency or comfort (Bowman 1999; Lubos et al. 2014). From this perspective, we posit that it is necessary to conduct a deep investigation on free hand interaction performance and related ergonomic issues and based on these studies to develop guidelines for designing more efficient and user-friendly UIs.

According to a literature review on biomechanics and human factors, the biomechanical structure of the user’s upper limb and its kinematic features make hand operation in some positions more flexible and convenient than in other positions (Danckert and Goodale 2001; Hincapié-Ramos et al. 2014; Previc 1990; Lubos et al. 2016). Based on this premise, it is reasonable to assume that (a) the user’s choice of the hand being used (i.e., the left hand or the right hand) and (b) the hand operating position are two crucial factors that affect the performance of free hand interaction in VR. To measure these effects, we conducted an experiment where participants completed a series of free hand target selection tasks in a specifically designed VR programme. In the experiment, the interaction performance was evaluated in terms of target selection efficiency and accuracy. Based on the comparative results for different hand choices and operating positions, the arm’s kinematic features and related effects on free hand interaction were delineated. Based on the findings, we developed a hand-adaptive UI technique in which the user’s hand in operation was identified and the UI components were distributed based on the interactive context and the hand position. Through a comparative study, the hand-adaptive UI was found to have advantages over the traditional eye-centred UI in terms of interaction efficiency, perceived task difficulty and physical exertion.

The remainder of this paper is organized as follows: Sect. 2 presents an overview of the related work. Section 3 states the research objectives and hypotheses tested. Section 4 presents an experiment to evaluate the effects of hand choice and operating position on free hand interaction results in VR. Based on the findings, Sect. 5 develops a hand-adaptive UI approach and conducts a comparative study to demonstrate its advantages against the traditional eye-centred UI approach. In addition, the guidelines for designing more efficient and user-friendly UIs for VR applications are summarized. Finally, Sect. 6 concludes this paper and gives prospects of the future work.

2 Literature review

2.1 Free hand interaction in VR

Free hand interaction, i.e., a natural interaction modality through arm movements and bare hand gestures, has been widely researched over the last decades. Compared to mouse- and Wii controller-mediated interactions, direct interaction by hand has advantages in being more intuitive and compatible with application scenarios (Fikkert 2010; Haque et al. 2015; Vogel and Balakrishnan 2005). In temporary interaction with interactive advertisements in shopping malls, for example, it is inconvenient for passers-by to take out phones or pick up specific devices to interact with the digital content on a display; instead, direct interaction through free hand gestures is more acceptable and preferred. According to Mine et al. (1997) and Lemmerman and LaViola (2007), applying free hand interaction in VR applications results in a higher level of usability and a deeper user immersion than that of indirect interactions.

In addition to its advantages, free hand interaction has been demonstrated to have some deficiencies, such as the ambiguity of hand gestures, inaccuracy in arm movements, and the fatigue problem (Boring et al. 2009; Harrison et al. 2012; Sagayam and Hemanth 2016). Quite a few technologies have been proposed to remedy these drawbacks. For example, Vogel and Balakrishnan (2005) have adopted a high-precision motion-tracking system, i.e., Vicon system, to produce accurate free hand interaction at a distance to the display. Haque et al. (2015) have used an EMG sensor worn on the user’s arm to implement a precise gestural interaction. On large-scale UIs with dense information, Mäkelä et al. (2014) have proposed a magnetic cursor technique to aid target pointing and selection. In this technique, stickiness and gravity features were applied to the targets or cursor so that small targets can be selected more easily. In addition, Lou et al. (2018) have examined arm fatigue in free hand interaction and discovered that a higher interaction difficulty resulted in a higher level of arm fatigue. To alleviate arm fatigue, the authors suggested placing the interactive targets at a low height and eliminating undersized targets.

Despite the large advances in gesture recognition and target aiming techniques, free hand interaction in VR applications is still criticized for deficiencies in comfort and productivity. The reason, as Lubos et al. (2016) explained, is that the majority of interactive UIs in VR applications are designed based on the traditional eye-centred UI principle (Mackinlay et al. 1991). In this principle, the user’s eyes or head are treated as the reference frame centre and the UI components are positioned to make them more efficiently perceived (Bowman 1999; Lubos et al. 2014). However, the interaction performance, such as target selection efficiency and accuracy, is often less considered. Apart from the eyes and the head, the user’s two arms can also be used to define the centre of the reference frame and be adapted to by the user in perceptual and motor activities (Colby 1998). Ware and Arsenault (2004) have investigated and discovered that different reference frames resulted in different interaction effects in a virtual environment. Based on this, Gerber and Bechmann (2005) and Lubos et al. (2016) have developed intelligent UI techniques to improve hand interaction in VR. In these techniques, the user’s shoulder and wrist joints were identified and tracked, and the interactive objects were positioned around the joints.

As neurophysiological studies have found, the human visual system has functional differences between the upper- and lower-half visual fields. The upper field was found to be more efficient in perceiving targets at a distance, while the lower field was specifically skilled at guiding gestural operations (Danckert and Goodale 2001; Previc 1990). In mouse- and touch-based interactions, Po et al. (2004) have found that interaction within the lower visual field was more efficient than that within the upper visual field. In Kinect-based motion-sensing interaction, Ren and O’Neill (2013) have also found that gestural interaction in lower positions is generally more efficient than that in higher positions. However, in that study, all participants were right handed, and the potential effects of handedness and hand orientation received little investigation.

Arm fatigue is another common problem in free hand interaction (Boring et al. 2009; Harrison et al. 2012). Hincapié-Ramos et al. (2014) have proposed a metric, consumed endurance (CE), to quantitatively measure arm fatigue level and have shown that the arm posture (bent, extended) and the hand operating position (shoulder, waist) are two critical factors in free hand interaction fatigue. Specifically, hand interaction at a lower position at the waist level with the arm bent is more labour-saving. The arm fatigue problem has been found to cause spontaneous alternations of the working hand (Tanii et al. 1972) in single hand interaction. From this perspective, it is necessary to comparatively evaluate the interaction performance when each of the two hands is engaged in VR interaction, which has seldom been the subject of investigation in earlier research.

2.2 Target selection performance and evaluation methods

Target selection, namely, acquiring and grasping targets, remains by far the dominant interaction paradigm in most UIs (Vogel and Balakrishnan 2005). It generally contains two phases: ballistic and correction. At the ballistic phase, the user moves the pointer close to the target, then at the correction phase adjusts the pointer’s position and clicks to trigger a selection event (Liu et al. 2008).

In the HCI domain, Fitts’ Law (Fitts 1954) is a commonly accepted standard for modelling and evaluating target selection efficiency. In this model, the selection efficiency is usually reported in its reciprocal form, movement time (MT), which is stated as a log-linear function of target width (W) and movement amplitude (A). A number of extensions to Fitts’ Law have been proposed to better model the target selection efficiency in various tasks. Among them, the Shannon formulation of Fitts’ Law is the most widely accepted. In this formulation, the equation of MT is expressed as follows:

\[ MT = a + b\log_{2} \left( {\frac{A}{W} + 1} \right) \]

The expression \( \log_{2} \left( {\frac{A}{W} + 1} \right) \) is the index of difficulty (ID). The two empirically determined constants, a and b, are specific to each setup. Although Fitts’ Law was originally applied to mouse-based interaction on 2D interfaces, it has also been verified to be feasible for evaluating target selection efficiency in 3D environments (Wingrave and Bowman 2005; Murata and Iwase 2001).
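As an illustration of how the constants a and b might be estimated, the following minimal sketch fits the Shannon formulation, MT = a + b · log2(A/W + 1), by ordinary least squares. The function names and the constants used in the synthetic check are assumptions chosen for illustration; they are not values or tooling reported in this study.

```python
import math


def index_of_difficulty(amplitude, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(amplitude / width + 1)


def fit_fitts(ids, mts):
    """Least-squares fit of MT = a + b * ID; returns the pair (a, b)."""
    n = len(ids)
    mean_id = sum(ids) / n
    mean_mt = sum(mts) / n
    sxx = sum((x - mean_id) ** 2 for x in ids)
    sxy = sum((x - mean_id) * (y - mean_mt) for x, y in zip(ids, mts))
    b = sxy / sxx
    a = mean_mt - b * mean_id
    return a, b


# Synthetic check: made-up constants (NOT results from the experiment)
# are used to generate noise-free MT values, which the fit should recover.
true_a, true_b = 200.0, 150.0
ids = [index_of_difficulty(A, W) for A in (150, 300, 450) for W in (60, 40, 20)]
mts = [true_a + true_b * x for x in ids]
a, b = fit_fitts(ids, mts)
```

With real measurements, `mts` would hold the mean movement time observed for each ID level, and the fitted slope b would describe how quickly MT grows with task difficulty.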

Apart from the MT metric, error rate (ER) is another critical indicator in target selection evaluation. The ER can be calculated as the number of incorrect target selection trials out of the overall number of selections. Researchers such as MacKenzie et al. (2001) have proposed four methods for measuring ER in different target selection tasks. Among these methods, the target re-entry (TRE) method is particularly applicable to dwelling selection, where an error occurs when the pointer enters the target region but leaves without dwelling there for a sufficient amount of time. The calculation method of TRE is outlined as follows: ‘if this error (TRE) is recorded twice in a sequence of 10 trials, the TRE is reported as 0.2 or 20%’. In this paper, the target selection error is measured based on the TRE metric.
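The TRE calculation quoted above can be sketched as a simple ratio. The function name and the boolean-per-trial input format are assumptions made for this example.

```python
def target_reentry_rate(trial_had_error):
    """Share of trials in which the pointer entered the target but left
    before the required dwell time had elapsed."""
    trials = list(trial_had_error)
    if not trials:
        raise ValueError("at least one trial is required")
    return sum(1 for had_error in trials if had_error) / len(trials)


# Two re-entry errors in a sequence of 10 trials, as in the quoted example.
sequence = [False, True, False, False, False, True, False, False, False, False]
rate = target_reentry_rate(sequence)
```

For this sequence, `rate` evaluates to 0.2, matching the 20% in the quoted definition.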

3 Hypotheses development

This study aims to investigate the effects of the hand choice and hand operating position on free hand interaction results in VR and, based on the findings, to summarize a set of guidelines for designing more efficient and user-friendly UIs in VR. As revealed in the literature review, the arm posture and the hand operating position are correlated with the arm fatigue level, but how they impact the hand interaction efficiency and accuracy is still unclear. Additionally, arm fatigue causes spontaneous alternations of the hand being used, which implies that it is necessary to have a comparative evaluation of the interaction performance between two hands (Tanii et al. 1972). According to Mine et al. (1997) and Shoemaker et al. (2010), arm movement in front of the body is more flexible and efficient than in peripheral spaces around the body. Neurophysiological studies have also shown that users could select targets that were located around the hand more accurately than those located in front of the eyes (Lubos et al. 2016; Ware and Arsenault 2004). Based on these findings, we propose hypothesis 1 as follows:

H1:

In a VR environment, targets located on the same side as the hand being used will be selected more efficiently and accurately than those on the opposite side.

Neurophysiological research on human perceptual and motor systems has found that there are functional differences between upper and lower visual fields. The lower visual field is intrinsically more skilled at guiding gestural operations than the upper visual field (Danckert and Goodale 2001; Previc 1990). Evaluations on traditional mouse-based interaction have shown that pointer movement in downward directions has a higher pointing efficiency and selection accuracy than that in upward directions (Po et al. 2004; Ren and O’Neill 2013). Based on these findings, we propose hypothesis 2 as follows:

H2:

Targets located at lower positions relative to the hand are selected more efficiently and accurately than those at higher positions.

4 Experiment

We first conducted a multi-directional target selection experiment to investigate the effects of the hand choice and the hand operating position on free hand interaction results in a VR environment. In this experiment, the interaction performance of both hands, consisting of the target selection efficiency (measured in MT) and accuracy (measured in TRE), was comparatively evaluated.

4.1 Participants

Twenty-four volunteers (17 male and 7 female) aged 20–39 (M = 25.6, SD = 3.50) were recruited to participate in the experiment. The participants were staff and undergraduate students at the local university, specializing in digital media and industrial design. All participants had normal or corrected-to-normal vision, without any body impairments. No participant had used any VR applications before this experiment. The Edinburgh Inventory (Oldfield 1971) was adopted to measure the participants’ handedness characteristic. Four participants were identified to be left handed, while the others were right handed. Through the measurement tool of the HMD’s configuration utility, the inter-pupillary distance (IPD) of the participants was measured, ranging from 6.80 to 7.70 cm (M = 7.30, SD = 0.46). Given the IPD data, the HMD was tuned to provide a correct perspective and stereoscopic rendering for each participant. The arm lengths (from the shoulder joint to the palm centre) of the participants were also measured, ranging from 65.8 to 72.4 cm (M = 68.6, SD = 2.93). An appropriate distance from the targets to the participant was provided in the VR programme, ensuring that all the targets were reachable.

4.2 Apparatus

The experiment was conducted in a multimedia laboratory. A workstation computer (Windows 10, 32 GB memory, and 4.0 GHz Intel 32-core processor) was provided as the host computer of VR, and a 40-inch monitor was placed on a 1.2-metre-high desk. An Oculus Rift (Developer Kit) HMD was provided, which was connected to the computer through a 2-metre-long cable. The Oculus Rift HMD offers a nominal diagonal field of vision (FOV) of approximately 100 degrees at a resolution of 1920 × 1080 pixels (960 × 1080 for each eye). An ASUS Xtion PRO™ RGBD camera was mounted on the middle-top of the monitor to track hand movements, and it was connected to the HMD and the computer. The RGBD camera has a recognition precision of 0.5 cm at a distance of 1.5 m and a refresh rate of 60 Hz. The camera was chosen for its good compatibility with the OpenNI API (http://www.openni.ru/) that was used to implement skeleton tracking and hand recognition in this experiment. Directly in front of the RGBD camera, a chair was fixed at a distance of 2 m from the monitor. The participants were instructed to sit upright in the chair to complete the study tasks. Figure 1 shows the experimental setup and scenario. The distance between the user’s palm and the camera was approximately 1.5 m.

4.3 Procedure

A simplified but representative VR programme was developed for evaluating target selection performance. In the VR programme, 8 spheres were presented in a 3D scene that was implemented through the Unity3D engine, as shown in the left screenshot of Fig. 2. For each selection trial, all spheres were visible and coloured blue except for the target that was rendered in orange. During the selection task, when the participant’s interacting hand was identified, a cross-style pointer appeared in the VR scene to represent the central position of the palm. Once the participant moved the pointer to the target sphere, the target became green to give a visual cue that the target had been selected.

Through a pretest, we selected a constant transfer ratio of 3.0 pixels/mm to transform the participant’s hand movement to the pointer’s movement in the VR scene. The transfer ratio here refers to the ratio between the pointer movement and input device displacement, which is also referred to as the control-to-display (CD) gain (Nancel et al. 2015). With a CD gain of 3.0, all participants could control the pointer to traverse the entire FOV with a hand movement distance of 48 cm while having a sufficient operation precision. Additionally, the RGBD camera was calibrated to ensure that the participant’s hand placed at stomach height could reach the lowest objects, and the participant’s hand placed at the forehead height could reach the top objects in the FOV. The hand-tracking programme was developed through a natural interaction API of OpenNI, which has been verified to be effective and accurate in recognizing and tracking body joints in real time (Cho and Lee 2012). In this programme, the participant’s chest joint was identified as the reference frame centre in the real world, which corresponds to the central point in the virtual scene. Specifically, when the participant placed the hand in front of his or her chest joint, the pointer was located at the centre of the FOV. Figure 3 illustrates the hand-tracking technique and the space range of the hand movements.
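The chest-centred mapping described above can be sketched as follows, assuming a linear mapping with the reported gain of 3.0 pixels/mm. The function name, the 2D coordinate tuples and the shared axis orientation between camera space and screen space are assumptions for illustration; the actual tracking code is not given in the text.

```python
CD_GAIN_PX_PER_MM = 3.0  # constant transfer ratio reported in the text


def hand_to_pointer(hand_mm, chest_mm, view_centre_px, gain=CD_GAIN_PX_PER_MM):
    """Map a tracked palm position to pointer coordinates.

    hand_mm, chest_mm: (x, y) positions in millimetres in the same frame.
    view_centre_px:    (x, y) pixel coordinates of the centre of the FOV.
    Assumes both frames share the same axis orientation.
    """
    dx_mm = hand_mm[0] - chest_mm[0]
    dy_mm = hand_mm[1] - chest_mm[1]
    return (view_centre_px[0] + gain * dx_mm,
            view_centre_px[1] + gain * dy_mm)


centre = (480.0, 540.0)  # hypothetical centre of one 960 x 1080 eye view
at_chest = hand_to_pointer((0.0, 0.0), (0.0, 0.0), centre)
moved = hand_to_pointer((100.0, 0.0), (0.0, 0.0), centre)
```

When the hand is in front of the chest joint, the pointer sits at the view centre; under this mapping, a 100 mm hand displacement moves the pointer by 300 pixels.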

To measure the effect of interacting with the virtual world, 10 volunteer users were recruited to experience the programme and complete a Slater–Usoh–Steed presence questionnaire (SUS-PQ) (Usoh et al. 2006). A mean SUS-PQ score of 4.20 (SD = 1.09) was achieved, indicating that the VR programme used in this experiment had a qualified immersion and that the findings achieved from this experiment are generally applicable to VR applications.

Since a repeated-measures within-participant design was used with 3 levels of target size, 3 levels of movement distance and 2 levels of hand choice, each participant was required to complete 18 task blocks. As shown in Fig. 2, there were 8 spheres in the VR scene, and each sphere was highlighted 50 times as the target in a block; thus, one block consisted of 400 selection trials. From one sphere to another, the participant continuously completed all the selection trials until the VR programme terminated automatically. During this procedure, the participant was not permitted to change hands. The interval from when a new target was shown to when the pointer touched the target was defined as the movement time (MT). To complete a selection trial, the participant was required to hold the pointer at the target for 500 ms. If the pointer moved away before the required time had elapsed, one error was counted, and the target location was recorded. The MT values for the 400 trials and the error count for each target location were recorded and stored in a log file.
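The dwell-based selection and error counting described above can be sketched as a small state machine over pointer samples. The sample format, the function name and the choice to take MT as the time of the first contact with the target are assumptions for this example; the logging code of the VR programme is not given in the text.

```python
DWELL_MS = 500  # required hold time reported in the text


def evaluate_trial(samples, dwell_ms=DWELL_MS):
    """Process one selection trial.

    samples: iterable of (timestamp_ms, inside_target) pairs in time order,
             with timestamps measured from the moment the target was shown.
    Returns (movement_time_ms, reentry_errors, selected).
    """
    movement_time = None   # time of the first contact with the target
    errors = 0             # number of times the pointer left too early
    entered_at = None      # start time of the current stay inside the target
    for t, inside in samples:
        if inside:
            if entered_at is None:
                entered_at = t
                if movement_time is None:
                    movement_time = t
            if t - entered_at >= dwell_ms:
                return movement_time, errors, True
        elif entered_at is not None:
            errors += 1        # left before the dwell completed
            entered_at = None
    return movement_time, errors, False


trial = [(0, False), (600, True), (800, False), (900, True), (1200, True), (1400, True)]
result = evaluate_trial(trial)
```

In this hypothetical trial the pointer first touches the target at 600 ms, slips off once, and then dwells long enough to complete the selection, so `result` is `(600, 1, True)`.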

The participants were given an introduction about the purpose of the experiment and task requirements. In the phase prior to being presented with the formal blocks, the participants were instructed to practise until they were observed to have achieved a stable interaction performance. Between two blocks, the participants were given time to relax their eyes and arms. The total time for each participant, including the experimenter’s introduction, practice trials, formal blocks and breaks, lasted approximately 2.5 h.

4.4 Experimental design

In this experiment, a 2 × 3 × 3 repeated-measures within-participant design was adopted with the following three independent variables:

- Hand choice: left hand, right hand;

- Target width: 60 mm, 40 mm, 20 mm;

- Amplitude (movement distance): 450 mm, 300 mm, 150 mm.

The Fitts’ index of difficulty (ID) for the blocks ranged from 1.81 to 4.55 bits. Each participant completed 18 blocks (7200 trials in total), with one block per combination of hand choice, target width and amplitude. The presentation order of the target widths and amplitudes was counterbalanced across the participants based on a Latin square. In addition, the order of the hand adopted was counterbalanced across the participants: half used their left hand first, while the other half used their right hand first.
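The block structure and the reported ID range can be checked with a short enumeration of the design. The factor levels and per-block counts come from the text; the variable names are chosen for this example.

```python
import math
from itertools import product

HANDS = ("left", "right")
WIDTHS_MM = (60, 40, 20)
AMPLITUDES_MM = (450, 300, 150)
TARGETS_PER_BLOCK = 8       # spheres in the scene
REPEATS_PER_TARGET = 50     # times each sphere is highlighted per block

# One block per combination of hand choice, target width and amplitude.
blocks = [
    {"hand": h, "width": w, "amplitude": a, "id_bits": math.log2(a / w + 1)}
    for h, w, a in product(HANDS, WIDTHS_MM, AMPLITUDES_MM)
]

trials_per_block = TARGETS_PER_BLOCK * REPEATS_PER_TARGET
total_trials = len(blocks) * trials_per_block
min_id = min(block["id_bits"] for block in blocks)
max_id = max(block["id_bits"] for block in blocks)
distinct_ids = sorted({round(block["id_bits"], 6) for block in blocks})
```

The enumeration yields 18 blocks of 400 trials (7200 trials per participant) and an ID range of about 1.81 to 4.55 bits. Because several width and amplitude pairs share the same A/W ratio, the nine pairs produce seven distinct ID levels.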

4.5 Analysis and results

Each of 24 participants completed 18 blocks, so that 432 experiment log files were collected. Each log file contained 400 MT records; thus, a total of 172,800 MT records were collected. MT records that were more than 3 standard deviations from the mean value were treated as outliers and excluded. Finally, 170,802 (approximately 98.8%) records were preserved. The MT and selection error data were confirmed as being normally distributed; thus, the mean values were calculated in the analyses. The study results were analysed by repeated-measures ANOVA with post hoc Tukey HSD tests and two-tailed dependent T tests for paired comparisons. All the reported results below are significant at least at the p <0.05 level.
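The record counts and the 3-standard-deviation exclusion rule can be sketched as below. The text does not state whether the sample or population standard deviation was used, or whether the rule was applied per condition or over all records; this sketch assumes the sample standard deviation over whatever list is passed in. The MT values in the example are made up for illustration.

```python
from statistics import mean, stdev


def drop_outliers(values, n_sd=3.0):
    """Keep values within n_sd sample standard deviations of the mean."""
    centre = mean(values)
    spread = stdev(values)
    return [v for v in values if abs(v - centre) <= n_sd * spread]


# Record counts reported in the text.
log_files = 24 * 18                 # participants x blocks
mt_records = log_files * 400        # trials per block
kept_share = 170802 / mt_records    # preserved records / all records

# Hypothetical MT values in ms: twenty typical trials and one extreme value.
sample = [690, 710] * 10 + [5000]
cleaned = drop_outliers(sample)
```

Here `log_files` is 432, `mt_records` is 172,800 and `kept_share` is roughly 0.988, in line with the figures reported above; in the hypothetical sample, only the 5000 ms value is excluded.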

To compare the target selection results at different positions, the target locations in the VR are categorized into different fields of vision (FOVs), as illustrated in the upper half of Fig. 4. Vertically, the target locations were categorized into upper and lower FOVs: the former consists of upper (U), upper-left (UL), and upper-right (UR) locations, while the latter consists of lower (L), lower-left (LL), and lower-right (LR) locations. Horizontally, the target positions were categorized into left and right FOVs: the former consists of left (L), upper-left (UL), and lower-left (LL) locations, while the latter consists of right (R), upper-right (UR), and lower-right (LR) locations. The user, respectively, performs upward, downward, leftward, and rightward hand movements to select the targets in the divided FOVs, as illustrated in the lower part of Fig. 4.
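The grouping of the eight target locations into vertically and horizontally divided FOVs can be written as a lookup. Full location names are used here instead of the abbreviations in the text, since "L" denotes both the lower and the left location there; the names and the helper function are assumptions for this example.

```python
# Vertical split: the pure left and right locations belong to neither half.
VERTICAL_FOV = {
    "upper": {"upper", "upper-left", "upper-right"},
    "lower": {"lower", "lower-left", "lower-right"},
}

# Horizontal split: the pure upper and lower locations belong to neither half.
HORIZONTAL_FOV = {
    "left": {"left", "upper-left", "lower-left"},
    "right": {"right", "upper-right", "lower-right"},
}


def fov_groups(location):
    """Return the set of divided FOVs that a target location belongs to."""
    groups = set()
    for name, members in {**VERTICAL_FOV, **HORIZONTAL_FOV}.items():
        if location in members:
            groups.add(name)
    return groups
```

A diagonal location such as `"upper-left"` contributes to both the upper and the left FOV, whereas `"left"` contributes only to the left FOV.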

4.5.1 Movement time (MT)

The MT refers to the time the participant spent in moving the hand to reach a target and specifically does not include the homing time, dwell time, or reaction time when a discrete task is used (Soukoreff and MacKenzie 2004). Table 1 presents the MT results for the divided FOVs with two hands separated.

To compare the target selection efficiency in vertically divided FOVs, Fitts’ Law modelling of the MT results was performed for both hands in the upward and downward hand movement directions. As shown in Fig. 5, the MT value of target selection in the downward direction was significantly lower than that in the upward direction for both hands. Moreover, the Fitts’ Law modelling line of the downward direction had a smaller slope than that of the upward direction. A factorial repeated-measures ANOVA for Hand Choice × Vertical Direction (upward, downward) × Fitts’ ID confirmed a significant effect of Vertical Direction (F(1, 3159) = 8555.16, p < 0.001), but no obvious effect of Hand Choice (F(1, 3159) = 0.011, p = 0.925). These results indicated that regardless of which hand was engaged, free hand selection of the targets that were located at the lower side of the hand was more efficient than selection of the targets at the upper side. In addition, an interaction effect was found for Vertical Direction × Fitts’ ID (F(6, 18,954) = 158.23, p < 0.001), indicating that in more difficult interaction tasks, target selection in downward directions had a more obvious superiority in efficiency compared to that in upward directions.

We then compared the MT results between the leftward and rightward movement directions with either hand. Figure 6 shows the contrastive result of the MT modelling between two hands. As presented in the left diagram, when the left hand was used, MT in the leftward direction was significantly lower than that in the rightward direction. In the right diagram, when the right hand was used, the result was reversed. A factorial repeated-measures ANOVA for Hand Choice × Horizontal Direction (leftward, rightward) × Fitts’ ID showed that there was no significant effect of Hand Choice (F(1, 3159) = 0.203, p = 0.476) or Horizontal Direction (F(1, 3159) = 0.037, p = 0.194), but there was a notable interaction effect of Hand Choice × Horizontal Direction (F(1, 3159) = 2970.23, p < 0.001). Further analyses using a two-tailed dependent T test showed that left hand interaction at the left side had a significantly lower MT value than that at the right side (left side: M = 670.9; right side: M = 816.8; t(3159) = − 16.60, p < 0.001); but for the right hand, the MT value at the right side became lower than that at the left side (left side: M = 823.2; right side: M = 658.6; t(3159) = 16.56, p < 0.001). These results indicated that the choice of the hand being used has a decisive effect on the target selection efficiency in VR: targets located on the same side as the hand being used can be selected more efficiently than those on the opposite side.

4.5.2 Selection error rate (ER)

As explained in Sect. 2.2, we used the target re-entry (TRE) metric (MacKenzie et al. 2001) to assess the target selection ER. TRE is calculated as the proportion of error trials among all selection trials. Here, error trials refer to the selection events where the cursor entered an incorrect position or reached the target position but slid away without remaining there for a sufficient amount of time. Table 2 presents the TRE results in different movement directions with two hands separated.

As shown in Fig. 7, TRE in the downward direction was lower than that in the upward direction for both hands. A factorial repeated-measures ANOVA for Hand Choice × Vertical Direction (upward, downward) × Fitts’ ID confirmed a significant effect of Vertical Direction (F(1, 859) = 4.78, p = 0.039), but no obvious effect of Hand Choice (F(1, 859) = 0.02, p = 0.89). It was calculated that free hand selection in the upward direction had a mean TRE of 0.14 (SD = 0.038); but in the downward direction, the mean TRE had a significantly lower value of 0.11 (SD = 0.027). These results indicated that free hand interaction in the downward direction was more accurate than that in the upward direction.

Figure 8 presents the contrastive results of the TRE in the leftward and rightward directions for either hand. The left hand had a lower TRE in selection of targets located at the left side, but the right hand selected targets located at the right side more accurately. A factorial repeated-measures ANOVA for Hand Choice × Horizontal Direction (leftward, rightward) × Fitts’ ID found no significant effect of Hand Choice (F(1, 859) = 2.28, p = 0.67) or Horizontal Direction (F(1, 859) = 3.08, p = 0.11), but found a notable interaction effect of Hand Choice × Horizontal Direction (F(1, 859) = 234.32, p < 0.001). Further analyses using two-tailed dependent T tests showed that the left hand had a significantly lower TRE at the left side than at the right side (left side: M = 0.11, SD = 0.031; right side: M = 0.15, SD = 0.036; p < 0.001), but the right hand showed the opposite pattern (left side: M = 0.14, SD = 0.034; right side: M = 0.11, SD = 0.033; p < 0.001). The above results showed that the choice of hand had a decisive effect on the target selection accuracy: in left hand interaction, targets located at the left side could be selected more accurately; but in right hand interaction, targets located at the right side could be selected more accurately.

4.6 Discussion

The first experiment demonstrated that hand operation in the downward direction was more efficient and accurate than that in the upward direction. Thus, in a VR scene, targets at lower positions relative to the hand can be selected more efficiently and accurately than those at higher positions. This finding was consistent with the earlier finding that a user’s visually guided gestural operation in the lower FOV is more skilled and flexible than that in the upper FOV (Po et al. 2004). It was also found that the choice of the hand being used had a crucial impact on free hand interaction in VR: the left hand selected targets at the left side more promptly and accurately, whereas the right hand performed better in selecting targets at the right side. Based on the above findings, hypotheses 1 (H1) and 2 (H2) are supported.

Based on the effects of the hand choice and hand operating position on the target selection results in VR, implications and guidelines are drawn for building more efficient and easier-to-use UIs in VR applications. For example, since hand movement in the upward direction requires raising the hand against gravity, it not only has a lower operation efficiency and accuracy but also causes arm fatigue more easily than a hand downward movement. From this perspective, an optimization strategy for the UI component layout in VR is to place the interactive objects at lower heights. In sequential target selection tasks, it is suggested that the targets should be located based on the selection order, and a general principle is to place the latter targets at a lower height than that of the previous target. Given the hand choice effect on the interaction performance, we recommend applying computer vision techniques to identify the user’s hand in operation and developing a hand-adaptive UI layout in a 3D virtual environment.

5 Comparative study

In this section, we propose a hand-adaptive UI technique to improve target selection performance in VR. We then describe a comparative study conducted to demonstrate the practical benefits of the hand-adaptive UI relative to the traditional eye-centred UI. In the comparative study, another group of participants was recruited to experience the hand-adaptive UI and the traditional one. They were required to complete a series of operation tasks in each of the two UI conditions. Their task performance, usability ratings, perceived task difficulty and physical exertion were then compared.

5.1 Hand-adaptive UI technique

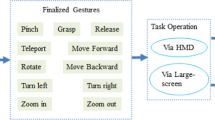

Given the effects of the hand choice and the hand operating position on free hand interaction in VR, we propose that an intelligent hand-adaptive technique that can adjust the UI component layout based on the hand orientation and position is a beneficial method for building interactive UIs in VR. Based on a literature review, the traditional eye-centred UI principle was derived from software and web UI design in which the visual perception of the UI information is considered more often than the user interaction performance; thus, the UI components are often located in the centre of the FOV for more efficient visual searching and monitoring (Mackinlay et al. 1991; Travis 1990). But in a hand-adaptive UI, the hand interaction performance receives more attention, and the user’s arm movement features are treated as important factors that influence user interaction results and ergonomics. In this technique, the user’s hand being used is identified through a non-intrusive method, and the UI components are located based on the hand position. For example, when the user interacts in the VR environment through his or her right hand, the hand-adaptive UI generates interactive targets at the lower-right side of the hand for a higher efficiency and accuracy in the target selection. Figure 9 presents a comparison of the effects of generating the interactive target layouts using the traditional eye-centred UI and hand-adaptive UI approaches.

The hand-adaptive UI technique is designed to identify and track the user’s joints (e.g., the left and right shoulder, elbow and hand joints) in real time through an RGBD camera and the natural interaction API of OpenNI, as explained in Sect. 4.3. The user is judged to be interacting with the left hand only when the left hand alone is identified, or when both hands are identified simultaneously but the left hand joint is clearly higher than the right hand joint (height difference ≥ 15 cm). If the two hands are operating at an almost equal height, a textual hint is presented at the centre of the UI, and the interaction is suspended. Otherwise, the user is judged to be interacting with the right hand.
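The decision rule above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used in the study: the function name, the `ActiveHand` enum, the use of metres, and the reading of "almost equal height" as "height difference below the same 15 cm threshold" are all assumptions made here.

```python
from enum import Enum
from typing import Optional

# 15 cm threshold taken from the text; expressing it in metres is an assumption.
HEIGHT_THRESHOLD_M = 0.15


class ActiveHand(Enum):
    LEFT = "left"
    RIGHT = "right"
    AMBIGUOUS = "ambiguous"  # show a textual hint and suspend interaction


def identify_active_hand(
    left_y: Optional[float],
    right_y: Optional[float],
    threshold: float = HEIGHT_THRESHOLD_M,
) -> ActiveHand:
    """Classify the interacting hand from tracked hand-joint heights.

    left_y / right_y are hand-joint heights in metres, or None when the
    joint is not currently tracked (hypothetical input convention).
    """
    # Only the left hand is identified.
    if left_y is not None and right_y is None:
        return ActiveHand.LEFT
    if left_y is not None and right_y is not None:
        diff = left_y - right_y
        # Both hands identified, left clearly higher.
        if diff >= threshold:
            return ActiveHand.LEFT
        # Assumption: "almost equal height" means |difference| < threshold.
        if abs(diff) < threshold:
            return ActiveHand.AMBIGUOUS
    # "Otherwise" branch of the rule: right hand.
    return ActiveHand.RIGHT
```

Note that, read literally, the "otherwise" branch also covers the case in which no hand is tracked; a real system would probably handle that case separately.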

We then performed a comparative study to demonstrate the benefits of the hand-adaptive UI relative to the traditional eye-centred UI through an e-shopping VR application (see Notes). Such an application was selected for the following reason. In this application, a computer-simulated 3D shopping mall scene with product shelves is rendered; the user is immersed in the shopping scene and navigates and searches for products on the shelves much as in a real shop. Since target pointing and selection remain by far the dominant interaction paradigm in most UIs (Vogel and Balakrishnan 2005), such an e-shopping application is a good representation of a 3D programme in a VR environment.

5.2 Participants

Twenty volunteer participants (14 males and 6 females) were recruited with a mean age of 23.7 years (SD = 3.20). The participants were staff and students from a local college campus. They had normal or corrected-to-normal vision, without any physical injuries or discomfort. All of the participants used their right hand as the dominant hand. Eight of them had participated in the earlier experiment, while the other 12 had never experienced VR HMDs before this study. To provide a correct perspective and stereoscopic rendering on the HMD, the IPDs of the participants were measured through the same method as in the first experiment. The results ranged from 6.90 to 7.60 cm (M = 7.36, SD = 0.38).

5.3 Apparatus and method

The experimental apparatus, such as the Oculus HMD, ASUS RGBD camera, workstation computer, and other environmental conditions, remained the same as the first experiment. The virtual scene in the e-shopping application was implemented and rendered through the Unity3D engine in C#. In this application, a stereoscopic scene of a shopping mall was presented with different kinds of products placed on shelves. Once the user’s palm was identified, the palm centre was tracked to control the location of the pointer in the VR scene. The pointer was rendered as an orange coloured ‘+’ icon. When the user moved the pointer to the target and sustained it there for 1000 ms without sliding away, the target was selected and highlighted with an orange border. Then, a group of interactive buttons was superimposed on the 3D scene, including a button to add the product to the cart, a button with detailed product information, a purchase button and an exit button.
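The dwell-based selection described above (holding the pointer on a target for 1000 ms) can be illustrated with a small state machine. This is a hedged sketch in Python rather than the Unity3D/C# code used in the study; the class name `DwellSelector`, the string target identifiers and the millisecond timestamps are assumptions made for illustration.

```python
from typing import Optional

DWELL_MS = 1000.0  # dwell duration stated in the text


class DwellSelector:
    """Fires a selection once the pointer has stayed on one target long enough."""

    def __init__(self, dwell_ms: float = DWELL_MS) -> None:
        self.dwell_ms = dwell_ms
        self._target: Optional[str] = None  # target currently under the pointer
        self._since_ms = 0.0                # time at which the pointer entered it
        self._fired = False                 # avoid re-selecting while still hovering

    def update(self, hovered: Optional[str], now_ms: float) -> Optional[str]:
        """Call once per frame; returns the target id when a selection fires."""
        if hovered != self._target:
            # Pointer slid to another target (or off all targets): restart the timer.
            self._target = hovered
            self._since_ms = now_ms
            self._fired = False
            return None
        if hovered is None or self._fired:
            return None
        if now_ms - self._since_ms >= self.dwell_ms:
            self._fired = True
            return hovered
        return None
```

In a per-frame loop, `update` would be fed the target currently under the palm-controlled pointer and the current time; a non-`None` return value is the moment at which the target would be highlighted and the buttons shown.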

In the traditional eye-centred UI solution, these buttons were located at the centre of the FOV, as shown in the left screenshot of Fig. 9; in the hand-adaptive UI proposal, the buttons were presented at the lower-left side or the lower-right side of the target depending on the user’s hand, as shown in the right screenshot of Fig. 9. The distances from the target to the interactive buttons were flexibly adjusted from 64 mm to 324 mm based on the target location in the FOV. The traditional eye-centred UI was selected as the baseline, and it was compared with the hand-adaptive UI in terms of the interaction task performance, usability and user experience. Other variables, such as the button size, the spatial distance between buttons and the aesthetic style of the buttons, were kept identical across the two UI techniques.
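As a rough illustration of the hand-adaptive placement, the sketch below computes an anchor point for the button group relative to a selected target. The 64 mm to 324 mm range comes from the text; everything else is an assumption made for this example, including the function name, the 2D coordinate convention (x to the right, y upward, in millimetres), the 45° diagonal, and the fact that the requested offset is passed in by the caller, since the text does not specify how the distance is derived from the target location.

```python
import math
from typing import Tuple

MIN_OFFSET_MM = 64.0   # lower bound stated in the text
MAX_OFFSET_MM = 324.0  # upper bound stated in the text


def button_anchor(
    target_xy: Tuple[float, float],
    hand: str,
    requested_offset_mm: float,
    angle_deg: float = 45.0,  # assumed diagonal direction, not from the paper
) -> Tuple[float, float]:
    """Return the (x, y) anchor of the button group, in mm.

    Left hand -> lower-left of the target; right hand -> lower-right.
    """
    if hand not in ("left", "right"):
        raise ValueError("hand must be 'left' or 'right'")
    # Clamp the target-to-button distance to the reported range.
    d = min(max(requested_offset_mm, MIN_OFFSET_MM), MAX_OFFSET_MM)
    dx = d * math.cos(math.radians(angle_deg))
    dy = d * math.sin(math.radians(angle_deg))
    sign = -1.0 if hand == "left" else 1.0
    return (target_xy[0] + sign * dx, target_xy[1] - dy)
```

For a right-hand user the anchor lands below and to the right of the target; for a left-hand user it is mirrored horizontally.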

Prior to the study, all the participants were given a brief introduction to the purpose of the study and the task requirements, and they were then provided with a task sheet. The task sheet consisted of 10 items, each of which was a statement describing an interaction task, such as ‘find a pair of black shoes with a price of $110.0 from the Adidas store, and put it into your shopping cart’. The participants were given sufficient time to read through the task sheet and understand the task requirements. During the study, the experimenter repeatedly reminded the participant of the task items, and the participant was required to report when the task was completed. The application programme was designed to record the participant’s task performance, including the completion time of seeking products and the moments of acquiring different buttons. Note that the task sheets provided to different participants consisted of different tasks, but the difficulty was counterbalanced.

Each participant was required to complete two blocks: one block consisted of tasks using the traditional eye-centred UI, while the other block consisted of tasks using the hand-adaptive UI. The block order was counterbalanced across the participants: half of the participants started with the traditional UI, while the other half started with the hand-adaptive UI. Each participant was required to interact with only one hand at a time but was allowed to switch the interacting hand freely throughout the tasks. Between the two blocks, the participant was given half an hour to relax their arms and eyes, and different task sheets were assigned to the two blocks to limit learning effects. After each block, the participant was required to complete three questionnaires measuring perceived usability, task difficulty and physical exertion, respectively. More specifically, the System Usability Scale (SUS) questionnaire from Brooke (1996) was adopted to measure perceived usability; the Borg15 Scale from Borg (1982) was adopted to assess physical exertion in interaction; and the NASA TLX scale from Hart (2006) was adopted to evaluate the perceived difficulty of the interaction tasks in terms of 6 measurements. Afterwards, the participants were encouraged to give comments about the interaction techniques, UIs and overall user experience in the VR application.
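For readers unfamiliar with the SUS, the sketch below shows the standard scoring procedure for the 10-item questionnaire (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5). The paper does not describe how its SUS responses were scored, so this is given only as the conventional procedure; the function name is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a 0-100 SUS score from ten responses on a 1-5 scale.

    responses[0] is item 1, responses[1] is item 2, and so on.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5
```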

5.4 Results

Given the time records in the task logs, the operation time (or response time) for acquiring specific buttons was calculated. Here, the operation time refers to the time interval from when a product was selected to when a functional button was activated, such as adding the product to the shopping cart or purchasing it. Additionally, the results of the questionnaires and scales were collected to analyse the participants’ subjective evaluations of the UI techniques. Figure 10 contrasts (a) the operation time, (b) perceived physical exertion and (c) usability of the traditional UI and the hand-adaptive UI. As presented, the hand-adaptive UI resulted in a far shorter operation time than the eye-centred UI (two-tailed independent T test, traditional UI: M = 1055.9, SD = 224.76; hand-adaptive UI: M = 696.3, SD = 118.72; T(19) = 5.69, p < 0.001), indicating that the new UI technique had a clear advantage in interaction efficiency over the traditional eye-centred technique. In the usability analysis, there was no significant difference between the hand-adaptive UI and the traditional UI (Mann–Whitney, U = 34.00, Z = −0.054, p = 0.566). However, in the physical exertion assessment (Borg15 scale), the hand-adaptive UI was found to be less tiring than the eye-centred UI (Mann–Whitney, U = 13.00, Z = −3.119, p = 0.002).
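The operation time defined above can be derived from a timestamped event log along the following lines. This is a minimal sketch under assumed conventions: the log format (chronological `(timestamp_ms, kind)` pairs) and the event names `"product_selected"` and `"button_activated"` are hypothetical and are not taken from the study’s software.

```python
from typing import Iterable, List, Tuple


def operation_times(events: Iterable[Tuple[float, str]]) -> List[float]:
    """Return the interval from each product selection to the next button activation.

    events: chronological (timestamp_ms, kind) pairs, where kind is
    "product_selected" or "button_activated" (assumed event names).
    """
    times: List[float] = []
    selected_at = None
    for timestamp_ms, kind in events:
        if kind == "product_selected":
            # A new selection restarts the interval.
            selected_at = timestamp_ms
        elif kind == "button_activated" and selected_at is not None:
            times.append(timestamp_ms - selected_at)
            selected_at = None  # ignore further activations until the next selection
    return times
```

Per-participant means of these intervals would then be the values compared between the two UI conditions.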

The perceived task difficulty was assessed through 6 measurements: mental demand, physical demand, temporal demand, overall performance, effort and frustration. Table 3 provides a brief interpretation of the purpose of each measurement. As expected, the data from the perceived usability assessment, the physical exertion assessment, and the perceived difficulty assessment were found to be non-normally distributed and thus were analysed through a Mann–Whitney nonparametric test.

Table 4 shows the statistical results of the perceived task difficulty assessment, for the separate measurements and overall. The hand-adaptive UI was perceived to have a significantly lower task difficulty than the traditional UI (Mann–Whitney, U = 1.00, Z = −2.73, p = 0.006). On the separate scales of mental and physical demand, perceived effort and frustration, the hand-adaptive UI achieved a higher level of user satisfaction than the traditional UI (all p < 0.001).

The participants’ comments were consistent with the statistical results: seven participants said that they preferred the hand-adaptive UI to the traditional eye-centred UI because the UI components in the latter sometimes obstructed their view. In particular, when the participant was seeking targets in the VR scene, the UI components superimposed at the centre of the FOV became obstacles that harmed the participant’s visual searching effectiveness and concentration. In the hand-adaptive UI, however, the view was less affected by the interface components, and thus, it was generally considered more immersive and easier to use than the traditional UI. Nine participants said that free hand interaction in the hand-adaptive UI was more efficient, more convenient and less tiring, while the other 11 participants expressed no preference. Two participants complained that when moving their hand from one product to another in the hand-adaptive UI, all interactive buttons changed locations simultaneously, which made it harder to memorize the components’ positions in the UI.

5.5 Discussion

Through a comparative study, the hand-adaptive UI was found to outperform the traditional eye-centred UI in terms of interaction efficiency, perceived task load, and physical comfort in a VR environment. Furthermore, an adaptive layout of the UI components based on the user’s hand being used was determined to be easily accepted by users and more appealing than the traditional static UI layout, and at the same time, it has little impact on usability. Based on the results of the target selection experiment and the comparative study, implications and suggestions for designing more efficient UIs in VR are discussed as follows:

First, more sophisticated ergonomic factors, such as the functional features of the user’s sensorimotor systems and the kinematic features of arm movement, have been shown to affect free hand interaction performance in VR; these factors have been investigated less often in traditional mouse-based interactions. From this perspective, several factors need to be considered simultaneously when designing UI component layouts in VR applications. More specifically, (a) the sense of presence and user immersion, (b) the effect of visual perception, (c) the efficiency and accuracy of free hand interaction, (d) fatiguability in free hand interaction, (e) hand operation accuracy and comfort level at different positions, and (f) the user’s handedness are all important metrics that should be comprehensively considered in VR-related assessments.

Second, our work has found that free hand interaction performance in VR is closely related to the kinematic features of arm movement. More specifically, hand pointing and target selection in some movement directions are more efficient and accurate than in other directions. In general, hand movement in downward directions yields more efficient pointing and more accurate selection than that in upward directions. The left hand interacts more efficiently and accurately in leftward directions, whereas the right hand interacts more efficiently and accurately in rightward directions. We term this contrastive performance of the two hands the ‘hand choice effect’, which has seldom been investigated in earlier research. Based on these findings, we suggest adopting computer vision-based techniques to identify the user’s hand in operation in real time and, on that basis, building a hand-adaptive UI component layout in VR.

Last but not least, the interactive targets in our first experiment were rendered as spheres without any semantics, but in practical VR applications, the targets are mostly real objects in various forms. Some participants in the experiment suggested designing real objects to replace the spheres. They said that real objects with semantic appearances could help them develop a spatial memory of the target positions more easily; thus, they could acquire the targets without visual guidance. The participants who thought more highly of the hand-adaptive UI also appreciated the significance of a semantic layout of the UI components in VR interaction. They commented that in the hand-adaptive UI, the spatial layout of the targets based on the hand position was beneficial for building a semantic correlation between the targets and spatial positions. For example, the shopping cart button was placed at the left or right side of the hand, and the purchase button was placed in the downward direction. Once the semantic correlation between buttons and spatial positions is built and memorized, users can acquire the buttons more efficiently. Users may even be able to acquire the buttons eyes-free through their spatial memory of the button positions. In the future design of 3DUI layouts in VR applications, we suggest that designers and ergonomic engineers take advantage of the user’s spatial memory and the semantic correlation between objects and spatial positions. To make the semantic correlation easier to understand, we suggest that the spatial locations be consistent with users’ cognition and their daily experience. For example, frequently acquired objects are better placed in the space around the hand, and buttons such as the light switch and trash bin should be placed at high and low positions, respectively.

This research was conducted in a VR environment with an Oculus HMD for two reasons. First, the most fundamental purpose of this research is to investigate free hand interaction performance and the factors that influence it, and free hand interaction techniques can be applied in multiple fields, e.g., motion-sensing video games and VR and AR applications. Among these, VR is the most representative technology and has penetrated many aspects of life. Conclusions gained from VR experiments can benefit a large number of free hand interaction applications. Second, the interactive space in a VR environment is far larger than that in traditional interactions. In a VR interactive space, targets can be located at any position surrounding the body, and the user needs to alternate hands and perform multi-directional hand movements to acquire different targets. In a limited interactive space, by contrast, e.g., where all targets are located at the left side of the body, the user can select all targets with one arm posture and without any large changes in the hand position. From this perspective, a VR environment with an Oculus HMD is an appropriate experimental setup for measuring the effects of the hand choice and hand operating position on free hand interaction performance. Accordingly, the findings drawn from this research are most applicable to VR applications.

6 Limitations and future work

This research has several limitations. First, visual searching and navigation efficiencies are also important evaluation metrics in VR, but they are not considered in this paper. To gain a more comprehensive understanding of how the hand-adaptive UI compares with the traditional eye-centred UI in terms of visual navigation and target searching efficiency, we plan to use an eye-tracking headset in our future experiments. Second, although the hand-adaptive UI has been found to cause less fatigue than the eye-centred UI (see Fig. 10), the factors responsible for the arm fatigue level in free hand interaction are still not completely understood. In the future, it would be valuable to investigate fatigue-reducing designs, including the UI features, free hand interaction paradigms and techniques that help alleviate arm fatigue in a VR environment. Finally, the hand-adaptive UI technique in this paper identifies the user’s hand being used by comparing the heights of the left and right hands; thus, it is applicable only to single-handed interaction. In bimanual interactions, this mechanism is no longer valid. For example, when two hands simultaneously perform an interaction task, it is unnatural to raise one hand higher than the other. In the next iteration of the hand-adaptive technique, we will introduce new mechanisms for identifying the hand being used, e.g., performing a specific gesture to decide which hand the UI adapts to.

Notes

This e-shopping VR application was supported by the ‘Buy+’ program from Taobao corporation (https://www.taobao.com), Alibaba group.

References

Adamovich SV, Fluet GG, Tunik E, Merians AS (2009) Sensorimotor training in virtual reality: a review. NeuroRehabilitation 25(1):29–44. https://doi.org/10.3233/nre-2009-0497

Bacca J, Baldiris S, Fabregat R, Graf S, Kinshuk K (2014) Augmented reality trends in education: a systematic review of research and applications. Educ Technol Soc 17(4):133–149

Borg GA (1982) Psychophysical bases of perceived exertion. Med Sci Sports Exerc 14(5):377–381

Boring S, Jurmu M, Butz A (2009) Scroll, tilt or move it: using mobile phones to continuously control pointers on large public displays. In: Proceedings of the 21st annual conference of the Australian computer–human interaction special interest group: design. ACM, pp 161–168. https://doi.org/10.1145/1738826.1738853

Bowman DA (1999) Interaction techniques for common tasks in immersive virtual environments—design, evaluation, and application. Ann Rheum Dis 28(3):37–53

Brooke J (1996) SUS-A quick and dirty usability scale. Usability Eval Ind 189(194):4–7

Cho OH, Lee WH (2012) Gesture recognition using simple-OpenNI for implement interactive contents. Future Inf Technol Appl Serv 179:141–146

Colby CL (1998) Action-oriented spatial reference frames in cortex. Neuron 20(1):15–24. https://doi.org/10.1016/S0896-6273(00)80429-8

Danckert J, Goodale MA (2001) Superior performance for visually guided pointing in the lower visual field. Exp Brain Res 137(3):303–308. https://doi.org/10.1007/s002210000653

Fikkert FW (2010) Gesture interaction at a distance. Universiteit Twente, Enschede

Fitts PM (1954) The information capacity of the human motor system in controlling the amplitude of movement. J Exp Psychol 47(6):381–391

Gerber D, Bechmann D (2005) The spin menu: a menu system for virtual environments. Proc IEEE Virtual Real 2005:271–272. https://doi.org/10.1109/VR.2005.1492790

Haque F, Nancel M, Vogel D (2015) Myopoint: pointing and clicking using forearm mounted electromyography and inertial motion sensors. In: Proceedings of the 33rd annual ACM conference on human factors in computing systems. ACM, Seoul, Republic of Korea, pp 3653–3656. https://doi.org/10.1145/2702123.2702133

Harrison C, Ramamurthy S, Hudson SE (2012) On-body interaction: armed and dangerous. In: Proceedings of the sixth international conference on tangible, embedded and embodied interaction. ACM, Kingston, Ontario, Canada, pp 69–76. https://doi.org/10.1145/2148131.2148148

Hart SG (2006) Nasa-task load index (Nasa-TLX); 20 years later. Hum Factors Ergon Soc Annu Meet Proc 50(9):904–908

Hincapié-Ramos JD, Guo X, Moghadasian P, Irani P (2014) Consumed endurance: a metric to quantify arm fatigue of mid-air interactions. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, Toronto, Ontario, Canada, pp 1063–1072. https://doi.org/10.1145/2556288.2557130

Lemmerman DK, LaViola JJ (2007) Effects of interaction-display offset on user performance in surround screen virtual environments. Proc IEEE Virtual Real 2007:303–304. https://doi.org/10.1109/VR.2007.352513

Liu G, Chua R, Enns JT (2008) Attention for perception and action: task interference for action planning, but not for online control. Exp Brain Res 185(4):709–717. https://doi.org/10.1007/s00221-007-1196-5

Lou X, Peng R, Hansen P, Li XA (2018) Effects of user’s hand orientation and spatial movements on free hand interactions with large displays. Int J Hum Comput Interact 34(6):519–532

Lubos P, Bruder G, Steinicke F (2014) Analysis of direct selection in head-mounted display environments. In: Proceedings of the 2014 IEEE symposium on 3D user interfaces (3DUI), pp 11–18. https://doi.org/10.1109/3dui.2014.6798834

Lubos P, Bruder G, Ariza O, Steinicke F (2016) Touching the sphere: leveraging joint-centered kinespheres for spatial user interaction. In: Proceedings of the 2016 symposium on spatial user interaction. ACM, Tokyo, Japan, pp 13–22. https://doi.org/10.1145/2983310.2985753

MacKenzie IS, Kauppinen T, Silfverberg M (2001) Accuracy measures for evaluating computer pointing devices. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, Seattle, Washington, USA, pp 9–16. https://doi.org/10.1145/365024.365028

Mackinlay J, Card SK, Robertson GG (1991) A semantic analysis of the design space of input devices. ACM Trans Inf Syst 9(2):99–122

Mäkelä V, Heimonen T, Turunen M (2014) Magnetic cursor: improving target selection in freehand pointing interfaces. In: Proceedings of the international symposium on pervasive displays. ACM, Copenhagen, Denmark, pp 112–117. https://doi.org/10.1145/2611009.2611025

Mine MR, Frederick P, Brooks J, Sequin CH (1997) Moving objects in space: exploiting proprioception in virtual-environment interaction. In: Proceedings of the 24th annual conference on computer graphics and interactive techniques, pp 19–26. https://doi.org/10.1145/258734.258747

Murata A, Iwase H (2001) Extending Fitts’ law to a three-dimensional pointing task. Hum Mov Sci 20(6):791–805. https://doi.org/10.1016/S0167-9457(01)00058-6

Nancel M, Pietriga E, Chapuis O, Beaudouin-Lafon M (2015) Mid-air pointing on ultra-walls. ACM Trans Comput Hum Interact: TOCHI 22(5):1–62

Ohta Y, Tamura H (2014) Mixed reality: merging real and virtual worlds. Springer, Berlin

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9(1):97–113

Po BA, Fisher BD, Booth KS (2004) Mouse and touchscreen selection in the upper and lower visual fields. In: Proceedings of the SIGCHI conference on human factors in computing systems. ACM, Vienna, Austria, pp 359–366. https://doi.org/10.1145/985692.985738

Previc FH (1990) Functional specialization in the lower and upper visual fields in humans: its ecological origins and neurophysiological implications. Behav Brain Sci 13(3):519–542. https://doi.org/10.1017/S0140525X00080018

Ren G, O’Neill E (2013) 3D selection with freehand gesture. Comput Graph 37(3):101–120. https://doi.org/10.1016/j.cag.2012.12.006

Sagayam KM, Hemanth DJ (2016) Hand posture and gesture recognition techniques for virtual reality applications: a survey. Virtual Real. https://doi.org/10.1007/s10055-016-0301-0

Shoemaker G, Tsukitani T, Kitamura Y, Booth KS (2010) Body-centric interaction techniques for very large wall displays. In: Proceedings of the 6th Nordic conference on human–computer interaction: extending boundaries. ACM, Reykjavik, Iceland, pp 463–472. https://doi.org/10.1145/1868914.1868967

Soukoreff RW, MacKenzie IS (2004) Towards a standard for pointing device evaluation, perspectives on 27 years of Fitts’ Law research in HCI. Int J Hum Comput Stud 61(6):751–789

Tanii K, Kogi K, Sadoyama T (1972) Spontaneous alternation of the working arm in static overhead work. J Hum Ergol 1(2):143–155

Travis DS (1990) Applying visual psychophysics to user interface design. Behav Inf Technol 9(5):425–438

Usoh M, Catena E, Arman S, Slater M (2006) Using presence questionnaires in reality. Presence Teleoperator Virtual Environ 9(5):497–503

Vogel D, Balakrishnan R (2005) Distant freehand pointing and clicking on very large, high resolution displays. In: Proceedings of the 18th annual ACM symposium on user interface software and technology. ACM, Seattle, WA, USA, pp 33–42. https://doi.org/10.1145/1095034.1095041

Ware C, Arsenault R (2004) Frames of reference in virtual object rotation. In: Proceedings of the 1st symposium on applied perception in graphics and visualization. ACM, Los Angeles, California, USA, pp 135–141. https://doi.org/10.1145/1012551.1012576

Wingrave CA, Bowman DA (2005) Baseline factors for raycasting selection. In: Proceedings of HCI international 2005. Las Vegas, Nevada, USA, pp 1–10

Acknowledgements

This research was supported by the National Natural Science Foundation of China under Grant 61902097, the Zhejiang Provincial Natural Science Funding under Grant Q19F020010, the Open Research Funding of State Key Laboratory for Novel Software Technology of Nanjing University under Grant KFKT2019B18, and the Open Research Funding of State Key Laboratory of Virtual Reality Technology and Systems under Grant VRLAB2020B03.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Lou, X., Li, X.A., Hansen, P. et al. Hand-adaptive user interface: improved gestural interaction in virtual reality. Virtual Reality 25, 367–382 (2021). https://doi.org/10.1007/s10055-020-00461-7
