Abstract

Objective

The aim of the study was to develop, validate and analyze the educational impact of a high-fidelity simulation model for open preperitoneal mesh repair of an umbilical hernia.

Summary of background data

The number of surgical simulators available for training residents is limited. Primary for ethical reasons and secondary for the emerging pay-per-quality policies, practicing-on simulators rather than patients is considered gold standard. Validated full-procedural surgical models will become more and more important in training residents. Such models may assure that evidence-based standards regarding technical aspects of the procedures become integral part of the curriculum. Furthermore, they can be employed as a quality control of residents’ skills (Fonseca et al. in J Surg Educ 70:129–137, 2013).

Methods

In a repeated measures design, medical students, residents in their last year of training and attending surgeons performed an open preperitoneal mesh repair on the NANEP model [NANEP stands for the German acronym Nabelhernien-Netzimplatation-Präperitonal (English: Umbilical hernia mesh implantation preperitoneal)]. Subjects were categorized as “Beginners” (internship students) or “Experts” (residents and surgeons). Content validity was analyzed by criteria of subject-matter-experts. Blinded raters assessed surgical skills by means of the Competency Assessment Tool (CAT) using the online platform “CATLIVE”. Differential validity was measured by group differences. Proficiency gain was analyzed by monitoring the learning curve (Gallagher et al. in Ann Surg 241:364–372, 2005). Post-operative examination of the simulators shed light on criterion validity.

Results

The NANEP model-proofed content and construct-valid significant Bonferroni-corrected differences were found between beginners and experts (p < 0.05). Beginners showed a significant learning increase from the first to the second surgery (p < 0.05). Post-operative examination data confirmed criterion validity.

Conclusion

The NANEP model is an inexpensive, simple and efficient simulation model. It has highly realistic features, it has been shown to be of high-fidelity, full-procedural and benchtop-model. The NANEP model meets the main needs of surgical educational courses at the beginning of residency.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Simulation has been historically used in high-risk tasks, such as military and aviation operations and nuclear power stations [3] to prevent and predict human errors before they occur and ensure that competency training is achieved in simulation. Human error and team work failure are the most common causes for safety threats in critical scenarios [4, 5]. Simultaneously surgical training in the Operating Theatre (OT) is compromised as a result of multiple factors, but mainly costs of OT and time, as well as greatest awareness of ethical issues and patient demands. Therefore, time and opportunities for teaching are expected to be effective and efficient [6, 7]. For these reasons [8], extra-clinical simulation models complement surgical training, and there has been a surge of simulation-based programs massed or integrated within the training pathway that are proving greatly beneficial for surgical training. There is some evidence that simulation-based educational assessments in the field of surgical education positively correlate with patients’ outcomes [9].

From the research perspective, it has been suggested that surgical educational research, in particular for simulation-based training [10] should adhere to the IDEAL recommendations [11]. IDEAL stands for Idea, Development, Exploration, Assessment and Long-term study. Subsequently, the proposed NANEP model is described by the criteria Idea, Development and Exploration whereas Assessment and Long-term study are not subject of the current study.

The incidence of umbilical hernia repair is 5% in the normal population, which implies that the surgical intervention occurs quite frequently [12]. Validated surgical models for conventional, non-minimally invasive surgery are scarce [2, 13, 14], to date no model for umbilical hernia repair exists.

Materials and methods

The silicon-based NANEP model was designed to be used for an open preperitoneal mesh repair of umbilical hernia. The development of the surgical NANEP course was supervised by the Institute of Medical Teaching and Medical Education Research of the University of Wuerzburg.

NANEP model

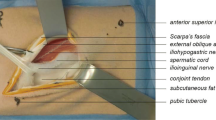

For construction of the NANEP model, a two-component silicone was used. The prototype was based on a human body. Different materials such as textiles, cotton and synthetic blood were used to achieve anatomical and visual realism and differing layers (e.g., skin, fatty tissue, abdominal fascia, peritoneum). Particular attention was placed on surgical handling (tissue handing), haptic feedback and the compatibility of the model with surgical instruments, suture materials and meshes, commonly employed in the OT. The umbilical hernia of the NANEP model was designed for the open preperitoneal mesh repair of umbilical hernia. The NANEP model can be classified as open surgical model [13]. It was manufactured at an expense of approximatively 20 Euro each.

Figure 1a, b shows the NANEP model, and Fig. 2a–d shows the most important surgical steps of umbilical hernia repair with mesh implantation in the NANEP model.

Sample size and design of the surgical NANEP course

The validation study of the NANEP model was conducted at the University Hospital Wuerzburg. Participants consisted of two groups, beginners (n = 12) and experts (n = 6). Twelve beginners were recruited among medical students. At the time point of assessment, they were in their final clinical year and reported pronounced interest in surgical techniques. The expert group consisted of six surgeons either in their final year of residency (n = 2) or in the first 2 years after residency (surgical specialists, n = 4). Sample size calculation was conducted according to Miskovic, for a power of 80% (Welch test) a minimum of 18 participants was suggested [15]. The index procedures were performed within the scope of the NANEP course (Fig. 3). One week prior to the course, participants received a written tutorial with operation steps. Prior to surgery 1 (operation 1, day 7) participants were exposed to a standardized video on which each operation step was demonstrated. Each surgery was performed by one participant, assisted by another participant. Each participant performed two operations with an interval of 1 week. Operations were video recorded. During the first operation, the tutor responded to questions of the participants and gave individual instructions. The second operation had to be performed without any additional assistance. After every surgery participants received oral feedback from the tutor. After the second operation, participants responded to the NANEP questionnaire (content validity). Afterwards, anonymized videos were uploaded on the platform CATLIVE.

The bilingual (English/German) online platform CATLIVE had been developed by the Institute for Artificial Intelligence and Applied Informatics of the Julius-Maximilians-University of Wuerzburg. Each video was rated by three blinded experts (reviewers) using the Competency Assessment Tool (CAT, construct validity, Fig. 3). Reviewer 1 was a renowned hernia expert and involved in the creation of the model, Reviewer 2 was involved in the development and construction of the model whereas reviewer 3 was a senior surgeon (general surgery). Reviewer 1 also rated the autopsy results.

Concepts used to assess the models’ quality

Content validity Validity in general is defined as the extent to which a test measures what it is supposed to measure [16, 17]. Content validity in particular is considered the most important step of test construction [18] since it determines construct validity and reliability. Content validity cannot be measured directly but requires definition of indicators [19, 20] which characterize the content [21]. In case of a surgical simulation model, these indicators are anatomy and realism [22] operationalized by items of the NANEP questionnaire. Reliability of items [21] was statistically inspected using Cronbach’s α [23, 24]. Values greater 0.70 are considered good [24], and values greater than 0.60 are considered acceptable [25, 26].

Construct validity Construct validity addresses the question whether the CAT measures competency of participants. In case the CAT measures competency, ratings of experts should be fairly consistent for all participants [20]. The latter was statistically approximated by calculation of interrater-reliability using the Finn coefficient [27]. The Finn coefficient varies between 0 and 1 where 1 implies absolute agreement between the raters. A Finn coefficient greater than 0.50 is considered acceptable, and values greater than 0.70 as good [28].

Differential validity Differential validity addresses the question whether evaluation criteria distinguish between beginners and experts [29]. For comparison, the Welch test was used since it proofed to be statistically superior to the common t test [30, 31].

Proficiency gain Once reliability and validity of the CAT tool is confirmed, performance and learning gain are measured [32]. An increase in competency from surgery 1 to surgery 2 is regarded a gain in learning. Results were statistically examined using the Welch test.

Autopsy An experienced surgeon examined all models after surgery. Aesthetic and functional criteria such as ligature of the fatty prolapse or suture of the hernial orifice plus peritoneal damage were investigated.

NANEP questionnaire

The NANEP questionnaire was used to collect demographic information and to evaluate content validity. Participants rated (1) anatomy, (2) realistic handling and (3) applicability for training. Response option was a 5-point Likert scale (1 = does not agree, 2 = does rather not agree, 3 = partially agrees, 4 = largely agrees, 5 = fully agree and 0 = N/A (not applicable)).

Competency assessment tool (CAT)

The CAT questionnaire was developed to measure competency in the field of colorectal surgery [33]. The CAT was adapted to suit the NANEP operation and segmented into procedural and content-specific aspects to capture the dynamic process of an operation. The NANEP operation itself can be segmented into three steps: (1) Exposure (2) Clearance of orifice and (3) Mesh position and orifice closure. Each step is classified by four categories: (I) Instrument use, (II) Tissue handling, (III) Near misses and errors, and (IV) End-product quality. The latter matrix accounts for 12 evaluation criteria, rated on four proficiency levels: 1 (worst performance) to 4 (best performance). Levels of competency are based on the Dreyfus model [34], Eraut [35] and Miskovic [15]. Additionally, raters were able to leave comments on the CATLIVE platform.

Results

The response rate for the NANEP questionnaire was 100%, and 18 forms were used for statistical analysis. The sample consisted of eight female and ten male participants. Twelve participants were internship students (assigned to the group of beginners) and 6 were surgeons doing general surgery residency or 2 years after completion of the residency (group of experts). Sex was equally distributed among the group of beginners with six male and six female students. The expert group consisted of two female and four male surgeons. Mean age of students was 26.18 years (SD = 2.27). On average participants were in this educational stage for 4.67 years (SD = 1.67). As expected mean age of residents or specialists was higher with 34.50 years (SD = 2.07). On average, they were in their position for 2.67 years (SD = 2.07).

Content validity

Overall, the NANEP model was rated positive. Results differed for anatomy, realistic handling and applicability for training (cf. tab. 1). Cronbach’s α was acceptable, all values exceeded 0.60. Open-answer-comments which occurred most frequently were: “Surgery felt extremely realistic”, “Practice of surgical skills was extremely helpful and fun!”, “A good opportunity to realistically practice an operation”, “Very good preparation for the surgery and great opportunity to recall anatomical structures” (Table 1).

Construct validity (CAT)

Response rate for the CAT evaluation was 100%, and 36 operations were analyzed. Except for the category complications, the α value was good. The Finn coefficient verified good interrater-reliability for all categories (cf. Fig. 4).

Differential validity and learning gain (CAT)

Comparison between beginners and experts verified differential validity. Experts significantly outperformed the beginners (Fig. 5).

Results CAT: means of beginners and experts in the 4 categories were compared. Mean (plus standard deviation) for the 4 categories: (I) Instrument use, (II) Tissue handling, (III) Near misses and errors, and (IV) End-product quality. Each category contains 3 steps: (1) Exposure, (2) Clearance of orifice, and (3) Mesh position and orifice closure. All 12 evaluation criteria were rated on four proficiency levels: 1 (worst performance) to 4 (best possible performance). In total, a maximum count for one category was 12, minimum count was 3. Results differ for beginners and experts, Welch test: ***p < .005

When analyzing the learning gain for beginners and experts separately, the learning gain for beginners is more pronounced. Beginners had a significant learning growth for the categories instrument use, tissue handling, near misses and errors and end-product quality. It may be noteworthy that experts began with excellent rating, limiting the possibility of improving skills (Fig. 6).

Results using CAT for assessment of video-taped operation of umbilical hernia in four categories: (I) Instrument use, (II) Tissue handling, (III) Near misses and errors and (IV) End-product quality. Results are shown for beginners and for experts, also stating standard deviations. Learning growth is analyzed by Welch test: *p < 0.05

Autopsy

Post-operative results were rated as follows: regarding the category “aesthetics” 42% were deficient, 33% satisfactory and 25% very good. Skin suture was perfect in 25% of the cases, in 64% skin asymmetric and in 11% loose. Suture of fascial orifice was insufficient in 53% of the cases, in 30% plain and in 17% bulged. 61% of the models showed no ligature. 25% of the models showed a plain and centered mesh, in 56% it was folded but still centered and in 19% it was not centered. In 86% of the cases, the peritoneum was intact, 3% were slightly injured and in 11% of the cases the mesh was exposed. Chi square test showed no significant difference for beginners and experts by inspection of repeated surgery. Photo examples of results are displayed in Fig. 7.

Autopsy results of post-operation NANEP model: a aesthetic (anterior view), case #06 very good, #04 deficient; b suture of hernial orifice (posterior view), case #28 sufficient and #04 insufficient due to untied knot; c ligature of fatty tissue (posterior view), case #18 sufficient and #31 no ligature; d peritoneum (posterior view), case #07 intact and #36 injured peritoneum

Discussion

The aim of the study was to develop a surgical simulator for open preperitoneal mesh repair of an umbilical hernia. The NANEP model can be characterized as high-fidelity simulator which enables to practice the entire operation. High-fidelity simulators are congruent to reality, which includes user interactions in real time [36]. The NANEP model reflects underlying anatomical morphology. Unlike low-fidelity models, it requires surgical decisions beyond a mere display of practical skills. The surgeon must choose adequate sewing material and technique, e.g., for a sufficient and flat fascial closure. A full-procedural simulation model [37] is characterized by a complex anatomical design which enables to perform an entire operation, as is the case for the open preperitoneal mesh repair of umbilical hernia. Benchtop models are usually considered as static low-fidelity models for practicing simple skills such as skin suture. It had been assumed that variable feedback would be impossible to integrate [38]. The NANEP model proofed this assumption wrong: benchtop models can be utilized to simulate entire operations.

In the present study, the NANEP model was validated with all its features, content validity was verified for anatomy, realistic handling and applicability for training. The non-significant p values for beginners and experts show content validity regardless of experience.

The significant proficiency gain for practical performance on the NANEP model is similar to results found in other studies [13, 39,40,41]. The significant proficiency gain for beginners indicates that the NANEP model is suitable for being implemented at the beginning of residency.

To effectively teach anatomy and practical skills, simulation models should become standard of the curricula [41, 42]. To maximize educational benefit when working with surgical simulation models, we recommend the following three principals:

-

1.

Offer repetitive opportunities for training, so practical skills can be improved [41, 42]. The latter proofed true for the NANEP model (Fig. 6).

-

2.

Ensure that the simulation model reflects a clinical need [41,42,43]. For this study, we selected umbilical hernia, since it is a frequent disease.

-

3.

Give participants feedback [41, 42]. In our study, this was done using the CATLIVE platform, which automatically creates a sheet with feedback for participants.

Furthermore, simulation-training programs can complement surgical training [22]. The paradox finding that experts did not reach the highest score and end-product quality dropped at the second attempt can be attributed in part to the Dunning-Kruger effect. The Dunning-Kruger effect describes a cognitive bias in which experts become too comfortable in their own skills and fail to readjust their performance. This may stress the need to use the model for simulation-training programs to complement surgical training [21]. Such programs are already successful: the London General Surgical Skills Program run at Imperial College London is obligatory for every surgeon in residency in London since 2009 and includes various simulation models, for open and laparoscopic procedures [44]. A similar program has been implemented in the USA. Since 2008, the Fundamentals of Laparoscopic Surgery-Program (FLS) training module of the American Board of Surgery (ABS) is compulsory for the ABS Certifying Examination for General Surgery [45].

Conclusion

This study is the first to present a surgical simulator for open preperitoneal mesh repair of an umbilical hernia. It supports evidence for the positive impact simulation models regarding the development of skills implemented at early stages of surgical residency. Whereas Idea, Development and Exploration were presented, Assessment and Long-Term Study require further research in terms of a curricula implementation and examination of patients’ outcomes.

References

Aggarwal R (2015) Surgical education research: an IDEAL proposition. Ann Surg 261:e55–e56

Fonseca AL, Evans LV, Gusberg RJ (2013) Open surgical simulation in residency training: a review of its status and a case for its incorporation. J Surg Educ 70:129–137

Gallagher AG, Ritter EM, Champion H, Higgins G, Fried MP, Moses G, Smith CD, Satava RM (2005) Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg 241:364–372

Flin R, O’Connor P, Mearns K (2002) Crew resource management: improving team work in high reliability industries. Team performance management. An Int J 8:68–78

Daniel M, Makary MA (2016) Medical error—the third leading cause of death in the US. BMJ 353:i2139

Weller J, Boyd M, Cumin D (2014) Teams, tribes and patient safety: overcoming barriers to effective teamwork in healthcare. Postgrad Med J 90:149–154

Aggarwal R, Darzi A (2006) Technical-skills training in the 21st century. NEJM 355:2695–2696

Reznick RK, MacRae H (2006) Teaching surgical skills—changes in the wind. NEJM 355:2664–2669

Badash I, Burttt K, Solorzano CA, Carey JN (2016) Innovations in surgery simulation: a review of past, current and future techniques. Ann Transl Med 4:453

Brydges R, Hatala R, Zendejas B, Erwin PJ, Cook DA (2015) Linking simulation-based educational assessments and patient-related outcomes: a systematic review and meta-analysis. Acad Med 90:246–256

McCulloch P, Altman DG, Campbell WB et al (2009) No surgical innovation without evaluation: the IDEAL recommendations. Lancet 374:1105–1112

Dietz UA, Menzel S, Lock J, Wiegering A (2018) The Treatment of Incisional Hernia. Dtsch Arztebl Int 115:31–37

Davies J, Khatib M, Bello F (2019) Open surgical simulation—a review. J Surg Educ 70:618–627

Vick LR, Vick KD, BormanKR Salameh JR (2007) Face, content, and construct validities of inanimate intestinal anastomoses simulation. J Surg Educ 64:365–368

Miskovic D (2012) Proficiency gain and competency assessment in laparoscopic colorectal surgery. PhD Thesis. Imperial College London

Haynes SN, Richard D, Kubany ES (1995) Content validity in psychological assessment: a functional approach to concepts and methods. Psychol Assess 7:238–247

Yaghmale F (2003) Content validity and its estimation. J Med Educ 3:25–27

Bühner M (2011) Einführung in die Test-und Fragebogenkonstruktion. Pearson Deutschland GmbH

Polit DF, Beck CT (2006) The content validity index: are you sure you know what's being reported? Critique and recommendations. Res Nurs Health 29:489–497

Polit DF, Beck CT, Owen SV (2007) Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health 30:459–467

Tavakol M, Mohagheghi MA, Dennick R (2008) Assessing the skills of surgical residents using simulation. J Surg Educ 65:77–83

Satava RM (2006) Assessing surgery skills through simulation. Clin Teacher 3:107–111

Fisseni HJ (2004) Lehrbuch der psychologischen Diagnostik: mit Hinweisen zur Intervention. Hogrefe Verlag, Göttingen

Nunnally JC (1967) Psychometric theory. McGraw-Hill, New York

Bowling A (2014) Research methods in health: investigating health and health services. Maidenhead, McGraw-Hill Education

Lehmann IJ (1965) Educational measurements and their interpretation. Wadworth Publishing Co, Belmont

Finn RH (1970) A note on estimating the reliability of categorical data. Educ Psychol Meas 30:71–76

Eichhorn T (2011) Systematische psychologisch-diagnostische Gesprächsführung und Verhaltensbeobachtung zur Erfassung leistungsrelevanter Arbeitshaltungen. University of Vienna, Vienna

Stratford PW, Riddle DL (2005) Assessing sensitivity to change: choosing the appropriate change coefficient. Health Qual Life Out 3:23

Rasch D, Guiard V (2004) The robustness of parametric statistical methods. Psychol Sci 46:175–208

Guiard V, Rasch D (2004) The robustness of two sample tests for means. A reply on von Eye’s comment. Psychol Sci 46:549–554

Scalese RJ, Obeso VZ, Issenberg SB (2008) Simulation technology for skills training and competency assessment in medical education. J Gen Int Med 23:46–49

Miskovic D, Ni M, Wyles SM et al (2013) Is competency assessment at the specialist level achievable? A study for the national training programme in laparoscopic colorectal surgery in England. Ann Surg 257:476–482

Dreyfus SE, Dreyfus HL (1980) A five-stage model of the mental activities involved in directed skill acquisition. California Univ Berkeley Operations Research Center, California

Eraut M (2002) Developing professional knowledge and competence. Routledge, London

Bradley P (2006) The history of simulation in medical education and possible future directions. Med Educ 40:254–262

Roberts KE, Bell RL, Duffy AJ (2006) Evolution of surgical skills training. World J Gastroenterol 12:3219

Hammoud MM, Nuthalapaty FS, Goepfert AR et al (2008) To the point: medical education review of the role of simulators in surgical training. Am J Obstet Gynecol 199:338–343

Grober ED, Hamstra SJ, Wanzel KR, Reznik RK, Matsumoto ED, Sidhu RS, Jarvi KA (2004) The educational impact of bench model fidelity on the acquisition of technical skill: the use of clinically relevant outcome measures. Ann Surg 240:374–381

McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ (2006) Effect of practice on standardised learning outcomes in simulation-based medical education. Med Educ 40:792–797

Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ (2005) Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teacher 27:10–28

Maran NJ, Glavin RJ (2003) Low-to high-fidelity simulation–a continuum of medical education? Med Educ 37:22–28

Kneebone R, Nestel D, Vincent C, Darzi A (2007) Complexity, risk and simulation in learning procedural skills. Med Educ 41:808–814

Khatib M, Nald N, Brenton H, Barakat NF, Sarker SK, Standfield N, Ziprin P, Kneebone R, Bello F (2014) Validation of open inguinal hernia repair simulation model: a randomized controlled educational trial. Am J Surg 208:295–301

Laubert T (2017) Ausbildung in laparoskopischen Techniken. Minimalinvasive Viszeralchirurgie. Springer, Heidelberg, pp 23–31

Acknowledgements

Dr. Alexander Wierlemann supported assessment as one of the raters (Department of General, Visceral, Vascular and Pediatric Surgery, University Hospital of Wuerzburg). Simone Menzel (Department of General, Visceral, Vascular and Pediatric Surgery, University Hospital of Wuerzburg) was of great help in developing the CATLIVE software and Hannah Gebhardt (Hernia-Group, University Hospital of Wuerzburg) provided morphological insights of clinical MRI findings of visible meshes implanted in patients according to the methodology presented in this study.

Author information

Authors and Affiliations

Contributions

All the authors contributed to the final preparation of this article, including approval of the final version of the manuscript. UF and CZ equally contributed to this study. UF, CZ and UAD developed the surgical simulator based on the work of SM. UF, SK and UD conceived and designed the study. UF, JB and UAD wrote the final study protocol and together with SM drafted the manuscript. UF and UAD implemented and ran the study, and collected the results. UF and JB analyzed the data and performed the statistical analyses. UF, UlAD, SO and FP developed the online platform.

Corresponding author

Ethics declarations

Conflict of interest

No conflict of interest is declared. The study was founded by the Medical Faculty Wuerzburg, Germany (Grant no. 620-2015) and third-party funds of working group of the Division of Hernia Repair and Abdominal Wall Reconstruction of the University Hospital Wuerzburg. The authors disclose that there are no conflicts of interest.

Ethics approval

The local institutional review and ethics board judged the project as not representing medical or epidemiological research on human subjects and as such adopted a simplified assessment protocol. The project was approved without any reservation under the proposal number 2016101302. Participation was voluntary and the results not accessible to the public.

Human and animal rights

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

No informed consent.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Friedrich, U., Backhaus, J., Zipper, C.T. et al. Validation and educational impact study of the NANEP high-fidelity simulation model for open preperitoneal mesh repair of umbilical hernia. Hernia 24, 873–881 (2020). https://doi.org/10.1007/s10029-019-02004-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10029-019-02004-9