Abstract

Anticipating consequences of disturbance interactions on ecosystem structure and function is a critical management priority as disturbance activity increases with warming climate. Across the Northern Hemisphere, extensive tree mortality from recent bark beetle outbreaks raises concerns about potential fire behavior and post-fire forest function. Silvicultural treatments (that is, partial or complete cutting of forest stands) may reduce outbreak severity and subsequent fuel loads, but longevity of pre-outbreak treatment effects on outbreak severity and post-outbreak fuel profiles remains underexplored. Further, treatments may present tradeoffs for other management objectives focused on ecosystem services (for example, carbon storage). We measured structure in old-growth subalpine forests following a recent (early 2000s) severe mountain pine beetle (MPB; Dendroctonus ponderosae) outbreak to examine effects of historical (1940s) cutting intensity on gray stage (~10 years after peak of outbreak) post-outbreak (1) fuel profiles and (2) aboveground biomass carbon. Compared to control (uncut) stands, historically cut stands subjected to the same MPB outbreak had approximately half the post-outbreak surface fuel loads, about 2–3x greater live canopy fuel loads, and greater within-stand spatial heterogeneity of dead canopy cover and available canopy fuel load. Post-outbreak total aboveground biomass carbon was similar across all stands, though historically cut stands had about 2x greater carbon in live biomass compared to uncut stands. These findings suggest tradeoffs with altered post-outbreak potential fire behavior and carbon storage in cut stands. Additional implications of historical silvicultural treatments for wildlife habitat, firefighting operations, and long-term carbon trajectories highlight temporal legacies of management on directing forest response to interacting disturbances.

Highlights

- Past silvicultural treatments altered fuels and carbon after recent beetle outbreak
- Cut stands had reduced surface fuels but increased canopy fuels post-outbreak
- Cut stands had similar total carbon as control stands, but more as live biomass

Introduction

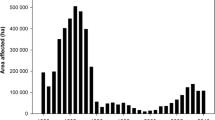

Insect outbreaks and wildfires are prominent natural disturbances in temperate forests worldwide and are projected to increase in response to warmer and drier conditions (Seidl and others 2017). Recent (1990s–2000s) outbreaks of native bark beetles (Coleoptera: Curculionidae: Scolytinae) have killed trees across tens of millions of hectares of forests in North America (Meddens and others 2012), while annual area burned, area burned at high severity, and number of large fires have increased over the past three decades (Parks and Abatzoglou 2020). As fires inevitably intersect with beetle outbreaks, heightened concern for potential fire behavior and effects on forest resources (Jenkins and others 2012) highlights the importance of further understanding this disturbance interaction.

Bark beetle outbreaks and wildfire can interact in complex ways that present challenges for management of forest ecosystem services. Interactions are highly dependent on outbreak stage (Simard and others 2011; Hicke and others 2012b; Jenkins and others 2012; Donato and others 2013a), forest type and associated fire regime (Baker 2009), and burning conditions (for example, Harvey and others 2014b). Although beetle outbreaks may not affect fire likelihood (Meigs and others 2015) or area burned (Hart and others 2015), beetle-induced tree mortality and shifts in fuel profiles can influence fire behavior (that is, fire reaction to influences of fuel, weather, and topography; NWCG 2021) by altering surface and crown fire likelihood through redistribution of live and dead fuels over time (Simard and others 2011; Collins and others 2012; Woolley and others 2019) and changing microclimate conditions (Page and Jenkins 2007). Beetle-killed trees also increase wildfire resistance to control (that is, relative difficulty of constructing and holding a control line as affected by resistance to line construction and fire behavior; NWCG 2021) by elevating firefighter safety hazards, complicating fire suppression operations, and contributing to dangerous post-fire conditions (Page and others 2013).

Outbreak severity (that is, beetle-induced tree mortality) and fire severity (that is, degree of fire-caused ecological change; Keeley 2009) may also interact to alter ecosystem functions such as carbon (C) cycling. Post-outbreak C trajectories are highly variable, with the magnitude and direction of response influenced by pre-outbreak stand structure, outbreak severity and spatial patterning, and post-outbreak management (Edburg and others 2011; Pfeifer and others 2011; Bright and others 2012; Davis and others 2022). By killing susceptible trees, beetle outbreaks redistribute C from live to dead pools and spur the release and regeneration of live trees, influencing forest C sequestration and emissions as trees decay and regenerate over time (Hansen and others 2015). Depending on the number and size distribution of killed and surviving trees, total C stocks can recover to pre-outbreak levels within several decades to a century (Edburg and others 2011; Pfeifer and others 2011). Combustion of beetle-killed snags further alters C cycles by reducing aboveground dead biomass and increasing deep charring on trees, influencing total C storage, availability, and longevity (Talucci and Krawchuk 2019). Consequently, implications of outbreak-fire interactions on ecosystem function are important considerations for managers.

Mitigating consequences of beetle and fire interactions on forest structure and function represents a key management priority (Morris and others 2017). Silvicultural treatments (for example, partial or complete cutting of stands) intended to increase resistance to bark beetle outbreaks (DeRose and Long 2014) may abate post-outbreak fire behavior and resistance to control by altering the quantity, composition, and spatial heterogeneity of stand structural components (Donato and others 2013b). However, treatments may also present a tradeoff with aboveground C trajectories due to the removal of biomass (Edburg and others 2011). Most studies of management effects on fire behavior and C storage following beetle outbreak have focused on post-outbreak salvage logging (for example, Griffin and others 2013) or pre-outbreak thinning over short timeframes (for example, 4 years prior to outbreak; Crotteau and others 2018); the longevity of pre-outbreak treatment effects on beetle outbreak and fire interactions remains a key knowledge gap. Simulations have projected treatment effects on post-outbreak fire behavior and C storage (for example, Pelz and others 2015), but empirical studies of treatment effects provide a critical test of these ecosystem processes.

We conducted a field study to examine the effects of historical silvicultural treatments on post-outbreak (gray stage, ~10 years following peak of outbreak) fuel profiles and C dynamics, using a long-term experimental study of old-growth lodgepole pine (Pinus contorta var. latifolia) stands cut at a range of intensities in the mid-twentieth century and subsequently affected by a severe mountain pine beetle (MPB; Dendroctonus ponderosae) outbreak in the early twenty-first century. Historical cutting had lasting effects on live stand structure and species composition following the outbreak (Morris and others 2022). Here, we explore effects of historical cutting intensity on potential management tradeoffs regarding post-outbreak dynamics and ecosystem processes.

We asked two questions: How do the legacies of historical silvicultural treatments influence post-outbreak (1) fuel profiles and (2) aboveground biomass C? Specifically, we examined the effects of pre-outbreak historical cutting intensity on post-outbreak (Q1) surface fuels, canopy fuels, and within-stand fuel heterogeneity, and (Q2) live and dead aboveground biomass C. Since historically cut stands had 3–24% less basal area killed by MPB than uncut stands on average (Morris and others 2022), post-outbreak live and dead surface fuels (Q1) were expected to decrease with greater cutting intensity (Jenkins and others 2008). Similarly, post-outbreak live canopy fuels (Q1) were expected to increase, and dead canopy fuels were expected to decrease, with greater cutting intensity (Jenkins and others 2012). Because non-stand-replacing disturbances such as bark beetle outbreaks promote fine-scale spatial complexity (Donato and others 2013a), post-outbreak within-stand fuel heterogeneity (Q1) was expected to be greater where MPB outbreak severity was greater; that is, greater in the historically lighter treatments (that is, low-density tree removal) and lower in the historically heavier treatments (that is, near-total tree removal) relative to uncut stands. Finally, total post-outbreak aboveground biomass C (Q2) was expected to be similar across all stands based on the growth and development of cut stands for 60 years before the outbreak (Donato and others 2013b). However, live aboveground biomass C (Q2) was expected to increase—and dead to decrease—with greater historical cutting intensity (Hansen and others 2015).

Methods

Study Area

The Fraser Experimental Forest (39° 53′ N, 105° 53′ W) is located on the Arapaho-Roosevelt National Forest (Colorado, USA) and ranges in elevation from 2700 to 3900 m (Alexander and others 1985). Mean annual temperature is 3 °C (range − 7 to 14 °C), and mean annual precipitation is 550 mm (PRISM Climate Group 2012). Prevalent soils include coarse-textured Cryochrepts derived from gneiss and schist (Huckaby and Moir 1998). Forest stands established following stand-replacing fire in 1685 (Bradford and others 2008). Overstory vegetation is dominated by lodgepole pine (Pinus contorta var. latifolia) in early- to mid-seral stages and subalpine fir (Abies lasiocarpa) and Engelmann spruce (Picea engelmannii) in later seral stages, with scattered aspen (Populus tremuloides). Sparse understory vegetation consists mainly of conifer regeneration, Shepherdia canadensis, and Vaccinium spp. Study stands are representative of subalpine mixed-species forest communities in the Rocky Mountains (Huckaby and Moir 1998) and are dominated by infrequent (135–280 years; Baker 2009) stand-replacing fire events driven primarily by regional-scale climatic variation (Sibold and others 2006).

Study Design

Experiment details are summarized in Wilm and Dunford (1948) and more recently in Morris and others (2022). Briefly, twenty 2-ha harvest cutting units were established in 1938 within >250-year-old stands to examine the effect of cutting intensity on water yields (Wilm and Dunford 1948). Prior to cutting, all units were characterized by similar structure and species composition. In 1940, five different partial or complete cutting intensities were each applied to four replicate units arranged in blocks: clearcut, 0% merchantable timber volume reserved (full overstory removal; all trees ≥ 24 cm diameter at breast height [dbh] removed); heavy, about 17% reserved (scattered seed-tree cutting); moderate, about 33% reserved (heavy selection cutting); light, 50% reserved (initial step shelterwood cutting); and control, 100% (70.0 m3/ha) reserved (uncut). Additional timber stand improvement (TSI) cutting of low vigor trees (dbh 9–24 cm) was simultaneously conducted on a random half of each cut unit. Regeneration was allowed to occur naturally following density reduction (that is, no planting was conducted). Six decades later, the experimental forest experienced a severe MPB outbreak between 2003 and 2010 (Vorster and others 2017). Across all experimental units, MPB killed 59% and 78% of susceptible host trees by stem density and basal area, respectively (Morris and others 2022). Mortality was greatest in historically uncut stands (73% of susceptible trees and 87% of susceptible basal area), and ranged from 46 to 67% of susceptible trees and from 63 to 84% of susceptible basal area in stands where cutting occurred historically. See Appendix S1 for detailed post-outbreak stand structure.

Field Data Collection

We measured post-outbreak stand structure and fuels during summer 2018 (gray stage, ~10 years following peak of outbreak) within one 0.25 ha (50 × 50 m) plot per stand (see Morris and others 2022). In total, 28 plots were sampled across the treatment categories: control (n = 4), light+TSI (n = 4), moderate (n = 3), moderate+TSI (n = 3), heavy (n = 4), heavy+TSI (n = 4), clearcut (n = 3), and clearcut+TSI (n = 3). Light cutting without TSI was not sampled due to unsuitable plot locations within the treatment units. Plots were positioned between 2799 and 2999 m elevation on northerly aspects with 5.6–25.9° slopes. Sampling design was based on established protocols (Simard and others 2011; Donato and others 2013a) and is described below.

Surface fuel profiles were quantified following Brown (1974). Dead surface fuels (height < 2 m) were measured along ten 10-m planar intercept transects (Appendix S2: Figure S1). Down woody debris (DWD) was categorized according to standard time-lag fuel size classes: 1-h, < 0.64 cm diameter; 10-h, 0.64–2.54 cm; 100-h, 2.54–7.62 cm; and 1000-h fuels, > 7.62 cm. Along each transect, we tallied 1- and 10-h fuels for 2 m and 100-h fuels for 3 m, and measured species, decay class (1–5; Lutes and others 2006), and diameter of 1000-h fuels along the entire 10 m. Litter and duff depths were measured at 0.5 and 1.5 m along each transect, and dead fuel depths (distance from highest dead particle to bottom of litter layer) were recorded at three intervals (0–0.5, 0.5–1.0, 1.0–1.5 m). Percent cover and height of live graminoids, forbs, and woody shrubs were measured in 1-m diameter microplots at the ends of each 10-m transect (20 total). Species and height of live tree seedlings (height 0.1–1.3 m) were recorded within three 2 × 25 m belt transects (150 m2 total).

Canopy fuel profiles were quantified for live and dead trees (height ≥ 1.4 m) rooted within three belt transects of varying size based on tree diameter: dbh < 5 cm, 2 × 25 m belts (150 m2 total); dbh ≥ 5 cm, 4 × 50 m belts (600 m2 total) (Appendix S2: Figure S1). Species, dbh, and total height were measured for all trees; decay class (1–5, adapted from Lutes and others 2006) and broken top presence were also recorded for dead trees. Crown base height was measured for trees ≥ 5 cm dbh and modeled for smaller diameter trees using mean crown ratio of similar height trees (Appendix S2). Canopy cover was recorded by a single operator using a spherical densiometer facing each cardinal direction at 12 points evenly distributed across the plot area.

Fuel & Biomass Calculations

Field measures and allometric equations were used to generate fuel profiles for each stand and were scaled up to per ha values (Appendix S2: Table S1). Surface fuel profiles included biomass of DWD and live understory vegetation. Biomass of DWD was estimated by size class (fine woody debris [FWD]: 1-h, 10-h, 100-h fuels; coarse woody debris [CWD]: 1000-h fuels) using geometric scaling and decay class-specific relative densities (Appendix S2: Table S2; Brown 1974). Biomass of CWD was summarized for sound (decay class ≤ 3) and rotten (decay class > 3) fuels. All DWD estimates were slope-corrected. Dry biomass of live understory vegetation was estimated for each functional group (graminoids, forbs, low and tall shrubs) using equations based on percent cover and height of the dominant species (Olson and Martin 1981; Prichard and others 2013). Seedling biomass was estimated using total tree weight equations based on species and height (Brown 1978; Means and others 1994). Total live surface fuel biomass was aggregated into herbaceous (graminoids, forbs) and woody (shrubs, seedlings) classes.
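As a rough illustration of the scaling step for 1000-h fuels, the following Python sketch converts intercept diameters along one transect to a slope-corrected load. It assumes the widely used line-intersect volume relation (volume per hectare = π² Σd² / 8L, with d in cm and L in m) and the slope correction factor of Brown (1974). The function name and the decay-class densities are hypothetical placeholders, not the values reported in Appendix S2.

```python
import math

# Hypothetical wood densities (Mg/m3) by decay class; placeholders only,
# not the decay class-specific values used in the study (Appendix S2).
EXAMPLE_DENSITY = {1: 0.40, 2: 0.38, 3: 0.34, 4: 0.27, 5: 0.20}


def cwd_load_mg_ha(pieces, transect_m, slope_pct=0.0, density=EXAMPLE_DENSITY):
    """Estimate coarse woody debris load (Mg/ha) from one planar-intercept transect.

    pieces: iterable of (diameter_cm, decay_class) for each intersected piece.
    transect_m: transect length in metres.
    slope_pct: transect slope in percent.
    """
    # Line-intersect relation: volume (m3/ha) = pi^2 * sum(d^2) / (8 * L),
    # with d in cm and L in m; each d^2 is weighted by its wood density.
    weighted_d2 = sum(d ** 2 * density[decay] for d, decay in pieces)
    load = math.pi ** 2 * weighted_d2 / (8.0 * transect_m)
    # Slope correction: expresses loads sampled along a slope on a
    # horizontal-area basis.
    return load * math.sqrt(1.0 + (slope_pct / 100.0) ** 2)


# Example: three intersected logs on a 10-m transect with a 20% slope.
example_load = cwd_load_mg_ha([(12.0, 2), (25.5, 3), (9.0, 4)], 10.0, slope_pct=20.0)
```

Under this sketch, a stand-level value would be the mean of the transect-level loads across the ten transects in a plot.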

Canopy fuel profiles included crown fuel biomass, available canopy fuel load (ACFL), canopy bulk density (CBD), canopy base height (CBH), and canopy cover. Biomass of live and dead crown fuels (foliage, 1-h, 10-h, 100-h branch size classes) was estimated for each tree using species- and region-specific equations based on dbh and/or height (Brown 1978; Standish and others 1985). Crown fuel corrections were applied for dead trees and broken-top trees (Appendix S2). ACFL was calculated as the sum of total foliage, total dead 1-h fuels, and half of live 1-h fuels for all trees (Reinhardt and others 2006; Donato and others 2013a). We distributed ACFL along the crown length of each tree in 0.25 m bins, summing by bins across all trees to generate vertical canopy fuel profiles for each stand (Simard and others 2011; Donato and others 2013a). CBD was calculated as the maximum of a 3-m running mean along the vertical profile (Reinhardt and others 2006). CBH was calculated as the lowest height at which CBD exceeded 0.04 kg/m3 (Sando and Wick 1972; Cruz and others 2004; Donato and others 2013a).
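The canopy profile steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used in the study: fuel is assumed to be spread evenly along each crown, each running mean is assigned to the midpoint of its 3-m window, and all function names and input values are hypothetical.

```python
import math

BIN_M = 0.25           # vertical bin depth (m), as in the text
WINDOW_M = 3.0         # running-mean window (m), as in the text
CBH_THRESHOLD = 0.04   # bulk density threshold (kg/m3), as in the text


def available_canopy_fuel(foliage_kg, dead_1h_kg, live_1h_kg):
    """ACFL for one tree: all foliage, all dead 1-h fuel, half of live 1-h fuel."""
    return foliage_kg + dead_1h_kg + 0.5 * live_1h_kg


def canopy_profile(trees, plot_area_m2):
    """Return bulk density (kg/m3) for each height bin, from the ground up.

    trees: list of (crown_base_m, height_m, acfl_kg), with crown_base_m < height_m.
    Assumption: each tree's fuel is spread evenly along its crown length.
    """
    n_bins = math.ceil(max(h for _, h, _ in trees) / BIN_M)
    kg = [0.0] * n_bins
    for base, height, fuel in trees:
        first = int(base // BIN_M)
        last = max(first + 1, math.ceil(height / BIN_M))
        for i in range(first, last):
            kg[i] += fuel / (last - first)
    # Convert mass per bin to mass per unit volume of canopy space.
    return [x / (plot_area_m2 * BIN_M) for x in kg]


def cbd_and_cbh(profile):
    """Return (CBD, CBH) from a vertical bulk-density profile."""
    n = min(len(profile), round(WINDOW_M / BIN_M))
    means = [sum(profile[i:i + n]) / n for i in range(len(profile) - n + 1)]
    cbd = max(means)
    # Assumption: each running mean is assigned to the midpoint of its window.
    cbh = next(((i + n / 2) * BIN_M for i, m in enumerate(means)
                if m > CBH_THRESHOLD), None)
    return cbd, cbh


# Hypothetical single-tree example on a 100 m2 plot.
fuel = available_canopy_fuel(foliage_kg=40.0, dead_1h_kg=10.0, live_1h_kg=20.0)
profile = canopy_profile([(2.0, 8.0, fuel)], plot_area_m2=100.0)
cbd, cbh = cbd_and_cbh(profile)
```

Because the running mean smooths the profile, the CBH returned by a sketch like this can fall slightly below the lowest measured crown base; how the window is positioned is a design choice that would need to match the cited methods.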

To capture the effects of historical cutting intensity on within-stand spatial heterogeneity of post-outbreak fuel profiles, we calculated the coefficient of variation (CV) among transects for broad classes of surface and canopy fuels: FWD, CWD, fuel depth, canopy cover, crown base height, and ACFL (Fraterrigo and Rusak 2008; Donato and others 2013a). For ACFL, we evaluated heterogeneity in both horizontal and vertical dimensions. Horizontal heterogeneity in ACFL was calculated for separate canopy strata, distributed across the height range of the data: top (height ≥ 16 m), middle (8 ≤ height < 16 m), and bottom (height < 8 m) strata. Vertical heterogeneity in ACFL was calculated as the CV among ACFL in 1-m height bins distributed across the vertical canopy profile.
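For concreteness, the heterogeneity metric reduces to a standard deviation divided by a mean. The sketch below assumes the sample (n − 1) standard deviation, which the text does not specify, and uses made-up transect values.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """CV = standard deviation / mean (sample standard deviation assumed)."""
    avg = mean(values)
    if avg == 0:
        return float("nan")  # CV is undefined when the mean is zero
    return stdev(values) / avg


# Hypothetical coarse woody debris loads (Mg/ha) on four transects of one plot.
transect_loads = [12.0, 30.5, 8.2, 21.3]
horizontal_cv = coefficient_of_variation(transect_loads)
```

The same function would apply to vertical heterogeneity by passing the ACFL values of successive 1-m height bins instead of transect values.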

Aboveground biomass C included C stored in plant tissues located on or above the ground surface. To estimate post-outbreak total, live, and dead aboveground biomass C pools, we summed individual surface and canopy fuel components, assuming biomass to be 50% C (Schlesinger and Bernhardt 2013). Total aboveground biomass C was the sum of live and dead pools. Live C included stem, bark, branch, and leaf biomass for living vegetation; dead C included woody biomass for dead vegetation.
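The carbon accounting amounts to simple arithmetic, sketched below with hypothetical component names and biomass values (Mg/ha); only the 50% carbon fraction comes from the text.

```python
CARBON_FRACTION = 0.5  # biomass assumed to be 50% C, as in the text


def carbon_pools(live_biomass, dead_biomass):
    """Sum biomass components (Mg/ha) and convert to carbon pools (Mg C/ha)."""
    live_c = CARBON_FRACTION * sum(live_biomass.values())
    dead_c = CARBON_FRACTION * sum(dead_biomass.values())
    return {"live": live_c, "dead": dead_c, "total": live_c + dead_c}


# Hypothetical stand: component names and values are illustrative only.
pools = carbon_pools(
    live_biomass={"trees": 100.0, "understory": 4.0},
    dead_biomass={"snags": 60.0, "down_woody_debris": 36.0},
)
```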

Statistical Analyses

To test effects of historical silvicultural treatments on post-outbreak fuel profiles and aboveground biomass C, we used generalized linear mixed-effects models predicting fuel and C variables as a function of cutting intensity. Models were specified to reflect the study design, with major treatment and TSI cutting terms treated as nested fixed effects, and block as a random effect in each model. Response variables were modeled by gamma distributions, with the exception of canopy cover, which required a beta distribution. We compared responses of each cut treatment to the uncut control, and within treatment effects of cutting with and without additional TSI. Significance for all statistical tests was assessed according to the following α-levels: strong (P < 0.01), moderate (P < 0.05), and suggestive (P < 0.1) evidence of differences. This approach minimizes the risk of overlooking ecologically meaningful effects due to modest sample size (that is, Type II error). We made inference about differences in potential fire behavior using our detailed analyses on fuel profiles but did not include analyses of modeled fire behavior due to limitations of empirical models in post-beetle outbreak fuel complexes (synthesized in Alexander and Cruz 2013; Hood and others 2018). Analyses were conducted in R (R Core Team 2022) using packages lme4 (Bates and others 2015) and glmmTMB (Brooks and others 2017) for model fitting and jtools (Long 2020) for model visualization.
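The three evidence tiers can be expressed as a small lookup. The sketch below is illustrative; the function name is hypothetical, and the markers follow the notation used in the figure captions (**, *, +).

```python
def evidence_level(p_value):
    """Map a P-value to the evidence tiers used in this study."""
    if p_value < 0.01:
        return ("strong", "**")
    if p_value < 0.05:
        return ("moderate", "*")
    if p_value < 0.1:
        return ("suggestive", "+")
    return ("none", "")


# Hypothetical P-values for three treatment contrasts against the control.
labels = [evidence_level(p) for p in (0.004, 0.03, 0.2)]
```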

Results

Relative to uncut stands, historically cut stands had about 2–3x greater live basal area and overstory density but similar live quadratic mean diameter (Figure 1). Conversely, historically cut stands had half as much dead basal area and about 2x smaller dead quadratic mean diameter, but similar dead overstory density and around 2–9x greater dead understory density of trees with fine branches and needles attached (Figure 1).

Figure 1. Post-outbreak stand structure across historical silvicultural treatments. Black circles are predicted means with 95% confidence intervals. Gray points are observed mean values for each plot replicate. Treatments increase in cutting intensity from left to right (Ctrl = control; Low = light; Mod = moderate; Hi = heavy; Cc = clearcut). Closed and open circles are cutting with and without timber stand improvement (TSI), respectively. Threshold for overstory (E–G) versus understory (H–J) density is 12 cm dbh. Dead trees were classified as having fine branches and needles attached (F, I) or fine branches and needles fallen (G, J). Asterisks denote difference from the control according to P < 0.01**, 0.05*, 0.1+. Brackets compare stands with and without TSI within the same major treatment. Panels A and C are reproduced from Morris and others (2022). See Appendix S1: Tables S3–S4 for model summaries.

Fuel Profiles

Surface Fuels

Gray stage post-outbreak surface fuel loads were lower in historically cut stands compared to uncut stands, though differences varied by fuel component (Figure 2; Appendix S3). In historically cut stands, loadings of 10-h fuels (Figure 2B), sound CWD (that is, 1000-h fuels; Figure 2D), live herbaceous vegetation (Figure 2I), and live woody vegetation (Figure 2J) were approximately half the level of uncut stands. Duff depth was about 2x greater in most historically cut stands than in uncut stands (Figure 2G). Most historically cut stands had loadings of 100-h fuels (Figure 2C) and depths of litter (Figure 2F) and dead fuel (Figure 2H) that were similar to uncut stands. Loadings of 1-h fuels (Figure 2A) and rotten CWD (that is, 1000-h fuels; Figure 2E) did not differ across treatments. TSI had little additional effect on surface fuel profiles, but further reduced live woody biomass for moderate and heavy treatments (Figure 2J). Within-stand CV in surface fuel loads was similar across stands (Appendix S3: Figure S1A–C).

Figure 2. Post-outbreak surface fuels across historical silvicultural treatments (Ctrl = control; Low = light; Mod = moderate; Hi = heavy; Cc = clearcut; TSI = timber stand improvement). Symbols described in Figure 1. See Appendix S3: Tables S2–S5 for model summaries.

Canopy Fuels

Canopy fuel loads were greater in historically cut stands compared to uncut stands, though differences varied by fuel component (Figures 3, 4; Appendix S3). For total canopy fuels, historically cut stands had about 2–3x greater foliage biomass (Figure 3A), about 1.5x greater 1-h (Figure 3D) and 10-h branch fuels (Figure 3G), about 2x greater ACFL (Figure 3M), and about 1.5x greater canopy cover (Figure 3P) than uncut stands. Total canopy fuel trends were driven primarily by live components: most historically cut stands had approximately 2–3x greater live foliage biomass (Figure 3B), live 1-h (Figure 3E), 10-h (Figure 3H), and 100-h (Figure 3K) branch fuels, live ACFL (Figure 3N), and live canopy cover (Figure 3Q) than uncut stands. Dead canopy fuels were similar across treatments with the exception of 100-h branch fuels (Figure 3L) and canopy cover (Figure 3R) which were approximately half in most historically cut stands compared to uncut stands. CBD was about 1.5x greater (Figure 4A) and CBH was around 6–8x taller (Figure 4B) in most historically cut stands than uncut stands. Vertical distribution of CBD was less even and concentrated at taller canopy heights in heavily cut stands compared to uncut and lighter cutting intensities (Figure 5). Within-stand CV in canopy fuels in most historically cut stands was approximately half for live canopy cover and CBH, and about 2x greater for dead canopy cover and ACFL compared to uncut stands (Appendix S3: Figure S1D–K). TSI had little additional effect on canopy fuel profiles.

Figure 3. Post-outbreak canopy fuels across historical silvicultural treatments (Ctrl = control; Low = light; Mod = moderate; Hi = heavy; Cc = clearcut; TSI = timber stand improvement). Symbols described in Figure 1. See Appendix S3: Table S7 for model summaries.

Figure 4. Post-outbreak (A) canopy base height and (B) canopy bulk density across historical silvicultural treatments (Ctrl = control; Low = light; Mod = moderate; Hi = heavy; Cc = clearcut; TSI = timber stand improvement). Symbols described in Figure 1. See Appendix S3: Table S8 for model summaries.

Aboveground Biomass C

Gray stage post-outbreak total aboveground biomass C was similar across treatments, though historically cut stands had greater live and less dead aboveground biomass C than uncut stands (Figure 6; Appendix S3). Total aboveground biomass C did not differ among treatments, except for moderate and clearcut + TSI, which had about 25% less total aboveground biomass C than uncut stands (Figure 6A). Live aboveground biomass C was around 2x greater in historically cut stands than in uncut stands (Figure 6B), while dead aboveground biomass C in historically cut stands was approximately half that in uncut stands (Figure 6C). TSI had little additional effect on aboveground biomass C, although it further increased live aboveground biomass C in moderate intensity treatments and further reduced dead aboveground biomass C in heavy and clearcut treatments (Figure 6).

Figure 6. Post-outbreak aboveground biomass carbon across historical silvicultural treatments (Ctrl = control; Low = light; Mod = moderate; Hi = heavy; Cc = clearcut; TSI = timber stand improvement). Symbols described in Figure 1. See Appendix S3: Table S14 for model summaries.

Discussion

Historical (1940s) silvicultural treatments produced lasting legacies on fuel profiles and aboveground biomass C into the gray stage following a MPB outbreak six decades later (Figure 7). Bark beetle outbreaks result in highly altered fuel complexes in which first canopy fuels, then dead woody fuels and live surface fuels, dominate over time (Donato and others 2013a; Jenkins and others 2014). Compared to uncut stands (Figure 7A), post-outbreak structure in historically cut stands was characterized by reduced surface and greater canopy fuel loads, reduced within-stand spatial heterogeneity of live—and greater heterogeneity of dead—canopy fuels, and similar total aboveground biomass C, but a larger portion of C stored in live aboveground biomass (Figure 7B). These results suggest potential for altered post-outbreak fire behavior and C trajectories in historically cut stands and highlight the role of past management in shaping feedbacks between interacting disturbances.

Figure 7. Outcomes from the interaction of historical (1940) silvicultural treatments with a recent (2003–2010) mountain pine beetle (Dendroctonus ponderosae) outbreak in lodgepole pine (Pinus contorta var. latifolia) stands of the Fraser Experimental Forest, Colorado, USA. Post-outbreak fuel profiles and aboveground biomass carbon differed between (A) uncut and (B) historically cut gray stage stands (photos from 2018). Note the contrasts in abundance and arrangement of live and dead biomass. Photo credits: left, M. S. Buonanduci; right, J. E. Morris.

Legacies of Historical Cutting on Post-Outbreak Fuel Profiles

Surface Fuels

Historical silvicultural treatments had long-lasting effects, reducing surface fuel loads following the MPB outbreak into the gray stage. MPB outbreaks are typically characterized by gray-stage increases in dead surface fuels as beetle-killed trees drop needles, branches, and boles, and by increases in live herbaceous and shrub surface fuels from growth responses to greater understory light availability (Jenkins and others 2008, 2014). Historical cutting produced strong legacy effects on stand structure at the time of outbreak that dampened both of these responses.

Compared to uncut stands, historically cut stands had less overall tree mortality from MPB, resulting in substantially lower CWD loads. Density-dependent mortality levels of smaller trees were high in historically cut stands in the decades preceding the MPB outbreak (Morris and others 2022). However, dead bole, branch, and foliage inputs from this mortality result primarily in FWD that is more rapidly decomposed (Harmon and others 1986) and likely to result in duff buildup (Keane 2008), as we observed in cut stands. Conversely, large diameter MPB-killed trees were more numerous in uncut stands, resulting in higher fuel loads of sound CWD, which decays slowly in subalpine forests (any rotten CWD pre-dated the MPB outbreak; Harmon and others 1986). Differences in rotten CWD among stands may emerge with greater time-since-outbreak as downed logs continue to decay. Additionally, due to less MPB-caused tree mortality compared to uncut stands, historically cut stands had greater live basal area and overstory density post-outbreak, limiting opportunities for outbreak-catalyzed increases in live surface fuels. Whereas uncut stands that experience outbreaks are characterized by canopy gaps that facilitate understory species establishment and growth releases (Jenkins and others 2014; Fornwalt and others 2018), historically cut stands maintained high canopy cover and relatively low-light understory conditions through the MPB outbreak, and had correspondingly minor live surface fuel responses post-outbreak. Historical cutting had no detectable effect on surface fuel heterogeneity, likely due to high spatial and temporal variability in fuels characteristic of lodgepole pine forests (Baker 2009), and/or attenuation of differences among stands by the time of measurement.

Canopy Fuels

Stands with historical silvicultural treatments sustained high canopy fuel loads into the gray stage following MPB outbreak. Generally, live canopy fuels (crown biomass, ACFL, CBD) decrease—and dead fuels increase—in the gray stage as needles and twigs fall from beetle-killed trees (Jenkins and others 2014). Compared to uncut stands, less tree mortality from MPB and greater live overstory density—particularly lodgepole pine density—in cut stands (Morris and others 2022) contributed to strong legacies of historical cutting on post-outbreak canopy fuels, with live fuels dominating overall trends. These conditions limit loss of live branches and foliage (Jenkins and others 2012) and maintain canopy architecture typical of lodgepole pine (self-pruning of lower branches; Lotan and Perry 1983) following outbreak, resulting in historically cut stands with greater crown fuel, ACFL, canopy cover, and CBD, and taller CBH than uncut stands. Silvicultural treatments had little effect on dead canopy fuels, with the exception of reduced fuel loads of the largest (100-h) dead branches in the most heavily cut stands. This is likely due to the lower abundance of large diameter branches on live or dead trees in the heaviest treatments as a function of historical cutting reducing overall quadratic mean diameter within the stands (Brown and others 1977; Morris and others 2022). This finding could also be affected by the allometric equations for dead crown biomass not accounting for effects of cutting-induced stand density differences on relationships of measured variables (dbh, height) with crown mass (for example, greater crown recession in dense stands; Cole and Jensen 1982), representing an area of possible future refinement.

Historical silvicultural treatments decreased spatial complexity of live—and increased complexity of dead—canopy fuels into the gray stage post-outbreak. Within-stand spatial heterogeneity in fuels increases with time-since-outbreak as fuels shift from the canopy to the surface (Donato and others 2013a). Legacy effects of historical cutting on post-outbreak stand dynamics reduced this effect for live canopy fuels. Compared to uncut stands, historically cut stands had a greater post-outbreak density of live overstory trees dominated by unsusceptible-sized (that is, diameter below MPB attack threshold) lodgepole pine (Morris and others 2022). These conditions reflect an earlier stand development stage and increased horizontal connectivity (Jenkins and others 2014), resulting in historically cut stands with less within-stand heterogeneity in live canopy cover and CBH. Conversely, greater post-outbreak density of dead understory trees in cut stands (a result of high pre-outbreak density-dependent mortality; Morris and others 2022) contributed to greater within-stand heterogeneity in dead canopy cover and ACFL compared to uncut stands.

Implications for Potential Fire Behavior

Legacies of historical treatments on fuel profiles suggest differences in gray stage post-outbreak potential fire behavior between cut and uncut stands. Surface fire behavior is commonly predicted using physical properties of fuel beds, fuel moisture, wind speed, and slope, assuming a spatially uniform fuel distribution (Rothermel 1972). In outbreak-affected stands, surface fire behavior depends on outbreak stage and severity (Page and Jenkins 2007), and surface fire potential increases with time-since-outbreak due to the buildup of surface fuel loads as beetle-killed trees decay and fall (Page and Jenkins 2007; Jenkins and others 2008; Hicke and others 2012b). Our findings suggest historical silvicultural treatments mitigate surface fuel accumulation; less DWD and live surface fuel in historically cut stands may reduce the likelihood of severe surface fire (for example, high soil heating and fuel consumption) by reducing reaction intensity, fireline intensity, rate of spread, and flame lengths compared to uncut stands (Klutsch and others 2011; Schoennagel and others 2012). Only uncut stands had post-outbreak total coarse fuel loads (80.9 Mg/ha) that exceed fuel reduction targets of 67 Mg/ha for reducing smoldering in subalpine forests (Pelz and others 2015), whereas total coarse fuel loads were below this level for all historically cut stands (21.9–58.0 Mg/ha). However, given adaptive traits of the three dominant conifer species to evade or avoid fire (for example, thin bark, low fire resistance; Hood and others 2021), even the lowest surface fuel loads present would likely be above a threshold of tree survival given fire occurrence (Lotan and Perry 1983).

Crown fire behavior is commonly predicted using fireline intensity, CBH, CBD, and foliar moisture content, assuming spatially homogeneous and continuous crown fuels (Van Wagner 1977). In outbreak-affected stands, crown fire behavior is influenced by outbreak stage and severity, canopy structure, and spatial pattern of tree mortality and associated effects on fuel distribution and wind patterns (Hoffman and others 2012, 2015; Schoennagel and others 2012; Linn and others 2013); crown fire potential declines during the gray stage post-outbreak as dead needles fall and CBD and aerial fuel continuity are reduced (Jenkins and others 2008; Klutsch and others 2011; Hicke and others 2012b). Our findings suggest that historical silvicultural treatments had variable effects on these dynamics. Less DWD, taller CBH, and greater vertical heterogeneity in ACFL in historically cut stands may reduce potential for torching and crown fire initiation compared to uncut stands by reducing fuel continuity and decreasing the likelihood of fire transitioning from the surface to the canopy (Collins and others 2012; Hoffman and others 2013). However, once crown fire is initiated, greater abundance and spatial homogeneity of live canopy cover (that is, greater fuel continuity) in historically cut stands may increase potential for transition from passive to active crown fire compared to uncut stands (Jenkins and others 2008). Further, greater CBD in historically cut stands may facilitate greater canopy fuel consumption and faster crown fire spread than uncut stands (Van Wagner 1977; Linn and others 2013; although see Moriarty and others 2019), though increased horizontal heterogeneity in ACFL in cut stands (that is, reduced fuel continuity) may dampen this effect (Pimont and others 2006; Donato and others 2013a). Despite differences in fuel structure and arrangement, actual crown fire likelihood may be similar across treatments since CBH (0.0–1.5 m) and CBD (0.081–0.283 kg/m³) in most cut and uncut stands fail to meet fuel reduction targets for reducing likelihood of passive crown fire initiation (CBH > 3.5 m) and active crown fire spread (CBD < 0.086 kg/m³) in subalpine forests (Pelz and others 2015). Estimates of canopy foliar moisture content are needed to better characterize crown fire potential and model fire behavior in MPB-killed stands (Jolly and others 2012).
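To illustrate how CBH and CBD enter crown fire thresholds, the sketch below applies the commonly cited forms of the Van Wagner (1977) criteria: the critical surface fireline intensity for crown fire initiation, I0 = (0.010 × CBH × (460 + 25.9 × FMC))^1.5 in kW/m, and the critical spread rate for active crown fire, R0 = 3.0 / CBD in m/min. The foliar moisture content (FMC) of 100% is an assumed placeholder rather than a value measured in this study, and the function names are illustrative only.

```python
def critical_surface_intensity(cbh_m: float, fmc_percent: float) -> float:
    """Surface fireline intensity (kW/m) needed to ignite the crown base.

    Commonly cited form of Van Wagner's (1977) initiation criterion.
    cbh_m: canopy base height in metres; fmc_percent: foliar moisture content (%).
    """
    return (0.010 * cbh_m * (460.0 + 25.9 * fmc_percent)) ** 1.5


def critical_active_spread_rate(cbd_kg_m3: float) -> float:
    """Spread rate (m/min) above which active crown fire can be sustained.

    Commonly cited form of Van Wagner's (1977) criterion, R0 = 3.0 / CBD.
    """
    return 3.0 / cbd_kg_m3


ASSUMED_FMC = 100.0  # assumption for illustration; not measured in this study

# CBH values: upper end of the observed range (1.5 m) and the target (3.5 m)
for cbh in (1.5, 3.5):
    i0 = critical_surface_intensity(cbh, ASSUMED_FMC)
    print(f"CBH {cbh} m -> critical surface intensity {i0:.0f} kW/m")

# CBD values: observed range (0.081-0.283 kg/m3) and the target (0.086 kg/m3)
for cbd in (0.081, 0.086, 0.283):
    r0 = critical_active_spread_rate(cbd)
    print(f"CBD {cbd} kg/m3 -> critical active spread rate {r0:.1f} m/min")
```

Under these assumptions, a taller CBH raises the surface intensity required for crown fire initiation, while a greater CBD lowers the spread rate required to sustain active crown fire, which is consistent with the opposing effects of historical cutting described above.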

Simulating fire behavior in outbreak-affected stands is challenging due to complex links between patterns of tree mortality and changes in fuel loading, arrangement, and availability (Hood and others 2018). Although we did not model fire behavior due to limitations of existing empirical models (see Methods), newly developed process-based models (for example, computational fluid dynamics models; Hoffman and others 2018) have shown promise in projecting fire behavior in dynamic post-outbreak environments (for example, Hoffman and others 2012, 2015; Linn and others 2013). Although outside the scope of this study, integrating our fuels data into process-based models could be an informative future approach given appropriate expertise and resources.

Whereas historical silvicultural treatments may modify potential fire behavior following bark beetle outbreak, manipulation of fuels in subalpine forested systems has less impact on fire frequency and severity in fire regimes that are not strongly fuel driven (Baker 2009). In forests characterized by stand-replacing fire regimes, fuel abundance has limited effects on fire occurrence (Schoennagel and others 2004) and extent (Kulakowski and Veblen 2007). Regional-scale climatic variability is the dominant driver of fire occurrence (Mietkiewicz and Kulakowski 2016) and area burned (Hart and others 2015), though fire effects and behavior are modulated by local- and meso-scale abiotic and biotic factors (Sibold and others 2006). Effects of bark beetle outbreaks on fire severity depend on burning conditions, outbreak severity, and the interval between outbreak and fire (Harvey and others 2014a). In gray stage stands, decreases in fire severity have been observed for some surface and crown fire metrics (for example, tree mortality, char height) under moderate burning conditions (that is, relatively low temperature and winds, high humidity; though see Agne and others 2016) due to lower abundance of available fine fuels and live vegetation susceptible to wildfire (Harvey and others 2014a; Meigs and others 2016). Conversely, gray stage stands may exhibit increased consumption of boles and branches, deep char development, and surface fire severity, due to greater abundance of dead trees and coarse surface fuels that become available for combustion under extreme burning conditions (that is, high temperature and winds, low humidity; Buma and others 2014; Harvey and others 2014b; Talucci and Krawchuk 2019). Accordingly, under moderate burning conditions, our findings suggest historically cut stands may experience increased fire severity compared to uncut gray stage stands due to greater live foliage and 1-h canopy fuels. However, extreme burning conditions would likely overwhelm these fuel effects, reducing or eliminating differences in fire severity among cut and uncut gray stage stands, with the exception of more limited deep char development in historically cut stands due to reduced surface fuels and fewer beetle-killed trees.

Given historical fire rotations of 175–350 years (Baker 2009), the mean probability of burning in subalpine forests would be less than 1% in any given year and less than 50% in a 100-year period. Differences among stands attenuate over time-since-outbreak as stands regrow, and historical treatments may have limited effects on reducing fire likelihood and severity over similarly long fire return intervals. However, estimates of twenty-first century fire rotations in central Rocky Mountain subalpine forests (117 years) represent a near doubling of the average rate of burning over the past 2000 years (Higuera and others 2021). Thus, climate warming may increase the importance of fuels in lodgepole pine fire regimes as warmer and drier conditions reduce climate limitations on fire occurrence and shorten fire intervals (Turner and others 2019).
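The probabilities quoted above can be reproduced with simple arithmetic. The sketch below assumes that the annual probability of burning is the reciprocal of the fire rotation and that years are independent with a constant probability; these are simplifying assumptions for illustration, and the function names are hypothetical.

```python
def annual_burn_probability(fire_rotation_years: float) -> float:
    """Mean annual probability of burning, assumed equal to 1 / fire rotation."""
    return 1.0 / fire_rotation_years


def burn_probability_over(period_years: int, fire_rotation_years: float) -> float:
    """Probability of burning at least once in a period.

    Assumes independent years with a constant annual probability.
    """
    p_annual = annual_burn_probability(fire_rotation_years)
    return 1.0 - (1.0 - p_annual) ** period_years


# Historical rotations (175 and 350 years) and the twenty-first century estimate (117 years)
for rotation in (175, 350, 117):
    p_year = annual_burn_probability(rotation)
    p_century = burn_probability_over(100, rotation)
    print(f"rotation {rotation} yr: annual {p_year:.2%}, 100-year {p_century:.1%}")
```

Under these assumptions, rotations of 175–350 years give annual probabilities of roughly 0.3–0.6% and 100-year probabilities of roughly 25–44%, whereas a 117-year rotation gives a 100-year probability of roughly 58%.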

Legacies of Historical Cutting on Aboveground C Dynamics

Historical silvicultural treatments dampened effects of the MPB outbreak on live aboveground biomass C, but led to similar total aboveground biomass C levels as uncut stands. Through selective tree mortality, bark beetle outbreaks redistribute C from live to dead pools, though total C remains relatively constant from the dynamic balance between decomposition of beetle-killed snags and growth of surviving and regenerating trees (Donato and others 2013b; Hansen and others 2015). Consistent with these dynamics, total aboveground biomass C was similar across most treatments post-outbreak. However, historically cut stands had twice as much live and half as much dead biomass compared to uncut stands. Residual live vegetation is an important C sink following outbreaks that may experience increased productivity due to canopy openings and release from competition (Bowler and others 2012). Thus, by reducing outbreak severity (that is, greater post-outbreak live basal area and density), historical cutting may accelerate the recovery of post-outbreak live aboveground biomass C compared to uncut stands (Hicke and others 2012a), though future studies are needed to track aboveground biomass C trajectories several decades post-outbreak.

Although preserving greater amounts of live aboveground C for at least the first decade following a beetle outbreak, historical silvicultural treatments present additional tradeoffs with long-term on-site C storage and stability. Intentional removal of substantial amounts of live biomass by the treatments themselves may result in less on-site C stored in cut stands over time. Typically, stocks of standing-live C in post-MPB-outbreak stands can return to pre-outbreak levels within around 40 years (Caldwell and others 2013), and productivity rates and total C stocks can recover within 100 years (Bradford and others 2008; Edburg and others 2011). While total aboveground biomass C in most historically cut stands had recovered to uncut levels by the time of post-outbreak measurement (78 years after cutting), woody aboveground biomass C was substantially less in the cut stands during the initial decades following treatment due to earlier stand development and reduced densities of large trees (Wilm and Dunford 1948). Beyond the initial removal of live biomass, historically cut stands are also likely to be susceptible to future beetle outbreaks sooner than uncut stands due to greater live basal area and density (Collins and others 2011), suggesting historical silvicultural treatments may reduce long-term C stability.

Dead biomass is also important to consider when managing for ecosystem C storage. In high-elevation subalpine forests, input and decomposition of dead biomass can contribute over 25% of total ecosystem C (Bradford and others 2008). Snags and coarse DWD can take approximately a century to decay depending on site conditions (Brown and others 1998). These slow decomposition rates allow dead biomass to represent a long-term C store, while DWD also contributes to soil formation, water cycling (Harmon and others 1986), and wildlife habitat (for example, Saab and others 2014). By reducing aboveground biomass C stored in snags and DWD compared to uncut stands, and burning slash during treatment operation, historical cutting may result in less persistent dead C stocks on site. However, forest products crafted from merchantable timber removed in silvicultural operations can store offsite a portion of the biomass removed (McKinley and others 2011), highlighting the importance of C lifecycle analyses when developing C management strategies. Although C fractions for dead woody biomass can vary across forests, biomes, species, tissue types, and decay classes (Martin and others 2021), most of these factors are regionally constant in our study, making relative comparisons among treatments useful, though decay rates may vary among stands due to unique temperature and moisture conditions produced by differences in structure (Harmon and others 1986).

Balancing Multiple Objectives for Forest Management

The effects of historical silvicultural treatments on fuel profiles and aboveground biomass C present important tradeoffs for wildlife habitat, operational firefighting activities, and C trajectories in beetle-killed forests, and raise several key contrasts to silvicultural treatments that are applied post-outbreak. Post-outbreak biomass legacies (for example, snags, DWD, understory vegetation) provide important wildlife habitat (Saab and others 2014), with optimal values of snag and DWD retention varying by wildlife taxa or management objective. By promoting greater live tree density and canopy cover in the long term, historical density reduction may help maintain post-outbreak habitat for wildlife species that utilize dense, closed-canopy stands. Conversely, reduction in dead surface and canopy fuels from density reduction may limit habitat for species dependent on canopy gaps, herbaceous vegetation, DWD, and snags (Saab and others 2014; Janousek and others 2019).

Modification of fuel profiles by historical treatments also suggests that wildfire resistance to control may differ between cut and uncut stands. Resistance to control considers operational dimensions of fire behavior such as suppression capacity and firefighter safety (Page and others 2013). Snags and DWD can facilitate spotting by serving as sources and receptors for embers (Hyde and others 2011). Thus, accumulation of standing dead trees and DWD can increase difficulties in fireline construction, access, and establishing escape routes and safety zones (Jenkins and others 2014). Accordingly, gray stage post-outbreak conditions in historically cut stands may present fewer operational fire hazards than uncut stands due to greater dominance of live trees (less contribution to spotting) and less CWD (ease of access and fireline construction efforts; Page and others 2013). Yet, greater CBD and potential for increased crown fire spread in historically cut stands may limit suppression capacity (Page and others 2013). Additionally, due to observations of unexpected increased fire behavior in MPB-attacked stands (for example, crown ignition, crown fire propagation in gray stage), firefighters are more likely to expect to see active fire behavior and utilize indirect suppression methods in outbreak-affected stands under nearly all burning conditions and outbreak stages (Moriarty and others 2019), potentially resulting in similar firefighting operations across all stands regardless of historical silvicultural treatment.

Historical silvicultural treatments may also have implications for C trajectories when outbreak-affected stands do burn. Compound disturbances can have additive effects on C loss (Buma and others 2014; Turner and others 2019). Beetle-killed trees are more likely than live trees to have branch structure consumed and develop deep wood charring (that is, incomplete combustion of dead wood) under both moderate and extreme burning conditions (for example, Talucci and Krawchuk 2019). Branch consumption of beetle-killed trees may limit C storage by reducing DWD accumulation and simplifying snag physical structure, promoting faster decay when fallen (Harmon and others 1986). Conversely, deep charring of biomass may extend long-term C storage through creation of pyrogenic C and influence soil nutrient cycling by limiting decomposition and slowing decay (Talucci and others 2020). Thus, although historical cutting may limit C loss through combustion by reducing the presence of beetle-killed trees and DWD, the post-fire C that remains may decay more rapidly than pyrogenic C from burned snags and logs.

Our findings present key contrasts to silvicultural treatments when they are applied post-outbreak (for example, salvage logging), and their effects on potential fire behavior and C trajectories, highlighting the importance of order and timing of interacting disturbances or management actions on ecosystem processes. In general, silvicultural treatments conducted post-outbreak consistently decrease canopy fuel loads (ACFL, CBD), but fuels created during harvesting operations can increase surface fuel loads (FWD, sound CWD) compared to uncut stands (Jenkins and others 2008; Collins and others 2012). Together these changes can lead to increased surface fire intensity (Jenkins and others 2014), but reduced crown fire intensity in stands cut post-outbreak (Baker 2009; Donato and others 2013b; Crotteau and others 2018). In contrast, partial or complete cutting decades prior to an outbreak may lead to opposite effects. Compared to uncut stands, historical cutting consistently led to increased canopy fuel loads but decreased surface fuel loads following outbreak. Collectively, historical silvicultural treatments followed by MPB outbreaks may reduce potential for severe surface fire but increase the potential for crown fire spread and transition from passive to active crown fire, compared to uncut stands. Finally, whereas post-outbreak salvage logging may reduce aboveground C storage and lengthen C recovery time compared to unharvested stands (for example, Donato and others 2013b), historical cutting before MPB outbreak resulted in total post-outbreak C levels similar to uncut stands.

Conclusion

Across western North America, spatial overlaps between fire and recent bark beetle outbreaks are receiving heightened public attention and raising concerns about altered potential fire behavior and ecosystem services. Our study capitalizes on a unique opportunity to leverage past silvicultural operations affected by subsequent MPB outbreak to test empirically the efficacy and longevity of management actions on influencing post-outbreak fuel profiles and aboveground C dynamics. We found that silvicultural treatments applied about 60 years prior to MPB outbreak resulted in post-outbreak conditions characterized by reduced surface fuel loads, increased canopy fuel loads, and dampened redistribution of aboveground biomass C from live to dead pools compared to uncut stands. This work extends observations of treatment longevity in subalpine forests, highlighting that structural and functional legacies of silvicultural treatments may last half a century and persist following a severe bark beetle outbreak. By creating variable conditions among stands, silvicultural treatments such as partial or complete cutting can support a variety of management outcomes and ecosystem services. Our findings of tradeoffs between modification of fuel profiles and aboveground C trajectories following historical cutting support the need to balance multiple objectives for effective forest management.

Data availability

Data analyzed in this study are available on Zenodo: https://doi.org/10.5281/zenodo.7675524

References

Agne MC, Woolley T, Fitzgerald S. 2016. Fire severity and cumulative disturbance effects in the post-mountain pine beetle lodgepole pine forests of the Pole Creek Fire. For Ecol Manag 366:73–86.

Alexander ME, Cruz MG. 2013. Are the applications of wildland fire behaviour models getting ahead of their evaluation again? Environ Model Softw 41:65–71.

Alexander RR, Troendle CA, Kaufmann MR, Shepperd WD, Crouch GL, Watkins RK. 1985. The Fraser Experimental Forest, Colorado: Research program and published research 1937–1985. Fort Collins, CO: U.S. Department of Agriculture, Forest Service, Rocky Mountain Forest and Range Experiment Station.

Baker WL. 2009. Fire Ecology in Rocky Mountain Landscapes. Washington, D.C.: Island Press.

Bates D, Maechler M, Bolker B, Walker S. 2015. Fitting linear mixed-effects models using lme4. J Stat Softw 67:1–48.

Bowler R, Fredeen AL, Brown M, Andrew Black T. 2012. Residual vegetation importance to net CO2 uptake in pine-dominated stands following mountain pine beetle attack in British Columbia, Canada. For Ecol Manag 269:82–91.

Bradford JB, Birdsey RA, Joyce LA, Ryan MG. 2008. Tree age, disturbance history, and carbon stocks and fluxes in subalpine Rocky Mountain forests. Glob Change Biol 14:2882–97.

Bright BC, Hicke JA, Hudak AT. 2012. Estimating aboveground carbon stocks of a forest affected by mountain pine beetle in Idaho using lidar and multispectral imagery. Remote Sens Environ 124:270–81.

Brooks ME, Kristensen K, van Benthem KJ, Magnusson A, Berg CW, Nielsen A, Skaug HJ, Maechler M, Bolker BM. 2017. glmmTMB balances speed and flexibility among packages for zero-inflated generalized linear mixed modeling. R J 9:378–400.

Brown JK. 1974. Handbook for inventorying downed woody material. Ogden, UT: U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station.

Brown JK. 1978. Weight and density of crowns of Rocky Mountain conifers. Ogden, UT: U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station.

Brown JK, Snell JAK, Bunnell DL. 1977. Handbook for predicting slash weight of western conifers. Ogden, UT: U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station.

Brown PM, Shepperd WD, Mata SA, McClain DL. 1998. Longevity of windthrown logs in a subalpine forest of central Colorado. Can J For Res 28:5.

Buma B, Poore RE, Wessman CA. 2014. Disturbances, their interactions, and cumulative effects on carbon and charcoal stocks in a forested ecosystem. Ecosystems 17:947–59.

Caldwell MK, Hawbaker TJ, Briggs JS, Cigan PW, Stitt S. 2013. Simulated impacts of mountain pine beetle and wildfire disturbances on forest vegetation composition and carbon stocks in the Southern Rocky Mountains. Biogeosciences Discuss 10:12919–65.

Cole DM, Jensen CE. 1982. Models for describing vertical crown development of lodgepole pine stands. U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station.

Collins BJ, Rhoades CC, Hubbard RM, Battaglia MA. 2011. Tree regeneration and future stand development after bark beetle infestation and harvesting in Colorado lodgepole pine stands. For Ecol Manag 261:2168–75.

Collins BJ, Rhoades CC, Battaglia MA, Hubbard RM. 2012. The effects of bark beetle outbreaks on forest development, fuel loads and potential fire behavior in salvage logged and untreated lodgepole pine forests. For Ecol Manag 284:260–8.

Crotteau JS, Keyes CR, Hood SM, Affleck DLR, Sala A. 2018. Fuel dynamics after a bark beetle outbreak impacts experimental fuel treatments. Fire Ecol 14:13.

Cruz MG, Alexander ME, Wakimoto RH. 2004. Modeling the likelihood of crown fire occurrence in conifer forest stands. For Sci 50:640–58.

Davis TS, Meddens AJH, Stevens-Rumann CS, Jansen VS, Sibold JS, Battaglia MA. 2022. Monitoring resistance and resilience using carbon trajectories: Analysis of forest management–disturbance interactions. Ecol Appl 32:e2704.

DeRose RJ, Long JN. 2014. Resistance and resilience: a conceptual framework for silviculture. For Sci 60:1205–12.

Donato DC, Harvey BJ, Romme WH, Simard M, Turner MG. 2013a. Bark beetle effects on fuel profiles across a range of stand structures in Douglas-fir forests of Greater Yellowstone. Ecol Appl 23:3–20.

Donato DC, Simard M, Romme WH, Harvey BJ, Turner MG. 2013b. Evaluating post-outbreak management effects on future fuel profiles and stand structure in bark beetle-impacted forests of Greater Yellowstone. For Ecol Manag 303:160–74.

Edburg SL, Hicke JA, Lawrence DM, Thornton PE. 2011. Simulating coupled carbon and nitrogen dynamics following mountain pine beetle outbreaks in the western United States. J Geophys Res Biogeosciences 116:G04033.

Fornwalt PJ, Rhoades CC, Hubbard RM, Harris RL, Faist AM, Bowman WD. 2018. Short-term understory plant community responses to salvage logging in beetle-affected lodgepole pine forests. For Ecol Manag 409:84–93.

Fraterrigo JM, Rusak JA. 2008. Disturbance-driven changes in the variability of ecological patterns and processes. Ecol Lett 11:756–70.

Griffin JM, Simard M, Turner MG. 2013. Salvage harvest effects on advance tree regeneration, soil nitrogen, and fuels following mountain pine beetle outbreak in lodgepole pine. For Ecol Manag 291:228–39.

Hansen EM, Amacher MC, Van Miegroet H, Long JN, Ryan MG. 2015. Carbon dynamics in central US Rockies lodgepole pine type after mountain pine beetle outbreaks. For Sci 61:665–79.

Harmon ME, Franklin JF, Swanson FJ, Sollins P, Gregory SV, Lattin JD, Anderson NH, Cline SP, Aumen NG, Sedell JR, Lienkaemper GW, Cromack K, Cummins KW. 1986. Ecology of coarse woody debris in temperate ecosystems. Adv Ecol Res 15:133–302.

Hart SJ, Schoennagel T, Veblen TT, Chapman TB. 2015. Area burned in the western United States is unaffected by recent mountain pine beetle outbreaks. Proc Natl Acad Sci 112:4375–80.

Harvey BJ, Donato DC, Romme WH, Turner MG. 2014a. Fire severity and tree regeneration following bark beetle outbreaks: the role of outbreak stage and burning conditions. Ecol Appl 24:1608–25.

Harvey BJ, Donato DC, Turner MG. 2014b. Recent mountain pine beetle outbreaks, wildfire severity, and postfire tree regeneration in the US Northern Rockies. Proc Natl Acad Sci 111:15120–5.

Hicke JA, Allen CD, Desai AR, Dietze MC, Hall RJ, Ted Hogg EH, Kashian DM, Moore D, Raffa KF, Sturrock RN, Vogelmann J. 2012a. Effects of biotic disturbances on forest carbon cycling in the United States and Canada. Glob Change Biol 18:7–34.

Hicke JA, Johnson MC, Hayes JL, Preisler HK. 2012b. Effects of bark beetle-caused tree mortality on wildfire. For Ecol Manag 271:81–90.

Higuera PE, Shuman BN, Wolf KD. 2021. Rocky Mountain subalpine forests now burning more than any time in recent millennia. Proc Natl Acad Sci 118:e2103135118.

Hoffman C, Morgan P, Mell W, Parsons R, Strand EK, Cook S. 2012. Numerical simulation of crown fire hazard immediately after bark beetle-caused mortality in Lodgepole Pine Forests. For Sci 58:178–88.

Hoffman CM, Morgan P, Mell W, Parsons R, Strand E, Cook S. 2013. Surface fire intensity influences simulated crown fire behavior in Lodgepole Pine Forests with recent mountain pine beetle-caused tree mortality. For Sci 59:390–9.

Hoffman CM, Linn R, Parsons R, Sieg C, Winterkamp J. 2015. Modeling spatial and temporal dynamics of wind flow and potential fire behavior following a mountain pine beetle outbreak in a lodgepole pine forest. Agric For Meteorol 204:79–93.

Hoffman C, Sieg C, Linn R, Mell W, Parsons R, Ziegler J, Hiers J. 2018. Advancing the science of wildland fire dynamics using process-based models. Fire 1:32.

Hood SM, Keane RE, Smith HY, Egan J, Holsinger L. 2018. Chapter 13: Conventional fire behavior modeling systems are inadequate for predicting fire behavior in bark beetle-impacted forests (Project INT-EM-F-11-03). In: Potter KM, Conkling BL, Eds. Forest health monitoring: national status, trends, and analysis 2017. General technical report SRS-233. Asheville, NC: U.S. Department of Agriculture, Forest Service, Southern Research Station. pp 167–76.

Hood SM, Harvey BJ, Fornwalt PJ, Naficy CE, Hansen WD, Davis KT, Battaglia MA, Stevens-Rumann CS, Saab VA. 2021. Fire ecology of rocky mountain forests. In: Collins B, Greenberg CH, Eds. Fire ecology and management past present and future of US forested ecosystems. Vol. 39. Managing Forest Ecosystems. Berlin: Springer. pp 287–336. https://doi.org/10.1007/978-3-030-73267-7_8.

Huckaby LS, Moir WH. 1998. Forest communities at Fraser Experimental Forest, Colorado. Southwest Nat 43:204–18.

Hyde JC, Smith AMS, Ottmar RD, Alvarado EC, Morgan P. 2011. The combustion of sound and rotten coarse woody debris: a review. Int J Wildland Fire 20:163.

Janousek WM, Hicke JA, Meddens AJH, Dreitz VJ. 2019. The effects of mountain pine beetle outbreaks on avian communities in lodgepole pine forests across the greater Rocky Mountain region. For Ecol Manag 444:374–81.

Jenkins MJ, Hebertson E, Page W, Jorgensen CA. 2008. Bark beetles, fuels, fires and implications for forest management in the Intermountain West. For Ecol Manag 254:16–34.

Jenkins MJ, Page WG, Hebertson EG, Alexander ME. 2012. Fuels and fire behavior dynamics in bark beetle-attacked forests in western North America and implications for fire management. For Ecol Manag 275:23–34.

Jenkins MJ, Runyon JB, Fettig CJ, Page WG, Bentz BJ. 2014. Interactions among the mountain pine beetle, fires, and fuels. For Sci 60:489–501.

Jolly WM, Parsons RA, Hadlow AM, Cohn GM, McAllister SS, Popp JB, Hubbard RM, Negron JF. 2012. Relationships between moisture, chemistry, and ignition of Pinus contorta needles during the early stages of mountain pine beetle attack. For Ecol Manag 269:52–9.

Keane RE. 2008. Biophysical controls on surface fuel litterfall and decomposition in the northern Rocky Mountains, USA. Can J For Res 38:1431–45.

Keeley JE. 2009. Fire intensity, fire severity and burn severity: a brief review and suggested usage. Int J Wildland Fire 18:116.

Klutsch JG, Battaglia MA, West DR, Costello SL, Negron JF. 2011. Evaluating potential fire behavior in lodgepole pine-dominated forests after a mountain pine beetle epidemic in north-central Colorado. West J Appl For 26:101–9.

Kulakowski D, Veblen TT. 2007. Effect of prior disturbances on the extent and severity of wildfire in Colorado subalpine forests. Ecology 88:759–69.

Linn RR, Sieg CH, Hoffman CM, Winterkamp JL, McMillin JD. 2013. Modeling wind fields and fire propagation following bark beetle outbreaks in spatially-heterogeneous pinyon-juniper woodland fuel complexes. Agric For Meteorol 173:139–53.

Long JA. 2020. jtools: Analysis and presentation of social scientific data. https://cran.r-project.org/package=jtools

Lotan JE, Perry DA. 1983. Ecology and regeneration of lodgepole pine. Washington: U.S. Department of Agriculture.

Lutes DC, Keane RE, Caratti JF, Key CH, Benson NC, Sutherland S, Gangi LJ. 2006. FIREMON: Fire effects monitoring and inventory system. Fort Collins, CO: U.S. Department of Agriculture, Forest Service, Rocky Mountain Research Station.

Martin AR, Domke GM, Doraisami M, Thomas SC. 2021. Carbon fractions in the world’s dead wood. Nat Commun 12:889.

McKinley DC, Ryan MG, Birdsey RA, Giardina CP, Harmon ME, Heath LS, Houghton RA, Jackson RB, Morrison JF, Murray BC, Pataki DE, Skog KE. 2011. A synthesis of current knowledge on forests and carbon storage in the United States. Ecol Appl 21:1902–24.

Means JE, Hansen HA, Koerper GJ, Alaback PB, Klopsch MW. 1994. Software for computing plant biomass - BIOPAK users guide. Portland, OR: US Department of Agriculture, Forest Service, Pacific Northwest Research Station.

Meddens AJH, Hicke JA, Ferguson CA. 2012. Spatiotemporal patterns of observed bark beetle-caused tree mortality in British Columbia and the western United States. Ecol Appl 22:1876–91.

Meigs GW, Campbell JL, Zald HSJ, Bailey JD, Shaw DC, Kennedy RE. 2015. Does wildfire likelihood increase following insect outbreaks in conifer forests? Ecosphere 6:1–24.

Meigs GW, Zald HSJ, Campbell JL, Keeton WS, Kennedy RE. 2016. Do insect outbreaks reduce the severity of subsequent forest fires? Environ Res Lett 11:045008.

Mietkiewicz N, Kulakowski D. 2016. Relative importance of climate and mountain pine beetle outbreaks on the occurrence of large wildfires in the western USA. Ecol Appl 26:2525–37.

Moriarty K, Cheng AS, Hoffman CM, Cottrell SP, Alexander ME. 2019. Firefighter observations of “surprising” fire behavior in mountain pine beetle-attacked lodgepole pine forests. Fire 2:34.

Morris JL, Cottrell S, Fettig CJ, Hansen WD, Sherriff RL, Carter VA, Clear JL, Clement J, DeRose RJ, Hicke JA, Higuera PE, Mattor KM, Seddon AWR, Seppä HT, Stednick JD, Seybold SJ. 2017. Managing bark beetle impacts on ecosystems and society: priority questions to motivate future research. J Appl Ecol 54:750–60.

Morris JE, Buonanduci MS, Agne MC, Battaglia MA, Harvey BJ. 2022. Does the legacy of historical thinning treatments foster resilience to bark beetle outbreaks in subalpine forests? Ecol Appl 32:e02474.

National Wildfire Coordinating Group. 2021. NWCG Glossary of Wildland Fire, PMS 205. https://www.nwcg.gov/publications/pms205

Olson CM, Martin RE. 1981. Estimating biomass of shrubs and forbs in Central Washington Douglas-fir stands. Bend, OR: U.S. Department of Agriculture, Forest Service, Pacific Northwest Forest and Range Experiment Station.

Page W, Jenkins MJ. 2007. Predicted fire behavior in selected mountain pine beetle–infested lodgepole pine. For Sci 53:662–74.

Page WG, Alexander ME, Jenkins MJ. 2013. Wildfire’s resistance to control in mountain pine beetle-attacked lodgepole pine forests. For Chron 89:783–94.

Parks SA, Abatzoglou JT. 2020. Warmer and drier fire seasons contribute to increases in area burned at high severity in western US forests from 1985–2017. Geophys Res Lett 47:e2020GL089858.

Pelz KA, Rhoades CC, Hubbard RM, Battaglia MA, Smith FW. 2015. Species composition influences management outcomes following mountain pine beetle in lodgepole pine-dominated forests. For Ecol Manag 336:11–20.

Pfeifer EM, Hicke JA, Meddens AJH. 2011. Observations and modeling of aboveground tree carbon stocks and fluxes following a bark beetle outbreak in the western United States. Glob Change Biol 17:339–50.

Pimont F, Linn RR, Dupuy J-L, Morvan D. 2006. Effects of vegetation description parameters on forest fire behavior with FIRETEC. For Ecol Manag 234:S120.

Prichard SJ, Sandberg DV, Ottmar RD, Eberhardt E, Andreu A, Eagle P, Swedin K. 2013. Fuel characteristic classification system version 3.0: technical documentation. Portland, OR: U.S. Department of Agriculture Forest Service, Pacific Northwest Research Station.

PRISM Climate Group. 2012. Oregon State University, http://prism.oregonstate.edu.

R Core Team. 2022. R: A language and environment for statistical computing. https://www.R-project.org/

Reinhardt E, Scott J, Gray K, Keane R. 2006. Estimating canopy fuel characteristics in five conifer stands in the western United States using tree and stand measurements. Can J For Res 36:2803–14.

Rothermel RC. 1972. A mathematical model for predicting fire spread in wildland fuels. Ogden, UT: U.S. Department of Agriculture Forest Service, Intermountain Forest and Range Experiment Station.

Saab VA, Latif QS, Rowland MM, Johnson TN, Chalfoun AD, Buskirk SW, Heyward JE, Dresser MA. 2014. Ecological consequences of mountain pine beetle outbreaks for wildlife in western North American forests. For Sci 60:539–59.

Sando RW, Wick CH. 1972. A method of evaluating crown fuels in forest stands. St. Paul, MN: U.S. Department of Agriculture Forest Service, North Central Forest Experiment Station.

Schlesinger WH, Bernhardt ES. 2013. Biogeochemistry: an analysis of global change, 3rd edn. San Diego, California: Academic Press.

Schoennagel T, Veblen TT, Romme WH. 2004. The interaction of fire, fuels, and climate across Rocky Mountain forests. BioScience 54:661–76.

Schoennagel T, Veblen TT, Negron JF, Smith JM. 2012. Effects of mountain pine beetle on fuels and expected fire behavior in lodgepole pine forests, Colorado, USA. PLoS ONE 7:e30002.

Seidl R, Thom D, Kautz M, Martin-Benito D, Peltoniemi M, Vacchiano G, Wild J, Ascoli D, Petr M, Honkaniemi J, Lexer MJ, Trotsiuk V, Mairota P, Svoboda M, Fabrika M, Nagel TA, Reyer CPO. 2017. Forest disturbances under climate change. Nat Clim Change 7:395–402.

Sibold JS, Veblen TT, González ME. 2006. Spatial and temporal variation in historic fire regimes in subalpine forests across the Colorado Front Range in Rocky Mountain National Park, Colorado, USA. J Biogeogr 33:631–47.

Simard M, Romme WH, Griffin JM, Turner MG. 2011. Do mountain pine beetle outbreaks change the probability of active crown fire in lodgepole pine forests? Ecol Monogr 81:3–24.

Standish JT, Manning GH, Demaerschalk JP. 1985. Development of biomass equations for British Columbia tree species. Victoria: Canadian Forestry Service Pacific Forest Research Centre.

Talucci AC, Krawchuk MA. 2019. Dead forests burning: the influence of beetle outbreaks on fire severity and legacy structure in sub-boreal forests. Ecosphere 10:e02744.

Talucci AC, Matosziuk LM, Hatten JA, Krawchuk MA. 2020. An added boost in pyrogenic carbon when wildfire burns forest with high pre-fire mortality. Fire Ecol 16:21.

Turner MG, Braziunas KH, Hansen WD, Harvey BJ. 2019. Short-interval severe fire erodes the resilience of subalpine lodgepole pine forests. Proc Natl Acad Sci 116:11319–28.

Van Wagner CE. 1977. Conditions for the start and spread of crown fire. Can J For Res 7:23–34.

Vorster AG, Evangelista PH, Stohlgren TJ, Kumar S, Rhoades CC, Hubbard RM, Cheng AS, Elder K. 2017. Severity of a mountain pine beetle outbreak across a range of stand conditions in Fraser Experimental Forest, Colorado, United States. For Ecol Manag 389:116–26.

Wilm HG, Dunford EG. 1948. Effect of timber cutting on water available for stream flow from a lodgepole pine forest. Washington: United States Department of Agriculture.

Woolley T, Shaw DC, Hollingsworth LT, Agne MC, Fitzgerald S, Eglitis A, Kurth L. 2019. Beyond red crowns: complex changes in surface and crown fuels and their interactions 32 years following mountain pine beetle epidemics in south-central Oregon, USA. Fire Ecol 15:4.

Acknowledgements

We thank the USDA Forest Service Rocky Mountain Research Station and K Elder, D McClain, and B Starr of the Fraser Experimental Forest for logistical support. We thank F Carroll, N Lau, A Link, A Liu, F Mac, S Riedel, and RT Sternberg for field assistance. We are grateful to J Franklin, M Harmon, L Huckaby, D Kashian, W Moir, J Negrón, C Rhoades, and T Veblen for project insights. We thank E Alvarado, J Hille Ris Lambers, S Hart, and two anonymous reviewers for manuscript feedback. This work was supported by (1) the McIntire-Stennis Cooperative Forestry Research Program (grant no. NI17MSCFRXXXG003/project accession no. 1012773) from the USDA National Institute of Food and Agriculture; (2) the National Science Foundation (Award 1853520); (3) a Graduate Research Opportunity Enhancement Fellowship from University of Washington’s College of the Environment; and (4) a Jesse L. Riffe Family Endowed Fellowship from University of Washington’s School of Environmental and Forest Sciences. B Harvey acknowledges support from the Jack Corkery and George Corkery Jr. Endowed Professorship in Forest Sciences. This manuscript was written and prepared with a US Government employee on official time, and therefore, it is in the public domain and not subject to copyright.

Additional information

Author Contributions

JEM and BJH conceived and designed the study, with input from all authors. JEM led the field data collection with assistance from MSB, MCA, and BJH. JEM led the analysis with overall guidance from BJH, guidance from DCD on fuel and carbon calculations, guidance from MAB on stand history and silvicultural prescriptions, and support from all authors. JEM and BJH wrote the manuscript, and all authors contributed critically to drafts and approved the final manuscript.

About this article

Cite this article

Morris, J.E., Buonanduci, M.S., Agne, M.C. et al. Fuel Profiles and Biomass Carbon Following Bark Beetle Outbreaks: Insights for Disturbance Interactions from a Historical Silvicultural Experiment. Ecosystems 26, 1290–1308 (2023). https://doi.org/10.1007/s10021-023-00833-5
