Abstract

Impairments in social cognition have been frequently described in 22q11.2 deletion syndrome (22q11.2DS) and are thought to be a hallmark of difficulties in social interactions. The present study addresses aspects that are critical for everyday social cognitive functioning but have received little attention so far. Sixteen children with 22q11.2DS and 22 controls completed 1 task of facial expression recognition, 1 task of attribution of facial expressions to faceless characters involved in visually presented social interactions, and 1 task of attribution of facial expressions to characters involved in aurally presented dialogues. All three tasks have in common to involve processing of emotions. All participants also completed two tasks of attention and two tasks of visual spatial perception, and their parents completed some scales regarding behavioural problems of their children. Patients performed worse than controls in all three tasks of emotion processing, and even worse in the second and third tasks. However, they performed above chance level in all three tasks, and the results were independent of IQ, age and gender. The analysis of error patterns suggests that patients tend to coarsely categorize situations as either attractive or repulsive and also that they have difficulties in differentiating emotions that are associated with threats. An isolated association between the tasks of emotion and behaviour was found, showing that the more frequently patients with 22q11.2DS perceive happiness where there is not, the less they exhibit aggressive behaviour.

Introduction

It is now well documented that children and adults with 22q11.2 deletion syndrome (22q11.2DS) have poorer social competences, including mood lability, shyness, and difficulties in initiating and maintaining social relationships, compared with typically developing young people [1]. In 22q11.2DS, these social dysfunctions could be partly underlain by impairments in social cognitive processes (for a review, see [2]) and could also be linked to the emergence of psychosis [3]. Social cognition, which is defined as the ability to understand oneself and others in the social world [4], consists of emotional processing, theory of mind (ToM), attribution style, and social perception and knowledge [5]. The present study addresses three components of social cognition: (i) the identification of facial expressions, which has been extensively investigated in 22q11.2DS populations and yet provided inconsistent results, and two elements that are critical for everyday social cognitive functioning but have received extremely little attention in the literature, namely (ii) the comprehension and interpretation of visual scenes involving emotions and (iii) the identification of prosodic aspects of dialogues.

Concerning the recognition of emotional facial expressions, despite frequent reports of impaired social competences, several studies have documented nothing more specific than a general impairment in 22q11.2DS [1, 6, 7], even though various and inconsistent deficits have been highlighted in the literature. Indeed, according to some authors, patients with 22q11.2DS have difficulties in recognizing facial expressions of fear, anger and disgust, while recognition of happiness, sadness and surprise may be preserved [8]. However, others have reported different results [3]. It has been suggested that such an impairment might be due to slowed, insufficient or inefficient gathering and processing of information. For instance, Franchini et al. [9] suggested that patients need more time to recognize emotions than healthy controls do, whereas another study, using a morphing continuum, found that 22q11.2DS patients require higher intensities of emotion to accurately recognize facial expressions [7]. In addition, patients with 22q11.2DS were found to exhibit fewer fixations on relevant facial features such as the eyes, the nose and the mouth [8, 10, 11], and such atypical and inefficient visual scanpath patterns could partially explain the poorer emotion identification skills. According to Campbell et al. [8], patients spent less time than controls looking at the eye region, which is known to be an important region for accurate emotion recognition, and spent more time looking at the mouth [10, 12]. Finally, in 22q11.2DS, impairments in emotional facial processing may be underlain by lower-level visual and attentional impairments that could play a central role in the difficulties observed in these social cognitive skills [13].

Processing and understanding social information also requires collecting and processing cues beyond facial expressions, such as postural and vocal information that is present in social interactions or prosody. In most cases, the experimental tasks that have been used to study emotion recognition in 22q11.2DS have been based on emotional labelling of people depicted in photos [8] or in representations of a cognitive mental state described in vignettes [3]. Little has been done to investigate the understanding of more common social contexts, and when it has been done, it proved difficult to disentangle the social cognitive difficulties from executive deficits [1]. Concerning emotional prosody, only one study has compared the performance of patients with 22q11.2DS to that of healthy controls [14], and it failed to find any difference between these groups in the way they perceive and understand vocal emotions. In sum, contrary to the recognition of facial expressions, which has been extensively investigated, very little has been done regarding the interpretation and understanding of situations or social and environmental contexts, and almost nothing has been done to explore the recognition and interpretation of vocal prosody. However, real-life social events are not composed solely of facial expressions but are mostly made of complex interactions through which postures, attitudes and voices have to be interpreted. The way patients with 22q11.2DS comprehend scenes and vocal prosody has yet to be understood.

The existing studies regularly contradict each other. This might be due to the variety of stimuli and paradigms used to assess the different facets of social cognition. It might also be due to the fact that the same participants rarely complete various tasks using similar stimuli and similar procedures. Another potential source of inconsistency might be the fact that some studies have focused only on children, others focused on adults, and some included both children and adults. Finally, most studies included patients with IQ levels lower than the normal range and failed to tease apart the effects of global cognitive deficits from impairments in social cognition. Here, we aimed to compare the performance of children with 22q11.2DS to that of controls on three tasks assessing emotional aspects of social cognition. Even though non-naturalistic stimuli were used, they were created to render the tasks comparable and, therefore, to allow the components of social cognition to be distinguished. The first task assessed the recognition of isolated emotional facial expressions (facial expression recognition task). The second task assessed the attribution of facial expressions to faceless characters involved in visually presented social interactions (face-cartoon-matching task). This task involved the emotional dimension of theory of mind (ToM), also called affective ToM [15], which is the ability to understand that other people have mental states that are independent from one’s own [16]. The third task assessed the attribution of facial expressions to characters involved in aurally presented dialogues (face-prosody-matching task). Investigating the performance of the same participants across all three aspects of social cognition is critical for comprehending social cognitive processes in a more global way. In fact, all three tasks used here involve some common processes (i.e. identification of an emotion, visual search for the right face and choosing it), and each task also involves some specific processes (e.g. taking into account visual or auditory cues, interpretation of the whole context, etc.). Therefore, comparing the three tasks within each group helps to identify what determines performance. This is why all stimuli were specifically designed for the present study to enable the comparison of the three tasks.

In addition to the analysis of correct performances, which could provide insight into what might not work correctly in 22q11.2DS patients’ recognition of facial expressions and an understanding of visual social contexts and vocal prosody, as well as the extent of such difficulties, we also aimed to analyze the confusion among the emotions in each task to unravel the way 22q11.2DS patients process emotional information to understand social contexts. The analysis of error patterns in 22q11.2DS patients, compared to controls, is even more important, as the literature is quite inconsistent on this issue. Understanding confusions between emotions in each task could provide some hypotheses about the origins of impairments in social cognition. For instance, regular confusion between two emotions in the facial expression recognition task might reveal that the parts of the faces that individuate emotions are less attended to and that scanning of some kinds of expressive faces might be incomplete [8]. On the other hand, regular confusion among emotions in the face-cartoon-matching task might reveal the existence of biases in comprehending the nature of the situations depicted [17], biases in interpreting social and environmental contexts [18] and even that some situations might regularly be appraised as a mixture of different emotions. Finally, regular confusion among emotions in the face-prosody-matching task might reveal difficulties in the perception and interpretation of vocal parameters that differentiate emotions [19]. Unfortunately, although we predicted that patients with 22q11.2DS would perform more poorly than controls in all three tasks, the scarcity of the data available in the literature, as well as the abovementioned inconsistencies, did not allow us to anticipate specific error patterns.

Alongside the three social cognitive tasks, visual spatial attention and perception abilities, as well as scanning and exploring competencies, were assessed. According to some authors, these cognitive functions could underlie difficulties in emotional processing [8, 9, 12, 13]. Thus, we assumed that results obtained in the social cognitive tasks could be partly explained by measures of attention and visual spatial abilities. We also assessed several components of behaviour through parent-completed scales, more specifically irritability, agitation and crying; lethargy/social withdrawal; stereotypic behaviour; hyperactivity/noncompliance; and inappropriate speech, but also aggressive behaviour and self-esteem. The aim was to measure the impact of social cognitive impairments on the behaviour of children with 22q11.2DS. Social cognition appears to have a direct and strong impact on everyday life functioning in people with schizophrenia [20,21,22], but this relation is still poorly understood in people with 22q11.2DS. Indeed, social cognitive impairments have been extensively studied in relation to the emergence of psychotic symptoms in adults [3], yet little is known about the impact of social cognition on specific behaviour in children and adolescents with 22q11.2DS [23]. For instance, in a cross-sectional study, an association was observed between social cognitive measures and a behavioural screening questionnaire completed by parents [6]. However, another study failed to find any correlation between social cognition and behaviour [14]. These discrepancies may be due to methodological differences between the studies, but also to the sensitivity of the variables used to assess cognition, social cognitive function and behaviour. It is, therefore, difficult to predict with precision whether such an association would be found in children with 22q11.2DS, yet what was reported in adults leads us to expect that social cognitive function will be associated with behaviour.

Methods

Participants

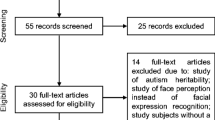

We conducted a power/sample size analysis. Based on ten studies that are cited in the introduction section of this paper, the average and sample-weighted effect size of the most prominent and frequently reported difference in social cognition between controls and 22q11.2DS patients, i.e. overall accuracy in facial expression recognition (apprehension of social contexts and perception of prosody were only rarely studied and it is not possible to conduct specific power/sample analyses on already published data) is d* = 1.058. Provided a power of 80% to detect such an effect, the total sample size needed is 32 individuals, combining controls and patients.
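The sample-size figure can be roughly checked with the standard normal approximation for a two-sided comparison of two independent means. The sketch below is illustrative only: the function name, the two-sided α of 0.05 and the equal group sizes are assumptions not stated in the text, and this approximation tends to return slightly smaller samples than the exact noncentral-t calculation used by dedicated power software.

```python
import math
from statistics import NormalDist


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2.
    Equal group sizes are assumed here for illustration.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


if __name__ == "__main__":
    n = n_per_group(1.058)  # sample-weighted effect size reported in the text
    print(f"per group: {n}, total: {2 * n}")
```

With d = 1.058 the approximation lands close to, but slightly below, the total of 32 reported above, which is the expected direction of the discrepancy for a normal approximation.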

Sixteen children with 22q11.2DS and 22 healthy controls aged 5–13 years took part in the study. The two groups were matched in age and gender. Participants were excluded if they had difficulties comprehending the French language, marked reading difficulties, or significant comorbid medical conditions, such as a current or past neurological disorder affecting brain function or a severe visual or hearing impairment interfering with assessment.

The diagnosis of 22q11.2DS was confirmed in all patients by fluorescence in situ hybridization (FISH) and comparative genomic hybridization (CGH array). To be included, participants with 22q11.2DS had to have an IQ in the normal range of 70–130, assessed with Raven’s Coloured Progressive Matrices [24], and a normal ear, nose, and throat (ENT) examination. Raven’s Coloured Progressive Matrices is a non-verbal test of fluid intelligence in which every item consists of a visual geometric design with a missing part; the participant is given six options from which to pick the one that fills in the missing part.

Cognitive testing

Apart from the above-mentioned evaluation of the IQ level, a short cognitive assessment was also conducted. This included two tests of visual spatial attention/scanning and exploring: (i) the Sky Search subtest from the Test of Everyday Attention for Children (TEA-Ch), assessing selective/focused attention [25], and (ii) the Overlapping lines task [26], assessing visual spatial scanning and control of oculomotor behaviour. The cognitive assessment also included two tests of visual spatial perception taken from the NEPSY-II (A Developmental NeuroPsychological Assessment) battery [27, 28]: (i) the Arrows subtest, which assesses the ability to judge directionality, and (ii) the Orientation subtest, which assesses the perception of visual spatial relations and positions. Attention/scanning and visual spatial perception were chosen on the basis of previous findings suggesting that these functions may explain, at least partly, performance in the perception of facial expressions in 22q11.2DS [8, 9, 12, 13]. The proportion of errors was preferred to the proportion of correct responses (although the two convey the same information) so that, across all measures, higher scores denote greater difficulty.

Please see Supplementary Material for a detailed description of the four tests.

Assessment of behaviour

Behaviour was assessed through three parent-completed scales: (i) aberrant behaviours were assessed with the Aberrant Behavior Checklist (ABC-C) [29], a 58-item questionnaire assessing on a four-point scale (0 = it is not a problem; 3 = it is a very important problem) the following behaviours: irritability, agitation and crying; lethargy/social withdrawal; stereotypic behaviour; hyperactivity/noncompliance; and inappropriate speech. The larger the score, the more a behaviour is judged by parents as being problematic; (ii) aggressive behaviour was assessed with the reactive–proactive aggression questionnaire [30, 31], a 6-item questionnaire using a 5-point Likert scale (0 = never; 5 = almost always). The larger the score, the more aggressive the child is described as being by his/her parents; and (iii) self-esteem was assessed through the self-esteem true/false 8-item subscale of the MDI-C [32]. The larger the score, the lower the self-esteem.

Social cognition assessment

Social cognitive processes were assessed with a protocol intended for children and composed of three tasks. All details can be found in the Supplementary Material. Visual stimuli were produced by a graphic designer using Photoshop CS5 software on a Wacom Bamboo A5 graphic tablet. All stimuli were created especially for this study and were validated in a sample of adults (see Supplementary Material). There were four reasons for creating new stimuli. The first was to control, as far as possible, all aspects of the stimuli and to avoid typical problems that exist with natural stimuli, such as photographs. These problems involve contrast, saliency, and visual and auditory complexity. The second reason was to make all three tasks directly comparable, since the response mode and the stimuli used for the responses were strictly identical in all three tasks. The third reason was that it was impossible to create natural stimuli for the face-cartoon-matching task and for the face-prosody-matching task. The last reason was that some studies [33, 34] showed that young children have more difficulty recognizing facial expressions in photographs than in drawings of faces. The use of drawings minimizes confounds related to facial characteristics that are present in natural photographs but irrelevant to the emotion. These include gender, age, attractiveness, and ethnicity, as well as physical characteristics such as wrinkles and freckles.

All participants were tested in a silent room.

Facial expression recognition task

The aim of the first task was to assess the identification of isolated facial expressions. Six hairless and genderless cartoon faces expressing the six basic universal emotions were drawn with black ink according to the traits listed by Ekman and Friesen [35]. The emotions were happiness, surprise, sadness, anger, disgust and fear. The six faces were presented simultaneously on A4 landscape-oriented sheets of white paper in two rows of three faces each (Fig. 1). Five sheets (i.e. a total of 30 stimuli, with each expression being presented 5 times) were produced, and the spatial location of each facial expression changed from 1 sheet to the next. For each sheet, the participant was required to point to the face that looked happy, sad, disgusted, angry, surprised or fearful. After the final sheet, the examiner proceeded to point to and simultaneously name each one of the faces, even if all responses given by the participant were correct. This aimed to reinforce the identification of each facial expression for the subsequent tasks. The task lasted approximately 5 min.

Face-cartoon-matching task

The aim of this task was to assess the ability to understand the context of visually presented scenes [17] involving one to three faceless characters in situations where emotions were expressed. The scenes were drawn with black ink on white A4 landscape-oriented sheets. The target character was always wearing orange clothing to be immediately and easily detected and identifiable. The target character was a boy in half of the trials and a girl in the other half. Thirty scenarios were included in the test, depicting various everyday situations (see Supplementary Material for pilot testing and selection of stimuli). Each of the six facial expressions matched the emotion expressed by the target character, and for each expression, five scenarios were presented. A sixth scenario, which was associated with happiness, was used as both an example and a training trial. At the bottom of each scene, the six isolated facial expressions that were previously used were presented in a row that served as the answer choices (Fig. 2). The participant was required to point to the face the target character would make in each scene presented as quickly and as accurately as possible. The task lasted approximately 20 min.

Face-prosody-matching task

The aim of this task was to assess the ability to understand the emotional context of aurally presented dialogues involving two characters. The dialogues always consisted of three sentences, the propositional content of which contained no emotion-related words (i.e. “first character: There are spoons on the table. Second character: three chairs are also there. First character again: and the window is open.”) [36]. However, all three sentences were expressed with vocal emotion, and for each dialogue, the expressed vocal emotion was the same for all three sentences (e.g. all three sentences were expressed with anger). The dissociation between the propositional content and the emotional prosody aimed to drastically diminish any interference between the two. Thirty dialogues were created, 5 for each of the 6 basic emotions. A final dialogue (in which happiness was expressed) was created and used as an example and a training trial. The six isolated facial expressions used in the first test were used for the responses. They were presented on an A4 landscape-oriented white sheet, were placed on the table in front of the participant and were visible throughout the whole test. The participant was required to point to the face the characters would make in each dialogue. He/she was told to wait until the dialogue was over before giving an answer and to respond as accurately as possible. The task lasted less than 10 min.

Statistical analyses

Several statistical analyses were conducted. (i) Analyses of demographic characteristics, IQ level, performance on cognitive tasks and behaviour were performed with Welch’s t test and the chi-square test (χ2). Cohen’s d was used to express effect sizes; it represents the difference between two means divided by a standard deviation for the data. (ii) Performance on the three tasks involving social cognitive processes was analyzed through a mixed analysis of variance performed on the proportion of correct responses. The Greenhouse–Geisser sphericity correction was applied. Partial eta-squared (η2p) coefficients were used to express effect sizes. Multiple comparisons were conducted with Bonferroni-corrected Welch’s t tests, and Cohen’s d was used to express effect sizes. (iii) The comparison of performance in these three tasks to chance level was conducted with Welch’s t tests, and Cohen’s d was used to express effect sizes. All three abovementioned statistical analyses were performed with JASP software, version 0.8.1.2 (JASP Team, 2017), and actual power (provided a power of 80% to detect the effect) was computed with the G*Power 3.1 freeware. (iv) The analysis of error patterns was conducted using resampling statistics. Because of the large number of expected response/given response combinations in the analysis of errors (N = 30), the number of cells that could have contained values of 0 was very high. The assumptions of parametric tests were, therefore, difficult to meet, so the error patterns were analyzed with resampling statistics [37]. Permutation tests were conducted, allowing the comparison of the error patterns of the patient and the control groups in each of the three tasks that assessed social cognition. The computation of the statistical probability was based on 5000 permutations for each task. These analyses were conducted with Microsoft Excel (2011) and a lab-made program. Permutation tests are robust and have advantages beyond their good performance with data of the kind explored here. They give exact statistical significance directly from the data being analyzed, and all irregularities of the observed data are maintained in the permuted datasets and are included in the estimation of the statistical probability. Of most interest is that they constitute powerful alternatives to more common corrections (e.g. Bonferroni procedures) for cases in which multiple comparisons are needed [38]. Since corrections are not needed, permutation tests are recommended in studies involving multiple statistical tests [39]. (v) Correlation analyses were conducted with the Spearman ρ coefficient for each group independently. When a significant correlation was found in one group between the tasks of social cognition and the remaining cognitive tasks and behavioural scales, the difference in correlations between the two groups was assessed with Steiger’s test [40] to determine whether there was a group difference.
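The logic of a two-group permutation test of the kind described in (iv) can be sketched as follows. This is a generic illustration, not the lab-made program used in the study: the function name, the choice of the absolute difference in group means as the test statistic, the add-one correction and the fixed random seed are all assumptions introduced here.

```python
import random


def permutation_test(patients, controls, n_perm=5000, seed=0):
    """Two-sided permutation test on the difference in group means.

    `patients` and `controls` hold one value per participant, for example the
    proportion of trials on which a given confusion (sadness -> fear) occurred.
    """
    rng = random.Random(seed)
    n_pat = len(patients)
    pooled = list(patients) + list(controls)

    def mean_diff(values):
        # First n_pat entries play the role of patients, the rest of controls.
        return (sum(values[:n_pat]) / n_pat
                - sum(values[n_pat:]) / (len(values) - n_pat))

    observed = abs(mean_diff(pooled))
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign group labels at random
        # Small tolerance guards against floating-point ties.
        if abs(mean_diff(pooled)) >= observed - 1e-12:
            extreme += 1
    # Add-one correction keeps the estimated p value strictly above zero.
    return (extreme + 1) / (n_perm + 1)
```

Running the function once per expected response/given response cell, with one value per participant, would yield one p value per cell, estimated here from 5000 random relabellings as in the text.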

Results

Participants’ characteristics

No difference was found in age. A marked difference was, however, found regarding the IQ levels with patients scoring lower than controls. Finally, no group difference was found in terms of the percentages of boys and girls, but boys outnumbered girls in both groups. These demographic characteristics are presented in Table 1.

Cognitive testing

Patients performed worse than controls in all cognitive tests. As far as attention processes were concerned, patients made more errors than controls in the Sky Search test of selective attention. The proportion of errors was also greater for patients in the Overlapping lines test. As far as visual spatial perception was concerned, a similar pattern was found. The proportion of errors of patients was greater than that of the controls in the Arrows test. The proportion of errors was also greater for patients in the Orientation test. These data are presented in Table 1.

Parent-rated behaviour

Behavioural problems, as assessed through the ABC-C, were consistently greater for patients than for controls. Such differences were found for irritability, agitation and crying, lethargy/social withdrawal, stereotypic behaviour, hyperactivity/noncompliance, and inappropriate speech. Furthermore, problems with self-esteem, as assessed through the MDI-C, were greater in patients than in controls. However, aggressive behaviour was not found to be more frequent in patients (M = 12.0, SD = 4.9) than in controls. These data are presented in Table 1.

Comparison between the control and 22q11.2DS groups in tasks of social cognition

To examine the differences in the performance of the tests of emotional perception and social cognition, a mixed analysis of variance was performed on the proportion of correct responses, with the task (facial expression recognition, face-cartoon matching and face-prosody matching) and emotion (happiness, surprise, sadness, anger, fear and disgust) as within-subjects factors and the group (patients vs. controls) as the between-subjects factor. Since the IQ levels were found to be different between the two groups, since boys outnumbered girls in both groups, and since developmental changes may occur within the age range of our samples, IQ, age and gender were used as covariate values to control for their effects on performance. Here, we report only reliable effects that involved the group. The main effect of group was revealed to be significant [F(1,33) = 11.14, p = 0.002, η2p = 0.25], with the overall proportion of correct responses being lower for the patients (M = 0.58, SD = 0.14) than for the controls (M = 0.82, SD = 0.1). Among the other effects, only the group × task interaction was revealed to be significant [F(2,66) = 3.40, p = 0.044, η2p = 0.09; Fig. 3], with the IQ level, age and gender explaining as much as 8% of the variance altogether [without IQ, age and gender as covariates, the group × task interaction was F(2,72) = 7.1, p = 0.002, η2p = 0.17]. Bonferroni-corrected multiple comparisons (cutoff level of significance p = 0.006) revealed that patients scored lower than the controls in the facial expression recognition task [t(36) = 4.4, p = 0.0001, d = 1.48; actual power 92.3%], the face-cartoon-matching task [t(36) = 4.15, p = 0.0002, d = 1.38; actual power 89.6%], and the face-prosody-matching task [t(36) = 6.4, p = 0.00001, d = 2.14; actual power 99.3%].

Each group was further analyzed individually. Bonferroni-corrected multiple comparisons (cutoff level of significance p = 0.006) showed that in the control group, the proportion of correct responses was higher in the facial expression recognition task (M = 0.92, SD = 0.10) than in the face-cartoon-matching task [M = 0.74, SD = 0.13; t(21) = 6.2, p = 0.001, d = 1.31; actual power 98.1%] and the face-prosody-matching task [M = 0.81, SD = 0.13; t(21) = 3.5, p = 0.006, d = 0.75; actual power 78.5%]. However, no difference was observed between the last two tasks [t(21) = 2.2, p = 0.13, d = 0.46]. A similar pattern was observed in the patient group. The proportion of correct responses was higher in the facial expression recognition task (M = 0.75, SD = 0.13) than in the face-cartoon-matching task [M = 0.53, SD = 0.17; t(15) = 6.7, p = 0.001, d = 1.7; actual power 98.5%] and the face-prosody-matching task [M = 0.45, SD = 0.19; t(15) = 7.4, p = 0.001, d = 1.9; actual power 99.2%]. No difference was observed between the last two tasks [t(15) = 1.5, p = 0.44, d = 0.38]. As seen in Fig. 3, the sharper decline in performance between the facial expression recognition task and the other two tasks in the patient group than in the controls drives the group × task interaction. Patients did not perform well in identifying the emotions in isolation (facial expression recognition task), but they performed even worse in context (face-cartoon and face-prosody tasks).

Of most interest was that, despite each patient’s performance being weaker than the performance of controls, it was always higher than chance level (since in each task, six responses were possible, the chance-level proportion was 0.166; Fig. 3). This was found to be the case in the facial expression recognition task [t(15) = 17.9, p = 0.001, d = 4.5; actual power 99.9%], the face-cartoon-matching task [t(15) = 8.5, p = 0.001, d = 2.1; actual power 99.6%] and the face-prosody-matching task [t(15) = 6.1, p = 0.001, d = 1.5; actual power 97.0%]. These results suggest that, despite their difficulties in social cognition, the patients did not respond randomly.
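The comparison with chance level can be approximately reproduced from the summary statistics reported above. The sketch below is a minimal illustration using the textbook one-sample formulas; the function and variable names are hypothetical, and because the inputs are the rounded means and SDs from the text, the outputs can differ slightly from the published values.

```python
import math


def one_sample_from_summary(mean, sd, n, mu0):
    """Return (t, d) for a one-sample t test computed from summary statistics."""
    d = (mean - mu0) / sd   # Cohen's d: standardized distance from chance
    t = d * math.sqrt(n)    # same as (mean - mu0) / (sd / sqrt(n)), df = n - 1
    return t, d


CHANCE = 1 / 6  # six response options per trial

# Patient-group means and SDs as reported in the text (n = 16).
patient_summary = {
    "facial expression recognition": (0.75, 0.13),
    "face-cartoon matching": (0.53, 0.17),
    "face-prosody matching": (0.45, 0.19),
}

if __name__ == "__main__":
    for task, (m, sd) in patient_summary.items():
        t, d = one_sample_from_summary(m, sd, 16, CHANCE)
        print(f"{task}: t(15) = {t:.1f}, d = {d:.1f}")
```

The effect sizes here are large because they measure distance from chance rather than from the control group, so they should not be compared with the between-group values of d reported earlier.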

Correlations between cognitive performance, behaviour and the tasks of social cognition

Correlations were conducted separately for each of the two groups. To this end, the IQ level, the selective attention score from the Sky Search task, and the proportion of errors in the Overlapping lines task, the Arrows task and the Orientation task were used for assessing the relationship with cognition. The scores in the five subscales of the ABC-C, the scores of the MDI-C and the aggression scale were used for assessing relations with behaviour. Finally, since the mixed ANOVA did not reveal any specific effect or interaction involving the six emotions, only the mean proportion of correct responses was considered for each of the three tasks of social cognition. After Bonferroni correction (cutoff level of significance p = 0.0014), the only significant finding was a negative correlation between the Arrows task of visual spatial perception and the mean proportion of correct responses in the face-prosody task (ρ = − 0.71) for the control group. This correlation was not significant in the patient group (ρ = − 0.43). However, the difference between the two correlations was not significant (z = 0.72, p = 0.24 two-tailed), suggesting that the apparent difference between the groups is artifactual.
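A Bonferroni cutoff is the family-wise α divided by the number of tests. The number of tests is not stated in the text; the sketch below assumes 12 measures (IQ, four cognitive scores, five ABC-C subscales, the MDI-C and the aggression scale) crossed with the three social cognition tasks, i.e. 36 tests, and a family-wise α of 0.05. Under these assumptions the result rounds to the cutoff reported above.

```python
def bonferroni_cutoff(alpha, n_tests):
    """Per-test significance threshold under Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be a positive integer")
    return alpha / n_tests


if __name__ == "__main__":
    # Assumed, not stated in the text: 12 measures x 3 social cognition tasks.
    n_measures, n_tasks = 12, 3
    cutoff = bonferroni_cutoff(0.05, n_measures * n_tasks)
    print(f"{cutoff:.4f}")
```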

Error patterns in the facial expression recognition task

The proportions of errors for each expected response/given response combination for each group and the results of the comparison between the two groups are presented in Table 2. Patients misrecognized three facial expressions more frequently than controls: sadness was misrecognized as fear (p = 0.01), surprise as happiness (p = 0.05), and disgust as surprise (p = 0.045).

Error patterns in the face-cartoon-matching task

Patients miscomprehended the nature of several situations depicted in the presented scenes more frequently than controls. First, situations involving sadness, surprise and fear were all misinterpreted as involving anger (ps = 0.014, 0.049 and 0.011, respectively). Second, situations involving disgust were misinterpreted as involving sadness (p = 0.014) and fear (p = 0.047).

Error patterns in the face-prosody-matching task

Even though, as shown earlier, the face-prosody-matching task was not more difficult than the previous one for either group, the patients mismatched several emotions more frequently than controls. Happiness, sadness, surprise and fear were misinterpreted as being anger (all ps < 0.021). Anger, surprise and disgust were misinterpreted as happiness (all ps < 0.04). Anger and sadness were misinterpreted as disgust (both ps < 0.05). Finally, anger was also misinterpreted as sadness (p = 0.015). Anger was, therefore, the emotion that was most miscomprehended, followed by sadness and surprise. However, anger was also the most frequently given response, just as in the face-cartoon-matching task, followed by happiness.

Correlations between cognitive performance, behaviour and error patterns

As explained earlier, because of the high number of expected response/given response combinations in the analysis of errors, a large number of cells could contain values of 0. For this reason, correlation analyses could not be carried out on each individual error pattern. To make the analyses possible and to limit Type I errors, data were collapsed across the three tasks, and only the error patterns for which significant differences between the two groups had been found were considered. These errors were clustered as a function of the response given by participants (e.g. whether the real stimulus was surprise, anger or disgust, the error was counted as a “happiness response” if the participant responded that he/she perceived happiness). Indeed, it can be assumed that misattribution errors represent the way participants perceived the stimulus and reveal perceptual biases towards one emotion or another. Correlations were computed separately for each of the two groups. As before, the IQ level, the selective attention score from the Sky search task, and the proportion of errors in the Overlapping lines task, the Arrows task and the Orientation task were used to assess the relationship with cognition. The scores in the five subscales of the ABC-C, the scores of the MDI-C and the Aggression scale were used to assess the relationship with behaviour. After Bonferroni correction (cutoff level of significance p = 0.0007), the only significant finding was a negative correlation between the frequency with which a “happiness” response was wrongly given and the score on the Aggression scale (ρ = −0.78) for the patient group. This correlation was not significant in the control group (ρ = −0.12). The difference between the two correlations was significant (z = 2.28, p = 0.01 two-tailed).
This result suggests that the more frequently children/adolescents with 22q11.2DS perceive happiness where there is none (whether on the face, in social contexts or in the voice), the less they exhibit aggressive behaviour. Seemingly, such a regulatory mechanism differentiates 22q11.2DS patients from controls.
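The clustering of errors by given response can be sketched as follows. This is a minimal illustration under stated assumptions: errors are pooled over the three tasks, the retained patterns are treated as (expected, given) pairs regardless of task, and the data, names and set of retained pairs are invented for the example rather than taken from the study.

```python
from collections import Counter


def response_bias_counts(errors_by_task, retained_patterns):
    """Count erroneous responses per given emotion, pooled over tasks.

    errors_by_task: dict mapping task name -> list of (expected, given) errors
    retained_patterns: set of (expected, given) pairs kept for the analysis
    """
    counts = Counter()
    for errors in errors_by_task.values():
        for expected, given in errors:
            if (expected, given) in retained_patterns:
                counts[given] += 1
    return counts


# Illustrative subset of patterns (hypothetical selection).
retained = {
    ("surprise", "happiness"), ("anger", "happiness"), ("disgust", "happiness"),
    ("sadness", "anger"), ("fear", "anger"), ("happiness", "anger"),
    ("sadness", "fear"),
}

# Hypothetical errors made by one participant (NOT the study data).
errors_by_task = {
    "face": [("surprise", "happiness"), ("sadness", "fear"), ("fear", "surprise")],
    "cartoon": [("sadness", "anger"), ("fear", "anger")],
    "prosody": [("anger", "happiness"), ("disgust", "happiness"),
                ("happiness", "anger")],
}

counts = response_bias_counts(errors_by_task, retained)
print(counts["happiness"], counts["anger"], counts["fear"])
```

Each participant then contributes one count (or frequency) per response category, for example a single “happiness response” score, which can be correlated with the cognitive and behavioural measures.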

Discussion

The aim of this study was to assess three components of emotional and social cognitive processing in children with 22q11.2DS compared to healthy children, to unravel the way these children process social cognitive information, and to identify differences among the components of social cognition. A secondary aim was to assess the relationship between some neurocognitive processes, social cognition and behaviour.

Overall performance

In the present study, patients with 22q11.2DS presented with significant impairments in the processing of emotional and social information compared to healthy controls, namely in recognizing facial expressions, interpreting visually depicted social interactions and interpreting emotional prosody in dialogues. Impairment in the recognition of emotional facial expressions has already been demonstrated in the literature [2], but our study is the first to highlight deficits in attributing emotions both to characters involved in visually presented social interactions and to characters in aurally presented dialogues. This suggests that social cognitive processing in 22q11.2DS is far more complex than just perceiving and recognizing isolated features on faces. Our patients performed even worse in the cartoon and prosody tasks than in the face recognition task. These two tasks are more complex, since they require selecting multiple relevant cues and integrating them with acquired knowledge [18] to form a globally coherent image of what occurs and what the characters’ emotional experiences are in each trial. It may be suggested that in attempting to perceive and interpret the nature of social interactions, through either visual or auditory cues, patients with 22q11.2DS have great difficulty and frequently misinterpret what happens. Another, complementary interpretation is that the drop in performance in the two more complex tasks could result from limitations in the processing of multiple target characters. Indeed, both the face-cartoon- and the face-prosody-matching tasks involve several characters and also several pieces of secondary information to take into account. The multiplicity of target characters and information may exceed the processing capacity of patients and result in decreased performance.
Of most interest was that all the above-mentioned observations were made even after having statistically controlled for the impact of age, gender and IQ level, suggesting that these patients face genuine social cognitive impairments that are independent from global cognitive efficiency. Furthermore, correlational analyses failed to find any relationship between performance in the three tasks of social cognition and more basic visual perceptual and attentional processes, suggesting that the involvement of such mechanisms in social information processing in 22q11.2DS may be limited.

Facial expression recognition

The analysis of the proportion of correct responses did not reveal any differential impairment in recognizing facial expressions as a function of the emotion assessed, as suggested in the extant literature [7, 8, 10, 11, 41]; rather, we found a more general impairment in 22q11.2DS compared to controls. However, the analysis of error patterns showed that 22q11.2DS patients misinterpreted three facial expressions more frequently than controls: sadness was mistaken for fear, surprise for happiness, and disgust for surprise. These confusions seem to relate to the visual details of the faces, such as the mouth width and the angle of the eyes and eyebrows (when sadness was confused with fear), the configuration of the mouth (between surprise and happiness), and the configuration of the eyes and the eyebrows (when disgust was misrecognized as surprise). However, this is only a hypothesis, and the present study does not offer any data to support it directly. Even though we did not use eye-tracking techniques in the present study, our findings do not support the assumption of an overall impaired pattern of face exploration, as has been proposed by some authors [8, 9, 12, 13], since this would result in a rather widespread and irregular pattern of errors. Rather, the confusion is probably due to subtle visual details and could relate to perceptual or attentional impairments other than in visual scanning [13]. Yet, our study failed to find any relationship between perceptual or attentional impairments and recognition of emotions. Thus, it is not yet clear whether, how and to what degree specific cognitive impairments, such as those in visual perception and processing, influence facial expression recognition in 22q11.2DS.

Face-cartoon matching

The face-cartoon-matching task requires understanding and interpretation of visual scenes involving one of the six target emotions. This task encompasses more complex social cognitive processes than the facial expression recognition task, and thus involves the emotional dimension of ToM, also called the affective theory of mind [15]. This ability seems to be disordered in children and adolescents with 22q11.2DS [1, 6, 23, 42, 43], and our results confirm this. A recent study [1] suggested that such difficulties might be attributed to other abilities, like inferring the sequence of events when responding. Our results cannot be attributed to such processes, since the face-cartoon-matching task was designed to decrease the involvement of such sequencing abilities as much as possible. Furthermore, the fact that the difference between the two groups remained after controlling for IQ level suggests that there is a genuine deficit in the specific social cognitive processes involved.

The analysis of error patterns revealed that, compared to controls, 22q11.2DS patients more frequently misinterpreted emotional situations involving sadness, surprise and fear as involving anger, and situations involving disgust as involving sadness or fear. The first category of confusion indicates that children with 22q11.2DS tend to use a simple attractive/repulsive (or happy/angry) dichotomy to interpret social situations. In typically developing children, emotion recognition improves with age. In a study focused on children from 4 to 11 years old, Chronaki et al. [44] showed that sadness recognition was delayed across development relative to anger and happiness. Our results may indicate that patients with 22q11.2DS have a delay in the ability to accurately differentiate specific emotions when they have to understand complex social scenes [6, 45]. The use of the face-cartoon-matching task in a longitudinal study or a cross-sectional study across age groups would provide important insights into the developmental delays in 22q11.2DS.

The second category of confusion showed that, compared to controls, 22q11.2DS patients more frequently misinterpreted situations of disgust as involving sadness or fear. According to Reeve [46], negative emotions constitute a global response to threats. More specifically, while disgust constitutes a rejection response to a threat, fear marks a defensive response to the threat, and sadness, which is expressed after the threat, may be considered to result from it. In 22q11.2DS, the confusion of these emotions could be related to difficulties in differentiating specific responses to threats. This is an entirely new finding and should be further investigated to understand what may underlie such a difficulty in differentiating responses to threats.

Face-prosody matching

The face-prosody-matching task assessed the ability to understand the emotion expressed by two characters in their dialogue while ignoring the propositional content of those dialogues. It thus tested prosodic emotion recognition. The results showed that children with 22q11.2DS present with a reliable impairment in matching facial expressions to the vocal emotions expressed through dialogue. In the literature, only one paper reported data concerning the vocal components of emotional processing in a population of 22q11.2DS patients [14]. The results showed no difference between the 22q11.2DS patients and controls in recognizing happiness, sadness, anger and fear through the tone of the voice. In the study by Shashi et al. [14], accuracy for specific emotions was not analyzed, and this may be one reason why such impairment was not found. Furthermore, the authors interpreted the lack of difference between 22q11.2DS patients and healthy children as resulting from a split in the IQ indices in a part of their population of patients, with high rates of non-verbal learning deficits, which would provide a relative strength in verbal abilities and thus a better auditory discrimination of all stimuli, including emotions. The authors also proposed that they might be underpowered to detect differences between the 22q11.2DS and control groups. In the present study, we checked that the participants with 22q11.2DS had both normal ENT assessments and an IQ within the normal range, as assessed by a non-verbal measure [24], but we did not precisely test verbal and non-verbal learning abilities. Furthermore, the results were controlled for IQ level. We are, therefore, confident that the deficits observed here can be attributed to difficulties with social cognitive information processing rather than to IQ or ENT disturbances. 
Although verbal abilities were not involved in the task we used here (since vocal emotions were completely independent from the verbal content of the dialogues), future studies could use a measure of verbal learning to unravel its relationship with emotional voice recognition.

In addition to an overall decreased accuracy compared to the controls, 22q11.2DS patients also displayed some consistent error patterns, suggesting that, however frequent, their confusions were not due to random responding. The analysis of these confusions allowed further examination of this impairment. Compared to the results for the controls, four vocal emotions were more frequently misinterpreted as anger; these were happiness, sadness, surprise and fear. Three others were misinterpreted as happiness: anger, surprise and disgust. Anger and sadness were misinterpreted as disgust. Finally, anger was also misinterpreted as sadness. Once again, such an error pattern is compatible neither with a general impairment nor with a limitation in processing multiple target characters during the task.

Interestingly, the two most frequent responses that gave rise to such confusion involved anger and happiness, and this brings to mind the attractive/repulsive—happy/angry—dichotomy that has been suggested to account for performance in the face-cartoon-matching task. However, it is also of interest that the two least frequent responses (i.e., disgust and sadness) bring to mind the second confusion category found in the face-cartoon-matching task, which entailed difficulties in differentiating specific responses to threats [46].

The observed error patterns suggest that difficulties in understanding and logically reasoning about social situations in 22q11.2DS might be related to two types of disturbances: a tendency to coarsely classify social situations into two opposing categories (attractive/repulsive) and a more specific difficulty in differentiating emotions that are associated with threats. Whether these two types of disturbances are hierarchized or not is difficult to know, even though in both the face-cartoon- and the face-prosody-matching tasks, errors of the first type were more frequent than errors of the second type. The first type of disturbance brings to mind the distinction between the systems of behavioural activation and inhibition in Gray’s [47] reinforcement sensitivity theory. The former is sensitive to reward and produces responses of approach, whilst the latter is triggered by fear and signals of punishment and produces avoidance. This is consistent with the notion that patients with 22q11.2DS think mostly on the basis of cues of reward (i.e., attractive) and punishment (i.e., repulsive) in a coarsely dichotomous fashion. The two systems may affect the shaping and encoding of developmental experiences, such as socialization and understanding of social interactions, and lead to abnormal comprehension of those interactions. The behavioural activation and inhibition systems hypothesis seems to be the most adequate interpretation of the results, and further investigation is necessary to confirm or refine our observations. This also might explain why error patterns in the facial expression recognition task were different, since that specific task does not involve logical social reasoning and interpretation of complex situations.

Social cognition and behaviour

The present study largely failed to find correlations between performance and error patterns in the tasks of social cognition, tasks assessing more basic cognitive processes, and parent-completed scales of everyday behaviour. The literature does not provide any convincing evidence that deficits in social cognition tasks are associated with problematic behaviour in 22q11.2DS. Indeed, social cognitive impairments have been studied in relationship with the emergence of psychotic symptoms in adults [3] and, to our knowledge, only a single study reported an association between social cognition and behaviour in 22q11.2DS [6]. The absence of reliable results in the present study may be due to the severe restrictions imposed by the procedures of statistical correction. However, an isolated finding was that the more frequently 22q11.2DS patients misperceived other emotions as happiness, the lower the degree of aggressive behaviour described by their parents. No such association was found in controls. This may constitute a real difference in the socio-emotional functioning of 22q11.2DS patients, since the degree of aggression was not found to be different between the groups. A study by Penton-Voak et al. [48] demonstrated that experimentally biasing the perception and recognition of emotions towards happiness led to changes in self-reported and independently rated aggressive behaviour in adolescents at high risk of criminal and antisocial behaviour. This is compatible with the above-mentioned result. Our finding may reflect the presence of a spontaneously developed positivity-oriented emotional mechanism that would help regulate some kinds of social interactions in 22q11.2DS. Are we facing the consequence of a behavioural activation system [47] that developed differently so as to shape socialization and understanding of social interactions?
However, this isolated finding may also reflect a false positive, and caution is needed when interpreting it, especially since the association between social cognition and behaviour is not always found in the extant literature.

Limitations

One possible limitation of our study is that it did not use natural stimuli such as photographs or audio recordings of real-life dialogues. The fact that all stimuli were especially designed for this study is instead a strength because it enables the comparison of the three tasks. In addition, it enables controlling for noise introduced by natural stimuli, such as contrast, saliency, complexity, as well as confounds related to facial characteristics that are irrelevant to the emotional facial expression, such as gender, ethnicity, age, attractiveness, moles, wrinkles and freckles. Furthermore, natural stimuli for the face-cartoon- and the face-prosody-matching tasks are impossible to obtain. Finally, all facial expressions used in the present study were exaggerated to better emphasize their main traits, to render them more evocative, and to focus children’s attention on the traits that were relevant for the tasks [33].

Several studies have suggested that impaired facial expression recognition in 22q11.2DS might be due to disordered visual scanpaths [8, 9, 12] or to deficits in perceptual processes [13]. These two accounts are difficult to disentangle, since the former may condition the efficiency of the latter, and vice versa. Although our findings did not support the assumption of a consistently impaired pattern of face exploration, it is difficult to be sure that this was not the case, given that no eye-tracking techniques were used. In addition, whether perceptual processes have an impact that goes beyond face identification is still a subject of investigation, and further studies are required to understand the degree to which such processes contribute to social cognitive impairments. One possible methodological limitation is that, during the training trial of the facial expression recognition task, the experimenter corrected the child’s response if incorrect. This adds a learning aspect to the task that may make it difficult to interpret the results in terms of facial expression recognition. However, this procedure was the best for allowing participants to know the emotion label that goes with each facial expression. Indeed, during the pilot study, it was found that some expressions were known, correctly recognized and associated with specific situations but that their names were not known by all participants.

Finally, we did not assess the contribution of higher executive functions to social cognition impairments. Although this was not within the scope of this study, the fact that the observed patterns of performance were independent of the g-factor (as assessed by Raven’s Coloured Progressive Matrices IQ test) suggests that at least some of these impairments are not dependent on other processes. This aspect should be further investigated using specific protocols, as should the relationship between impairments in emotional processing and symptomatology.

Conclusions

To conclude, this study showed that, compared to control participants, children with 22q11.2DS have significant social cognitive deficits that are independent of age, gender and IQ level. First, our results suggested that 22q11.2DS individuals have impaired emotional facial expression processing compared to their typically developing peers. Even though this impairment could be related both to poor visual exploration patterns, as highlighted by eye-tracking studies [8, 9, 10, 11], and to cognitive skills, such as visual perception and processing [13], our study failed to find such a relationship. Second, 22q11.2DS patients presented with significant difficulties in tasks requiring more complex social cognitive processes, such as affective ToM ability, assessed through the face-cartoon- and the face-prosody-matching tasks. Finally, this is the first time a deficit in interpreting vocal emotions has been shown in 22q11.2DS. However, some studies have suggested that cognitive deficits, notably impaired visual perception and executive control, might explain the impairments in complex social cognitive processes better than specific difficulties with social stimuli in 22q11.2DS [1, 6]. Assessing the role of these functions in social cognitive difficulties was not within the scope of the present study. Instead, the aim was to unravel the way 22q11.2DS patients understand such complex situations and the way they respond. The analysis of error patterns in the face-cartoon- and face-prosody-matching tasks suggested that difficulties in 22q11.2DS might be related not only to a tendency to coarsely categorize social situations as either attractive or repulsive but also to difficulties in differentiating emotions that are associated with threats. Such insights into social cognitive processes, such as the kind of analyses being performed, help identify the specific techniques for remediating social abilities that could be targeted and developed.
For instance, the tendency of 22q11.2DS patients to misperceive some emotions as happiness was related to decreased aggressive behaviour. Experimental studies in other populations suggest that similar effects may be obtained through training and adaptation procedures. This gives hope that remediation procedures can be developed and may even generalize beyond the specific link between perceiving happiness and toning down aggressive behaviour. Future research should not only further explore the relationship between basic cognitive processes and higher order cognition but also focus on the way 22q11.2DS patients process emotional information to understand social contexts, as well as the way these deficits contribute to psychopathology and functioning in daily life. Finally, the fact that the protocol is quite easy for children to complete, despite the possibility that their language abilities might be weak, opens new perspectives on the assessment of social cognition in other pathological conditions.

References

1. Campbell LE, McCabe KL, Melville JL, Strutt PA, Schall U (2015) Social cognition dysfunction in adolescents with 22q11.2 deletion syndrome (velo-cardio-facial syndrome): relationship with executive functioning and social competence/functioning. J Intellect Disabil Res 59:845–859

2. Norkett EM, Lincoln SH, Gonzalez-Heydrich J, D’Angelo EJ (2017) Social cognitive impairment in 22q11 deletion syndrome: a review. Psychiatry Res 253:99–106

3. Jalbrzikowski M, Carter C, Senturk D, Chow C, Hopkins JM, Green MF et al (2012) Social cognition in 22q11.2 microdeletion syndrome: relevance to psychosis? Schizophr Res 142:99–107

4. Penn DL, Sanna LJ, Roberts DL (2008) Social cognition in schizophrenia: an overview. Schizophr Bull 34:408–411

5. Pinkham AE, Penn DL, Green MF, Buck B, Healey K, Harvey PD (2014) The social cognition psychometric evaluation study: results of the expert survey and RAND panel. Schizophr Bull 40:813–823

6. Campbell LE, Stevens AF, McCabe K, Cruickshank L, Morris RG, Murphy DGM et al (2011) Is theory of mind related to social dysfunction and emotional problems in 22q11.2 deletion syndrome (velo-cardio-facial syndrome)? J Neurodev Disord 3:152–161

7. Leleu A, Saucourt G, Rigard C, Chesnoy G, Baudouin J-Y, Rossi M et al (2016) Facial emotion perception by intensity in children and adolescents with 22q11.2 deletion syndrome. Eur Child Adolesc Psychiatry 25:297–310

8. Campbell L, McCabe K, Leadbeater K, Schall U, Loughland C, Rich D (2010) Visual scanning of faces in 22q11.2 deletion syndrome: attention to the mouth or the eyes? Psychiatry Res 177:211–215

9. Franchini M, Schaer M, Glaser B, Kott-Radecka M, Debbané M, Schneider M et al (2016) Visual processing of emotional dynamic faces in 22q11.2 deletion syndrome. J Intellect Disabil Res. https://doi.org/10.1111/jir.12250

10. McCabe KL, Melville JL, Rich D, Strutt PA, Cooper G, Loughland CM et al (2013) Divergent patterns of social cognition performance in autism and 22q11.2 deletion syndrome (22q11DS). J Autism Dev Disord 43:1926–1934

11. McCabe K, Rich D, Loughland CM, Schall U, Campbell LE (2011) Visual scanpath abnormalities in 22q11.2 deletion syndrome: is this a face specific deficit? Psychiatry Res 189:292–298

12. Glaser B, Debbané M, Ottet M-C, Vuilleumier P, Zesiger P, Antonarakis SE et al (2010) Eye gaze during face processing in children and adolescents with 22q11.2 deletion syndrome. J Am Acad Child Adolesc Psychiatry 49:665–674

13. McCabe KL, Marlin S, Cooper G, Morris R, Schall U, Murphy DG et al (2016) Visual perception and processing in children with 22q11.2 deletion syndrome: associations with social cognition measures of face identity and emotion recognition. J Neurodev Disord 8:30

14. Shashi V, Veerapandiyan A, Schoch K, Kwapil T, Keshavan M, Ip E et al (2011) Social skills and associated psychopathology in children with chromosome 22q11.2 deletion syndrome: implications for interventions. J Intellect Disabil Res 56:865–878

15. Shamay-Tsoory SG, Aharon-Peretz J (2007) Dissociable prefrontal networks for cognitive and affective theory of mind: a lesion study. Neuropsychologia 45:3054–3067

16. Tager-Flusberg H, Sullivan K (2000) A componential view of theory of mind: evidence from Williams syndrome. Cognition 76:59–90

17. Kolb B, Wilson B, Taylor L (1992) Developmental changes in the recognition and comprehension of facial expression: implications for frontal lobe function. Brain Cogn 20:74–84

18. Crick NR, Dodge KA (1994) A review and reformulation of social information-processing mechanisms in children's social adjustment. Psychol Bull 115:74–101

19. Scherer K (2003) Vocal communication of emotion: a review of research paradigms. Speech Commun 40:227–256

20. Mucci A, Galderisi S, Rossi A, Rocca P, Bertolino A, Bucci P et al (2016) Relationships between neurocognition, social cognition and functional outcome in schizophrenia. Eur Psychiatry Suppl 33:S67

21. Fett AK, Viechtbauer W, Dominguez M, Penn DL, van Os J, Krabbendam L (2011) The relationship between neurocognition and social cognition with functional outcomes in schizophrenia: a meta-analysis. Neurosci Biobehav Rev 35:573–588

22. Schmidt SJ, Mueller DR, Roder V (2011) Social cognition as a mediator variable between neurocognition and functional outcome in schizophrenia: empirical review and new results by structural equation modeling. Schizophr Bull 37(2):S41–S54

23. Badoud D, Schneider M, Menghetti S, Glaser B, Debbané M, Eliez S (2017) Understanding others: a pilot investigation of cognitive and affective facets of social cognition in patients with 22q11.2 deletion syndrome (22q11DS). J Neurodev Disord 9:35

24. John RJ (2003) Raven progressive matrices. In: McCallum R (ed) Handbook of nonverbal assessment. Springer US, Boston, pp 223–237

25. Manly T, Robertson I, Anderson V, Nimmo-Smith I (2004) TEA-Ch Test d’évaluation de l’attention chez l’enfant. ECPA, Paris

26. Rey A (1964) L'examen clinique en psychologie. PUF, coll. Le psychologue, Paris

27. Korkman M, Kirk U, Kemp S (2007) NEPSY-II: a developmental neuropsychological assessment, 2nd edn. Harcourt Assessment, San Antonio

28. Korkman M, Kirk U, Kemp S (2012) NEPSY-II Bilan neuropsychologique de l’enfant 2nde édition. ECPA, Paris

29. Aman M, Singh N (1994) Aberrant Behavior Checklist French (European). Questionnaire sur les comportements anormaux version pour les sujets non-institutionalisés. Slosson Educational Publication, East Aurora

30. Dodge KA, Coie JD (1987) Social-information-processing factors in reactive and proactive aggression in children's peer groups. J Pers Soc Psychol 53:1146–1158

31. Poulin F, Boivin M (2000) Reactive and proactive aggression: evidence of a two-factor model. Psychol Assess 12:115–122

32. Berndt DJ, Kaiser CF (1999) Manuel: Echelle composite de dépression pour enfant (MDI-C). ECPA, Paris

33. Brechet C (2017) Children's recognition of emotional facial expressions through photographs and drawings. J Genet Psychol 178:139–146

34. MacDonald PM, Kirkpatrick SW, Sullivan LA (1996) Schematic drawings of facial expressions for emotion recognition and interpretation by preschool-aged children. Genet Soc Gen Psychol Monogr 122:373–388

35. Ekman P, Friesen WV (1971) Constants across cultures in the face and emotion. J Pers Soc Psychol 17:124–129

36. Ross ED, Thompson RD, Yenkosky J (1997) Lateralization of affective prosody in brain and the callosal integration of hemispheric language functions. Brain Lang 56:27–54

37. Dugard P, File P, Todman J, Todman JB (2012) Single-case and small-n experimental designs: a practical guide to randomization tests. Routledge Academic, New York

38. Sham PC, Purcell SM (2014) Statistical power and significance testing in large-scale genetic studies. Nat Rev Genet 15:335–346

39. Camargo A, Azuaje F, Wang H, Zheng H (2008) Permutation-based statistical tests for multiple hypotheses. Source Code Biol Med 3:15

40. Steiger JH (1980) Tests for comparing elements of a correlation matrix. Psychol Bull 87:245–251

41. Jalbrzikowski M, Villalon-Reina JE, Karlsgodt KH, Senturk D, Chow C, Thompson PM et al (2014) Altered white matter microstructure is associated with social cognition and psychotic symptoms in 22q11.2 microdeletion syndrome. Front Behav Neurosci 8:393

42. Ho JS, Radoeva PD, Jalbrzikowski M, Chow C, Hopkins J, Tran W-C et al (2012) Deficits in mental state attributions in individuals with 22q11.2 deletion syndrome (Velo-Cardio-facial syndrome). Autism Res 5:407–418

43. Angkustsiri K, Goodlin-Jones B, Deprey L, Brahmbhatt K, Harris S, Simon TJ (2013) Social impairments in chromosome 22q11.2 deletion syndrome (22q11.2DS): autism spectrum disorder or a different endophenotype? J Autism Dev Disord 44:739–746

44. Chronaki G, Garner M, Hadwin JA, Thompson MJ, Chin CY, Sonuga-Barke EJ (2015) Emotion-recognition abilities and behavior problem dimensions in preschoolers: evidence for a specific role for childhood hyperactivity. Child Neuropsychol 21:25–40

45. Gur RE, Yi JJ, McDonald-McGinn DM, Tang SX, Calkins ME, Whinna D et al (2014) Neurocognitive development in 22q11.2 deletion syndrome: comparison with youth having developmental delay and medical comorbidities. Mol Psychiatry 19:1205–1211

46. Reeve J (2014) Understanding motivation and emotion, 6th edn. Wiley, Hoboken

47. Gray JA (1987) The psychology of fear and stress, 2nd edn. Cambridge University Press, Cambridge

48. Penton-Voak IS, Thomas J, Gage SH, McMurran M, McDonald S, Munafò MR (2013) Increasing recognition of happiness in ambiguous facial expressions reduces anger and aggressive behaviour. Psychol Sci 24:688–697

Acknowledgements

This study benefited from funding support from the LABEX CORTEX (ANR-11-LABX-0042) of the University of Lyon, under the “Investissements d’Avenir” programme (ANR-11-IDEX-0007) run by the French National Research Agency (ANR). The authors are grateful to Maité Delgado for the production and adaptation of all stimuli of the three emotion tasks used in the present study. Many thanks to Margaux Vurpas for preparing and conducting the pilot study.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The study was conducted in accordance with the Declaration of Helsinki and was approved by the local Ethics Committee (CPP Lyon-Sud Est IV, No. 15/041; ANSM, No. 2017-A00881-52; NCT03284060).

Informed consent

Informed written consent was obtained from all children and from their parents (or legal guardians).

Data repository

The data can be found at https://recherche.univ-lyon2.fr/etmeco/data/Peyroux-22q11-Data.zip.

About this article

Cite this article

Peyroux, E., Babinet, MN., Cannarsa, C. et al. What do error patterns in processing facial expressions, social interaction scenes and vocal prosody tell us about the way social cognition works in children with 22q11.2DS? Eur Child Adolesc Psychiatry 29, 299–313 (2020). https://doi.org/10.1007/s00787-019-01345-1

DOI: https://doi.org/10.1007/s00787-019-01345-1