Abstract

This study explores the impact of conversational AI agents on user perceptions on e-tail platforms, motivated by the growing significance of digital commerce and the need to understand how users interact with emerging technologies. E-tail, short for electronic retail, encompasses the online buying and selling of goods and services. Methodologically, the research draws on a sample of 158 participants and uses the Technology Acceptance Model (TAM) as its theoretical framework. TAM, known for its emphasis on perceived usefulness and perceived ease of use, provides a robust lens for examining user acceptance of new technologies. The study finds that social presence significantly influences user attitudes, particularly in interactions with anthropomorphized AI agents, and that transparent agent recommendations positively influence trusting beliefs. However, persistent concerns about data privacy underscore the need for stronger protective measures. The study contributes by explaining the dynamics among user acceptance, social presence, and trust for conversational AI agents on e-tail platforms, offering insights for both academic and industry stakeholders navigating digital transformation.


1 Introduction

Artificial intelligence (AI) has emerged as a transformative force in e-commerce, with the power to reshape how we shop and interact with technology [1, 2]. As technology pushes the boundaries of e-tail (online retail), businesses find themselves racing to keep pace, adapting to new trends and innovations as they emerge [3]. E-tailing, or e-retailing, refers to the electronic commerce model that enables users to purchase goods or services directly from a seller over the Internet through a web browser [4]. E-commerce, by contrast, is a general term for any commercial activity conducted electronically [5]. According to recent reports, conversational AI can improve service for e-tail users by responding quickly and accurately to their enquiries, lowering customer service wait times, and increasing the number of users that customer support can handle [6,7,8]. Another report projects that the global market for AI-powered e-commerce solutions will reach $16.8 billion by 2030 and that AI will handle 80% of all customer interactions [9].

The advent of e-tail websites has revolutionized the way users shop online, and conversational AI agents have become an integral part of the online shopping experience [10, 11]. In recent years, conversational AI agents have emerged as a popular tool for providing personalized recommendations and information to users on e-tail platforms [12, 13]. Conversational artificial intelligence (CAI) agents are computer programs and systems designed to engage in natural language conversations by incorporating machine learning (ML), virtual reality (VR), and natural language processing (NLP) to understand and generate human-like text or speech responses [14, 15]. These agents provide recommendations based on users’ past behaviour, preferences, and contextual information [16, 17]. The integration of conversational AI agents into e-tail settings is a testament to the dynamic evolution of technology in reshaping human–computer interaction. Such agents aim to replicate not only the functionality but also the social dimensions of a personal shopping assistant [18, 19]. As the importance of these agents in e-tail continues to grow, it becomes paramount to explore the broader implications of their social presence in order to harness their benefits.

In the context of conversational AI agents, “social presence” describes the degree to which users perceive these agents as social entities, almost as if they were engaging with another human being [20, 21]. With their increasingly human-like features, mannerisms, and conversational patterns, these agents evoke the dynamics of human-to-human interaction [22]. Yet as AI blurs the line between technology and humanity, it raises questions about how users perceive and accept these agents. The concept of social presence adds a new twist: technology is no longer just a tool for communication but becomes a companion that actively shapes the experiences it facilitates. Prior research has highlighted the transformative potential of conversational AI agents in the e-tail domain [23, 24], indicating that they improve user engagement and sales by providing a more natural, human-like shopping experience enhanced by expert recommendations [25, 26].

While much of the focus has been on technical advancements, the human side of AI adoption is equally critical. Despite the potential of conversational AI agents to enhance e-tail user experiences, the nuances of social presence, user perceptions, and trust in this context remain poorly understood. Although these agents aim to replicate human-like interactions, users may still harbor reservations and scepticism, which can hinder broader acceptance and adoption. This gap underscores the need to investigate the interplay between anthropomorphism, technology, and human behaviour in shaping positive user perceptions and fostering trust in conversational AI agents on e-tail platforms [27, 28]. Addressing it is vital for integrating these agents effectively across online domains [29]. Prior studies have examined the technical efficiency and operational benefits of such agents, but little attention has been paid to how users perceive them and what drives their acceptance or rejection. Our research therefore aims to understand the psychological and behavioural impact of AI-driven agents on e-commerce users and, by exploring these human factors, to shed light on the broader implications of conversational AI in online retail environments. In addition, the research extends the Technology Acceptance Model (TAM) by incorporating variables relevant to the adoption of conversational AI agents, contributing to the theoretical understanding of technology acceptance in e-commerce. TAM was chosen as the theoretical framework because of its long history and its adaptability to variables that are integral to technology adoption [30,31,32,33].

Trust and social presence were included in the model because of their established significance in technology adoption and online interactions. The subsequent sections outline the foundational concepts that underpin our study, describe the methodology, present the results, and discuss their implications.

2 Background and hypothesis development

2.1 Conversational AI agents and anthropomorphism

A conversational agent is a computer system designed to mimic human-like conversation using natural language, either by voice or in text [14, 34]. These agents appear in an array of applications and perform diverse functions, such as customer support [35, 36], education [37], e-tail [38], healthcare [39], fintech [40], and retail [41]. Conversational agents offer easy interfaces, are available 24 h a day, deliver rapid replies, operate across channels (omnichannel), and can take part in human-like discussions [42, 43]. They have a significant influence on electronic businesses. Leading technology providers, including IBM with its Watson Assistant [44] and Soul Machines with digital avatars such as Roman (Fig. 1), supply conversational AI agents that e-tailers increasingly outsource. This approach lets e-tailers draw on specialized expertise and integrate advanced conversational agents, reflecting a broader trend of leveraging external capabilities for technological solutions.

Input can be provided to agents through gestures (visual cues), voice (spoken cues), and natural written language (linguistic cues) [45,46,47]. Previous studies on conversational AI agents have largely focused on their technical performance and operational efficiency, documenting the speed and accuracy with which agents handle customer queries and their ability to scale customer service operations. However, these studies have often overlooked the psychological and social dynamics that play a crucial role in user acceptance.

Anthropomorphism is the attribution of human-like qualities to non-human objects [48,49,50]. In line with the Computers Are Social Actors (CASA) paradigm [51,52,53], when conversational agents display anthropomorphic cues (e.g., a name, a gender, or typing emulation), users react to them as if they were human beings [54].

2.2 Technology acceptance model (TAM)

The Technology Acceptance Model (TAM) has gained widespread recognition as the leading framework for understanding technology adoption, particularly for assessing the acceptance of new technologies [33, 55, 56]. TAM's core premise is that users make rational decisions about how to engage with a given technology. These decisions hinge on two primary factors, perceived usefulness (PU) and perceived ease of use (PEOU), which together shape user attitudes and behaviours [57, 58]. Given its robust approach to analysing technology adoption, TAM serves as the central framework guiding this study.

TAM has become a cornerstone for understanding the factors that influence technology acceptance. Its strengths lie in its consistent measurement techniques, empirical reliability, and conceptual simplicity [24, 59, 60]. TAM has also proven versatile, explaining a significant portion of the variance in users' intentions to use technology [61,62,63]. Its use across numerous studies has produced a robust set of questions and metrics for evaluating technology adoption. However, while TAM is a reliable framework, additional factors specific to the technology being examined must be considered to capture the full dynamics of its adoption, so that the resulting insights are accurate and relevant to that technology.

2.3 The effect of humanoid embodiment on social presence

Humanoid embodiment refers to the degree to which a conversational AI agent resembles a human in appearance, voice, and behaviour [47, 64]. Previous research suggests that humanoid embodiment can enhance the perceived social presence of conversational AI agents, that is, the feeling of being in a social interaction with another intelligent being [65,66,67]. As conversational AI agents become more humanlike, they elicit more socially desirable responses from users [54]. The physical embodiment of humanoid robots can also have social effects on humans, such as enhancing their attention and memory and reducing their anxiety and boredom [68, 69]. Together, these studies suggest that humanoid embodiment positively influences perceived social presence in conversational AI agents. Hence, we hypothesize:

H1: Humanoid embodiment positively influences perceived social presence in conversational AI agents.

Conversational AI agents can increase users' sense of social presence by offering a more personal, human-like interaction [70, 71]. Users are more likely to perceive an agent as social when it uses natural language, provides personalized recommendations, and has a more human-like personality [72, 73]. Conversational AI agents with higher social presence achieve greater user acceptance and stronger behavioural intentions to adopt them [74].

Thus, it is hypothesized:

H2: Perceived social presence positively influences users' attitudes toward conversational AI agents.

2.4 Effects of transparency and data privacy on trusting beliefs

Recent research underscores the pivotal role of transparency about user data collection in fostering trusting beliefs in conversational AI agents [75]. Users are more likely to trust recommendations when they clearly understand how the recommendations are generated and personalized [76, 77]. Similarly, when AI agents give users insight into the decision-making processes behind their recommendations, users are more inclined to trust the agents' suggestions [78, 79]. Collectively, these studies identify transparency as a key element in building trust between users and conversational AI agents and in improving user experience with, and acceptance of, AI-driven recommendations.

Thus, we hypothesize:

H3: Transparency in recommendations positively influences users' trusting beliefs in conversational AI agents.

Data privacy is introduced as a key variable because it affects users' sense of safety online [80, 81]. Data privacy risk must be considered when examining online hazards, since technological advancement has raised security concerns about online identity theft and the exploitation of user information [82, 83]. Privacy risk is also a barrier to the adoption of conversational AI agents because users do not have complete control over their information and worry that their personal information will be sold to other parties. Users are more inclined to trust AI agents when strong data privacy safeguards are in place [84, 85] and exhibit greater trust when they believe their data is secure and strict privacy measures are enforced [86].

Hence, we hypothesize:

H4: Data privacy protection positively influences users' trusting beliefs in conversational AI agents.

2.5 Trusting beliefs

From an e-tailer’s standpoint, trust can be defined as “the subjective probability by which the users expect that a website will perform a given transaction by their confident expectation” [87]. Users bear a certain risk while shopping online due to the nature of internet shopping [88]. When users are faced with unknown risks, trust can help them overcome this dilemma [89, 90]. In the absence of risk, trust becomes less crucial. Risk introduces a degree of unpredictability to the purchasing process, making trust an important tool for managing uncertainty and ensuring a smooth transaction [91, 92].

Researchers have investigated the crucial function of trust in online interactions and purchasing behaviour, emphasizing its capacity to yield the favorable outcomes users anticipate [93, 94]. Trust has been incorporated into the TAM framework in numerous ways, and previous research has found that trust is an antecedent of users' attitudes [95, 96].

Hence, it is hypothesized:

H5: Users' trusting beliefs positively influence users' attitudes toward conversational AI agents.

2.6 The interplay of perceived usefulness and perceived ease of use

Perceived usefulness is defined as “the extent to which individuals believe that using the new technology will enhance their task performance” [97, 98]. Usefulness is critical to a technology's acceptability, and this notion has been extended to the adoption of, or willingness to adopt, conversational AI agents: research shows that usefulness has a significant positive effect on behavioural intention to use AI agents [99, 100]. Empirical evidence from prior studies in the technology domain likewise indicates that perceived usefulness plays a crucial role in promoting the adoption and use of a specific technology [101, 102]. It is therefore reasonable to investigate the influence of perceived usefulness on user attitudes toward conversational AI agents, whose value lies in the personalization, social presence, expert suggestions, adaptability, and convenience they can provide. As a result, it is hypothesized:

H6: Perceived usefulness positively influences users' attitudes towards conversational AI agents.

The perceived ease of use of a technology is defined as “the degree to which an individual believes that using a particular technology will be free of mental effort” [33]. Ease of use is an indicator of a technology's acceptability: users readily embrace technology that is easy to understand and use.

Studies indicate that technology users often have preconceived expectations regarding the ease or difficulty of utilizing a certain technology [103,104,105]. To understand users’ expectations, researchers need to explore their perceptions of ease of use. Studies indicate that ease of use plays a pivotal role in shaping users’ views on technological inventions, highlighting its importance as a factor to consider in research on user behaviour [106,107,108].

In TAM, perceived ease of use directly influences perceived usefulness: users' perceptions of how easy a technology is to use immediately shape their assessment of its usefulness. When users deem a technology simple to use, their sense of its usefulness improves. Hence, we hypothesize:

H7a: Perceived ease of use positively influences perceived usefulness of conversational AI agents.

H7b: Perceived ease of use positively influences users' attitudes towards conversational AI agents.
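Because H7a and H7b give perceived ease of use (PEOU) both a direct path to attitude (ATT) and an indirect path through perceived usefulness (PU), its total effect on attitude can be decomposed with the standard product-of-coefficients rule for linear path models. In this sketch the labels are illustrative and not the paper's notation: β_7a denotes the PEOU-to-PU path (H7a), β_6 the PU-to-ATT path (H6), and β_7b the direct PEOU-to-ATT path (H7b), all assumed to be standardized coefficients.

```latex
\begin{aligned}
\text{direct effect}_{\mathrm{PEOU}\to\mathrm{ATT}}   &= \beta_{7b} \\
\text{indirect effect}_{\mathrm{PEOU}\to\mathrm{ATT}} &= \beta_{7a}\,\beta_{6} \\
\text{total effect}_{\mathrm{PEOU}\to\mathrm{ATT}}    &= \beta_{7b} + \beta_{7a}\,\beta_{6}
\end{aligned}
```

Read this way, H7a matters for attitude even if the direct path H7b were weak, because ease of use would still act on attitude through usefulness.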

2.7 Users’ attitudes and intentions

Behaviour towards technology results directly from components such as behavioural intention, which is in turn generated by a user's attitude [109]. Attitude refers to a user's favorable or unfavorable feelings about using AI agents on an e-tail platform [110]. A user's perception of the consequences of their conduct substantially affects their willingness to behave in that manner [111]. It is therefore pertinent to link user attitude to intention to use, as hypothesized below:

H8: Users' attitudes toward the agent positively influence their intention to adopt conversational AI agents.

The background review identified a gap in understanding human interaction with AI agents. This gap includes the social presence of these agents, user perceptions of their reliability and trustworthiness, and the broader behavioural trends in e-tail platforms. By targeting these areas, our research offers a fresh perspective on AI’s role in e-commerce and aims to fill a critical void in the existing literature.

3 Research framework

The research hypotheses are depicted in the conceptual framework in Fig. 2. As the framework shows, this study investigates the direct effects of social presence, trusting beliefs, perceived usefulness, and perceived ease of use on users' attitudes toward conversational AI agents, and of those attitudes on behavioural intentions to adopt the agents. We further investigate the role of transparency and data privacy in establishing trusting beliefs in conversational AI agents, and the role of humanoid embodiment in generating the agent's social presence.
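The hypotheses H1 to H8 can be written compactly as a system of linear structural equations, one per endogenous construct. This is a sketch assuming standardized latent variables; the abbreviations (HE humanoid embodiment, SP social presence, TR transparency, DP data privacy, TB trusting beliefs, PU perceived usefulness, PEOU perceived ease of use, ATT attitude, INT intention), the hypothesis-numbered coefficients β, and the disturbance terms ζ are our illustrative notation rather than the paper's.

```latex
\begin{aligned}
\mathrm{SP}  &= \beta_{1}\,\mathrm{HE} + \zeta_{\mathrm{SP}} \\
\mathrm{TB}  &= \beta_{3}\,\mathrm{TR} + \beta_{4}\,\mathrm{DP} + \zeta_{\mathrm{TB}} \\
\mathrm{PU}  &= \beta_{7a}\,\mathrm{PEOU} + \zeta_{\mathrm{PU}} \\
\mathrm{ATT} &= \beta_{2}\,\mathrm{SP} + \beta_{5}\,\mathrm{TB} + \beta_{6}\,\mathrm{PU} + \beta_{7b}\,\mathrm{PEOU} + \zeta_{\mathrm{ATT}} \\
\mathrm{INT} &= \beta_{8}\,\mathrm{ATT} + \zeta_{\mathrm{INT}}
\end{aligned}
```

Each hypothesis then corresponds to the claim that its coefficient is positive, for example H1 to β_1 > 0 and H8 to β_8 > 0.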

4 Research methodology

4.1 Measurements

The present study uses a 37-item scale to assess nine constructs. The questionnaire drew on well-established, pre-validated scales, adapted to the context of our study. Participants rated each item on a five-point Likert scale ranging from one (“strongly disagree”) to five (“strongly agree”). The items for humanoid embodiment were adapted from Keeling et al. [112], social presence from Gefen and Straub [95], transparency from Matsui and Yamada [113], data privacy from Zhang et al. [82], trusting beliefs from Qiu and Benbasat [114], perceived usefulness and perceived ease of use from Davis [115], attitude towards technology from Qiu and Benbasat [65], and behavioural intentions from Kim et al. [55].
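The Likert coding described above can be sketched as a small mapping from response labels to the numeric codes one to five. This is a minimal illustration under stated assumptions: only the endpoint labels come from the study, the three intermediate labels are assumed, and the unweighted item mean is a simplification (PLS-SEM estimates weighted construct scores rather than simple averages). The helper names are hypothetical.

```python
# Endpoint labels follow the study; the three intermediate labels are assumptions.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,        # assumed intermediate label
    "neutral": 3,         # assumed intermediate label
    "agree": 4,           # assumed intermediate label
    "strongly agree": 5,
}


def code_responses(labels: list[str]) -> list[int]:
    """Map Likert labels (case-insensitive) to their 1-5 codes."""
    return [LIKERT[label.strip().lower()] for label in labels]


def construct_mean(labels: list[str]) -> float:
    """Unweighted mean of one respondent's coded items for a single construct."""
    codes = code_responses(labels)
    return sum(codes) / len(codes)


# Example: one respondent's answers to three hypothetical social-presence items
answers = ["Agree", "strongly agree", "Neutral"]
print(code_responses(answers))   # [4, 5, 3]
print(construct_mean(answers))   # 4.0
```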

4.2 Procedure

An online survey was constructed in Google Forms, accompanied by a message explaining the study's objectives and rationale. To examine the effectiveness and user perceptions of conversational AI agents in the e-tail context, the survey embedded a video from Soul Machines, a developer of conversational AI agents for e-tail applications [116]. The video showed a simulated conversation between an AI agent and a human user, illustrating how such agents can be used to provide products and services.

Participants first watched the video and then answered a series of questions designed to elicit their perceptions of and reactions to the demonstrated interaction, covering user experience, perceived usefulness, social presence, and trust in the AI agent.

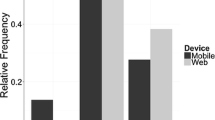

4.3 Sample and data collection

The target population comprised individuals familiar with conversational AI agents on e-tail platforms, and purposive sampling was used to reach this population. The largest share of respondents fell in the 25 to 34 age bracket. Of the 180 survey responses collected, 158 were deemed suitable for analysis. Purposive sampling kept the analysis focused on respondents with relevant knowledge of the technology, which strengthens the relevance of the findings.

The hypotheses were tested using partial least squares structural equation modeling (PLS-SEM), chosen for its flexibility with respect to data requirements, model complexity, and relationship specifications.

5 Findings

5.1 Measurement model assessment

First, the measurement model was assessed for indicator factor loadings, construct reliability, convergent validity, and discriminant validity, followed by path analysis of the structural model [117]. Table 1 reports the factor loadings, Cronbach's alpha (construct reliability), and average variance extracted (AVE) values used to establish convergent validity. Factor loadings should exceed 0.70 [118], Cronbach's alpha values should lie between 0.70 and 0.90, and AVE values should exceed 0.50 [117]. All factor loadings, Cronbach's alpha values, and AVE values in our model meet these thresholds, supporting the reliability and convergent validity of the measurement model.
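The reliability and convergent-validity statistics above can be computed as follows. This is a minimal sketch assuming NumPy, standardized outer loadings already estimated by a PLS algorithm, and inclusive bounds for the alpha range; the function names and example numbers are hypothetical and are not taken from Table 1. Cronbach's alpha uses the usual formula alpha = k/(k-1) * (1 - sum of item variances / variance of the summed scale), and AVE is the mean of the squared standardized loadings.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)        # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)    # sample variance of the summed scale
    return float((k / (k - 1)) * (1.0 - item_vars.sum() / total_var))


def average_variance_extracted(loadings) -> float:
    """AVE: mean of the squared standardized outer loadings of one construct."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def meets_thresholds(loadings, alpha: float) -> dict:
    """Apply the cut-offs quoted in the text (bounds on alpha assumed inclusive)."""
    return {
        "loadings": bool(np.all(np.asarray(loadings, dtype=float) > 0.70)),
        "alpha": 0.70 <= alpha <= 0.90,
        "ave": average_variance_extracted(loadings) > 0.50,
    }


# Hypothetical examples
print(round(cronbach_alpha(np.array([[1, 1], [2, 3], [3, 2]])), 3))  # 0.667
print(round(average_variance_extracted([0.8, 0.75, 0.9]), 3))        # 0.671
print(meets_thresholds([0.8, 0.75, 0.9], alpha=0.85))
# {'loadings': True, 'alpha': True, 'ave': True}
```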

Discriminant validity was assessed using the heterotrait-monotrait (HTMT) ratio, whose values should be below 0.85 [119]. As Table 2 shows, all HTMT values are below 0.85, so the model exhibits discriminant validity.
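The HTMT ratio for two constructs divides the mean correlation between their items (heterotrait) by the geometric mean of the average within-construct item correlations (monotrait). The sketch below assumes NumPy, an item correlation matrix as input, at least two positively coded items per construct, and a hypothetical `htmt` helper; the example matrix is invented for illustration and does not come from Table 2.

```python
import numpy as np


def htmt(corr: np.ndarray, items_i: list[int], items_j: list[int]) -> float:
    """Heterotrait-monotrait ratio of two constructs from an item correlation matrix."""
    r = np.asarray(corr, dtype=float)
    # Mean correlation between every item of construct i and every item of construct j
    hetero = r[np.ix_(items_i, items_j)].mean()

    def mono(idx: list[int]) -> float:
        # Mean correlation among distinct item pairs within one construct
        block = r[np.ix_(idx, idx)]
        upper = np.triu_indices(len(idx), k=1)
        return block[upper].mean()

    return float(hetero / np.sqrt(mono(items_i) * mono(items_j)))


# Hypothetical correlations: items 0-1 measure construct A, items 2-3 construct B
corr = np.array([
    [1.00, 0.64, 0.32, 0.32],
    [0.64, 1.00, 0.32, 0.32],
    [0.32, 0.32, 1.00, 0.64],
    [0.32, 0.32, 0.64, 1.00],
])
value = htmt(corr, [0, 1], [2, 3])
print(round(value, 3), value < 0.85)   # 0.5 True
```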

Taken together, the convergent and discriminant validity results indicate that the measurement model is reliable and that all constructs are empirically distinct from one another.

5.2 Hypothesis testing

We then performed bootstrapping with 5000 subsamples to test our hypotheses. The results are reported in Tables 3 and 4, and Fig. 3 presents a visual representation of the model in PLS-SEM.
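Bootstrapping resamples respondents with replacement, re-estimates the path coefficient on each resample, and uses the spread of those estimates to judge significance. Full PLS-SEM bootstrapping re-estimates the entire model on every subsample; this sketch simplifies to a single standardized path between two composite scores, using simulated data (not the study's) and a hypothetical `bootstrap_path` helper. It reports the estimate, bootstrap standard error, the t value (estimate divided by standard error), and a 95% percentile confidence interval.

```python
import numpy as np


def standardized_beta(x: np.ndarray, y: np.ndarray) -> float:
    """Standardized slope of y on a single predictor x (equals Pearson's r)."""
    return float(np.corrcoef(x, y)[0, 1])


def bootstrap_path(x, y, n_boot: int = 5000, seed: int = 0) -> dict:
    """Percentile bootstrap for one path: estimate, SE, t value, and 95% CI."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(x)
    estimate = standardized_beta(x, y)
    boots = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)   # resample respondents with replacement
        boots[b] = standardized_beta(x[idx], y[idx])
    se = float(boots.std(ddof=1))
    lo, hi = np.percentile(boots, [2.5, 97.5])
    return {"beta": estimate, "se": se, "t": estimate / se, "ci95": (float(lo), float(hi))}


# Simulated composite scores for 158 hypothetical respondents
sim = np.random.default_rng(42)
attitude = sim.normal(size=158)
intention = 0.8 * attitude + sim.normal(scale=0.6, size=158)
result = bootstrap_path(attitude, intention, n_boot=5000)
print(result["beta"], result["ci95"])
```

A path is usually treated as significant when its 95% interval excludes zero, which corresponds roughly to |t| > 1.96 for large samples.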

5.3 Structural model assessment

5.3.1 Evaluation of explanatory power

The model's explanatory power (R²) is reported in Table 5. R² values of 0.25, 0.50, and 0.70 indicate weak, moderate, and strong explanatory power, respectively; our model showed moderate explanatory power. The effect size f² assesses the impact of an independent variable on a dependent variable, with values of 0.02, 0.15, and 0.35 denoting small, medium, and large effects, respectively [118]. Our study shows a large effect size for intention. Predictive relevance was assessed through Q², for which any value above zero is acceptable; the model's Q² values were 0.561 for intention and 0.556 for attitude, indicating predictive relevance.
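The effect-size and predictive-relevance statistics above follow simple formulas: f² compares the R² of an endogenous construct with and without a given predictor, f² = (R²_included - R²_excluded) / (1 - R²_included), and blindfolding-based Q² is 1 - SSE/SSO (sum of squared prediction errors over sum of squared observations). The sketch below applies the cut-offs quoted in the text; the helper names and example inputs are hypothetical, not values from Table 5.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f2 for one predictor: change in R2 relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def label_f2(f2: float) -> str:
    """Cut-offs from the text: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def label_r2(r2: float) -> str:
    """Cut-offs from the text: 0.25 weak, 0.50 moderate, 0.70 strong."""
    if r2 >= 0.70:
        return "strong"
    if r2 >= 0.50:
        return "moderate"
    if r2 >= 0.25:
        return "weak"
    return "below weak"


def q_squared(sse: float, sso: float) -> float:
    """Blindfolding Q2 = 1 - SSE/SSO; values above zero indicate predictive relevance."""
    return 1.0 - sse / sso


# Hypothetical example: intention has attitude as its only predictor, so dropping
# attitude leaves R2 = 0 and f2 reduces to R2 / (1 - R2).
f2 = f_squared(0.60, 0.0)
print(round(f2, 2), label_f2(f2))        # 1.5 large
print(label_r2(0.55))                    # moderate
print(round(q_squared(44.0, 100.0), 2))  # 0.56
```

The single-predictor case shows why a large f² for intention is expected in a model where attitude is intention's only antecedent.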

5.3.2 Goodness of fit index

The standardized root mean square residual (SRMR) is used to check the model's goodness of fit [118]. A value below 0.08 indicates good fit, and this model's SRMR of 0.060 suggests a good fit.
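SRMR is the square root of the mean squared difference between the observed and model-implied correlation matrices, taken over the p(p+1)/2 unique elements (upper triangle including the diagonal). The sketch below assumes NumPy and that both matrices are already available as correlation matrices; the `srmr` helper and the 3x3 example matrices are hypothetical.

```python
import numpy as np


def srmr(observed: np.ndarray, implied: np.ndarray) -> float:
    """Standardized root mean square residual between two correlation matrices."""
    s = np.asarray(observed, dtype=float)
    sigma = np.asarray(implied, dtype=float)
    rows, cols = np.triu_indices(s.shape[0])   # unique elements, diagonal included
    residuals = s[rows, cols] - sigma[rows, cols]
    return float(np.sqrt(np.mean(residuals ** 2)))


# Hypothetical matrices that differ in a single off-diagonal correlation
observed = np.array([[1.00, 0.36, 0.20],
                     [0.36, 1.00, 0.25],
                     [0.20, 0.25, 1.00]])
implied = np.array([[1.00, 0.30, 0.20],
                    [0.30, 1.00, 0.25],
                    [0.20, 0.25, 1.00]])
value = srmr(observed, implied)
print(round(value, 3), value < 0.08)   # 0.024 True
```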

6 Discussion

The adoption of conversational AI has produced a paradigm shift across various domains, reshaping how individuals interact with technology. Despite the challenges these advancements pose, users are increasingly adopting “untact” services, which let them engage with websites without face-to-face interaction [120]. This study examines the key AI- and technology-related variables that affect users' behavioural intentions to adopt AI agents. Our findings clarify the interplay between AI-related, technology-related, and human-related factors that can foster a positive attitude towards these agents and further facilitate their adoption by e-tailers. Our results point to growing acceptance of conversational AI agents among users in the e-tail domain.

Our study found that users engage with AI agents for tasks ranging from product selection to cart management during their e-tail journey. First, transparency (β = 0.435, p = 0.013) was a key determinant of trusting beliefs, so more transparent AI systems can help build users' trust. At the same time, persistent concerns about data privacy underscore the need for stronger protective measures to gain users' trust and acceptance. Second, humanoid embodiment (β = 0.660, p = 0.001) significantly predicted social presence, suggesting that when conversational AI agents exhibit human-like characteristics or appearance, users are more likely to perceive them as social entities. This finding highlights the importance of designing AI agents with anthropomorphic features to enhance users' sense of social presence: the degree to which AI agents resemble humans shapes users' perceptions and experiences and, ultimately, their engagement with and acceptance of the technology.

Third, the results confirmed the effects of trusting beliefs (β = 0.201, p = 0.013), social presence (β = 0.268, p = 0.001), perceived usefulness (β = 0.394, p = 0.001), and perceived ease of use (β = 0.140, p = 0.001) on attitude. Because the level of trust in AI agents significantly affects users' attitudes, e-tail websites should focus on building more secure and transparent agents that raise users' trust and foster a positive attitude. Because social presence also determines attitude, an agent that conveys a strong sense of social interaction can be expected to produce a more favorable user attitude than one with low social presence; e-tail websites should therefore aim to develop more human-like agents. The study also found that users who perceive the agents as easy to use are more likely to find them useful, which underscores the importance of a seamless user experience. Users' perception of AI agents as useful, in turn, has a positive effect on their overall attitude toward them, consistent with TAM's position that perceived usefulness is a key driver of technology acceptance. Finally, a positive attitude (β = 0.789, p = 0.001) strongly influences users' intentions to adopt these AI agents. Overall, these findings carry several theoretical contributions and practical implications, which we explore in the following sections.
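Because attitude is the only antecedent of intention in the framework, the standardized coefficients reported above can be combined into point estimates of indirect effects on intention by multiplying the coefficients along each path (the product-of-coefficients approach). The sketch below is illustrative: the construct abbreviations and the `indirect_effect` helper are ours, only point estimates are computed (testing an indirect effect's significance would require bootstrapping it as well), and routes involving the PEOU-to-PU or data-privacy paths are omitted because those coefficients are not reported in this section.

```python
from math import prod

# Standardized path coefficients as reported in the Discussion
PATHS = {
    ("TR", "TB"): 0.435,    # transparency -> trusting beliefs
    ("HE", "SP"): 0.660,    # humanoid embodiment -> social presence
    ("TB", "ATT"): 0.201,   # trusting beliefs -> attitude
    ("SP", "ATT"): 0.268,   # social presence -> attitude
    ("PU", "ATT"): 0.394,   # perceived usefulness -> attitude
    ("PEOU", "ATT"): 0.140, # perceived ease of use -> attitude (direct path only)
    ("ATT", "INT"): 0.789,  # attitude -> intention
}


def indirect_effect(*chain: str) -> float:
    """Multiply path coefficients along a chain of constructs, e.g. HE -> SP -> ATT -> INT."""
    return prod(PATHS[(a, b)] for a, b in zip(chain, chain[1:]))


for chain in [("SP", "ATT", "INT"), ("HE", "SP", "ATT", "INT"), ("TR", "TB", "ATT", "INT")]:
    print(" -> ".join(chain), round(indirect_effect(*chain), 3))
# SP -> ATT -> INT 0.211
# HE -> SP -> ATT -> INT 0.14
# TR -> TB -> ATT -> INT 0.069
```

Longer chains shrink quickly because each standardized coefficient is below one, which is why humanoid embodiment's effect on intention is smaller than its strong effect on social presence.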

6.1 Theoretical contributions

From a theoretical perspective, this study contributes to the growing literature on conversational AI agents in e-commerce and e-tail platforms, focusing on user perceptions and social presence. First, it underscores the influence of anthropomorphism on user behaviour and sheds light on the underlying psychological mechanisms. According to the present research, anthropomorphized conversational AI agents offer e-tail users a more personalised shopping experience, and our findings suggest a strong association between anthropomorphized agents and higher user acceptance, adding to the theory of anthropomorphism. Second, the study contributes to the theory of social presence by exploring the dynamics of user interaction with conversational AI agents on e-tail platforms. Third, it extends the TAM framework to incorporate key variables relevant to the study of conversational AI agents, yielding a model that offers a comprehensive understanding of user behaviour and acceptance of AI-driven conversational agents in various contexts. Academics can use this refined model to guide future research on conversational AI technology.

6.2 Managerial implications

The deployment of conversational AI agents on e-tail platforms carries important managerial implications. First, these agents can significantly enhance customer support by providing uninterrupted service, particularly outside working hours, thereby reducing dependency on human agents. Second, their ability to deliver personalized product recommendations fosters user satisfaction and cultivates brand loyalty. Furthermore, these agents are valuable sources of real-time data that can inform research and development initiatives and marketing strategies. The multilingual capabilities of AI agents extend global reach and broaden the customer base, but user data privacy remains a concern: e-tailers ought to adhere to data privacy regulations and prioritize the trust and integrity of users.

Several challenges remain, including addressing user reservations and scepticism towards AI agents and navigating the complexities of social presence in technology-mediated interactions. Future research could address these issues, as overcoming them is essential for advancing our understanding of user behaviour and technology adoption in the rapidly evolving e-tail landscape.

Data availability

The data that support the findings of this study are available from the corresponding author (GR) upon reasonable request.

References

Rashidin MdS, Gang D, Javed S, Hasan M (2022) The role of artificial intelligence in sustaining the E-commerce ecosystem. J Glob Inf Manag 30(8):1–25. https://doi.org/10.4018/jgim.304067

Jacobides MG, Brusoni S, Candelon F (2021) The evolutionary dynamics of the artificial intelligence ecosystem. Strategy Sci 6(4):412–435. https://doi.org/10.1287/STSC.2021.0148

Ramu VB, Yeruva AR (2023) Optimising AIOps system performance for e-commerce and online retail businesses with the ACF model. Int J Intellect Prop Manag 13(3–4):412–429. https://doi.org/10.1504/IJIPM.2023.134064

Baskaran K, MR V (2014) e-Shopping experience in e-tail market. Int J Inf Syst Soc Change 5(2):13–24. https://doi.org/10.4018/ijissc.2014040102

Burt S, Sparks L (2003) E-commerce and the retail process: a review. J Retail Consum Serv 10(5):275–286. https://doi.org/10.1016/S0969-6989(02)00062-0

E-commerce customer service statistics for 2023. In: Gnani.ai. https://www.gnani.ai/resources/blogs/ecommerce-customer-service-statistics/. Accessed 5 Jun 2024

Conversational AI market size, statistics, Growth Analysis & Trends. In: MarketsandMarkets. https://www.marketsandmarkets.com/Market-Reports/conversational-ai-market-49043506.html. Accessed 5 Jun 2024

Saha S (2023) Conversational AI market. In: Future Market Insights. https://www.futuremarketinsights.com/reports/conversational-ai-market. Accessed 5 Jun 2024

Sharma V (2023) How artificial intelligence is driving ecommerce industry? In: Tech Blog | Mobile App, eCommerce, Salesforce Insights. https://www.emizentech.com/blog/artificial-intelligence-in-ecommerce-industry.html#:~:text=The%20role%20of%20AI%20in,24%2F7%20support%20to%20customers. Accessed 5 Jun 2024

Bavaresco R et al (2020) Conversational agents in business: a systematic literature review and future research directions. Comput Sci Rev 36:100239. https://doi.org/10.1016/j.cosrev.2020.100239

Diederich S, Lichtenberg S, Brendel AB, Trang S (2019) Promoting sustainable mobility beliefs with persuasive and anthropomorphic design: insights from an experiment with a conversational agent. In: ICIS 2019 Proceedings, 3. https://aisel.aisnet.org/icis2019/sustainable_is/sustainable_is/3

Li CY, Zhang JT (2023) Chatbots or me? Consumers’ switching between human agents and conversational agents. J Retail Consum Serv 72:103264. https://doi.org/10.1016/j.jretconser.2023.103264

Song X, Xiong T (2021) A survey of published literature on conversational artificial intelligence. In: 2021 7th International Conference on Information Management, ICIM 2021, pp 113–117. https://doi.org/10.1109/ICIM52229.2021.9417135

Kusal S, Patil S, Choudrie J, Kotecha K, Mishra S, Abraham A (2022) AI-based conversational agents: a scoping review from technologies to future directions. IEEE Access. https://doi.org/10.1109/ACCESS.2022.3201144

Wassan JT, Ghuriani V (2023) Recent trends in deep learning for conversational AI. Artificial Intelligence. https://doi.org/10.5772/intechopen.113250

Piyush N, Choudhury T, Kumar P (2016) Conversational commerce: a new era of e-business. In: 2016 International Conference System Modeling & Advancement in Research Trends (SMART). IEEE, pp 322–327. https://doi.org/10.1109/SYSMART.2016.7894543

Yan R (2018) “Chitty-chitty-chat bot”: deep learning for conversational AI. Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, pp 5520–5526. https://doi.org/10.24963/ijcai.2018/778

Loveys K, Sebaratnam G, Sagar M, Broadbent E (2020) The effect of design features on relationship quality with embodied conversational agents: a systematic review. Int J Soc Robot 12(6):1293–1312. https://doi.org/10.1007/s12369-020-00680-7

Chattaraman V, Kwon W-S, Gilbert JE (2012) Virtual agents in retail web sites: benefits of simulated social interaction for older users. Comput Human Behav 28(6):2055–2066. https://doi.org/10.1016/j.chb.2012.06.009

Nowak KL, Biocca F (2003) The effect of the agency and anthropomorphism on users' sense of telepresence, copresence, and social presence in virtual environments. Presence: Teleoperators Virtual Environ 12(5):481–494. https://doi.org/10.1162/105474603322761289

van Doorn J et al (2010) Customer engagement behavior: theoretical foundations and research directions. J Serv Res 13(3):253–266. https://doi.org/10.1177/1094670510375599

Schmidt N-H, Erek K, Kolbe LM, Zarnekow R (2011) Examining the contribution of green IT to the objectives of IT departments: empirical evidence from German enterprises. Australas J Inf Syst 17(1):127–140. https://doi.org/10.3127/ajis.v17i1.614

Ikumoro AO, Jawad MS (2019) Intention to use intelligent conversational agents in e-commerce among Malaysian SMEs: an integrated conceptual framework based on tri-theories including unified theory of acceptance, use of technology (UTAUT), and T-O-E. Int J Acad Res Business Social Sci 9(11):205–235. https://doi.org/10.6007/ijarbss/v9-i11/6544

Balakrishnan J, Dwivedi YK (2024) Conversational commerce: entering the next stage of AI-powered digital assistants. Ann Oper Res 333(2–3):653–687. https://doi.org/10.1007/s10479-021-04049-5

Ling EC, Tussyadiah I, Tuomi A, Stienmetz J, Ioannou A (2021) Factors influencing users’ adoption and use of conversational agents: a systematic review. Psychol Mark 38(7):1031–1051. https://doi.org/10.1002/mar.21491

Lal M, Neduncheliyan S (2023) An optimal deep feature–based AI chat conversation system for smart medical application. Pers Ubiquitous Comput 27(4):1483–1494. https://doi.org/10.1007/s00779-023-01713-4

Han MC (2021) The impact of anthropomorphism on consumers’ purchase decision in chatbot commerce. J Internet Commer 20(1):46–65. https://doi.org/10.1080/15332861.2020.1863022

Arsovski S, Cheok A, Wong S (2018) Open-domain neural conversational agents: the step towards artificial general intelligence. Int J Adv Comput Sci Appl 9(6). https://doi.org/10.14569/IJACSA.2018.090654

Luo X, Tong S, Fang Z, Qu Z (2019) Frontiers: machines vs. humans: the impact of artificial intelligence chatbot disclosure on customer purchases. Mark Sci 38(6):937–947. https://doi.org/10.1287/mksc.2019.1192

Kasilingam DL (2020) Understanding the attitude and intention to use smartphone chatbots for shopping. Technol Soc 62:101280. https://doi.org/10.1016/j.techsoc.2020.101280

Yılmaz M, Rızvanoğlu K (2021) Understanding users’ behavioral intention to use voice assistants on smartphones through the integrated model of user satisfaction and technology acceptance: a survey approach. J Eng Design Technol 20(6):1738–1764. https://doi.org/10.1108/JEDT-02-2021-0084

Jawad A, Parvin T, Hosain M (2022) Intention to adopt mobile-based online payment platforms in three Asian countries: an application of the extended technology acceptance model. J Contemp Market Sci 5. https://doi.org/10.1108/JCMARS-08-2021-0030

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13(3):319–340. https://doi.org/10.2307/249008

Borsci S et al (2022) The chatbot usability scale: the design and pilot of a usability scale for interaction with AI-based conversational agents. Pers Ubiquit Comput 26:95–119. https://doi.org/10.1007/s00779-021-01582-9

Hadi R (2019) When humanizing customer service chatbots might backfire. NIM Market Intell Rev 11:30–35. https://doi.org/10.2478/nimmir-2019-0013

Sheehan B, Jin HS, Gottlieb U (2020) Customer service chatbots: anthropomorphism and adoption. J Bus Res 115:14–24. https://doi.org/10.1016/j.jbusres.2020.04.030

Smutny P, Schreiberova P (2020) Chatbots for learning: a review of educational chatbots for the Facebook Messenger. Comput Educ 151:103862. https://doi.org/10.1016/j.compedu.2020.103862

Shafi PM, Jawalkar GS, Kadam MA et al (2020) AI-assisted chatbot for e-commerce to address selection of products from multiple products. Studies in Systems, Decision and Control. https://doi.org/10.1007/978-3-030-39047-1_3

Fitzpatrick KK, Darcy A, Vierhile M (2017) Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health 4(2):e19. https://doi.org/10.2196/mental.7785

Huang SYB, Lee C-J (2022) Predicting continuance intention to fintech chatbot. Comput Human Behav 129:107027. https://doi.org/10.1016/j.chb.2021.107027

Tran AD, Pallant JI, Johnson LW (2021) Exploring the impact of chatbots on consumer sentiment and expectations in retail. J Retail Consum Serv 63:102718. https://doi.org/10.1016/j.jretconser.2021.102718

Li C-Y, Zhang J-T (2023) Chatbots or me? Consumers’ switching between human agents and conversational agents. J Retail Consum Serv 72:103264. https://doi.org/10.1016/j.jretconser.2023.103264

Luria M, Reig S, Tan XZ, Steinfeld A, Forlizzi J, Zimmerman J (2019) Re-embodiment and co-embodiment: exploration of social presence for robots and conversational agents. In: Proceedings of the 2019 on Designing Interactive Systems Conference, pp 633–644. https://doi.org/10.1145/3322276.3322340

IBM Watson. https://www.ibm.com/watson. Accessed 14 Dec 2023

Al Farisi R, Ferdiana R, Adji TB (2022) The effect of anthropomorphic design cues on increasing chatbot empathy. In: 2022 1st International Conference on Information System and Information Technology, pp 370–375. https://doi.org/10.1109/ICISIT54091.2022.9873008

Chen J, Guo F, Ren Z, Li M, Ham J (2023) Effects of anthropomorphic design cues of chatbots on users’ perception and visual behaviors. Int J Hum Comput Interact. https://doi.org/10.1080/10447318.2023.2193514

Cowell AJ, Stanney KM (2003) Embodiment and interaction guidelines for designing credible, trustworthy embodied conversational agents. In: Intelligent Virtual Agents (IVA 2003). Lect Notes Comput Sci 2792:301–309. https://doi.org/10.1007/978-3-540-39396-2_50

Epley N, Akalis S, Waytz A, Cacioppo JT (2008) Creating social connection through inferential reproduction: loneliness and perceived agency in gadgets, gods, and greyhounds. Psychol Sci 19(2):114–120. https://doi.org/10.1111/j.1467-9280.2008.02056.x

Waytz A, Heafner J, Epley N (2014) The mind in the machine: anthropomorphism increases trust in an autonomous vehicle. J Exp Soc Psychol 52:113–117. https://doi.org/10.1016/j.jesp.2014.01.005

Park D, Namkung K (2021) Exploring users' mental models for anthropomorphized voice assistants through psychological approaches. Appl Sci 11(23). https://doi.org/10.3390/app112311147

Nass C, Moon Y (2000) Machines and mindlessness: social responses to computers. J Soc Issues 56(1):81–103. https://doi.org/10.1111/0022-4537.00153

Nass C, Fogg BJ, Moon Y (1996) Can computers be teammates? Int J Hum Comput Stud 45(6):669–678. https://doi.org/10.1006/ijhc.1996.0073

Paul SC, Bartmann N, Clark JL (2021) Customizability in conversational agents and their impact on health engagement. Hum Behav Emerg Technol 3(5):1141–1152. https://doi.org/10.1002/hbe2.320

Go E, Sundar SS (2019) Humanizing chatbots: the effects of visual, identity and conversational cues on humanness perceptions. Comput Human Behav 97:304–316. https://doi.org/10.1016/j.chb.2019.01.020

Kim C, Mirusmonov M, Lee I (2010) An empirical examination of factors influencing the intention to use mobile payment. Comput Human Behav 26:310–322. https://doi.org/10.1016/j.chb.2009.10.013

Sonntag M, Mehmann J, Teuteberg F (2022) AI-based conversational agents for customer service – a study of customer service representatives’ perceptions using TAM 2. In: Wirtschaftsinformatik 2022 Proceedings. https://aisel.aisnet.org/wi2022/adoption_diffusion/adoption_diffusion/3

Arghashi V, Yuksel CA (2022) Interactivity, inspiration, and perceived usefulness! How retailers’ AR-apps improve consumer engagement through flow. J Retail Consum Serv 64:102756. https://doi.org/10.1016/j.jretconser.2021.102756

Islami M, Asdar M, Baumassepe A (2021) Analysis of perceived usefulness and perceived ease of use to the actual system usage through attitude using online guidance application. Hasanuddin J Business Strat 3:52–64. https://doi.org/10.26487/hjbs.v3i1.410

Schierz PG, Schilke O, Wirtz BW (2010) Understanding consumer acceptance of mobile payment services: an empirical analysis. Electron Commer Res Appl 9(3):209–216. https://doi.org/10.1016/j.elerap.2009.07.005

Kim S, Garrison G (2009) Investigating mobile wireless technology adoption: an extension of the technology acceptance model. Inf Syst Front 11(3):323–333. https://doi.org/10.1007/s10796-008-9073-8

Natarajan T, Balasubramanian SA, Kasilingam DL (2018) The moderating role of device type and age of users on the intention to use mobile shopping applications. Technol Soc 53:79–90. https://doi.org/10.1016/j.techsoc.2018.01.003

Natarajan T, Balasubramanian SA, Kasilingam DL (2017) Understanding the intention to use mobile shopping applications and its influence on price sensitivity. J Retail Consum Serv 37:8–22. https://doi.org/10.1016/j.jretconser.2017.02.010

Lewandowski T, Delling J, Grotherr C, Böhmann T (2021) State-of-the-art analysis of adopting AI-based conversational agents in organizations: a systematic literature review. PACIS 2021 Proceedings. https://aisel.aisnet.org/pacis2021/167

Siemon D, Jusmann S (2021) Appearance of embodied conversational agents in knowledge management. In: AMCIS 2021 Proceedings, 1. https://aisel.aisnet.org/amcis2021/adopt_diffusion/adopt_diffusion/1

Qiu L, Benbasat I (2010) A study of demographic embodiments of product recommendation agents in electronic commerce. Int J Hum Comput Stud 68(10):669–688. https://doi.org/10.1016/j.ijhcs.2010.05.005

Goertzel B (2009) The embodied communication prior: a characterization of general intelligence in the context of embodied social interaction. In: Proceedings of the 2009 8th IEEE International Conference on Cognitive Informatics. https://doi.org/10.1109/COGINF.2009.5250687

Seaborn K, Miyake NP, Pennefather P, Otake-Matsuura M (2021) Voice in human–agent interaction: a survey. ACM Comput Surv 54(4). https://doi.org/10.1145/3386867

Pandey AK, Gelin R (2018) A mass-produced sociable humanoid robot: Pepper: the first machine of its kind. IEEE Robot Autom Mag 25(3):40–48. https://doi.org/10.1109/MRA.2018.2833157

Fox J, Gambino A (2021) Relationship development with humanoid social robots: applying interpersonal theories to human–robot interaction. Cyberpsychol Behav Soc Netw 24(5):294–299. https://doi.org/10.1089/cyber.2020.0181

Kim Y, Sundar SS (2012) Anthropomorphism of computers: is it mindful or mindless? Comput Human Behav 28(1):241–250. https://doi.org/10.1016/j.chb.2011.09.006

Lee KM, Nass C (2003) Designing social presence of social actors in human computer interaction. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems 5:289–296. https://doi.org/10.1145/642611.642662

Laban G, Araujo T (2022) Don’t take it personally: resistance to individually targeted recommendations from conversational recommender agents. In: Proceedings of the 10th International Conference on Human-Agent Interaction, pp 57–66. https://doi.org/10.1145/3527188.3561929

Seeger A-M, Pfeiffer J, Heinzl A (2021) Texting with humanlike conversational agents: designing for anthropomorphism. J Assoc Inf Syst 22:931–967. https://doi.org/10.17705/1jais.00685

Amin M, Ryu K, Cobanoglu C, Nizam A (2021) Determinants of online hotel booking intentions: website quality, social presence, affective commitment, and e-trust. J Hosp Market Manag 30(7):845–870. https://doi.org/10.1080/19368623.2021.1899095

Schmidt P, Biessmann F, Teubner T (2020) Transparency and trust in artificial intelligence systems. J Decis Syst 29(4):260–278. https://doi.org/10.1080/12460125.2020.1819094

Zhang Y, Xu S, Zhang L, Yang M (2021) Big data and human resource management research: an integrative review and new directions for future research. J Bus Res 133:34–50. https://doi.org/10.1016/j.jbusres.2021.04.019

Wanner J, Herm L-V, Heinrich K, Janiesch C (2022) The effect of transparency and trust on intelligent system acceptance: evidence from a user-based study. Electron Mark 32(4):2079–2102. https://doi.org/10.1007/s12525-022-00593-5

Kao W, Kao W, Huang L, Leu J (2010) A study on consumers trust formation model toward recommendation agents: Elaboration likelihood model. ICEB 2010 Proceedings (Shanghai, China). https://aisel.aisnet.org/iceb2010/30

Rheu M, Shin JY, Peng W, Huh-Yoo J (2021) Systematic review: trust-building factors and implications for conversational agent design. Int J Hum Comput Interact 37(1):81–96. https://doi.org/10.1080/10447318.2020.1807710

Kronemann B, Kizgin H, Rana N, Dwivedi Y (2023) How AI encourages consumers to share their secrets? The role of anthropomorphism, personalisation, and privacy concerns and avenues for future research. Span J Mark ESIC 27(1):3–19. https://doi.org/10.1108/SJME-10-2022-0213

Song M, Xing X, Duan Y, Cohen J, Mou J (2022) Will artificial intelligence replace human customer service? The impact of communication quality and privacy risks on adoption intention. J Retail Consum Serv 66:102900. https://doi.org/10.1016/j.jretconser.2021.102900

Zhang B, Wang N, Jin H (2014) Privacy concerns in online recommender systems: influences of control and user data input. In: Proceedings of the Tenth USENIX Conference on Usable Privacy and Security (SOUPS '14). USENIX Association, pp 159–173

Zhang C, Jiang H, Cheng X, Zhao F, Cai Z, Tian Z (2021) Utility analysis on privacy-preservation algorithms for online social networks: an empirical study. Pers Ubiquitous Comput 25(6):1063–1079. https://doi.org/10.1007/s00779-019-01287-0

Chen QQ, Park HJ (2021) How anthropomorphism affects trust in intelligent personal assistants. Ind Manag Data Syst 121(12):2722–2737. https://doi.org/10.1108/IMDS-12-2020-0761

Hsiao KL, Chen CC (2022) What drives continuance intention to use a food-ordering chatbot? An examination of trust and satisfaction. Library Hi Tech 40(4):929–946. https://doi.org/10.1108/LHT-08-2021-0274

Kim Y, Lee H (2022) The rise of chatbots in political campaigns: the effects of conversational agents on voting intention. Int J Hum Comput Interact. https://doi.org/10.1080/10447318.2022.2108669

Pavlou PA (2003) Consumer acceptance of electronic commerce: integrating trust and risk with the technology acceptance model. Int J Electron Commer 7(3):101–134. https://doi.org/10.1080/10864415.2003.11044275

Bhatti A, Rehman SU, Ahtisham Z, Akram H (2021) Factors effecting online shopping behaviour with trust as moderation. J Pengurusan 60:109–122. https://doi.org/10.17576/pengurusan-2020-60-09

Kim D, Ferrin D, Rao R (2008) A trust-based consumer decision-making model in electronic commerce: the role of trust, perceived risk, and their antecedents. Decis Support Syst 44:544–564. https://doi.org/10.1016/j.dss.2007.07.001

Jan IU, Ji S, Kim C (2023) What (de) motivates customers to use AI-powered conversational agents for shopping? The extended behavioral reasoning perspective. J Retail Consum Serv 75:103440. https://doi.org/10.1016/j.jretconser.2023.103440

Song Y (2020) Building a “Deeper” Trust: mapping the facial anthropomorphic trustworthiness in social robot design through multidisciplinary approaches. Design J 23(4):639–649. https://doi.org/10.1080/14606925.2020.1766871

Jensen T, Khan MMH, Fahim MAA, Albayram Y (2021) Trust and anthropomorphism in tandem: the interrelated nature of automated agent appearance and reliability in trustworthiness perceptions. Proceedings of the 2021 ACM Designing Interactive Systems Conference, pp 1470–1480. https://doi.org/10.1145/3461778.3462102

Harrigan M, Feddema K, Wang S, Harrigan P, Diot E (2021) How trust leads to online purchase intention founded in perceived usefulness and peer communication. J Consum Behav 20(5):1297–1312. https://doi.org/10.1002/cb.1936

Dabbous A, Aoun Barakat K, Merhej Sayegh M (2020) Social commerce success: antecedents of purchase intention and the mediating role of trust. J Internet Commerce 19(3):262–297. https://doi.org/10.1080/15332861.2020.1756190

Gefen D, Straub DW (2004) Consumer trust in B2C e-commerce and the importance of social presence: experiments in e-products and e-services. Omega 32(6):407–424. https://doi.org/10.1016/j.omega.2004.01.006

Khotrun Nada N, Eka Saputri M, Sari D, Fakhri M (2022) The effect of consumer trust, attitude and behavior toward consumer satisfaction in online shopping platform. Proceedings of the International Conference on Industrial Engineering and Operations Management. https://doi.org/10.46254/sa03.20220409

Lee Y-C (2006) An empirical investigation into factors influencing the adoption of an e-learning system. Online Inf Rev 30:517–541. https://doi.org/10.1108/14684520610706406

Chang Y-W, Chen J (2021) What motivates customers to shop in smart shops? The impacts of smart technology and technology readiness. J Retail Consum Serv 58:102325. https://doi.org/10.1016/j.jretconser.2020.102325

Lu H-P, Su P (2009) Factors affecting purchase intention on mobile shopping web sites. Internet Res 19:442–458. https://doi.org/10.1108/10662240910981399

Keni K (2020) How perceived usefulness and perceived ease of use affecting intent to repurchase? J Manajemen 24:481. https://doi.org/10.24912/jm.v24i3.680

Thakur R, Srivastava M (2013) Customer usage intention of mobile commerce in India: an empirical study. J Indian Business Res 5:52–72. https://doi.org/10.1108/17554191311303385

Wu J-H, Wang S-C (2005) What drives mobile commerce?: an empirical evaluation of the revised technology acceptance model. Inf Manag 42(5):719–729. https://doi.org/10.1016/j.im.2004.07.001

King WR, He J (2006) A meta-analysis of the technology acceptance model. Inf Manag 43(6):740–755. https://doi.org/10.1016/j.im.2006.05.003

Jan AU, Contreras V (2011) Technology acceptance model for the use of information technology in universities. Comput Human Behav 27(2):845–851. https://doi.org/10.1016/j.chb.2010.11.009

Tahar A, Riyadh HA, Sofyani H, Purnomo WE (2020) Perceived ease of use, perceived usefulness, perceived security and intention to use e-filing: the role of technology readiness. J Asian Finance Econ Bus 7(9). https://doi.org/10.13106/jafeb.2020.vol7.no9.537

Agrebi S, Jallais J (2015) Explain the intention to use smartphones for mobile shopping. J Retail Consum Serv 22:16–23. https://doi.org/10.1016/j.jretconser.2014.09.003

Zhang L, Zhu J, Liu Q (2012) A meta-analysis of mobile commerce adoption and the moderating effect of culture. Comput Human Behav 28(5):1902–1911. https://doi.org/10.1016/j.chb.2012.05.008

Oyman M, Bal D, Ozer S (2022) Extending the technology acceptance model to explain how perceived augmented reality affects consumers’ perceptions. Comput Human Behav 128:107127. https://doi.org/10.1016/j.chb.2021.107127

Song SW, Shin M (2022) Uncanny valley effects on chatbot trust, purchase intention, and adoption intention in the context of E-commerce: The moderating role of avatar familiarity. Int J Hum Comput Interact 40:1–16. https://doi.org/10.1080/10447318.2022.2121038

Khare A, Kautish P, Khare A (2023) The online flow and its influence on awe experience: an AI-enabled e-tail service exploration. Int J Retail Distrib Manag 51(6):713–735. https://doi.org/10.1108/IJRDM-07-2022-0265

Agrawal SR, Mittal D (2022) Optimizing customer engagement content strategy in retail and E-tail: available on online product review videos. J Retail Consum Serv 67:102966. https://doi.org/10.1016/j.jretconser.2022.102966

Keeling K, Beatty S, McGoldrick P, Macaulay L (2004) Face value? Customer views of appropriate formats for embodied conversational agents (ECAs) in online retailing. Proceedings of the Hawaii International Conference on System Sciences. https://doi.org/10.1109/HICSS.2004.1265426

Matsui T, Yamada S (2019) Designing trustworthy product recommendation virtual agents operating positive emotion and having copious amount of knowledge. Front Psychol. https://doi.org/10.3389/fpsyg.2019.00675

Qiu L, Benbasat I (2009) Evaluating anthropomorphic product recommendation agents: a social relationship perspective to designing information systems. J Manage Inf Syst 25(4):145–181. https://doi.org/10.2753/MIS0742-1222250405

Davis FD (1985) A technology acceptance model for empirically testing new end-user information systems: theory and results. Doctoral dissertation, Sloan School of Management, Massachusetts Institute of Technology. https://www.researchgate.net/publication/35465050_A_Technology_Acceptance_Model_for_Empirically_Testing_New_End-User_Information_Systems

SoulMachines (2022) Meet Sarah, financial agent of the future, dedicated to improve your well-being. In: YouTube. https://www.youtube.com/watch?v=jpePkszVBvk. Accessed 4 Jun 2024

Kumar P, Kumar N, Aggarwal P, Yeap JAL (2021) Working in lockdown: the relationship between COVID-19 induced work stressors, job performance, distress, and life satisfaction. Curr Psychol 40(12):6308–6323. https://doi.org/10.1007/s12144-021-01567-0

Hair JF, Risher JJ, Sarstedt M, Ringle CM (2018) When to use and how to report the results of PLS-SEM. Eur Bus Rev 31:2–24. https://doi.org/10.1108/EBR-11-2018-0203

Hair JF, Ringle CM, Sarstedt M (2011) PLS-SEM: indeed a silver bullet. J Mark Theory Pract 19(2):139–152. https://doi.org/10.2753/MTP1069-6679190202

Lee SM, Lee D (2020) “Untact”: a new customer service strategy in the digital age. Serv Bus 14(1):1–22. https://doi.org/10.1007/s11628-019-00408-2

Author information

Contributions

AG, GR, and UT: conceptualization, research design, data collection, analysis, literature search, and manuscript preparation. All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Ethics declarations

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Raut, G., Goel, A. & Taneja, U. Humanizing e-tail experiences: navigating user acceptance, social presence, and trust in the realm of conversational AI agents. Pers Ubiquit Comput (2024). https://doi.org/10.1007/s00779-024-01814-8

DOI: https://doi.org/10.1007/s00779-024-01814-8