Abstract

A striking and debilitating property of the nervous system is that damage to this tissue can cause chronic intractable pain, which persists long after resolution of the initial insult. This neuropathic form of pain can arise from trauma to peripheral nerves, the spinal cord, or brain. It can also result from neuropathies associated with disease states such as diabetes, human immunodeficiency virus/AIDS, herpes, multiple sclerosis, cancer, and chemotherapy. Regardless of the origin, treatments for neuropathic pain remain inadequate. This continues to drive research into the underlying mechanisms. While the literature shows that dysfunction in numerous loci throughout the CNS can contribute to chronic pain, the spinal cord and in particular inhibitory signalling in this region have remained major research areas. This review focuses on local spinal inhibition provided by dorsal horn interneurons, and how such inhibition is disrupted during the development and maintenance of neuropathic pain.


All roads begin at the inhibitory gate

The gate control theory, proposed by Melzack and Wall (1965), took elements of the intensity, pattern, and specificity theories that were supported by experimental findings and provided a circuit-based framework that could reconcile various differences and controversies (Fig. 1a). At the time, their ideas provided a conceptual leap in thinking on spinal sensory processing and highlighted the importance of cross talk between different afferent populations, and between spinal interneurons and projection neurons. The gate control theory proposed that ‘pain’ signals reaching the brain came from a gated circuit that resided within the dorsal horn of the spinal cord. Notably, it placed inhibitory interneurons (labeled SG in Fig. 1a) as critical modulators of incoming sensory information. Melzack and Wall proposed that low-threshold mechanoreceptors (LTMRs) activated inhibitory interneurons, which in turn ‘closed the gate’ by inhibiting activation of spinal cord projection neurons (labeled T in Fig. 1a). This circuit effectively suppressed transmission of ‘pain’ signals to the brain, even in the presence of high-threshold nociceptive input. Interestingly, the gating in this circuit was mediated by pre-synaptic inhibition of nociceptor terminals by inhibitory interneurons. Conversely, activation of nociceptors not only activated projection neurons, but also ‘opened the gate’ by inhibiting the activity of SG inhibitory interneurons. Subsequent data necessitated several revisions of the circuitry outlined in the original theory. However, the theory has endured for more than 50 years, because it predicted connections that could be tested, could be used to generate hypotheses, and often explained preclinical and clinical observations. Of relevance for this review, spinal cord circuits and inhibitory function are still at the centre of research efforts to understand and treat pain, including pain of neuropathic origin.
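The gating logic described above can be illustrated with a toy steady-state calculation. The sketch below is not the Melzack and Wall model itself: the function name `gate_control`, the weights `w_s_to_sg` and `w_gate`, the divisive form of the gate, and the omission of direct low-threshold drive onto T cells are all simplifying assumptions made here for illustration only.

```python
def gate_control(l_input, s_input, w_s_to_sg=0.5, w_gate=2.0):
    """Toy steady-state sketch of the gate circuit (all parameters hypothetical).

    l_input: low-threshold (L) afferent drive, arbitrary units
    s_input: nociceptive (S) afferent drive, arbitrary units
    Returns (sg_activity, t_output).
    """
    # SG inhibitory interneurons are excited by L and inhibited by S.
    sg_activity = max(0.0, l_input - w_s_to_sg * s_input)
    # Pre-synaptic inhibition: SG activity scales down nociceptor drive onto T.
    gate = 1.0 / (1.0 + w_gate * sg_activity)
    # Simplification: T output reflects gated nociceptive drive only.
    t_output = gate * s_input
    return sg_activity, t_output


# Nociceptive input alone, added low-threshold input, and strong nociceptive input.
for l_drive, s_drive in [(0.0, 1.0), (2.0, 1.0), (2.0, 4.0)]:
    sg, t = gate_control(l_drive, s_drive)
    print(f"L={l_drive}, S={s_drive}: SG={sg}, T={t}")
```

With these assumed weights, adding low-threshold input reduces T output for the same nociceptive drive (gate closed), whereas sufficiently strong nociceptive input silences SG and passes through ungated (gate open).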

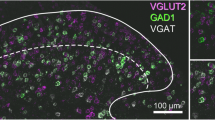

Role of inhibition in spinal sensory processing. a Schematic showing the gate control circuit, as proposed by Melzack and Wall (1965) in their Fig. 4, superimposed over the spinal cord dorsal horn. This work identified inhibitory interneurons (labeled SG) as mediators of a spinal gate for sensory processing. Note that SG is the terminology used for substantia gelatinosa cells/neurons by Melzack and Wall. The modern abbreviation for inhibitory interneuron is IN. Activation of the SG inhibitory population by low-threshold afferent inputs (L) allowed inhibitory interneurons to suppress dorsal horn output signals relayed by projection neurons (labeled T, for first central transmission cells/neurons, by Melzack and Wall) and thus suppress pain signalling (Action System). Alternatively, nociceptive afferent inputs (S) inhibited SG inhibitory interneurons while exciting projection neurons and producing pain signals via the Action System. This theory also incorporated descending Central Command signals. b Upper panel shows dorsal horn image (VGAT::TdTom) from a transgenic mouse, which expresses the fluorescent protein TdTomato in inhibitory interneurons. The lower panel shows the same section immunolabeled for the transcription factor Pax2. This marks inhibitory GABAergic interneurons in the dorsal horn. Note, inhibitory interneurons are distributed throughout the dorsal horn; however, the TdTomato labeling is most prominent in the superficial dorsal horn (Laminae I–II, dashed line = lamina II–III border). c Schematics summarising the mechanisms underlying post-synaptic (left) and pre-synaptic inhibition (right) in the dorsal horn. The centre inset shows an example post-synaptic inhibitory connection (a) between an inhibitory interneuron (blue) and a neighbouring dorsal horn neuron (grey); and a pre-synaptic inhibitory connection (b) between an inhibitory interneuron (blue) and primary afferent terminal (green). 
Post-synaptic inhibition in the dorsal horn (left) can be mediated by the neurotransmitters GABA (blue), glycine (purple), or both. Arrival of a pre-synaptic action potential evokes neurotransmitter release into the synaptic cleft and its subsequent binding to GABAA and/or glycine receptors. Opening of these ligand-gated ion channels (1) allows chloride influx (2) because of the low intracellular chloride concentration that is maintained by the KCC2 transporter. Rising intracellular chloride hyperpolarizes membrane potential (3) and reduces the likelihood of action potential generation in the post-synaptic neuron (4). Pre-synaptic inhibition is almost always mediated by GABA, but not glycine. Arrival of a pre-synaptic action potential evokes neurotransmitter release, which binds to GABAA receptors on primary afferent terminals (1, green). Opening of these ligand-gated ion channels allows chloride efflux (2) from the afferent terminal because of the relatively high intracellular chloride concentration in sensory neurons, which is maintained by the NKCC1 transporter. Falling intracellular chloride depolarizes membrane potential (3) and suppresses neurotransmitter release from primary afferent terminals (4).

Spinal inhibition

Since inhibitory interneurons were placed at the ‘gate’ of the pain pathway (Fig. 1a), inhibition has been the overwhelming focus of research in this area. Accordingly, this section provides a brief overview of these neurons, ionotropic inhibition, the neurotransmitters and receptors involved, post-synaptic and pre-synaptic inhibition, as well as the importance of inhibition in regulating pain (Fig. 1). The Todd group has used extensive quantitative studies to show that inhibitory cells account for 26% of all neurons within the superficial dorsal horn (SDH; LI–II) and 38% of those in LIII in the mouse (Polgar et al. 2013). This is similar to proportions previously reported in rat (Todd and Sullivan 1990). Though comparable quantification has not been performed for deeper laminae, qualitative data suggest that a smaller proportion of neurons express GABA, particularly in LIV (Barber et al. 1982; Magoul et al. 1987; Nowak et al. 2011). Regardless, within LI–III, virtually all inhibitory interneurons express GABA, while a portion, particularly within the deeper layers, is immunoreactive for glycine (Todd and Sullivan 1990; Polgar et al. 2003). Although GABAergic and glycinergic interneurons are not homogeneously distributed across the SDH, both GABAergic and glycinergic terminals are found throughout this region (Spike et al. 1997; Mackie et al. 2003), and the majority of neurons receive a mix of GABAergic and glycinergic synaptic inputs (Chery and de Koninck 1999; Keller et al. 2001; Cronin et al. 2004; Inquimbert et al. 2007; Yasaka et al. 2007; Anderson et al. 2009; Labrakakis et al. 2009; Takazawa and MacDermott 2010; Gradwell et al. 2017).

Neurotransmitters and their receptors

In 1969, Curtis and Crawford showed that application of GABA and glycine induced hyperpolarization in spinal motoneurons (Curtis and Crawford 1969). This established that GABA and glycine mediate fast inhibitory synaptic transmission in the spinal cord. These inhibitory neurotransmitters act primarily on GABAA and glycine receptors, respectively. In addition, GABA can act on GABAB receptors (Chery and De Koninck 2000), and glycine acts as a co-agonist at NMDA receptors (Bowery and Smart 2006). Throughout this review, we focus on GABAA and glycine receptors.

GABAA receptors are heteropentameric receptors typically formed by a combination of α, β, and γ subunits in a ratio of 2:2:1 (Sieghart 1995; Hevers and Luddens 1998). The subunit composition of these receptors is a major determinant of their capacity to generate transient ‘phasic’ or persistent ‘tonic’ inhibition. Tonic GABAergic inhibition is generated by extrasynaptic α5 or δ subunit-containing GABAA receptors (Semyanov et al. 2004; Belelli et al. 2009). These types of persistent GABAergic currents have previously been described within the spinal dorsal horn (Takahashi et al. 2006; Takazawa and MacDermott 2010). In contrast, GABAA receptors containing a γ subunit in association with α1–3 subunits are more common at synapses, where they mediate phasic inhibition (Farrant and Nusser 2005).

Glycine receptors are also heteropentameric receptors, most commonly formed by a combination of 2 α and 3 β subunits, though α subunit-containing homomeric receptors can also form (Lynch 2004; Grudzinska et al. 2005; Yang et al. 2012). Glycine receptors are of particular interest for spinal cord circuitry because, although glycinergic neurons are located throughout the CNS, they are highly concentrated within the brainstem and spinal cord (Betz et al. 2006). In addition, a novel α3 subunit-containing glycine receptor subtype is concentrated in the superficial dorsal horn (Harvey et al. 2004; Lynch and Callister 2006). As with GABAA receptors, glycine receptors can produce tonic currents within the dorsal horn. These have typically been thought to arise from extrasynaptic, homomeric forms of the receptor (Muller et al. 2008). In contrast, our work has shown that heteromeric α/β glycine receptors are also capable of producing tonic currents, and are responsible for them in dorsal horn inhibitory interneurons (Gradwell et al. 2017).

Post-synaptic inhibition

Long before any electrical correlates were known, Sherrington (1932) proposed the concept of neuronal inhibition while studying spinal reflexes. Several decades after his work, the investigation of synaptic transmission was transformed by the development of the intracellular recording technique. Thereafter, the consequences of inhibitory synaptic transmission and inhibitory post-synaptic potentials (IPSPs), generated by stimulation of Ia afferents, were studied extensively in motoneurons (Brock et al. 1952). These IPSPs were shown to diminish depolarizations produced by synaptic excitation to a level below the threshold for action potential discharge (Coombs et al. 1955b). Eccles (1960) later showed that the effects of varying membrane potential on the IPSP aligned with the flow of various ions down their electro-chemical gradients (Fig. 1c). Indeed, electrophoretic injection of chloride ions into motoneurons reduced the IPSP, because there was a net efflux of Cl− down a new electro-chemical gradient (Coombs et al. 1955c). In Eccles’ Nobel lecture on the ionic mechanisms of post-synaptic inhibition, he stated that IPSPs are “due to ions moving down their electro-chemical gradients…These currents would be caused to flow by increases in the ionic permeability of the subsynaptic membrane that are produced under the influence of the inhibitory transmitter substance” (Eccles 1964).

The inhibitory transmission identified above was shown to have a reversal potential near, or even negative to, the resting membrane potential of motor neurons (Coombs et al. 1955a). This inhibition is critically dependent upon the Cl− concentration within and outside of the target neuron. Under normal conditions, central neurons, including those in the dorsal horn, maintain a low intracellular Cl− concentration. This means activation of either the GABAA or glycine receptor causes Cl− influx and drives membrane hyperpolarization (Fig. 1c) (Rivera et al. 1999). The importance of this relationship for the integrity of inhibition was elegantly demonstrated by Prescott et al. (2006). They showed that shifting the Cl− reversal potential compromises inhibitory control and results in hyperexcitability in LI dorsal horn neurons. This highlights how the strength and polarity of inhibitory transmission are largely dependent upon intracellular Cl− concentration. Under normal conditions, central neurons maintain low intracellular Cl− concentration through the activity of the K+/Cl− co-transporter (KCC2), which transports K+ and Cl− out of the cell (Fig. 1c) (Doyon et al. 2016). In these neurons, KCC2 expression outweighs that of the Na+/K+/Cl− co-transporter (NKCC1), which is responsible for the transport of Na+, K+, and Cl− into the cell (Fig. 1c) (Kahle et al. 2008; Benarroch 2013). The critical role of these co-transporters for inhibition has understandably created interest within the pain signalling field; for review, see Price et al. (2005). Membrane potential also has critical effects on the driving force for Cl− ions (\( V_{\text{m}} - E_{\text{Cl}^{-}} \)) through GABAA or glycine receptors. As membrane potential becomes more depolarized, driving force and Cl− influx are enhanced to reduce the efficacy of synaptic excitation. 
This effect on excitatory transmission is often called ‘shunting’ and has been shown to occur as a result of both phasic and tonic GABAA and glycine receptor activity (Chance et al. 2002; Mitchell and Silver 2003; Prescott and De Koninck 2003). This crucial mechanism for reducing neuronal excitability can occur when there is a change in driving force, as mentioned above for the case of Cl− influx, and when there is a decrease in cell input resistance (i.e., increased membrane conductance) because of GABAA or glycine receptor activation. Importantly, such inhibitory control of central neuron excitability has been demonstrated within the dorsal horn (Takazawa and MacDermott 2010).
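The shunting effect described above can be made concrete with a single-compartment steady-state calculation. The following is a minimal sketch under stated assumptions: the function names, the conductance values (in nS), and the choice of an inhibitory reversal potential equal to rest (−70 mV) are hypothetical and chosen only to show that an inhibitory conductance can reduce an excitatory depolarization without itself moving the resting potential.

```python
def steady_state_vm(g_leak, e_leak, g_exc=0.0, e_exc=0.0, g_inh=0.0, e_inh=-70.0):
    """Steady-state membrane potential (mV) as a conductance-weighted average
    of reversal potentials. All parameter values are illustrative assumptions."""
    total_g = g_leak + g_exc + g_inh
    return (g_leak * e_leak + g_exc * e_exc + g_inh * e_inh) / total_g


def cl_driving_force(vm, e_cl):
    """Driving force for Cl- (mV): positive values favour Cl- influx."""
    return vm - e_cl


E_REST = -70.0  # assumed resting potential; E_Cl is set equal to it here

# Excitatory input alone versus the same input with an open inhibitory conductance.
vm_control = steady_state_vm(10.0, E_REST, g_exc=2.0)
vm_shunted = steady_state_vm(10.0, E_REST, g_exc=2.0, g_inh=10.0, e_inh=E_REST)

print("Depolarization without inhibition (mV):", vm_control - E_REST)
print("Depolarization with shunting inhibition (mV):", vm_shunted - E_REST)
print("Cl- driving force during excitation (mV):", cl_driving_force(vm_control, E_REST))
```

In this sketch the inhibitory conductance alone leaves the cell at rest, yet it roughly halves the depolarization produced by the same excitatory conductance, because the added conductance lowers input resistance and the Cl− driving force grows as the membrane depolarizes.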

Pre-synaptic inhibition

Building on the relatively new concept of post-synaptic inhibition (at that time), Frank and Fuortes first proposed another form of inhibition, pre-synaptic inhibition, in 1957 (Fig. 1c). This idea was based on the observation that muscle afferent volleys reduced the size of monosynaptic excitatory potentials in motoneurons without any changes in the membrane potential or excitability of motoneurons, as would occur if it resulted from post-synaptic inhibition. They concluded that the reduced EPSP was due to reduced excitatory action of the Ia pre-synaptic fiber in the spinal cord. Following the work of Frank and Fuortes, Eccles studied pre-synaptic inhibition in the cat spinal cord and demonstrated that the Ia afferent EPSP depression which he observed was due to primary afferent depolarization (PAD). He also proposed a critical role for GABA and GABA receptors in this phenomenon (Eccles et al. 1961, 1963). This work demonstrated that GABAA antagonists, but not glycine receptor antagonists, reduced both pre-synaptic inhibition of spinal monosynaptic reflexes and PAD. While this principal role for GABAergic signalling is supported by a substantial body of evidence (see reviews, Rudomin and Schmidt 1999; Willis 1999; Rudomin 2009), it is important to acknowledge work that suggests GABAB receptors may also play a role in pre-synaptic inhibition in the spinal cord (Stuart and Redman 1992). Furthermore, roles for serotonin, dopamine, noradrenaline, and acetylcholine have also been proposed (Hochman et al. 2010; Garcia-Ramirez et al. 2014). This work remains somewhat controversial and needs further study; however, we are limited by the techniques available for the study of functional pre-synaptic inhibition.

Identifying the mechanistic link between PAD and decreased transmitter release in primary afferents (Clements et al. 1987) has proven technically challenging, because the small axo-axonic structures responsible are not readily amenable to intracellular recordings. It has, however, been established that primary afferent neurons exhibit relatively high expression of the NKCC1 transporter and low expression of KCC2 (Fig. 1c) (Alvarez-Leefmans et al. 2001; Kanaka et al. 2001; Price et al. 2006; Mao et al. 2012). Consequently, primary afferent neurons maintain a high intracellular Cl− concentration and a Cl− equilibrium potential of approximately −30 mV versus ~−70 mV in central neurons (Rocha-Gonzalez et al. 2008). Therefore, opening of Cl− channels, and specifically GABAA receptors, allows for the efflux of Cl− and PAD. A number of mechanisms have been proposed to explain how PAD produces pre-synaptic inhibition. For example, PAD may cause inactivation of voltage-dependent Na+ and Ca2+ channels (Graham and Redman 1994), as well as ‘shunting’ effects that lead to the disruption of propagating action potentials (APs) along the afferent fiber toward their central terminal (Segev 1990). Such ‘shunting’ has been shown to affect AP propagation in afferents within the brainstem (Verdier et al. 2003). PAD inactivation of Ca2+ channels may also reduce pre-synaptic calcium influx, and thus impair transmitter release from afferent terminals (Thanawala and Regehr 2013). Importantly, the time-course of pre-synaptic inhibition (300–400 ms) is much longer than that of post-synaptic inhibition (10–30 ms) (Eccles et al. 1962). Thus, despite opposing effects on membrane potential, both post-synaptic and pre-synaptic inhibition provide powerful mechanisms to regulate and gate sensory signalling in the spinal cord (Fig. 1c).
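The link between intracellular Cl− concentration, the Cl− equilibrium potential, and the direction of Cl− flux follows from the Nernst equation. The sketch below illustrates this for the two equilibrium potentials quoted above (about −30 mV and −70 mV). The extracellular Cl− concentration (130 mM), temperature (37 °C), resting potential (−65 mV), and all function names are assumptions introduced here for illustration, not values taken from the cited studies.

```python
import math

R = 8.314        # gas constant, J/(mol*K)
F = 96485.0      # Faraday constant, C/mol
T_BODY = 310.15  # assumed temperature: 37 degrees C in kelvin


def nernst_cl_mv(cl_in_mm, cl_out_mm, temp_k=T_BODY):
    """Cl- equilibrium potential (mV); valence -1 gives ln([Cl]in / [Cl]out)."""
    return 1000.0 * (R * temp_k / F) * math.log(cl_in_mm / cl_out_mm)


def cl_in_for_potential(e_cl_mv, cl_out_mm, temp_k=T_BODY):
    """Intracellular Cl- (mM) implied by a given equilibrium potential."""
    return cl_out_mm * math.exp((e_cl_mv / 1000.0) * F / (R * temp_k))


def cl_flux_direction(vm_mv, e_cl_mv):
    """Net Cl- movement when Cl- channels open at membrane potential vm_mv."""
    if vm_mv > e_cl_mv:
        return "influx (hyperpolarizing)"
    if vm_mv < e_cl_mv:
        return "efflux (depolarizing)"
    return "no net flux"


CL_OUT = 130.0   # assumed extracellular Cl-, mM
V_REST = -65.0   # assumed resting potential, mV

for label, e_cl in [("central neuron", -70.0), ("primary afferent", -30.0)]:
    cl_in = cl_in_for_potential(e_cl, CL_OUT)
    print(f"{label}: E_Cl = {e_cl} mV, implied [Cl-]i = {cl_in:.1f} mM, "
          f"{cl_flux_direction(V_REST, e_cl)}")
```

Under these assumptions, the afferent terminal needs a several-fold higher intracellular Cl− concentration than a central neuron to sit at −30 mV, and opening GABAA receptors at rest produces Cl− efflux and depolarization in the afferent but Cl− influx and hyperpolarization in the central neuron.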

Experimentally reduced inhibition evokes pain

It is well accepted that the balance between excitation and inhibition in the spinal dorsal horn is essential for the maintenance of ‘normal’ sensory function, and numerous studies have shown that pain hypersensitivity is often associated with reduced ‘inhibitory tone’ within the spinal dorsal horn. Early work applying spinal GABAA or glycine receptor antagonists found that blocking either receptor induces hypersensitivity (allodynia), exaggerated nociceptive responses (hyperalgesia), and spontaneous pain behaviours (Beyer et al. 1985; Yaksh 1989; Sivilotti and Woolf 1994; Sherman and Loomis 1995; Loomis et al. 2001). Though some GABA/glycinergic synapses within the SDH arise from descending inputs (Antal et al. 1996; Francois 2017), the majority are formed by local interneuron axons. Thus, loss of function in these inputs is viewed as the most likely cause of the above behavioural changes. More recent work has shown that ablating or silencing inhibitory interneurons in the spinal dorsal horn leads to thermal and mechanical hypersensitivity, as well as spontaneous aversive behaviour (Foster et al. 2015; Koga et al. 2017). Ablating glycinergic neurons with a GlyT2:Cre line, Foster et al. (2015) showed removal of these neurons results in mechanical, heat, and cold hypersensitivity, spontaneous pain behaviours, and an increase in neuronal activation as measured by c-fos labeling. Alternatively, Koga et al. (2017) targeted GABAergic neurons using a VGAT:Cre mouse line, demonstrating that hM4Di-mediated silencing of the GABAergic population also results in spontaneous pain behaviours such as licking, biting, flinching, and increased neuronal activation as shown by c-fos labeling. Depending on the inhibitory population targeted, mice have also been shown to engage in excessive scratching and biting. 
This leads to hair loss in affected dermatomes, an observation thought to be similar to the ‘faulty gate’ proposal for spinal itch processing circuits that produces pathological spontaneous itching (Ross et al. 2010).

The dorsal horn of the spinal cord maintains a somatotopic arrangement and modular architecture that defines body and modality representation within discrete circuits. This is critical for a normally organised sensory experience, and functional borders between these circuits can be ‘blurred’ by compromised or reduced inhibition. For example, using calcium imaging in spinal cord slices, Ruscheweyh and Sandkuhler (2005) showed that under normal conditions, electrical stimulation of primary afferents produces ‘confined’ excitation within the dorsal horn, as would be expected from the distinct termination patterns of these afferents. However, when inhibition was blocked with GABA and glycine antagonists, the same stimulation caused widespread excitation and violated the modality-specific borders in the dorsal horn. Under these conditions, innocuous (non-nociceptive) signalling can gain the ability to excite pain-specific circuits and vice versa (Baba et al. 2003; Torsney and MacDermott 2006; Keller et al. 2007); for review, see Sandkuhler (2009). These observations emphasise that spinal inhibitory interneurons play critical roles in a mechanical allodynia network, forming the ‘gates’ that prevent incoming low-threshold fiber signals from activating nociceptive networks. The actions of this network have also been highlighted by Torsney and MacDermott (2006) in their recordings from putative projection neurons in LI (the neurons responsible for relaying nociceptive information to the brain, marked T in Fig. 1a). Under normal conditions, projection neurons receive largely monosynaptic inputs from Aδ and C-fiber nociceptive afferents. However, pharmacological block of inhibition (with bicuculline and strychnine), as in the calcium imaging studies above, resulted in additional polysynaptic input from large myelinated afferents. 
Together, these findings strongly support the existence of polysynaptic pathways between the deep (non-noxious) and superficial (noxious) dorsal horn that are usually silenced by inhibition. The capacity of nerve injury or pathological states to similarly compromise this inhibitory gating remains a major hypothesis for allodynia in neuropathic pain.

Mechanisms underlying reduced inhibitory efficacy in pain

The literature covered above demonstrates the pathological effects of compromised inhibition in the dorsal horn. Therefore, understanding how chronic pain impacts inhibition in this region is likely to uncover promising targets for future treatments. The existing evidence suggests the reduced inhibition associated with neuropathic pain can arise from mechanisms that include: decreased GABA release (Lever et al. 2003); downregulation of GABA; reduction in the activity of glutamic acid decarboxylase (GAD), an enzyme that produces GABA; altered pre- and post-synaptic GABA receptor function (Castro-Lopes et al. 1993; Ibuki et al. 1997; Eaton et al. 1998; Fukuoka et al. 1998); inhibition of glycine receptor function (Zeilhofer 2005); reduced afferent input to inhibitory neurons (Kohno et al. 2003; Polgar and Todd 2008); or even a loss/death of GABAergic interneurons (Moore et al. 2002; Scholz et al. 2005). The latter mechanism remains controversial, as a number of studies have cast doubt on neuronal loss as a cause of inhibitory dysfunction (Polgar et al. 2003, 2004; Polgar and Todd 2008). Regardless of mechanism, changes in inhibitory tone are thought to underlie the hyperalgesia and allodynia that characterize chronic pain. Most work examining these changes has focused on post-synaptic inhibition; however, many of the changes influencing post-synaptic mechanisms could also influence the efficacy of primary afferent fiber synapses via pre-synaptic inhibition. Below, we discuss changes to both post- and pre-synaptic inhibition in neuropathic pain models and assess how these changes are likely to influence nociceptive dorsal horn circuits.

Pharmacology

A number of studies have examined the effect of manipulating GABA/glycinergic inhibition in neuropathic pain models. Enhancing inhibition by application of GABAA/B receptor agonists has been shown to reverse hyperalgesia and allodynia in various pain models (Fig. 2, panel 2) including CCI (Hwang and Yaksh 1997; Eaton et al. 1999; Malan et al. 2002), SNI (Rode et al. 2005), PNI (Patel et al. 2001), and sciatic nerve crush injury (Naik et al. 2008). This work suggests that GABAA/B receptors modulate nociceptive transmission and inhibit the neural circuits that enable neuropathic pain. Interestingly, exactly when increased inhibition is delivered appears to be critical. Eaton et al. (1999) demonstrated that a single injection of GABA can reverse thermal and tactile hypersensitivity following CCI, if provided within the first 2 weeks after injury. However, injections made after 2 weeks were not effective. This finding suggests that altered GABAergic inhibition is either most important, or most readily manipulated, during the induction rather than the maintenance phase of neuropathic pain.

Impact of altered inhibition on spinal sensory processing and pain behaviour. Upper panels (1 & 2, red) summarise outcomes when inhibition is altered experimentally (1) and in nerve injury neuropathic pain models (2). Under experimental conditions, inhibition can be compromised by GABAA and glycine receptor antagonists or via genetic disruption of inhibitory activity [i.e., ablating inhibitory interneurons or suppressing their activity with designer receptors exclusively activated by designer drugs (DREADDs)]. Both interventions effectively enhance excitatory signalling in the dorsal horn and drive pain-related behaviours. The lower panel (3, blue) summarises the outcomes of enhancing dorsal horn inhibition in neuropathic pain models. Increasing inhibition with GABA receptor agonists and modulators, glycine transporter inhibitors, or transplanting GABAergic interneurons reduces pain behaviours and the aberrant excitatory signalling associated with neuropathic pain.

Further examining the mechanisms of GABAergic analgesia, Knabl et al. (2008) used GABAA receptor point-mutated knock-in mice to study the role of specific GABAA receptor subunits (i.e., α2 and α3). Diazepam, a positive allosteric modulator of the GABAA receptor, reduced CCI-induced heat hyperalgesia, cold allodynia, and mechanical sensitization. While almost identical anti-hyperalgesic effects were observed in mice carrying insensitive α1-subunits, those carrying insensitive α2-subunits showed a pronounced reduction in diazepam-induced anti-hyperalgesia. Likewise, animals with insensitive α3- and α5-subunits also showed reductions, though smaller, in diazepam-induced anti-hyperalgesia. Interestingly, the facilitation of GABAergic currents by diazepam was completely abolished in nociceptive DRG neurons in α2-insensitive mice, whereas there was no alteration in α1-, α3-, or α5-insensitive mice. When LI–II dorsal horn neurons were examined, facilitation of GABAergic currents by diazepam was reduced in α2- and α3-insensitive mice, but not in α1- or α5-insensitive mice. Taken together, these data suggest that α2- and/or α3-containing GABAA receptors are critical to GABA’s analgesic effects within the dorsal horn. The contributions of these subunits to pre- and post-synaptic inhibition, however, are likely to differ, with the α2-subunit mediating primarily pre-synaptic effects, and the α3-subunit being important for GABA’s post-synaptic efficacy. These differential mechanisms suggest that more work is needed to differentiate the analgesic effects of GABA-mediated pre- and post-synaptic inhibition. Finally, this work shows that both pre-synaptic and post-synaptic inhibition contribute to GABAergic analgesia in the CCI model. However, the degree to which each mechanism contributes in other neuropathic pain models, such as SNI, is not clear.

Enhancing glycinergic inhibition as a therapeutic has also shown promise in animal models of neuropathic pain (Fig. 2, panel 3). The most common approach here is to enhance glycinergic transmission via inhibition of glycine transporters 1 and 2 (GlyT1/2). Morita et al. (2008) demonstrated that i.v. or intrathecal administration of GlyT1/2 inhibitors, or knockdown of spinal GlyTs by small interfering RNA reduces allodynia in PNI mice. Furthermore, this study demonstrated that these effects were antagonised by administration of strychnine or siRNA GlyRα3β knockdown. This confirms a GlyR-mediated mechanism of action. Similarly, other studies have demonstrated that GlyT1/2 inhibitors reduce mechanical allodynia and thermal hyperalgesia in CCI models of neuropathic pain (Hermanns et al. 2008; Barthel et al. 2014; Werdehausen et al. 2015). Interestingly, in one study, thermal hyperalgesia was only attenuated after 12 days of treatment, whereas mechanical allodynia was attenuated with just 4 days of treatment (Barthel et al. 2014). The variable timeframe suggests different modality-specific efficacy, which could be due to: distinct circuits underlying the generation of hyperalgesia and allodynia; or differential glycinergic control of circuits producing thermal hyperalgesia and mechanical allodynia.

The circuits underlying sensory processing within the dorsal horn are remarkably complex and our current understanding is incomplete. This makes it difficult to predict the outcomes of new therapeutic manipulations. A good example is our group’s finding that tonic glycinergic inhibition significantly reduces the excitability of PV+INs (inhibitory interneurons that express parvalbumin) (Gradwell et al. 2017). Thus, enhancing glycinergic transmission to provide analgesia, a strategy the literature suggests would be effective, also has the potential to suppress the activity of an important population of inhibitory interneurons that have an established role in maintaining the separation of touch and noxious signals in the dorsal horn (Petitjean et al. 2015). Despite this, the experimental work discussed above still suggests glycine transporter blockade produces analgesia and the other actions of this manipulation must overwhelm the likely silencing of PV+INs. We, therefore, believe that comprehensive examination of the circuits underlying neuronal function will allow us to better appreciate how sensory processing occurs within the dorsal horn, and how we can intervene when this processing is disrupted.

An alternative approach to supplementing inhibition, spinal transplant of GABAergic neurons, is also showing promise as a potential neuropathic pain therapy (Fig. 2, panel 3). This was first demonstrated by Mukhida et al. (2007), who transplanted mouse GABAergic cells or human neural precursor cells differentiated into a GABAergic phenotype into the spinal cord 10 days after SNL. The transplants resulted in increased paw withdrawal threshold in response to mechanical stimuli. Another study transplanted immature mouse telencephalic GABAergic precursor cells into the spinal cord of mice with SNI (Braz et al. 2012). The transplanted cells successfully integrated within the dorsal horn and formed local connections with primary afferent terminals and dorsal horn neurons. Mice receiving transplants showed a complete reversal of mechanical hypersensitivity induced by peripheral nerve injury. Similar findings were produced by transplant of a subcloned derivative of the human NT2 cell line. The cells differentiated to secrete GABA and glycine, 2 weeks after CCI, and mechanical allodynia and thermal hyperalgesia were reduced (Vaysse et al. 2011). Intraspinal transplant of GABAergic neural progenitor cells also reduces mechanical and thermal hyperalgesia, as well as cold allodynia for 1–3 weeks following CCI. The Vaysse et al. (2011) study examined potential mechanisms by recording from LIII–V neurons. They showed that the transplanted GABAergic cells did not influence the potentiation of Aβ fiber responses observed with CCI, but reduced wind-up and post-discharge responses. As with the above pharmacological studies, the timing of GABAergic cell transplantation appears critical. This temporal consideration was highlighted by the reversal of thermal hyperalgesia and tactile allodynia with transplanted neuronal cells bioengineered to synthesise GABA. 
This reversal only occurred if implantation was performed within 1 or 2 weeks following the CCI intervention, and appears to support a greater importance of dysfunctional inhibition in the induction, rather than the maintenance, of neuropathic pain.

Because enhancing inhibition in neuropathic pain states causes analgesia, it is not surprising that GABA/glycine receptor-antagonist administration is pro-nociceptive. Yamamoto and Yaksh (1993) showed that intrathecal strychnine or bicuculline, following CCI, enhanced thermal hyperalgesia. In contrast, Hwang and Yaksh (1997) demonstrated that injection of GABAA/B antagonists had little effect on mechanical thresholds following SNL, but reversed the anti-allodynic effects of the respective agonists. This latter finding suggests that GABAergic inhibition has lost efficacy, or that the relevant circuits are overwhelmed because maximal excitation has already been reached. Studies have also used GABA/glycine receptor antagonists to investigate the mechanisms underlying neuropathic pain. Under normal conditions, bicuculline application causes repetitive long-lasting polysynaptic EPSCs in LI–II dorsal horn neurons evoked by dorsal root stimulation (Baba et al. 2003). This effect was most notable at Aβ and Aδ stimulation intensities. In contrast, the extent of bicuculline-mediated augmentation was less pronounced in SNI rats, suggesting that GABAergic inhibition was already compromised. Whether the observed increase in polysynaptic inputs arose from reduced inhibition or enhanced excitation was not addressed, but other work suggests that reduced inhibition is most likely (Muller et al. 2003; Harvey et al. 2004).

Somewhat surprisingly, evidence also exists for enhanced GABAergic inhibition following nerve injury (Kontinen et al. 2001). In extracellular recordings from wide dynamic range (WDR) neurons, bicuculline facilitated Aδ fiber-evoked activity in nerve ligated and non-ligated rats, but increased C-fiber-evoked activity in the nerve ligation group only. Bicuculline had no effect on Aβ fiber-mediated activity in any group, and strychnine had no effect on stimulation responses from Aβ- or C-fibers in the ligated or non-ligated conditions. These data suggest that GABAergic tone is increased in neuropathic rats, possibly as compensation to overcome excessive excitation (Kontinen et al. 2001). This finding implies that it is not necessarily a reduction in inhibition that drives neuropathic pain, but rather increased excitability within the dorsal horn that can no longer be restrained by inhibition. Thus, for WDR neurons, attempts at increasing inhibition with GABA/Gly receptor agonists, reducing transporter activity, or using GABAergic cell transplant might only have modest effects on reducing overall levels of excitability in the spinal cord.

The final outcome of the strategies listed above will also depend on the exact site where inhibition occurs (pre- and/or post-synaptic), and each site’s involvement in the different types of neuropathic pain as well as during the development and maintenance phases. A more precise understanding of how inhibitory mechanisms change under neuropathic conditions will provide critical insight into how we might control them and avoid unwanted, or unpredictable, off-target effects.

To die or not to die: nerve injury and inhibitory-cell death

One of the most controversial mechanisms proposed to underlie neuropathic pain is a reduction in inhibition via selective death of GABAergic neurons. Early work suggested that CCI induced a loss of neurons that were immunoreactive for GABA (i.e., GABA-IR neurons) (Ibuki et al. 1997; Eaton et al. 1998). These studies reported that the reduction could be so dramatic that no GABAergic neurons remained 2 weeks post-injury. There was also a reduction in immunoreactivity for the two enzymes/isoforms involved in GABA synthesis. Importantly, these enzymes, GAD65 and GAD67, are differentially located in the spinal cord (Hughes et al. 2005; Betley et al. 2009). GAD65 is expressed exclusively in terminals pre-synaptic to primary afferents, and thus serves as a marker for pre-synaptic inhibitory inputs. The more ubiquitous GAD67 is a cytosolic enzyme that is widely distributed throughout the soma, dendrites, and axons of GABAergic neurons. GAD67, therefore, identifies GABAergic neurons and their processes.

Others have also reported a reduction in GABAergic neuron numbers in neuropathic pain models (Maione et al. 2002; de Novellis et al. 2004). Castro-Lopes et al. (1993) described a reduction, though less dramatic, in the number of GABA-IR neurons in LI–III following sciatic nerve transection. Vaysse et al. (2011) also demonstrated a decrease in GABA-IR following CCI, and Lee et al. (2009) showed that partial or complete lesion of the tibial nerve results in reduced GABA-IR neurons. However, in the Lee study, there was no evidence of cell death, as shown by the lack of co-localization between GABA-IR and immunoreactivity for the cell death marker, caspase 3. The reduced immunoreactivity, coupled with no indication of cell death, implies that reduced GABA synthesis, rather than cell death, underlies reports of GABA-IR cell loss.

Using both CCI and SNI models, Moore et al. (2002) reported a 20–40% reduction in the levels of GAD65 following nerve injury, while GAD67 was unaffected. This finding agrees with Braz et al. (2012), who reported a decrease in spinal cord levels of GAD65, but not GAD67 mRNA ipsilateral to a spinal nerve injury. Interestingly, others report a reduction in GAD67 expression, with no change to GAD65 following CCI (Vaysse et al. 2011) or dorsal root rhizotomy (Kelly et al. 1973). Lorenzo et al. (2014) performed a quantitative analysis on the number of GAD65 terminals within LI–II following CCI, and showed that the density of GAD65 terminals was reduced throughout LI–II and was maximal at 3–4 weeks following CCI. The time-course of changes correlated with the loss of Isolectin B4 (IB4) labeling, which was used to mark the area of the SDH affected by the CCI. It also matched the time-course of altered thresholds to mechanical and thermal stimuli. It is possible that independent of a change in the number of GABAergic neurons, these cells may retract or extend their processes, a mechanism demonstrated in hippocampal neurons following stress (McEwen 1999). Another possible interpretation of these studies is that loss of GAD does not necessarily reflect loss of inhibitory terminals, but instead downregulation of the GAD protein. Regardless, reduced GAD expression should alter the ability of terminals to produce GABA, and thus limit their inhibitory function.

In relation to the study by Lorenzo et al. (2014), pre-synaptic GAD65-IR terminals may be lost after glomerular IB4+ central terminals degenerate, an interpretation supported by the correlated loss of IB4+ and GAD65+ terminals. To help determine whether reduced GAD reflects cell death, TUNEL-positive profiles can be assessed to mark apoptosis (Whiteside and Munglani 2001). Using this approach, Moore et al. (2002) detected apoptotic neurons within the SDH following SNI. However, the great majority of TUNEL-positive cells were not NeuN-positive, indicating significant death of non-neuronal populations. This finding is at odds with the reduction of GABAergic neurons observed in the previously discussed studies, but is in line with those of Lee et al. (2009) who showed poor co-localization between GABA-IR and the cell death marker, caspase 3. Considered together, this work speaks less to whether GABAergic neurons do or do not die, and more to changes in the transcription/translation of GAD65/67, as well as a potential reduction in GABA-IR. Such changes would make labeling with these markers weaker and potentially below the threshold for detection, which could lead to an erroneous conclusion of cell death.

Work employing stereological cell counting methods has provided important evidence to challenge the idea of GABAergic cell death in neuropathic pain states. Studies using this approach have reported no loss of neurons following CCI (Polgar et al. 2004), and no change in GABA or glycinergic neuron numbers (Polgar et al. 2003), even though the animals develop thermal hyperalgesia. Furthermore, following SNI, the overall number of neurons in LI–III does not change, even though the animals develop tactile allodynia (Polgar et al. 2005). Consistent with the work of Moore et al. (2002) and Lee et al. (2009), this study also showed, using two markers of apoptosis (TUNEL and cleaved caspase 3), that although cell death did occur 7 days following SNI, the great majority of the dead cells were non-neuronal. In line with these findings, Hermanns et al. (2009) used a transgenic mouse line to express GFP in neurons expressing the glycine transporter 2 gene and found no reduction in glycinergic neurons following CCI. The number of GFP+ neurons was assessed using the physical dissector method, and quantitative comparisons revealed no differences between CCI and sham groups. CCI mice developed thermal hyperalgesia and mechanical allodynia, suggesting that the loss of spinal glycinergic neurons is not required for the development of neuropathic pain. Recent work by Petitjean et al. (2015) as well as our group (Boyle et al. 2019) has also investigated inhibitory interneurons, as marked by parvalbumin (PV), following SNI. Both studies reported no loss of inhibitory PV+ cells following SNI, despite the development of neuropathic pain behaviours. Though it remains controversial whether death of inhibitory interneurons occurs in neuropathic pain models, these findings demonstrate, at the very least, that neuronal death is not required for the development of neuropathic pain.

Despite the care employed in the above studies, work continues to raise questions about the potential for nerve injury to cause apoptosis in dorsal horn neurons. Using a stereological analysis, Scholz et al. (2005) reported that neuron number in LI–III was reduced by 22% after SNI. They also used in situ hybridisation for GAD67 mRNA and proposed that GABAergic neuron numbers in LI–III decreased by ~25% within 4 weeks following SNI. Electrophysiological analysis in this study showed that afferent-evoked inhibitory post-synaptic currents were reduced. Importantly, these changes to inhibition were not due to reduced GABA sensitivity, because the GABAAR agonist muscimol enhanced outward currents in LII neurons following SNI and never evoked inward currents. Note that, in this study, GABA-mediated currents are outward, because the authors used a Cs2SO4-based internal solution in their recording pipette and held membrane potential at 0 mV. Blocking afferent activity in the nerve proximal to the lesion reduced nerve injury-evoked apoptosis. Furthermore, continuous intrathecal administration of a caspase inhibitor (zVAD) protected against cell loss and changes to inhibitory signalling. Finally, zVAD treatment for 4 weeks following SNI attenuated the development of mechanical allodynia, hyperalgesia, and cold allodynia. These interventions led Scholz et al. to conclude that loss of GABAergic neurons underlies reduced inhibition in nerve-lesion pain models. This, however, disagrees with results from the anatomical/counting studies described above (Polgar et al. 2003, 2004; Hermanns et al. 2009). One explanation is that cell death occurs slowly (over several weeks) after the injury and this may have masked the magnitude of cell loss in cell counts made within the first 2 weeks after injury.
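
The outward polarity of the GABA-mediated currents noted above follows from the chloride driving force. As a hedged illustration only (the ion concentrations used by Scholz et al. are not given here, so the symbols are generic, and chloride is treated as the sole permeant ion, which is a simplification), the receptor current and the chloride reversal potential can be written as:

```latex
I_{\mathrm{GABA}} = g_{\mathrm{GABA}}\,\bigl(V_{\mathrm{hold}} - E_{\mathrm{Cl}}\bigr),
\qquad
E_{\mathrm{Cl}} = -\frac{RT}{F}\,\ln\frac{[\mathrm{Cl}^-]_{o}}{[\mathrm{Cl}^-]_{i}}
```

With a sulphate-based internal solution, intracellular chloride is low, so E_Cl is strongly negative. Holding the membrane at 0 mV therefore gives V_hold − E_Cl > 0, which corresponds to an outward current under the usual sign convention.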

Though timing may explain some discrepancies in the literature, one study performed 4 weeks following SNI still suggested that the number of neurons in LI–III does not change, at least at this time point (Polgar et al. 2005). A caveat to this work was that markers for cell death were examined 1 week following surgery; however, the stable number of neurons at the endpoint for analysis implied no cell death. Adding an additional twist to the debate on nerve injury-related cell death, Coggeshall et al. (2001) suggested that cell loss is activity-dependent. In their work, sciatic nerve transection did not result in neuronal loss; however, activation of A-fibers following the injury caused substantial cell death throughout LI–III. This requirement for afferent activation agrees with the finding of Scholz et al. (2005) that blocking primary afferent activity reduced nerve injury evoked apoptosis. Further support for the role of activity in initiating cell death comes from reported SNI-mediated decreases in dorsal horn neuron number. Inquimbert et al. (2018) showed conditional deletion of Grin1, the essential subunit of N-methyl-d-aspartate-type (NMDA) glutamate receptors, markedly reduced apoptotic profiles. Furthermore, using a GAD1 transgenic line, the same authors demonstrated the number of GABAergic neurons was reduced in LI–II at 4 weeks following SNI, whereas there was no change in LIII–IV. Despite the proposed loss of GABAergic neurons, the expression of GAD1, GAD2, GlyT2, and the vesicular inhibitory amino acid transporter (Viaat) was stable, and this was proposed to be due to upregulation of these proteins in surviving neurons. Importantly, Grin1 deletion was also shown to alleviate mechanical and cold allodynia, and constitutive deletion of Bax, a key protein involved in apoptosis, protected against cell death and hypersensitivity to mechanical or cold stimulation. Finally, Yowtak et al. 
(2013) reported a reduction in GABAergic neuron numbers in the lateral parts of LI–II on the ipsilateral side of SNL mice. Neuron numbers completely recovered in animals treated with an ROS scavenger and mechanical allodynia was slightly reduced. Of note, this work used a GAD67:eGFP mouse line to label GABAergic neurons. This limited the reliance on immunolabeling and reduced the chance of ‘missing’ cells because of nerve injury induced changes to marker expression (i.e., GABA or GAD). However, a limitation of this approach is that not all GABAergic neurons are marked in the GAD67:eGFP mouse line—only about a third of GABAergic neurons are labeled. Furthermore, ~ 15% of the eGFP + neurons within LII are not immunoreactive for GABA, and, therefore, likely to be excitatory neurons (Heinke et al. 2004). These caveats reinforce the caution that needs to be applied when interpreting transgenic protein-labeled tissues (Graham and Hughes 2019).

In summary, the issue of whether or not neuronal cell death occurs in neuropathic pain states is unresolved. The clear discrepancies in findings are difficult to reconcile, though they most likely relate to experimental issues such as animal model, markers, time-points, afferent activity, and counting methods. The importance of the issue as well as the inconsistency of conclusions undoubtedly warrants further investigation. What must be determined first, however, are the methods most appropriate to examine the question. The ability of anti-apoptotic substances and cell death gene deletion to ameliorate the behavioural consequences of neuropathic pain is promising, regardless of the exact underlying mechanism. Considering multiple studies report death of non-neuronal cells (Moore et al. 2002; Polgar et al. 2005; Lee et al. 2009), it is likely that glial cells die following neuropathic injury. Thus, when anti-nociceptive effects are observed, they may actually result from inhibition of apoptosis and its protection of non-neuronal populations. This fits with now well-accepted views that neuropathic pain is not exclusively caused by neuronal mechanisms, but also involves immune cells and neuronal-glial interactions; for review, see (Liu and Yuan 2014; Machelska and Celik 2016; Wei et al. 2019). On balance, although GABA/GAD-IR/mRNA may be reduced in neuropathic states, specific inhibitory interneuron death appears unlikely, but further work is required to resolve this important debate.

To fire or not to fire: changes in intrinsic properties

Without any cell death, the intrinsic excitability of inhibitory interneurons will also influence their capacity to maintain inhibition at appropriate levels within dorsal horn sensory circuits. For example, spinal inhibition would be compromised if membrane excitability decreased or the features of action potential discharge switched to low activity patterns in inhibitory neurons. Pursuing this hypothesis, Schoffnegger et al. (2006) used a transgenic line where inhibitory interneurons were marked by GFP-linked GAD67 expression. They recorded from LII neurons in CCI and sham-operated mice. The passive and active membrane properties of GAD67 neurons were similar in both groups. There was also no difference in the incidence of different action potential discharge patterns, with a similar distribution of initial bursting (42% vs. 46%), tonic firing (24% vs. 16%), and gap firing (29% vs. 31%) discharge for sham-operated and neuropathic mice, respectively. The only differences detected were a higher membrane resistance, and lower cell capacitance in one cell class (gap firing GAD67+ neurons) in neuropathic mice. The same group also examined the membrane properties of LIII GABAergic neurons following CCI using the same GAD67 line (Gassner et al. 2013). As with their previous study, passive membrane properties of GABAergic interneurons were similar in CCI and naïve mice. In addition, LIII GAD67 neurons displayed similar proportions of AP discharge patterns: tonic firing (35% vs. 37%), gap firing (33% vs. 26%), initial bursting (13% vs. 14%), and delayed firing (10% vs. 16%) discharge in naïve and CCI mice, respectively. Together, these findings suggest that the intrinsic properties of inhibitory interneurons remain stable following neuropathic injury. However, as noted above, the transgenic mouse line employed in these studies only captures one-third of the GABAergic neurons in this region (Heinke et al. 2004).
This limitation may mean that these conclusions do not generalise to all GABAergic neurons.

Another study using the GAD67 mouse line recorded from GABAergic neurons located in LI–II following spinal nerve ligation (Yowtak et al. 2013). Recordings from sham mice exhibited mostly (7/8) tonic-firing discharge patterns, with only 1 neuron showing delayed firing. In contrast, of the 26 GABAergic neurons recorded from SNL mice, 14 exhibited tonic-firing discharge, 11 delayed, and 1 transient/initial bursting. This implies SNL can change neuronal excitability. Some caution should be taken in interpreting these results as only a few recordings were performed in each group and passive membrane properties of GABAergic neurons were not examined. Regardless, when specifically examining neurons exhibiting tonic firing, this work showed that application of a ROS scavenger was capable of enhancing spike number only in SNL mice, suggesting that a nerve injury-related rise in ROS can change GABAergic neuron excitability. Unfortunately, these experiments did not assess whether ROS scavengers altered action potential discharge in neurons exhibiting other types of discharge (delayed or initial bursting). Regardless, we have shown that it is possible for neurons to switch their firing pattern, in that case following blockade of tonic glycine currents (Gradwell et al. 2017).
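
The caution about small sample sizes can be made concrete with a short calculation. The sketch below is not an analysis reported by Yowtak et al. (2013); it simply takes the firing-pattern counts quoted above, pools delayed and initial bursting cells into a single "non-tonic" category (an assumption made here for illustration), and applies a two-sided Fisher exact test. The function name and the pooling are hypothetical choices.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    lo, hi = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Sum over all tables that are no more likely than the observed one
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-9))

# Counts as quoted in the text (assumption: non-tonic = delayed + bursting)
sham_tonic, sham_other = 7, 1    # 8 sham neurons
snl_tonic, snl_other = 14, 12    # 26 SNL neurons

p_value = fisher_exact_two_sided(sham_tonic, sham_other, snl_tonic, snl_other)
print(f"tonic fraction, sham: {sham_tonic / 8:.2f}; SNL: {snl_tonic / 26:.2f}")
print(f"two-sided Fisher exact p = {p_value:.3f}")
```

A calculation of this kind indicates how much weight an apparent shift in discharge pattern can bear when one group contains only eight cells. It does not replace the statistics reported in the original study.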

In addition to sampling limitations, the treatment of presumptive inhibitory populations as a single group is problematic. It is widely accepted that several subclasses of GABAergic interneurons exist (Boyle et al. 2017) and this may obscure significant changes to intrinsic excitability in one of these subpopulations. On this point, we have assessed the intrinsic excitability of a subpopulation of inhibitory interneurons expressing parvalbumin (PV) following SNI (Boyle et al. 2019). The properties of PV+ neurons were recorded in naïve mice, as well as from the contralateral and ipsilateral dorsal horn of SNI mice. No differences were detected in resting membrane potential, membrane resistance, cell capacitance, incidence of discharge pattern, rheobase current, AP threshold, width, and amplitude, AHP amplitude, or the incidence and amplitude of hyperpolarisation activated cation currents (Ih). In the SNI animals, there was, however, an increase in the current required to elicit the tonic-firing discharge pattern, as well as a decrease in action potential discharge frequency, specifically in recordings from the ipsilateral side. These findings suggest that PV+INs are more difficult to recruit and have reduced output upon activation following SNI.

The overall stability of PV+IN intrinsic membrane properties, in nerve injury models, is in agreement with the Schoffnegger et al. (2006) and Gassner et al. (2013) studies on GAD67:eGFP neurons. In contrast, the observed reduction in PV+IN excitability, expressed as increased tonic rheobase current and altered action potential frequency, was not tested for in these previous studies. Furthermore, the likely sampling of several GABAergic populations in that work, versus specific assessment of PV+INs, may have obscured such an effect in their data. Balasubramanyan et al. (2006) have also examined intrinsic properties in unidentified neurons within LI–II of CCI rats and reported no change in the relative incidence of action potential discharge responses, resting membrane potential, rheobase, or input resistance. Despite this, when CCI recordings were separated by action potential discharge pattern, those cells that exhibited tonic action potential discharge had increased cumulative latencies (i.e., reduced overall discharge), while those cells classed as phasic firing exhibited increased overall discharge. These findings are consistent with our work in mice on unidentified neurons in CCI models of neuropathic pain. Together, these data suggest that neuronal intrinsic excitability may change, albeit only subtly, in neuropathic pain states. As both studies identify tonic-firing cells as a more ‘plastic’ discharge type, these changes may be neuron specific. Thus, subsequent studies could examine the intrinsic excitability of distinct, labeled, inhibitory populations in nerve injury models.

Reduced GABA/Gly release

Even without cell death or reduced excitability, the amount of GABA/glycine released by inhibitory interneurons within the dorsal horn could directly impact inhibition. Indeed, extracellular GABA levels have been shown to be directly correlated with the development of allodynia. A study by Stiller et al. (1996) reported that partial constriction of the sciatic nerve resulted in reduced extracellular GABA levels in nerve-injured rats displaying allodynia, whereas levels were only modestly decreased in the non-allodynic rats with nerve injury. Similarly, using potassium stimulation to evoke neurotransmitter release, Lever et al. (2003) demonstrated that SNL rats have significantly reduced GABA levels in the dorsal horn. This was taken as evidence of reduced GABA release from inhibitory interneurons; however, changes to GABA reuptake are equally possible. VGAT1 is the transporter responsible for the reuptake of GABA, and is reduced following CCI (Miletic et al. 2003; Shih et al. 2008). Such reduction to VGAT1 is likely to reduce synaptic GABA content, and in turn, decrease GABAergic inhibition. In contrast to these studies, Somers and Clemente (2002) found no change in the synaptosomal content of GABA/glycine at 12 days following CCI, despite reduced mechanical and thermal pain thresholds. This is in line with the work by Polgar and Todd (2008) who demonstrated, using a quantitative immunogold method, that the level of GABA in inhibitory boutons within LI–II is unaffected by SNI. In contrast to studies identifying reduced VGAT1 (Miletic et al. 2003; Shih et al. 2008), Daemen et al. (2008) reported an upregulation of VGAT1 in the dorsal horn of rats 7 days following CCI, and a VGAT1 antagonist was able to reduce tactile and thermal hyperalgesia.
This work suggests that altered GABA availability contributes to neuropathic pain states, consistent with other work showing that VGAT1 selective inhibitors or VGAT1 knockout reduce nociceptive responses in thermal (tail-flick) and chemical (formalin and acetic acid) pain assays (Hu et al. 2003; Xu et al. 2008). Conversely, Hu et al. (2003) demonstrated that mice overexpressing VGAT1 display significant hyperalgesia in thermal and chemical assays. Taken together with previous studies describing spinal GABA depletion and associated pain behaviour, these depleted levels might reflect enhanced pre-synaptic reuptake via upregulated VGAT1. How the resulting enhanced synaptic GABA content could be pro-nociceptive is unclear, although reduced tonic GABAergic inhibition is one possible mechanism. Despite these findings, not all work supports decreased extracellular neurotransmitter levels, with a high-performance liquid chromatography study detecting increased GABA and glycine in the dorsal horn following CCI (Satoh and Omote 1996). Furthermore, treatment with the NMDA receptor antagonist, MK-801, prior to CCI abolished the elevated glycine and GABA and prevented the development of hyperalgesia. Thus, while most studies suggest that inhibitory neurotransmitter concentration is reduced under neuropathic conditions, there is evidence that this might not be uniform across all forms of neuropathic pain.

Direct assessment of inhibition, via electrophysiology, clearly shows that post-synaptic inhibition is reduced in neuropathic pain states. For example, a number of studies provide circuit-based evidence that reduced glycinergic inhibition contributes to the development of tactile allodynia. Much of this work has focussed on altered inhibition in a particular population of excitatory interneurons, identified by protein kinase C gamma (PKCγ) expression. These neurons are thought to form a link between tactile and nociceptive pathways (i.e., they relay touch signals into pain circuits) (Miraucourt et al. 2007). Paired recordings in spinal cord slices have shown PKCγ+ neurons that receive Aβ-fiber (touch) input are normally inhibited by glycinergic inputs (Lu et al. 2013). This prevents Aβ-fibers activating nociceptive pathways and causing pain. Following SNL, the amplitude of glycinergic inputs is reduced, failure rates are increased, and the connections switch from short-term potentiation to short-term depression. This reduction in inhibition opens or ‘un-gates’ the polysynaptic pathway for Aβ-fiber-mediated touch input to activate nociceptive pathways and produce allodynia.

Related work by Petitjean et al. (2015) extended the above findings and proposed that parvalbumin expressing inhibitory interneurons (PV+INs) also inhibit the PKCγ+ population, and that the number of these inhibitory contacts was reduced following nerve injury. Ablation experiments also show that removal of the PV+IN population is sufficient to produce mechanical allodynia, while chemogenetic activation of these cells alleviates mechanical hypersensitivity in nerve-injured mice. Taken together, this work suggests that PV+IN-mediated inhibition of the PKCγ+ population forms a critical ‘gate’ to touch-evoked pain and that these inputs are altered in chronic pain states. Exactly how this occurs is, however, not clear. In contrast to the findings of Petitjean et al. (2015), a recent study by our group (Boyle et al. 2019) failed to find evidence for significant loss of PV+IN inhibitory contacts onto the PKCγ+ population following SNI. Rather, we show that a different class of excitatory cells, vertical cells, also represent a polysynaptic pathway for touch signals to activate nociceptive circuits, and these cells are under strong PV+IN gating. A possible explanation for this discrepancy is the use of different nerve-damage models, with our work sparing the sural nerve, whereas Petitjean et al. (2015) spared the tibial nerve. Again, this implies that subtle differences in injury models have variable effects on dorsal horn circuitry. In addition, the Petitjean work only sampled PV+IN input on the soma of PKCγ+ neurons, whereas the majority of synaptic inputs arrive on dendrites.

Other electrophysiological studies support the idea that neuropathic states may differentially influence specific circuits within the dorsal horn. Recordings from LII neurons showed that fast excitatory transmission is unchanged following sciatic nerve transection, CCI, and SNI (Moore et al. 2002). In contrast, the same study showed that afferent-evoked IPSC incidence, amplitude, and duration were reduced following CCI and SNI, but not transection. Again, this highlights the importance of the exact injury models used to produce pain states. Furthermore, changes to inhibition appeared to be specific to GABAAR-mediated IPSCs. Nerve injury caused a slight reduction in GABAAR-mediated sIPSC frequency, but no change in GABAAR-mediated sIPSC or mIPSC amplitudes, as well as no change in GlyR-mediated sIPSC frequency or amplitude. A reduction in GABAAR-mediated IPSC frequency with no change in amplitude suggests that pre-synaptic GABA release was reduced following CCI and SNI. The same group reported similar findings in another study (Scholz et al. 2005), again recording afferent-evoked IPSCs in LII neurons, and showed that the number of neurons with detectable afferent-evoked IPSCs decreases by ~30% at 2 weeks after SNI. The peak amplitude and decay time constant were also reduced, suggesting a marked reduction in GABAergic transmission. Loss of inhibition was not due to reduced GABA sensitivity, as the GABAAR agonist muscimol evoked enhanced outward currents in LII neurons following SNI, and never evoked inward currents. Both of these studies suggest a specific reduction in GABAergic inhibition, a finding that is at odds with those that provide evidence for reduced glycinergic inhibition. In further support of reduced glycinergic inhibition following partial nerve ligation, Imlach et al. (2016) showed that LII radial interneurons receive reduced glycinergic, but not reduced GABAergic input.
This was detected as a reduction in the amplitude of the glycinergic component of evoked IPSCs, after stimulating within LIII–IV. Nerve injury also reduced the probability of glycine release and glycinergic spontaneous IPSC frequency, and increased spontaneous IPSC decay time constant. These changes in decay time constant were suggested to be due to an increase in GlyRα2 expression, as this form of the receptor has slower kinetics (Graham et al. 2006). Interestingly, no changes were observed in recordings from identified vertical or central/islet cells, again suggesting specificity within the circuits that are disrupted under neuropathic conditions. Reduced glycinergic sIPSC frequency is interpreted as reduced release probability in pre-synaptic terminals, whereas changes to decay time constant are generally considered to arise from changes in synapse location or post-synaptic receptor composition. Given changes to IPSCs were only observed in radial cells, this suggests radial cells alone alter their receptor expression, and release probability at glycinergic synapses is selectively altered only at the inputs to radial cells following nerve injury.

The different contributions of glycinergic versus GABAergic input in the studies of Imlach et al. (2016) and Moore et al. (2002) and Scholz et al. (2005) are potentially informative. One notable difference between these studies is that Imlach et al. (2016) used electrical stimulation in LIII–IV, whereas Moore et al. (2002) and Scholz et al. (2005) used dorsal root stimulation. Imlach et al. (2016) also reported that glycinergic IPSCs could not be elicited when more dorsal or ventral regions were stimulated. This is an interesting finding as glycinergic interneurons are distributed throughout the dorsal horn, yet they seemed to preferentially activate glycinergic interneurons. The dorsal root stimuli used by Moore et al. (2002) and Scholz et al. (2005) may have activated more GABAergic versus glycinergic neurons, and, therefore, allowed a more thorough investigation of the GABAergic neuron population. Regardless, each study strongly suggests that GABA/glycinergic inhibition is diminished following nerve injury. Any changes to IPSC amplitude, frequency, or decay time will influence the efficacy of inhibition within the dorsal horn and by extension contribute to aberrant sensory processing in the neuropathic dorsal horn.

Putting the above observations together and assessing the potential impact of altered inhibition within the dorsal horn at a circuit level of analysis, Schoffnegger et al. (2008) examined the spread of excitation throughout sensory processing circuits using Ca2+ imaging in spinal cord slices. In naïve mice, neuronal excitation was confined within the deeper laminae after electrical stimulation of Aβ fibers, or glutamate injection into the deep dorsal horn. In neuropathic mice, however, excitation spread from the deep to the superficial laminae, i.e., laminae that are normally only activated at nociceptive stimulus intensities. Together with the above data on GABAergic and glycinergic interneuron distribution, it is clear that neuropathic states allow tactile information to excite the more superficially located nociceptive circuits. Interestingly, the Schoffnegger et al. (2008) study also showed that application of bicuculline and strychnine to spinal cord slices resulted in synchronous network activity in roughly 66% of recordings from neuropathic mice, but only 5% in naïve animals. This suggests that enhancement of excitatory signalling has occurred in neuropathic mice; however, inhibition still plays some role, albeit reduced, in preventing hyperexcitability.

Changes to GABA/Gly Rs

Reduced inhibition could result from changes in post-synaptic receptor expression. Moore et al. (2002) found that GABAAR expression was not decreased in two nerve injury models (SNI/CCI). In fact, it was even marginally increased in the CCI model. Receptor expression in their study was assessed using an antibody to GABAAR β2/β3 subunits, which labels both synaptic and extrasynaptic receptors (Alvarez et al. 1996). Thus, this approach does not differentiate receptor location. Later, Polgar and Todd (2008) used labeling for the GABAAR β3 subunit and confirmed that 94% of the immunoreactive puncta were in contact with VGAT-positive boutons, suggesting a synaptic site. When immunoreactive intensity was compared between naïve and SNI rats, no differences were observed. From this finding, it can be inferred that neither synaptic nor extrasynaptic GABAAR expression is changed in neuropathic pain.

In support of the suggestion by Moore et al. (2002) that GABAAR expression is increased following CCI, Castro-Lopes et al. (1995) found enhanced GABAAR binding following neurectomy. In contrast, the same study reported that GABABR binding in LII was decreased. Consistent with enhanced GABAAR expression, Scholz et al. (2005) found that the GABAAR agonist muscimol evoked enhanced outward currents in LII neurons following SNI. Taken together, it seems that overall GABAAR expression is not reduced in neuropathic pain models, but instead post-synaptic neurons may increase GABAAR expression as a compensatory mechanism for reduced inhibitory input (Graham et al. 2003; Tadros et al. 2014). Once more, these changes may be model dependent, as Moore et al. (2002) identified changes to GABAAR expression following CCI, but not SNI.

This confusion over receptor expression changes in neuropathic pain models does not appear to be the case for glycine receptors. Simpson and Huang (1998) showed a bilateral reduction in the number of glycine receptors within LII–IV following CCI of the sciatic nerve. Nerve injury resulted in a dramatic reduction on the ipsilateral side (80%) and a more modest (20%) reduction on the contralateral side. Such marked reduction in glycine receptor expression would undoubtedly contribute to the reduced glycinergic inhibition observed in PKCγ+ neurons (Lu et al. 2013), as well as LII radial cells (Imlach et al. 2016) following nerve injury. In contrast, western blot analysis by Imlach et al. (2016) demonstrated an increase in GlyRα2 expression following partial nerve ligation. This altered glycine receptor subunit expression was proposed to underlie an increase in decay time constant for IPSCs, but not changes in IPSC amplitude. This may represent a compensatory mechanism in the face of falling overall inhibitory tone. Increasing the decay time of glycinergic currents would prolong the effect of inhibition and thus alter temporal precision and the potential for temporal summation during spinal sensory processing.

In summary, it appears that GABA receptor expression remains stable or is even enhanced following nerve injury, whereas glycine receptor expression is reduced. These changes would encourage a shift to more GABA-dominant inhibition. Interestingly, we recently reported a shift to GABA-dominant inhibition in the dorsal horn of aged animals (3–4 vs. 28–32 months) (Mayhew et al. 2019). In terms of neuropathic signalling, the functional implications of these changes are not clear and may differ depending on the laminae/region examined. For example, circuits within the deeper laminae, such as those involved in tactile allodynia, may experience an overall reduction in inhibition due to reduced glycine receptor expression. In contrast, LII circuits, which receive GABA-dominant inhibition (Graham et al. 2003), may experience an overall enhancement in inhibition due to increased GABA receptor expression. It is most likely, however, that any changes to the balance between GABA and glycinergic inhibition are detrimental to sensory processing. Previous work on brainstem inhibitory circuits shows that the co-release of GABA and glycine is necessary for optimal inhibition, with each component, via its distinct kinetics, contributing to the control of neuron discharge (Russier et al. 2002). Similar shifts in the dorsal horn are likely to reduce the efficacy of inhibitory interneurons in modulating activity in nociceptive circuits.

Reduced afferent input to inhibitory interneurons

An alternative circuit-based mechanism that could compromise inhibition in spinal sensory processing is reduced primary afferent drive to inhibitory interneurons. While a number of studies have shown that the incidence and amplitude of afferent-evoked IPSCs are reduced following nerve injury (Moore et al. 2002; Scholz et al. 2005), this work does not demonstrate that the change in afferent drive is directed to inhibitory interneurons. Others have demonstrated directly, by recording from LII GABAergic neurons, a reduction in excitatory input to inhibitory neurons. After CCI, miniature excitatory post-synaptic current (mEPSC) frequency was reduced, but mEPSC amplitude was unaffected (Leitner et al. 2013). The same study demonstrated that CCI did not change the density or morphology of dendritic spines or the number of excitatory synaptic contacts on GABAergic neurons. Instead, activation of Aδ- and C-fiber afferent input displayed reduced transmitter release probability following CCI. This reduced excitatory drive resulted in fewer GABAergic neurons being activated following noxious stimuli, as assessed by c-Fos expression.

Lu et al. (2013) used SNL to show that Aβ-evoked monosynaptic EPSP amplitude and release probability were reduced in primary afferents contacting LIII glycinergic neurons. This reduced afferent drive was associated with the recruitment of excitatory interneurons, presumably through a lack of inhibition, following the same Aβ stimulation. Kim et al. (2012) proposed an alternative mechanism for reduced primary afferent drive to inhibitory interneurons following nerve injury. Specifically, TRPV1 mRNA was detected in roughly two-thirds of inhibitory interneurons within LI–II, as labeled by GAD65, versus one-quarter of GAD65-negative neurons. Application of the TRPV1 agonist, capsaicin, induced a long-lasting depression in EPSCs evoked by electrical stimulation of the dorsal root entry zone. Reduced membrane expression of the AMPA receptor subunit GluR2 was the proposed mechanism. Thus, reduced post-synaptic receptor expression at primary afferent synapses was thought to underlie reduced IPSC amplitude and inhibitory interneuron recruitment in deep dorsal horn projection neurons. Furthermore, disrupting this mechanism using TRPV1 knockout mice or spinal TRPV1 inhibition alleviated mechanical sensitivity after CCI. This work suggests that increased nociceptive input, particularly from peptidergic afferents, can reduce afferent drive by decreasing GluR2 expression on post-synaptic neurons. The reduced afferent drive is more pronounced in inhibitory interneurons, because more of these neurons exhibit TRPV1 mRNA. Taken together, these studies provide evidence that primary afferent input, from various afferent classes, is reduced following nerve injury. Although it seems counterintuitive, one effective therapy for neuropathic pain may be to enhance primary afferent release probability, and thus enhance inhibition. An obvious caveat to this proposal comes from the findings of Kim et al. (2012). They suggest that reduced afferent drive in pain models may be more confined to inhibitory neurons, and thus increasing primary afferent release probability might risk greater recruitment of excitatory neurons.

Chloride co-transporter changes

As noted above, GABA and glycine-mediated inhibition relies on the appropriate expression of chloride co-transporters to maintain hyperpolarising responses, and disruption of chloride equilibrium can alter inhibition. The De Koninck group has proposed a depolarizing shift in chloride equilibrium as a mechanism for neuropathic pain (Coull et al. 2003). This shift occurs through a 50% reduction in the expression of KCC2, a transporter responsible for maintaining low intracellular Cl− concentration, following peripheral nerve injury. As intracellular Cl− rises, the driving force for chloride ions is decreased and the efficacy of GABA and glycinergic inhibition is reduced. This work even suggested that in extreme cases, GABA or glycine receptor activation could cause synaptic excitation. Placed in current models for spinal sensory processing, they proposed that GABAergic neurons receiving Aβ input and providing feed-forward ‘inhibition’ onto nociceptive neurons may actually excite these circuits. They also demonstrated that peripheral nerve injury or pharmacological disruption of chloride transport enhanced the responsiveness of LI projection neurons to noxious tactile stimulation, as well as enhancing spontaneous AP discharge (Keller et al. 2007). These changes are consistent with symptoms such as hyperalgesia, allodynia, and increased spontaneous pain frequently reported in neuropathic states. In support of disrupted chloride homeostasis as a generalised mechanism in neuropathic pain, other groups have shown downregulation of KCC2 in dorsal horn neurons following CCI (Wei et al. 2013), SNI (Modol et al. 2014; Kahle et al. 2016), and SNL (Zhou et al. 2012; Li et al. 2016).
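The link between intracellular chloride and inhibitory driving force described above can be illustrated with the Nernst equation. The sketch below is a minimal illustration only: the chloride concentrations, temperature, and resting membrane potential are hypothetical round numbers chosen for the example, not values taken from the studies cited here, and the calculation ignores the bicarbonate permeability of GABAA and glycine receptors.

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol
Z_CL = -1         # valence of the chloride ion


def nernst_cl_mv(cl_in_mm, cl_out_mm, temp_c=37.0):
    """Chloride equilibrium potential (mV) from the Nernst equation."""
    temp_k = temp_c + 273.15
    return 1000.0 * (R * temp_k) / (Z_CL * F) * math.log(cl_out_mm / cl_in_mm)


def driving_force_mv(v_m_mv, e_cl_mv):
    """V_m - E_Cl (mV); positive means an open Cl- channel hyperpolarises the cell."""
    return v_m_mv - e_cl_mv


# Hypothetical values, for illustration only.
V_REST = -65.0        # resting membrane potential, mV
CL_OUT = 130.0        # extracellular Cl-, mM
CL_IN_LOW = 6.0       # intracellular Cl- with intact extrusion, mM
CL_IN_HIGH = 10.0     # intracellular Cl- with reduced extrusion, mM

for label, cl_in in (("low [Cl-]i", CL_IN_LOW), ("high [Cl-]i", CL_IN_HIGH)):
    e_cl = nernst_cl_mv(cl_in, CL_OUT)
    print(f"{label}: E_Cl = {e_cl:.1f} mV, "
          f"driving force at rest = {driving_force_mv(V_REST, e_cl):.1f} mV")
```

With these assumed concentrations, raising intracellular chloride from 6 to 10 mM moves the chloride equilibrium potential from about −82 mV to about −69 mV, so the hyperpolarising driving force at a −65 mV resting potential falls from roughly 17 mV to under 4 mV.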

In addition to altering sensory signalling, Li et al. (2016) demonstrated that enhancing KCC2 expression in both dorsal horn and DRG neurons abolishes mechanical hyperalgesia and reduces tactile allodynia after nerve ligation. This study demonstrated a depolarizing shift in the equilibrium potential for GABA-mediated events (EGABA) of about 14 mV under neuropathic conditions. Treatment with a KCC2 vector fully restored EGABA in spinal dorsal horn neurons. Zhou et al. (2012) have also supported the idea that GABA/glycine-mediated ‘inhibition’ can become excitatory following SNL. Specifically, prolonged application of glycine in the dorsal horn of SNL rats increased spontaneous firing, whereas brief application caused only small hyperpolarizations, or even depolarization, in dorsal horn neurons. In contrast, glycine application in sham tissue inhibited spontaneous firing activity, and brief application hyperpolarized membrane potential in all dorsal horn neurons. These data suggest that reduced KCC2 expression severely limits the efficacy of GABA/glycinergic inhibition and can even cause membrane depolarization. A recent study has suggested that the kinase WNK1/HSN2 is crucial for reduced KCC2 activity and the development of neuropathic pain (Kahle et al. 2016). WNK1/HSN2 contributes to nerve injury hypersensitivity by decreasing the activity of KCC2, consequently causing a depolarizing shift in EGABA and reduced GABA-mediated inhibition. In line with these observations, blocking WNK reduced both cold allodynia and mechanical hyperalgesia, presumably by restoring EGABA and GABAergic inhibition. This study provides a useful therapeutic target for correcting the reduction in inhibitory efficacy that often follows nerve injury.

As suggested above, changes to KCC2 activity/expression can allow GABA/glycinergic inhibition to become excitatory in neuropathic states. This is, however, at odds with studies showing that facilitation/activation of GABA/glycine receptors or the transplantation of GABAergic neurons is anti-nociceptive in neuropathic animals. If GABA/glycinergic inhibition were excitatory in neuropathic states, these approaches should enhance pain. There are a number of explanations for this discrepancy. First, the anti-nociceptive effects of GABA receptor activation may reside at primary afferent terminals and be a consequence of enhanced pre-synaptic inhibition. This idea will be developed further in a subsequent section. Alternatively, GABA/glycine-mediated analgesia could result from increased shunting conductance, as proposed by De Koninck’s group in later work on this topic (Prescott et al. 2006). Despite reduced GABA/glycine receptor-mediated hyperpolarization, GABA/glycinergic inhibition and the associated increases in membrane conductance may maintain the ability to shunt excitatory inputs (Staley and Mody 1992; Gulledge and Stuart 2003). Prescott et al. (2006) suggest that this shunting mechanism is unable to compensate if the reduced Eanion exceeds a critical value. This appears to be plausible in the context of earlier findings (Coull et al. 2003). Therefore, although GABA/glycinergic inhibition may not become ‘excitatory’, the ability of inhibition to appropriately control firing rate of post-synaptic neurons is likely to be compromised in neuropathic pain states. Under these conditions, enhancing inhibition to reinstate effective inhibitory control will maintain an anti-nociceptive action. Taken together, reduced efficacy of GABA/glycinergic inhibition due to reduced KCC2 expression is one likely mechanism that contributes to neuropathic pain. Importantly, the work of Li et al. (2016) and Kahle et al. (2016) suggests that targeting KCC2 is a viable approach to treating neuropathic pain.
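The shunting argument above can be made concrete with a toy calculation. The sketch below uses the steady-state potential of a single passive compartment with leak, excitatory, and inhibitory conductances. It is not the model used by Prescott et al. (2006), and every conductance and reversal potential is a hypothetical value chosen only to illustrate the idea that an anion conductance can still limit depolarization until Eanion becomes too depolarized.

```python
def steady_state_v(g_leak, e_leak, g_exc, e_exc, g_inh=0.0, e_inh=0.0):
    """Steady-state potential (mV) of one passive compartment.

    Conductances are in nS and reversal potentials in mV. The result is the
    conductance-weighted mean of the reversal potentials.
    """
    g_total = g_leak + g_exc + g_inh
    return (g_leak * e_leak + g_exc * e_exc + g_inh * e_inh) / g_total


# Hypothetical parameters, for illustration only.
G_LEAK, E_LEAK = 10.0, -65.0   # leak conductance and reversal (rest)
G_EXC, E_EXC = 5.0, 0.0        # excitatory synaptic conductance and reversal
G_INH = 10.0                   # GABA/glycine-gated anion conductance

# Depolarization reached with excitation alone.
v_exc_only = steady_state_v(G_LEAK, E_LEAK, G_EXC, E_EXC)
print(f"excitation alone: V = {v_exc_only:.1f} mV")

# Same excitation with inhibition, for progressively depolarized E_anion.
for e_anion in (-80.0, -65.0, -50.0, -40.0):
    v = steady_state_v(G_LEAK, E_LEAK, G_EXC, E_EXC, G_INH, e_anion)
    effect = "reduces" if v < v_exc_only else "adds to"
    print(f"E_anion = {e_anion:.0f} mV: V = {v:.1f} mV "
          f"(inhibition {effect} depolarization)")
```

In this toy model, the anion conductance still limits depolarization when Eanion sits at the resting potential, which is pure shunting, but once Eanion rises above the potential reached with excitation alone, the same conductance adds to the depolarization instead.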

Pre-synaptic inhibition