Abstract

The rapid growth of the number of mobile broadband subscribers has led to an exponential increase of the Internet traffic, of which a major part is video traffic. In 4G/5G mobile networks, the eNBs should cache the popular contents to reduce the transit cost at the backhaul links. Recently, HTTP-based adaptive streaming is widely used to transfer the video contents to the end users in the Internet. With HTTP-based adaptive streaming, each video content is stored in several representations corresponding to different video performances. The representation with a better performance yields a higher user satisfaction, however, it consumes more storage and transit cost. In this paper, we propose a cooperative caching model in which the eNBs collaborate in caching and request routing the video contents. The proposed optimization problem is a large-scale integer linear program which is an NP-hard problem. Based on alternating direction method of multipliers technique, a distributed algorithm converging to the solution to the relaxation problem is then developed. It results to fractional caching and request routing which serve as a performance benchmark of the cooperative caching. Moreover, we propose a nearest-neighbor request routing policy and a lightweight-cooperative eviction algorithm for integral caching. Extensive simulations show that the proposed request routing and eviction algorithms achieve more than 78% of the performance benchmark.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent years, the number of mobile broadband subscribers has grown at a double-digit percentage per year. According to [1], it has reached 3.6 billion accounting for 84% of the population by 2016. The growing number of mobile broadband subscribers has led to the exponential growth of the mobile data. It puts a pressure on the backhaul links with limited link capacity. As the observation regarding to the explosion of mobile data traffic, a major part of mobile multimedia traffic is due to the duplicate downloads of the popular video contents. Therefore, 4G/5G network tends to cache the popular contents at the cache-enabled evolved NodeBs (eNB) to reduce the traffic on the backhaul links [2,3,4].

Without collaboration between the eNBs in caching, if the requested content exists at the eNB, the content is transmitted to the user equipment (UE), otherwise, the request is redirected to a cache server, which can be in content delivery network. Recently, the cooperation between the cache nodes has shown its efficiency in reducing the cost of the transit traffic in the networks [11,12,13]. Cooperative caching at the eNBs in radio access network (RAN) is also studied in [5, 6, 14, 15]. It not only requires a caching policy but also a request routing for the cache nodes. It is modeled by an large-scale integer linear program (ILP) or mixed integer linear program (MILP). However, ILP/MILP is an NP-hard problem and it requires a centralized and exponential time of computation to solve for an optimal solution. Therefore, a distributed approximation algorithm is necessary.

On the other hand, most of the Internet traffic nowadays is multimedia traffic, in which HTTP-based adaptive streaming is a prominent video streaming technique [16]. This technique provides a flexible and smooth service to the UEs [17, 18]. In HTTP-based adaptive streaming, each video content is stored in several representations with different sizes corresponding to different video performances. Apple HTTP Live Streaming [19], Microsoft Smooth Streaming [20], YouTube [21], etc. have recommended sets of representations with different bitrates and resolutions. A higher representation has a higher resolution and average bitrate, and hence, it yields a higher user satisfaction. However, it also consumes more storage and transit cost in the network. Typically, optimization model for video content caching is more difficult than that for homogeneous one, especially, when there is a cooperation between the cache nodes.

In this paper, we study the cooperative caching for HTTP-based adaptive streaming video contents at the eNBs. The objective is to maximize a combination of the utility and the cost saving per request. The contributions of our work are as follows:

-

1.

We propose a cooperative caching model which jointly solves for the optimal request routing and caching for a group of cache-enabled eNBs. It is a large-scale ILP problem. The solution to the relaxation problem describes the fractional-optimal caching and request routing strategies and serves as the performance benchmark of the cooperative caching.

-

2.

We apply alternating direction method of multipliers (ADMM) to decompose the large-scale relaxation problem and develop a distributed algorithm which converges to the optimal solution to the relaxation problem.

-

3.

From some observations on the ILP problem, we propose a nearest-neighbor request routing and a lightweight-cooperative eviction algorithm which their performance is close to the benchmark in many simulations.

The rest of paper is organized as follows. Section 2 introduces several related works. Section 3 describes the centralized cooperative caching model. Section 4 presents the distributed algorithm for fractional-optimal caching and request routing. The nearest-neighbor request routing and lightweight-cooperative eviction algorithm for integral caching are developed in Sect. 5. Section 6 shows the simulation results and Sect. 7 concludes the work.

2 Related work

Caching policies at cache nodes can be classified into two categories: proactive and reactive caching. In proactive caching, the contents are proactively cached according to the result of some calculations, whereas the caching is implemented online and reactively in reactive caching [4]. Caching is performed via content placement or content eviction. Content placement algorithms concern about selecting the contents to cache. For example, leave-cache-everywhere (LCE), which caches every content going through a cache node, is a simple strategy used in content-centric networks [7]. Eviction algorithms such as least-recently-used (LRU), least-frequently-used (LFU), first-in-first-out (FIFO) concern about selecting the contents to evict [8,9,10]. Algorithms in [7,8,9,10] are for a standalone cache node.

The collaborative caching is proved to leverage the caching efficiency of the cache network [11,12,13]. Given the demands of the contents, the optimal content placement is proposed by solving a large-scale ILP/MILP minimizing total bandwidth cost of the transit traffic [11,12,13]. The constraints in the optimization problems can be on storage capacity, link bandwidth, etc. Solving the ILP/MILP results to a jointly optimized caching and request routing between the cache nodes. However, the exact solution requires a centralized computation with exponential complexity since the optimization problem is NP-hard. The references [11, 13] address the cooperative caching for a hierarchical IPTV network. Users only send the requests to the bottom level nodes in [11, 13]. The aim is to minimize total transit cost of the network. In the work [11], based on the assumption on symmetric topology, cache size, and demand of the nodes, the authors proposed a local-greedy algorithm that yields a solution close to the optimum. The work [13] study a three-level cache hierarchy. The large-scale problem is decomposed to more specific collaboration between the cache nodes. The work [12] proposes a centralized caching algorithm for a flat video-on-demand (VoD) network. The caching model has additional link capacity constraints. The relaxation problem is solved periodically and the videos are then proactively cached at the nodes according to the rounded solution. The centralized algorithm run in the off-peak hours of the VoD network [12].

Caching in RAN of mobile networks has been widely studied recently [2,3,4,5,6, 14, 15]. The works [2,3,4] introduce the architecture of mobile network with caching in RAN and describe some potential techniques. The base stations also collaborate to increase caching efficiency [5, 6, 14, 15]. The work [14] investigates multi-tier cooperative caching for heterogeneous networks in which the user requests can be sent to any tier. The problem in [14] is more complicated than the ones in [11, 13]. It is decomposed to subproblems corresponding to caching cooperation on different tiers. The problem is solved at periods of time. The work [6] proposes an online caching algorithm for base stations in RAN. The optimization problem does not have the couple constraints on the storage capacities of the base stations, therefore, it can be decomposed to independent subproblems. Each subproblem is associated with each content. Content popularities are not integrated in the caching model in [6]. The paper [15] uses extreme learning machine neural network to predict content popularities and then proposes an MILP model to find an optimal storage size of the base stations, cache contents and request routing. To address the large-scale problem, the authors classified the contents into groups, hence, the decision is made for a group of contents. The objective is link cost, which is actually the delay of the download. The initial caching at the eNBs follows the solution to MILP. The eNBs then apply a heuristic algorithm modified from LRU to replace the contents. In [5], the objective is to minimize the energy consumption by eNBs. Energy consumption of eNBs includes energy consumption for caching contents and energy to retrieve contents from peer eNBs. The optimization problem is NP-hard. The authors adopted Lagrangian for the dual problems and solved for a near-optimal solution.

The cooperative caching model in our work is quite similar to the works [11,12,13,14]. However, the features that distinguish our work and those similar works are:

-

The works [11,12,13,14] do not consider video contents, however, our model is applied for HTTP-based video streaming contents. The performance of the video is integrated into the objective of the optimization problem.

-

For fractional caching, we propose a novel technique based on ADMM to solve the relaxation problem, whereas, the large-scale caching problem in [12] takes hours to solve at a central site. The fractional caching is not discussed in [11, 13, 14] .

-

For integral caching, we decoule the collaborative caching to request routing and content placement and we also propose a reactive eviction algorithm. The works [11,12,13,14] propose the algorithms that implement proactive caching and request routing jointly.

3 Centralized caching model

In this section, we develop a cooperative caching model for a set of eNBs.

3.1 System model

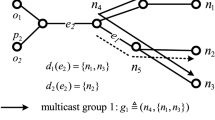

Consider a RAN domain including N cache-enabled eNBs which provide caching service to the UEs. To support cooperative caching, some eNBs can transmit contents directly to each other via directly connected links or eNB’s backhaul links. Let \(c_{i0}\) be the cost when eNB i downloads a unit of data from the cache server and \(c_{ij}\) be the cost to download from eNB j. These costs can be calculated from some link’s metrics such as bandwidth, hop count, delay, etc. The value \(c_{ij}\) can be greater than or less than \(c_{i0}\) depending on whether the link between two eNBs i and j has high bandwidth or not. We assume that the messages passing between eNBs to exchange caching information are small and their transit costs are negligible. (Please see the main notations described in Table 1).

Let \(x_i^{(m,k)}\) be the variable indicating whether eNB i stores representation (m, k) and \(y_{ij}^{(m,k)}\) be the variable indicating whether the representation (m, k) is fetched from j to i for the request of (m, k) at eNB i. Let’s define variable \(y^{(m,k)}_{i0}\) indicating whether item (m, k) is downloaded from the cache server for the request of (m, k) to node i. The constraint \(x_i^{(m,k)} + \sum _{j \in \mathcal {N} , j \ne i} y_{ij}^{(m,k)} + y^{(m,k)}_{i0} = 1\) holds for all (m, k) and \(i \in \mathcal {N}\). When there is a request of (m, k) at eNB i, one of three following instances occurs:

-

1.

if (m, k) exists at eNB i, i.e., \(x_i^{(m,k)}=1\) and \(y_{i0}^{(m,k)} = y_{ij}^{(m,k)}=0, \forall j, j \ne i\), then the transit cost is zero,

-

2.

if (m, k) does not exist at eNB i and it is loaded from the cache server for the request at i, i.e., \(x_i^{(m,k)}=0, y_{ij}^{(m,k)}=0, \forall j, j \ne i\), and \(y_{i0}^{(m,k)} = 1\), then the transit cost is \(s^{(m,k)} c_{i0} y_{i0}^{(m,k)}\), and

-

3.

if (m, k) does not exist at eNB i and it is loaded from one of the other eNBs, i.e., \(x_i^{(m,k)}=y_{i0}^{(m,k)} = 0\) and there exists j such that \(y_{ij}^{(m,k)}=1\), then the transit cost is \(\sum _{j \in \mathcal {N} , j \ne i} s^{(m,k)} c_{ij}y_{ij}^{(m,k)}\).

Hence, the transit cost for a request of content (m, k) at eNB i is given by

On the other hand, when there is a request for (m, k) to node i , but if there is no cache in the RAN, the transit cost will be \(s^{(m,k)} c_{i0}\). Hence, the cost saving for a request of (m, k) at eNB i is

Due to \(x^{(m,k)}_i + \sum _{j \in \mathcal {N} , j \ne i} y^{(m,k)}_{ij} + y^{(m,k)}_{i0} = 1\), the transit cost for a request of (m, k) at eNB i is rewritten

Hence, the average cost saving associated with eNB i in considered period is

Denote \(u^{(m,k)}\) be the utility associated with representation (m, k). We assume that the higher representation yields a higher utility value, \(u^{(m,1)}< u^{(m,2)}< \cdots < u^{(m,K)}\) for all \(m \in \mathcal {M}\). The utility associated with a representation can represent the quality-of-experience (QoE) of a video, and ideally, it should be measured via mean-opinion-score (MOS). MOS is a subjective perceptual metric measuring the satisfaction of end users. It is a “direct rating” measurement obtained from asking users directly about their video perception with respect to specific performance metrics. However, a MOS measurement is a costly and time consuming approach. Several works have tried to establish an objective parametric model which is more mathematically tractable based on the quality-of-service (QoS) metrics (such as bitrate, packet loss, bandwidth, etc.). The works [23, 24] have recommended the logarithmic model for the subjective parametric QoE recently. The optimum solution to the QoE-maximization problems can be easily obtained because of the concave shape of the logarithmic utility. Moreover, the proportional-fair resource allocation is also achieved with logarithmic utility function [25]. For example, the work [22] fits the MOS dataset to logarithmic utility functions (see [22, Table II]). In this paper, our analysis framework can be applied for any utility model. We use the logarithm function of bitrates for the utilities associated with the representations in the simulations. The utility that eNB i supports if it caches representation (m, k) is \(d_i^{(m,k)}u^{(m,k)}\), where \(d_i^{(m,k)}\) is the probability of a request for representation (m, k) at eNB i. Thus, the average of utility that the eNB i can support is given by

Therefore, the weighted sum of utility (5) and cost savings (4) associated to eNB i is given by

where \(\gamma \in [0,1]\) is the weighted parameter to balance two performance metrics. We aim to maximize the total of weighted sum of utility and cost savings. The optimization problem for cooperative caching in RAN is as follows

Constraint (8) is the cache size constraint of the eNBs. Total sizes of all representations of all video contents at node i must not exceed node i’s cache size. Constraint (9) means that node j only serves the request of representation (m, k) at node i if it stores this representation in its cache. Constraint (10) is actually the constraint \(x_i^{(m,k)} + \sum _{j \in \mathcal {N} , j \ne i} y_{ij}^{(m,k)} + y^{(m,k)}_{i0} = 1\) in which the variable \(y^{(m,k)}_{i0}\) is eliminated. From the optimal solution to problem \(\mathrm{(SYS)}\), an eNB know the set of representations it should cache and where to redirect the request whenever a requested representation is not cached (cache miss). Hence, the caching and request routing are jointly addressed by problem \(\mathrm{(SYS)}\).

Given the network topology, the cost for network deployment is assumed constant and we can eliminate it from the objective of the optimization model. We also assume that the processing cost such as request routing, caching or evicting the content is negligible. In problem \(\mathrm{(SYS)}\), if \(u^{(m,k)} = 1\) for all \(k \in \mathcal {K}, m\in \mathcal {M}\), the sum of utility becomes the number of cache hits and the optimization is to maximize the weighted sum of the number of cache hits and the cost saving in the RAN domain.

3.2 Fractional caching and request routing

Problem \(\mathrm{(SYS)}\) is a large-scale multi-dimensional Knapsack problem which is NP-hard [27]. Solving it requires an exponential time. If the contents can be stored fractionally, we can relax constraint (11) and \(\mathrm{(SYS)}\) becomes a linear program

The problem \(\mathrm {(fSYS)}\) can be solved in a polynomial time. In problem \(\mathrm {(fSYS)}\), \(x_i^{(m,k)}\) can be interpreted as the fraction of representation (m, k) stored at node i and \(y_{ij}^{(m,k)}\) as the fraction of representation (m, k) sent from node j to serve the request at node i. The solution to \(\mathrm {(fSYS)}\) serves as the performance benchmark for the cooperative caching.

4 Distributed algorithm for fractional caching and request routing

Even problem \(\mathrm{(fSYS)}\) can be solved in a polynomial time, it needs a centralized computation at the central controller. In this session, we propose a distributed caching algorithm based on ADMM method [26]. Problem \(\mathrm{(fSYS)}\) is decomposed into subproblems. Each one is associated with an eNB.

Constraints (13), (15) and (16) are separable in problem \(\mathrm{(fSYS)}\). Let’s define the set \(\mathcal {F}_i = \{(\varvec{x}_i,\varvec{y}_i)|\) (13), (15), (16)\(\}\). Using slack variables \(\varvec{r}_{ij} = (r_{ij}^{(m,k)})_{m \in \mathcal {M}, k \in \mathcal {K}}\) to transform the inequality constraint to equality one, the constraint (14) is rewritten as follows

where \(\varvec{r}_{i} = (\varvec{r}_{ij})_{j \in \mathcal {N}, j \ne i}\).

Let \(\varvec{\nu }_{ij} = (\nu _{ij}^{(m,k)})_{m \in \mathcal {M}, k \in \mathcal {K}}\) be the Lagrange multipliers associated with new equality constraint (17). Denote \(\varvec{\nu }_{i} = (\varvec{\nu }_{ij})_{j \in \mathcal {N}, j \ne i}\). The augmented Lagrangian function is given by

where \(\rho \) is a constant, e.g., \(\rho = 1\). ADMM algorithm consists of the following iterations [26]:

-

1.

(x, y, r)-update: eNB i sequentially solves for the solution \((\varvec{x}_i,\varvec{y}_i,\varvec{r}_i)\) to the following problem

$$\begin{aligned} \mathrm{Max.~}&L_{\rho } \nonumber \\ \mathrm{st.~}&(\varvec{x}_i,\varvec{y}_i) \in \mathcal {F}_i, \forall i \in \mathcal {N}, \end{aligned}$$(20)$$\begin{aligned}&\varvec{r}_i \ge 0, \forall i \in \mathcal {N}, \end{aligned}$$(21)$$\begin{aligned} \mathrm{variables:}~&\varvec{x}_i,\varvec{y}_i,\varvec{r}_i, \end{aligned}$$(22)which is equivalent to

$$\begin{aligned} \mathrm{(fNOD)}_\mathrm{i}~ \mathrm{Max.}~&\varvec{z}_i(\varvec{x}_i,\varvec{y}_i) - \sum _{j \in \mathcal {N}, j \ne i} \nu _{ij}^T (\varvec{y}_{ij} + \varvec{r}_{ij}) + \sum _{j \in \mathcal {N}, j \ne i} \nu _{ji}^T \varvec{x}_{i} \nonumber \\&- \frac{\rho }{2} \left( \sum _{j \in \mathcal {N}, j \ne i} (\varvec{y}_{ij} + \varvec{r}_{ij} - \varvec{x}_{j})^2 + \sum _{j \in \mathcal {N}, j \ne i} (\varvec{y}_{ji} + \varvec{r}_{ji} - \varvec{x}_{i})^2 \right) \nonumber \\ \mathrm{st.~}&(\varvec{x}_i,\varvec{y}_i) \in \mathcal {F}_i, \end{aligned}$$(23)$$\begin{aligned}&\varvec{r}_i \ge 0, \end{aligned}$$(24)$$\begin{aligned} \mathrm{variables:}~&\varvec{x}_i,\varvec{y}_i,\varvec{r}_i. \end{aligned}$$(25) -

2.

\(\nu \)-update:

$$\begin{aligned} \varvec{\nu }_{ij} := \varvec{\nu }_{ij} + \rho (\varvec{y}_{ij} + \varvec{r}_{ij} - \varvec{x}_{j}), \forall i, j \in \mathcal {N}, j \ne i. \end{aligned}$$(26)

Algorithm 1 describes the ADMM algorithm for the fractional-optimal caching and request routing strategies of the system. In each iteration, eNB i receives \(\varvec{x}_j\), \(\varvec{y}_j\) for all \(j \in \mathcal {N}, j \ne i\), and \(\varvec{\nu }_j\) for all \(j \in \mathcal {N}\) from the controller. It sequentially updates its caching \(\varvec{x}_i\) and request routing \(\varvec{y}_i\) and then sends the changed values back to the controller.

ADMM caching algorithm is a proactive algorithm in which the eNBs calculate for the optimal solution and pre-cache the representations according to the solution. Extensive simulations show that the fractional-optimal solution has only several fractional values. However, the ADMM algorithm requires a large number of messages passing in the network. In next section, we develop a reactive eviction algorithm for integral caching which only needs a lightweight cooperation between the eNBs.

5 Integral request routing and caching

The integral-optimal solution to \((\mathrm {SYS})\) requires an exponential complexity computation. If the network has many contents, the integral-optimal solution is impossible. In this section, we use an ad-hoc and heuristic approach to design request routing and eviction algorithms for the integral caching. Based on some observations about the optimal solution to problem \((\mathrm {SYS})\), we first propose a nearest-neighbor request routing algorithm. With the proposed request routing, we then propose an eviction algorithm for the eNB to optimize its objective distributively.

5.1 Nearest-neighbor request routing

Let \((\varvec{x}^*_i, \varvec{y}^*_i)_{i \in \mathcal {N}}\) be the optimal solution to the problem \(\mathrm{(SYS)}\). We have some following observations.

Lemma 1

If \(c_{ij} > c_{i0}\) for some eNBs i, j then \(y_{ij}^{(m,k)} = 0\) for all \(k \in {\mathcal {K}}\) and \(m \in {\mathcal {M}}\).

Lemma 2

If there are three eNBs i, j, l such that \(c_{ij} < c_{il}\) then \(y_{ij}^{(m,k)*} \ge y_{il}^{(m,k)*}\) for all (m, k).

Lemma 3

If \(c_{ij} = c_{il}\), and \(x_j^{(m,k)*}=x_l^{(m,k)*}=1\), \(y_{ij}^{(m,k)*}=1\), and \(y_{il}^{(m,k)*}=0\) are the solution to \(\mathrm{(SYS)}\), then \(y_{ij}^{(m,k)*}=0, y_{il}^{(m,k)*}=1\) are also the solution to \(\mathrm{(SYS)}\).

Proof

We prove Lemma 1 by contradiction. Assume that there are two eNBs i and j that \(c_{ij} > c_{i0}\), however, there exists (m, k) such that \(y_{ij}^{(m,k)*} > 0\). The new point \((\varvec{x}^*_i, \varvec{y}^{\prime }_i)_{i \in \mathcal {N}}\) in which \(\varvec{y}_i^{\prime } = \varvec{y}_i^*\) for all \(i\in \mathcal {N}\) except \(y_{ij}^{(m,k)\prime } = 0\) yields a strictly higher objective than \((\varvec{x}_i, \varvec{y}_i)_{i\in \mathcal {N}}\) does. Moreover, this new point still satisfies the constraints of (SYS). That is contradiction.

We also prove Lemma 2 by contradiction. If there exists a representation (m, k) such that \(y_{ij}^{(m,k)*} < y_{il}^{(m,k)*}\) for some eNBs i, j, l. Constraint \(x_i^{(m,k)*} + \sum _{j \in \mathcal {N}, j \ne i} y_{ij}^{(m,k)*} \le 1\) implies \(y_{il}^{(m,k)*}=1\) and \(x_{i}^{(m,k)*} = y_{ij}^{(m,k)*} = 0\) (node i does not cache representation (m, k) and the request of (m, k) is forwarded to node l). From Lemma 1, we must have \(c_{ij} < c_{il} \le c_{i0}\). Thus, \(0 \le c_{i0} - c_{il} < c_{i0} - c_{ij}\). Consider the new point \((\varvec{x}^*_i, \varvec{y}^{\prime }_i)_{i \in \mathcal {N}}\), in which \(\varvec{y}^{\prime }_i = \varvec{y}^{*}_i\) for all \(i \in \mathcal {N}\) but \({y}_{ij}^{(m,k)\prime } = 1\) and \({y}_{il}^{(m,k)\prime } = 0\). We have \(0 \le (c_{i0} - c_{il})y_{il}^{(m,k)*} < (c_{i0} - c_{ij})y^{(m,k)\prime }_{ij}\). Therefore, the point \((\varvec{x}_i^*, \varvec{y}^{\prime }_i)_{i \in \mathcal {N}}\) yields a strictly higher objective than \((\varvec{x}_i^*, \varvec{y}_i^*)_{i \in \mathcal {N}}\) does, which is contradiction.

Lemma 3 is easily proved.

ENBs j is said to be neighbors of eNB i if \(c_{ij} < c_{i0}\). Lemma 1 means that if the cost to download a content from eNBs j is more expensive than from the cache server, node i would rather download the requested representation from the cache server than from the node j. Lemma 2 implies that if eNB i does not cache (m, k) then the request of (m, k) is forwarded to the neighbor which stores (m, k) and has the least cost to i. Lemma 3 means that if two eNBs with the same least cost cache (m, k), downloading (m, k) from any of them yields the same objective. The ties can be broken randomly. Inspired by Lemmas 1–3, we propose a nearest-neighbor request routing for eNB i described in Algorithm 2.

5.2 Lightweight-cooperative eviction algorithm

Using the nearest-neighbor request routing, we design an eviction algorithm for the eNBs. Define \(c_{-i}^{(m,k)}= \min (\min _{j \in \mathcal N^{(m,k)}_i} c_{ij}, c_{i0})\), where \(\mathcal N^{(m,k)}_i\) is the set of i’s neighbors that cache (m, k). Note that \(\mathcal N^{(m,k)}_i\) can be an empty set if none of i’s neighbors has (m, k). \(c_{-i}^{(m,k)}\) is the cost to download a data unit of (m, k) from the nearest i’s neighbor that caches (m, k) or the cache server in case none of i’s neighbor caches (m, k). Clearly, \(c_{-i}^{(m,k)}\) depends on the lists of cached contents at the other eNBs in the network domain.

Given the list of cached items at the eNBs, the eNB i distributively maximizes its objective which is the linear combination of utility and cost saving associated with eNB i. As the network uses the nearest-neighbor request routing, the cost saving of i is \(d_i^{(m,k)} s^{(m,k)} \left( c_{i0} - c_{-i}^{(m,k)} (1-x_i^{(m,k)})\right) \). Hence, the optimization problem associated with i is given by

which is equivalent to

Problem \(\mathrm{(NOD)}_i\) is a Knapsack problem [27]. Given the set of items with different values and sizes, and the limited size of the knapsack, Knapsack problem is to put the items into the knapsack to maximize the sum of values. The greedy algorithm can solve the relaxation of problem \(\mathrm{(NOD)}_i\). The optimal solution has only one fractional item. The items are sorted in descending order of the ratio \(\frac{\mathrm{value}}{\mathrm{size}}\). The items are then selected from the highest to the lowest and put into the knapsack until the knapsack is full. Only a fraction of the first item exceeding the capacity of the knapsack is selected [27].

Applying the greedy algorithm, eNB i can proactively caches the items according to the rounded solution to the relaxation of \(\mathrm{(NOD)}_i\). However, we further propose a reactive eviction algorithm for eNBs. Let \(\mathcal {L}_i\) be the list of representations of all the video contents cached at node i. Each representation (m, k) in \(\mathcal {L}_i\) is associated with the value \(d_i^{(m,k)}\left( \gamma \frac{u^{(m,k)}}{s^{(m,k)}} + (1-\gamma )c_{-i}^{(m,k)}\right) \). Each time the storage is full, the representation with the least value is evicted. Gradually, the list only contains the representations with the highest values.

Algorithm 3 describes the proposed eviction algorithm. Each eNB runs two parallel processes. The content placement process caches any missed content that passes through the node when the content is fetched back to the UE from a neighbor or from the cache server. The eviction process evicts the representation with the smallest value v(m, k) whenever the cache size exceeds the threshold. In practice, Algorithms 2 and 3 can be implemented in the following approach. The eNB i keeps a list of neighbors in a decreasing order of cost \(c_{ij}\). When there is a miss, eNB i asks its neighbors in this order until one of the neighbors has the requested item. If none of the neighbors caches the item, the cache server will serve the request.

In Algorithm 3, eNB i makes the caching decision only based on the current cache lists of the i’s neighbors. Hence, Algorithm 3 is mentioned as the lightweight-cooperative algorithm, which contrasts to the full cooperation described in Algorithm 1 that requires a large amount of messages passing in the network.

6 Performance evaluation

In the simulations, we consider a cluster of eNBs including 10 cache-enabled nodes. The cost to transmit 1 MB between two eNBs is \(c_{ij}=1\) unit if they are neighbors of each other or 5 units if they are not. The cost to download 1 MB from the cache server is \(c_{i0}=3\) units. We assume that the probability that two eNBs are neighbors of each other follows Bernoulli’s distribution with probability \(p_n=0.5\) unless explicitly stated otherwise.

The video catalog includes 1000 video contents. The playback time of each content is assumed 10 min. There are four types of representations chosen from the recommended set of YouTube [21] (\(K=4\)). Their average bitrates are given in Table 1. We assume that the utility corresponding to representation k is \(\log (r^k)\), where \(r^k\) is the average bitrate of representation k. The probability of a request for representation (m, k) at eNB i, \(d_i^{(m,k)}\), include two independent probabilities: content popularity \(p_i^m\) and the probability for a request at level-k presentation at eNB i, \(q_{ki}\). The content popularity \(p_i^m\) is assumed to follows Zipf’s distribution in which the popularity of m-th content is proportional to

The exponent \(\alpha \) characterizes the steepness of the distribution. The higher \(\alpha \) yields a steeper demand [11,12,13,14, 28]. In the simulations, \(\alpha \) is varied from 0.4 to 1.1. The probability for a request at level-k presentation at eNB i, \(q_{ki}\), is assumed to follow a uniform distribution (Table 2).

We first verify the convergence of ADMM algorithm, Algorithm 1, in Sect. 6.1 and compare the result to the optimal solution to problem \(\mathrm (fSYS)\) which serves as the performance benchmark. We then compare the performances of lightweight-cooperative eviction algorithm, Algorithm 3, to the traditional LRU/LFU and LRU/LFU with the nearest-neighbor request routing (called modified LRU/LFU) via event-driven simulations in Sects. 6.2–6.4. The lightweight-cooperative, modified LRU and modified LFU eviction algorithms use nearest-neighbor request routing described in Algorithm 2, whereas the traditional LRU/LFU does not. The miss contents are downloaded from the cache server in traditional LRU and LFU algorithms.

In the event-driven simulations, we generate 500,000 requests to the eNBs. The requests are uniformly distributed among the eNBs. If the next request for (m, k) sent to eNB i is missed, eNB i will cache the returned (m, k). Whenever the cache size at the eNB exceeds the threshold, some representations at the eNB will be evicted. For lightweight-cooperative caching, the representations with least values v(m, k) are evicted according to Algorithm 3. If LRU or LRU is used, the least-frequently-used or least-recently-used representations are evicted from eNB i’s cache.

We conduct different simulations when varying the demand steepness, the cache sizes of eNBs, and the number of neighbors of an eNB. The performances of the eviction algorithms are evaluated in four metrics, i.e., objective value, transit cost, utility, and cache-hit ratio. The cache-hit ratio is the ratio of the number of requests served by RAN and the total number of requests. The cache lists at the eNBs are empty initially. The performance is observed in last 400,000 requests.

6.1 Convergence of the ADMM algorithm

In many simulations we have conducted, Algorithm 1 always converges to the optimal solution to problem \(\mathrm{(fSYS)}\). For example, with the storage size of each eNB is 2% of the catalog, \(\alpha = 0.8\), and \(p_{n}=0.5\), Algorithm 1 converges to the optimal value calculated by the centralized approach as shown in Fig. 1.

6.2 Varying the demand steepness

In this simulation, the storage size of each eNB is 2% of the catalog size and \(p_{n}=0.5\). We monitor the performances of eviction algorithms and compare them to the benchmark when varying the exponent \(\alpha \) of the content distribution from 0.4 to 1.1.

In general, the performances of all considered algorithms increase as \(\alpha \) increases (Fig. 2). This observation also agrees with the intuition that the caching is more efficient when the distribution of the content’s popularity gets steeper. The objective value of the lightweight-cooperative eviction algorithm is higher than that of the other eviction algorithms such as LRU and LFU, with or without the nearest-neighbor request routing, and is always more than 78% of the benchmark for any \(\alpha \) values. When \(\alpha =0.8\), the lightweight-cooperative eviction algorithm achieves 88% whereas the modified and traditional LFU yield the same objective value, which is 73% of the optimal solution. The modified and traditional LRU outputs only 69 and 30% of the benchmark, respectively (see Fig. 2a). LRU always has a worse performance than the others. LRU with the proposed request routing yields a better performance than the one without the proposed request routing, which shows the efficiency of Algorithm 2.

Figure 2b–d show the transit cost, utility and cache hit which the RAN supports per request. The transit cost of the proposed eviction algorithm is lower than those of the traditional and modified LRU or LFU eviction algorithms (see Fig. 2b). The utility and the cache hit are slightly lower than those of LFU (Fig. 2c, d). However, we can change parameter \(\gamma \) to adjust the weights of the objectives. For example, if \(\gamma \) is close to 1, we emphasize on maximizing the utility, whereas if \(\gamma \) is close to 0, we emphasize on minimizing the transit cost. The traditional and modified LFU or LRU eviction algorithms always result in the same utility and cache hit since the caching decisions made by LFU or LRU only depend on the demand of the contents and requests arrival, which are independent from the request routing algorithm (see Fig. 2c, d).

The transit cost of the modified LRU is lower than that of the traditional LRU which implies the efficiency of our proposed request routing algorithm. The cooperation between the eNBs will reduce the transit traffic because the node would rather download a miss representation from one of its neighbor than from the cache server. However, the transit costs of modified and traditional LFU algorithms are the same. Since the demand of the representations for the eNBs and the storage sizes of the eNBs are assumed to be symmetric, the representations cached at the eNBs are the same if we use LFU, i.e., the items with the highest demand. The cooperation between the eNBs has no affect with LFU.

6.3 Varying the eNB’s cache sizes

In this simulation, we fix \(\alpha =0.8\), \(p_{n}=0.5\) and vary the cache sizes of the eNBs from 1 percent to 5 percent of the catalog’s size. Figure 3 shows the performances of the eviction algorithms.

Overall, the objective values of the optimal solution, lightweight-cooperative, LFU and LRU evictions increase as the cache sizes increase. The objective of the lightweight-cooperative eviction algorithm is higher than that of the LRU and LFU eviction algorithms with and without Algorithm 2 and more than 88 percent of the benchmark (see Fig. 3a).

Similar to the results in Sect. 6.2, the transit cost of the lightweight-cooperative eviction algorithm is better than that of LRU and LFU (Fig. 3b). The utility and cache hit ratio of the lightweight-cooperative eviction algorithm are slightly lower than those of LFU (Fig. 3c, d). However, we can increase \(\gamma \) to emphasize more on maximizing the utility and cache hit ratio than minimizing the transit cost.

In Figs. 2a and 3a, the performance metrics of LFU are quite close to those of the lightweight-cooperative eviction algorithm. However, LFU actually has a drawback compared to the proposed algorithms as shown in the next simulation.

6.4 Varying the average number of direct links between eNBs

In this simulation, \(\alpha =0.8\) and the cache sizes of eNBs are 2 percent of the catalog’s size. We vary \(p_{n}\) from 0.2 to 0.8. The higher \(p_{n}\) means two eNBs are more likely neighbors of each other. For each \(p_{n}\) value, we conduct 5 simulation runs and take out the average value of each performance metric.

Figure 4 shows the performances of different eviction algorithms when varying \(p_{n}\). The higher \(p_{n}\) yields a better performance. It indicates that the our proposed request routing is more efficient when increasing \(p_{n}\).

The objective values of the lightweight-cooperative and LRU eviction algorithms increase when \(p_{n}\) increases (see Fig. 4), which shows the effectiveness of the collaboration between the eNBs. Algorithm 3 achieves more than 95% of the benchmark in all cases, whereas the objective value of LFU, with or without the nearest-neighbor request routing, is around 80% of the benchmark. The modified LRU yields a higher performance than the traditional LRU. The objective value of the traditional LRU is only around 33% of the benchmark whereas the one of the modified LRU increases from 58 to 83% as \(p_{n}\) increases.

In all cases, the performances of LFU, with or without our proposed request routing, do not change when \(p_{n}\) increases. The reason is that with LFU, eNBs store the most popular items. If the eNBs are symmetric in the cache sizes and content’s demand, the cache lists at the eNBs are the same. Hence, if there is any cache miss for item (m, k), this representation does not exist at the other eNBs also. The transit cost, cache hit, utility are unchanged even when we increase the number of links between the eNBs. The caching cooperation in LFU does not have any affect.

7 Conclusions

We have proposed a cooperative caching model for video contents in cache-enabled RANs of 4G/5G mobile networks. It is a large-scale ILP problem and requires an exponential complexity computation to solve. The solution to the relaxation problem yields an optimal-fractional caching and request routing strategies and serves as a performance benchmark. We have proposed an ADMM distributed algorithm that converges to the optimal-fractional solution. The nearest-neighbor request routing and the lightweight-cooperative eviction algorithm for the eNBs are also developed for integral caching. Extensive simulations have shown that our proposed request routing and eviction algorithms achieve more than 78% of the performance benchmark.

References

ITU, ICT Facts and Figures 2016 (2016)

Wang X, Chen M, Taleb T, Ksentini A, Leung V (2014) Cache in the air: exploiting content caching and delivery techniques for 5G systems. IEEE Commun Mag 52(2):131–139

Andreev S, Galinina O, Pyattaev A, Hosek J, Masek P, Yanikomeroglu H, Koucheryavy Y (2016) Exploring synergy between communications, caching, and computing in 5G-grade deployments. IEEE Commun Mag 54(8):60–69

Bastug E, Bennis M, Debbah M (2014) Living on the edge: The role of proactive caching in 5G wireless networks. IEEE Commun Mag 52(8):82–89

Xu Y, Li Y, Wang Z, Lin T, Zhang G, Ci S (2014) Coordinated caching model for minimizing energy consumption in radio access network. In: IEEE international conference on communications (ICC), pp 2406–2411

Gharaibeh A, Khreishah A, Ji B, Ayyash M (2016) A provably efficient online collaborative caching algorithm for multicell-coordinated systems. IEEE Trans Mob Comput 15(8):1863–1876

Jacobson V, Smetters DK, Thornton JD, Plass MF, Briggs NH, Braynard RL (2009) Networking named content. In: The 5th international conference on Emerging networking experiments and technologies, pp 1–12

Raghavan RMP (1995) Randomized algorithms. Cambridge University Press, New York

Cao P, Irani S (1997) Cost-aware www proxy caching algorithms. In Usenix symposium on internet technologies and systems, vol 12, no 97, pp 193–206

Psounis K, Prabhakar B (2002) Efficient randomized web-cache replacement schemes using samples from past eviction times. IEEE/ACM Trans Netw (TON) 10(4):441–455

Borst S, Gupta V, Walid A (2010) Distributed caching algorithms for content distribution networks. In: IEEE INFOCOM, pp 1–9

Applegate D, Archer A, Gopalakrishnan V, Lee S, Ramakrishnan KK (2016) Optimal content placement for a large-scale VoD system. IEEE/ACM Trans Netw 24(4):2114–2127

Dai J, Hu Z, Li B, Liu J, Li B (2012) Collaborative hierarchical caching with dynamic request routing for massive content distribution. In: IEEE INFOCOM, pp 2444–2452

Li X, Wang X, Li K, Han Z, Leung VC (2017) Collaborative multi-tier caching in heterogeneous networks: modeling, analysis, and design. IEEE Trans Wireless Commun 16(10):6926–6939

Tanzil SS, Hoiles W, Krishnamurthy V (2017) Adaptive scheme for caching youtube content in a cellular network: machine learning approach. IEEE Access 5:5870–5881

Cisco (2016) Cisco visual networking index: Forecast and methodology, 2015-2020

Stockhammer T (2011) Dynamic adaptive streaming over HTTP: standards and design principles. In: The 2nd annual ACM conference on Multimedia systems, pp 133–144

Oyman O, Singh S (2012) Quality of experience for HTTP adaptive streaming services. IEEE Commun Mag 5(4):20–27

Apple, Using http live streaming. [Online]. http://goo.gl/fJIwC. Accessed 1 Apr 2018

Microsoft, On-demand smooth streaming. [Online]. https://docs.microsoft.com/en-us/iis/media/on-demand-smooth-streaming/getting-started-with-iis-smooth-streaming. Accessed 1 Apr 2018

Youtube, ‘Recommended upload encoding settings (Advanced). [Online]. https://support.google.com/youtube/answer/1722171?hl=en-GB. Accessed 1 Apr 2018

Zhang W, Wen Y, Chen Z, Khisti A (2013) Qoe-driven cache management for http adaptive bit rate streaming over wireless networks. IEEE Trans Multimed 15(6):1431–1445

Reichl P, Tuffin B, Schatz R (2013) Logarithmic laws in service quality perception: where microeconomics meets psychophysics and quality of experience. Telecommun Syst 52(2):587–600

Egger S, Reichl P, Hofeld T, Schatz R (2012) “Time is bandwidth”? Narrowing the gap between subjective time perception and Quality of Experience. In: IEEE ICC, pp 1325–1330

Kelly FP, Maulloo AK, Tan DK (1998) Rate control for communication networks: shadow prices, proportional fairness and stability. J Oper Res Soc 49(3):237–252

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn 3(1):1–122

Kellerer H, Pferschy U, Pisinger D (2004) Knapsack Problems. Springer, Berlin, Heidelberg

Breslau L, Cao P, Fan L, Phillips G, Shenker S (1999) Web caching and zipf-like distributions: evidence and implications. In: IEEE INFOCOM, pp 126–134

Acknowledgements

This research is funded by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under Grant Number 102.02-2015.36.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Vo, P.L., Tran, N.H. Cooperative caching for HTTP-based adaptive streaming contents in cache-enabled radio access networks. Computing 101, 435–453 (2019). https://doi.org/10.1007/s00607-018-0647-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00607-018-0647-0

Keywords

- Cooperative caching

- Adaptive bitrate streaming

- Radio access network

- Alternating direction method of multipliers