Abstract

Teaching–learning-based optimization (TLBO) has been widely used to solve global optimization problems. However, the optimization problems in various fields are becoming more and more complex. The canonical TLBO is easy to be trapped in the local optimum when dealing with these problems. In this paper, a new TLBO algorithm with collective intelligence concept introduced is proposed, namely collective information-based TLBO (CIBTLBO). CIBTLBO uses the information from the top learners to form CITeachers and uses the neighborhood information of each learner to form NTeachers, and these teachers help other learners learn in the teacher phase. Furthermore, CITeacher also helps in the learner phase. To demonstrate superiority of the proposed algorithm, experiments on 28 benchmark functions from CEC2013 are carried out, and the benchmark functions are set to 10, 30, 50 and 100 dimensions, respectively. The results show that the proposed CIBTLBO algorithm outperforms the other previous related algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the real life, the optimization problems in various fields are becoming more and more complex. To solve these problems, many nature-inspired optimization algorithms which simulate natural phenomena and animals’ actions have been proposed. These nature-inspired optimization algorithms can be divided into two types: evolution algorithms (EAs) and swarm intelligence (SI). EAs are inspired by the natural phenomena, the typical EAs may include genetic algorithm (GA) (Goldberg 1989), Evolutionary programming (EP) (Fogel 1994) and differential evolution (DE) (Storn and Price 1997), etc. On the other hand, SIs are inspired by the animal behavior and social behavior. The typical Sis may include particle swarm optimization (PSO) (Kennedy and Eberhart 1995), artificial bee colony algorithm (ABC) (Karaboga and Basturk 2008), biogeography-based optimization (BBO) (Simon 2008), harmony search (HS) (Geem et al. 2001) and teaching–learning-based optimization (TLBO) (Rao et al. 2011, 2012; Rao 2015), etc. It has been proved that most of these algorithms can achieve good performance on handling different problems.

Among them, TLBO which simulates the teaching and learning behavior in a classroom is becoming more and more popular and was applied to engineering applications (Rao 2015), because it has several advantages, such as eliminating of tuning controlling parameters and rapid convergence capability.

However, TLBO has the chance to trap into local optimum when solving the complex problems. To avoid TLBO getting trapped in the local optimum, a large search range may be used in the early stage of the algorithm when solving some complex multimodal problems. In Rao and Patel (2012), Elitist TLBO modified the duplicate solutions to avoid local optima. In Zou et al. (2014), DGSTLBO generated several groups in the population and lets them learn independently to keep away from local optima. In Wang et al. (2014), NSTLBO made use of the information that comes from the neighborhood topology to avoid local optima. In Ye et al. (2015), a TLBO variant utilized bi-phase crossover scheme and special local search operators to balance exploration and exploitation. To improve the performance of TLBO, a new variant of the TLBO algorithm called collective information-based TLBO (CIBTLBO) is proposed in this paper. Collective intelligence is introduced in TLBO by utilizing the collective information of the m best learners to update the other learners in the teacher phase and the learner phase. Extensive experiments have been carried out on 28 benchmark functions derived from CEC 2013. The results show that the proposed scheme is able to enhance the performance of TLBO for most of the considered problems.

The remainder of this paper is organized as follows: Sect. 2 mainly describes the standard TLBO framework and some related works. Section 3 describes the detailed implementation of the proposed algorithm. In Sect. 4, the experiment based on the benchmark functions is conducted for verification. Finally, Sect. 5 concludes this paper.

2 Teaching–learning-based optimization and related works

2.1 TLBO

Inspired by the teaching and learning behaviors in the classroom, Rao et al. (2011, 2012) first proposed the teaching–learning-based optimization (TLBO). The process of TLBO can be divided into two phases: “Teacher Phase” and “Learner Phase.” In the teacher phase, the teacher helps the learners to acquire knowledge and improve their grades, while in the learner phase, learners start to learn from each other and improve their own grades. The working process of these two phases is described in details as follow.

2.1.1 Teacher phase

In this phase, a teacher is the learner with the best grades selected from the learners. The teacher tries to increase the average grades of the whole class by using his own knowledge, while the other learners get the knowledge by utilizing the difference between the teacher and the mean grade of the whole class.

Assume Teacher and Mean are the best solution in the class and the mean solution of all learners in the class, respectively. The difference between the Teacher and the Mean is obtained as (Rao et al. 2011, 2012):

For the ith learner Xi, the updating formula is given as (Rao et al. 2011; Rao et al. 2012):

where TF is the teaching factor and r is a random vector whose elements are random numbers in the domain [0, 1]. r has the same dimension as the Teacher. TF is determined using the following expression.

It’s clear that the value of TF can be either 1 or 2. The newXi replaces Xi if it has better fitness value.

2.1.2 Learner phase

In this phase, all learners try to enhance their grades by interacting with others. A learner randomly chooses another learner in the class, and he or she will learn new things if that learner has more knowledge than him or her. For the ith learner Xi, the updating formula is given by (Rao et al. 2011, 2012):

where r is a random vector whose elements are random numbers in the domain [0, 1]. r has the same dimension as Xi. j is a random integer in the domain (0, NP), NP is the size of the class and j is not equal to i. f(Xi) and f(Xj) are the fitness values of the learners Xi and Xj, respectively. The newXi replaces Xi if it has better fitness value.

2.2 Some related works

Since TLBO was first proposed by Rao et al. (2011, 2012), it received more and more attention and has been applied to solve real-world optimization problems due to advantages of simple and high efficiency. Therefore, several TLBO variants have been proposed. In Rao and Patel (2012), some elite solutions are employed to replace the worst solutions in the population after learner phase. The proposed Elitist TLBO was proved to be effective for solving complex constrained optimization problems. What is more, the Elitist TLBO can be easily applied to some real-world optimization problems or some problems with a large number of variables and objectives. And some experiments on unconstrained benchmark problems were reported in Rao and Patel (2013). Niknam et al. (2012) proposed a θ-multi-objective TLBO algorithm that uses phase angles instead of designed variables in the evolutionary process and apply it to solve the dynamic economic emission dispatch problem. In Venkata Rao and Patel (2013), a modified TLBO increases the number of teachers in order to make sure learners can learn from their teachers. The algorithm was used to solve multi-objective problems of heat exchangers successfully. In addition, the modified TLBO speeds up the convergence rate of the basic TLBO, outperforms GA approach on thermodynamic optimization and is easily customized for thermodynamic optimization with a large number of variables and objectives. Zou et al. (2014) presented a TLBO algorithm with dynamic group strategy, namely DGSTLBO, in which the learners are divided into several groups, and the teaching and learning behaviors are carried out in groups. DGSTLBO can make good use of local information and generate better quality solutions for the high-dimensional problems. Wang et al. (2014) proposed an improved TLBO with neighborhood search (NSTLBO) which is expected to get rid of the local optimal by introducing the concept of neighborhood topology. Xu et al. (2015) presented a TLBO algorithm that is to solve the flexible job-shop problem with fuzzy processing time, with the specially designed operations; the proposed TLBO obtains good performance. Ghasemi et al. (2015) proposed a TLBO using Lévy mutation strategy, namely LTLBO. The algorithm is suitable for solving optimal power flow (OPF) problems and is competitive among some published methods. Zou et al. (2014) proposed a bare-bones TLBO (BBTLBO) which incorporated a Gaussian sampling learning and neighborhood search strategy in the standard TLBO. In teacher phase, the proposed strategy can balance the exploration and the exploitation. In addition, it is more effective in generating better solutions in learner phase. Niknam et al. (2013) proposed a modified TLBO (MTLA), which added a “modified phase” to the standard TLBO. It exhibited the good ability to help learners escape from the local optima. In Rao and Patel (2014), MO-ITLBO was implemented by introducing tutorial training, self-motivated learning, external archive, and a grid-based approach was utilized to maintain population diversity. It achieved good performance in handling multi-objective optimization problems. In Patel and Savsani (2016) and Raja et al. (2016), a tutorial training and self-learning-inspired TLBO (TS-TLBO) was used for the multi-objective optimization of stirling heat engine and rotary regenerator. Ghasemi et al. (2014, 2015) demonstrated some TLBO variants’ applications in solving optimal power flow and reactive power dispatch problems.

Besides TLBO, PSO has been also widely used because of its good optimization abilities and simple framework. However, PSO also suffers from the premature convergence. Researchers have made lots of efforts to develop different PSO variants to improve PSO’s performance. Peram et al. (2003) presented an algorithm fitness-distance-ratio-based PSO (FDR-PSO). Target particles choose nearby particles with better fitness values to update themselves, and the ratio of the fitness values was used to guide the direction together with the information of distance. Liang et al. (2006) proposed comprehensive learning PSO (CLPSO) in which a particle can use the information from all other particles to update its velocity allowing particles to search a larger space. Huang et al. (2012) proposed an example-based learning PSO (ELPSO) to avoid the premature convergence of PSO. The algorithm uses a set of global best particles to update the target particle instead of using a single global best particle. The algorithm can achieve good convergence speed maintaining the population diversity. Qu et al. (2013) proposed a distance-based locally informed PSO (LIPS). LIPS uses some local best values and Euclidean distance-based neighborhood to guide the particles. Local search ability has been enhanced, which is demonstrated by the good performance in solving multimodal problems.

3 Proposed algorithm

3.1 Motivations

The canonical TLBO simulates the teaching and learning behaviors in a classroom. A teacher is selected from the learners, and he/she wants to help all learners to improve their knowledge. When canonical TLBO is used to solve some complex problems, it may easily get trapped in a local optimal. In order to improve the convergence ability of the canonical TLBO and avoid getting trapped in the local optimum, a collective information-based TLBO (CIBTLBO) is proposed. A new generation approach is proposed to improve the exploration capability of the algorithm together with the neighborhood topology. To balance the exploration and exploitation, some modifications are also required in the learner phase.

3.2 Proposed algorithm

Before introducing the proposed algorithm, some related background knowledge is presented. The concept of collective intelligence (CI) (Lévy 1997; McGonigal 2008) first came out in 1994. It was first presented by French philosopher P. Lévy to describe the influence of the internet on cultural and consumption of knowledge. CI uses the information from multiple individuals to solve problems rather than the information from single individual. In Wolpert and Tumer (1999), the emerging science of how to design a CI was surveyed. In Malone et al. (2009), a new framework was proposed to give a better interpretation on CI to finish a task; four key questions should be answered first (Who, Why, What and How). The different ways to finish tasks for crowds are shown in Table 1 (Malone et al. 2009). The good examples of CI can be found in Malone et al. (2009) and Heylighen (1999), for example, Google, Wikipedia, Threadless, social behavior of insects and the “invisible hand” of the market mechanism. In this paper, the concept of CI is introduced into TLBO for performance enhancement.

Inspired by the collective information concept proposed in DE (Zheng et al. 2017), a collective information vector Xci_vector,G is generated using

where i = 1,…, N, N is the population size, m is a random integer within the domain [1, i], Xj,G are m vectors that have smaller or equal fitness values than Xi,G ,and Xj,G are sorted in ascending order (for minimization problem). wj are weighting factors that determined by the following expression.

The smaller the fitness value is, the larger the weight factor is.

In human society, people interact with others and become more and more similar to others. They mainly influence or imitate the people around them, and they may become better or worse than before. Therefore, introducing a neighborhood topology is reasonable for improving the algorithm performance. There are different neighborhood topology structures (Wang et al. 2014; Kennedy 1999; Weber et al. 2011), such as ring, stars, pyramid and torus. For simplicity and further performance enhancement, the ring topology is adopted here. In the ring topology, individuals are arranged in a ring. Ring topology has a k-neighborhood radius; the neighborhood of each individual in the ring consists of 2k + 1 members (including itself). Figure 1 shows a ring topology of X1,G with 2-neighborhood radius.

Besides the above background knowledge, we also make some changes in both teacher phase and learner phase operations. The following parts show the details.

3.2.1 Modification in teacher phase

In teacher phase, the Difference_Mean consists of two parts: a local update part and a global update part. For the local update part, the best individual in a learner’s neighborhood is chosen as the neighborhood teacher (NTeacher), and two other individuals in the neighborhood are chosen randomly. NTeacher helps learners to know more about their neighbors and learn from the best neighbor.

The local update part is generated using the following expression.

where i ≠ p1 ≠ p2, p1 and p2 are the indexes in the neighborhood. r1 is a random vector that has the value within (0, 1).

The global update part is similar to the local update part. It uses a collective information vector to replace the neighborhood teacher, and the vector can be regarded as CITeacher. Two other individuals are selected randomly from the whole population. CITeacher helps learners to form a general impression of the whole class and do more exploration.

The global update part is generated using the following expression.

where i ≠ g1 ≠ g2, g1 and g2 are indexes in global, r2 is also random vector like r1.

Afterward, those two parts are combined together to update the ith learner Xi, G as follows.

where δ is also a weight factor (Das et al. 2009). δ can have many advanced models, such as linear model, exponential model and random model.

-

1.

Linear model

$$ \delta = {G \mathord{\left/ {\vphantom {G {G_{\text{Max}} }}} \right. \kern-0pt} {G_{\text{Max}} }} $$(11) -

2.

Exponential model

$$ \delta = {{\exp (G} \mathord{\left/ {\vphantom {{\exp (G} {G_{\text{Max}} }}} \right. \kern-0pt} {G_{\text{Max}} }} \cdot \ln (2)) - 1 $$(12) -

3.

Random model

$$ \delta = {\text{rand}}(0,1) $$(13)

The introduction of neighborhood topology and collective information vector are trying to improve the local search and global search capabilities. And the weight factor δ is used to adjust the contribution from local search and global search during the process.

3.2.2 Modification in learner phase

Since students can learn by themselves after class and get some improvement, this behavior is imitated in the proposed algorithm. Similar to the teacher phase, the collective information vector is also utilized. An additional item is added for both situations in learner phase, as given in the following expression.

where r is the same vector as that utilized in the basic learner phase, Xi, G is the current individual. After the learners learn from each other, each one has the chance to change one of its dimensions to that of the collective information vector with a small probability (self-mutation factor β).

where drand is a randomly chosen dimension.

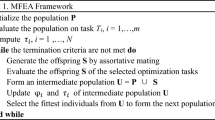

3.3 Pseudo-code for CIBTLBO

As described above, the proposed algorithm collective information-based TLBO (CIBTLBO) is presented in Algorithm 1. The proposed operation is highlighted using “\( \Leftarrow \)”.

4 Experiential study and discussion

In this section, some experiments are carried out to study the performance of the proposed CIBTLBO.

4.1 Test problems

Test problems come from CEC2013 competition (Liang et al. 2013), which consists of five unimodal functions (F1–F5), fifteen basic multimodal functions (F6–F20) and eight composition functions (F21–F28).

4.2 Performance measure

The performance of compared algorithms is evaluated by solution error value. The solution error value is recorded as zero if it is smaller than 10−8 (Liang et al. 2013). All compared algorithms need to run all functions in CEC2013 for 50 times. The mean values and the standard deviation values are calculated accordingly. The performance of all compared algorithms is measured by a Wilcoxon signed-rank test (Sheskin 2003) at a significance level of 0.05. Symbols “+”, “−” and “=” are used to indicate the results of the test. Symbol “+” means that the compared algorithms perform significantly better than the proposed algorithm, while symbols “−” and “=” mean significantly worse and similar, respectively. The best values of each function are highlighted in boldface.

4.3 Parameter settings

The algorithms for comparisons include TLBO (Rao et al. 2011, 2012), ELPSO (Huang et al. 2012), LIPS (Qu et al. 2013), DGSTLBO (Zou et al. 2014), Elitist-TLBO (Rao and Patel 2012), modified TLBO (Venkata Rao and Patel 2013), BBTLBO (Zou et al. 2014), MTLA (Niknam et al. 2013), MGBTLBO (Ghasemi et al. 2015), and DE (Storn and Price 1997). Due to the space limitation, the table of each experiment is divided into two parts. The parameter settings of the algorithms are as follow.

-

1.

The population size (NP) of ELPSO and LIPS is set as 40. The population size of the other algorithms is 100.

-

2.

The refreshing gap of ELPSO is 7.

-

3.

For DGSTLBO, the group size is 5, regrouping period is 5, and learning probability is set to 0.5.

-

4.

The elite size of Elitist-TLBO is 8.

-

5.

The teacher number of modified TLBO is 3.

-

6.

Neighborhood radius k = 2, weight factor δ uses exponential model, self-mutation factor β = 0.2 for CIBTLBO.

-

7.

The number of maximum function evaluation (FEs) is 150,000 and the number of independent runs is 50.

4.4 Comparison with other algorithms

In this subsection, the performance of proposed CIBTLBO is compared with the above-listed algorithms by evaluating the functions in CEC2013, and the dimension of functions is set to 10, 30, 50 and 100 (10-D, 30-D, 50-D and 100-D).

4.4.1 Results for 10-D problems

From Tables 2 and 3, it can be observed that DE achieves the best performance on unimodal functions and composition functions, LIPS and Elitist-TLBO win on 3 multimodal functions, BBTLBO wins on 2 multimodal functions, while ELPSO, modified TLBO and DGSTLBO win on 1 function, respectively. The proposed algorithm CIBTLBO also achieves good results on multimodal functions and wins on 4 functions (F11, F12, F13, F20). Besides DE, both CIBTLBO and LIPS achieve good performance on composition functions. Figure 2 shows the convergence curves of some compared algorithms on some functions of 10-D in terms of the minimum mean fitness values over 50 runs.

4.4.2 Results for 30-D problems

From Tables 4 and 5, it can be concluded that the proposed CIBTLBO and DE achieve the best results on most of the benchmark functions. For unimodal functions, DE (F3, F5) and MTLA (F4, F5) win on 2 functions, while TLBO and CIBTLBO win on 1 function. For multimodal functions, CIBTLBO (F12, F13, F19, F20) and LIPS (F15, F16, F17, F18) win on 4 functions; ELPSO, DE and MGBTLBO win on 2 functions, respectively. For composition functions, DE (F24, F25, F27) win on 3 functions, while CIBTLBO and LIPS win on 2 functions, respectively. For the 30-D problems, LIPS demonstrate its good capability of solving multimodal functions, DE shows some good results on composition functions, and CIBTLBO exhibits good performance on multimodal functions and take the second place on composition functions. The exploration capability of TLBO is maintained. This is mainly contributed by the introduction of NTeacher and CITeacher, which can help the algorithm to search for the optimal point on the complex problems. Some convergence curves of some compared algorithms on some functions of 30-D in terms of the minimum mean fitness values over 50 runs are shown in Fig. 3.

4.4.3 Results for 50-D problems

By comparing the data in Tables 6 and 7 with those in the above tables, it can be found that both the mean value and standard deviation in Tables 6 and 7 are larger than the corresponding values in the above tables. This is because the difficulty of solving the problems increases with the increasing dimension. For 50-D problems, the proposed CIBTLBO wins on 5 functions (F1, F12, F13, F19, F20), Elitist-TLBO (F8, F11, F14, F17, F19, F22, F23) wins on 7 functions, DE (F3, F5, F6, F7, F10, F24, F25, F28) wins on 8 functions, MTLA (F4, F16, F18, F20) wins on 4 functions, and LIPS (F15, F21) wins on 2 functions. BBTLBO (F20), ELPSO (F27), modified TLBO (F26), TLBO (F2) and DGSTLBO (F8) win on 1 function, respectively. It can be observed that Elitist-TLBO achieves good performance on multimodal functions; CIBTLBO exhibits the best performance on multimodal functions and performs well on some composition functions (F24, F25, F27, F28). For the high-dimension (50-D) problem, the introduced teachers (NTeacher and CITeacher) demonstrate their capability in global searching, which help to find the global optimum and avoid trapping into local optimum. Figure 4 shows some convergence curves of some compared algorithms on some functions of 50-D in terms of the minimum mean fitness values over 50 runs.

4.4.4 Results for 100-D problems

From the test data for 100-D problems given in Tables 8 and 9, it can be observed that DE (F1, F3, F5, F6, F10, F13, F18, F19, F24) win on 9 functions, ELPSO (F4, F9, F25, F26, F27, F28) win on 6 functions, CIBTLBO (F7, F12, F13, F17, F27) and Elitist-TLBO (F11, F14, F17, F21, F22) win on 5 functions, respectively. LIPS (F15, F21) wins on 2 functions, and both TLBO (F2) and MTLA (F16) win on 1 function. CIBTLBO, Elitist-TLBO and DE mainly have some good performance on multimodal functions. ELPSO show some good results on the last few composition functions. Although CIBTLBO can not win the first prize on composition functions, it performs the best on most of the composition functions except ELPSO. The excellent properties of CIBTLBO on solving 100-D problems benefits from the introduction of CITeahcer and NTeacher, because they can collect the information from the “class” and help to learn more about the search area and avoid local optimum.

4.4.5 Experimental discussion

From the above experiments, CIBTLBO is found to achieve good performance. It is mainly contributed by the collective intelligence and neighborhood topology. In the teacher phase, learners can obtain information from the whole class, which helps them to know more about the class. At the same time, they can also use the information from its neighbors. In the learner phase, learners not only learn from another learner, but also collect some information from the class. A good balance between exploration and exploitation is achieved.

5 Conclusions

This paper proposed a collective information-based TLBO (CIBTLBO) algorithm, which introduces the concept of collective intelligence and the neighborhood topology. CITeacher and NTeacher are generated to enhance both global and local search together with new teaching and learning techniques. Experimental results on CEC2013 benchmark functions have shown that the proposed algorithm CIBTLBO is effective on handling different functions including multimodal and composition functions when comparing with the previous related works.

References

Das S, Abraham A, Chakraborty UK, Konar A (2009) Differential evolution using a neighborhood-based mutation operator. IEEE Trans Evol Comput 13(3):526–553

Fogel LJ (1994) Evolutionary programming in perspective: the top-down view. In: Computational intelligence: imitating life. IEEE Press, Piscataway

Geem ZW, Kim JH, Loganathan GV (2001) A new heuristic optimization algorithm: harmony search. Simulation 76(2):60–70

Ghasemi M, Ghavidel S, Rahmani S, Roosta A, Falah H (2014) A novel hybrid algorithm of imperialist competitive algorithm and teaching learning algorithm for optimal power flow problem with non-smooth cost functions. Eng Appl Artif Intell 29:54–69

Ghasemi M, Ghavidel S, Gitizadeh M, Akbari E (2015a) An improved teaching–learning-based optimization algorithm using Lévy mutation strategy for non-smooth optimal power flow. Int J Electr Power Energy Syst 65:375–384

Ghasemi M, Taghizadeh M, Ghavidel S, Aghaei J, Abbasian A (2015b) Solving optimal reactive power dispatch problem using a novel teaching–learning-based optimization algorithm. Eng Appl Artif Intell 39:100–108

Goldberg DE (1989) Genetic algorithms in search optimization and machine learning. Addison-Wesley, Reading

Han Huang H, Qin ZH, Lim A (2012) Example-based learning particle swarm optimization for continuous optimization. Inf Sci 182(1):125–138

Heylighen F (1999) Collective intelligence and its implementation on the web: algorithms to develop a collective mental map. Comput Math Organ Theory 5(3):253–280

Karaboga D, Basturk B (2008) On the performance of artificial bee colony (ABC) algorithm. Appl Soft Comput 8(1):687–697

Kennedy J (1999) Small worlds and mega-minds: effects of neighborhood topology on particle swarm performance. In: Proceedings of the 1999 congress on evolutionary computation-CEC99 (Cat. No. 99TH8406), Washington, DC, vol 3, pp 1938

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural network, pp 1942–1948

Lévy P (1997) Collective intelligence. Harper Collins, New York

Liang JJ, Qin AK, Suganthan PN, Baskar S (2006) Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evol Comput 10(3):281–295

Liang JJ, Qu BY, Suganthan PN, Hernández-Díaz AG (2013) Problem definitions and evaluation criteria for the CEC 2013 special session on real-parameter optimization, Technical report. Nanyang Technological University

Malone TW, Laubacher R, Dellarocas CN (2009) Harnessing crowds: mapping the genome of collective intelligence. MIT Sloan Research Paper 4732-09

McGonigal J (2008) Why i love bees: a case study in collective intelligence gaming. In: Salen K (ed) The ecology of games: connecting youth, games, and learning. MIT Press, Cambridge, MA, pp 199–227

Niknam T, Golestaneh F, Sadeghi MS (2012) θ-Multiobjective teaching–learning-based optimization for dynamic economic emission dispatch. IEEE Syst J 6(2):341–352

Niknam T, Azizipanah-Abarghooee R, Aghaei J (2013) A new modified teaching–learning algorithm for reserve constrained dynamic economic dispatch. IEEE Trans Power Syst 28(2):749–763

Patel V, Savsani V (2016) Multi-objective optimization of a stirling heat engine using TS-TLBO (tutorial training and self learning inspired teaching–learning based optimization) algorithm. Energy 95:528–541

Peram T, Veeramachaneni K, Mohan CK (2003) Fitness-distance-ratio based particle swarm optimization. In: Swarm intelligence symposium, SIS ‘03. Proceedings of the 2003 IEEE, pp 174–181

Qu BY, Suganthan PN, Das S (2013) A distance-based locally informed particle swarm model for multimodal optimization. IEEE Trans Evol Comput 17(3):387–402

Raja BD, Jhala RL, Patel V (2016) Multi-objective optimization of a rotary regenerator using tutorial training and self-learning inspired teaching–learning based optimization algorithm (TS-TLBO). Appl Therm Eng 93:456–467

Rao RV (2015) Teaching–learning-based optimization algorithm and its engineering applications. Springer, London

Rao RV, Patel V (2012) An elitist teaching–learning-based optimization algorithm for solving complex constrained optimization problems. Int J Ind Eng Comput 3(4):535–560

Rao R, Patel V (2013) Comparative performance of an elitist teaching–learning-based optimization algorithm for solving unconstrained optimization problems. Int J Ind Eng Comput 4(1):29–50

Rao RV, Patel V (2014) A multi-objective improved teaching–learning based optimization algorithm for unconstrained and constrained optimization problems. Int J Ind Eng Comput 5(1):1–22

Rao RV, Savsani VJ, Vakharia DP (2011) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Rao RV, Savsani VJ, Vakharia DP (2012) Teaching–learning-based optimization: an optimization method for continuous non-linear large scale problems. Inf Sci 183(1):1–15

Sheskin D (2003) Handbook of parametric and nonparametric statistical procedures. Chapman & Hall, London

Simon D (2008) Biogeography-based optimization. IEEE Trans Evol Comput 12(6):702–713

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359

Venkata Rao R, Patel V (2013) Multi-objective optimization of heat exchangers using a modified teaching–learning-based optimization algorithm. Appl Math Model 37(3):1147–1162

Wang L, Zou F, Hei X, Yang D, Chen D, Jiang Q (2014) An improved teaching–learning-based optimization with neighborhood search for applications of ANN. Neurocomputing 143:231–247

Weber M, Neri F, Tirronen V (2011) A study on scale factor in distributed differential evolution. Inf Sci 181(12):2488–2511

Wolpert DH, Tumer K (1999) An introduction to collective intelligence. arXiv preprint cs/9908014

Xu Y, Wang L, Wang S, Liu M (2015) An effective teaching–learning-based optimization algorithm for the flexible job-shop scheduling problem with fuzzy processing time. Neurocomputing 148:260–268

Zheng LM, Zhang SX, Tang KS, Zheng SY (2017) Differential evolution powered by collective information. Inf Sci 399:13–29

Zou F, Wang L, Hei XH, Chen DB, Yang DD (2014a) Teaching–learning-based optimization with dynamic group strategy for global optimization. Inf Sci 273(8):112–131

Zou F, Wang L, Hei X, Chen D, Jiang Q, Li H (2014b) Bare-bones teaching–learning-based optimization. Sci World J 2014(4):1–17

Funding

This study was funded by the National Natural Science Foundation of China (No. 61671485) and Guangdong Natural Science Foundation (No. 2015A030312010).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Peng, Z.K., Zhang, S.X., Zheng, S.Y. et al. Collective information-based teaching–learning-based optimization for global optimization. Soft Comput 23, 11851–11866 (2019). https://doi.org/10.1007/s00500-018-03741-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-018-03741-2