Abstract

This paper addresses characterizations of integral input-to-state stability (iISS) for hybrid systems. In particular, we give a Lyapunov characterization of iISS unifying and generalizing the existing theory for pure continuous-time and pure discrete-time systems. Moreover, iISS is related to dissipativity and detectability notions. Robustness of iISS to sufficiently small perturbations is also investigated. As an application of our results, we provide a maximum allowable sampling period guaranteeing iISS for sampled-data control systems with an emulated controller.

1 Introduction

Stability analysis of nonlinear systems in the presence of exogenous inputs has attracted considerable attention over the last few decades. In particular, Sontag [22] introduced the notion of input-to-state stability (ISS), which can be viewed as a generalization of \(H_\infty \) stability to nonlinear systems. Many applications of ISS to the analysis and design of feedback systems have been reported [25]. A variant of the ISS notion, extending \(H_2\) stability to nonlinear systems, was introduced in [24]. This generalization, called integral input-to-state stability (iISS), was studied for continuous-time systems in [4] and subsequently for discrete-time systems in [1]. For stability with respect to compact sets, it has been established that iISS is more general than ISS: every ISS system is also iISS, while the converse is not necessarily true [24].

Many dynamical systems cannot be described by differential or difference equations alone. This gives rise to the so-called hybrid systems, which combine continuous-time (flow) and discrete-time (jump) behaviors. Significant contributions to the modeling of hybrid systems have been made in [10]. In particular, [10] develops a framework that not only models a wide range of hybrid systems but also allows the study of their stability and robustness.

This paper investigates iISS for hybrid systems modeled in the framework of [10]. The notion of iISS is well understood for switched and impulsive systems (cf. [15] and [11] for more details). However, to the best of our knowledge, no generalization of iISS that applies to a broad class of hybrid systems has been developed yet. To this end, we provide a Lyapunov characterization of iISS that unifies and generalizes the existing theory for pure continuous-time and pure discrete-time systems. Furthermore, we relate iISS to dissipativity and detectability notions. We also establish robustness of the iISS property to vanishing perturbations. Finally, we illustrate our results by determining a maximum allowable sampling period (MASP) that guarantees iISS for sampled-data systems with an emulated controller. More precisely, we show the following: if a continuous-time controller renders the closed-loop system iISS, then the iISS property is preserved under an emulation-based digital implementation, provided that the sampling period is smaller than the corresponding MASP.

The rest of this paper is organized as follows. Section 2 introduces our notation. Section 3 describes hybrid systems, their solutions, and the stability notions used in the paper. The main results are presented in Sect. 4. Section 5 establishes the iISS property of sampled-data control systems, and Sect. 6 provides concluding remarks.

2 Notation

In this paper, \(\mathbb R_{\ge 0}\) (\(\mathbb R_{> 0}\)) and \({\mathbb {Z}}_{\ge 0}\) (\({\mathbb {Z}}_{> 0}\)) denote the sets of nonnegative (positive) real numbers and nonnegative (positive) integers, respectively. \(\mathbb {B}\) is the open unit ball in \(\mathbb R^{n}\). The standard Euclidean norm is denoted by \(\left| \cdot \right| \). Given a set \({\mathcal {A}}\subset \mathbb R^{n}\), \(\overline{{\mathcal {A}}}\) denotes its closure. For a closed set \({\mathcal {A}}\subset \mathbb R^{n}\) and any point \(x \in \mathbb R^{n}\), \(\left| x\right| _{\mathcal {A}} := \inf \nolimits _{y \in {\mathcal {A}}}\left| x-y\right| \) denotes the distance from x to \({\mathcal {A}}\). Given an open set \(\mathcal {X} \subset \mathbb R^{n}\) containing a compact set \({\mathcal {A}}\), a function \(\omega :\mathcal {X} \rightarrow \mathbb R_{\ge 0}\) is a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\) if the following hold: \(\omega \) is continuous; \(\omega (x)=0\) if and only if \(x\in {\mathcal {A}}\); and \(\omega (x_i) \rightarrow +\infty \) when either \(x_i\) tends to the boundary of \(\mathcal {X}\) or \(\left| x_i\right| \rightarrow +\infty \). The identity function is denoted by \({{\mathrm{id}}}\). Composition of functions from \(\mathbb R\) to \(\mathbb R\) is denoted by the symbol \(\circ \).
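The following hypothetical example, which is our own and not taken from the paper, makes the distance \(\left| x\right| _{\mathcal {A}}\) and the notion of a proper indicator concrete. Let \({\mathcal {A}}\) be the closed unit ball and \(\mathcal {X}\) the open ball of radius 2.

- The distance is \(\left| x\right| _{\mathcal {A}} = \max \{\left| x\right| - 1, 0\}\).
- The function \(\omega (x) := \left| x\right| _{\mathcal {A}}/(2-\left| x\right| )\) is continuous on \(\mathcal {X}\) and vanishes exactly on \({\mathcal {A}}\).
- It grows without bound as x approaches the boundary of \(\mathcal {X}\).

The sketch below evaluates this candidate \(\omega \) at a few points.

```python
import math

def norm(x):
    """Euclidean norm of a point given as a sequence of floats."""
    return math.sqrt(sum(xi * xi for xi in x))

def dist_to_unit_ball(x):
    """|x|_A for the hypothetical A = closed unit ball: inf over y in A of |x - y|."""
    return max(norm(x) - 1.0, 0.0)

def omega(x, radius=2.0):
    """Hypothetical proper indicator for A on X = {x : |x| < radius}, radius > 1."""
    r = norm(x)
    if r >= radius:
        raise ValueError("x does not belong to the open set X")
    return dist_to_unit_ball(x) / (radius - r)

print(omega([0.0, 0.5]), omega([1.5, 0.0]), omega([1.999, 0.0]))
```

The first value is zero because the point lies in \({\mathcal {A}}\). The last value is large because the point is close to the boundary of \(\mathcal {X}\).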

A function \(\alpha :\mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) is said to be positive definite (\(\alpha \in \mathcal {PD}\)) if it is continuous, zero at zero and positive elsewhere. A positive definite function \(\alpha :\mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) is of class-\(\mathcal {K}\) (\(\alpha \in \mathcal {K}\)) if it is strictly increasing. It is of class-\(\mathcal {K}_\infty \) (\(\alpha \in \mathcal {K}_\infty \)) if \(\alpha \in \mathcal {K}\) and, in addition, \(\alpha (s) \rightarrow +\infty \) as \(s \rightarrow +\infty \). A continuous function \(\gamma :\mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) is of class-\(\mathcal {L}\) (\(\gamma \in \mathcal {L}\)) if it is nonincreasing and \(\lim _{s \rightarrow +\infty } \gamma (s) = 0\). A function \(\beta :\mathbb R_{\ge 0}\times \mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) is of class-\(\mathcal {KL}\) (\(\beta \in \mathcal {KL}\)) if, for each \(s \ge 0\), \(\beta (\cdot ,s) \in \mathcal {K}\), and, for each \(r \ge 0\), \(\beta (r,\cdot ) \in \mathcal {L}\). A function \(\beta :\mathbb R_{\ge 0}\times \mathbb R_{\ge 0}\times \mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) is of class-\(\mathcal {KLL}\) (\(\beta \in \mathcal {KLL}\)) if, for each \(s \ge 0\), \(\beta (\cdot ,s,\cdot ) \in \mathcal {KL}\) and \(\beta (\cdot ,\cdot ,s) \in \mathcal {KL}\). The interested reader is referred to [12] for more details about comparison functions.
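The defining properties of these classes can be sanity-checked numerically. The helpers below are hypothetical and not part of the paper. They test the monotonicity requirements for \(\beta (r,s) = r e^{-s}\) on finite grids.

A grid test can only refute membership in \(\mathcal {KL}\); it can never prove it. For this \(\beta \), membership is clear analytically. Fixing either of the last two arguments of \(r e^{-s-\tau }\) yields a positive multiple of the same function, so the \(\mathcal {KLL}\) case reduces to this computation.

```python
import math

def is_class_K_on_grid(alpha, grid):
    """Necessary check for class K: alpha(0) = 0 and strictly increasing on the grid."""
    values = [alpha(s) for s in grid]
    return values[0] == 0.0 and all(a < b for a, b in zip(values, values[1:]))

def is_class_L_on_grid(gamma, grid, tol=1e-6):
    """Necessary check for class L: nonincreasing on the grid and near 0 at its end."""
    values = [gamma(s) for s in grid]
    return all(a >= b for a, b in zip(values, values[1:])) and values[-1] <= tol

def beta(r, s):
    """Candidate class-KL function beta(r, s) = r * exp(-s)."""
    return r * math.exp(-s)

grid_r = [0.1 * i for i in range(51)]   # r in [0, 5]; grid_r[0] == 0
grid_s = [0.5 * i for i in range(61)]   # s in [0, 30]

ok_K = all(is_class_K_on_grid(lambda r: beta(r, s), grid_r) for s in grid_s)
ok_L = all(is_class_L_on_grid(lambda s: beta(r, s), grid_s) for r in grid_r)
print(ok_K, ok_L)
```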

3 Hybrid systems and stability definitions

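Consider the hybrid system with state \(x \in \mathcal {X}\) and input \(u \in \mathcal {U} \subset \mathbb R^d\) given by

\[ \mathcal {H}: \quad \begin{cases} \dot{x} = f(x,u), & (x,u) \in \mathcal {C}, \\ x^{+} = g(x,u), & (x,u) \in \mathcal {D}. \end{cases} \tag{1} \]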

The flow and jump sets are designated by \(\mathcal {C}\) and \(\mathcal {D}\), respectively. We denote the system (1) by the 6-tuple \(\mathcal {H}=(f,g,\mathcal {C},\mathcal {D},\mathcal {X},\mathcal {U})\). Following [6], we impose the following basic regularity conditions on \(\mathcal {H}\):

- (A1) \(\mathcal {X}\subset \mathbb R^{n}\) is open, \(\mathcal {U} \subset \mathbb R^d\) is closed, and \(\mathcal {C}\) and \(\mathcal {D}\) are relatively closed sets in \(\mathcal {X}\times \mathcal {U}\).
- (A2) \(f :\mathcal {C}\rightarrow \mathbb R^{n}\) and \(g :\mathcal {D}\rightarrow \mathcal {X}\) are continuous.
- (A3) For each \(x \in \mathcal {X}\) and each \(\epsilon \ge 0\), the set \(\{ f(x,u) \mid u \in \mathcal {U} \cap \epsilon \overline{\mathbb {B}} \}\) is convex.

We refer to assumptions (A1)–(A3) as the Standing Assumptions. The Standing Assumptions guarantee the well-posedness of \(\mathcal {H}\) (cf. [10, Chapter 6] for more details). Throughout the paper, we suppose that the Standing Assumptions hold unless otherwise stated.

The following definitions are needed in the sequel. A subset \(E \subset \mathbb R_{\ge 0}\times {\mathbb {Z}}_{\ge 0}\) is called a compact hybrid time domain if \(E=\bigcup _{j=0}^{J} ([t_j , t_{j+1}],j)\) for some finite sequence of real numbers \(0 = t_0 \le \cdots \le t_{J+1}\). We say that E is a hybrid time domain if, for each pair \((T,J) \in E\), the set \(E \cap ([0,T] \times \{ 0,1, \dots ,J \})\) is a compact hybrid time domain. Each hybrid time domain E carries a natural ordering of its points. Given \((t,j), (t^\prime ,j^\prime ) \in E\), we write \((t,j) \preceq (t^\prime ,j^\prime )\) if \(t+j \le t^\prime + j^\prime \), and \((t,j) \prec (t^\prime ,j^\prime )\) if \(t+j < t^{\prime }+j^{\prime }\). Given a hybrid time domain E, we define its length as \(\mathrm {length}(E) := {{\sup }_t} E + {{\sup }_j} E\).

The operations \({{\sup }_t}\) and \({{\sup }_j}\) on a hybrid time domain E return the supremum of the \(\mathbb R\) and \({\mathbb {Z}}\) coordinates, respectively, of points in E. A function defined on a hybrid time domain is called a hybrid signal. Let \(x : \mathrm {dom}\,x \rightarrow \mathcal {X}\) be a hybrid signal and let \(s \in \left[ 0, {{\sup }_t} \mathrm {dom}\,x \right] \backslash \{+\infty \}\). Then i(s) denotes the maximum index i such that \((s,i) \in \mathrm {dom}\,x\), that is, \(i(s) := \max \{ i \in {\mathbb {Z}}_{\ge 0}:(s,i) \in \mathrm {dom}\,x \}\). A hybrid signal \(x :\mathrm {dom}\,x \rightarrow \mathcal {X}\) is a hybrid arc if, for each \(j \in {\mathbb {Z}}_{\ge 0}\), the function \(t \mapsto x(t,j)\) is locally absolutely continuous on the interval \(I^{j} := \{ t :(t,j) \in \mathrm {dom}\,x \}\). A hybrid signal \(u :\mathrm {dom} \, u \rightarrow \mathcal {U}\) is a hybrid input if, for each \(j \in {\mathbb {Z}}_{\ge 0}\), \(u(\cdot ,j)\) is Lebesgue measurable and locally essentially bounded.
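The hybrid time domain notions above can be encoded directly for a compact hybrid time domain. The sketch below is our own illustration. It stores the jump times \(0 = t_0 \le t_1 \le \cdots \le t_{J+1}\) and assumes the convention \(\mathrm {length}(E) = {{\sup }_t} E + {{\sup }_j} E\).

It implements:

- membership in E;
- the natural ordering \(\preceq \);
- \({{\sup }_t}\), \({{\sup }_j}\) and the index \(i(s)\);
- the points \((t,j) \in E\) with \((t,j+1) \in E\), at which a jump occurs.

The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class CompactHybridTimeDomain:
    """E = union_{j=0..J} ([t_j, t_{j+1}], j) with 0 = t_0 <= t_1 <= ... <= t_{J+1}."""
    times: List[float]  # [t_0, t_1, ..., t_{J+1}]

    def __post_init__(self) -> None:
        if len(self.times) < 2 or self.times[0] != 0.0:
            raise ValueError("need t_0 = 0 and at least one interval")
        if any(a > b for a, b in zip(self.times, self.times[1:])):
            raise ValueError("jump times must be nondecreasing")

    def sup_t(self) -> float:
        return self.times[-1]

    def sup_j(self) -> int:
        return len(self.times) - 2  # this is J

    def length(self) -> float:
        # Assumed convention: length(E) = sup_t E + sup_j E.
        return self.sup_t() + self.sup_j()

    def contains(self, t: float, j: int) -> bool:
        """(t, j) is in E iff 0 <= j <= J and t_j <= t <= t_{j+1}."""
        return 0 <= j <= self.sup_j() and self.times[j] <= t <= self.times[j + 1]

    def i_of(self, s: float) -> int:
        """i(s) = max{ i : (s, i) in E } for s in [0, sup_t E]."""
        indices = [i for i in range(self.sup_j() + 1) if self.contains(s, i)]
        if not indices:
            raise ValueError("s lies outside [0, sup_t E]")
        return max(indices)

    def jump_points(self) -> List[Tuple[float, int]]:
        """Points (t, j) in E such that (t, j + 1) is also in E."""
        return [(self.times[j + 1], j) for j in range(self.sup_j())]

def precedes_eq(a: Tuple[float, int], b: Tuple[float, int]) -> bool:
    """Natural ordering: (t, j) precedes-or-equals (t', j') iff t + j <= t' + j'."""
    return a[0] + a[1] <= b[0] + b[1]

# Jumps at t = 1.0 (two in a row) and at t = 2.5; the last interval ends at t = 3.0.
E = CompactHybridTimeDomain([0.0, 1.0, 1.0, 2.5, 3.0])
print(E.sup_t(), E.sup_j(), E.length(), E.i_of(1.0), E.jump_points())
```

Two consecutive equal jump times produce a degenerate interval \([1,1] \times \{1\}\). This is why \(i(1.0)\) is 2 rather than 0.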

Let a hybrid signal \(v :\mathrm {dom} \, v \rightarrow \mathbb R^{n}\) be given, and let \((t,j) \in \mathrm {dom} \, v\) with \((0,0) \prec (t,j)\). Let \({\varGamma }(v)\) denote the set of all \((t^{\prime },j^{\prime }) \in \mathrm {dom} \, v\) such that \((t^{\prime },j^{\prime }+1) \in \mathrm {dom} \, v\), that is, the points of \(\mathrm {dom}\,v\) at which a jump occurs. Define

Let \(\gamma _1,\gamma _2 \in \mathcal {K}\) and let \(u :\mathrm {dom}\, u \rightarrow \mathcal {U}\) be a hybrid input such that for all \((t,j) \in \mathrm {dom}\,u\) the following hold

We denote the set of all such hybrid inputs by \(\mathcal {L}_{\gamma _1,\gamma _2}\). If, in addition, \(\left\| u_{(t,j)}\right\| _{\gamma _1,\gamma _2} < r\) for some \(r > 0\) and all \((t,j) \in \mathrm {dom}\,u\), we write \(u \in \mathcal {L}_{\gamma _1,\gamma _2} (r)\). Let \(u :\mathrm {dom}\,u \rightarrow \mathcal {U}\) be a hybrid input. For each \(T \in \left[ 0, \mathrm {length} (\mathrm {dom}\,u) \right] \backslash \{+\infty \}\), the hybrid input \(u_T :\mathrm {dom}\,u \rightarrow \mathcal {U}\) is defined by

and is called the T-truncation of u. The set \(\mathcal {L}_{\gamma _1,\gamma _2}^e\) \(\left( \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\right) \) consists of all hybrid inputs \(u(\cdot ,\cdot )\) with the property that for all \(T \in [0,\infty )\), \(u_T \in \mathcal {L}_{\gamma _1,\gamma _2} \left( u_T \in \mathcal {L}_{\gamma _1,\gamma _2} (r) \right) \), and is called the extended \(\mathcal {L}_{\gamma _1,\gamma _2}\)-space.

A hybrid arc \(x :\mathrm {dom}\,x \rightarrow \mathcal {X}\) and a hybrid input \(u :\mathrm {dom}\,u \rightarrow \mathcal {U}\) form a solution pair (x, u) to \(\mathcal {H}\) if \(\mathrm {dom}x = \mathrm {dom}u\), \((x(0,0),u(0,0)) \in \mathcal {C}\cup \mathcal {D}\), and

- for each \(j \in {\mathbb {Z}}_{\ge 0}\) such that \(I^{j}\) has nonempty interior, \((x(t,j),u(t,j)) \in \mathcal {C}\) and \(\dot{x}(t,j)=f(x(t,j),u(t,j))\) for almost all \(t \in I^j\);
- for all \((t,j) \in {\varGamma }(x)\), \((x(t,j),u(t,j)) \in \mathcal {D}\) and \(x(t,j+1)=g(x(t,j),u(t,j))\).

A solution pair (x, u) to \(\mathcal {H}\) is maximal if it cannot be extended, and complete if \(\mathrm {dom}\,x\) is unbounded. A maximal solution to \(\mathcal {H}\) with the initial condition \(\xi := x(0,0)\) and the input u is denoted by \(x(\cdot ,\cdot ,\xi ,u)\). The set of all maximal solution pairs (x, u) to \(\mathcal {H}\) with \(\xi := x(0,0) \in \mathcal {X}\) is designated by \(\varrho ^u (\xi )\).
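The solution-pair conditions can be mimicked numerically. The sketch below is our own illustration, not the paper's method. It approximates flows by forward Euler steps and applies the jump map whenever \((x,u) \in \mathcal {D}\).

On \(\mathcal {C}\cap \mathcal {D}\) the sketch always jumps. The framework allows either choice there, so the sketch picks one of possibly many solutions.

All data are hypothetical:

- flow map \(f(x,u)=u\) on \(\mathcal {C}=\{x \le 1\}\);
- jump map \(g(x,u)=0\) on \(\mathcal {D}=\{x \ge 1\}\);
- constant input \(u \equiv 1\).

The recorded pairs \((t,j)\) approximate \(\mathrm {dom}\,x\).

```python
from typing import Callable, List, Tuple

Arc = List[Tuple[float, int, float]]  # samples (t, j, x(t, j))

def simulate(f: Callable[[float, float], float],
             g: Callable[[float, float], float],
             in_C: Callable[[float, float], bool],
             in_D: Callable[[float, float], bool],
             u: Callable[[float, int], float],
             x0: float, t_end: float, dt: float = 1e-2,
             max_jumps: int = 100) -> Arc:
    """Forward-Euler sketch of one solution pair; jumps take priority on C and D overlap."""
    t, j, x = 0.0, 0, x0
    arc: Arc = [(t, j, x)]
    while t < t_end and j < max_jumps:
        uk = u(t, j)
        if in_D(x, uk):
            x = g(x, uk)             # jump: (t, j) -> (t, j + 1), t unchanged
            j += 1
        elif in_C(x, uk):
            x = x + dt * f(x, uk)    # flow step: (t, j) -> (t + dt, j)
            t += dt
        else:
            break                    # (x, u) left both C and D: the solution stops
        arc.append((t, j, x))
    return arc

# Hypothetical data: xdot = u on C = {x <= 1}, x+ = 0 on D = {x >= 1}, u = 1.
arc = simulate(f=lambda x, u: u, g=lambda x, u: 0.0,
               in_C=lambda x, u: x <= 1.0, in_D=lambda x, u: x >= 1.0,
               u=lambda t, j: 1.0, x0=0.0, t_end=3.5)
print("number of jumps:", arc[-1][1])
```

With these data, the state ramps up to 1, is reset to 0, and repeats. On the horizon \(t \le 3.5\), the recorded domain therefore contains three jumps. The cap `max_jumps` guards against Zeno behavior.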

3.1 Stability notions

Given the system \(\mathcal {H}\) and a nonempty compact set \({\mathcal {A}}\subset \mathcal {X}\), the set \({\mathcal {A}}\) is called

- 0-input pre-stable if, for any \(\epsilon > 0\), there exists \(\delta > 0\) such that each solution pair \((x,0) \in \varrho ^u (\xi )\) with \(\left| \xi \right| _{\mathcal {A}}\le \delta \) satisfies \(\left| x(t,j,\xi ,0)\right| _{\mathcal {A}}\le \epsilon \) for all \((t,j) \in \mathrm {dom}\,x\).
- 0-input pre-attractive if there exists \(\delta > 0\) such that each solution pair \((x,0) \in \varrho ^u (\xi )\) with \(\left| \xi \right| _{\mathcal {A}}\le \delta \) is bounded (with respect to \(\mathcal {X}\)) and, if it is complete, satisfies \(\lim _{(t,j) \in \mathrm {dom}\, x , \, t+j \rightarrow +\infty } \left| x(t,j,\xi ,0)\right| _{\mathcal {A}} = 0\).
- 0-input pre-asymptotically stable (pre-AS) if it is both 0-input pre-stable and 0-input pre-attractive.
- 0-input asymptotically stable (AS) if it is 0-input pre-AS and there exists \(\delta > 0\) such that each solution pair \((x,0) \in \varrho ^u (\xi )\) with \(\left| \xi \right| _{\mathcal {A}}\le \delta \) is complete.

The prefix "pre-" emphasizes that maximal solutions are not required to be complete. If all maximal solutions are complete, we drop the prefix.

Definition 1

Let \({\mathcal {A}}\subset \mathcal {X}\) be a compact set, and let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\). The hybrid system \(\mathcal {H}\) is said to be pre-integral input-to-state stable (pre-iISS) with respect to \({\mathcal {A}}\) if there exist \(\alpha \in \mathcal {K}_\infty \), \(\gamma _1,\gamma _2 \in \mathcal {K}\) and \({\tilde{\beta }} \in \mathcal {KLL}\) with the following property. For all \(\xi \in \mathcal {X}\) and all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e\), each solution pair (x, u) to \(\mathcal {H}\) with \(x(0,0) = \xi \) satisfies, for all \((t,j) \in \mathrm {dom}\, x\),

Remark 1

We point out that \(\alpha \) on the left-hand side of (2) is redundant. In particular, \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\) if and only if there exist \(\eta ,\gamma _1,\gamma _2 \in \mathcal {K}\) and \(\beta \in \mathcal {KLL}\) satisfying

We nevertheless work with (2) for two reasons. First, (2) is consistent with the continuous-time and discrete-time counterparts in [1, 4]. Second, (2) simplifies the exposition of the proofs.

Definition 2

Given a compact set \({\mathcal {A}}\subset \mathcal {X}\), let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\). A smooth function \(V :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) is called an iISS Lyapunov function with respect to \((\omega ,\left| \cdot \right| )\) for (1) if there exist functions \(\alpha _1,\alpha _2 \in \mathcal {K}_\infty \), \(\sigma \in \mathcal {K}\), and \(\alpha _3 \in \mathcal {PD}\) such that

Definition 3

[4] A positive definite function \(W :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) is called semi-proper if there exist \(\pi \in \mathcal {K}\) and a proper positive definite function \(W_0\) such that \(W (\cdot ) = \pi ( W_0 (\cdot ) )\).
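Here is a hypothetical example of Definition 3, chosen by us for illustration. Take \(\mathcal {X} = \mathbb R^2\) and \({\mathcal {A}} = \{0\}\). Let \(W_0(x) = \left| x\right| ^2\), which is proper and positive definite, and let \(\pi (s) = s/(1+s)\), a bounded class-\(\mathcal {K}\) function. Then \(W = \pi \circ W_0\) is semi-proper but not proper, because it never exceeds 1.

```python
def W0(x):
    """Proper positive definite function on R^n (hypothetical A = {0}): |x|^2."""
    return sum(xi * xi for xi in x)

def pi(s):
    """Class-K function bounded by 1, hence not of class K_infinity."""
    return s / (1.0 + s)

def W(x):
    """Semi-proper function W = pi o W0 (positive definite, but not proper)."""
    return pi(W0(x))

for x in ([0.0, 0.0], [1.0, 0.0], [1e3, 0.0]):
    print(x, W0(x), W(x))
```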

The following definitions are required to relate pre-iISS to the hybrid invariance principle [10].

Definition 4

([21, Definition 6.2]) Given sets \({\mathcal {A}}, K \subset \mathcal {X}\), the distance to \({\mathcal {A}}\) is 0-input detectable relative to K for \(\mathcal {H}\) if the following holds. Every complete solution pair (x, 0) to \(\mathcal {H}\) with \(x(t,j) \in K\) for all \((t,j) \in \mathrm {dom}x\) satisfies \(\lim _{(t,j)\in \mathrm {dom}x,\, t+j\rightarrow +\infty } \omega (x(t,j)) = 0\), where \(\omega \) is a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\).

Definition 5

Let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\). \(\mathcal {H}\) is said to be smoothly dissipative with respect to \({\mathcal {A}}\) if there exists a smooth function \(V :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\), called a storage function, functions \(\alpha _4,\alpha _5 \in \mathcal {K}_\infty \), \(\sigma \in \mathcal {K}\), and a continuous function \(\rho : \mathcal {X}\rightarrow \mathbb R_{\ge 0}\) with \(\rho (\xi ) = 0\) for all \(\xi \in {\mathcal {A}}\) such that

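Definition 5 includes Definition 2 as a special case: an iISS Lyapunov function is a storage function with \(\rho := \alpha _3 \circ \omega \). Under the assumptions of Theorem 1, the following are equivalent:

- the existence of a storage function V together with 0-input detectability of the distance to \({\mathcal {A}}\) relative to \(\{ \xi \in \mathcal {X}:\rho (\xi ) = 0 \}\);
- the existence of an iISS Lyapunov function.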

4 Main results

This section establishes equivalent characterizations of pre-iISS. In particular, it presents a Lyapunov characterization of pre-iISS, together with characterizations in terms of dissipativity, detectability and 0-input pre-asymptotic stability.

Given a set \(S \subset \mathcal {X}\times \mathcal {U}\), we denote \({\varPi }_0 (S) := \{ x \in \mathcal {X}: (x,0) \in S \}\). Here is the main result of this paper.

Theorem 1

Let \({\mathcal {A}}\subset \mathcal {X}\) be a compact set, and let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \(\mathcal {X}\). Suppose that the Standing Assumptions hold and that \({\varPi }_0 (\mathcal {C}) \cup {\varPi }_0 (\mathcal {D}) = \mathcal {X}\). Then the following statements are equivalent:

- (i) \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\).
- (ii) \(\mathcal {H}\) admits a smooth iISS Lyapunov function with respect to \((\omega ,\left| \cdot \right| )\).
- (iii) \(\mathcal {H}\) is smoothly dissipative with respect to \({\mathcal {A}}\), and the distance to \({\mathcal {A}}\) is 0-input detectable relative to \(\{ \xi \in \mathcal {X}:\rho (\xi ) = 0 \}\), with \(\rho \) as in (7) and (8).
- (iv) \(\mathcal {H}\) is 0-input pre-AS and \(\mathcal {H}\) is smoothly dissipative with respect to \({\mathcal {A}}\) with \(\rho \equiv 0\).

Proof

We prove \((ii) \Rightarrow (i)\) in Sect. 4.2 and \((i) \Rightarrow (ii)\) in Sect. 4.3. The implication \((iv) \Rightarrow (ii)\) follows immediately from Proposition 2 (see below) combined with Definition 5.

To see the implication \((ii) \Rightarrow (iii)\), take the iISS Lyapunov function V as a storage function with \(\rho (x) := \alpha _3 (\omega (x))\), where \(\alpha _3\) is as in (4) and (5). Hence \(\mathcal {H}\) is smoothly dissipative. Moreover, the distance to \({\mathcal {A}}\) is 0-input detectable relative to \(\{ \xi \in \mathcal {X}:\rho (\xi ) = 0 \}\). Indeed, since \(\alpha _3 \in \mathcal {PD}\) and \(\omega \) vanishes only on \({\mathcal {A}}\), \(\rho (x) = 0\) implies \(x \in {\mathcal {A}}\).

Finally, the implication \((iii) \Rightarrow (iv)\) is shown as follows. Let V be a storage function and assume that \(u \equiv 0\). According to [9, Theorem 23], \({\mathcal {A}}\) is 0-input pre-stable. To show 0-input pre-attractivity of \({\mathcal {A}}\), consider a complete solution pair (x, 0) to \(\mathcal {H}\); it is bounded by 0-input pre-stability of \({\mathcal {A}}\). Since \(\mathcal {H}\) satisfies the Standing Assumptions and \(u \equiv 0\), the invariance principle for hybrid systems [10, Corollary 8.4] applies. By [10, Corollary 8.4], there exists some \(r \ge 0\) such that the complete solution (x, 0) converges to the largest weakly invariant set contained in

where \(\rho _\mathcal {C}^{-1} (0) := \{ \xi \in \mathcal {C}: \rho (\xi ) = 0 \}\) and \(\rho _\mathcal {D}^{-1} (0) := \{ \xi \in \mathcal {D}: \rho (\xi ) = 0 \}\). By 0-input detectability relative to \(\{ \xi \in \mathcal {X}:\rho (\xi ) = 0 \}\), every complete solution contained in the set (9) converges to \({\mathcal {A}}\). Moreover, from (6), the only invariant set in (9) is obtained for \(r=0\). Since the set (9) with \(r=0\) lies in \({\mathcal {A}}\), the set \({\mathcal {A}}\) is 0-input pre-attractive. Finally, smooth dissipativity of \(\mathcal {H}\) with respect to \({\mathcal {A}}\) with \(\rho \equiv 0\) holds trivially, since \(\rho \) is nonnegative. This completes the proof. \(\square \)

Remark 2

The assumption \({\varPi }_0 (\mathcal {C}) \cup {\varPi }_0 (\mathcal {D}) = \mathcal {X}\) means that, for zero input, every state belongs to the flow set or to the jump set. In other words, the flow and jump sets of the disturbance-free system cover \(\mathcal {X}\). As shown in [8, Section IV], some hybrid systems do not satisfy this assumption; hybrid systems with logic variables are an example. The assumption could be relaxed at the expense of further technicalities, following similar lines as in the proof of [10, Theorem 7.31]. We do not pursue this here, as it would make the proofs considerably more complicated without adding much insight.

4.1 Illustrative example

Here we verify iISS of a hybrid system using an iISS Lyapunov function. Consider a first-order integrator

where \(u\in \mathbb R\) is the control input. We aim to control the system with a reset controller under input constraints (i.e., \(|u| \le \overline{u}\) for some given \(\overline{u} >0\)). As shown in [18], designing a reset controller subject to disturbances and input constraints leads to a hybrid system of the form (1):

where \(x := (x_p,x_c)\) is the state of the closed-loop system and \(w \in \mathbb R\) is the disturbance input. The flow and jump sets are \(\mathcal {C}= \{ (x,w) \in \mathbb R^2 \times \mathbb R: x_p ( x_c - x_p) \le 0 \}\) and \(\mathcal {D}= \{ (x,w) \in \mathbb R^2 \times \mathbb R: x_p ( x_c - x_p) \ge 0 \}\). The constants \(b , k > 0\) and \(\lambda _p , \lambda _c < 0\) are chosen later. By the definition of \(\mathcal {D}\), the controller output is reset to zero whenever \(x_p ( x_c - x_p) \ge 0\). Note that, for sufficiently large w, every solution of the system is unbounded, which shows that the system is not ISS.
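Every real number is either \(\le 0\) or \(\ge 0\), and the conditions defining \(\mathcal {C}\) and \(\mathcal {D}\) do not involve w. Hence the flow and jump sets of (11) cover \(\mathbb R^2 \times \mathbb R\). Taking \(\mathcal {X} = \mathbb R^2\), the covering assumption \({\varPi }_0 (\mathcal {C}) \cup {\varPi }_0 (\mathcal {D}) = \mathcal {X}\) of Theorem 1 therefore holds.

The sketch below checks this on a grid. It also lists the overlap \(\mathcal {C}\cap \mathcal {D}\), where \(x_p = 0\) or \(x_c = x_p\). The function names are our own.

```python
import itertools

def in_C(xp: float, xc: float, w: float) -> bool:
    """Flow set of (11): x_p (x_c - x_p) <= 0; the disturbance w is unconstrained."""
    return xp * (xc - xp) <= 0.0

def in_D(xp: float, xc: float, w: float) -> bool:
    """Jump set of (11): x_p (x_c - x_p) >= 0; the disturbance w is unconstrained."""
    return xp * (xc - xp) >= 0.0

grid = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]
points = list(itertools.product(grid, grid))
covered = all(in_C(xp, xc, 0.0) or in_D(xp, xc, 0.0) for xp, xc in points)
overlap = [(xp, xc) for xp, xc in points if in_C(xp, xc, 0.0) and in_D(xp, xc, 0.0)]
print(covered, len(overlap))   # overlap: x_p = 0 (7 points) or x_c = x_p (6 more)
```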

Corollary 1

Consider system (11). Given \(b , k > 0\) and \(\lambda _p , \lambda _c < 0\), assume that there exist constants \(c_1,c_2>0\) such that

Take the proper indicator \(\omega (\cdot ) = \left| \cdot \right| \). Then system (11) is pre-iISS with respect to the origin.

Proof

Take the following iISS Lyapunov function candidate

Obviously, V satisfies (6) for some appropriate \(\alpha _1,\alpha _2 \in \mathcal {K}_\infty \) and \(\omega (\cdot ) = \left| \cdot \right| \). Picking \((x,w) \in \mathcal {C}\), we have

Using Young’s inequality and the facts that \(\left| \arctan (s)\right| \le \pi /2\) and \(\left| s\right| /(1+s^2) \le 1\) for all \(s \in \mathbb R\), we obtain

From the fact that \(\frac{s^2}{1+s^2} \le [\arctan (s)]^2\) for all \(s \in \mathbb R\), we have

Next, we consider the jump equation on the set \(\mathcal {D}\). For any \((x,w) \in \mathcal {D}\), we get

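where \(0< \rho < c_2\). Note that \((x,w) \in \mathcal {D}\) implies \(x_p \arctan (x_p) \le x_c \arctan (x_c)\). Indeed, \(x_p ( x_c - x_p) \ge 0\) means that either \(x_p = 0\), or \(x_c\) has the same sign as \(x_p\) with \(\left| x_c\right| \ge \left| x_p\right| \). Moreover, \(s \mapsto s\arctan (s)\) is even and nondecreasing in \(\left| s\right| \). So we have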

It follows from (12), (13) and (14) that V is an iISS Lyapunov function for system (11). \(\square \)
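The scalar facts used in the proof of Corollary 1 can be spot-checked numerically. The inequality \(s^2/(1+s^2) \le [\arctan (s)]^2\) also has a one-line proof. Substituting \(s = \tan \theta \) with \(\theta \in (-\pi /2,\pi /2)\), it reads \(\sin ^2\theta \le \theta ^2\).

The sketch below is a hypothetical random test and does not replace these proofs. It samples the following:

- the inequalities above, plus the sharper bound \(\left| s\right| /(1+s^2) \le 1/2\);
- Young's inequality in the form \(ab \le \frac{\varepsilon }{2} a^2 + \frac{1}{2\varepsilon } b^2\);
- the jump-set implication \(x_p(x_c - x_p) \ge 0 \Rightarrow x_p\arctan (x_p) \le x_c \arctan (x_c)\).

```python
import math
import random

def check_corollary1_facts(samples: int = 10_000, seed: int = 0) -> int:
    """Randomly test the scalar inequalities; returns the number of jump-set samples."""
    rng = random.Random(seed)
    tol = 1e-12
    jump_samples = 0
    for _ in range(samples):
        s = rng.uniform(-50.0, 50.0)
        assert abs(math.atan(s)) <= math.pi / 2
        assert abs(s) / (1.0 + s * s) <= 0.5 + tol        # sharper than the bound 1
        assert s * s / (1.0 + s * s) <= math.atan(s) ** 2 + tol

        # Young's inequality: a*b <= eps*a^2/2 + b^2/(2*eps) for every eps > 0.
        a, b = rng.uniform(-50.0, 50.0), rng.uniform(-50.0, 50.0)
        eps = rng.uniform(0.01, 10.0)
        assert a * b <= eps * a * a / 2 + b * b / (2 * eps) + 1e-9

        # On the jump set x_p (x_c - x_p) >= 0 of (11), s*arctan(s) cannot decrease.
        xp, xc = rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)
        if xp * (xc - xp) >= 0.0:
            jump_samples += 1
            assert xp * math.atan(xp) <= xc * math.atan(xc) + tol
    return jump_samples

print(check_corollary1_facts() > 0)
```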

Finding an iISS Lyapunov function is not always easy. Alternatively, item (iii) or item (iv) of Theorem 1 can be used to establish the iISS property; see Sect. 5.

4.2 Proof of the implication \((ii) \Rightarrow (i)\)

Consider a solution pair (x, u) to \(\mathcal {H}\). Given (4) and (5), we have

for almost all t such that \((t,j) \in \mathrm {dom}\,x \backslash {\varGamma }(x)\); and

for all \((t,j) \in {\varGamma }(x)\). By [4, Lemma IV.1] applied to \(\alpha _3\), there exist \(\rho _1 \in \mathcal {K}_\infty \) and \(\rho _2 \in \mathcal {L}\) such that \(\alpha _3 (s) \ge \rho _1 (s) \rho _2 (s)\) for all \(s \ge 0\), and hence

for almost all t such that \((t,j) \in \mathrm {dom}\,x \backslash {\varGamma }(x)\); and

for all \((t,j) \in {\varGamma }(x)\). Exploiting (3) and letting \({\tilde{\rho }} ( \cdot ) := \rho _1 \circ \alpha _2^{- 1} ( \cdot ) \rho _2 \circ \alpha _1^{- 1} ( \cdot )\) yield

for almost all t such that \((t,j) \in \mathrm {dom}\,x \backslash {\varGamma }(x)\); and

for all \((t,j) \in {\varGamma }(x)\). Define the hybrid arcs z and v by

The hybrid arcs z and v are defined on the same hybrid time domain \(\mathrm {dom}\,x\), because \(\mathrm {dom}\,x = \mathrm {dom}\,u\) by the definition of a solution pair. It follows from (15), (17) and (18) that the following hold for almost all t such that \((t,j) \in \mathrm {dom} \, z \backslash {\varGamma }(z)\)

From (16), (17) and (18), we have for all \((t,j) \in {\varGamma }(z)\)

It follows from (19), (20) and Lemma 9 (see Appendix 1) that there exists \(\beta \in \mathcal {KLL}\) such that

for all \((t,j) \in \mathrm {dom}\, z\). An immediate consequence of (17), (18), and the facts that \(z(0,0) = V(x(0,0))\) and \(\left\| v_{(t,j)}\right\| _\infty = v(t,j)\) is

for all \((t,j) \in \mathrm {dom}\,x\). Exploiting (3) and setting \({\tilde{\beta }} (\cdot ,\cdot ,\cdot ) := \beta (\alpha _2 (\cdot ),\cdot ,\cdot )\), \(\gamma _1 (\cdot ) := 2\sigma (\cdot )\), \(\gamma _2 (\cdot ) := 2\sigma (\cdot )\) and \(\alpha (\cdot ) := \alpha _1(\cdot )\) gives the conclusion
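The decomposition supplied by [4, Lemma IV.1] in the proof above can be seen in a simple case. Consider the hypothetical choice \(\alpha _3(s) = s/(1+s^2)\), which is positive definite but not of class \(\mathcal {K}\). One can take \(\rho _1(s) = s \in \mathcal {K}_\infty \) and \(\rho _2(s) = 1/(1+s^2) \in \mathcal {L}\), so that \(\alpha _3 = \rho _1 \rho _2\).

The sketch checks this factorization on a grid. It illustrates the statement of the lemma only and is not the general construction used in [4].

```python
def alpha3(s: float) -> float:
    """Positive definite, but not of class K: increases on [0, 1], then decays to 0."""
    return s / (1.0 + s * s)

def rho1(s: float) -> float:
    """Class K_infinity factor."""
    return s

def rho2(s: float) -> float:
    """Class L factor: nonincreasing and tending to 0."""
    return 1.0 / (1.0 + s * s)

grid = [0.05 * k for k in range(401)]  # s in [0, 20]
factorization_ok = all(abs(alpha3(s) - rho1(s) * rho2(s)) <= 1e-12 for s in grid)
rho2_nonincreasing = all(rho2(a) >= rho2(b) for a, b in zip(grid, grid[1:]))
print(factorization_ok, rho2_nonincreasing, alpha3(1.0), alpha3(10.0) < alpha3(1.0))
```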

4.3 Proof of the implication \((i) \Rightarrow (ii)\)

The proof is split into the following steps:
- (1) we recall from Theorem 2 that, when \(\mathcal {H}\) is pre-iISS, an inflated system, say \(\mathcal {H}_\sigma \), remains pre-iISS under sufficiently small perturbations;
- (2) we define an auxiliary system, say \({\hat{\mathcal {H}}}\), and show that a selection result holds for \({\hat{\mathcal {H}}}\) and \(\mathcal {H}\);
- (3) we begin constructing a smooth converse iISS Lyapunov function for \(\mathcal {H}\) by providing a preliminary, possibly non-smooth, function \(V_0\), and we show that \(V_0\) cannot increase too fast along solutions of \({\hat{\mathcal {H}}}\) (cf. Lemma 2 below);
- (4) we first smooth \(V_0\) and obtain the partially smooth function \(V_s\) (cf. Lemma 3 below);
- (5) we smooth \(V_s\) on the whole state space and obtain the smooth function \(V_1\) (cf. Lemma 4 below);
- (6) we pass from the results for \({\hat{\mathcal {H}}}\) to similar ones for \(\mathcal {H}\) (cf. Lemma 5 below);
- (7) we give a characterization of 0-input pre-AS (cf. Proposition 2 below);
- (8) finally, we combine the results of Lemma 5 with those of Proposition 2 to obtain the smooth converse iISS Lyapunov function V.

Remark 3

Our construction of a smooth converse iISS Lyapunov function follows the same steps as in [4], but with different tools and technicalities. In particular, the authors of [4] first provided a preliminary, possibly non-smooth, iISS Lyapunov function. They then appealed to [14, Theorem B.1] and [14, Proposition 4.2] to smooth it, without invoking robustness of iISS to sufficiently small perturbations. However, this procedure does not necessarily carry over to hybrid systems, as it relies on uniform convergence of solutions. For this reason, we appeal to the results in [7, Sections VI.B-C], originally developed in [27], to smooth our preliminary iISS Lyapunov function. To this end, we need to establish robustness of the pre-iISS property for hybrid systems to vanishing perturbations. This is challenging and has not been studied previously in the literature.

4.3.1 Robustness of pre-iISS

Here we show that pre-iISS is robust to sufficiently small perturbations (cf. Theorem 2 below). More precisely, if the original system \(\mathcal {H}\) is pre-iISS, then there exists an inflated hybrid system, denoted by \(\mathcal {H}_\sigma \), that remains pre-iISS under sufficiently small perturbations.

Given the hybrid system \(\mathcal {H}\), a compact set \({\mathcal {A}}\subset \mathcal {X}\), and a continuous function \(\sigma :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) that is positive on \(\mathcal {X}\backslash {\mathcal {A}}\), the \(\sigma \)-perturbation of \(\mathcal {H}\), denoted by \(\mathcal {H}_\sigma \), is defined by

where

In what follows, by an admissible perturbation radius, we mean any continuous function \(\sigma :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) such that \(x+\sigma (x) \overline{\mathbb {B}} \subset \mathcal {X}\) for all \(x \in \mathcal {X}\).
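Here is a concrete admissible perturbation radius in a hypothetical setting. Let \(\mathcal {X}\) be the open ball of radius \(R\) in \(\mathbb R^2\) and let \({\mathcal {A}} = \{0\}\). The point of \(x + \sigma (x)\overline{\mathbb {B}}\) farthest from the origin has norm \(\left| x\right| + \sigma (x)\). Hence the inclusion \(x+\sigma (x) \overline{\mathbb {B}} \subset \mathcal {X}\) holds if and only if \(\left| x\right| + \sigma (x) < R\).

Consider the choice \(\sigma (x) = \min \{\left| x\right| , (R-\left| x\right| )/2\}\). It is continuous and positive on \(\mathcal {X}\backslash {\mathcal {A}}\). It also satisfies \(\left| x\right| + \sigma (x) \le (R + \left| x\right| )/2 < R\).

The sketch samples points of \(\mathcal {X}\) and checks the inclusion.

```python
import math
import random

R = 1.0  # hypothetical: X is the open ball of radius R in R^2, A = {0}

def norm(x):
    return math.hypot(x[0], x[1])

def sigma(x):
    """Hypothetical admissible perturbation radius: min(|x|, (R - |x|) / 2)."""
    r = norm(x)
    return min(r, (R - r) / 2.0)

def perturbed_ball_in_X(x):
    """x + sigma(x) * closed unit ball lies in X iff |x| + sigma(x) < R."""
    return norm(x) + sigma(x) < R

rng = random.Random(1)
samples = []
while len(samples) < 2000:                 # rejection-sample points of X
    p = (rng.uniform(-R, R), rng.uniform(-R, R))
    if norm(p) < R:
        samples.append(p)

print(all(perturbed_ball_in_X(p) for p in samples),
      sigma((0.0, 0.0)),
      all(sigma(p) > 0.0 for p in samples if norm(p) > 0.0))
```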

Theorem 2

Let \(\mathcal {H}\) satisfy the Standing Assumptions, and let \({\mathcal {A}}\subset \mathcal {X}\) be a compact set. Assume that the hybrid system \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\). Then there exists an admissible perturbation radius \(\sigma :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) that is positive on \(\mathcal {X}\backslash {\mathcal {A}}\) such that the hybrid system \(\mathcal {H}_\sigma \), the \(\sigma \)-perturbation of \(\mathcal {H}\), is also pre-iISS with respect to \({\mathcal {A}}\).

Proof

See Appendix 1. \(\square \)

Remark 4

Besides its role in the proof of our main result, Theorem 2 is of independent interest. Model (22) arises in many practical situations. For instance, suppose that \(\mathcal {H}\) is pre-iISS. Perturbations of \(\mathcal {H}\) such as slowly varying parameters, singular perturbations or highly oscillatory signals yield a perturbed system that may be modeled by (22) (cf. [3, 16, 28] for more details). Theorem 2 then guarantees pre-iISS of the perturbed system, provided that the perturbations are small enough to be captured by \(\mathcal {H}_\sigma \) with \(\sigma \) as in Theorem 2.

4.3.2 The auxiliary system \(\hat{\mathcal {H}}\) and the associated properties

We now define the auxiliary system \(\hat{\mathcal {H}}\). Assume that \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\), satisfying (2) with suitable functions \(\alpha \), \({{\tilde{\beta }}}\), \(\gamma _1\), \(\gamma _2\). Pick any \(\varphi \in \mathcal {K}_\infty \) with \(\max \{ \gamma _1 \circ \varphi (s), \gamma _2 \circ \varphi (s) \} \le \alpha (s)\) for all \(s \in \mathbb R_{\ge 0}\). Define the following hybrid inclusion

where

The hybrid inclusion (27) is denoted by \(\hat{\mathcal {H}} := (\hat{F}, \hat{G}, {\hat{\mathcal {C}}} , {\hat{\mathcal {D}}},\mathcal {O})\) where \(\mathcal {O} = {\hat{\mathcal {C}}} \cup {\hat{\mathcal {D}}}\). We note that \(\mathcal {O} = \mathcal {X}\) because \(\mathcal {X}\supset \mathcal {O} = {\hat{\mathcal {C}}} \cup {\hat{\mathcal {D}}} \supset {\varPi }_0 (\mathcal {C}) \cup {\varPi }_0 (\mathcal {D}) = \mathcal {X}\). We also note that \(\hat{F}(x) = \overline{\mathrm {co}} \, \hat{F}(x)\) for each \(x \in {\hat{\mathcal {C}}}\) and the data of \({\hat{\mathcal {H}}}\) satisfy the Hybrid Basic Conditions (cf. Assumption 6.5 in [10]). To distinguish maximal solutions to \({\hat{\mathcal {H}}}\) from those to \(\mathcal {H}\), we denote a maximal solution to \({\hat{\mathcal {H}}}\) starting from \(\xi \) by \(x_\varphi (\cdot ,\cdot ,\xi )\). Let \({\hat{\varrho }} (\xi )\) denote the set of all maximal solutions of \({\hat{\mathcal {H}}}\) starting from \(\xi \in \mathcal {X}\).
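The construction of \(\hat{\mathcal {H}}\) only requires that some \(\varphi \in \mathcal {K}_\infty \) with \(\max \{ \gamma _1 \circ \varphi , \gamma _2 \circ \varphi \} \le \alpha \) exists. One simple way to produce it is our own construction, not taken from the paper. Set \(\hat{\gamma } := \gamma _1 + \gamma _2 + \mathrm {id} \in \mathcal {K}_\infty \) and \(\varphi := \hat{\gamma }^{-1} \circ \alpha \). Then \(\gamma _i \circ \varphi \le \hat{\gamma } \circ \varphi = \alpha \), and \(\varphi \in \mathcal {K}_\infty \) as a composition of \(\mathcal {K}_\infty \) functions.

The sketch evaluates \(\hat{\gamma }^{-1}\) by bisection for hypothetical data: \(\alpha (s) = s^2\), \(\gamma _1(s) = 2s\), and the bounded \(\gamma _2 = \arctan \).

```python
import math

def inverse_increasing(h, y, tol=1e-12):
    """Bisection for h(x) = y, assuming h continuous, strictly increasing,
    unbounded, h(0) = 0 and y >= 0. Returns x with h(x) <= y (from below)."""
    hi = 1.0
    while h(hi) < y:          # bracket the root
        hi *= 2.0
    lo = 0.0                  # invariant: h(lo) <= y <= h(hi)
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if h(mid) < y:
            lo = mid
        else:
            hi = mid
    return lo

alpha = lambda s: s * s                    # hypothetical class-K_infinity alpha
gamma1 = lambda s: 2.0 * s                 # hypothetical class-K_infinity gamma_1
gamma2 = math.atan                         # hypothetical bounded class-K gamma_2
gamma_hat = lambda s: gamma1(s) + gamma2(s) + s

def phi(s):
    """phi = gamma_hat^{-1} o alpha, computed numerically."""
    return inverse_increasing(gamma_hat, alpha(s))

grid = [0.1 * k for k in range(101)]
ok = all(max(gamma1(phi(s)), gamma2(phi(s))) <= alpha(s) for s in grid)
print(ok, phi(1.0), phi(10.0))
```

For these particular data, a closed form also works, for example \(\varphi (s) = s^2/2\). Indeed, \(\gamma _1(s^2/2) = s^2\) and \(\arctan (s^2/2) \le s^2/2 \le s^2\). The numerical inverse is needed only when no such formula is at hand.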

We first relate solutions to \({\hat{\mathcal {H}}}\) to solution pairs of \(\mathcal {H}\) via the following claim. Its proof follows similar lines as the proof of [6, Claim 3.7], with minor modifications.

Claim 1

Assume that \(\mathcal {H}\) is pre-forward complete. For each solution x to \({\hat{\mathcal {H}}}\), there exists a hybrid input u such that (x, u) is a solution pair to \(\mathcal {H}\) with \(\left| u(t,j)\right| \le \varphi (\omega (x(t,j)))\) for all \((t,j) \in \mathrm {dom}x\).

The following lemma ensures that \({\hat{\mathcal {H}}}\) is pre-forward complete.

Lemma 1

Pre-iISS of \(\mathcal {H}\) implies that there exists \(\varphi \in \mathcal {K}_\infty \) such that \(\hat{\mathcal {H}}\) is pre-forward complete.

Proof

Let \(d :\mathrm {dom} \, d \rightarrow \overline{\mathbb {B}}\) be a hybrid input with \(\mathrm {dom} \, d = \mathrm {dom} \, x\) such that \(d \in \mathcal {M}\), where

By the definition of \(\hat{\mathcal {H}}\), Claim 1, the pre-iISS assumption of \(\mathcal {H}\) and the fact that \(\max \{ \gamma _1 \circ \varphi (s), \gamma _2\circ \varphi (s) \} \le \alpha (s)\) for all \(s \in \mathbb {R}_{\ge 0}\), for each solution \(x_\varphi \) to \(\hat{\mathcal {H}}\), there exists a solution pair \((x_\varphi ,\varphi (\omega (x_{\varphi }))d)\) to \(\mathcal {H}\) with \(d \in \mathcal {M}\) such that the following hold

where \({\tilde{\beta }}_0 (\cdot ) := {\tilde{\beta }}(\cdot ,0,0)\). It follows from [20, Proposition 1] that

Therefore, each maximal solution \(x_\varphi \) to \(\hat{\mathcal {H}}\) is bounded whenever its hybrid time domain is compact. This shows that every maximal solution to \(\hat{\mathcal {H}}\) is either bounded or complete. \(\square \)

Consider the hybrid inclusion defined by

where

This inclusion extends \(\hat{\mathcal {H}}\). We denote \(\hat{\mathcal {H}}_\sigma \) by \((\hat{F}_\sigma ,\hat{G}_\sigma ,{\hat{\mathcal {C}}}_\sigma ,{\hat{\mathcal {D}}}_\sigma ,\mathcal {X})\). Since \(\sigma \) is an admissible perturbation radius, \({\hat{\mathcal {C}}}_\sigma \cup {\hat{\mathcal {D}}}_\sigma = {\hat{\mathcal {C}}} \cup {\hat{\mathcal {D}}}\). A maximal solution to \(\hat{\mathcal {H}}_\sigma \) starting from \(\xi \) is denoted by \(\overline{x}_\varphi (\cdot ,\cdot ,\xi )\), and \(\hat{\varrho }_\sigma (\xi )\) denotes the set of all maximal solutions to \(\hat{\mathcal {H}}_\sigma \) starting from \(\xi \in \mathcal {X}\). Combining Lemma 1 with Theorem 2, it is straightforward to see that \(\hat{\mathcal {H}}_{\sigma }\) is pre-forward complete.

Corollary 2

Pre-iISS of \(\mathcal {H}_\sigma \) implies that there exists \(\varphi \in \mathcal {K}_\infty \) such that \({\hat{\mathcal {H}}}_\sigma \) is pre-forward complete.

It should be pointed out that, by [7, Proposition 3.1], \({\hat{\mathcal {H}}}_\sigma \) satisfies the Standing Assumptions as long as \(\hat{\mathcal {H}}\) satisfies the same conditions and \(\sigma \) is an admissible perturbation radius.

4.3.3 The preliminary function \(V_0\)

We begin the construction of the smooth converse iISS Lyapunov function with a possibly non-smooth function \(V_0\). Before stating the main result of this subsection, we define the following set. Consider a hybrid signal \(d :\mathrm {dom} \, d \rightarrow \overline{\mathbb {B}}\) with \(\mathrm {dom} \, d = \mathrm {dom} \, \overline{x}\) such that \(d \in \overline{\mathcal {M}}\), where

Lemma 2

Let \({\mathcal {A}} \subset {\mathcal {X}}\) be a compact set. Also, let \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) be an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Let \(\omega \) be a proper indicator on \(\mathcal {X}\) for \({\mathcal {A}}\). Assume that \(\mathcal {H}_\sigma \) is pre-iISS with respect to \({\mathcal {A}}\) satisfying (2) with suitable functions \(\alpha \in \mathcal {K}_\infty \), \(\overline{\beta } \in \mathcal {KLL}\), \(\overline{\gamma }_1,\overline{\gamma }_2 \in \mathcal {K}\). Let \(\varphi \in \mathcal {K}_\infty \) be such that \(\max \{ \overline{\gamma }_1 \circ \varphi (s), \overline{\gamma }_2 \circ \varphi (s) \} \le \alpha (s)\) for all \(s \in \mathbb R_{\ge 0}\). Then there exists a function \(V_0 :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) defined by

where for each \(\xi \in {\mathcal {X}}\) and \(d \in \overline{\mathcal {M}}\), \(z(\cdot ,\cdot ,\xi ,d)\) is defined by

such that

The proof of the lemma is not presented due to space constraints. However, it follows the same arguments given in the proof of \(2 \Rightarrow 1\) in [4, Theorem 1] and the proof of [1, Theorem 1]. We refer the reader to [19] for more details.

4.3.4 Initial smoothing

Here we construct a partially smooth function on \({\mathcal {X}}\) from \(V_{0}\).

Lemma 3

Let \({\mathcal {A}}\subset {\mathcal {X}}\) be a compact set. Also, let \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) be an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Let \(\omega \) be a proper indicator on \(\mathcal {X}\) for \({\mathcal {A}}\). Assume that \(\mathcal {H}_\sigma \) is pre-iISS with respect to \({\mathcal {A}}\). Then for any \(\xi \in \mathcal {X}\) and \(\left| \mu \right| \le 1\), there exist \(\underline{\alpha }_s, \overline{\alpha }_s, {{\tilde{\gamma }}}_1 , {{\tilde{\gamma }}}_2 \in \mathcal {K}_\infty \), and a continuous function \(V_s :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\), smooth on \(\mathcal {X}\backslash {\mathcal {A}}\), such that

Proof

Let the functions \(V_0\), \(\alpha \), \(\overline{\beta }\), \(\overline{\gamma }_1\), \(\overline{\gamma }_2\) and \(\varphi \) come from Lemma 2. We begin by stating the following property of \(V_0\), whose proof follows arguments similar to those in [7, Proposition 7.1], with essential modifications.

Proposition 1

The function \(V_0\) is upper semi-continuous on \(\mathcal {X}\).

To prove the lemma, we follow the approach of [7, Section VI.B] to construct a partially smooth function \(V_s\) from \(V_0\). Let \(\psi :\mathbb R^{n}\rightarrow [0,1]\) be a smooth function that vanishes outside \(\overline{\mathbb {B}}\) and satisfies \(\int \psi (\xi ) \mathrm {d} \xi = 1\), where the integral (here and throughout this subsection) is taken over \(\mathbb R^{n}\). We choose a partially smooth and sufficiently small function \({\tilde{\sigma }} :\mathcal {X}\backslash {\mathcal {A}}\rightarrow \mathbb R_{> 0}\) and define the function \(V_s :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) by

so that the desired properties (a), (b) and (c) below are met; that is, we choose \({{\tilde{\sigma }}}\) such that the following hold:

(a) the function \(V_s\) is well-defined and continuous on \(\mathcal {X}\), and smooth and positive on \(\mathcal {X}\backslash {\mathcal {A}}\);

(b) for some \(\underline{\alpha }_s,\overline{\alpha }_s \in \mathcal {K}_\infty \), the following conditions hold:

$$V_s (\xi ) |_{\xi \in {\mathcal {A}}} = 0, \tag{35}$$

$$\underline{\alpha }_s (\omega (\xi )) \le V_s (\xi ) \le \overline{\alpha }_s (\omega (\xi )) \qquad \forall \xi \in \mathcal {X}; \tag{36}$$

(c) for some \({\tilde{\gamma }}_1,{\tilde{\gamma }}_2 \in \mathcal {K}_\infty \), it holds that

$$\max _{f \in \hat{F}(\xi )} \langle \nabla V_s (\xi ),f \rangle \le {\tilde{\gamma }}_1 (\left| \mu \right| \varphi (\omega (\xi )) ) \qquad \forall \xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}, \tag{37}$$

$$\max _{g \in \hat{G}(\xi )} V_s (g) - V_s (\xi ) \le {\tilde{\gamma }}_{2} (\left| \mu \right| \varphi (\omega (\xi ))) \qquad \forall \xi \in \hat{\mathcal {D}}. \tag{38}$$

Regarding (a), we appeal to [13, Theorem 3.1]. This theorem requires three properties: \(V_0 (\xi ) |_{\xi \in {\mathcal {A}}} = 0\), which was shown in the previous subsection; upper semi-continuity of \(V_0\) on \(\mathcal {X}\), which is established by Proposition 1; and openness of \(\mathcal {X}\backslash {\mathcal {A}}\), which is guaranteed by [7, Lemma 7.5].
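The smoothing step can be illustrated in one dimension. The sketch below assumes that \(V_s\) has the mollifier form used in [7, Section VI.B], namely \(V_s (\xi ) = \int V_0 (\xi + {\tilde{\sigma }}(\xi ) \eta ) \psi (\eta ) \mathrm {d}\eta \) on \(\mathcal {X}\backslash {\mathcal {A}}\) and \(V_s = 0\) on \({\mathcal {A}}\); the data are hypothetical: \(\mathcal {X} = \mathbb R\), \({\mathcal {A}} = \{0\}\), an upper semi-continuous \(V_0\) with a unit jump at \(\xi = 1\), and \({\tilde{\sigma }}(\xi ) = 0.1\left| \xi \right| \). The integral is replaced by a normalized midpoint rule, so the sketch only checks continuity and the sandwich bounds numerically; it does not verify smoothness.

```python
import math
from typing import Callable, List


def bump(eta: float) -> float:
    """Unnormalized smooth bump exp(-1/(1 - eta^2)), vanishing outside (-1, 1)."""
    return math.exp(-1.0 / (1.0 - eta * eta)) if abs(eta) < 1.0 else 0.0


def make_smoothed(V0: Callable[[float], float],
                  sigma_tilde: Callable[[float], float],
                  n: int = 20000) -> Callable[[float], float]:
    """Quadrature version of V_s(xi) = int V0(xi + sigma_tilde(xi) * eta) psi(eta) d eta
    on X = R with A = {0} (an assumed form, following the mollifier idea of [7])."""
    step = 2.0 / n
    nodes: List[float] = [-1.0 + (k + 0.5) * step for k in range(n)]  # midpoints in (-1, 1)
    raw = [bump(eta) for eta in nodes]
    total = sum(raw)
    weights = [r / total for r in raw]  # discrete psi: nonnegative, sums to 1

    def V_s(xi: float) -> float:
        if xi == 0.0:  # on A the smoothed function is set to 0
            return 0.0
        radius = sigma_tilde(xi)  # positive off A, shrinks as xi approaches A
        return sum(w * V0(xi + radius * eta) for w, eta in zip(weights, nodes))

    return V_s


def V0(xi: float) -> float:
    """Hypothetical upper semi-continuous V0: |xi| plus a unit jump at xi = 1."""
    return abs(xi) + (1.0 if xi >= 1.0 else 0.0)


V_s = make_smoothed(V0, lambda xi: 0.1 * abs(xi))
print(V_s(1.0 - 1e-5), V_s(1.0 + 1e-5))  # nearly equal, although V0 jumps by 1 at xi = 1
```

Because \(\left| \eta \right| < 1\), every sample point \(\xi + 0.1\left| \xi \right| \eta \) stays within \(0.1\left| \xi \right| \) of \(\xi \), which is why the averaged value inherits bounds of the form \(0.9\left| \xi \right| \le V_s(\xi ) \le 1.1\left| \xi \right| + 1\) in this toy case.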

Regarding (b), the property (35) follows from the definition of \(V_s\), the upper semi-continuity of \(V_0\), and the openness of \(\mathcal {X}\backslash {\mathcal {A}}\). Also, it follows from [7, Lemma 7.7] that we can pick the function \({{\tilde{\sigma }}}\) sufficiently small such that for any \(\mu _1,\mu _2 \in \mathcal {K}_\infty \) satisfying

the following hold

Hence, the inequalities (36) hold as well.

Regarding (c), let \(\sigma _2\) be a continuous function that is positive on \(\mathcal {X}\backslash {\mathcal {A}}\) and satisfies \(\sigma _2 (\xi ) \le \sigma (\xi ) \) for all \(\xi \in {\mathcal {X}}\). We first construct the functions \(\sigma _2\) and \({\tilde{\sigma }}\) so that the following holds: for each \(\xi \in {\mathcal {X}}\backslash {\mathcal {A}}\), each \(\overline{x}_\varphi \in \hat{\varrho }_{\sigma _2} (\xi )\), each \(\eta \in \overline{\mathbb {B}}\) and each \((t,j) \in \mathrm {dom}\,\overline{x}_\varphi \) such that \(\overline{x}_\varphi (t,j,\xi ) \in {\mathcal {X}} \backslash {\mathcal {A}}\), the function \((\tau ,k) \mapsto \overline{x}_\varphi (\tau ,k) + {\tilde{\sigma }} (\overline{x}_\varphi (\tau ,k)) \eta \), defined on \(\mathrm {dom}\,\overline{x}_\varphi \cap ([0,t] \times \{0,\dots ,j\})\), can be extended to a complete solution of \(\hat{\mathcal {H}}_\sigma \). Now, pick a maximal solution \(\overline{x}_\varphi (\cdot ,\cdot ,\xi )\) to \(\hat{\mathcal {H}}_{\sigma _2}\) and a point \((h,m) \in \mathrm {dom}\,\overline{x}_\varphi \). First, let \(m = 0\). By the definition of \(V_s\), [7, Lemma 7.2], (32), and the fact that \(\psi \) takes values in \([0,1]\), we obtain, for any \(\left| \mu \right| \le 1\), any \(\xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}\) and any \(\overline{x}_{\varphi } \in \hat{\varrho }_{\sigma _2} (\xi )\),

It follows from [7, Claim 6.3] that for any \(\xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}\) and \(f \in \hat{F}(\xi )\), there exists a solution \(\overline{x}_\varphi \in \hat{\varrho }_{\sigma _2} (\xi )\) such that, for small enough \(h > 0\), \((h,0) \in \mathrm {dom} \, \overline{x}_\varphi \) and \(\overline{x}_\varphi (h,0,\xi ) = \xi + h f\). It then follows from the smoothness of \(V_s\) on \({\mathcal {X}} \backslash {\mathcal {A}}\), [7, Claim 6.3], inequality (41), and the mean value theorem that

where z lies in the line segment joining \(\xi \) to \(\xi + h f\). It follows from uniform continuity of \(\omega \) with respect to \(\eta \) on \(\overline{\mathbb {B}}\) that for any \(\xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}\) and \(f \in \hat{F}(\xi )\)

From Claim 7.6 and Lemma 7.7 in [7], there exists some \(\sigma _u (\cdot )\) with \({\tilde{\sigma }} (\xi ) \le \sigma _{u} (\xi )\) for all \(\xi \in {\mathcal {X}} \backslash {\mathcal {A}}\) so that we get for all \(\xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}\) and \(f \in \hat{F}(\xi )\)

Therefore, for any \(\overline{\gamma }_1 , \varphi , \mu _2 \in \mathcal {K}_\infty \) with \(\mu _2 > {{\mathrm{id}}}\) and any \(\left| \mu \right| \le 1\), there exists \({{\tilde{\gamma }}}_1 \in \mathcal {K}_\infty \) such that (37) holds.

Now let \((h,m) = (0,1)\). It follows from the definition of \(V_s\), [7, Lemma 7.2], the growth condition (33), and the fact that \(\psi \) takes values in \([0,1]\) that, for any \(\left| \mu \right| \le 1\), each \(\xi \in \hat{\mathcal {D}}\) and each \(g \in \hat{G}(\xi )\),

From [7, Claim 7.6] and [7, Lemma 7.7], there exists \(\sigma _{u}\) with \({\tilde{\sigma }} (\xi ) \le \sigma _{u} (\xi )\) for all \(\xi \in {\mathcal {X}} \backslash {\mathcal {A}}\) such that, for all \(\xi \in \hat{\mathcal {D}} \backslash {\mathcal {A}}\) and \(g \in \hat{G}(\xi )\),

By the same arguments as for flows, there exists \({\tilde{\gamma }}_2 \in \mathcal {K}_\infty \) such that the following holds

Moreover, if \(\xi \in \hat{\mathcal {D}}\) and \(g \in {\mathcal {A}}\) then \(0 = V_{s} (g) \le V_{s} (\xi ) + {\tilde{\gamma }}_2 (\left| \mu \right| \varphi (\omega (\xi )))\). So the growth condition (38) holds.\(\square \)

4.3.5 Final smoothing

The next lemma concerns smoothing \(V_{s}\) on \({\mathcal {A}}\).

Lemma 4

Let \(\mathcal {H}\) be pre-iISS. Also, let \(V_{s}\), \({\tilde{\gamma }}_{1},{\tilde{\gamma }}_{2}\) and \(\varphi \) come from Lemma 3. For any \(\xi \in {\mathcal {X}}\) and \(\left| \mu \right| \le 1\), there exist \(\underline{\alpha }, \overline{\alpha } \in \mathcal {K}_\infty \) and a \(\mathcal {K}_\infty \)-function p, smooth on \((0,+\infty )\), such that the function \(V_1 :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) defined by

$$V_1 (\xi ) := p (V_s (\xi )) \qquad \forall \xi \in {\mathcal {X}},$$

where \(V_s\) comes from Lemma 3, is smooth on \({\mathcal {X}}\) and the following hold

Proof

By [14, Lemma 4.3], there exists a smooth function \(p \in \mathcal {K}_\infty \) such that \(p' (s) > 0\) for all \(s > 0\), where \(p' (\cdot ) := \frac{dp}{ds} (\cdot )\), and \(p(V_{s} (\xi ))\) is smooth on \({\mathcal {X}}\). Without loss of generality, one can assume that \(p' (s) \le 1\) for all \(s > 0\) (cf. [4, p. 1090] for more details). Using the definition of \(V_1\) and (40), we have

Therefore, (45) holds.
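The reshaping by p can be illustrated numerically. The sketch below uses the hypothetical choice \(p(s) = s\,e^{-1/s}\) with \(p(0) = 0\); this is not the function produced by [14, Lemma 4.3], but it has the properties used in this proof: \(p \in \mathcal {K}_\infty \), p is smooth with all derivatives vanishing at 0, and \(0 < p'(s) < 1\) for \(s > 0\) (because \(e^x > 1 + x\) for \(x = 1/s > 0\)). For the hypothetical 1-D stand-in \(V_s(\xi ) = \left| \xi \right| \), which is kinked on \({\mathcal {A}} = \{0\}\), the composition \(p \circ V_s\) has zero slope at 0; for a general \(V_s\), p has to be adapted to \(V_s\) as in [14].

```python
import math


def p(s: float) -> float:
    """Hypothetical K_inf reshaping function p(s) = s * exp(-1/s), with p(0) = 0."""
    if s < 0.0:
        raise ValueError("p is defined on [0, inf)")
    return 0.0 if s == 0.0 else s * math.exp(-1.0 / s)


def dp(s: float) -> float:
    """Derivative p'(s) = exp(-1/s) * (1 + 1/s) for s > 0, and p'(0) = 0."""
    return 0.0 if s == 0.0 else math.exp(-1.0 / s) * (1.0 + 1.0 / s)


def V1(xi: float) -> float:
    """V_1 = p o V_s for the hypothetical, non-smooth stand-in V_s(xi) = |xi|."""
    return p(abs(xi))


# One-sided difference quotient of V_1 at xi = 0: exp(-1000) underflows to 0.0.
print(V1(1e-3) / 1e-3)
# Since 0 < p' < 1, increments of V_s are never enlarged by p (mean value theorem).
print(p(2.0) - p(1.0), "<=", 2.0 - 1.0)
```

The bound \(p' \le 1\) is what lets the increments of \(V_1\) be controlled by those of \(V_s\) in the flow and jump estimates that follow.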

It follows successively from the definition of \(V_1\), (37), and the fact that \(0 < p' (s) \le 1\) for all \(s > 0\) that, for all \(\xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}}\),

Since \(\nabla V_1 (\xi ) = 0\) and \(\omega (\xi ) = 0\) for all \(\xi \in {\mathcal {A}}\), and \({\tilde{\gamma }}_1\) and \(\varphi \) vanish at zero, it follows that

It follows successively from the definition of \(V_1\), the mean value theorem, the last inequality of (43), and the fact that \(0 < p' (s) \le 1\) for all \(s > 0\) that, for all \(\xi \in \hat{\mathcal {D}}\) and \(g \in \hat{G}(\xi )\),

where z lies on the segment joining \(V_s (\xi )\) to \(V_s (g)\). \(\square \)
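As we read the argument, both estimates in this proof rest on one elementary observation, written out here as a short derivation. It uses only (37), (38), the chain rule on \(\mathcal {X}\backslash {\mathcal {A}}\) (where \(V_s > 0\) and is smooth), and \(0 < p'(s) \le 1\); when \(V_s(g) \ne V_s(\xi )\), the point z lies strictly between two nonnegative numbers, so \(z > 0\) and \(p'(z)\) is well defined:

$$\begin{aligned} \langle \nabla V_1 (\xi ),f \rangle&= p'(V_s(\xi ))\,\langle \nabla V_s (\xi ),f \rangle \le \max \{ \langle \nabla V_s (\xi ),f \rangle , 0\} \le {\tilde{\gamma }}_1 (\left| \mu \right| \varphi (\omega (\xi ))), \quad \xi \in \hat{\mathcal {C}} \backslash {\mathcal {A}},\ f \in \hat{F}(\xi ),\\ V_1 (g) - V_1 (\xi )&= p'(z)\,\big (V_s (g) - V_s (\xi )\big ) \le \max \{ V_s (g) - V_s (\xi ), 0\} \le {\tilde{\gamma }}_2 (\left| \mu \right| \varphi (\omega (\xi ))), \quad \xi \in \hat{\mathcal {D}},\ g \in \hat{G}(\xi ). \end{aligned}$$

In words, multiplying by a factor in \((0,1]\) cannot turn a nonpositive increment positive or enlarge a positive one, and the bounds \({\tilde{\gamma }}_1, {\tilde{\gamma }}_2\) are nonnegative.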

4.3.6 Return to \(\mathcal {H}\)

The following lemma is immediately obtained from Lemma 4 and (28).

Lemma 5

Let \(\mathcal {H}\) be pre-iISS. Let \(\varphi ,{\tilde{\gamma }}_1,{\tilde{\gamma }}_2 \in \mathcal {K}_\infty \) be generated by Lemma 3. Also, let \(\underline{\alpha } , \overline{\alpha } \in \mathcal {K}_\infty \) and \(V_1 :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) come from Lemma 4. Then the following hold

for any \((\xi ,u) \in \mathcal {C}\) with \(\left| u\right| \le \varphi (\omega (\xi ))\)

for any \((\xi ,u) \in \mathcal {D}\) with \(\left| u\right| \le \varphi (\omega (\xi ))\)

4.3.7 A characterization of 0-input pre-AS

To continue with the proof, we need a dissipation characterization of 0-input pre-AS, which is stated in Proposition 2. This proposition is a unification and generalization of [4, Proposition II.5].

Proposition 2

\(\mathcal {H}\) is 0-input pre-AS if and only if there exist a smooth semi-proper function \(W :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\), \(\lambda \in \mathcal {K}\) and a continuous function \(\rho \in \mathcal {PD}\) such that

Proof

Sufficiency is clear. We establish necessity. To this end, the following lemma is needed.

Lemma 6

\(\mathcal {H}\) is 0-input pre-AS if and only if there exist a smooth Lyapunov function \(V :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\), functions \(\alpha _1,\alpha _2,\alpha _3,\chi \in \mathcal {K}_\infty \) and a smooth function \(q :\mathbb R_{\ge 0}\rightarrow \mathbb R_{> 0}\) with \(q(s) = 1\) for all \(s \in [0,1]\) such that

where I is the \(m \times m\) identity matrix.

Proof

See Appendix 1. \(\square \)

We now return to the proof of Proposition 2. Let \(\mathcal {H}\) be 0-input pre-AS. By Lemma 6, there exists a Lyapunov function V with the properties (51)–(53). Using [26, Remark 2.4], one can show that there exists some \(\alpha _{4} \in \mathcal {K}_\infty \) such that (52) and (53) are equivalent to

By [4, Corollary IV.5], there exists \(\lambda \in \mathcal {K}\) such that \(\alpha _4 (sr) \le \lambda (s) \lambda (r)\) for all \((s,r) \in \mathbb R_{\ge 0}\times \mathbb R_{\ge 0}\). Hence, we have

where \(u := q(\omega (\xi ))I\nu \). Define \(\pi :\mathbb R_{\ge 0}\rightarrow \mathbb R_{\ge 0}\) as

where \(c > 0\) and \(\theta \in \mathcal {K}\) are defined below. Note that \(\pi \in \mathcal {K}\). Let \(W(\xi ) := \pi (V(\xi ))\) for all \(\xi \in \mathcal {X}\). Computing the derivative of W along flows and its increment across jumps, and recalling (54) and (55), yields

It follows from (51) that

Let \(c := \lambda (q(0)) = \lambda (1)\). Since q is smooth everywhere, and by the definition of c, one can construct \(\theta \in \mathcal {K}\) such that

It follows from (56) that

where \(\rho (s) := \frac{\alpha _3 (s)}{c + \theta \circ \alpha _2 (s)}\) for all \(s \ge 0\). This proves the necessity. \(\square \)
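To make the factorization \(\alpha _4 (sr) \le \lambda (s) \lambda (r)\) borrowed from [4, Corollary IV.5] concrete, here is a worked instance for the hypothetical choice \(\alpha _4 (s) = s + s^2\), which is not derived from any particular \(\mathcal {H}\). In this case \(\lambda := \alpha _4\) already works, because for all \(s,r \ge 0\)

$$\alpha _4 (s)\,\alpha _4 (r) = (s+s^2)(r+r^2) = sr + (sr)^2 + sr(s+r) \ge sr + (sr)^2 = \alpha _4 (sr).$$

For faster-growing \(\alpha _4\), the function \(\lambda \) has to be constructed as in [4]; the example only illustrates what the inequality requires.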

As pre-iISS implies 0-input pre-AS, it follows from Proposition 2 that there exist a smooth semi-proper function W, \(\lambda \in \mathcal {K}\) and \(\rho \in \mathcal {PD}\) such that (49) and (50) hold. Define \(V :\mathcal {X}\rightarrow \mathbb R_{\ge 0}\) by \(V (\xi ) := W (\xi ) + V_1 (\xi )\) with \(V_1\) coming from Lemma 5. It follows from Lemma 5 and Proposition 2 that V is smooth everywhere and there exist \(\alpha _1,\alpha _2 \in \mathcal {K}_\infty \) such that

We also have for any \((\xi ,u) \in \mathcal {C}\) with \(\left| u\right| \le \varphi (\omega (\xi ))\)

and for any \((\xi ,u) \in \mathcal {D}\) with \(\left| u\right| \le \varphi (\omega (\xi ))\)

where \(\eta (\cdot ) := {\tilde{\gamma }} (\cdot ) + \lambda (\cdot )\) and \({\tilde{\gamma }} (\cdot ) := \max \{ {\tilde{\gamma }}_1 (\cdot ) , {\tilde{\gamma }}_2 (\cdot ) \}\). To show that V satisfies (3) and (4), let \(\chi = \varphi ^{-1}\) and define

Then

Clearly, \(\kappa \in \mathcal {K}\). Considering the two cases \(\left| u\right| \le \varphi (\omega (\xi ))\) and \(\left| u\right| \ge \varphi (\omega (\xi ))\) for \(u \in \mathcal {U}\), we obtain

These estimates together with (57) show that V is a smooth iISS Lyapunov function for \(\mathcal {H}\). \(\square \)

5 iISS for sampled-data systems

A popular approach to designing sampled-data systems is the emulation approach. The idea is to first ignore communication constraints and design a continuous-time controller for a continuous-time plant, and then to provide conditions under which stability (in a suitable sense) of the sampled-data control system is preserved in a digital implementation. The emulation approach offers considerable freedom in the choice of continuous-time design tools. A central issue in emulation design is the choice of a sampling period that guarantees stability of the sampled-data system with the emulated controller. In a seminal work, Nešić et al. [17] developed an explicit formula for a maximum allowable sampling period (MASP) that ensures asymptotic stability of sampled-data nonlinear systems with emulated controllers.

Here we show the effectiveness of Theorem 1 by establishing that the MASP developed in [17] also guarantees iISS for a sampled-data control system. Consider the following plant model

where \(x_p \in \mathbb R^{n_p}\) is the plant state, \(u \in \mathbb R^{n_u}\) is the control input, \(w \in \mathbb R^{n_w}\) is the disturbance input, and \(y \in \mathbb R^{n_y}\) is the plant output. Assume that \(f_p :\mathbb R^{n_p} \times \mathbb R^{n_u} \times \mathbb R^{n_w} \rightarrow \mathbb R^{n_p}\) is locally Lipschitz and \(f_p (0,0,0) = 0\). Since we follow the emulation method, we assume that a continuous-time controller is known that stabilizes the origin of system (58) in the sense of iISS in the absence of the network. We focus on dynamic controllers of the form

where \(x_c \in \mathbb R^{n_c}\) is the controller state. Let \(g_c :\mathbb R^{n_c} \rightarrow \mathbb R^{n_u}\) be continuously differentiable in its argument.

We consider the scenario where the plant and the controller are connected via a digital channel. In particular, we assume that the plant is between a hold device and a sampler. Transmissions occur only at some given time instants \(t_j, j \in {\mathbb {Z}}_{> 0}\), such that \(\epsilon \le t_j-t_{j-1} \le \tau _\mathrm {MASP}\), where \(\epsilon \in (0,\tau _\mathrm {MASP}]\) represents the minimum time between any two transmission instants. Note that \(\epsilon \) can be taken arbitrarily small and it is only used to prevent Zeno behavior [10]. As in [17], a sampled-data control system with an emulated controller of the form (59) can be modeled by

where \(\hat{y} \in \mathbb R^{n_y}\) and \(\hat{u} \in \mathbb R^{n_u}\) are, respectively, the most recently transmitted values of the plant output and the controller output. Between two successive transmission instants, these variables are generated by the holding functions \(\hat{f}_p\) and \(\hat{f}_c\); for instance, zero-order-hold devices lead to \(\hat{f}_p = 0\) and \(\hat{f}_c = 0\). In addition, \(e := (e_y,e_u) \in \mathbb {R}^{n_e}\) denotes the sampling-induced error, where \(e_y := \hat{y} - y\in \mathbb R^{n_y}\) and \(e_u := \hat{u} - u\in \mathbb R^{n_u}\). With \(x := (x_p,x_c) \in \mathbb R^{n_x}\), it is convenient to rewrite (60) as the hybrid system

where \(\tau \in \mathbb R_{\ge 0}\) represents a clock and w denotes the disturbance input. We also have the flow set \(\mathcal {C}:= \{ (x,e,\tau ,w) :\tau \in [0,\tau _{\mathrm {MASP}}]\}\) and the jump set \(\mathcal {D}:= \{ (x,e,\tau ,w) :\tau \in [\epsilon ,\tau _{\mathrm {MASP}}]\}\).
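To see how the clock \(\tau \), the flow map and the reset interact, the hybrid model can be simulated for a toy loop. The Python sketch below is only illustrative and rests on hypothetical choices that are not part of this section: a scalar plant \(\dot{x} = u + w\) with \(y = x\), a static emulated controller \(u = -\hat{y}\) (so only the output is sampled and \(e = e_y\)), zero-order hold (\(\dot{\hat{y}} = 0\), hence \(\dot{e} = -\dot{x}\)), periodic transmissions with a period h assumed to satisfy \(\epsilon \le h \le \tau _{\mathrm {MASP}}\), and forward Euler integration. Each transmission resets e and \(\tau \) to zero and leaves x unchanged.

```python
from typing import Callable, Tuple


def simulate_zoh_loop(x0: float, h: float, t_end: float, dt: float = 1e-4,
                      w: Callable[[float], float] = lambda t: 0.0) -> Tuple[float, float, int]:
    """Euler simulation of a hypothetical scalar sampled-data loop in hybrid form.

    Flow (tau in [0, h]):  x' = -(x + e) + w,  e' = -x',  tau' = 1
    Jump (tau = h):        x+ = x,  e+ = 0,  tau+ = 0
    Returns the final x, the final e, and the number of jumps (transmissions).
    """
    n_sample = round(h / dt)      # Euler steps per sampling interval
    n_total = round(t_end / dt)   # total number of Euler steps
    x, e, tau, jumps = float(x0), 0.0, 0.0, 0
    for k in range(n_total):
        if k > 0 and k % n_sample == 0:
            # the clock has reached h, so the state is in the jump set: transmit y and reset
            e, tau, jumps = 0.0, 0.0, jumps + 1
        dx = -(x + e) + w(k * dt)  # u = -y_hat = -(x + e) under zero-order hold
        x, e, tau = x + dt * dx, e - dt * dx, tau + dt
    return x, e, jumps


x_end, e_end, n_jumps = simulate_zoh_loop(1.0, 0.1, 5.0)
print(x_end, n_jumps)
```

With \(w \equiv 0\), \(x(0,0) = 1\) and h = 0.1, the held value \(\hat{y} = x + e\) is constant between transmissions, so x decreases by the factor \(1 - h = 0.9\) per sampling interval and equals \(0.9^{50}\) (up to rounding) at t = 5, after 49 resets.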

To present our results, we need to make the following assumption.

Assumption 1

There exist locally Lipschitz functions \(V :\mathbb R^{n_x} \rightarrow \mathbb R_{\ge 0}\), \(W :\mathbb {R}^{n_e} \rightarrow \mathbb R_{\ge 0}\), a continuous function \(H :\mathbb R^{n_x} \rightarrow \mathbb R_{\ge 0}\), \(\underline{\alpha }_x,\overline{\alpha }_x,\underline{\alpha }_e,\overline{\alpha }_e \in \mathcal {K}_\infty \), \({\tilde{\alpha }} \in \mathcal {PD}\), \(\sigma _1,\sigma _2 \in \mathcal {K}\) and real numbers \(L,\gamma > 0\) such that the following hold

for almost all \(x \in \mathbb R^{n_x}\), all \(e \in \mathbb R^{n_e}\) and all \(w \in \mathbb R^{d}\)

moreover,

and for almost all \(e \in \mathbb R^{n_e}\), for all \(x \in \mathbb R^{n_x}\) and all \(w \in \mathbb {R}^d\)

According to (63) and (64), the emulated controller guarantees the iISS property for the subsystem \(\dot{x} = f(x,e,w)\) with W and w as inputs. These properties can be verified by analyzing the robustness of the closed-loop system (58)–(59) with respect to input and/or output measurement errors in the absence of the digital network. Finally, (66) holds if, for instance, the function g is globally Lipschitz and there exists \(M > 0\) such that \(\left| \frac{\partial W(e)}{\partial e}\right| \le M\).

The last condition concerns the MASP. As in [17], sampling must be sufficiently fast (i.e., the channel must have a sufficiently high bandwidth) for the following assumption to hold.

Assumption 2

Let \(\tau _{\mathrm {MASP}}\) satisfy \(\tau _{\mathrm {MASP}} < \mathcal {T}(\gamma ,L)\), where

with \(r := \sqrt{\left| (\gamma / L)^2-1\right| }\).
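The bound \(\mathcal {T}(\gamma ,L)\) can be evaluated numerically. The display defining it is not reproduced above, so the sketch below assumes the closed form of [17] as we recall it (to be checked against [17]): \(\mathcal {T} = \arctan (r)/(Lr)\) for \(\gamma > L\), \(\mathcal {T} = 1/L\) for \(\gamma = L\), and \(\mathcal {T} = \mathrm {arctanh}(r)/(Lr)\) for \(\gamma < L\), with r as above. As a consistency check, it also computes by quadrature the time that the solution of \(\dot{\phi } = -2L\phi - \gamma (\phi ^2+1)\), the comparison equation we assume underlies [17], takes to decrease from \(+\infty \) to 0; the substitution \(\phi = \tan \theta \) turns this into the bounded integral \(\int _0^{\pi /2} \mathrm {d}\theta /(\gamma + L \sin 2\theta )\). The function names are hypothetical.

```python
import math


def masp_bound(gamma: float, L: float) -> float:
    """Closed-form bound T(gamma, L) on the sampling period (form recalled from [17])."""
    if gamma <= 0.0 or L <= 0.0:
        raise ValueError("gamma and L must be positive")
    r = math.sqrt(abs((gamma / L) ** 2 - 1.0))
    if r == 0.0:                       # gamma == L (up to rounding)
        return 1.0 / L
    if gamma > L:
        return math.atan(r) / (L * r)
    return math.atanh(r) / (L * r)     # gamma < L, so 0 < r < 1


def masp_bound_quadrature(gamma: float, L: float, n: int = 2000) -> float:
    """Time for phi' = -2 L phi - gamma (phi^2 + 1) to decrease from +inf to 0.

    T = int_0^inf dphi / (2 L phi + gamma (phi^2 + 1)); with phi = tan(theta) the
    integrand becomes 1 / (gamma + L sin(2 theta)) on [0, pi/2], integrated here
    with composite Simpson's rule (n even)."""
    if n % 2:
        n += 1
    step = (math.pi / 2) / n
    f = lambda th: 1.0 / (gamma + L * math.sin(2.0 * th))
    total = f(0.0) + f(math.pi / 2)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * f(k * step)
    return total * step / 3.0


T = masp_bound(10.0, 3.0)
print(f"{T:.2f}")  # prints 0.13
```

With L = 3 and γ = 10, the values used in the example at the end of this section, the closed form gives \(\mathcal {T}(10,3) \approx 0.133\), in agreement with the reported \(\tau _{\mathrm {MASP}} \simeq 0.13\); the quadrature reproduces the closed form in all three cases \(\gamma > L\), \(\gamma = L\) and \(\gamma < L\).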

Now we are ready to give the main result of this section.

Theorem 3

Let Assumptions 1 and 2 hold. Then the hybrid system (61)–(62) is iISS with respect to the compact set \({\mathcal {A}} := \{ (x,e,\tau ) :x = 0 , e = 0, \tau \in [0,\tau _{\mathrm {MASP}}] \}\).

Proof

To prove the theorem, we appeal to Theorem 1. In particular, we establish that the hybrid system (61)–(62) is smoothly dissipative. Moreover, as shown in [17], the hybrid system (61)–(62) is 0-input AS under Assumptions 1 and 2. Hence, by the implication \((iv) \Rightarrow (i)\) of Theorem 1, (69) is iISS. To establish the dissipativity of (61)–(62), we need the following two lemmas.

Lemma 7

Given \(c > 1\) and \(\lambda \in (0,1)\), define

where \(r := \sqrt{\left| (\gamma / L)^2-c\right| }\). Let \(\phi :[0,\tilde{\mathcal {T}}] \rightarrow \mathbb {R}\) be the solution to

Then \(\phi (\tau ) \in [\lambda ,\lambda ^{-1}]\) for all \(\tau \in [0,\tilde{\mathcal {T}}]\).

Lemma 8

For any fixed \(\gamma \) and L, \(\tilde{\mathcal {T}}(\cdot ,\cdot ,\gamma ,L) : (1,+\infty ) \times (0,1) \rightarrow \mathbb R_{> 0}\) is continuous and strictly decreasing to zero with respect to the first two arguments.

Let \(\tau _{\mathrm {MASP}} < \mathcal {T} (\gamma ,L)\) be given. For the sake of convenience, denote \(\xi := [x^\top ,e^\top ,\tau ]^\top \), \(F(\xi ,w) := [f(x,e,w)^\top ,g(x,e,w)^\top ,1]^\top \) and \(G(\xi ,w) := [x^\top ,0^\top ,0]^\top \). Also, rewrite hybrid system (61) and (62) as

It follows from Lemma 8 that there exist \(c > 1\) and \(\lambda \in (0,1)\) such that \(\tau _{\mathrm {MASP}} = \tilde{\mathcal {T}} (c,\lambda ,\gamma ,L)\). Let the quadruple \((c,\lambda ,\gamma ,L)\) generate \(\phi \) via Lemma 7. Also, let

By (63), (65) and the fact that \(\phi (\tau ) \in [\lambda ,\lambda ^{-1}]\) for all \(\tau \in [0,\tau _{\mathrm {MASP}}]\) (cf. Lemma 7), there exist \(\underline{\alpha },\overline{\alpha }\in \mathcal {K}_\infty \) such that the following hold

For any \((\xi ,w) \in \mathcal {C}\), we have

It follows from (64), (66) and (68) that

From Young’s inequality, for any \(\varepsilon > 0\) we have

It follows from Lemma 7 that

Given \(\sigma (\cdot ) := \sigma _1 (\cdot ) + \frac{1}{\lambda ^2\varepsilon } [\sigma _2 (\cdot )]^2\), we get

Picking \(\varepsilon \) sufficiently small so that \(c-\varepsilon -1 > 0\) gives

Then

Also, for any \((\xi ,w) \in \mathcal {D}\), we have

It follows from (69) that

Since \(W(0) = 0\), we get

Thus

for all \((\xi ,w) \in \mathcal {D}\). Given (70), (71) and (72), we conclude that (69) is smoothly dissipative with \(\rho (\xi ) \equiv 0\) as in (7) and (8). \(\square \)

Remark 5

Variants of Theorem 3, including a (semiglobal) practical iISS property, can be obtained by appropriate modifications of Assumption 1. Moreover, given the close connections between sampled-data systems and other engineering systems such as networked control systems and event-triggered control systems, we expect our application to sampled-data systems to be useful for studying the iISS property of such hybrid systems.

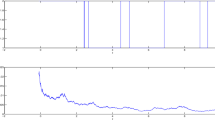

To illustrate Theorem 3, we give an example. Consider the continuous-time plant with a bounded-input controller

where \(x,u,w \in \mathbb R\). Ignoring the digital channel, the closed-loop system is iISS but not ISS. Accounting for the digital communication effects, we write the system as a hybrid system of the form (61)–(62)

Taking \(V(x) = \left| x\right| \) and \(W(e)=\left| e\right| \), the requirements of Assumption 1 are satisfied with \(L = 3\), \(\gamma = 10\) and \(H(x) = \frac{\left| x\right| }{1+x^2}\). This choice of parameters gives \(\tau _{\mathrm {MASP}} \simeq 0.13\).
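The dissipation rate in this example is positive definite but bounded. Since \(1 + x^2 \ge 2\left| x\right| \),

$$0 < H(x) = \frac{\left| x\right| }{1+x^2} \le \frac{1}{2} \quad \forall x \ne 0, \qquad H(\pm 1) = \frac{1}{2}, \qquad \lim _{\left| x\right| \rightarrow \infty } H(x) = 0,$$

so H is not of class \(\mathcal {K}_\infty \) in \(\left| x\right| \). A positive definite rate of this kind is admissible in an iISS Lyapunov estimate, whereas a rate that vanishes as \(\left| x\right| \rightarrow \infty \) cannot serve in the standard (class-\(\mathcal {K}_\infty \)) ISS Lyapunov dissipation estimate, which is consistent with the closed loop being iISS but not ISS.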

6 Conclusions

This paper was primarily concerned with Lyapunov characterizations of pre-iISS for hybrid systems. In particular, we established that pre-iISS is equivalent to the existence of a smooth iISS Lyapunov function, which unifies and extends results in [1, 4]. We also related pre-iISS to dissipativity and detectability notions and investigated the robustness of pre-iISS to vanishing perturbations. Finally, we illustrated the effectiveness of our results by providing a maximum allowable sampling period guaranteeing iISS for sampled-data control systems.

Our results can be extended in several directions. In particular, one could seek further equivalent characterizations of pre-iISS in terms of time-domain behaviors, such as 0-input pre-AS plus uniform bounded-energy-bounded-state, or bounded-energy-weakly-converging-state plus 0-input pre-local stability (cf. [2, 5] for the existing equivalent characterizations for continuous-time systems). Moreover, other related notions such as strong iISS, integral input-output-to-state stability and integral output-to-state stability could be investigated.

Notes

Without loss of generality, in this proposition, we assume that the hybrid time domain of interest has infinite length.

References

Angeli D (1999) Intrinsic robustness of global asymptotic stability. Syst Control Lett 38(4–5):297–307

Angeli D, Ingalls B, Sontag ED, Wang Y (2004) Separation principles for input-output and integral-input-to-state stability. SIAM J Control Optim 43(1):256–276

Angeli D, Nešić D (2002) A trajectory-based approach for the stability robustness of nonlinear systems with inputs. Math Control Signals Syst 15(4):336–355

Angeli D, Sontag ED, Wang Y (2000) A characterization of integral input-to-state stability. IEEE Trans Autom Control 45(6):1082–1097

Angeli D, Sontag ED, Wang Y (2000) Further equivalences and semiglobal versions of integral input to state stability. Dyn Control 10(2):127–149. doi:10.1023/A:1008356223747

Cai C, Teel AR (2009) Characterizations of input-to-state stability for hybrid systems. Syst Control Lett 58(1):47–53

Cai C, Teel AR, Goebel R (2007) Smooth Lyapunov functions for hybrid systems part I: existence is equivalent to robustness. IEEE Trans Autom Control 52(7):1264–1277

Cai C, Teel AR, Goebel R (2008) Smooth Lyapunov functions for hybrid systems part II: (pre)asymptotically stable compact sets. IEEE Trans Autom Control 53(3):734–748

Goebel R, Sanfelice RG, Teel AR (2009) Hybrid dynamical systems. IEEE Control Syst 29(2):28–93

Goebel R, Sanfelice RG, Teel AR (2012) Hybrid dynamical systems: modeling, stability, and robustness. Princeton University Press, Princeton

Hespanha JP, Liberzon D, Teel AR (2008) Lyapunov conditions for input-to-state stability of impulsive systems. Automatica 44(11):2735–2744

Kellett CM (2014) A compendium of comparison function results. Math Control Signals Syst 26(3):339–374. doi:10.1007/s00498-014-0128-8

Kellett CM, Teel AR (2005) On the robustness of \(\cal{KL}\)-stability for difference inclusions: smooth discrete-time Lyapunov functions. SIAM J Control Optim 44(3):777–800

Lin Y, Sontag ED, Wang Y (1996) A smooth converse Lyapunov theorem for robust stability. SIAM J Control Optim 34(1):124–160

Mancilla-Aguilar J, García R (2001) On converse Lyapunov theorems for ISS and iISS switched nonlinear systems. Syst Control Lett 42(1):47–53

Moreau L, Nešić D, Teel AR (2001) A trajectory based approach for robustness of input-to-state stability. In: Proceedings of the 2001 American control conference, vol 5, pp 3570–3575

Nešić D, Teel AR, Carnevale D (2009) Explicit computation of the sampling period in emulation of controllers for nonlinear sampled-data systems. IEEE Trans Autom Control 54(3):619–624

Nešić D, Zaccarian L, Teel AR (2008) Stability properties of reset systems. Automatica 44(8):2019–2026

Noroozi N, Khayatian A, Geiselhart R (2016) A characterization of integral input-to-state stability for hybrid systems. Technical report. arXiv:1606.07508

Noroozi N, Nešić D, Teel AR (2014) Gronwall inequality for hybrid systems. Automatica 50(10):2718–2722

Sanfelice RG, Goebel R, Teel AR (2007) Invariance principles for hybrid systems with connections to detectability and asymptotic stability. IEEE Trans Autom Control 52(12):2282–2297

Sontag ED (1989) Smooth stabilization implies coprime factorization. IEEE Trans Autom Control 34(4):435–443

Sontag ED (1990) Further facts about input to state stabilization. IEEE Trans Autom Control 35(4):473–476

Sontag ED (1998) Comments on integral variants of ISS. Syst Control Lett 34(1–2):93–100

Sontag ED (2008) Input to state stability: basic concepts and results. Springer, Berlin

Sontag ED, Wang Y (1995) On characterizations of the input-to-state stability property. Syst Control Lett 24(5):351–359

Teel AR, Praly L (2000) A smooth Lyapunov function from a class-\(\cal{KL}\) estimate involving two positive semidefinite functions. ESAIM: Control Optim Calculus Var 5:313–367

Wang W, Nešić D, Teel AR (2012) Input-to-state stability for a class of hybrid dynamical systems via averaging. Math Control Signals Syst 23(4):223–256

Acknowledgements

The first author is very grateful to Andy Teel for numerous discussions and for raising the questions which led to this paper. The first author also warmly thanks Dragan Nešić for his illuminating suggestions and insightful discussions. Particularly, the proof of the implication \((ii) \Rightarrow (i)\) of Theorem 1 is the fruit of a collaboration with him. Finally, the authors would like to thank the Associate Editor and reviewers for several constructive comments which helped to improve the paper.


Appendices

Appendix A: A comparison-like Lemma for hybrid systems

The lemma below is a generalization of [4, Lemma IV.2] for hybrid systems. The proof of the lemma follows from similar lines as in the proof of [4, Corollary IV.3]. For more details, we refer the reader to [19].

Lemma 9

Let \(\rho \in \mathcal {PD}\) with \(\rho (r) < r\) for all \(r > 0\), and \(z :\mathrm {dom}z \rightarrow \mathbb R\) be a hybrid arc with \(z(0,0) \ge 0\). Consider a hybrid signal \(v :\mathrm {dom}v \rightarrow \mathbb R_{\ge 0}\) such that \(\mathrm {dom}v = \mathrm {dom}z\) and for each j, \(v(\cdot ,j)\) is continuous. Furthermore, assume that

- for almost all t such that \((t,j) \in \mathrm {dom}z \backslash {\varGamma }(z)\),

$$\dot{z}(t,j) \le -\rho (\max \{ z(t,j) + v(t,j) , 0 \}) ;$$

- for all \((t,j) \in {\varGamma }(z)\), it holds that

$$z(t,j+1) - z(t,j) \le -\rho (\max \{ z(t,j) + v(t,j) , 0 \}) .$$

Then, there exists \(\beta \in \mathcal {KLL}\) such that
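The hypotheses of Lemma 9 can be made concrete with a small simulation. The Python sketch below is illustrative only and rests on assumptions not taken from the paper: the particular choice \(\rho (r) = r/(1+r)\), which is positive definite and satisfies \(\rho (r) < r\) for \(r > 0\); a hypothetical flow length of one time unit between consecutive jumps; and a forward-Euler discretization of the flows, so the flow inequality holds with equality only up to discretization error. The names `simulate_hybrid_arc` and `rho` are hypothetical. With \(v \equiv 0\), the generated arc stays nonnegative and is nonincreasing; the condition \(\rho (r) < r\) is what keeps a jump \(z^+ = z - \rho (z)\) from driving a positive z below zero.

```python
from typing import Callable, List, Tuple


def rho(r: float) -> float:
    # Assumed example: positive definite, with rho(r) < r for every r > 0.
    return r / (1.0 + r)


def simulate_hybrid_arc(
    z0: float,
    v: Callable[[float, int], float],
    flow_length: float = 1.0,
    n_jumps: int = 5,
    dt: float = 1e-3,
) -> List[Tuple[float, int, float]]:
    """Return samples (t, j, z) of a hybrid arc satisfying the hypotheses of
    Lemma 9 with equality; flows are discretized by forward Euler."""
    t, j, z = 0.0, 0, z0
    samples = [(t, j, z)]
    steps = int(round(flow_length / dt))
    for _ in range(n_jumps):
        # Flow: zdot = -rho(max{z + v, 0}) over an interval of length flow_length.
        for _ in range(steps):
            z -= dt * rho(max(z + v(t, j), 0.0))
            t += dt
            samples.append((t, j, z))
        # Jump at the current t: z(t, j + 1) = z(t, j) - rho(max{z + v, 0}).
        z -= rho(max(z + v(t, j), 0.0))
        j += 1
        samples.append((t, j, z))
    return samples
```

For example, `simulate_hybrid_arc(5.0, lambda t, j: 0.0)` returns 5006 samples ending at jump index 5, with z decreasing along both flows and jumps.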

Appendix B: Proof of Theorem 2

Before proceeding to the proof, we make the following observation and then introduce two new notions.

Remark 6

It should be pointed out that there is no loss of generality in working with \(\mathcal {KL}\) functions rather than \(\mathcal {KLL}\) functions (cf. [7, Lemma 6.1] for more details). Moreover, we note that \(\max \{a,b,c\} \le a+b+c \le \max \{3a,3b,3c\}\) for all \(a,b,c \in \mathbb R_{\ge 0}\). Hence, \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\) if and only if there exist \(\alpha \in \mathcal {K}_\infty \), \(\gamma _1,\gamma _2 \in \mathcal {K}\) and \(\beta \in \mathcal {KL}\) such that for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e\), all \(\xi \in \mathcal {X}\), and all \((t,j) \in \mathrm {dom}\,x\), each solution pair (x, u) to \(\mathcal {H}\) satisfies

For the sake of convenience, here we prefer to use the max-type estimate (73) rather than (2). The next two notions are required later.
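The elementary inequality in Remark 6 is what makes the max-type and sum-type estimates interchangeable. For \(a,b,c \in \mathbb R_{\ge 0}\), write \(m := \max \{a,b,c\}\); then

$$\begin{aligned} m \le a+b+c \le 3m = \max \{3a,3b,3c\} , \end{aligned}$$

where the first inequality holds because the two terms other than the maximal one are nonnegative, and the second because each of a, b, c is at most m. Hence a bound \(y \le a+b+c\) implies \(y \le \max \{3a,3b,3c\}\), and a bound \(y \le \max \{a,b,c\}\) implies \(y \le a+b+c\). The factor 3 can then be absorbed into the comparison functions (for instance, 3β is again of class \(\mathcal {KL}\)), which is presumably how (73) is obtained from (2).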

Definition 6

Let \({\mathcal {A}}\subset {\mathcal {X}}\) be a compact set, and \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) be an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Also, let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \({\mathcal {X}}\). The hybrid system \(\mathcal {H}\) is said to be semiglobally practically robustly pre-integral input-to-state stable (SPR-pre-iISS) with respect to \({\mathcal {A}}\) if there exist \(\alpha \in \mathcal {K}_\infty \), \(\beta \in \mathcal {KL}\), \(\gamma _1,\gamma _2 \in \mathcal {K}\) such that for each pair of positive real numbers \((\varepsilon ,r)\), there exists \(\delta ^* \in (0,1)\) such that for any \(\delta \in (0,\delta ^*]\) each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\), the \(\delta \sigma \)-perturbation of \(\mathcal {H}\), exists for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e(r)\), all \(\overline{\xi } \in {\mathcal {X}}\) with \(\omega (\overline{\xi }) \le r\) and all \((t,j) \in \mathrm {dom}\,\overline{x}\), and also satisfies

Definition 7

Let \({\mathcal {A}}\subset {\mathcal {X}}\) be a compact set, and \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) be an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Also, let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \({\mathcal {X}}\). The hybrid system \(\mathcal {H}\) is said to be SPR-pre-iISS with respect to \({\mathcal {A}}\) on finite time intervals if there exist \(\alpha \in \mathcal {K}_\infty \), \(\gamma _1,\gamma _2 \in \mathcal {K}\) and \(\beta \in \mathcal {KL}\) such that for each triple of positive real numbers \((T,\varepsilon ,r)\), there exists \(\delta ^* \in (0,1)\) such that for any \(\delta \in (0,\delta ^*]\) each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\), the \(\delta \sigma \)-perturbation of \(\mathcal {H}\), exists for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), all \(\overline{\xi } \in {\mathcal {X}}\) with \(\omega (\overline{\xi }) \le r\) and all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(t+j \le T\), and also satisfies

The proof proceeds in the following steps: (1) we show that semiglobal practical robust pre-iISS on finite time intervals is equivalent to semiglobal practical robust pre-iISS on the semi-infinite interval (cf. Proposition 3 below); (2) we establish that if, when the original system is pre-iISS, solutions of some inflated system can be made arbitrarily close on arbitrary compact time intervals to solutions of the original system, then the inflated system is semiglobally practically robustly pre-iISS (cf. Proposition 4 below); (3) we show that semiglobal practical robust pre-iISS implies pre-iISS (cf. Proposition 5 below); (4) combining Propositions 4 and 5 yields what we need, namely, the existence of an inflated hybrid system that remains pre-iISS under sufficiently small perturbations whenever the original system is pre-iISS.

The first step provides a link between the last two definitions.

Proposition 3

The following are equivalent:

(A) \(\mathcal {H}\) is SPR-pre-iISS with respect to \({\mathcal {A}}\) on finite time intervals.

(B) \(\mathcal {H}\) is SPR-pre-iISS with respect to \({\mathcal {A}}\).

Proof

The implication \((B) \Rightarrow (A)\) is clear, since an estimate valid on the whole hybrid time domain holds in particular for \(t+j \le T\). To establish the implication \((A) \Rightarrow (B)\), let the gain functions \(\alpha \), \(\beta \), \(\gamma _1\) and \(\gamma _2\) come from Remark 6. Take arbitrary \(\varepsilon , r > 0\), and let \(T > 0\) be sufficiently large such that

Let \(\delta ^* \in (0,1)\) be given by the assumption of SPR-pre-iISS on finite time intervals for the triple \((2T,\frac{\varepsilon }{2},\max \left\{ r , r + \varepsilon , \alpha ^{-1} (r + \varepsilon ) \right\} )\), and fix an arbitrary \(\delta \in (0,\delta ^*]\). Thus, for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), for all \(\overline{\xi } \in {\mathcal {X}}\) with \(\omega (\overline{\xi }) \le \max \left\{ r , r + \varepsilon , \alpha ^{-1}(r + \varepsilon ) \right\} \) and for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(t+j \le 2T\), each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\) exists and satisfies

For \(k = 0,1,2,\dots \), let \((t_{kT},j_{kT}) := (t,j)\), where \((t,j) \in \mathrm {dom}\,\overline{x}\) and \(t + j = kT\). It follows from the fact that \(\omega (\overline{\xi }) \le \max \left\{ r,r + \varepsilon , \alpha ^{-1}(r + \varepsilon ) \right\} \), the fact that \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), and the choice of T (cf. (74)) that

It follows from (75) that the following hold

Exploiting the semigroup property of solutions, (76), the fact that \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), and the choice of \(\delta \), we conclude that the solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\) with initial value \(\overline{x}(t_T,j_T,\overline{\xi },u)\) exists for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(T \le t+j \le 3T\) and satisfies

Again it follows from (76), the fact that \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), and (74) that

By repeating this procedure, the following hold for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(kT \le t+j \le (k+2)T,k=2,3,4,\dots \)

So we have for all \(\overline{\xi } \in {\mathcal {X}}\) with \(\omega (\overline{\xi }) \le \max \left\{ r , r + \varepsilon , \alpha ^{-1}(r + \varepsilon ) \right\} \), for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e(r)\), and for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(t+j \ge T\)

We also get for all \(\overline{\xi } \in {\mathcal {X}}\) with \(\omega (\overline{\xi }) \le \max \left\{ r , r + \varepsilon , \alpha ^{-1}(r + \varepsilon ) \right\} \), for all \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e(r)\), and for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(0 \le t+j < T\)

which completes the proof. \(\square \)
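The index bookkeeping behind the repetition step can be made explicit. The proof estimates the solution on the overlapping windows \(kT \le t+j \le (k+2)T\), and every hybrid time with \(t+j \ge T\) lies at least T units into one of these windows, which is presumably where the choice of T in (74) is used at all later times; times with \(t+j < T\) are handled separately by the last estimate. The hypothetical helper `window_index` below computes such a k for \(s = t+j\); it is a sketch of the covering argument only, not of the estimates themselves.

```python
import math


def window_index(s: float, T: float) -> int:
    """Given a hybrid time s = t + j >= T and T > 0, return k >= 0 with
    k*T <= s <= (k + 2)*T and s - k*T >= T, i.e. s lies in the second half
    of the window k*T <= t + j <= (k + 2)*T."""
    if T <= 0 or s < T:
        raise ValueError("requires T > 0 and s >= T")
    # floor(s / T) >= 1, so k >= 0 and s is in [(k + 1)T, (k + 2)T).
    return math.floor(s / T) - 1
```

For example, with T = 0.5 and s = 7.25, the helper returns k = 13, so s lies in the window [6.5, 7.5] and 0.75 time units after its start.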

The following concepts, borrowed from [10], are required to state Proposition 4.

Definition 8

Two hybrid signals \(x :\mathrm {dom}\,x \rightarrow \mathbb R^{n}\) and \(y :\mathrm {dom}\,y \rightarrow \mathbb R^{n}\) are said to be (\(T,\varepsilon \))-close if

1. for each \((t,j) \in \mathrm {dom}\,x\) with \(t + j \le T\), there exists s such that \((s,j) \in \mathrm {dom}\,y\), \(\left| t - s\right| \le \varepsilon \) and \(\left| x(t, j) - y(s, j)\right| \le \varepsilon \);

2. for each \((t,j) \in \mathrm {dom}\,y\) with \(t + j \le T\), there exists s such that \((s,j) \in \mathrm {dom}\,x\), \(\left| t - s\right| \le \varepsilon \) and \(\left| y(t, j) - x(s, j)\right| \le \varepsilon \).
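Definition 8 can be checked mechanically when both signals are known only through finitely many samples. The Python sketch below is an approximation under that assumption (the definition itself quantifies over the full hybrid time domains): signals are represented as lists of (t, j, value) triples, \(|\cdot |\) is taken to be the Euclidean norm, and `are_T_eps_close` and `sampled_reset_signal` are hypothetical names used for illustration. The second item is obtained from the first by swapping the roles of x and y.

```python
import math
from typing import List, Sequence, Tuple

# A sampled hybrid signal: a list of (t, j, value) triples with value in R^n.
Sample = Tuple[float, int, Sequence[float]]


def _one_sided_close(x: List[Sample], y: List[Sample], T: float, eps: float) -> bool:
    # Every sample (t, j) of x with t + j <= T needs a sample (s, j) of y with
    # the same jump index j, |t - s| <= eps and |x(t, j) - y(s, j)| <= eps.
    for t, j, xv in x:
        if t + j > T:
            continue
        if not any(
            jj == j and abs(t - s) <= eps and math.dist(xv, yv) <= eps
            for s, jj, yv in y
        ):
            return False
    return True


def are_T_eps_close(x: List[Sample], y: List[Sample], T: float, eps: float) -> bool:
    # Both items of Definition 8, evaluated on the samples only.
    return _one_sided_close(x, y, T, eps) and _one_sided_close(y, x, T, eps)


def sampled_reset_signal(jump_time: float, t_end: float = 2.0, h: float = 0.01) -> List[Sample]:
    # Hypothetical example: value 1 on j = 0 up to a single jump at jump_time,
    # value 0 on j = 1 afterwards (the jump time appears with both indices).
    ts = [round(k * h, 10) for k in range(int(round(t_end / h)) + 1)]
    before = [(t, 0, (1.0,)) for t in ts if t <= jump_time]
    after = [(t, 1, (0.0,)) for t in ts if t >= jump_time]
    return before + after
```

With `x = sampled_reset_signal(1.0)` and `y = sampled_reset_signal(1.05)`, the two signals are (2.5, 0.1)-close but not (2.5, 0.01)-close, because their jump times differ by 0.05.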

Definition 9

(Reachable Sets) Given an arbitrary compact set \(K_0 \subset {\mathcal {X}}\) and \(T \in \mathbb R_{\ge 0}\), the reachable set from \(K_0\) in hybrid time less than or equal to T is the set

We now give a result stating that if, when \(\mathcal {H}\) is pre-iISS, solutions to \(\mathcal {H}_{\delta \sigma }\), the \(\delta \sigma \)-perturbation of \(\mathcal {H}\), are (\(T,\varepsilon \))-close to solutions to \(\mathcal {H}\), then the system \(\mathcal {H}\) is SPR-pre-iISS.

Proposition 4

Let \({\mathcal {A}}\subset {\mathcal {X}}\) be a compact set and \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) be an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Also, let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \({\mathcal {X}}\). Assume that the following conditions hold:

(a) \(\mathcal {H}\) is pre-iISS with respect to \({\mathcal {A}}\).

(b) For each triple \((T,{\tilde{\varepsilon }},r)\) of positive real numbers, there exists some \(\delta \in (0,1)\) such that for each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\), the \(\delta \sigma \)-perturbation of \(\mathcal {H}\), with \(\omega (\overline{\xi }) \le r + \delta \) and \(u \in \mathcal {L}_{\gamma _1,\gamma _2}^e (r)\), there exists a solution pair (x, w) to \(\mathcal {H}\) with \(\omega (\xi ) \le r\) and \(\left\| w_{(s,j)}\right\| _{\gamma _1,\gamma _2} \le \left\| u_{(t,j)}\right\| _{\gamma _1,\gamma _2}\) for all \((s,j) \in \mathrm {dom}\, w\) and \((t,j) \in \mathrm {dom}\, u\) with \(\left| t-s\right| \le {\tilde{\varepsilon }}\), such that \(\overline{x}\) and x are \((T,{\tilde{\varepsilon }})\)-close.

Then \(\mathcal {H}\) is SPR-pre-iISS with respect to \({\mathcal {A}}\).

Proof

The proof follows the steps of the proof of [28, Proposition 3]. By Proposition 3, it suffices to show that \(\mathcal {H}\) is SPR-pre-iISS with respect to \({\mathcal {A}}\) on finite time intervals. Assume that \(\sigma :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) is an admissible perturbation radius that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\). Let \(\omega \) be a proper indicator for \({\mathcal {A}}\) on \({\mathcal {X}}\). Also, let the functions \(\alpha \in \mathcal {K}_\infty \), \(\beta \in \mathcal {KL}\) and \(\gamma _1,\gamma _2 \in \mathcal {K}\) come from Definition 7. Let a triple \((T,\varepsilon ,r)\) of positive real numbers be given, and let \(K_0 := \left\{ \xi \in {\mathcal {X}} :\omega (\xi ) \le r \right\} \). Since \(\omega \) is a proper indicator, \(K_0\) is a compact set. Let \({\mathcal {R}}_{\le T} (K_0)\) be the reachable set from \(K_0\) for \(\mathcal {H}\). Since \(\mathcal {H}\) is pre-iISS, it follows from [10, Lemma 6.16] that the set \({\mathcal {R}}_{\le T} (K_0)\) is compact. Using the continuity of \(\omega \) and \(\beta \), and the fact that \(\beta (s,l) \rightarrow 0\) as \(l \rightarrow +\infty \), let \({\tilde{\varepsilon }}_1 > 0\) be sufficiently small so that

By convention, we set \(l - {\tilde{\varepsilon }}_{1} := 0\) whenever \(l - {\tilde{\varepsilon }}_{1} < 0\).

Let \({\tilde{\varepsilon }}_{2} > 0\) be small enough that for all \(x \in {\mathcal {R}}_{\le T} (K_0)\) and \(\overline{x} \in {\mathcal {R}}_{\le T} (K_0 + {\tilde{\varepsilon }}_2 \overline{\mathbb {B}})\) satisfying \(\left| x - \overline{x}\right| \le {\tilde{\varepsilon }}_2\), we have

Let \({\tilde{\varepsilon }} := \min \{ {\tilde{\varepsilon }}_1 , {\tilde{\varepsilon }}_2 \}\), and let the data \((T,{\tilde{\varepsilon }},r)\) generate \(\delta > 0\) via item (b) of Proposition 4. By this item, for each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\) with \(\overline{\xi } \in (K_0 + \delta \overline{\mathbb {B}})\) and \(u \in \mathcal {L}_{\gamma _{1},\gamma _{2}}^e (r)\), there exists some solution pair (x, w) to \(\mathcal {H}\) with \(\xi \in K_{0}\) and \(\left\| w_{(s,j)}\right\| _{\gamma _{1},\gamma _{2}} \le \left\| u_{(t,j)}\right\| _{\gamma _{1},\gamma _{2}}\) for all \((s,j) \in \mathrm {dom}\,w\) and \((t,j) \in \mathrm {dom}\,u\) with \(\left| t-s\right| \le {\tilde{\varepsilon }}\), such that \(\overline{x}\) and x are \((T,{\tilde{\varepsilon }})\)-close. It follows from item (a) of Proposition 4 and the definition of \({\tilde{\varepsilon }}\) that for all \((t,j) \in \mathrm {dom}\,\overline{x}\) with \(t+j \le T\), each solution pair \((\overline{x},u)\) to \(\mathcal {H}_{\delta \sigma }\) with \(\overline{\xi } \in (K_{0}+\delta \overline{\mathbb {B}})\) and \(u \in \mathcal {L}_{\gamma _{1},\gamma _2}^e (r)\) satisfies

This completes the proof. \(\square \)

Remark 7

Condition (\(\mathrm {b}\)) of Proposition 4 is not restrictive. Using the same arguments as in the proof of [28, Proposition 1], one can provide sufficient conditions under which condition (\(\mathrm {b}\)) of Proposition 4 holds. In particular, pre-iISS together with the Standing Assumptions suffices to obtain this property.

Now we pass from semiglobal results to global results. The following proposition shows that semiglobal practical robust pre-iISS implies pre-iISS.

Proposition 5

Let \({\mathcal {A}}\subset {\mathcal {X}}\) be a compact set, and assume that the hybrid system \(\mathcal {H}\) is SPR-pre-iISS with respect to \({\mathcal {A}}\). Then there exists an admissible perturbation radius \(\sigma _2 :{\mathcal {X}} \rightarrow \mathbb R_{\ge 0}\) that is positive on \({\mathcal {X}} \backslash {\mathcal {A}}\) such that the hybrid system \(\mathcal {H}_{\sigma _2}\), the \(\sigma _2\)-perturbation of \(\mathcal {H}\), is pre-iISS with respect to \({\mathcal {A}}\).

Proof