Abstract

This paper is concerned with finite sample approximations to the supremum of a non-degenerate U-process of a general order indexed by a function class. We are primarily interested in situations where the function class as well as the underlying distribution change with the sample size, and the U-process itself is not weakly convergent as a process. Such situations arise in a variety of modern statistical problems. We first consider Gaussian approximations, namely, approximate the U-process supremum by the supremum of a Gaussian process, and derive coupling and Kolmogorov distance bounds. Such Gaussian approximations are, however, not often directly applicable in statistical problems since the covariance function of the approximating Gaussian process is unknown. This motivates us to study bootstrap-type approximations to the U-process supremum. We propose a novel jackknife multiplier bootstrap (JMB) tailored to the U-process, and derive coupling and Kolmogorov distance bounds for the proposed JMB method. All these results are non-asymptotic, and established under fairly general conditions on function classes and underlying distributions. Key technical tools in the proofs are new local maximal inequalities for U-processes, which may be useful in other problems. We also discuss applications of the general approximation results to testing for qualitative features of nonparametric functions based on generalized local U-processes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

This paper is concerned with finite sample approximations to the supremum of a U-process of a general order indexed by a function class. We begin with describing our setting. Let \(X_1,\ldots ,X_n\) be independent and identically distributed (i.i.d.) random variables defined on a probability space \((\Omega , {\mathcal {A}}, {\mathbb {P}})\) and taking values in a measurable space \((S, {\mathcal {S}})\) with common distribution P. For a given integer \(r \geqslant 2\), let \({\mathcal {H}}\) be a class of jointly measurable functions (kernels) \(h:S^{r} \rightarrow {\mathbb {R}}\) equipped with a measurable envelope H (i.e., H is a nonnegative function on \(S^{r}\) such that \(H \geqslant \sup _{h \in {\mathcal {H}}}|h|)\). Consider the associated U-process

where \(I_{n,r} = \{ (i_{1},\ldots ,i_{r}) : 1 \leqslant i_{1},\ldots ,i_{r} \leqslant n, i_{j} \ne i_{k} \ \text {for} \ j \ne k \}\) and \(| I_{n,r} | = n!/(n-r)!\) denotes the cardinality of \(I_{n,r}\). Without loss of generality, we may assume that each \(h \in {\mathcal {H}}\) is symmetric, i.e., \(h(x_{1},\ldots ,x_{r}) = h(x_{i_{1}},\ldots ,x_{i_{r}})\) for every permutation \(i_{1},\ldots ,i_{r}\) of \(1,\ldots ,r\), and the envelope H is symmetric as well. Consider the normalized U-process

The main focus of this paper is to derive finite sample approximation results for the supremum of the normalized U-process, namely, \(Z_{n} := \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h)/r\), in the case where the U-process is non-degenerate, i.e., \(\mathrm {Var}({\mathbb {E}}[h(X_1,\ldots ,X_{r}) \mid X_1]) > 0\) for all \(h \in {\mathcal {H}}\). The function class \({\mathcal {H}}\) is allowed to depend on n, i.e., \({\mathcal {H}}= {\mathcal {H}}_{n}\), and we are primarily interested in situations where the normalized U-process \({\mathbb {U}}_{n}\) is not weakly convergent as a process (beyond finite dimensional convergence). For example, there are situations where \({\mathcal {H}}_n\) depends on n but \({\mathcal {H}}_{n}\) is further indexed by a parameter set \(\Theta \) independent of n. In such cases, one can think of \({\mathbb {U}}_{n}\) as a U-process indexed by \(\Theta \) and can consider weak convergence of the U-process in the space of bounded functions on \(\Theta \), i.e., \(\ell ^{\infty }(\Theta )\). However, even in such cases, there are a variety of statistical problems where the U-process is not weakly convergent in \(\ell ^{\infty }(\Theta )\), even after a proper normalization. The present paper covers such “difficult” (and in fact yet more general) problems.

U-processes are powerful tools for a broad range of statistical applications such as testing for qualitative features of functions in nonparametric statistics [1, 25, 38], cross-validation for density estimation [43], and establishing limiting distributions of M-estimators (see, e.g., [4, 18, 50, 51]). There are two perspectives on U-processes: (1) they are infinite-dimensional versions of U-statistics (with one kernel); (2) they are stochastic processes that are nonlinear generalizations of empirical processes. Both views are useful in that: (1) statistically, it is of greater interest to consider a rich class of statistics rather than a single statistic; (2) mathematically, we can borrow the insights from empirical process theory to derive limit or approximation theorems for U-processes. Importantly, however, (1) extending U-statistics to U-processes requires substantial efforts and different techniques; and (2) generalization from empirical processes to U-processes is highly nontrivial especially when U-processes are not weakly convergent as processes. In classical settings where indexing function classes are fixed (i.e., independent of n), it is known that Uniform Central Limit Theorems (UCLTs) in the Hoffmann-Jørgensen sense hold for U-processes under metric (or bracketing) entropy conditions, where U-processes are weakly convergent in spaces of bounded functions [4, 8, 18, 44] (these references also cover degenerate U-processes where limiting processes are Gaussian chaoses rather than Gaussian processes). Under such classical settings, [5, 56] study limit theorems for bootstrapping U-processes; see also [3, 6, 9, 19, 32,33,34, 55] as references on bootstraps for U-statistics. Giné and Mason [27] introduce a notion of the local U-process motivated by a density estimator of a function of several variables proposed by [24] and establish a version of UCLTs for local U-processes. More recently, [11] studies Gaussian and bootstrap approximations for high-dimensional (order-two) U-statistics, which can be viewed as U-processes indexed by finite function classes \({\mathcal {H}}_n\) with increasing cardinality in n. To the best of our knowledge, however, no existing work covers the case where the indexing function class \({\mathcal {H}}= {\mathcal {H}}_n\) (1) may change with n; (2) may have infinite cardinality for each n; and (3) need not verify UCLTs. This is indeed the situation for many of nonparametric specification testing problems [1, 25, 38]; see examples in Sect. 4 for details.

In this paper, we develop a general non-asymptotic theory for directly approximating the supremum \(Z_n = \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_n (h)/r\) without referring a weak limit of the underlying U-process \(\{{\mathbb {U}}_n(h) : h \in {\mathcal {H}}\}\). Specifically, we first establish a general Gaussian coupling result to approximate \(Z_n\) by the supremum of a Gaussian process \(W_P\) in Sect. 2. Our Gaussian approximation result builds upon recent development in modern empirical process theory [13,14,15] and high-dimensional U-statistics [11]. As a significant departure from the existing literature [4, 14, 15, 27], our Gaussian approximation for U-processes has a multi-resolution nature, which is neither parallel with the theory of U-processes with fixed function classes nor that of empirical processes. In particular, unlike U-processes with fixed function classes, the higher-order degenerate components are not necessarily negligible compared with the Hájek (empirical) process (in the sense of the Hoeffding projections [31]) and they may impact error bounds of the Gaussian approximation.

However, the covariance function of the Gaussian process \(W_P\) depends on the underlying distribution P which is unknown and hence the Gaussian approximation developed in Sect. 2 is not directly applicable to statistical problems such as computing critical values of a test statistic defined by the supremum of a U-process. On the other hand, the (Gaussian) multiplier bootstrap developed in [13, 15] for empirical processes is not directly applicable to U-processes since the Hájek process also depends on P and hence is unknown. Our second main contribution is to develop a fully data-dependent procedure for approximating the distribution of \(Z_n\). Specifically, we propose a novel jackknife multiplier bootstrap (JMB) tailored to U-processes in Sect. 3. The key insight of the JMB is to replace the (unobserved) Hájek process by its jackknife estimate (cf. [10]). We establish finite sample validity of the JMB (i.e., conditional multiplier CLT) with explicit error bounds. As a distinguished feature, our error bounds involve a delicate interplay among all levels of the Hoeffding projections. In particular, the key innovations are a collection of new powerful local maximal inequalities for level-dependent degenerate components associated with the U-process (see Sect. 5). To the best of our knowledge, there has been no theoretical guarantee on bootstrap consistency for U-processes whose function classes change with n and which do not converge weakly as processes. Our finite sample bootstrap validity results with explicit error bounds fill this important gap in literature, although we only focus on the supremum functional.

It should be emphasized that our approximation problem is different from the problem of approximating the wholeU-process \(\{{\mathbb {U}}_n(h) : h \in {\mathcal {H}}\}\). In testing monotonicity of nonparametric regression functions, [25] consider a test statistic defined by the supremum of a bounded U-process of order-two and derive a Gaussian approximation result for the normalized U-process. Their idea is a two-step approximation procedure: first approximate the U-process by its Hájek process and then apply Rio’s coupling result [47], which is a Komlós–Major–Tusnády (KMT) [36] type strong approximation for empirical processes indexed by Vapnik-Červonenkis (VC) type classes of functions. See also [35, 41] for extensions of the KMT construction to other function classes. It is worth noting that the two-step approximation of U-processes based on KMT type approximations in general requires more restrictive conditions on the function class and the underlying distribution in statistical applications. Our regularity conditions on the function class and the underlying distribution for the Gaussian and bootstrap approximations are easy to verify and are less restrictive than those required for KMT type approximations since we directly approximate the supremum of a U-process rather than the whole U-process; in fact, our approximation results can cover examples of statistical applications for which KMT type approximations are not applicable or difficult to apply; see Sect. 4 for details. In particular, both Gaussian and bootstrap approximation results of the present paper allow classes of functions with unbounded envelopes provided suitable moment conditions are satisfied.

To illustrate the general approximation results for suprema of U-processes, we consider the problem of testing qualitative features of the conditional distribution and regression functions in nonparametric statistics [1, 25, 38]. In Sect. 4, we propose a unified test statistic for specifications (such as monotonicity, linearity, convexity, concavity, etc.) of nonparametric functions based on the generalized local U-process (the name is inspired by [27]). Instead of attempting to establish a Gumbel type limiting distribution for the extreme-value test statistic (which is known to have slow rates of convergence; see [30, 46]), we apply the JMB to approximate the finite sample distribution of the proposed test statistic. Notably, the JMB is valid for a larger spectrum of bandwidths, allows for an unbounded envelope, and the size error of the JMB is decreasing polynomially fast in n, which should be contrasted with the fact that tests based on Gumbel approximations have size errors of order \(1/\log n\). It is worth noting that [38], who develop a test for the stochastic monotonicity based on the supremum of a (second-order) U-process and derive a Gumbel limiting distribution for their test statistic under the null, state a conjecture that a bootstrap resampling method would yield the test whose size error is decreasing polynomially fast in n [38, p. 594]. The results of the present paper formally solve this conjecture for a different version of bootstrap, namely, the JMB, in a more general setting. In addition, our general theory can be used to develop a version of the JMB test that is uniformly valid in compact bandwidth sets. Such “uniform-in-bandwidth” type results allow one to consider tests with data-dependent bandwidth selection procedures, which are not covered in [1, 25, 38].

1.1 Organization

The rest of the paper is organized as follows. In Sect. 2, we derive non-asymptotic Gaussian approximation error bounds for the U-process supremum in the non-degenerate case. In Sect. 3, we develop and study a jackknife multiplier bootstrap (with Gaussian weights) tailored to the U-process to further approximate the distribution of the U-process supremum in a data-dependent manner. In Sect. 4, we discuss applications of the general results developed in Sects. 2 and 3 to testing for qualitative features of nonparametric functions based on generalized local U-processes. In Sect. 5, we prove new multi-resolution and local maximal inequalities for degenerate U-processes with respect to the degeneracy levels of their kernel. These inequalities are key technical tools in the proofs for the results in the previous sections. In Sect. 6, we present the proofs for Sects. 2, 3. Appendix contains additional proofs, discussions, and auxiliary technical results.

1.2 Notation

For a nonempty set T, let \(\ell ^{\infty }(T)\) denote the Banach space of bounded real-valued functions \(f: T \rightarrow {\mathbb {R}}\) equipped with the sup norm \(\Vert f \Vert _{T} := \sup _{t \in T}|f(t)|\). For a pseudometric space (T, d), let \(N(T,d,\varepsilon )\) denote the \(\varepsilon \)-covering number for (T, d), i.e., the minimum number of closed d-balls with radius at most \(\varepsilon \) that cover T. See [53, Section 2.1] or [29, Section 2.3] for details. For a probability space \((T,{\mathcal {T}},Q)\) and a measurable function \(f: T \rightarrow {\mathbb {R}}\), we use the notation \(Q f := \int f dQ\) whenever the integral is defined. For \(q \in [1,\infty ]\), let \(\Vert \cdot \Vert _{Q,q}\) denote the \(L^{q}(Q)\)-seminorm, i.e., \(\Vert f \Vert _{Q,q} := (Q|f|^{q})^{1/q} := (\int |f|^{q} dQ)^{1/q}\) for finite q while \(\Vert f \Vert _{Q,\infty }\) denotes the essential supremum of |f| with respect to Q. For a measurable space \((S,{\mathcal {S}})\) and a positive integer r, \(S^{r} = S \times \cdots \times S\) (r times) denotes the product space equipped with the product \(\sigma \)-field \({\mathcal {S}}^{r}\). For a generic random variable Y (not necessarily real-valued), let \({\mathcal {L}}(Y)\) denote the law (distribution) of Y. For \(a,b \in {\mathbb {R}}\), let \(a \vee b = \max \{ a,b \}\) and \(a \wedge b = \min \{ a,b \}\). Let \(\lfloor a \rfloor \) denote the integer part of \(a \in {\mathbb {R}}\). “Constants” refer to finite, positive, and non-random numbers.

2 Gaussian approximation for suprema of U-processes

In this section, we derive non-asymptotic Gaussian approximation error bounds for the U-process supremum in the non-degenerate case, which is essential for establishing the bootstrap validity in Sect. 3. The goal is to approximate the supremum of the normalized U-process, \(\sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h)/r\), by the supremum of a suitable Gaussian process, and derive bounds on such approximations.

We first recall the setting. Let \(X_{1},\ldots ,X_{n}\) be i.i.d. random variables defined on a probability space \((\Omega ,{\mathcal {A}},{\mathbb {P}})\) and taking values in a measurable space \((S,{\mathcal {S}})\) with common distribution P. For a technical reason, we assume that S is a separable metric space and \({\mathcal {S}}\) is its Borel \(\sigma \)-field. For a given integer \(r \geqslant 2\), let \({\mathcal {H}}\) be a class of symmetric measurable functions \(h: S^{r} \rightarrow {\mathbb {R}}\) equipped with a symmetric measurable envelope H. Recall the U-process \(\{ U_{n}(h) : h \in {\mathcal {H}}\}\) defined in (1) and its normalized version \(\{ {\mathbb {U}}_{n}(h) : h \in {\mathcal {H}}\}\) defined in (2). In applications, the function class \({\mathcal {H}}\) may depend on n, i.e., \({\mathcal {H}}= {\mathcal {H}}_{n}\). However, in Sects. 2 and 3, we will derive non-asymptotic results that are valid for each sample size n, and therefore suppress the possible dependence of \({\mathcal {H}}= {\mathcal {H}}_n\) on n for the notational convenience.

We will use the following notation. For a symmetric measurable function \(h: S^{r} \rightarrow {\mathbb {R}}\) and \(k=1,\ldots ,r\), let \(P^{r-k}h\) denote the function on \(S^{k}\) defined by

whenever the latter integral exists and is finite for every \((x_{1},\ldots ,x_{k}) \in S^{k}\) (\(P^{0}h = h\)). Provided that \(P^{r-k}h\) is well-defined, \(P^{r-k}h\) is symmetric and measurable.

In this paper, we focus on the case where the function class \({\mathcal {H}}\) is VC (Vapnik-Červonenkis) type, whose formal definition is stated as follows.

Definition 2.1

(VC type class) A function class \({\mathcal {H}}\) on \(S^{r}\) with envelope H is said to be VC type with characteristics (A, v) if \( \sup _Q N({\mathcal {H}}, \Vert \cdot \Vert _{Q,2}, \varepsilon \Vert H\Vert _{Q,2}) \leqslant (A / \varepsilon )^v\) for all \(0 < \varepsilon \leqslant 1\), where \(\sup _{Q}\) is taken over all finitely discrete distributions on \(S^{r}\).

We make the following assumptions on the function class \({\mathcal {H}}\) and the distribution P.

- (PM)

The function class \({\mathcal {H}}\) is pointwise measurable, i.e., there exists a countable subset \({\mathcal {H}}' \subset {\mathcal {H}}\) such that for every \(h \in {\mathcal {H}}\), there exists a sequence \(h_k \in {\mathcal {H}}'\) with \(h_k \rightarrow h\) pointwise.

- (VC)

The function class \({\mathcal {H}}\) is VC type with characteristics \(A \geqslant (e^{2(r-1)}/16) \vee e\) and \(v \geqslant 1\) for envelope H. The envelope H satisfies that \(H \in L^{q}(P^{r})\) for some \(q \in [4,\infty ]\) and \(P^{r-k}H\) is everywhere finite for every \(k=1,\ldots ,r\).

- (MT)

Let \({\mathcal {G}}:= P^{r-1} {\mathcal {H}}:= \{ P^{r-1} h : h \in {\mathcal {H}}\}\) and \(G:=P^{r-1}H\). There exist (finite) constants

$$\begin{aligned} b_{{\mathfrak {h}}} \geqslant b_{{\mathfrak {g}}} \vee \sigma _{{\mathfrak {h}}} \geqslant b_{{\mathfrak {g}}} \wedge \sigma _{{\mathfrak {h}}} \geqslant {\overline{\sigma }}_{{\mathfrak {g}}} > 0 \end{aligned}$$such that the following hold:

$$\begin{aligned} \begin{aligned}&\Vert G\Vert _{P,q} \leqslant b_{{\mathfrak {g}}}, \qquad \sup _{g \in {\mathcal {G}}} \Vert g \Vert _{P,\ell }^{\ell } \leqslant {\overline{\sigma }}_{{\mathfrak {g}}}^2 b_{{\mathfrak {g}}}^{\ell -2}, \ \ell =2,3,4, \\&\Vert P^{r-2} H \Vert _{P^{2},q} \leqslant b_{{\mathfrak {h}}}, \ \text {and} \ \sup _{h \in {\mathcal {H}}} \Vert P^{r-2}h \Vert _{P^{2},\ell }^{\ell } \leqslant \sigma _{{\mathfrak {h}}}^{2} b_{{\mathfrak {h}}}^{\ell -2}, \ \ell =2,4, \end{aligned} \end{aligned}$$where q appears in Condition (VC).

Some comments on the conditions are in order. Conditions (PM), (VC), and (MT) are inspired by Conditions (A)–(C) in [15]. Condition (PM) is made to avoid measurability difficulties. Our definition of “pointwise measurability” is borrowed from Example 2.3.4 in [53]; [29, p. 262] calls a pointwise measurable function class a function class satisfying the pointwise countable approximation property. Condition (PM) ensures that, e.g., \(\sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h) = \sup _{h \in {\mathcal {H}}'} {\mathbb {U}}_{n}(h)\), so that \(\sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h)\) is a (proper) random variable. See [53, Section 2.2] for details.

Condition (VC) ensures that \({\mathcal {G}}\) is VC type as well with characteristics \(4\sqrt{A}\) and 2v for envelope \(G=P^{r-1}H\); see Lemma 5.4 ahead. Since \(G \in L^{2}(P)\) by Condition (VC), it is seen from Dudley’s criterion on sample continuity of Gaussian processes (see, e.g., [29, Theorem 2.3.7]) that the function class \({\mathcal {G}}\) is P-pre-Gaussian, i.e., there exists a tight Gaussian random variable \(W_P\) in \(\ell ^\infty ({\mathcal {G}})\) with mean zero and covariance function

Recall that a Gaussian process \(W= \{ W(g) : g \in {\mathcal {G}}\}\) is a tight Gaussian random variable in \(\ell ^{\infty }({\mathcal {G}})\) if and only if \({\mathcal {G}}\) is totally bounded for the intrinsic pseudometric \(d_{W}(g,g') = ({\mathbb {E}}[ (W(g)-W(g'))^{2}])^{1/2}, g,g' \in {\mathcal {G}}\), and W has sample paths almost surely uniformly \(d_{W}\)-continuous [53, Section 1.5]. In applications, \({\mathcal {G}}\) may depend on n and so the Gaussian process \(W_P\) (and its distribution) may depend on n as well, although such dependences are suppressed in Sects. 2 and 3. The VC type assumption made in Condition (VC) covers many statistical applications. However, it is worth noting that in principle, we can derive corresponding results for Gaussian and bootstrap approximations under more general complexity assumptions on the function class beyond the VC type, as our local maximal inequalities for the U-process in Theorem 5.1 ahead, which are key technical results in the proofs of the Gaussian and bootstrap approximation results, can cover more general function classes than VC type classes; but the resulting bounds would be more complicated and may not be clear enough. For the clarity of exposition, we focus on VC type function classes and present a Gaussian coupling bound for general function classes in “Appendix E”.

Condition (MT) imposes suitable moment bounds on the kernel and its Hájek projection. Specifically, this moment condition contains interpolated parameters which control the lower moments (i.e., \(L^{2}, L^{3}\), and \(L^{4}\) sizes) and the envelopes of \({\mathcal {H}}\) and \({\mathcal {G}}\).

Under these conditions on the function class \({\mathcal {H}}\) and the distribution P, we will first construct a random variable, defined on the same probability space as \(X_{1},\ldots ,X_{n}\), which is equal in distribution to \(\sup _{g \in {\mathcal {G}}} W_{P}(g)\) and “close” to \(Z_{n}\) with high-probability. To ensure such constructions, a common assumption is that the probability space is rich enough. For the sake of clarity, we will assume in Sects. 2 and 3 that the probability space \((\Omega ,{\mathcal {A}},{\mathbb {P}})\) is such that

where \(X_{1},\ldots ,X_{n}\) are the coordinate projections of \((S^{n},{\mathcal {S}}^{n},P^{n})\), multiplier random variables \(\xi _{1},\ldots ,\xi _{n}\) to be introduced in Sect. 3 depend only on the “second” coordinate \((\Xi ,{\mathcal {C}},R)\), and U(0, 1) denotes the uniform distribution (Lebesgue measure) on (0, 1) (\({\mathcal {B}}(0,1)\) denotes the Borel \(\sigma \)-field on (0, 1)). The augmentation of the last coordinate is reserved to generate a U(0, 1) random variable independent of \(X_{1},\ldots ,X_{n}\) and \(\xi _{1},\ldots ,\xi _{n}\), which is needed when applying the Strassen–Dudley theorem and its conditional version in the proofs of Proposition 2.1 and Theorem 3.1; see “Appendix B” for the Strassen–Dudley theorem and its conditional version. We will also assume that the Gaussian process \(W_{P}\) is defined on the same probability space (e.g. one can generate \(W_{P}\) by the previous U(0, 1) random variable), but of course \(\sup _{g \in {\mathcal {G}}} W_{P}(g)\) is not what we want since there is no guarantee that \(\sup _{g \in {\mathcal {G}}}W_{P}(g)\) is close to \(Z_{n}\).

Now, we are ready to state the first result of this paper. Recall the notation given in Condition (MT) and define

with the convention that \(\sum _{k=3}^{r} =0\) if \(r=2\). The following proposition derives Gaussian coupling bounds for \(Z_{n} = \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h)/r\).

Proposition 2.1

(Gaussian coupling bounds) Let \(Z_n = \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_n(h)/r\). Suppose that Conditions (PM), (VC), and (MT) hold, and that \(K_{n}^{3} \leqslant n\). Then, for every \(n \geqslant r+1\) and \(\gamma \in (0,1)\), one can construct a random variable \({\widetilde{Z}}_{n,\gamma }\) such that \({\mathcal {L}}({\widetilde{Z}}_{n,\gamma }) = {\mathcal {L}}(\sup _{g \in {\mathcal {G}}} W_P(g))\) and

where \(C,C'\) are constants depending only on r, and

In the case of \(q=\infty \), “1 / q” is interpreted as 0.

In statistical applications, bounds on the Kolmogorov distance are often more useful than coupling bounds. For two real-valued random variables V, Y, let \(\rho (V,Y)\) denote the Kolmogorov distance between the distributions of V and Y, i.e., \(\rho (V,Y) := \sup _{t \in {\mathbb {R}}} | {\mathbb {P}}(V \leqslant t) - {\mathbb {P}}(Y \leqslant t)|\). To derive a Kolomogorov distance bound, we will assume that there exists a constant \({\underline{\sigma }}_{{\mathfrak {g}}} > 0\) such that

Condition (5) implies that the U-process is non-degenerate. For the notational convenience, let \({\widetilde{Z}} = \sup _{g \in {\mathcal {G}}} W_{P}(g)\).

Corollary 2.2

(Bounds on the Kolmogorov distance between \(Z_n\) and \(\sup _{g \in {\mathcal {G}}}W_{P}(g)\)) Assume that all the conditions in Proposition 2.1 and (5) hold. Then, there exists a constant C depending only on \(r, {\overline{\sigma }}_{{\mathfrak {g}}}\) and \({\underline{\sigma }}_{{\mathfrak {g}}}\) such that

In particular, if the function class \({\mathcal {H}}\) and the distribution P are independent of n, then \(\rho (Z_n, {\widetilde{Z}} )= O(\{ (\log n)^{7}/n \}^{1/8} )\).

Condition (5) is used to apply the “anti-concentration” inequality for the Gaussian supremum (see Lemma A.1), which is a key technical ingredient of the proof of Corollary 2.2. The dependence of the constant C on the variance parameters \({\underline{\sigma }}_{{\mathfrak {g}}}\) and \({\overline{\sigma }}_{{\mathfrak {g}}}\) is not a serious restriction in statistical applications. In statistical applications, the function class \({\mathcal {H}}\) is often normalized in such a way that each function \(g \in {\mathcal {G}}\) has (approximately) unit variance. In such cases, we may take \({\underline{\sigma }}_{{\mathfrak {g}}} = {\overline{\sigma }}_{{\mathfrak {g}}} = 1\) or \(({\underline{\sigma }}_{{\mathfrak {g}}},{\overline{\sigma }}_{{\mathfrak {g}}})\) as positive constants independent of n; see Sect. 4 for details.

Remark 2.1

(Comparisons with Gaussian approximations to suprema of empirical processes) Our Gaussian coupling (Proposition 2.1) and approximation (Corollary 2.2) results are level-dependent on the Hoeffding projections of the U-process \({\mathbb {U}}_n\) (cf. (17) and (18) for formal definitions of the Hoeffding projections and decomposition). Specifically, we observe that: (1) \({\underline{\sigma }}_{{\mathfrak {g}}}, {\overline{\sigma }}_{{\mathfrak {g}}}, b_{{\mathfrak {g}}}\) quantify the contribution from the Hájek (empirical) process associated with \({\mathbb {U}}_n\); (2) \(\sigma _{{\mathfrak {h}}}, b_{{\mathfrak {h}}}\) are related to the second-order degenerate component associated with \({\mathbb {U}}_n\); (3) \(\chi _n\) contains the effect from all higher order projection terms of \({\mathbb {U}}_n\). For statistical applications in Sect. 4 where the function class \({\mathcal {H}}= {\mathcal {H}}_n\) changes with n, the second and higher order projections terms are not necessarily negligible and we have to take into account the contributions of all higher order projection terms. Hence, the Gaussian approximation for the U-process supremum of a general order is not parallel with the approximation results for the empirical process supremum [14, 15].

3 Bootstrap approximation for suprema of U-processes

The Gaussian approximation results derived in the previous section are often not directly applicable in statistical applications such as computing critical values of a test statistic defined by the supremum of a U-process. This is because the covariance function of the approximating Gaussian process \(W_{P}(g), g \in {\mathcal {G}}\), is often unknown. In this section, we study a Gaussian multiplier bootstrap, tailored to the U-process, to further approximate the distribution of the random variable \(Z_{n} = \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}(h)/r\) in a data-dependent manner. The Gaussian approximation results will be used as building blocks for establishing validity of the Gaussian multiplier bootstrap.

We begin with noting that, in contrast to the empirical process case studied in [13, 15], devising (Gaussian) multiplier bootstraps for the U-process is not straightforward. From the Gaussian approximation results, the distribution of \(Z_{n}\) is well approximated by the Gaussian supremum \(\sup _{g \in {\mathcal {G}}} W_{P}(g)\). Hence, one might be tempted to approximate the distribution of \(\sup _{g \in {\mathcal {G}}}W_{P}(g)\) by the conditional distribution of the supremum of the the multiplier process

where \(\xi _{1},\ldots ,\xi _{n}\) are i.i.d. N(0, 1) random variables independent of the data \(X_{1}^{n} := \{ X_{1},\ldots ,X_{n} \}\) and \({\overline{g}} = n^{-1} \sum _{i=1}^{n} g(X_{i})\). However, a major problem of this approach is that, in statistical applications, functions in \({\mathcal {G}}\) are unknown to us since functions in \({\mathcal {G}}\) are of the form \(P^{r-1}h\) for some \(h \in {\mathcal {H}}\) and depend on the (unknown) underlying distribution P. Therefore, we must devise a multiplier bootstrap properly tailored to the U-process.

Motivated by this fundamental challenge, we propose and study the following version of Gaussian multiplier bootstrap. Let \(\xi _1,\ldots ,\xi _n\) be i.i.d. N(0, 1) random variables independent of the data \(X_1^n\) [these multiplier variables will be assumed to depend only on the “second” coordinate in the probability space construction (3)]. We introduce the following multiplier process:

where \(\sum _{(i,i_{2},\ldots ,i_{r})}\) is taken with respect to \((i_{2},\ldots ,i_{r})\) while keeping i fixed. The process \(\{ {\mathbb {U}}_n^\sharp (h) : h \in {\mathcal {H}}\}\) is a centered Gaussian process conditionally on the data \(X_{1}^{n}\) and can be regarded as a version of the (infeasible) multiplier process (6) with each \(g(X_{i})\) replaced by a jackknife estimate. In fact, the multiplier process (6) can be alternatively represented as

where \(\overline{P^{r-1}h} = n^{-1} \sum _{i=1}^{n} P^{r-1}h(X_{i})\). For \(x \in S\), denote by \(\delta _{x}\) the Dirac measure at x and denote by \(\delta _{x} h\) the function on \(S^{r-1}\) defined by \((\delta _{x} h)(x_{2},\ldots ,x_{r}) =h(x,x_{2},\ldots ,x_{r})\) for \((x_{2},\ldots ,x_{r}) \in S^{r-1}\). For each \(i=1,\ldots ,n\) and a function f on \(S^{r-1}\), let \(U_{n-1,-i}^{(r-1)}(f)\) denote the U-statistic with kernel f for the sample without the i-th observation, i.e.,

Then the proposed multiplier process (7) can be alternatively written as

that is, our multiplier process (7) replaces each \((P^{r-1}h)(X_{i})\) in the infeasible multiplier process (8) by its jackknife estimate \(U_{n-1,-i}^{(r-1)} (\delta _{X_{i}}h)\).

In practice, we approximate the distribution of \(Z_{n}\) by the conditional distribution of the supremum of the multiplier process \(Z_{n}^{\sharp }:=\sup _{h \in {\mathcal {H}}} {\mathbb {U}}_{n}^{\sharp }(h)\) given \(X_{1}^{n}\), which can be further approximated by Monte Carlo simulations on the multiplier variables.

To the best of our knowledge, our multiplier bootstrap method for U-processes is new in the literature, at least in this generality; see Remark 3.1 for comparisons with other bootstraps for U-processes. We call the resulting bootstrap method the jackknife multiplier bootstrap (JMB) for U-processes.

Now, we turn to proving validity of the proposed JMB. We will first construct couplings \(Z_{n}^{\sharp }\) and \({\widetilde{Z}}_{n}^{\sharp } := {\widetilde{Z}}_{n,\gamma }^{\sharp }\) (a real-valued random variable that may depend on the coupling error \(\gamma \in (0,1)\)) such that: 1) \({\mathcal {L}}({\widetilde{Z}}_{n}^{\sharp } \mid X_{1}^{n} ) = {\mathcal {L}} ( {\widetilde{Z}} )\), where \({\mathcal {L}}(\cdot \mid X_{1}^{n})\) denotes the conditional law given \(X_{1}^{n}\) (i.e., \({\widetilde{Z}}_{n}^{\sharp }\) is independent of \(X_{1}^{n}\) and has the same distribution as \({\widetilde{Z}} = \sup _{g \in {\mathcal {G}}}W_{P}(g)\)); and at the same time 2) \(Z_{n}^{\sharp }\) and \({\widetilde{Z}}_{n}^{\sharp }\) are “close” to each other. Construction of such couplings leads to validity of the JMB. To see this, suppose that \(Z_{n}^{\sharp }\) and \({\widetilde{Z}}_{n}^{\sharp }\) are close to each other, namely, \({\mathbb {P}}(|Z_{n}^{\sharp } - {\widetilde{Z}}_{n}^{\sharp }| > r_{1}) \leqslant r_{2}\) for some small \(r_{1},r_{2} > 0\). To ease the notation, denote by \({\mathbb {P}}_{\mid X_{1}^{n}}\) and \({\mathbb {E}}_{\mid X_{1}^{n}}\) the conditional probability and expectation given \(X_{1}^{n}\), respectively (i.e., the notation \({\mathbb {P}}_{\mid X_{1}^{n}}\) corresponds to taking probability with respect to the “latter two” coordinates in (3) while fixing \(X_{1}^{n}\)). Then,

by Markov’s inequality, so that, on the event \(\{ {\mathbb {P}}_{\mid X_{1}^{n}} (|Z_{n}^{\sharp } - {\widetilde{Z}}_{n}^{\sharp }| > r_{1}) \leqslant r_{2}^{1/2} \}\) whose probability is at least \(1-r_{2}^{1/2}\), for every \(t \in {\mathbb {R}}\),

and likewise \({\mathbb {P}}_{\mid X_{1}^{n}} (Z_{n}^{\sharp } \leqslant t) \geqslant {\mathbb {P}}({\widetilde{Z}} \leqslant t-r_{1} ) - r_{2}^{1/2}\). Hence, on that event,

The first term on the right hand side can be bounded by using the anti-concentration inequality for the supremum of a Gaussian process (cf. [14, Lemma A.1] which is stated in Lemma A.1 in “Appendix A”), and combining the Gaussian approximation results, we obtain a bound on the Kolmogorov distance between \({\mathcal {L}}(Z_{n}^{\sharp } \mid X_{1}^{n})\) and \({\mathcal {L}}(Z_{n})\) on an event with probability close to one, which leads to validity of the JMB.

The following theorem is the main result of this paper and derives bounds on such couplings. To state the next theorem, we need the additional notation. For a symmetric measurable function f on \(S^{2}\), define \(f^{\odot 2} = f^{\odot 2}_{P}\) by

Let \(\nu _{{\mathfrak {h}}} := \Vert (P^{r-2}H)^{\odot 2} \Vert _{P^{2},q/2}^{1/2}\).

Theorem 3.1

(Bootstrap coupling bounds) Let \(Z_n^\sharp = \sup _{h \in {\mathcal {H}}} {\mathbb {U}}_n^\sharp (h)\). Suppose that Conditions (PM), (VC), and (MT) hold. Furthermore, suppose that

Then, for every \(n \geqslant r+1\) and \(\gamma \in (0,1)\), one can construct a random variable \({\widetilde{Z}}_{n,\gamma }^\sharp \) such that \({\mathcal {L}}({\widetilde{Z}}_{n,\gamma }^\sharp \mid X_1^n) = {\mathcal {L}}(\sup _{g \in {\mathcal {G}}} W_P(g))\) and

where \(C, C'\) are constants depending only on r, and

In the case of \(q=\infty \), “1 / q” is interpreted as 0.

We note that \(\nu _{{\mathfrak {h}}}^{q} \leqslant \Vert P^{r-2}H\Vert _{P^{2},q}^{q} \leqslant b_{{\mathfrak {h}}}^{q}\), but in our applications \(\nu _{{\mathfrak {h}}} \ll b_{{\mathfrak {h}}}\) and this is why we introduced such a seemingly complicated definition for \(\nu _{{\mathfrak {h}}}\). To see that \(\nu _{{\mathfrak {h}}} \leqslant b_{{\mathfrak {h}}}\), observe that by the Cauchy–Schwarz and Jensen inequalities,

Condition (9) is not restrictive. In applications, the function class \({\mathcal {H}}\) is often normalized in such a way that \({\overline{\sigma }}_{{\mathfrak {g}}}\) is of constant order, and under this normalization, Condition (9) is a merely necessary condition for the coupling bound (10) to tend to zero.

The proof of Theorem 3.1 is lengthy and involved. A delicate part of the proof is to sharply bound the sup-norm distance between the conditional covariance function of the multiplier process \({\mathbb {U}}_{n}^{\sharp }\) and the covariance function of \(W_{P}\), which boils down to bounding the term

To this end, we make use of the following observation: for a \(P^{r-1}\)-integrable function f on \(S^{r-1}\), \(U_{n-1,-i}^{(r-1)}(f)\) is a U-statistic of order \((r-1)\), and denote by \(S_{n-1,-i}(f)\) its first Hoeffding projection term. Conditionally on \(X_{i}\), \(U_{n-1,-i}^{(r-1)} (\delta _{X_{i}}h) - P^{r-1}h(X_{i}) - S_{n-1,-i}(\delta _{X_{i}}h)\) is a degenerate U-process, and we will bound the expectation of the squared supremum of this term conditionally on \(X_{i}\) using “simpler” maximal inequalities (Corollary 5.6 ahead). On the other hand, the term \(n^{-1} \sum _{i=1}^{n} \{ S_{n-1,-i}(\delta _{X_{i}}h) \}^{2}\) is decomposed into

where the order of degeneracy of the latter term is 1, and we will apply “sharper” local maximal inequalities (Corollary 5.5 ahead) to bound the suprema of both terms. Such a delicate combination of different maximal inequalities turns out to be crucial to yield sharper regularity conditions for validity of the JMB in our applications. In particular, if we bound the sup-norm distance between the conditional covariance function of \({\mathbb {U}}_{n}^{\sharp }\) and the covariance function of \(W_{P}\) in a cruder way, then this will lead to more restrictive conditions on bandwidths in our applications, especially for the “uniform-in-bandwidth” results [cf. Condition (T5\('\)) in Theorem 4.4].

The following corollary derives a “high-probability” bound for the Kolmogorov distance between \({\mathcal {L}}( Z_{n}^{\sharp } \mid X_{1}^{n})\) and \({\mathcal {L}}({\widetilde{Z}})\) (here a high-probability bound refers to a bound holding with probability at least \(1 - C n^{-c}\) for some constants C, c).

Corollary 3.2

(Validity of the JMB) Suppose that Conditions (PM), (VC), (MT), and (5) hold. Let

with the convention that \(1/q = 0\) when \(q=\infty \). Then, there exist constants \(C,C'\) depending only on \(r, {\overline{\sigma }}_{{\mathfrak {g}}}\), and \({\underline{\sigma }}_{{\mathfrak {g}}}\) such that, with probability at least \(1-C \eta _{n}^{1/4}\),

If the function class \({\mathcal {H}}\) and the distribution P are independent of n, then \(\eta _{n}^{1/4}\) is of order \(n^{-1/16}\), which is polynomially decreasing in n but appears to be non-sharp. Sharper bounds could be derived by improving on \(\gamma ^{-3/2}\) in front of the \(n^{-1/4}\) term in (10). The proof of Theorem 3.1 consists of constructing a “high-probability” event on which, e.g., the sup-norm distance between the conditional covariance function of \({\mathbb {U}}_{n}^{\sharp }\) and the covariance function of \(W_{P}\) is small. To construct such a high-probability event, the current proof repeatedly relies on Markov’s inequality, which could be replaced by more sophisticated deviation inequalities. However, this is at the cost of more technical difficulties and more restrictive moment conditions. In addition, we derive a conditional UCLT for the JMB in “Appendix D” when \({\mathcal {H}}\) is fixed and P does not depend on n.

Remark 3.1

(Connections to other bootstraps) There are several versions of bootstraps for non-degenerateU-processes. The most celebrated one is the empirical bootstrap

where \(X_1^*,\ldots ,X_n^*\) are i.i.d. draws from the empirical distribution \(P_{n} = n^{-1} \sum _{i=1}^n \delta _{X_i}\) and \(V_n(h) = n^{-r} \sum _{i_{1},\ldots ,i_{r}=1}^{n} h(X_{i_1},\ldots ,X_{i_{r}})\) is the V-statistic associated with kernel h (cf. [5, 6, 11]). A slightly different bootstrap procedure

is proposed in [3]; see Remark 2.7 therein. If \({\mathcal {H}}= \{h\}\) is a singleton and the associated U-statistic \(U_{n}(h)\) is non-degenerate, then \({\mathbb {U}}_{n}^{\natural }(h)\) and \({\mathbb {U}}_n^*(h)\) are asymptotically equivalent in the sense that they have the same weak limit that is given by the centered Gaussian random variable \(W_{P}(P^{r-1}h)\); see Theorem 2.4 and Corollary 2.6 in [3]. Since the bootstrap \({\mathbb {U}}_{n}^{\natural }(h)\) can be viewed as the empirical bootstrap applied to a V-statistic estimate of the Hájek projection, i.e., \({\mathbb {U}}_{n}^{\natural }(h) =n^{-1/2} \sum _{i=1}^{n} (\delta _{X_{i}^{*}} - P_{n}) P_{n}^{r-1} h\), our JMB is connected to (but still different from) \({\mathbb {U}}_{n}^{\natural }(h)\) in the sense that we apply the multiplier bootstrap to a jackknife U-statistic estimate of the Hajek projection. Another example is the Bayesian bootstrap (with Dirichlet weights)

where \(w_{i} = \eta _{i} / (n^{-1} \sum _{j=1}^n \eta _{j})\) for \(i=1,\dots ,n\) and \(\eta _1,\dots ,\eta _n\) are i.i.d. exponential random variables with mean one (i.e., \((w_{1},\dots ,w_{n})\) follows a scaled Dirichlet distribution) independent of \(X_{1}^{n} = \{ X_{1},\dots ,X_{n} \}\) [39, 40, 48, 56]. If \({\mathcal {H}}\) is a fixed VC type function class and the distribution P is independent of n (hence the distribution of the approximating Gaussian process \(W_{P}\) is independent of n), then the conditional distributions (given \(X_1^n\)) of the empirical bootstrap process \(\{{\mathbb {U}}_n^*(h) : h \in {\mathcal {H}}\}\) and the Bayesian bootstrap process \(\{{\mathbb {U}}_n^\flat (h) : h \in {\mathcal {H}}\}\) (with Dirichlet weights) are known to have the same weak limit as the U-process \(\{r^{-1} {\mathbb {U}}_n(h) : h \in {\mathcal {H}}\}\), where the weak limit is the Gaussian process \(W_{P} \circ P^{r-1}\) in the non-degenerate case [5, 56]. The proposed multiplier process in (7) is also connected to the empirical and Baysian bootstraps (or more general randomly reweighted bootstraps) in the sense that the latter two bootstraps also implicitly construct an empirical process whose conditional covariance function is close to that of \(W_P\) under the supremum norm (cf. [11]). Recall that the conditional covariance function of \({\mathbb {U}}_n^\sharp \) can be viewed as a jackknife estimate of the covariance function of \(W_P\). For the special case where \(r = 2\) and \({\mathcal {H}}= {\mathcal {H}}_n\) is such that \(|{\mathcal {H}}_n| < \infty \) and \(|{\mathcal {H}}_n|\) is allowed to increase with n, [11] shows that the Gaussian multiplier, empirical and randomly reweighted bootstraps (\({\mathbb {U}}_n^\flat (h)\) with i.i.d. Gaussian weights \(w_i \sim N(1,1)\)) all achieve similar error bounds. In the U-process setting, it would be possible to establish finite sample validity for the empirical and more general randomly reweighted bootstraps, but this is at the price of a much more involved technical analysis which we do not pursue in the present paper.

4 Applications: testing for qualitative features based on generalized local U-processes

In this section, we discuss applications of the general results in the previous sections to generalized localU-processes, which are motivated from testing for qualitative features of functions in nonparametric statistics (see below for concrete statistical problems).

Let \(m \geqslant 1, r \geqslant 2\) be fixed integers and let \({\mathcal {V}}\) be a separable metric space. Suppose that \(n \geqslant r+1\), and let \(D_{i} = (X_{i},V_{i}), i=1,\dots ,n\) be i.i.d. random variables taking values in \({\mathbb {R}}^m \times {\mathcal {V}}\) with joint distribution P defined on the product \(\sigma \)-field on \({\mathbb {R}}^{m} \times {\mathcal {V}}\) (we equip \({\mathbb {R}}^{m}\) and \({\mathcal {V}}\) with the Borel \(\sigma \)-fields). The variable \(V_{i}\) may include some components of \(X_{i}\). Let \(\Phi \) be a class of symmetric measurable functions \(\varphi :{\mathcal {V}}^r \rightarrow {\mathbb {R}}\), and let \(L: {\mathbb {R}}^{m} \rightarrow {\mathbb {R}}\) be a (fixed) “kernel function”, i.e., an integrable function on \({\mathbb {R}}^{m}\) (with respect to the Lebesgue measure) such that \(\int _{{\mathbb {R}}^{m}} L(x)dx = 1\). For \(b > 0\) (“bandwidth”), we use the notation \(L_{b} (\cdot ) = b^{-m}L(\cdot /b)\). For a given sequence of bandwidths \(b_{n} \rightarrow 0\), let

where \({\mathcal {X}}\subset {\mathbb {R}}^{m}\) is a (nonempty) compact subset. Consider the U-process

which we call, following [27], the generalized localU-process. The indexing function class is \(\{ h_{n,\vartheta } : \vartheta \in \Theta \}\) which depends on the sample size n. The U-process \(U_{n}(h_{n,\vartheta })\) can be seen as a process indexed by \(\Theta \), but in general is not weakly convergent in the space \(\ell ^{\infty }(\Theta )\), even after a suitable normalization (an exception is the case where \({\mathcal {X}}\) and \(\Phi \) are finite sets, and in that case, under regularity conditions, the vector \(\{\sqrt{nb_{n}^{m}} (U_{n}(h_{n,\vartheta }) - P^{r}h_{n,\vartheta }) \}_{\vartheta \in \Theta }\) converges weakly to a multivariate normal distribution). In addition, we will allow the set \(\Theta \) to depend on n.

We are interested in approximating the distribution of the normalized version of this process

where \(c_{n}(\vartheta ) > 0\) is a suitable normalizing constant. The goal of this section is to characterize conditions under which the JMB developed in the previous section is consistent for approximating the distribution of \(S_{n}\) (more generally we will allow the normalizing constant \(c_{n}(\vartheta )\) to be data-dependent). There are a number of statistical applications where we are interested in approximating distributions of such statistics. We provide a couple of examples. All the test statistics discussed in Examples in 4.1 and 4.2 are covered by our general framework. In Examples 4.1 and 4.2, \(\alpha \in (0,1)\) is a nominal level.

Example 4.1

(Testing stochastic monotonicity) Let X, Y be real-valued random variables and denote by \(F_{Y \mid X}(y \mid x)\) the conditional distribution function of Y given X. Consider the problem of testing the stochastic monotonicity

Testing for the stochastic monotonicity is an important topic in a variety of applied fields such as economics [7, 23, 52]. For this problem, [38] consider a test for \(H_0\) based on a local Kendall’s tau statistic, inspired by [25]. Let \((X_{i},Y_{i}), i=1,\dots ,n\) be i.i.d. copies of (X, Y). Lee et al. [38] consider the U-process

where \(b_n \rightarrow 0\) is a sequence of bandwidths and \(\mathrm {sign}(x) = 1(x > 0) - 1(x < 0)\) is the sign function. They propose to reject the null hypothesis if \(S_n = \sup _{(x,y) \in {\mathcal {X}}\times {\mathcal {Y}}} U_n(x, y)/c_n(x)\) is large, where \({\mathcal {X}},{\mathcal {Y}}\) are subsets of the supports of X, Y, respectively and \(c_n(x) > 0\) is a suitable normalizing constant. Lee et al. [38] argue that as far as the size control is concerned, it is enough to choose, as a critical value, the \((1-\alpha )\)-quantile of \(S_{n}\) when X, Y are independent, under which \(U_{n}(x,y)\) is centered. Under independence between X and Y, and under regularity conditions, they derive a Gumbel limiting distribution for a properly scaled version of \(S_{n}\) using techniques from [45], but do not consider bootstrap approximations to \(S_{n}\). It should be noted that [38] consider a slightly more general setup than that described above in the sense that they allow \(X_{i}\) not to be directly observed but assume that estimated \(X_{i}\) are available, and also cover the case where X is multidimensional.

Example 4.2

(Testing curvature and monotonicity of nonparametric regression) Consider the nonparametric regression model \(Y = f(X) + \varepsilon \) with \({\mathbb {E}}[ \varepsilon \mid X] = 0\), where Y is a scalar outcome variable, X is an m-dimensional vector of regressors, \(\varepsilon \) is an error term, and f is the conditional mean function \(f(x) = {\mathbb {E}}[ Y \mid X=x]\). We observe i.i.d. copies \(V_{i} = (X_{i},Y_{i}), i=1,\dots ,n\) of \(V=(X,Y)\). We are interested in testing for qualitative features (e.g., curvature, monotonicity) of the regression function f.

Abrevaya and Jiang [1] consider a simplex statistic to test linearity, concavity, convexity of f under the assumption that the conditional distribution of \(\varepsilon \) given X is symmetric. To define their test statistics, for \(x_{1},\dots ,x_{m+1} \in {\mathbb {R}}^{m}\), let \(\Delta ^{\circ } (x_{1},\dots ,x_{m+1}) = \{ \sum _{i=1}^{m+1} a_{i} x_{i} : 0< a_{j} < 1, j=1,\dots ,m+1, \ \sum _{i=1}^{m+1}a_{i} = 1\}\) denote the interior of the simplex spanned by \(x_{1},\dots ,x_{m+1}\), and define \({\mathcal {D}}= \bigcup _{j=1}^{m+2} {\mathcal {D}}_{j}\), where

The sets \({\mathcal {D}}_{1},\dots ,{\mathcal {D}}_{m+2}\) are disjoint. For given \(v_{i}=(x_{i},y_{i}) \in {\mathbb {R}}^{m} \times {\mathbb {R}}, i=1,\dots ,m+2\), if \((x_{1},\dots ,x_{m+2}) \in {\mathcal {D}}\) then there exist a unique index \(j=1,\dots ,m+2\) and a unique vector \((a_{i})_{1 \leqslant i \leqslant m+2,i \ne j}\) such that \(0< a_{i} < 1\) for all \(i \ne j, \sum _{i \ne j} a_{i}=1\), and \(x_{j}=\sum _{i \ne j} a_{i} x_{i}\); then, define \(w(v_{1},\dots ,v_{m+2}) = \sum _{i \ne j} a_{i} y_{i} - y_{j}\). The index j and vector \((a_{i})_{1 \leqslant i \leqslant m+2,i \ne j}\) are functions of \(x_{i}\)’s. The set \({\mathcal {D}}\) is symmetric (i.e., its indicator function is symmetric) and \(w(v_{1},\dots ,v_{m+2})\) is symmetric in its arguments.

Under this notation, [1] consider the following localized simplex statistic

where \(\varphi (v_1, \dots , v_{m+2}) = 1\{(x_1,\dots ,x_{m+2}) \in {\mathcal {D}}\} \mathrm {sign}(w(v_1,\dots ,v_{m+2}))\), which is a U-process of order \((m+2)\). To test concavity and convexity of f, [1] propose to reject the hypotheses if \({\overline{S}}_n = \sup _{x \in {\mathcal {X}}} U_n(x)/c_n(x)\) and \({\underline{S}}_n = \inf _{x \in {\mathcal {X}}} U_n(x)/c_n(x)\) are large and small, respectively, where \({\mathcal {X}}\) is a subset of the support of X and \(c_n(x) > 0\) is a suitable normalizing constant. The infimum statistic \({\underline{S}}_n\) can be written as the supremum of a U-process by replacing \(\varphi \) with \(-\varphi \), so we will focus on \({\overline{S}}_{n}\). Precisely speaking, they consider to take discrete deign points \(x_{1},\dots ,x_{G}\) with \(G = G_{n} \rightarrow \infty \), and take the supremum or infimum on the discrete grids \(\{ x_{1},\dots ,x_{G} \}\). Abrevaya and Jiang [1] argue that as far as the size control is concerned, it is enough to choose, as a critical value, the \((1-\alpha )\)-quantile of \({\overline{S}}_{n}\) when f is linear, under which \(U_{n}(x)\) is centered due to the symmetry assumption on the distribution of \(\varepsilon \) conditionally on X. Under linearity of f, [1, Theorem 6] claims to derive a Gumbel limiting distribution for a properly scaled version of \({\overline{S}}_{n}\), but the authors think that their proof needs a further justification. The proof of Theorem 6 in [1] proves that, in their notation, the marginal distributions of \({\widetilde{U}}_{n,h}(x_{g}^*)\) converge to N(0, 1) uniformly in \(g =1,\dots ,G\) (see their equation (A.1)), and the covariances between \({\widetilde{U}}_{n,h}(x_{g}^*)\) and \({\widetilde{U}}_{n,h}(x_{g'}^*)\) for \(g \ne g'\) are approaching zero faster than the variances, but what they need to show is that the joint distribution of \(({\widetilde{U}}_{n,h}(x_{1}^*),\dots ,{\widetilde{U}}_{n,h}(x_{G}^*))\) is approximated by \(N(0,I_{G})\) in a suitable sense, which is lacking in their proof. An alternative proof strategy is to apply Rio’s coupling [47] to the Hájek process associated to \(U_{n}\), but it seems non-trivial to apply Rio’s coupling since it is non-trivial to verify that the function \(\varphi \) is of bounded variation.

On the other hand, [25] study testing monotonicity of f when \(m=1\) and \(\varepsilon \) is independent of X. Specifically, they consider testing whether f is increasing, and propose to reject the hypothesis if \(S_{n} = \sup _{x \in {\mathcal {X}}} {\check{U}}_{n}(x)/c_{n}(x)\) is large, where \({\mathcal {X}}\) is a subset of the support of X,

and \(c_{n}(x) > 0\) is a suitable normalizing constant. Ghosal et al. [25] argue that as far as the size control is concerned, it is enough to choose, as a critical value, the \((1-\alpha )\)-quantile of \(S_{n}\) when \(f \equiv 0\), under which \(U_{n}(x)\) is centered. Under \(f \equiv 0\) and under regularity conditions, [25] derive a Gumbel limiting distribution for a properly scaled version of \(S_{n}\) but do not study bootstrap approximations to \(S_{n}\).

In Appendix F, we discuss some alternative tests in the literature for concavity/convexity and monotonicity of regression functions.

Now, we go back to the general case. In applications, a typical choice of the normalizing constant \(c_{n}(\vartheta )\) is \(c_n(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\) where \(\mathrm {Var}_{P}(\cdot )\) denotes the variance under P, so that each \(b_{n}^{m/2} c_{n}(\vartheta )^{-1}P^{r-1}h_{n,\vartheta }\) is normalized to have unit variance, but other choices (such as \(c_{n}(\vartheta ) \equiv 1\)) are also possible. The choice \(c_n(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\) depends on the unknown distribution P and needs to be estimated in practice. Suppose in general (i.e., \(c_n(\vartheta )\) need not to be \(b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\)) that there is an estimator \({\widehat{c}}_{n}(\vartheta ) = {\widehat{c}}_{n}(\vartheta ; D_{1}^{n}) > 0\) for \(c_{n}(\vartheta )\) for each \(\vartheta \in \Theta \), and instead of original \(S_{n}\), consider

We consider to approximate the distribution of \({\widehat{S}}_{n}\) by the conditional distribution of the JMB analogue of \({\widehat{S}}_{n}\): \({\widehat{S}}_{n}^{\sharp } := \sup _{\vartheta \in \Theta } b_{n}^{m/2}{\mathbb {U}}_{n}^{\sharp }(h_{n,\vartheta })/{\widehat{c}}_{n}(\vartheta )\), where

and \(\xi _{1},\dots ,\xi _{n}\) are i.i.d. N(0, 1) random variables independent of \(D_{1}^{n} = \{ D_{i} \}_{i=1}^{n}\). Recall that for a function f on \(({\mathbb {R}}^{m} \times {\mathcal {V}})^{r-1}\), \(U_{n-1,-i}^{(r-1)}(f)\) denotes the U-statistic with kernel f for the sample without the i-th observation, i.e., \(U_{n-1,-i}^{(r-1)} (f) = |I_{n-1,r-1}|^{-1} \sum _{(i,i_{2},\dots ,i_{r}) \in I_{n,r}} f(D_{i_{2}},\dots ,D_{i_{r}})\).

Let \(\zeta , c_{1},c_{2}\), and \(C_{1}\) be given positive constants such that \(C_{1} >1\) and \(c_{2} \in (0,1)\), and let \(q \in [4,\infty ]\). Denote by \({\mathcal {X}}^{\zeta }\) the \(\zeta \)-enlargement of \({\mathcal {X}}\), i.e., \({\mathcal {X}}^{\zeta } := \{ x \in {\mathbb {R}}^{m} : \inf _{x' \in {\mathcal {X}}} | x - x' | \leqslant \zeta \}\) where \(|\cdot |\) denotes the Euclidean norm. Let \(\mathrm {Cov}_{P}(\cdot ,\cdot )\) and \(\mathrm {Var}_{P}(\cdot )\) denote the covariance and variance under P, respectively. For the notational convenience, for arbitrary r variables \(d_{1},\dots ,d_{r}\), we use the notation \(d_{k:\ell } = (d_{k},d_{k+1},\dots ,d_{\ell })\) for \(1 \leqslant k \leqslant \ell \leqslant r\). We make the following assumptions.

- (T1)

Let \({\mathcal {X}}\) be a non-empty compact subset of \({\mathbb {R}}^{m}\) such that its diameter is bounded by \(C_{1}\).

- (T2)

The random vector X has a Lebesgue density \(p(\cdot )\) such that \(\Vert p \Vert _{{\mathcal {X}}^{\zeta }} \leqslant C_{1}\).

- (T3)

Let \(L:{\mathbb {R}}^{m} \rightarrow {\mathbb {R}}\) be a continuous kernel function supported in \([-1,1]^m\) such that the function class \({\mathfrak {L}} := \{ x \mapsto L(ax+b) : a \in {\mathbb {R}}, b \in {\mathbb {R}}^{m} \}\) is VC type for envelope \(\Vert L \Vert _{{\mathbb {R}}^{m}} = \sup _{x \in {\mathbb {R}}^{m}}|L(x)|\).

- (T4)

Let \(\Phi \) be a pointwise measurable class of symmetric functions \({\mathcal {V}}^r \rightarrow {\mathbb {R}}\) that is VC type with characteristics (A, v) for a finite and symmetric envelope \({\overline{\varphi }} \in L^{q}(P^{r})\) such that \(\log A \leqslant C_{1}\log n\) and \(v \leqslant C_{1}\). In addition, the envelope \({\overline{\varphi }}\) satisfies that \(( {\mathbb {E}}[ {\overline{\varphi }}^{q} (V_{1:r}) \mid X_{1:r}=x_{1:r}] )^{1/q} \leqslant C_{1}\) for all \(x_{1:r} \in {\mathcal {X}}^{\zeta } \times \cdots \times {\mathcal {X}}^{\zeta }\) if q is finite, and \(\Vert {\overline{\varphi }} \Vert _{P^{r},\infty } \leqslant C_{1}\) if \(q=\infty \)

- (T5)

\(nb_{n}^{3mq/[2(q-1)]} \geqslant C_{1}n^{c_{2}}\) with the convention that \(q/(q-1) = 1\) when \(q=\infty \), and \(2m(r-1)b_{n} \leqslant \zeta /2\).

- (T6)

\(b_{n}^{m/2}\sqrt{\mathrm {Var}_{P} (P^{r-1}h_{n,\vartheta })} \geqslant c_{1}\) for all n and \(\vartheta \in \Theta \).

- (T7)

\(c_{1} \leqslant c_{n}(\vartheta ) \leqslant C_{1}\) for all n and \(\vartheta \in \Theta \). For each fixed n, if \(x_{k} \rightarrow x\) in \({\mathcal {X}}\) and \(\varphi _{k} \rightarrow \varphi \) pointwise in \(\Phi \), then \(c_{n}(x_{k},\varphi _{k}) \rightarrow c_{n}(x,\varphi )\).

- (T8)

With probability at least \(1-C_{1}n^{-c_{2}}\), \(\sup _{\vartheta \in \Theta } \left| \frac{{\widehat{c}}_n(\vartheta )}{c_n(\vartheta )}- 1\right| \leqslant C_1 n^{-c_2}\).

Some comments on the conditions are in order. Condition (T1) allows the set \({\mathcal {X}}\) to depend on n, i.e., \({\mathcal {X}}= {\mathcal {X}}_{n}\), but its diameter is bounded (by \(C_{1}\)). For example, \({\mathcal {X}}\) can be discrete grids whose cardinality increases with n but its diameter must be bounded (an implicit assumption here is that the dimension m is fixed; in fact the constants appearing in the following results depend on the dimension m, so that m should be considered as fixed). Condition (T2) is a mild restriction on the density of X. It is worth mentioning that V may take values in a generic measurable space, and even if V takes values in a Euclidean space, V need not be absolutely continuous with respect to the Lebesgue measure (we will often omit the qualification “with respect to the Lebesgue measure”). In Examples 4.1 and 4.2, the variable V consists of the pair of regressor vector and outcome variable, i.e., \(V=(X, Y)\) with Y being real-valued, and our conditions allow the distribution of Y to be generic. In contrast, [25, 38] assume that the joint distribution of X and Y have a continuous density (or at least they require the distribution function of Y to be continuous) and thereby ruling out the case where the distribution of Y has a discrete component. This is essentially because they rely on Rio’s coupling [47] when deriving limiting null distributions of their test statistics. Rio’s coupling is a powerful KMT [36] type strong approximation result for general empirical processes, but requires the underlying distribution to be defined on a hyper-cube and to have a density bounded away from zero on the hyper-cube. In contrast, our analysis is conditional on X and we only require some moment conditions and VC type conditions on the function class. Thus our JMB does not require Y to have a density for its validity and thereby having a wider applicability in this respect.

Condition (T3) is a standard regularity condition on kernel functions L. Sufficient conditions under which \({\mathfrak {L}}\) is VC type are found in [28, 29, 43]. Condition (T4) allows the envelope \({\overline{\varphi }}\) to be unbounded. Condition (T4) allows the function class \(\Phi \) to depend on n, as long as the VC characteristics A and v satisfy that \(\log A \leqslant C_{1}\log n\) and \(v \leqslant C_{1}\). For example, \(\Phi \) can be a discrete set whose cardinality is bounded by \(Cn^{c}\) for some constants \(c,C>0\). Condition (T5) relaxes bandwidth requirements in [25, 38] where \(m = 1\) and \(q = \infty \). For example, [25] assume \(n b_n^2 /(\log n)^{4} \rightarrow \infty \) and \(b_n \log n \rightarrow 0\) for size control. For the problem of testing for regression/stochastic monotonicity of univariate functions, our test statistic is of order \(r=2\). If we choose a bounded kernel (such as the sign kernel), then we only need \(n^{-2/3+c} \lesssim b_{n} \lesssim 1\) for some small constant \(c > 0\). Further, our general theory allows us to develop a version of the JMB that is uniformly valid in compact bandwidth sets, which can be used to develop versions of tests that are valid with data-dependent bandwidths in Examples 4.1 and 4.2; see Sect. 4.1 ahead for details.

Condition (T6) is a high-level condition and implies the U-process to be non-degenerate. Let \(\varphi _{[r-1]}(v_{1},x_{2:r}) := {\mathbb {E}}[\varphi (v_{1},V_{2:r}) \mid X_{2:r}=x_{2:r}] \prod _{j=2}^{r} p(x_{j})\), and observe that

for \(\vartheta = (x,\varphi )\), where \(x-b_{n}x_{2:r}= (x-b_{n}x_{2},\dots ,x-b_{n}x_{r})\). From this expression, in applications, it is not difficult to find primitive regularity conditions that guarantee Condition (T6). To keep the presentation concise, however, we assume Condition (T6).

Condition (T7) is concerned with the normalizing constant \(c_{n}(\vartheta )\). For the special case where \(c_n(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\), Condition (T7) is implied by Conditions (T4) and (T6). Condition (T8) is also a high-level condition, which together with (T7) implies that there is a uniformly consistent estimate \({\widehat{c}}_{n}(\vartheta )\) of \(c_{n}(\vartheta )\) in \(\Theta \) with polynomial error rates. Construction of \({\widehat{c}}_{n}(\vartheta )\) is quite flexible: for \(c_{n}(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\), one natural example is the jackknife estimate

The following lemma verifies that the jackknife estimate (13) obeys Condition (T8) for \(c_{n}(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}\). However, it should be noted that other estimates for this normalizing constant are possible depending on applications of interest; see [1, 25, 38].

Lemma 4.1

(Estimation error of the normalizing constant) Suppose that Conditions (T1)–(T7) hold. Let \(c_{n}(\vartheta ) = b_{n}^{m/2} \sqrt{\mathrm {Var}_{P}(P^{r-1}h_{n,\vartheta })}, \vartheta \in \Theta \) and \({\widehat{c}}_{n}(\vartheta )\) be defined in (13). Then there exist constants c, C depending only on \(r, m, \zeta , c_{1},c_{2}, C_{1}, L\) such that

Now, we are ready to state finite sample validity of the JMB for approximating the distribution of the supremum of the generalized local U-process.

Theorem 4.2

(JMB validity for the supremum of a generalized local U-process) Suppose that Conditions (T1)–(T8) hold. Then there exist constants c, C depending only on \(r, m, \zeta , c_{1},c_{2}, C_{1}, L\) such that the following holds: for every n, there exists a tight Gaussian random variable \(W_{P,n}(\vartheta ), \vartheta \in \Theta \) in \(\ell ^{\infty }(\Theta )\) with mean zero and covariance function

for \(\vartheta , \vartheta ' \in \Theta \), and it follows that

where \({\widetilde{S}}_{n}:=\sup _{\vartheta \in \Theta }W_{P,n}(\vartheta )\).

Theorem 4.2 leads to the following corollary, which is another form of validity of the JMB. For \(\alpha \in (0,1)\), let \(q_{{\widehat{S}}_{n}^\sharp }(\alpha ) = q_{{\widehat{S}}_{n}^\sharp }(\alpha ; D_{1}^{n})\) denote the conditional \(\alpha \)-quantile of \({\widehat{S}}_{n}^\sharp \) given \(D_{1}^{n}\), i.e., \(q_{{\widehat{S}}_{n}^\sharp } (\alpha ) = \inf \left\{ t \in {\mathbb {R}}: {\mathbb {P}}_{\mid D_{1}^{n}} ({\widehat{S}}_{n}^\sharp \leqslant t) \geqslant \alpha \right\} \).

Corollary 4.3

(Size validity of the JMB test) Suppose that Conditions (T1)–(T8) hold. Then there exist constants c, C depending only on \(r, m, \zeta , c_{1},c_{2}, C_{1}, L\) such that

4.1 Uniformly valid JMB test in bandwidth

A version of Theorem 4.2 continues to hold even if we additionally take the supremum over a set of possible bandwidths. For a given bandwidth \(b \in (0,1)\), let

and for a given candidate set of bandwidths \({\mathcal {B}}_n \subset [{\underline{b}}_n, {\overline{b}}_n]\) with \(0< {\underline{b}}_n \leqslant {\overline{b}}_n < 1\), consider

where \(c_{n}(\vartheta ,b) > 0\) is a suitable normalizing constant and \({\widehat{c}}(\vartheta ,b) > 0\) is an estimate of \(c(\vartheta ,b)\). Following a similar argument used in the proof of Theorem 4.2, we are able to derive a version of the JMB test that is also valid uniformly in bandwidth, which opens new possibilities to develop tests that are valid with data-dependent bandwidths in Examples 4.1 and 4.2. For related discussions, we refer the readers to Remark 3.2 in [38] for testing stochastic monotonicity and [22] for kernel type estimators.

Consider the JMB analogue of \({\widehat{S}}_{n}\):

Let \(\kappa _{n} = {\overline{b}}_n / {\underline{b}}_n\) denote the ratio of the largest and smallest possible values in the bandwidth set \({\mathcal {B}}_{n}\), which intuitively quantifies the size of \({\mathcal {B}}_{n}\). To ease the notation and to facilitate comparisons, we only consider \(q = \infty \). We make the following assumptions instead of Conditions (T5)–(T8).

- (T5\('\)):

\(n {\underline{b}}_{n}^{3m/2} \geqslant C_1 n^{c_2} \kappa _{n}^{m(r-2)}\), \(\kappa _{n} \leqslant C_1 {\underline{b}}_{n}^{-1/(2r)}\), and \(2m(r-1){\overline{b}}_{n} \leqslant \zeta /2\).

- (T6\('\)):

\(b^{m/2}\sqrt{\mathrm {Var}_{P} (P^{r-1}h_{\vartheta ,b})} \geqslant c_{1}\) for all n and \((\vartheta , b) \in \Theta \times {\mathcal {B}}_{n}\).

- (T7\('\)):

\(c_{1} \leqslant c_{n}(\vartheta ,b) \leqslant C_{1}\) for all n and \((\vartheta , b) \in \Theta \times {\mathcal {B}}_{n}\). For each fixed n, if \(x_{k} \rightarrow x\) in \({\mathcal {X}}\), \(\varphi _{k} \rightarrow \varphi \) pointwise in \(\Phi \), and \(b_k \rightarrow b\) in \({\mathcal {B}}_n\), then \(c_{n}(x_{k},\varphi _{k}, b_{k}) \rightarrow c_{n}(x,\varphi ,b)\).

- (T8\('\)):

With probability at least \(1 - C_1 n^{-c_2}\), \(\sup _{(\vartheta , b) \in \Theta \times {\mathcal {B}}_{n}} \left| \frac{{\widehat{c}}_n(\vartheta ,b)}{c_n(\vartheta ,b)} - 1\right| \leqslant C_1 n^{-c_2}\).

Theorem 4.4

(Bootstrap validity for the supremum of a generalized local U-process: uniform-in-bandwidth result) Suppose that Conditions (T1)–(T4) with \(q=\infty \), and Conditions (T5\('\))–(T8\('\)) hold. Then there exist constants c, C depending only on \(r, m, \zeta , c_{1},c_{2}, C_{1}, L\) such that the following holds: for every n, there exists a tight Gaussian random variable \(W_{P,n}(\vartheta ,b), (\vartheta , b) \in \Theta \times {\mathcal {B}}_n\) in \(\ell ^{\infty }(\Theta \times {\mathcal {B}}_n)\) with mean zero and covariance function

for \((\vartheta ,b), (\vartheta ',b') \in \Theta \times {\mathcal {B}}_{n}\), and the result (15) continues to hold with \({\widetilde{S}}_{n}:=\sup _{(\vartheta , b) \in \Theta \times {\mathcal {B}}_n}W_{P,n}(\vartheta ,b)\).

If \({\underline{b}}_{n} = {\overline{b}}_{n} = b_n\) (i.e., \({\mathcal {B}}_{n} = \{b_n\}\) is a singleton set), then Conditions (T5\('\))–(T8\('\)) reduce to (T5)–(T8) and Theorem 4.4 covers Theorem 4.2 with \(q = \infty \) as a special case. Condition (T5\('\)) states that the size of the bandwidth set \({\mathcal {B}}_{n}\) cannot be too large. Conditions (T6\('\))–(T8\('\)) are completely parallel with Conditions (T6)–(T8). Such “uniform-in-bandwidth” type results are not covered in [1, 25, 38].

4.2 A simulation study on testing for monotonicity of regression

We provide a numerical example to verify the size validity of the JMB test for monotonicity of regression in Example 4.2. We generate i.i.d. univariate covariates \(X_{1},\dots ,X_{n}\) from the uniform distribution on [0, 1] and consider the zero regression function \(f \equiv 0\) (which implies that the covariate X and the response Y are stochastically independent). As argued in [25], \(f \equiv 0\) is the hardest case in terms of size control under the null hypothesis \(H_{0} : f \text{ is } \text{ increasing } \text{ on } [0,1]\). We consider two error distributions: (i) Gaussian distribution \(\varepsilon _{i} \sim N(0, 0.1^{2})\); (ii) (scaled) Rademacher distribution \({\mathbb {P}}(\varepsilon _{i} = \pm 0.1) = 1/2\). For both error distributions, the (unnormalized) U-process \({\check{U}}_{n}(x)\) defined in (12) has mean zero (i.e., \({\mathbb {E}}[{\check{U}}_{n}(x)] = 0\) for all \(x \in [0,1]\)). The Rademacher distribution is not covered in [25]. We use the Epanechnikov kernel \(L(x) = 0.75(1-x^{2})\) for \(x \in [-1,1]\) and \(L(x) = 0\) otherwise, together with bandwidth parameter \(b_{n} = n^{-1/5}\). We consider three sample sizes \(n=100,200,500\). For each setup, we generate 2000 bootstrap samples. We consider test of the form

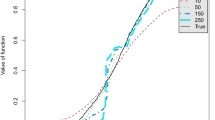

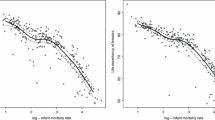

where \({\widehat{c}}_{n}(x)\) is given in (13) and the critical value q is calibrated by the JMB. In particular, for any nominal size \(\alpha \in (0, 1)\), the value of \(q := q(\alpha )\) is chosen as the \((1-\alpha )\)-th conditional quantile of the JBM. Empirical rejection probability of the JMB test is obtained by averaging over 5000 simulations. We observe that the empirical rejection probability is close to the nominal size of the JMB test. Table 1 shows the proportion of rejections at the nominal sizes \(\alpha =0.05, 0.10\), and Fig. 1 shows the JMB approximation of the proportion of rejections uniformly in \(\alpha \in (0, 1)\).

5 Local maximal inequalities for U-processes

In this section, we prove local maximal inequalities for U-processes, which are of independent interest and can be useful for other applications. These multi-resolution local maximal inequalities are key technical tools in proving the results stated in the previous sections.

We first review some basic terminologies and facts about U-processes. For a textbook treatment on U-processes, we refer to [18]. Let \(r \geqslant 1\) be a fixed integer and let \(X_{1},\dots ,X_{n}\) be i.i.d. random variables taking values in a measurable space \((S,{\mathcal {S}})\) with common distribution P.

Definition 5.1

(Kernel degeneracy; Definition 3.5.1 in [18]) A symmetric measurable function \(f: S^{r} \rightarrow {\mathbb {R}}\) with \(P^{r}f=0\) is said to be degenerate of order k with respect to P if \(P^{r-k}f(x_{1},\dots ,x_{k}) = 0\) for all \(x_{1},\dots ,x_{k} \in S\). In particular, f is said to be completely degenerate if f is degenerate of order \(r-1\), and f is said to be non-degenerate if f is not degenerate of any positive order.

Let \({\mathcal {F}}\) be a class of symmetric measurable functions \(f: S^{r} \rightarrow {\mathbb {R}}\). We assume that there is a symmetric measurable envelope F for \({\mathcal {F}}\) such that \(P^{r}F^{2} < \infty \). Furthermore, we assume that each \(P^{r-k}F\) is everywhere finite. Consider the associated U-process

For each \(k=1,\dots ,r\), the Hoeffding projection (with respect to P) is defined by

The Hoeffding projection \(\pi _k f\) is a completely degenerate kernel of k variables. Then, the Hoeffding decomposition of \(U_{n}^{(r)}(f)\) is given by

In what follows, let \(\sigma _{k}\) be any positive constant such that \(\sup _{f \in {\mathcal {F}}} \Vert P^{r-k}f \Vert _{P^{k},2} \leqslant \sigma _{k} \leqslant \Vert P^{r-k}F \Vert _{P^{k},2}\) whenever \(\Vert PF^{r-k} \Vert _{P^{k},2} > 0\) (take \(\sigma _{k} = 0\) when \(\Vert P^{r-k} F \Vert _{P^{k},2} = 0\)), and let

where \(X_{(i-1)k+1}^{ik} = (X_{(i-1)k+1},\dots ,X_{ik})\).

We will assume certain uniform covering number conditions for the function class \({\mathcal {F}}\). For \(k=1,\dots ,r\), define the uniform entropy integral

where \(P^{r-k}{\mathcal {F}}= \{ P^{r-k}f : f \in {\mathcal {F}}\}\) and \(\sup _{Q}\) is taken over all finitely discrete distributions on \(S^k\). We note that \(P^{r-k}F\) is an envelope for \(P^{r-k}{\mathcal {F}}\). To avoid measurablity difficulties, we will assume that \({\mathcal {F}}\) is pointwise measurable. If \({\mathcal {F}}\) is pointwise measurable and \(P^{r} F < \infty \) (which we have assumed) then \(\pi _{k}{\mathcal {F}}:= \{ \pi _{k} f : f \in {\mathcal {F}}\}\) and \(P^{r-k}{\mathcal {F}}\) for \(k=1,\dots ,r\) are all pointwise measurable by the dominated convergence theorem.

Let \(\varepsilon _{1},\dots ,\varepsilon _{n}\) be i.i.d. Rademacher random variables such that \({\mathbb {P}}(\varepsilon _{i}=\pm 1)=1/2\). A real-valued Rademacher chaos variable of order k, X, is a polynomial of order k in the Rademacher random variables \(\varepsilon _{i}\) with real coefficients, i.e.,

where \(a, a_{i}, a_{i_{1} i_{2}}, \dots , a_{i_{1} \dots i_{k}} \in {\mathbb {R}}\). If only the monomials of degree k in the variables \(\varepsilon _{i}\) in X are not zero, then X is a homogeneous Rademacher chaos of order k; see Section 3.2 in [18].

Definition 5.2

(Rademacher chaos process of orderk; page 220 in [18]) A stochastic process \(X(t), t \in T\) is said to be a Rademacher chaos process of order k if for all \(s, t \in T\), the joint law of (X(s), X(t)) coincides with the joint law of two (not necessarily homogeneous) Rademacher chaos variables of order k.

In the remainder of this section, the notation \(\lesssim \) signifies that the left hand side is bounded by the right hand side up to a constant that depends only on r. Recall that \(\Vert \cdot \Vert _{{\mathcal {F}}} = \sup _{f \in {\mathcal {F}}} | \cdot |\).

Theorem 5.1

(Local maximal inequalities for U-processes) Suppose that \({\mathcal {F}}\) is poinwise measurable and that \(J_{k}(1) < \infty \) for \(k=1,\dots ,r\). Let \(\delta _{k} =\sigma _{k}/ \Vert P^{r-k} F \Vert _{P^{k},2}\) for \(k=1,\dots ,r\). Then

for every \(k=1,\dots ,r\). If \(\Vert P^{r-k} F \Vert _{P^{k},2} = 0\), then the right hand side is interpreted as 0.

The proof of Theorem 5.1 relies on the following lemma on the uniform entropy integrals.

Lemma 5.2

(Properties of the maps \(\delta \mapsto J_k(\delta )\)) Assume that \(J_{k} (1) < \infty \) for \(k=1,\dots ,r\). Then, the following properties hold for every \(k=1,\dots ,r\). (i) The map \(\delta \mapsto J_k(\delta )\) is non-decreasing and concave. (ii) For \(c \geqslant 1\), \(J_k(c \delta ) \leqslant c J_k(\delta )\). (iii) The map \(\delta \mapsto J_k(\delta ) / \delta \) is non-increasing. (iv) The map \((x,y) \mapsto J_k(\sqrt{x/y}) \sqrt{y}\) is jointly concave in \((x,y) \in [0,\infty ) \times (0,\infty )\).

Proof of Lemma 5.2

The proof is almost identical to [14, Lemma A.2] and hence omitted. \(\square \)

Proof of Theorem 5.1

Pick any \(k=1,\dots ,r\). It suffices to prove (20) when \(\Vert P^{r-k} F \Vert _{P^{k},2} > 0\) since otherwise there is nothing to prove (recall that we have assumed that \(P^{r} F^{2} < \infty \), which ensures that \(\Vert P^{r-k} F\Vert _{P^{k},2} < \infty \)). Let \(\varepsilon _{1},\dots ,\varepsilon _n\) be i.i.d. Rademacher random variables independent of \(X_1^n\). In addition, let \(\{ X_{i}^{j} \}\) and \(\{ \varepsilon _{i}^{j} \}\) be independent copies of \(\{ X_{i} \}\) and \(\{ \varepsilon _{i} \}\). From the randomization theorem for U-processes [18, Theorem 3.5.3] and Jensen’s inequality, we have

Conditionally on \(X_{1}^{n}\),

is a (homogeneous) Rademacher chaos process of order k. Denote by \({\mathbb {P}}_{I_{n,k}} = |I_{n,k}|^{-1} \sum _{(i_1,\dots ,i_k) \in I_{n,k}} \delta _{(X_{i_{1}},\dots ,X_{i_{k}})}\) the empirical distribution on all possible k-tuples of \(X_1^n\); then Corollary 3.2.6 in [18] yields

where \(\Vert \cdot \Vert _{\psi _{2/k} \mid X_{1}^{n}}\) denotes the Orlicz (quasi-)norm associated with \(\psi _{2/k}(u) = e^{u^{2/k}} - 1\) evaluated conditionally on \(X_{1}^{n}\). The \(\Vert \cdot \Vert _{\psi _{2/k} \mid X_{1}^{n}}\)-diameter of the function class \( {\mathcal {F}}\) is at most \(2\sigma _{I_{n,k}}\) with \(\sigma _{I_{n,k}}^{2} := \sup _{f \in {\mathcal {F}}} \Vert P^{r-k} f \Vert _{{\mathbb {P}}_{I_{n,k}},2}^{2}\). So, since the first moment is bounded by the \(\psi _{2/k}\)-(quasi)norm up to a constant that depends only on k (and hence r), by Corollary 5.1.8 in [18] together with Fubini’s theorem and a change of variables, we have

The last inequality follows from the definition of \(J_{k}\). Since \(J_k(\sqrt{x/y}) \sqrt{y}\) is jointly concave in \((x,y) \in [0,\infty ) \times (0,\infty )\) by Lemma 5.2 (iv), Jensen’s inequality yields

We shall bound \({\mathbb {E}}[\sigma _{I_{n,k}}^{2}]\). To this end, we will use Hoeffding’s averaging [49, Section 5.1.6]. Let

Then, the U-statistic \(\Vert P^{r-k} f \Vert _{{\mathbb {P}}_{I_{n,k}},2}^{2} = |I_{n,k}|^{-1} \sum _{I_{n,k}} (P^{r-k}f)^{2}(X_{i_{1}},\dots ,X_{i_{k}})\) is the average of the variables \(S_{f,k}(X_{j_{1}},\dots ,X_{j_{n}})\) taken over all the permutations \(j_{1},\dots ,j_{n}\) of \(1,\dots ,n\). Hence,

by Jensen’s inequality, so that \(z \leqslant {\widetilde{z}} := \sqrt{B_{n,k} / \Vert P^{r-k}F \Vert _{P^{k},2}^2}\). Since the blocks \(X_{(i-1)k+1}^{ik}, i=1,\dots ,m\) are i.i.d.,

where (1) follows from the triangle inequality, (2) follows from the symmetrization inequality [53, Lemma 2.3.1], (3) follows from the contraction principle [29, Corollary 3.2.2], and (4) follows from the Cauchy–Schwarz inequality. By (a version of) the Hoffmann-Jørgensen inequality to the empirical process [53, Proposition A.1.6],

The analysis of the expectation on the right hand side is rather standard. From the first half of the proof of Theorem 5.2 in [14] (or repeating the first half of this proof with \(r=k=1\)), we have

Since the integral on the right hand side is bounded by \(J_{k}({\widetilde{z}})\), we have

Therefore, we conclude that

By Lemma 5.2 (i) and applying [54, Lemma 2.1] with \(J(\cdot )= J_k(\cdot ), r=1, A^2 = \Delta ^2\), and \(B^2 = \Vert M_k\Vert _{{\mathbb {P}},2} / (\sqrt{n} \Vert P^{r-k} F \Vert _{P^{k},2})\), we have

Combining (21) and (22), we arrive at

We note that \(\Delta \geqslant \delta _k\) and recall that \(\delta _{k} =\sigma _{k}/\Vert P^{r-k} F \Vert _{P^{k},2}\). Since the map \(\delta \mapsto J_k(\delta )/\delta \) is non-increasing by Lemma 5.2 (iii), we have

In addition, since \(J_k(\delta _k) / \delta _k \geqslant J_k(1) \geqslant 1\), we have

Finally, since