Abstract

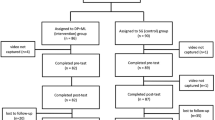

The emergency cricothyroidotomy (EC) is a critical procedure, and the high cost of failure increases the demand for evidence-based training methods. The aim of this study was to present and evaluate self-directed video-guided simulation training. Novice doctors were given an individual 1-h simulation training session. One month later, each participant performed an EC on a cadaver. All ECs were video recorded, and performance was rated with an assessment tool and compared with a pass/fail level for the EC. Interrater reliability was high (Pearson’s r = 0.88), and skill progressed significantly during training (p < 0.001). Eleven of 14 participants succeeded in creating an airway on the cadaver, in a median of 64 s (range 39–86 s), but only four achieved a passing score. Our 1-h training protocol successfully raised the competence level of novice doctors; however, it did not ensure that all participants attained proficiency.


Introduction

A critical element in management guidelines for situations in which intubation and oxygenation are not possible is the creation of a surgical airway, the emergency cricothyroidotomy (EC) [1–3]. EC is a high-risk procedure: a failed attempt and the loss of the airway are immediately life-threatening. The World Bank has recently defined EC as an essential surgical procedure, indicating its potential for addressing surgically avertable deaths [4]. Otolaryngology residents fear the procedure because of its unpredictability and the hectic nature of the cannot intubate/cannot oxygenate scenario [5]. Training in EC is therefore a high priority in all departments that receive trauma patients or are likely to encounter patients with an unsuspected difficult airway, such as Departments of Emergency Medicine and Otolaryngology Head and Neck Surgery. Most ECs are performed by anesthesiologists, trauma surgeons, or head and neck surgeons; although no comprehensive data exist on the prevalence of ECs across all parts of the healthcare system, educational efforts should be directed toward these groups [6, 7].

Despite its notoriety, EC is very rarely performed. During routine general anaesthesia, the incidence is as low as 0.002 % [6]. In emergency medicine, the number is higher: of patients requiring a controlled airway by intubation, 1 % in emergency rooms and 10 % in pre-hospital settings end up needing a surgical airway [7]. The procedure’s rarity rules out a see-one, do-one approach to education. Developing competence through supervised performance and routine repetition is not feasible, and training programs for this procedure must reflect these challenges.

Simulation training can be valuable for learning procedures such as the emergency surgical airway [8–10], because it is readily available and can be repeated. It offers junior doctors a safe environment in which to gain familiarity with the procedure. Simulation-based training programs have been shown to be more effective than non-simulation education (lecture or video presentation) [11]. However, supervised training of junior doctors in surgical procedures, whether on patients or simulators, is costly and time-consuming. With limited working and training hours and limited budgets, new ways of teaching must be explored. Self-directed simulation training that uses video instruction, with no faculty present, can be a cost-effective method for teaching basic surgical skills [12, 13].

This study aimed to explore the feasibility and effect of teaching emergency cricothyroidotomy to novice doctors using only an instructional video and self-directed training on a low-fidelity model.

The research questions were:

1. What is the effect of self-directed video-guided simulation-based training on the EC competency of novice doctors?
2. Can self-directed, video-guided, simulation-based training be sufficient to achieve competency in emergency cricothyroidotomy?

Materials and methods

Participants

Sixteen house doctors (interns) without prior training in head and neck surgery were recruited from four community hospitals and included in the study.

The procedure

We chose the rapid four-step technique (RFST) for performing EC [14], a simple technique that has been well tested in clinical settings and found to be fast and efficient [6].

Simulation-based training and testing

Each participant completed a 1-h individual session consisting of watching an instructional video on an iPad, “hands-on” training on a low-fidelity model, and an end-of-course test. The 3-min instructional video demonstrated the RFST procedure on a cadaver [15]. The model, an Airsim Advance Crico (Trucorp, Belfast, Northern Ireland), is an anatomically correct training model of the head and neck made from a polymer compound; it was available along with a tray of relevant instruments (Fig. 1). Participants were instructed to perform four RFST procedures, because a prior study suggested that skill levels on simple phantoms most likely plateau after that point [16]. Participants were allowed to review the instructional video as needed between their RFST attempts, but not during an attempt. No other instruction or supervision was provided. The end-of-course test was made more difficult by adding an extra layer of “tissue” to the model between the skin and the larynx. Each training attempt, as well as the end-of-course test, was video recorded.

Cadaver test

One month after completing the simulation-based training session, the participants were invited to attend a final training session. No detailed information was given on the nature of this second session. On arrival, each participant was immediately called to perform the procedure on an unembalmed cadaver with no preparation time. Each was given a tray with relevant instruments, and the procedure was timed and video recorded. Cadavers were not selected with the procedure in mind and showed natural anatomical variation; for example, some were obese and some had short necks. None had pretracheal pathology.

Assessment

Two blinded specialists, one a head and neck surgeon (BC) and one an anesthesiologist (MB) experienced in difficult airway management, reviewed all the video material and rated all four training attempts and the cadaver test using an OSATS-based rating scale (Table 1) with established evidence of validity [17, 18]. Both raters were experienced in using the rating scale. A previous study had defined a score above 12.2 points per minute as a passing score indicating proficiency in the EC procedure [17].
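A minimal Python sketch of the pass/fail rule described above follows. Because the rating scale is only summarized in Table 1, it assumes that the points-per-minute value is the total rating-scale score divided by the procedure time in minutes; the function names and the example scores are hypothetical and are not taken from the study data.

```python
# Passing level from the earlier assessment study: a score *above* this value passes.
PASS_THRESHOLD = 12.2  # points per minute


def score_per_minute(total_score: float, time_seconds: float) -> float:
    """Convert a total rating-scale score and procedure time into points per minute.

    Assumption (hypothetical): points per minute = total score / (time in seconds / 60).
    """
    if time_seconds <= 0:
        raise ValueError("procedure time must be positive")
    return total_score / (time_seconds / 60.0)


def is_passing(total_score: float, time_seconds: float) -> bool:
    """Return True only when the points-per-minute value exceeds the threshold."""
    return score_per_minute(total_score, time_seconds) > PASS_THRESHOLD


if __name__ == "__main__":
    # Hypothetical ratings, not study data.
    print(is_passing(20, 90))   # about 13.3 points per minute -> True
    print(is_passing(20, 120))  # 10.0 points per minute -> False
```

Under this assumption, 20 points earned in 90 s would pass, whereas the same 20 points earned in 120 s would not, which illustrates how a single metric can reward technique and speed together.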

Statistical analysis

We established the interrater reliability of the assessment by calculating Pearson’s r for the test scores. An interrater reliability above 0.8 is sufficient for summative assessment (high-stakes assessment with consequences) [19]. To assess score progression across training attempts, we used Friedman’s two-way analysis of variance by ranks for related samples. We used Pearson’s correlation to assess the relationship between the scores per minute of the last training attempt and the cadaver test. The statistical analysis was performed using a statistical software package (PASW, version 19.0; SPSS Inc, Chicago, IL).
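The interrater reliability check can be sketched in a few lines of Python. This is a minimal illustration, not the SPSS procedure the authors ran: it computes Pearson’s r from paired scores and applies the 0.8 threshold for summative assessment cited above. The function names and the example ratings are hypothetical.

```python
from math import sqrt
from typing import Sequence

# Threshold cited for summative (high-stakes) assessment.
SUMMATIVE_RELIABILITY = 0.8


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (n >= 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a rater's scores do not vary")
    return sxy / sqrt(sxx * syy)


def meets_summative_threshold(r: float) -> bool:
    """True when interrater reliability is above the 0.8 summative threshold."""
    return r > SUMMATIVE_RELIABILITY


if __name__ == "__main__":
    # Hypothetical ratings of the same five videos by two raters (not study data).
    rater_a = [10.0, 14.0, 9.0, 16.0, 12.0]
    rater_b = [11.0, 13.0, 9.5, 15.0, 12.5]
    r = pearson_r(rater_a, rater_b)
    print(round(r, 2), meets_summative_threshold(r))
```

The same function would apply to the second analysis, pairing each participant’s last-training-attempt score per minute with that participant’s cadaver-test score per minute.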

Ethical considerations

Information was given to participants in accordance with the standards of the National Committee on Health Research Ethics. According to standard practice, educational studies that do not involve patients are exempt from ethical approval. All participants received verbal and written information about the study, and all signed informed consent forms. All participants were anonymized and free to withdraw from the study at any time. None of the novice doctors worked at the same institution as the authors or had any professional relationship with them before or after the study. The unembalmed cadavers were obtained with approval from the University of Copenhagen through the Department of Cellular and Molecular Medicine.

Results

The interrater reliability was high, Pearson’s r = 0.88. The results of the training attempts are shown in Table 2 and Fig. 2. We found a significant progression of skills between the training attempts (p < 0.001).
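The progression test can be sketched in Python as follows. This is a simplified illustration that assumes one row of four attempt scores per participant: it ranks each participant’s scores, sums the ranks per attempt, and converts the Friedman statistic to a p-value using the closed-form chi-square survival function for 3 degrees of freedom (four attempts minus one). It omits the correction for tied ranks that statistical packages typically apply, so its output would not exactly match SPSS when ties occur; all names and example values are hypothetical.

```python
from math import erfc, exp, pi, sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank one participant's scores (1 = lowest), giving tied scores their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def friedman_statistic(scores: Sequence[Sequence[float]]) -> float:
    """Friedman chi-square statistic without tie correction.

    `scores` holds one row per participant and one column per attempt.
    """
    if not scores:
        raise ValueError("need at least one participant")
    n = len(scores)
    k = len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        if len(row) != k:
            raise ValueError("every participant needs the same number of attempts")
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    return 12 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)


def chi2_sf_3df(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom."""
    x = max(x, 0.0)  # guard against tiny negative values from rounding
    return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)


if __name__ == "__main__":
    # Hypothetical scores (points per minute) for four attempts; not study data.
    data = [[6.0, 8.5, 9.0, 11.0], [7.5, 7.0, 10.5, 12.5], [5.0, 9.0, 9.0, 10.0]]
    stat = friedman_statistic(data)
    print(stat, chi2_sf_3df(stat))
```

If every participant improved on every attempt, each row would be ranked 1, 2, 3, 4 and the statistic would equal three times the number of participants (48 for 16 participants), far beyond the 3-df critical value of about 7.81 at p = 0.05.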

The median age was 29 years (range 25–34 years); 10 participants were male and 6 were female.

Two participants did not attend the cadaver test. Their mean score on the last training attempt was 10.5, which was near the average.

Scores on the last training attempt and the cadaver test showed a moderate but highly significant correlation (r = 0.61, p < 0.001; Fig. 3).

Three of the 14 participants failed to secure an airway on the cadaver test; the remaining 11 succeeded in a median of 64 s (range 39–86 s). When applying a pass/fail level determined in an earlier study [17], we found that only 4 of the 14 participants achieved a passing score indicating proficiency in EC.

Discussion

The 1-h self-directed, simulation-based training using an instructional video successfully improved EC performance. The skill level achieved at the end of training correlated significantly with performance on a cadaver 1 month later. This was achieved cost-effectively, with inexpensive equipment and no senior faculty present. However, the improvement in skill was insufficient for most participants to achieve a test score above the predefined pass/fail level.

The high interrater correlation supports the reliability of the ratings and aligns well with an earlier study using the same assessment tool, which found an interrater reliability of 0.811 [7].

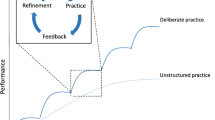

The skill progression of these complete novices from their first to their fourth training attempt indicates that the training was useful for acquiring basic competency, despite the lack of supervision. However, four unsupervised training attempts were insufficient for trainees to reach the plateau phase of their learning curves.

Two participants did not attend the cadaver test; however, their scores from the simulation training were similar to those of the other participants, so their absence probably did not affect our results.

We found only a moderate correlation between the fourth training attempt and the cadaver test. Previous studies of EC have shown that performance after training on low-fidelity models correlates well with performance in high-fidelity tests, and that retention can be maintained for up to a year [20, 21]. However, participants in these studies were residents or attending physicians in anesthesiology, whose initial skill levels were presumably far higher than those of our novice participants. When assessing learning, competence can be inferred from test performance outside the training scenario. A time delay between tests reveals whether subjects can reproduce their performance, showing that they “know what”, whereas a change in test model assesses their ability to apply their knowledge to a different but similar situation, showing that they “know how” [22]. In the studies mentioned, transfer or retention tests were conducted in a safe, familiar environment, either on the same model at different times or on different models with little time delay [10, 20, 21]. In our study, we tested subjects at a different time to assess retention, in a different context to assess transfer, and in a stressful environment to assess the stability of performance. For practical reasons, the delay before testing was only 1 month, which can be considered short for a rarely performed procedure. This is a limitation of the study, because a later follow-up test could have indicated the need for refresher courses. A later assessment would not, however, have altered the conclusions of this study regarding the immediate training effect.

When evaluating a new educational modality, we consider it relevant to perform a transfer test at the highest possible level of fidelity. This reduces the risk of training for the test rather than for real-life performance, that is, of improving performance on the simulator model rather than in real life. Fresh frozen cadavers must be considered the gold standard in EC skills assessment [23]. Because in real life EC must be performed under stressful conditions, we gave the participants the equivalent of a “mock call” [24], asking them to perform the procedure on the cadavers immediately, with no time to prepare. Even with a procedure as technically simple as the RFST, a stressful environment can be expected to affect any doctor’s performance, not least that of a novice house doctor. Testing that is realistic in context, environment, and the characteristics of the model used greatly increases the generalizability of the results.

When assessing competence in a time-sensitive procedure such as EC, it is tempting to view the time from “knife to airway” as the sole indicator of skill level [25]. From this viewpoint, 11 of our 14 novices succeeded in creating an airway within 86 s. However, there is a strong disconnect between the very positive outcomes on this indicator and clinical reality. In several simulation studies, success rates were between 90 and 100 % with times under 60 s [26–28], far better than reports from actual surveys of airway management, in which more than half of initial attempts at EC fail completely [6]. We believe that a score reflecting both technical proficiency and speed is superior to speed alone, as it considers the performer’s ability to use relevant techniques to complete the procedure even under difficult conditions, such as abnormal anatomy or bleeding. Taking this approach, we found that our training program was insufficient to bring most young doctors to a passing level on the cadaver test. Eleven of 14 were able to create an airway under these conditions, but five of these 11 succeeded despite flawed use of instruments and a failure to optimize conditions by dorsal flexion of the neck, resulting in lower and ultimately failing scores. These technical failures are cause for concern and indicate the need for further training even when the procedure is performed successfully. We believe that only four participants (29 %) demonstrated sufficient skill from which to infer competency in emergency cricothyroidotomy.

There are several options for adjusting the simulation program in light of these findings. First, four training attempts may be too few to achieve proficiency, and additional attempts could be added to the program; other programs indicate that five or more attempts improve outcome [16]. Additional attempts could be complemented by process goals: studies have shown that participants who adopt a process orientation and set explicit goals gain more from self-directed learning, particularly of procedural skills [29, 30]. It is also relevant to consider how feedback during training could have affected the outcome; the influence of feedback in simulation training has been examined previously [31]. EC is technically simple, but some guidance, even from non-experts, could conceivably improve the results. A recently published paper reported positive results using medical students as non-expert educational assistants or facilitators in a simulation setting [32]. Because physicians have different learning curves, we recommend training to a mastery performance level rather than for a fixed number of EC procedures [33, 34]. A prior study established a pass/fail score that can be applied in an end-of-course test to ensure physicians’ EC competence [17].
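The recommendation to train to a mastery level rather than for a fixed number of attempts can be sketched as a simple loop. The sketch is hypothetical: it uses the 12.2 points-per-minute passing level [17] as the mastery criterion and assumes, purely for illustration, that mastery means two consecutive passing attempts, with a cap on the total number of attempts; neither the two-pass rule nor the cap comes from this study.

```python
from typing import Callable, List, Tuple

# Passing level from the earlier assessment study, used here as a mastery criterion.
PASS_THRESHOLD = 12.2  # points per minute, must be exceeded


def train_to_mastery(
    perform_attempt: Callable[[int], float],
    required_consecutive: int = 2,  # hypothetical criterion, not from the study
    max_attempts: int = 20,         # hypothetical safety cap
) -> Tuple[bool, List[float]]:
    """Run attempts until the trainee passes `required_consecutive` times in a row.

    `perform_attempt` receives the 1-based attempt number and returns that
    attempt's score in points per minute. Returns (mastery_reached, scores).
    """
    scores: List[float] = []
    streak = 0
    for attempt in range(1, max_attempts + 1):
        score = perform_attempt(attempt)
        scores.append(score)
        # A failing attempt resets the run of consecutive passes.
        streak = streak + 1 if score > PASS_THRESHOLD else 0
        if streak >= required_consecutive:
            return True, scores
    return False, scores


if __name__ == "__main__":
    # Hypothetical learning curve in points per minute (not study data).
    curve = [8.0, 10.0, 13.0, 11.0, 13.0, 14.0, 15.0]
    reached, history = train_to_mastery(lambda n: curve[n - 1])
    print(reached, history)  # True [8.0, 10.0, 13.0, 11.0, 13.0, 14.0]
```

With this hypothetical learning curve, a fixed four-attempt program would stop after a failing fourth attempt (11 points per minute), whereas the mastery loop continues until the fifth and sixth attempts both pass.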

Self-directed video-guided simulation training significantly improved the performance scores of novice doctors and, considering the time spent and the general costs, should be part of an EC training program. Moreover, we believe that a self-directed training process can have a positive effect on motivation and on the initial acquisition of basic skills. However, it is important to acknowledge that our setup did not ensure that all participants reached the required competency level.

The self-directed, video-guided simulation-based training had a significant effect on novice doctors’ emergency cricothyroidotomy performance on cadavers. However, the majority of the physicians did not progress to the required competency level in emergency cricothyroidotomy with this educational intervention alone.

References

Henderson JJ, Popat MT, Latto IP, Pearce AC (2004) Difficult Airway Society guidelines for management of the unanticipated difficult intubation. Anaesthesia 59:675–694

Apfelbaum JL, Hagberg CA, Caplan RA et al (2013) Practice guidelines for management of the difficult airway: an updated report by the American Society of Anesthesiologists Task Force on Management of the Difficult Airway. Anesthesiology 118(2):251–270

Walls RM, Murphy MF (2012) Manual of emergency airway management, 4th edn. Lippincott Williams and Wilkins, Philadelphia, p 24

Mock CN, Donkor P, Gawande A et al (2015) Essential surgery: key messages from Disease Control Priorities, 3rd edn. Lancet 385(9983):2209–2219

Malekzadeh S, Malloy KM, Chu EE et al (2011) Emergencies boot camp: using simulation to onboard residents. Laryngoscope 121:2114–2121

Bair AE, Panacek EA, Wisner DH, Bales R, Sakles JC (2003) Cricothyrotomy: a 5-year experience at one institution. J Emerg Med 24(2):151

Cook TM, Woodall N, Frerk C (2011) Major complications of airway management in the UK: results of the fourth National Audit Project of the Royal College of Anaesthetists and the Difficult Airway Society. Part 1: anaesthesia. Br J Anaesth 106(5):617–631

Zirkle M, Blum R, Raemer DB, Healy G, Roberson DW (2005) Teaching emergency airway management using medical simulation: a pilot program. Laryngoscope 115:495–500

Reznek M, Harter P, Krummel T (2002) Virtual reality and simulation: training the future emergency physician. Acad Emerg Med 9:78–87

Jayaraman V, Feeney JM, Brautigam RT et al (2014) The use of simulation procedural training to improve self-efficacy, knowledge, and skill to perform cricothyroidotomy. Am Surg 80(4):377–381

Kennedy CC, Cannon EK, Warner DO, Cook DA (2014) Advanced airway management simulation training in medical education: a systematic review and meta-analysis. Crit Care Med 42(1):169–178

Spencer JA, Jordan RK (1999) Learner centred approaches in medical education. BMJ 318:1280–1283

Jowett N, LeBlanc V, Xeroulis G et al (2007) Surgical skill acquisition with self-directed practice using computer-based video training. Am J Surg 193:237–242

Brofeldt T, Panacek EA, Richards JR (1996) An easy cricothyrotomy approach: the rapid four-step technique. Acad Emerg Med 3:1060–1063

Melchiors J, Todsen T, Konge L, Charabi B, von Buchwald C (2016) Cricothyroidotomy—the emergency surgical airway. Head Neck 38(7):1129–1131

Wong DT, Prabhu AJ, Coloma M et al (2003) What is the minimum training required for successful cricothyroidotomy? Anesthesiology 98:349–353

Melchiors J, Todsen T, Nilsson P et al (2015) Preparing for emergency: a valid, reliable assessment tool for emergency cricothyroidotomy skills. Otolaryngol Head Neck Surg 152(2):260–265

Reznick RK, MacRae H (2006) Teaching surgical skills—changes in the wind. N Engl J Med 355:2664–2669

Downing SM (2004) Reliability: on the reproducibility of assessment data. Med Educ 38:1006–1012

Friedman Z, You-Ten KE, Bould MD et al (2008) Teaching lifesaving procedures: the impact of model fidelity on acquisition and transfer of cricothyrotomy skills to performance on cadavers. Anesth Analg 107:1663–1669

Boet S, Borges BCR, Naik VN et al (2011) Complex procedural skills are retained for a minimum of 1 year after a single high-fidelity simulation training session. Br J Anaesth 107(4):533–539

Broudy HS (1977) Types of knowledge and purposes of education. In: Anderson RC, Spiro RJ, Montague WE (eds) Schooling and the acquisition of knowledge. Erlbaum, Hillsdale, pp 1–17

Daniel M, Parkin IG, Bradley PJ (2006) Anatomy and head and neck surgery. ENT News 15:78–80

Villamaria FJ, Pliego JF, Wehbe-Janek H et al (2008) Using simulation to orient code blue teams to a new hospital facility. Simul Healthc 3(4):209–216

Shetty K, Nayyar V, Stachowski E et al (2013) Training for cricothyroidotomy. Anaesth Intensive Care 41:623–630

Sulaiman L, Tighe SQM, Nelson RA (2006) Surgical vs wire-guided cricothyroidotomy: a randomised crossover study of cuffed and uncuffed tracheal tube insertion. Anaesthesia 61:565–570

Vadodaria BS, Gandhi SD, McIndoe AK (2004) Comparison of four different emergency airway access equipment sets on a human patient simulator. Anaesthesia 59:73–99

Assmann NM, Wong DT, Morales E (2007) A comparison of a new indicator-guided with a conventional wire-guided percutaneous cricothyroidotomy device in mannequins. Anesth Analg 105:148–154

Brydges R, Nair P, Ma I et al (2012) Directed self-regulated learning versus instructor-regulated learning in simulation training. Med Educ 46:648–656

Brydges R, Carnahan H, Safir O et al (2009) How effective is self-guided learning of clinical technical skills? It’s all about process. Med Educ 43:507–515

Strandbygaard J, Bjerrum F, Maagaard M (2013) Instructor feedback versus no instructor feedback on performance in a laparoscopic virtual reality simulator: a randomized trial. Ann Surg 257(5):839–844

Vedel C, Bjerrum F, Mahmood B et al (2015) Medical students as facilitators for laparoscopic simulator training. J Surg Educ 72(3):446–451

Barsuk JH, McGaghie WC, Cohen ER, O’Leary KJ, Wayne DB (2009) Simulation-based mastery learning reduces complications during central venous catheter insertion in a medical intensive care unit. Crit Care Med 37(10):2697–2701

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R (2013) Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med 88:1178–1186

Acknowledgments

The authors thank Mikael J. V. Henriksen MD for technical assistance (Fig. 1) and Jørgen Tranum-Jensen, MD, professor in the Department of Cellular and Molecular Medicine at the University of Copenhagen, for access to the cadavers and for the collaboration in the anatomy laboratory.


Ethics declarations

Funding

No specific funding was received for this project.

Conflict of interest

All authors declare that no conflict of interest exists.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.


About this article

Cite this article

Melchiors, J., Todsen, T., Nilsson, P. et al. Self-directed simulation-based training of emergency cricothyroidotomy: a route to lifesaving skills. Eur Arch Otorhinolaryngol 273, 4623–4628 (2016). https://doi.org/10.1007/s00405-016-4169-0


DOI: https://doi.org/10.1007/s00405-016-4169-0