Abstract

Purpose

Patients screened for colorectal cancer (CRC) frequently turn to the Internet to improve their understanding of tests used for detection, including colonoscopy, flexible sigmoidoscopy, fecal occult blood test (FOBT), and CT colonography. This study aimed to determine the quality and readability of online health information about these tests.

Methods

Each screening test was searched on Google, and the top 20 results for each test were analyzed for readability, accessibility, usability, and reliability. The 80 articles analyzed did not include scientific literature or blogs. We used ten validated readability scales to measure grade levels, and one-way ANOVA with Tukey’s honestly significant difference (HSD) post hoc analysis to identify statistically significant differences among the four diagnostic tests. The LIDA tool was used to assess overall quality by measuring accessibility, usability, and reliability.

Results

The 80 articles were written at an average 11.7 grade level, with CT colonography articles written at significantly higher levels than FOBT articles, F(3, 75) = 3.07, p = 0.033. LIDA scores were in the moderate range for accessibility (83.9 %), usability (73.0 %), and reliability (75.9 %).

Conclusions

Online health information about CRC screening tools is written at higher levels than the third to seventh grade levels recommended by the National Institutes of Health (NIH) and American Medical Association (AMA). More patients could benefit from this information if it were written at a level and quality that better facilitate understanding.

Introduction

Colon cancer is the third most common cause of cancer death in the USA, with approximately 140,000 new cases of large bowel cancer diagnosed annually and more than 50,000 Americans expected to die of colorectal cancer each year [1]. Although colon cancer screening rates increased from 38 % in 1990 to 65 % in 2010, the screening rate among patients with low literacy (defined as below a high school level) remains under 50 % [2]. Consistent with this, Meissner et al. showed that colorectal cancer screening rates are higher in better-educated adults [3]. According to the health belief model [4], health information that addresses patients’ perceptions and beliefs about colorectal screening can increase adherence to screening guidelines [5].

The Internet is a widely accessible tool that patients use to gain a more comprehensive understanding of their conditions, diagnostic tests, and treatment plans. The Pew Internet and American Life Project reported that 66 % of the 128 million American adults who have Internet access use it to search for health information [6]. “The Social Life of Health Information” reported that, of the 74 % of American adults who use the Internet, 80 % have searched online for health topics, including specific diseases and treatments [7]. American adults also use the Internet to seek out the perspectives of others facing similar health problems, read reviews of recommended drugs, and evaluate online rankings of medical providers [8]. From 2002 to 2005, the percentage of Americans who reported that the Internet played a crucial role in coping with a major illness increased by 40 % [6].

With growing reliance on the Internet for health information, it is crucial to consider patients’ literacy, as it affects their ability to interpret health information [9]. Studies show that health literacy is an independent predictor of health-related quality of life [10]. Patients with low health literacy have less understanding of their health, worse health patterns [11], and lower life expectancies [12] than those with higher health literacy. Another study found that 15 % of adults with below basic health literacy, 31 % of those with basic health literacy, 40 % of those with intermediate health literacy, and 62 % of those with proficient health literacy rely on the Internet for information about health topics [13]. Poor health literacy has also been associated with higher overall health care costs [14], likely because of complications that require hospital intervention [15], more frequent hospitalizations, and poorer overall health [16].

Many national physician organizations fund websites with patient information about conditions, treatments, and procedures to improve patient understanding. Nevertheless, several studies show that such health information is written at a level too advanced for the average American to fully comprehend [7, 9, 13, 17–28]. Recent studies show that the average American reads at an eighth grade level, and with this in mind, the National Institutes of Health (NIH) and American Medical Association (AMA) recommend that patient education materials be written at a third to seventh grade level [29, 30].

This study investigates characteristics of online patient education articles about colorectal cancer screening tools that are relevant to health literacy. Specifically, we assess the readability, reliability, accessibility, and usability of these articles in an attempt to explain why colorectal cancer screening rates remain stagnant despite the growing use of the Internet for health information. Readability is defined as the level of difficulty of written text and corresponds to patient comprehension. Reliability indicates the accuracy of information, which includes the quality control used to monitor the information and whether the information reflects the most current knowledge. Accessibility measures the quality of web navigation, including whether the website opens in different browsers, works with automated tools, and provides access to its information. Usability refers to the ability of users to obtain the information they want from a website based on its design and structure [31]. We specifically evaluate three common tests (colonoscopy, flexible sigmoidoscopy, and the fecal occult blood test [FOBT]) as well as an additional tool gastroenterologists may use for screening, CT colonography. We also sought to determine whether there were any differences among the screening tools. To the best of our knowledge, this is the first study to examine the readability, accessibility, usability, and reliability of information about these colorectal screening tools from the websites that patients most commonly encounter online.

Methods

In October 2015, “colonoscopy”, “flexible sigmoidoscopy”, “fecal occult blood test”, and “CT colonography” were searched on google.com. The top 20 patient education results for each search term were copied into individual Word documents, for a total of 80 articles. Pictures and accompanying captions, references, and URLs were deleted to avoid introducing variables that could skew the results. Scientific literature was also excluded. The articles were analyzed with ten frequently used readability assessments to determine whether the text was written at an appropriate grade level. The validated tools were the Flesch reading ease (FRE) [11], Flesch-Kincaid grade level (FKGL) [32], simple measure of gobbledygook (SMOG) [12], Coleman-Liau index (CLI) [33], Gunning fog index (GFI) [34], New Dale-Chall (NDC) [35], FORCAST formula [36], Fry graph [17], Raygor readability estimate (RRE) [18], and new fog count (NFC) [35].
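Most of these tools are closed-form formulas over surface counts of sentences, words, syllables, and letters. The sketch below implements four of them (FKGL, GFI, SMOG, and CLI) with their commonly published coefficients, as an illustration of what such software computes; it is not the software used in this study. The helper names, the vowel-group syllable heuristic, and the punctuation-based sentence splitter are simplifying assumptions, and this version of the fog index counts every word of three or more syllables as complex, without the usual exclusions for proper nouns and common suffixes.

```python
import math
import re

# Hypothetical helpers for illustration; not the software used in the study.
_VOWEL_GROUPS = re.compile(r"[aeiouy]+")


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups and drop a silent final 'e'."""
    w = word.lower()
    n = len(_VOWEL_GROUPS.findall(w))
    if n > 1 and w.endswith("e") and not w.endswith("le"):
        n -= 1  # "make" -> 1 syllable, but "table" keeps 2
    return max(n, 1)


def text_stats(text: str) -> dict:
    """Count sentences, words, letters, syllables and 3+ syllable words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = [count_syllables(w) for w in words]
    return {
        "sentences": len(sentences),
        "words": len(words),
        "letters": sum(len(w) for w in words),
        "syllables": sum(syllables),
        "polysyllables": sum(1 for n in syllables if n >= 3),
    }


def flesch_kincaid_grade(s: dict) -> float:
    return 0.39 * s["words"] / s["sentences"] + 11.8 * s["syllables"] / s["words"] - 15.59


def gunning_fog(s: dict) -> float:
    return 0.4 * (s["words"] / s["sentences"] + 100 * s["polysyllables"] / s["words"])


def smog(s: dict) -> float:
    # SMOG is defined on a 30-sentence sample, so the count is scaled to 30.
    return 1.0430 * math.sqrt(s["polysyllables"] * 30 / s["sentences"]) + 3.1291


def coleman_liau(s: dict) -> float:
    letters_per_100 = 100 * s["letters"] / s["words"]
    sentences_per_100 = 100 * s["sentences"] / s["words"]
    return 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8


if __name__ == "__main__":
    sample = "A colonoscopy lets the doctor look inside your colon. It takes about an hour."
    stats = text_stats(sample)
    for name, fn in [("FKGL", flesch_kincaid_grade), ("GFI", gunning_fog),
                     ("SMOG", smog), ("CLI", coleman_liau)]:
        print(f"{name}: {fn(stats):.1f}")
```

Dedicated readability software uses dictionaries and more careful tokenization, so its scores on the same text will differ somewhat from this sketch.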

The FRE scores readability on a scale from 0 to 100, with higher scores indicating easier text. Scores fall into the following ranges: “very difficult” (0–30), “difficult” (31–50), “fairly difficult” (51–60), “standard” (61–70), “fairly easy” (71–80), “easy” (81–90), and “very easy” (91–100). The remaining nine tools estimate a US school grade level using different quantitative formulas (Table 1). Differences in readability among the four search terms were assessed with a one-way ANOVA, and post hoc comparisons were made with Tukey’s honestly significant difference (HSD) test.
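The FRE formula and the band labels listed above fit in a few lines. This is a hedged sketch: it takes pre-computed counts so that it does not depend on any particular syllable counter, and treating each band’s upper bound as inclusive for non-integer scores is our assumption, since the bands are stated in whole numbers.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Commonly published Flesch reading ease formula; higher means easier."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


# Upper bounds of the bands listed in the Methods. Treating each bound as
# inclusive (so 50.5 falls into "fairly difficult") is an assumption.
FRE_BANDS = [
    (30, "very difficult"),
    (50, "difficult"),
    (60, "fairly difficult"),
    (70, "standard"),
    (80, "fairly easy"),
    (90, "easy"),
]


def fre_band(score: float) -> str:
    """Map an FRE score to its descriptive band."""
    for upper, label in FRE_BANDS:
        if score <= upper:
            return label
    return "very easy"
```

Applied to the mean FRE of 46.5 reported in the Results, `fre_band` returns “difficult”.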

The LIDA instrument, developed by Minervation, was used by three independent evaluators (ESJ, AMJ, PJT) to measure reliability, accessibility, and usability [37]. LIDA is a widely used 54-item questionnaire for assessing medical websites [38–41]. Each question is scored from 0 to 3: 0 for “never,” 1 for “sometimes,” 2 for “mostly,” and 3 for “always.” The elements measured include browser compatibility, full-text availability, compliance with Internet standards, clarity, consistency, functionality, engageability, conflicts of interest, content production, and output of content. Item scores are automatically combined into percentage scores for the three domains: reliability, accessibility, and usability [31]. A score above 90 % is satisfactory, 50 to 90 % is moderate, and below 50 % is poor. Each investigator received a blank copy of the LIDA tool containing the individual questions, two pages describing the LIDA tool and its goals, and a separate data entry sheet listing the URL of each website. No responses were shared among the investigators while the articles were being assessed.
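The scoring rules above can be sketched as follows. The 0 to 3 item scale and the satisfactory, moderate, and poor thresholds come from this section; the aggregation (a domain’s percentage as its summed item scores divided by the maximum possible score, then averaged across raters) is an assumption for illustration, since the official LIDA tool computes its percentages automatically and its exact formula is not described here. The function names are hypothetical.

```python
from statistics import mean

MAX_ITEM_SCORE = 3  # 0 = never, 1 = sometimes, 2 = mostly, 3 = always


def domain_percentage(item_scores: list[int]) -> float:
    """Summed item scores as a percentage of the maximum (assumed aggregation)."""
    if not item_scores:
        raise ValueError("need at least one item score")
    if any(s not in (0, 1, 2, 3) for s in item_scores):
        raise ValueError("LIDA item scores must be 0, 1, 2 or 3")
    return 100 * sum(item_scores) / (MAX_ITEM_SCORE * len(item_scores))


def pooled_percentage(scores_by_rater: list[list[int]]) -> float:
    """Average one domain's percentage across independent raters (assumed)."""
    return mean(domain_percentage(s) for s in scores_by_rater)


def lida_rating(percentage: float) -> str:
    """Thresholds from the Methods: >90 satisfactory, 50-90 moderate, <50 poor."""
    if percentage > 90:
        return "satisfactory"
    if percentage >= 50:
        return "moderate"
    return "poor"
```

With these thresholds, the reported accessibility, usability, and reliability scores (83.9 %, 73.0 %, and 75.9 %) all map to “moderate.”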

Results

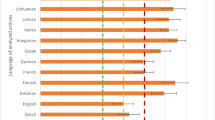

Collectively, the 80 articles were written at an average 11.7 grade level, and none was written below a seventh grade level. Over one third (36.3 %) of the articles were written above the 12th grade level, corresponding to a college reading level or higher. The lowest grade levels among the colonoscopy, flexible sigmoidoscopy, FOBT, and CT colonography articles were 8.0, 8.2, 7.3, and 8.8, respectively, and their average grade levels were 11.6, 11.4, 10.8, and 12.8, respectively (Fig. 1).
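Because each screening test contributed 20 articles, the overall mean should equal the average of the four group means. The short consistency check below uses only figures from this paragraph, assumes exactly 20 articles per test as described in the Methods, and keeps in mind that the group means are themselves rounded; the count of 29 articles is inferred from the 36.3 % figure rather than stated in the original.

$$
\bar{x}_{\text{all}} = \frac{20(11.6) + 20(11.4) + 20(10.8) + 20(12.8)}{80} = \frac{11.6 + 11.4 + 10.8 + 12.8}{4} = \frac{46.6}{4} = 11.65 \approx 11.7
$$

$$
0.363 \times 80 = 29.04 \;\Rightarrow\; 29 \text{ articles}, \qquad \frac{29}{80} = 0.3625 \approx 36.3\,\%
$$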

Readability assessment scores for all patient education articles. FRE Flesch reading ease, CLI Coleman-Liau index, NDC New Dale-Chall, FKGL Flesch-Kincaid grade level, GFI Gunning fog index, NFC new fog count, RRE Raygor readability estimate, SMOG simple measure of gobbledygook. This figure shows the mean reading levels of all the articles as measured with the nine grade-level readability scales (CLI, FORCAST, Fry, NDC, FKGL, GFI, NFC, RRE, and SMOG). The red line marks the 12th grade reading level, and the shaded area represents the NIH- and AMA-recommended third to seventh grade levels. The asterisks indicate that the average grade level of CT colonography articles is significantly higher than that of FOBT articles [F(3, 75) = 3.07, p = 0.033]

All of the readability tests indicated reading levels above the AMA/NIH-recommended third to seventh grade levels. On the FRE, most articles scored between 45 and 50, corresponding to “difficult,” with an average of 46.5 ± 15.0. The Fry graph (Fig. 2) produced the highest average grade level at 13.4 ± 3.2, and the NFC produced the lowest at 9.4 ± 3.2. By the CLI and NDC, the articles averaged grade levels of 11.4 ± 2.1 and 11.1 ± 2.8, respectively. The averages produced by each readability test for the four screening methods are shown in Table 2 and Fig. 1. One-way ANOVA showed a significant difference among the screening tools, F(3, 75) = 3.07, p = 0.033, and Tukey HSD post hoc analysis confirmed that CT colonography articles were written at a significantly more difficult level than FOBT articles (p < 0.05). No other pairwise differences were significant.
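For readers who want to see what the F statistic summarizes, a minimal pure-Python sketch of the one-way ANOVA follows; with k groups and N articles, the statistic has k − 1 and N − k degrees of freedom. Converting F to a p value and running Tukey’s HSD require the F and studentized range distributions, which this sketch omits; in practice a library such as SciPy (`scipy.stats.f_oneway`, `scipy.stats.tukey_hsd`) would typically be used. The function name and input layout (one list of per-article grade levels per screening test) are illustrative assumptions.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of each value around its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

Called with four lists of per-article grade levels, it returns F together with the two degrees of freedom, the quantities reported above in the form F(df_between, df_within).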

LIDA scores for accessibility, usability, and reliability were 83.9 %, 73.0 %, and 75.9 %, respectively. Scores for the individual screening tools are shown in Fig. 3. All three scores fall in the moderate range (50 to 90 %).

LIDA results of articles. This box-and-whisker plot depicts the LIDA scores for each screening tool. The boxes show the 25th, 50th, and 75th percentiles of the scores, and the whiskers show the maximum and minimum values. The points in the boxes represent the average LIDA percentage for each screening tool. The shaded blue box represents the 50–90 % range, corresponding to “moderate” quality. None of the values were poor (under 50 %), and the majority were moderate (between 50 and 90 %)

Discussion and conclusions

Colon cancer is preventable with adequate screening. Studies show that screening rates are higher in patients whose perceptions and beliefs about colorectal screening are addressed [5]. Approximately 84 million American adults use the Internet to search for health and medical information [6], and over 70 % of patients report that the knowledge they gain from the Internet influences their medical decisions [42]. It is therefore imperative that online information be written at a level that enhances patients’ understanding of colorectal cancer screening tests and increases screening rates.

We aimed to simulate the experience of a patient who returns from a doctor’s visit and searches for a medical term online. Google was chosen for the keyword searches because it is the most widely used search engine in the world, with 67.6 % of the US search engine market share [10]. The top 20 articles for each screening tool were chosen because they represent the resources a patient would most likely consult. They included society-sponsored sites such as MedlinePlus, the National Institutes of Health (NIH), the American College of Gastroenterology’s patient education and resource centers, and the American Society for Gastrointestinal Endoscopy, as well as other common health sites such as WebMD, Wikipedia, and MedicineNet. Scientific research articles were excluded because they are not written specifically for patient education and often contain highly technical medical jargon. The articles surveyed were collectively written at an 11.7 grade level, with no article written below a seventh grade level and over one third written above the 12th grade level, which corresponds to a college level or higher.

With over 100,000 new cases of large bowel cancer diagnosed annually, the implications of this study are important. Online sources can either help or harm the physician-patient relationship. If an article’s readability level is too high for the general patient population, it may raise more questions than it answers. This can leave the patient confused and distrustful of the physician, who may not be able to answer all of the patient’s questions in the time available during an appointment. Studies show that effective communication lowers patient anxiety and improves overall clinical outcomes [43]. If resources are written at a level that facilitates understanding of a condition, patients could use the information as a supplement to appointments and make informed decisions about the next steps in their care, such as undergoing screening to detect colon cancer or premalignant lesions.

Ten readability assessments were used to strengthen the validity of the results, since each test relies on different characteristics of the text to assign a grade level (Table 1). For instance, the FORCAST scale is most often used to assess non-narrative patient materials and ignores sentence structure. The NDC scale is often used to evaluate medical literature because it is based on a list of words familiar at a fourth grade level. These two tests produced average grade levels of 11.0 and 11.1, respectively, both higher than recommended.
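The FORCAST formula is simple enough to show in full: the grade level is 20 minus one tenth of the number of one-syllable words in a 150-word sample, with no sentence-length term at all. The sketch below uses a rough vowel-group syllable heuristic, and rescaling samples shorter than 150 words is our own assumption rather than part of the published procedure; the function names are hypothetical.

```python
import re

_VOWEL_GROUPS = re.compile(r"[aeiouy]+")
SAMPLE_SIZE = 150  # FORCAST is defined on a 150-word sample


def count_syllables(word: str) -> int:
    """Rough vowel-group heuristic with a silent-final-'e' adjustment."""
    w = word.lower()
    n = len(_VOWEL_GROUPS.findall(w))
    if n > 1 and w.endswith("e") and not w.endswith("le"):
        n -= 1
    return max(n, 1)


def forcast_grade(text: str) -> float:
    """FORCAST grade = 20 - N/10, N = one-syllable words per 150 words.

    Sentence boundaries are never consulted, which is why FORCAST suits
    non-narrative material such as lists and forms.
    """
    words = re.findall(r"[A-Za-z]+", text)[:SAMPLE_SIZE]
    if not words:
        raise ValueError("text contains no words")
    monosyllables = sum(1 for w in words if count_syllables(w) == 1)
    # Scale shorter samples up to the 150-word basis (assumption).
    n_per_150 = monosyllables * SAMPLE_SIZE / len(words)
    return 20 - n_per_150 / 10
```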

Readability assessments have inherent flaws, as does any tool used to quantify a subjective quality. The scales rely on sentence length, word length (in syllables or letters), and other surface features to estimate the complexity of a text; the meaning of the words and sentences is not interpreted. Thus, short words that the algorithms treat as simple may still confuse patients seeking health information. For instance, “fecal” and “biopsy” are short but still qualify as medical jargon and may fall outside common knowledge. Conversely, long words that are widely understood by the public could inappropriately raise the estimated grade level. In addition, the readability assessments do not consider word order, which could affect patient comprehension.
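The point about word meaning can be checked directly. In the sketch below, two hypothetical sentences differ only in “fecal” versus “simple”; both words have two syllables by the heuristic used here, so the Flesch-Kincaid grade level is identical even though only one sentence contains medical jargon. The sentences and the vowel-group syllable counter are illustrative assumptions.

```python
import re

_VOWEL_GROUPS = re.compile(r"[aeiouy]+")


def count_syllables(word: str) -> int:
    """Rough vowel-group heuristic with a silent-final-'e' adjustment."""
    w = word.lower()
    n = len(_VOWEL_GROUPS.findall(w))
    if n > 1 and w.endswith("e") and not w.endswith("le"):
        n -= 1
    return max(n, 1)


def fkgl(text: str) -> float:
    """Flesch-Kincaid grade level computed from surface counts only."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


jargon = "Your fecal test was normal."
everyday = "Your simple test was normal."
# Same sentence, word and syllable counts, so the same grade level,
# even though only "fecal" is medical jargon.
print(f"{fkgl(jargon):.2f} vs {fkgl(everyday):.2f}")  # prints: 2.88 vs 2.88
```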

For this reason, the LIDA instrument was used to complement these readability analyses by assessing the reliability, accessibility, and usability of the websites. Ensuring that a website is reliable, current, and unbiased is important for patients who consider online information when making health-related decisions. The reliability score of 75.9 % was in the moderate range (50–90 %). It could be improved by enforcing stricter guidelines for citing relevant sources and updating health information regularly. The accessibility score of 83.9 % was at the upper end of the moderate range. All of the articles assessed were freely available and did not require any form of registration, which makes the information more accessible. Most sites also presented their information in a traditional black-and-white format, which contributed to higher scores because all patients, including color-blind patients, could easily read the information. Offering an option to increase the font size could further improve this score by easing the online experience of visually impaired patients. Usability had the lowest score, 73.0 %, which was also in the moderate range. Although the site designs were clear, easy to navigate, and consistent from page to page, the websites often lacked interactive presentations of information such as videos, images, and simulations. If this aspect were improved, the overall quality of online health information about colorectal cancer screening tools might improve, and patients could benefit substantially.

A similar study conducted 2 years earlier evaluated colorectal cancer screening patient education articles for readability, health content, and suitability, which covers content, graphics, layout/typography, and learning stimulation [47]. Although our study was performed 2 years later and evaluated a broader set of articles from different perspectives (readability, reliability, accessibility, and usability), the conclusion is unchanged: online health information about colon cancer screening is still written at too high a grade level. Overall, the implications of this study are significant given the increasing use of the Internet in making health care decisions. If the health information on these websites were written at lower grade levels, it would help patients understand their medical conditions, tests, and treatments. This could improve the doctor-patient relationship, increase colon cancer screening rates, and ultimately reduce poor health outcomes. Future directions for this work include surveying patients about whether the information they find online helps or hinders their decision to undergo screening, which would show how well the results of these objective readability tools correspond to patients’ subjective perceptions of the information. It would also be of interest to determine how images and simulation videos or exercises affect patients’ understanding.

References

Siegel R, Ma J, Zou Z, Jemal A (2014) Cancer statistics, 2014. CA Cancer J Clin 64(1):9

Charnock D (1998) The DISCERN handbook: quality criteria for consumer health information on treatment choices

Cherla DV, Sanghvi S, Choudhry OJ, Liu JK, Eloy JA (2012) Readability assessment of internet-based patient education materials related to endoscopic sinus surgery. Laryngoscope 122(8):1649–1654. doi:10.1002/lary.23309

Coleman M, Liau TL (1975) A computer readability formula designed for machine scoring. J Appl Psychol 60:2

Colaco M, Svider PF, Agarwal N, Eloy JA, Jackson IM (2012) Readability assessment of online urology patient education materials. J Urol

Badarudeen S, Sabharwal S (2008) Readability of patient education materials from the American Academy of Orthopaedic Surgeons and Pediatric Orthopaedic Society of North America web sites. J Bone Joint Surg Am 90(1):199–204

Paasche-Orlow MK, Taylor HA, Brancati FL (2003) Readability standards for informed-consent forms as compared with actual readability. N Engl J Med 348(8):721–726

Badarudeen S, Sabharwal S (2010) Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res 468(10):2572–2580. doi:10.1007/s11999-010-1380-y

O’Connor A, Rostom A, Fiset V (1999) Decision aids for patients facing health treatment or screening decisions: systematic review. BMJ 319(7212):731–734

Berland GK, Elliott MN, Morales LS, Algazy JI, Kravitz RL, Broder MS, Kanouse DE, Munoz JA, Puyol J, Lara M, Watkins KE, Yang H, McGlynn EA (2001) Health information on the Internet: accessibility, quality, and readability in English and Spanish. JAMA 285(20):2612–2621

Sudore R, Mehta K, Simonsick E, Harris T, Newman A, Satterfield S (2006) Limited literacy in older people and disparities in health and healthcare access. J Am Geriatr Soc 54(5):770–776

McLaughlin GH (1969) SMOG grading: a new readability formula. J Read 12:8

Baker DW, Wolf MS, Feinglass J, Thompson JA, Gazmararian JA, Huang J (2007) Health literacy and mortality among elderly persons. Arch Intern Med 167(14):1503–1509

Chall JS (1995) Readability revisited: the new Dale-Chall readability formula. Brookline Books, Cambridge, MA

Brown JB, Weston WW, Stewart MA (1989) Patient-centred interviewing part II: finding common ground. Can Fam Physician 35:153–157

Bouton ME, Shirah GR, Nodora J, Pond E, Hsu CH, Klemens AE, et al. (2012) Implementation of educational video improves patient understanding of basic breast cancer concepts in an undereducated county hospital population. J Surg Oncol 105(1):48–54. doi:10.1002/jso.22046

Agarwal N, Chaudhari A, Hansberry DR, Tomei KL, Prestigiacomo CJ (2013) A comparative analysis of neurosurgical online education materials to assess patient comprehension. J Clin Neurosci. doi:10.1016/j.jocn.2012.10.047

Agarwal N, Sarris C, Hansberry DR, Lin MJ, Barrese JC, Prestigiacomo CJ (2013) Quality of patient education materials for rehabilitation after neurological surgery. NeuroRehabilitation 32(4):817–821. doi:10.3233/nre-130905

Eloy JA, Li S, Kasabwala K, Agarwal N, Hansberry DR, Baredes S, et al. (2012) Readability assessment of patient education materials on major otolaryngology association websites. Otolaryngol Head Neck Surg. doi:10.1177/0194599812456152

Hansberry DR, Agarwal N, Gonzales SF, Baker SR (2013) Are we effectively informing patients? A quantitative analysis of online patient education resources from the American Society of Neuroradiology. Am J Neuroradiol (in press)

Hansberry DR, Kraus C, Agarwal N, Baker SR, Gonzales SF (2013) Health literacy in vascular and interventional radiology: a comparative analysis of online patient education resources. Cardiovasc Intervent Radiol (in press)

Hansberry DR, Agarwal N, Shah R, Schmitt PJ, Baredes S, Setzen M, et al. (2013) Analysis of the readability of patient education materials from surgical subspecialties. Laryngoscope. doi:10.1002/lary.24261

Hansberry DR, Suresh R, Agarwal N, Heary RF, Goldstein IM (2013) Quality assessment of online patient education resources for peripheral neuropathy. J Peripher Nerv Syst 18(1):44–47. doi:10.1111/jns5.12006

Kasabwala K, Agarwal N, Hansberry DR, Baredes S, Eloy JA (2012) Readability assessment of patient education materials from the American Academy of Otolaryngology–Head and Neck Surgery Foundation. Otolaryngol Head Neck Surg 147(3):466–471. doi:10.1177/0194599812442783

Misra P, Agarwal N, Kasabwala K, Hansberry DR, Setzen M, Eloy JA (2012) Readability analysis of healthcare-oriented education resources from the American Academy of Facial Plastic and Reconstructive Surgery (AAFPRS). Laryngoscope. doi:10.1002/lary.23574

Agarwal N, Feghhi DP, Gupta R, Hansberry DR, Quinn JC, Heary RF, et al. (2014) A comparative analysis of minimally invasive and open spine surgery patient education resources. J Neurosurg Spine 21(3):468–474. doi:10.3171/2014.5.SPINE13600

Agarwal N, Hansberry DR, Singh PL, Heary RF, Goldstein IM (2014) Quality assessment of spinal cord injury patient education resources. Spine 39(11):E701–E704. doi:10.1097/BRS.0000000000000308

Hansberry DR, Ramchand T, Patel S, Kraus C, Jung J, Agarwal N, et al. (2014) Are we failing to communicate? Internet-based patient education materials and radiation safety. Eur J Radiol 83(9):1698–1702. doi:10.1016/j.ejrad.2014.04.013

National Institutes of Health (2007) How to write easy to read health materials. http://www.nlm.nih.gov/medlineplus/etr.html. Accessed July 29, 2014.

Dollahite J, Thompson C, McNew R (1996) Readability of printed sources of diet and health information. Patient Educ Couns 27(2):123–134

Minervation (2007) The Minervation validation instrument for healthcare websites (LIDA tool) [database on the Internet]. Accessed September 2012

Minervation (2007) The LIDA instrument: Minervation validation instrument for health care websites

Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs, American Medical Association (1999) Health literacy: report of the Council on Scientific Affairs. JAMA 281(6):552–557

Covering Kids & Families (2005) Health literacy style manual

Centers for Disease Control and Prevention (2009) Simply put: a guide for creating easy-to-understand materials, 3rd edn. US Department of Health and Human Services, Atlanta, GA

Agarwal N, Hansberry D, Sabourin V, Tomei K, Prestigiacomo C (2013) A comparative analysis of the quality of patient education materials from medical specialties. JAMA Intern Med:1–2. doi:10.1001/jamainternmed.2013.6060

Cotugna N, Vickery CE, Carpenter-Haefele KM (2005) Evaluation of literacy level of patient education pages in health-related journals. J Community Health 30(3):213–219

D’Alessandro DM, Kingsley P, Johnson-West J (2001) The readability of pediatric patient education materials on the world wide web. Arch Pediatr Adolesc Med 155(7):807–812

Davis TC, Williams MV, Marin E, Parker RM, Glass J (2002) Health literacy and cancer communication. CA Cancer J Clin 52(3):134–149

Diaz JA, Griffith RA, Ng JJ, Reinert SE, Friedmann PD, Moulton AW (2002) Patients’ use of the Internet for medical information. J Gen Intern Med 17:180–185

Doak CC, Doak LG, Friedell GH, Meade CD (1998) Improving comprehension for cancer patients with low literacy skills: strategies for clinicians. CA Cancer J Clin 48(3):151–162

Albright J, de Guzman C, Acebo P, Paiva D, Faulkner M, Swanson J (1996) Readability of patient education materials: implications for clinical practice. Appl Nurs Res 9(3):139–143

Baker DW, Parker RM, Williams MV, Clark WS, Nurss J (1997) The relationship of patient reading ability to self-reported health and use of health services. Am J Public Health 87(6):1027–1030

Acknowledgments

There were no sources of funding or support. There were no additional contributors to the study, and all authors contributed substantially to the study design, data accrual, analysis, and interpretation of the work, drafting and revision of the manuscript, and final approval of the version to be published.

Author contributions

ESJ, AMJ, PA, and DRH were involved in the study concept and design. ESJ, AMJ, and PJT were involved in acquisition of data. DRH and PJT were responsible for statistical analysis and interpretation of data. ESJ, AMJ, and PA drafted the manuscript. CD and SC provided critical revision of the manuscript for important intellectual content.

Ethics declarations

Disclosures

None of the authors have any disclosures.

Grant funding

N/A

Cite this article

John, E.S., John, A.M., Hansberry, D.R. et al. Colorectal cancer screening patient education materials—how effective is online health information? Int J Colorectal Dis 31, 1817–1824 (2016). https://doi.org/10.1007/s00384-016-2652-0
