Abstract

Purpose

The aim of this study was to identify (1) the types of skill evaluation methods and (2) how the effect of training was evaluated in simulation-based training (SBT) in pediatric surgery.

Methods

Databases of PubMed, Cochrane Library, and Web of Science were searched for articles published from January 2000 to January 2017. Search concepts of Medical Subject Heading terms were “surgery,” “pediatrics,” “simulation,” and “training, evaluation.”

Results

Of 5858 publications identified, 43 were included. Twenty papers described simulators as assessment tools for evaluating technical skills. These studies differentiated between experts and trainees using a scoring system (45%) and/or a checklist (25%). Twenty-three papers described simulators as training tools. Although training effectiveness was measured using performance assessment scales (52%) and/or surveys (43%), no study investigated improvement in clinical outcomes after SBT.

Conclusion

Scoring, time, and motion analysis methods were used to evaluate basic laparoscopic skills. Only a few SBT programs in pediatric surgery have definite goals linked to a clinical effect. Future research needs to demonstrate the educational effect of simulators, as assessment or training tools, in SBT for pediatric surgery.


Introduction

Simulation-based training (SBT) can improve safety for both patients and trainees. Transfer of skills from SBT to the clinical environment has been reported [1]. In pediatric education, a meta-analysis showed that SBT was a highly effective educational modality [2]. Compared with general surgery, pediatric surgery involves fewer cases and greater technical complexity because of the small working space, so particular care and safety are required; SBT can therefore play an important role in pediatric surgery. Indeed, the use of SBT in pediatric surgery has been expanding, and in recent years various simulators have emerged with advances in medical engineering technology [3]. To judge the effectiveness of training methods, competency assessment tools are essential. A recent systematic review of the validity and strength of SBT in pediatric surgery [4] described the validity and level of evidence of current SBT models and provided recommendations. However, the role of simulators as assessment tools and the effect of simulator-based training in pediatric surgery remain unclear. In this study, we aimed to examine the use of simulators in measuring surgical competence and to evaluate the effectiveness of SBT in pediatric surgery.

Methods

The conduct and reporting of this systematic review conformed to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.

Study search strategy

This study was designed with the help of librarians, to minimize sampling bias, and of a third party, to increase generalizability. Comprehensive literature searches of the PubMed, Cochrane Library, and Web of Science databases covered the period from January 2000 to July 2017. A broad search used four separate search concepts, each combining Medical Subject Heading terms with “OR,” to define the elements “surgery,” “pediatrics,” “simulation,” and “training, evaluation.” The results for the four concept areas were then combined using “AND.” Data saturation was achieved by hand-searching the papers’ reference lists.

Study extraction and data analysis

Two investigators independently reviewed all extracted studies. Studies were included if they reported SBT that evaluated participants’ performance and/or training effects. Inclusion criteria were SBT for residents, fellows, and faculty members using a trainer box, virtual reality simulator, physical simulator, cadaver, or animal model. The scope of eligible SBT was defined according to the Accreditation Council for Graduate Medical Education (ACGME) Program Requirements for Graduate Medical Education in Pediatric Surgery and the requirements of the Japanese Society of Pediatric Surgeons [5, 6]. No language restrictions were applied. We excluded studies that evaluated only the simulators themselves, as well as SBT for medical students, pharmacology, and analgesia. Letters to the editor, conference abstracts, and review articles were also excluded. Any discrepancies in interpretation were resolved by consensus. Studies were classified by training effect into the four levels of the Kirkpatrick model [7]: level 1, reaction (the trainee perceived value in the training); level 2, learning (the trainee’s knowledge or skill improved); level 3, behavioral change (the trainee’s behavior changed in the clinical environment); and level 4, results (the training affected patient outcomes).
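The four-level classification above can be expressed as a small lookup. The sketch below is illustrative only: the outcome labels in `OUTCOME_LEVEL` are hypothetical, and the rule that a study is assigned the highest level its outcomes support is an assumption, not a procedure stated by the authors.

```python
from enum import IntEnum


class Kirkpatrick(IntEnum):
    """Kirkpatrick's four training-evaluation levels."""
    REACTION = 1  # trainee perceived value in the training
    LEARNING = 2  # trainee's knowledge or skill improved
    BEHAVIOR = 3  # trainee's behavior changed in the clinical environment
    RESULTS = 4   # training affected patient outcomes


# Hypothetical outcome labels; real studies would need manual coding by reviewers.
OUTCOME_LEVEL = {
    "satisfaction_survey": Kirkpatrick.REACTION,
    "simulator_performance": Kirkpatrick.LEARNING,
    "clinical_performance": Kirkpatrick.BEHAVIOR,
    "patient_outcome": Kirkpatrick.RESULTS,
}


def classify(outcomes: list[str]) -> Kirkpatrick:
    """Assumed rule: a study takes the highest level any of its outcomes reaches."""
    if not outcomes:
        raise ValueError("study reports no training outcome")
    return max(OUTCOME_LEVEL[o] for o in outcomes)


# A study reporting a survey plus simulator scores is classified as level 2.
print(classify(["satisfaction_survey", "simulator_performance"]).name)  # LEARNING
```

Under this rule, every study in the review would map to `REACTION` or `LEARNING`, since none reported clinical-performance or patient-outcome measures.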

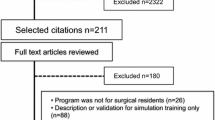

Results

A total of 5858 citations were retrieved, and 1390 duplicates were removed electronically. The remaining 4468 records were screened by title and content, and 67 articles underwent full-text review. Two additional articles were found by hand-searching reference lists, and data were ultimately extracted from 43 articles (Fig. 1). These reports came mainly from the US, Japan, and Canada (30%, 28%, and 16%, respectively), and 81% of the studies were published after 2010. The settings, measures, and resources of the selected studies are described below from two viewpoints: simulation for assessment and simulation for training.
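The selection counts above can be checked arithmetically. This sketch assumes that the two hand-searched articles were assessed in addition to the 67 full-text articles (the text does not state this explicitly), and the per-category counts used for the percentages are back-calculated from the reported figures, not taken from the tables; `prisma_flow` and `pct` are hypothetical helpers.

```python
def prisma_flow(identified: int, duplicates: int, full_text: int,
                hand_searched: int, included: int) -> dict:
    """Derive the number of records excluded at each stage of the flow."""
    screened = identified - duplicates
    return {
        "screened": screened,
        "excluded_at_screening": screened - full_text,
        # Assumes hand-searched articles were assessed alongside the full texts.
        "excluded_at_full_text": full_text + hand_searched - included,
    }


def pct(count: int, total: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / total)


flow = prisma_flow(identified=5858, duplicates=1390, full_text=67,
                   hand_searched=2, included=43)
print(flow)
# → {'screened': 4468, 'excluded_at_screening': 4401, 'excluded_at_full_text': 26}

# Assumed counts: 9/20 and 5/20 assessment papers, 12/23 and 10/23 training papers.
print(pct(9, 20), pct(5, 20), pct(12, 23), pct(10, 23))  # → 45 25 52 43
```

The derived exclusion counts (4401 at screening, 26 at full text) are implied by the reported numbers under the stated assumption; they are not reported in the text itself.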

Assessment tools

Twenty papers described simulators as assessment tools for evaluating technical skills [8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27]. Table 1 summarizes these 20 simulators by procedure, simulator type, evaluation subjects, and evaluation method. Of the 20 studies, 10 evaluated basic laparoscopic techniques, and most of these assessed technical performance based on scores, time, and penalties [8,9,10,11,12,13,14,15,16,17]. Six studies focused on thoracoscopic surgical training [20,21,22,23,24,25]; in contrast to the basic-skill studies, most of these evaluated specific procedures such as diaphragmatic hernia repair and esophageal atresia/tracheoesophageal fistula repair. The metrics that differentiated novices from experts were time, accuracy, and/or performance assessment scales.
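Scores built from time and penalties, as used in most of the basic laparoscopic studies, typically reward fast completion and subtract points for errors. The sketch below is a generic, hypothetical scoring rule; the 300-s cutoff and the 10-s penalty per error are placeholder values, not the FLS or PLS constants, which this review does not report.

```python
def time_penalty_score(completion_s: float, errors: int,
                       cutoff_s: float = 300.0,
                       penalty_s_per_error: float = 10.0) -> float:
    """Hypothetical task score: seconds saved under the cutoff minus error penalties.

    Higher is better; tasks not finished within the cutoff score zero,
    and the score never drops below zero.
    """
    if completion_s < 0 or errors < 0:
        raise ValueError("time and error count must be non-negative")
    if completion_s > cutoff_s:
        return 0.0
    return max(0.0, cutoff_s - completion_s - penalty_s_per_error * errors)


print(time_penalty_score(120, 2))  # 160.0
print(time_penalty_score(290, 3))  # 0.0 (penalties exceed the remaining time)
print(time_penalty_score(400, 0))  # 0.0 (over the cutoff)
```

Folding speed and errors into one number is convenient for ranking trainees, but it can hide the trade-off between them: a fast, error-prone run and a slow, careful run may receive similar scores.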

Evaluation of training effects

Twenty-three papers evaluated the effectiveness of SBT [28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50]. They used simulators for training in basic endoscopic surgical skills, fundoplication, airway foreign bodies, gastroschisis, trauma, acute care, extracorporeal membrane oxygenation cannulation, urology, fetal therapy, and cardiology. Table 2 outlines the training and its effects. Although most trainees were residents, two studies targeted fellows, in acute care or urology [42, 47]. Training courses lasted between 3 h and 2 days for basic laparoscopic skills, 2 days for fundoplication, and between 1 h and 1 day for foreign body aspiration. Only one study, on acute care training [42], evaluated how long the training effect was retained, which was 6 months. Training effectiveness was evaluated mainly using performance assessment scales (52%) and/or surveys (43%). Under the Kirkpatrick model for evaluating education, all studies were classified as level 1 or 2, and no training model demonstrated a clinical effect corresponding to level 3 or 4.

Discussion

In this review, we examined the evaluation methods and impact of SBT in pediatric surgery. Trainees’ skill levels were discriminated using objective assessment methods such as scoring systems or checklists, which reduce the risk of subjective assessment. In terms of educational outcomes, evidence is accumulating for basic skill training; however, few studies have assessed long-term retention of the training effect, and no training method has been tested for skill acquisition in the clinical environment or for improvement in patients’ clinical outcomes.

An appropriate assessment method is important in competency-based training [51]. A previous study showed that in-training evaluation reports by faculty members were at risk of subjective assessment [52]. In our review, one-fourth of the articles used a previously validated objective assessment scale, such as the Fundamentals of Laparoscopic Surgery (FLS), the Pediatric Laparoscopic Surgery (PLS) simulator, or the Objective Structured Assessment of Technical Skills (OSATS), which reduces the risk of subjective assessment. In particular, the PLS simulator is considered the most effective assessment tool for basic laparoscopic procedures [8, 11, 15, 16]. The PLS simulator is an FLS trainer modified for pediatric use. It could distinguish experts, intermediates, and novices on basic laparoscopic tasks (peg transfer, pattern cutting, ligating loop, extracorporeal suturing, and intracorporeal suturing) as well as by motion analysis. In our review, half of the papers used time, path length, and suturing tension as objective evaluation measures for basic laparoscopic surgery, and these measures properly distinguished expert from novice surgeons depending on the training content and targets. Future studies would therefore benefit from applying validated evaluation tools to SBT for more advanced or complex procedures.

Time and accuracy metrics, which include the time to complete a task and its precision or errors, are easy to interpret and do not require specific tools. From a clinical point of view, however, a faster procedure does not always mean a safer outcome. To use these metrics effectively for surgical performance, they should ideally be supplemented by qualitative feedback from an expert that links the results to clinical practice [53]. Motion analysis has recently been widely introduced, especially in endoscopic surgery training [10,11,12,13,14,15, 18, 19, 21, 28, 29, 31]. This metric objectively measures various aspects of movement, such as velocity, acceleration, roll, range, and path length, depending on the algorithm used in the setup. Because it can assess individual segments of a task separately, it has potential for use in formative assessment [54]. Defining the purpose of the evaluation, its intended use, and the method of interpretation in advance would support targeted assessment.
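Several of the movement measures named above can be derived from tracked instrument-tip coordinates. This sketch assumes 3D positions sampled at a fixed interval and uses plain finite differences; `motion_metrics` is a hypothetical helper, roll and range are omitted, and commercial tracking systems may filter the data or define these quantities differently.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def motion_metrics(positions: Sequence[Point], dt: float) -> dict:
    """Summarize instrument-tip motion sampled every dt seconds.

    Returns total path length, mean speed, mean acceleration magnitude,
    and task duration, all estimated with simple finite differences.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    if len(positions) < 3:
        raise ValueError("need at least three samples to estimate acceleration")

    # Velocity vector over each sampling interval.
    velocities = [tuple((b[i] - a[i]) / dt for i in range(3))
                  for a, b in zip(positions, positions[1:])]
    speeds = [math.hypot(*v) for v in velocities]
    # Path length: sum of straight-line distances between consecutive samples.
    path_length = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    # Acceleration magnitude from the change in velocity between intervals.
    accels = [math.dist(v1, v2) / dt for v1, v2 in zip(velocities, velocities[1:])]

    return {
        "path_length": path_length,
        "mean_speed": sum(speeds) / len(speeds),
        "mean_accel": sum(accels) / len(accels),
        "duration": dt * (len(positions) - 1),
    }


# Hypothetical trace: the tip moves 1 unit, then 2 units, sampled every 0.5 s.
print(motion_metrics([(0, 0, 0), (1, 0, 0), (3, 0, 0)], dt=0.5))
# → {'path_length': 3.0, 'mean_speed': 3.0, 'mean_accel': 4.0, 'duration': 1.0}
```

For the same task, a shorter path length and lower mean acceleration are generally read as greater economy and smoothness of movement. Computing the same metrics over individual task segments is what makes motion analysis usable for formative feedback.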

Given the limited opportunities for trainees to perform pediatric surgery, SBT is becoming increasingly important. Regarding the evaluation of training effects, half of the reviewed articles focused on initial emergency treatment, which is common in pediatrics, and only one-fourth focused on training for specific procedures. While there were relatively many level 2 studies in the field of basic skills or in areas overlapping with pediatrics, such as acute care and foreign body aspiration, only two level 2 studies [31, 49] were found in the field of advanced pediatric surgery. The ultimate goal of training is to increase clinical effectiveness; however, no training model corresponding to level 3 or 4 was found in this review. Because levels 1 and 2 are easier to evaluate, evidence at Kirkpatrick level 1 or 2 for basic skill training has gradually accumulated. It will be necessary, however, to demonstrate the effect of SBT on more advanced and specific procedures, with higher-impact outcomes at Kirkpatrick level 3 or 4.

Although retention of the training effect is also important, the training programs in our review focused on short-term effects and, except for one study [42], did not evaluate long-term retention. According to a systematic review of the spacing of surgical skill training sessions for medical trainees, distributed training sessions are possibly better than massed training, but no evidence was obtained about the optimal assessment interval [55]. Because there is little evidence about long-term retention of training effects, it is difficult to make recommendations at this time.

Regarding the impact of simulation training on clinical outcomes, Cox et al. reviewed Kirkpatrick level 4 studies in 2015 and identified 12 relevant articles [56]. Among them, Zendejas et al. reported simulation training for laparoscopic totally extraperitoneal (TEP) inguinal hernia repair [57]. This training, for residents, consisted of two elements: a web-based cognitive component and a simulation-based skills training component. The clinical effect was measured by improvements in operative time, operative performance score, complication rate, and length of hospital stay. The operative performance score used was the Global Operative Assessment of Laparoscopic Skills (GOALS), a valid and reliable tool for assessing technical skills across a variety of procedures [58]. In general surgery, a more procedure-specific assessment tool, the GOALS-Groin Hernia (GOALS-GH), has been used, and its scores have shown reliability and validity in both the operating room and the skills laboratory [59, 60]. Hernia repair is also a common procedure in pediatric surgery, and the laparoscopic percutaneous extraperitoneal closure (LPEC) method [61] has recently come into use. To our knowledge, however, no such tools exist to show transferability from a bench model to the clinical setting. Evidence is needed that the performance improvements achieved through SBT, and their quality, transfer to clinical practice. However, Barsness [3] reported that this issue is difficult to address within pediatric surgery because of limits related to infrastructure, knowledge, or time, and suggested drawing on evidence from general surgery education. Demonstrating transferability would also require data from a sufficient number of cases. Future studies of SBT in pediatric surgery will therefore need multi-institutional collaboration.

This study reviewed simulators used to measure technical competence and evaluated the effectiveness of SBT in pediatric surgery. Regarding training effects, no study assessed clinical outcomes. Evidence on the transferability of SBT to clinical practice in pediatric surgery needs to be accumulated.

References

Dawe SR, Pena GN, Windsor JA, Broeders JAJL, Cregan PC, Hewett PJ et al (2014) Systematic review of skills transfer after surgical simulation-based training. Br J Surg 101:1063–1076. https://doi.org/10.1002/bjs.9482

Cheng A, Lang TR, Starr SR, Pusic M, Cook DA (2014) Technology-enhanced simulation and pediatric education: a meta-analysis. Pediatrics 133:e1313–e1323. https://doi.org/10.1542/peds.2013-2139

Barsness KA (2015) Trends in technical and team simulations: challenging the status quo of surgical training. Semin Pediatr Surg 24:130–133. https://doi.org/10.1053/j.sempedsurg.2015.02.011

Patel EA, Aydın A, Desai A, Dasgupta P, Ahmed K (2018) Current status of simulation-based training in pediatric surgery: a systematic review. J Pediatr Surg. https://doi.org/10.1016/j.jpedsurg.2018.11.019

Accreditation Council for Graduate Medical Education (2016) ACGME program requirements for graduate medical education in the subspecialties of pediatrics. https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/320S_pediatric_subs_2016.pdf. Accessed 11 Feb 2019

The Japanese Society of Pediatric Surgeons Website. https://www.jsps.gr.jp/about/rule_contents/rules. Accessed 11 Feb 2019

Kirkpatrick D (1996) Great ideas revisited. Techniques for evaluating training programs. Revisiting Kirkpatrick’s four-level model. Train Dev 50:54–59

Azzie G, Gerstle JT, Nasr A, Lasko D, Green J, Henao O et al (2011) Development and validation of a pediatric laparoscopic surgery simulator. J Pediatr Surg 46:897–903. https://doi.org/10.1016/j.jpedsurg.2011.02.026

Nasr A, Gerstle JT, Carrillo B, Azzie G (2013) The pediatric laparoscopic surgery (PLS) simulator: methodology and results of further validation. J Pediatr Surg 48:2075–2077. https://doi.org/10.1016/j.jpedsurg.2013.01.039

Hamilton JM, Kahol K, Vankipuram M, Ashby A, Notrica DM, Ferrara JJ (2011) Toward effective pediatric minimally invasive surgical simulation. J Pediatr Surg 46:138–144. https://doi.org/10.1016/j.jpedsurg.2010.09.078

Nasr A, Carrillo B, Gerstle JT, Azzie G (2014) Motion analysis in the pediatric laparoscopic surgery (PLS) simulator: validation and potential use in teaching and assessing surgical skills. J Pediatr Surg 49:791–794. https://doi.org/10.1016/j.jpedsurg.2014.02.063

Harada K, Takazawa S, Tsukuda Y, Ishimaru T, Sugita N, Iwanaka T et al (2014) Quantitative pediatric surgical skill assessment using a rapid-prototyped chest model. Minim Invasive Ther Allied Technol 24:226–232. https://doi.org/10.3109/13645706.2014.996161

Takazawa S, Ishimaru T, Harada K, Deie K, Fujishiro J, Sugita N et al (2016) Pediatric thoracoscopic surgical simulation using a rapid-prototyped chest model and motion sensors can better identify skilled surgeons than a conventional box trainer. J Laparoendosc Adv Surg Tech 26:740–747. https://doi.org/10.1089/lap.2016.0131

Retrosi G, Cundy T, Haddad M, Clarke S (2015) Motion analysis-based skills training and assessment in pediatric laparoscopy: construct, concurrent, and content validity for the eoSim simulator. J Laparoendosc Adv Surg Tech 25:944–950. https://doi.org/10.1089/lap.2015.0069

Trudeau MO, Carrillo B, Nasr A, Gerstle JT, Azzie G (2017) Educational role for an advanced suturing task in the pediatric laparoscopic surgery simulator. J Laparoendosc Adv Surg Tech 27:441–446. https://doi.org/10.1089/lap.2016.0516

Herbert GL, Cundy TP, Singh P, Retrosi G, Sodergren MH, Azzie G et al (2015) Validation of a pediatric single-port laparoscopic surgery simulator. J Pediatr Surg 50:1762–1766. https://doi.org/10.1016/j.jpedsurg.2015.03.057

Shepherd G, Delft D, Truck J, Kubiak R, Ashour K, Grant H (2015) A simple scoring system to train surgeons in basic laparoscopic skills. Pediatr Surg Int 32:245–252. https://doi.org/10.1007/s00383-015-3841-6

Ieiri S, Ishii H, Souzaki R, Uemura M, Tomikawa M, Matsuoka N et al (2013) Development of an objective endoscopic surgical skill assessment system for pediatric surgeons: suture ligature model of the crura of the diaphragm in infant fundoplication. Pediatr Surg Int 29:501–504. https://doi.org/10.1007/s00383-013-3276-x

Jimbo T, Ieiri S, Obata S, Uemura M, Souzaki R, Matsuoka N et al (2016) A new innovative laparoscopic fundoplication training simulator with a surgical skill validation system. Surg Endosc 31:1688–1696. https://doi.org/10.1007/s00464-016-5159-4

Usón-Casaús J, Pérez-Merino EM, Rivera-Barreno R, Rodríguez-Alarcón CA, Sánchez-Margallo FM (2014) Evaluation of a Bochdalek diaphragmatic hernia rabbit model for pediatric thoracoscopic training. J Laparoendosc Adv Surg Tech 24:280–285. https://doi.org/10.1089/lap.2013.0358

Obata S, Ieiri S, Uemura M, Jimbo T, Souzaki R, Matsuoka N et al (2015) An endoscopic surgical skill validation system for pediatric surgeons using a model of congenital diaphragmatic hernia repair. J Laparoendosc Adv Surg Tech 25:775–781. https://doi.org/10.1089/lap.2014.0259

Barsness KA, Rooney DM, Davis LM, Chin AC (2014) Validation of measures from a thoracoscopic esophageal atresia/tracheoesophageal fistula repair simulator. J Pediatr Surg 49:29–33. https://doi.org/10.1016/j.jpedsurg.2013.09.069

Takazawa S, Ishimaru T, Harada K, Tsukuda Y, Sugita N, Mitsuishi M et al (2015) Video-based skill assessment of endoscopic suturing in a pediatric chest model and a box trainer. J Laparoendosc Adv Surg Tech 25:445–453. https://doi.org/10.1089/lap.2014.0269

Maricic MA, Bailez MM, Rodriguez SP (2016) Validation of an inanimate low cost model for training minimal invasive surgery (MIS) of esophageal atresia with tracheoesophageal fistula (AE/TEF) repair. J Pediatr Surg 51:1429–1435. https://doi.org/10.1016/j.jpedsurg.2016.04.018

Deie K, Ishimaru T, Takazawa S, Harada K, Sugita N, Mitsuishi M et al (2017) Preliminary study of video-based pediatric endoscopic surgical skill assessment using a neonatal esophageal atresia/tracheoesophageal fistula model. J Laparoendosc Adv Surg Tech 27:76–81. https://doi.org/10.1089/lap.2016.0214

Barber SR, Kozin ED, Dedmon M, Lin BM, Lee K, Sinha S et al (2016) 3D-printed pediatric endoscopic ear surgery simulator for surgical training. Int J Pediatr Otorhinolaryngol 90:113–118. https://doi.org/10.1016/j.ijporl.2016.08.027

Weinstock P, Rehder R, Prabhu SP, Forbes PW, Roussin CJ, Cohen AR (2017) Creation of a novel simulator for minimally invasive neurosurgery: fusion of 3D printing and special effects. J Neurosurg Pediatr 20:1–9. https://doi.org/10.3171/2017.1.PEDS16568

Nakajima K, Wasa M, Takiguchi S, Taniguchi E, Soh H, Ohashi S et al (2003) A modular laparoscopic training program for pediatric surgeons. JSLS 7:33–37

Ieiri S, Nakatsuji T, Higashi M, Akiyoshi J, Uemura M, Konishi K et al (2010) Effectiveness of basic endoscopic surgical skill training for pediatric surgeons. Pediatr Surg Int 26:947–954. https://doi.org/10.1007/s00383-010-2665-7

Pérez-Duarte FJ, Díaz-Güemes I, Sánchez-Hurtado MA, Cano Novillo I, Berchi García FJ, García Vázquez A et al (2012) Design and validation of a training model on paediatric and neonatal surgery. Cir Pediatr 25:121–125

Jimbo T, Ieiri S, Obata S, Uemura M, Souzaki R, Matsuoka N et al (2015) Effectiveness of short-term endoscopic surgical skill training for young pediatric surgeons: a validation study using the laparoscopic fundoplication simulator. Pediatr Surg Int 31:963–969. https://doi.org/10.1007/s00383-015-3776-y

Deutsch ES (2008) High-fidelity patient simulation mannequins to facilitate aerodigestive endoscopy training. Arch Otolaryngol Head Neck Surg 134:625–629. https://doi.org/10.1001/archotol.134.6.625

Binstadt E, Donner S, Nelson J, Flottemesch T, Hegarty C (2008) Simulator training improves fiber-optic intubation proficiency among emergency medicine residents. Acad Emerg Med 15:1211–1214. https://doi.org/10.1111/j.1553-2712.2008.00199.x

Jabbour N, Reihsen T, Sweet RM, Sidman JD (2011) Psychomotor skills training in pediatric airway endoscopy simulation. Otolaryngol Head Neck Surg 145:43–50. https://doi.org/10.1177/0194599811403379

Griffin GR, Hoesli R, Thorne MC (2011) Validity and efficacy of a pediatric airway foreign body training course in resident education. Ann Otol Rhinol Laryngol 120:635–640. https://doi.org/10.1177/000348941112001002

Deutsch ES, Christenson T, Curry J, Hossain J, Zur K et al (2009) Multimodality education for airway endoscopy skill development. Ann Otol Rhinol Laryngol 118:81–86

Dabbas N, Muktar Z, Ade-Ajayi N (2009) GABBY: an ex vivo model for learning and refining the technique of preformed silo application in the management of gastroschisis. Afr J Paediatr Surg 6:73–76. https://doi.org/10.4103/0189-6725.54766

Tugnoli G, Ribaldi S, Casali M, Calderale SM, Coletti M, Villani S et al (2007) The education of the trauma surgeon: the "trauma surgery course" as advanced didactic tool. Ann Ital Chir 78:39–44

Lehner M, Heimberg E, Hoffmann F, Heinzel O, Kirschner H-J, Heinrich M (2017) Evaluation of a pilot project to introduce simulation-based team training to pediatric surgery trauma room care. Int J Pediatr 2017:1–6. https://doi.org/10.1155/2017/9732316

Nishisaki A, Scrattish L, Boulet J, Kalsi M, Maltese M, Castner T et al (2008) Effect of recent refresher training on in situ simulated pediatric tracheal intubation psychomotor skill performance. In: Henriksen K, Battles JB, Keyes MA et al (eds) Advances in patient safety: new directions and alternative approaches (vol. 3: performance and tools). Agency for Healthcare Research and Quality (US), Rockville

Reid J, Stone K, Brown J, Caglar D, Kobayashi A, Lewis-Newby M et al (2012) The Simulation Team Assessment Tool (STAT): development, reliability and validation. Resuscitation 83:879–886. https://doi.org/10.1016/j.resuscitation.2011.12.012

Nishisaki A, Hales R, Biagas K, Cheifetz I, Corriveau C, Garber N et al (2009) A multi-institutional high-fidelity simulation “boot camp” orientation and training program for first-year pediatric critical care fellows. Pediatr Crit Care Med 10:157–162. https://doi.org/10.1097/PCC.0b013e3181956d29

Cheng A, Goldman RD, Aish MA, Kissoon N (2010) A simulation-based acute care curriculum for pediatric emergency medicine fellowship training programs. Pediatr Emerg Care 26:475–476. https://doi.org/10.1097/PEC.0b013e3181e5841b

Stone K, Reid J, Caglar D, Christensen A, Strelitz B, Zhou L, Quan L (2014) Increasing pediatric resident simulated resuscitation performance: a standardized simulation-based curriculum. Resuscitation 85:1099–1105. https://doi.org/10.1016/j.resuscitation.2014.05.005

Atamanyuk I, Ghez O, Saeed I, Lane M, Hall J, Jackson T et al (2013) Impact of an open-chest extracorporeal membrane oxygenation model for in situ simulated team training: a pilot study. Interact Cardiovasc Thorac Surg 18:17–20. https://doi.org/10.1093/icvts/ivt437

Allan CK, Pigula F, Bacha EA, Emani S, Fynn-Thompson F, Thiagarajan RR et al (2013) An extracorporeal membrane oxygenation cannulation curriculum featuring a novel integrated skills trainer leads to improved performance among pediatric cardiac surgery trainees. Simul Healthc 8:221–228. https://doi.org/10.1097/SIH.0b013e31828b4179

Brydges R, Farhat WA, El-Hout Y, Dubrowski A (2010) Pediatric urology training: performance-based assessment using the fundamentals of laparoscopic surgery. J Surg Res 161:240–245. https://doi.org/10.1016/j.jss.2008.12.041

Soltani T, Hidas G, Kelly MS, Kaplan A, Selby B, Billimek J et al (2016) Endoscopic correction of vesicoureteral reflux simulator curriculum as an effective teaching tool: pilot study. J Pediatr Urol 12:45.e1–45.e6. https://doi.org/10.1016/j.jpurol.2015.06.017

Peeters SHP, Akkermans J, Slaghekke F, Bustraan J, Lopriore E, Haak MC et al (2015) Simulator training in fetoscopic laser surgery for twin-twin transfusion syndrome: a pilot randomized controlled trial. Ultrasound Obstet Gynecol 46:319–326. https://doi.org/10.1002/uog.14916

Yoo SJ, Spray T, Austin EH, Yun TJ, van Arsdell GS (2017) Hands-on surgical training of congenital heart surgery using 3-dimensional print models. J Thorac Cardiovasc Surg 153:1530–1540. https://doi.org/10.1016/j.jtcvs.2016.12.054

Holmboe ES, Sherbino J, Long DM, Swing SR, Frank JR, For the International CBME Collaborators (2010) The role of assessment in competency-based medical education. Med Teach 32:676–682. https://doi.org/10.3109/0142159X.2010.500704

Fried GM, Feldman LS (2007) Objective assessment of technical performance. World J Surg 32:156–160. https://doi.org/10.1007/s00268-007-9143-y

Jones DB, Schwaitzberg SD (2019) Operative endoscopic and minimally invasive surgery, 1st edn. CRC Press, Boca Raton, pp 184–187

Farcas MA, Trudeau MO, Nasr A, Gerstle JT, Carrillo B, Azzie G (2016) Analysis of motion in laparoscopy: the deconstruction of an intracorporeal suturing task. Surg Endosc 31:3130–3139. https://doi.org/10.1007/s00464-016-5337-4

Cecilio-Fernandes D, Cnossen F, Jaarsma DADC, Tio RA (2018) Avoiding surgical skill decay: a systematic review on the spacing of training sessions. J Surg Educ 75:471–480. https://doi.org/10.1016/j.jsurg.2017.08.002

Cox T, Seymour N, Stefanidis D (2015) Moving the needle: simulation’s impact on patient outcomes. Surg Clin N Am 95:827–838. https://doi.org/10.1016/j.suc.2015.03.005

Zendejas B, Cook DA, Bingener J, Huebner M, Dunn WF, Sarr MG et al (2011) Simulation-based mastery learning improves patient outcomes in laparoscopic inguinal hernia repair. Ann Surg 254:502–509. https://doi.org/10.1097/SLA.0b013e31822c6994 (discussion 509–11)

Vassiliou MC, Feldman LS, Andrew CG, Bergman S, Leffondré K, Stanbridge D et al (2005) A global assessment tool for evaluation of intraoperative laparoscopic skills. Am J Surg 190:107–113

Kurashima Y, Feldman LS, Al-Sabah S, Kaneva PA, Fried GM, Vassiliou MC (2011) A tool for training and evaluation of laparoscopic inguinal hernia repair: the Global Operative Assessment of Laparoscopic Skills-Groin Hernia (GOALS-GH). Am J Surg 201:54–61. https://doi.org/10.1016/j.amjsurg.2010.09.006

Ghaderi I, Manji F, Park YS, Juul D, Ott M, Harris I et al (2015) Technical skills assessment toolbox. Ann Surg 261:251–262. https://doi.org/10.1097/SLA.0000000000000520

Oue T, Kubota A, Okuyama H, Kawahara H (2005) Laparoscopic percutaneous extraperitoneal closure (LPEC) method for the exploration and treatment of inguinal hernia in girls. Pediatr Surg Int 21:964–968. https://doi.org/10.1007/s00383-005-1556-9

Acknowledgements

This research received no specific grant from any funding agency in the public and commercial sectors.

Funding

This study was not funded by any grant.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest for this study.

Ethical approval

Not applicable, since the study is a systematic review.

Informed consent

Not applicable, since the study is a systematic review.


About this article

Cite this article

Yokoyama, S., Mizunuma, K., Kurashima, Y. et al. Evaluation methods and impact of simulation-based training in pediatric surgery: a systematic review. Pediatr Surg Int 35, 1085–1094 (2019). https://doi.org/10.1007/s00383-019-04539-5
