Abstract

The present paper's primary goal is to propose a novel hybrid model with high reliability to predict peak particle velocity (PPV)—a ground vibration evaluation unit in mine blasting. This model is based on the coupling of the multivariate adaptive regression splines (MARS), particle swarm optimization (PSO), and multi-layer perceptron neural networks (MLP). To this end, a strategy of stacking the MARS models was applied. Multiple MARS models were developed first with different hyper-parameters. Subsequently, the outcome predictions from these MARS models were merged as a new data set. The MLP model was then developed based on the newly generated data set, called the MARS–MLP model. To improve the accuracy and reduction of the MARS–MLP model's error, the PSO algorithm was applied in terms of optimization of the MARS–MLP's weights, called the MARS–PSO–MLP model. The proposed MARS–PSO–MLP model was then compared with the stand-alone MARS, MLP, empirical models, and the hybrid PSO–MLP model (without stacking MARS models) using the same data set. The results revealed that the proposed strategies could significantly boost the MARS and MLP models' performance with the PSO algorithm's effective help. The proposed MARS–PSO–MLP model yielded the highest accuracy and reliability with a root-mean-squared error (RMSE) of 1.569, mean absolute error (MAE) of 1.017, and squared-correlation (R2) of 0.902. In comparison, the stand-alone models (i.e., MARS and MLP) and the hybrid model of PSO–MLP provided lower performances with an RMSE of 1.582 to 1.704, MAE of 0.941 to 1.427, and R2 of 0.871 to 0.891. In contrast, poor performance with an RMSE of 5.059, MAE of 3.860, and R2 of 0.127 was found for the empirical model, and it is not a reliable method to predict PPV in this study. This work's findings also indicated that explosive charge per delay, monitoring distance, spacing, powder factor, and burden have significant effects on PPV, the incredibly explosive charge per delay, and monitoring distance. Remarkable, the stemming variable has a minimal impact on PPV, and its role in the modeling of PPV is not exact.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Safety issues in opencast mining are considered important issues, especially those related to blasting [1]. Serious side effects can occur during blasting, such as flyrock, ground vibration, slope failure, airblast, dust, and toxic [2,3,4,5]. Ground vibration is the biggest concern among those effects, and it was represented by peak particle velocity (PPV) [5,6,7,8,9,10,11]. Many scientists confirmed that the adverse effects caused by blasting, especially PPV, are mainly caused by the useless energy of explosives (accounting for 70–80%) [12,13,14].

From the feedback of PPV on buildings and the surrounding environment, some baseline solutions such as increasing safety distance, increasing quality and standards for buildings, and applying existing blasting methods (e.g., creating barriers, blasting delays) have been involved in the 1990s [15, 16]. However, these methods are sometimes not very effective and increase the cost of blasting [17].

Based on the empirical approach, many scholars studied and proposed empirical formulas for predicting PPV, such as the United States Bureau of Mines (USBM) by [18], Langefors, Kihlstrom [19], Ambraseys [20], Ghosh, Daemen [21], Roy [22], Ak, Konuk [23] and Simangunsong, Wahyudi [24]. The empirical equations are often simple and easy to calculate; nevertheless, their performance is not expected [25,26,27,28,29,30]. Therefore, the state-of-the-art methods are essential requirements with the purpose of accuracy improvement in predicting PPV. In this study, artificial intelligence (AI)—a remarkable achievement of information sciences in recent years—is considered to predict PPV. The structure of this work includes seven sections, as follows:

-

(1)

Introduction

-

(2)

Related works

-

(3)

Methodology

-

(4)

Study site and data acquisition

-

(5)

Results

-

(6)

Discussion

-

(7)

Conclusion

2 Related works

Indeed, information technology development, especially AI, has contributed to rapidly improving human life performance and quality [31,32,33,34,35,36]. It helps computers smarter, more knowledgeable, and exceptionally capable of predicting real-world problems with high accuracy [37,38,39,40,41]. Regarding blast-induced ground vibration, AI has been widely applied for predicting the intensity of ground vibration. For example, Hasanipanah et al. [30] used the support vector machine (SVM) algorithm to predict PPV based on the charged explosive and PPV measurement distances. They obtained a superior performance with a variance accounted for (VAF) of 94.24, squared correlation coefficient (R2) of 0.957, and root-mean-squared error (RMSE) of 0.34. In another study, Hajihassani et al. [42] embedded the PSO algorithm to an artificial neural network (ANN) model that aims to improve the ANN model's accuracy in predicting PPV. The PSO algorithm significantly enhanced the ANN model's accuracy with an R2 of 0.89 and a mean squared error (MSE) of 0.038. A similar study on the hybridization of the imperialist competitive algorithm (ICA) and ANN for the prediction of PPV was also conducted by the same authors [28], and the obtained results are positive (i.e., R2 = 0.976; RMSE = 0.685).

By another approach, Armaghani et al. [43] successfully applied an ANFIS for solving the PPV prediction issue in an open-pit mine. Their outcome predictions indicated that the ANFIS model is a good candidate for predicting PPV with a VAF of 97.345, R2 of 0.973, and RMSE = 0.987. Amiri et al. [44] combined ANN and k-nearest neighbors (k-NN) algorithm for predicting PPV in open-pit mines. They claimed that the outcome predictions from the ANN-k-NN model could be acceptable with the possibility of 87.84%. Hasanipanah et al. [45] applied the CART model to predict and evaluate PPV with an R2 of 0.950 and RMSE of 0.170. Similar to the PSO–ANN and ICA–ANN models that were developed by Hajihassani et al. [42],Hajihassani et al. [28], Taheri et al. [46] developed the ABC–ANN model based on the optimization of the artificial bee colony (ABC) algorithm and ANN model. The results obtained from this model were good, with an RMSE of 0.220 and R2 = 0.920. To optimize the prediction of PPV, Sheykhi et al. [47] applied a clustering technique (i.e., fuzzy C-means) to process the data set before developing the predictive model based on the SVM algorithm. Finally, they found that their model (FCM–SVM) could predict PPV with an acceptable performance (RMSE = 1.800; VAF = 85.250; R2 = 0.853). Shang et al. [48] also developed a hybrid model based on the firefly algorithm (FFA) and ANN, named FFA–ANN for predicting PPV. The CART, k-NN, and SVM models were also employed as the baseline models for a comprehensive comparison of the proposed FFA–ANN model. Ultimately, the FFA–ANN was claimed with the most dominant performance, and it was introduced in practical engineering. A similar hybrid model based on the FFA and bagged SVM model (i.e., FFA–BSVM) was also applied for predicting PPV by Ding et al. [49]. Several types of ANN models were compared with that of the FFA–BSVM model, and they showed that the FFA–BSVM is the best model in the study of Ding et al. [49]. Itemset Mining (IM)–a machine learning algorithm for extraction of patterns were applied to select potential instances. Subsequently, an ANN model was developed based on the selected instances, aiming to improve the ANN model's accuracy in predicting PPV, and it was called IM–ANN hybrid model. Yang et al. [50] also applied two optimization algorithms (i.e., PSO and GA (genetic algorithm)) for optimization of the ANFIS model in predicting PPV. They found that the GA–ANFIS model provided a better improvement than the PSO–ANFIS model in terms of accuracy and error. In another study, Fattahi, Hasanipanah [51] developed two novel hybrid models, i.e., RVR (relevance vector regression)–BA (bat-inspired algorithm) and RVR–GWO (grey wolf optimization), for predicting PPV. The results demonstrated that the RVR–GWO is better than those of the RVR–BA model with an MSE of 7.920 and correlation coefficient of 0.915.

Based on the referenced papers, it can be seen that the hybrid models based on optimization algorithms or clustering algorithms tend to provide better performance than those of the single or conventional models in predicting PPV. Indeed, many researchers proposed hybrid models for predicting PPV with promising results, such as the PSO–XGBoost model (PSO–extreme gradient boosting machine) [52]; the MFA–SVR model (modified firefly algorithm–support vector regression) [53]; the FFA–ANN model (firefly algorithm–ANN) [48]; the PSO–k-NN model [54]; the ICA–M5Rules model [55]; the GA–ANN model (genetic algorithm–ANN) [56]; the FCM–ANFIS model [57]; the ANFIS–GA model [50]; the HKM–CA model (hierarchical Kmeans clustering–cubist algorithm) [58]; the ICA–XGBoost model [59]; the HKM–ANN model [60]; the GA–SVR–RBF model [17], and the HHO–RF model (Harris Hawks optimization–random forest) [61]. And they claimed that hybrid models tend to provide better performance than those of the single models. For this reason, we have developed a novel hybrid model for PPV prediction based on the multivariate adaptive regression splines (MARS), PSO, and multi-layer perceptron neural networks (MLP), namely, MARS–PSO–MLP model. The single models, such as MLP and MARS, and the hybrid model (i.e., PSO–MLP) were also developed for comparison purposes. Besides, the empirical equation of USBM was also considered to evaluate ground vibration's intensity from the empirical point of view.

3 Methodology

3.1 Empirical

Empirical methods are often used as the rapid estimation methods to estimate PPV since their convenient and straightforward. Of the empirical equations, the USBM equation [18] is introduced as the most common equation for calculating PPV. Therefore, it was selected to implement the PPV predictions and described as follows:

where W is the maximum amount of explosive charged per delay (in Kg); D is the distance from blasts to seismograph (in m); k and \(\alpha\) denote the site coefficients.

3.2 MARS

MARS is a shortened name of the multivariate adaptive regression splines algorithm proposed first by Friedman [62], and it was officially introduced in 1995 by Friedman, Roosen [63]. It is considered as a flexible algorithm to describe the relationships in a data set with a few variables [64]. Inspired by generalized additive modeling and the CART model's recursive partitioning technique, MARS creates a continuous model based on the constant derivatives. These tasks allow the MARS algorithm to find optimal variable interactions and transformations with the complex structure of the data [65].

By the use of the piecewise linear regressions method, MARS can build flexible models easily. It uses different regression slopes at different intervals of the independent variable space to approximate the nonlinearity of a model. It is worth noting that the regression slopes are flexible, and they depend on the intersection points. Another advantage of the MARS is the searching ability of variables one by one and their relation [66].

For the development of the MARS model, a two-stage process is applied, as follows:

-

(1)

Different maximum number of basis functions are constructed to overfit the initial data set. The variables can be entered by categorical, continuous, or ordinal. They can interconnect with each other or be used as additive components.

-

(2)

Basis functions are taken into account to delete based on their contributions. The order of least contribution is applied for this task using the criterion of generalized cross-validation (GCV). Based on the values of GCV, the importance of variables can be observed when a variable is removed from the model. Another vital point of the MARS is processing the data's missing values using dummy variables. It can show the data's missing values, and we can further process the model aiming to get higher performance.

Using the piecewise linear regressions method to generate flexible models and the two-stage process, MARS can track and solve the data with complex structure and high-dimensional. In this study, MARS is investigated to predict PPV and combined with the PSO algorithm and MLP to enhance the model accuracy (i.e., MARS and MLP) in estimating PPV.

3.3 MLP

MLP was introduced as one of the ANN structures that has been widely applied in many fields [67,68,69,70]. Like those of the other ANN structures (e.g., feedforward neural networks, recurrent neural networks, single layer feedforward), MLP receives input signals and process them before sending them to other neurons in the hidden layer(s). At least one hidden layer is contained in the structure of the MLP [71]. Hidden layers are called the intermediate layers, and they are often added to boost the networkability [72]. Typically, these intermediate layers are useful for non-linear problems.

For MLP, the number of neurons in the input and output layers are prior knowledge. However, it is hard to previous understanding how many neurons are necessary for the hidden layer. The network's processing time would increase, and the over-fitting phenomenon may have occurred if there are too many neurons in the hidden layer. On the other hand, few neurons in the hidden layer would increase the network's error [73]. Therefore, determining the optimal structure of an MLP, i.e., the appropriate number of hidden layers and neurons is one of the most crucial steps.

Furthermore, a suitable training algorithm is an essential and indispensable part of MLP. It is reflected through the processing time and the error of the network. In other words, a suitable training algorithm for MLP is the one that achieves the lowest error and sufficient training time (or shortens training time). In actual fact, the following algorithms are often used to train an MLP: feedforward back-propagation (FFBP), and Levenberg–Marquardt [74,75,76]. Herein, the FFBP algorithm is used to train the MLP for predicting PPV. The general architecture of the MLP model for this purpose is illustrated in Fig. 1.

3.4 PSO algorithm

PSO is one of the nature-based algorithms which is applied for optimization problems. It was proposed by Kennedy, Eberhart [77] inspired by the behaviors of swarms, such as flock bird, ant, fish, to name a few. The PSO algorithm's main idea is to share and update the information of individuals in the swarm, aiming to improve the foraging performance [78]. Each individual is considered a particle in a swarm, and they fly/move with a specific velocity in a given searching space with high dimensional. For each position found during searching, they share the experiences and the places to other particles [54]. Based on the shared positions, the particles continuously search, share, and update if they found another better place. It is worth mentioning that particles' fitness is evaluated by an objective function during searching and optimizing processes. In the PSO algorithm, each the best position found is called the local best (Pb), and the best solution in the swarm is called the global best (Gb). Further details of the PSO algorithm can be found in the following papers [52, 79,80,81,82,83,84,85].

3.5 Hybridization of MLP, PSO, and MARS

In this section, the pattern of the PSO–MLP and MARS–PSO–MLP are proposed. Accordingly, the PSO algorithm is used as an extended feature to optimize the MLP model, i.e., weights. It enables the MLP model to expand the search domain of weights to find out the optimal performance. Furthermore, one of the most remarkable characteristics of the proposed MARS–PSO–MLP model is combining the predictive values of the MARS models and the PSO–MLP model. For this task, the MARS approach is applied to predict PPV first, and several MARS models would be taken into account to get the PPV predictions. Subsequently, they are framed to develop the pattern of the MLP. The PSO algorithm is also involved in developing the MLP based on the predictive values of the developed MARS models aiming to optimize weights of the MLP. RMSE in Eq. (2) would be used as the objective function and stopping condition of the PSO–MLP and MARS–PSO–MLP models. The smallest fitness value of RMSE represents the best prediction accuracy. The frameworks of the PSO–MLP and MARS–PSO–MLP models are proposed in Figs. 2 and 3.

3.6 Model evaluation method

From the statistical viewpoint, it isn't easy and not enough to make a comprehensive evaluation for a predictive model using only one performance index. Therefore, this study used three performance indexes to overcome the above drawback, including RMSE, R2, and MAE. Whereas RMSE was used to express the results' dispersion degree, MAE was used to indicate the deviation of the outcome predictions. Besides, R2 was used to measure the linear correlation of the measured and predicted PPVs. These indices are described as follows:

where \(n_{blasting\_events}\) is the total number of sample points (i.e., blasting events), \(PPV_{i}\) and \(\widehat{PPV}_{i}\) represent the ith actual and predicted PPV values, \(\overline{PPV}_{i}\) represents the average value of the measured PPV values.

4 Study site and data acquisition

To carry out this study, blasts were designed and undertaken at the Deo Nai open-pit coal mine, located in Quang Ninh province of northern Vietnam, as shown in Fig. 4. This large open-pit coal mine in Vietnam with a maximum explosive charge is up to 10 tons per blast. Remarkable, this mine's surroundings are residential and other open-pit mines, as illustrated in Fig. 4. In some cases, the dumpsites of other mines are the slopes of this mine. Therefore, ground vibrations are considered as the big concern herein. It can make instability of dumpsites and slopes as well as vibration of buildings.

Some seismographs were used to measure PPV intensity induced by blasts in this mine, such as Micromate or Blast mate III (Instantel). They were placed in the sensitive points that can be severely affected by blast-induced PPV. Finally, ground vibrations from 193 blasting events were measured in the range of 1.01 to 23.79 mm/s.

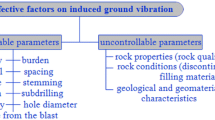

In mine blasting and controlling blast-induced ground vibration, many researchers indicated that blasting parameters significantly affect PPV [86]. They are categorized in the controllable parameters group, since they can adjust or change by blasting engineers [87,88,89]. On the other hand, the uncertainty parameters, such as geological and geophysical conditions, tensile strength, compressive strength of rock mass, rock quality designation, rock fracture parameters, to name a few, are classified in the uncontrollable parameters [90,91,92]. Therefore, the historical data of blasting parameters of 193 blasting events, such as maximum amount of the explosive charged per delay (W), the length of borehole (HL), burden (B), spacing (S), stemming (T), and powder factor (PF), were used as the input variables for PPV prediction in this study. In addition, the monitoring distance of PPV from blast sites to seismograph (D) was calculated based on the blast sites' locations and seismograph that were determined by a GPS receiver. The details of the data set are summarized through the histogram and density plots in Fig. 5.

5 Results

A blast-induced ground vibration modeling is typically taken into account as a regression problem in which both input variables and outcome predictions are numeric. To prepare for such a predictive model, it is necessary to define two separate data sets for training and to test the model. To do so, 193 blasting events with the inputs and output were randomly divided into two different data sets. One for training the PPV prediction models with 80% of the whole data set (~ 157 blasting events), and one for testing the trained PPV prediction models (~ 36 blasting events). It is important to note that all the prediction models are developed and tested on the same data points as divided earlier.

5.1 Empirical model

For modeling the PPV by empirical approach, Duvall, Fogelson [3] proposed an empirical equation based on the characteristics of rock mass and scaled distance determined based on D and W, as described in equation (Eq. 1). This equation is also known as the USBM as an alternative name. In Eq. (1), k and d are the coefficients that present the characteristics of rock mass at the study site, and they can be determined by the multivariate regression analysis method [93]. Finally, the empirical model for PPV prediction in this study was defined based on the training data set, as described in the following equation:

5.2 MARS model

Given the importance of fine-tuning the MARS's parameters to reach an optimal MARS model for PPV prediction, a grid search for the parameters of the MARS model was established for the maximum number of basis functions used and the penalty values, as shown in Fig. 6. It is worth noting that the statistical method of tenfold cross-validation was applied to estimate the MARS model's skill and select the best MARS model for a given predictive modeling problem (i.e., PPV prediction). The data set was standardized before developing the MARS model using the Box–Cox transformation method [94] to avoid overfitting.

In addition to the development of the MARS model to predict PPV, the predictive values of a series of the MARS models are also to propose the novel MARS–PSO–MLP model, as introduced above. Therefore, the seven best MARS models were selected based on the obtained results in Fig. 6 for the next step (developing the MARS–PSO–MLP model), and the performance of the seven MARS models are listed in Table 1. Herein, the MARS-01 model is the best, and it was used as a stand-alone model to compare with the other models in the next sections. Note that there are two parameters used to control the MARS models' performance, including penalty values (d) and the maximum number of basis functions (f).

5.3 MLP model

To develop the MLP model for PPV prediction in this mine, a structure with a single hidden layer of the MLP model was used to prevent the overfitting phenomenon, and this structure was also recommended for such a simple problem (i.e., PPV prediction) [95,96,97,98]. Subsequently, the trial and error procedure was applied to determine the best number of neurons. To avoid overfitting, the Min–Max scaling method was used with the normalized data values are in the range of – 1 to 1. The FFBP algorithm was applied to train the MLP model in this case. Eventually, an MLP model was developed for predicting PPV, as illustrated in Fig. 7.

5.4 PSO–MLP model

As stated earlier, an MLP model's primary problem is the network's architecture, and it has been described above. However, in MLP, the information between neurons are represented by the weights, and they can fine-tune to reach an optimal MLP model for PPV prediction. Therefore, we applied the PSO optimizer to enhance the MLP model's accuracy through the weights' fine-tuning, as proposed in Fig. 2. To do so, the parameters of the PSO algorithm were set up first, as follows:

- The weight of the bird: [0.4, 0.9];

- The effect of local best and global best: 1.2;

- Lower and upper bound: [– 1, 1];

- Population sizes: 50, 100, 150, 200, 250, 300, 350, 400, 450, 500;

- Maximum number of iterations: 1000;

- Fitness function: RMSE (Eq. 2);

- Programming language: R (version 4.0.3).

Next, the optimization process starts to optimize the MLP model weights for PPV prediction through the stopping conditions, as shown in Fig. 8. Ultimately, the optimal PSO–MLP model for PPV prediction in this mine was defined, and its architecture is shown in Fig. 9.

5.5 MARS–PSO–MLP model

Whereas the empirical, MARS, MLP, and PSO–MLP models were developed using the same original training data set, the MARS–PSO–MLP model was developed based on the compilation of the original training data set and combination of the predictive values from the MARS models in Table 1 (seven MARS models), as described in Fig. 3. Subsequently, the predictive values of these seven MARS models are merged to develop an MLP model. In other words, another MLP (with the same structure as the MLP and PSO–MLP models) was developed based on the alternative training data set from the predictive values of the seven developed MARS models. To optimize this new MLP model, the PSO algorithm was also applied as those applied for the PSO–MLP model with the same settings, and some exciting results are noticed in Fig. 10. Ultimately, the optimal MARS–PSO–MLP model was developed, as shown in Fig. 11. It is important to note that despite the architecture of the MLP, PSO–MLP, and MARS–PSO–MLP are the same; however, their weights are very different (it can be observed through the lines in Figs. 7, 9, and 11) due to the optimization of the PSO algorithm and the contributions of the MARS models.

Once the PPV prediction models were well-developed as described above, PPV predictions, in this study, are done using five developed models, including empirical, MARS, MLP, PSO–MLP, and MARS–PSO–MLP. The results of PPV prediction using the five developed models are calculated in Table 2.

6 Discussion

From the calculated results in Table 2, it is evident that all four AI models (i.e., MARS, MLP, PSO–MLP, and MARS–PSO–MLP) have relatively few errors, and their accuracy is acceptable. Of those, the MARS's performance is slightly lower than the MLP's performance on the training phase. However, it is somewhat higher than the MLP model on the testing phase. Remarkably, the MLP model's accuracy was boosted by the optimization feature of the PSO algorithm, and the performance of the PSO–MLP is higher than those of MLP (without optimization) and MARS models on all parts of the data set.

In this study, the stacking approach was applied to develop and propose the novel hybrid MARS–PSO–MLP model for predicting PPV. The results in Table 2 indicated that the proposed hybrid MARS–PSO–MLP model yielded an outstanding performance with the highest accuracy. Its performance even is higher than the PSO–MLP model on all the data sets. This finding indicates that the combination strategy of stacking and optimization methods of MARS and MLP models provided an alternative training data set with a better normalization and regression. Therefore, the proposed MARS–PSO–MLP model better performed in predicting PPV. These results can be observed in Fig. 12.

In contrast to the AI models, the highest error and the lowest convergence were found in the empirical model provided, as shown in Table 2. This result indicated that a simple linear equation is not suitable for predicting PPVs in this mine. Instead, a non-linear equation or complexity "black box" algorithms should be considered and used to predict PPV.

As shown in Fig. 12, on the training data set, the correlation of PPV values (measured versus predicted) of the MARS, MLP, PSO–MLP, and MARS–PSO–MLP models is superior to the empirical model. Of those, the correlation of the MARS and MLP are not too dissimilar. Notably, the optimization models' correlation (PSO–MLP and MARS–PSO–MLP) is improved significantly, especially the proposed MARS–PSO–MLP model. On the testing data set, the optimization models' regression level is better than the other models as well, especially the proposed MARS–PSO–MLP model. For further assessment of the developed models in practice, the models' residuals on the testing data set were computed and visualized in Fig. 13.

Taking a look at Fig. 13, it can be seen that the residual plots show a somewhat random pattern. Accordingly, the distributions of PPV predictions on the four developed AI models are symmetrical, have no obvious patterns, and tend to cluster towards the middle of the plots. Still, the residuals of the empirical model are not. Besides, the histogram of the models' residuals is also an excellent metric to evaluate whether the models are valid or not. As shown in Fig. 13, we can see that the histogram of the empirical model is not normal (i.e., not bell-shaped), and it should not be a valid model for PPV prediction. In contrast, the four AI models provided normal histograms, especially the proposed MARS–PSO–MLP model. This indicates that the proposed MARS–PSO–MLP model is the best fit model for PPV prediction.

To better understand the mechanism of the proposed MARS–PSO–MLP model, as well as the role of the input variables in the modeling, the importance level of the input variables was investigated through a sensitivity analysis, as shown in Fig. 14. The results show that W, D, S, PF, and B have significant effects on PPV, especially W and D. Remarkable, the T variable has a minimal impact on PPV, and its role in modeling PPV is not exact.

7 Conclusion

Ground vibration is one of the most damaging effects in mine blasting, and accurate prediction of PPV has an essential meaning in controlling and reducing the adverse impacts on the surroundings. The results showed that MARS and MLP are potential tools for predicting PPV with acceptable accuracy. Based on the stacking method's flexibility strategy, the MARS models and the PSO algorithm significantly improved the MLP model's accuracy. In other words, by fully considering and combining the predictive values of seven MARS models and the robust optimization ability of the PSO algorithm, the proposed MARS–PSO–MLP model significantly improved the accuracy of the MARS, MLP, and PSO–MLP models in predicting PPV. In conclusion, the proposed MARS–PSO–MLP model is a reliable method for PPV prediction in mine blasting.

Furthermore, the W, D, S, PF, and B variables are highly sensitive to PPV, especially W and D, and should be used and taken into account to predict PPV. In addition, the T variable has a minimal impact on PPV, and its role in the modeling of PPV is not exact.

Although the MARS–PSO–MLP is introduced as a novel intelligent model for predicting PPV with high accuracy; however, it should be considered to optimize the blasting parameters in future works based on the predicted results to reduce the intensity of ground vibration. In addition, characteristics of rock mass and geological conditions are also challenges for future studies in predicting PPV.

References

Bui NX, Ho GS (2020) Vietnamese Surface Mining - Training and scientific research for integrating the Fourth Industrial Revolution. J Min Earth Sci 61(5):1–15

Bhandari S (1997) Engineering rock blasting operations. A A Balkema 388:388

Duvall WI, Fogelson DE (1962) Review of criteria for estimating damage to residences from blasting vibrations. US Department of the Interior, Bureau of Mines

Esmaeili M, Osanloo M, Rashidinejad F, Bazzazi AA, Taji M (2014) Multiple regression, ANN and ANFIS models for prediction of backbreak in the open pit blasting. Eng Comput 30(4):549–558

Gad EF, Wilson JL, Moore AJ, Richards AB (2005) Effects of mine blasting on residential structures. J Perform Constr Facilit 19(3):222–228

Chen G, Huang S (2000) Analysis of ground vibrations caused by open pit production blasts. Explos Blast Tech pp 63–70

Davies B, Farmer I, Attewell P (1964) Ground vibration from shallow sub-surface blasts. Engineer 217 (5644)

Dindarloo Saeid R (2015) Prediction of blast-induced ground vibrations via genetic programming. Int J 6:021

Khandelwal M, Saadat M (2015) A dimensional analysis approach to study blast-induced ground vibration. Rock Mech Rock Eng 48(2):727–735

Khandelwal M, Singh T (2007) Evaluation of blast-induced ground vibration predictors. Soil Dyn Earthq Eng 27(2):116–125

Monjezi M, Ghafurikalajahi M, Bahrami A (2011) Prediction of blast-induced ground vibration using artificial neural networks. Tunn Undergr Space Technol 26(1):46–50

Nguyen H, Bui X-N, Tran Q-H, Moayedi H (2019) Predicting blast-induced peak particle velocity using BGAMs, ANN and SVM: a case study at the Nui Beo open-pit coal mine in Vietnam. Environ Earth Sci 78(15):479. https://doi.org/10.1007/s12665-019-8491-x

Hosseini SA, Tavana A, Abdolahi SM, Darvishmaslak S (2019) Prediction of blast-induced ground vibrations in quarry sites: a comparison of GP, RSM and MARS. Soil Dyn Earthq Eng 119:118–129

Hoang Nguyen, Nam Xuan Bui, Hieu Quang Tran, Giang Huong Thi Le, (2020) A novel soft computing model for predicting blast - induced ground vibration in open - pit mines using gene expression programming. Journal of Mining and Earth Sciences 61 (5):107-116

Mayor R, Flanders R (1990) Technical manual simplified computer model of air blast effects on building walls. US Department of State, Office of Diplomatic Security, Washington DC

Army U (1998) Technical manual design and analysis of hardened structures to conventional weapons effects. Army TM5–855–1, Washington DC

Nguyen H, Choi Y, Bui X-N, Nguyen-Thoi T (2019) Predicting blast-induced ground vibration in open-pit mines using vibration sensors and support vector regression-based optimization algorithms. Sensors 20(1):132

Duvall WI, Petkof B (1958) Spherical propagation of explosion-generated strain pulses in rock. Bureau of Mines

Langefors U, Kihlstrom B (1963) The modern techniques of rock blasting. Wiley, New York

Ambraseys N (1968) Rock mechanics in engineering practice

Ghosh A, Daemen JJ A simple new blast vibration predictor (based on wave propagation laws). In: The 24th US Symposium on Rock Mechanics (USRMS), 1983. American Rock Mechanics Association

Roy PP (1991) Vibration control in an opencast mine based on improved blast vibration predictors. Min Sci Technol 12(2):157–165

Ak H, Konuk A (2008) The effect of discontinuity frequency on ground vibrations produced from bench blasting: a case study. Soil Dyn Earthq Eng 28(9):686–694

Simangunsong GM, Wahyudi S (2015) Effect of bedding plane on prediction blast-induced ground vibration in open pit coal mines. Int J Rock Mech Min Sci 79:1–8

Ghasemi E, Ataei M, Hashemolhosseini H (2013) Development of a fuzzy model for predicting ground vibration caused by rock blasting in surface mining. J Vib Control 19(5):755–770

Verma A, Singh T (2013) Comparative study of cognitive systems for ground vibration measurements. Neural Comput Appl 22(1):341–350

Monjezi M, Hasanipanah M, Khandelwal M (2013) Evaluation and prediction of blast-induced ground vibration at Shur River Dam, Iran, by artificial neural network. Neural Comput Appl 22(7–8):1637–1643

Hajihassani M, Armaghani DJ, Marto A, Mohamad ET (2015) Ground vibration prediction in quarry blasting through an artificial neural network optimized by imperialist competitive algorithm. Bull Eng Geol Environ 74(3):873–886

Hasanipanah M, Armaghani DJ, Khamesi H, Amnieh HB, Ghoraba S (2016) Several non-linear models in estimating air-overpressure resulting from mine blasting. Eng Comput 32(3):441–455

Hasanipanah M, Monjezi M, Shahnazar A, Armaghani DJ, Farazmand A (2015) Feasibility of indirect determination of blast induced ground vibration based on support vector machine. Measurement 75:289–297

Duan Y, Edwards JS, Dwivedi YK (2019) Artificial intelligence for decision making in the era of Big Data–evolution, challenges and research agenda. Int J Inf Manag 48:63–71

Dwivedi YK, Hughes L, Ismagilova E, Aarts G, Coombs C, Crick T, Duan Y, Dwivedi R, Edwards J, Eirug A (2019) Artificial Intelligence (AI): multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int J Inf Manag 101994

Jarrahi MH (2018) Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Bus Horiz 61(4):577–586

Kordon AK (2020) Applying data science: how to create value with artificial intelligence. Springer, New York

Wu F, Lu C, Zhu M, Chen H, Zhu J, Yu K, Li L, Li M, Chen Q, Li X (2020) Towards a new generation of artificial intelligence in China. Nat Mach Intell 2(6):312–316

Tran HQ, Bui NX, Nguyen H, Nguyen TA, Nguyen LQ (2020) Applicable posssibility of advanced technologies and equipment in surface mines of Vietnam. J Min Earth Sci 61(5):16–32

Wirtz BW, Weyerer JC, Geyer C (2019) Artificial intelligence and the public sector—applications and challenges. Int J Public Admin 42(7):596–615

Asteris PG, Mokos VG (2019) Concrete compressive strength using artificial neural networks. Neural Comput Appl 1–20

Asteris PG, Argyropoulos I, Cavaleri L, Rodrigues H, Varum H, Thomas J, Lourenço PB (2018) Masonry compressive strength prediction using artificial neural networks. In: International conference on transdisciplinary multispectral modeling and cooperation for the preservation of cultural Heritage, Springer, New York, pp 200–224

Asteris PG, Ashrafian A, Rezaie-Balf M (2019) Prediction of the compressive strength of self-compacting concrete using surrogate models. Comput Concr 24(2):137–150

Tran BD, Vu TD, Pham VV, Nguyen TA, Nguyen AD, Le GHT (2020) Developing a mathematical model to optimize long - term quarrying planing for limestone quarries producing cement in Vietnam. J Min Earth Sci 61(5):58–70

Hajihassani M, Armaghani DJ, Monjezi M, Mohamad ET, Marto A (2015) Blast-induced air and ground vibration prediction: a particle swarm optimization-based artificial neural network approach. Environ Earth Sci 74(4):2799–2817

Armaghani DJ, Momeni E, Abad SVANK, Khandelwal M (2015) Feasibility of ANFIS model for prediction of ground vibrations resulting from quarry blasting. Environ Earth Sci 74(4):2845–2860

Amiri M, Amnieh HB, Hasanipanah M, Khanli LM (2016) A new combination of artificial neural network and K-nearest neighbors models to predict blast-induced ground vibration and air-overpressure. Eng Comput 32(4):631–644

Hasanipanah F, Amnieh A, Monjezi (2017) Forecasting blast-induced ground vibration developing a CART model. Eng Comput 33(2):307–316

Taheri K, Hasanipanah M, Golzar SB, Majid MZA (2017) A hybrid artificial bee colony algorithm-artificial neural network for forecasting the blast-produced ground vibration. Eng Comput 33(3):689–700

Sheykhi H, Bagherpour R, Ghasemi E, Kalhori H (2018) Forecasting ground vibration due to rock blasting: a hybrid intelligent approach using support vector regression and fuzzy C-means clustering. Eng Comput 34(2):357–365

Shang Y, Nguyen H, Bui X-N, Tran Q-H, Moayedi H (2019) A novel artificial intelligence approach to predict blast-induced ground vibration in open-pit mines based on the firefly algorithm and artificial neural network. Nat Resour Res 29(2):723–737. https://doi.org/10.1007/s11053-019-09503-7

Ding X, Hasanipanah M, Rad HN, Zhou W (2020) Predicting the blast-induced vibration velocity using a bagged support vector regression optimized with firefly algorithm. Eng Comput 1–12

Yang H, Hasanipanah M, Tahir MM, Bui DT (2019) Intelligent prediction of blasting-induced ground vibration using ANFIS optimized by GA and PSO. Nat Resour Res 29(2):739–750. https://doi.org/10.1007/s11053-019-09515-3

Fattahi H, Hasanipanah M (2020) Prediction of blast-induced ground vibration in a mine using relevance vector regression optimized by metaheuristic algorithms. Nat Resour Res 1–15

Zhang X, Nguyen H, Bui X-N, Tran Q-H, Nguyen D-A, Bui DT, Moayedi H (2019) Novel soft computing model for predicting blast-induced ground vibration in open-pit mines based on particle swarm optimization and XGBoost. Nat Resour Res 29(2):711–721. https://doi.org/10.1007/s11053-019-09492-7

Chen W, Hasanipanah M, Rad HN, Armaghani DJ, Tahir M (2019) A new design of evolutionary hybrid optimization of SVR model in predicting the blast-induced ground vibration. Eng Comput 1–17

Bui X-N, Jaroonpattanapong P, Nguyen H, Tran Q-H, Long NQ (2019) A novel hybrid model for predicting blast-induced ground vibration based on k-nearest neighbors and particle Swarm optimization. Sci Rep 9(1):1–14

Fang Q, Nguyen H, Bui X-N, Nguyen-Thoi T (2019) Prediction of blast-induced ground vibration in open-pit mines using a new technique based on imperialist competitive algorithm and M5Rules. Nat Resour Res 29(2):791–806. https://doi.org/10.1007/s11053-019-09577-3

Azimi Y, Khoshrou SH, Osanloo M (2019) Prediction of blast induced ground vibration (BIGV) of quarry mining using hybrid genetic algorithm optimized artificial neural network. Measurement 147:106874

Xue X (2019) Neuro-fuzzy based approach for prediction of blast-induced ground vibration. Appl Acoust 152:73–78

Nguyen H, Bui X-N, Tran Q-H, Mai N-L (2019) A new soft computing model for estimating and controlling blast-produced ground vibration based on hierarchical K-means clustering and cubist algorithms. Appl Soft Comput 77:376–386. https://doi.org/10.1016/j.asoc.2019.01.042

Ding Z, Nguyen H, Bui X-N, Zhou J, Moayedi H (2019) Computational intelligence model for estimating intensity of blast-induced ground vibration in a mine based on imperialist competitive and extreme gradient boosting algorithms. Nat Resour Res. https://doi.org/10.1007/s11053-019-09548-8

Nguyen H, Drebenstedt C, Bui X-N, Bui DT (2019) Prediction of blast-induced ground vibration in an open-pit mine by a novel hybrid model based on clustering and artificial neural network. Nat Resour Res 29(2):691–709

Yu Z, Shi X, Zhou J, Chen X, Qiu X (2020) Effective assessment of blast-induced ground vibration using an optimized random forest model based on a harris hawks optimization algorithm. Appl Sci 10(4):1403

Friedman JH (1991) Multivariate adaptive regression splines. Ann Stat 1–67

Friedman JH, Roosen CB (1995) An introduction to multivariate adaptive regression splines. Sage Publications Sage CA, Thousand Oaks, CA

Lee T-S, Chiu C-C, Chou Y-C, Lu C-J (2006) Mining the customer credit using classification and regression tree and multivariate adaptive regression splines. Comput Stat Data Anal 50(4):1113–1130

Zhou Y, Leung H (2007) Predicting object-oriented software maintainability using multivariate adaptive regression splines. J Syst Softw 80(8):1349–1361

Zhang W, Goh ATC (2013) Multivariate adaptive regression splines for analysis of geotechnical engineering systems. Comput Geotech 48:82–95

Anemangely M, Ramezanzadeh A, Tokhmechi B, Molaghab A, Mohammadian A (2018) Drilling rate prediction from petrophysical logs and mud logging data using an optimized multilayer perceptron neural network. J Geophys Eng 15(4):1146–1159

Çaylak Ç, Kaftan İ (2014) Determination of near-surface structures from multi-channel surface wave data using multi-layer perceptron neural network (MLPNN) algorithm. Acta Geophys 62(6):1310–1327. https://doi.org/10.2478/s11600-014-0207-8

Ewees AA, Elaziz MA, Alameer Z, Ye H, Jianhua Z (2020) Improving multilayer perceptron neural network using chaotic grasshopper optimization algorithm to forecast iron ore price volatility. Resour Policy 65:101555

Heidari AA, Faris H, Aljarah I, Mirjalili S (2019) An efficient hybrid multilayer perceptron neural network with grasshopper optimization. Soft Comput 23(17):7941–7958

Rosa JP, Guerra DJ, Horta NC, Martins RM, Lourenço NC (2020) Overview of artificial neural networks. In: Using artificial neural networks for analog integrated circuit design automation, Springer, pp 21–44

Naghsh-Nilchi AR, Aghashahi M (2010) Epilepsy seizure detection using eigen-system spectral estimation and Multiple Layer Perceptron neural network. Biomed Signal Process Control 5(2):147–157

Nguyen H, Bui X-N, Bui H-B, Mai N-L (2018) A comparative study of artificial neural networks in predicting blast-induced airblast overpressure at Deo Nai open-pit coal mine. Vietnam Neural Comput Appl 32(8):3939–3955. https://doi.org/10.1007/s00521-018-3717-5

Ouadfeul S-A, Aliouane L (2015) Total organic carbon prediction in shale gas reservoirs from well logs data using the multilayer perceptron neural network with Levenberg Marquardt training algorithm: application to Barnett Shale. Arab J Sci Eng 40(11):3345–3349

Ansari H, Zarei M, Sabbaghi S, Keshavarz P (2018) A new comprehensive model for relative viscosity of various nanofluids using feedforward back-propagation MLP neural networks. Int Commun Heat Mass Transf 91:158–164

Hamzehie M, Mazinani S, Davardoost F, Mokhtare A, Najibi H, Van der Bruggen B, Darvishmanesh S (2014) Developing a feed forward multilayer neural network model for prediction of CO2 solubility in blended aqueous amine solutions. J Nat Gas Sci Eng 21:19–25

Kennedy J, Eberhart R (1995) Particle swarm optimization (PSO). In: Proc. IEEE International Conference on Neural Networks, Perth, Australia, pp 1942–1948

Nguyen H, Bui H-B, Bui X-N (2020) Rapid determination of gross calorific value of coal using artificial neural network and particle swarm optimization. Nat Resour Res. https://doi.org/10.1007/s11053-020-09727-y

Esmin AA, Coelho RA, Matwin S (2015) A review on particle swarm optimization algorithm and its variants to clustering high-dimensional data. Artif Intell Rev 44(1):23–45

Kennedy J (2011) Particle swarm optimization. In: Encyclopedia of machine learning. Springer, New York, pp 760–766

Lalwani S, Sharma H, Satapathy SC, Deep K, Bansal JC (2019) A survey on parallel particle swarm optimization algorithms. Arab J Sci Eng 44(4):2899–2923

Poli R, Kennedy J, Blackwell T (2007) Particle swarm optimization. Swarm Intell 1(1):33–57

Shanthi M, Meenakshi DK, Ramesh PK (2018) Particle swarm optimization. In: Advances in swarm intelligence for optimizing problems in computer science, Chapman and Hall/CRC, pp 115–144

Shi Y (2001) Particle swarm optimization: developments, applications and resources. In: evolutionary computation, Proceedings of the 2001 Congress on, 2001. IEEE, pp 81–86

Wang D, Tan D, Liu L (2018) Particle swarm optimization algorithm: an overview. Soft Comput 22(2):387–408

Nguyen AD, Nhu BV, Tran BD, Pham HV, Nguyen TA (2020) Definition of amount explosive per blast for spillway at the Nui Mot lake - Binh Dinh province. J Min Earth Sci 61(5):117–124

Jimeno EL, Jimino CL, Carcedo A (1995) Drilling and blasting of rocks. CRC Press, New York

Faradonbeh RS, Hasanipanah M, Amnieh HB, Armaghani DJ, Monjezi M (2018) Development of GP and GEP models to estimate an environmental issue induced by blasting operation. Environ Monit Assess 190(6):351

Hasanipanah M, Amnieh HB, Khamesi H, Armaghani DJ, Golzar SB, Shahnazar A (2018) Prediction of an environmental issue of mine blasting: an imperialistic competitive algorithm-based fuzzy system. Int J Environ Sci Technol 15(3):551–560

Singh T (2004) Artificial neural network approach for prediction and control of ground vibrations in mines. Min Technol 113(4):251–256

Afeni TB, Osasan SK (2009) Assessment of noise and ground vibration induced during blasting operations in an open pit mine—a case study on Ewekoro limestone quarry. Nigeria Min Sci Technol (China) 19(4):420–424

Singh T, Singh V (2005) An intelligent approach to prediction and control ground vibration in mines. Geotech Geol Eng 23(3):249–262

Alexopoulos EC (2010) Introduction to multivariate regression analysis. Hippokratia 14(Suppl 1):23

Box GE, Cox DR (1964) An analysis of transformations. J Roy Stat Soc: Ser B (Methodol) 26(2):211–243

Maiorov V, Pinkus A (1999) Lower bounds for approximation by MLP neural networks. Neurocomputing 25(1–3):81–91

Bandyopadhyay G, Chattopadhyay S (2007) Single hidden layer artificial neural network models versus multiple linear regression model in forecasting the time series of total ozone. Int J Environ Sci Technol 4(1):141–149

Guliyev NJ, Ismailov VE (2018) On the approximation by single hidden layer feedforward neural networks with fixed weights. Neural Netw 98:296–304

Belciug S, Gorunescu F (2018) Learning a single-hidden layer feedforward neural network using a rank correlation-based strategy with application to high dimensional gene expression and proteomic spectra datasets in cancer detection. J Biomed Inf 83:159–166

Acknowledgements

This paper was supported by the Ministry of Education and Training (MOET) in Viet Nam under grant number B2020-MDA-16. The authors also thank the Center for Mining, Electro-Mechanical research of Hanoi University of Mining and Geology (HUMG), Hanoi, Vietnam, and the research team of Innovations for Sustainable and Responsible Mining (ISRM) of HUMG.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Nguyen, H., Bui, XN., Tran, QH. et al. Prediction of ground vibration intensity in mine blasting using the novel hybrid MARS–PSO–MLP model. Engineering with Computers 38 (Suppl 5), 4007–4025 (2022). https://doi.org/10.1007/s00366-021-01332-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-021-01332-8