Abstract

We study the expressivity of deep neural networks. Measuring a network’s complexity by its number of connections or by its number of neurons, we consider the class of functions for which the error of best approximation with networks of a given complexity decays at a certain rate when increasing the complexity budget. Using results from classical approximation theory, we show that this class can be endowed with a (quasi)-norm that makes it a linear function space, called approximation space. We establish that allowing the networks to have certain types of “skip connections” does not change the resulting approximation spaces. We also discuss the role of the network’s nonlinearity (also known as activation function) on the resulting spaces, as well as the role of depth. For the popular ReLU nonlinearity and its powers, we relate the newly constructed spaces to classical Besov spaces. The established embeddings highlight that some functions of very low Besov smoothness can nevertheless be well approximated by neural networks, if these networks are sufficiently deep.

1 Introduction

Today, we witness a worldwide triumphant march of deep neural networks, impacting not only various application fields, but also areas in mathematics such as inverse problems. Originally, neural networks were developed by McCulloch and Pitts [48] in 1943 to introduce a theoretical framework for artificial intelligence. At that time, however, the limited amount of data and the lack of sufficient computational power only allowed the training of shallow networks, that is, networks with only a few layers of neurons, which did not lead to the anticipated results. The current age of big data and the significantly increased computer performance now make the application of deep learning algorithms feasible, leading to the successful training of very deep neural networks. For this reason, neural networks have seen an impressive comeback. The list of important applications in public life ranges from speech recognition systems on cell phones and self-driving cars to automatic diagnoses in healthcare. For applications in science, one can witness a similarly strong impact of deep learning methods in research areas such as quantum chemistry [61] and molecular dynamics [47], often making it possible to solve problems that were previously deemed out of reach. This phenomenon is manifested similarly in certain fields of mathematics, foremost in inverse problems [2, 10], but lately also, for instance, in the numerical analysis of partial differential equations [8].

Yet, most of the existing research related to deep learning is empirically driven and a profound and comprehensive mathematical foundation is still missing, in particular for the previously mentioned applications. This poses a significant challenge not only for mathematics itself, but in general for the “safe” applicability of deep neural networks [22].

A deep neural network in mathematical terms is a tuple

\[ \Phi = \big ( (T_1, \alpha _1), \dots , (T_L, \alpha _L) \big ) \tag{1.1} \]

consisting of affine-linear maps \(T_\ell : {\mathbb {R}}^{N_{\ell - 1}} \rightarrow {\mathbb {R}}^{N_\ell }\) (hence \(T_\ell (x) = A_\ell \, x + b_\ell \) for appropriate matrices \(A_\ell \) and vectors \(b_\ell \), often with a convolutional or Toeplitz structure) and of nonlinearities \(\alpha _{\ell }: {\mathbb {R}}^{N_{\ell }} \rightarrow {\mathbb {R}}^{N_{\ell }}\) that typically encompass componentwise rectification, possibly followed by a pooling operation.

The tuple in (1.1) encodes the architectural components of the neural network, where L denotes the number of layers of the network, while \(L-1\) is the number of hidden layers. The highly structured function \({\mathtt {R}}(\Phi )\) implemented by such a network \(\Phi \) is then defined by applying the different maps in an iterative (layer-wise) manner; precisely,

\[ {\mathtt {R}}(\Phi ) : {\mathbb {R}}^{N_0} \rightarrow {\mathbb {R}}^{N_L}, \qquad {\mathtt {R}}(\Phi ) := \alpha _L \circ T_L \circ \cdots \circ \alpha _1 \circ T_1 . \]

We call this function the realization of the deep neural network \(\Phi \). It is worth pointing out that most of the literature calls this function itself the neural network; one can, however—depending on the choice of the activation functions—imagine the same function being realized by different architectural components, so that it would not make sense, for instance, to speak of the number of layers of \({\mathtt {R}}(\Phi )\); this is only well defined when we talk about \(\Phi \) itself. The complexity of a neural network can be captured by various numbers such as the depth L, the number of hidden neurons \(N(\Phi ) = \sum _{\ell =1}^{L-1} N_{\ell }\), or the number of connections (also called the connectivity, or the number of weights) given by \(W(\Phi ) = \sum _{\ell = 1}^{L} \Vert A_\ell \Vert _{\ell ^0}\), where \(\Vert A_\ell \Vert _{\ell ^0}\) denotes the number of nonzero entries of the matrix \(A_\ell \).
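As a concrete illustration of these quantities, the following minimal Python sketch represents a network by its list of affine maps and evaluates its realization together with \(N(\Phi )\) and \(W(\Phi )\). It assumes that every hidden layer applies the ReLU componentwise and that the last layer is purely affine; the names `affine`, `realize`, `num_hidden_neurons` and `num_connections` are hypothetical helpers introduced only for this illustration.

```python
# Sketch only: a network is a list of layers (A, b), where A is a list of rows
# (one row per output neuron) and b is the bias vector of T(x) = A x + b.
# Assumption: hidden layers apply the ReLU componentwise; the last layer is affine.

def affine(A, b, x):
    """Apply the affine-linear map x -> A x + b."""
    return [sum(a * xi for a, xi in zip(row, x)) + bi for row, bi in zip(A, b)]


def realize(layers, x):
    """Evaluate the realization R(Phi) at x by applying the layers in order."""
    for depth, (A, b) in enumerate(layers, start=1):
        x = affine(A, b, x)
        if depth < len(layers):  # hidden layer: apply the nonlinearity
            x = [max(0.0, t) for t in x]
    return x


def num_hidden_neurons(layers):
    """N(Phi): sum of the dimensions N_1, ..., N_{L-1} of the hidden layers."""
    return sum(len(b) for _, b in layers[:-1])


def num_connections(layers):
    """W(Phi): number of nonzero matrix entries over all layers (biases not counted)."""
    return sum(1 for A, _ in layers for row in A for a in row if a != 0)


# Example with L = 2 layers: input dimension 1, two hidden neurons, output dimension 1.
# Its realization is x -> relu(x) + relu(-x), that is, the absolute value.
phi = [
    ([[1.0], [-1.0]], [0.0, 0.0]),  # T_1 : R^1 -> R^2
    ([[1.0, 1.0]], [0.0]),          # T_2 : R^2 -> R^1
]
```

Here `len(phi)` plays the role of the depth L, while `num_hidden_neurons(phi)` and `num_connections(phi)` are properties of the tuple `phi` and not of the function it realizes.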

From a mathematical perspective, the central task of a deep neural network is to approximate a function \(f : {\mathbb {R}}^{N_0} \rightarrow {\mathbb {R}}^{N_L}\), which, for instance, encodes a classification problem. Given a training data set \(\big ( x_i,f(x_i) \big )_{i=1}^m\), a loss function \({\mathcal {L}} : {\mathbb {R}}^{N_L} \times {\mathbb {R}}^{N_L} \rightarrow {\mathbb {R}}\), and a regularizer \({\mathcal {P}}\), which imposes, for instance, sparsity conditions on the weights of the neural network \(\Phi \), solving the optimization problem

\[ \min _{(T_\ell )_{\ell }} \; \sum _{i=1}^{m} {\mathcal {L}} \big ( {\mathtt {R}}(\Phi )(x_i), f(x_i) \big ) + {\mathcal {P}} \big ( (T_\ell )_{\ell } \big ) \tag{1.2} \]

typically through a variant of stochastic gradient descent, yields a learned neural network \({\widehat{\Phi }}\). The objective is to achieve \({\mathtt {R}}({\widehat{\Phi }}) \approx f\), which is only possible if the function f can indeed be well approximated by (the realization of) a network with the prescribed architecture. Various theoretical results have already been published to establish the ability of neural networks—often with specific architectural constraints—to approximate functions from certain function classes; this is referred to as analyzing the expressivity of neural networks. However, the fundamental question asking which function spaces are truly natural for deep neural networks has never been comprehensively addressed. Such an approach may open the door to a novel viewpoint and lead to a refined understanding of the expressive power of deep neural networks.

In this paper, we introduce approximation spaces associated with neural networks. This leads to an extensive theoretical framework for studying the expressivity of deep neural networks, allowing us also to address questions such as the impact of the depth and of the activation function, or of so-called (and widely used) skip connections on the approximation power of deep neural networks.

1.1 Expressivity of Deep Neural Networks

The first theoretical results concerning the expressivity of neural networks date back to the early 90s, at that time focusing on shallow networks, mainly in the context of the universal approximation theorem [16, 35, 36, 43]. The breakthrough result of the ImageNet competition in 2012 [38] and the ensuing worldwide success story of neural networks have brought renewed interest to the study of neural networks, now with an emphasis on deep networks. The surprising effectiveness of such networks in applications has motivated the study of the effect of depth on the expressivity of these networks. Questions related to the learning phase are of a different nature, focusing on aspects of statistical learning and optimization, and hence constitute a different research field.

Let us recall some of the key contributions in the area of expressivity, in order to put our results into perspective. The universal approximation theorems by Hornik [35] and Cybenko [16] can be counted as a first highlight, stating that neural networks with only one hidden layer can approximate continuous functions on compact sets arbitrarily well. Examples of further work in this early stage, hence focusing on networks with a single hidden layer, are approximation error bounds in terms of the number of neurons for functions with bounded first Fourier moments [5, 6], the failure of those networks to provide localized approximations [13], a fundamental lower bound on approximation rates [12, 18], and the approximation of smooth/analytic functions [50, 52]. Some of the early contributions already study networks with multiple hidden layers, such as [29] for approximating continuous functions, and [53] for approximating functions together with their derivatives. Also, [13] which shows in certain instances that deep networks can perform better than single-hidden-layer networks can be counted toward this line of research. For a survey of those early results, we refer to [24, 57].

More recent work focuses predominantly on the analysis of the effect of depth. Some examples (again without any claim of completeness) are [23], in which a function is constructed that cannot be expressed by a small two-layer network but is implemented by a three-layer network of low complexity, and [51], which considers so-called compositional functions and shows that such functions can be approximated by neural networks without suffering from the curse of dimensionality. A different viewpoint is taken in [14, 15], which address a problem similar to that of [51] but attack it using results on tensor decompositions. Another line of research studies the approximation rate when approximating certain function classes by neural networks of growing complexity [9, 49, 55, 62, 68].

1.2 The Classical Notion of Approximation Spaces

In classical approximation theory, the notion of approximation spaces refers to (quasi)-normed spaces that are defined by their elements satisfying a specific decay of a certain approximation error; see, for instance, [21]. In this introduction, we will merely sketch the key construction and properties; we refer to Sect. 3 for more details.

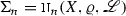

Let X be a quasi-Banach space equipped with the quasi-norm \(\Vert \cdot \Vert _{X}\). Furthermore, here, as in the rest of the paper, let us denote by \({\mathbb {N}}= \{1,2,\ldots \}\) the set of natural numbers and write \({\mathbb {N}}_{0} = \{0\} \cup {\mathbb {N}}\), \({\mathbb {N}}_{\ge m} = \{n \in {\mathbb {N}}, n \ge m\}\). For a prescribed family \(\Sigma = (\Sigma _{n})_{n \in {\mathbb {N}}_{0}}\) of subsets \(\Sigma _{n} \subset X\), one aims to classify functions \(f \in X\) by the decay (as \(n \rightarrow \infty \)) of the error of best approximation by elements from \(\Sigma _{n}\), given by \(E(f,\Sigma _{n})_X :=\inf _{g \in \Sigma _{n}} \Vert f - g \Vert _{X}\). The desired rate of decay of this error is prescribed by a discrete weighted \(\ell ^q\)-norm, where the weight depends on the parameter \(\alpha > 0\). For \(q = \infty \), this leads to the class

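\[ A^{\alpha }_{\infty }(X,\Sigma ) := \Big \{ f \in X \,:\, \sup _{n \ge 1} \, \big [ n^{\alpha } \cdot E(f,\Sigma _{n-1})_X \big ] < \infty \Big \} . \]

Thus, intuitively speaking, this class consists of those elements of X for which the error of best approximation by elements of \(\Sigma _{n}\) decays at least as \({\mathcal {O}}(n^{-\alpha })\) for \(n \rightarrow \infty \). This general philosophy also holds for the more general classes \(A^{\alpha }_{q}(X,\Sigma )\), \(q > 0\).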
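To make the decay condition concrete, here is a small Python sketch that evaluates the weighted quantity \(n^{\alpha } \cdot E(f,\Sigma _{n-1})_X\) on a finite list of errors. Several things are assumptions made for illustration: the indexing convention (the weight \(n^{\alpha }\) paired with the error for \(\Sigma _{n-1}\)) is the classical one, the error sequence is synthetic rather than computed from an actual approximation problem, and `weighted_sup` is a hypothetical helper name. A finite truncation can only suggest, not prove, whether the supremum is finite.

```python
def weighted_sup(errors, alpha):
    """Return the maximum over 1 <= n <= len(errors) of n**alpha * errors[n - 1],
    where errors[k] stands for the best-approximation error E(f, Sigma_k)."""
    return max(n ** alpha * errors[n - 1] for n in range(1, len(errors) + 1))


# Synthetic example: E(f, Sigma_k) = (k + 1)**(-2), i.e. the error decays like n**(-2).
errors = [(k + 1) ** -2.0 for k in range(1000)]

bounded = weighted_sup(errors, alpha=2.0)  # stays close to 1 for every truncation length
growing = weighted_sup(errors, alpha=3.0)  # grows linearly with the truncation length
```

With this synthetic sequence, the weighted errors remain bounded for \(\alpha = 2\) but not for \(\alpha = 3\), which is the behavior that separates the class with \(\alpha = 2\) from the one with \(\alpha = 3\).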

If the initial family \(\Sigma \) of subsets of X satisfies some quite natural conditions, more precisely \(\Sigma _0 = \{0\}\), each \(\Sigma _n\) is invariant under scaling, \(\Sigma _n \subset \Sigma _{n+1}\), and the union \(\bigcup _{n \in {\mathbb {N}}_0} \Sigma _n\) is dense in X, as well as the slightly more involved condition that \(\Sigma _n + \Sigma _n \subset \Sigma _{cn}\) for some fixed \(c \in {\mathbb {N}}\), then an abundance of results is available for the approximation classes \(A^{\alpha }_{q}(X,\Sigma )\). In particular, \(A^{\alpha }_{q}(X,\Sigma )\) turns out to be a proper linear function space, equipped with a natural (quasi)-norm. Particular highlights of the theory are various embedding and interpolation results between the different approximation spaces.

1.3 Our Contribution

We introduce a novel perspective on the study of expressivity of deep neural networks by introducing the associated approximation spaces and investigating their properties. This is in contrast with the usual approach of studying the approximation fidelity of neural networks on classical spaces. We utilize this new viewpoint for deriving novel results on, for instance, the impact of the choice of activation functions and the depth of the networks.

Given a so-called (nonlinear) activation function \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), a classical setting is to consider nonlinearities \(\alpha _\ell \) in (1.1) corresponding to a componentwise application of the activation function for each hidden layer \(1 \le \ell < L\), and \(\alpha _{L}\) being the identity. We refer to networks of this form as strict \(\varrho \)-networks. To introduce a framework of sufficient flexibility, we also consider nonlinearities where for each component either \(\varrho \) or the identity is applied. We refer to such networks as generalized \(\varrho \)-networks; the realizations of such generalized networks include various function classes such as multilayer sparse linear transforms [41], networks with skip connections [54], ResNets [32, 67], or U-nets [58].

Let us now explain how we utilize this framework of approximation spaces. Our focus will be on approximation rates in terms of growing complexity of neural networks, which we primarily measure by their connectivity, since this connectivity is closely linked to the number of bytes needed to describe the network, and also to the number of floating point operations needed to apply the corresponding function to a given input. This is in line with recent results [9, 55, 68] which explicitly construct neural networks that reach an optimal approximation rate for very specific function classes, and in contrast to most of the existing literature focusing on complexity measured by the number of neurons. We also consider the approximation spaces for which the complexity of the networks is measured by the number of neurons.

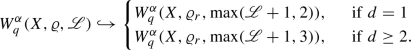

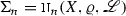

In addition to letting the number of connections or neurons tend to infinity while keeping the depth of the networks fixed, we also allow the depth to evolve with the number of connections or neurons. To achieve this, we link both by a non-decreasing depth-growth function \({\mathscr {L}}: {\mathbb {N}}\rightarrow {\mathbb {N}}\cup \{ \infty \}\), where we allow the possibility of not restricting the number of layers when \({\mathscr {L}}(n) = \infty \). We then consider the function families \(\mathtt {W}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}})\) (resp. \(\mathtt {N}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}})\)) made of all restrictions to a given subset \(\Omega \subseteq {\mathbb {R}}^{d}\) of functions which can be represented by (generalized) \(\varrho \)-networks with input/output dimensions d and k, at most n nonzero connection weights (resp. at most n hidden neurons), and at most \({\mathscr {L}}(n)\) layers. Finally, given a space X of functions \(\Omega \rightarrow {\mathbb {R}}^{k}\), we will use the sets \(\Sigma _n = \mathtt {W}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}}) \cap X\) (resp. \(\Sigma _n = \mathtt {N}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}}) \cap X\)) to define the associated approximation spaces. Typical choices for X are

\[ X = L_p(\Omega ;{\mathbb {R}}^{k}) \ \text {for } 0< p < \infty , \qquad \text {or} \qquad X = C_0(\Omega ;{\mathbb {R}}^{k}) \ \text {for } p = \infty , \]

with \(C_0(\Omega ;{\mathbb {R}}^{k})\) the space of uniformly continuous functions on \(\Omega \) that vanish at infinity, equipped with the supremum norm.

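Let us now give a coarse overview of our main results, which we are able to derive with our choice of approximation spaces based on the families \(\mathtt {W}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}})\) or \(\mathtt {N}_n(\Omega \rightarrow {\mathbb {R}}^{k},\varrho , {\mathscr {L}})\).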

1.3.1 Core properties of the novel Approximation Spaces

We first prove that each of these two families \(\Sigma = (\Sigma _n)_{n \in {\mathbb {N}}_0}\) satisfies the necessary requirements for the associated approximation spaces \(A^{\alpha }_{q}(X,\Sigma )\), which we denote by \(W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}})\) and \(N^{\alpha }_{q}(X,\varrho ,{\mathscr {L}})\), respectively, to be amenable to various results from approximation theory. Under certain conditions on \(\varrho \) and \({\mathscr {L}}\), Theorem 3.27 shows that these approximation spaces are even equipped with a convenient (quasi-)Banach space structure. The spaces \(W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}})\) and \(N^{\alpha }_{q}(X,\varrho ,{\mathscr {L}})\) are nested (Lemma 3.9) and do not generally coincide (Lemma 3.10).

To prepare the ground for the analysis of the impact of depth, we then prove nestedness with respect to the depth growth function. In slightly more detail, we identify a partial order \(\preceq \) and an equivalence relation \(\sim \) on depth growth functions such that the following holds (Lemma 3.12 and Theorem 3.13):

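(1) If \({\mathscr {L}}_{1} \preceq {\mathscr {L}}_{2}\), then the connection-based approximation spaces satisfy

\[ W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}}_{1}) \subset W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}}_{2}) \]

for any \(\alpha \), q, X and \(\varrho \); and

(2) if \({\mathscr {L}}_{1} \sim {\mathscr {L}}_{2}\), then

\[ W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}}_{1}) = W^{\alpha }_{q}(X,\varrho ,{\mathscr {L}}_{2}) \]

for any \(\alpha \), q, X and \(\varrho \).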

The same nestedness results hold for the neuron-based spaces \(N^{\alpha }_{q}(X,\varrho ,{\mathscr {L}})\). Perhaps surprisingly, under mild conditions on the activation function \(\varrho \), the approximation classes for strict and generalized \(\varrho \)-networks are in fact identical, so that their expressivities coincide (see Theorem 3.8).

1.3.2 Approximation Spaces Associated with ReLU Networks

The rectified linear unit (ReLU) and its powers of exponent \(r \in {\mathbb {N}}\) (in spline theory better known under the name of truncated powers [21, Chapter 5, Equation (1.1)]) are defined by

\[ \varrho _{r} : {\mathbb {R}}\rightarrow {\mathbb {R}}, \qquad x \mapsto (x_{+})^{r} , \]

where \(x_{+} = \max \{ 0,x \} = \varrho _{1}(x)\), with the ReLU activation function being \(\varrho _{1}\). Considering these activation functions is motivated practically by the wide use of the ReLU [42], as well as theoretically by the existence [45, Theorem 4] of pathological activation functions giving rise to trivial (too rich) approximation spaces that coincide with the whole space X, for all \(\alpha ,q\). In contrast, the classes associated with \(\varrho _{r}\)-networks are non-trivial for \(p \in (0,\infty ]\) (Theorem 4.16). Moreover, strict and generalized \(\varrho _{r}\)-networks yield identical approximation classes for any subset \(\Omega \subseteq {\mathbb {R}}^d\) of nonzero measure (even unbounded), for any \(p \in (0,\infty ]\) (Theorem 4.2). Furthermore, for any \(r \in {\mathbb {N}}\), these approximation classes are (quasi-)Banach spaces, provided that the condition stated in Theorem 4.2 holds.

The expressivity of networks with more general activation functions can be related to that of \(\varrho _{r}\)-networks (see Theorem 4.7) in the following sense: If \(\varrho \) is continuous and piecewise polynomial of degree at most r, then its approximation spaces are contained in those of \(\varrho _{r}\)-networks. In particular, if \(\Omega \) is bounded or if \({\mathscr {L}}\) satisfies a certain growth condition, then for \(s,r \in {\mathbb {N}}\) such that \(1 \le s \le r\)

\[ W^{\alpha }_{q}(X,\varrho _{s},{\mathscr {L}}) \subset W^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}}) \qquad \text {and} \qquad N^{\alpha }_{q}(X,\varrho _{s},{\mathscr {L}}) \subset N^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}}) . \]

Also, if \(\varrho \) is a spline of degree r and not a polynomial, then its approximation spaces match those of \(\varrho _{r}\) on bounded \(\Omega \). In particular, on a bounded domain \(\Omega \), the spaces associated with the leaky ReLU [44], the parametric ReLU [33], the absolute value (as, e.g., in scattering transforms [46]), and the soft-thresholding activation function [30] are all identical to the spaces associated with the ReLU.
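Some intuition for why these activation functions lead to the same spaces as the ReLU comes from the fact that each of them is a simple combination of ReLUs. The following Python sketch checks these elementary identities numerically. The identities are standard facts and are not taken from the proofs of this paper; the slope `a`, the threshold `lam` and all function names are hypothetical choices made for illustration (the parametric ReLU corresponds to `leaky_relu` with a learned slope).

```python
def relu_power(x, r=1):
    """Truncated power (x_+)**r; r = 1 gives the ReLU."""
    return max(0.0, x) ** r


def leaky_relu(x, a=0.1):
    """Leaky ReLU with slope a on the negative half-line."""
    return x if x >= 0 else a * x


def soft_threshold(x, lam=0.5):
    """Soft-thresholding with threshold lam >= 0."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


# The same three functions written as combinations of at most two ReLUs.
def leaky_relu_via_relu(x, a=0.1):
    return relu_power(x) - a * relu_power(-x)


def abs_via_relu(x):
    return relu_power(x) + relu_power(-x)


def soft_threshold_via_relu(x, lam=0.5):
    return relu_power(x - lam) - relu_power(-x - lam)
```

Each of these functions can therefore be produced by a single hidden ReLU layer with two neurons, which is the elementary observation behind replacing one activation function by the other at the cost of a constant factor in the number of neurons.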

Studying the relation of approximation spaces of \(\varrho _{r}\)-networks for different r, we derive the following statement as a corollary (Corollary 4.14) of Theorem 4.7: Approximation spaces of \(\varrho _{2}\)-networks and \(\varrho _{r}\)-networks are equal for \(r \ge 2\) when \({\mathscr {L}}\) satisfies a certain growth condition, showing a saturation from degree 2 on. Given this growth condition, for any \(r \ge 2\), we obtain the following diagram:

1.3.3 Relation to Classical Function Spaces

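Focusing still on ReLU networks, we show that ReLU networks of bounded depth approximate \(C_{c}^{3}(\Omega )\) functions at bounded rates (Theorem 4.17) in the sense that, for open \(\Omega \subset {\mathbb {R}}^d\) and \(L := \sup _{n} {\mathscr {L}}(n) < \infty \), we prove

\[ N^{\alpha }_{q}(X,\varrho _{1},{\mathscr {L}}) \cap C_{c}^{3}(\Omega ) = \{0\} \ \ \text {if } \alpha > 2 (L-1), \qquad W^{\alpha }_{q}(X,\varrho _{1},{\mathscr {L}}) \cap C_{c}^{3}(\Omega ) = \{0\} \ \ \text {if } \alpha > 2 \lfloor L/2 \rfloor . \]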

As classical function spaces (e.g., Sobolev, Besov) intersect \(C^{3}_{c}(\Omega )\) non-trivially, they can only embed into the neuron-based space \(N^{\alpha }_{q}(X,\varrho _{1},{\mathscr {L}})\) or the connection-based space \(W^{\alpha }_{q}(X,\varrho _{1},{\mathscr {L}})\) if the networks are somewhat deep (\(L \ge 1 + \alpha /2\) or \(\lfloor L/2 \rfloor \ge \alpha /2\), respectively), giving some insight about the impact of depth on the expressivity of neural networks.

We then study relations to the classical Besov spaces \({B^{s}_{\sigma ,\tau } (\Omega ) := B^s_{\tau }(L_\sigma (\Omega ;{\mathbb {R}}))}\). We establish both direct estimates (that is, embeddings of certain Besov spaces into approximation spaces of \(\varrho _{r}\)-networks) and inverse estimates (that is, embeddings of the approximation spaces into certain Besov spaces).

The main result in the regime of direct estimates is Theorem 5.5 showing that if \(\Omega \subset {\mathbb {R}}^d\) is a bounded Lipschitz domain, if \(r \ge 2\), and if \(L := \sup _{n \in {\mathbb {N}}} {\mathscr {L}}(n)\) satisfies \(L \ge 2 + 2 \lceil \log _2 d \rceil \), then

For large input dimensions d, however, the condition \(L \ge 2 + 2 \lceil \log _2 d \rceil \) is only satisfied for quite deep networks. In the case of more shallow networks with \(L \ge 3\), the embedding (1.4) still holds (for any \(r \in {\mathbb {N}}\)), but is only established for \(0< \alpha < \tfrac{\min \{ 1, p^{-1} \}}{d}\). Finally, in case of \(d = 1\), the embedding (1.4) is valid as soon as \(L \ge 2\) and \(r \ge 1\).

Regarding inverse estimates, we first establish limits on possible embeddings (Theorem 5.7). Precisely, for \(\Omega = (0,1)^{d}\) and any \(r \in {\mathbb {N}}\), \(\alpha ,s \in (0,\infty )\), and \(\sigma , \tau \in (0,\infty ]\) we have, with \(L := \sup _{n} {\mathscr {L}}(n) \ge 2\):

-

if \(\alpha < \lfloor L/2\rfloor \cdot \min \{ s, 2 \}\) then the connection-based space \(W^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\) does not embed into \(B^{s}_{\sigma ,\tau } (\Omega )\);

-

if \(\alpha < (L-1) \cdot \min \{ s, 2 \}\) then the neuron-based space \(N^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\) does not embed into \(B^{s}_{\sigma ,\tau } (\Omega )\).

A particular consequence is that for unbounded depth \(L = \infty \), none of the spaces \(W^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\), \(N^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\) can embed into any Besov space of strictly positive smoothness \(s>0\).

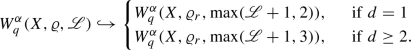

For scalar input dimension \(d=1\), an embedding into a Besov space with the relation \(\alpha = \lfloor L/2 \rfloor \cdot s\) (respectively, \(\alpha = (L-1) \cdot s\)) is indeed achieved for \(X = L_{p}( (0,1) )\), \(0< p < \infty \), \(r \in {\mathbb {N}}\), (Theorem 5.13):

1.4 Expected Impact and Future Directions

We anticipate our results to have an impact in a number of areas that we now describe together with possible future directions:

-

Theory of Expressivity. We introduce a general framework to study approximation properties of deep neural networks from an approximation space viewpoint. This opens the door to transferring various results from this part of approximation theory to deep neural networks. We believe that this conceptually new approach in the theory of expressivity will lead to further insight. One interesting topic for future investigation is, for instance, to derive a finer characterization of the spaces \(W^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\), \(N^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\), for \(r \in \{1,2\}\) (with some assumptions on \({\mathscr {L}}\)). Our framework is amenable to various extensions; for example, the restriction to convolutional weights would allow a study of approximation spaces of convolutional neural networks.

-

Statistical Analysis of Deep Learning Approximation spaces characterize fundamental trade-offs between the complexity of a network architecture and its ability to approximate (with proper choices of parameter values) a given function f. In statistical learning, a related question is to characterize which generalization bounds (also known as excess risk guarantees) can be achieved when fitting network parameters using m independent training samples. Some “oracle inequalities” [60] of this type have been recently established for idealized training algorithms minimizing the empirical risk (1.2). Our framework in combination with existing results on the VC dimension of neural networks [7] is expected to shed new light on such generalization guarantees through a generic approach encompassing various types of constraints on the considered architecture.

-

Design of Deep Neural Networks: Architectural Guidelines. Our results reveal how the expressive power of a network architecture may be impacted by certain choices such as the presence of certain types of skip connections or the selected activation functions. Thus, our results provide indications on how a network architecture may be adapted without hurting its expressivity, in order to get additional degrees of freedom to ease the task of optimization-based learning algorithms and improve their performance. For instance, while we show that generalized and strict networks have (under mild assumptions on the activation function) the same expressivity, we have not yet considered so-called ResNet architectures. Yet, the empirical observation that a ResNet architecture makes it easier to train deep networks [32] calls for a better understanding of the relations between the corresponding approximation classes.

1.5 Outline

The paper is organized as follows.

Section 2 introduces our notation regarding neural networks and provides basic lemmata concerning the “calculus” of neural networks. The classical notion of approximation spaces is reviewed in Sect. 3, and therein also specialized to the setting of approximation spaces of networks, with a focus on approximation in \(L_p\) spaces. This is followed by Sect. 4, which concentrates on \(\varrho \)-networks with \(\varrho \) the so-called ReLU or one of its powers. Finally, Sect. 5 studies embeddings between \(W^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\) (resp. \(N^{\alpha }_{q}(X,\varrho _{r},{\mathscr {L}})\)) and classical Besov spaces.

2 Neural Networks and Their Elementary Properties

In this section, we formally introduce the definition of neural networks used throughout this paper and discuss the elementary properties of the corresponding sets of functions.

2.1 Neural Networks and Their Main Characteristics

Definition 2.1

(Neural network) Let \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\). A (generalized) neural network with activation function \(\varrho \) (in short: a \(\varrho \)-network) is a tuple \(\big ( (T_1, \alpha _1), \dots , (T_L, \alpha _L) \big )\), where each \(T_\ell : {\mathbb {R}}^{N_{\ell - 1}} \rightarrow {\mathbb {R}}^{N_\ell }\) is an affine-linear map, \(\alpha _L = \mathrm {id}_{{\mathbb {R}}^{N_L}}\), and each function \(\alpha _\ell : {\mathbb {R}}^{N_\ell }\rightarrow {\mathbb {R}}^{N_\ell }\) for \(1 \le \ell < L\) is of the form \(\alpha _\ell = \bigotimes _{j = 1}^{N_\ell } \varrho _j^{(\ell )}\) for certain \(\varrho _j^{(\ell )} \in \{\mathrm {id}_{{\mathbb {R}}}, \varrho \}\). Here, we use the notation

\[ \bigotimes _{j=1}^{n} \theta _j : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^{n}, \quad (x_1, \dots , x_n) \mapsto \big ( \theta _1(x_1), \dots , \theta _n(x_n) \big ) \qquad \text {for } \theta _j : {\mathbb {R}}\rightarrow {\mathbb {R}}. \]

Definition 2.2

A \(\varrho \)-network as above is called strict if \(\varrho _j^{(\ell )} = \varrho \) for all \(1 \le \ell < L\) and \(1 \le j \le N_\ell \). \(\blacktriangleleft \)
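To illustrate Definitions 2.1 and 2.2, the sketch below evaluates a generalized network in which each hidden neuron applies either the activation function or the identity, and uses an identity neuron to carry the input forward as in a skip connection. It is a minimal Python illustration under stated assumptions: the ReLU is used for \(\varrho \), and the names `realize_generalized`, `is_strict`, `RHO` and `ID` are hypothetical. The strict variant relies on the standard identity \(x = \varrho _1(x) - \varrho _1(-x)\) for the ReLU.

```python
RHO, ID = "rho", "id"  # per-neuron choice of nonlinearity


def relu(t):
    return max(0.0, t)


def realize_generalized(layers, x, rho=relu):
    """Evaluate a generalized rho-network.

    layers: list of (A, b, kinds); kinds[j] is RHO or ID for hidden neuron j.
    The entry `kinds` of the last layer is ignored, since alpha_L is the identity.
    """
    for depth, (A, b, kinds) in enumerate(layers, start=1):
        x = [sum(a * xi for a, xi in zip(row, x)) + bi for row, bi in zip(A, b)]
        if depth < len(layers):
            x = [rho(t) if kind == RHO else t for t, kind in zip(x, kinds)]
    return x


def is_strict(layers):
    """A network is strict if every hidden neuron applies rho."""
    return all(kind == RHO for _, _, kinds in layers[:-1] for kind in kinds)


# Generalized network realizing x -> x + relu(x):
# the first hidden neuron applies rho, the second passes x through unchanged.
skip_net = [
    ([[1.0], [1.0]], [0.0, 0.0], [RHO, ID]),
    ([[1.0, 1.0]], [0.0], [ID]),
]

# Strict network with the same realization: the identity neuron is replaced
# by the pair relu(x), relu(-x), combined as relu(x) - relu(-x) = x.
strict_net = [
    ([[1.0], [1.0], [-1.0]], [0.0, 0.0, 0.0], [RHO, RHO, RHO]),
    ([[1.0, 1.0, -1.0]], [0.0], [ID]),
]
```

In this toy example, the strict network needs one more hidden neuron and two more nonzero weights than the generalized one, while both have the same depth and the same realization.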

Definition 2.3

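(Realization of a network) The realization \({\mathtt {R}}(\Phi )\) of a network \({\Phi = \big ( (T_1, \alpha _1), \dots , (T_L, \alpha _L) \big )}\) as above is the function

\[ {\mathtt {R}}(\Phi ) : {\mathbb {R}}^{N_0} \rightarrow {\mathbb {R}}^{N_L}, \qquad {\mathtt {R}}(\Phi ) := \alpha _L \circ T_L \circ \cdots \circ \alpha _1 \circ T_1 . \]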

The complexity of a neural network is characterized by several features.

Definition 2.4

(Depth, number of hidden neurons, number of connections) Consider a neural network \(\Phi = \big ( (T_1, \alpha _1), \dots , (T_L, \alpha _L) \big )\) with \(T_\ell : {\mathbb {R}}^{{N_{\ell - 1}}} \rightarrow {\mathbb {R}}^{N_\ell }\) for \(1 \le \ell \le L\).

-

The input-dimension of \(\Phi \) is \({d_{\mathrm {in}}}(\Phi ) := N_0 \in {\mathbb {N}}\), its output-dimension is \({d_{\mathrm {out}}}(\Phi ) := N_L \in {\mathbb {N}}\).

-

The depth of \(\Phi \) is \(L(\Phi ) := L \in {\mathbb {N}}\), corresponding to the number of (affine) layers of \(\Phi \).

We remark that with these notations, the number of hidden layers is \(L-1\).

-

The number of hidden neurons of \(\Phi \) is \(N(\Phi ) := \sum _{\ell = 1}^{L-1} N_\ell \in {\mathbb {N}}_0\);

-

The number of connections (or number of weights) of \(\Phi \) is \(W(\Phi ) := \sum _{\ell = 1}^{L} \Vert T_\ell \Vert _{\ell ^0} \in {\mathbb {N}}_0\), with \({\Vert T\Vert _{\ell ^0} := \Vert A\Vert _{\ell ^{0}}}\) for an affine map \(T: x \mapsto A x + b\) with A some matrix and b some vector; here, \(\Vert \cdot \Vert _{\ell ^{0}}\) counts the number of nonzero entries in a vector or a matrix. \(\blacktriangleleft \)

Remark 2.5

If \(W(\Phi ) = 0\), then \({\mathtt {R}}(\Phi )\) is constant (but not necessarily zero), and if \(N(\Phi )=0\), then \({\mathtt {R}}(\Phi )\) is affine-linear (but not necessarily zero or constant). \(\blacklozenge \)

Unlike the notation used in [9, 55], which considers \(W_0 (\Phi ) := \sum _{\ell = 1}^L (\Vert A^{(\ell )}\Vert _{\ell ^0} + \Vert b^{(\ell )}\Vert _{\ell ^0})\) where \({T_\ell \, x = A^{(\ell )} x + b^{(\ell )}}\), Definition 2.4 only counts the nonzero entries of the linear part of each \(T_\ell \), so that \(W(\Phi ) \le W_{0}(\Phi )\). Yet, as shown with the following lemma, both definitions are in fact equivalent up to constant factors if one is only interested in the represented functions. The proof is in Appendix A.1.
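The difference between the two counting conventions can be sketched in a few lines of Python. The representation of a network as a list of pairs `(A, b)` and the names `count_nonzero`, `connections` and `connections_with_biases` are assumptions made only for this illustration.

```python
def count_nonzero(values):
    """Number of nonzero entries in an iterable of numbers."""
    return sum(1 for v in values if v != 0)


def connections(layers):
    """W(Phi) as in Definition 2.4: nonzero entries of the matrices A only."""
    return sum(count_nonzero(a for row in A for a in row) for A, _ in layers)


def connections_with_biases(layers):
    """W_0(Phi): nonzero entries of the matrices A and of the bias vectors b."""
    return connections(layers) + sum(count_nonzero(b) for _, b in layers)


# Two affine layers, given as (matrix rows, bias vector).
layers = [
    ([[1.0, 0.0], [0.0, 2.0]], [0.5, 0.0]),
    ([[1.0, -1.0]], [3.0]),
]
```

For this example the matrices contribute four nonzero entries and the biases two more, so the bias-free count is the smaller of the two, in line with \(W(\Phi ) \le W_{0}(\Phi )\).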

Lemma 2.6

For any network \(\Phi \), there is a “compressed” network \({\widetilde{\Phi }}\) with \({\mathtt {R}}( {\widetilde{\Phi }} ) = {\mathtt {R}}(\Phi )\) such that \(L({\widetilde{\Phi }}) \le L(\Phi )\), \(N({\widetilde{\Phi }} \,) \le N(\Phi )\), and

The network \({\widetilde{\Phi }}\) can be chosen to be strict if \(\Phi \) is strict. \(\blacktriangleleft \)

Remark 2.7

The reason for distinguishing between a neural network and its associated realization is that for a given function \(f : {\mathbb {R}}^d\rightarrow {\mathbb {R}}^k\), there might be many different neural networks \(\Phi \) with \(f = {\mathtt {R}}(\Phi )\), so that talking about the number of layers, neurons, or weights of the function f is not well defined, whereas these notions certainly make sense for neural networks as defined above. A possible alternative would be to define, for example,

\[ L(f) := \min \big \{ L(\Phi ) \,:\, \Phi \text { a neural network with } {\mathtt {R}}(\Phi ) = f \big \} , \]

and analogously for N(f) and W(f); but this has the considerable drawback that it is not clear whether there is a neural network \(\Phi \) that simultaneously satisfies, e.g., \(L(\Phi ) = L(f)\) and \(W(\Phi ) = W(f)\). Because of these issues, we prefer to properly distinguish between a neural network and its realization.\(\blacklozenge \)

Remark 2.8

Some of the conventions in the above definitions might appear unnecessarily complicated at first sight, but they have been chosen after careful thought. In particular:

- Many neural network architectures used in practice use the same activation function for all neurons in a common layer. If this choice of activation function even stays the same across all layers (except for the last one), one obtains a strict neural network.

- In applications, network architectures very similar to our “generalized” neural networks are used; examples include residual networks (also called “ResNets,” see [32, 67]), and networks with skip connections [54].

- As expressed in Sect. 2.3, the class of realizations of generalized neural networks admits nice closure properties under linear combinations and compositions of functions. Similar closure properties do not hold in general for the class of strict networks.

- The introduction of generalized networks will be justified in Sect. 3.3, where we show that if one is only interested in approximation theoretic properties of the respective function class, then (at least on bounded domains \(\Omega \subset {\mathbb {R}}^d\) for “generic” \(\varrho \), but also on unbounded domains for the ReLU activation function and its powers) generalized networks and strict networks have identical properties. \(\blacklozenge \)

2.2 Relations Between Depth, Number of Neurons, and Number of Connections

We now investigate the relationships between the quantities describing the complexity of a neural network \(\Phi = \big ( (T_1,\alpha _1), \dots , (T_L, \alpha _L) \big )\) with \(T_\ell : {\mathbb {R}}^{N_{\ell - 1}} \rightarrow {\mathbb {R}}^{N_\ell }\).

Given the number of (hidden) neurons of the network, the other quantities can be bounded. Indeed, by definition we have \(N_\ell \ge 1\) for all \(1 \le \ell \le L-1\); therefore, the number of layers satisfies

$$\begin{aligned} L(\Phi ) = 1 + \sum _{\ell = 1}^{L-1} 1 \le 1 + \sum _{\ell = 1}^{L-1} N_\ell = 1 + N(\Phi ). \end{aligned} \tag{2.1}$$

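Similarly, as \(\Vert T_{\ell }\Vert _{\ell ^{0}} \le N_{\ell -1} N_{\ell }\) for each \(1 \le \ell \le L\), we have

$$\begin{aligned} W(\Phi ) = \sum _{\ell =1}^{L} \Vert T_\ell \Vert _{\ell ^0} \le \sum _{\ell =1}^{L} N_{\ell -1} N_{\ell } \le \Big ( \sum _{\ell '=0}^{L-1} N_{\ell '} \Big ) \Big ( \sum _{\ell =1}^{L} N_{\ell } \Big ) = \big ( d_{\mathrm {in}}(\Phi ) + N(\Phi ) \big ) \big ( N(\Phi ) + d_{\mathrm {out}}(\Phi ) \big ), \end{aligned} \tag{2.2}$$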

showing that \(W(\Phi ) = {\mathcal {O}}([N(\Phi )]^2 + d k)\) for fixed input and output dimensions d, k. When \(L(\Phi )=2\), we have in fact \( W(\Phi ) = \Vert T_{1}\Vert _{\ell ^{0}}+\Vert T_{2}\Vert _{\ell ^{0}}\le N_{0}N_{1}+N_{1}N_{2} = (N_{0}+N_{2})N_{1} = (d_{\mathrm {in}} (\Phi ) + d_{\mathrm {out}}(\Phi )) \cdot N(\Phi ) \).

In general, one cannot bound the number of layers or of hidden neurons by the number of nonzero weights, as one can build arbitrarily large networks with many “dead neurons.” Yet, such a bound is true if one is willing to switch to a potentially different network which has the same realization as the original network. To show this, we begin with the case of networks with zero connections.

Lemma 2.9

Let \(\Phi = \big ( (T_1,\alpha _1), \dots , (T_L, \alpha _L) \big )\) be a neural network. If there exists some \(\ell \in \{1,\dots ,L\}\) such that \(\Vert T_{\ell }\Vert _{\ell ^{0}} = 0\), then \({\mathtt {R}}(\Phi ) \equiv c\) for some \(c \in {\mathbb {R}}^{k}\) where \(k = d_{\mathrm {out}}(\Phi )\).\(\blacktriangleleft \)

Proof

As \(\Vert T_{\ell }\Vert _{\ell ^{0}} = 0\), the affine map \(T_{\ell }\) is a constant map \({\mathbb {R}}^{N_{\ell - 1}} \ni y \mapsto b^{(\ell )} \in {\mathbb {R}}^{N_{\ell }}\). Therefore, \(f_{\ell } = \alpha _{\ell } \circ T_{\ell }: {\mathbb {R}}^{N_{\ell -1}} \rightarrow {\mathbb {R}}^{N_{\ell }}\) is a constant map, so that also \({\mathtt {R}}(\Phi ) = f_{L} \circ \cdots \circ f_{\ell } \circ \cdots \circ f_{1}\) is constant. \(\square \)

Corollary 2.10

If \(W(\Phi ) < L(\Phi )\), then \({\mathtt {R}}(\Phi ) \equiv c\) for some \(c \in {\mathbb {R}}^{k}\) where \(k = d_{\mathrm {out}}(\Phi )\).\(\blacktriangleleft \)

Proof

Let \(\Phi = \big ( (T_1,\alpha _1), \dots , (T_L,\alpha _L) \big )\) and observe that if \(\sum _{\ell =1}^L \Vert T_{\ell }\Vert _{\ell ^{0}} = W(\Phi ) < L(\Phi ) = \sum _{\ell =1}^{L} 1\), then there must exist \(\ell \in \{1,\dots ,L\}\) such that \(\Vert T_{\ell }\Vert _{\ell ^{0}}=0\), so that we can apply Lemma 2.9. \(\square \)
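
Lemma 2.9 can be checked numerically. The sketch below assumes a strict ReLU network stored as a list of pairs `(A, b)`; the helper `realize`, the layer sizes, and the random weights are illustrative assumptions. The middle layer has an all-zero matrix, so the realization should not depend on the input.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def realize(layers, x, act=relu):
    """Evaluate R(Phi)(x): the activation follows every layer except the last."""
    for i, (A, b) in enumerate(layers):
        x = A @ x + b
        if i < len(layers) - 1:
            x = act(x)
    return x

rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 3)), rng.normal(size=4)),
    (np.zeros((5, 4)), rng.normal(size=5)),  # layer with zero connections
    (rng.normal(size=(2, 5)), rng.normal(size=2)),
]

# Evaluate the network at ten random inputs and compare the outputs.
outputs = np.array([realize(layers, rng.normal(size=3)) for _ in range(10)])
is_constant = bool(np.allclose(outputs, outputs[0]))
```

All ten outputs coincide because everything computed before the zero layer is discarded, and everything after it only sees the bias vector of that layer.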

Indeed, constant maps play a special role as they are exactly the set of realizations of neural networks with no (nonzero) connections. Before formally stating this result, we introduce notations for families of neural networks of constrained complexity, which can have a variety of shapes as illustrated in Fig. 1.

Definition 2.11

Consider \(L \in {\mathbb {N}}\cup \{\infty \}\), \(W,N \in {\mathbb {N}}_0 \cup \{\infty \}\), and \(\Omega \subseteq {\mathbb {R}}^{d}\) a non-empty set.

- \({\mathcal {NN}}_{W,L,N}^{\varrho ,d,k}\) denotes the set of all generalized \(\varrho \)-networks \(\Phi \) with input dimension d, output dimension k, and with \(W(\Phi ) \le W\), \(L(\Phi ) \le L\), and \(N(\Phi ) \le N\).

- \({\mathcal {SNN}}_{W,L,N}^{\varrho ,d,k}\) denotes the subset of networks \(\Phi \in {\mathcal {NN}}_{W,L,N}^{\varrho ,d,k}\) which are strict.

- The class of all functions \(f : {\mathbb {R}}^d \rightarrow {\mathbb {R}}^k\) that can be represented by (generalized) \(\varrho \)-networks with at most W weights, L layers, and N neurons is

$$\begin{aligned} {\mathtt {NN}}_{W,L,N}^{\varrho ,d,k} := \big \{ {\mathtt {R}}(\Phi ) \,:\, \Phi \in {\mathcal {NN}}_{W,L,N}^{\varrho ,d,k} \big \} . \end{aligned}$$

The set of all restrictions of such functions to \(\Omega \) is denoted \({\mathtt {NN}}_{W,L,N}^{\varrho ,d,k}(\Omega )\).

- Similarly,

$$\begin{aligned} {\mathtt {SNN}}_{W,L,N}^{\varrho ,d,k} := \big \{ {\mathtt {R}}(\Phi ) \,:\, \Phi \in {\mathcal {SNN}}_{W,L,N}^{\varrho , d, k} \big \} . \end{aligned}$$

The set of all restrictions of such functions to \(\Omega \) is denoted \({\mathtt {SNN}}_{W,L,N}^{\varrho ,d,k}(\Omega )\).

Finally, we define \({\mathtt {NN}}_{W,L}^{\varrho ,d,k} := {\mathtt {NN}}^{\varrho ,d,k}_{W,L,\infty }\) and \({\mathtt {NN}}_{W}^{\varrho ,d,k} := {\mathtt {NN}}^{\varrho ,d,k}_{W,\infty ,\infty }\), as well as \({{\mathtt {NN}}^{\varrho ,d,k} := {\mathtt {NN}}^{\varrho ,d,k}_{\infty ,\infty ,\infty }}\). We will use similar notations for \({\mathtt {SNN}}\), \({\mathcal {NN}}\), and \({\mathcal {SNN}}\). \(\blacktriangleleft \)

Remark 2.12

If the dimensions d, k and/or the activation function \(\varrho \) are implied by the context, we will sometimes omit them from the notation.\(\blacklozenge \)

Fig. 1 The considered network classes include a variety of networks such as: (top) shallow networks with a single hidden layer, where the number of neurons is of the same order as the number of possible connections; (middle) “narrow and deep” networks, e.g., with a single neuron per layer, where the same holds; (bottom) “truly” sparse networks that have much fewer nonzero weights than potential connections.

Lemma 2.13

Let \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), and let \(d,k \in {\mathbb {N}}\), \(N \in {\mathbb {N}}_{0} \cup \{\infty \}\), and \(L \in {\mathbb {N}}\cup \{\infty \}\) be arbitrary. Then,

$$\begin{aligned} {\mathtt {NN}}_{0,L,N}^{\varrho ,d,k} = {\mathtt {SNN}}_{0,L,N}^{\varrho ,d,k} = \big \{ f : {\mathbb {R}}^d \rightarrow {\mathbb {R}}^k \,\big |\, \exists \, c \in {\mathbb {R}}^k : f \equiv c \big \} . \end{aligned}$$

\(\blacktriangleleft \)

Proof

If \(f \equiv c\) where \(c \in {\mathbb {R}}^{k}\), then the affine map \(T : {\mathbb {R}}^d \rightarrow {\mathbb {R}}^{k}, x \mapsto c\) satisfies \(\Vert T \Vert _{\ell ^0} = 0\) and the (strict) network \(\Phi := \big ( (T, \mathrm {id}_{{\mathbb {R}}^{k}}) \big )\) satisfies \({\mathtt {R}}(\Phi ) \equiv c = f\), \(W(\Phi ) = 0\), \(N(\Phi ) = 0\) and \(L(\Phi ) = 1\). By Definition 2.11, we have \(\Phi \in {\mathcal {SNN}}_{0,1,0}^{\varrho ,d,k}\) whence \(f \in {\mathtt {SNN}}_{0,1,0}^{\varrho ,d,k}\). The inclusions \({\mathtt {SNN}}_{0,1,0}^{\varrho ,d,k} \subset {\mathtt {NN}}_{0,1,0}^{\varrho ,d,k} \subset {\mathtt {NN}}_{0,L,N}^{\varrho ,d,k}\) and \({\mathtt {SNN}}_{0,1,0}^{\varrho ,d,k} \subset {\mathtt {SNN}}_{0,L,N}^{\varrho ,d,k} \subset {\mathtt {NN}}_{0,L,N}^{\varrho ,d,k}\) are trivial by definition of these sets. If \(f \in {\mathtt {NN}}_{0,L,N}^{\varrho ,d,k}\), then there is \(\Phi \in {\mathcal {NN}}_{0,L,N}^{\varrho ,d,k}\) such that \(f = {\mathtt {R}}(\Phi )\). As \(W(\Phi ) = 0 < 1 \le L(\Phi )\), Corollary 2.10 yields \(f = {\mathtt {R}}(\Phi ) \equiv c\). \(\square \)

Our final result in this subsection shows that any realization of a network with at most \(W \ge 1\) connections can also be obtained by a network with at most W connections that additionally has \(L \le W\) layers and \(N \le W\) hidden neurons. The proof is postponed to Appendix A.2.

Lemma 2.14

Let \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), \(d,k \in {\mathbb {N}}\), \(L \in {\mathbb {N}}\cup \{\infty \}\), and \(W \in {\mathbb {N}}\) be arbitrary. Then, we have

The inclusion is an equality for \(L \ge W\). In particular, \({\mathtt {NN}}_{W}^{\varrho ,d,k} = {\mathtt {NN}}_{W,\infty ,W}^{\varrho ,d,k} = {\mathtt {NN}}_{W,W,W}^{\varrho ,d,k}\). The same claims are valid for strict networks, replacing the symbol \({\mathtt {NN}}\) by \({\mathtt {SNN}}\) everywhere.\(\blacktriangleleft \)

To summarize, for given input and output dimensions d, k, when combining (2.2) with the above lemma, we obtain that for any network \(\Phi \), there exists a network \(\Psi \) with \({\mathtt {R}}(\Psi ) = {\mathtt {R}}(\Phi )\) and \(L(\Psi ) \le L(\Phi )\), and such that

When \(L(\Phi ) = 2\), we have in fact \(N(\Psi ) \le W(\Psi ) \le W(\Phi ) \le (d+k) N(\Phi )\); see the discussion after (2.2).

Remark 2.15

(Connectivity, flops and bits.) A motivation for measuring a network’s complexity by its connectivity is that the number of connections is directly related to several practical quantities of interest such as the number of floating point operations needed to compute the output given the input, or the number of bits needed to store a (quantized) description of the network in a computer file. This is not the case for complexity measured in terms of the number of neurons.\(\blacklozenge \)

2.3 Calculus with Generalized Neural Networks

In this section, we show as a consequence of Lemma 2.14 that the class of realizations of generalized neural networks of a given complexity—as measured by the number of connections \(W(\Phi )\)—is closed under addition and composition, as long as one is willing to increase the complexity by a constant factor. To this end, we first show that one can increase the depth of generalized neural networks with controlled increase in the required complexity.

Lemma 2.16

Given \(\varrho :{\mathbb {R}}\rightarrow {\mathbb {R}}\), \({d,k \in {\mathbb {N}}}\), \(c := \min \{d,k\}\), \(\Phi \in {\mathcal {NN}}^{\varrho ,d,k}\), and \(L_0 \in {\mathbb {N}}_{0}\), there exists \(\Psi \in {\mathcal {NN}}^{\varrho ,d,k}\) such that \({\mathtt {R}}(\Psi ) = {\mathtt {R}}(\Phi )\), \(L(\Psi ) = L(\Phi ) + L_0\), \(W(\Psi ) = W(\Phi ) + c L_0\), \(N(\Psi ) = N(\Phi ) + c L_0\).\(\blacktriangleleft \)

This fact appears without proof in [60, Section 5.1] under the name of depth synchronization for strict networks with the ReLU activation function, with \(c = d\). We refine it to \(c = \min \{d,k\}\) and give a proof for generalized networks with arbitrary activation function in Appendix A.3. The underlying proof idea is illustrated in Fig. 2.
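
One way to realize the depth increase of Lemma 2.16 is to pad the network with identity layers of width \(c = \min \{d,k\}\) on the cheaper side. The following is a minimal sketch of this idea, not necessarily the construction used in Appendix A.3. It assumes a generalized network is stored as a list of triples `(A, b, mask)`, where `mask[j]` is `True` if neuron j applies the activation and `False` if it applies the identity; this storage format and all helper names are illustrative assumptions.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def realize(layers, x, act=relu):
    """Evaluate a generalized network; mask[j] is True where neuron j applies act."""
    for A, b, mask in layers:
        z = A @ x + b
        x = np.where(mask, act(z), z)
    return x

def count_W(layers):
    return sum(int(np.count_nonzero(A)) for A, _, _ in layers)

def count_N(layers):
    return sum(A.shape[0] for A, _, _ in layers[:-1])

def deepen(layers, L0):
    """Add L0 identity layers of width c = min(d, k) at the input or output side."""
    d, k = layers[0][0].shape[1], layers[-1][0].shape[0]
    c = min(d, k)
    extra = [(np.eye(c), np.zeros(c), np.zeros(c, dtype=bool)) for _ in range(L0)]
    # Prepend when the input side is the narrow one, otherwise append.
    return extra + layers if c == d else layers + extra

rng = np.random.default_rng(1)
phi = [
    (rng.normal(size=(4, 3)), rng.normal(size=4), np.ones(4, dtype=bool)),
    (rng.normal(size=(1, 4)), rng.normal(size=1), np.zeros(1, dtype=bool)),
]
psi = deepen(phi, L0=2)
x = rng.normal(size=3)
same_output = bool(np.allclose(realize(phi, x), realize(psi, x)))
```

Here \(d = 3\) and \(k = 1\), so \(c = 1\) and each added layer costs one connection and one neuron. The padding relies on neurons with identity activation, which is why this sketch is stated for generalized rather than strict networks.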

A consequence of the depth synchronization property is that the class of generalized networks is closed under linear combinations and Cartesian products. The proof idea behind the following lemma, whose proof is in Appendix A.4, is illustrated in Fig. 3 (top and middle).

Lemma 2.17

Consider arbitrary \(d,k,n \in {\mathbb {N}}\), \(c \in {\mathbb {R}}\), \(\varrho :{\mathbb {R}}\rightarrow {\mathbb {R}}\), and \(k_i \in {\mathbb {N}}\) for \(i \in \{1,\dots ,n\}\).

(1) If \(\Phi \in {\mathcal {NN}}^{\varrho ,d,k}\), then \(c \cdot {\mathtt {R}}(\Phi ) = {\mathtt {R}}(\Psi )\) where \(\Psi \in {\mathcal {NN}}^{\varrho ,d,k}\) satisfies \(W(\Psi ) \le W(\Phi )\) (with equality if \(c \ne 0\)), \(L(\Psi ) = L(\Phi )\), \(N(\Psi ) = N(\Phi )\). The same holds with \({\mathcal {SNN}}\) instead of \({\mathcal {NN}}\).

(2) If \(\Phi _i \in {\mathcal {NN}}^{\varrho , d, k_i}\) for \(i \in \{1,\dots ,n\}\), then \(({\mathtt {R}}(\Phi _{1}),\ldots ,{\mathtt {R}}(\Phi _{n})) = {\mathtt {R}}(\Psi )\) with \(\Psi \in {\mathcal {NN}}^{\varrho ,d,K}\), where

$$\begin{aligned} L(\Psi ) = \max _{i = 1,\dots ,n} L(\Phi _{i}), \quad W(\Psi ) \le \delta +\sum _{i=1}^{n} W(\Phi _{i}), \quad N(\Psi ) \le \delta +\sum _{i=1}^{n} N(\Phi _{i}), \quad \text {and} \quad K:=\sum _{i=1}^{n} k_{i}, \end{aligned}$$

with \(\delta := c \cdot \big (\max _{i=1,\dots ,n} L(\Phi _{i})-\min _{i} L(\Phi _{i})\big )\) and \(c := \min \{d, K-1 \}\).

(3) If \(\Phi _1,\dots ,\Phi _n \in {\mathcal {NN}}^{\varrho , d, k}\), then \(\sum _{i=1}^n {\mathtt {R}}(\Phi _{i}) = {\mathtt {R}}(\Psi )\) with \(\Psi \in {\mathcal {NN}}^{\varrho ,d,k}\), where

$$\begin{aligned} L(\Psi ) = \max _{i} L(\Phi _{i}), \quad W(\Psi ) \le \delta + \sum _{i=1}^{n} W(\Phi _{i}), \quad \text {and} \quad N(\Psi ) \le \delta + \sum _{i=1}^{n} N(\Phi _{i}), \end{aligned}$$

with \(\delta := c \left( \max _{i} L(\Phi _{i})-\min _{i} L(\Phi _{i})\right) \) and \(c := \min \{ d,k \}\). \(\blacktriangleleft \)
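
For two networks of equal depth (so that \(\delta = 0\)), the sum in Part (3) can be obtained by running both networks in parallel. The sketch below illustrates this special case only and is not taken from Appendix A.4. It assumes strict ReLU networks with at least two layers, stored as lists of pairs `(A, b)`; the helper names and the random example networks are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def realize(layers, x):
    for i, (A, b) in enumerate(layers):
        x = A @ x + b
        if i < len(layers) - 1:
            x = relu(x)
    return x

def count_W(layers):
    return sum(int(np.count_nonzero(A)) for A, _ in layers)

def block_diag(A, B):
    out = np.zeros((A.shape[0] + B.shape[0], A.shape[1] + B.shape[1]))
    out[:A.shape[0], :A.shape[1]] = A
    out[A.shape[0]:, A.shape[1]:] = B
    return out

def add_networks(p, q):
    """Network realizing R(p) + R(q) for two networks of equal depth >= 2."""
    assert len(p) == len(q) >= 2
    # First layer: both networks read the same input.
    layers = [(np.vstack([p[0][0], q[0][0]]), np.concatenate([p[0][1], q[0][1]]))]
    # Middle layers: the two networks run side by side without interaction.
    for (A, a), (B, b) in zip(p[1:-1], q[1:-1]):
        layers.append((block_diag(A, B), np.concatenate([a, b])))
    # Last layer: add the two outputs.
    layers.append((np.hstack([p[-1][0], q[-1][0]]), p[-1][1] + q[-1][1]))
    return layers

rng = np.random.default_rng(2)
def random_net(widths):
    return [(rng.normal(size=(m, n)), rng.normal(size=m))
            for n, m in zip(widths[:-1], widths[1:])]

p, q = random_net([2, 3, 2, 1]), random_net([2, 4, 3, 1])
s = add_networks(p, q)
x = rng.normal(size=2)
sum_ok = bool(np.allclose(realize(s, x), realize(p, x) + realize(q, x)))
```

In this equal-depth case the number of connections of the combined network is exactly the sum of the individual counts. When the depths differ, the shallower network first has to be deepened, which is where the term \(\delta \) in the lemma comes from.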

One can also control the complexity of certain networks resulting from compositions in an intuitive way. To state and prove this, we introduce a convenient notation: For a matrix \(A \in {\mathbb {R}}^{n \times d}\), we denote

where \(e_1,\dots , e_n\) is the standard basis of \({\mathbb {R}}^n\). Likewise, for an affine-linear map \(T = A \bullet + b\), we denote \(\Vert T \Vert _{\ell ^{0,\infty }} := \Vert A \Vert _{\ell ^{0,\infty }}\) and \(\Vert T \Vert _{\ell ^{0,\infty }_{*}} := \Vert A \Vert _{\ell ^{0,\infty }_{*}}\).

Lemma 2.18

Consider arbitrary \(d,d_1,d_2,k,k_1 \in {\mathbb {N}}\) and \(\varrho :{\mathbb {R}}\rightarrow {\mathbb {R}}\).

(1) If \(\Phi \in {\mathcal {NN}}^{\varrho ,d,k}\) and \(P: {\mathbb {R}}^{d_{1}} \rightarrow {\mathbb {R}}^{d}\), \(Q:{\mathbb {R}}^{k} \rightarrow {\mathbb {R}}^{k_{1}}\) are two affine maps, then \(Q \circ {\mathtt {R}}(\Phi ) \circ P = {\mathtt {R}}(\Psi )\) where \(\Psi \in {\mathcal {NN}}^{\varrho ,d_{1},k_{1}}\) with \(L(\Psi )= L(\Phi )\), \(N(\Psi )= N(\Phi )\) and

$$\begin{aligned} W(\Psi ) \le \Vert Q \Vert _{\ell ^{0,\infty }} \cdot W(\Phi ) \cdot \Vert P \Vert _{\ell ^{0,\infty }_{*}}. \end{aligned}$$

The same holds with \({\mathcal {SNN}}\) instead of \({\mathcal {NN}}\).

(2) If \(\Phi _1 \in {\mathcal {NN}}^{\varrho , d, d_1}\) and \(\Phi _2 \in {\mathcal {NN}}^{\varrho , d_1, d_2}\), then \({\mathtt {R}}(\Phi _2) \circ {\mathtt {R}}(\Phi _1) = {\mathtt {R}}(\Psi )\) where \(\Psi \in {\mathcal {NN}}^{\varrho , d, d_2}\) and

$$\begin{aligned} W(\Psi ) = W(\Phi _{1})+W(\Phi _{2}), \quad L(\Psi ) = L(\Phi _1)+L(\Phi _2), \quad N(\Psi ) = N(\Phi _1)+N(\Phi _2)+d_1. \end{aligned}$$

(3) Under the assumptions of Part (2), there is also \(\Psi ' \in {\mathcal {NN}}^{\varrho , d, d_2}\) such that \({\mathtt {R}}(\Phi _2) \circ {\mathtt {R}}(\Phi _1) = {\mathtt {R}}(\Psi ')\) and

$$\begin{aligned} W(\Psi ') \le W(\Phi _{1}) + \max \{ N(\Phi _{1}),d \} \, W(\Phi _{2}), \quad L(\Psi ') = L(\Phi _1) + L(\Phi _2) - 1, \quad N(\Psi ') = N(\Phi _1) + N(\Phi _2). \end{aligned}$$

In this case, the same holds for \({\mathcal {SNN}}\) instead of \({\mathcal {NN}}\). \(\blacktriangleleft \)

The proof idea of Lemma 2.18 is illustrated in Fig. 3 (bottom). The formal proof is in Appendix A.5. A direct consequence of Lemma 2.18-(1) that we will use in several places is that \({x \mapsto a_2 \, g(a_{1}x+b_{1})+b_{2} \in {\mathtt {NN}}^{\varrho ,d,k}_{W,L,N}}\) whenever \(g \in {\mathtt {NN}}^{\varrho ,d,k}_{W,L,N}\), \(a_{1},a_{2} \in {\mathbb {R}}\), \(b_{1} \in {\mathbb {R}}^{d}\), \(b_{2} \in {\mathbb {R}}^{k}\).
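
The depth count \(L(\Phi _1) + L(\Phi _2) - 1\) in Part (3) of Lemma 2.18 is what one obtains by merging the last affine map of \(\Phi _1\) with the first affine map of \(\Phi _2\). The following is a minimal sketch of that merge for strict ReLU networks stored as lists of pairs `(A, b)`; it is an illustration under these assumptions rather than the argument of Appendix A.5, and the helper names and example sizes are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def realize(layers, x):
    for i, (A, b) in enumerate(layers):
        x = A @ x + b
        if i < len(layers) - 1:
            x = relu(x)
    return x

def count_W(layers):
    return sum(int(np.count_nonzero(A)) for A, _ in layers)

def count_N(layers):
    return sum(A.shape[0] for A, _ in layers[:-1])

def compose(phi1, phi2):
    """Network for R(phi2) o R(phi1), merging the two adjacent affine maps."""
    A1, b1 = phi1[-1]   # last layer of phi1 (no activation afterwards)
    A2, b2 = phi2[0]    # first layer of phi2
    merged = (A2 @ A1, A2 @ b1 + b2)
    return phi1[:-1] + [merged] + phi2[1:]

rng = np.random.default_rng(3)
def random_net(widths):
    return [(rng.normal(size=(m, n)), rng.normal(size=m))
            for n, m in zip(widths[:-1], widths[1:])]

phi1, phi2 = random_net([2, 3, 2]), random_net([2, 4, 1])
psi = compose(phi1, phi2)
x = rng.normal(size=2)
compose_ok = bool(np.allclose(realize(psi, x), realize(phi2, realize(phi1, x))))
d = phi1[0][0].shape[1]
w_bound = count_W(phi1) + max(count_N(phi1), d) * count_W(phi2)
```

The merged matrix `A2 @ A1` is in general dense even when both factors are sparse, which is why the bound on \(W(\Psi ')\) is multiplicative, in contrast to the additive bound of Part (2).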

Our next result shows that if \(\sigma \) can be expressed as the realization of a \(\varrho \)-network, then realizations of \(\sigma \)-networks can be re-expanded into realizations of \(\varrho \)-networks of controlled complexity.

Lemma 2.19

Consider two activation functions \(\varrho ,\sigma \) such that \(\sigma = {\mathtt {R}}(\Psi _{\sigma })\) for some \( \Psi _{\sigma } \in {\mathcal {NN}}^{\varrho ,1,1}_{w,\ell ,m} \) with \(L(\Psi _{\sigma }) = \ell \in {\mathbb {N}}\), \(w \in {\mathbb {N}}_{0}\), \(m \in {\mathbb {N}}\). Furthermore, assume that \(\sigma \not \equiv \mathrm {const}\).

Then, the following hold:

(1) if \(\ell =2\) then for any W, N, L, d, k we have \( {\mathtt {NN}}_{W,L,N}^{\sigma ,d,k} \subset {\mathtt {NN}}_{Wm^{2},L,Nm}^{\varrho ,d,k} \);

(2) for any \(\ell ,W,N,L,d,k\) we have \( {\mathtt {NN}}_{W,L,N}^{\sigma ,d,k} \subset {\mathtt {NN}}_{mW + w N, 1 + (L-1)\ell , N(1+m)}^{\varrho ,d,k}. \) \(\blacktriangleleft \)

The proof of Lemma 2.19 is in Appendix A.6. In the case where \(\sigma \) is simply an s-fold composition of \(\varrho \), we have the following improvement of Lemma 2.19.

Lemma 2.20

Let \(s \in {\mathbb {N}}\). Consider an activation function \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), and let \(\sigma := \varrho \circ \cdots \circ \varrho \), where the composition has s “factors.”

We have

The same holds for strict networks, replacing \({\mathtt {NN}}\) by \({\mathtt {SNN}}\) everywhere.\(\blacktriangleleft \)

The proof is in Appendix A.7. In our next result, we consider the case where \(\sigma \) cannot be exactly implemented by \(\varrho \)-networks, but only approximated arbitrarily well by such networks of uniformly bounded complexity.

Lemma 2.21

Consider two activation functions \(\varrho , \sigma : {\mathbb {R}}\rightarrow {\mathbb {R}}\). Assume that \(\sigma \) is continuous and that there are \(w,m \in {\mathbb {N}}_{0}\), \(\ell \in {\mathbb {N}}\) and a family \(\Psi _{h} \in {\mathcal {NN}}_{w,\ell ,m}^{\varrho ,1,1}\) parameterized by \(h \in {\mathbb {R}}\), with \(L(\Psi _{h}) = \ell \), such that \(\sigma _{h} := {\mathtt {R}}(\Psi _{h}) \xrightarrow [h \rightarrow 0]{} \sigma \) locally uniformly on \({\mathbb {R}}\). For any \(d,k \in {\mathbb {N}}\), \(W,N \in {\mathbb {N}}_{0}\), \(L \in {\mathbb {N}}\), we have

where the closure is with respect to locally uniform convergence.\(\blacktriangleleft \)

The proof is in Appendix A.8. In the next lemma, we establish a relation between the approximation capabilities of strict and generalized networks. The proof is given in Appendix A.9.

Lemma 2.22

Let \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) be continuous and assume that \(\varrho \) is differentiable at some \(x_0 \in {\mathbb {R}}\) with \(\varrho ' (x_0) \ne 0\). For any \(d,k \in {\mathbb {N}}\), \(L \in {\mathbb {N}}\cup \{\infty \}\), \(N \in {\mathbb {N}}_0 \cup \{\infty \}\), and \(W \in {\mathbb {N}}_0\), we have

where the closure is with respect to locally uniform convergence.\(\blacktriangleleft \)

2.4 Networks with Activation Functions that can Represent the Identity

The convergence in Lemma 2.22 is only locally uniform, which is not strong enough to ensure equality of the associated approximation spaces on unbounded domains. In this subsection, we introduce a certain condition on the activation functions which ensures that strict and generalized networks yield the same approximation spaces also on unbounded domains.

Definition 2.23

We say that a function \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) can represent \(f: {\mathbb {R}}\rightarrow {\mathbb {R}}\) with n terms (where \(n \in {\mathbb {N}}\)) if \(f \in {\mathtt {SNN}}^{\varrho ,1,1}_{\infty ,2,n}\); that is, if there are \(a_i, b_i, c_i \in {\mathbb {R}}\) for \(i \in \{1,\dots ,n\}\), and some \(c \in {\mathbb {R}}\) satisfying

$$\begin{aligned} f(x) = c + \sum _{i=1}^{n} a_i \, \varrho (b_i \, x + c_i) \qquad \text {for all } x \in {\mathbb {R}} . \end{aligned}$$

A particular case of interest is when \(\varrho \) can represent the identity \(\mathrm {id}: {\mathbb {R}}\rightarrow {\mathbb {R}}\) with n terms. \(\blacktriangleleft \)

As shown in Appendix A.10, primary examples are the ReLU activation function and its powers.

Lemma 2.24

For any \(r \in {\mathbb {N}}\), \(\varrho _r\) can represent any polynomial of degree \(\le r\) with \(2r + 2\) terms.\(\blacktriangleleft \)
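
As a simple instance of Definition 2.23, a monomial \(x^r\) can be written with two terms of a ReLU power. The sketch below assumes that \(\varrho _r(x) = \max \{0,x\}^r\) and checks the identity \(x^r = \varrho _r(x) + (-1)^r \varrho _r(-x)\) numerically; the function names are illustrative, and general polynomials require the larger term count stated in the lemma.

```python
import numpy as np

def relu_power(x, r):
    """rho_r(x) = max(0, x)^r (assumed definition of the r-th power of the ReLU)."""
    return np.maximum(x, 0.0) ** r

def monomial_via_relu_power(x, r):
    """x**r written as a two-term combination of rho_r."""
    return relu_power(x, r) + (-1) ** r * relu_power(-x, r)

xs = np.linspace(-3.0, 3.0, 13)
identity_ok = bool(np.allclose(monomial_via_relu_power(xs, 1), xs))
square_ok = bool(np.allclose(monomial_via_relu_power(xs, 2), xs ** 2))
cube_ok = bool(np.allclose(monomial_via_relu_power(xs, 3), xs ** 3))
```

For \(r = 1\) this is the familiar identity \(x = \varrho _1(x) - \varrho _1(-x)\), which shows that the ReLU can represent the identity with two terms.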

Lemma 2.25

Assume that \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) can represent the identity with n terms. Let \(d,k \in {\mathbb {N}}\), \(W, N \in {\mathbb {N}}_0\), and \(L \in {\mathbb {N}}\cup \{\infty \}\) be arbitrary. Then, \( {\mathtt {NN}}_{W,L,N}^{\varrho ,d,k} \subset {\mathtt {SNN}}_{n^2 \cdot W, L, n \cdot N}^{\varrho ,d,k} \). \(\blacktriangleleft \)

The proof of Lemma 2.25 is in Appendix A.9. The next lemma is proved in Appendix A.11.

Lemma 2.26

If \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) can represent all polynomials of degree two with n terms, then:

(1) For \(d \in {\mathbb {N}}_{\ge 2}\), the multiplication function \(M_{d}: {\mathbb {R}}^{d} \rightarrow {\mathbb {R}}, x \mapsto \prod _{i=1}^{d} x_{i}\) satisfies

$$\begin{aligned} M_{d} \in {\mathtt {NN}}^{\varrho ,d,1}_{6n(2^{j}-1),2j,(2n+1)(2^{j}-1)-1} \quad \text {with}\quad j = \lceil \log _{2} d \rceil . \end{aligned}$$

In particular, for \(d =2\) we have \(M_2 \in {\mathtt {SNN}}^{\varrho ,d,1}_{6n,2,2n}\).

(2) For \(k \in {\mathbb {N}}\), the multiplication map \(m : {\mathbb {R}}\times {\mathbb {R}}^k \rightarrow {\mathbb {R}}^k, (x,y) \mapsto x \cdot y\) satisfies \( m \in {\mathtt {NN}}^{\varrho , 1+k, k}_{6kn, 2,2kn} \). \(\blacktriangleleft \)
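
To see why representing squares suffices for multiplication, one can use the polarization identity \(x y = \tfrac{1}{4} \big ( (x+y)^2 - (x-y)^2 \big )\). The sketch below implements the product of two numbers as a two-layer network with four hidden neurons, assuming the squared ReLU \(\max \{0,x\}^2\) as activation. It only illustrates the idea for this specific activation; the weights are chosen by hand and the construction in Appendix A.11 may differ.

```python
import numpy as np

def rho2(x):
    """Squared ReLU, assumed here as the activation function."""
    return np.maximum(x, 0.0) ** 2

# Hidden layer computes rho2(x+y), rho2(-x-y), rho2(x-y), rho2(y-x).
A1 = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0], [-1.0, 1.0]])
# Output layer forms ((x+y)^2 - (x-y)^2) / 4.
A2 = 0.25 * np.array([[1.0, 1.0, -1.0, -1.0]])

def multiply(x, y):
    hidden = rho2(A1 @ np.array([x, y]))
    return float((A2 @ hidden)[0])

product_a = multiply(3.0, -2.0)
product_b = multiply(1.5, 4.0)
```

The first two hidden neurons together yield \((x+y)^2\) and the last two yield \((x-y)^2\), so the output layer only needs four nonzero weights.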

3 Neural Network Approximation Spaces

The overall goal of this paper is to study approximation spaces associated with the sequence of sets \(\Sigma _{n}\) of realizations of networks with at most n connections (resp. at most n neurons), \(n \in {\mathbb {N}}_{0}\), either for fixed network depth \(L \in {\mathbb {N}}\), or for unbounded depth \(L = \infty \), or even for varying depth \(L = {\mathscr {L}}(n)\).

In this section, we first formally introduce these approximation spaces, following the theory from [21, Chapter 7, Section 9], and then specialize these spaces to the context of neural networks. The next sections will be devoted to establishing embeddings between classical function spaces and neural network approximation spaces, as well as nesting properties between such spaces.

3.1 Generic Tools from Approximation Theory

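Consider a quasi-Banach space X equipped with the quasi-norm \(\Vert \cdot \Vert _{X}\), and let \(f \in X\). The error of best approximation of f from a non-empty set \(\Gamma \subset X\) is

$$\begin{aligned} E(f,\Gamma )_{X} := \inf _{g \in \Gamma } \Vert f - g \Vert _{X} \in [0,\infty ). \end{aligned}$$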

When \(X\) is the space from Eq. (1.3), with \(\Omega \subseteq {\mathbb {R}}^{d}\) a set of nonzero measure, the corresponding approximation error will be denoted by \(E(f,\Gamma )_{p}\). As in [21, Chapter 7, Section 9], we consider an arbitrary family \(\Sigma = (\Sigma _{n})_{n \in {\mathbb {N}}_{0}}\) of subsets \(\Sigma _{n} \subset X\) and define for \(f \in X\), \(\alpha \in (0,\infty )\), and \(q \in (0,\infty ]\) the following quantity (which will turn out to be a quasi-norm under mild assumptions on the family \(\Sigma \)):

As expected, the associated approximation class is simply

For \(q = \infty \), this class is precisely the subset of elements \(f \in X\) such that \(E(f,\Sigma _{n})_X = {\mathcal {O}}(n^{-\alpha })\), and the classes associated with \(0<q<\infty \) correspond to subtle variants of this subset. If we assume that \(\Sigma _n \subset \Sigma _{n+1}\) for all \(n \in {\mathbb {N}}_0\), then the following “embeddings” can be derived directly from the definition; see [21, Chapter 7, Equation (9.2)]:

Note that we do not yet know that the approximation classes are (quasi)-Banach spaces. Therefore, the notation \(X_{1}\hookrightarrow X_{2}\) (where for \(i \in \{1,2\}\) we consider the class \(X_{i} := \{x \in X: \Vert x\Vert _{X_{i}}<\infty \}\) associated with some “proto”-quasi-norm \(\Vert \cdot \Vert _{X_{i}}\)) simply means that \(X_{1} \subset X_{2}\) and \(\Vert \cdot \Vert _{X_{2}} \le C \cdot \Vert \cdot \Vert _{X_{1}}\), even though \(\Vert \cdot \Vert _{X_{i}}\) might not be proper (quasi)-norms and \(X_{i}\) might not be (quasi)-Banach spaces. When the classes are indeed (quasi)-Banach spaces (see below), this corresponds to the standard notion of a continuous embedding.

As a direct consequence of the definitions, we get the following result on the relation between approximation classes using different families of subsets.

Lemma 3.1

Let X be a quasi-Banach space, and let \(\Sigma = (\Sigma _n)_{n \in {\mathbb {N}}_0}\) and \(\Sigma ' = (\Sigma _n')_{n \in {\mathbb {N}}_0}\) be two families of subsets \(\Sigma _n, \Sigma _n' \subset X\) satisfying the following properties:

(1) \(\Sigma _0 = \{0\} = \Sigma _0'\);

(2) \(\Sigma _n \subset \Sigma _{n+1}\) and \(\Sigma _n' \subset \Sigma _{n+1}'\) for all \(n \in {\mathbb {N}}_0\); and

(3) there are \(c \in {\mathbb {N}}\) and \(C > 0\) such that \(E(f,\Sigma _{c m})_{X} \le C \cdot E(f,\Sigma '_{m})_{X}\) for all \(f \in X, m \in {\mathbb {N}}\).

Then, the approximation class associated with \(\Sigma '\) embeds into the approximation class associated with \(\Sigma \), for arbitrary \(q \in (0,\infty ]\) and \(\alpha > 0\). More precisely, there is a constant \(K = K(\alpha ,q,c,C) > 0\) such that, for all \(f \in X\), the quasi-norm of f associated with \(\Sigma \) is at most K times the quasi-norm of f associated with \(\Sigma '\). \(\blacktriangleleft \)

Remark

One can alternatively assume that \(E(f, \Sigma _{c m})_X \le C \cdot E(f, \Sigma _m ')_X\) only holds for \(m \ge m_0 \in {\mathbb {N}}\). Indeed, if this is satisfied and if we set \(c' := m_0 \, c\), then we see for arbitrary \(m \in {\mathbb {N}}\) that \(m_0 m \ge m_0\), so that

$$\begin{aligned} E(f, \Sigma _{c' m})_X = E(f, \Sigma _{c \cdot m_0 m})_X \le C \cdot E(f, \Sigma _{m_0 m}')_X \le C \cdot E(f, \Sigma _m')_X . \end{aligned}$$

Here, the last step used that \(m_0 \, m \ge m\), so that \(\Sigma _m ' \subset \Sigma _{m_0 \, m}'\). \(\blacklozenge \)

The proof of Lemma 3.1 can be found in Appendix B.1.

In [21, Chapter 7, Section 9], the authors develop a general theory regarding approximation classes of this type. To apply this theory, we merely have to verify that \(\Sigma = (\Sigma _n)_{n \in {\mathbb {N}}_0}\) satisfies the following list of axioms, which is identical to [21, Chapter 7, Equation (5.2)]:

(P1) \(\Sigma _0 = \{0\}\);

(P2) \(\Sigma _n \subset \Sigma _{n+1}\) for all \(n \in {\mathbb {N}}_0\);

(P3) \(a \cdot \Sigma _n = \Sigma _n\) for all \(a \in {\mathbb {R}}{\setminus } \{0\}\) and \(n \in {\mathbb {N}}_0\);

(P4) there is a fixed constant \(c \in {\mathbb {N}}\) with \(\Sigma _n + \Sigma _n \subset \Sigma _{cn}\) for all \(n \in {\mathbb {N}}_0\);

(P5) \(\Sigma _{\infty } := \bigcup _{j \in {\mathbb {N}}_0} \Sigma _j\) is dense in X;

(P6) for any \(n \in {\mathbb {N}}_0\), each \(f \in X\) has a best approximation from \(\Sigma _n\).

As we will show in Theorem 3.27, Properties (P1)–(P5) hold in  for an appropriately defined family \(\Sigma \) related to neural networks of fixed or varying network depth \(L \in {\mathbb {N}}\cup \{\infty \}\).


Property (P6), however, can fail in this setting even for the simple case of the ReLU activation function; indeed, a combination of Lemmas 3.26 and 4.4 shows that ReLU networks of bounded complexity can approximate the discontinuous function \({\mathbb {1}}_{[a,b]}\) arbitrarily well. Yet, since realizations of ReLU networks are always continuous, \({\mathbb {1}}_{[a,b]}\) is not implemented exactly by such a network; hence, no best approximation exists. Fortunately, Property (P6) is not essential for the theory from [21] to be applicable: By the arguments given in [21, Chapter 7, discussion around Equation (9.2)] (see also [4, Proposition 3.8 and Theorem 3.12]), we get the following properties of the approximation classes, which turn out to be approximation spaces, i.e., quasi-Banach spaces.

Proposition 3.2

If Properties (P1)–(P5) hold, then the approximation classes are quasi-Banach spaces that satisfy the continuous embeddings (3.2) and embed continuously into \(X\). \(\blacktriangleleft \)

Remark

Note that the quantity defining the approximation classes is in general only a quasi-norm, even if X is a Banach space and \(q \in [1,\infty ]\). Only if one additionally knows that all the sets \(\Sigma _n\) are vector spaces [that is, one can choose \(c = 1\) in Property (P4)], one knows for sure that it is a norm. \(\blacklozenge \)

Proof

Everything except for the completeness and the embedding into \(X\) is shown in [21, Chapter 7, Discussion around Equation (9.2)]; in particular, it is shown there that the embedding (3.2) holds. All other properties claimed in Proposition 3.2 follow by combining Remark 3.5, Proposition 3.8, and Theorem 3.12 in [4]. \(\square \)

3.2 Approximation Classes of Generalized Networks

We now specialize to the setting of neural networks and consider \(d,k \in {\mathbb {N}}\), an activation function \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\), and a non-empty set \(\Omega \subseteq {\mathbb {R}}^d\).

Our goal is to define a family of sets of (realizations of) \(\varrho \)-networks of “complexity” \(n \in {\mathbb {N}}_{0}\). The complexity will be measured in terms of the number of connections \(W \le n\) or the number of neurons \(N \le n\), possibly with a control on how the depth L evolves with n.

Definition 3.3

(Depth growth function) A depth growth function is a non-decreasing function

$$\begin{aligned} {\mathscr {L}} : {\mathbb {N}}\rightarrow {\mathbb {N}}\cup \{\infty \}, \quad n \mapsto {\mathscr {L}}(n) . \end{aligned}$$

\(\blacktriangleleft \)

Definition 3.4

(Approximation family, approximation spaces) Given an activation function \(\varrho \), a depth growth function \({\mathscr {L}}\), a subset \(\Omega \subseteq {\mathbb {R}}^d\), and a quasi-Banach space X whose elements are (equivalence classes of) functions \(f : \Omega \rightarrow {\mathbb {R}}^k\), we define  , and


To highlight the role of the activation function \(\varrho \) and the depth growth function \({\mathscr {L}}\) in the definition of the corresponding approximation classes, we introduce the specific notation

The quantities  and  are defined similarly. Notice that the input and output dimensions d, k as well as the set \(\Omega \) are implicitly described by the space X. Finally, if the depth growth function is constant (\({\mathscr {L}}\equiv L\) for some \(L \in {\mathbb {N}}\)), we write \(\mathtt {W}_n(X, \varrho , L)\), etc. \(\blacktriangleleft \)

Remark 3.5

By convention,  , while \({\mathtt {NN}}_{0,L}^{\varrho ,d,k}\) is the set of constant functions \(f \equiv c\), where \(c \in {\mathbb {R}}^{k}\) is arbitrary (Lemma 2.13), and \({\mathtt {NN}}_{\infty ,L,0}^{\varrho ,d,k}\) is the set of affine functions.\(\blacklozenge \)

Remark 3.6

Lemma 2.14 shows that \({\mathtt {NN}}^{\varrho ,d,k}_{W,L} = {\mathtt {NN}}^{\varrho ,d,k}_{W,W}\) if \(L \ge W \ge 1\); hence, the approximation family  associated with any depth growth function \({\mathscr {L}}\) is also generated by the modified depth growth function \({\mathscr {L}}' (n) := \min \{n, {\mathscr {L}}(n) \}\), which satisfies \({\mathscr {L}}' (n) \in \{1, \dots , n \}\) for all \(n \in {\mathbb {N}}\).

In light of Eq. (2.1), a similar observation holds for  with \({\mathscr {L}}' (n) := \min \{n+1, {\mathscr {L}}(n) \}\).

It will be convenient, however, to explicitly specify unbounded depth as \({\mathscr {L}} \equiv +\infty \) rather than the equivalent form \({\mathscr {L}}(n) = n\) (resp. rather than \({\mathscr {L}}(n) = n+1\)).\(\blacklozenge \)
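The reduction in Remark 3.6 can be illustrated with a minimal sketch. It assumes a depth growth function is modeled as a Python callable on positive integers that may return `math.inf`; the helper name `clamp_depth` and its `offset` parameter are hypothetical and only serve the illustration (`offset=0` mirrors the connection-based case, `offset=1` the neuron-based case).

```python
import math


def clamp_depth(depth_growth, offset=0):
    """Return the modified depth growth function n -> min(n + offset, L(n)).

    offset=0 corresponds to L'(n) = min{n, L(n)} (connection complexity),
    offset=1 corresponds to L'(n) = min{n + 1, L(n)} (neuron complexity).
    """
    def clamped(n):
        return min(n + offset, depth_growth(n))
    return clamped


# Unbounded depth, written as the constant function L = +infinity.
unbounded = lambda n: math.inf

# After clamping, unbounded depth becomes L'(n) = n (resp. n + 1).
by_connections = clamp_depth(unbounded)
by_neurons = clamp_depth(unbounded, offset=1)
```

With these assumptions, `by_connections(5)` evaluates to `5` and `by_neurons(5)` to `6`, which matches the equivalent forms \({\mathscr {L}}(n) = n\) and \({\mathscr {L}}(n) = n+1\) mentioned in the remark.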

We will further discuss the role of the depth growth function in Sect. 3.5. Before that, we compare approximation with generalized and strict networks.

3.3 Approximation with Generalized Versus Strict Networks

In this subsection, we show that if one only considers the approximation theoretic properties of the resulting function classes, then—under extremely mild assumptions on the activation function \(\varrho \)—it does not matter whether we consider strict or generalized networks, at least on bounded domains \(\Omega \subset {\mathbb {R}}^d\). Here, instead of the approximating sets for generalized neural networks defined in (3.3)–(3.4) we wish to consider the corresponding sets for strict neural networks, given by  , and

and the associated approximation classes that we denote by

Since generalized networks are at least as expressive as strict ones, these approximation classes embed into the corresponding classes for generalized networks, as we now formalize.

Proposition 3.7

Consider \(\varrho \) an activation function, \({\mathscr {L}}\) a depth growth function, and X a quasi-Banach space of (equivalence classes of) functions from a subset \(\Omega \subseteq {\mathbb {R}}^{d}\) to \({\mathbb {R}}^{k}\). For any \(\alpha >0\) and \(q \in (0,\infty ]\), we have  and  ; hence,

Proof

We give the proof for approximation spaces associated with connection complexity; the proof is similar for the case of neuron complexity. Obviously,  for all \(n \in {\mathbb {N}}_{0}\), so that the approximation errors satisfy  for all \(n \in {\mathbb {N}}_0\). This implies  whence  . \(\square \)

Under mild conditions on \(\varrho \), the converse holds on bounded domains when approximating in \(L_{p}\). This also holds on unbounded domains for activation functions that can represent the identity.

Theorem 3.8

(Approximation classes of strict vs. generalized networks) Consider \(d \in {\mathbb {N}}\), a measurable set \(\Omega \subseteq {\mathbb {R}}^{d}\) with nonzero measure, and \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) an activation function. Assume either that:

- \(\Omega \) is bounded, \(\varrho \) is continuous, and \(\varrho \) is differentiable at some \(x_{0} \in {\mathbb {R}}\) with \(\varrho '(x_{0}) \ne 0\); or that

- \(\varrho \) can represent the identity \(\mathrm {id}: {\mathbb {R}}\rightarrow {\mathbb {R}}, x \mapsto x\) with m terms for some \(m \in {\mathbb {N}}\).

Then, for any depth growth function \({\mathscr {L}}\), \(k \in {\mathbb {N}}\), \(\alpha > 0\), \(p,q \in (0,\infty ]\), with  as in Eq. (1.3), we have the identities

and there exists \(C < \infty \) such that

Before giving the proof, let us clarify the precise choice of (quasi)-norm for the vector-valued spaces  from Eq. (1.3). For \(f = (f_{1},\ldots ,f_{k}): \Omega \rightarrow {\mathbb {R}}^{k}\) and \(0< p < \infty \), it is defined by \( \Vert f\Vert _{L_p(\Omega ;{\mathbb {R}}^k)}^{p} := \sum _{\ell =1}^{k} \Vert f_{\ell }\Vert _{L_{p}(\Omega ;{\mathbb {R}})}^{p} = \int _{\Omega } |f(x)|_{p}^{p} \, {\mathrm{d}}x \), where \(|u|_{p}^{p} := \sum _{\ell =1}^{k}|u_{\ell }|^{p}\) for each \(u \in {\mathbb {R}}^{k}\). For \(p=\infty \), we use the definition \(\Vert f\Vert _{\infty } := \max _{\ell = 1,\ldots ,k} \Vert f_{\ell }\Vert _{L_{\infty }(\Omega ;{\mathbb {R}})}\).
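As an illustration of this convention, the following sketch evaluates a discrete analogue of the vector-valued (quasi)-norm, under the assumption that the integral over \(\Omega \) is replaced by a sum over finitely many sample points (counting measure). The function names and the list-of-tuples representation are hypothetical choices made only for this example.

```python
def lp_vector(samples, p):
    """Discrete analogue of ||f||_{L_p(Omega; R^k)} via sum_x |f(x)|_p^p.

    samples: list of k-tuples, the values f(x_i) at the sample points.
    p: positive float, or float("inf").
    """
    if p == float("inf"):
        return max(abs(u) for value in samples for u in value)
    total = sum(abs(u) ** p for value in samples for u in value)
    return total ** (1.0 / p)


def lp_componentwise(samples, p):
    """Same quantity via the component norms: sum_l ||f_l||_p^p (or the max for p = inf)."""
    k = len(samples[0])
    if p == float("inf"):
        return max(max(abs(value[l]) for value in samples) for l in range(k))
    component_powers = [sum(abs(value[l]) ** p for value in samples) for l in range(k)]
    return sum(component_powers) ** (1.0 / p)
```

Both functions return the same value for every \(p\), which is the discrete counterpart of the identity between the sum of the component norms and the integral of \(|f(x)|_{p}^{p}\). The value \(p = 1/2\) is admissible as well, in which case the result is only a quasi-norm.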

Proof

When \(\varrho \) can represent the identity with m terms, we rely on Lemma 2.25 and on the estimate \({\mathscr {L}}(n) \le {\mathscr {L}}(m^2 n)\) to obtain for any \(n \in {\mathbb {N}}\) that

and similarly  , so that

We now establish similar results for the case where \(\Omega \) is bounded, \(\varrho \) is continuous, and \(\varrho '(x_{0}) \ne 0\) is well defined for some \(x_{0} \in {\mathbb {R}}\). We rely on Lemma 2.22. First, note by continuity of \(\varrho \) that any \(f \in {\mathtt {NN}}^{\varrho ,d,k} \supset {\mathtt {SNN}}^{\varrho ,d,k}\) is a continuous function \(f : {\mathbb {R}}^d \rightarrow {\mathbb {R}}^k\). Furthermore, since \(\Omega \) is bounded, \({\overline{\Omega }}\) is compact, so that \(f|_{{\overline{\Omega }}}\) is uniformly continuous and bounded. Clearly, this implies that \(f|_{\Omega }\) is uniformly continuous and bounded as well. Since  , this implies

and similarly for  . Since \(\Omega \subset {\mathbb {R}}^d\) is bounded, locally uniform convergence on \({\mathbb {R}}^d\) implies convergence in  . Hence, for any \(n \in {\mathbb {N}}_0\), using that \({\mathscr {L}}(n) \le {\mathscr {L}}(4n)\), Lemma 2.22 yields

where the closure is taken with respect to the topology induced by  . Similarly, we have

Now, for an arbitrary subset  , observe by continuity of  that

that is, if one is only interested in the distance of functions f to the set \(\Gamma \), then switching from \(\Gamma \) to its closure \({\overline{\Gamma }}\) (computed in  ) does not change the resulting distance. Therefore,

In both settings (\(\varrho \) can represent the identity, or \(\Omega \) is bounded and \(\varrho \) differentiable at \(x_0\)), Lemma 3.1 shows  and  for some \(C \in (0,\infty )\). The conclusion follows using Proposition 3.7. \(\square \)

3.4 Connectivity Versus Number of Neurons

Lemma 3.9

Consider \(\varrho : {\mathbb {R}}\rightarrow {\mathbb {R}}\) an activation function, \({\mathscr {L}}\) a depth growth function, \(d,k \in {\mathbb {N}}\), \(p \in (0,\infty ]\) and a measurable \(\Omega \subseteq {\mathbb {R}}^d\) with nonzero measure. With  , we have for any \(\alpha >0\) and \(q \in (0,\infty ]\)

and there exists \(c > 0\) such that

When \(L := \sup _{n} {\mathscr {L}}(n)=2\) (i.e., for shallow networks), the exponent \(\alpha /2\) can be replaced by \(\alpha \); that is,  with equivalent norms.\(\blacktriangleleft \)

Remark

We will see in Lemma 3.10 that  if, for instance, \(\varrho = \varrho _r\) is a power of the ReLU, if \(\Omega \) is bounded, and if \(L := \sup _{n \in {\mathbb {N}}} {\mathscr {L}}(n)\) satisfies \(3 \le L < \infty \). In general, however, one cannot expect the spaces to be always distinct. For instance, if \(\varrho \) is the activation function constructed in [45, Theorem 4], if \(L \ge 3\), and if \(\Omega \) is bounded, then both  and  coincide with \(X_p(\Omega )\). \(\blacklozenge \)

Proof

We give the proof for generalized networks. By Lemma 2.14 and Eq. (2.3),

for any \(n \in {\mathbb {N}}\). Hence, the approximation errors satisfy

By the first inequality in (3.7),  and  .

When \(L=2\), by the remark following Eq. (2.3) we get \({\mathtt {NN}}^{\varrho ,d,k}_{\infty ,{\mathscr {L}}(n),n} \subset {\mathtt {NN}}^{\varrho ,d,k}_{(d+k)n,{\mathscr {L}}(n),\infty }\); hence,  so that Lemma 3.1 shows  , with a corresponding (quasi)-norm estimate; hence, these spaces coincide with equivalent (quasi)-norms.

For the general case, observe that \(n^{2}+(d+k)n +dk \le (n+\gamma )^{2}\) with \(\gamma := \max \{ d,k \}\). Let us first consider the case \(q < \infty \). In this case, we note that if \((n+\gamma )^2 + 1 \le m \le (n+\gamma +1)^2\), then \(n^2 \le m \le (2\gamma +2)^2 \, n^2\), and thus, \(m^{\alpha q - 1} \lesssim n^{2 \alpha q - 2}\), where the implied constant only depends on \(\alpha , q\), and \(\gamma \). This implies

where \(C = C(\alpha ,q,\gamma ) < \infty \), since the sum has \(((n+\gamma )+1)^2 - (n+\gamma )^2 = 2 n+2\gamma + 1 \le 4n (2\gamma +1)\) many summands. By the second inequality in (3.7), we get for any \(n \in {\mathbb {N}}\)

It follows that

To conclude, we use that  with \(C' = \sum _{m=1}^{(\gamma +1)^{2}} m^{\alpha q-1}\).

The proof for \(q=\infty \) is similar. The proof for strict networks follows along similar lines. \(\square \)
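The elementary counting estimates used in the general case of the proof can be sanity-checked numerically. The sketch below is only an illustration for small parameter values and is not a substitute for the argument; the function name `check_counting_bounds` is hypothetical.

```python
def check_counting_bounds(d, k, n):
    """Check the elementary estimates from the proof of Lemma 3.9 for given d, k, n >= 1."""
    gamma = max(d, k)

    # n^2 + (d + k) n + d k factors as (n + d)(n + k), hence is at most (n + gamma)^2.
    lhs = n * n + (d + k) * n + d * k
    ok_square = lhs == (n + d) * (n + k) and lhs <= (n + gamma) ** 2

    # Indices m with (n + gamma)^2 + 1 <= m <= (n + gamma + 1)^2.
    block = range((n + gamma) ** 2 + 1, (n + gamma + 1) ** 2 + 1)

    # The block has 2n + 2 gamma + 1 elements, which is at most 4n (2 gamma + 1).
    ok_count = (len(block) == 2 * n + 2 * gamma + 1
                and len(block) <= 4 * n * (2 * gamma + 1))

    # Every such m satisfies n^2 <= m <= (2 gamma + 2)^2 n^2.
    upper = (2 * gamma + 2) ** 2 * n * n
    ok_range = all(n * n <= m <= upper for m in block)

    return ok_square and ok_count and ok_range
```

Calling `check_counting_bounds(d, k, n)` over a small grid of values for `d`, `k`, and `n` confirms the three estimates in each case.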

The final result in this subsection shows that the inclusions in Lemma 3.9 are quite sharp.

Lemma 3.10

For \(r \in {\mathbb {N}}\), define \(\varrho _r : {\mathbb {R}}\rightarrow {\mathbb {R}}, x \mapsto (x_+)^r\).

Let \(\Omega \subset {\mathbb {R}}^d\) be bounded and measurable with non-empty interior. Let \(L, L' \in {\mathbb {N}}_{\ge 2}\), let \(r_1, r_2 \in {\mathbb {N}}\), let \(p_1,p_2,q_1,q_2 \in (0,\infty ]\), and \(\alpha , \beta > 0\). Then, the following hold:

(1) If  , then \(L' - 1 \ge \tfrac{\beta }{\alpha } \cdot \lfloor L/2 \rfloor \).

(2) If  , then \(\lfloor L/2 \rfloor \ge \frac{\alpha }{\beta } \cdot (L' - 1)\).

In particular, if  , then \(L = 2\).\(\blacktriangleleft \)

The proof of this result is given in Appendix E.

3.5 Role of the Depth Growth Function

In this subsection, we investigate the relation between approximation classes associated with different depth growth functions. First, we define a comparison rule between depth growth functions.

Definition 3.11

(Comparison between depth growth functions) The depth growth function \({\mathscr {L}}\) is dominated by the depth growth function \({\mathscr {L}}'\) (denoted \({\mathscr {L}} \preceq {\mathscr {L}}'\) or \({\mathscr {L}}' \succeq {\mathscr {L}}\)) if there are \(c,n_{0} \in {\mathbb {N}}\) such that