Abstract

Mobile smartphones have completely changed the way people communicate within the last ten years. However, these devices not only offer communication through different channels but also provide hardware and applications for fun and recreation. In this respect, mobile phones now include relatively fast cameras (up to 240 Hz) to capture high-speed videos of sport events or other fast processes. The article therefore explores the possibility of making use of this development and the widespread availability of these cameras for velocity measurements in industrial or technical applications and for fluid dynamics education in high schools and at universities. The requirements for a simple PIV (particle image velocimetry) system are discussed. A model experiment of a free water jet was used to prove the concept, to shed some light on the achievable quality and to identify bottlenecks by comparing the results obtained with a mobile phone camera with data taken by a high-speed camera suited for scientific experiments.


1 Introduction

1.1 Motivation

The reliable characterization of fluid flows is very important for the basic physical understanding of, e.g., aerodynamics, turbulence research and process engineering, among many other fundamental fields. However, it would also be useful for many industrial applications where internal or external flows occur in complex geometries, or for teaching pupils and undergraduate students basic fluid mechanical concepts. In addition, the qualitative visualization of particle trajectories that follow a fluid flow and the quantitative measurement of the velocity magnitude are very helpful for the optimization and monitoring of industrial apparatus (medical devices, chemical engineering apparatus, heating, water supply, waste water management, etc.).

A sad example where it was necessary to estimate the flow rate using a non-scientific video camera was the Deepwater Horizon accident in 2010 (Crone and Tolstoy 2010). Different data evaluation techniques were applied to the images of a video sequence showing the rising oil plume. The videos were captured with a frame rate of 30 Hz by a video camera installed in a remotely controlled underwater vehicle. For the illumination, only the front light of the submarine was available. Different methods were tested and compared. Correlation-based methods proved to be very robust and gave good estimates under certain conditions (Staack et al. 2012).

In recent years, particle image velocimetry (PIV) and particle tracking velocimetry (PTV) have become major tools in fluid mechanics to measure flow fields (Adrian 2005; Kähler et al. 2016). Whereas two-component velocity measurements in a two-dimensional plane are state of the art, modern techniques such as tomographic PIV (Elsinga et al. 2006) or multi- or single-camera PTV allow for the measurement of the three-dimensional velocity field in a volume. Very sophisticated techniques were developed and compared with each other (Kähler et al. 2016). The underlying principle of PIV and PTV is the imaging of small tracer particles, which are suspended in the flow and should follow the fluid motion faithfully, at two successive time steps. By evaluating the displacement of the particles [either with cross-correlation (Willert and Gharib 1991; Scarano 2002) or with tracking of individual particle images (Malik et al. 1993)] and knowing the optical magnification and the time separation between the two images, the velocity vector can be estimated.

However, the major drawback is the high effort in terms of complex setups, costly and fragile experimental devices and comprehensive expert knowledge about the data acquisition and evaluation routines. For a decent 2D2C PIV setup, a double-pulse or high-speed camera costs in the order of 25,000 to 50,000 Euro. The laser light source would be another 25,000 Euro, and together with the data acquisition and processing infrastructure this easily adds up to a total amount of about 60,000 to 100,000 Euro.

On the other hand, the flow velocities in many industrial applications are often low and very high precision measurements are not necessary. Furthermore, the surroundings might be harsh and the financial barrier is often too high for small companies or schools/universities to purchase a sophisticated PIV setup. However, today's mobile phones contain cameras that are capable of image recording with high frame rates and reasonable quality. Due to mass production, the cost of these devices is typically in the order of 500–1000 Euro and they can in principle be used for PIV or PTV. The main benefit of using a camera with constant frame rate in comparison with double frame cameras is that a continuous light source can be used, and thus, no costly and complex synchronization between camera and light source is necessary. Solid-state cw-lasers are available for approximately 1000 Euro per Watt optical power, and high-power LEDs are even cheaper. For the data evaluation, free software or demo/education versions are available for free or at low cost. This software is fully satisfactory for the 2D2C flow field estimation. Therefore, it was the scope of the current analysis to evaluate whether a PIV system featuring a mobile phone’s camera and a low-budget cw-laser would be suitable for reliable flow field estimation. It has to be mentioned that the study was limited to flows in water for two reasons. First of all, higher Reynolds numbers can be reached in comparison to air flows due to the lower kinematic viscosity of water, and thus, the technical relevance might be higher. Second, larger particles can be used in water, and therefore, the signal-to-noise ratio is much larger using low-power light sources like low-power cw-lasers or LEDs.

The paper is organized as follows. First, the requirements for different flow applications are discussed to give a guideline for the application of such a system. Second, a mobile phone camera was qualified in terms of field of view and data readout procedure to evaluate limits for the application. Third, a simple nozzle test flow was characterized simultaneously by a high-speed PIV system that meets scientific requirements and the mobile phone camera to determine the related uncertainties of the proposed low-budget system. The paper ends with a summary and an outlook.

1.2 Sampling requirements and currently available cameras in mobile phones

In Fig. 1 (left), the required acquisition frequency f is given versus the fluid velocity u for different particle displacements in physical space. Figure 1 (right) shows the particle image displacement on the sensor in pixels as a function of the particle displacement in physical space and the magnification, typical for mobile phone cameras, similar to the analysis by Hain and Kähler (2007). The graph is based on the assumption of a size of \(1.5 \times 1.5\,\upmu \mathrm {m}^2\) per pixel, similar to that of the camera of the iPhone 6 (Apple Inc, USA 2015) and typical for modern mobile phone cameras. The graphs show that a broad fluid mechanical spectrum is covered by using a mobile phone camera for flow investigations. The Reynolds number given on the second horizontal axis in Fig. 1 (left) should provide a first estimate for the user and is based on a length scale of 0.1 m and the kinematic viscosity of water at 20 °C (\(\nu = 1 \cdot 10^{-6}\,\frac{\mathrm {m^2}}{\mathrm {s}}\)).

Fig. 1 Left: particle displacement \(\Delta x\) in physical space depending on the flow velocity and the acquisition frequency. The dashed line marks \(240\,\mathrm {Hz}\), the maximum acquisition rate of the mobile phone camera at a resolution of \(1280 \times 720\,\mathrm {px}\). Right: particle image displacement \(\Delta X\) in pixel in the image plane depending on \(\Delta x\) and the magnification factor, assuming a pixel size of \(1.5 \times 1.5\,\upmu \mathrm {m}^2\), which is the pixel size of the mobile phone camera (Apple Inc USA 2015)
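The relations behind Fig. 1 can be sketched in a few lines. The following is only an illustrative calculation, not the authors' script: the function names, the example velocity of 0.1 m/s and the magnification of 0.02 are assumptions chosen for demonstration, whereas the pixel size, the length scale and the viscosity are taken from the text.

```python
# Minimal sketch of the sampling estimate shown in Fig. 1.
# Function names and the example inputs are hypothetical.

PIXEL_SIZE_M = 1.5e-6    # pixel size quoted in the text (1.5 um)
NU_WATER = 1.0e-6        # kinematic viscosity of water at 20 degC in m^2/s
LENGTH_SCALE_M = 0.1     # length scale used for the Reynolds number axis


def displacement_physical(u, f):
    """Particle displacement in physical space (m) between two frames."""
    return u / f


def displacement_pixels(dx, magnification, pixel_size=PIXEL_SIZE_M):
    """Particle image displacement on the sensor in pixels."""
    return magnification * dx / pixel_size


def reynolds(u, length=LENGTH_SCALE_M, nu=NU_WATER):
    """Reynolds number for velocity u (m/s)."""
    return u * length / nu


u = 0.1                # m/s, assumed example velocity
f = 240.0              # Hz, maximum frame rate of the mobile phone camera
magnification = 0.02   # assumed example magnification

dx = displacement_physical(u, f)
dX = displacement_pixels(dx, magnification)
print(f"dx = {dx * 1e3:.3f} mm, dX = {dX:.2f} px, Re = {reynolds(u):.0f}")
```

With these assumed inputs the particle image displacement is a few pixels, which is a range that correlation-based evaluation can typically handle.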

Unfortunately, data on current mobile phone camera frame rates are limited. Most often the active sensor area in pixels is reduced for higher frame rate recordings. The frame rates are usually specified for the shorter side of the sensor. Currently, sampling rates of 120 and 240 Hz are the highest frame rates on the market at a typical resolution of \(1280 \times 720\,\mathrm {pixel}\) for different manufacturers. However, it is relatively unlikely that the frame rates will increase much further in the future since 240 Hz offers a good compromise between slow motion recording of most sport scenes and the ability to capture enough light for the imaging of the scene.

Another important issue is the exposure time which very often cannot be manually set. However, some of the latest operating systems offer the ability to manually control the exposure time which is a great benefit to avoid motion blur as discussed later.

1.3 Low-cost light sources

Nowadays, double-pulse Nd:YAG lasers are typically applied in conventional PIV systems. These lasers offer pulses with a duration in the order of 8 ns, and thus, motion blur can be avoided in most applications. Two cavities allow for a completely free timing of the two laser pulses, i.e., the laser pulse separation is adaptable to the experimental requirements without changing the pulse energy or duration. Depending on the layout of the cavities, a very low divergence (\(M^{2}<1.5\)) may be obtained, which allows the laser beam to be focused over large distances. This is required for many PIV applications in order to generate a thin laser light sheet over the whole field of view. However, a drawback of these lasers is the high price. Depending on the cavity layout and the manufacturer, such lasers with \(2 \times 200\,\mathrm {mJ}\) at \(532\,\mathrm {nm}\) and a repetition frequency of \(10\,\mathrm {Hz}\) cost in the order of 35,000–60,000 Euro.

For time-resolved flow investigations, lasers with higher repetition rates are required. Dual-cavity high-speed lasers for PIV applications with frequencies of up to \(10\,\mathrm {kHz}\) are often employed. At \(1\,\mathrm {kHz}\) these lasers have pulse energies in the order of \(20\,\mathrm {mJ}\) and their price is in the order of 100,000 Euro or above.

Most of the applications in air require the lasers mentioned above, since the size of the seeding particles is typically in the order of \(1\,\upmu \mathrm {m},\) and thus, the scattered light intensity is quite weak. However, due to the high density of liquids, the seeding particles used for investigations in liquids can be much larger. Hollow glass spheres are available whose specific weight matches that of water, so that their size is less of a problem and they still follow the flow faithfully. Often hollow glass spheres with diameters in the order of \(10\,\upmu \mathrm {m}\) are used for measurements in macroscopic domains. These large particles scatter much more light than the small particles used in air, and thus, lasers with a relatively low energy/power can be applied for measurements in liquids/water. In recent years, many low-cost lasers became available on the market. For PIV applications typically \(532\,\mathrm {nm}\) diode-pumped solid-state (DPSS) lasers are used, which offer a low \(M^2\) if designed as single-mode lasers. These lasers are available with a power of more than \(10\,\mathrm {W}\) at considerably lower costs than the pulsed lasers typically used in scientific PIV setups. Recently Willert (2015) showed the suitability of a cw-laser illumination in combination with a high-speed camera for the measurement of the statistical properties in a turbulent flow. Other lasers which are well suited for PIV applications and which offer a higher beam quality than DPSS lasers are optically pumped semiconductor lasers (OPSL). These lasers typically have smaller beam diameters than DPSS lasers, and they offer a very low \(M^{2}<1.5\). This allows for the generation of thin and homogeneous laser light sheets. The costs for these lasers are somewhat higher compared to the DPSS lasers (approx. 50 % more expensive).

A cheaper light source compared to the lasers discussed so far is the high-power LED. Willert et al. (2010) and Buchmann et al. (2012) have shown that these LEDs can be applied for measurements in liquids, even for volume illumination. They provide a radiant power in excess of \(10\,\mathrm {W}\), and the price for a complete system is below 1000 Euro. However, the drawback in comparison with lasers is the larger thickness of the illuminated region and the higher divergence, which leads to light sheets with a poorer homogeneity.

2 Camera qualification

2.1 Field of view and optical distortions

For sampling rates lower than 120 Hz, the sensor size is \(1920\times 1080\) pixel for the mobile phone camera. In order to increase the sampling rate, the active sensor size is lowered to \(1280\times 720\) pixel. To estimate the corresponding field of view (FOV) and the optical distortions, a calibration target was translated in viewing direction from the closest point in front of the mobile phone where a focused image could be recorded, \(z = 65\) mm, up to \(z = 200\) mm for \(f_{\mathrm {rec}} = 30\) and 240 Hz. In Fig. 2, the spatial resolution in mm/pixel determined in x- and y-direction is shown by the lines with symbols (left y-axis). The corresponding FOV is illustrated by the plain lines (right y-axis). It can be seen that the spatial resolution in both directions is equal for the same frame rate, but differs between the frame rates. For \(f_{\mathrm {rec}} = 30\) Hz the spatial resolution takes values between 0.03 and 0.1 mm/pixel for the investigated distances. For the same distances, the spatial resolution is \(0.055 \ldots 0.15\) mm/pixel for a frequency of \(f_{\mathrm {rec}} = 240\) Hz, which suggests that some kind of pixel binning is undertaken. However, the software is programmed in a way that the FOV stays constant when changing the frame rate. For the closest distance, the short axis is about 33 mm and the long axis is 58 mm. The FOV increases linearly to values of 104 and 184 mm (short and long axis) for the largest distance of \(z = 200\) mm, which is a reasonably large FOV for many PIV applications.

Since the camera objective is optimized for image acquisition in air, the distortions are very small as shown in Fig. 3, where the mean and rms calibration errors are plotted over the distance z. It can be seen that both values have the same order of magnitude and increase with distance, i.e., with larger FOVs, so that the relative error stays almost constant. In general, the calibration errors are below 0.03 mm, which is considered to be very low and indicates reasonably good camera optics.

However, the user has to be aware that the objective (like most camera objectives) is optimized for air. So-called pincushion distortion will appear in the image if a scene is imaged through planes of refractive index change (glass window and water for the current experiments). This distortion is larger for wide angle lenses, which most mobile phone camera lenses are. Most of the image distortions can be corrected with a proper calibration. For a quantification of the pincushion effect in the current investigation, a calibration plate with an equidistant grid was placed at the measurement plane. The mean difference between the outermost lines of the grid and the line in the middle, where the distortions should ideally be zero, was 3.7 pixel (over 360 pixel) and 8.3 pixel (over 640 pixel) for the x- and y-direction, respectively. This corresponds to ≈0.01 pixel/pixel. Using the same third-order calibration function as for the data in Fig. 3, the mean magnitude of the calibration error was still very low at ≈0.024 mm for a field of view of \({\approx}120\times 70\,\mathrm {mm}^2\) at 240 Hz and thus only 20 % larger than in air for the same conditions. However, the perspective distortion due to high viewing angles cannot be accounted for and will lead to erroneous results in the case of out-of-plane movement. In the current setup, the maximum viewing angle was approximately 16°.
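How a third-order calibration can absorb a pincushion distortion of about 1 % may be illustrated with a synthetic grid. The sketch below is an assumption-laden example, not the calibration routine used in the study: the exact form of the calibration function is not given in the text, so a full bivariate polynomial of total degree three is assumed here, and the radial distortion model, the grid and all names are hypothetical.

```python
import numpy as np


def poly3_terms(X, Y):
    """All monomials X**i * Y**j with i + j <= 3 (10 columns)."""
    return np.column_stack(
        [X**i * Y**j for i in range(4) for j in range(4 - i)]
    )


def fit_calibration(X, Y, x, y):
    """Least-squares fit of image coordinates (X, Y) to physical (x, y)."""
    A = poly3_terms(X, Y)
    coeff_x, *_ = np.linalg.lstsq(A, x, rcond=None)
    coeff_y, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coeff_x, coeff_y


def apply_calibration(coeff_x, coeff_y, X, Y):
    """Map image coordinates to physical coordinates."""
    A = poly3_terms(X, Y)
    return A @ coeff_x, A @ coeff_y


# Synthetic equidistant grid covering about 120 mm x 70 mm.
gx, gy = np.meshgrid(np.linspace(-60, 60, 25), np.linspace(-35, 35, 15))
x, y = gx.ravel(), gy.ravel()

# Assumed pincushion model in normalized coordinates (about 1 % at the edge).
k = 0.01
xn, yn = x / 60.0, y / 60.0
r2 = xn**2 + yn**2
X, Y = xn * (1.0 + k * r2), yn * (1.0 + k * r2)

coeff_x, coeff_y = fit_calibration(X, Y, x, y)
x_fit, y_fit = apply_calibration(coeff_x, coeff_y, X, Y)
mean_error_mm = float(np.mean(np.hypot(x_fit - x, y_fit - y)))
print(f"mean calibration error: {mean_error_mm:.4f} mm")
```

The image coordinates are normalized before the fit to keep the least-squares problem well conditioned. The residual of this idealized example only reflects the model mismatch; real calibration errors also contain detection noise of the grid points.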

2.2 Readout and frequency stability

For reliable measurements in PIV, the time interval between two successive frames has to be known with high precision. To test whether the image acquisition frequency was constant, a signal with known frequency was generated by a high-power LED. The LED was driven by a function generator (Instek AFG 2125). The signal of the LED was additionally qualified by a fast photodiode (Alphalas GmbH, UPD200-UD, rise time <175 ps) and a fast oscilloscope (LeCroy 4096 HD, 1 GHz sampling rate). The comparison of the driving signal and the light output for the used frequency range showed negligible differences, and it can be assumed that the square signal of the LED can be taken as a basis to qualify the readout procedure. During the inspection of the data, it was observed that the mobile phone camera tried to adjust the exposure time immediately when the illumination changed. For that reason, the recorded intensity signal shows a temporal delay with respect to the signal of the LED.
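One way to evaluate such a test is to count the number of recorded frames per LED period. The following sketch uses a synthetic intensity signal; the LED frequency of 10 Hz, the intensity levels and the function name are assumptions for illustration and are not taken from the experiment.

```python
import numpy as np


def estimate_frame_rate(intensity, f_led):
    """Estimate the camera frame rate from the mean image intensity per frame.

    Returns the mean frame rate and its scatter (both in Hz), based on the
    number of frames between successive rising edges of the LED signal.
    """
    threshold = 0.5 * (intensity.min() + intensity.max())
    on = intensity > threshold
    rising = np.flatnonzero(~on[:-1] & on[1:]) + 1
    frames_per_period = np.diff(rising)
    return frames_per_period.mean() * f_led, frames_per_period.std() * f_led


# Synthetic recording: square-wave LED sampled by an ideal 240 Hz camera.
f_cam_nominal = 240.0
f_led = 10.0                       # assumed LED frequency
frame_index = np.arange(2400)
phase = (frame_index / f_cam_nominal * f_led + 0.013) % 1.0
intensity = np.where(phase < 0.5, 1.0, 0.1)

f_est, f_jitter = estimate_frame_rate(intensity, f_led)
print(f"estimated frame rate: {f_est:.1f} Hz, scatter: {f_jitter:.2f} Hz")
```

Since only whole frames are counted, a single period resolves the frame rate coarsely; averaging over many periods, as done here, reduces this quantization effect.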

At this point, it also has to be mentioned that most mobile phone cameras work with a rolling shutter, which means that the entire image is not captured at once, but the pixels are scanned successively. This leads to a short time delay between pixels. Therefore, pulsed light sources cannot be used, since the duration of the light pulses is so short that only part of the image would be illuminated. Frame straddling (Raffel et al. 2007), which is used to set a certain time delay between images and to lower the effective exposure time to avoid streaks, would therefore not work.

In principle, it would also be possible to use other recording modes designed for the photography of sport scenes instead of the video mode. Modern mobile phones offer the possibility to shoot ten or more pictures at frequencies of about 10 Hz, and for some models the exposure time can be set manually. Even if a global shutter can be used in this mode, the synchronization with a pulsed light source cannot easily be achieved and a continuous light source has to be employed, although the low frame rate of 10 Hz strongly decreases the range of applicability (cf. Fig. 1).

However, to conclude for the currently used video mode, the frequency was well captured for both frame rates and no hint of any kind of instability for the timing was found. This is a very important prerequisite for reliable measurements.

3 Experimental verification

3.1 Setup

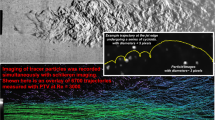

For the verification of the measurements with the mobile phone camera, a simple jet flow was established in a water basin with dimensions \(15.5\times 12.5\times 16\,\mathrm {cm}^3\). The nozzle was made from Teflon and had a diameter of 5 mm. The jet axis will be referred to as x-axis. A schematic of the setup is shown in Fig. 4. The water was seeded with 1 \(\upmu\)m large polystyrene particles (Vestosint) and driven by a syringe pump. The laser light sheet was generated by expanding the beam of a 1 W cw-laser light source. In order to estimate the ground truth, the flow was observed from the opposite side with a scientific high-speed camera (Dimax HS 4 by PCO GmbH, pixel size 11 \(\upmu\)m). The spatial resolution of the mobile phone camera was 12.41 pixel/mm, whereas the spatial resolution of the high-speed camera was 23.23 pixel/mm with a Zeiss f = 50 mm objective lens to have approximately the same field of view. The exposure time of the high-speed camera was limited to 700 \(\upmu\)s in order to avoid motion blur. As already stated, the exposure time of the mobile phone camera could not be adjusted. Two different volume flow rates of 50 and 100 ml/min were set by the syringe pump (Harvard Systems) and resulted in Reynolds numbers, based on the nozzle diameter and the measured exit velocities, of Re = 330 and 550, respectively. To see whether the mobile phone can also provide data for the developing flow, the image capturing was started just before initiating the flow and images were captured for a longer time to allow the jet to reach a steady state.

Since the mobile phone camera could not be triggered, the two cameras were synchronized a posteriori via cross-correlation of the unsteady velocity signal over time. For this purpose, the temporal velocity signal at the location \(x = 70\) mm and \(y = 0\) mm was correlated between the two cameras. The largest correlation value appears when the two signals superimpose, so that the temporal offset between the two cameras can be determined. This was necessary to make sure that the same time spans of the developing flow were taken into account for the averaging of the final results.
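The a posteriori synchronization can be sketched as a one-dimensional cross-correlation of two velocity time series. The example below is only a sketch under assumptions: it uses a synthetic random signal as a stand-in for the measured velocity at the probe location, and the function name and the artificial lag of 37 frames are hypothetical.

```python
import numpy as np


def temporal_offset(signal_a, signal_b):
    """Lag (in frames) by which signal_a is delayed relative to signal_b."""
    a = signal_a - signal_a.mean()
    b = signal_b - signal_b.mean()
    corr = np.correlate(a, b, mode="full")
    # Index 0 of the full correlation corresponds to a lag of -(len(b) - 1).
    return int(np.argmax(corr)) - (len(b) - 1)


# Synthetic stand-in for the unsteady velocity signal seen by both cameras.
rng = np.random.default_rng(0)
base = rng.standard_normal(700)
true_lag = 37                                       # frames, assumed
signal_b = base[50:550]                             # e.g. high-speed camera
signal_a = base[50 - true_lag:550 - true_lag]       # delayed copy, e.g. phone

lag = temporal_offset(signal_a, signal_b)
offset_s = lag / 240.0                              # both recorded at 240 Hz
print(f"lag = {lag} frames, offset = {offset_s:.4f} s")
```

In a real measurement the two signals differ by measurement noise, so the correlation peak is broader, but its position still marks the temporal offset as long as the flow signal is sufficiently unsteady.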

3.2 Flow measurements

The frame rate for the results was set to 240 Hz, which results in a maximum displacement of 10 pixel for the scientific high-speed camera and about half of that value, i.e., 5 pixel, for the mobile phone camera. The advantage of the higher sampling rate is that motion blur can be limited, since it was not possible to manually adjust the exposure time. The effect of motion blur can be seen in the raw images shown in Fig. 5. Using the lower frequency results in clearly visible streaks in the core of the jet, whereas only slight motion blur can be seen for the higher acquisition frequency. However, since the displacement between images is very small at the higher frame rate of 240 Hz, the results of the mobile phone camera have a much larger relative uncertainty. On the other hand, the flow in this case is highly oversampled, and it is possible to apply the correlation not only for a time difference of \(\Delta t = 1/240\,\) s, but also to use information from correlations of images that are separated by \(n\times \Delta t\), with \(n \in \mathbb {N}\) (Hain and Kähler 2007). Recently, many different ideas to make use of the temporal information were developed and successfully applied (Sciacchitano et al. 2012; Lynch and Scarano 2013; Cierpka et al. 2013; Schröder et al. 2015). For a recent overview of their performance, the interested reader is referred to Kähler et al. (2016).
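The benefit of a larger frame separation can be illustrated with a simple estimate. The sketch assumes a constant absolute displacement uncertainty of 0.1 pixel, which is a rule-of-thumb value introduced here for illustration and not a result of this study; the function names are hypothetical, while the frame rate and the image scale are taken from the text.

```python
# Relative displacement uncertainty for correlations over n * dt.
FRAME_RATE_HZ = 240.0
SCALE_PX_PER_MM = 12.41   # mobile phone camera, from the setup description
SIGMA_PX = 0.1            # assumed absolute displacement uncertainty


def velocity_mm_per_s(displacement_px, n):
    """Velocity from a displacement measured over n frame intervals."""
    return displacement_px / SCALE_PX_PER_MM * FRAME_RATE_HZ / n


def relative_uncertainty(displacement_px):
    """Relative uncertainty for a constant absolute uncertainty."""
    return SIGMA_PX / displacement_px


for n in (1, 2, 3):
    displacement = 5.0 * n   # about 5 px per frame interval in the jet core
    print(
        f"n = {n}: {displacement:.0f} px, "
        f"u = {velocity_mm_per_s(displacement, n):.1f} mm/s, "
        f"relative uncertainty = {relative_uncertainty(displacement):.1%}"
    )
```

Under this assumption the relative uncertainty scales with 1/n, while the measured velocity stays the same as long as the flow does not change within the longer time interval.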

For the final interrogation windows, a size of \(32\times 32\) pixel was chosen for the high-speed camera and \(16\times 16\) pixels for the mobile phone camera with an overlap of 50 %. Using these values, the vector spacing was 0.65 mm for the mobile phone camera and 0.69 mm for the high-speed camera. The velocity fields were finally overlapped and interpolated to a common grid with 0.7 mm spacing using MATLAB routines with cubic data interpolation. Since the measurements were not a priori synchronized, the temporal evolution of the velocity at the axis of the jet was correlated between both cameras and the temporal correspondence between the vector fields could be captured. For the mean flow fields and rms distributions, 4000 corresponding vector fields were taken.
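The quoted vector spacings follow directly from the window size, the overlap and the image scale. The short check below uses only values from the text; the function names are hypothetical.

```python
def vector_spacing_mm(window_px, overlap, scale_px_per_mm):
    """Distance between neighboring vectors in physical space."""
    return window_px * (1.0 - overlap) / scale_px_per_mm


def window_size_mm(window_px, scale_px_per_mm):
    """Edge length of the interrogation window in physical space."""
    return window_px / scale_px_per_mm


phone_spacing = vector_spacing_mm(16, 0.5, 12.41)
highspeed_spacing = vector_spacing_mm(32, 0.5, 23.23)
phone_window = window_size_mm(16, 12.41)
highspeed_window = window_size_mm(32, 23.23)

print(f"phone:      spacing {phone_spacing:.3f} mm, window {phone_window:.2f} mm")
print(f"high-speed: spacing {highspeed_spacing:.3f} mm, window {highspeed_window:.2f} mm")
```

The results agree with the quoted spacings to within rounding, and the interrogation windows of both cameras cover nearly the same region in physical space.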

The images taken with 120 Hz showed large motion blur as shown in Fig. 5. However, the results for 120 Hz (not shown) match quite well between the mobile phone camera and the high-speed camera. The motion blur in the core of the jet does not seem to influence the results strongly since cross-correlation is very robust and only straight streaks appear. However, in the case of curved path lines this would affect the results to a larger extent; therefore, the following discussion is focused on the evaluation of images taken with 240 Hz, where the motion blur was considerably lower.

Instantaneous flow fields showing the starting process after turning on the jet are presented in Fig. 6. On the left side of the figure, the flow field using the images of the scientific high-speed camera is shown. For the results presented in the middle, the cross-correlation was calculated using \(\Delta t = 1/240\,\) s, and on the right side each image was correlated with its second successor, giving \(\Delta t = 2\times 1/240\,\)s.
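The correlation of an image with its direct successor or with its second successor can be sketched for a single interrogation window. This is a minimal FFT-based circular cross-correlation with an integer peak search, assumed here for illustration; it is not the evaluation software used for the results, which typically adds window shifting, sub-pixel peak fitting and outlier detection. A random pattern shifted by 3 pixel per frame serves as a synthetic particle image.

```python
import numpy as np


def displacement_fft(window_a, window_b):
    """Integer displacement (dx, dy) of window_b relative to window_a."""
    a = window_a - window_a.mean()
    b = window_b - window_b.mean()
    # Circular cross-correlation via the correlation theorem.
    corr = np.fft.irfft2(np.conj(np.fft.rfft2(a)) * np.fft.rfft2(b), s=a.shape)
    corr = np.fft.fftshift(corr)
    peak_y, peak_x = np.unravel_index(np.argmax(corr), corr.shape)
    return int(peak_x - a.shape[1] // 2), int(peak_y - a.shape[0] // 2)


# Synthetic sequence: the pattern moves 3 px per frame in x-direction.
rng = np.random.default_rng(0)
pattern = rng.random((32, 32))
frames = [np.roll(pattern, shift=3 * i, axis=1) for i in range(3)]

dx1, dy1 = displacement_fft(frames[0], frames[1])   # dt = 1/240 s
dx2, dy2 = displacement_fft(frames[0], frames[2])   # dt = 2/240 s
print(f"successor: ({dx1}, {dy1}) px, second successor: ({dx2}, {dy2}) px")
```

Dividing each displacement by its own time separation gives the same velocity in this idealized case, whereas in a real flow strong gradients and out-of-plane motion can bias the result for the larger separation.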

The main features are visible in all of the fields. However, the noise in the velocity distribution of the mobile phone camera (middle and right side of Fig. 6) is slightly higher than in the field of the scientific high-speed camera. This might be attributed to the different interrogation window sizes. However, although the interrogation window sizes in pixel are different for both cameras, the regions in physical space are nearly the same due to the different magnification factors. Therefore, the number of particle images in an interrogation window corresponds well. It is also obvious from the figure that taking a larger \(\Delta t\), i.e., performing correlations between images with a larger time delay, results in smoother vector fields as the relative error decreases. However, it can also be seen that in the region of the jet core, where the velocity gradients are very large, the velocity is underestimated by taking a twice as large \(\Delta t\), because the particles have to move twice the distance in physical space between the correlated images. This results in a loss of pairs due to out-of-plane motion for fast particles and in very strong in-plane gradients within the interrogation window, which leads to an underestimation of the mean velocity.

To give an overview of the flow, the mean velocities in x- and y-direction are shown in Fig. 7 using a fairly simple standard cross-correlation and no image preprocessing. The mean flow fields for both cameras show the expected jet flow with a high-speed core flow and decreasing velocities with increasing distance from the nozzle. From visual inspection, the agreement in the spatial distribution between the cameras is quite good and only minor differences show up in the distributions of the velocity. The artifact seen in the data for the mobile phone camera at \(x = 20\) mm and \(y = -3\) mm is attributed to some water droplets on the window of the water basin and should be regarded as an outlier. The mean absolute differences for all velocity components are also listed in Table 1. For the u-component, the mean difference is 0.77 mm/s, which is about 1 % of the jet core velocity. It can therefore be stated that there is also good quantitative agreement between the measurements.

However, in the case of the larger time delay between the correlated images (Fig. 7, right side) the velocity shows considerably lower values in the jet core than in the case of \(\Delta t = 1/240\) s. This is attributed to the aforementioned effect of the large gradients and could be reduced by the application of more advanced evaluation schemes (e.g., pyramid correlation or particle tracking).

The rms values of the velocity are shown in Fig. 9. Again, the results compare well among the different cameras and data evaluation schemes. High values for \(u_\mathrm {rms}\) are especially seen in the jet core region due to the transient nature of the jet. The generation of vortices is responsible for the large values of the \(v_\mathrm {rms}\) distribution. However, the differences between the scientific high-speed camera and the mobile phone camera are larger than for the mean values. To understand the distribution, one has to consider competing effects. Since the particle image displacement for the mobile phone camera was about half the displacement of the high-speed camera in the case of \(\Delta t = 1/240\) s, the relative errors are larger, which results in a larger deviation for the rms values as shown in Table 1. For the evaluation with \(\Delta t = 2\times 1/240\) s the differences are much lower, as the relative error decreases. This effect can especially be seen in regions of stagnant flow. However, as already mentioned, more advanced evaluation schemes such as the pyramid correlation (Sciacchitano et al. 2012) can be used to determine reliable mean values and to reduce the overestimation of the rms values due to the large relative errors. The pyramid correlation approach makes use of information from different time delays (here \(\pm 1\times 1/240\) s) and performs correlation averaging. In the case of the current measurements, the pyramid approach gave very similar results (therefore not shown) to the correlation with \(\Delta t = 1/240\) s for the mean values and shows much lower differences for the rms values as shown in Table 1.
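Why random measurement errors barely affect the mean but inflate the rms values can be illustrated with synthetic data. In the sketch below all numbers (mean velocity, fluctuation level, noise level, grid size) are assumptions chosen for demonstration and are not the values of Table 1; only the number of 4000 vector fields is taken from the text.

```python
import numpy as np


def mean_and_rms(stack):
    """Mean and rms fluctuation over the first axis (time) of a field stack."""
    return stack.mean(axis=0), stack.std(axis=0)


def mean_abs_difference(a, b):
    """Mean absolute difference between two fields."""
    return float(np.mean(np.abs(a - b)))


rng = np.random.default_rng(1)
n_fields, ny, nx = 4000, 10, 10

# Assumed flow: 50 mm/s mean with 5 mm/s physical fluctuations.
true_u = 50.0 + 5.0 * rng.standard_normal((n_fields, ny, nx))
reference = true_u                                              # ideal camera
phone = true_u + 2.0 * rng.standard_normal((n_fields, ny, nx))  # added noise

mean_ref, rms_ref = mean_and_rms(reference)
mean_phone, rms_phone = mean_and_rms(phone)

print(f"difference of means: {mean_abs_difference(mean_phone, mean_ref):.3f} mm/s")
print(f"rms reference: {rms_ref.mean():.2f} mm/s, rms phone: {rms_phone.mean():.2f} mm/s")
```

Uncorrelated noise averages out in the mean, whereas its variance adds to the variance of the physical fluctuations, so the measured rms is systematically overestimated when the relative error is large.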

In Fig. 8, profiles of the stream-wise velocity in x-direction at y = 0 mm (top) and in y-direction at \(x = 10, 30, 60\) mm (bottom) are shown for Re = 550 and an image acquisition rate of 240 Hz for \(\Delta t = 1/240\) s (black dashed line) and \(\Delta t = 2\times 1/240\) s (blue dotted line). These profiles make it obvious that the largest differences occur in the high-speed region close to the jet exit where the largest gradients appear. The mean stream-wise velocity shows a 10 % higher peak for the measurement by the mobile phone camera for the evaluation with \(\Delta t = 1/240\,\) s. The mean velocity for the evaluation with \(\Delta t = 2\times 1/240\,\) s shows the already discussed effect of underestimated velocities in the core region of the jet. However, the agreement for \(x \ge 20\) mm is very good. The same trend can be observed in the vertical profiles at different downstream locations in the bottom of the figure. Again, the velocity close to the jet exit deviates among the different evaluations, but further downstream a good agreement is achieved.

Fig. 8 Profiles of the stream-wise velocity in x-direction at y = 0 mm (top) and in y-direction at \(x = 10, 30, 60\) mm (bottom) for Re = 550 and image acquisition rates of 240 Hz for \(\Delta t = 1/240\,\) s (black dashed line for the mobile phone camera and red solid line for the scientific high-speed camera) and \(\Delta t = 2\times 1/240\,\) s (blue dotted line)

4 Conclusion and outlook

It was shown that PIV measurements using standard modern mobile phone cameras and low-cost light sources are feasible. The results also show that the application is limited to moderate flow velocities or coarse spatial resolutions. To avoid motion blur, it is recommended to always use the highest frame rate possible and to apply advanced evaluation techniques like sliding ensemble correlation or pyramid correlation, especially when large displacement gradients occur.

However, it is possible to get a first idea of the flow field and to identify technically problematic regions in industrial applications, or to use the technique for educational purposes, which increases the range of useful applications of modern mobile phones. Although the quality and range of applications could be enlarged if the hardware and/or software were modified, it was the aim of the current study to demonstrate that reliable flow field measurements can be carried out without any modification or expert knowledge. Since mobile phones are nowadays a major part of students' everyday life, the visualization of flow fields in fluid dynamics classes could help to make lecturing more demonstrative and lively. If easy-to-use software provided the velocity data instantaneously on the phone and allowed the exposure time to be controlled, the range of application would be extended even further, and a wider use for technical applications and education could be expected.

Maybe an overambitious concept would be the extension to multi-camera PIV measurements using synchronized phones. Some phones offer the synchronization with other Wi-Fi-connected devices. Thus, stereoscopic as well as tomographic PIV recordings under low seeding conditions and/or thick light sheets are possible in principle.

The major limitation so far is the fact that it is not possible to adjust the exposure time independently of the acquisition frame rate for many operating systems. However, very recent mobile phone cameras and the corresponding software offer the possibility to adjust the exposure time manually.

For completeness, it has to be mentioned that despite the interesting possibilities for education and some technical or industrial applications, the results should be regarded as qualitative. For measurements in the scientific field, specialized cameras will still be necessary in the future. As a final remark, the frame rates and resolutions discussed in the text are based on currently available information, and the authors cannot take responsibility for their correctness or completeness.

References

Adrian R (2005) Twenty years of particle image velocimetry. Exp Fluids 39:159–169. doi:10.1007/s00348-005-0991-7

Apple Inc USA (2015) Specifications of the iPhone 6. https://www.apple.com/iphone-6/specs. Accessed Oct 16

Buchmann NA, Willert CE, Soria J (2012) Pulsed, high-power LED illumination for tomographic particle image velocimetry. Exp Fluids 53:1545–1560. doi:10.1007/s00348-012-1374-5

Cierpka C, Lütke B, Kähler CJ (2013) Higher order multi-frame particle tracking velocimetry. Exp Fluids 54:1533. doi:10.1007/s00348-013-1533-3

Crone TJ, Tolstoy M (2010) Magnitude of the 2010 Gulf of Mexico oil leak. Science 330:634. doi:10.1126/science.1195840

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41:933–947. doi:10.1007/s00348-006-0212-z

Hain R, Kähler C (2007) Fundamentals of multiframe particle image velocimetry (PIV). Exp Fluids 42:575–587. doi:10.1007/s00348-007-0266-6

Kähler CJ, Astarita T, Vlachos P, Sakakibara J, Hain R, Discetti S, La Foy R, Cierpka C (2016) Main results of the 4th International PIV Challenge. Exp Fluids 57:97. doi:10.1007/s00348-016-2173-1

Lynch K, Scarano F (2013) A high-order time-accurate interrogation method for time-resolved PIV. Meas Sci Technol 24:035305. doi:10.1088/0957-0233/24/3/035305

Malik NA, Dracos T, Papantoniou DA (1993) Particle tracking velocimetry in three-dimensional flows. Exp Fluids 15:279–294. doi:10.1007/BF00223406

Raffel M, Willert CE, Wereley ST, Kompenhans J (2007) Particle image velocimetry: a practical guide. Springer, New York

Scarano F (2002) Iterative image deformation methods in PIV. Meas Sci Technol 13:R1. doi:10.1088/0957-0233/13/1/201

Schröder A, Schanz D, Michaelis D, Cierpka C, Scharnowski S, Kähler CJ (2015) Advances of PIV and 4D-PTV “Shake-The-Box” for turbulent flow analysis—the flow over periodic hills. Flow Turbul Combust 95:193–209. doi:10.1007/s10494-015-9616-2

Sciacchitano A, Scarano F, Wieneke B (2012) Multi-frame pyramid correlation for time-resolved PIV. Exp Fluids 53:1087–1105. doi:10.1007/s00348-012-1345-x

Staack K, Wereley S, Garbe CS, Willert C (2012) A comparison of state-of-the-art image evaluation techniques for analysis of opaque flows. In: 16th International symposium on applications of laser techniques to fluid mechanics, Lisbon, Portugal, July 9–12

Willert C, Gharib M (1991) Digital particle image velocimetry. Exp Fluids 10:181–193. doi:10.1007/BF00190388

Willert C, Stasicki B, Klinner J, Moessner S (2010) Pulsed operation of high-power light emitting diodes for imaging flow velocimetry. Meas Sci Technol 21:075402. doi:10.1088/0957-0233/21/7/075402

Willert CE (2015) High-speed particle image velocimetry for the efficient measurement of turbulence statistics. Exp Fluids 56: doi:10.1007/s00348-014-1892-4

Acknowledgments

The authors would like to thank C.J. Kähler for the support in terms of laboratory time and equipment.


Cite this article

Cierpka, C., Hain, R. & Buchmann, N.A. Flow visualization by mobile phone cameras. Exp Fluids 57, 108 (2016). https://doi.org/10.1007/s00348-016-2192-y


DOI: https://doi.org/10.1007/s00348-016-2192-y