Abstract

This paper is concerned with the study of a diffusive perturbation of the linear LSW model introduced by Carr and Penrose. A main subject of interest is to understand how the presence of diffusion acts as a selection principle, which singles out a particular self-similar solution of the linear LSW model as determining the large time behavior of the diffusive model. A selection principle is rigorously proven for a model which is a semiclassical approximation to the diffusive model. Upper bounds on the rate of coarsening are also obtained for the full diffusive model.

1 Introduction

Carr and Penrose (1998) introduced a linear version of the Lifschitz–Slyozov–Wagner (LSW) model (Lifschitz and Slyozov 1961; Wagner 1961). In this model, the density function \(c_0(x,t), \ x>0,t>0,\) evolves according to the system of equations,

The parameter \(\Lambda _0(t) > 0\) in (1.1) is determined by the conservation law (1.2) and is therefore given by the formula,

One can also see that the derivative of \(\Lambda _0(t)\) is given by

whence \(\Lambda _0(\cdot )\) is an increasing function.

The system (1.1), (1.2) can be interpreted as an evolution equation for the probability density function (pdf) of a random variable. Thus, let us assume that the initial data \(c_0(x)\ge 0, \ x>0,\) for (1.1), (1.2) satisfy \(\int _0^\infty c_0(x) \ \hbox {d}x<\infty \), and let \(X_0\) be the nonnegative random variable with pdf \(c_0(\cdot )/\int _0^\infty c_0(x) \ \hbox {d}x\). The conservation law (1.2) implies that the mean \(\langle X_0\rangle \) of \(X_0\) is finite, and this is the only absolute requirement on the variable \(X_0\). If for \(t>0\) the variable \(X_t\) has pdf \(c_0(\cdot ,t)/\int _0^\infty c_0(x,t) \ \hbox {d}x\), then (1.1) with \(\Lambda _0(t)=\langle X_t\rangle \) is an evolution equation for the pdf of \(X_t\). Equation (1.4) now tells us that \(\langle X_t\rangle \) is an increasing function of \(t\).
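The pdf interpretation can be checked numerically on the \(\beta =1\) self-similar solution \(c(x,t)=(1+t)^{-2}\exp [-x/(1+t)]\) quoted in Sect. 2. The sketch below is only illustrative: it assumes that (1.1) has the form \(\partial c_0/\partial t=\partial [(1-x/\Lambda _0(t))c_0]/\partial x\) and that (1.2) reads \(\int _0^\infty xc_0(x,t) \ \hbox {d}x=1\), forms inferred from the characteristics \(b(y,s)=A(s)y-1\) of Sect. 2 rather than copied from the displays. The function names and the truncation of the half-line at `upper` are choices made for this example.

```python
import math

def c_selfsim(x, t):
    """beta = 1 self-similar density c(x,t) = (1+t)^(-2) exp[-x/(1+t)]."""
    return math.exp(-x / (1.0 + t)) / (1.0 + t) ** 2

def moment(k, t, upper=400.0, n=40000):
    """Trapezoid approximation of the integral of x^k c(x,t) over [0, upper]."""
    step = upper / n
    total = 0.0
    for i in range(n + 1):
        x = i * step
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * x ** k * c_selfsim(x, t)
    return total * step

def mean_size(t):
    """Lambda_0(t) = <X_t>: first moment divided by total mass."""
    return moment(1, t) / moment(0, t)

def pde_residual(x, t, d=1e-4):
    """Residual of the ASSUMED form of (1.1),
    dc/dt - d/dx[(1 - x/Lambda_0(t)) c], using central differences."""
    lam = 1.0 + t  # exact mean of the beta = 1 solution
    flux = lambda y: (1.0 - y / lam) * c_selfsim(y, t)
    dc_dt = (c_selfsim(x, t + d) - c_selfsim(x, t - d)) / (2 * d)
    dflux_dx = (flux(x + d) - flux(x - d)) / (2 * d)
    return dc_dt - dflux_dx
```

Under these assumptions `moment(1, t)` stays close to 1, `mean_size(t)` is close to \(1+t\) and hence increasing, and `pde_residual` is zero up to discretization error.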

There is an infinite one-parameter family of self-similar solutions to (1.1), (1.2). Using the normalization \(\langle X_0\rangle =1\), the initial data for these solutions are given by

The random variable \(X_t\) corresponding to the evolution (1.1), (1.2) with initial data (1.5) is then given by

The main result of Carr and Penrose (1998) (see also Carr 2006) is that a solution of (1.1), (1.2) converges at large time to the self-similar solution with parameter \(\beta \), provided the initial data and the self-similar solution of parameter \(\beta \) behave in the same way at the end of their supports. In Sect. 2, we give a simple proof of the Carr–Penrose convergence theorem using the beta function of a random variable introduced in Conlon (2011).

The large time behavior of the Carr–Penrose (CP) model is qualitatively similar to the conjectured large time behavior of the LSW model (Niethammer 1999), provided the initial data have compact support. In the LSW model, there is a one-parameter family of self-similar solutions with parameter \(\beta , 0<\beta \le 1\), all of which have compact support. The self-similar solution with parameter \(\beta <1\) behaves in the same way toward the end of its support as does the CP self-similar solution with parameter \(\beta \). It has been conjectured (Niethammer 1999) that a solution of the LSW model converges at large time to the LSW self-similar solution with parameter \(\beta \), provided the initial data and the self-similar solution of parameter \(\beta \) behave in the same way at the end of their supports. A weak version of this result has been proven in Conlon and Niethammer (2014).

It was already claimed in Lifschitz and Slyozov (1961) and Wagner (1961) that the only physically relevant self-similar LSW solution is the one with parameter \(\beta =1\). This has been explained in a heuristic way in several papers (Meerson 1999; Rubinstein and Zaltzman 2000; Velázquez 1998), by considering a model in which a second-order diffusion term is added to the first-order LSW equation. It is then argued that diffusion acts as a selection principle, which singles out the \(\beta =1\) self-similar solution as giving the large time behavior. In this paper, we study a diffusive version of the Carr–Penrose model, with the goal of understanding how a selection principle for the \(\beta =1\) self-similar solution (1.5) operates.

In our diffusive CP model, we simply add a second-order diffusion term with coefficient \(\varepsilon /2>0\) to the CP equation (1.1). Then, the density function \(c_\varepsilon (x,t)\) evolves according to a linear diffusion equation, subject to the linear mass conservation constraint as follows:

We also need to impose a boundary condition at \(x=0\) to ensure that (1.7), (1.8) with given initial data \(c_\varepsilon (x,0) = c_0(x) \ge 0, \ x > 0\), satisfying the constraint (1.8) has a unique solution. We impose the Dirichlet boundary condition \(c_\varepsilon (0,t)=0, \ t>0,\) because in this case the parameter \(\Lambda _\varepsilon (t) > 0\) in (1.7) is given by the formula

Hence, the diffusive CP model is an evolution equation for the pdf \(c_\varepsilon (\cdot ,t)/\int _0^\infty c_\varepsilon (x,t) \hbox {d}x\) of a random variable \(X_{\varepsilon ,t}\) and \(\Lambda _\varepsilon (t)=\langle X_{\varepsilon ,t}\rangle \). Furthermore, it is easy to see from (1.7), (1.8) that

It follows from (1.10) and the maximum principle (Protter and Weinberger 1984) applied to (1.7) that the function \(t\rightarrow \Lambda _\varepsilon (t)\) is increasing.

Smereka (2008) studied a discretized CP model and rigorously established a selection principle for arbitrary initial data with finite support. He also proved that the rate of convergence to the \(\beta =1\) self-similar solution (1.5) is logarithmic in time. Since discretization of a first-order PDE introduces an effective diffusion, one can just as well apply the discretization algorithm of Smereka (2008) to (1.7). In the discretized model of Smereka, time t is left continuous and the x discretization \(\Delta x\) for (1.7) is required to satisfy the condition \(\varepsilon =2\Delta x\). In Smereka (2008), the large time behavior of solutions to this discretized model is studied by using a Fourier method. The Fourier method cannot be implemented if the assumption \(\varepsilon =2\Delta x\) is dropped.

In Sect. 2, we discuss a variety of models, which in some sense interpolate between the CP model (1.1), (1.2) and the diffusive CP model (1.7), (1.8). We first show that the CP and the discretized diffusive model are algebraically equivalent if and only if \(\varepsilon =2\Delta x\). In particular, they are associated with the unique two-dimensional non-Abelian Lie algebra. Next we study the CP model (1.1), (1.2) with Gaussian initial data. It follows from Carr and Penrose (1998) that the solution converges at large time to the \(\beta =1\) self-similar solution (1.5). We prove that the rate of convergence is logarithmic in time as in the Smereka model. The solution \(c_\varepsilon (x,t)\) to (1.7) with initial data of compact support has the property that for \(t>0\) the function \(c_\varepsilon (x,t)\) is approximately Gaussian at large x. Based on this observation, we conjecture that for a large class of initial data with compact support, the solution \(c_\varepsilon (\cdot ,t)\) to the diffusive CP model (1.7), (1.8) converges as \(t\rightarrow \infty \) to the \(\beta =1\) self-similar solution (1.5). Furthermore, the rate of convergence is logarithmic in time.

In the remainder of the paper, we take some steps in the direction of proving this conjecture. First in Sect. 2, we study the semiclassical approximation to the solution of (1.7). This leads us, as in the classical works of Hopf (1950) and Lax (1973), to a Burgers’ equation describing the approximate evolution of the diffusive CP model. The solution \(v_\varepsilon (x,t), \ x,t>0,\) of the Burgers’ equation is related to the solution \(c_\varepsilon (x,t)\) of (1.7), (1.8) as follows: There exists a positive random variable \(X_t\) such that \(v_\varepsilon (x,t)=1/E[X_t-x \ | \ X_t>x]\) and \(X_t\) approximates the random variable with pdf \(c_\varepsilon (x,t)/\int _0^\infty c_\varepsilon (x',t) \ \hbox {d}x'\). We relate the Burgers’ model to the diffusive CP model by introducing an interpolating family of models with parameter \(\nu , \ 0\le \nu \le 1\). Each of these models is an evolution equation for a function \(x\rightarrow 1/E[X_t-x \ | \ X_t>x]\), where \(X_t, \ t>0,\) are positive random variables with the property that the function \(t\rightarrow \langle X_t\rangle \) is increasing. The evolution PDE is of viscous Burgers’ type (Hopf 1950) with viscosity coefficient proportional to \(\nu \). The \(\nu =1\) model is identical to the diffusive CP model (1.7), (1.8), while the \(\nu =0\) model is the Burgers’ model. We shall refer to the Burgers’ model as the inviscid CP model since its evolution PDE is an inviscid Burgers’ equation (Smoller 1994). Similarly, we refer to the model with \(0<\nu \le 1\) as the viscous CP model with viscosity \(\nu \). Our hierarchy of models can be summarized as follows:

CP model \((\varepsilon =\nu =0)\rightarrow \) inviscid CP model \((\varepsilon >0, \ \nu =0)\rightarrow \) viscous CP model \((\varepsilon >0,\ 0<\nu \le 1)\rightarrow \) diffusive CP model \((\varepsilon >0, \nu =1)\).

In Sect. 3, we study the large time behavior of the inviscid CP model and establish a version of our conjecture for this model. We obtain the following theorem:

Theorem 1.1

Suppose the initial data for the inviscid CP model correspond to the nonnegative random variable \(X_0\), and assume that \(X_0\) satisfies

Then, \(\lim _{t\rightarrow \infty } \langle X_t\rangle /t=1\), and for any \(\eta ,m,M>0\) with \(m<M\), there exists \(T>0\) such that

Assume in addition that the function \(x\rightarrow E[X_0-x \ | \ X_0>x] \) is \(C^1\) and convex for x close to \(\Vert X_0\Vert _\infty \) with

Then, there exist constants \(C,M,T>0\) such that the mean of \(X_t\) satisfies the inequality

and the distribution function of \(X_t\) the inequality

Remark 1

Observe that a \(\beta <1\) self-similar solution (1.5) of the CP model has \(\Vert X_0\Vert _\infty =1/(1-\beta )\) and \(E[X_0-x \ | \ X_0>x]=1-(1-\beta )x, \ 0\le x<\Vert X_0\Vert _\infty \). Hence, the \(\beta <1\) self-similar solution satisfies all the conditions of Theorem 1.1 provided \(\varepsilon <1\). The condition \(\varepsilon <1\) is not crucial since for any \(\varepsilon >0\) one can rescale the initial data so that \(\varepsilon <\langle X_0\rangle \). Therefore, Theorem 1.1 proves a selection principle for the \(\beta =1\) self-similar solution (1.5) and establishes a rate of convergence which is logarithmic in time.

Remark 2

The condition (1.11) implies that the initial condition \(v_\varepsilon (x,0), \ x>0,\) for the inviscid Burgers’ equation is increasing. Hence, the Burgers’ equation may be solved by means of the method of characteristics. If \(v_\varepsilon (\cdot ,0)\) is not an increasing function, then the solution of the Burgers’ equation at some positive time contains shocks, corresponding to discontinuities of the function \(v_\varepsilon (\cdot ,t)\). A shock at time t gives rise to an atom in the distribution of the random variable \(X_t\).

The remainder of the present paper is devoted to the study of the diffusive CP model (1.7), (1.8). Since existence and uniqueness have already been proven for a diffusive version of the LSW model (Conlon 2010), we do not revisit this issue. In Sect. 6, we consider the problem of proving convergence of solutions of the diffusive CP model as \(\varepsilon \rightarrow 0\) to a solution of the CP model over some fixed time interval \(0\le t\le T\). Thus, we assume that the CP and diffusive CP models have the same initial data corresponding to a random variable \(X_0\). Let \(X_t, \ t>0,\) be the random variable corresponding to the solution of (1.1), (1.2) and \(X_{\varepsilon ,t}, t>0,\) the random variable corresponding to the solution of (1.7), (1.8). We show that \(X_{\varepsilon ,t}\) converges in distribution as \(\varepsilon \rightarrow 0\) to \(X_t\), uniformly in the interval \(0\le t\le T\). We also prove convergence of the diffusive coarsening rates (1.10) as \(\varepsilon \rightarrow 0\) to the CP coarsening rate (1.4). One easily sees from the formula (1.10) that a boundary layer analysis becomes necessary in this case. In the diffusive model, there exists a boundary layer with length of order \(\varepsilon \) so that \(c_\varepsilon (x,t)\simeq c_0(x,t)\) for \(x/\varepsilon \gg 1\). Since \(c_\varepsilon (0,t)=0\) one has that \(\partial c_\varepsilon (0,t)/\partial x\simeq 1/\varepsilon \), whence the RHS of (1.10) remains bounded away from 0 as \(\varepsilon \rightarrow 0\) and in fact converges to the RHS of (1.4).

In Sect. 7, we concentrate on understanding large time behavior of the diffusive CP model for fixed \(\varepsilon >0\). We carry this out by relating it to the problem discussed in the previous paragraph of proving convergence as \(\varepsilon \rightarrow 0\) over a finite time interval. To see why the two problems are related, observe that one can always rescale \(\langle X_0\rangle \) to be equal to 1 in both the CP and diffusive CP models. Since the CP model is dilation invariant, the evolution PDE (1.1) remains the same. However, for the diffusive CP model the diffusion coefficient in (1.7) changes from \(\varepsilon \) to \(\varepsilon /\langle X_0\rangle \). Since \(\lim _{t\rightarrow \infty } \langle X_t\rangle =\infty \) in the diffusive CP model, an analysis of large time behavior can be made equivalent to an analysis of solutions to (1.7), (1.8) as \(\varepsilon \rightarrow 0\). In order to show that the conclusions of Theorem 1.1 also hold for the diffusive CP model, one needs to accomplish two objectives: (a) prove that solutions to the viscous CP model are close to solutions of the inviscid model away from the boundary; (b) establish good control of the solution in the boundary layer. We do not address the problem of proving (a) in this paper. Instead, we prove in Sect. 7 the much simpler result that log-concave functions form an invariant set for the linear diffusion equation (1.7). To address (b) we apply the results of Sect. 5, which give bounds on the ratio of two Green’s functions for the PDE (1.7): the Dirichlet Green’s function for the half-line and the Green’s function for the full line. These bounds are uniform in \(\varepsilon \) as \(\varepsilon \rightarrow 0\). We also use the results of Sect. 5 in Sect. 6 to prove convergence of coarsening rates as \(\varepsilon \rightarrow 0\). However, this can be accomplished with less delicate estimates, as was carried out in Conlon (2010) for the case of the diffusive LSW model.

Our main result on the large time behavior of the diffusive CP model is a bound on the coarsening rate, which is uniform in time:

Theorem 1.2

Suppose the initial data for the diffusive CP model (1.7), (1.8) correspond to the nonnegative random variable \(X_0\) with integrable pdf. Then, \(\lim _{t\rightarrow \infty } \langle X_t\rangle =\infty \). If in addition the function \( x\rightarrow E[X_0-x \ | \ X_0>x], \ 0\le x<\Vert X_0\Vert _\infty ,\) is decreasing, then there are constants \(C,T>0\) such that

It is unnecessary to read Sects. 4, 5 in order to follow the proof of Theorem 1.2. However, these sections do begin to address a core difficulty in trying to extend the results of Theorem 1.1 to the viscous CP model. For the inviscid CP model, as for the CP model itself, there is no boundary condition at \(x=0\). In the case of the viscous CP model, a boundary condition at \(x=0\) becomes necessary in order to have uniqueness of the solution. In this paper, we have chosen the Dirichlet condition \(c_\varepsilon (0,t)=0, \ t>0\), for (1.7) because the function \(\Lambda _\varepsilon (\cdot )\) is then given by the same formula as in the CP model and is increasing. Beyond this we do not have a justification for preferring the Dirichlet condition over other boundary conditions. That said, it seems likely that no matter what boundary condition is imposed at \(x=0\), a delicate boundary layer analysis will be required in order to understand large time behavior of the model. Our belief is that this boundary layer analysis is simplest in the case of the Dirichlet condition.

To prove the results on the ratio of Green’s functions in Sect. 5, we use Green’s function representations derived from semiclassical analysis. The derivations are carried out in Sect. 4 using two parallel approaches: the variational method of stochastic control theory and the probabilistic method using conditioned Markov processes. The variational method is a generalization of the approach of Hopf (1950) and Lax (1973) to the study of Burgers’ equation and hence shows the connection with the inviscid CP model studied in Sect. 3. In the probabilistic approach, we obtain a representation for a generalized Brownian bridge process in terms of Brownian motion. It is this representation which is used in Sect. 5 to prove the estimates on the ratio of Green’s functions.

2 The Carr–Penrose Model and Extensions

The analysis of the CP model (Carr and Penrose 1998) is based on the fact that the characteristics for the first-order PDE (1.1) can be easily computed. Thus, let \(b:\mathbf {R}\times \mathbf {R}^+\rightarrow \mathbf {R}\) be given by \(b(y,s)=A(s)y-1, \ y\in \mathbf {R}, \ s\ge 0\), where \(A:\mathbf {R}^+\rightarrow \mathbf {R}^+\) is a continuous nonnegative function. We define the mapping \(F_A:\mathbf {R}\times \mathbf {R}^+\rightarrow \mathbf {R}\) by setting

From (2.1), we see that the function \(F_A\) is given by the formula

If we let \(w_0:\mathbf {R}^+\times \mathbf {R}^+\rightarrow \mathbf {R}^+\) be the function

where \(c_0(\cdot ,\cdot )\) is the solution to (1.1), then from the method of characteristics we have that

The conservation law (1.2) can also be expressed in terms of \(w_0\) as

Observe now that the functions \(m_{1,A}, \ m_{2,A}\) of (2.2) are related by the differential equation

It follows from (2.5), (2.6) that if we define variables \([u(t),v(t)], t\ge 0,\) by

then the CP model (1.1), (1.2) with given initial data \(c_0(\cdot ,0)\) is equivalent to the two-dimensional dynamical system

Note, however, that the dynamical law for the two-dimensional evolution depends on the initial data for (1.1), (1.2), whereas the initial condition is always \(u(0)=1, \ v(0)=0\).
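The characteristic map can be sketched numerically. The block below assumes that (2.1) defines \(F_A(x,t)=y(0)\), where \(y(\cdot )\) solves \(\hbox {d}y/\hbox {d}s=b(y,s)\) with terminal condition \(y(t)=x\), and that (2.2) is the affine formula \(F_A(x,t)=[x+m_{2,A}(t)]/m_{1,A}(t)\) with \(m_{1,A}(t)=\exp [\int _0^tA(s) \ \hbox {d}s]\) and \(m_{2,A}(t)=\int _0^t\exp [\int _s^tA(s') \ \hbox {d}s'] \ \hbox {d}s\). Both are reconstructions from the surrounding text, and the choice \(A(s)=1/(1+s)\), which corresponds to the \(\beta =1\) self-similar solution, is made only for illustration.

```python
import math

def A(s):
    """Illustrative rate A(s) = 1/Lambda_0(s) with Lambda_0(s) = 1 + s."""
    return 1.0 / (1.0 + s)

def F_numeric(x, t, steps=2000):
    """Integrate dy/ds = A(s) y - 1 backward from y(t) = x to s = 0 (RK4)."""
    b = lambda y, s: A(s) * y - 1.0
    h = -t / steps  # negative step: march from s = t down to s = 0
    y, s = x, t
    for _ in range(steps):
        k1 = b(y, s)
        k2 = b(y + 0.5 * h * k1, s + 0.5 * h)
        k3 = b(y + 0.5 * h * k2, s + 0.5 * h)
        k4 = b(y + h * k3, s + h)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
        s += h
    return y

def F_closed(x, t):
    """Assumed affine form [x + m2(t)] / m1(t); for A(s) = 1/(1+s)
    one gets m1(t) = 1 + t and m2(t) = (1 + t) log(1 + t)."""
    m1 = 1.0 + t
    m2 = (1.0 + t) * math.log(1.0 + t)
    return (x + m2) / m1
```

For this choice of \(A\) the two functions agree to integration accuracy, and \(\exp [-F_A(x,t)]=(1+t)^{-1}\exp [-x/(1+t)]\) is the tail integral \(\int _x^\infty c(x',t) \ \hbox {d}x'\) of the \(\beta =1\) self-similar solution, as one expects if the solution is transported along characteristics from the initial tail \(e^{-x}\).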

We can understand the two-dimensionality of the CP model and relate it to some other models of coarsening by using some elementary Lie algebra theory. Thus, observe that for operators \({\mathcal {A}}_0,\mathcal {B}_0\) acting on functions \(f:\mathbf {R}\rightarrow \mathbf {R}\), which are defined by

The initial value problem (1.1) can be written in operator notation as

It follows from (2.9) that the Lie algebra generated by \({\mathcal {A}}_0,\mathcal {B}_0\) is the unique two-dimensional non-Abelian Lie algebra. The corresponding two-dimensional Lie group is the affine group of the line (see Chapter 4 of Stillwell 2008). That is, the Lie group consists of all transformations \(z\rightarrow az+b, \ z\in \mathbf {R},\) with \(a>0, \ b\in \mathbf {R}\). The solutions of Eq. (2.10) are a flow on this group. Hence, solutions of (2.10) for all possible functions \(\Lambda _0(\cdot )\) lie on a two-dimensional manifold.

Next we consider the discretized version of the CP model studied by Smereka (2008). Letting \(\Delta x\) denote the spatial discretization step, a standard discretization of (1.7) with Dirichlet boundary condition is given by

where

The backward difference approximation for the derivative of \(J_\varepsilon (x,t)\) is chosen in (2.11) to ensure stability of the numerical scheme for large x. Let \(D,D^*\) be the discrete derivative operators acting on functions \(u:(\Delta x)\mathbf {Z}\rightarrow \mathbf {R}\) defined by

Then, using the notation of (2.13) we can rewrite (2.11) as

Observe that for operators \({\mathcal {A}}_{\Delta x},\mathcal {B}_{\Delta x}\) defined by

Choosing \(\varepsilon = 2\Delta x\) in (2.14), we see that the equation can be expressed in terms of \({\mathcal {A}}_{\Delta x},\mathcal {B}_{\Delta x}\) as

Comparing (2.9), (2.10) to (2.15), (2.16), we see that we can obtain a representation for the solution to (2.15), (2.16) by using the fact that the solution to (2.9), (2.10) is given by (2.4). To see this, we use the fact that, for \({\mathcal {A}}_0,\mathcal {B}_0\) as in (2.9),

From (2.4), (2.7), (2.17), it follows that the solution to (2.9), (2.10) is given by

where u(t), v(t) are given by (2.7). Hence, the solution to (2.15), (2.16) is given by

where \({\mathcal {A}}_{\Delta x},\mathcal {B}_{\Delta x}\) are given by (2.15) and u(t), v(t) are given by (2.7) with \(\Lambda _\varepsilon \) in place of \(\Lambda _0\).

The operator \({\mathcal {A}}_{\Delta x}\) of (2.15) is the generator of a Poisson process. Thus,

where \(X_s\) is the discrete random variable taking values in \((\Delta x)\mathbf {Z}\) with pdf

If \(f,g:\mathbf {R}\rightarrow \mathbf {R}\) are continuous functions of compact support, then it is easy to see from (2.21) that

as we expect from (2.17). The operator \(\mathcal {B}_{\Delta x}\) of (2.15) is the generator of a Yule process (Karlin and Taylor 1975). Letting \(\mathbf {Z}^+\) denote the positive integers, the operator \(e^{-\mathcal {B}_{\Delta x}s}\) for \(s>0\) acts on functions \(f:(\Delta x)\mathbf {Z}^+\rightarrow \mathbf {R}\). Then,

where \(Y_s\) is a discrete random variable taking values in \((\Delta x)\mathbf {Z}^+\). The pdf of \(Y_s\) conditioned on \(Y_0=\Delta x\) is given by

Hence, \(Y_s\) conditioned on \(Y_0=\Delta x\) is a geometric variable. More generally, the variable \(Y_s\) conditioned on \(Y_0=m\Delta x\) with \(m\ge 2\) is a sum of m independent geometric variables with distribution (2.24) and is hence negative binomial. The identity (2.23) therefore implies that

where the \(Y_s^j, \ j=1,2,\ldots ,\) are independent and have the distribution (2.24). Since the mean of \(Y_s\) is \(e^s\Delta x\), it follows from (2.25) that

as we expect from (2.17).
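The geometric and negative binomial structure described above can be checked with a short computation. The sketch assumes that (2.24) is the standard law of a unit-rate Yule process, \(P(Y_s=n\Delta x \ | \ Y_0=\Delta x)=e^{-s}(1-e^{-s})^{n-1}, \ n\ge 1\), which is consistent with the stated mean \(e^s\Delta x\). Counts are measured in units of \(\Delta x\), and the helper names and the truncation level `n_max` are choices made for this example.

```python
import math

def yule_pmf(n, s):
    """P(N_s = n | N_0 = 1): geometric with success probability exp(-s)."""
    p = math.exp(-s)
    return p * (1.0 - p) ** (n - 1)

def convolve(a, b, n_max):
    """Truncated convolution of two pmfs indexed by the count 0..n_max."""
    out = [0.0] * (n_max + 1)
    for i, ai in enumerate(a):
        if ai == 0.0:
            continue
        for j in range(n_max + 1 - i):
            out[i + j] += ai * b[j]
    return out

def pmf_started_from(m, s, n_max=400):
    """pmf of N_s given N_0 = m, built as an m-fold convolution
    of independent copies of the single-ancestor law."""
    single = [0.0] + [yule_pmf(n, s) for n in range(1, n_max + 1)]
    pmf = single
    for _ in range(m - 1):
        pmf = convolve(pmf, single, n_max)
    return pmf

def mean(pmf):
    """Mean count of a pmf indexed by 0..n_max."""
    return sum(n * p for n, p in enumerate(pmf))
```

With \(s=1\), the mean started from one individual is close to \(e\), the mean started from \(m\) individuals is close to \(me\), and the convolved law matches the negative binomial formula \(\left( {\begin{array}{c}n-1\\ m-1\end{array}}\right) p^m(1-p)^{n-m}\) with \(p=e^{-s}\).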

The Smereka model consists of the evolution determined by (2.11) with \(\varepsilon =2\Delta x\) and the conservation law

We see from (2.19) that the model is equivalent to a two-dimensional dynamical system with dynamical law depending on the initial data. The first differential equation in this system is given by the first equation in (2.8). The second differential equation is determined by differentiating the expression on the LHS of (2.27) and setting it equal to zero. Using (2.21), (2.24), we can write the LHS of (2.27) in terms of u(t), v(t). In the case when the initial data are given by

it has a simple form. Thus, from (2.19), (2.21), (2.24) we have that

From (2.29), we see that the conservation law (2.27) becomes in this case

Hence, from the first equation of (2.8) and (2.30) we conclude that \(v(\cdot )\) is the solution to the initial value problem

The initial value problem (2.31) was derived in §3 of Smereka (2008) by a different method. It can be solved explicitly, and so we obtain the formulas

when the initial data are given by (2.28). Hence, from (2.29), (2.32) we have an explicit expression for \(c_\varepsilon (\cdot ,t)\), and it is easy to see that this converges as \(t\rightarrow \infty \) to the self-similar solution corresponding to the \(\beta =1\) random variable defined by (1.5). It was also shown in Smereka (2008) that if the initial data have finite support then \(c_\varepsilon (\cdot ,t)\) converges as \(t\rightarrow \infty \) to the \(\beta =1\) self-similar solution.

The large time behavior of the CP model can be easily understood using the beta function of a random variable introduced in Conlon (2011). If X is a random variable with pdf \(c_X(\cdot )\), we define functions \(w_X(\cdot ), h_X(\cdot )\) by

Evidently, one has that

The beta function \(\beta _X(\cdot )\) of X is then defined by

An important property of the beta function is that it is invariant under affine transformations. That is

One can also see that the function \(h_X(\cdot )\) is log-concave if and only if \(\sup \beta _X(\cdot )\le 1\).

To understand the large time behavior of the CP model, we first observe that the rate of coarsening Eq. (1.4) can be rewritten as

Furthermore, the beta function of the self-similar variable \(X_0\) with pdf defined by (1.5) and parameter \(\beta >0\) is simply a constant \(\beta _{X_0}(\cdot )\equiv \beta \). We have already shown that the time evolution of the CP equation (1.1) is given by the affine transformation (2.4). It is also relatively simple to establish that, for a random variable \(X_0\) corresponding to the initial data for (1.1), (1.2), one has \(\lim _{t\rightarrow \infty } F_{1/\Lambda _0}(0,t)=\Vert X_0\Vert _\infty \). Hence, it follows from (2.35), (2.36) that if \(\lim _{x\rightarrow \Vert X_0\Vert _\infty }\beta _{X_0}(x)=\beta \) for the initial data random variable \(X_0\) of (1.1), (1.2), then the large time behavior of the CP model is determined by the self-similar solution (1.5) with parameter \(\beta \).
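The constancy of the beta function on self-similar solutions can be illustrated numerically. The sketch assumes that (2.33) and (2.35) define \(w_X(x)=\int _x^\infty c_X\), \(h_X(x)=\int _x^\infty w_X\) and \(\beta _X(x)=c_X(x)h_X(x)/w_X(x)^2\), which is the form consistent with the log-concavity criterion stated above. It also uses the self-similar pdf \(\beta [1-(1-\beta )x]^{\beta /(1-\beta )-1}\), derived here from the conditional mean \(1-(1-\beta )x\) given in Remark 1 rather than copied from (1.5). The quadrature routine and its names are choices made for this example.

```python
import math

def beta_function(pdf, x, upper, n=4000):
    """Approximate c(x) h(x) / w(x)^2 on [x, upper] with trapezoid sums,
    where w is the tail integral of the pdf and h the tail integral of w."""
    step = (upper - x) / n
    grid = [x + i * step for i in range(n + 1)]
    c = [pdf(v) for v in grid]
    w = [0.0] * (n + 1)
    for i in range(n - 1, -1, -1):  # accumulate the tail integral from the right
        w[i] = w[i + 1] + 0.5 * step * (c[i] + c[i + 1])
    h = sum(0.5 * step * (w[i] + w[i + 1]) for i in range(n))
    return c[0] * h / w[0] ** 2

def self_similar_pdf(beta):
    """pdf with E[X - x | X > x] = 1 - (1 - beta) x on [0, 1/(1 - beta)].
    Use 1/2 <= beta < 1 here so the pdf stays bounded at the endpoint."""
    def pdf(x):
        base = max(1.0 - (1.0 - beta) * x, 0.0)
        return beta * base ** (beta / (1.0 - beta) - 1.0)
    return pdf
```

For \(\beta =1/2\) the pdf is uniform on \([0,2]\) and the routine returns \(1/2\) at every \(x\); for the exponential pdf \(e^{-x}\), truncated at a large `upper`, it returns a value close to 1.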

We have already observed from (1.4) that the function \(\Lambda _0(\cdot )\) in the CP model is increasing. If we assume that \(\inf \beta _{X_0}(\cdot )>0\), we can also see that \(\lim _{t\rightarrow \infty } \Lambda _0(t)=\infty \). Hence, in this case there exists a doubling time \(T_\mathrm{double}\) for which \(\Lambda _0(t)=2\Lambda _0(0)\) when \(t=T_\mathrm{double}\). Evidently, \(\inf \beta _{X_t}(\cdot )\ge \inf \beta _{X_0}(\cdot )\) and \(\sup \beta _{X_t}(\cdot )\le \sup \beta _{X_0}(\cdot )\). The notion of doubling time can be a useful tool in obtaining an estimate on the rate of convergence of the solution of the CP model to a self-similar solution at large time.

We illustrate this by considering the CP model with Gaussian initial data. In particular, we assume the initial data \(c_0(\cdot )\) are given by the formula

where \(L\ge L_0>0\) and K(L), a(L) are uniquely determined by the requirement that (1.2) holds and the function \(\Lambda _0(\cdot )\) in (1.3) satisfies \(\Lambda _0(0)=1\). It is easy to see that the beta function for the initial data (2.38) is bounded above, and bounded below by a strictly positive constant, uniformly in \(L\ge L_0\). Hence, from (2.37) there are constants \(T_1,T_2>0\) depending only on \(L_0\) such that \(T_1\le T_\mathrm{double}\le T_2\) for all \(L\ge L_0\). It follows also from (2.2) that there are constants \(\lambda _0,\lambda _1,\mu _0,\mu _1>0\) depending only on \(L_0\) such that \(F_{1/\Lambda _0}(x,T_\mathrm{double})=\lambda (L)x+\mu (L), \ x\in \mathbf {R},\) where \(0<\lambda _0\le \lambda (L)\le \lambda _1<1\) and \( 0<\mu _0\le \mu (L)\le \mu _1\) for \(L\ge L_0\). Since \(F_{1/\Lambda _0}\) is a linear function, \(c(\cdot ,T_\mathrm{double})\) is also Gaussian. Rescaling so that the mean of \(X_t\) is now 1 at time \(T_\mathrm{double}\), we see that \(c(x,T_\mathrm{double})\) is given by the formula (2.38) with L replaced by A(L), where

Since we are assuming that \(\Lambda _0(0)=1\), there are constants \(a_0,a_1>0\) depending only on \(L_0\) such that \(a_0\le a(L)\le a_1\) for \(L\ge L_0\). We conclude then from (2.39) that

It is easy to estimate from (2.40) the rate of convergence to the \(\beta =1\) self-similar solution \(c(x,t)=(1+t)^{-2}\exp [-x/(1+t)]\) for solutions to the CP model with Gaussian initial data. First, we estimate the beta function of a Gaussian random variable.

Lemma 2.1

Let \(L>0\) and \(Z_L\) be a positive random variable with pdf proportional to \(e^{-z-z^2/2L}, \ z>0\). Then, for any \(L_0>0\) there is a constant C depending only on \(L_0\) such that if \(L\ge L_0\) the beta function \(\beta _L\) for \(Z_L\) satisfies the inequality

Proof

We use the formula for the beta function \(\beta (\cdot )\) of the pdf \(c(\cdot )\) given by (2.35). Thus,

Letting \(c(z)=e^{-z-z^2/2L}\), we have that

It follows from (2.42), (2.43) on making a change of variable that

where \(\delta =1/\{L[1+z/L]^2\}\). It is easy to see that there is a universal constant K such that the RHS of (2.44) is bounded above by K for all \(\delta >0\). We also have by Taylor expansion in \(\delta \) that \(\beta _L(z)=1-\delta +O(\delta ^2)\) if \(\delta \) is small. The inequality (2.41) follows. \(\square \)
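One way to fill in the change of variable and the Taylor expansion used in this proof is sketched below. It assumes that the beta function is \(\beta =ch/w^2\) with \(w(z)=\int _z^\infty c\) and \(h(z)=\int _z^\infty w\), and it may differ in presentation from the displays (2.42)–(2.44).

```latex
% Substitute z' = z + u/(1+z/L), u >= 0, in c(z') = exp(-z' - z'^2/(2L)):
%   c(z')/c(z) = exp(-u - \delta u^2/2),  \delta = 1/( L (1+z/L)^2 ).
\begin{align*}
w(z) &= \int_z^\infty c(z')\,dz'
      = \frac{c(z)}{1+z/L}\int_0^\infty e^{-u-\delta u^2/2}\,du, \\
h(z) &= \int_z^\infty (z'-z)\,c(z')\,dz'
      = \frac{c(z)}{(1+z/L)^2}\int_0^\infty u\,e^{-u-\delta u^2/2}\,du, \\
\beta_L(z) &= \frac{c(z)\,h(z)}{w(z)^2}
      = \frac{\int_0^\infty u\,e^{-u-\delta u^2/2}\,du}
             {\bigl[\int_0^\infty e^{-u-\delta u^2/2}\,du\bigr]^2}.
\end{align*}
% Expand e^{-\delta u^2/2} = 1 - \delta u^2/2 + O(\delta^2 u^4) and use
% \int_0^\infty u^k e^{-u} du = k!:
\begin{align*}
\int_0^\infty e^{-u-\delta u^2/2}\,du &= 1-\delta+O(\delta^2), &
\int_0^\infty u\,e^{-u-\delta u^2/2}\,du &= 1-3\delta+O(\delta^2), \\
\beta_L(z) &= \frac{1-3\delta}{(1-\delta)^2}+O(\delta^2)
            = 1-\delta+O(\delta^2).
\end{align*}
```

In this form the ratio depends on \(z\) and \(L\) only through \(\delta \), which is why a bound uniform in \(\delta >0\) together with the small-\(\delta \) expansion suffices.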

Proposition 2.1

Let \(c_0(\cdot ,\cdot )\) be the solution to the CP system (1.1), (1.2) with Gaussian initial data and \(\Lambda _0(\cdot )\) be given by (1.3). Then, there exists \(t_0>2\) and constants \(C_1,C_2>0\) such that

Proof

The initial data can be written in the form \(c_0(x,0)=K_0\exp [-A_0(x+B_0)^2]\), where \(K_0,A_0,B_0\) are constants with \(K_0,A_0>0\). It follows from (2.2), (2.4) that for \(t>0\) one has \(c_0(x,t)=K_t\exp [-A_t(x+B_t)^2]\), where \(B_t=[B_0+F_{1/\Lambda _0}(0,t)](A_0/A_t)^{1/2}\). Since \(\lim _{t\rightarrow \infty } F_{1/\Lambda _0}(0,t)=\infty \), it follows that we may assume without loss of generality that the initial data are of the form (2.38) and \(\Lambda _0(0)=1\). Evidently, then \(\beta _{X_0}(x)=\beta _L(a(L)x), \ x\ge 0,\) where \(\beta _L\) satisfies the inequality (2.41).

Assume now that the initial data for (1.1), (1.2) are given by (2.38) where \(L=L_0>0\), and let \(L_t\) be the corresponding value of L determined by \(c_0(\cdot ,t)\). We have then from (2.40) and the discussion preceding it that for \(N=1,2,\ldots ,\) there exist times \(t_N\) such that

Since \(L_t\) is an increasing function of t, it follows from (2.41), (2.46) that \(\beta _{X_t}(0)\) is bounded above and below as in (2.45). Now using the identity (2.37), we obtain the inequality (2.45). \(\square \)

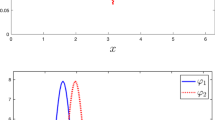

We wish next to compare the foregoing to the situation of the diffusive CP model with Gaussian initial data. From (4.15), the solution to (1.7) with initial data \(c_0(\cdot )\) is given by

where \(G_{\varepsilon ,D}\) is the Dirichlet Green’s function for the half-space \(\mathbf {R}^+\) defined by (4.14) with \(A(s)=1/\Lambda _\varepsilon (s)\). If we replace the Dirichlet Green’s function \(G_{\varepsilon ,D}\) by the full-space Green’s function \(G_\varepsilon \) of (4.11), then the solution \(c_\varepsilon (\cdot ,t)\) is Gaussian for \(t>0\) provided \(c_\varepsilon (\cdot ,0)\) is Gaussian, just as in the CP model. We shall see in Sect. 5 that it is legitimate to approximate \(G_{\varepsilon ,D}(x,y,0,t)\) by \(G_\varepsilon (x,y,0,t)\) provided \(x,y\ge M\varepsilon \) for some large constant M. Making the approximation \(G_{\varepsilon ,D}\simeq G_\varepsilon \) in (2.47), we obtain a formula similar to (2.39) for the length scale \(A_\varepsilon (L)\) of the Gaussian at doubling time. It is given by

As in (2.39), the functions \(\lambda _\varepsilon (L),\mu _\varepsilon (L)\) are obtained from the coefficients of the linear function \(F_{1/\Lambda _\varepsilon }\), when \(t=T_{\varepsilon ,\mathrm{double}},\) where \(T_{\varepsilon ,\mathrm{double}}\) denotes the doubling time for the diffusive model. The expression \(\sigma ^2_\varepsilon (L)\) is given by the formula for \(\sigma ^2_A(T)\) in (4.10) with \(A(s)=1/\Lambda _\varepsilon (s), \ s\le T,\) and \(T=T_{\varepsilon ,\mathrm{double}}\). In Sect. 6, we shall study the \(\varepsilon \rightarrow 0\) limit of the diffusive CP model. We prove that if the CP and diffusive CP models have the same initial data, then \(\lim _{\varepsilon \rightarrow 0}\Lambda _\varepsilon (t)=\Lambda _0(t)\) uniformly in any finite interval \(0\le t\le T\). It follows that \(\lim _{\varepsilon \rightarrow 0}A_\varepsilon (L)=A(L)\), where A(L) is defined by (2.39).

We wish next to try to understand the evolution of the diffusive CP model when initial data are non-Gaussian. Let \(w_\varepsilon (x,t), \ h_\varepsilon (x,t)\) be defined in terms of the solution \(c_\varepsilon (\cdot ,\cdot )\) to (1.7) by

Then, \(w_\varepsilon (\cdot ,t),h_\varepsilon (\cdot ,t)\) are proportional to the functions (2.33) corresponding to the random variable \(X_t\) with pdf \(c_\varepsilon (\cdot ,t)/ \int _0^\infty c_\varepsilon (x,t) \ \hbox {d}x\). Making the approximation \(G_{\varepsilon ,D}\simeq G_\varepsilon \), we see from (2.47), (2.49), (4.11) that

Writing \(h_\varepsilon (x,t)=\exp [-q_\varepsilon (x,t)], \ x,t>0,\) in (2.51), we see from (4.11) that the semiclassical approximation to \(q_\varepsilon (x,t)\) is given by the formula

Let us assume that \(q_\varepsilon (\cdot ,0)\) is given similarly to (2.38) by

The minimizer in (2.52) is then \(y_\mathrm{min}(x,t)\) where

If we substitute \(y=y_\mathrm{min}(x,t)\) into (2.52), we obtain a quadratic formula for \(q_\varepsilon (x,t)\) similar to (2.53). If \(t=T_{\varepsilon ,\mathrm{double}}\), then L in (2.53) is replaced by \(A_\varepsilon (L)\) as in (2.48).

More generally we can consider the case when \(q_\varepsilon (\cdot ,0)\) is convex so (2.52) is a convex optimization problem with a unique minimizer \(y=y_\mathrm{min}(x,t)\). In that case, it is easy to see that

It follows from (2.55) that if the inequality

holds at \(t=0\), then it holds for all \(t>0\). We define now the function \(\beta _\varepsilon :[0,\infty )\times \mathbf {R}^+\rightarrow \mathbf {R}\) in terms of \(q_\varepsilon \) by the formula

We can see from (2.35) that the function \(h_\varepsilon (\cdot ,t)=\exp [-q_\varepsilon (\cdot ,t)]\) is proportional to \(h_{X_t}(\cdot )\) for some random variable \(X_t\) if and only if \(\beta _\varepsilon (\cdot ,t)\) is nonnegative. Hence, by the remark after (2.56), if \(q_\varepsilon (\cdot ,0)\) corresponds to a random variable \(X_0\), then \(q_\varepsilon (\cdot ,t)\) corresponds to a random variable \(X_t\) for all \(t>0\). From (2.55), (2.57), we have that

It follows from (2.58) that if \(\sup \beta _\varepsilon (\cdot ,0)\le 1\), then \(\sup \beta _\varepsilon (\cdot ,t)\le 1\) for \(t>0\). Furthermore, (2.58) also indicates that \(\beta _\varepsilon (\cdot ,t)\) should increase toward 1 as \(t\rightarrow \infty \).

It is well known (Hopf 1950) that the solution \(q_\varepsilon \) to the optimization problem (2.52) satisfies a Hamilton–Jacobi PDE. We can derive this PDE by using (2.52), (2.55). From (2.52), we see that the minimizer \(y_\mathrm{min}(x,t)\) is the solution to the equation

We also have on differentiating (2.52) the identity

Note that in (2.60) we have used (2.59) to conclude that in differentiating (2.52) with respect to t, the coefficient of \(\partial y_\mathrm{min}(x,t)/\partial t\) is 0. Using the fact that each of the functions \(m_{1,1/\Lambda _\varepsilon }(t), \ m_{2,1/\Lambda _\varepsilon }(t), \ \sigma ^2_{1/\Lambda _\varepsilon }(t)\) is a solution to a linear first-order differential equation, we conclude from (2.55), (2.59), (2.60) that \(q_\varepsilon (x,t)\) is a solution to the Hamilton–Jacobi PDE

Differentiating (2.61) with respect to x and setting \(v_\varepsilon (x,t)=\partial q_\varepsilon (x,t)/\partial x\), we see that \(v_\varepsilon (x,t)\) is the solution to the inviscid Burgers’ equation with linear drift,

If \(q_\varepsilon (\cdot ,t)\) corresponds to the random variable \(X_t\), then \(v_\varepsilon (x,t)=E[X_t-x \ | \ X_t>x]^{-1}, \ x\ge 0\), and since \(\Lambda _\varepsilon (t)=E[X_t]\), we have that

The system (2.62), (2.63) is a model for the evolution of the pdf of a random variable \(X_t\) which is intermediate between the CP and diffusive CP models. To obtain the pdf of \(X_t\) from the function \(v_\varepsilon (\cdot ,t)\), we let \(c_\varepsilon (\cdot ,t)=c_{X_t}(\cdot ), \ w_\varepsilon (\cdot ,t)=w_{X_t}(\cdot ), \ h_\varepsilon (\cdot ,t)=h_{X_t}(\cdot )\) as in (2.33). Then, \(v_\varepsilon (x,t)=w_\varepsilon (x,t)/h_\varepsilon (x,t)\) and

We also have that

where \(A_\varepsilon (\cdot )\) can be an arbitrary positive function. Evidently, (2.64), (2.65) uniquely determine the pdf of \(X_t\) from the function \(v_\varepsilon (\cdot ,t)\).
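The correspondence between a pdf and the function \(v_\varepsilon (\cdot ,t)=w_\varepsilon (\cdot ,t)/h_\varepsilon (\cdot ,t)\) can be illustrated numerically. The following is a minimal sketch, assuming only that \(w\) is proportional to the tail probability \(P(X>x)\) and \(h\) to \(E[(X-x)^+]\), which is what the identity \(v(x)=E[X-x \ | \ X>x]^{-1}\) requires. The function name, the midpoint quadrature, and the truncation point `upper` are illustrative choices, not part of the model. For an exponential pdf, the computed \(v\) should be constant and equal to the reciprocal of the mean.

```python
import math

def inverse_mean_residual_life(pdf, x, upper, n=20000):
    """Approximate v(x) = P(X > x) / E[(X - x)^+] with the midpoint rule.

    The integrals over (x, infinity) are truncated at `upper`, which is an
    assumption that the pdf is negligible beyond that point.
    """
    step = (upper - x) / n
    tail_mass = 0.0   # approximates w(x) = integral of pdf(y) over y > x
    excess = 0.0      # approximates h(x) = integral of (y - x) * pdf(y) over y > x
    for i in range(n):
        y = x + (i + 0.5) * step
        p = pdf(y) * step
        tail_mass += p
        excess += (y - x) * p
    return tail_mass / excess

mean = 2.0
exp_pdf = lambda y: math.exp(-y / mean) / mean

v_at_0 = inverse_mean_residual_life(exp_pdf, 0.0, 60.0)
v_at_3 = inverse_mean_residual_life(exp_pdf, 3.0, 63.0)
```

Both values should be close to \(1/2\), reflecting the memoryless property of the exponential distribution.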

We can do a more systematic derivation of the model (2.62), (2.63) by beginning with the solution \(c_\varepsilon \) to the diffusive CP model (1.7), (1.8). With \(w_\varepsilon ,h_\varepsilon \) given by (2.49), we see on integration of (1.7) that \(w_\varepsilon \) is a solution to the PDE

If we integrate (2.66), then we obtain a PDE for \(h_\varepsilon \),

Setting \(h_\varepsilon (x,t)=\exp [-q_\varepsilon (x,t)]\), it follows from (2.67) that \(q_\varepsilon (x,t)\) is a solution to the PDE

If we differentiate (2.68) with respect to x, we obtain a PDE for the function \(v_\varepsilon (x,t)=\partial q_\varepsilon (x,t)/\partial x\), whence we have

For \(0<\nu \le 1\), we define the viscous CP model with viscosity \(\nu \) as the solution to the PDE

with boundary condition

and with the constraint

Assuming that (2.70), (2.71) has a classical solution, we show that if the initial data for (2.70) correspond to a random variable \(X_0\), then \(v_{\varepsilon ,\nu }(\cdot ,t)\) corresponds to a random variable \(X_t\) for \(t>0\) in the sense that \(v_{\varepsilon ,\nu }(x,t)=E[X_t-x \ | \ X_t>x]^{-1}, \ x\ge 0\). To see this we define \(\Gamma _{\varepsilon ,\nu }\) similarly to \(\Gamma _\varepsilon \) in (2.64) but with \(v_\varepsilon \) replaced by \(v_{\varepsilon ,\nu }\) on the RHS. It follows from (2.70), (2.71) that \(\Gamma _{\varepsilon ,\nu }\) satisfies the PDE

with Dirichlet boundary condition \(\Gamma _{\varepsilon ,\nu }(0,t)=0, \ t>0\). Hence by the maximum principle (Protter and Weinberger 1984), if \(\Gamma _{\varepsilon ,\nu }(\cdot ,0)\) is nonnegative, then \(\Gamma _{\varepsilon ,\nu }(\cdot ,t)\) is nonnegative for \(t>0\). We see from (2.64) that the nonnegativity of \(\Gamma _{\varepsilon ,\nu }(\cdot ,t)\) is equivalent to \(v_{\varepsilon ,\nu }(\cdot ,t)\) corresponding to a random variable \(X_t\). We have shown that for \(0<\nu \le 1\) the viscous CP model corresponds to the evolution of a random variable \(X_t, \ t\ge 0\). If \(\nu =1\) the model is identical to the diffusive CP model (1.7), (1.8) with Dirichlet condition \(c_\varepsilon (0,t)=0, \ t>0\).

We can think of the inviscid CP model (2.62), (2.63) as the limit of the viscous CP model (2.70), (2.71), (2.72) as the viscosity \(\nu \rightarrow 0\). It is not clear, however, what happens to the boundary condition (2.71) in this limit. Unless the initial data \(v_\varepsilon (\cdot ,0)\) for (2.62) are increasing, the solution \(v_\varepsilon (\cdot ,t)\) develops discontinuities at some finite time (Smoller 1994). For an entropy-satisfying solution \(v_\varepsilon \), discontinuities have the property that the solution jumps down across the discontinuity. Hence, if \(v_\varepsilon (\cdot ,t)\) is discontinuous at the point z, then

Observe now that for a random variable X, the function \(x\rightarrow E[X-x \ | \ X>x]\) has discontinuities precisely at the atoms of X. In that case, the function jumps up across the discontinuity. Since \(v_\varepsilon (x,t)=E[X_t-x \ | \ X_t>x]^{-1}\) for some random variable \(X_t\), it follows that at discontinuities of \(v_\varepsilon (\cdot ,t)\) the function jumps down. Thus, discontinuities of \(v_\varepsilon (\cdot ,t)\) correspond to atoms of \(X_t\), and the entropy condition for (2.62) is automatically satisfied.
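The jump behavior at atoms can be checked on a two-point random variable. The following is a minimal sketch; the function name and the example distribution (atoms at 1 and 3, each with probability 1/2) are illustrative choices.

```python
def mean_residual_life(values, probs, x):
    """Return E[X - x | X > x] for a discrete random variable X."""
    tail = [(v, p) for v, p in zip(values, probs) if v > x]
    mass = sum(p for _, p in tail)
    if mass == 0.0:
        raise ValueError("P(X > x) = 0, conditional expectation undefined")
    return sum((v - x) * p for v, p in tail) / mass

atoms, weights = [1.0, 3.0], [0.5, 0.5]

just_below = mean_residual_life(atoms, weights, 1.0 - 1e-6)  # both atoms lie above x
at_atom = mean_residual_life(atoms, weights, 1.0)            # only the atom at 3 lies above x
```

Here `just_below` is approximately 1 while `at_atom` equals 2, so \(x\rightarrow E[X-x \ | \ X>x]\) jumps up at the atom \(x=1\) and its reciprocal jumps down from approximately 1 to 1/2.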

We have already observed that the function \(t\rightarrow \Lambda _0(t)\) in the CP model (1.1), (1.2) is increasing and that the function \(t\rightarrow \Lambda _\varepsilon (t)\) in the diffusive CP model (1.7), (1.8) is also increasing. To determine whether the function \(t\rightarrow \Lambda _{\varepsilon ,\nu }(t)\) in the viscous CP model (2.70)–(2.72) is increasing, we observe on setting \(x=0\) in (2.70) and using (2.72) that \(v_{\varepsilon ,\nu }(0,t)\) satisfies the equation

We have already seen that \(\Gamma _{\varepsilon ,\nu }(\cdot ,t)\) is a nonnegative function, and from (2.71), it follows that \(\Gamma _{\varepsilon ,\nu }(0,t)=0\) for \(t>0\). Hence, \(\partial \Gamma _{\varepsilon ,\nu }(0,t)/\partial x\ge 0\) for \(t>0\). We conclude then from (2.75) that the function \(t\rightarrow v_{\varepsilon ,\nu }(0,t)\) is decreasing provided

Thus, from (2.72) we see that if (2.76) holds, then the function \(t\rightarrow \Lambda _{\varepsilon ,\nu }(t)\) is increasing. Note that in the case of the diffusive CP model when \(\nu =1\) the condition (2.76) is redundant.

3 The Inviscid CP Model—Proof of Theorem 1.1

We shall restrict ourselves here to solutions of (2.62), (2.63) for which the initial data \(v_\varepsilon (\cdot ,0)\) are nonnegative and increasing, and the function \(\Gamma _\varepsilon (\cdot , 0)\) of (2.64) is also nonnegative. The condition (2.76) now becomes \(v_\varepsilon (0,t)\le \varepsilon ^{-1}\). Assuming this also holds, (2.62) may be solved by the method of characteristics. To carry this out, we set \(\tilde{v}_\varepsilon (x,t)=m_{1,1/\Lambda _\varepsilon }(t)v_\varepsilon (x,t)\), where \(m_{1,A}(\cdot )\) is defined by (2.2). Then, (2.62) is equivalent to

From (3.1), it follows that if \(x(s), \ s\ge 0,\) is a solution to the ODE

and characteristics do not intersect, then \(\tilde{v}_\varepsilon (x(t),t) =\tilde{v}_\varepsilon (x(0),0)\) for \(t\ge 0\). We can therefore calculate the characteristics of (3.1) by setting \(\tilde{v}_\varepsilon (x(s),s)=\tilde{v}_\varepsilon (x(0),0)=v_\varepsilon (x(0),0)\). We define the function \(F_{\varepsilon ,A}(x,t, v_0(\cdot ))\) depending on \(x,t\ge 0\) and an increasing function \(v_0:[0,\infty )\rightarrow (0,\infty )\) by

where \(F_A\) is given by (2.2) and \(\sigma _A^2(\cdot )\) by (4.10). Since \(v_0(\cdot )\) is an increasing function, there is a unique solution z to (3.3) for all \(x\ge 0\) provided \(v_0(0)\le \varepsilon ^{-1} m_{1,A}(t)m_{2,A}(t)/\sigma _A^2(t)\). If this condition holds, then the method of characteristics now yields the solution to (2.62) as

with \(A=1/\Lambda _\varepsilon \). It follows from (3.3), (3.4) that \(v_\varepsilon (0,t) \le m_{2,A}(t)/\varepsilon \sigma _A^2(t)\le \varepsilon ^{-1}\).
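The structure of this construction (solve an implicit equation for the foot z of the characteristic, then read off the initial data there) can be sketched on a simplified problem. The code below treats the plain inviscid Burgers equation \(u_t+uu_x=0\) on the half-line, which is an assumption made for illustration: it omits the linear drift, the factor \(\varepsilon \), and the coupling through \(\Lambda _\varepsilon \) that appear in (2.62), (2.63). The function name and the bisection solver are likewise illustrative.

```python
def burgers_by_characteristics(u0, x, t, iterations=200):
    """Evaluate the solution of u_t + u u_x = 0 at (x, t) for increasing u0 >= 0.

    The foot z of the characteristic through (x, t) solves z + t * u0(z) = x.
    Since u0 is increasing, the left side is strictly increasing in z, so the
    root is unique; it exists in [0, x] provided x >= t * u0(0).
    """
    if x < t * u0(0.0):
        raise ValueError("characteristic through (x, t) starts outside [0, infinity)")
    lo, hi = 0.0, x
    for _ in range(iterations):      # plain bisection on the monotone function
        mid = 0.5 * (lo + hi)
        if mid + t * u0(mid) < x:
            lo = mid
        else:
            hi = mid
    return u0(0.5 * (lo + hi))

# Linear data u0(z) = 1 + z gives z = (x - t)/(1 + t), hence u = (1 + x)/(1 + t).
u_value = burgers_by_characteristics(lambda z: 1.0 + z, 3.0, 1.0)
```

The existence condition \(x\ge tu_0(0)\) in this toy problem plays the role of the restriction on \(v_0(0)\) stated above for (3.3).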

We wish to prove a global existence and uniqueness theorem for solutions of (2.62), (2.63). To describe our assumptions on the initial data \(v_\varepsilon (\cdot ,0)\), we shall consider functions \(v_0:[0,x_\infty )\rightarrow \mathbf {R}^+\) with the properties:

Note that (3.6) implies that \(v_0(\cdot )\) is locally Lipschitz continuous in the interval \([0,x_\infty )\).

Lemma 3.1

Assume the function \( v_0(\cdot )=v_\varepsilon (\cdot ,0)\) satisfies (3.5), (3.6) and in addition that \((1+\delta _0)v_\varepsilon (0,0)<\varepsilon ^{-1}\) for some \(\delta _0>0\). Then, there exists \(\delta _1>0\) depending only on \(\delta _0\) such that there is a unique solution to (2.62), (2.63) for \(0\le t\le T=\delta _1/v_\varepsilon (0,0)\).

Proof

Let \(T,\delta _2>0\) and \(\mathcal {E}\) be the space of continuous functions \(V:[0,T]\rightarrow \mathbf {R}^+\) satisfying

For \(V\in \mathcal {E}\), we define a function \(\mathcal {B}V(t), \ 0\le t\le T,\) by \(\mathcal {B}V(t)=v_\varepsilon (0,t)\) where \(v_\varepsilon \) is the function (3.4) with \(A(s)=V(s), \ 0\le s\le T\). We shall show that if \(T>0\) is sufficiently small then \(V\in \mathcal {E}\) implies \(\mathcal {B}V\in \mathcal {E}\). To see this, we first observe from (3.3) that \(\mathcal {B}V(0)=v_\varepsilon (0,0)\). Next we note that for a function \(v_0(\cdot )\) satisfying (3.5), (3.6), one has

Since \(V\in \mathcal {E}\), it follows from (3.7) that, with \(A(\cdot )=V(\cdot )\),

Similarly, we have that

From (3.9), (3.10), we have for \(\delta _1,\delta _2>0\) sufficiently small, depending only on \(\delta _0\), that

Hence, there is a unique solution \(z(t)\le m_{2,A}(t)/m_{1,A}(t)\) to (3.3) with \(x=0\) provided \(0\le t\le T\). Since \(\mathcal {B}V(t) =v_\varepsilon (z(t),0)/m_{1,A}(t)\), it follows from (3.8), (3.9), on choosing \(\delta _1>0\) sufficiently small depending only on \(\delta _2\), that the function \(\mathcal {B}V(t), \ 0\le t\le T\), also satisfies (3.7).

Next we show that \(\mathcal {B}\) is a contraction on the space \(\mathcal {E}\) with metric \(d(V_1,V_2)=\sup _{0\le t\le T}|V_2(t)-V_1(t)|\) for \(V_1,V_2\in \mathcal {E}\). To see this, let \(z_1(t), \ z_2(t), \ 0\le t\le T,\) be the solutions to (3.3) with \(x=0\) corresponding to \(V_1,V_2\in \mathcal {E}\), respectively. Then, from (3.4) we have

The second term on the RHS of (3.12) is bounded as

We use (3.6) to bound the first term in (3.12). Thus, we have that

From (3.3), (3.5), it follows that

The RHS of (3.15) can be bounded similarly to (3.13), and so we obtain the inequality

It follows from (3.12)–(3.16) that

provided \(\delta _1>0\) is chosen sufficiently small depending only on \(\delta _2\). Evidently, \(\mathcal {B}\) is a contraction mapping on \(\mathcal {E}\) and therefore has a unique fixed point if one also has \(10\delta _1<1\). \(\square \)
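The fixed-point mechanism used in this proof can be illustrated on a toy example. The sketch below is not the map \(\mathcal {B}\) of the lemma: it assumes the hypothetical map \(V\mapsto 1+\tfrac{1}{2}\int _0^tV(s) \ \hbox {d}s\) on continuous functions on [0, 1], which is a contraction in the sup metric with constant 1/2 and has the unique fixed point \(V(t)=e^{t/2}\). The grid size and the trapezoidal quadrature are illustrative choices.

```python
import math

def picard_fixed_point(n=200, iterations=60):
    """Iterate V -> 1 + 0.5 * integral_0^t V(s) ds on a uniform grid of [0, 1]."""
    h = 1.0 / n
    V = [1.0] * (n + 1)                 # starting guess: the constant function 1
    for _ in range(iterations):
        integral = 0.0
        new_V = [1.0]                   # the map always returns the value 1 at t = 0
        for i in range(1, n + 1):
            integral += 0.5 * h * (V[i - 1] + V[i])   # trapezoidal rule
            new_V.append(1.0 + 0.5 * integral)
        V = new_V
    return V

V = picard_fixed_point()
error_at_one = abs(V[-1] - math.exp(0.5))
```

Successive iterates differ by at most half the previous difference in the sup metric, so the iteration converges geometrically, and `error_at_one` is limited only by the quadrature error.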

Lemma 3.2

Let \(v_\varepsilon (x,t), \ x\ge 0, \ 0\le t\le T\) be the solution to (2.62), (2.63) constructed in Lemma 3.1. Then, for any t satisfying \(0< t\le T\) the function \(v_0(\cdot )=v_\varepsilon (\cdot ,t)\) satisfies (3.5), (3.6) with \(x_\infty =\infty \). In addition, the function \(t\rightarrow v_\varepsilon (0,t), \ 0\le t\le T,\) is continuous and decreasing.

Proof

Since \(\varepsilon >0\), it follows from the fact that (3.5) holds for \(v_0(\cdot )=v_\varepsilon (\cdot ,0)\) that (3.3) has a unique solution \(z<x_\infty \) for any \(x>0\). Hence, \(x_\infty =\infty \) if \(t>0\), and it is also clear that the function \(x\rightarrow v_\varepsilon (x,t), \ x\ge 0,\) is increasing. We have therefore shown that (3.5) holds for \(v_0(\cdot )=v_\varepsilon (\cdot ,t)\) and \(x_\infty =\infty \) if \(0<t\le T\).

Next we wish to show that (3.6) holds for \(v_0(\cdot )=v_\varepsilon (\cdot ,t)\) with \(0<t\le T\). To see this, we observe from (3.4), (3.6) that for \(0\le x_1\le x_2<\infty \),

where \(z(x,t)=F_{\varepsilon ,1/\Lambda _\varepsilon }(x,t,v_\varepsilon (\cdot ,0))\). We see from (3.3) that \(0\le \partial z(x,t)/\partial x \le 1/m_{1,1/\Lambda _\varepsilon }(t)\), whence

To show that the function \(t\rightarrow v_\varepsilon (0,t)\) is continuous and decreasing, we write \(v_\varepsilon (0,t)=v_0(z(t))/m_{1,1/\Lambda _\varepsilon }(t)\) where \(v_0(\cdot )=v_\varepsilon (\cdot ,0)\) and z(t) is the solution z to (3.3) with \(x=0\). We see from (3.3) that the function \(t\rightarrow z(t)\) is Lipschitz continuous, whence the function \(t\rightarrow v_\varepsilon (0,t)\) is continuous. If \(z\rightarrow v_0(z)\) is differentiable at \(z=z(t)\), then it follows from (2.2) that

Differentiating (3.3) with respect to t at \(x=0\), we obtain the equation

Hence, (3.20), (3.21) imply that

From (2.63), (3.4), (3.6), we have that

We conclude from (3.22), (3.23) that \(\partial v_\varepsilon (0,t)/\partial t\le 0\). In the case when the function \(z\rightarrow v_0(z)\) is not differentiable at \(z=z(t)\), we can do an approximation argument to see that the function \(s\rightarrow v_\varepsilon (0,s)\) is decreasing close to \(s=t\). We have therefore shown that the function \(t\rightarrow v_\varepsilon (0,t)\) is decreasing, whence \(v_\varepsilon (0,t)\le v_\varepsilon (0,0)< \varepsilon ^{-1}\) for \(0\le t\le T\). Since the RHS of (3.21) is the same as \([1-\varepsilon v_\varepsilon (0,t)]/m_{1,1/\Lambda _\varepsilon }(t)\), this implies that the function \(t\rightarrow z(t)\) is increasing. \(\square \)

Proposition 3.1

Assume the initial data for (2.62), (2.63) satisfy the conditions of Lemma 3.1. Then, there exists a unique continuous solution \(v_\varepsilon (x,t), \ x,t\ge 0,\) globally in time to (2.62), (2.63). The solution \(v_\varepsilon (\cdot ,t)\) satisfies (3.5), (3.6) for \(t>0\) with \(x_\infty =\infty \), and the function \(t\rightarrow v_\varepsilon (0,t)\) is decreasing. Furthermore, there is a constant \(C(\delta _0)\) depending only on \(\delta _0\) such that \(\Lambda _\varepsilon (t)\le \Lambda _\varepsilon (0)+C(\delta _0)[\Lambda _\varepsilon (0)+t], \ t\ge 0\).

Proof

The global existence and uniqueness follow immediately from Lemmas 3.1, 3.2 upon using the fact that the function \(t\rightarrow v_\varepsilon (0,t)\) is decreasing. To get the upper bound on the function \(\Lambda _\varepsilon (\cdot )\), we observe that Lemma 3.1 implies that with \(T_0=0,\)

It follows from (3.24) that

We also have that

From (3.25), (3.26), we conclude that \(\Lambda _\varepsilon (t) \le (1+\delta _2)^2t/\delta _1\) provided \(t\ge T_1\), whence the result follows. \(\square \)

The upper bound on the coarsening rate implied by Proposition 3.1 is independent of \(\varepsilon >0\) as \(\varepsilon \rightarrow 0\). We can see from (3.22) that a lower bound on the rate of coarsening depends on \(\varepsilon \). In fact, if we choose \(v_0(z)=1/[\Lambda _\varepsilon (0)-z], \ 0<z<\Lambda _\varepsilon (0),\) then \(v'_0(z)=v_0(z)^2\), and so at \(t=0\) the RHS of (3.22) is zero if \(\varepsilon =0\). The random variable \(X_0\) corresponding to this initial data is simply \(X_0\equiv \mathrm{constant}\), and it is easy to see that if \(\varepsilon =0\), then \(X_t\equiv X_0\) for all \(t>0\). For \(\varepsilon >0\), however, we have the following:

Lemma 3.3

Assume the initial data for (2.62), (2.63) satisfy the conditions of Lemma 3.1, and let z(t) be as in Lemma 3.2. Then, \(\lim _{t\rightarrow \infty } z(t)=x_\infty \), and if \(\varepsilon >0\), one has \(\lim _{t\rightarrow \infty }\Lambda _\varepsilon (t)=\infty \).

Proof

Let \(\lim _{t\rightarrow \infty }\Lambda _\varepsilon (t)=\Lambda _{\varepsilon ,\infty }\) and assume first that \(\Lambda _{\varepsilon ,\infty }<\infty \). In that case \(\lim _{t\rightarrow \infty } m_{1,1/\Lambda _\varepsilon }(t)=\infty \), and hence, (2.63), (3.4) imply that \(\lim _{t\rightarrow \infty }v_0(z(t))=\infty \). We conclude from (3.5), (3.6) that if \(\Lambda _{\varepsilon ,\infty }<\infty \), then \(\lim _{t\rightarrow \infty } z(t)=x_\infty \). We also have from (2.2) that

It follows now from (3.3), (3.27) that if \(\varepsilon >0\), then \(\limsup _{t\rightarrow \infty } v_0(z(t))\le 2\Lambda _{\varepsilon ,\infty }/\varepsilon \Lambda _\varepsilon (0)<\infty \), which yields a contradiction.

Next we assume that \(\Lambda _{\varepsilon ,\infty }=\infty \), which we have just shown always holds if \(\varepsilon >0\). The function \(t\rightarrow z(t)\) is increasing, and let us suppose that \(\lim _{t\rightarrow \infty } z(t)=z_\infty <x_\infty \). Then, from (2.63), (3.4) we have that \(\lim _{t\rightarrow \infty } m_{1,1/\Lambda _\varepsilon }(t)=\infty \). We use the fact that for any \(T\ge 0,\) there exists a constant \(K_T\) such that

From (3.3), (3.28), we obtain the inequality

Choosing T sufficiently large so that \( m_{1,1/\Lambda _\varepsilon }(T)\ge 2\varepsilon v_0(z_\infty )\), we conclude from (3.28), (3.29) that there is a constant \(C_1\) such that \(\sigma _{1/\Lambda _\varepsilon }^2(t)/m_{1,1/\Lambda _\varepsilon }(t)^2\le C_1\) for \(t\ge T\). If we also choose T such that \(m_{1,1/\Lambda _\varepsilon }(t)\ge \varepsilon v_0(z(t))/2\) for \(t\ge T\), we have from (3.6), (3.21) that

Since the function \(t\rightarrow z(t)\) is increasing and \(\lim _{t\rightarrow \infty } z(t)=z_\infty <\infty \), it follows from (2.63), (3.4), (3.30) that there is a constant \(C_2\) such that

However, (3.31) implies that \(\lim _{t\rightarrow \infty } m_{1,1/\Lambda _\varepsilon }(t)\le \exp [C_2]\) and so we have again a contradiction. We conclude that \(\lim _{t\rightarrow \infty } z(t)=x_\infty \). \(\square \)

Lemma 3.4

Assume the initial data for (2.62), (2.63) satisfy the conditions of Lemma 3.1 with \(x_\infty =\infty \), and that for \(0<\delta \le 1\), one has

Then, \(\lim _{t\rightarrow \infty } \Lambda _\varepsilon (t)/t=1\) for any \(\varepsilon \ge 0\).

Proof

The main point about the condition (3.32) is that it is invariant under the dynamics determined by (2.62), (2.63). It is easy to see this in the case \(\varepsilon =0\) since we have, on using the notation of (3.3), that

For \(\varepsilon >0\), we have

Since the function \(x\rightarrow v_\varepsilon (x,0)\) is increasing, it follows from (3.3) that

We conclude now from (3.4), (3.34), (3.35) that

From Lemma 3.3 and (3.36), there exists \(T_0\ge 0\) such that

We use (3.37) to estimate \(\Lambda _\varepsilon (T_0+t)/\Lambda _\varepsilon (T_0)\) in the interval \(0\le t\le \delta /v_\varepsilon (0,T_0)\). Thus, we have

We conclude from (3.37), (3.38) that

On integrating (3.39), we have

Hence, (3.38), (3.40) imply that

We define now \(T_1>T_0\) as the minimum time \(T_1=T_0+t\) such that \(\Lambda _\varepsilon (T_0+t)\ge (1+\delta /2)\Lambda _\varepsilon (T_0)\). The inequality (3.41) now yields bounds on \(T_1-T_0\) as

provided the RHS of (3.42) is less than \(\delta \). In view of (3.32), this will be the case if \(\delta >0\) is sufficiently small. We can iterate the inequality (3.42) by defining \(T_k, \ k=1,2,\ldots ,\) as the minimum time such that \(\Lambda _\varepsilon (T_k)\ge (1+\delta /2)\Lambda _\varepsilon (T_{k-1})\). Thus, we have that

On summing (3.43) over \(k=1,\ldots ,N\), we conclude that

It follows from (3.44) that

Now using the fact that \(\lim _{\delta \rightarrow 0}\gamma (\delta )/\delta =0\), we conclude from (3.45) that \(\lim _{t\rightarrow \infty }\Lambda _\varepsilon (t)/t=1\). \(\square \)

Remark 3

Theorem 5.4 of Carr and Penrose (1998) implies in the case \(\varepsilon =0\) convergence to the exponential self-similar solution for initial data \(v_\varepsilon (x,0), \ x\ge 0,\) which has the property \(\lim _{x\rightarrow \infty } v_\varepsilon (x,0)/x^\alpha =v_{\varepsilon ,\infty }\) with \(0<v_{\varepsilon ,\infty }<\infty \) provided \(\alpha >-1\). It is easy to see that if \(\alpha \ge 0\), then such initial data satisfy the condition (3.32) of Lemma 3.4.

In Carr (2006), necessary and sufficient conditions—(5.18) and (5.19) of Carr (2006)—for convergence to the exponential self-similar solution are obtained in the case \(\varepsilon =0\). Note that (5.19) of Carr (2006) implies the condition (3.32) of Lemma 3.4.

Next we obtain a rate of convergence theorem for \(\lim _{t\rightarrow \infty }\Lambda _\varepsilon (t)/t\) which generalizes Proposition 2.1 to the system (2.62), (2.63). We assume that the function \(x\rightarrow v_\varepsilon (x,0)\) is \(C^1\) for large x, in which case the condition (3.32) becomes \(\lim _{x\rightarrow \infty } v_\varepsilon (x,0)^{-2}\partial v_\varepsilon (x,0)/\partial x=0\), or equivalently \(\lim _{x\rightarrow \infty }\beta _{X_0}(x)=1\) for the initial condition random variable \(X_0\). More precisely, we have that if \(v_\varepsilon (x,0)^{-2}\partial v_\varepsilon (x,0)/\partial x\le \eta \) for \(x\ge x_\eta \), then

The inequality (3.46) implies (3.32) holds with \(\gamma (\delta )\le \eta \delta /(1-\eta \delta )\). We conclude that (3.32) holds if \(\lim _{x\rightarrow \infty } v_\varepsilon (x,0)^{-2}\partial v_\varepsilon (x,0)/\partial x=0\).
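The step from the derivative bound to the stated bound on \(\gamma (\delta )\) can be sketched as follows. The displays (3.32) and (3.46) are not reproduced in this section, so the form of the final inequality below is an assumption inferred from the bound \(\gamma (\delta )\le \eta \delta /(1-\eta \delta )\). We write \(v\) for \(v_\varepsilon (\cdot ,0)\) and assume \(\eta \delta <1\).

```latex
\begin{align*}
\frac{d}{dx}\Big[-\frac{1}{v(x)}\Big]=\frac{v'(x)}{v(x)^2}\le \eta \ \ (x\ge x_\eta )
 \quad &\Longrightarrow \quad
 \frac{1}{v(x)}-\frac{1}{v(x+y)}\le \eta y, \qquad y\ge 0, \\
\text{taking } y=\frac{\delta }{v(x)}
 \quad &\Longrightarrow \quad
 v\Big(x+\frac{\delta }{v(x)}\Big)\le \frac{v(x)}{1-\eta \delta }
 =\Big[1+\frac{\eta \delta }{1-\eta \delta }\Big]v(x).
\end{align*}
```

Since \(\eta \) may be taken arbitrarily small when the limit of \(v^{-2}\partial v/\partial x\) is zero, the ratio \(\gamma (\delta )/\delta \) can be made arbitrarily small.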

The condition on the initial data to guarantee a logarithmic rate of convergence for \(\Lambda _\varepsilon (t)/t\) is similar to (3.32). We require that there exist \(\delta ,\gamma (\delta ),x_\delta >0\) such that

Observe that if (3.47) holds for arbitrarily small \(\delta >0\) and \(\liminf _{\delta \rightarrow 0}x_\delta =x_0\), then the function \(x\rightarrow 1/v_\varepsilon (x,0)\) is convex for \(x\ge x_0\). Furthermore, if the function \(x\rightarrow v_\varepsilon (x,0)\) is \(C^2\), then on taking \(\delta \rightarrow 0\) in (3.47) we obtain the second-order differential inequality

Suppose now that (3.48) holds with \(\gamma (0) = \eta >0\) for \(x\ge x_0\). Then, we have that

On integrating (3.49) and using the fact that the function \(x\rightarrow v_\varepsilon (x,0)\) is increasing, we conclude that (3.47) holds for all \(\delta >0\) with \(\gamma (\delta )=\eta \) and \(x_\delta =x_0\).

It is easy to see that (3.48) is invariant under affine transformations. That is, if the function \(x\rightarrow v_\varepsilon (x,0)\) satisfies (3.48) for all \(x>0\), then for any \(\lambda ,k>0\) so does the function \(x\rightarrow \lambda v_\varepsilon (\lambda x+k,0)\). We can solve the differential equation determined by equality in (3.48). The solution is given by the formula

Since we require \(\gamma (0)>0\), it follows from (3.48) that \(\alpha \) must satisfy either \(\alpha > 0\) or \(\alpha <-1\). Note that the function \(x\rightarrow 1/v_\varepsilon (x,0)\) of (3.50) is convex precisely for this range of \(\alpha \) values.
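The convexity statement can be checked directly. The following worked computation is a sketch under an assumption: since the display (3.50) is not reproduced here, we take the pure power law \(v(x)=x^\alpha \), \(x>0\), as a hypothetical representative of the family, which is consistent with the affine invariance noted above.

```latex
\begin{align*}
\frac{d^2}{dx^2}\Big[\frac{1}{v}\Big] &= \frac{2(v')^2-v\,v''}{v^3},
 \qquad \text{so for } v>0,\ v'\ne 0: \quad
 \frac{1}{v} \ \text{convex} \iff \frac{v\,v''}{(v')^2}\le 2, \\
v(x)=x^\alpha : \qquad
 \frac{v\,v''}{(v')^2} &= \frac{\alpha -1}{\alpha }=1-\frac{1}{\alpha },
 \qquad
 \frac{d^2}{dx^2}\,x^{-\alpha }=\alpha (\alpha +1)\,x^{-\alpha -2}.
\end{align*}
```

The second derivative of \(x^{-\alpha }\) is strictly positive exactly when \(\alpha >0\) or \(\alpha <-1\), which is the range of \(\alpha \) stated above.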

Lemma 3.5

Assume the initial data \(x\rightarrow v_\varepsilon (x,0)\) for (2.62), (2.63) are \(C^1\) and increasing, and that the function \(x\rightarrow 1/v_\varepsilon (x,0)\) is convex for sufficiently large x. Assume further that there exist \(\delta ,\gamma (\delta ),x_\delta >0\) such that (3.47) holds. Then, there exist constants \(C_0,t_0>0\) such that

Proof

Since the inequality (3.47) is invariant under affine transformations, we see as in Lemma 3.4 that in the case \(\varepsilon =0\) there exists \(T_0>0\) such that if \(t\ge T_0\) the function \(x\rightarrow 1/v_\varepsilon (x,t)\) is convex for \(x\ge 0\), and

Next observe that since \(\lim _{x\rightarrow \infty } v_\varepsilon (x,0)^{-2}\partial v_\varepsilon (x,0)/\partial x=0\), we may for any \(\nu >0\) choose \(T_0\) such that \(v_\varepsilon (x,T_0)^{-2}\partial v_\varepsilon (x,T_0)/\partial x\le \nu \) for \(x\ge 0\). It follows then from (3.46) that

Hence, as in (3.39) we see from (3.38), (3.53) that

Integrating (3.54), we conclude that for \(0\le t< \Lambda _\varepsilon (T_0)/\nu \),

Using the inequality \(-\log (1-z)\le 3z/2\) when \(0\le z\le 1/3\), we conclude from (3.55) that

Similarly to (3.41), we have from (3.56) that

In the case \(\varepsilon =0\), the LHS of (3.56) is \(\hbox {d}z(t)/\hbox {d}t\), so on integration we have that

We choose now \(\nu \) sufficiently small so that \(2\log [1+1/2\nu ]/3>\delta \), and let \(T_1\) be the minimum \(T_0+t\) such that \(z(t)\ge \delta /v_\varepsilon (0,T_0)\). Then, we have that

Furthermore, (3.57) implies that \(\Lambda _\varepsilon (T_1)/\Lambda _\varepsilon (T_0)\le 1+1/2\nu \). We now iterate the foregoing to yield a sequence of times \(T_k, \ k=1,2,\ldots ,\) with the properties that

It follows from (3.60) that

The inequality (3.61) implies the lower bound in (3.51) since the function \(t\rightarrow \hbox {d}\Lambda _\varepsilon (t)/\hbox {d}t\) is increasing for \(t\ge T_0\).

To deal with \(\varepsilon >0\), we first assume that the function \(x\rightarrow v_\varepsilon (x,0)\) is \(C^2\) for \(x>0\). Letting z(x, t) be the solution to (3.3) we have that

We also have that

It follows from (3.62)–(3.65) that the ratio (3.48) for the function \(x\rightarrow v_\varepsilon (x,t)\) is given by

Hence, if (3.48) holds for all sufficiently large x, then Lemma 3.3 implies that there exists \(T_0>0\) such that the RHS of (3.66) is bounded above by 2 for \(x\ge 0,t\ge T_0\). It follows that the function \(x\rightarrow 1/v_\varepsilon (x,t)\) is convex for \(x\ge 0\) provided \(t\ge T_0\). Observe that if \(0<\gamma (0)\le 2\) in (3.48), then the RHS of (3.66) is bounded above by \(2-\gamma (0)\). However, if \(\gamma (0)>2\), then we can only bound the RHS above by 0. We have shown that if (3.48) holds for all sufficiently large x, then there exists \(T_0>0\) such that

The identity (3.66) applied to the example (3.50) provides us with an illustration of how the selection principle operates. In the case \(1<\gamma (0)<2\), which corresponds to \(\alpha >1\) in (3.50), one has \(\lim _{z\rightarrow \infty }\partial v_\varepsilon (z,0)/\partial z=\infty \), so for \(t>0\) the RHS of (3.66) converges to 0 as \(x\rightarrow \infty \). Thus, if the initial data have \(\alpha >1\), then for \(t>0\) we expect the function \(v_\varepsilon (x,t)\) to behave linearly in x at large x.

In the case when we only assume that the function \(x\rightarrow v_\varepsilon (x,0)\) is \(C^1\) for \(x>0\), we can make a more careful version of the argument of the previous paragraph. We have now from (3.62), (3.64) that

Since the function \(x\rightarrow z(x,t)\) is increasing for \(x\ge 0\), it follows that the second function on the RHS of (3.68) is increasing for \(x\ge 0\). Since we are assuming that the function \(z\rightarrow 1/v_\varepsilon (z,0)\) is convex for all large z, it follows that the first function on the RHS of (3.68) is also increasing for \(x\ge 0\) provided \(t\ge T_0\) and \(T_0\) is sufficiently large. We conclude that the function \(x\rightarrow 1/v_\varepsilon (x,t)\) is convex for \(x\ge 0\) provided \(t\ge T_0\).

We can also obtain an inequality (3.52) for a \(\delta \) which is twice the \(\delta \) which occurs in (3.47). To show this, we consider two possibilities. In the first of these (3.52) follows from the monotonicity of the second function on the RHS of (3.68). We use the inequality

which follows from (3.4), (3.62), the monotonicity of the function \(z\rightarrow v_\varepsilon (z,0)\), and Taylor’s formula. Observe next from the convexity of the function \(z\rightarrow 1/v_\varepsilon (z,0)\) that for \(0<\rho '<\rho \),

Furthermore, we have similarly to (3.53) that for \(\nu >0\) there exists \(T_0>0\) such that for \(0<\rho '<\rho \) and \(t\ge T_0\),

Now let us assume that \(t\ge T_0\) and

Then, (3.70), (3.71) imply upon setting \(\eta =\delta /2\) in (3.69) and choosing \(\nu \) less than some constant depending only on \(\delta \), that the second term on the RHS is bounded below by \(\delta /6\) for \(t\ge T_0\). This implies that (3.52) holds with \(\gamma (\delta )=1/6\).

Alternatively, we assume that (3.72) does not hold. Then on choosing \(\nu \) sufficiently small, depending only on \(\delta \), we see that (3.62), (3.70), (3.71) imply

Then, we use the first term on the RHS of (3.68) and (3.47), (3.73) to establish (3.52) with \(2\delta \) in place of \(\delta \). We have proved then that there exists \(T_0>0\) such that (3.52) holds (with \(\delta \) replaced by \(2\delta \)).

We wish next to establish an inequality like (3.59) in the case \(\varepsilon >0\), in which case we need to examine the terms of (3.21) that depend on \(\varepsilon \). Using the notation of (4.27), (4.28) the \(\varepsilon \) dependent coefficient on the LHS of (3.21) is given by

The \(\varepsilon \) dependent coefficient on the RHS of (3.21) is given by

We choose now \(T_0\) large enough so that \(\varepsilon /\Lambda _\varepsilon (T_0)<1/2\), whence (3.75) implies that the term in brackets on the RHS of (3.21) is at least 1/2. We also have from (3.74) that

Now using (4.27), we conclude from (3.76) that for any \(K>0\),

It follows from (3.52), (3.62), (3.77) that there exists \(T_1>T_0\) such that (3.59) holds. Therefore, we can define a sequence \( T_k, \ k=1,2,\ldots ,\) of times having the properties (3.60).

In order to estimate \(\hbox {d}\Lambda _\varepsilon (T_{k-1}+t)/\hbox {d}t\) for \(0\le t\le T_k-T_{k-1}\), we need to examine the terms of (3.22) that depend on \(\varepsilon \). Similarly to (3.77), we have from (2.63), (3.22) and the convexity of the function \(x\rightarrow 1/v_\varepsilon (x,T_{k-1})\) that

Noting from (3.41) that \(\Lambda _\varepsilon (T_k)\) grows exponentially in k, we conclude from (3.60), (3.61) and (3.78) that the lower bound in (3.51) holds. To obtain the upper bound in (3.51), we use the identity

obtained from (2.62), (2.63). Evidently, the RHS of (3.79) does not exceed 1. \(\square \)

Lemma 3.6

Assume \(\varepsilon >0\) and the initial data for (2.62), (2.63) satisfy the conditions of Lemma 3.1 with \(x_\infty <\infty \). Then, for any \(t>0\) the function \(x\rightarrow v_\varepsilon (x,t)\) satisfies \(\lim _{x\rightarrow \infty } v_\varepsilon (x,t)/x=v_\infty (t)\) for some \(v_\infty (t)>0\).

Assume in addition that the initial data are \(C^1\), the function \(x\rightarrow 1/v_\varepsilon (x,0)\) is convex for x sufficiently close to \(x_\infty \), and \(\liminf _{x\rightarrow x_\infty } \partial v_\varepsilon (x,0)/\partial x>0\). Then, for any \(t>0\) the function \(x\rightarrow 1/v_\varepsilon (x,t)\) is convex for x sufficiently large, and the inequality (3.47) holds for all \(\delta >0\).

Proof

From (3.3), (3.4), we have that

Hence, \(\lim _{x\rightarrow \infty } v_\varepsilon (x,t)/x=1/\varepsilon \sigma _{1/\Lambda _\varepsilon }^2(t)\).

It is easy to see from our assumptions that (3.47) is satisfied if \(v_\varepsilon (x,0)\) is \(C^2\) for x sufficiently close to \(x_\infty \). In that case, it follows from the convexity of the function \(x\rightarrow 1/v_\varepsilon (x,0)\) close to \(x_\infty \) and (3.66) that there exists \(\eta (t)>0\) and \(x_\eta (t)\) with

for \(x>x_\eta (t)\). If we only assume the function \(x\rightarrow v_\varepsilon (x,t)\) is \(C^1\), then we use (3.68), whence (3.47) follows from (3.69). \(\square \)

Proof of Theorem 1.1:

Note the assumption that the function \(x\rightarrow E[X_0-x \ | \ X_0>x]\) is decreasing implies that the initial data \(v_\varepsilon (\cdot ,0)\) for (2.62), (2.63) are continuous and increasing. Now \(\lim _{t\rightarrow \infty }\langle X_t\rangle /t=1\) follows from Lemma 3.4, the remark following it and Lemma 3.6.

To prove (1.12), we first observe from (2.65) that

Since the function \(x\rightarrow v_\varepsilon (x,t)\) is increasing, it follows from (3.82) that

The lower bound in (1.12) follows if we can show that there exists \(T>0\) such that we may take \(\delta =M\) in (3.37) with \(2\gamma (\delta )<\eta \) provided \(t>T\). This is a consequence of (3.36) since (3.80) implies that we may assume there are constants \(\lambda _0>0\) and \(k_1,k_2\) such that the initial data satisfy an inequality \(\lambda _0x+k_1\le v_\varepsilon (x,0)\le \lambda _0x+k_2\) at large x. The upper bound in (1.12) can be obtained similarly. Thus, we have from (3.82) that

Now we argue as in the lower bound since \(x\ge mt\) implies that \(xv_\varepsilon (0,t)\ge m\).

To prove the logarithmic rate of convergence, we first observe that the inequality (1.14) follows from Lemmas 3.5 and 3.6. Now from the argument of Lemma 3.5, we see that there exists \(C,T>0\) such that for \(t\ge T\), the function \(x\rightarrow v_\varepsilon (x,t)^{-2}\partial v_\varepsilon (x,t)/\partial x\) is positive decreasing and satisfies the inequality

From (3.85), we see that

4 Representations of Green’s Functions

Let \(b:\mathbf {R}\times \mathbf {R}\rightarrow \mathbf {R}\) be a continuous function which satisfies the uniform Lipschitz condition

for some constant \(A_\infty \). Then, the terminal value problem

has a unique solution \(u_\varepsilon \) which has the representation

where \(G_\varepsilon \) is the Green’s function for the problem. The adjoint problem to (4.2), (4.3) is the initial value problem

The solution to (4.5), (4.6) is given by the formula

For any \(t<T\) let \(Y_\varepsilon (s), \ s>t,\) be the solution to the initial value problem for the SDE

where \(B(\cdot )\) is Brownian motion. Then, \(G_\varepsilon (\cdot ,y,t,T)\) is the probability density for the random variable \(Y_\varepsilon (T)\). In the case when the function \(b(y,t)\) is linear in \(y\), it is easy to see that (4.8) can be explicitly solved. Thus, let \(A:\mathbf {R}\rightarrow \mathbf {R}\) be a continuous function and \(b:\mathbf {R}\times \mathbf {R}\rightarrow \mathbf {R}\) the function \(b(y,t)=A(t)y-1\). The solution to (4.8) is then given by

Hence, the random variable \(Y_\varepsilon (T)\) conditioned on \(Y_\varepsilon (0)=y\) is Gaussian with mean \(m_{1,A}(T)y-m_{2,A}(T)\) and variance \(\varepsilon \sigma _A^2(T)\), where \(m_{1,A},m_{2,A}\) are given by (2.2) and \(\sigma ^2_A\) by

The Green’s function \(G_\varepsilon (x,y,0,T)\) is therefore explicitly given by the formula

To obtain the formula (4.11), we have used the fact that the solution to the terminal value problem (4.2), (4.3) has a representation as an expectation value \(u_\varepsilon (y,t)=E[u_0(Y_\varepsilon (T)) \ | \ Y_\varepsilon (t)=y \ ]\), where \(Y_\varepsilon (\cdot )\) is the solution to the SDE (4.8). The solution to the initial value problem (4.5), (4.6) also has a representation as an expectation value in terms of the solution to the SDE

run backwards in time. Thus, in (4.12) \(B(s), \ s<T,\) is Brownian motion run backwards in time. The solution \(v_\varepsilon \) of (4.5), (4.6) has the representation

Next we consider the terminal value problem (4.2), (4.3) in the half-space \(y>0\) with Dirichlet boundary condition \(u_\varepsilon (0,t)=0, \ t<T\). In that case, the solution \(u_\varepsilon (y,t)\) has the representation

in terms of the Dirichlet Green’s function \(G_{\varepsilon ,D}\) for the half-space. Similarly, the solution to (4.5), (4.6) in the half-space \(x>0\) with Dirichlet condition \(v_\varepsilon (0,t)=0, \ t>0,\) has the representation

The function \(G_{\varepsilon ,D}(\cdot ,y,t,T)\) is the probability density of the random variable \(Y_\varepsilon (T)\) for solutions \(Y_\varepsilon (s), \ s>t,\) to (4.8) which have the property that \(\inf _{t\le s\le T} Y_\varepsilon (s)>0\). No explicit formula for \(G_{\varepsilon ,D}(x,y,0,T)\) in the case of linear \(b(y,t)=A(t)y-1\) is known except when \(A(\cdot )\equiv 0\). In that case, the method of images yields the formula

It follows from (4.11), (4.16) that

We may interpret the formula (4.17) in terms of conditional probability for solutions \(Y_\varepsilon (s), \ s\ge 0,\) of (4.8) with \(b(\cdot ,\cdot )\equiv -1\). Thus, we have that

We wish to generalize (4.18) to the case of linear \(b(y,t)=A(t)y-1\) in a way that is uniform as \(\varepsilon \rightarrow 0\). To see what conditions on the function \(A(\cdot )\) are needed, we consider for \(x,y\in \mathbf {R},t<T,\) the function \(q(x,y,t,T)\) defined by the variational formula

The Euler–Lagrange equation for the minimizing trajectory \(y(\cdot )\) of (4.19) is

and we need to solve (4.20) for the function \(y(\cdot )\) satisfying the boundary conditions \(y(t)=y, \ y(T)=x\). In the case \(b(y,t)=A(t)y-1\) Eq. (4.20) becomes

It is easy to solve (4.21) with the given boundary conditions explicitly. In fact, taking \(t=0\) we see from (4.20) that

where the constant \(C(x,y,T)\) is given by the formula

with \(m_{1,A}(T),m_{2,A}(T)\) as in (2.2) and \(\sigma _A^2(T)\) as in (4.10). It follows from (4.11), (4.19), (4.22), (4.23) that the Green’s function \(G_\varepsilon (x,y,0,T)\) is given by the formula

The minimizing trajectory \(y(\cdot )\) for (4.19) has probabilistic significance as well as the function q(x, y, t, T). One can easily see that for solutions \(Y_\varepsilon (s), \ 0\le s\le T,\) of (4.8), the random variable \(Y_\varepsilon (s)\) conditioned on \(Y_\varepsilon (0)=y, \ Y_\varepsilon (T)=x,\) is Gaussian with mean and variance given by

where the function \(\sigma _A^2(s,t)\) is defined by

Let \(m_{1,A}(s,t), \ m_{2,A}(s,t)\) be defined by

The minimizing trajectory \(y(\cdot )\) for the variational problem (4.19) is explicitly given by the formula

Now the process \(Y_\varepsilon (s), \ 0\le s\le T,\) conditioned on \(Y_\varepsilon (0)=y, \ Y_\varepsilon (T)=x,\) is in fact a Gaussian process with covariance independent of x, y,

where the symmetric function \(\Gamma _A:[0,T]\times [0,T]\rightarrow \mathbf {R}\) is given by the formula

The function \(\Gamma _A\) is the Dirichlet Green’s function for the operator on the LHS of (4.21). Thus, one has that

and \(\Gamma _A(0,s_2)=\Gamma _A(T,s_2)=0\) for all \(0<s_2<T\).

We can obtain a representation of the conditioned process \(Y_\varepsilon (\cdot )\) in terms of the white noise process, which is the derivative \(dB(\cdot )\) of Brownian motion, by obtaining a factorization of \(\Gamma _A\) corresponding to the factorization

To do this, we note that the boundary value problem

has a solution if and only if the function \(v:[0,T]\rightarrow \mathbf {R}\) satisfies the orthogonality condition

Hence, it follows from (4.33) that we can solve the boundary value problem

by first finding the solution \(v:[0,T]\rightarrow \mathbf {R}\) to

which satisfies the orthogonality condition (4.35). Then, we solve the differential equation in (4.34) subject to the condition \(u(0)=0\).

The solution to (4.35), (4.37) is given by an expression

where the kernel \(k:[0,T]\times [0,T]\rightarrow \mathbf {R}\) is defined by

If \(v:[0,T]\rightarrow \mathbf {R}\) satisfies the condition (4.35) then

is the solution to (4.34). It follows that the kernel \(\Gamma _A\) of (4.31) has the factorization \(\Gamma _A=KK^*\), and so the conditioned process \(Y_\varepsilon (\cdot )\) has the representation

where \(y(\cdot )\) is the function (4.29). In the case \(A(\cdot )\equiv 0\) Eq. (4.41) yields the familiar representation

for the Brownian bridge process.

We can obtain an alternative representation of the conditioned process \(Y_\varepsilon (\cdot )\) in terms of Brownian motion by considering a stochastic control problem. Let \(Y_\varepsilon (\cdot )\) be the solution to the stochastic differential equation

where \(\lambda _\varepsilon (\cdot ,s)\) is a non-anticipating function. We consider the problem of minimizing the cost function given by the formula

The minimum in (4.44) is to be taken over all non-anticipating \(\lambda _\varepsilon (\cdot ,s)\), \(t \le s < T\), which have the property that the solutions of (4.43) with initial condition \(Y_\varepsilon (t) = y\) satisfy the terminal condition \(Y_\varepsilon (T) = x\) with probability 1. Formally, the optimal controller \(\lambda ^*\) for the problem is given by the expression

Evidently, in the classical control case \(\varepsilon =0\) the solution to (4.43), (4.44) is the solution to the variational problem (4.19). If \(b(y,t)=A(t)y-1\) is a linear function of y, then one expects as in the case of LQ problems that the difference between the cost functions for the classical and stochastic control problems is independent of y. Therefore, from (4.11), (4.24) we expect that

It is easy to see that if we solve the SDE (4.43) with controller given by (4.46) and conditioned on \(Y_\varepsilon (t)=y\), then \(Y_\varepsilon (T)=x\) with probability 1 and in fact the process \(Y_\varepsilon (s), \ t\le s\le T,\) has the same distribution as the process \(Y_\varepsilon (s), \ t\le s\le T,\) satisfying the SDE (4.8) conditioned on \(Y_\varepsilon (t)=y, \ Y_\varepsilon (T)=x\). Thus, we have obtained the Markovian representation for the conditioned process of (4.8). Note, however, that the stochastic control problem with cost function (4.44) does not have a solution since the integral in (4.44) is logarithmically divergent at \(s=T\) for the process (4.43) with optimal controller (4.46).
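The logarithmic divergence can be illustrated in the special case \(A(\cdot )\equiv 0\), so that \(b\equiv -1\), with \(t=0\). The following is only a sketch under stated assumptions: it takes (4.43) to have the form \(dY_\varepsilon =\lambda _\varepsilon \,ds+\sqrt{\varepsilon }\,dB\) and the cost in (4.44) to be the expectation of \(\frac{1}{2}\int _0^T[\lambda _\varepsilon -b]^2\,ds\). Under these assumptions the conditioned process is a Brownian bridge with drift \(\lambda ^*(Y,s)=(x-Y)/(T-s)\), mean \(y+(x-y)s/T\) and variance \(\varepsilon s(T-s)/T\) at time s, whence

\[ E\big [(\lambda ^*(Y_\varepsilon (s),s)+1)^2\big ]=\Big (\frac{x-y+T}{T}\Big )^2+\frac{\varepsilon s}{T(T-s)}, \]

\[ \frac{1}{2}\int _0^{T-\delta }E\big [(\lambda ^*+1)^2\big ]\,ds=\frac{(x-y+T)^2(T-\delta )}{2T^2}+\frac{\varepsilon }{2}\Big [\log \frac{T}{\delta }-\frac{T-\delta }{T}\Big ]. \]

As \(\delta \rightarrow 0\), the first term converges to \((x-y+T)^2/2T\), which is the cost of the straight line trajectory in the classical problem, while the second term grows like \((\varepsilon /2)\log (1/\delta )\).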

Solving (4.43) with drift (4.46) and \(Y_\varepsilon (0)=y\), we see on taking \(t=0\) that (4.41) holds with kernel \(k:[0,T]\times [0,T]\rightarrow \mathbf {R}\) given by

Observe that the kernel (4.47) corresponds to the Cholesky factorization \(\Gamma _A=KK^*\) of the kernel \(\Gamma _A\) (Ciarlet 1989). In the case \(A(\cdot )\equiv 0\) Eq. (4.47) yields the Markovian representation

for the Brownian bridge process.
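A numerical check of the two factorizations in the case \(A(\cdot )\equiv 0\) may be helpful. The sketch below assumes that the Brownian bridge covariance (per unit \(\varepsilon \)) is \(\min (s_1,s_2)(T-\max (s_1,s_2))/T\), that the familiar representation corresponds to the kernel \(k(s,s')=1_{\{s'<s\}}-s/T\), and that the Markovian representation corresponds to \(k(s,s')=(T-s)/(T-s')\) for \(s'<s\) and zero otherwise. These are the textbook forms; the function names are hypothetical and the kernels are not copied from the displays of this section.

```python
def gamma_bridge(s1, s2, T):
    """Assumed Brownian bridge covariance on [0, T], per unit epsilon."""
    lo, hi = min(s1, s2), max(s1, s2)
    return lo * (T - hi) / T

def k_standard(s, sp, T):
    """Kernel of B(s) - (s/T) B(T) written as an integral against dB(s')."""
    return (1.0 if sp < s else 0.0) - s / T

def k_markov(s, sp, T):
    """Lower-triangular (non-anticipating) kernel (T - s)/(T - s') for s' < s."""
    return (T - s) / (T - sp) if sp < s else 0.0

def kk_star(k, s1, s2, T, n=1000):
    """Midpoint-rule approximation of the integral of k(s1,s') k(s2,s') over [0, T]."""
    h = T / n
    return h * sum(k(s1, (j + 0.5) * h, T) * k(s2, (j + 0.5) * h, T)
                   for j in range(n))

T, s1, s2 = 1.0, 0.3, 0.7
target = gamma_bridge(s1, s2, T)
err_standard = abs(kk_star(k_standard, s1, s2, T) - target)
err_markov = abs(kk_star(k_markov, s1, s2, T) - target)
```

Both kernels reproduce the same covariance up to quadrature error; only the second vanishes for \(s'>s\), which is the lower-triangular structure that makes it the analogue of a Cholesky factor.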

We can also express the ratio (4.17) of Green’s functions for the linear case \(b(y,t)=A(t)y-1\) in terms of the solution to a PDE. Thus, we assume \(x>0\) and define

where \(Y_\varepsilon (\cdot )\) is the solution to the SDE (4.43) with drift (4.46). Then, \(u(y,t)\) is the solution to the PDE

with boundary and terminal conditions given by

In the case \(A(\cdot )\equiv 0\) the PDE (4.50) becomes

Evidently, the function u defined by

is the solution to (4.51), (4.52). Observe that the RHS of (4.53) at \(t=0\) is the same as the RHS of (4.17).
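The method of images computation for \(A(\cdot )\equiv 0\) can be checked numerically. The sketch below assumes that (4.8) with \(b\equiv -1\) reads \(dY_\varepsilon =-ds+\sqrt{\varepsilon }\,dB\), so that the full-space Green's function is Gaussian with mean \(y-T\) and variance \(\varepsilon T\), and that the image term is \(e^{2y/\varepsilon }G_\varepsilon (x,-y,0,T)\). Under these assumptions the ratio of the Dirichlet to the full-space Green's function is \(1-\exp [-2xy/\varepsilon T]\). The function names are hypothetical.

```python
import math

def green_full(x, y, T, eps):
    """Density at x of Y(T) given Y(0)=y for dY = -dt + sqrt(eps) dB (assumed form)."""
    return (math.exp(-(x - y + T) ** 2 / (2 * eps * T))
            / math.sqrt(2 * math.pi * eps * T))

def green_dirichlet(x, y, T, eps):
    """Method-of-images density for paths that stay positive on [0, T]."""
    image = math.exp(2 * y / eps) * green_full(x, -y, T, eps)
    return green_full(x, y, T, eps) - image

def ratio_closed_form(x, y, T, eps):
    """Closed form of green_dirichlet / green_full under the same assumptions."""
    return 1.0 - math.exp(-2 * x * y / (eps * T))

x, y, T, eps = 0.3, 1.0, 2.0, 0.5
ratio_numeric = green_dirichlet(x, y, T, eps) / green_full(x, y, T, eps)
boundary_value = green_dirichlet(0.0, y, T, eps)
```

The image weight \(e^{2y/\varepsilon }\) is chosen so that the Dirichlet density vanishes at \(x=0\); for very small \(\varepsilon \) the exponential overflows in floating point, so the closed form should be used there instead.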

5 Estimates on the Dirichlet Green’s Function

In this section, we shall obtain estimates on the ratio of the Dirichlet to the full-space Green’s function in the case of linear drift \(b(y,t)=A(t)y-1\). In particular, we shall prove a limit theorem which generalizes the formula (4.17):

Proposition 5.1

Assume \(b(y,t)=A(t)y-1\) where (4.1) holds and the function \(A(\cdot )\) is nonnegative. Then, for \(\lambda ,y, T>0\) the ratio of the Dirichlet to full-space Green’s function satisfies the limit

where \(m_{1,A}(T),m_{2,A}(T)\) are given by (2.2) and \(\sigma _A^2(T)\) by (4.10).
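For concreteness, the three quantities entering the proposition can be evaluated by quadrature. The sketch below assumes the formulas obtained by solving the linear SDE \(dY_\varepsilon =[A(s)Y_\varepsilon -1]\,ds+\sqrt{\varepsilon }\,dB\) explicitly, namely \(m_{1,A}(T)=\exp [\int _0^TA]\), \(m_{2,A}(T)=\int _0^T\exp [\int _s^TA]\,ds\) and \(\sigma _A^2(T)=\int _0^T\exp [2\int _s^TA]\,ds\). The function name `moments` and the grid size are hypothetical choices, and the formulas should be compared against (2.2) and (4.10) before relying on them.

```python
import math

def moments(A, T, n=2000):
    """Trapezoid-rule values of (m1, m2, sigma2) for a drift coefficient A on [0, T]."""
    h = T / n
    a = [A(i * h) for i in range(n + 1)]
    # cum[i] approximates the integral of A over [0, s_i]
    cum = [0.0]
    for i in range(n):
        cum.append(cum[-1] + 0.5 * h * (a[i] + a[i + 1]))
    total = cum[-1]
    # w[i] approximates exp of the integral of A over [s_i, T]
    w = [math.exp(total - c) for c in cum]

    def trap(f):
        return h * (sum(f) - 0.5 * (f[0] + f[-1]))

    m1 = math.exp(total)
    m2 = trap(w)
    sigma2 = trap([wi * wi for wi in w])
    return m1, m2, sigma2

# Constant A = 1/2 on [0, 2], where closed forms are available for comparison.
m1, m2, sigma2 = moments(lambda s: 0.5, 2.0)
```

For nonnegative \(A(\cdot )\) the weights `w` are at least 1, so their squares dominate them and the computed `m2` never exceeds `sigma2`.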

Note that since we are assuming \(A(\cdot )\) is nonnegative in the statement of the proposition, it follows from (4.10) that \(m_{2,A}(T)/\sigma _A^2(T)\le 1\). Hence, the RHS of (5.1) always lies between 0 and 1. We can see why (5.1) holds from the representation (4.39), (4.41) for the conditioned process \(Y_\varepsilon (s), \ 0\le s\le T\). Thus, we have that

Since \(\sigma _A^2(s,T)=O(T-s)\), the conditioned process \(Y_\varepsilon (s)\) close to \(s=T\) is approximately the same as

Observe now from (4.29) that

Hence, for s close to T the process \(Y_\varepsilon (s), \ s<T,\) is approximately Brownian motion with a constant drift. Thus, let \(Z_\varepsilon (t), \ t>0,\) be the solution to the initial value problem for the SDE

where we assume the drift \(\mu \) is positive. Then, from (5.3), (5.4) we see that \(Y_\varepsilon (T-t)\simeq Z_\varepsilon (t)\) if \(\mu \) is given by the formula

Observe now that \(P(\inf _{t>0} Z_\varepsilon (t)<0)=e^{-2\lambda \mu }\), whence the RHS of (5.1) is simply \(P(\inf _{t>0} Z_\varepsilon (t)>0)\) when \(\mu \) is given by (5.6). Since the time for which \(Z_\varepsilon (t)\) is likely to become negative is \(t\simeq O(\varepsilon )\), the approximations above are justified and so we obtain (5.1).
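The hitting probability used above follows from a standard computation, sketched here under the assumption that (5.5) has the form \(dZ_\varepsilon =\mu \,dt+\sqrt{\varepsilon }\,dB\) with \(Z_\varepsilon (0)=\lambda \varepsilon \). The function \(h(z)=P(\inf _{t>0}Z_\varepsilon (t)<0 \ | \ Z_\varepsilon (0)=z)\) then satisfies

\[ \frac{\varepsilon }{2}h''(z)+\mu h'(z)=0, \ z>0, \qquad h(0)=1, \quad \lim _{z\rightarrow \infty }h(z)=0, \]

so that \(h(z)=e^{-2\mu z/\varepsilon }\) and \(h(\lambda \varepsilon )=e^{-2\lambda \mu }\), independent of \(\varepsilon \).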

Proof of Proposition 5.1

Let \(Y_\varepsilon (s), \ 0\le s\le T,\) be given by (5.2) where \(y(T)=\lambda \varepsilon \). Then, we have that for \(0<a\varepsilon \le T\),

where \(Z_\varepsilon (\cdot )\) is the solution to (5.5) with \(\mu \) given by (5.6) and \(\tilde{Z}_\varepsilon (\cdot )\) is given by the formula

We use the inequality