Abstract

Objectives

Quantifying radiation burden is essential for the justification, optimization, and personalization of CT procedures. Radiation burden can be characterized by a variety of risk surrogates, each reflecting radiological risk differently. This study compared how twelve such metrics characterize risk across patient populations.

Methods

This study included 1394 CT examinations (abdominopelvic and chest). Organ doses were calculated using Monte Carlo methods. The following risk surrogates were considered: volume computed tomography dose index (CTDIvol), dose-length product (DLP), size-specific dose estimate (SSDE), DLP-based effective dose (EDk), dose to a defining organ (ODD), effective dose and risk index based on organ doses (EDOD, RI), and risk index for a 20-year-old patient (RIrp). ODD, EDOD, and RI were also calculated for a reference ICRP-110 model (ODD,0, ED0, and RI0). Lastly, motivated by the ICRP, an adjusted effective dose was calculated as \( ED_r = \frac{RI}{RI_{rp}} \times ED_{OD} \). A linear regression was applied to assess each metric’s dependency on RI. The results were characterized in terms of a risk sensitivity index (RSI) and a risk differentiability index (RDI).

Results

The analysis revealed significant differences between the metrics, with EDr showing the best concordance with RI in terms of RSI and RDI. Across all metrics and protocols, RSI ranged between 0.37 (SSDE) and 1.29 (RI0); RDI ranged between 0.39 (EDk) and 0.01 (EDr) cancers per 1000 patients per 100 mGy.

Conclusion

Different risk surrogates lead to different population risk characterizations. EDr closely characterized population risk and also showed the best differentiability. Care should be exercised in drawing risk predictions from unrepresentative risk metrics applied to a population.

Key Points

• Radiation risk characterization in CT populations is strongly affected by the surrogate used to describe it.

• Different risk surrogates can lead to different characterization of population risk.

• Healthcare professionals should exercise care in ascribing an implicit risk to factors that do not closely reflect risk.


Introduction

Medical imaging has become essential in modern medicine. As with every medical procedure, diagnostic imaging carries a certain degree of risk, partly due to the use of ionizing radiation. Computed tomography (CT) is currently one of the modalities with the highest associated radiation burden to patients, as over 50% of the total medical radiation exposure is related to the use of CT [1, 2]. To ensure effective, patient-centric justification and optimization of radiological procedures, it is critical to accurately ascertain the radiation burden of CT procedures with quantitative metrics [3]. In particular, CT exams can be personalized by tailoring the radiation dose to the clinical indication and patient size, which requires accurate and reliable risk estimation [4].

The exact radiation risk of an individual exam is nearly impossible to ascertain, as many radiation risk factors are unknown and isolating radiation risks from other risks to the patient is methodologically unattainable. However, the need for a risk metric has motivated the use of various quantities as metrics of radiation risk, either intentionally or by implication [5]. For instance, in the absence of better or more convenient alternatives, dose descriptors such as volume computed tomography dose index (CTDIvol) and dose-length product (DLP), and quantities such as size-specific dose estimate (SSDE) [6,7,8,9], which technically describe only the radiation input to the patient, have been treated as risk metrics. Likewise, effective dose (ED), which is in fact a radiation protection quantity and does not account for age and sex, has been used as a surrogate of patient detriment. Because sex, age, and tissue characteristics are known to affect radiation risk [3], risk index (RI) has been proposed as a surrogate for actual patient risk [5, 10]. However, there is currently no system to compute RI for clinical cases, because doing so is labor-intensive and computationally expensive [11]. Given the diversity of these quantities, it is unclear how the particular choice of surrogate might influence the analysis of risk estimates across a patient population.

The purpose of this study was to compare how different radiation risk surrogates characterize radiation burden across a clinical dataset. This study considered twelve radiation risk surrogates that have been proposed or are effectively in use, each characterizing certain attributes of radiation burden, many based on implied assumptions. A commercial dose monitoring system provided scanner output data and patient data. A Monte Carlo–based approach was used to calculate organ doses. All metrics were compared with RI, which was assumed to be the closest surrogate to actual patient risk.

Materials and methods

This study was performed in compliance with the Health Insurance Portability and Accountability Act (HIPAA) and institutional review board (IRB) requirements. It included 1394 CT examinations performed at Duke University Hospital on adult patients between January 2017 and January 2019 using one scanner model (Discovery CT750HD, GE Healthcare) and two clinical protocols: abdominopelvic CT with contrast media administration (A&P) and chest CT without contrast media administration (chest). Only diagnostic series were considered. Localizer, contrast-enhanced monitoring, contrast-enhanced premonitoring, and reformatted series were excluded by filtering out data whose series descriptions corresponded to these types of scans. A dose monitoring system (DoseWatch, GE Healthcare) supplied acquisition parameters, patient effective diameter (PD) [6], patient water equivalent diameter (WED) [7], and image data. PD and WED were measured on the central image of the scanned range [7]. Scanning parameters, protocol names, patient ages, patient effective diameters, and patient water equivalent diameters are summarized in Tables 1 and 2.
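The series-description filter can be sketched as a keyword match. This is a minimal illustration, assuming each exported series record carries a free-text `description` field. The patterns, the helper names `is_diagnostic_series` and `filter_series`, and the example records are hypothetical, because the study does not report the actual descriptions it filtered on.

```python
import re

# Hypothetical exclusion patterns: the actual series descriptions used in the
# study are not reported, so these keywords are illustrative only.
EXCLUDE_PATTERNS = [
    r"\bscout\b",          # localizer radiographs
    r"\blocali[sz]er\b",   # localizer radiographs
    r"\bpre-?monitor",     # contrast-enhanced premonitoring series
    r"\bmonitor",          # contrast-enhanced (bolus) monitoring series
    r"\breformat",         # reformatted series
    r"\bmpr\b",            # multiplanar reformats
]
_EXCLUDE_RE = re.compile("|".join(EXCLUDE_PATTERNS), re.IGNORECASE)


def is_diagnostic_series(description: str) -> bool:
    """True if the series description matches none of the exclusion patterns."""
    return _EXCLUDE_RE.search(description) is None


def filter_series(series: list) -> list:
    """Keep only series records whose 'description' field looks diagnostic."""
    return [s for s in series if is_diagnostic_series(s["description"])]


# Usage with hypothetical records exported from a dose monitoring system
records = [
    {"description": "Scout"},
    {"description": "Bolus Monitoring"},
    {"description": "Premonitoring"},
    {"description": "Chest 1.25mm"},
    {"description": "Coronal Reformat"},
    {"description": "Abd Pel Portal Venous"},
]
kept = filter_series(records)
```

Keyword filtering is brittle because series naming varies between sites and protocol revisions. Any real pattern list would need to be checked against the descriptions actually present in the exported data.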

Risk metric calculations and uncertainties

Patient-specific organ doses (OD) were estimated in each CT study using patient-informed approaches validated in previous studies [11,12,13]. In summary, each patient was matched to a virtual human model from the XCAT phantom library (18–78 years old, 52–117 kg, 23/35 M/F). The matching was based on patient diameter and patient lung (chest) or on patient diameter and abdomen height (A&P), to find the phantom with the closest organ distribution [11]. Organ doses for each patient were then calculated and used to determine the associated radiation risk surrogates. Doses were calculated for 25 organs in male and 26 organs in female patients. Organ dose uncertainties were calculated by comparing two doses: the organ doses estimated for a phantom with the proposed method through its matched phantom, and the doses of the original (ground-truth) phantom calculated with the Monte Carlo method [11, 12, 14]. In particular, a leave-one-phantom-out strategy was implemented: each phantom in turn, referred to as the ground truth, was matched to another phantom in the cohort, and the ground-truth phantom dose was calculated using Monte Carlo methods.
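The matching and leave-one-phantom-out steps can be sketched as a nearest-neighbour search. This is a rough illustration under stated assumptions, not the validated method of [11]. The features are assumed to be a diameter plus a height, scaled by their standard deviation across the library; this weighting is an assumption. As a further simplification, the estimated dose is taken directly as the matched phantom's Monte Carlo dose, whereas the actual method also accounts for the scan parameters. All numbers are hypothetical.

```python
import numpy as np


def match_phantom(patient_features, phantom_features):
    """Index of the phantom closest to the patient in standardized feature space.

    Assumed features: [effective diameter, lung or abdomen height]. Each feature
    is divided by its standard deviation across the library (an assumption).
    """
    phantom_features = np.asarray(phantom_features, dtype=float)
    scale = phantom_features.std(axis=0)
    scale[scale == 0] = 1.0  # avoid division by zero for constant features
    diff = (phantom_features - np.asarray(patient_features, dtype=float)) / scale
    return int(np.argmin(np.linalg.norm(diff, axis=1)))


def leave_one_out_errors(phantom_features, phantom_doses):
    """Relative organ-dose error when each phantom is estimated from its best match.

    phantom_doses: (n_phantoms, n_organs) Monte Carlo organ doses (ground truth).
    Simplification: the estimate is the matched phantom's dose itself.
    """
    feats = np.asarray(phantom_features, dtype=float)
    doses = np.asarray(phantom_doses, dtype=float)
    errors = []
    for i in range(len(feats)):
        others = np.delete(np.arange(len(feats)), i)   # leave phantom i out
        j = others[match_phantom(feats[i], feats[others])]
        errors.append(np.abs(doses[j] - doses[i]) / doses[i])
    return np.array(errors)  # shape (n_phantoms, n_organs)


feats = [[25.0, 30.0], [26.0, 31.0], [35.0, 40.0]]   # hypothetical [diameter, height] in cm
doses = [[10.0, 20.0], [11.0, 22.0], [15.0, 30.0]]   # hypothetical organ doses in mGy
errs = leave_one_out_errors(feats, doses)
mean_rel_error_per_organ = errs.mean(axis=0)
```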

Table 3 summarizes the twelve risk metrics involved in the study. CTDIvol, SSDE, and DLP are X-ray output metrics provided by the scanners, following the definitions described in the AAPM TG 204 and AAPM TG 96 reports [6, 8], and tracked by the dose monitoring system. Defining organ dose (ODD) is the dose to an organ that is sensitive enough to serve as the primary indicator of radiation burden for a specific CT protocol and anatomical region. ODD was defined as the dose to the stomach for the A&P exams and to the lungs for the chest exams [3, 5] (for other CT protocols and anatomical regions, different organs can be selected). DLP-based effective dose (EDk) is the effective dose calculated by multiplying the DLP of the scanned anatomical region by the conversion factors (k) provided for adult patients in Table A.2 of ICRP Publication 102 [9]. The dose monitoring system provides the lengths of all scanned anatomical regions, even partially exposed ones. We therefore applied the specific k factor for each region in the scanned area: \( ED_k = \sum_r DLP_r\, k_r \). Here \( DLP_r \) is the DLP for region r (e.g., chest, neck, abdomen-pelvis) and \( k_r \) is the conversion factor for region r (e.g., \( k_{chest} = 0.014 \) mSv·mGy⁻¹·cm⁻¹) [11]. Organ dose–based effective dose (EDOD) is the effective dose calculated from the patient-specific organ doses of the actual patient, following the ICRP Publication 103 definition [3]: \( ED_{OD} = \sum_T w_T\, OD_T \). Here \( OD_T \) is the equivalent dose to organ T and \( w_T \) is the tissue-specific weighting factor. EDOD incorporates organ sensitivities. Risk index (RI) is the radiation risk index calculated from the actual patient-specific organ doses: \( RI = \sum_T r_T\, OD_T \). Here \( OD_T \) is the equivalent dose to organ T and \( r_T \) is the sex-, age-, and tissue-specific lifetime attributable risk of cancer incidence in the US population reported in BEIR VII [10, 15]. RI incorporates organ-specific, patient age, and patient sex sensitivities.
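The three summations above share one structure, a coefficient-weighted sum, and can be sketched directly. In this sketch, \( k_{chest} = 0.014 \) comes from the text, and the weighting factors are a subset of the ICRP Publication 103 values. The abdomen-pelvis k factor is an illustrative placeholder, and the risk coefficients and organ doses are hypothetical stand-ins, not values from ICRP 102 or BEIR VII.

```python
# k_r in mSv/(mGy*cm). Only k_chest = 0.014 is quoted in the text; the
# abdomen-pelvis value is an illustrative placeholder, not taken from ICRP 102.
K_FACTORS = {"chest": 0.014, "abdomen-pelvis": 0.015}

# ICRP Publication 103 tissue weighting factors w_T (a subset only; the full
# set, including the remainder tissues, sums to 1).
W_T = {
    "lung": 0.12, "stomach": 0.12, "colon": 0.12, "breast": 0.12,
    "red_marrow": 0.12, "liver": 0.04, "thyroid": 0.04,
    "bladder": 0.04, "oesophagus": 0.04,
}

# HYPOTHETICAL risk coefficients r_T for one age/sex group; real values would
# come from the BEIR VII tables and depend on patient age and sex.
R_T_HYPOTHETICAL = {"lung": 0.9, "stomach": 0.3, "colon": 0.5, "liver": 0.1}


def ed_k(dlp_by_region: dict, k_factors: dict = K_FACTORS) -> float:
    """ED_k = sum_r DLP_r * k_r over all scanned regions (DLP in mGy*cm)."""
    return sum(dlp * k_factors[region] for region, dlp in dlp_by_region.items())


def weighted_organ_sum(organ_doses: dict, coefficients: dict) -> float:
    """sum_T c_T * OD_T; coefficients for organs without a dose are skipped."""
    return sum(c * organ_doses[t] for t, c in coefficients.items() if t in organ_doses)


organ_doses = {"lung": 10.0, "stomach": 5.0, "colon": 2.0, "liver": 6.0}  # mGy, hypothetical
ed_od = weighted_organ_sum(organ_doses, W_T)             # ED_OD
ri = weighted_organ_sum(organ_doses, R_T_HYPOTHETICAL)   # RI
```

The same helper serves both EDOD and RI. The two metrics differ only in their coefficients: \( w_T \) is fixed, while \( r_T \) changes with patient age and sex.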
Risk index for a reference patient (RIrp) is the RI calculated for a reference 20-year-old patient undergoing the same exam [10]. Reference organ dose (ODD,0), reference effective dose (ED0), and reference risk index (RI0) are reference values of the associated metrics. They are obtained by rescaling, with the CTDIvol as the rescaling factor, the organ doses computed for a reference phantom undergoing a standard exam on a commercial scanner (CTDIvol = 6.01 mGy), as described by Zhang et al [16]. Zhang et al calculated organ doses for the reference male and female phantoms described in ICRP Publication 110 [17]. Finally, the relative effective dose (EDr) is the effective dose obtained by adjusting EDOD by a factor \( f = \frac{RI}{RI_{rp}} \) that accounts for patient age and sex; thus, \( ED_r = f \times ED_{OD} \). This metric was motivated by the ICRP’s latest development suggesting that effective dose may be adjusted to incorporate the influence of other contributing factors to risk [18].
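The adjustment and the rescaling can be written in a few lines. The sketch below implements \( ED_r = (RI/RI_{rp}) \times ED_{OD} \) as defined above. It assumes the CTDIvol rescaling is linear, with the patient's CTDIvol divided by the reference 6.01 mGy; the text names the rescaling factor but not its exact form, so this is an assumption. The function names are hypothetical.

```python
REFERENCE_CTDIVOL_MGY = 6.01  # CTDIvol of the standard exam used for the reference phantoms


def relative_effective_dose(ed_od: float, ri: float, ri_rp: float) -> float:
    """ED_r = f * ED_OD with f = RI / RI_rp (age- and sex-adjustment factor)."""
    if ri_rp <= 0:
        raise ValueError("RI_rp must be positive")
    return (ri / ri_rp) * ed_od


def rescale_reference(reference_value: float, patient_ctdivol: float,
                      reference_ctdivol: float = REFERENCE_CTDIVOL_MGY) -> float:
    """Scale a reference-phantom quantity (OD_D,0, ED_0 or RI_0) linearly by CTDIvol (assumed)."""
    return reference_value * patient_ctdivol / reference_ctdivol


ed_r = relative_effective_dose(ed_od=10.0, ri=3.0, ri_rp=2.0)   # hypothetical inputs
ed_0 = rescale_reference(2.0, patient_ctdivol=12.02)            # hypothetical inputs
```

When f > 1, the patient's risk per unit dose is higher than that of the 20-year-old reference, so EDr exceeds EDOD.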

Technically speaking, not all these metrics are metrics of risk, but they have all been used that way. Some are used as implied indices of risk (CTDIvol, SSDE, DLP), some directly influence risk (the single-organ dose quantities ODD and ODD,0), some reflect different ways of computing effective dose (EDk, EDOD, ED0, EDr), and some aim to model individual risk more directly (RI, RIrp, RI0).

Uncertainties associated with all the risk metrics were calculated in terms of relative error averaged across all patients and clinical protocols. CTDIvol was reported by the CT scanner. Its associated relative uncertainty is the tolerance that triggers corrective action in our institution's quality assurance program, which follows the American College of Radiology Computed Tomography Quality Control Manual. The same relative uncertainty is associated with the other CTDIvol-derived dose descriptors, DLP and SSDE, as well as with EDk. Relative errors for the other metrics were calculated by propagating the organ dose errors.
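The text does not specify how the organ dose errors were propagated. One common choice for a weighted sum \( Q = \sum_T c_T\, OD_T \) (EDOD, RI) is to add independent errors in quadrature. The sketch below assumes exactly that. If the organ errors were fully correlated, they would add linearly instead, giving a larger result. The function name is hypothetical.

```python
import math


def propagated_relative_error(organ_doses: dict, rel_errors: dict, coefficients: dict) -> float:
    """Relative uncertainty of Q = sum_T c_T * OD_T, assuming independent organ errors.

    sigma_Q = sqrt(sum_T (c_T * e_T * OD_T)^2), where e_T is the relative error of OD_T.
    """
    q = sum(coefficients[t] * organ_doses[t] for t in coefficients)
    sigma = math.sqrt(sum((coefficients[t] * rel_errors[t] * organ_doses[t]) ** 2
                          for t in coefficients))
    return sigma / q


# Two equally weighted, equally uncertain organs: the relative error shrinks by sqrt(2)
rel = propagated_relative_error({"lung": 10.0, "stomach": 10.0},
                                {"lung": 0.1, "stomach": 0.1},
                                {"lung": 0.12, "stomach": 0.12})
```

Per-patient values computed this way would then be averaged across patients and protocols, as described above.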

Risk metric comparisons

A linear regression was applied to assess each metric’s dependency on RI. RI was assumed to be the closest patient risk surrogate because its calculation incorporates granular information about the imaging procedure, as well as the patient’s age, sex, and radiosensitivity [5, 10, 19]. For each fit, the slope (S) was normalized by the ratio of the mean value of RI to that of the metric, yielding a risk sensitivity index (RSI) that represents the relative sensitivity of the metric to actual risk. The closer the RSI is to 1, the better the metric. The root mean square error (RMSE) of the fit was further divided by the fit slope (S) to compute a risk differentiability index (\( RDI = \frac{RMSE}{S} \)). RDI represents how well a particular risk surrogate can characterize differences in radiation risk across patients and procedures. The lower the RDI, the better the differentiability of the metric. Together, RSI and RDI indicate how well a radiation risk metric characterizes radiation burden across clinical CT examinations.
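A minimal sketch of the two indices follows, reading the normalization as \( RSI = S\,\overline{RI}/\overline{M} \). Each metric M is regressed on RI, with RI on the x-axis as in the figures. This reading makes RSI dimensionless and equal to 1 for any metric exactly proportional to RI, but it is our interpretation, not the authors' code. The RMSE here uses the plain mean of squared residuals, without a degrees-of-freedom correction; this is also an assumption.

```python
import numpy as np


def risk_indices(ri, metric):
    """Fit metric = S * RI + b and return (S, RSI, RDI, R2).

    RSI = S * mean(RI) / mean(metric)   (assumed reading of the normalization)
    RDI = RMSE / S                      (expressed in RI units)
    """
    ri = np.asarray(ri, dtype=float)
    metric = np.asarray(metric, dtype=float)
    slope, intercept = np.polyfit(ri, metric, 1)
    residuals = metric - (slope * ri + intercept)
    rmse = np.sqrt(np.mean(residuals ** 2))
    rsi = slope * ri.mean() / metric.mean()
    rdi = rmse / slope
    r2 = 1.0 - np.sum(residuals ** 2) / np.sum((metric - metric.mean()) ** 2)
    return slope, rsi, rdi, r2


ri = np.array([1.0, 2.0, 3.0, 4.0])          # hypothetical RI values
proportional = risk_indices(ri, 2.0 * ri)    # metric proportional to RI
offset = risk_indices(ri, ri + 5.0)          # metric with a large risk-independent offset
```

The second example shows why RSI can fall below 1 even when R² is perfect. A large risk-independent offset makes the metric change proportionally less than risk does.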

Results

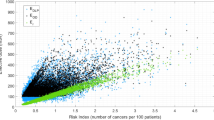

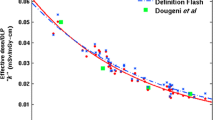

The mean, median, interquartile range (first and third quartiles), minimum, and maximum of each metric are reported in Table 4, together with the corresponding standard deviations, coefficients of variation, and relative errors. Across all metrics and protocols, RSI ranged between 0.37 (SSDE) and 1.29 (RI0); RDI ranged between 0.39 (EDk) and 0.01 (EDr) cancers per 1000 patients per 100 mGy. The fit R², RSI, and RDI values are shown in Table 5. The analysis revealed significant differences between the metrics. EDr showed the best concordance with RI in terms of R², RSI, and RDI. Figures 1 and 2 show the patient data and the linear regression of each metric to RI for the chest and A&P protocols, respectively. A significance test was performed for the null hypothesis that there is no relationship between each metric and the risk index. The resulting p values were < 0.01 in each case, and the reported correlations are considered significant.
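The significance test and one of the Table 4 statistics can be sketched with standard SciPy/NumPy calls. `scipy.stats.linregress` reports a two-sided p value for the null hypothesis that the slope is zero. Whether the study used this exact test, and whether its coefficient of variation used the sample standard deviation (ddof=1), are assumptions. The data below are synthetic.

```python
import numpy as np
from scipy import stats


def slope_significance(ri, metric, alpha=0.01):
    """Two-sided test of H0: slope = 0 for metric regressed on RI.

    Returns (p_value, is_significant) from scipy.stats.linregress.
    """
    result = stats.linregress(ri, metric)
    return float(result.pvalue), bool(result.pvalue < alpha)


def coefficient_of_variation(x):
    """Sample standard deviation (ddof=1, an assumption) divided by the mean."""
    x = np.asarray(x, dtype=float)
    return float(np.std(x, ddof=1) / np.mean(x))


rng = np.random.default_rng(0)
ri_values = np.linspace(1.0, 10.0, 50)                          # synthetic RI values
metric_values = 3.0 * ri_values + rng.normal(0.0, 0.5, 50)      # synthetic noisy metric
p_value, significant = slope_significance(ri_values, metric_values)
```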

Fig. 1 Linear regression of each metric to RI for the chest protocol. Y-axis units are reported in Table 3. The RI unit (x-axis) is the number of cancers per 10^5 patients per 100 mGy.

Fig. 2 Linear regression of each metric to RI for the A&P protocol. Y-axis units are reported in Table 3. The RI unit (x-axis) is the number of cancers per 10^5 patients per 100 mGy.

Discussion

This study explored how twelve different radiation risk surrogates characterize radiation burden across two types of clinical CT examination. The data clearly showed that different risk metrics can lead to different characterizations of population risk. RI is the metric most reflective of the specifics of an individual patient in terms of size, organ distribution, age, sex, and protocol factors. It was therefore taken to be the closest surrogate of actual patient risk and chosen as the reference against which the other radiation burden indexes were compared. All metrics, except for EDr and RI0, showed poor risk differentiability, with RDI greater than or equal to 0.3 cancers per 1000 patients per 100 mGy. Similar results were noted in terms of risk sensitivity (RSI) and goodness of fit (R²). Some metrics showed similar values of RDI, RSI, and R². Even so, care should be exercised in drawing risk predictions from unrepresentative risk metrics applied to a population, because risk overestimation or underestimation can negatively affect the justification and optimization of CT procedures.

Despite the critical role of radiation burden in radiology, harmonization of metrics is far from being achieved. Instead, procedure risk is often assessed using different metrics indiscriminately, with each radiation index carrying a different sensitivity to population risk. Even burden depictions based on metrics that describe the device output, i.e., CTDIvol and SSDE, cannot be easily compared with each other, as the International Commission on Radiological Protection has recently stated [20]. In its recommendations for the appropriate use of radiation metrics in the definition of diagnostic reference levels (DRL), the Commission highlighted that “SSDE is not considered to be an appropriate quantity to adopt as a DRL at this time, as it is not as widely used as CTDIvol,” and that “the recommended DRL quantities for CT are CTDIvol and DLP” [20]. However, DRLs directly aim to manage optimization and, with it, the radiation risk associated with imaging. Our study shows that neither CTDIvol, DLP, nor SSDE (as used by some for DRLs [21]) is an adequate surrogate of risk. Furthermore, although SSDE incorporates patient size information, it showed a poor relative sensitivity to actual risk. Applying patient body habitus conversion factors to calculate SSDE results in a loss of relative sensitivity to actual patient risk, namely RI, which is calculated considering organ-specific, patient age, and patient sex sensitivities.

The conflicting depictions of different risk surrogates further complicate CT scanner dose-reduction claims, such as those associated with automated tube current modulation (ATCM) techniques. Dose-saving claims are often expressed in terms of CTDIvol or DLP reduction (namely, X-ray flux reduction). Neither quantity correlates closely with radiation risk that accounts for specific organ sensitivities and for patient age and sex. Furthermore, such techniques affect different metrics differently. For example, they change the established equivalency between the protection quantities EDk and EDOD [11] and introduce variability in radiation output [22,23,24]. AAPM Task Group 23, in Report no. 96, compared the effective dose predicted from DLP (EDk) with the effective dose calculated by more rigorous methods, such as EDOD in this study. It found that the two “are remarkably consistent, with a maximum deviation from the mean of approximately 10% to 15%” [8, 25]. However, this comparison did not take into account the effect of ATCM techniques, with which the difference between DLP-based and organ dose–based effective dose can be as high as 230% [11]. EDk and EDOD are not the same and should not be treated as equivalent.

The use of the protection quantity effective dose as a risk metric is a recurring topic of discussion in the scientific community. The limits of such a radiation index are well known. For instance, effective dose was not originally defined for the estimation of individual risk and does not consider age and sex. However, it allows different modalities and procedures to be compared in terms of radiation burden. In this context, ICRP Task Group 79 is working on a report to provide guidance on when the quantity effective dose can be used [18], stating that “the use of effective dose for patient exposures is problematic particularly when it is used to assess risk in specific individuals, including children. However, effective dose may be a useful tool for comparisons of, for example, different diagnostic examinations and interventional procedures, the use of different technologies for the same medical examinations, and the use of similar technologies and procedures in different hospitals and countries.” The relative effective dose (EDr) introduced in this study showed the best agreement with the risk characterization obtained using the risk index, namely the metric closest to actual patient risk. EDr can offer a new approach to using effective dose as a risk quantity. It extends the sensitivity of effective dose to age and sex while maintaining the established prevalence and usefulness of the concept.

This study was limited to two clinical protocols and one scanner model. Future studies can investigate the described comparisons for a wider range of clinical operations and scanner models. Also, the patient cohorts included only adult subjects; the methodology can be extended to pediatric practice. Furthermore, for each metric the data distribution is not uniform, with only a few cases (Figs. 1 and 2) showing high y-axis values. Such outliers can affect the linear regression results; in this study, no values were excluded. Moreover, as in all dosimetric studies of real CT populations, the scanning range can affect the estimation of dose quantities and introduce a systematic source of variability. In this study, we used a previously validated approach that considered the actual scanned area to calculate doses to 25 organs in male and 26 organs in female patients [11, 12]. The presented data can enable the systematic study of dose and patient size to improve consistency and reduce variability in clinical operations [23]. Lastly, the characterization of risk is a critical aspect of justification and optimization and is the focus of the present study. A comprehensive evaluation of a radiological procedure, however, should also consider its benefits [5, 22]. Automated techniques that measure CT image quality in vivo and across patient populations will enable radiation burden and image quality to be assessed simultaneously. The two can then be balanced to achieve consistent and optimized imaging [26,27,28].

Conclusions

This study showed how radiation risk characterization in CT populations is strongly affected by the surrogate used to describe it. When making decisions pertaining to clinical practice and optimization, healthcare professionals should exercise care in ascribing an implicit risk to factors that do not closely reflect risk.

Change history

31 March 2021

A Correction to this paper has been published: https://doi.org/10.1007/s00330-021-07903-z

Abbreviations

- CTDIvol: Volume computed tomography dose index
- DLP: Dose-length product
- ED0: Organ dose–based effective dose from reference phantom
- EDk: DLP-based effective dose
- EDOD: Organ dose–based effective dose
- EDr: Relative effective dose
- IRB: Institutional review board
- OD: Patient-specific organ dose
- ODD: Defining organ dose
- ODD,0: Defining organ dose from reference phantom
- RDI: Risk differentiability index
- RI: Risk index
- RI0: Risk index from reference phantom
- RIrp: Risk index for a reference patient
- RSI: Risk sensitivity index
- SSDE: Size-specific dose estimate

References

[1] NCRP (2019) Medical Radiation Exposure of Patients in the United States. NCRP Report no. 184. National Council on Radiation Protection and Measurements

[2] European Commission (2014) Radiation Protection N° 180. Medical Radiation Exposure of the European Population

[3] ICRP (2007) The 2007 Recommendations of the International Commission on Radiological Protection. ICRP Publication 103. Ann ICRP 37:9–34. https://doi.org/10.1016/j.icrp.2007.10.003

[4] Mayo-Smith WW (2016) Image Wisely - International Safety Initiatives. Protocol Design. https://www.imagewisely.org/-/media/Image-Wisely/Files/CT/IW-Mayo-Smith-Protocol-Design.pdf

[5] Samei E, Järvinen H, Kortesniemi M et al (2018) Medical imaging dose optimisation from ground up: expert opinion of an international summit. J Radiol Prot 38:967–989. https://doi.org/10.1088/1361-6498/aac575

[6] Boone J, Strauss K, Cody D et al (2011) AAPM report n. 204. Size-specific dose estimates (SSDE) in pediatric and adult body CT examinations, the Report of AAPM Task Group 204. College Park, MD

[7] McCollough C, Bakalyar DM, Bostani M et al (2014) AAPM report n. 220. Use of water equivalent diameter for calculating patient size and size-specific dose estimates (SSDE) in CT: the report of AAPM Task Group 220. College Park, MD

[8] McCollough C, Cody D, Edyvean S et al (2008) AAPM report n. 96. The Measurement, Reporting, and Management of Radiation Dose in CT, The Report of AAPM Task Group 23. College Park, MD

[9] Valentin J (2007) Managing patient dose in multi-detector computed tomography (MDCT). ICRP Publication 102. Ann ICRP 37. https://doi.org/10.1016/j.icrp.2007.09.001

[10] National Research Council (2006) Health risks from exposure to low levels of ionizing radiation: BEIR VII phase 2. National Academies Press, Washington, D.C.

[11] Fu W, Ria F, Segars WP et al (2021) Patient-informed organ dose estimation in clinical CT: implementation and effective dose assessment in 1048 clinical patients. AJR Am J Roentgenol 216:1–11

[12] Tian X, Segars WP, Dixon RL, Samei E (2016) Convolution-based estimation of organ dose in tube current modulated CT. Phys Med Biol 61:3935–3954. https://doi.org/10.1088/0031-9155/61/10/3935

[13] Hoye J, Sharma S, Zhang Y et al (2019) Organ doses from CT localizer radiographs: development, validation, and application of a Monte Carlo estimation technique. Med Phys 46:5262–5272. https://doi.org/10.1002/mp.13781

[14] Samei E, Ria F, Tian X, Segars PW (2020) A database of 40 patient-based computational models for benchmarking organ dose estimates in CT. Med Phys. https://doi.org/10.1002/mp.14373

[15] Li X, Samei E, Segars WP et al (2011) Patient-specific radiation dose and cancer risk estimation in CT: part II. Application to patients. Med Phys 38:408–419

[16] Zhang Y, Li X, Paul Segars W, Samei E (2012) Organ doses, effective doses, and risk indices in adult CT: comparison of four types of reference phantoms across different examination protocols. Med Phys 39:3404–3423. https://doi.org/10.1118/1.4718710

[17] ICRP (2009) Adult reference computational phantoms. ICRP Publication 110. Ann ICRP 39:21–45. https://doi.org/10.1016/j.icrp.2009.07.004

[18] ICRP. The use of effective dose as a risk related radiological protection quantity. A Task Group under Committee 2. http://www.icrp.org/icrp_group.asp?id=54

[19] Samei E, Tian X, Paul Segars W, Frush DP (2017) Radiation risk index for pediatric CT: a patient-derived metric. Pediatr Radiol 47:1737–1744. https://doi.org/10.1007/s00247-017-3973-z

[20] Vañó E, Miller DL, Martin CJ et al (2017) ICRP publication 135: diagnostic reference levels in medical imaging. Ann ICRP 46:1–144. https://doi.org/10.1177/0146645317717209

[21] Kanal KM, Butler PF, Sengupta D et al (2017) U.S. Diagnostic reference levels and achievable doses for 10 adult CT examinations. Radiology 284:120–133. https://doi.org/10.1148/radiol.2017161911

[22] Ria F, Davis JT, Solomon JB et al (2019) Expanding the concept of diagnostic reference levels to noise and dose reference levels in CT. AJR Am J Roentgenol 213:889–894. https://doi.org/10.2214/AJR.18.21030

[23] Ria F, Wilson JM, Zhang Y, Samei E (2017) Image noise and dose performance across a clinical population: patient size adaptation as a metric of CT performance. Med Phys 44:2141–2147. https://doi.org/10.1002/mp.12172

[24] Ria F, Solomon JB, Wilson JM, Samei E (2020) Technical note: validation of TG 233 phantom methodology to characterize noise and dose in patient CT data. Med Phys 47:1633–1639. https://doi.org/10.1002/mp.14089

[25] McCollough CH (2003) Patient dose in cardiac computed tomography. Herz 28:1–6. https://doi.org/10.1007/s00059-003-2447-2

[26] Abadi E, Sanders J, Samei E (2017) Patient-specific quantification of image quality: an automated technique for measuring the distribution of organ Hounsfield units in clinical chest CT images. Med Phys 44:4736–4746. https://doi.org/10.1002/mp.12438

[27] Sanders J, Hurwitz L, Samei E (2016) Patient-specific quantification of image quality: an automated method for measuring spatial resolution in clinical CT images. Med Phys 43:5330–5338. https://doi.org/10.1118/1.4961984

[28] Christianson O, Winslow J, Frush DP, Samei E (2015) Automated technique to measure noise in clinical CT examinations. AJR Am J Roentgenol 205:W93–W99. https://doi.org/10.2214/AJR.14.13613


Ethics declarations

Guarantor

The scientific guarantor of this publication is Dr. Ehsan Samei.

Conflict of interest

E.S. discloses relationships with the following entities unrelated to the present publication: GE, Siemens, Bracco, Imalogix, 12Sigma, SunNuclear, Metis Health Analytics, Cambridge University Press, and Wiley and Sons. The remaining authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

Francesco Ria, Wanyi Fu, and Jocelyn Hoye have significant statistical expertise.

Informed consent

Written informed consent was waived by the Institutional Review Board.

Ethical approval

Institutional Review Board approval was obtained.

Methodology

• retrospective

Additional information


The original online version of this article was revised: The affiliations of Wanyi Fu, Jocelyn Hoye, W. Paul Segars and Anuj J. Kapadia were incorrect.


About this article

Cite this article

Ria, F., Fu, W., Hoye, J. et al. Comparison of 12 surrogates to characterize CT radiation risk across a clinical population. Eur Radiol 31, 7022–7030 (2021). https://doi.org/10.1007/s00330-021-07753-9


DOI: https://doi.org/10.1007/s00330-021-07753-9