Abstract

Homeostasis occurs in a biological or chemical system when some output variable remains approximately constant as an input parameter \(\lambda \) varies over some interval. We discuss two main aspects of homeostasis, both related to the effect of coordinate changes on the input–output map. The first is a reformulation of homeostasis in the context of singularity theory, achieved by replacing ‘approximately constant over an interval’ by ‘zero derivative of the output with respect to the input at a point’. Unfolding theory then classifies all small perturbations of the input–output function. In particular, the ‘chair’ singularity, which is especially important in applications, is discussed in detail. Its normal form \(\lambda ^3\) and universal unfolding \(\lambda ^3 + a\lambda \) are derived, and the region of approximate homeostasis is deduced. The results are motivated by data on thermoregulation in two species of opossum and the spiny rat. We give a formula for finding chair points in mathematical models by implicit differentiation and apply it to a model of lateral inhibition. The second aspect is the question of when homeostasis is invariant under appropriate coordinate changes. This is false in general, but for network dynamics there is a natural class of coordinate changes: those that preserve the network structure. We characterize those nodes of a given network for which homeostasis is invariant under such changes. This characterization is determined combinatorially by the network topology.

1 Introduction and outline

Homeostasis is an important concept, occurring widely in biology, especially biochemistry, and in many other areas including control engineering. It refers to a regulatory mechanism that keeps some specific variable close to a fixed value as other parameters vary. In this paper we define and investigate a reformulation of homeostasis in terms of singularity theory, centered on the input–output function of the system. This approach makes it possible to calculate homeostasis points in models, to classify different types of homeostasis point, and to describe all small perturbations of the input–output function near a homeostasis point.

Section 2 opens the discussion with a motivational example of homeostasis: regulation of the output ‘body temperature’ in an opossum, when the input ‘environmental temperature’ varies. Body temperature varies approximately linearly with environmental temperature \(\lambda \), with nonzero slope, when \(\lambda \) is either small or large, while in between there is a broad flat region where homeostasis occurs. This general shape is called a ‘chair’ by Nijhout et al. (2004, 2014), and plays a central role in the paper. This example is used in Sect. 3 to motivate a reformulation of homeostasis in terms of the derivative of an output variable (with respect to an input) being zero at some point, hence approximately constant near that point. We discuss this mathematical reformulation in terms of singularities of input–output functions, which are functions from parameter space to a selected variable. For network dynamics, this is usually the state of a specific node.

Section 4 discusses how the input–output function transforms under certain coordinate changes in the admissible system and how this leads to an unfolding theory based on elementary catastrophe theory (Golubitsky 1978; Poston and Stewart 1978; Zeeman 1977).

Section 5 explores the consequences of this result for homeostasis, with special emphasis on the two simplest singularities: simple homeostasis and the chair. We characterize these singularities and discuss their normal forms (the simplest form into which the singularity can be transformed by suitable coordinate changes), and (universal) unfoldings, which classify all small perturbations as other system parameters vary. We examine the unfolding of the chair to estimate the region over which homeostasis occurs, in the sense that the output varies by no more than a small amount \(\delta \) as the input changes. We relate the unfolding of the chair to observational data on two species of opossum and the spiny rat.

Section 6 provides a brief discussion of how chair points can be calculated analytically by implicit differentiation, and considers a special case with extra structure, common in biochemical applications, where the calculations simplify. This special case is discussed for a specific example in Sect. 8.

The paper then turns to the second main idea: how network structure affects the invariance of homeostasis under appropriate coordinate changes.

Section 7 recalls some of the ideas of network systems from Golubitsky et al. (2005); Stewart et al. (2003), specifically the notion of an admissible ODE—one that respects the network architecture—and provides simple examples to illustrate the idea. In Sect. 8 we apply the method of Sect. 6 to a model of lateral inhibition (corresponding to a three-node feed-forward network) introduced by Nijhout et al. (2014), and prove that a chair point exists under very general assumptions.

We then turn to the question of invariance of homeostasis for a given variable, and in particular for an observation of a given node in the network, under coordinate changes that preserve network structure. Here our current methods require the network to be fully inhomogeneous; that is, all couplings (arrows) are distinct. The key idea is that network-preserving diffeomorphisms are defined to be those changes of coordinates that preserve admissibility for all admissible maps: see Sect. 9. Finally, we characterize right network-preserving coordinate changes in Sect. 10, proving that whether homeostasis of a single node variable is an invariant of network-preserving changes of coordinates can be determined by a combinatorial condition on the network architecture. See Corollary 10.5.

2 Motivating example of homeostasis

Homeostasis occurs when some feature of a system remains essentially constant as an input parameter varies over some range of values. For example, the body temperature of an organism might remain roughly constant despite variations in its environment. (See Fig. 1 for such data in the brown opossum where body temperature remains approximately constant over a range of 18 \(^\circ \)C in environmental temperature, Morrison 1946; Nijhout et al. 2014.) Or in a biochemical network the equilibrium concentration of some important biochemical molecule might not change much when the organism has ingested food.

Homeostasis is almost exactly opposite to bifurcation. At a bifurcation, the state of the system undergoes a change so extensive that some qualitative property (such as number of equilibria, or the topological type of an attractor) changes. In homeostasis, the state concerned not only remains topologically the same: some feature of that state does not even change quantitatively. For example, if a steady state does not bifurcate as a parameter is varied, that state persists, but can change continuously with the parameter. Homeostasis is stronger: the steady state persists, and in addition some feature of that steady state remains almost constant.

Homeostasis is biologically important, because it protects organisms against changes induced by the environment, shortage of some resource, excess of some resource, the effect of ingesting food, and so on. The literature is extensive [http://www.biology-online.org/4/1_physiological_homeostasis.html (updated 2000)]. However, homeostasis is not merely the existence (and persistence as parameters vary) of a stable equilibrium of the system, for two reasons.

First, homeostasis is a stronger condition than ‘the equilibrium varies smoothly with parameters’, which just states that there is no bifurcation. In the biological context, nonzero linear variation of the equilibrium as parameters change is not normally considered to be homeostasis, unless the slope is very small. For example, in Fig. 1, body temperature appears to be varying linearly when the environmental temperature is either below \(10~^\circ \)C or above \(30~^\circ \)C and is approximately constant in between. Nijhout et al. (2014) call this kind of variation (linear, constant, linear) a chair.

Second, some variable(s) of the system may be homeostatic while others undergo larger changes. Indeed, other variables may have to change dramatically to keep some specific variable roughly constant.

As noted, Nijhout et al. (2014) suggest that there is a chair in the body temperature data of opossums (Morrison 1946). We take a singularity-theoretic point of view and suggest that chairs are better described by a homogeneous cubic function (that is, like \(\lambda ^3\), where \(\lambda \) is the input parameter) rather than by the piecewise linear description given previously. Figure 2a shows the least squares fit of a cubic function to data for the brown opossum, which is a cubic with a maximum and a minimum. In contrast, the least squares fit for the eten opossum, Fig. 2b, is monotone.

These results suggest that in ‘opossum space’ there should be a hypothetical type of opossum that exhibits a chair in the system input–output function of environmental temperature to body temperature. In singularity-theoretic terms, this higher singularity acts as an organizing center, meaning that the other types of cubic can be obtained by small perturbations of the homogeneous cubic. In fact, data for the spiny rat have a best-fit cubic very close to the homogeneous cubic, Fig. 2c. We include this example as a motivational metaphor, since we do not consider a specific model for the regulation of opossum body temperature.

Fig. 2 The horizontal coordinate is environmental temperature; the vertical coordinate is body temperature. From Morrison (1946): (a) data from the brown opossum; (b) data from the eten opossum; (c) data from the spiny rat. The curves are the least-squares best fits of the data to a cubic polynomial.

This example, especially Fig. 2, motivates a reformulation of homeostasis in a form that can be analysed using singularity theory. We discuss this in Sects. 3–6. Singularity theory uses changes of coordinates to simplify functions near singular points, converting them into polynomial ‘normal forms’. General coordinate changes can mix up variables, which destroys homeostasis of a given variable, so in this part of the paper we restrict the coordinate changes so that homeostasis of a chosen variable is preserved.

This raises a more general issue: when is homeostasis of a given variable invariant under a reasonable class of coordinate changes? In Sects. 7–10 we answer this question for network dynamics, assuming the network to be fully inhomogeneous.

3 Input–output functions

First we set up the basic notion of an input–output function. In applications, homeostasis is a property of a distinguished variable in a many-variable system of ODEs. For example, in thermoregulation the body temperature is homeostatic, but other system variables may change (in fact, they must change in order for body temperature to remain roughly constant). If they did not, the entire system would be independent of the environmental temperature, hence effectively decoupled from it; this condition is too strong, and does not correspond to real examples of homeostasis. So we consider a system of ODEs in a vector of variables \(\mathbf {X}= (x_1, \ldots , x_n) \in \mathbb {R}^n\), denote a distinguished variable by \(\mathbf {Z}\), and let \(\mathbf {Y}\) denote all the other variables. For later examples it is convenient to renumber the variables if necessary, so that

$$\begin{aligned} \mathbf {Z}= x_n \end{aligned}$$

and

$$\begin{aligned} \mathbf {Y}= (x_1, \ldots , x_{n-1}). \end{aligned}$$
We can now reformulate homeostasis as the vanishing of the derivative of the input–output function with respect to the input variable. Suppose that

$$\begin{aligned} \dot{\mathbf {X}} = F(\mathbf {X},\lambda ) \end{aligned}$$(3.1)

is a system of ODEs, where \(\mathbf {X}\in \mathbb {R}^n\) and the input parameter \(\lambda \in \mathbb {R}\). (More generally, there could be multiple input parameters, in which case \(\lambda \in \mathbb {R}^k\).) Suppose that (3.1) has a linearly stable equilibrium at \((\mathbf {X}_0,\lambda _0)\). By the implicit function theorem there exists a family of linearly stable equilibria \(\widetilde{\mathbf {X}}(\lambda )\) near \(\lambda _0\) such that \(\widetilde{\mathbf {X}}(\lambda _0)=\mathbf {X}_0\) and

$$\begin{aligned} F(\widetilde{\mathbf {X}}(\lambda ),\lambda ) \equiv 0. \end{aligned}$$(3.2)

With the above decomposition \(\mathbf {X}= (\mathbf {Y},\mathbf {Z})\), we write the equilibria that depend on \(\lambda \) as

$$\begin{aligned} \widetilde{\mathbf {X}}(\lambda ) = (\widetilde{\mathbf {Y}}(\lambda ),\widetilde{\mathbf {Z}}(\lambda )) \end{aligned}$$(3.3)
and call \(\widetilde{\mathbf {Z}}(\lambda )\) the system input–output function (for the variable \(\mathbf {Z}\)). This path of equilibria exhibits homeostasis in the \(\mathbf {Z}\)-variable, in the usual sense of that term, if \(\widetilde{\mathbf {Z}}(\lambda )\) remains roughly constant as \(\lambda \) is varied.
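To make the construction concrete, here is a minimal numerical sketch. It assumes a hypothetical two-variable system that is not a model from the text: \(\mathbf {Y}=y\), \(\mathbf {Z}=z\), \(\dot{y} = -y+\lambda \), \(\dot{z} = -z + y - y^3/3\), whose Jacobian has eigenvalues \(-1,-1\), so every equilibrium is linearly stable. The branch \(\widetilde{\mathbf {X}}(\lambda )\) is followed by natural-parameter continuation: Newton's method at each \(\lambda \), started from the previous equilibrium, which is a numerical counterpart of the implicit function theorem argument. The names `F`, `jacobian`, `newton` and `input_output` are illustrative.

```python
def F(x, lam):
    """Hypothetical toy vector field: y' = -y + lam, z' = -z + y - y**3/3."""
    y, z = x
    return (-y + lam, -z + y - y**3 / 3.0)


def jacobian(x, lam):
    """Partial derivatives of F with respect to (y, z)."""
    y, _ = x
    return ((-1.0, 0.0), (1.0 - y**2, -1.0))


def newton(x, lam, tol=1e-12, max_iter=50):
    """Solve F(x, lam) = 0 by Newton's method, starting from x."""
    y, z = x
    for _ in range(max_iter):
        f0, f1 = F((y, z), lam)
        (a, b), (c, d) = jacobian((y, z), lam)
        det = a * d - b * c
        # Solve J @ (dy, dz) = -F with Cramer's rule (2x2 case).
        dy = (-f0 * d + b * f1) / det
        dz = (-a * f1 + c * f0) / det
        y, z = y + dy, z + dz
        if abs(dy) + abs(dz) < tol:
            break
    return (y, z)


def input_output(lams, x0):
    """Follow the equilibrium branch in lam and return the Z-component."""
    x, zs = x0, []
    for lam in lams:
        x = newton(x, lam)  # previous equilibrium is the initial guess
        zs.append(x[1])
    return zs
```

For this toy system \(\widetilde{\mathbf {Z}}(\lambda ) = \lambda - \lambda ^3/3\) exactly, so the returned values can be checked against that formula. Its derivative \(1-\lambda ^2\) vanishes at \(\lambda =1\), where the second derivative is \(-2\ne 0\).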

We now introduce a more formal mathematical definition of (one possible interpretation of) homeostasis, which opens up a potential singularity-theoretic approach. The statement ‘the equilibrium value of some variable remains roughly constant as a parameter varies near some point’ is roughly equivalent to the statement ‘the derivative of the variable with respect to the parameter vanishes at that point’. A vanishing derivative means that, near that point, the variable depends on the parameter only quadratically or to higher order, which is ‘flatter’ than linear dependence.

From now on we use subscripts \(g_\lambda , g_{\lambda \lambda }\), and so on to denote the \(\lambda \)-derivatives of any function g. We can now state:

Definition 3.1

(a) The path of equilibria \((\widetilde{\mathbf {Y}}(\lambda ),\widetilde{\mathbf {Z}}(\lambda ))\) of (3.2) exhibits \(\mathbf {Z}\)-homeostasis at \(\lambda _0\) if

$$\begin{aligned} \widetilde{\mathbf {Z}}_\lambda (\lambda _0) = 0 \end{aligned}$$(3.4)

(b) If further \(\widetilde{\mathbf {Z}}_{\lambda \lambda }(\lambda _0) \ne 0\), this is a point of simple homeostasis.

(c) The path of equilibria \((\widetilde{\mathbf {Y}}(\lambda ),\widetilde{\mathbf {Z}}(\lambda ))\) of (3.2) has a \(\mathbf {Z}\)-chair point at \(\lambda _0\) if

$$\begin{aligned} \widetilde{\mathbf {Z}}_\lambda (\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda }(\lambda _0) = 0 \quad \text{ and }\quad \widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0) \ne 0. \end{aligned}$$(3.5)
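Definition 3.1 can be checked numerically when the input–output function can only be evaluated, not differentiated symbolically. A minimal sketch, assuming \(\widetilde{\mathbf {Z}}\) is available as a Python callable: the three derivatives are approximated by standard central five-point finite-difference stencils, and ‘zero’ means smaller than a user-chosen tolerance `tol`, so the answer is only as reliable as the choice of `tol` and step `h`. The function names are hypothetical.

```python
def derivatives(z, lam0, h=1e-2):
    """Central five-point approximations of Z', Z'' and Z''' at lam0."""
    f = {k: z(lam0 + k * h) for k in (-2, -1, 0, 1, 2)}
    d1 = (-f[2] + 8 * f[1] - 8 * f[-1] + f[-2]) / (12 * h)
    d2 = (-f[2] + 16 * f[1] - 30 * f[0] + 16 * f[-1] - f[-2]) / (12 * h**2)
    d3 = (f[2] - 2 * f[1] + 2 * f[-1] - f[-2]) / (2 * h**3)
    return d1, d2, d3


def classify(z, lam0, h=1e-2, tol=1e-6):
    """Classify lam0 for the input-output function z following Definition 3.1."""
    d1, d2, d3 = derivatives(z, lam0, h)
    if abs(d1) > tol:
        return "no homeostasis"
    if abs(d2) > tol:
        return "simple homeostasis"
    if abs(d3) > tol:
        return "chair"
    return "degenerate (higher order)"
```

For example, `classify(lambda l: l**3, 0.0)` returns `"chair"` and `classify(lambda l: l**2, 0.0)` returns `"simple homeostasis"`. Applying the reparametrization \(\Lambda (\lambda ) = \lambda + \lambda ^2/2\) and the shift \(\kappa = 2\) to \(\lambda ^3\), that is `classify(lambda l: (l + 0.5 * l**2) ** 3 + 2.0, 0.0)`, still returns `"chair"`, consistent with Theorem 4.1(c).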

Singularity theory has been widely used in a different but related context: bifurcations (Golubitsky and Schaeffer 1985; Golubitsky et al. 1988; Guckenheimer and Holmes 1983). We take inspiration from this approach, but new issues arise in the formulation of the set-up. Steady-state (and to some extent Hopf) bifurcations can be viewed as singularities, and classified using singularity theory and nonlinear dynamics. Such classifications often employ changes of coordinates to put the system into some kind of ‘normal form’ (Golubitsky and Schaeffer 1985; Golubitsky et al. 1988; Guckenheimer and Holmes 1983). The changes of coordinates employed in the literature are of several kinds, depending on what structure is being preserved. The changes of coordinates that are appropriate to the study of critical points of potential functions (elementary catastrophe theory, Bröcker and Lander 1975; Gibson 1979; Martinet 1982; Poston and Stewart 1978; Zeeman 1977) are right equivalences; the changes of coordinates that are appropriate to the study of zeros of a map are contact equivalences (Golubitsky 1978; Golubitsky and Guillemin 1973; Golubitsky and Schaeffer 1985); and the changes of coordinates that are usually appropriate to the study of dynamics of differential equations are vector field changes of coordinates (Guckenheimer and Holmes 1983). We do not require the precise definitions of these terms here, but we emphasise that there are several different ways for coordinate changes to act, and each action preserves different features of the dynamics.

4 Singularity theory of input–output functions

In this section we use singularity theory to study how the input–output function \(\widetilde{\mathbf {Z}}(\lambda )\) changes as the vector field \(F(\mathbf {X},\lambda )\) is perturbed. To do this we use a combination of two types of coordinate changes in F, a translation of \(\mathbf {X}\) and a reparametrization of \(\lambda \), defined by

$$\begin{aligned} \hat{F}(\mathbf {X},\lambda ) = F(\mathbf {X}-K,\Lambda (\lambda )) \end{aligned}$$(4.1)

where \(\Lambda (\lambda _0) = \lambda _0\), \(\Lambda '(\lambda _0)>0\), and \(K=(\kappa _1,\ldots ,\kappa _n)\in \mathbb {R}^n\).

Next we show that certain changes of coordinates in F as in (4.1) lead to changes in coordinates of the input–output function \(\widetilde{\mathbf {Z}}(\lambda )\). The input–output changes of coordinates provide the basis for the application of singularity theory.

Theorem 4.1

Let \(F(\mathbf {X},\lambda )\) be admissible and let \(\Lambda (\lambda )\) be a reparametrization of \(\lambda \). Let \(K=(\kappa _1,\ldots ,\kappa _n)\in \mathbb {R}^n\) be a constant. Define the map \(\hat{F}\) by (4.1). Then

(a) The zero set mapping \(\widetilde{\mathbf {X}}(\lambda )\) transforms to

$$\begin{aligned} {\hat{\mathbf {X}}}(\lambda ) = \widetilde{\mathbf {X}}(\Lambda (\lambda )) + K \end{aligned}$$(4.2)

(b) The input–output function \(\widetilde{\mathbf {Z}}(\lambda )\) transforms to

$$\begin{aligned} {\hat{\mathbf {Z}}}(\lambda ) = \widetilde{\mathbf {Z}}(\Lambda (\lambda )) + \kappa _n \end{aligned}$$(4.3)

(c) Simple homeostasis and chairs are preserved by the input–output transformation (4.3).

Proof

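This is a straightforward calculation. For (a), substitute (4.2) into (4.1). Part (b) is then immediate. For (c), use the chain rule:

$$\begin{aligned} \hat{\mathbf {Z}}_\lambda (\lambda ) = \widetilde{\mathbf {Z}}_\lambda (\Lambda (\lambda ))\,\Lambda _\lambda (\lambda ) \end{aligned}$$(4.4)

Since \(\Lambda _\lambda \) is nowhere zero, \(\hat{\mathbf {Z}}_\lambda (\lambda )\) is zero if and only if \(\widetilde{\mathbf {Z}}_\lambda (\Lambda (\lambda ))\) is zero. Hence, homeostasis points are preserved in the sense that if \(\lambda _0\) is one for \(\widetilde{\mathbf {Z}}\), then \(\Lambda ^{-1}(\lambda _0)\) is one for \(\hat{\mathbf {Z}}\). Next observe that, since \(\Lambda (\lambda _0)=\lambda _0\), when \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0)=0\)

$$\begin{aligned} \hat{\mathbf {Z}}_{\lambda \lambda }(\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda }(\Lambda (\lambda _0))\,\Lambda _\lambda (\lambda _0)^2 + \widetilde{\mathbf {Z}}_{\lambda }(\Lambda (\lambda _0))\,\Lambda _{\lambda \lambda }(\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda }(\Lambda (\lambda _0))\,\Lambda _\lambda (\lambda _0)^2 \end{aligned}$$(4.5)

Hence \(\hat{\mathbf {Z}}_{\lambda \lambda }(\lambda _0)\ne 0\) if and only if \( \widetilde{\mathbf {Z}}_{\lambda \lambda }(\Lambda (\lambda _0))\ne 0\) and simple homeostasis is preserved.

To see that chairs are preserved, assume that the defining conditions \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0)= \widetilde{\mathbf {Z}}_{\lambda \lambda }(\lambda _0) = 0\) and the nondegeneracy condition \(\widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0)\ne 0\) hold. As noted, \(\hat{\mathbf {Z}}_\lambda (\Lambda ^{-1}(\lambda _0))=0\) and by (4.5) \(\hat{\mathbf {Z}}_{\lambda \lambda }(\Lambda ^{-1}(\lambda _0))=0\). Next, differentiate (4.4) with respect to \(\lambda \) twice and evaluate at \(\lambda _0\) to obtain

$$\begin{aligned} \hat{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0)\,\Lambda _\lambda (\lambda _0)^3 + 3\widetilde{\mathbf {Z}}_{\lambda \lambda }(\lambda _0)\,\Lambda _\lambda (\lambda _0)\,\Lambda _{\lambda \lambda }(\lambda _0) + \widetilde{\mathbf {Z}}_{\lambda }(\lambda _0)\,\Lambda _{\lambda \lambda \lambda }(\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0)\,\Lambda _\lambda (\lambda _0)^3. \end{aligned}$$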
Hence the nondegeneracy condition for a chair is also preserved. \(\square \)

The transformation (4.3) is the standard change of coordinates in elementary catastrophe theory (Golubitsky 1978; Poston and Stewart 1978; Zeeman 1977). It is a combination of right equivalence (\(\Lambda (\lambda )\)) in singularity theory and translation by a constant (\(\kappa _n\)). We can therefore use standard results from elementary catastrophe theory to find normal forms and universal unfoldings of input–output functions \(\mathbf {Z}(\lambda )\), as we now explain.

Because \(\mathbf {Z}= x_n\) is 1-dimensional, we consider singularity types near the origin of a 1-variable function \(g(\lambda )\), where \(g:\mathbb {R}\rightarrow \mathbb {R}\). Such singularities are determined by the first nonvanishing \(\lambda \)-derivative \(g^{(k)}(0)\), unless all derivatives vanish, which is an ‘infinite codimension’ phenomenon that we do not discuss further. Informally, the codimension of a singularity is the number of conditions on derivatives that determine it. This is also the minimum number of extra variables required to specify all small perturbations of the singularity, up to suitable changes of coordinates. These perturbations can be organized into a family of maps called the universal unfolding, which has that number of extra variables.

Definition 4.2

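\(G(\lambda , a)\) is an unfolding of \(g(\lambda )\) if \(G(\lambda ,0)=g(\lambda )\). G is a universal unfolding of g if every unfolding \(H(\lambda ,b)\) of g factors through G. That is,

$$\begin{aligned} H(\lambda ,b) = G(\Lambda (\lambda ,b),A(b)) + \kappa (b) \end{aligned}$$(4.6)

for smooth \(\Lambda \), A, \(\kappa \) with \(\Lambda (\lambda ,0)=\lambda \), \(A(0)=0\), and \(\kappa (0)=0\).

It follows that every small perturbation \(H(\cdot , b)\) is equivalent to a perturbation \(G(\cdot ,a)\) of g in the G family.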

If such k exists, the normal form is \(\pm \lambda ^k\). Simple homeostasis occurs when \(k = 2\), and a chair when \(k=3\). When \(k \ge 3\) the universal unfolding for catastrophe theory equivalence is

$$\begin{aligned} \pm \lambda ^k + a_{k-2}\lambda ^{k-2} + \cdots + a_1\lambda \end{aligned}$$

for parameters \(a_j\), and when \(k = 2\) the universal unfolding is \(\pm \lambda ^2\) itself. The codimension in this setting is therefore \(k-2\). See Bröcker and Lander (1975, Example 14.9 and Theorem 15.1); Gibson (1979, Chapter IV (4.6) and Chapter VI (6.3)); and Martinet (1982, Chapter XI Sect. 1.1 and Chapter XII Sects. 3.1, 7.2).

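To summarize: the normal form of the input–output function for simple homeostasis is

$$\begin{aligned} \mathbf {Z}(\lambda ) = \pm \lambda ^2 \end{aligned}$$(4.7)

and no unfolding parameter is required. Similarly,

$$\begin{aligned} \mathbf {Z}(\lambda ) = \pm \lambda ^3 \end{aligned}$$(4.8)

is the normal form of the input–output function for a chair, and

$$\begin{aligned} \mathbf {Z}_{a}(\lambda ) = \pm \lambda ^3 + a\lambda \end{aligned}$$(4.9)

is a universal unfolding.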
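For a cubic input–output function the reduction to (4.9) can be done by hand, which shows why a single unfolding parameter suffices. As a worked check, assume g is already a cubic \(g(\lambda ) = \lambda ^3 + b\lambda ^2 + c\lambda + d\) with b, c, d small (a positive leading coefficient can be normalized to 1 by rescaling \(\lambda \); a general smooth g needs the full theory cited above). Translating \(\lambda \) removes the quadratic term:

$$\begin{aligned} g\left( \lambda - \tfrac{b}{3}\right) = \lambda ^3 + \left( c - \tfrac{b^2}{3}\right) \lambda + \left( d + \tfrac{2b^3}{27} - \tfrac{bc}{3}\right) . \end{aligned}$$

So with \(\Lambda (\lambda ) = \lambda - b/3\) and \(\kappa = -\left( d + \tfrac{2b^3}{27} - \tfrac{bc}{3}\right) \) we obtain \(g(\Lambda (\lambda )) + \kappa = \lambda ^3 + a\lambda \) with \(a = c - b^2/3\). The sign of a decides whether the cubic has two critical points (\(a<0\)) or none (\(a>0\)).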

5 Discussion of chair normal form

We determine some quantitative geometric features of the chair normal form, which control the interval of input parameters over which the output varies by no more than a specified amount. The calculations are elementary but the results are of interest because they relate the singularity-theoretic version of homeostasis (vanishing of the derivative of the input–output function) to the usual one (output is roughly constant as input varies). Here we consider only the normal form, but the results can in principle be transferred to the original input–output function by inverting the coordinate changes used to put it into normal form. We do not pursue this procedure here, except to note that the power-law dependence on \(\delta \) in the asymptotic analysis is preserved by diffeomorphisms, hence applies more generally.

For comparison, we briefly discuss the normal form (4.7) for simple homeostasis. Here it is clear that in order for \(\lambda ^2\) to differ from 0 by at most \(\delta \), we must have \(\lambda \) in the homeostasis interval \((-\delta ^{1/2}, \delta ^{1/2})\).

Next, we discuss the analogous question for the chair normal form (4.8) whose universal unfolding is (4.9). Changing the signs of \(\lambda \) and a if necessary, we may assume the normal form has a plus sign and the unfolding is \(\lambda ^3+a\lambda \). Define the \(\delta \)-homeostasis region for a given unfolding parameter a to be the set of inputs \(\lambda \) such that

$$\begin{aligned} |\mathbf {Z}_{a}(\lambda )| < \delta . \end{aligned}$$
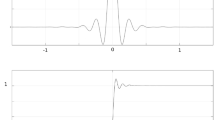

The value of a in (4.9) affects this region, as Fig. 3 illustrates. In this figure, \(\delta \ll 1\) is fixed and the gray rectangle indicates the region where \(|\mathbf {Z}_{a}(\lambda )|<\delta \).

Fig. 3 Geometry of the normal form (4.9). Plot (c) corresponds to \(a>0\), plot (b) to \(a=0\), plot (a) to \(0>a>-3(\frac{\delta }{2})^{2/3}\), and plot (d) to \(-3(\frac{\delta }{2})^{2/3} > a\).

There are four plots in Fig. 3. In (a–c) the interval on which \(|\mathbf {Z}_{a}(\lambda )|=|\lambda ^3+a\lambda |<\delta \) is given by \((\lambda _1,\lambda _2)\). Here \(\lambda _2\) is defined by

$$\begin{aligned} \lambda _2^3 + a\lambda _2 = \delta \end{aligned}$$(5.1)

and since \(\mathbf {Z}_{a}(\lambda )\) is odd, \(\lambda _1=-\lambda _2\). The transition to plot (d) in Fig. 3 occurs when \(\delta \) is a critical value of \(\mathbf {Z}_{a}\). At that point (which occurs at \(a=-3(\frac{\delta }{2})^{2/3}\)) the region on which \(\mathbf {Z}_{a}\) is \(\delta \)-close to 0 splits into three intervals, one around each zero \(0, \pm \sqrt{-a}\) of \(\mathbf {Z}_{a}\). We now assume

$$\begin{aligned} a > -3\left( \frac{\delta }{2}\right) ^{2/3}. \end{aligned}$$(5.2)
Equation (5.1) implicitly defines \(\lambda _2\equiv \lambda _2(a)\). We claim that when a satisfies (5.2), the length of the \(\delta \)-homeostasis region is \(2\lambda _2(a)\), and

We consider only values of a for which the \(\delta \)-homeostasis region is a connected interval of \(\lambda \)-values. For such a we discuss how this region varies as \(\delta \) tends to zero, which is one way to measure how robust homeostasis is to small changes in parameters, or indeed to the model equations.

When \(a<0\) its effect is to push \(\mathbf {Z}_{a}\) into the region with two critical points [plot (a)], so the length of the \(\delta \)-homeostasis interval increases. When \(a>0\) it pushes \(\mathbf {Z}_{a}\) into the monotone region [plot (c)] and the length of this interval decreases. It is straightforward to calculate \(\lambda _2(0) = \delta ^{1/3}\) and to use implicit differentiation of (5.1) to verify that

$$\begin{aligned} \lambda _2'(a) = -\frac{\lambda _2(a)}{3\lambda _2(a)^2 + a} < 0, \qquad \lambda _2'(0) = -\frac{1}{3}\delta ^{-1/3}. \end{aligned}$$
Thus the \(\delta \)-homeostasis interval for the chair is asymptotically (as \(\delta \rightarrow 0\)) wider than that for simple homeostasis, even when \(a = 0\). When \(a < 0\) it becomes wider still.
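The quantities in this section are easy to compute numerically. A minimal sketch, assuming the plus-sign unfolding \(\lambda ^3+a\lambda \) of (4.9) and a value of a satisfying \(a > -3(\delta /2)^{2/3}\): \(\lambda _2(a)\) is found by bisection on the branch \(\lambda \ge \sqrt{\max (-a/3,0)}\), where \(\mathbf {Z}_a\) is increasing, and its a-derivative comes from implicit differentiation of \(\lambda _2^3 + a\lambda _2 = \delta \), namely \(\lambda _2'(a) = -\lambda _2/(3\lambda _2^2 + a)\). The helper names `threshold`, `lambda2` and `dlambda2_da` are illustrative.

```python
import math


def threshold(delta):
    """Value of a at which delta is a critical value of lam**3 + a*lam."""
    return -3.0 * (delta / 2.0) ** (2.0 / 3.0)


def lambda2(a, delta, iters=200):
    """Right endpoint of the delta-homeostasis interval (-lam2, lam2)."""
    if a <= threshold(delta):
        raise ValueError("delta-homeostasis region is not a single interval")

    def z(lam):
        return lam**3 + a * lam

    lo = math.sqrt(max(-a / 3.0, 0.0))  # z is increasing for lam >= lo
    hi = max(1.0, 2.0 * lo)
    while z(hi) < delta:
        hi *= 2.0
    # Bisection keeps z(lo) < delta <= z(hi).
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if z(mid) < delta:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def dlambda2_da(a, delta):
    """Implicit differentiation of lam2**3 + a*lam2 = delta with respect to a."""
    lam2 = lambda2(a, delta)
    return -lam2 / (3.0 * lam2**2 + a)
```

For \(\delta = 10^{-3}\), `lambda2(0.0, 1e-3)` is \(\delta ^{1/3} = 0.1\), so the chair interval has length about 0.2, compared with \(2\delta ^{1/2}\approx 0.063\) for simple homeostasis.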

Remark 5.1

This suggests that a chair is a more robust form of homeostasis than simple homeostasis, which may make it more common in the natural world. We suspect this is significant.

We relate Fig. 3 to the data for opossum thermoregulation.

- \(a > 0\): There are no critical points. This is in fact the case for the best-fit cubic curve to the data for the eten opossum, Fig. 2b. The slope is slightly positive at the inflection point.

- \(a=0\): This is the degenerate singularity that acts as the organizing center for the unfolding; there is a unique critical point.

- \(a < 0\): There are two critical points. This is the case for the best-fit cubic curve to the data for the brown opossum, Fig. 2a. Now the slope is slightly negative at the inflection point.

The transition between these two curves in ‘opossum space’ is organized by the chair point \(a=0\) at which \(\widetilde{\mathbf {Z}}_{0}(\lambda ) = \lambda ^3\). In fact, data for the spiny rat give a best-fit cubic very close to this form, Fig. 2c.
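The classification in the list above depends only on the coefficients of a fitted cubic \(c_3\lambda ^3 + c_2\lambda ^2 + c_1\lambda + c_0\) with \(c_3\ne 0\): its derivative has two real roots, a double root, or none according to the sign of \(c_2^2 - 3c_1c_3\). A minimal sketch follows. The fitted coefficients for the opossum and spiny rat data are not given in the text, so the examples below use made-up coefficients only; `cubic_type` is a hypothetical helper name, and `tol` is an assumed tolerance for treating the discriminant as zero.

```python
def cubic_type(c3, c2, c1, tol=0.0):
    """Classify c3*l**3 + c2*l**2 + c1*l + c0 by its critical points.

    The derivative 3*c3*l**2 + 2*c2*l + c1 has discriminant 4*(c2**2 - 3*c1*c3).
    """
    if c3 == 0:
        raise ValueError("leading coefficient c3 must be nonzero")
    disc = c2 * c2 - 3.0 * c1 * c3
    if abs(disc) <= tol:
        return "chair"  # one degenerate critical point (a = 0)
    return "two critical points" if disc > 0 else "monotone"
```

For instance, \(\lambda ^3 - 3\lambda \) gives `"two critical points"`, \(\lambda ^3 + 3\lambda \) gives `"monotone"`, and \(\lambda ^3 + 3\lambda ^2 + 3\lambda = (\lambda +1)^3 - 1\) gives `"chair"`.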

6 Computation of homeostasis points

In this section we apply the singularity-theoretic formalism to obtain a method for finding homeostasis points (such as chairs) analytically (sometimes with numerical assistance) in specific models.

Suppose that F is a vector field on \(\mathbb {R}^n\) and \((\mathbf {X}_0,\lambda _0)\) is a stable equilibrium of (3.1).

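Let \(\mathbf {Z}= x_n\), and as usual let \(\widetilde{\mathbf {X}}(\lambda ) = (\widetilde{\mathbf {Y}}(\lambda ),\widetilde{\mathbf {Z}}(\lambda ))\) be the family of equilibria satisfying \((\widetilde{\mathbf {Y}}(\lambda _0),\widetilde{\mathbf {Z}}(\lambda _0))=\mathbf {X}_0\). By (3.5) the input–output function \(\widetilde{\mathbf {Z}}(\lambda )\) has a \(\mathbf {Z}\)-chair at \(\lambda _0\) if

$$\begin{aligned} \widetilde{\mathbf {Z}}_\lambda (\lambda _0) = \widetilde{\mathbf {Z}}_{\lambda \lambda }(\lambda _0) = 0 \quad \text{ and }\quad \widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0) \ne 0. \end{aligned}$$(6.1)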
The derivatives in (6.1) can be computed from F using implicit differentiation, as is standard in bifurcation theory.

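Let \(J=(DF)(\mathbf {X}_0,\lambda _0)\). Differentiate \(F(\widetilde{\mathbf {Y}}(\lambda ),\widetilde{\mathbf {Z}}(\lambda ),\lambda )\equiv 0\) with respect to \(\lambda \) to get

$$\begin{aligned} J\begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ \widetilde{\mathbf {Z}}_\lambda \end{pmatrix} = -F_\lambda \end{aligned}$$(6.2)

A second implicit differentiation at a point where \(\widetilde{\mathbf {Z}}_\lambda =0\) then yields

$$\begin{aligned} J\begin{pmatrix} \widetilde{\mathbf {Y}}_{\lambda \lambda } \\ \widetilde{\mathbf {Z}}_{\lambda \lambda } \end{pmatrix} = -\left( (D^2F)\left( \begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ 0\end{pmatrix}, \begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ 0\end{pmatrix}\right) + 2(DF_\lambda )\begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ 0\end{pmatrix} + F_{\lambda \lambda }\right) \end{aligned}$$(6.3)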
evaluated at \((\mathbf {Y}_0,\mathbf {Z}_0,\lambda _0)\). The third derivative \(\widetilde{\mathbf {Z}}_{\lambda \lambda \lambda }(\lambda _0)\) is generally nonzero. We do not derive the formula, but in any particular application this derivative can in principle be computed using implicit differentiation.

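For an important special class of equations occurring in biological applications, the formulas (6.2) and (6.3) simplify. Suppose that the first component of the equation is

$$\begin{aligned} \dot{x}_1 = f_1(\mathbf {X}) + \lambda \end{aligned}$$(6.4)

and that all other components of the equation are independent of \(\lambda \). Suppose also that the output variable is the last variable \(x_n\). Assumption (6.4) implies that \(F_\lambda = e_1\) where \(e_1=(1,0,\ldots ,0)^\mathrm {T}\), \(F_{\lambda \lambda }=0\), and \(DF_\lambda =0\). Hence

$$\begin{aligned} J\begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ \widetilde{\mathbf {Z}}_\lambda \end{pmatrix} = -e_1 \end{aligned}$$(6.5)

and

$$\begin{aligned} J\begin{pmatrix} \widetilde{\mathbf {Y}}_{\lambda \lambda } \\ \widetilde{\mathbf {Z}}_{\lambda \lambda } \end{pmatrix} = -(D^2F)\left( \begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ 0\end{pmatrix}, \begin{pmatrix} \widetilde{\mathbf {Y}}_\lambda \\ 0\end{pmatrix}\right) . \end{aligned}$$(6.6)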
Lemma 6.1

Let B be the \((n-1)\times (n-1)\) submatrix of J obtained by deleting its first row and last column. Then \(\lambda _0\) is a homeostasis point if and only if \(\det B = 0\).

Proof

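We can use Cramer’s rule to solve (6.5) for \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0)\); note that J is invertible because the equilibrium is linearly stable. Replacing the last column of J by \(-e_1\) and expanding along that column gives

$$\begin{aligned} \widetilde{\mathbf {Z}}_\lambda (\lambda _0) = (-1)^n\,\frac{\det B}{\det J}. \end{aligned}$$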
Hence, \(\lambda _0\) is a homeostasis point if and only if \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0)=0\), which occurs if and only if \(\det (B) = 0\). \(\square \)
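Lemma 6.1 turns the search for homeostasis points into a determinant condition that is easy to evaluate. A minimal sketch, assuming the special form (6.4) so that (6.5) holds: Cramer's rule, with the last column of J replaced by \(-e_1\) and expanded along that column, gives \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0) = (-1)^n \det B/\det J\). The helpers `det` and `z_lambda` are hypothetical names, and plain Gaussian elimination stands in for a linear-algebra library.

```python
def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [list(row) for row in m]
    n = len(a)
    sign, result = 1.0, 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            sign = -sign
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return sign * result


def z_lambda(jac):
    """Z_lambda(lambda_0) = (-1)**n det(B) / det(J) under assumption (6.4).

    B is J with its first row and last column deleted.
    """
    n = len(jac)
    b = [row[:-1] for row in jac[1:]]
    return (-1) ** n * det(b) / det(jac)
```

For the lower-triangular (feed-forward) Jacobian `[[-1, 0, 0], [2, -1, 0], [-2, 1, -1]]` we get \(\det B = 0\), so `z_lambda` returns 0 and such a point would be a homeostasis point; changing the last row to `[1, 0.5, -1]` gives \(\widetilde{\mathbf {Z}}_\lambda = 2\).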

Remark 6.2

Suppose that a system of ODEs, depending on an auxiliary parameter \(\mu \) (in addition to the input parameter \(\lambda \)), leads to a chair point at \(\mu =\mu _0\). Then the input–output function \(\widetilde{\mathbf {Z}}(\lambda ;\mu )\) depends on \(\mu \), and that function factors through, as in (4.6), the universal unfolding \(\pm \lambda ^3+a\lambda \) for a chair point. A standard question in singularity theory is: When is \(\widetilde{\mathbf {Z}}(\lambda ;\mu )\) a universal unfolding of \(\widetilde{\mathbf {Z}}(\lambda ;\mu _0)\)? The answer in this case is straightforward (and expected); namely, when

$$\begin{aligned} \widetilde{\mathbf {Z}}_{\lambda \mu }(\lambda _0;\mu _0) \ne 0. \end{aligned}$$
We apply Lemma 6.1 to a biological example, with network dynamics, in Sect. 8.

7 Admissible vector fields

We now examine the above ideas in a specialized context, networks of dynamical systems. Networks are common in applications, especially to biochemistry, and the interplay between network topology and homeostasis is of some interest. We begin by clarifying what we mean by a network of dynamical systems, using the formalism of Golubitsky and Stewart (2006); Golubitsky et al. (2005) and Stewart et al. (2003).

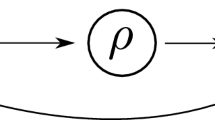

A central concept of network theory, in this approach, is that of an admissible vector field, or more simply an admissible map. Informally, this is a vector field whose structure encodes the network topology, by associating a variable with each cell and associating coupling terms between these cell variables with the arrows (directed edges) of the network. The corresponding class of ODEs, which we call admissible, determines the possible dynamical properties of the network.

This formalism defines a network to be a directed graph, whose nodes represent state variables and whose arrows represent couplings. Two nodes have the same node symbol if their associated state spaces are identical, and two arrows have the same arrow symbol if the coupling formulas are identical, once corresponding variables are substituted. Simple examples make the idea clear; formal definitions are in Golubitsky et al. (2005) and Stewart et al. (2003).

Examples of regular networks, where all nodes are the same and all arrows are the same, are shown in Fig. 4. We describe the corresponding classes of admissible ODEs.

The admissible ODEs associated with the networks in Fig. 4 have, respectively, the forms:

where \(x_j\in \mathbb {R}^k\) and \(f:\mathbb {R}^k\times \mathbb {R}^k\rightarrow \mathbb {R}^k\) is any smooth (infinitely differentiable) function. Of course networks need not be regular. Figure 5 (left) is an example of a three-node network with two node types and three arrow types. The associated admissible ODEs have the form:

for appropriate smooth functions f, g.

In previous work (Golubitsky and Stewart 2006; Golubitsky et al. 2005; Stewart et al. 2003), we used identical nodes and arrows to classify robust synchrony, phase-shift synchrony, and bifurcations in admissible ODEs for networks. This theory was generally aimed at applications in mathematical neuroscience, where identical nodes and arrows often make sense, as do higher-dimensional phase spaces (\(k>1\)). However, most biochemical networks are special in this context: node types are usually different (they correspond to different chemical compounds, which implies distinct nodal equations), node phase spaces are one-dimensional (since nodes represent the concentration of a chemical compound), and different arrows usually have different types (they correspond to different reaction rates).

Figure 5 (right) is an example of a four-node network in which all nodes are distinct and all arrows are different. The associated admissible maps are:

In fact, it is easy to specify the class of admissible maps on fully inhomogeneous networks. It consists of maps \(F=(f_1,\ldots ,f_n)\) where \(f_i\) depends only on \(x_i\) and on those \(x_j\) for which an arrow connects node j to node i. The only special assumption we make about the admissible systems in this paper is that the internal phase spaces are one-dimensional (\(k=1\)). Beginning in Sect. 9, we assume that the networks are fully inhomogeneous.
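For fully inhomogeneous networks with one-dimensional nodes, admissibility is therefore a sparsity pattern on the Jacobian. A minimal sketch, assuming nodes are numbered 1 to n and arrows are given as pairs `(j, i)` meaning \(j\rightarrow i\); the function names are illustrative, and checking the zero pattern of one numerical Jacobian is only a necessary condition for the map F itself to be admissible.

```python
def admissible_pattern(n, arrows):
    """Variables each nodal function f_i may depend on (nodes numbered 1..n).

    f_i may depend on x_i itself and on x_j for every arrow j -> i.
    """
    deps = {i: {i} for i in range(1, n + 1)}
    for j, i in arrows:  # arrow from node j to node i
        deps[i].add(j)
    return deps


def is_admissible_jacobian(jac, arrows):
    """Check that a Jacobian vanishes wherever the network forbids coupling."""
    n = len(jac)
    deps = admissible_pattern(n, arrows)
    return all(
        jac[i - 1][j - 1] == 0
        for i in range(1, n + 1)
        for j in range(1, n + 1)
        if j not in deps[i]
    )
```

With the arrows \(1\rightarrow 2\), \(1\rightarrow 3\), \(2\rightarrow 3\) of the feed-forward network in Sect. 8, \(f_1\) may depend only on \(x_1\), \(f_2\) on \(x_1, x_2\), and \(f_3\) on all three variables, so admissible Jacobians are lower triangular.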

If j is a node, we call \(\dot{x}_j = f_j(\mathbf {X}, \lambda )\), or just \(f_j(\mathbf {X}, \lambda )\), the nodal equation for j.

8 Homeostasis caused by feed-forward inhibition

We now apply our reformulation of homeostasis in terms of singularities to a biological model. Our methods make it possible to carry out a systematic search of a given model, to find parameter values at which homeostasis points or chair points occur.

Feed-forward inhibition (often called lateral inhibition) is an important concept in a variety of physiological systems (Nijhout et al. 2014; Savageau and Jacknow 1979). We explain it briefly in a moment. Nijhout et al. (2014, Sect. 2.3) give an example of an admissible system of ODEs for a three-node feed-forward network that models feed-forward inhibition and exhibits homeostasis. In their paper, the nodes of Fig. 6 represent neurons. Connections \(1 \rightarrow 2\) and \(1 \rightarrow 3\) are excitatory, but \(2 \rightarrow 3\) is inhibitory. Therefore, when node 1 fires, it sends an excitatory signal to node 3, but this is in competition with an inhibitory signal from node 2. Nijhout et al. (2014) mention three applications of this model: simultaneity detection (Kremkow et al. 2010), synchrony decoding (Patel and Reed 2013), and explaining homeostasis and plasticity in the developing nervous system (Turrigiano and Nelson 2004).

We show how our techniques make finding homeostasis points and chair points in this network straightforward. Admissible ODEs for Fig. 6 have the form

$$\begin{aligned} \dot{x}&= f(x,\lambda ) \\ \dot{y}&= g(x,y) \\ \dot{z}&= h(x,y,z) \end{aligned} \tag{8.1}$$

for arbitrary functions f, g, h defined on the appropriate spaces. (The only special feature at this stage is that we also introduce \(\lambda \) as an input parameter, adding it to the first nodal equation.) Suppose that \(\mathbf {X}_0= (\tilde{x}_0,\tilde{y}_0,\tilde{z}_0)\) is a linearly stable equilibrium of (8.1) at \(\lambda =\lambda _0\). The Jacobian of (8.1) at any \(\mathbf {X}\in \mathbb {R}^3\) is

$$J = \begin{bmatrix} f_x & 0 & 0\\ g_x & g_y & 0\\ h_x & h_y & h_z \end{bmatrix}.$$

Since J is lower triangular, its eigenvalues are its diagonal entries, so the equilibrium \(\mathbf {X}_0\) is linearly stable if and only if

$$f_x<0, \qquad g_y<0, \qquad h_z<0 \tag{8.2}$$

at \(\mathbf {X}_0\). The implicit function theorem then implies that for \(\lambda \) near \(\lambda _0\) there is a unique family of equilibria \(\widetilde{\mathbf {X}}(\lambda ) = (x(\lambda ),y(\lambda ),z(\lambda ))\) near \(\mathbf {X}_0\) with \(\widetilde{\mathbf {X}}(\lambda _0)=\mathbf {X}_0\).

If, more strongly, we assume that the inequalities (8.2) hold everywhere, then \((\tilde{x}_0,\tilde{y}_0,\tilde{z}_0)\) is the unique equilibrium of (8.1) at \(\lambda =\lambda _0\). Indeed, since \(f_x<0\), \(f(x,\lambda _0)\) is strictly decreasing in x, so \(\tilde{x}_0\) is its unique zero. Since \(g_y<0\), \(g(\tilde{x}_0,y)\) is strictly decreasing in y, and hence \(\tilde{y}_0\) is unique. Finally, since \(h_z<0\), \(h(\tilde{x}_0,\tilde{y}_0,z)\) is strictly decreasing in z, so \(\tilde{z}_0\) is unique.

We use Lemma 6.1 to determine whether homeostasis is possible. Note that

and

By Lemma 6.1, homeostasis is possible in the third coordinate of \(\mathbf {X}_0\) if and only if \(\det (B)=0\).
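The linear-algebra condition can be illustrated by implicit differentiation. Differentiating \(F(\widetilde{\mathbf {X}}(\lambda ),\lambda )=0\) for (8.1) gives \(J\,\widetilde{\mathbf {X}}_\lambda = -(f_\lambda ,0,0)^T\), and Cramer's rule then yields \(z_\lambda = -f_\lambda (g_xh_y-g_yh_x)/(f_xg_yh_z)\), so (assuming \(f_\lambda \ne 0\)) homeostasis in z requires the \(2\times 2\) determinant \(g_xh_y-g_yh_x\) to vanish. This is our own computation; we do not claim it reproduces the exact form of B in Lemma 6.1. The Python sketch below solves the system numerically for user-supplied partial derivatives; the helper names and numerical values are hypothetical, chosen so that \(g_xh_y-g_yh_x=0\).

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def equilibrium_derivative(fx, fl, gx, gy, hx, hy, hz):
    """Solve J * X_lambda = -F_lambda by Cramer's rule for the system (8.1)."""
    J = [[fx, 0.0, 0.0],
         [gx, gy, 0.0],
         [hx, hy, hz]]
    rhs = [-fl, 0.0, 0.0]          # -F_lambda, with F_lambda = (f_lambda, 0, 0)
    d = det3(J)                    # equals fx * gy * hz (lower triangular)
    sol = []
    for col in range(3):
        Jc = [row[:] for row in J]
        for r in range(3):
            Jc[r][col] = rhs[r]    # replace column `col` by the right-hand side
        sol.append(det3(Jc) / d)
    return sol  # [x_lambda, y_lambda, z_lambda]


# Illustrative values with gx*hy - gy*hx = 2*1 - (-1)*(-2) = 0 and (8.2) satisfied.
xl, yl, zl = equilibrium_derivative(fx=-1.0, fl=1.0, gx=2.0, gy=-1.0,
                                    hx=-2.0, hy=1.0, hz=-1.0)
print(xl, yl, zl)
```

Here x and y both respond to \(\lambda \) (\(x_\lambda =1\), \(y_\lambda =2\)), yet the two contributions to \(z_\lambda \) cancel exactly.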

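Nijhout et al. (2014) consider model equations for lateral inhibition that are admissible for this network. Their models have the specific form

$$\begin{aligned} \dot{x}&= \lambda - x \\ \dot{y}&= \sigma (x) - y \\ \dot{z}&= \tau (x-y) - z \end{aligned} \tag{8.4}$$

where \(\sigma \) and \(\tau \) are given functions. In Nijhout et al. (2014) these functions are piecewise smooth; here we assume that they are smooth sigmoidal-like functions. In particular they are positive and have positive derivative everywhere. The equilibrium \(\mathbf {X}(\lambda )\) is given by

$$\mathbf {X}(\lambda ) = \bigl (\lambda ,\ \sigma (\lambda ),\ \tau (\lambda -\sigma (\lambda ))\bigr ) \tag{8.5}$$

which is linearly stable, because the Jacobian of (8.4) is lower triangular with every diagonal entry equal to \(-1\).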

Lemma 8.1

Assume that \(\tau \) in (8.4) is monotone, with \(\tau _\lambda \) nowhere zero. Then

(a) There is a homeostasis point in the z-variable at \(\lambda _0\) if \(\sigma _\lambda (\lambda _0) = 1\).

(b) There is a chair point in the z-variable if in addition \(\sigma _{\lambda \lambda }(\lambda _0)=0\) and \(\sigma _{\lambda \lambda \lambda }(\lambda _0) \ne 0\).

(c) Suppose \(\sigma \) depends on an additional parameter \(\mu \). Then (8.4) is a universal unfolding of the chair if \(\sigma _{\lambda \mu }(\lambda _0) \ne 0\).

Proof

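By (8.5), \(z(\lambda ) = \tau (\lambda -\sigma (\lambda ))\), so

$$z_\lambda (\lambda ) = \tau _\lambda (\lambda -\sigma (\lambda ))\,\bigl (1-\sigma _\lambda (\lambda )\bigr ).$$

Since \(\tau _\lambda \) is nowhere zero, homeostasis occurs if and only if \(\sigma _\lambda (\lambda _0)=1\). Next,

$$z_{\lambda \lambda }(\lambda ) = \tau _{\lambda \lambda }(\lambda -\sigma (\lambda ))\,\bigl (1-\sigma _\lambda (\lambda )\bigr )^2 - \tau _\lambda (\lambda -\sigma (\lambda ))\,\sigma _{\lambda \lambda }(\lambda ).$$

Since \(\sigma _\lambda (\lambda _0)= 1\), it follows that

$$z_{\lambda \lambda }(\lambda _0) = -\tau _\lambda (\lambda _0-\sigma (\lambda _0))\,\sigma _{\lambda \lambda }(\lambda _0).$$

Again, since \(\tau _\lambda \) is nonzero, \(z_{\lambda \lambda }(\lambda _0)=0\) if and only if \(\sigma _{\lambda \lambda }(\lambda _0)=0\). Next, when \(\sigma _\lambda (\lambda _0)=1\) and \(\sigma _{\lambda \lambda }(\lambda _0)=0\),

$$z_{\lambda \lambda \lambda }(\lambda _0) = -\tau _\lambda (\lambda _0-\sigma (\lambda _0))\,\sigma _{\lambda \lambda \lambda }(\lambda _0),$$

and \(\lambda _0\) is a chair point when \(\sigma _{\lambda \lambda \lambda }(\lambda _0)\ne 0\). Finally, if \(\sigma \) depends on a parameter \(\mu \), then \(\mu \) is a universal unfolding parameter for the chair if (6.7) is valid; that is, \(z_{\lambda \mu }(\lambda _0)\ne 0\). Since \(\sigma _\lambda (\lambda _0)=1\),

$$z_{\lambda \mu }(\lambda _0) = -\tau _\lambda (\lambda _0-\sigma (\lambda _0))\,\sigma _{\lambda \mu }(\lambda _0),$$

so this happens precisely when \(\sigma _{\lambda \mu }(\lambda _0)\ne 0\). \(\square \)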
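Lemma 8.1 can be checked numerically for a concrete choice of \(\sigma \) and \(\tau \). The functions below are hypothetical, not those of Nijhout et al. (2014): \(\sigma (\lambda ,\mu ) = (4+\mu )/(1+e^{-\lambda })\) satisfies \(\sigma _\lambda (0)=1\), \(\sigma _{\lambda \lambda }(0)=0\) and \(\sigma _{\lambda \lambda \lambda }(0)=-1/2\) when \(\mu =0\), and \(\sigma _{\lambda \mu }(0)=1/4\); \(\tau \) is the logistic function. The sketch assumes the equilibrium formula \(z=\tau (\lambda -\sigma (\lambda ))\), uses the exact derivative \(z_\lambda =\tau _\lambda (\lambda -\sigma )(1-\sigma _\lambda )\), and estimates higher derivatives by finite differences.

```python
import math


def logistic(u):
    return 1.0 / (1.0 + math.exp(-u))


def sigma(lam, mu=0.0):
    """Hypothetical sigma: slope 1 at its inflection point lambda = 0 when mu = 0."""
    return (4.0 + mu) * logistic(lam)


def tau(u):
    """Hypothetical tau: positive with positive derivative."""
    return logistic(u)


def z(lam, mu=0.0):
    """Equilibrium z-coordinate, assuming z = tau(lambda - sigma(lambda))."""
    return tau(lam - sigma(lam, mu))


def z_lambda(lam, mu=0.0):
    """Exact z_lambda = tau'(lambda - sigma) * (1 - sigma_lambda) for these choices."""
    s = logistic(lam)
    sigma_l = (4.0 + mu) * s * (1.0 - s)      # sigma_lambda
    t = tau(lam - sigma(lam, mu))
    return t * (1.0 - t) * (1.0 - sigma_l)    # tau' = tau * (1 - tau) for the logistic


def count_homeostasis_points(mu, lo=-5.0, hi=5.0, steps=2001):
    """Count sign changes of z_lambda on a uniform grid (misses tangential zeros)."""
    grid = [lo + k * (hi - lo) / (steps - 1) for k in range(steps)]
    vals = [z_lambda(lam, mu) for lam in grid]
    return sum(1 for a, b in zip(vals, vals[1:]) if a * b < 0)


h = 1e-3
z2 = (z_lambda(h) - z_lambda(-h)) / (2 * h)                   # approximates z_lambda_lambda(0)
z3 = (z_lambda(h) - 2 * z_lambda(0.0) + z_lambda(-h)) / h**2  # approximates z_lambda_lambda_lambda(0)
print(z_lambda(0.0), z2, z3)
print([count_homeostasis_points(mu) for mu in (-0.5, 0.5)])   # [0, 2]
```

With these choices \(\lambda _0=0\) is a chair point, with \(z_{\lambda \lambda \lambda }(0)=\tfrac{1}{2}\tau _\lambda (-2)>0\). For \(\mu <0\) there are no homeostasis points, while for \(\mu >0\) there are two (for \(\mu =1/2\) they lie at \(\lambda =\pm \ln 2\)), mirroring the unfolding \(\lambda ^3+a\lambda \) as a changes sign. The grid search counts sign changes only, so the tangential zero at \(\mu =0\) is checked directly by evaluating \(z_\lambda (0)\).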

We return to this example at the end of Sect. 10, after discussing invariance of homeostasis under coordinate changes.

9 Changes of coordinates revisited

One of the starting points for this paper was the realization that in certain networks, but not all networks, \(\mathbf {Z}\)-homeostasis is an invariant of network-preserving changes of coordinates.

In Sect. 4 we discussed how certain changes of coordinates in the admissible map F (4.1) changed the input–output function \(\widetilde{\mathbf {Z}}(\lambda )\). Specifically, we considered changes of coordinates \(\Lambda (\lambda )\) in the input parameter and the constant shift \(F(X,\lambda )\mapsto F(X-K,\lambda )\).

These coordinate changes preserve network structure, in the sense that they map admissible maps to admissible maps, provided \(\kappa _i=\kappa _j\) whenever admissibility requires \(f_i=f_j\). Regardless of the network, \(\kappa _i\in \mathbb {R}\) can be chosen arbitrarily for any specific node i. However, other types of coordinate change can also preserve network structure. The main new ingredient is to consider, in addition, right equivalence by a diffeomorphism \(\Phi :\mathbb {R}^n\rightarrow \mathbb {R}^n\), namely,

$$G(\mathbf {X},\lambda ) = F(\Phi (\mathbf {X}),\lambda ). \tag{9.1}$$

Proposition 9.1

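The right equivalence (9.1) transforms the family of equilibria \(\widetilde{\mathbf {X}}(\lambda )\) of F into the family \(\Phi ^{-1}(\widetilde{\mathbf {X}}(\lambda ))\) of equilibria of G; that is, it induces the left equivalence

$$\widetilde{\mathbf {X}}(\lambda ) \mapsto \Phi ^{-1}\bigl (\widetilde{\mathbf {X}}(\lambda )\bigr ) \tag{9.2}$$

on input–output functions.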

Proof

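The equation \(G=0\) reduces to \(F(\Phi (\mathbf {X}),\lambda )=0\). Now compute

$$G\bigl (\Phi ^{-1}(\widetilde{\mathbf {X}}(\lambda )),\lambda \bigr ) = F\bigl (\widetilde{\mathbf {X}}(\lambda ),\lambda \bigr ) = 0,$$

where the last equality follows from (3.2). \(\square \)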

In general, the equivalence (9.1) does not preserve homeostasis for \(\widetilde{\mathbf {Z}}(\lambda )\), because \(\Phi \) need not preserve the \(\mathbf {Z}\) variable. For example, if \(n=2\), \(\mathbf {Y}= x_1\), \(\mathbf {Z}=x_2\), and

$$\widetilde{\mathbf {X}}(\lambda ) = (\lambda ,\lambda ^2),$$

then \(\mathbf {Z}\) has a simple homeostasis point at \(\lambda _0 = 0\). However, under the coordinate change \((x_1, x_2) \mapsto (x_1+ x_2,x_1-x_2)\) this map becomes \((\lambda +\lambda ^2,\lambda -\lambda ^2)\), which has derivative (1, 1) at \(\lambda _0 = 0\); in particular the second coordinate does not exhibit homeostasis near 0. More generally, suppose some coordinate \(x_i\) of \(\widetilde{\mathbf {Y}}(\lambda )\) has a nonzero \(\lambda \)-derivative at the origin. Then setting \(\Phi (\mathbf {Y},\mathbf {Z})=(\mathbf {Y},x_i-x_n)\) destroys \(\mathbf {Z}\)-homeostasis at \(\lambda _0=0\).

However, homeostasis of \(\mathbf {Z}\) is preserved if \(\Phi \) has a special form:

Theorem 9.2

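Suppose that \(\Phi \) preserves the variable \(\mathbf {Z}\), in the sense that there are maps \(\Theta ,\Psi \) such that

$$\Phi (\mathbf {Y},\mathbf {Z}) = \bigl (\Psi (\mathbf {Y},\mathbf {Z}),\ \Theta (\mathbf {Z})\bigr ). \tag{9.3}$$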

Then homeostasis for \(\widetilde{\mathbf {Z}}(\lambda )\) is preserved.

Proof

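By (9.2), and since the \(\mathbf {Z}\)-component of \(\Phi ^{-1}\) is \(\Theta ^{-1}(\mathbf {Z})\), the input–output map \(\widetilde{\mathbf {Z}}(\lambda )\) transforms to

$$\Theta ^{-1}\bigl (\widetilde{\mathbf {Z}}(\lambda )\bigr ).$$

Here \(\Theta \) is a local diffeomorphism, because \(D\Phi \) is block triangular and invertible. By the chain rule, the \(\lambda \)-derivative of the transformed map is \(D\Theta ^{-1}(\widetilde{\mathbf {Z}}(\lambda ))\,\widetilde{\mathbf {Z}}_\lambda (\lambda )\), which vanishes at \(\lambda _0\) if and only if \(\widetilde{\mathbf {Z}}_\lambda (\lambda _0)=0\). Hence homeostasis for \(\widetilde{\mathbf {Z}}(\lambda )\) is preserved. \(\square \)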
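The contrast between the counterexample and Theorem 9.2 can be seen numerically. The Python sketch below applies two coordinate changes directly to \(\widetilde{\mathbf {X}}(\lambda )=(\lambda ,\lambda ^2)\), as the example in the text does, and estimates derivatives at \(\lambda =0\) by central differences. The mixing map is the one from the text; the \(\mathbf {Z}\)-preserving map, with \(\Psi (x_1,x_2)=x_1+x_2^3\) and \(\Theta (x_2)=2x_2+x_2^2\), is a hypothetical example of the form (9.3) that is a local diffeomorphism near the origin.

```python
def X_tilde(lam):
    """Input-output data of the example: Y = x1 = lambda, Z = x2 = lambda**2."""
    return (lam, lam ** 2)


def mix(x1, x2):
    """Coordinate change from the text; it does not preserve the Z variable."""
    return (x1 + x2, x1 - x2)


def z_preserving(x1, x2):
    """Hypothetical change of the form (Psi(Y, Z), Theta(Z)) with Theta(Z) = 2Z + Z**2."""
    return (x1 + x2 ** 3, 2 * x2 + x2 ** 2)


def derivative_at_zero(curve, h=1e-6):
    """Central-difference derivative at lambda = 0 of each coordinate of curve(lambda)."""
    plus, minus = curve(h), curve(-h)
    return tuple((p - m) / (2 * h) for p, m in zip(plus, minus))


d_orig = derivative_at_zero(X_tilde)
d_mix = derivative_at_zero(lambda lam: mix(*X_tilde(lam)))
d_pres = derivative_at_zero(lambda lam: z_preserving(*X_tilde(lam)))
print("original:", d_orig)
print("mixed:", d_mix)
print("Z-preserving:", d_pres)
```

The second component of the mixed map has derivative close to 1, so homeostasis is lost, while the second component of the \(\mathbf {Z}\)-preserving map still has derivative 0.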

Theorems 4.1c and 9.2 show that homeostasis is an invariant of general network-preserving contact equivalences if network-preserving right equivalences \(\Phi \) always satisfy (9.3). We show in Sect. 10 that the special form of \(\Phi \) in (9.3) often arises naturally on a fully inhomogeneous network. Therefore in the network context there are special circumstances in which the variables in \(\mathbf {Z}\) are preserved. More precisely, we restrict the form of \(\Phi \) so that the corresponding coordinate change preserves the network structure. This topic is investigated at length in Golubitsky and Stewart (2015), for several different types of coordinate change.

10 Right network preserving diffeomorphisms

Let \(F: \mathbb {R}^n \rightarrow \mathbb {R}^n\) be a smooth map, and let \(\Phi : \mathbb {R}^n \rightarrow \mathbb {R}^n\) be a diffeomorphism. The right action of \(\Phi \) transforms F into

$$G = F\Phi , \qquad \text{that is,} \qquad G(\mathbf {X}) = F(\Phi (\mathbf {X})).$$

Definition 10.1

We say that \(\Phi \) is right network-preserving if G is admissible whenever F is admissible.

Theorem 10.4 classifies all right network-preserving diffeomorphisms combinatorially, in terms of the network architecture. From now on we assume that the network is fully inhomogeneous.

Definition 10.2

The extended input set J(i) of node i is the set of all j such that either \(j=i\) or there exists an arrow connecting node j to node i. The extended output set O(i) is the set of all j such that either \(j=i\) or there exists an arrow connecting node i to node j.

Define

$$R(i) = \{\, j\in J(i) : O(i)\subseteq O(j)\,\}$$

and

$$\bar{R}(i) = \bigcap _{m\in O(i)} J(m).$$

Lemma 10.3

\(\bar{R}(i)=R(i)\).

Proof

If \(j\in \bar{R}(i)\) then \(j\in J(m)\) for every \(m\in O(i)\); in particular, since \(i\in O(i)\), we have \(j\in J(i)\). Moreover, \(j\in J(m)\) means that \(m\in O(j)\), so \(m\in O(j)\) for every \(m\in O(i)\). This implies \(O(i)\subseteq O(j)\), and hence \(j\in R(i)\). Conversely, suppose \(j\in R(i)\). Then \(j\in J(i)\) and \(O(i)\subseteq O(j)\). It follows that if \(m\in O(i)\), then \(m\in O(j)\); that is, \(j\in J(m)\). So \(j\in \bar{R}(i)\). \(\square \)

Theorem 10.4

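Assume the network is fully inhomogeneous. A diffeomorphism \(\Phi = (\phi _1, \ldots , \phi _n)\) is right network-preserving if and only if each \(\phi _j\) depends only on the variables \(x_k\) with \(k\in R(j)\), that is,

$$\phi _j(\mathbf {X}) = \phi _j(x_{R(j)}) \qquad (j=1,\ldots ,n). \tag{10.3}$$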

Proof

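Suppose \(\Phi \) is right network-preserving. We capture the restrictions on \(\Phi =(\phi _1,\ldots ,\phi _n)\) as follows. First, let V(k) be the set of indices of the variables on which \(\phi _k\) depends. Fix j and let \(i \in O(j)\). We claim that \(V(j)\subseteq J(i)\). Since i is an arbitrary element of O(j), it then follows from Lemma 10.3 that

$$V(j)\subseteq \bigcap _{i\in O(j)} J(i) = \bar{R}(j) = R(j),$$

which is (10.3). It remains to prove the claim.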

Let \(F = [f_1, \ldots , f_n]\) where \(f_k(x) = 0\) when \(k \ne i\) and \(f_i(x) = x_j\). Since \(j\in J(i)\), F is admissible; so \(G=F\Phi \) is also admissible. Writing \(G = [g_1, \ldots ,g_n]\), it follows that \(g_i(x) = \phi _j(x)= \phi _j(x_{V(j)})\) and \(g_k(x) = 0\) when \(k \ne i\). In order for G to be admissible we must have \(V(j)\subset J(i)\).

Conversely, let \(\Phi \) be a diffeomorphism satisfying (10.3) and let \(F=[f_1, \ldots , f_n]\) be admissible. Then let

$$G = F\Phi = [g_1,\ldots ,g_n], \qquad g_i(\mathbf {X}) = f_i(\Phi (\mathbf {X})).$$

We need to show that G is admissible; that is, we need to show that \(g_i(x)\) depends only on variables in J(i).

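Since \(f_i\) depends only on variables in J(i), we have \(g_i(x) = f_i(\phi _{J(i)}(x))\), where \(\phi _{J(i)}\) denotes the components \(\phi _j\) with \(j\in J(i)\). By (10.3) and Lemma 10.3, each \(\phi _j\) depends only on variables in \(\bar{R}(j)\), so \(g_i\) can depend on a variable \(x_k\) only if \(k\in \bar{R}(j)\) for some \(j\in J(i)\) (equivalently, for some j with \(i\in O(j)\)). Since \(i\in O(j)\), the definition of \(\bar{R}(j)\) gives \(\bar{R}(j)\subseteq J(i)\). Therefore \(k\in J(i)\), and \(G=F\Phi \) is admissible. \(\square \)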
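The combinatorial content of Theorem 10.4 can be tested by brute force on small networks. The Python sketch below is our illustration under a stated assumption: dependence is treated generically, so for admissible F the component \(g_i=f_i(\Phi (\mathbf {X}))\) is taken to depend on every variable used by some \(\phi _j\) with \(j\in J(i)\). It enumerates every choice of dependency sets V(j) and checks that G is admissible exactly when \(V(j)\subseteq R(j)\) for all j, with \(R(j)=\{k\in J(j): O(j)\subseteq O(k)\}\) as in the proof of Lemma 10.3. Invertibility of \(\Phi \) is not checked, and the function names are hypothetical.

```python
from itertools import combinations, product


def extended_sets(n, arrows):
    """J(i) and O(i) from Definition 10.2; arrows are pairs (j, i) meaning j -> i."""
    J = {i: {i} for i in range(1, n + 1)}
    O = {i: {i} for i in range(1, n + 1)}
    for j, i in arrows:
        J[i].add(j)
        O[j].add(i)
    return J, O


def R_sets(n, arrows):
    """R(i) = { j in J(i) : O(i) is a subset of O(j) }."""
    J, O = extended_sets(n, arrows)
    return {i: {j for j in J[i] if O[i] <= O[j]} for i in range(1, n + 1)}


def composite_is_admissible(n, arrows, V):
    """Generic dependence: g_i uses every x_k with k in V(j) for some j in J(i)."""
    J, _ = extended_sets(n, arrows)
    return all(set().union(*(V[j] for j in J[i])) <= J[i] for i in range(1, n + 1))


def check_theorem(n, arrows):
    """Compare admissibility of G = F(Phi) with the criterion V(j) <= R(j), for all patterns V."""
    R = R_sets(n, arrows)
    nodes = list(range(1, n + 1))
    all_subsets = [set(c) for r in range(n + 1) for c in combinations(nodes, r)]
    for choice in product(all_subsets, repeat=n):
        V = dict(zip(nodes, choice))
        criterion = all(V[j] <= R[j] for j in nodes)
        if composite_is_admissible(n, arrows, V) != criterion:
            return False
    return True


print(check_theorem(3, [(1, 2), (1, 3), (2, 3)]))  # feed-forward network of Fig. 6
print(check_theorem(3, [(1, 2), (2, 3), (3, 1)]))  # a three-node cycle, for comparison
```

Both calls should report agreement, since the argument in the proof is purely set-theoretic once dependence is assumed generic.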

Corollary 10.5

Let i be a node and choose \(\mathbf {Z}= x_i\). Then \(\mathbf {Z}\)-homeostasis is an invariant of right network-preserving diffeomorphisms if and only if \(R(i)=\{i\}\).
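Corollary 10.5 reduces invariance of homeostasis to computing the sets R(i), which is easy to automate. The Python sketch below (hypothetical helper names; arrows given as pairs (j, i) for j → i) computes \(R(i)=\{j\in J(i): O(i)\subseteq O(j)\}\) and \(\bar{R}(i)=\bigcap _{m\in O(i)}J(m)\), as characterized in the proof of Lemma 10.3, confirms that they agree on an example, and lists the nodes with \(R(i)=\{i\}\). Applied to the feed-forward network of Fig. 6 it reproduces the conclusion of Example 10.7.

```python
def extended_sets(n, arrows):
    """Extended input sets J(i) and output sets O(i) (Definition 10.2)."""
    J = {i: {i} for i in range(1, n + 1)}
    O = {i: {i} for i in range(1, n + 1)}
    for j, i in arrows:
        J[i].add(j)
        O[j].add(i)
    return J, O


def R(n, arrows):
    J, O = extended_sets(n, arrows)
    return {i: {j for j in J[i] if O[i] <= O[j]} for i in range(1, n + 1)}


def R_bar(n, arrows):
    J, O = extended_sets(n, arrows)
    # O[i] always contains i, so the intersection has at least one argument.
    return {i: set.intersection(*(J[m] for m in O[i])) for i in range(1, n + 1)}


def invariant_nodes(n, arrows):
    """Nodes i for which x_i-homeostasis is invariant (Corollary 10.5: R(i) == {i})."""
    return [i for i, Ri in R(n, arrows).items() if Ri == {i}]


ff = [(1, 2), (1, 3), (2, 3)]   # feed-forward network of Fig. 6 (x, y, z = nodes 1, 2, 3)
print(R(3, ff))                  # {1: {1}, 2: {1, 2}, 3: {1, 2, 3}}
print(R(3, ff) == R_bar(3, ff))  # True (Lemma 10.3)
print(invariant_nodes(3, ff))    # [1]
```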

Example 10.6

Consider the network in Fig. 5. A short computation shows

Hence, for this network, right network-preserving diffeomorphisms have the form

By Corollary 10.5 only \(x_1\)-homeostasis is an invariant of network-preserving changes of coordinates in this example.

Example 10.7

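Consider the three-node network of Fig. 6 in Sect. 8, with nodes 1, 2, 3 carrying the variables x, y, z. It is easy to verify that

$$R(1)=\{1\},\qquad R(2)=\{1,2\},\qquad R(3)=\{1,2,3\},$$

so right network-preserving diffeomorphisms have the form \(\Phi (x,y,z)=(\phi _1(x),\phi _2(x,y),\phi _3(x,y,z))\).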

By Corollary 10.5, homeostasis is an invariant for x but not for y or z.

Nevertheless, the calculation in Sect. 8 shows that homeostasis does occur in the z-variable for the class of models in (8.4). Here, homeostasis in the z-variable can be destroyed by right network-preserving coordinate changes, but the choice of coordinates has a specific biological meaning, so homeostasis in this variable is still a significant phenomenon.

This example shows that the interplay between specific models and the coordinate changes employed in singularity theory is delicate. This issue will be examined in Golubitsky and Stewart (2016) where we develop the theory in greater generality.

References

Bröcker T, Lander L (1975) Differentiable germs and catastrophes. Cambridge University Press, Cambridge

Gibson CG (1979) Singular points of smooth mappings. Pitman, London

Golubitsky M (1978) An introduction to catastrophe theory and its applications. SIAM Rev 20(2):352–387

Golubitsky M, Guillemin V (1973) Stable mappings and their singularities. Springer, New York

Golubitsky M, Schaeffer DG (1985) Singularities and groups in bifurcation theory I, Applied Mathematics Series, vol 51. Springer, New York

Golubitsky M, Stewart I (2006) Nonlinear dynamics of networks: the groupoid formalism. Bull Am Math Soc 43:305–364

Golubitsky M, Stewart I (2015) Coordinate changes for network dynamics (preprint)

Golubitsky M, Stewart I (2016) Homeostasis for multiple inputs and outputs (in preparation)

Golubitsky M, Stewart I, Schaeffer DG (1988) Singularities and groups in bifurcation theory II, Applied Mathematics Series, vol 69. Springer, New York

Golubitsky M, Stewart I, Török A (2005) Patterns of synchrony in coupled cell networks with multiple arrows. SIAM J Appl Dyn Syst 4:78–100

Guckenheimer J, Holmes P (1983) Nonlinear oscillations, dynamical systems, and bifurcations of vector fields. Springer, New York

Kremkow J, Perrinet L, Masson G, Aertsen A (2010) Functional consequences of correlated excitatory and inhibitory conductances in cortical networks. J Comput Neurosci 28:579–594

Martinet J (1982) Singularities of smooth functions and maps. Cambridge University Press, Cambridge

Morrison PR (1946) Temperature regulation in three Central American mammals. J Cell Comp Physiol 27:125–137

Nijhout HF, Best J, Reed MC (2014) Escape from homeostasis. Math Biosci 257:104–110

Nijhout HF, Reed MC (2014) Homeostasis and dynamic stability of the phenotype link robustness and stability. Integr Comp Biol 54:264–275

Nijhout HF, Reed MC, Budu P, Ulrich C (2004) A mathematical model of the Folate cycle—new insights into Folate homeostasis. J Biol Chem 279:55008–55016

Patel M, Reed M (2013) Stimulus encoding within the barn owl optic tectum using gamma oscillations vs. spike rate: a modeling approach. Network: Comput. Neural Syst 24:52–74

Poston T, Stewart I (1978) Catastrophe theory and its applications, surveys and reference works in Math. vol. 2. Pitman, London

Savageau MA, Jacknow G (1979) Feedforward inhibition in biosynthetic pathways: inhibition of the aminoacyl-tRNA synthetase by intermediates of the pathway. J Theor Biol 77:405–425

Stewart I, Golubitsky M, Pivato M (2003) Symmetry groupoids and patterns of synchrony in coupled cell networks. SIAM J Appl Dynam Syst 2:609–646

Turrigiano GG, Nelson SB (2004) Homeostatic plasticity in the developing nervous system. Nat Rev Neurosci 5:97–107

Zeeman EC (1977) Catastrophe theory: selected papers 1972–1977. Addison-Wesley, London

Acknowledgments

We thank Mike Reed and Janet Best for many helpful conversations—in particular for an introduction to the notion of a chair. This research was supported in part by the National Science Foundation Grant DMS-0931642 to the Mathematical Biosciences Institute.


Cite this article

Golubitsky, M., Stewart, I. Homeostasis, singularities, and networks. J. Math. Biol. 74, 387–407 (2017). https://doi.org/10.1007/s00285-016-1024-2
