Abstract

Inability to recognise and/or express effective anti-predator behaviour against novel predators as a result of ontogenetic and/or evolutionary isolation is known as ‘prey naiveté’. Natural selection favours prey species that are able to successfully detect, identify and appropriately respond to predators prior to their attack, increasing their probability of escape and/or avoidance of a predator. However, for many prey species, learning and experience are necessary to develop and perform appropriate anti-predator behaviours. Here, we investigate how a remnant population of bilbies (Macrotis lagotis) in south-west Queensland responded to the scents of two predators, native dingoes (Canis familiaris) and introduced feral cats (Felis catus); a procedural control (rabbits; Oryctolagus cuniculus); and an experimental control (no scent). Bilbies in Queensland have shared more than 8000 years of co-evolutionary history with dingoes and less than 140 years with feral cats and less than 130 years with rabbits. Bilbies spent the greatest proportion of time investigating and the least amount of time digging when cat and dingo/dog faeces were present. Our results show that wild-living bilbies displayed anti-predator responses towards the olfactory cues of both a long-term predator (dingoes) and an evolutionary novel predator (cats). Our findings suggest that native species can develop anti-predator responses towards introduced predators, providing support for the idea that predator naiveté can be overcome through learning and natural selection as a result of exposure to introduced predators.

Significance statement

Not so naïve—As a result of lifetime and/or evolutionary isolation from predators, some prey species appear to be naïve towards introduced predators. This is particularly the case in Australia, where native mammalian species appear to be naïve towards recently introduced predators such as the feral cat and European red fox. In a study of wild-living bilbies, we found that 150 years of co-evolutionary experience is enough to develop predator recognition.

Introduction

The inability to recognise and/or express effective anti-predator behaviour against novel predators as a result of lifetime or evolutionary isolation from predators is known as ‘prey naiveté’ (Goldthwaite et al. 1990; Carthey and Banks 2014). A lack of predator recognition to introduced, novel predators is the most damaging form of naiveté as prey are unable to mount effective anti-predator responses (Cox and Lima 2006; Ferrari et al. 2015).

Natural selection favours prey species that are able to successfully detect, identify and appropriately respond to predators prior to their attack, increasing their probability of escape and/or avoidance of a predator (Monclús et al. 2005). However, not all species or even individuals are able to accurately recognise a predator. Anti-predator behaviour may be innate (genetically based), be learnt through experience or be a combination of the two (Jolly et al. 2018). In many species of mammals (Owings and Owings 1979; Fendt 2006), birds (Göth 2001) and fish (Berejikian et al. 2003), predator recognition is an innate trait. Despite years, decades or even thousands of years of isolation from predators, some prey species retain predator recognition skills of their ancestral predators (Blumstein et al. 2008; Li et al. 2011; Steindler et al. 2018). However, for many other prey species, learning and experience are necessary to properly develop and perform appropriate anti-predator behaviours (Griffin et al. 2000). Prey that are able to alter their behavioural patterns in accordance with their learnt experiences are expected to be at a selective advantage in response to potential predation threats from introduced predators (Maloney and McLean 1995; Kovacs et al. 2012).

The ‘learned recognition’ hypothesis suggests that through lifetime experience with predators, naïve prey may enhance their ability to recognise and respond to predators (Turner et al. 2006; Saul and Jeschke 2015). Failure to recognise and appropriately respond to a predation threat increases the risk of a fatal encounter with predators (Chivers and Smith 1995). As such, prey that are able to alter their behaviour in accordance with learned information are expected to have a greater degree of flexibility in their response to the risk of predation (Brown and Chivers 2005). The development of learnt anti-predator recognition skills towards evolutionary and/or ontogenetically novel predators has been shown in a broad array of taxa including fish (Ferrari 2014), birds (Maloney and McLean 1995) and mammals (Griffin et al. 2000).

How long it takes to learn predator recognition of previously novel predators depends on the prey species and how readily adjustable they are to novel interactions (Cox and Lima 2006). Studies have found that despite over 200 years of coexistence with introduced predators, some naïve species are yet to evolve the appropriate anti-predator risk assessment responses (Hayes et al. 2006; McEvoy et al. 2008). In contrast, other studies have found that 200 years or less is sufficient to learn, develop and select for appropriate predator recognition skills (Maloney and McLean 1995; Banks et al. 2018). Consistent with this idea, a global meta-analysis on factors influencing expression of prey naiveté found that naiveté declined with the number of generations since predator introduction (Anton et al. 2020).

The introduction of novel predators has caused significant damage to native prey populations, particularly in areas where prey species may be considered ‘naïve’ (Cox and Lima 2006), and is a major contributing factor to failed reintroduction attempts of locally extinct species (Moseby et al. 2016). With an increasing reliance on ‘safe-havens’ such as predator-free islands and fenced reserves for threatened species recovery programmes, we need to develop a better understanding of the role that lifetime experience with predators plays in the development of appropriate anti-predator responses (Legge et al. 2018). Indeed, there is concern that isolation from all predators may prohibit predator-driven natural selection processes, preventing a ‘future beyond the fence’ for threatened species reintroductions (Moseby et al. 2016; Jolly et al. 2018).

The bilby (Macrotis lagotis) is an omnivorous, burrowing, medium-sized (body weight 1.5–2.5 kg), nocturnal marsupial that was once widespread in Australia (Burbidge and Woinarski 2016). In the last 150 years, bilbies have undergone a severe range decline which has been attributed in part to naiveté towards introduced predators, the red fox (Vulpes vulpes) and feral cat (Felis catus) (Burbidge and Woinarski 2016). A study of wild bilbies living within the ‘Arid Recovery’ predator-free fenced reserve in South Australia found that bilbies with no ontogenetic exposure to mammalian predators recognised the scent of a native predator, the dingo (Canis familiaris), which they have shared over 8000 years of co-evolutionary history (Zhang et al. 2020), but did not recognise the scent of a recently introduced predator, the feral cat (Steindler et al. 2018). The bilbies inhabiting the Arid Recovery safe-haven were considered to be wild, because they were not supplementary fed and were exposed to avian and reptilian predators (Steindler et al. 2018). These findings suggest that bilbies have innate recognition of dingoes, but not feral cats, and that a prey species’ ability to respond to the odour of their predators scales with the duration of their evolutionary coexistence (Peckarsky and Penton 1988).

In this study, we investigate the recognition of predator scents by a remnant population of bilbies that were coexisting with dingoes, feral cats and rabbits in south-west Queensland. In particular, we were interested to evaluate whether non-safe-havened bilbies were naïve to the scent of feral cats like the ontogenetically predator naïve population within the Arid Recovery safe-haven (Steindler et al. 2018) or had developed recognition of the scent of feral cats. If the latter, we expected that bilbies should be more wary when both cat and dingo/dog faeces are present compared to a herbivore (rabbit, Oryctolagus cuniculus) and experimental control (no odour). Non-safe-havened bilbies would be at a selective advantage if able to successfully detect, identify and respond to both predators with which they co-exist. Predator recognition could be due to either learned recognition of the threat posed by cats through their lifetime or strong natural selection imposed by cats over evolutionary time. However, if bilbies recognised dingo/dog faeces but not cat faeces, it would suggest that bilbies’ responses towards predators are constrained by their period of evolutionary coexistence and that bilbies remain ‘naïve’ to introduced feral cats.

We used faecal samples as they are a useful indicator of predator presence (Hayes et al. 2006) and provide prey with information regarding predation risk, even when a predator is absent at the time of detection (McEvoy et al. 2008). Bilbies in south-west Queensland have shared more than 8000 years of co-evolutionary history with dingoes (Zhang et al. 2020), less than 140 years with feral cats (Abbott 2002) and less than 130 years with European rabbits (Zenger et al. 2003). Bilbies are able to produce a litter of 1–3 young, four times a year and have a captive longevity of 5 to 9 years (Southgate et al. 2000). Based on the reproductive rate of the bilby, we made the assumption that the bilby population in south-west Queensland has gone through 44 generations potentially living with and exposed to predation by feral cats over the past 140 years.
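The generation estimate above can be checked with simple arithmetic. The sketch below only divides the two figures stated in the text (140 years, 44 generations) to show the mean generation time they imply; the function names are illustrative and are not taken from the original analysis.

```python
def implied_generation_time(years: float, generations: float) -> float:
    """Mean generation time (years) implied by a generation count over a time span."""
    return years / generations


def generations_elapsed(years: float, generation_time: float) -> float:
    """Number of generations that fit into a time span for a given generation time."""
    return years / generation_time


# Figures stated in the text: 44 generations over the past 140 years.
gen_time = implied_generation_time(140, 44)
print(round(gen_time, 2))
```

Under these figures, the assumed generation time works out to a little over 3 years, which sits below the captive longevity of 5 to 9 years reported above.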

Materials and methods

Study area

We studied wild bilbies across 21 nights in October 2016 within Astrebla Downs National Park, Queensland (Fig. 1; 24° 12′ 24.60″ S, 140° 34′ 5.39″ E). Astrebla Downs National Park is located in the Channel Country, a region consisting of flat to undulating erosion plains dissected by minor drainage lines. The vegetation of the Channel Country is dominated by barley Mitchell grass (Astrebla pectinata), with other herbs and grass growing during periods of wet climatic conditions. The climate is arid, with low annual rainfall and high summer temperatures (Gibson 2001). At the time of sampling, dingoes, feral cats and rabbits were present in the park. Bilbies in the park are also predated upon by birds of prey (ML personal observations).

(a) Map of Australia showing the approximate location of Astrebla Downs National Park. (b) Map of Queensland with the exact location of Astrebla Downs National Park (1740 km²). (c) Map of Astrebla Downs National Park. The green circles indicate the locations of the burrows where odour recognition studies on wild greater bilbies (Macrotis lagotis) were conducted

Sources and storage of treatment odours

We used faeces from two placental predators, with which wild bilbies have shared varying periods of co-evolutionary history (dingoes/dogs (> 8000 years), cats (< 200 years)), as well as a procedural control (faeces from a harmless herbivore, rabbit < 200 years), and an experimental control in which no faeces were present. We collected fresh faeces from domestic dog, cat and rabbit sources. Although it would have been preferable to use dingo faeces, we used domestic dog scats as a surrogate for dingo scats because obtaining the required quantity of dingo scats was not feasible at the time of the study and previous studies have shown that scats from dogs are chemically indistinguishable from those of dingoes (Carthey et al. 2017). Hereafter, we refer to these scents as dingo/dog. To overcome the issue of decomposition of faecal odours after deposition, all faecal samples were collected fresh from private pet owners and boarding kennel facilities, sealed in airtight zip lock bags and frozen at minus 20 °C (Carthey et al. 2017). Disposable gloves were worn at all times when handling faeces to prevent cross contamination of odours. As faecal samples were collected from private pet owners and boarding kennel shared yard facilities, the total number of donor individuals is unknown; however, we estimate that samples came from between two and fifteen individuals. Faeces allocation to burrows was randomised throughout the experiment, reducing the chance of potential donor effects. We did not consider diet to be a potential confounding source during analysis, since the diet of domestic cats, dogs and rabbits was consistent between individuals and made up of a mix of raw meats and commercially available pet foods (Carthey et al. 2017).

Bilby behaviour

As we were unable to track individual bilbies, we conducted a population-level evaluation of bilby behaviour by conducting our experiments adjacent to bilby burrows and treating each burrow individually. As studying wild populations of bilbies can often be problematic due to their cryptic nature, placing faecal odour treatments outside the entrances of active burrows was the most effective way to test population-level predator odour recognition and behavioural responses (Steindler et al. 2018). Active bilby burrows (Fig. 2) were identified by the presence of fresh scats and/or fresh diggings around their entrances. Although seasonally and across years bilby burrow use is in a constant state of change, bilbies are known to use two to three burrows per night (Lavery and Kirkpatrick 1997). Based on population estimates developed by Lavery and Kirkpatrick (1997), we estimate from the 128 burrows we examined in October 2016 that there were at least 30 bilbies present within our study area.

(A) Greater bilby and (B) experimental setup for predator odour discrimination study of wild bilbies at Astrebla Downs National Park. Infrared motion sensor video camera mounted on a metal post outside the burrow entrance of a wild bilby, where odour treatments (cat, dog and rabbit faeces and experimental control—no odour) were presented

We used a repeated measures design, in which each faecal odour treatment was presented once at each burrow, according to a predetermined balanced order. We controlled for order effects experimentally and assessed these effects statistically. Faecal odour treatments were presented on consecutive nights. Since many mammalian predators scent mark features in the landscape, such as the burrows of prey species, by depositing urinary and faecal odours (Gorman and Trowbridge 1989), we deployed faeces at the entrance of bilbies’ burrows. Faeces were presented on the surface of the ground, within 20 cm of the burrow entrance. In cases where there were multiple burrow entrances present, faeces were only presented at the burrow entrance that showed most recent signs of activity. One piece of cat and dog faeces of similar size and weight (approximately 25–30 g) and 20 pellets of rabbit faeces were presented outside the burrow accordingly. Faeces and all faecal traces, including a fine layer of sand on which the faeces were placed, were removed the following day post treatment. Faecal odours were replaced as per the predetermined balanced order for the duration of the experiment per burrow.
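The text does not specify how the predetermined balanced order was built. As one possible illustration, the sketch below uses a cyclic Latin square, in which each of the four treatments appears once per burrow and once in each presentation position across every block of four burrows. The treatment labels follow the text; the function names and the assignment of burrows to rows are assumptions made for this example.

```python
TREATMENTS = ["cat", "dog", "rabbit", "control"]


def cyclic_latin_square(treatments):
    """Return an n x n Latin square built by cyclically shifting the treatment list."""
    n = len(treatments)
    return [[treatments[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for_burrow(burrow_index, treatments=TREATMENTS):
    """Presentation order (night 1 to night n) for a burrow, cycling through the square rows.

    Assigning burrows to rows by index is a hypothetical choice for illustration only.
    """
    square = cyclic_latin_square(treatments)
    return square[burrow_index % len(treatments)]


if __name__ == "__main__":
    for burrow in range(4):
        print(burrow, order_for_burrow(burrow))
```

A cyclic square balances presentation position but not which treatment precedes which; a design that also balances first-order carry-over would need a different construction.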

At each burrow, an infrared motion sensor video camera (Scoutguard SG550V or Scoutguard Zeroglow; Scoutguard, Australia) was mounted on a metal post, 20–100 cm off the ground (Fig. 2). Cameras were programmed to record a 60-s video when triggered, with a 0-s interval between possible triggers, from dusk until dawn (1800–0600 h), to enable species identification and observation of behavioural responses to the odour treatments.

Behavioural scoring

We constructed an ethogram of bilby behaviours (Table 1) based upon the initial observations of experimental videos. All behaviours were treated as mutually exclusive (Blumstein and Daniel 2007). We scored video recordings ≤ 60 s using the event recorder JWatcher (Blumstein and Daniel 2007), only quantifying the first 60-s video footage from each burrow location when a bilby was present, with scoring commencing at the start of each 60-s video. We did this because our study focused on quantifying bilbies’ initial behavioural responses to the presence of predator faeces and we wanted to eliminate the potential for our observations to be influenced by habituation to the presence of faeces. As bilbies may not have been within the field of view of the camera at the commencement of scoring, we were unable to analyse total length of time for each behaviour and as a result calculated the proportion of time in sight allocated to each behaviour. As bilbies were unmarked, we were unable to differentiate between individuals and only scored one video per night, per burrow.
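The proportion of time in sight can be illustrated with a short sketch. It assumes that scoring yields, for each video, the number of seconds allocated to each mutually exclusive behaviour while the animal was visible; the function name and the example durations are hypothetical and do not come from the study data.

```python
def proportion_in_sight(durations_s):
    """Convert per-behaviour durations (seconds in view) to proportions of time in sight.

    Time when the animal was out of view is simply not included in the input,
    so the proportions sum to 1 whenever the animal was visible at all.
    """
    in_sight = sum(durations_s.values())
    if in_sight == 0:
        # Animal never visible: no time can be allocated to any behaviour.
        return {behaviour: 0.0 for behaviour in durations_s}
    return {behaviour: seconds / in_sight for behaviour, seconds in durations_s.items()}


# Hypothetical 60-s video in which the bilby was visible for 24 s.
example = {"investigate odour": 12.0, "digging": 6.0, "walk": 6.0}
print(proportion_in_sight(example))
```

Expressing each behaviour relative to time in sight, rather than to the full 60 s, keeps videos comparable when animals enter the frame at different moments.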

For analysis, we combined behaviours in which bilbies were digging outside the burrow entrance and digging within the field of view of the camera, to create a new category ‘digging’ (Table 1). We combined behaviours in which bilbies moved slowly: slow approach (slow movement towards odour treatment and/or burrow), slow entrance (individual enters burrow slowly), slow exit (individual exits burrow slowly) and slow retreat (slow movement away from odour treatment and/or burrow) to form a new category ‘walk’ (Table 1). We combined behaviours in which bilbies moved rapidly: fast approach (rapid movement towards odour treatment and/or burrow), fast entrance (individual enters burrow quickly), fast exit (individual exits burrow rapidly) and fast retreat (rapid movement away from odour treatment and/or burrow) to create the new category ‘run’ (Table 1). In most cases, videos were scored blind with respect to treatment, unless it was possible to visually identify the type of faeces that was deployed.
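The pooling of scored behaviours into composite categories amounts to a lookup followed by a sum. The sketch below is a minimal illustration; the walk and run labels follow the descriptions above, whereas the two digging labels are hypothetical stand-ins for the ethogram entries in Table 1, which is not reproduced here.

```python
# Mapping from scored behaviours to composite categories.
# The two digging labels are assumed names, not taken from Table 1.
COMPOSITE = {
    "digging outside burrow entrance": "digging",
    "digging within field of view": "digging",
    "slow approach": "walk",
    "slow entrance": "walk",
    "slow exit": "walk",
    "slow retreat": "walk",
    "fast approach": "run",
    "fast entrance": "run",
    "fast exit": "run",
    "fast retreat": "run",
}


def pool_behaviours(durations_s):
    """Sum durations (seconds) of scored behaviours into their composite categories.

    Behaviours without a mapping (e.g. 'investigate odour') keep their own name.
    """
    pooled = {}
    for behaviour, seconds in durations_s.items():
        category = COMPOSITE.get(behaviour, behaviour)
        pooled[category] = pooled.get(category, 0.0) + seconds
    return pooled


print(pool_behaviours({"slow approach": 2.0, "slow exit": 3.0, "fast retreat": 1.0}))
```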

Analysis of behavioural data

We fitted a series of linear mixed effects models in SPSS-25 (IBM Corp., Armonk, NY, USA) with diagonal error structure to test whether faecal odour treatment caused wild bilbies to allocate different proportions of time to the composite behaviours: investigate odour, digging, bi-pedal stance, walk and run. We had two fixed effects in our models: treatment (cat, dog, rabbit and control) and presentation order (1 to 4). To account for the possibility of non-independence between observations, we included burrow ID (1 to 128) as a random effect. In no case was presentation order significant; however, we retained it as a repeated measure in the analysis to control for its effect statistically (Quinn and Keough 2002). Because the response variables were not normally distributed and the dataset contained many zero values, we log transformed (log10 [behaviour + 1]) each variable prior to analysis to normalise their distributions (Quinn and Keough 2002). Because we wished to understand the pattern of responses, in instances where the effect of odour was significant (P < 0.05), we used Fisher’s least significant difference (LSD) post hoc analysis to examine planned comparisons (cat vs. dog, cat vs. rabbit, cat vs. control, dog vs. rabbit, dog vs. control and rabbit vs. control) for differences in response to each odour treatment. We set our alpha to 0.05 for all tests.
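Two steps of this analysis are simple enough to sketch directly: the log10(behaviour + 1) transformation and the enumeration of the six planned pairwise comparisons. The sketch below does not fit the mixed model or run Fisher’s LSD, which were carried out in SPSS; the function and variable names are illustrative only.

```python
import math
from itertools import combinations

TREATMENTS = ["cat", "dog", "rabbit", "control"]


def log_transform(proportion):
    """Apply the log10(x + 1) transformation, which is defined for zero values."""
    return math.log10(proportion + 1)


# All unordered pairs of the four treatments give the six planned comparisons.
PLANNED_COMPARISONS = list(combinations(TREATMENTS, 2))

if __name__ == "__main__":
    print([round(log_transform(p), 4) for p in (0.0, 0.25, 1.0)])
    for first, second in PLANNED_COMPARISONS:
        print(f"{first} vs. {second}")
```

Adding 1 before taking the logarithm maps a proportion of zero to zero, which matters here because many videos contained no time allocated to a given behaviour.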

To test whether burrow location influenced bilby behavioural responses to the odour treatments, we tested for spatial autocorrelation in the residuals of the fitted values for each behaviour, using Moran’s index (I), calculated in the spatial analyst module of ArcGIS v10.2. Spatial autocorrelation occurs when the value of a variable at any one location in space can be predicted by the values of nearby locations. The existence of spatial autocorrelation indicates that sampling units are not independent from one another (Fortin and Dale 2005).
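For readers unfamiliar with the statistic, the sketch below computes global Moran’s I from its textbook definition, I = (n / W) Σᵢ Σⱼ wᵢⱼ (xᵢ − x̄)(xⱼ − x̄) / Σᵢ (xᵢ − x̄)², where W is the sum of all weights. It is not the ArcGIS implementation used in the study: the inverse-distance weights, the planar coordinates and the function name are assumptions made for illustration, and no significance test is included.

```python
import math


def morans_i(values, coords):
    """Global Moran's I with inverse-distance weights (w_ij = 1 / d_ij, w_ii = 0).

    values: one residual per burrow; coords: matching (x, y) positions.
    Assumes all locations are distinct and the values are not all identical.
    """
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]

    weighted_cross_products = 0.0
    weight_total = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            weight = 1.0 / math.dist(coords[i], coords[j])
            weighted_cross_products += weight * deviations[i] * deviations[j]
            weight_total += weight

    sum_squares = sum(d * d for d in deviations)
    return (n / weight_total) * (weighted_cross_products / sum_squares)


# Hypothetical burrows spaced evenly along a line.
line = [(0, 0), (1, 0), (2, 0), (3, 0)]
alternating = morans_i([1, -1, 1, -1], line)
clustered = morans_i([1, 1, -1, -1], line)
print(alternating < clustered)
```

Values of I well above the null expectation of −1/(n − 1) indicate that nearby burrows have similar residuals, whereas values well below it indicate that neighbours tend to differ.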

Results

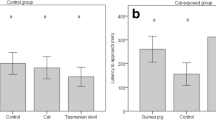

There was a significant effect of treatment on the proportion of time that bilbies spent investigating faecal odours (Table 2; F(3, 141.361) = 7.073; P ≤ 0.005; Fig. 3a). Planned post hoc comparisons (Table S2 in the Supplementary information) revealed that bilbies spent more time investigating predator faecal odours compared to the experimental control (no faeces) (Fisher’s LSD, cat vs. control, P ≤ 0.005 and dingo/dog vs. control, P ≤ 0.005; Fig. 3a) and a harmless herbivore (rabbit) (Fisher’s LSD, cat vs. rabbit, P = 0.025 and dingo/dog vs. rabbit, P ≤ 0.005; Fig. 3a). There was no significant difference in the time spent investigating cat and dingo/dog faeces (Fisher’s LSD, cat vs. dingo/dog, P = 0.277), or rabbit faeces and the control (Fisher’s LSD, rabbit vs. control, P = 0.403).

The mean (± 1 SEM) proportion of time in sight (PIS) that wild greater bilbies allocated to the behaviours (a) investigate odour, (b) digging, (c) bi-pedal stance, (d) walk and (e) run in response to faecal odour treatments (cat, n = 44; experimental control (no odour), n = 35; dog, n = 38; and rabbit, n = 36) outside bilby burrows. Similar letters (e.g. A or B) above bars identify pairwise comparisons that are not statistically distinguishable (P > 0.05) for response variables where a significant main effect was observed

There was a significant effect of treatment on the proportion of time that bilbies allocated to digging (Table 2; F(3, 131.405) = 2.715, P = 0.047; Fig. 3b). Bilbies spent less time digging outside the burrow entrance and within the vicinity of the burrow when predator faeces were present compared to the experimental control (no faeces) (Fisher’s LSD, cat vs. control, P = 0.038 and dingo/dog vs. control, P = 0.008; Fig. 3b). There was no difference in the time spent digging between the two predator faeces treatments (Fisher’s LSD, cat vs. dingo/dog, P = 0.462). There was also no difference in the proportion of time spent digging between cat and rabbit faeces (Fisher’s LSD, cat vs. rabbit, P = 0.427), dingo/dog and rabbit faeces (Fisher’s LSD, dingo/dog vs. rabbit, P = 0.144) or rabbit faeces and the control (Fisher’s LSD, rabbit vs. control, P = 0.215) (Table S2 in the Supplementary information).

There was a significant effect of treatment on the proportion of time that bilbies engaged in bi-pedal stance (Table 2; F(3, 108.206) = 4.572; P = 0.005; Fig. 3c). Bilbies spent less time in a bi-pedal stance when faecal odour treatments were present compared to the experimental control (no faeces) (Fisher’s LSD, cat vs. control, P = 0.018, dingo/dog vs. control, P = 0.006 and rabbit vs. control, P ≤ 0.005; Fig. 3c). There was no difference in the proportion of time allocated to bi-pedal stance when predator (cat and dingo/dog) and harmless herbivore (rabbit) faeces were present (Fisher’s LSD, cat vs. dingo/dog, P = 0.604, cat vs. rabbit, P = 0.162 and dingo/dog vs. rabbit, P = 0.380; Fig. 3c).

There was no effect of treatment on the proportion of time that bilbies allocated to walking (Table 2; F(3, 143.811) = 0.694, P = 0.557; Fig. 3d) or running (F(3, 110.065) = 0.403, P = 0.751; Fig. 3e). There was no spatial autocorrelation in the residuals of the fitted values for any of the analysed behaviours for bilbies (Table S1 in the Supplementary information). These results indicate that the burrows and treatment sites were independent for the purpose of our analysis.

Discussion

Our results show that bilbies living outside of a safe-haven displayed anti-predator responses towards the olfactory cues of both a long-term predator (dingoes/dogs) and an evolutionary novel predator (cats). However, from previous research, we know that safe-havened bilbies that were completely isolated from all mammalian predators responded to the faecal odours of their long-term historical predator, the dingo/dog but not cats (Steindler et al. 2018). These contrasting findings suggest that anti-predator responses displayed by non-safe-havened bilbies towards cat odour may be the result of lifetime learning (Turner et al. 2006; Saul and Jeschke 2015) or selection for individuals that have learnt and developed appropriate anti-predator responses over evolutionary time (Kovacs et al. 2012).

Bilbies spent the greatest proportion of time investigating and the least amount of time digging when cat and dingo/dog faeces were present. These findings may be due to bilbies making a trade-off between the costs and benefits of these behaviours (Lima and Dill 1990). Recognition of predator odour cues allows prey to perform anti-predator responses that will increase their chances of survival (Chivers et al. 1995). However, prey animals require information to make these decisions (Bouskila and Blumstein 1992) and often exploit the chemosensory cues found in faeces to provide information on predator activity level and diet (Ferrero et al. 2011). Thus, approaching and investigating predator cues may allow prey individuals to assess the situation and modify their behaviour according to the perceived predatory threat (Lima and Dill 1990; Cremona et al. 2014; Carthey and Banks 2018). In the case of bilbies, investigating predator scats may have enabled individuals to assess the likelihood of a potentially lethal encounter with a cat and/or dingo within the area. It is important to note, however, that we were unable to test whether predator recognition confers survival benefits for bilbies. Further research is required to determine whether predator recognition and the effectiveness of bilbies’ anti-predator responses are linked.

Bilbies invested the least proportion of time to standing bi-pedal when predator and herbivore faeces were present compared to the control (no odour). Bilbies typically adopt the upright bi-pedal posture when entering or leaving a burrow, or when foraging (Johnson and Johnson 1983). That bilbies equally reduced the proportion of time they stood bi-pedal when rabbit and predator odours were present suggests that this behaviour was not an anti-predator response.

Previous studies have suggested that naïve species generalise their response to predators, irrespective of their evolutionary and/or lifetime experience, as a result of the common constituents (Dickman and Doncaster 1984; Nolte et al. 1994), such as kairomones, found in carnivore odours (Ferrero et al. 2011). Although bilbies responded to both cat and dingo/dog odour through increased investigation and decreased digging compared to the experimental control (no odour), we do not believe that this was a result of generalisation. Aversion to all carnivore smells may be costly in terms of missed opportunities, such as foraging and mate selection (Lima and Bednekoff 1999). Naïve prey are at a selective advantage if they are able to learn and respond to specific predatory smells, rather than respond to all carnivorous smells (Blumstein et al. 2002; Powell and Banks 2004). Barrio et al. (2010) suggested that the common constituents hypothesis may only apply when the predators are closely related taxonomically. Since cats and dogs diverged between 52 and 57 million years ago (Hedges et al. 2006), the differences between the two families could be too great for bilbies to generalise the odours. Within the study area, both dingoes and cats are known to predate on bilbies (Lollback et al. 2015). Thus, we suggest that exposure to cat and dingo predation over evolutionary time and throughout their lifetime is likely to be a greater driver for the predator response behaviour displayed by wild bilbies in this study, rather than a generalised response to predator odours per se.

A caveat of our study is that we were unable to test for whether the use of alternative odour sources, such as whole body odour or urine, may have led to similar or different results. For example, laboratory rats respond more strongly and consistently to whole body odour than to urine or faecal odours (Masini et al. 2005). Blanchard and Blanchard (2004) suggest that the different responses displayed by rats towards body odour and faecal odour may be explained by the rapid dissipation of body odours in the environment, a consequence of which means that fresh body odour indicates imminent danger. We assumed that bilbies’ behavioural responses to faecal odours were a product of their evolutionary history with dingoes and cats. However, based on the research by Masini et al. (2005), as well as the idea that whole body odour samples indicate more imminent risk to prey (Carthey and Banks 2014), this may not be the case. As such, we recommend that further field studies are undertaken in order to discern the influence of the type of odour used and whether different types of odours elicit different behavioural responses by bilbies.

Our results support the idea that ‘naïve’ prey will not remain eternally naïve and have the ability to respond and develop appropriate anti-predator responses towards introduced predators (Banks et al. 2018). However, it is unclear whether these predator recognition abilities have become ‘hard-wired’ or whether they are experience dependent. For example, phenotypic plasticity and learning may provide a valuable short-term response to change, but hinder the potential for long-term adaptation (Schlaepfer et al. 2005). As such, in order to successfully manage bilbies and other predator ‘naïve’ species towards introduced predators, we need to better understand the heritability of anti-predator behaviours and whether introduced predator recognition abilities are lost and/or gained through lifetime experience (Carthey and Blumstein 2018).

In regions where invasive predators pose a threat to native species, one commonly used strategy to mitigate predator impacts is to establish refuge populations of native species within ‘safe-havens’, such as predator-free islands or within predator-free fenced reserves (Legge et al. 2018). However, completely isolating populations from predators runs the risk of creating predator naïve populations. This is because populations that are isolated from predators may lose their anti-predator responses due to relaxed selection and limited opportunities for learning how to respond to predators (Moseby et al. 2016; Jolly et al. 2018).

In the case of bilbies, the results of this study suggest that bilbies living outside of a safe-haven recognise cats as a threat, whilst Steindler et al. (2018) found that bilbies living within a safe-haven did not respond to cat scent. The contrasting behavioural response to cat scent displayed by wild bilbies living within and outside of safe-havens has implications for managing populations of bilbies and other endangered mammals within safe-havens. This is because they suggest that naïve prey species, such as bilbies, can acquire anti-predator responses when their populations are exposed to predators (Ross et al. 2019), and that completely isolating prey from predators may compromise their anti-predator responses (Moseby et al. 2016; Jolly et al. 2018). One potential solution that has been proposed to tackle the problem of prey naiveté within safe-havened populations is to expose these populations to predators under carefully controlled conditions (Ross et al. 2019; Jolly and Phillips 2020), taking advantage of the behavioural responses that predators can induce in their prey. However, the challenge with such an approach will be providing the appropriate conditions necessary for anti-predator skills to be retained and/or developed, whilst also ensuring that prey populations are not driven extinct by predation.

Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Abbott I (2002) Origin and spread of the cat, Felis catus, on mainland Australia, with a discussion of the magnitude of its early impact on native fauna. Wildl Res 29:51–74. https://doi.org/10.1071/WR01011

Anton A, Geraldi N, Ricciardi A, Dick J (2020) Global determinants of prey naiveté to exotic predators. Proc R Soc B 287:20192978. https://doi.org/10.1098/rspb.2019.2978

Banks PB, Carthey AJR, Bytheway JP (2018) Australian native mammals recognize and respond to alien predators: a metaanalysis. Proc R Soc B 285:20180857

Barrio IC, Bueno CG, Banks PB, Tortosa FS (2010) Prey naiveté in an introduced prey species: the wild rabbit in Australia. Behav Ecol 21:986–991. https://doi.org/10.1093/beheco/arq103

Berejikian BA, Tezak E, LaRae AL (2003) Innate and enhanced predator recognition in hatchery-reared Chinook salmon. Environ Biol Fish 67:241–251. https://doi.org/10.1023/A:1025887015436

Blanchard CD, Blanchard RJ (2004) Antipredator defense. In: Whishaw IQ, Kolb B (eds) The behavior of the laboratory rat: a handbook with tests. Oxford University Press, Oxford, p 520

Blumstein DT, Daniel JC (2007) Quantifying behavior the JWatcher way. Sinauer Associates Incorporated, Sunderland, MA

Blumstein DT, Mari M, Daniel JC, Ardron JG, Griffin AS, Evans CS (2002) Olfactory predator recognition: wallabies may have to learn to be wary. Anim Conserv 5:87–93. https://doi.org/10.1017/s1367943002002123

Blumstein DT, Barrow L, Luterra M (2008) Olfactory predator discrimination in yellow-bellied marmots. Ethology 114:1135–1143. https://doi.org/10.1111/j.1439-0310.2008.01563.x

Bouskila A, Blumstein DT (1992) Rules of thumb for predation hazard assessment: predictions from a dynamic model. Am Nat 139:161–176. https://doi.org/10.1086/285318

Brown GE, Chivers DP (2005) Learning as an adaptive response to predation. Ecology of predator–prey interactions. Oxford University Press, Oxford, England, pp 34–54

Burbidge AA, Woinarski J (2016) Macrotis lagotis. The IUCN Red List of Threatened Species 2016, https://doi.org/10.2305/IUCN.UK.2016-2.RLTS.T12650A21967189.en

Carthey AJ, Banks PB (2014) Naïveté in novel ecological interactions: lessons from theory and experimental evidence. Biol Rev 89:932–949. https://doi.org/10.1111/brv.12087

Carthey AJR, Banks PB (2018) Naïve, bold, or just hungry? An invasive exotic prey species recognises but does not respond to its predators. Biol Invasions 20:3417–3429

Carthey AJR, Blumstein DT (2018) Predicting predator recognition in a changing world. Trends Ecol Evol 33:106–115. https://doi.org/10.1016/j.tree.2017.10.009

Carthey AJ, Bucknall MP, Wierucka K, Banks PB (2017) Novel predators emit novel cues: a mechanism for prey naivety towards alien predators. Sci Rep 7:16377

Chivers DP, Smith RJF (1995) Free-living fathead minnows rapidly learn to recognize pike as predators. J Fish Biol 46:949–954

Chivers DP, Brown GE, Smith RJF (1995) Acquired recognition of chemical stimuli from pike, Esox lucius, by brook sticklebacks, Culaea inconstans (Osteichthyes, Gasterosteidae). Ethology 99:234–242. https://doi.org/10.1111/j.1439-0310.1995.tb00897.x

Cox JG, Lima SL (2006) Naiveté and an aquatic–terrestrial dichotomy in the effects of introduced predators. Trends Ecol Evol 21:674–680. https://doi.org/10.1016/j.tree.2006.07.011

Cremona T, Crowther MS, Webb JK (2014) Variation of prey responses to cues from a mesopredator and an apex predator. Austral Ecol 39:749–754. https://doi.org/10.1111/aec.12138

Dickman CR, Doncaster CP (1984) Responses of small mammals to red fox (Vulpes vulpes) odour. J Zool 204:521–531. https://doi.org/10.1111/j.1469-7998.1984.tb02384.x

Fendt M (2006) Exposure to urine of canids and felids, but not of herbivores, induces defensive behavior in laboratory rats. J Chem Ecol 32:2617–2627. https://doi.org/10.1007/s10886-006-9186-9

Ferrari MCO (2014) Short-term environmental variation in predation risk leads to differential performance in predation-related cognitive function. Anim Behav 95:9–14. https://doi.org/10.1016/j.anbehav.2014.06.001

Ferrari MCO, Crane AL, Brown GE, Chivers DP (2015) Getting ready for invasions: can background level of risk predict the ability of naïve prey to survive novel predators? Sci Rep 5:8309. https://doi.org/10.1038/srep08309

Ferrero DM, Lemon JK, Fluegge D, Pashkovski SL, Korzan WJ, Datta SR, Spehr M, Fendt M, Liberles SD (2011) Detection and avoidance of a carnivore odor by prey. Proc Natl Acad Sci U S A 108:11235–11240. https://doi.org/10.1073/pnas.1103317108

Fortin M-J, Dale MR (2005) Spatial analysis: a guide for ecologists. Cambridge University Press, Cambridge

Gibson LA (2001) Seasonal changes in the diet, food availability and food preference of the greater bilby (Macrotis lagotis) in south-western Queensland. Wildl Res 28:121–134. https://doi.org/10.1071/WR00003

Goldthwaite RO, Coss RG, Owings DH (1990) Evolutionary dissipation of an antisnake system: differential behavior by California and Arctic ground squirrels in above- and below-ground contexts. Behaviour 112:246–269. https://doi.org/10.1163/156853990X00220

Gorman ML, Trowbridge BJ (1989) The role of odor in the social lives of carnivores. In: Gittleman JL (ed) Carnivore behaviour, ecology, and evolution. Springer, USA, pp 57–88

Göth A (2001) Innate predator-recognition in Australian brush-turkey (Alectura lathami, Megapodiidae) hatchlings. Behaviour 138:117–136

Griffin AS, Blumstein DT, Evans CS (2000) Training captive-bred or translocated animals to avoid predators. Conserv Biol 14:1317–1326

Hayes RA, Nahrung HF, Wilson JC (2006) The response of native Australian rodents to predator odours varies seasonally: a by-product of life history variation? Anim Behav 71:1307–1314. https://doi.org/10.1016/j.anbehav.2005.08.017

Hedges SB, Dudley J, Kumar S (2006) TimeTree: a public knowledge-base of divergence times among organisms. Bioinformatics 22:2971–2972. https://doi.org/10.1093/bioinformatics/btl505

Johnson C, Johnson K (1983) Behaviour of the bilby, Macrotis lagotis (Reid), (Marsupialia: Thylacomyidae) in captivity. Wildl Res 10:77–87. https://doi.org/10.1071/WR9830077

Jolly CJ, Phillips BL (2020) Effects of rapid evolution due to predator-free conservation on endangered species recovery. Conserv Biol published online. https://doi.org/10.1111/cobi.13521

Jolly CJ, Webb JK, Phillips BL (2018) The perils of paradise: an endangered species conserved on an island loses antipredator behaviours within 13 generations. Biol Lett 14:20180222. https://doi.org/10.1098/rsbl.2018.0222

Kovacs E, Crowther M, Webb J, Dickman C (2012) Population and behavioural responses of native prey to alien predation. Oecologia 168:947–957. https://doi.org/10.1007/s00442-011-2168-9

Lavery HJ, Kirkpatrick TH (1997) Field management of the bilby Macrotis lagotis in an area of south-western Queensland. Biol Conserv 79:271–281. https://doi.org/10.1016/S0006-3207(96)00085-7

Legge S, Woinarski JCZ, Burbidge AA et al (2018) Havens for threatened Australian mammals: the contributions of fenced areas and offshore islands to protecting mammal species that are susceptible to introduced predators. Wildl Res 45:627–644

Li C, Yang X, Ding Y, Zhang L, Fang H, Tang S, Jiang Z (2011) Do Père David’s deer lose memories of their ancestral predators? PLoS One 6:e23623. https://doi.org/10.1371/journal.pone

Lima SL, Bednekoff PA (1999) Temporal variation in danger drives antipredator behavior: the predation risk allocation hypothesis. Am Nat 153:649–659

Lima SL, Dill LM (1990) Behavioral decisions made under the risk of predation: a review and prospectus. Can J Zool 68:619–640. https://doi.org/10.1139/z90-092

Lollback GW, Mebberson R, Evans N, Shuker JD, Hero J-M (2015) Estimating the abundance of the bilby (Macrotis lagotis): a vulnerable, uncommon, nocturnal marsupial. Austr Mamm 37:75–85

Maloney RF, McLean IG (1995) Historical and experimental learned predator recognition in free-living New-Zealand robins. Anim Behav 50:1193–1201

Masini CV, Sauer S, Campeau S (2005) Ferret odor as a processive stress model in rats. Behav Neurosci 119:280–292. https://doi.org/10.1037/0735-7044.119.1.280

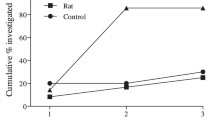

McEvoy J, Sinn DL, Wapstra E (2008) Know thy enemy: behavioural response of a native mammal (Rattus lutreolus velutinus) to predators of different coexistence histories. Austral Ecol 33:922–931. https://doi.org/10.1111/j.1442-9993.2008.01863.x

Monclús R, Rödel HG, von Holst D, De Miguel J (2005) Behavioural and physiological responses of naïve European rabbits to predator odour. Anim Behav 70:753–761. https://doi.org/10.1016/j.anbehav.2004.12.019

Moseby KE, Blumstein DT, Letnic M (2016) Harnessing natural selection to tackle the problem of prey naïveté. Evol Appl 9:334–343. https://doi.org/10.1111/eva.12332

Nolte DL, Mason JR, Epple G, Aronov E, Campbell DL (1994) Why are predator urines aversive to prey? J Chem Ecol 20:1505–1516. https://doi.org/10.1007/bf02059876

Owings DH, Owings SC (1979) Snake-directed behavior by black-tailed prairie dogs (Cynomys ludovicianus). Z Tierpsychol 49:35–54. https://doi.org/10.1111/j.1439-0310.1979.tb00272.x

Peckarsky BL, Penton MA (1988) Why do Ephemerella nymphs scorpion posture: a “ghost of predation past”? Oikos 53:185–193. https://doi.org/10.2307/3566061

Powell F, Banks PB (2004) Do house mice modify their foraging behaviour in response to predator odours and habitat? Anim Behav 67:753–759. https://doi.org/10.1016/j.anbehav.2003.08.016

Quinn GP, Keough MJ (2002) Experimental design and data analysis for biologists. Cambridge University Press, Cambridge

Ross AK, Letnic M, Blumstein DT, Moseby KE (2019) Reversing the effects of evolutionary prey naiveté through controlled predator exposure. J Appl Ecol 56:1761–1769

Saul WC, Jeschke JM (2015) Eco-evolutionary experience in novel species interactions. Ecol Lett 18:236–245. https://doi.org/10.1111/ele.12408

Schlaepfer MA, Sherman PW, Blossey B, Runge MC (2005) Introduced species as evolutionary traps. Ecol Lett 8:241–246. https://doi.org/10.1111/j.1461-0248.2005.00730.x

Southgate R, Christie P, Bellchambers K (2000) Breeding biology of captive, reintroduced and wild greater bilbies, Macrotis lagotis (Marsupialia: Peramelidae). Wildl Res 26:621–628. https://doi.org/10.1071/WR99104

Steindler LA, Blumstein DT, West R, Moseby KE, Letnic M (2018) Discrimination of introduced predators by ontogenetically naïve prey scales with duration of shared evolutionary history. Anim Behav 137:133–139. https://doi.org/10.1016/j.anbehav.2018.01.013

Turner AM, Turner SE, Lappi HM (2006) Learning, memory and predator avoidance by freshwater snails: effects of experience on predator recognition and defensive strategy. Anim Behav 72:1443–1450

Zhang S-J, Wang G-D, Ma P, Zhang L-L, Yin T-T, Liu Y-H, Otecko NO, Wang M, Ma Y-P, Wang L, Mao B, Savolainen P, Zhang Y-P (2020) Genomic regions under selection in the feralization of the dingoes. Nat Commun 11:671. https://doi.org/10.1038/s41467-020-14515-6

Zenger KR, Richardson BJ, Vachot-Griffin AM (2003) A rapid population expansion retains genetic diversity within European rabbits in Australia. Mol Ecol 12:789–794. https://doi.org/10.1046/j.1365-294X.2003.01759.x

Acknowledgements

We thank private pet owners (Edwina Ring), Calabash Kennels & Cattery (Iris Bleach) and the Sydney Cat Protection Society for supply of odours and Sam Fischer and Chris Mitchell (Queensland National Park staff) for their assistance with the study. We would like to thank the reviewers for the comments and feedback, as they have greatly improved the structure of the paper.

Funding

This research was funded by the Holsworth Wildlife Research Endowment (to LS and ML) and Australian Research Council grant LP130100173 (ML).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Ethical approval

All applicable international, national, and/or institutional guidelines for the care and use of animals were followed. This research was conducted according to University of New South Wales Animal Ethics approval 15/19A.

Informed consent

Written informed consent was obtained from the owners of dogs, cats and rabbits whose faeces were used in this experiment.

Additional information

Communicated by A. I. Schulte-Hostedde

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

ESM 1 (DOCX 16 kb)

About this article

Cite this article

Steindler, L., Letnic, M. Not so naïve: endangered mammal responds to olfactory cues of an introduced predator after less than 150 years of coexistence. Behav Ecol Sociobiol 75, 8 (2021). https://doi.org/10.1007/s00265-020-02952-8

DOI: https://doi.org/10.1007/s00265-020-02952-8