Abstract

In this paper we consider non-smooth convex optimization problems with (possibly) infinite intersection of constraints. In contrast to the classical approach, where the constraints are usually represented as intersection of simple sets, which are easy to project onto, in this paper we consider that each constraint set is given as the level set of a convex but not necessarily differentiable function. For these settings we propose subgradient iterative algorithms with random minibatch feasibility updates. At each iteration, our algorithms take a subgradient step aimed at only minimizing the objective function and then a subsequent subgradient step minimizing the feasibility violation of the observed minibatch of constraints. The feasibility updates are performed based on either parallel or sequential random observations of several constraint components. We analyze the convergence behavior of the proposed algorithms for the case when the objective function is strongly convex and with bounded subgradients, while the functional constraints are endowed with a bounded first-order black-box oracle. For a diminishing stepsize, we prove sublinear convergence rates for the expected distances of the weighted averages of the iterates from the constraint set, as well as for the expected suboptimality of the function values along the weighted averages. Our convergence rates are known to be optimal for subgradient methods on this class of problems. Moreover, the rates depend explicitly on the minibatch size and show when minibatching helps a subgradient scheme with random feasibility updates.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The large sum of functions in the objective function and/or the large number of constraints in most of the practical optimization applications led the stochastic optimization field to become an essential tool for many applied mathematics areas, such as machine learning and statistics [12, 30], constrained control [22], sensor networks [1], computer science [10], inverse problems [4], operations research and finance [27]. For example, in machine learning applications the optimization algorithms involve numerical computation of parameters for a system designed to make decisions based on yet unseen data [12, 30]. In particular, in support vector machines one maps the data into a higher dimensional input space and constructs an optimal separating hyperplane in this space by learning, eventually online, the hyperplanes corresponding to each data in the training set [30]. This leads to a convex optimization problem with a large number of functional constraints.

1.1 Contributions

To deal with such optimization problems having (possibly) infinite number of functional constraints, we propose subgradient methods with random feasibility updates. At each iteration, the algorithms take a subgradient step aimed at only minimizing the objective function, followed by a feasibility step for minimizing the feasibility violation of the observed minibatch of convex constraints achieved through the Polyak’s subgradient iteration [23, 25]. The feasibility updates in the first algorithm are performed using parallel random observations of several constraint components, while in the second algorithm we consider sequential random observations of constraints. Both algorithms are reminiscent of a learning process where we try to learn the constraint set while simultaneously minimizing an objective function. The proposed algorithms are applicable to the situation where the whole constraint set of the problem is not known in advance, but it is rather learned in time through observations. Also, these algorithms are of interest for (non-smooth) constrained optimization problems where the constraints are known but their number is either large or not finite.

We study the convergence properties of the proposed random minibatch subgradient algorithms for the case when the objective function need not be differentiable but it is strongly convex, while the functional constraints are accessed trough a bounded first-order black-box oracle. In doing so, we can avoid the need for projections to the set of constraints, which may be expensive computationally. For a diminishing stepsize, we prove sublinear convergence rates of order \(\mathcal{O}(1/t)\), where t is the iteration counter, for the expected distances of the weighted averages of the iterates from the constraint set, as well as for the expected suboptimality of the function values along the weighted averages. Our convergence rates are known to be optimal for this class of subgradient schemes for solving non-smooth convex problems with functional constraints. Moreover, our rates depend explicitly on the minibatch size and show when minibatching works for a subgradient method with random feasibility updates. To the best of our knowledge, this is the first work proving that subgradient methods with random minibatch feasibility steps are better than their non-minibatch variants. More explicitly, the convergence estimate for the parallel algorithm depends on a key parameter \(L_N\) (see eq. (13) below), which determines whether minibatching helps (\(L_N <1\)) or not (\(L_N=1\)) and how much (the smaller \(L_N\), the better is the complexity), see Theorem 2. For the sequential variant, we show that minibatching always helps and the complexity depends exponentially on the minibatch size (see Theorem 3).

1.2 Related Works

In spite of its wide applicability, the study on efficient solution methods for optimization problems with many constraints is still limited. The most prominent work is the stochastic gradient descent (SGD) [12, 18, 26]. Even though SGD is a well-developed methodology, it only applies to optimization problems with simple constraints, requiring the whole feasible set to be projectable. A line of work that is known as alternating projections, focuses on applying random projections for solving problems that are involving the intersection of a (infinite) number of sets. The case when the objective function is not present in the formulation, which corresponds to the convex feasibility problem, is studied e.g. in [2, 9, 14, 16]. For this particular setting, [14, 16] combines the smoothing technique with (minibatch) SGD, leading to stochastic alternating projection algorithms having linear convergence rates. In [22] stochastic proximal point type steps are combined with alternating projections for solving stochastic optimization problems with infinite intersection of sets. Stochastic forward-backward algorithms have been also applied to solve optimization problems with many constraints. However, the papers introducing those general algorithms focus on proving only assymptotic convergence results and do not derive convergence rates, or they assume the number of constraints is finite, which is more restricted than our settings [6, 28, 31]. In the case where the number of constraints is finite and the objective function is deterministic, Nesterov’s smoothing framework is studied in [3, 21, 29] in the setting of accelerated proximal gradient methods. Incremental subgradient methods or primal-dual approaches were also proposed for solving convex optimization problems with finite intersection of simple sets through an exact penalty reformulation in [7, 10].

The paper most related to our work is [17], see also [23,24,25], where iterative subgradient methods with random feasibility steps are proposed for solving convex problems with functional constraints. Our algorithms are minibatch extensions of the algorithm proposed in [17]. Moreover, in [17] only sublinear convergence rates of order \(\mathcal{O}(1/\sqrt{t})\) are established for convex objective functions, while in this paper we show that \(\mathcal{O}(1/t)\) rates are valid under a relaxed strong convexity condition. Finally, since we deal with minibatching and a relaxed strong convexity assumption, our convergence analysis requires additional insights that differ from that of [17]. Similarly, in [22] a stochastic optimization problem with infinite intersection of sets is considered and stochastic proximal point steps are combined with alternating projections for solving it. However, in order to prove sublinear convergence rates \(\mathcal{O}(1/t)\), [22] requires strongly convex and smooth objective functions, while our results are valid for a more relaxed strong convexity condition and possible non-smooth fuctions. Lastly, [22] assumes the projectability of individual sets, whereas in our case, the constraints might not be projectable.

1.3 Notation

The inner product of two vectors x and y in \(\mathbb {R}^n\) is denoted by \(\langle x,y\rangle \), while \(\Vert x\Vert \) denotes the standard Euclidean norm. We write \(\mathrm{dist}(\bar{x}, X)\) for the distance of a vector \(\bar{x}\) from a closed convex set X, i.e., \(\mathrm{dist}(\bar{x},X) =\min _{x\in X}\Vert x-\bar{x}\Vert \), while \(\varPi _X[\bar{x}]\) denotes the projection of \(\bar{x}\) onto X, i.e., \(\varPi _X[\bar{x}]=\mathrm{argmin}_{x\in X}\Vert x-\bar{x}\Vert ^2\). For a scalar a, we write \(a^+=\max \{a, 0\}\). For a convex function h, we denote \(s_h(x)\) a subgradient of h at x and \(\partial h(x)\) denote the set of all subgradients of h at x. If h is differentiable at x, then its gradient is denoted \(\nabla h(x)\). We write \(\mathsf {Pr}\left\{ \omega \right\} \) and \(\mathsf {E}\left[ \omega \right] \) to denote respectively the probability distribution and the expectation of a random variable \(\omega \). Finally, the big \(\mathcal {O}\) notation, i.e. \(f(t) \le \mathcal {O}(g(t))\), means that there exist \(C>0 \; \text {and} \; t_0\) such that \(f(t) \le C \cdot g(t)\) for all \(t \ge t_0\).

1.4 Outline

The content of the paper is as follows. In Sect. 1.5 we introduce our problem of interest and the main assumptions. In Sect. 2 we propose a parallel random minibatch subgradient algorithm and derive its convergence rate, while in Sect. 3 the sequential variant is analyzed. Finally, in Sect. 4 we discuss some extensions, while in Sect. 5 we report some preliminary numerical results.

1.5 Problem Formulation

In this paper we are interested in solving the following convex constrained minimization problem:

where \(\mathscr {A}\) is an arbitrary collection of indices and Y is a closed convex set. The objective function f and all constraint functions \(g_\omega \) are assumed convex. We also assume that the optimization problem (1) has finite optimum and we let \(f^*\) and \(X^*\) denote the optimal value and the optimal set, respectively,

We work under the premise that the collection \(\mathscr {A}\) is large, possibly infinite (even uncountable). Such problems have many applications in engineering, machine learning, computer science, operations research and finance [4, 12, 22, 27, 30]. Let us now formally state the assumptions on the functions f and \(g_\omega \), with \(\omega \in \mathscr {A}\), of problem (1).

Assumption 1

Let the following hold:

-

(a)

The set Y is closed, convex and simple (i.e., easy for projection). The constraint set X and the optimal set \(X^*\) are non-empty.

-

(b)

The objective function \(f: \mathbb {R}^n \rightarrow \bar{\mathbb {R}}\) is strongly convex on the set Y with a constant \(\mu >0\), i.e.:

$$\begin{aligned} f(y) \ge f(x) + \langle s_f(x), y - x \rangle + \frac{\mu }{2} \Vert y - x\Vert ^2 \quad \forall x, y \in Y, \; s_f(x) \in \partial f(x). \end{aligned}$$The subgradients of the function f are uniformly bounded on the set Y, i.e., there is \(M_f>0\) such that

$$\begin{aligned} \Vert s_f(x)\Vert \le M_f \quad \forall s_f(x) \in \partial f(x) \; \hbox {and} \; x \in Y. \end{aligned}$$ -

(c)

The functional constraints \(g_\omega : \mathbb {R}^n \rightarrow \bar{\mathbb {R}}\) are convex, not necessarily differentiable, and have bounded subgradients on the set Y, i.e., there is \(M_g>0\) such that

$$\begin{aligned} \Vert d\Vert \le M_g \quad \forall d \in \partial g_\omega (x), \; x \in Y \; \hbox {and } \; \omega \in \mathscr {A}. \end{aligned}$$

We assume, that the domains of definition of the functions f and \(g_\omega \) contain Y. It follows immediately from Assumption 1(b) that (see e.g., [13]):

Note that the conditions of Assumption 1(b) may look contradictory since the following relations need to hold:

where the second inequality follows from the convexity of f and the third one from the Cauchy-Schwartz inequality. This implies that \(\Vert x - x^*\Vert \le 2 M_f/\mu \) for any \( x \in X \subseteq Y\). Note that this inequality is always valid provided that the set Y is compact and our optimization model (1) allows us to impose such an assumption on the set Y. Moreover, when the sets \(X_\omega \) are simple for projection operation, then one may choose an alternative equivalent description of the constraint sets by letting \(g_\omega (x) = \mathrm{dist}(x,X_\omega )\) for all \(x \in \mathbb {R}^n\). Note that in this case \(d(x) = \frac{x - \varPi _{X_\omega }[x]}{\mathrm{dist}(x,X_\omega )} \in \partial g_\omega (x)\) for all \(x \not \in X_\omega \). Furthermore, \(\Vert d(x)\Vert =1\), thus the subgradients are bounded with \(M_g=1\) in this case. Therefore, our approach is more general than those from most of the existing works, which usually assume projectability of each \(X_\omega \) (see also Related works paragraph from Sect. 1).

2 Parallel Random Minibatch Subgradient Algorithm

To solve the convex problem with functional constraints (1), we first propose a subgradient method with parallel random minibatch feasibility updates. More precisely, our first algorithm is a parallel minibatch extension of the algorithm proposed in [17], leading to the following iterative process:

Here, \(\alpha _k>0\) and \(\beta >0\) are deterministic stepsizes and recall that \(s_f(x)\) denotes a subgradient of f at x and \(g_\omega ^+(x) = \max \{g_\omega (x), 0\}\). The method takes one subgradient step for the objective function, followed by N feasibility updates in parallel, which are then averaged and projected onto the set Y. In a parallel implementation, we assume available \(N+1\) cores collocated on the same machine, of which one is designated as a central core; the central core sends \(v_k\) to all other cores, which perform the update (2b) and send their updates to the central core; finally the central core performs the average step (2c) and the optimality step (2a). We note that at each of the feasibility update step N random constraints are selected from the collection of the constraint sets according to the probability distribution \(\mathbf P \), i.e., the index variable \(\omega _{k}^{i}\) is random with values in the set \(\mathscr {A}\). The vector \(d_{k}^{i}\) is chosen as \(d_{k}^{i} \in \partial g^+_{\omega _{k}^{i}}(v_{k})\) if \(g^+_{\omega _{k}^{i}}(v_{k})>0\) and \(d_{k}^{i}=d\) for some \(d \ne 0\) if \(g^+_{\omega _{k}^{i}}(v_{k})=0\). When \(g^+_{\omega _{k}^{i}}(v_{k})=0 \), we have \(z_{k}^{i}=v_k\) for any choice of \(d\ne 0\). Note that the feasibility step (2b) has the special form of Polyak’s subgradient iteration, see e.g., [23, 25]. Moreover, when \(X_\omega \) are projectable, then one chooses \(g_\omega (x) = g_\omega ^+(x) = \mathrm{dist}(x,X_\omega )\) for all \(x \in \mathbb {R}^n\) and the update (2b) becomes a usual projection step:

The initial point \(x_0\in Y\) is selected randomly with an arbitrary distribution. The projection on the set Y in the updates (2a) and (2c) is used to ensure that each \(v_k\) and \(x_k\) remain in the set Y, over which the functions f and \(g_\omega \) are assumed to have bounded subgradients. Our next assumption deals with the random variables \(\omega _k^i\) for \(i=1:N\) chosen according to the probability distribution \(\mathbf P \). For this, we introduce the sigma-field \(\mathscr {F}_{k}\) induced by the history of the method, i.e., by the realizations of the initial point \(x_0\) and the variables \(\omega _t^i\) up to main iteration k:

which contains the same information as the set \(\{x_0\}\cup \{\{v_{t}, x_t\}\mid 1\le t\le k\}\). For notational convenience, we will allow \(k=0\) by letting \(\mathscr {F}_{0}=\{x_0\}\). We impose the following assumption.

Assumption 2

There exists a constant \(c \in (0, \infty )\) such that

Assumption 2 does not require that \(J_k=\{\omega _k^1,\ldots ,\omega _k^N\}\) are conditionally independent, given \(\mathscr {F}_{k-1}\). For example, when the collection \(\mathscr {A}\) is finite, the indices \(i\in \mathscr {A}\) can be selected randomly without replacement, i.e., given the realizations of \(\omega _k^1=j_1,\ldots ,\omega _k^{i-1}=j_{i-1}\), the index \(\omega _k^i\) can be random with realizations in \(\mathscr {A}\setminus \{j_1,\ldots ,j_{i-1}\}\). As another example, the index set \(\mathscr {A}\) can be partitioned in N disjoint sets \(\cup _{i=1}^N \mathscr {A}_1=\mathscr {A}\), and each \(w_k^i\) can be uniformly distributed over the index set \(\mathscr {A}_i\). Such a sampling allows for a parallel computation of all \(z_k^i\) in the algorithm (2). One can also combine the preceding two possibilities, by using a smaller partition of the set \(\mathscr {A}\), and in each of the partitions choose the corresponding \(\omega _k^i\) sequentially, without replacement. Assumption 2 is crucial in our convergence analysis of method (2). It summarizes all the information we need regarding the distributions of the random variables \(\omega _{k}^i\) and the initial point \(x_0\). A discussion on the equivalence between the Assumption 2 and the linear regularity condition for the sets \((X_\omega )_{\omega \in \mathscr {A}}\) can be found in [14, 16, 17]. When each set \(X_\omega \) is given by a linear inequality \(a_\omega ^T x + b_\omega \le 0\), one can verify that the intersection of these halfspaces over any arbitrary index set \(\mathscr {A}\) is linearly regular provided that the sequence \((a_\omega )_{\omega \in \mathscr {A}}\) is bounded, see [5, 8]. Hence, Assumption 2 is also satisfied in this case. Moreover, Assumption 2 holds provided that the interior of the intersection over the arbitrary index set \(\mathscr {A}\) has an interior point [25]. However, Assumption 2 holds for more general sets, e.g., when a strengthened Slater condition holds for a collection of convex functional constraints \((X_\omega )_{\omega \in \mathscr {A}}\), such as the generalized Robinson condition, as detailed in Corollary 2 of [11].

2.1 Preliminary Results

In this section, we derive some preliminary results for later use in the convergence analysis of method (2). We start by recalling a basic property of the projection operation on a closed convex set \(Y\subseteq \mathbb {R}^n\) [16]:

We now show that the parameter c in Assumption 2 satisfies the following inequality:

Lemma 1

Let Assumption 1(c) and Assumption 2 hold. Then, we have:

Proof

Let \(y \in Y\) be such that \(y \not \in X\). Then, there exists \(\bar{\omega } \in \mathscr {A}\) such that the convex function \(g_{\bar{\omega }}\) satisfies \(g_{\bar{\omega }}(y) >0\). Consequently, for any \(s_g(y) \in \partial g_{\bar{\omega }}(y)\) we also have \(s_g(y) \in \partial g_{\bar{\omega }}^+(y)\), and using convexity of \(g_{\bar{\omega }}^+\), we obtain:

or equivalently

On the other hand for those \(\omega \in \mathscr {A}\) for which \(g_\omega (y) =0\) we automatically have

In conclusion, for any \(\omega \in \mathscr {A}\) there holds:

Combining the preceding inequality and Assumption 2, we obtain:

which proves our relation \(c M_g^2 \ge 1\). \(\square \)

We now derive a relation between the iterates \(v_{k+1}\) and \(x_{k}\).

Lemma 2

Let Assumptions 1(a) and 1(b) hold. Let \(v_{k+1}\) be obtained via equation (2a) for a given \(x_k\in Y\). Then, for the unique optimal solution \(x^*\) of the problem (1) and \(\rho \in (0, \; 1)\), we have:

Proof

Using the standard analysis of the projected subgradient method and the fact that the subgradients of f are uniformly bounded on Y, we have for the optimal solution \(x^*\) of (1) the following inequality, see e.g., [23, 24]:

We provide a lower bound on \(f(x_{k}) - f(x^*)\). We consider two choices, namely, one is based on the strong convexity of f and the other is based on considering another intermittent point. By the strong convexity of f, we have

where the second inequality follows from the optimality conditions for \(x^*\) and the last inequality follows from the Cauchy-Schwartz and boundedness of the subgradients of f on Y. The other choice consists of adding and subtracting \(f(\varPi _X[x_k])\), which yields

where the last inequality follows by the convexity of f and the Cauchy-Schwarz inequality. By Assumption 1(b), the subgradients of f are uniformly bounded on Y and hence, also on X, implying that

We now let \(\rho \in (0, 1)\) be arbitrary. By multiplying relation (5) with \(\rho \) and relation (6) with \((1-\rho )\), and by adding the resulting relations, we obtain

By using the estimate (7) in relation (4), we obtain

and after re-arranging some of the terms we get the relation of the lemma. \(\square \)

Remark 1

The best choice for the parameter \(\rho \) is not apparent at this point. It is important to have it in order to have the function value involved in the expression, but it can be that \(\rho =\frac{1}{2}\) will just do fine.

We next state a result that will be used to provide a basic relation between the iterates \(v_k\) and \(x_{k-1}\). The relation is stated in a generic form, and its proof can be found in [23, 24].

Lemma 3

[23, 24] Let g be a convex function over a closed convex set Z, and let y be given by

where \(d\ne 0\). Then, for any \(\bar{z} \in Z\) such that \(g^+(\bar{z})=0\), we have

In the analysis, we will also make use of the relation for averages, stating that for given vectors \(u_1,\ldots , u_N\in \mathbb {R}^n\) and their average \(\bar{u}=\frac{1}{N}\sum _{i=1}^N u_i\), the following relation is valid for any vector \(w\in \mathbb {R}^n\):

Now we provide a basic relation for the iterate \(x_k\) upon completion of the N randomly sampled feasibility updates.

Lemma 4

Let Assumption 1(a) hold. Let \(x_{k}\) be obtained via updates (2b) and (2c) for a given \(v_k\in Y\) and \(\beta >0\). Then, the following relation holds:

where \(V_N(v_k)\) is the total variation of the minibatch subgradients, i.e.,

Proof

By the projection property (3) and the definition of \(x_k\), we have for any \(y\in X\) that:

By the definition we have \(\bar{z}_k=\frac{1}{N}\sum _{i=1}^N z_{k}^{i}\). Thus, by using relation (9) for the collection \(z_k^1,\ldots ,z_k^N\), we have for any \(w\in \mathbb {R}^n\),

Letting \(w=y\) in the preceding relation and combining the resulting relation with (10), we obtain

Now, we use the definition of the iterates \(z_{k}^i\) in algorithm (2) and Lemma 3, with \(Z=\mathbb {R}^n\). Thus, we obtain for any \(y\in X\) (for which we would have \(g_{\omega _{k}^i}^+(y)=0\) for any realization of \(\omega _{k}^i\)) and for any \(i=1,\ldots ,N\),

Hence, it follows that for any \(y \in X\),

From the definition of the iterates \(z_{k}^i\) in algorithm (2), we see that

By defining

we have

Therefore, we obtain for any \(y \in X\),

The statement of the lemma follows by letting \(y=\varPi _X[v_k]\) in the preceding relation and using the fact that \(\Vert x_k - \varPi _X[x_k]\Vert \le \Vert x_{k} - \varPi _X[v_k\Vert \Vert \). \(\square \)

Let us define the following parameters:

where \(L_N^k =0\) if \(g_{\omega _k^i}(v_k) \le 0\) for all \(i=1:N\).

From Jensen’s inequality it follows that \(L_N^k \le 1\). However, there are also convex functions \(g_\omega \) such that \(L_N^k < 1\). We postpone the derivation of such examples of functional constraints satisfying condition \(L_N^k < 1\) until Sect. 2.3. The parameter \(L_N \le 1\) will play a key role in our derivations below. In particular, we obtain the following simplification for Lemma 4.

Lemma 5

Let Assumptions 1(a) and 1(c) hold. Let \(L_N \le 1\) as defined in (13) and \(x_{k}\) be obtained via updates (2b) and (2c) for a given \(v_k\in Y\) and extrapolated stepsize \(\beta \in (0, \, 2/L_N)\). Then, the following relation holds:

Proof

Note that the total variation of the minibatch subgradients \(V_N(v_k)\) can be written equivalently as:

Using the previous expression of \(V_N\) and the definitions of \(L_N^k\) and \(L_N\) from (13) in Lemma 4, we get:

By Assumption 1(c) each function \(g_i\) has bounded subgradients uniformly on Y. Hence, we have \(\Vert d_k^i\Vert \le M_g\), which used in the previous inequality implies the statement of the lemma. \(\square \)

Note that the previous result shows that we can use extrapolated stepsize \(\beta \in (0, 2/L_N)\) in minibatch settings instead of the typical \(\beta \in (0, 2)\) used e.g. in [17]. Clearly, when \(L_N<1\) we have \(2/L_N >2\) and consequently, such extrapolation will accelerate convergence of the parallel algorithm. This can be also observed in simulations (see e.g. Fig. 3 below). Moreover, the largest decrease in Lemma 5 is obtained by maximizing \(\beta (2 - \beta L_N)\), that is, the optimal stepsize is \(\beta = 1/L_N\). We now combine Lemma 2 and Lemma 5 to provide a basic relation for the subsequent analysis.

Lemma 6

Consider the method in (2), and let Assumption 1 hold. Let the stepsize \(\alpha _k\) be such that \(1 - \frac{\alpha _k\mu }{2}>0\) for all \(k\ge 0\) and stepsize \(\beta \in (0, \; 2/L_N)\), with \(L_N \le 1\) defined in (13). Then, the iterates of the method (2) satisfy the following recurrence for the optimal solution \(x^*\) and for all \(k\ge 0\):

where \(\eta >0\) is arbitrary.

Proof

Let \(x^*\in X\) be the unique optimal solution of problem (1). Then, we use Lemma 2 for \(\rho =\frac{1}{2}\) so that for all \(k\ge 0\), we have

Using the same reasoning as in the proof of Lemma 5 for the inequality (12) with \(y=x^*\) gives:

Combining the preceding two relations yields

We next approximate the term that is linear in \(\alpha _k\), i.e. \(2 \alpha _k M_f \Vert \varPi _X[x_k]- x_k\Vert \), with a sum of two quadratic terms, one of which is in the order of \(\alpha _k^2\), as:

for any arbitrary \(\eta >0\). Substituting the preceding estimate in (14), we obtain the stated relation. \(\square \)

2.2 Convergence Rates

In this section we derive the convergence rates of Algorithm (2). For this, we first provide a recurrence relation for the iterates in expectation, which is the key relation for our convergence rate results. Note that \(c M_g^2 \ge 1\) according to Lemma 1 and \(L_N \in (0, 1]\). In the sequel we provide a detailed convergence analysis for the non-trivial case \(c M_g^2 L_N > 1\). The other case, i.e. \(c M_g^2 L_N \le 1\), implies almost sure feasibility for any \(x_t\) generated by the parallel algorithm, with \(t \ge 1\), and it will be discussed in Remark 3.

Theorem 1

Consider the iterative process (2), and let Assumption 1 and Assumption 2 hold. Let the stepsizes \(\alpha _k\) be such that \(1 - \frac{\alpha _k\mu }{2}>0\) for all \(k\ge 0\) and \(\beta \in (0, \; 2/L_N)\), with \(L_N \le 1\) defined in (13), and assume \(c M_g^2 L_N> 1\). Then, for the algorithm (2), by defining \(q_N= \frac{\beta (2 - \beta L_N)}{c M_g^2} <1\), we have almost surely for all \(k\ge 0\),

Proof

From Lemma 6, by taking the conditional expectation on the past \(\mathscr {F}_{k-1}\), we have almost surely for all \(k\ge 0\),

where \(\eta >0\) is arbitrary. By Assumption 2, it follows that

Hence

Taking the conditional expectation on the past \(\mathscr {F}_{k-1}\) in the relation of Lemma 5, and using relation (16), we obtain almost surely

where we denote

Recall that we assume \(c M_g^2 L_N > 1\), then \(q_N < 1\) (since \(\max _{\beta } \beta (2-\beta L_N)=1/L_N\)). Hence, \(1-q_N>0\). By dividing with \(1-q_N\), we further obtain

Substituting the preceding estimate in relation (16), yields

We now use estimate (19) in relation (15), and thus obtain

By the definition of q (see (18)), we have

Hence,

and by letting \(\eta = \frac{1}{2} \left( 1 - \frac{\alpha _k\mu }{2}\right) \frac{q_N}{1-q_N} > 0\), the desired relation follows. \(\square \)

We now turn our attention to the stepsize \(\alpha _k\). We consider \(\alpha _k\) of the form:

for some diminishing sequence \(\gamma _k\) as detailed below. Indeed, for this choice, the recurrence from Theorem 1 becomes:

where recall that \(q_N= \frac{\beta (2-\beta L_N)}{c M_g^2}\). Let \(\gamma _k\) be given by

Since the sequence \(\gamma _k\) is decreasing, we have

implying that

Using this estimate in (20), we obtain

Next, we note that

Dividing (21) by \(\gamma _k^2\) and using the preceding inequality we have for all \(k\ge 1\), after taking total expectations and rearranging terms:

Summing these over \(k=1,\ldots , t\), for some \(t>0\), we obtain

Using the definition of \(\gamma _k\), (22) implies

We finally obtain by the linearity of the expectation operation:

Define for \(t\ge 1\) the sum

Define also the following weighted averages (convex combinations)

with \(a_k=\frac{(k+1)^2 }{S_t}\), hence satisfying \(\sum _{k=1}^t a_k=1\). Using convexity of the function f and of the norm-squared, we have

If we define \(b_N^p = q_N(1-q_N)^{-1} = (1-q_N)^{-1} -1\), then (25) becomes:

Next theorem summarizes the convergence rates followed from the previous discussion. For simplicity of the exposition, we omit the constants and express the rates only in terms of the dominant powers of t:

Theorem 2

Let Assumption 1 and Assumption 2 hold and the stepsizes \(\alpha _k = \frac{4}{\mu (k+1)}\) and \(\beta \in (0, \; 2/L_N)\), with \(L_N \le 1\) defined in (13). Let also assume \(c M_g^2 L_N> 1\). Then, \(q_N= \frac{\beta (2-\beta L_N)}{c M_g^2} <1\) and \(b_N^p = (1-q_N)^{-1} -1\). Moreover, the following sublinear rates for suboptimality and feasibility violation hold for the average sequence \( \hat{x}_t\) generated by the parallel algorithm (2):

Proof

From the recurrence (26), omitting the constants but keeping the terms depending on \(b_N^p = (1-q_N)^{-1} -1\), we get the following convergence rates in terms of these weighted averages \(\hat{w}_t\) and \(\hat{x}_t\):

Since \(\hat{w}_t\in X\) and using the Jensen’s inequality we get the following convergence rate for the feasibility violation of the constraints:

Since \(\hat{x}_t\in Y\) and \(\hat{w}_t\in X\subset Y\), by the subgradient boundedness of f on Y, it follows that

which combined with \(\mathsf {E}\left[ f(\hat{w}_t) - f^*)\right] \le \mathcal{O} \left( \frac{1}{t} + \frac{1}{b_N^p t} \right) \), yields also the following convergence rate for suboptimality

which proves our theorem. \(\square \)

We observe that the convergence estimate for the feasibility violation depends explicitly on the minibatch size N via the key parameter \(L_N\). For the optimal stepsize \(\beta = 1/L_N\) we get \(q_N = 1/c M_g^2 L_N\) and \(b_N^p = 1/ (c M_g^2 L_N -1)\). Hence, \(b_N^p\) is large provided that \(L_N \ll 1\) (small). Note that if \(L_N=1\), then \(b_N^p\) does not depend on N and hence complexity does not improve with minibatch size N. However, as long as \(L_N <1\) (and it can be also the case that \(L_N \sim 0\)), then \(b_N^p\) becomes large, which shows that minibatching improves complexity. To the best of our knowledge, this is the first time that a subgradient method with random minibatch feasibility updates is shown to be better than its non-minibatch variant. We have identified\(L_N\)as the key quantity determining whether minibatching helps (\(L_N < 1\)) or not (\(L_N = 1\)), and how much (the smaller\(L_N\), the more it helps). Note also that the suboptimality estimate contains a term which does not depend on the minibatch size N as it happens for feasibility violation estimate. This is natural, since the minibatch feasibility steps have no effect on the minimization step of the objective function.

Remark 2

Note that the convergence rates \(\mathcal{O} \left( \frac{1}{t} \right) \) for feasibility and suboptimality are known to be optimal for the stochastic subgradient method for solving the optimization problem (1) under Assumption 1, see [19, 20]. Moreover, the iterative process (2) does not require knowledge of the subgradient norm bounds \(M_f\) and \(M_g\) from Assumption 1, nor the constant c from Assumption 2. These values are only affecting the constants in the convergence rates, they are not needed for the stepsize selection. The stepsize \(\alpha _k\) requires only knowledge of some estimate of the strong convexity constant \(\mu \). Moreover, since \(L_N \le 1\), we can use e.g., stepsize \(\beta \in (0, \; 2) \subseteq (0, \; 2/L_N)\). Of course, a larger stepsize \(\beta \) leads to a faster convergence. Hence, if \(L_N < 1\) and it can be computed, then we should choose an extrapolated steplength \(\beta = (2 -\delta )/L_N\) for some \(\delta \in (0, 2)\) small. When \(L_N\) cannot be computed explicitly, we propose to approximate it online with \(L_N^k\), and use at each iteration an adaptive extrapolated stepsize \(\beta _k\) of the form \(\beta _k = (2-\delta )/L_N^k\) for some \(\delta \in (0, \; 2)\) (see also the discussion from Sect. 4, equation (36)).

Remark 3

The convergence rates from Theorem 2 hold for the non-trivial case \(q_N = \frac{\beta (2-\beta L_N)}{c M_g^2} <1\). Note that the inequality \(q_N<1\) is always satisfied, provided that \(c M_g^2 L_N> 1\). On the other hand, the case \(q_N \ge 1\) (e.g., \(c M_g^2 L_N \le 1\) and \(\beta = 1/L_N\)) turns out to be the ideal case, since then we have from (17) that \( \mathsf {E}\left[ \mathrm{dist}^2(x_{k}, X) \mid \mathscr {F}_{k-1}\right] \le 0 \quad \forall k \ge 1\). Therefore, in this ideal case we achieve almost sure feasibility for the sequence \(x_t\) generated by the parallel algorithm (see (2)) after one step:

Using this feasibility relation in the same derivations from Sect. 2.2 we also get a suboptimality estimate for the average sequence \( \hat{x}_t\) as in Theorem 2:

Clearly, from Jensen’s inequality we also have almost sure feasibility for the average sequence \( \hat{x}_t\):

We skip these details since the proof is the same as for the non-trivial case.

2.3 Example of Functional Constraints Having \(L_N<1\)

Let us recall the definition of the parameters \(L_N^k\) and \(L_N\) from (13):

From Jensen’s inequality we have \(L_N^k \le 1\) and consequently \(L_N \le 1\). On the other hand, Theorem 2 shows that \(L_N \ll 1\) is beneficial for a subgradient scheme with minibatch feasibility updates. In this section we provide an example of functional constraints \(g_\omega \) for which \(L_N < 1\). Let us consider m linear inequality constraints for the convex problem (1):

Without loss of generality we assume \(\Vert a_\omega \Vert =1\) for all \(\omega \). Let us define the matrix \(A= [a_1 \cdots a_m]^T\) and the subset of indexes selected at the current iteration \(J_k =\{ \omega ^1_k \cdots \omega ^N_k \} \subset \mathscr {A}\). We also denote \(J_k^+ = \{ \omega \in J_k: a_\omega ^T v_k + b_\omega > 0 \}\) and denote \(A_{J_k^+}\) the submatrix of A having the rows indexed in the set \(J_k^+\). With these notations and using that \(\Vert a_\omega \Vert =1\) for all \(\omega \), then \(L_N^k\) can be written explicitly as (assuming that \(|J_k^+| \ge 1\)):

where the first inequality follows from the definition of the maximal eigenvalue \(\lambda _{\max }\) of a matrix, the second inequality follows from the fact that \(J_k^+ \subseteq J_k\), and the third inequality holds strictly provided that the submatrix \(A_{J_k}\) has at least rank two. In conclusion, if the matrix A has e.g. full row rank and consider a sampling of \(J_k\) based on a given probability \(\mathbf P \), then \(L_N\) satisfies:

Note that for particular sampling rules we can compute \(L_N\) efficiently, such as when we consider a uniform distribution over a fixed partition of \(\mathscr {A}= \cup _{i=1}^\ell J_i\) of equal size. The reader may find other examples of functional constraints satisfying \(L_N<1\) and we believe that this paper opens a window of opportunities for algorithmic research in this direction.

3 Sequential Random Minibatch Subgradient Algorithm

In this section we consider a sequential variant of the algorithm (2) defined in terms of the following iterative process:

This method takes, as for the parallel variant, one subgradient step for the objective function, followed by Nsequential feasibility updates. As before, the vector \(d_{k}^{i}\) is chosen as \(d_{k}^{i} \in \partial g^+_{\omega _{k}^{i}}(z_{k}^{i-1})\) if \(g^+_{\omega _{k}^{i}}(v_{k})>0\), and \(d_{k}^{i}=d\) for some \(d \ne 0\) if \(g^+_{\omega _{k}^{i}}(z_{k}^{i-1})=0\). Note that in this variant, the feasibility updates use the projection on Y in order to confine the intermittent iterates \(z_k^i\) and \(x_k\) to the set Y, where \(g_\omega \)’s and f (for the last step) are assumed to have uniformly bounded subgradients.

In this section we analyze the convergence properties of this new algorithm (28). Given \(x_{k-1}\), the update of \(v_k\) is the same as in the parallel method (2), thus Lemma 2 still applies here. We need an analog of Lemma 5.

Lemma 7

Let Assumptions 1(a) and 1(c) hold. Let \(x_{k}\) be generated by algorithm (28) with \(\beta \in (0, \; 2)\). Then, the following relations are valid:

Proof

We start with the definition of \(z_{k}^i\) in (28b) and Lemma 3, with \(Z=Y\). Thus, we obtain for all \(y\in X\) (which satisfies \(g_{\omega _{k}^i}^+(y)=0\) for any realization of \(\omega _{k}^i\)) and for all \(i=1,\ldots ,N\),

By using \(\Vert d_k^i\Vert ^2 \le M_g^2\), we have for all \(i=1,\ldots ,N\),

The distance relation for z-iterates follows by taking the minimum over \(y\in X\) on both sides of inequality (29). By summing relations (29) over \(i=1,\ldots ,N\), and by using \(z^0_k=v_k\) and \(z^N_k=x_k\), we obtain for any \(y\in X\),

The distance relation follows by taking the minimum over \(y\in X\) on both sides of the preceding inequality. \(\square \)

Taking \(\rho =1/2\) in Lemma 2 we get:

and using the inequality for \(\Vert x_k-y\Vert ^2\) from Lemma 7 in \(y=x^*\), yields:

Taking the conditional expectation on \(\mathscr {F}_{k-1}\) and \(z_k^{i-1}\), and using Assumption 2, give

Using the iterated expectation rule, we obtain

which, when combined with the distance relation of Lemma 7 gives for all \(i=1,\ldots ,N\)

Recall that \(c M_g^2 \ge 1\) according to Lemma 1. In the subsequent analysis we consider the non-trivial case \(cM_g^2 >1\). The ideal case \(cM_g^2 =1\) will allow to get feasibility in expectation in one step and obtain a similar convergence rate result as in Remark 3. Hence, using the definition of \(x_k\), i.e., \(x_k=z_k^N\), and letting \(q=\frac{\beta (2-\beta )}{c M_g^2} \in (0,1)\) (since we assume \(cM_g^2 >1\) and \(\beta \in (0,2)\)), we have for all \(i=1,\ldots ,N\),

implying that for all \(i=1,\ldots ,N\),

From (31) and (32) for all \(i=1,\ldots ,N\),

By summing over i

However,

Finally, we get

and consequently

Let us denote \(b_N^s= (1-q)^{-N}-1\). It is clear that \(b_N^s \rightarrow \infty \) as \(N \rightarrow \infty \). Taking expectation in (30) and using the previous inequality we get an analog of Lemma 6:

for any \(\eta >0\). Let us consider the same stepsize as for the parallel scheme, i.e. \(\alpha _k = \frac{2}{\mu } \gamma _k\), choose \(\eta = \frac{1}{2}\left( 1 - \frac{\alpha _k\mu }{2}\right) b_N^s > 0\), and take the full expectation, to get the following recurrence (analog to Theorem 1):

Using now \(\gamma _k = \frac{2}{k+1}\), then \(1-\gamma _k\ge \frac{1}{3}\) and we get:

Since, \(\frac{1- \gamma _k}{\gamma _k^2}\le \frac{1}{\gamma _{k-1}^2}\) for all \(k\ge 1\), dividing (33) by \(\gamma _k^2\) and using the preceding inequality we have for all \(k\ge 1\):

Summing these over \(k=1,\ldots , t\), for some \(t>0\), we obtain the following recurrence relation for the algorithm (28):

Using the same definition for the weighted averages \(\hat{w}_t\) and \(\hat{x}_t\) from (24) and \(\gamma _k =\frac{2}{k+1}\) in (34), we get the main recurrence for the sequential variant (28):

Next theorem summarizes the convergence rates that follow from the recurrence relation (35) of the sequential algorithm (28).

Theorem 3

Let Assumption 1 and Assumption 2 hold and the stepsizes \(\beta \in (0,2)\) and \(\alpha _k = \frac{4}{\mu (k+1)}\). Let also \(q= \frac{\beta (2-\beta )}{c M_g^2} <1\) and \(b_N^s= (1-q)^{-N}-1\). Then, the following sublinear rates for suboptimality and feasibility violation hold for the average sequence \( \hat{x}_t\) from (24) generated by the sequential algorithm (28):

Proof

Defining the same average sequences \(\hat{w}_t\) and \(\hat{x}_t\) as in (24), we get the following convergence rates (omitting the constants but keeping the terms depending on \(b_N^s\)):

Hence, we get the following convergence rate for the feasibility violation of the constraints that depends explicitly on the minibatch size N via the term \(b_N\):

Using the same reasoning as in the proof of Theorem 2, we also get the following convergence rate for suboptimality:

which proves the statements of the theorem. \(\square \)

We observe that also for the sequential algorithm (28) the convergence estimate for the feasibility violation depends explicitly on the minibatch size N via the term \(b_N^s\) (recall that \(b_N^s \rightarrow \infty \) as \(N \rightarrow \infty \)). Since \(b_N^s\) is an increasing sequence in N, it follows that the larger is the minibatch size N the better is also the complexity of the sequential algorithm (28) in terms of constraints feasibility. In conclusion, for the sequential variant our rates prove that minibatching always helps and the feasibility estimate depends exponentially on the minibatch size N. On the other hand, the suboptimality estimate contains a term which does not depend on the minibatch size N as it happens for feasibility violation estimate. Recall that for the parallel algorithm we proved that minibatching works only for \(L_N < 1\) and the estimates depend linearly on \(L_N\).

4 Extensions

In this section we discuss some possible extensions of the framework presented in this paper related to the objective function, algorithms and stepsizes. Some of these extensions will be considered in our future work.

First, from our convergence analysis it is easy to note that the derivations still remain valid for a larger class of objective functions in the model (1). More precisely, we can replace the boundedness on the subgradients of f (Assumption 1(b)), i.e. \( \Vert s_f(x)\Vert \le M_f\), with a more general assumption, that is there exist two constants \(M_{f,1}, M_{f,2} \ge 0\) such that the (sub)gradients of f satisfy the following inequality:

Clearly, this condition covers the class of functions with bounded subgradients, e.g. take \(M_{f,2}=0\), and also the class of functions with Lipschitz continuous gradients [20]. Indeed, if there is \(L_f>0\) such that the gradients \(\nabla f\) satisfy:

then we immediately get

which proves our inequality for \(M_{f,1} = \max _{x \in X^*} \Vert \nabla f(x^*)\Vert \) and \(M_{f,2} = L_f\). Our convergence analysis can be easily adapted for this more general assumption, however, the recurrence relations will be more cumbersome. For example, the recurrence from Lemma 2 becomes now:

Second, when the objective function f has an easy proximal operator we can replace the subgradient steps (2a) and (28a) by a proximal point step:

An algorithm combining the proximal point step with a single feasibility step (i.e., \(N=1\)) has been considered in [22] and convergence rates of order \(\mathcal {O}(1/t)\) have been proved provided that the objective function is smooth (i.e., it has Lipschitz continuous gradient) and strongly convex. Note that it is easy to extend that convergence analysis to the minibatch settings following the framework developed in this paper.

Third extension is still related to the objective function, by considering f in the composite form, i.e.:

where \(f_1\) is smooth and \(f_2\) can be non-smooth but admits an easy proximal operator. Note that if the set Y is present in the optimization model (1), then it can be included in \(f_2\) as the indicator function. For this composite objective function, steps (2a) and (28a) can be replaced by:

Note that for \( f_{2}(x) = 1_{Y}(x)\), the indicator function of the convex set Y, we recover the updates (2a) and (28a). Hence, it will be interesting to extend our convergence analysis to this general composite objective function f.

Finally, in the parallel algorithm (see (2)) the feasibility steps depend on an extrapolated stepsize \(\beta \in (0, \, 2/L_N)\). When \(L_N\) cannot be computed explicitly, we propose to approximate it online with \(L_N^k\), and use at each iteration an adaptive extrapolated stepsize \(\beta _k\) of the form:

for some \(\delta \in (0, \; 2)\) sufficiently small. The convergence rate of the parallel algorithm for this adaptive choice (36) of the stepsize \(\beta _k\) will be analyzed in our future work (see e.g., [15] for some preliminary results related to the convex feasibility problem).

5 Preliminary Numerical Results

Many data-driven optimization applications can be formulated as convex optimization problems with the objective function composed of a quadratic term and a regularizer and constraints (so-called constrained Lasso) of the form:

where the problem is parametrised by the data (measurements) y, H is an appropriate linear operator (e.g., the forward operator, the circular convolution) and D is another linear operator (e.g., the identity, the finite difference or the Wavelet transform). Additionally, we impose constraints of the form \(x \in X=[l, \; u] \cap \{ x: \; Ax + b \le 0 \}\), where \(A \in \mathbb {R}^{m \times n}\). The constrained Lasso arises e.g., in image deblurring or denoising, computerised tomography or some inverse problems, see.e.g [4]. Note that for this formulation the strong convexity Assumption 1(b) holds for full column matrices H (see e.g., [13]) and also the linear regularity Assumption 2 holds (see e.g. [14]). Moreover, the set \(Y=[l,\, u]\) is compact so that the objective function has bounded subgradients and the functional constraints \(g_\omega (x) = a_\omega ^T x + b_\omega \) are linear and, consequently, Assumption 1 also holds.

In our experiments we use synthetic data, where H is Toeplitz-like matrix and D the finite difference operator (as in image deblurring [4]). We also generate A randomly, with \(m=3n\) constraints. We consider a partition of \(\mathscr {A}=\{1, 2, \cdots , m\} \) of equal size N, i.e., \(\mathscr {A}=\cup _{i=1}^\ell J_i\). Hence, \(m= N \cdot \ell \). We compute \(L_N\) as in (27) for this partition. We consider full iterations, i.e. we plot the behavior of the algorithms over epochs tN / m (number of passes over all the rows of matrix A).

In the first set of experiments we compare the parallel (see (2)) and sequential (see (28)) algorithms for different minibatch sizes \(N=1, 50\) and 100 on a constrained Lasso problem with \(n=10^3\). The plots in Fig. 1 present the convergence behavior of these algorithms in terms of feasibility violation of the average point over full iterations tN / m: parallel algorithm (left) and sequential algorithm (right). As we can see from Fig. 1, increasing the minibatch size N usually leads to better convergence for both algorithms.

Then, we compare the parallel algorithm with the extrapolated stepsize \(\beta =1.9/L_N\) and the sequential algorithm with \(\beta =1.9\). The results on a problem of dimension \(n=10^3\) and minibatch size \(N=10\) are displayed in Fig. 2: suboptimality (left) and feasibility violation (right) in the average point over full iterations. We observe a faster convergence for the sequential algorithm, as our theory also predicted.

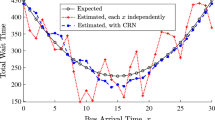

Finally, we compare the parallel algorithm (2) based on our extrapolated stepsize \(\beta =1.9/L_N\) and a variant with fixed stepsize \(\beta =1.9\). The results on a constrained Lasso problem of dimension \(n=10^3\) and minibatch size \(N=10\) are displayed in Fig. 3: suboptimality (left) and feasibility violation (right). We observe that extrapolation \(\beta = 1.9/L_N > 2\) accelerates substantially the parallel algorithm in terms of feasibility criterion. Note also that all the plots show a \(\mathcal {O}(1/t)\) rate for the average sequence in the feasibility criterion, thus supporting our theoretical findings.

6 Conclusions

In this paper we have considered (non-smooth) convex optimization problems with (possibly) infinite intersection of constraints. For solving this general class of convex problems we have proposed subgradient algorithms with random minibatch feasibility steps. At each iteration, our algorithms take first a step for minimizing the objective function and then a subsequent step minimizing the feasibility violation of the observed minibatch of constraints. The feasibility updates were performed based on either parallel or sequential random observations of several constraint components. For a diminishing stepsize and for strongly convex objective functions, we have proved sublinear convergence rates for the expected distances of the weighted averages of the iterates from the constraint set, as well as for the expected suboptimality of the function values along the weighted averages. Our convergence rates are optimal for subgradient methods with random feasibility steps for solving this class of non-smooth convex problems. Moreover, the rates depend explicitly on the minibatch size. From our knowledge, this work is the first deriving conditions when minibatching works for subgradient methods with random minibatch feasibility updates and proving how better is their complexity compared to the non-minibatch variants. Finally, our convergence analysis shows that for the sequential algorithm minibatching always helps and the feasibility estimate depends exponentially on the minibatch size, while for the parallel algorithm we proved that minibatching works only when some parameter of the optimization problem is strictly less than 1. The numerical results also support the convergence results.

References

Blatt, D., Hero, A.O.: Energy based sensor network source localization via projection onto convex sets. IEEE Trans. Signal Process. 54(9), 3614–3619 (2006)

Bauschke, H., Borwein, J.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38(3), 367–376 (1996)

Bot, R.I., Hendrich, C.: A double smoothing technique for solving unconstrained nondifferentiable convex optimization problems. Comput. Optim. Appl. 54(2), 239–262 (2013)

Bot, R.I., Hein, T.: Iterative regularization with general penalty term—theory and application to \(L_1\) and TV regularization. Inverse Probl. 28(10), 1–19 (2012)

Burke, J., Ferris, M.: Weak sharp minima in mathematical programming. SIAM J. Control Optim. 31(6), 1340–1359 (1993)

Bianchi, P., Hachem, W., Salim, A.: A constant step forward-backward algorithm involving random maximal monotone operators, (2017) arxiv preprint (arXiv:1702.04144)

Bertsekas, D.P.: Incremental proximal methods for large scale convex optimization. Math. Program. 129(2), 163–195 (2011)

Fercoq, O., Alacaoglu, A., Necoara, I., Cevher, V.: Almost surely constrained convex optimization. In: International Conference on Machine Learning (ICML) (2019)

Keys, K., Zhou, H., Lange, K.: Proximal distance algorithms: theory and practice. J. Mach. Learn. Res. 20(66), 1–38 (2019)

Kundu, A., Bach, F., Bhattacharya, C.: Convex optimization over inter-section of simple sets: improved convergence rate guarantees via an exact penalty approach. In: International Conference on Artificial Intelligence and Statistics (2018)

Lewis, A., Pang, J.: Error bounds for convex inequality systems. In: Crouzeix, J., Martinez-Legaz, J., Volle, M. (eds.) Generalized Convexity, Generalized Monotonicity, pp. 75–110. Cambridge University Press, Cambridge (1998)

Moulines, E., Bach, F.R.: Non-asymptotic analysis of stochastic approximation algorithms for machine learning. Advances in Neural Information Processing Systems (2011)

Necoara, I., Nesterov, Yu., Glineur, F.: Linear convergence of first order methods for non-strongly convex optimization. Math. Program. 175(1–2), 69–107 (2018)

Necoara, I., Richtarik, P., Patrascu, A.: Randomized projection methods for convex feasibility problems: conditioning and convergence rates. SIAM J. Optim. 28(4), 2783–2808 (2019)

Necoara, I., Nedić, A.: Minibatch stochastic subgradient-based projection algorithms for solving convex inequalities, arxiv preprint (2019)

Nedić, A.: Random projection algorithms for convex set intersection Problems. In: IEEE Conference on Decision and Control, pp. 7655–7660 (2010)

Nedić, A.: Random algorithms for convex minimization problems. Math. Program. 129(2), 225–273 (2011)

Nemirovski, A., Juditsky, A., Lan, G., Shapiro, A.: Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 19(4), 1574–1609 (2009)

Nemirovski, A., Yudin, D.: Problem Complexity Method Efficiency in Optimization. Wiley, New York (1983)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Kluwer, Boston (2004)

Ouyang, H., Gray, A.: Stochastic smoothing for nonsmooth minimizations: accelerating SGD by exploiting structure, (2012) arxiv preprint (arXiv:1205.4481)

Patrascu, A., Necoara, I.: Nonasymptotic convergence of stochastic proximal point algorithms for constrained convex optimization. J. Mach. Learn. Res. 18(198), 1–42 (2018)

Polyak, B.T.: A general method of solving extremum problems. Doklady Akademii Nauk USSR 174(1), 33–36 (1967)

Polyak, B.T.: Minimization of unsmooth functionals. USSR Comput. Math. Math. Phys. 9, 14–29 (1969)

Polyak, B.: Random algorithms for solving convex inequalities. Stud. Comput. Math. 8, 409–422 (2001)

Polyak, B.T., Juditsky, A.B.: Acceleration of stochastic approximation by averaging. SIAM J. Control Optim. 30(4), 838–855 (1992)

Rockafellar, R.T., Uryasev, S.P.: Optimization of conditional value-at-risk. J. Risk 2, 21–41 (2000)

Shefi, R., Teboulle, M.: Rate of convergence analysis of decomposition methods based on the proximal method of multipliers for convex minimization. SIAM J. Optim. 24(1), 269–297 (2014)

Tran-Dinh, Q., Fercoq, O., Cevher, V.: A smooth primal-dual optimization framework for nonsmooth composite convex minimization. SIAM J. Optim. 28(1), 96–134 (2018)

Vapnik, V.: Statistical Learning Theory. Wiley, New York (1998)

Wang, M., Chen, Y., Liu, J., Gu, Y.: Random multiconstraint projection: stochastic gradient methods for convex optimization with many constraints, (2015) arxiv preprint (arxiv:1511.03760)

Acknowledgements

This research was supported by the National Science Foundation under CAREER Grant CMMI 07-42538 and by the Executive Agency for Higher Education, Research and Innovation Funding (UEFISCDI), Romania, PNIII-P4-PCE-2016-0731, project ScaleFreeNet, No. 39/2017.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Nedić, A., Necoara, I. Random Minibatch Subgradient Algorithms for Convex Problems with Functional Constraints. Appl Math Optim 80, 801–833 (2019). https://doi.org/10.1007/s00245-019-09609-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00245-019-09609-7