Abstract

Movement corrections to somatosensory targets have been found to be shorter in latency and larger in magnitude than corrections to external visual targets. Somatosensory targets (e.g., body positions) can be identified using both tactile information (i.e., from skin receptors) and proprioceptive information (e.g., the sense of body position derived from sensory organs in the muscles and joints). Here, we investigated whether changes in tactile information alone, without changes in proprioception, can elicit shorter correction latencies and larger correction magnitudes than those to external visual targets. Participants made reaching movements to a monofilament touching the index finger of the non-reaching hand (i.e., a tactile target) and to a light-emitting diode (i.e., a visual target). In one-third of the trials, target perturbations occurred 100 ms after movement onset, such that the target was displaced 3 cm either away from or toward the participant. We found that participants demonstrated larger correction magnitudes to visual than to tactile target perturbations. Moreover, we found no differences in correction latency between movements to perturbed tactile and visual targets. Further, we found that while participants detected tactile stimuli earlier than visual stimuli, they took longer to initiate reaching movements to an unperturbed tactile target than to an unperturbed visual target. These results provide evidence that additional processes may be required when planning movements to tactile versus visual targets and that corrections to changes in tactile target positions alone may not facilitate the latency and magnitude advantages observed for corrections to somatosensory targets (i.e., proprioceptive-tactile targets).

Introduction

In our everyday actions, we interact with objects that move relative to our environment and/or our body. For example, when the path of a beach volleyball is altered by the wind, a player must adjust their initial action to make good contact with the ball. Likewise, a spectator in the stands can quickly grasp their hat when they feel that the force of the wind may blow it away. In the first example, an individual would use visual feedback to make limb corrections to the changing location of the volleyball, while in the second example the individual would rely primarily on tactile information from the skin to detect changes in their hat’s position. While much motor control research has been devoted to trajectory corrections based on visual target information (e.g., Smeets and Brenner 1995; Brenner and Smeets 1997; Saunders and Knill 2003; Cressman et al. 2006; Franklin et al. 2016), fewer studies have investigated limb trajectory amendments based on somatosensory information (i.e., proprioception, tactile afference; e.g., Bernier et al. 2007; Sober and Sabes 2005; Sarlegna and Sainburg 2007).

Previous studies have found that movements to somatosensory targets (i.e., consisting of both proprioceptive and tactile information) are planned and controlled differently than movements to external visual targets (Manson et al. 2019; Bernier et al. 2007; Sober and Sabes 2005; Sarlegna and Sainburg 2007). For example, Manson et al. (2019) compared limb-trajectory amendments in response to the motion of a visual target (LED) or a somatosensory target (i.e., tip of the index finger on the non-reaching limb), in the absence of vision of the reaching limb. On some trials, the target position was shifted after movement onset (~ 100 ms or 200 ms) and the latency and magnitude of corrections to the new target position were measured. The authors found longer correction latencies in response to visual target displacements than to somatosensory target displacements. Also, the magnitude of the correction at the end of the limb trajectory was smaller for the visual than for the somatosensory target displacements. The authors interpreted the differences in the latency and magnitude findings across target modalities as evidence that different correction mechanisms, perhaps relying on different sensorimotor pathways (i.e., visual, non-visual), are used for movements to visual and somatosensory targets. For corrections to somatosensory targets, the authors suggested that faster, non-visual sensorimotor pathways were used (see also: Scott et al. 2015). In contrast, for corrections to visual targets, the authors suggested that slower visual sensorimotor pathways were employed (see also: Reichenbach et al. 2009).

Although correction latencies were shorter and correction magnitudes were larger for somatosensory targets (i.e., the combination of proprioception and tactile afference) as compared to external visual targets, it remains unclear whether changes in tactile information alone are sufficient to produce these correction advantages. In contrast to studies examining somatosensory-based corrections, previous work has found that the latency and magnitude of tactile-based movement corrections were not different than those of visual-based corrections (Pruszynski et al. 2016). In a study by Pruszynski et al. (2016), participants performed reaching movements to an external target. Critically, information about the shift in target position was presented either visually (i.e., participants could see the target shift) or via tactile inputs (i.e., participants felt the displacement of a rod on the thumb of their non-reaching hand). The authors reported no significant differences in either the latency or magnitude of movement corrections between tactile and visual shift presentation modalities. The absence of behavioral differences led the authors to hypothesize that there may be an overlap in the sensorimotor pathways responsible for tactile- and visual-based movement corrections. It is worth noting that tactile inputs in the work by Pruszynski et al. (2016) provided information about the movement of an external target. Thus, in Pruszynski et al. (2016), tactile inputs provided an indirect indication of the change in target position. It is not known whether tactile-based corrections are different than visual-based corrections when tactile inputs are a direct reflection of changes in target position (i.e., targets located on the body). Perhaps corrections to changes in tactile targets on the body would yield the correction latency and correction magnitude advantages shown when individuals correct to somatosensory targets (e.g., Manson et al. 2019).

In the present study, we investigated the latencies and magnitudes of movement corrections to changes in tactile information on the body and external visual information. Target perturbations occurred either toward or away from the body. If the advantages in latency and magnitude, when correcting to a body location, are attributable to both proprioceptive and tactile modalities, then there should be shorter correction latencies and larger correction magnitudes in response to tactile than visual target perturbations, with no differences in target modality across the direction of target perturbation. Alternatively, if the advantages in latency and magnitude, when correcting to a body location, are primarily due to the proprioceptive modality, then correction latencies and magnitudes in response to tactile target perturbations should not be shorter or larger than correction latencies and magnitudes in response to visual target perturbations.

Methods

Participants

Twenty participants (15 women; age range: 18–35) were recruited from the University of Toronto community. All participants were right-handed as assessed by the Edinburgh handedness questionnaire (adapted from Oldfield 1971), were self-declared neurologically healthy, and had normal or corrected-to-normal vision.

Informed consent was obtained prior to the onset of the experiment and the study was approved by the University of Toronto Research Ethics committee. The experiment took 1.5 h to complete, and the participants were compensated $15 CAD for their time.

Apparatus

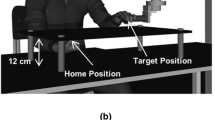

The experimental setup is shown in Fig. 1. The experiment took place at the Sensorimotor Integration Lab at the University of Toronto. Participants were seated in a dark room on an adjustable kneeling chair in front of a table (height: 75.5 cm). Placed on the table was a custom-built aiming surface. The aiming surface consisted of a sheet of black tinted Plexiglass (60 cm wide by 45 cm long by 0.5 cm thick) mounted 12 cm above a wooden base. A textured home position (2 cm by 2 cm) was located on the underside of the aiming surface. Facing the table was a protective cage containing a Selectively Compliant Assembly Robot Arm (SCARA; Epson E2L853, Seiko Epson Corp., Tokyo, Japan). The end-effector of the robot was used to present the target stimulus for both the tactile and visual targets. The tactile target was the felt position of a nylon monofilament (size: 6.65 units; Rolyan Semmes-Weinstein Monofilaments; Smith & Nephew Inc, Germantown, USA) on the medial side of the index finger of the non-reaching hand (Fig. 1). The visual target was a green LED light (6 mm in diameter). Only one target modality was presented at a time. Specifically, during the tactile condition, only the tactile target was available and during the vision condition, only the visual target was available. When the robot was in a neutral position, the target attached to the robot arm was positioned 0.5 cm above the aiming surface and 30 cm to the left of the home position.

Fig. 1 Depictions of the experimental setup (not to scale). a Front-facing view of the participant and experimental apparatus, including the reaching hand underneath the aiming board and the target hand holding onto the robot arm for the tactile target modality. b Bird’s eye view of the participant and experimental setup with the home position, original target position at 30 cm from the home position (middle of the side of the left index finger), and the possible directions of target perturbations along the axis of perturbation (away and toward indicated by an arrow). The robotic device used to perturb the target location for both the tactile (monofilament) and visual (LED) target modalities is also depicted. Participants performed underhanded reaching movements from the home position to the target position, hence the unseen reaching hand underneath the board.

The participant’s right index finger (i.e., reaching finger) and the robot arm were affixed with an infrared light emitting diode (IRED) which was monitored by an Optotrak Certus (Northern Digital Inc., Waterloo, Ontario, Canada) motion tracking system sampling at 200 Hz. A custom MATLAB script (version 7.10, R2010a, Mathworks Inc., Natick, MA, USA) was used to send outputs to both the aiming console and robot effector, as well as to collect motion tracking data. A piezoelectric buzzer (SC628, Mallory Sonalert Products Inc., Indianapolis, Indiana, USA) was used to provide the participant with auditory cues regarding trial onset and feedback regarding movement time. The piezoelectric buzzer and the visual target stimulus (i.e., LED) were activated using MATLAB (version 7.10) and a custom-made parallel port connection.

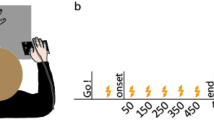

Procedure

The experiment included two target modalities (tactile, vision). The presentation of each target modality was blocked, and the order of blocks was counterbalanced across participants. For each target modality, a reaching task and a detection task were performed. The reaching task was always performed prior to the detection task. In the reaching task, participants were instructed to reach the target (i.e., tactile, visual) as accurately as possible within a time bandwidth of 450–600 ms (i.e., movement onset to movement end). The movement time bandwidth was chosen to minimize speed-accuracy trade-offs. In the detection task, participants were asked to lift their finger from the home position as soon as they detected a change in the target position.

Reaching task protocol

In the reaching task, participants performed reaching movements from the home position to the target location. Reaching movements were made freely underneath the aiming surface, and participants were instructed to reach the target as accurately as possible.

For both target modalities, the target was presented 30 cm to the left of the home position. The right index finger (i.e., reaching finger) was placed on the home position located underneath the aiming surface. In each trial, participants performed an underhanded reaching movement from the home position to the target position. Participants were instructed to keep all fingers on the reaching hand in a fist except for the index finger. Participants were also instructed to stay within a 450–600 ms movement time bandwidth. If the participant’s movement time fell within the movement time bandwidth, they were presented with two short 50 ms beeps as auditory feedback. Alternatively, they heard three short 50 ms beeps when the movement time was too short, which occurred on 8.7% of the tactile target trials and 9.8% of the visual target trials. Participants heard one long 100 ms beep if the movement time was too long, which occurred on 14.1% of the tactile target trials and 12.8% of the visual target trials. Trials outside of the bandwidth were still included in the analyses. The auditory feedback also served as an instruction to move back to the home position.

For both target modality conditions, there were two types of reaching trials: unperturbed and perturbed (i.e., perturbation) reaching trials. In the unperturbed reaching trials, the target remained stationary throughout the duration of the trial. In the perturbation trials, the target was perturbed either 3 cm away from or 3 cm toward the participant’s body, approximately 95 ms (SD = 4 ms) after movement onset. Because robot movement was audible, target perturbations were presented in one of two directions (i.e., away, toward) to prevent anticipatory corrections.

Movement onset was defined as the time at which the effector was moving above 3 cm/s for 10 ms. For the perturbation trials, participants were instructed to make corrections to the new target location as quickly and as accurately as possible.
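The onset criterion above can be sketched in a few lines. This is a minimal illustration, not the authors' MATLAB script: the function name, the argument names, and the reading of "for 10 ms" as two consecutive samples at 200 Hz are assumptions made for the example.

```python
def movement_onset_index(speed_cm_s, sample_rate_hz=200,
                         threshold_cm_s=3.0, min_duration_ms=10):
    """Return the index of the first sample that starts a run of
    above-threshold speed lasting at least min_duration_ms, or None.

    Assumption: at 200 Hz, 10 ms is treated as 2 consecutive samples.
    """
    n_required = max(1, round(min_duration_ms * sample_rate_hz / 1000))
    run = 0
    for i, speed in enumerate(speed_cm_s):
        # Extend the current run, or reset it when speed drops below threshold.
        run = run + 1 if speed > threshold_cm_s else 0
        if run == n_required:
            return i - n_required + 1
    return None


# A single brief crossing (index 2) is ignored; the sustained run starts at index 4.
speeds = [0.0, 1.0, 4.0, 2.0, 5.0, 6.0, 7.0]
onset = movement_onset_index(speeds)
onset_ms = onset * 1000 / 200
```

Requiring a sustained run rather than a single above-threshold sample makes the criterion less sensitive to isolated noise spikes in the speed signal.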

In both target modality conditions, two phases were conducted: a familiarization phase and an experimental phase. In the familiarization phase, participants performed 10 trials to acclimate themselves to the reaching task, movement time bandwidth, and auditory feedback. There were 6 no-perturbation trials and 4 perturbation trials (2 trials with the target perturbed away, 2 trials with the target perturbed toward). In the experimental phase, participants performed 90 trials, with 60 no-perturbation trials and 30 perturbation trials (15 trials with the target perturbed away, 15 trials with the target perturbed toward). In both phases, the perturbation and no-perturbation trials were pseudo-randomized such that perturbation trials did not occur more than twice in a row. Overall, the smaller proportion of perturbation trials (i.e., one-third of all trials), in addition to the two perturbation directions (i.e., away or toward), made it more difficult for participants to anticipate and plan for target perturbation trials.
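One way to generate a trial order satisfying the constraint described above is rejection sampling: shuffle the trial list and keep the first shuffle in which perturbation trials never occur more than twice in a row. The sketch below is illustrative only; the source does not state how the sequences were generated, and the function names, labels, and seed argument are hypothetical.

```python
import random


def max_run(sequence, is_member):
    """Length of the longest run of consecutive items satisfying is_member."""
    longest = run = 0
    for item in sequence:
        run = run + 1 if is_member(item) else 0
        longest = max(longest, run)
    return longest


def make_trial_order(n_none=60, n_away=15, n_toward=15,
                     max_consecutive=2, seed=None, max_attempts=100_000):
    """Shuffle until perturbation trials never exceed max_consecutive in a row."""
    rng = random.Random(seed)
    trials = ["none"] * n_none + ["away"] * n_away + ["toward"] * n_toward
    for _ in range(max_attempts):
        rng.shuffle(trials)
        if max_run(trials, lambda t: t != "none") <= max_consecutive:
            return list(trials)
    raise RuntimeError("no valid order found; relax the constraint")


order = make_trial_order(seed=1)
```

Rejection sampling keeps every valid ordering equally likely, at the cost of discarding some shuffles before one passes the check.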

Detection task protocol

Following the completion of the reaching task, participants performed a detection task. The purpose of the detection task was to assess if target modality influenced the time it took participants to detect and respond to the onset of target motion (e.g., Donders 1868). For the tactile and visual detection tasks, participants responded to the movement of the tactile target or the visual target, respectively.

In the detection trials, the target was perturbed either 3 cm away or 3 cm toward the participant’s body, or remained stationary (i.e., catch trials). Target perturbations would occur after a random foreperiod (~ 100–200 ms), following the start of the trial. Participants were instructed to lift their right index finger from the home position as soon as they perceived target motion. The participants reacted to all the perturbation trials and none of the participants reacted to the catch trials. Once the robot returned to its neutral position, participants were instructed to bring their index finger back onto the home position.

There was a total of 35 trials in each target modality condition, with 2 trials in the familiarization phase, and 33 trials in the experimental phase. In the familiarization phase, participants performed 2 perturbation trials (1 trial with the target perturbed away, 1 trial with the target perturbed toward) to familiarize themselves with the detection task. The experimental trials consisted of 30 perturbation trials (15 trials with the target perturbed away, 15 trials with the target perturbed toward), and 3 no-perturbation trials (i.e., catch trials). The perturbation and catch trials were pseudo-randomized such that catch trials did not occur two times consecutively.

Data analysis

Reaching task protocol

Trials were excluded if the participant’s movement times, reaction times, endpoint errors in the amplitude axis (i.e., initial movement axis) or endpoint errors in the direction axis (i.e., axis of target perturbation), exceeded 3 standard deviations above the mean. The means were calculated within each target modality, for each participant. Perturbation trials were also excluded if the robot did not move (this rarely occurred). An average of 6% of reaching trials were excluded for each participant. Because some participants began their movements prior to the ‘Go signal’, the kinematic data from movement start—reaction time (RT), movement time (MT), time to peak velocity (TtPV), peak velocity (PV), time after peak velocity (TaPV)—were not available for 4 participants. These four participants were still included in the examination of movement endpoints (i.e., correction magnitude, endpoint variable error).
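The exclusion rule can be illustrated as follows. This is a sketch under stated assumptions: the cutoff is one-sided (values more than 3 standard deviations above the mean), the sample standard deviation is used, and the function name is hypothetical. In the study the rule was applied per participant and target modality to each of the listed measures.

```python
import statistics


def exclude_high_outliers(values, n_sd=3.0):
    """Keep values that do not exceed mean + n_sd * SD.

    Assumptions: one-sided cutoff and sample SD (n - 1 denominator).
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    cutoff = mean + n_sd * sd
    return [v for v in values if v <= cutoff]


# Twenty movement times of 500 ms and one of 2000 ms: only the latter is removed.
movement_times_ms = [500.0] * 20 + [2000.0]
kept = exclude_high_outliers(movement_times_ms)
```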

Comparisons of no-perturbation trials

Prior to the examination of the main dependent variables, paired samples t-tests were conducted to examine the effect of target modality on variables pertaining to reaching kinematics (RT, TtPV, TaPV, PV), and movement endpoints (movement amplitude; variable error). The purpose of this step was to investigate whether there were any differences between movements to tactile or visual targets, in the absence of perturbations.

Correction latency

Correction latency was calculated as the time difference between the onset of target motion, which was after movement onset, and the onset of upper-limb corrections towards the direction of the moving target (Fig. 2). Using the acceleration and velocity profiles of reaching movements in the axis of perturbation, correction latency was computed via a custom MATLAB script. Specifically, movement position data in the axis of perturbation were used to compute a velocity and acceleration profile for each individual trial. Movement position data were collected at a frequency of 200 Hz for 3.0 s, which generated 600 samples of position data for each movement trajectory. The movement position data were subjected to a second-order recursive bidirectional Butterworth filter with a low-pass cutoff frequency of 12.5 Hz. Subsequently, position data in the axis of perturbation were differentiated to obtain velocity and acceleration profiles for each reaching movement. Prior to any analyses, the velocity and acceleration profiles were converted into Z-scores (using a within-trial computation).
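The differentiation and within-trial standardization steps can be sketched as below. The example deliberately omits the Butterworth filtering step and is not the authors' script: central differences, the population standard deviation, and all function names are assumptions chosen for illustration.

```python
import statistics


def differentiate(signal, sample_rate_hz=200):
    """Numerical derivative: central differences inside, one-sided at the ends."""
    dt = 1.0 / sample_rate_hz
    n = len(signal)
    derivative = []
    for i in range(n):
        if i == 0:
            d = (signal[1] - signal[0]) / dt
        elif i == n - 1:
            d = (signal[-1] - signal[-2]) / dt
        else:
            d = (signal[i + 1] - signal[i - 1]) / (2 * dt)
        derivative.append(d)
    return derivative


def zscore(signal):
    """Within-trial Z-scores (assumes the signal is not constant)."""
    mean = statistics.fmean(signal)
    sd = statistics.pstdev(signal)
    return [(x - mean) / sd for x in signal]


# Synthetic position trace x(t) = t^2 (cm), sampled at 200 Hz for 3.0 s.
position = [(i / 200) ** 2 for i in range(600)]
velocity = differentiate(position)       # approximately 2t cm/s
acceleration = differentiate(velocity)   # approximately 2 cm/s^2 away from the ends
velocity_z = zscore(velocity)
```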

Fig. 2 Correction latency analysis (example trials from one participant). a Target perturbed away from the participant. b Target perturbed toward the participant. In both graphs, changes in acceleration (solid black line) and velocity (solid gray line) are presented. The perturbation onset sample is displayed as a vertical dashed black line. The significance criterion for the acceleration profile appropriate for the direction of perturbation is shown as a black dashed horizontal line. The time-point marked as correction latency is represented by a gray circle. Note the direction of the acceleration and velocity profiles.

To compute correction latency in a perturbation trial, the acceleration profile was examined first. If, following robot movement onset, there was a significant increase in acceleration in the direction of the perturbation (defined as a Z-score beyond ± 1.96), then the first sample meeting this criterion was marked as the onset of movement correction. If there was such a significant change in the acceleration profile, the velocity profile was then examined. If there was an accompanying change in the velocity profile in the direction of perturbation (defined as a Z-score beyond ± 0.5), the trial was deemed to contain a correction toward the perturbed target location. For trials marked as containing a correction, correction latency was computed as the difference between the sample at which the perturbation of the robot began and the sample at which the significant increase in acceleration occurred (Fig. 2).
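The two-stage criterion can be sketched as follows. This is an illustrative reading of the procedure, not the authors' code. Assumptions: perturbation direction is coded as +1 (away) or -1 (toward), the "accompanying" velocity change may occur at or after the acceleration onset sample, and the function and parameter names are hypothetical.

```python
def correction_latency_ms(acc_z, vel_z, perturbation_idx, direction,
                          sample_rate_hz=200,
                          acc_criterion=1.96, vel_criterion=0.5):
    """Return correction latency in ms, or None if no correction is detected.

    acc_z, vel_z: within-trial Z-scored acceleration and velocity in the
    axis of perturbation. direction: +1 or -1 (sign convention assumed).
    """
    for i in range(perturbation_idx + 1, len(acc_z)):
        # Stage 1: first sample whose acceleration Z-score passes the
        # criterion in the direction of the perturbation.
        if direction * acc_z[i] > acc_criterion:
            # Stage 2: velocity must also change in the same direction.
            confirmed = any(direction * v > vel_criterion for v in vel_z[i:])
            if confirmed:
                return (i - perturbation_idx) * 1000.0 / sample_rate_hz
            return None
    return None


# Perturbation at sample 4; acceleration crosses the criterion at sample 10.
acc_z = [0.0] * 10 + [2.5] + [0.0] * 5
vel_z = [0.0] * 12 + [1.0] * 4
latency = correction_latency_ms(acc_z, vel_z, perturbation_idx=4, direction=+1)
```

At 200 Hz each sample spans 5 ms, so latencies computed this way are resolved in 5 ms steps.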

Across all participants, an average of 23.80 trials (out of 30) were analyzed in the tactile target condition (away = 10.85, toward = 12.75) and an average of 26.30 trials (out of 30) were analyzed in the visual target condition (away = 13.80, toward = 12.50). Of the trials that were excluded, 95.5% were excluded because participants corrected less than 0.75 cm towards the perturbed target; and 4.5% were excluded because there were no notable changes in acceleration towards the perturbed target. Correction latency was analyzed using a 2-target modality (tactile, vision) × 2-perturbation direction (away, toward) repeated measures ANOVA.

Correction magnitude

The magnitude of correction was calculated only for the axis of perturbation (i.e., direction axis; Fig. 1b) and was computed as the average absolute difference between the average end position (in cm) of perturbation trials and no-perturbation trials. Correction magnitude was analyzed using a 2-target modality (tactile, vision) × 2-perturbation direction (away, toward) repeated measures ANOVA.
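As a worked illustration of this definition, the sketch below computes the absolute difference between the mean endpoint of perturbation trials and the mean endpoint of no-perturbation trials for one perturbation direction. The endpoint values are invented for the example and the function name is hypothetical.

```python
import statistics


def correction_magnitude_cm(perturbed_endpoints, unperturbed_endpoints):
    """Absolute difference between mean endpoints in the axis of perturbation."""
    return abs(statistics.fmean(perturbed_endpoints)
               - statistics.fmean(unperturbed_endpoints))


# Made-up endpoints (cm) along the axis of perturbation for one participant.
away_endpoints = [2.0, 2.4, 1.6]
no_perturbation_endpoints = [0.1, -0.1, 0.0]
magnitude = correction_magnitude_cm(away_endpoints, no_perturbation_endpoints)
```

With a 3 cm perturbation, a magnitude below 3 cm on this measure indicates that the corrected movements undershot the displaced target on average.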

Endpoint variable error

Variable error was calculated as the standard deviation of movement endpoints to each target position. Two types of variable error were computed: variable error in the axis of perturbation (i.e., direction variable error) and variable error in the amplitude axis (amplitude variable error). Variable error was analyzed using a 2-target modality (tactile, visual) by 2-perturbation direction (away, toward) repeated measures ANOVA.

Detection task protocol

Trials were excluded if the reaction time exceeded 3 standard deviations above or below the mean reaction time for each participant. A total of 4.82% of all detection task trials across participants and conditions were excluded. One participant was excluded from the reaction time analyses due to an error resulting in their data not being saved. Reaction time was computed as the difference between the onset of robot movement (i.e., when robot movement was above 3 cm/s) and the onset of the finger lift (i.e., when finger movement was above 3 cm/s for 10 ms). The reaction time data were analyzed using a 2-target modality (tactile, visual) by 2-perturbation direction (away, toward) repeated measures ANOVA.

Results

Reaching task protocol

Summaries of the average variables pertaining to reaching kinematics and movement endpoints across the target modality conditions are shown in Tables 1 and 2.

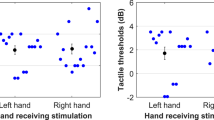

Comparison of no-perturbation trials

First, the no-perturbation trials were analyzed to determine whether the target modality had a significant effect on reaching variables (see Tables 1 and 2 for all values). When comparing reaching movements to visual or tactile targets, the paired-samples t-tests revealed a significant effect of target modality on reaction time, t(15) = 3.88, p = 0.001, dz = 0.97, and on variable error in the axis of perturbation, t(19) = 4.91, p < 0.005, dz = 1.11. Participants took longer to initiate movements to a tactile target (M = 260 ms, SD = 74 ms) than to a visual target (M = 210 ms, SD = 47 ms). There were also larger variable errors when moving to a tactile target (M = 1.57 cm, SD = 0.46) than to a visual target (M = 1.15 cm, SD = 0.26). There were no significant effects of target modality for movement time, movement amplitude, TtPV, TaPV and PV (ps > 0.16).

Correction latency

Correction latency results for each condition are displayed in Fig. 3a. The analyses for correction latency revealed no effect of perturbation direction, F(1, 19) = 0.95, p = 0.34, ηp2 = 0.048, no effect of target modality, F(1, 19) = 1.54, p = 0.23, ηp2 = 0.075, and no interaction, F(1, 19) = 1.29, p = 0.27, ηp2 = 0.064.
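For readers checking the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom with the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the target modality effect reported above; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Target modality effect on correction latency: F(1, 19) = 1.54.
eta_modality = partial_eta_squared(1.54, 1, 19)
```

Rounded to three decimals, this gives 0.075, matching the value reported for the target modality effect.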

Fig. 3 a Correction latency (ms). The collapsed average correction latency for each participant. The average (i.e., mean) correction latency within each condition (for all participants) is represented with black data points. The average (i.e., mean) correction latency within the perturbation directions in each condition is represented with the black solid line. Overall, there were no significant differences in the correction latencies exhibited across the conditions and perturbation directions. b Correction magnitude (cm). The correction magnitude across the away and toward perturbation directions in the tactile and visual target conditions. The average (i.e., mean) correction magnitude within the perturbation directions in each condition is represented with the black solid line. Overall, there was a significant main effect of condition (***), where participants exhibited larger correction magnitudes in the vision condition than in the tactile condition.

Correction magnitude

Correction magnitude results for each condition are displayed in Fig. 3b. For correction magnitude, the ANOVA yielded no significant main effect of perturbation direction, F(1, 19) = 0.67, p = 0.42, ηp2 = 0.034, a significant main effect of target modality, F(1, 19) = 10.95, p = 0.004, ηp2 = 0.37, and no significant interaction between perturbation direction and target modality, F(1, 19) = 2.074, p = 0.17, ηp2 = 0.09. Overall, corrections to tactile target perturbations (1.89 cm, SD = 0.95) were smaller than corrections to visual target perturbations (2.34 cm, SD = 0.82).

Endpoint variable error

For direction variable error, the ANOVA yielded no significant main effects of target modality, F(1, 19) = 0.42, p = 0.53, ηp2 = 0.021, or perturbation direction, F(1, 19) = 2.33, p = 0.143, ηp2 = 0.11, and no significant interaction, F(1, 19) = 4.02, p = 0.059, ηp2 = 0.175. For amplitude variable error, the ANOVA yielded a significant main effect of target modality, F(1, 19) = 33.0, p < 0.001, ηp2 = 0.64, no significant main effect of direction, F(1, 19) = 2.76, p = 0.11, ηp2 = 0.13, and no significant interaction, F(1, 19) = 0.09, p = 0.77, ηp2 = 0.005. Overall, participants demonstrated smaller amplitude variable errors when moving to a perturbed visual target than when moving to a perturbed tactile target (Table 2).

Detection task protocol

The analysis for reaction time showed no significant main effect of perturbation direction, F(1, 18) = 0.16, p = 0.69, ηp2 = 0.01, a significant main effect of target modality, F(1, 18) = 6.54, p = 0.02, ηp2 = 0.27, and no significant interaction between perturbation direction and target modality, F(1, 18) = 3.30, p = 0.086, ηp2 = 0.16. Overall, participants demonstrated shorter reaction times to tactile target perturbations (195 ms, SD = 70) than to visual target perturbations (240 ms, SD = 38).

Discussion

The goal of this experiment was to investigate the latencies and magnitudes of movement corrections to changes in tactile information on the body and external visual information. We found no differences in correction latency. However, participants demonstrated a smaller magnitude of correction in the tactile than the visual target condition. Moreover, we found that while participants demonstrated shorter detection times to tactile targets, they took longer to initiate movements to tactile targets than visual targets. Overall, these results provide evidence that corrections based on changes in tactile information alone may not yield latency and magnitude advantages, when compared to corrections based on visual information.

Previous work has found that correction latencies to somatosensory (i.e., tactile and proprioceptive) target perturbations were significantly shorter than correction latencies to visual target perturbations (Manson et al. 2019). In contrast to this work, we found no differences in correction latency between corrections to tactile and visual targets. One possible explanation is that corrections based on tactile information alone may be more in line with visual-based corrections than proprioceptive-based corrections. Research examining the neural processing of tactile and visual motion supports the idea that the time course of corrections to changes in tactile information should not be different than that of corrections to changes in visual information. Specifically, in both the tactile and visual systems, information regarding motion is obtained from a spatiotemporal pattern of activation across a sensory sheet (i.e., the retina and the skin; Pack and Bensmaia 2015). For both modalities, motion information is relayed to the cortex via the thalamic nuclei, and there is evidence that both tactile and visual motor loops involve processing in similar neural circuits (e.g., the posterior parietal cortex; Bremmer et al. 2001; Pack and Bensmaia 2015). Thus, if tactile and visual target modalities rely on similar motion detection mechanisms and use overlapping motor circuits, then it is not surprising that correction latencies to tactile targets were not different than correction latencies to visual targets.

Alternatively, it is possible that the absence of correction latency differences across conditions in the reaching task could indicate that additional processes may be required to plan or correct movements to tactile target locations versus visual target locations. Specifically, we also found that there were significantly shorter reaction times in response to tactile target perturbations than visual target perturbations in the detection task. This result is consistent with studies showing that humans are faster when responding to tactile stimuli applied to the body versus external visual stimuli (e.g., Ng and Chan 2012). In our study, these shorter reaction times did not translate to earlier corrections in the reaching task. The finding that shorter response times in the detection task did not convert to shorter correction latencies in the reaching task provides evidence that additional transformations may be required when reaching to tactile target locations as compared to visual target locations (Brandes and Heed 2015). For example, in the work by Brandes and Heed (2015), participants performed two tasks: a movement initiation task and a movement correction task. In the movement initiation task, participants made reaching movements to a pre-defined visual target position in response to a visual or tactile cue. The authors found no differences in movement initiation time across cue modalities. In the movement correction task, participants performed movements to a pre-defined visual target position; however, they were required to correct their initial movement trajectory to a new tactile target or visual target presented after movement onset (targets were located on the participant’s left and right feet). Participants demonstrated earlier corrections to the visual than to the tactile targets. The authors concluded that additional time was required to localize the tactile target position in visual space prior to moving to the tactile target location (i.e., movement corrections to tactile targets required additional sensorimotor transformations to a visual reference frame; Yamamoto and Kitazawa 2001; Shore et al. 2002). These results, along with the findings of the present study, support the hypothesis that additional processes are required when planning movements to tactile targets compared to visual targets.

In the present study, participants also demonstrated significantly smaller correction magnitudes in response to tactile target perturbations than to visual target perturbations (Table 2). The undershooting of tactile target positions noted in our study is in line with previous work showing that the perceived movement of tactile stimuli on the skin (e.g., “tactile distance”) is systematically underestimated (e.g., Weber and Ross 1978; Marks 1983; Cholewiak 1999; Nguyen et al. 2016; Longo 2017; Van De Lagemaat et al. 2018). For example, in a seminal study by Marks (1983), participants judged the distance between two electrical stimuli applied to the skin on various body sites, including the forearm, abdomen, and forehead. As a control condition, participants also estimated the distance between two visual stimuli presented on an external monitor. Marks found that distance estimates for the tactile stimuli on both the forearm and abdomen were shorter than both the actual presentation distance and the estimates for visual stimuli (see also Green 1982 for similar findings using mechanical stimulation, and Cholewiak 1999 for similar results on the finger). Furthermore, significant compression of tactile distance also occurs when participants are presented with moving tactile stimuli (Nguyen et al. 2016). Although the exact mechanism underlying the compression of tactile distance is still debated, both the orientation of tactile receptive fields and the deformation pattern of the tactile space due to stretch are hypothesized to play a role (Fiori and Longo 2018).

Although our analyses revealed significant differences in correction magnitude between target modalities, it is worth noting that participants undershot both perturbed visual targets and perturbed tactile targets. This systematic undershoot could be explained by the experimental paradigm and the relatively long correction latencies compared to previous studies (e.g., Manson et al. 2019). Using a similar experimental setup, Manson et al. (2019) found that participants’ correction magnitudes were smaller when targets were perturbed 100 ms after movement onset than when targets were perturbed before movement onset. Moreover, correction magnitudes were larger in the somatosensory condition than in the vision condition, as correction latencies in response to somatosensory target perturbations (~ 88 ms) were significantly shorter than correction latencies in response to visual target perturbations (~ 100 ms). Thus, in Manson et al. (2019), the shorter correction latencies in the somatosensory condition provided participants with more time to make larger corrections to the target perturbations. In the present study, participants exhibited correction latencies that did not differ across conditions (~ 226 ms in the tactile condition, ~ 219 ms in the vision condition; Table 1) and that were more than twice as long as those in Manson et al. (2019). These longer latencies, together with the comparable movement times in our work and in Manson et al. (2019), indicate that participants in our experiment had less time to make corrections to the perturbed target locations. In other words, we suspect that earlier correction times combined with comparable movement times could have afforded participants more time to implement larger correction amplitudes.
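As a rough illustration of this reasoning, the approximate latencies quoted above can be compared directly. The sketch below assumes, for illustration only, that movement times were identical across the two studies, so that any additional latency subtracts directly from the time left to implement a correction. The function and variable names are hypothetical and are not part of the original analysis.

```python
# Approximate correction latencies (ms) quoted in the paragraph above.
PRESENT_STUDY_MS = {"tactile": 226, "vision": 219}
MANSON_2019_MS = {"somatosensory": 88, "vision": 100}


def latency_ratio(present_ms: float, previous_ms: float) -> float:
    """How many times longer the present latency is than the earlier one."""
    return present_ms / previous_ms


def time_lost_ms(present_ms: float, previous_ms: float) -> float:
    """Extra time (ms) that elapses before a correction begins.

    ASSUMPTION (illustration only): movement times are equal in both
    studies, so each extra millisecond of latency leaves one millisecond
    less for implementing the correction.
    """
    return present_ms - previous_ms


if __name__ == "__main__":
    comparisons = [
        ("tactile vs. somatosensory",
         PRESENT_STUDY_MS["tactile"], MANSON_2019_MS["somatosensory"]),
        ("vision vs. vision",
         PRESENT_STUDY_MS["vision"], MANSON_2019_MS["vision"]),
    ]
    for label, present, previous in comparisons:
        print(f"{label}: {latency_ratio(present, previous):.2f}x longer, "
              f"{time_lost_ms(present, previous):.0f} ms less time to correct")
```

Under that assumption, both comparisons yield ratios above 2, which is consistent with the statement that correction latencies in the present study were more than twice as long as those in Manson et al. (2019).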

In contrast to previous studies (e.g., Pruszynski et al. 2016), we found no differences in endpoint variability (i.e., direction variable error) between the tactile and visual target modalities. In our experiment, tactile information reflected direct changes in the target location, whereas in the work by Pruszynski et al. (2016), tactile information reflected changes in an external target location. To estimate the movement of an external target, tactile information must be transformed to reflect changes in external space. Because tactile information is hypothesized to be represented in a different spatial reference frame (i.e., mixed body- and gaze-centered) than external targets (i.e., gaze-centered; Pritchett et al. 2012; Mueller and Fiehler 2016), complex sensorimotor transformations are likely required to derive estimates of external target locations. These additional transformations are hypothesized to create errors in motor planning (Sarlegna et al. 2009) and may explain the differences in variability across vision and tactile conditions in the work by Pruszynski et al. (2016). In the present study, because changes in tactile information corresponded to changes in target location, less complex localization processes were likely required to perform movement corrections. The absence of complex localization processes in our study likely resulted in less error and may explain the absence of variability differences across target modalities.

Conclusion

The present study aimed to investigate the latency and magnitude of movement corrections to tactile and visual targets. While there were no differences in the latency of movement corrections between target modalities, participants made larger corrections in response to visual than tactile target perturbations. Moreover, participants reacted faster to changes in tactile target positions than to changes in visual target positions in a detection task, but they took longer to initiate movements to tactile than visual targets in a reaching task. These results provide evidence that additional processes may be required when planning movements to tactile targets versus visual targets and that corrections to changes in tactile target positions alone may not facilitate the latency and magnitude advantages observed for corrections to somatosensory targets (i.e., proprioceptive-tactile targets).

Data availability

The datasets collected and used for the current study are available from the corresponding author on reasonable request.

References

Bernier PM, Gauthier GM, Blouin J (2007) Evidence for distinct, differentially adaptable sensorimotor transformations for reaches to visual and proprioceptive targets. J Neurophysiol 98(3):1815–1819

Brandes J, Heed T (2015) Reach trajectories characterize tactile localization for sensorimotor decision making. J Neurosci 35(40):13648–13658

Bremmer F, Schlack A, Duhamel JR, Graf W, Fink GR (2001) Space coding in primate posterior parietal cortex. Neuroimage 14(1):S46–S51

Brenner E, Smeets JB (1997) Fast responses of the human hand to changes in target position. J Mot Behav 29(4):297–310

Cholewiak RW (1999) The perception of tactile distance: Influences of body site, space, and time. Perception 28(7):851–875

Cressman EK, Franks IM, Enns JT, Chua R (2006) No automatic pilot for visually guided aiming based on colour. Exp Brain Res 171(2):174–183

Donders FC (1868) Die schnelligkeit psychischer processe: Erster artikel. Archiv für Anatomie, Physiologie und wissenschaftliche Medicin, pp 657–681

Fiori F, Longo MR (2018) Tactile distance illusions reflect a coherent stretch of tactile space. Proc Natl Acad Sci 115(6):1238–1243

Franklin DW, Reichenbach A, Franklin S, Diedrichsen J (2016) Temporal evolution of spatial computations for visuomotor control. J Neurosci 36(8):2329–2341

Green BG (1982) The perception of distance and location for dual tactile pressures. Percept Psychophys 31(4):315–323

Longo MR (2017) Hand posture modulates perceived tactile distance. Sci Rep 7(1):1–8

Manson GA, Blouin J, Kumawat AS, Crainic VA, Tremblay L (2019) Rapid online corrections for upper limb reaches to perturbed somatosensory targets: evidence for non-visual sensorimotor transformation processes. Exp Brain Res 237(3):839–853

Marks LE (1983) Similarities and differences among the senses. Int J Neurosci 19(1–4):1–11

Mueller S, Fiehler K (2016) Mixed body-and gaze-centered coding of proprioceptive reach targets after effector movement. Neuropsychologia 87:63–73

Ng AW, Chan AH (2012) Finger response times to visual, auditory and tactile modality stimuli. In: Proceedings of the international multiconference of engineers and computer scientists, vol 2, pp 1449–1454

Nguyen EH, Taylor JL, Brooks J, Seizova-Cajic T (2016) Velocity of motion across the skin influences perception of tactile location. J Neurophysiol 115(2):674–684

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9(1):97–113

Pack CC, Bensmaia SJ (2015) Seeing and feeling motion: canonical computations in vision and touch. PLoS Biol 13(9):e1002271

Pritchett LM, Carnevale MJ, Harris LR (2012) Reference frames for coding touch location depend on the task. Exp Brain Res 222(4):437–445

Pruszynski JA, Johansson RS, Flanagan JR (2016) A rapid tactile-motor reflex automatically guides reaching toward handheld objects. Curr Biol 26(6):788–792

Reichenbach A, Thielscher A, Peer A, Bülthoff HH, Bresciani JP (2009) Seeing the hand while reaching speeds up on-line responses to a sudden change in target position. J Physiol 587(19):4605–4616

Sarlegna FR, Sainburg RL (2007) The effect of target modality on visual and proprioceptive contributions to the control of movement distance. Exp Brain Res 176(2):267–280

Sarlegna FR, Przybyla A, Sainburg RL (2009) The influence of target sensory modality on motor planning may reflect errors in sensori-motor transformations. Neuroscience 164(2):597–610

Saunders JA, Knill DC (2003) Humans use continuous visual feedback from the hand to control fast reaching movements. Exp Brain Res 152(3):341–352

Scott SH, Cluff T, Lowrey CR, Takei T (2015) Feedback control during voluntary motor actions. Curr Opin Neurobiol 33:85–94

Shore DI, Spry E, Spence C (2002) Confusing the mind by crossing the hands. Cogn Brain Res 14(1):153–163

Smeets JB, Brenner E (1995) Perception and action are based on the same visual information: distinction between position and velocity. J Exp Psychol Hum Percept Perform 21(1):19

Sober SJ, Sabes PN (2005) Flexible strategies for sensory integration during motor planning. Nat Neurosci 8(4):490–497

Van De Lagemaat JM, Kuling IA, Visell Y (2018) Tactile distances are greatly underestimated in perception and motor reproduction. In: 2018 IEEE haptics symposium (HAPTICS), pp 301–306. IEEE

Weber EH, Ross HE (1978) The sense of touch. Academic Press for [the] Experimental Psychology Society

Yamamoto S, Kitazawa S (2001) Reversal of subjective temporal order due to arm crossing. Nat Neurosci 4(7):759–765

Funding

Ontario Ministry of Research and Innovation (Grant no: 302419); Natural Sciences and Engineering Research Council of Canada (Grant no: RGPIN-2020-05831); Canada Foundation for Innovation (Grant no: 302419).

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest with regard to the present research.

Additional information

Communicated by Melvyn A. Goodale.

About this article

Cite this article

Abdulrabba, S., Tremblay, L. & Manson, G.A. Investigating the online control of goal-directed actions to a tactile target on the body. Exp Brain Res 240, 2773–2782 (2022). https://doi.org/10.1007/s00221-022-06445-0

DOI: https://doi.org/10.1007/s00221-022-06445-0