Abstract

How deep is the linkage between action and perception? Much is known about how object perception impacts on action performance, much less about how action performance affects object perception. Does action performance affect perceptual judgment on object features such as shape and orientation? Answering these questions was the aim of the present study. Participants were asked to reach and grasp a handled mug without any visual feedback before judging whether a visually presented mug was handled or not. Repeatedly performing a grasping action resulted in a perceptual categorization aftereffect, as measured by a slowdown in the judgment on a handled mug. We suggest that what people are doing may impact on their perceptual judgments of the things around them.

Introduction

How deep is the linkage between action and perception? Visual perception of objects is well known to affect action performance (Craighero et al. 1999, 2002; Witt et al. 2005, 2008; Witt and Proffitt 2008; Witt 2011). For instance, seeing an object such as a teapot or a mug with its handle oriented towards the left or the right makes people faster in performing a press or a grasping action with the hand ipsilateral to the handle side, even when they are not required to act upon the viewed object (Tucker and Ellis 1998, 2001, 2004; Ellis and Tucker 2000; Costantini et al. 2010). Much less is known about whether and how action performance affects object perception (Brockmole et al. 2013; Thomas 2015), although a number of studies seem to suggest that it does (Bekkering and Neggers 2002; Gozli et al. 2012; Chan et al. 2013; Davoli and Tseng 2015).

There is evidence that performing a given action might affect visual motion perception. Turning a knob either clockwise or anticlockwise has been shown to bias the perceived direction of rotating visual displays (Wohlschlager 2000). Similar findings have been obtained in the auditory domain. Pressing keys on a keyboard in a left-to-right or in a right-to-left order has been found to bias the perceived direction of the change in pitch of tone pairs in expert pianists (Repp and Knoblich 2007). Even more interestingly, previous studies demonstrated that repeatedly performing a given action might induce a perceptual aftereffect, consisting of a loss in function of visual perception of stimuli congruent with the motor training. For instance, Musseler and Hommel (1997) ran a series of experiments in which participants were presented with masked left or right arrows shortly before executing an already prepared manual left or right key-press. They found a sort of ‘blindness to response-compatible stimuli’: the perception of a right-pointing arrow was impaired when presented during the execution of a right-hand action compared to that of a left-hand action, and vice versa (see also Musseler et al. 2001).

More recently, Cattaneo et al. (2011) asked participants to observe an ambiguous picture of a hand and to judge whether it was actually pushing or pulling an object, after being motorically trained to perform, blindfolded, a push or a pull action. The results showed a clear perceptual aftereffect: after push training, participants were biased to judge the viewed action as a pulling action, while pull training induced the opposite bias. This study showed that the perceptual representation of an external effector can be strongly modulated by the repeated practice of one’s own effector, provided that the two share a sensorimotor link. At the theoretical level, both the motor simulation theory (Gallese and Sinigaglia 2011; Jeannerod 2001) and the common coding theory (Hommel et al. 2001; Prinz 2011) can be summoned to explain this finding, because the motor processes and representations acquired during training (participants were blindfolded) were shared with the visual representation during perception, given that the locus of action (acted or observed) was in both cases the hand. What is more striking, however, is that this finding speaks in favour of a truly motor-sensory analogue of a perceptual aftereffect (adaptation consisted of repeated actions; the direction of the perceptual effect ran in the direction opposite to training), suggesting that the category of aftereffects applies not only cross-modally, but even across the sensory-motor frontier. The results of Cattaneo et al. (2011) are fascinating as they convincingly suggest that the purely motor activation of what is normally a visuo-motor representation (the pushing or pulling action) produces a visual aftereffect, but it must be highlighted that the focus of visual perception in that case (a hand) is strictly related to the domain of the trained visuo-motor scheme (one’s hand).

The above-mentioned studies have all focused on motion perception. A natural question arises as to whether the sensory-motor link they found can be extended to the visual perception of remote objects. Might action performance also be demonstrated to influence the perceptual processing of relevant object visual features such as shape and orientation? If this were the case, should we expect a similar perceptual aftereffect when visually judging object features that are congruent with the performed action?

Answering these questions was the aim of the present study, and two experiments were carried out to this end. Participants were asked to repeatedly reach and grasp a right-handled mug, without visual feedback, before being asked to perceptually judge whether a visually presented mug was handled or not (Experiment 1). The visually presented mug could be without a handle, or with the handle oriented to either side. As a control condition, participants undertook the same perceptual judgment task after reaching and merely touching the handled mug (Experiment 2). To avoid any purely perceptual biases, participants were prevented from seeing their moving hands as well as the targeted handled mug during the motor training in both experiments.

If action performance affected the perceptual processing of object features such as shape and orientation by inducing a related perceptual aftereffect, participants should be expected to be slower in the perceptual categorization task when the visually presented mug has the handle oriented towards the right—the same side as the motor training—according to the well-known ‘repulsive’ effect of long-lasting adaptation on perceptual categorization (Palumbo et al. 2017). This bias should not be observed after the reach-to-touch training.

Methods

Participants

Fifty right-handed participants (20 male, mean age 22.3) were recruited and randomly assigned to one of the two experiments. All of them reported having normal or corrected-to-normal vision, were naïve as to the purposes of the experiments and gave their informed consent. The study was approved by the local Ethics committee, and was conducted in accordance with the ethical standards of the 1964 Declaration of Helsinki.

Stimuli and procedure

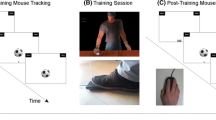

Experiment 1 was composed of two phases: first a motor training, then a perceptual task. In the motor training, the manipulandum consisted of a mug with a handle, whereas in the perceptual task the visual stimuli consisted of three pictures (1024 × 768 pixels) depicting a 3D room, with a table and a mug on it, created by means of 3DStudioMax v.13 (Fig. 1a). In half of the perceptual trials, the depicted mug had a handle oriented either towards the right or the left. In the other half the mug was without handle. The pictures were presented with Psychtoolbox v3 implemented in Matlab. Trials were divided into four balanced blocks of 45 pictures presented in a random order, for a total of 180 trials (45 trials × 4 blocks).

Participants sat comfortably on a chair in front of a computer screen positioned 57 cm from them. In the motor training phase, participants were instructed to reach and to grasp the handle of the mug with their right hand repeatedly for 3 min, without picking it up. The mug was located on a surface at a comfortable reaching distance in front of the participant, with the handle oriented towards the right. Each reach-to-grasp movement lasted approximately 1500 ms; hence, during the motor training, participants performed on average 120 reach-to-grasp movements. To avoid any access to visual information concerning both their hand’s movements and the handled mug, a black box covered the participant’s arm and prevented them from seeing their own hand and the handled mug for the whole duration of the motor training. The experimenter closely monitored the correct execution of the motor training from the opposite side. Then, the perceptual task started. All of the 180 stimuli were presented for a total duration of 6 min. A blank screen with a fixation cross lasting 3 s preceded the stimulus, then the image was shown for 100 ms, and finally the fixation cross followed it for 1900 ms (Fig. 1a). The task consisted of judging, for each trial, the presence or absence of the handle in the picture of the mug presented on the computer screen (19-inch Lenovo). Participants were asked to respond as accurately as possible and were required to respond even if in doubt. They had to say “yes” (“sì” in Italian) into a voice key when they saw the handle and “no” (“no” in Italian) otherwise, after which the task moved on to the next trial. Vocal latency times of each single response, given during presentation of the fixation cross, were recorded.
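The timing figures reported above can be cross-checked with a few lines of arithmetic. The following is only an illustrative sketch: the variable names are hypothetical, and it assumes that the repeating per-trial cycle is the 100 ms stimulus plus the 1900 ms fixation cross, an assumption under which the total matches the reported 6 min.

```python
# Illustrative timing check; all variable names are hypothetical.
TRAINING_S = 3 * 60        # motor training lasted 3 min
MOVEMENT_S = 1.5           # each reach-to-grasp took about 1500 ms

# Approximate number of movements performed during training.
movements = TRAINING_S / MOVEMENT_S

STIMULUS_S = 0.100         # picture shown for 100 ms
POST_FIXATION_S = 1.900    # fixation cross shown for 1900 ms
N_TRIALS = 180

# Assumption: one trial cycle = stimulus + the fixation cross that follows it.
trial_s = STIMULUS_S + POST_FIXATION_S
task_min = N_TRIALS * trial_s / 60

print(f"movements during training: {movements:.0f}")
print(f"perceptual task duration: {task_min:.1f} min")
```

Under the same assumption, the first 45 trials span 90 s, that is, half of the 3 min adaptation period.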

In experiment 2, visual stimuli and experimental setting were the same as in experiment 1. The only difference concerned the motor training: participants were asked to reach and merely touch the mug with their fist for 3 min.

Data analysis

RTs were analysed using a mixed-model 2 × 2 ANOVA with motor adaptation (reach-to-grasp vs touch) as between-subject factor, and orientation of the handle (left vs right) as within-subject factor. “No handle” trials were not included in the analysis because “yes” responses cannot be compared to “no” responses when voice-key answers are recorded. Indeed, the frequency spectra of the two words may differ and thus affect the detection threshold of the voice key. Furthermore, as we were interested in the adaptation effect, “no” responses do not inform us about the presence of the effect. In line with previous studies on perceptual adaptation (e.g. Cattaneo et al. 2011), we considered only the first 45 trials, corresponding to half of the adaptation time. RTs were log-transformed.
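In a 2 × 2 mixed design whose within-subject factor has two levels, the interaction F equals the square of an independent-samples t statistic (pooled variance) computed on each participant's right-minus-left difference in mean log-RT. The sketch below illustrates that computation on made-up numbers; the function name, the data, and the use of the natural logarithm are assumptions for illustration, not the analysis script used in the study.

```python
import math
from statistics import mean, variance

def interaction_f(group_a, group_b):
    """F for the group x orientation interaction in a 2 x 2 mixed design.

    Each group is a list of (left_rts, right_rts) pairs, one per participant,
    with RTs in ms. Returns (F, df_error).
    """
    def diffs(group):
        # Per participant: mean log-RT (right) minus mean log-RT (left).
        return [mean([math.log(rt) for rt in right])
                - mean([math.log(rt) for rt in left])
                for left, right in group]

    d_a, d_b = diffs(group_a), diffs(group_b)
    n_a, n_b = len(d_a), len(d_b)
    df_error = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(d_a)
                  + (n_b - 1) * variance(d_b)) / df_error
    t = (mean(d_a) - mean(d_b)) / math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t * t, df_error

# Hypothetical data: three participants per group, two trials per orientation.
grasp = [([540, 560], [590, 600]),
         ([500, 520], [530, 560]),
         ([610, 600], [640, 650])]
touch = [([580, 590], [585, 580]),
         ([550, 540], [545, 555]),
         ([600, 620], [610, 605])]

f_value, df_error = interaction_f(grasp, touch)
print(f"F(1,{df_error}) = {f_value:.2f}")
```

With two groups of 24 participants the error term has 24 + 24 − 2 = 46 degrees of freedom.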

Results

Accuracy was higher than 95% in both experiments; hence, errors were not analysed and error trials were excluded from further analyses.

Two participants (one in experiment 1 and one in experiment 2) were discarded as their RTs were more than two standard deviations above the group mean.
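An exclusion rule of this kind can be sketched as follows. This is a minimal illustration under stated assumptions: the function name and the data are hypothetical, the criterion is applied to each participant's mean RT, and the cutoff is computed once over all participants passed in (the text does not specify whether the group mean was computed per experiment or overall).

```python
from statistics import mean, stdev

def exclude_slow_participants(mean_rts, n_sd=2.0):
    """Drop participants whose mean RT exceeds group mean + n_sd * SD.

    mean_rts maps a participant id to that participant's mean RT.
    Returns (kept, dropped): a dict of retained participants and a
    sorted list of excluded ids.
    """
    values = list(mean_rts.values())
    cutoff = mean(values) + n_sd * stdev(values)
    kept = {pid: rt for pid, rt in mean_rts.items() if rt <= cutoff}
    dropped = sorted(set(mean_rts) - set(kept))
    return kept, dropped

# Hypothetical per-participant mean RTs in ms.
rts = {"p01": 560, "p02": 575, "p03": 590, "p04": 548,
       "p05": 602, "p06": 571, "p07": 583, "p08": 1250}

kept, dropped = exclude_slow_participants(rts)
print(dropped)
```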

The ANOVA revealed a significant motor adaptation by orientation of the handle interaction [F(1,46) = 4.0; p = 0.05; ηp² = 0.08, Fig. 1b]. Simple-effect analyses with Bonferroni correction revealed that participants were slower at detecting right-handled stimuli (mean log-RT 6.36 ± 0.03) than left-handled stimuli [mean log-RT 6.32 ± 0.03; t(23) = 2.6, p = 0.017] only in experiment 1, in which the adaptation phase consisted of reach-to-grasp movements. The same difference was not significant in experiment 2, in which the adaptation phase consisted of reach-to-touch movements [right-handled stimuli, mean log-RT 6.37 ± 0.03; left-handled stimuli, mean log-RT 6.39 ± 0.04; t(23) = −0.068, p = 0.53].
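The reported effect size can be recovered from the F statistic and its degrees of freedom, and the log-RT means can be converted back to a more familiar scale. The sketch below is illustrative only: the function name is hypothetical, and the back-transformation assumes that RTs were measured in milliseconds and that the natural logarithm was used, neither of which is stated explicitly.

```python
import math

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Interaction reported in the text: F(1,46) = 4.0.
eta_p2 = partial_eta_squared(4.0, 1, 46)
print(f"partial eta squared = {eta_p2:.2f}")

# Assumption: RTs in ms, natural logarithm.
right_ms = math.exp(6.36)   # experiment 1, right-handled mug
left_ms = math.exp(6.32)    # experiment 1, left-handled mug
print(f"right: {right_ms:.0f} ms, left: {left_ms:.0f} ms")
```

Under these assumptions, the experiment 1 means correspond to roughly 578 ms and 556 ms (geometric means), a difference of about 20 ms.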

Discussion

The aim of the present study was to investigate whether action performance affects object perception. More specifically, our investigation concerned the impact of action performance on perceptual judgment about relevant object features such as orientation. To this end, we asked participants to perceptually judge whether a visually presented mug was handled just after a motor training consisting of repeatedly grasping a handled mug while they were deprived of any visual feedback (Experiment 1). These perceptual judgments were contrasted with those made just after a motor training in which participants were asked to reach and merely touch the handled mug (Experiment 2).

The main finding was that the repeated performance of a reach-to-grasp action induced a slowdown in visually judging those object features that were congruent with motor training. In responding to the perceptual judgment task, participants were significantly slower when presented with a right-handled mug than when presented with a left-handled mug. No such effect was observed when participants responded to the perceptual judgment task just after the reach-to-touch motor training. At first sight, these results might appear to contradict the interplay between the motor system and visual perception described in the firmly established literature on the action–perception linkage. It would be expected, indeed, that motor training, via the acquisition of spatial coordinates that are shared with the visual system, would have facilitated congruent rather than incongruent trials. Moreover, a number of studies investigating the effects of motor training on ensuing visual decisions have shown that familiarity with a given motor action increases the ability and precision in judging visually presented actions, the more so when the action is the same across the motor task and the visual task (Casile and Giese 2006). This has been shown to be particularly true in the domain of sports and motor expertise, as demonstrated by experiments using the temporal occlusion paradigm in expert performers, in which participants improve their prediction of body or object movements after practicing those specific movements or merely because of their extensive training (Casile and Giese 2006; Aglioti et al. 2008). One crucial difference between this scenario and the present study, however, resides in the fact that the required judgment in our study involved the stable physical features possessed by an object which the participant interacted with, rather than a future state (e.g. the future spatial position in a motion event).
We thus believe that our results could be explained as the manifestation of an aftereffect occurring between the motor training and the visual judgment, assuming that aftereffects usually involve (1) a prior long exposure to a given stimulus, (2) the presentation of a second stimulus, and (3) a contrastive, repulsive effect of the former on the latter (Palumbo et al. 2017). Under this interpretation, the slowdown of reaction times in the visual classification in the congruent trials might depend on the fact that the representation of the spatial coordinates describing the handled mug, as acquired motorically, would lose strength as a result of the prolonged manipulation. This could be interpreted in the framework of motor simulation (Gallese and Sinigaglia 2011; Jeannerod 2001) and common coding theories (Hommel et al. 2001; Prinz 2011), as both the motor and the visual representations of the grasped (or graspable) mug presume the computation of a set of spatial coordinates that are intrinsic to the shaping of action both in the context of grasping itself and in the visual perception of the object’s affordances.

It is worth noting that our finding complements what was reported by Tipper and colleagues (Tipper et al. 2006). They contrasted a perceptual judgment task in which participants were to identify the shape (e.g. square or round) of an object (a door handle oriented to the right or to the left) with a perceptual judgment task in which they were to discriminate its colour (blue or green). The results showed that participants were faster in identifying the shape of the handles when there was compatibility between the orientation of the handle and the required response. Interestingly, they were even faster when presented with depressed handles, which not only evoked an action but also clearly suggested that this action had taken place. Neither effect was found in the colour discrimination task.

The difference between our finding and Tipper et al.’s (2006) concerns the action state manipulation involved. Unlike Tipper et al., we manipulated the action state of the participants rather than the action state of the observed objects. Indeed, in our study participants did not passively perform the perceptual judgment task; rather, they judged the shape of the presented mug after being motorically trained to grasp it. It is likely that the motor training provided participants with a motor representation of the reach-to-grasp action, which functioned as a scaffold biasing their performance in judging whether the presented mug was handled or not. This could also explain why we found a perceptual aftereffect instead of a priming effect in the object shape discrimination.

A natural question arises as to how to provide our finding with a neuronal account. What kind of processes might be invoked to account for the perceptual aftereffect we found to be induced by the reach-to-grasp motor training?

Perceptual aftereffects are typically correlated with visual adaptation. Visual neuron responses have been reported to be selectively reduced by repeated exposure to specific visual stimuli, with systematic biases in low-level (e.g. orientation, contrast, and spatial frequency) and high-level (faces, bodies, objects, and geometrical shapes) perceptual tasks (Regan and Hamstra 1992; Suzuki and Cavanagh 1998; Webster and MacLin 1999; Leopold et al. 2001; Rhodes et al. 2007; Thompson and Burr 2009; Matsumiya and Shioiri 2014; Mohr et al. 2016). More recently, it has been demonstrated that perceptual aftereffects can also be correlated with motor adaptation. Cattaneo et al. (2011) showed that repeated execution of an action induced a strong visual aftereffect, as a result of the adaptation of a specific class of sensorimotor neurons, mirror neurons, which selectively respond to actions, regardless of whether they are executed or just observed. Participants were presented with pictures illustrating a hand perpendicularly touching a small ball and were requested to judge whether the hand was actually pushing or pulling the small ball. The perceptual judgment task was performed just after a motor training session in which participants were blindfolded and had to push or pull chickpeas. After push training, they were biased to judge the depicted hand as a pulling hand, while pull training induced the opposite bias. Strikingly, these biases vanished when TMS was delivered over participants’ ventral premotor cortex after their motor training and before their perceptual judgment task.

It is tempting to assume that the perceptual aftereffects we found concerning object features such as shape and orientation are also correlated with a similar motor adaptation. There is ample evidence that relevant features of objects (e.g. the shape and the orientation of a handled mug) are selectively encoded by a specific class of visuo-motor neurons called canonical neurons (Rizzolatti et al. 1988; Jeannerod 1995). These neurons are typically recruited during the execution of object-related actions such as grasping or manipulating, but they also respond to the visual presentation of objects with different sizes and shapes, even when the objects have to be fixated, without being the target of any actual grasping action (Murata et al. 1997, 2000; Raos et al. 2006; Umilta et al. 2007). Very often, a strict congruence has been observed between the type of hand grip coded by a given neuron and the size or shape of the object effective in triggering its visual response (Murata et al. 1997; Raos et al. 2006). Because of their functional properties, canonical neurons have been claimed to be responsible for those sensory-motor transformations that are necessary for visually guided object-related actions (Jeannerod 1995; Rizzolatti and Sinigaglia 2008). Similar results have been obtained in humans (Grafton et al. 1996; Chao and Martin 2000; Grezes et al. 2003). In particular, viewing an object such as a handled mug has been shown to increase the cortical excitability of the primary motor cortex, provided that the object was actually reachable and graspable (Buccino et al. 2009; Cardellicchio et al. 2011).

Although further research is needed, our finding seems to suggest an adaptation mechanism also for canonical neurons: because these neurons are triggered by both motor and visual inputs (Matelli et al. 1986; Gerbella et al. 2011), the effects of their firing history driven by motor performance can be observed in the visual domain. This could also explain why participants’ responses were slower when the handle of the mug was spatially aligned with their right hand than when it was aligned with their left hand, since the former, but not the latter, was involved in the motor training. Indeed, canonical neurons are highly selective, their responses being strictly dependent upon the most suitable orientation of action-related object features (Fogassi et al. 2001).

However, one cannot exclude an even simpler explanation: that the aftereffect might have occurred merely due to the exposure to the haptic and proprioceptive spatial representation of the mug involved during motor adaptation, our results thus representing an instance of a crossmodal touch-to-vision aftereffect (Konkle et al. 2009). This would not diminish the significance of the present and of other similar results, i.e. that of a motor-to-perceptual aftereffect, but rather it would ascribe their interpretation to the sensory component involved during motor performance, the multimodal experience of one’s own behaviour being inextricably linked to that very behaviour.

To sum up, although our effects should be interpreted with caution given the small effect size, our finding suggests that what people are doing may impact on their perceptual judgments of the things around them. In other words, motor processes and representations involved in action performance may also shape perceptual experience, at least when referring to object features such as shape and orientation. This conclusion gives rise to a further question as to whether the same may hold for other object features such as, say, colour. But answering this question will require another study, and we hope to carry it out very soon.

References

Aglioti SM, Cesari P, Romani M, Urgesi C (2008) Action anticipation and motor resonance in elite basketball players. Nat Neurosci 11:1109–1116

Bekkering H, Neggers SF (2002) Visual search is modulated by action intentions. Psychol Sci 13:370–374

Brockmole JR, Davoli CC, Abrams RA, Witt JK (2013) The world within reach: effects of hand posture and tool use on visual cognition. Curr Direct Psychol Sci 22:38–44

Buccino G, Sato M, Cattaneo L, Roda F, Riggio L (2009) Broken affordances, broken objects: a TMS study. Neuropsychologia 47:3074–3078

Cardellicchio P, Sinigaglia C, Costantini M (2011) The space of affordances: a TMS study. Neuropsychologia 49:1369–1372

Casile A, Giese MA (2006) Nonvisual motor training influences biological motion perception. Curr Biol 16:69–74

Cattaneo L, Barchiesi G, Tabarelli D, Arfeller C, Sato M, Glenberg AM (2011) One's motor performance predictably modulates the understanding of others' actions through adaptation of premotor visuo-motor neurons. Soc Cogn Affect Neurosci 6:301–310

Chan D, Peterson MA, Barense MD, Pratt J (2013) How action influences object perception. Front Psychol 4:462

Chao LL, Martin A (2000) Representation of manipulable man-made objects in the dorsal stream. Neuroimage 12:478–484

Costantini M, Ambrosini E, Tieri G, Sinigaglia C, Committeri G (2010) Where does an object trigger an action? An investigation about affordances in space. Exp Brain Res 207:95

Craighero L, Fadiga L, Rizzolatti G, Umilta C (1999) Action for perception: a motor-visual attentional effect. J Exp Psychol Hum Percept Perform 25:1673–1692

Craighero L, Bello A, Fadiga L, Rizzolatti G (2002) Hand action preparation influences the responses to hand pictures. Neuropsychologia 40:492–502

Davoli CC, Tseng P (2015) Editorial: taking a hands-on approach: current perspectives on the effect of hand position on vision. Front Psychol 6:1231

Ellis R, Tucker M (2000) Micro-affordance: the potentiation of components of action by seen objects. Br J Psychol 91(Pt 4):451–471

Fogassi L, Gallese V, Buccino G, Craighero L, Fadiga L, Rizzolatti G (2001) Cortical mechanism for the visual guidance of hand grasping movements in the monkey: a reversible inactivation study. Brain 124:571–586

Gallese V, Sinigaglia C (2011) What is so special about embodied simulation? Trends Cogn Sci 15(11):512–519. https://doi.org/10.1016/j.tics.2011.09.003

Gerbella M, Belmalih A, Borra E, Rozzi S, Luppino G (2011) Cortical connections of the anterior (F5a) subdivision of the macaque ventral premotor area F5. Brain Struct Funct 216:43

Gozli DG, West GL, Pratt J (2012) Hand position alters vision by biasing processing through different visual pathways. Cognition 124:244–250

Grafton ST, Arbib MA, Fadiga L, Rizzolatti G (1996) Localization of grasp representations in humans by positron emission tomography. 2. Observation compared with imagination. Exp Brain Res 112:103–111

Grezes J, Tucker M, Armony J, Ellis R, Passingham RE (2003) Objects automatically potentiate action: an fMRI study of implicit processing. Eur J Neurosci 17:2735–2740

Hommel B, Müsseler J, Aschersleben G, Prinz W (2001) The theory of event coding (TEC): a framework for perception and action planning. Behav Brain Sci 24(5):849–878 (discussion 878–937)

Jeannerod M (1995) Mental imagery in the motor context. Neuropsychologia 33:1419–1432

Jeannerod M (2001) Neural simulation of action: a unifying mechanism for motor cognition. NeuroImage 14:103–109

Konkle T, Wang Q, Hayward V, Moore CI (2009) Motion aftereffects transfer between touch and vision. Curr Biol 19:745–750

Leopold DA, O'Toole AJ, Vetter T, Blanz V (2001) Prototype-referenced shape encoding revealed by high-level aftereffects. Nat Neurosci 4:89–94

Matelli M, Camarda R, Glickstein M, Rizzolatti G (1986) Afferent and efferent projections of the inferior area 6 in the macaque monkey. J Comp Neurol 251:281–298

Matsumiya K, Shioiri S (2014) Moving one's own body part induces a motion aftereffect anchored to the body part. Curr Biol 24:165–169

Mohr HM, Rickmeyer C, Hummel D, Ernst M, Grabhorn R (2016) Altered visual adaptation to body shape in eating disorders: implications for body image distortion. Perception 45:725–738

Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G (1997) Object representation in the ventral premotor cortex (area F5) of the monkey. J Neurophysiol 78:2226–2230

Murata A, Gallese V, Luppino G, Kaseda M, Sakata H (2000) Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol 83:2580–2601

Musseler J, Hommel B (1997) Blindness to response-compatible stimuli. J Exp Psychol Hum Percept Perform 23:861–872

Musseler J, Steininger S, Wuhr P (2001) Can actions affect perceptual processing? Q J Exp Psychol A 54:137–154

Palumbo R, D'Ascenzo S, Tommasi L (2017) Editorial: high-level adaptation and aftereffects. Front Psychol 8:217

Prinz W (2011) A common coding approach to perception and action. Relation Between Percept Action. https://doi.org/10.1007/978-3-642-75348-0_7

Raos V, Umilta MA, Murata A, Fogassi L, Gallese V (2006) Functional properties of grasping-related neurons in the ventral premotor area F5 of the macaque monkey. J Neurophysiol 95:709–729

Regan D, Hamstra SJ (1992) Shape discrimination and the judgement of perfect symmetry: dissociation of shape from size. Vision Res 32:1845–1864

Repp BH, Knoblich G (2007) Action can affect auditory perception. Psychol Sci 18:6–7

Rhodes G, Maloney LT, Turner J, Ewing L (2007) Adaptive face coding and discrimination around the average face. Vision Res 47:974–989

Rizzolatti G, Sinigaglia C (2008) Mirrors in the brain. How our minds share actions, emotions, and experience. Oxford University Press, Oxford

Rizzolatti G, Camarda R, Fogassi L, Gentilucci M, Luppino G, Matelli M (1988) Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Exp Brain Res 71:491–507

Suzuki S, Cavanagh P (1998) A shape-contrast effect for briefly presented stimuli. J Exp Psychol Hum Percept Perform 24:1315–1341

Thomas LE (2015) Grasp posture alters visual processing biases near the hands. Psychol Sci 26:625–632

Thompson P, Burr D (2009) Visual aftereffects. Curr Biol 19:R11–14

Tipper S, Paul M, Hayes A (2006) Vision-for-action: the effects of object property discrimination and action state on affordance compatibility effects. Psychon Bull Rev 13:493–498

Tucker M, Ellis R (1998) On the relations between seen objects and components of potential actions. J Exp Psychol Hum Percept Perform 24:830–846

Tucker M, Ellis R (2001) The potentiation of grasp types during visual object categorization. Visual Cognition 8:769–800

Tucker M, Ellis R (2004) Action priming by briefly presented objects. Acta Psychol (Amst) 116:185–203

Umilta MA, Brochier T, Spinks RL, Lemon RN (2007) Simultaneous recording of macaque premotor and primary motor cortex neuronal populations reveals different functional contributions to visuomotor grasp. J Neurophysiol 98:488–501

Webster MA, MacLin OH (1999) Figural aftereffects in the perception of faces. Psychon Bull Rev 6:647–653

Witt JK (2011) Action’s effect on perception. Curr Direct Psychol Sci 20:201–206

Witt JK, Proffitt DR (2008) Action-specific influences on distance perception: a role for motor simulation. J Exp Psychol Hum Percept Perform 34:1479–1492

Witt JK, Proffitt DR, Epstein W (2005) Tool use affects perceived distance, but only when you intend to use it. J Exp Psychol Hum Percept Perform 31:880–888

Witt JK, Linkenauger SA, Bakdash JZ, Proffitt DR (2008) Putting to a bigger hole: golf performance relates to perceived size. Psychon Bull Rev 15:581–585

Wohlschlager A (2000) Visual motion priming by invisible actions. Vision Res 40:925–930

Cite this article

Costantini, M., Tommasi, L. & Sinigaglia, C. How action performance affects object perception. Exp Brain Res 237, 1805–1810 (2019). https://doi.org/10.1007/s00221-019-05547-6

DOI: https://doi.org/10.1007/s00221-019-05547-6