Abstract

We compute the leading asymptotics for the maximum of the (centered) logarithm of the absolute value of the characteristic polynomial, denoted \(\Psi _N\), of the Ginibre ensemble as the dimension N of the random matrix tends to \(\infty \). The method relies on the log-correlated structure of the field \(\Psi _N\) and we obtain the lower-bound for the maximum by constructing a family of Gaussian multiplicative chaos measures associated with a certain regularization of \(\Psi _N\) at small mesoscopic scales. We also obtain the leading asymptotics for the dimensions of the sets of thick points and verify that they are consistent with the predictions coming from the Gaussian Free Field. A key technical input is the approach from Ameur et al. (Ann Probab 43(3):1157–1201, 2015) to derive the necessary asymptotics, as well as the results from Webb and Wong (Proc Lond Math Soc (3) 118(5):1017–1056, 2019).


1 Introduction and Main Results

The Ginibre ensemble is the canonical example of a non-normal random matrix. It consists of an \(N\times N\) matrix filled with independent complex Gaussian random variables of variance 1/N [31]. It is well-known that the eigenvalues \((\lambda _1, \dots , \lambda _N)\) of a Ginibre matrix are asymptotically uniformly distributed inside the unit disk \(\mathbb {D} = \{ z\in \mathbb {C} : |z| < 1\}\) in the complex plane; this is known as the circular law [10, 16]. The Ginibre eigenvalues have the same law as the particles in a one-component two-dimensional Coulomb gas confined by the potential \(Q(x) = |x|^2/2\) at a specific temperature, see [56]. That is, the joint law of the eigenvalues is given by \(\mathrm {d}\mathbb {P}_N \propto e^{- \mathrm {H}_N(x)} {\textstyle \prod _{j=1}^N} \mathrm {d}^2 x_j\) where the energy of a configuration \(x\in \mathbb {C}^N\) is

and \(\mathrm {d}^2x\) denotes the Lebesgue measure on \(\mathbb {C}\). Moreover, these eigenvalues form a determinantal point process on \(\mathbb {C}\) with a correlation kernel

This means that all the correlation functions (or marginals \(\mathbb {P}_{N,n}\)) of this point process are given by

where \((N)_n = N(N-1)\cdots (N-n+1)\). We refer to [33, Chapter 4] for an introduction to determinantal processes and to [33, Theorem 4.3.10] for a derivation of the Ginibre correlation kernel.
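As a consistency check on the Coulomb gas description, the display below sketches how the Gibbs weight reduces to the familiar Ginibre eigenvalue density. It assumes that the energy has the standard form with pairwise logarithmic repulsion and confinement \(2N\sum _j Q(x_j)\); this normalization is an assumption chosen to match \(Q(x) = |x|^2/2\) and entries of variance 1/N, and it may differ from the convention used in (1.1).

```latex
% Assumed form of the energy (standard normalization, not quoted from the source)
\mathrm{H}_N(x) = \sum_{j \neq k} \log |x_j - x_k|^{-1} + 2N \sum_{j=1}^N Q(x_j),
\qquad Q(x) = \tfrac{|x|^2}{2}.
% Exponentiating: each unordered pair {j,k} appears twice in the sum over j \neq k
e^{-\mathrm{H}_N(x)} = \prod_{j \neq k} |x_j - x_k| \; e^{-N \sum_{j} |x_j|^2}
 = \prod_{j<k} |x_j - x_k|^2 \; e^{-N \sum_{j} |x_j|^2}.
```

Under this assumption, the right-hand side is the squared Vandermonde determinant times a Gaussian weight, which is the structure behind the determinantal formula for the correlation functions.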

In this article we are interested in the asymptotics of the modulus of the characteristic polynomial \(z \in \mathbb {C}\mapsto \prod _{j=1}^N|z-\lambda _j|\) of the Ginibre ensemble and in particular in the maximum size of its fluctuations. Before stating our main result, we need to review some basic properties of the Ginibre eigenvalue process.

First, it follows from a classical result in potential theory that the equilibrium measure which describes the limit of the empirical measure \(\frac{1}{N} \sum _{j=1}^N \delta _{\lambda _j}\) is indeed the circular law: \(\sigma (\mathrm {d}x) = \frac{1}{\pi } \mathbb {1}_{\mathbb {D}} \mathrm {d}^2 x\), see [56, Section 3.2]. This can be deduced from the fact that, writing \((x)_+ = x \vee 0 \) for \(x\in \mathbb {R}\), the logarithmic potential of the circular law

satisfies the condition
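The logarithmic potential of the circular law can be computed in closed form. The sketch below assumes the convention \(\varphi (z) = \int \log |z-x| \sigma (\mathrm {d}x)\), where the symbol \(\varphi \) is a label chosen for this illustration (unrelated to the mollifier used in Sect. 2), and it uses the classical fact that the circular average of \(\log |z-\rho e^{i\theta }|\) equals \(\log \max (|z|,\rho )\).

```latex
% Assumed convention: \varphi(z) = \int \log|z-x| \, \sigma(\mathrm{d}x)
\varphi(z) = \int_0^1 2\rho \, \log\max(|z|,\rho) \, \mathrm{d}\rho
 = \begin{cases} \dfrac{|z|^2-1}{2}, & |z| \le 1, \\[4pt] \log|z|, & |z| \ge 1, \end{cases}
 \qquad\text{i.e.}\qquad
 \varphi(z) = (\log|z|)_+ - \frac{(1-|z|^2)_+}{2}.
% Consequence for Q(x) = |x|^2/2: equality on the disk, inequality outside
Q(z) - \varphi(z) = \tfrac12 \ \text{ on } \mathbb{D},
\qquad
Q(z) - \varphi(z) = \tfrac{|z|^2}{2} - \log|z| \ \ge\ \tfrac12 \ \text{ on } \mathbb{C}\setminus\mathbb{D}.
```

The inequality holds because \(t\mapsto t^2/2 - \log t\) is increasing on \([1,\infty )\) and equals 1/2 at \(t=1\). Conditions of this type (equality on the support, inequality off the support) are the ones that characterize an equilibrium measure in potential theory.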

Then, Rider–Viràg [53] showed that the fluctuations of the empirical measure of the Ginibre eigenvalues around the circular law are described by a Gaussian noise. This result was generalized to other ensembles of random matrices in [3, 4], as well as to two-dimensional Coulomb gases at an arbitrary positive temperature in [9, 46]. Let us define

This measure describes the fluctuations of the Ginibre eigenvalues and, by [53, Theorem 1.1], for any function \(f\in \mathscr {C}^2(\mathbb {C})\) with at most exponential growth, we have as \(N\rightarrow \infty \),

If f has compact support inside the support of the equilibrium measure, then the asymptotic variance is given by

The object that we study in this article is the centered logarithm of the Ginibre characteristic polynomial:

See Fig. 1 below for a sample of the random function \(\Psi _N(z)\). Note that it follows from the convergence of the empirical measure to the circular law that for any \(z\in \mathbb {C}\), we have in probability as \(N\rightarrow \infty \),

so that the second term on the RHS of (1.9) is necessary to have the field \(\Psi _N\) asymptotically centered. In fact, it follows from the result of Webb–Wong [60] that \(\mathbb {E}_N[\Psi _N(z)] \rightarrow 1/4\) for all \(z\in \mathbb {D}\) as \(N\rightarrow \infty \). Moreover, if we interpret \(\Psi _N\) as a random generalized function, then the central limit theorem (1.7) implies that \(\Psi _N\) converges in distribution to the Gaussian free field (GFF; see Footnote 1) on \(\mathbb {D}\) with free boundary conditions, see [53, Corollary 1.2] and also [4, 58] for further details. Even though the GFF is a random distribution, it can be thought of as a random surface which corresponds to the two-dimensional analogue of Brownian motion [57]. The convergence result of Rider–Viràg indicates that we can think of the field \(\Psi _N\) as an approximation of the GFF in \(\mathbb {D}\). The main feature of the GFF is that it is a log-correlated Gaussian process on \(\mathbb {C}\). This log-correlated structure is already visible for the absolute value of the Ginibre characteristic polynomial as it is possible to show that for any \(z, x\in \mathbb {D}\),

as \(N\rightarrow +\infty \). By analogy with the GFF and other log-correlated fields, we can make the following prediction regarding the maximum of the field \(\Psi _N\). We have as \(N\rightarrow +\infty \),

where the random variable \(\xi _N\) is expected to converge in distribution. Analogous predictions have been made for other log-correlated fields associated with normal random matrices. For instance, Fyodorov–Keating [28] first conjectured the asymptotics of the maximum of the logarithm of the absolute value of the characteristic polynomial of the circular unitary ensemble (CUE; see Footnote 2), including the distribution of the error term, and Fyodorov–Simm [30] made an analogous prediction for the Gaussian Unitary Ensemble (GUE; see Footnote 3).
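The leading constant in such predictions can be anticipated by a first-moment count. The following is a heuristic sketch, not the argument used in the proofs. It assumes that \(\Psi _N(z)\) is approximately Gaussian with variance \(\frac{1}{4}\log N\) (the normalization consistent with the thresholds \(1/\sqrt{2}\) and \(\sqrt{8}\) appearing in this section) and that the field decorrelates over roughly N points of \(\mathbb {D}\), corresponding to the microscopic scale \(N^{-1/2}\).

```latex
% Heuristic Gaussian tail at level a \log N, with variance (1/4) \log N
\mathbb{P}\big[ \Psi_N(z) \ge a \log N \big]
 \approx \exp\Big( - \frac{(a \log N)^2}{2 \cdot \frac14 \log N} \Big) = N^{-2a^2},
% Expected number of exceedances among about N decorrelated points
N \cdot N^{-2a^2} = N^{1-2a^2} \longrightarrow 0
 \quad\Longleftrightarrow\quad a > \tfrac{1}{\sqrt 2}.
```

Under these assumptions the maximum should be of order \(\frac{1}{\sqrt{2}}\log N\), and the same count suggests that points where the field exceeds \(a\log N\) occupy a set of measure of order \(N^{-2a^2}\) when \(a<1/\sqrt{2}\), which agrees with the range of thickness parameters considered in Theorem 1.3.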

The main goal of this article is to verify the leading order in the asymptotic expansion (1.11). More precisely, we prove the following result:

Theorem 1.1

For any \(0<r<1\) and any \(\epsilon >0\), it holds

It is worth pointing out that like many other asymptotic properties of the eigenvalues of random matrices, we expect the results of Theorem 1.1, as well as the prediction (1.11) modulo the limiting distribution of \(\xi _N\), and Theorem 1.3 below to be universal. This means that these results should hold for other random normal matrix ensembles with a different confining potential Q as well as for other non-Hermitian Wigner ensembles under reasonable assumptions on the entries of the random matrix. In the remainder of this section, we review the context and most relevant results related to Theorem 1.1, and we provide several motivations to study the characteristic polynomial of the Ginibre ensemble.

1.1 Comments on Theorem 1.1 and further results

The study of characteristic polynomials for different ensembles of random matrices is an interesting and active topic because of its connections to several problems in diverse areas of mathematics. In particular, there is the analogy between the logarithm of the absolute value of the characteristic polynomial of the CUE and the Riemann \(\zeta \)-function [38], as well as the connections with Toeplitz or Hankel determinants with Fisher–Hartwig symbols, e.g. [18, 19, 24, 41]. Of essential importance is also the connection between characteristic polynomials of random matrices, log-correlated fields and the theory of Gaussian multiplicative chaos [28, 35]. This connection has been used in several recent works to compute the asymptotics of the maximum of the logarithm of the characteristic polynomial for various ensembles of random matrices. For the CUE, a result analogous to Theorem 1.1 was first obtained by Arguin–Belius–Bourgade [5]. Then, the correction term was computed by Paquette–Zeitouni [49] and the counterpart of the conjecture (1.11) was established for the circular \(\beta \)-ensembles for general \(\beta >0\) by Chhaibi–Madaule–Najnudel (see Footnote 4) [20]. For the characteristic polynomial of the GUE, as well as other Hermitian unitary invariant ensembles, the law of large numbers for the maximum of the absolute value of the characteristic polynomial was obtained in [43]. Cook and Zeitouni [23] also obtained a law of large numbers for the maximum of the characteristic polynomial of a random permutation matrix, in which case their result does not match the prediction from Gaussian log-correlated fields because of arithmetic effects. These results rely on the log-correlated structure of characteristic polynomials and proceed by analogy with the case of branching random walk using a modified second moment method [39].
This method has also been successful in computing the asymptotics of the Riemann \(\zeta \)-function in a random interval of the critical line, see [6, 32, 47, 55]. Further recent results on the deep connections between log-correlated fields, Gaussian multiplicative chaos and characteristic polynomials of \(\beta \)-ensembles can be found in [21, 22, 44]. In particular, we prove in [22] the counterpart of Theorem 1.1 for the imaginary part of the characteristic polynomial of a large class of Hermitian unitary invariant ensembles and show that this implies optimal rigidity bounds for the eigenvalues. Likewise, by adapting the proof of the upper-bound in Theorem 1.1, we can obtain precise rigidity estimates for linear statistics of the Ginibre ensemble in the spirit of [8, Theorem 1.2] and [46, Theorem 2].

Theorem 1.2

For any \(0<r<1\) and \( \kappa >0\), define

For any \(\eta >0\) (possibly depending on N with \(\eta \le \frac{N}{\log N}\)), there exists a constant \(C_{r}>0\) such that

We believe that Theorem 1.2 is of independent interest since it covers any smooth mesoscopic linear statistic at arbitrarily small scales in a uniform way. This is to be compared to the local law of [17, Theorem 2.2] which is valid for general Wigner ensembles, but not with the (optimal) logarithmic bound for the fluctuations and without such uniformity in f. The Proof of Theorem 1.2 is given in Sect. 3.2 and it relies on the basic observation that in the sense of distributions, the Laplacian of the field \(\Psi _N\) is related to the empirical measure of the Ginibre ensemble suitably centered: \(\Delta \Psi _N = 2\pi N \left( \frac{1}{N} \sum _{j=1}^N \delta _{\lambda _j} - \frac{1}{\pi }\mathbb {1}_\mathbb {D}\right) \).
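The identity for \(\Delta \Psi _N\) can be verified term by term. The computation below is a sketch which assumes that \(\Psi _N(z) = \sum _{j=1}^N \log |z-\lambda _j| - N\varphi (z)\), where \(\varphi \) (a label chosen here) denotes the logarithmic potential of the circular law \(\sigma \).

```latex
% Fundamental solution in two dimensions: \Delta \log|\cdot| = 2\pi \delta_0
\Delta_z \sum_{j=1}^N \log|z-\lambda_j| = 2\pi \sum_{j=1}^N \delta_{\lambda_j},
\qquad
\Delta \varphi = 2\pi \cdot \tfrac{1}{\pi} \mathbb{1}_{\mathbb{D}} = 2\, \mathbb{1}_{\mathbb{D}},
% Subtracting N times the second identity from the first
\Delta \Psi_N = 2\pi \sum_{j=1}^N \delta_{\lambda_j} - 2N\, \mathbb{1}_{\mathbb{D}}
 = 2\pi N \Big( \frac1N \sum_{j=1}^N \delta_{\lambda_j} - \frac{1}{\pi} \mathbb{1}_{\mathbb{D}} \Big).
```

In particular, pairing \(\Psi _N\) with \(\Delta f\) for a smooth compactly supported test function f produces, up to the factor \(2\pi \), the centered linear statistic \(\sum _j f(\lambda _j) - N\int f \mathrm {d}\sigma \); this is how bounds on the field translate into bounds on linear statistics.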

The Proof of Theorem 1.1 consists of an upper-bound (see Footnote 5) which is based on the subharmonicity of the logarithm of the absolute value of the Ginibre characteristic polynomial and the moment asymptotics from Webb–Wong [60] and of a lower-bound which exploits the log-correlated structure of the field \(\Psi _N\). More precisely, by relying on the robust approach from [45], we obtain the lower-bound in Theorem 1.1 by constructing a family of subcritical Gaussian multiplicative chaos measures associated with a certain mesoscopic regularization of the field \(\Psi _N\); see Theorem 2.2 below for further details. Gaussian multiplicative chaos (GMC) is a theory which goes back to Kahane [37] and it aims at encoding geometric features of a log-correlated field by means of a family of random measures. These GMC measures are defined by taking the exponential of a log-correlated field through a renormalization procedure. We refer the readers to Sect. 2.1 for a brief overview of the theory and to the review of Rhodes–Vargas [51] or the elegant and short article of Berestycki [11] for more comprehensive presentations. It is well-known that in the subcritical phase, these GMC measures live on the sets of so-called thick points (see Footnote 6) of the underlying field [51, Section 4]. By exploiting this connection, we obtain from our analysis the leading order of the measure of the sets of thick points of the characteristic polynomial for large N.

Theorem 1.3

Let us define the set of \(\beta \)-thick points of the Ginibre characteristic polynomial:

and let \(|\mathscr {T}_N^\beta (r)|\) be its Lebesgue measure. For any \(0<r<1\), any \(0 \le \beta < 1/\sqrt{2}\) and any small \(\epsilon >0\), we have

The Proof of Theorem 1.3 will be given in Sect. 4 and the result has the following interpretation. By (1.9), the field \(-\Psi _N\) corresponds to the (electrostatic) potential energy generated by the random charges \((\lambda _1, \dots , \lambda _N)\) and the negative uniform background \(\sigma \). One may view \(-\Psi _N\) as a complex energy landscape and the asymptotics (1.14) describe the multi-fractal spectrum of the level sets near the extreme local minima of this landscape. Moreover, as a consequence of Theorems 1.1 and 1.3, we obtain the leading order of the corresponding free energy, i.e. the logarithm of the partition function of the Gibbs measure \(e^{\beta \Psi _N}\) for \(\beta >0\). Namely, by adapting the Proof of [5, Corollary 1.4], it holds for any \(0<r<1\), in probability,

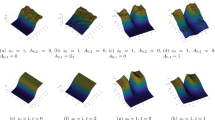

The fact that the free energy is constant and equal to \({\displaystyle \lim _{N\rightarrow +\infty }}\frac{\max _{\mathbb {D}_r} \Psi _N}{\log N}\) in the supercritical regime \(\beta >\sqrt{8}\) is called freezing. This property is typical for Gaussian log-correlated fields and our results rigorously establish that the Ginibre characteristic polynomial behaves according to the Gaussian predictions, which is a well-known heuristic in random matrix theory. Moreover, this freezing scenario is instrumental to predict the full asymptotic behavior (1.11) of the maximum of the field \(\Psi _N\), including the law of the error term, see e.g. [27]. For an illustration of level sets of the random function and in particular of the geometry of thick points, see Fig. 2.
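The freezing transition can be read off from a Legendre-type computation. The sketch below is heuristic and rests on two assumptions: that the set where \(\Psi _N \approx a\log N\) has Lebesgue measure of order \(N^{-2a^2}\) for \(0\le a \le 1/\sqrt{2}\) (the form suggested by Theorem 1.3 and the Gaussian predictions), and that no point exceeds the level \(\frac{1}{\sqrt{2}}\log N\) to leading order (Theorem 1.1). The normalization of the free energy used in the text may differ from the one below.

```latex
% Contribution of points of thickness a to \int e^{\beta \Psi_N}: N^{\beta a} \times N^{-2a^2}
\frac{1}{\log N} \log \int_{\mathbb{D}_r} e^{\beta \Psi_N(x)} \, \mathrm{d}^2x
 \;\approx\; \sup_{0 \le a \le 1/\sqrt2} \big( \beta a - 2a^2 \big)
 = \begin{cases}
 \dfrac{\beta^2}{8}, & 0 < \beta \le \sqrt8 \quad (\text{attained at } a = \beta/4), \\[6pt]
 \dfrac{\beta}{\sqrt2} - 1, & \beta \ge \sqrt8 \quad (\text{attained at } a = 1/\sqrt2).
 \end{cases}
```

For \(\beta \ge \sqrt{8}\), adding 1 (which accounts for a volume factor N) and dividing by \(\beta \) gives the constant \(1/\sqrt{2}\): in this regime the integral is dominated by the points where the field is close to its maximum, which is the heuristic content of freezing.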

Let us return to the connections between our results and the theory of Gaussian multiplicative chaos. The family of GMC measures associated to the GFF are called Liouville measures and they play a fundamental role in recent probabilistic constructions in the context of quantum gravity, imaginary geometries, as well as conformal field theory. We refer to the reviews [7, 52] for further references on these aspects of the theory. Thus, motivated by the result of Rider–Viràg, it is expected that a random measure whose density is given by a small (see Footnote 7) power of the characteristic polynomial (see Fig. 3) converges when suitably normalized:

where \(\mu _\mathrm {G}^\gamma \) is a Liouville measure with parameter \(0<\gamma < \sqrt{8}\). Hence, this provides an interesting connection between the Ginibre ensemble of random matrices and random geometry. As we observed in [22, Section 3], this convergence result in the subcritical phase implies the lower-bound in Theorem 1.1. An important observation that we make in this paper is that it suffices to establish the convergence of \(\frac{e^{\gamma \psi _N(z)}}{\mathbb {E}_N\left[ e^{\gamma \psi _N(z)}\right] } \frac{\mathrm {d}^2z}{\pi }\) to a GMC measure for a suitable regularization \(\psi _N\) of the field \(\Psi _N\) in order to capture the correct leading order asymptotics of its maximum and thick points. The main issues are to work with a regularization at an optimal mesoscopic scale \(N^{-1/2+\alpha }\) for arbitrarily small \(\alpha >0\) and to be able to obtain the convergence in the whole subcritical phase. In particular, our result on GMC, Theorem 2.2, provides strong evidence that the prediction (1.16) holds.

It is an important and challenging problem to obtain (1.16) already in the subcritical phase. In particular, this requires deriving the asymptotics of joint moments of the characteristic polynomials. For a single \(z\in \mathbb {D}_r\), such asymptotics are obtained by Webb–Wong in [60] using Riemann–Hilbert techniques. Let us recall their main result which is also a key input in our method.

Theorem 1.4

[60, Theorem 1.1]. For any fixed \(0<r<1\), we have

where the error term is uniform for \(\gamma \) in compact sets of \(\{ \gamma \in \mathbb {C}: \mathfrak {R}\gamma >-2\}\) and \(z\in \mathbb {D}_r\).

Remark 1.5

The asymptotics of the joint exponential moments of \(\Psi _N\) remain conjectural, see e.g. [60, Section 1.2], except for even moments for which there are explicit formulae, see [1, 26, 29]. These formulae rely on the determinantal structure of the Ginibre ensemble: for any \(n\in \mathbb {N}\), we have for any \(z_1, \dots , z_n \in \mathbb {C}\) such that \(z_1 \ne \cdots \ne z_n\),

where \(K_{N+n}\) is the Ginibre kernel as in (1.2). Using the off-diagonal (Gaussian) decay of the Ginibre kernel, we can show that

uniformly for all \(\inf _{i\ne j} |z_i -z_j| \ge c\sqrt{\frac{\log N}{N}}\) if \(c>0\) is a sufficiently large constant. If \(|z_i| \le c\sqrt{\frac{\log N}{N}}\), we also have \(K_{N+n}(z_i,z_i) = \tfrac{N}{\pi }\left( 1+ \mathcal {O}(N^{-1}) \right) \). Thus, by (1.5) and (1.9) we obtain that for any given \(z_1, \dots , z_n \in \mathbb {D}\), such that \(z_1 \ne \cdots \ne z_n\),

which matches exactly with the Fisher–Hartwig predictions from [60, Section 1.2] with \(\gamma _1= \cdots = \gamma _n =2\). \(\blacksquare \)

Sample of the (normalized) Ginibre characteristic polynomial \(\frac{\prod _{j=1}^N |z-\lambda _j| }{\mathbb {E}_N[ \prod _{j=1}^N |z-\lambda _j| ] }\) for a random matrix of dimension \(N=3000\). This is an approximation of the Liouville measure \(\mu _G^\gamma \) with (subcritical) parameter \(\gamma =1\)

1.2 Outline of the article

The remainder of this article is devoted to the Proof of Theorem 1.1. The result follows directly by combining the upper-bound of Proposition 3.1 and the lower-bound from Proposition 2.1. As we already emphasized, the proof of the lower-bound follows from the connection with GMC theory and the details of the argument are reviewed in Sect. 2. In particular, it is important to obtain Gaussian asymptotics for the exponential moments of a mesoscopic regularization of the field \(\Psi _N\), see Proposition 2.3. These asymptotics are obtained by using the method developed by Ameur–Hedenmalm–Makarov [4] which relies on the Ward identity, also known as the loop equation, and the determinantal structure of the Ginibre ensemble. Compared with the proof of the central limit theorem in [4], we face two significant extra technical challenges: we must consider a mesoscopic linear statistic coming from a test function which develops logarithmic singularities as \(N\rightarrow \infty \). This implies that we need a more precise approximation for the correlation kernel of the biased determinantal process. For these reasons, we give a detailed Proof of Proposition 2.3 in Sects. 5 and 6. Our proof for the upper-bound is given in Sect. 3 and it relies on the subharmonicity of the logarithm of the absolute value of the Ginibre characteristic polynomial and the asymptotics from Theorem 1.4. In Sect. 3.2, we discuss an application to linear statistics of the Ginibre eigenvalues and give the Proof of Theorem 1.2.

2 Proof of the Lower-Bound

Recall that \(\Psi _N\) denotes the centered logarithm of the absolute value of the Ginibre characteristic polynomial, (1.9). The goal of this section is to obtain the following result:

Proposition 2.1

For any \(r>0\) and any \(\delta >0\), we have

Our strategy to prove Proposition 2.1 is to obtain an analogous lower-bound for a mesoscopic regularization of \(\Psi _N\) which is also compactly supported inside \(\mathbb {D}\). Note that it is also enough to consider the maximum in a disk \(\mathbb {D}_{\epsilon _0} = \{x\in \mathbb {C}: |x|\le \epsilon _0 \}\) for a small \(\epsilon _0>0\). To construct such a regularization, let us fix \(0< \epsilon _0 \le 1/4\) and a mollifier \(\phi \in \mathscr {C}^\infty _c(\mathbb {D}_{\epsilon _0})\) which is radial (see Footnote 8). For any \(0<\epsilon <1\), we denote \(\phi _\epsilon (\cdot ) = \phi (\cdot /\epsilon ) \epsilon ^{-2}\) and to approximate the logarithm of the characteristic polynomial, we consider the test function

We also denote \(\psi =\psi _1\). For technical reasons, it is simpler to work with test functions compactly supported inside \(\mathbb {D}\), which is not the case for \(\psi _\epsilon \). However, this can be fixed by making the following modification: for any \(z \in \mathbb {D}_{\epsilon _0}\), we define

It is easy (see Footnote 9) to see that the function \(g_N^z\) is smooth and compactly supported inside \(\mathbb {D}(z, \epsilon _0)\). Since we are interested in the regime where \(\epsilon (N)\rightarrow 0\) as \(N\rightarrow \infty \), we emphasize that \(g_N^z\) depends on the dimension \(N\in \mathbb {N}\) of the matrix. Then, the random field \(z\mapsto \mathrm {X}(g_N^z)\) is related to the logarithm of the Ginibre characteristic polynomial as follows:

In particular, \(z\mapsto \mathrm {X}(g_N^z)\) is still an approximate log-correlated field. Indeed, according to (1.7), (1.8) and formula (2.8) below, we expect that as \(N\rightarrow +\infty \)

This should be compared with formula (1.10).
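The compact support of \(g_N^z\) can be checked directly. The sketch below assumes that (2.1) defines \(\psi _\epsilon \) as the convolution of \(\log |\cdot |\) with \(\phi _\epsilon \), that the mollifier is normalized by \(\int \phi \, \mathrm {d}^2x = 1\), and it uses the expression \(g_N^{z} = \psi _{\epsilon }(\cdot -z) - \psi (\cdot -z)\) recalled in Sect. 2.3.

```latex
% \phi_\epsilon is radial, of unit mass, supported in |u| \le \epsilon \epsilon_0;
% circular means of \log|x-u| over |u| = \rho equal \log\max(|x|,\rho)
\psi_\epsilon(x) = \int \log|x-u| \, \phi_\epsilon(u) \, \mathrm{d}^2u = \log|x|
 \qquad \text{for } |x| \ge \epsilon \epsilon_0 ,
% Hence, for 0 < \epsilon \le 1 and |x - z| \ge \epsilon_0, both terms reduce to \log|x-z|
g_N^z(x) = \psi_\epsilon(x-z) - \psi(x-z) = \log|x-z| - \log|x-z| = 0 .
```

Smoothness is consistent with this picture, since both \(\psi _\epsilon \) and \(\psi \) are convolutions of the locally integrable function \(\log |\cdot |\) with smooth compactly supported functions.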

2.1 Gaussian multiplicative chaos

Let \(\mathrm {G}\) be the Gaussian free field (GFF) on \(\mathbb {D}\) with free boundary conditions. That is, \(\mathrm {G}\) is a Gaussian process taking values in the space of Schwartz distributions with covariance kernel:

Up to a factor of \(1/\pi \), the RHS of (2.4) is the Green’s function (see Footnote 10) for the Laplace operator \(-\Delta \) on \(\mathbb {C}\). Because of the singularity of the kernel (2.4) on the diagonal, \(\mathrm {G}\) is called a log-correlated field and it cannot be defined pointwise. In general, \(\mathrm {G}\) is interpreted as a random distribution valued in a Sobolev space \(H^{-\alpha }(\mathbb {D})\) for any \(\alpha >0\) [7]. In particular, for any mollifier \(\phi \) as above and any \(\epsilon >0\), we view

as a regularization of \(\mathrm {G}\).

The theory of Gaussian multiplicative chaos aims at defining the exponential of a log-correlated field. Since such a field is merely a random distribution, this is a non trivial problem. However, in the so-called subcritical phase, this can be done by a quite simple renormalization procedure. Namely, for \(\gamma >0\), we define \(\mu ^\gamma _\mathrm {G}= : e^{\gamma \mathrm {G}} :\) as

It turns out that this limit exists almost surely as a random measure on \(\mathbb {D}\) and that it does not depend on the mollifier \(\phi \) within a reasonable class. Moreover, in the case of the GFF normalized as in (2.4), it is a non-trivial measure if and only if the parameter \(0<\gamma < \sqrt{8}\); this is called the subcritical phase [7, 11, 54]. For general log-correlated fields, the theory of GMC goes back to the work of Kahane [37] and in the case of the GFF, the construction \(\mu ^\gamma _\mathrm {G}\) was re-discovered by Duplantier–Sheffield [25] and Rhodes–Vargas [50] from different perspectives. In a sense, the random measure \(\mu ^\gamma _\mathrm {G}\) encodes the geometry of the GFF. For instance, the support of \(\mu ^\gamma _\mathrm {G}\) is a fractal set which is closely related to the concept of thick points [34]. We will not discuss these issues here and refer instead to [7, 22] for further details. Let us just point out that the relationship between Theorem 2.2 and Corollary 2.4 below is based on such arguments.
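The threshold \(\sqrt{8}\) can be recovered from the standard criterion for Gaussian multiplicative chaos by a rescaling. The sketch below assumes that the singular part of the kernel (2.4) is \(\frac{1}{2}\log |x-z|^{-1}\) (an assumption consistent with the value \(\sqrt{8}\) quoted here), and it recalls the known fact that in dimension d the chaos of a field with covariance \(\log |x-z|^{-1} + \mathcal {O}(1)\) is non-trivial exactly for parameters in \((0,\sqrt{2d})\). The auxiliary field Y is a label introduced for this illustration.

```latex
% Write G = Y/\sqrt2 with E[Y(x)Y(z)] = \log|x-z|^{-1} + O(1), in dimension d = 2
: e^{\gamma \mathrm{G}} : \; = \; : e^{(\gamma/\sqrt2)\, Y} : ,
\qquad
\frac{\gamma}{\sqrt2} < \sqrt{2d} = 2
 \quad\Longleftrightarrow\quad \gamma < 2\sqrt2 = \sqrt8 .
```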

For log-correlated fields which are only asymptotically Gaussian, especially those coming from random matrix theory such as the logarithm of the Ginibre characteristic polynomial \(\Psi _N\), the theory of Gaussian multiplicative chaos has been developed in [45, 59]. The construction in [45] is inspired from the approach of Berestycki [11] and it has been recently applied to unitary random matrices in [48], as well as to Hermitian unitary invariant random matrices in [12, 22]. In this paper, we construct subcritical GMC measures coming from the regularization \( \mathrm {X}(g_N^z)\), (2.3), of the logarithm of the Ginibre characteristic polynomial at a scale \(\epsilon = N^{-1/2+\alpha }\) for any small \(\alpha >0\). This mesoscopic regularization makes it simpler to compute the leading asymptotics of the exponential moments of the field \( \mathrm {X}(g_N^z)\) — see Proposition 2.3 below. Then, using the main results from [45], it allows us to prove that the limit of the renormalized exponential \(\mu ^\gamma _N = : e^{\gamma \mathrm {X}(g_N^z)} :\) exists for all \(\gamma >0\) in the subcritical phase and that it is absolutely continuous with respect to the GMC measure \(\mu ^\gamma _\mathrm {G}\).

Theorem 2.2

Recall that \(0< \epsilon _0 \le 1/4\) is fixed. Let \(\gamma >0\) and \(g_N^{z}\) be as in (2.2) with \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\) for a fixed \(0<\alpha <1/2\). Let us define the random measure \(\mu ^\gamma _N\) on \( \mathbb {D}_{\epsilon _0}\) by

For any \(0<\gamma < \gamma _* = \sqrt{8}\), the measure \(\mu ^\gamma _N\) converges in law as \(N\rightarrow +\infty \) with respect to the weak topology toward a random measure \(\mu ^\gamma _\infty \) which has the same law, up to a deterministic constant, as \(e^{\gamma \mathrm {G}_1(x)}\mu ^\gamma _\mathrm {G}(\mathrm {d}x)\), where \(\mathrm {G}_1\) is the smooth Gaussian process obtained from \(\mathrm {G}\) as in (2.5) with \(\epsilon =1\) and \(\mu ^{\gamma }_\mathrm {G}\) is the GMC measure (2.6). In particular, our convergence covers the whole subcritical phase.

The Proof of Theorem 2.2 follows from applying [45, Theorem 2.6]. Let us check that the correct assumptions hold. First, we can deduce [45, Assumption 2.1, Assumption 2.2] from the CLT of Rider–Viràg (1.7). Indeed, for any fixed \(\epsilon >0\), as \(\psi _\epsilon \) is a smooth function, the process \((z, \epsilon )\mapsto \mathrm {X}\big (\psi _\epsilon (\cdot -z)\big )\) converges in the sense of finite dimensional distributions to a mean-zero Gaussian process whose covariance is given by (1.8) (see Footnote 11). Namely, letting \(\Sigma (\cdot ;\cdot )\) be the quadratic form associated with \(\Sigma (\cdot )\), we have for any \(z_1, z_2 \in \mathbb {D}_{\epsilon _0}\) and \(0<\epsilon _1, \epsilon _2 \le \epsilon _0\),

where the error term is uniform. In particular, (2.7) shows that the process \((z, \epsilon )\mapsto \mathrm {X}\big (\psi _\epsilon (\cdot -z)\big )\) converges in the sense of finite dimensional distributions to \((z, \epsilon )\mapsto \mathrm {G}_\epsilon (z)\) as in (2.5), which comes from mollifying a GFF. In this case, [45, Assumption 2.3] follows e.g. from [11, Theorem 1.1]. So, the only important input to deduce Theorem 2.2 is to verify [45, Assumption 2.4] which consists in obtaining Gaussian asymptotics for the joint exponential moments of the field \(\mathrm {X}(g_N^z)\). Namely, we need the following asymptotics:

Proposition 2.3

Fix \(0<\alpha <1/2\), \(R>0\) and let \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\). For any \(n\in \mathbb {N}\), \(\gamma _1 , \dots \gamma _n \in \mathbb {R}\), \(z_1, \dots , z_n \in \mathbb {C}\), we denote

with parameters \( \epsilon (N)\le \epsilon _1(N) \le \cdots \le \epsilon _n(N) <1\). We have

where \(\Sigma \) is given by (1.8) and the error term is uniform for all \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\) and \(\mathbf {\gamma } \in [-R,R]^n\).

The Proof of Proposition 2.3 is the most technical part of this paper and it is postponed to Sect. 5. It relies on adapting in a non-trivial way the arguments of Ameur–Hedenmalm–Makarov from [4]. In particular, our proof relies heavily on the determinantal structure of the Ginibre eigenvalues and we need local asymptotics for the correlation kernel of the ensemble obtained after making a small perturbation of the Ginibre potential; see Sect. 5.1. It turns out that these asymptotics are universal and can be derived using techniques inspired by the works of Berman [13, 14] which have also been applied to study the fluctuations of the eigenvalues of normal random matrices in [2,3,4].

As an important consequence of Theorem 2.2, we obtain the following corollary:

Corollary 2.4

Fix \(0<\alpha <1/2\), let \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\) and let \(\psi _\epsilon \) be as in (2.1). If \(\gamma _*=\sqrt{8}\), then for any \(\delta >0\) and any \(0< \epsilon _0 \le 1/4\), we have

The Proof of Corollary 2.4 follows from [22, Theorem 3.4] with a few non-trivial modifications; the details are given in Sect. 2.3.

2.2 Proof of Proposition 2.1

We are now ready to complete the Proof of Proposition 2.1. Observe that by (1.9) and (2.1), we have for \(z\in \mathbb {C}\) and \(0<\epsilon \le 1\),

In particular since \({\text {supp}}(\phi _\epsilon ) \subseteq \mathbb {D}_{\epsilon _0}\) for any \(0<\epsilon \le 1\), this implies that we have a deterministic bound for any \(z\in \mathbb {C}\),

Then

and by Corollary 2.4 with \(\alpha =\delta \), we obtain

Since \(0< \epsilon _0 \le 1/4\) and \(0<\delta <1/2\) are arbitrary, this yields the claim.

2.3 Proof of Corollary 2.4

This corollary follows from the results on the behavior of extreme values for general log-correlated fields which are asymptotically Gaussian developed in [22, Section 3]. Let us fix \(0<\epsilon _0 \le 1/4\). First of all, we verify that it follows from Proposition 2.3 and formula (2.8) that for any \(\gamma \in \mathbb {R}\), as \(N\rightarrow +\infty \)

uniformly for all \(z\in \mathbb {D}_{\epsilon _0}\). These asymptotics show that the field \(z\mapsto \mathrm {X}(g_N^{z})\) satisfies [22, Assumptions 3.1] on the disk \(\mathbb {D}_{\epsilon _0}\). Moreover, by Theorem 2.2, \(\mu ^\gamma _N(\mathbb {D}_{\epsilon _0}) \rightarrow \mu ^\gamma _\infty (\mathbb {D}_{\epsilon _0}) \) in distribution as \(N\rightarrow +\infty \) where \(0<\mu ^\gamma _\infty (\mathbb {D}_{\epsilon _0}) <+\infty \) almost surely. This follows from the fact that the random measure \(\mu ^\gamma _\infty (\mathrm {d}x) \propto e^{\gamma \mathrm {G}_1(x)}\mu ^\gamma _\mathrm {G}(\mathrm {d}x)\), \(\mathrm {G}_1\) is a smooth Gaussian process on \(\mathbb {D}\), \(\mathbb {D}_{\epsilon _0}\) is a continuity set for the GMC measure \( \mu ^\gamma _\mathrm {G}\) and \(0<\mu ^\gamma _\mathrm {G}(\mathbb {D}_{\epsilon _0}) <+\infty \) almost surely. Thus [22, Assumptions 3.3] holds and we can apply (see Footnote 12) [22, Theorem 3.4] to obtain a lower-bound for the maximum of the field \(z\mapsto \mathrm {X}(g_N^{z})\). This shows that for any \(0< \epsilon _0 \le 1/4\) and any \(\delta >0\),

Let us point out that heuristically, the lower-bound (2.11) follows from the facts that the random measure \(\mu ^\gamma _N\) from Theorem 2.2 has most of its mass in the set \(\left\{ z\in \mathbb {D}_{\epsilon _0} : \mathrm {X}(g_N^{z}) \ge \gamma (1-\delta )\Sigma ^2(g_N^{z}) \right\} \) for large N and that \(\mu ^\gamma _N\) is a non-trivial measure if and only if \(\gamma < \gamma _*\). Moreover, by [22, Proposition 3.8], we also obtain a lower-bound for the measure of the sets where the field \(z\mapsto \mathrm {X}(g_N^{ z})\) takes extreme values. Namely, under the assumptions of Theorem 2.2, we have for any \(0 \le \gamma < \frac{\gamma _*}{\sqrt{2}}\) and any small \(\delta >0\),

In Sect. 4, we use these asymptotics to compute the leading order of the measure of the sets of thick points of the Ginibre characteristic polynomial, hence proving Theorem 1.3.

Let us return to the Proof of Corollary 2.4 and recall that \(g_N^{z} = \psi _{\epsilon }(\cdot -z) - \psi (\cdot -z)\) with \(\epsilon =\epsilon (N)\). So, in order to obtain the lower-bound, we must show that the random variable \(\max _{z\in \mathbb {D}_{\epsilon _0}} \left| \mathrm {X}\left( \psi (\cdot -z) \right) \right| \) remains small compared to \(\log \epsilon (N)^{-1}\) for large \(N\in \mathbb {N}\). To prove this claim, we rely on the following general bound.

Lemma 2.5

Let \( \mathscr {F}_{r,0}\) be as in (1.12). For any \(0<r<1\), there exists a constant \(C_r>0\) such that

Proof

It follows from the estimate (3.1) below that we have uniformly for all \(\gamma \in [-1,1]\) and all \(z\in \mathbb {D}_r\),

In particular, by Markov’s inequality, this implies that for any \(\lambda >0\),

Observe that according to (1.6), we have for any test function \(f\in \mathscr {C}^2(\mathbb {C})\),

In particular, this implies that for all \(f\in \mathscr {F}_{r,0}\),

Then, by Jensen’s inequality,

Therefore, it holds that

Hence, to obtain the bound (2.13), it suffices to show that for all \(x\in \mathbb {D}_r\),

Let us fix \(z\in \mathbb {D}_r\) and \(\ell _N = \frac{1}{2} \log \sqrt{N}\). Using (2.14) with \(\gamma = \lambda /\ell _N \), we obtain for any \(0<\lambda < \ell _N\),

Then, by integrating this estimate, we obtain

Moreover, using the bound (2.15), we also have

because \(N^{1/8} e^{-\ell _N} = N^{-1/8}\). By combining the estimates (2.18) and (2.19), we obtain for any \(N\in \mathbb {N}\),

This proves the inequality (2.17) and it completes the proof. \(\square \)

We are now ready to complete the Proof of Corollary 2.4.

Proof of Corollary 2.4

Let us recall that we let \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\) for \(0<\alpha <1/2\). Moreover, by (2.1), we have \(\Delta \psi = \phi \in \mathscr {C}^\infty _c(\mathbb {D}_{\epsilon _0})\) with \(0<\epsilon _0 \le 1/4\). Then, for any \(z\in \mathbb {D}_{\epsilon _0}\), the function \(x\mapsto \psi (x-z) / \Vert \phi \Vert _\infty \) belongs to \(\mathscr {F}_{1/2,0}\). By Lemma 2.5 and Chebyshev’s inequality, this implies that for any \(\delta >0\),

In particular, the RHS of (2.20) converges to 0 as \(N\rightarrow +\infty \). Moreover, since \(\mathrm {X}(\psi _{\epsilon }(\cdot -z)) = \mathrm {X}(g_N^{z}) + \mathrm {X}(\psi (\cdot -z))\) and \(\gamma ^*\ge 1\), we have

By (2.11) and (2.20), this implies that

which completes the proof. \(\square \)

3 Proof of the Upper-Bound

The goal of this section is to prove the upper-bound in Theorem 1.1. Then, in Sect. 3.2, we adapt the proof in order to prove Theorem 1.2.

Proposition 3.1

For any fixed \(0<r<1\) and \(\varepsilon >0\), we have

In order to prove Proposition 3.1, we need the following consequence of Theorem 1.4: for any \(0<r<1\), there exists a constant \(C_r>0\) such that for any \(\gamma \in [-1,4]\),

In fact, we do not need the precise asymptotics (1.17) and the upper-bound (3.1) for the Laplace transform of the field \(\Psi _N\) suffices for our applications. For instance, it is straightforward to deduce the following bounds.

Lemma 3.2

Fix \(0<r<1\) and recall the definition (1.13) of the set \(\mathscr {T}_N^\beta \) of \(\beta \)-thick points. We have for any \(\beta \in [0,1]\),

Proof

By Markov’s inequality, we have for any \(\beta \ge 0\),

Taking \(\gamma =4\beta \) and using the estimate (3.1), this implies the claim. \(\square \)
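The choice \(\gamma =4\beta \) in the Proof of Lemma 3.2 can be checked numerically. The following sketch assumes that the estimate (3.1), whose display is not reproduced here, takes the form \(\mathbb {E}_N[e^{\gamma \Psi _N(z)}] \le C_r N^{\gamma ^2/8}\); this reading and the function names are ours. Under this assumption, Markov's inequality bounds the probability that \(\Psi _N(z) \ge \beta \log N\) by \(C_r N^{\gamma ^2/8-\gamma \beta }\), and the exponent should be minimal at \(\gamma =4\beta \), where it equals \(-2\beta ^2\).

```python
def markov_exponent(gamma: float, beta: float) -> float:
    """Exponent of N in the Markov bound.

    Assumption (ours): E[exp(gamma * Psi_N(z))] <= C * N ** (gamma ** 2 / 8).
    """
    return gamma ** 2 / 8 - gamma * beta


def best_gamma(beta: float, steps: int = 4000) -> float:
    """Grid search for the minimising gamma in [0, 4], the range used for beta in [0, 1]."""
    grid = [4 * k / steps for k in range(steps + 1)]
    return min(grid, key=lambda g: markov_exponent(g, beta))


if __name__ == "__main__":
    for beta in (0.25, 0.5, 2 ** -0.5, 1.0):
        g = best_gamma(beta)
        # Compare the grid minimiser with 4*beta and the minimum with -2*beta**2.
        print(beta, g, 4 * beta, markov_exponent(g, beta), -2 * beta ** 2)
```

At \(\beta = 1/\sqrt{2}\) the exponent equals \(-1\), which is the value of \(\beta \) at which Lemma 3.2 is applied in Sect. 3.1.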

For the Proof of Proposition 3.1, we also need the following simple Lemma.

Lemma 3.3

Recall that \((\lambda _1, \dots , \lambda _N)\) denotes the eigenvalues of a Ginibre random matrix. For any \(\delta \in [0,1]\) (possibly depending on N), we have for all \(N\ge 3\),

Proof

Let us recall that Kostlan’s Theorem [40] states that the random variables \(\left\{ N |\lambda _1|^2 , \dots , N |\lambda _N|^2\right\} \) have the same law as \(\left\{ \varvec{\gamma }_1, \dots , \varvec{\gamma }_N\right\} \) where \(\varvec{\gamma }_k\) are independent random variables with distribution \(\mathbb {P}[\varvec{\gamma }_k \ge t] = \frac{1}{\Gamma (k)} \int _t^{+\infty } s^{k-1} e^{-s} ds\) for \(k=1, \dots , N\). By a union bound and a change of variable, this implies that

where \(\phi (s) = s- \log s -1\). Since \(\phi \) is strictly convex on \([0,+\infty )\) with \(\phi '(t)=1-1/t\), this implies that

Using that \(\phi (1+\delta ) \ge \delta ^2/4\) for all \(\delta \in [-1,1]\), this completes the proof. \(\square \)
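The two ingredients of the previous proof can be sanity-checked numerically. The sketch below is ours and is not part of the argument: it evaluates the union bound coming from Kostlan's Theorem, using the standard identity \(\mathbb {P}[\varvec{\gamma }_k \ge t] = e^{-t}\sum _{j<k} t^j/j!\) for integer k, and it tests the elementary inequality \(\phi (1+\delta ) \ge \delta ^2/4\) on a grid. The function names are hypothetical.

```python
import math


def phi(s: float) -> float:
    """phi(s) = s - log(s) - 1, as in the proof of Lemma 3.3 (s > 0)."""
    return s - math.log(s) - 1.0


def gamma_tail(k: int, t: float) -> float:
    """P[gamma_k >= t] for integer k >= 1 and t > 0, i.e. exp(-t) * sum_{j<k} t^j / j!."""
    log_terms = [-t + j * math.log(t) - math.lgamma(j + 1) for j in range(k)]
    top = max(log_terms)  # factor out the largest term to avoid overflow
    return math.exp(top) * sum(math.exp(v - top) for v in log_terms)


def union_bound_outside(n: int, radius: float) -> float:
    """Union bound for P[max_j |lambda_j| >= radius], using that N|lambda_j|^2 ~ gamma_k."""
    t = n * radius ** 2
    return sum(gamma_tail(k, t) for k in range(1, n + 1))


if __name__ == "__main__":
    n = 50
    print("union bound for radius 2:", union_bound_outside(n, 2.0))
    print("sqrt(N) * exp(-N):      ", math.sqrt(n) * math.exp(-n))
    grid = [k / 1000 for k in range(-999, 1001)]
    print("phi(1+d) >= d^2/4 on grid:", all(phi(1 + d) >= d * d / 4 for d in grid))
```

The second printed value is the bound \(\sqrt{N} e^{-N}\) that is quoted for \(\mathbb {P}_N[\mathscr {B}^c]\) in the Proof of Theorem 1.2.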

We are now ready to give the Proof of Proposition 3.1.

3.1 Proof of Proposition 3.1

Fix \(0<r<1\) and a small \(\varepsilon >0\) such that \(r' = r+2\sqrt{\varepsilon } <1\). For \(z\in \mathbb {C}\), let \(P_N(z) := {\textstyle \prod _{j=1}^N } |z-\lambda _j|\) and recall that the logarithmic potential of the circular law is \(\varphi \), (1.4). Conditionally on the event \(\left\{ \max _{j\in [N]} |\lambda _j| \le \frac{3}{2} \right\} \), we have the a-priori bound: \(\max _{z\in \overline{\mathbb {D}}}|P_N(z)| \le (\frac{5}{2})^N\). Since \(\Psi _N = \log P_N - N\varphi \) and \(-\varphi \le 1/2\), by Lemma 3.3, this shows that

The function \(\Psi _N\) is upper-semicontinuous on \(\mathbb {C}\), so that it attains its maximum on \(\overline{\mathbb {D}_r}\). Let \(x_* \in \overline{\mathbb {D}_r}\) be such that

Since the function \(z\mapsto \log P_N(z)\) is subharmonic on \(\mathbb {C}\), we have for any \(\delta >0\),

Observe that as \(\varphi (z) = \frac{|z|^2-1}{2} \) for \(z\in \mathbb {D}\), if \(\mathbb {D}(x_*,\delta ) \subset \mathbb {D}\), then

By (3.3), this implies that

Choosing \(\delta =\sqrt{ \varepsilon \frac{\log N}{N}}\) in (3.4), we obtain

On the event \(\Big \{{\textstyle \max _{\overline{\mathbb {D}_r}}}\ \Psi _N\ge \frac{1+\varepsilon }{\sqrt{2}} \log N \Big \}\), this implies that

On the other hand, by (1.13) with \(\beta = 1/\sqrt{2}\),

Combining (3.5) and (3.6), this implies

Hence, we conclude that for any \(\eta \in [0,1]\), on the event \(\Big \{ \frac{1+\varepsilon }{\sqrt{2}} \log N \le \max _{\overline{\mathbb {D}_r}} \Psi _N \le \max _{\overline{\mathbb {D}_{r'}}} \Psi _N \le \frac{\varepsilon ^2}{2} (\log N)^{1+\eta } \Big \}\),

By Lemma 3.2 applied with \(\beta = 1/\sqrt{2}\), this implies that

By an argument similar to (3.4), with \(\delta = \varepsilon \sqrt{\frac{(\log N)^{1+\eta }}{2N}}\) and choosing \(x_*\in \overline{\mathbb {D}_{r'}}\) such that \(\Psi _N(x_*) = {\textstyle \max _{\overline{\mathbb {D}_{r'}}}}\ \Psi _N\), it holds conditionally on the event \( \big \{\max _{\overline{\mathbb {D}_{r'}}} \Psi _N \ge N \delta ^2\big \}\),

Let \(\mathscr {A} = \left\{ z\in \overline{\mathbb {D}_{r''}} : \Psi _N(z) \ge \frac{\varepsilon ^2(\log N)^{1+\eta }}{8} \right\} \) with \(r'' = r'+\epsilon <1\). Conditionally on the event \( \big \{ \tfrac{\varepsilon ^2}{2} (\log N)^{1+\eta } \le \max _{\overline{\mathbb {D}_{r'}}} \Psi _N\)\( \le \max _{\overline{\mathbb {D}}} \Psi _N \le 3N\big \}\), this gives

so that with \(\eta =1/2\),

A variation of the Proof of Lemma 3.2 using the estimate (3.1) with \(0<r''<1\) and \(\gamma =4\) shows that \( \mathbb {E}_N\left[ |\mathscr {A}| \right] \le C_{r''} N e^{-\varepsilon ^2(\log N)^{3/2}/2}\). By (3.8), we conclude that

In order to complete the proof, it remains to observe that by combining the estimates (3.7), (3.9) and (3.2), we have proved that if \(\varepsilon >0\) is sufficiently small, then

3.2 Concentration for linear statistics: Proof of Theorem 1.2

In order to prove Theorem 1.2, we need the following bounds as well as Lemma 3.3.

Lemma 3.4

Fix \(\eta >0\) and \(0<r<1\). There exists a universal constant \(A>0\) such that conditionally on the event \(\mathscr {B} = \{ \max _{j=1,\ldots , N} |\lambda _j| \le 2\} \), we have for any function \(f\in \mathscr {C}^2(\mathbb {C})\) (possibly depending on \(N\in \mathbb {N}\)) which is harmonic in \(\mathbb {C}\setminus \overline{\mathbb {D}_r}\),

where \(\mathscr {G}_\eta := \left\{ z\in \mathbb {D}_r : |\Psi _N(z)| > \eta \log N \right\} \) (with \(\eta >0\) possibly depending on \(N\in \mathbb {N}\)) and \(C>0\). Moreover, there exists a constant \(C_r>0\) such that for any \(\kappa >0\),

Proof

Observe that for any \(f\in \mathscr {C}^2(\mathbb {C})\) which is harmonic in \(\mathbb {C}\setminus \overline{\mathbb {D}_r}\), by definition of \(\mathscr {G}_\eta \), we have

Then, by the Cauchy–Schwarz inequality,

and by (1.9), it holds conditionally on the event \(\mathscr {B}\),

where \(\displaystyle C= \sqrt{ 2 \sup _{|x| \le 2} \int _\mathbb {D}\left( \log |z-x| \right) ^2 \mathrm {d}^2z + \frac{8\pi }{15}} \) is a numerical constant. This shows that

Then, according to formula (2.16) and (3.12), we obtain (3.10). In order to estimate the size of the set \(\mathscr {G}_\eta \), let us observe that combining (2.14) with \(\gamma =1\) and Markov’s inequality, we obtain

By Markov’s inequality, this yields the estimate (3.11). \(\square \)

Proof of Theorem 1.2

By Lemma 3.4, for any test function \(f \in \mathscr {F}_{r,\kappa }\), it holds conditionally on the event \(\mathscr {B} = \{ \max _{j=1,\dots , N} |\lambda _j| \le 2\} \) that for any small \(\eta >0\)

Hence, this implies that if \(N \in \mathbb {N}\) is sufficiently large,

By Lemma 3.3 with \(\delta =1\) and (3.11), we have shown that \(\mathbb {P}_N[\mathscr {B}^c] \le \sqrt{N} e^{-N}\) and \( \mathbb {P}_N\left[ |\mathscr {G}_\eta | \ge N^{-9/8-\kappa } \right] \le C_r N^{5/4+\kappa -\eta }\). By combining these estimates, this completes the proof. \(\square \)

4 Thick Points: Proof of Theorem 1.3

Like the Proof of Theorem 1.1, the Proof of Theorem 1.3 consists of a separate upper-bound (4.1) and lower-bound (Proposition 4.1 below) and it relies on similar techniques. In particular, the upper-bound follows directly from Lemma 3.2. Namely, by Markov’s inequality, we have for any \(\beta \in [0,1]\) and \(\delta >0\),

Then, to obtain the lower-bound, we rely on the fact that the field \(\Psi _N\) can be well approximated by \(\mathrm {X}\left( \psi _\epsilon (\cdot -z)\right) \) for \(\epsilon = N^{-1/2+\alpha }\) with a small scale \(\alpha >0\) and use the estimate (2.12).

Proposition 4.1

For any \(0<r<1\), any \(0\le \beta <1/\sqrt{2}\) and any \(\delta >0\), we have

Proof

We fix parameters \(r\in (0,1)\), \(\beta \in [0,1/\sqrt{2})\) and we abbreviate \(\mathscr {T}_N^\beta = \mathscr {T}_N^\beta (r)\). Recall that \(\phi \in \mathscr {C}^\infty _c(\mathbb {D}_{\epsilon _0})\) is a mollifier and that for any \(z\in \mathbb {C}\),

where \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\) — the scale \(0<\alpha <1/2\) will be chosen later in the proof depending on \(\beta \) and \(\delta \). Throughout the proof, we assume that \(\epsilon \) is small compared to \(\epsilon _0\le 1/4\), we let \(\mathrm {c} = \sup _{x\in \mathbb {C}} \phi (x)\) and for a small \(\delta \in (0, 1/2]\),

We also define the event (of large probability):

Since \(g_N^{z}= \psi _\epsilon (\cdot -z) -\psi (\cdot -z)\) by (2.2), we have for any \(\gamma >0\),

Then, using the estimates (2.12) and (2.20), we obtain that for any \(0 \le \gamma < \frac{\gamma _*}{\sqrt{2}}\),

Hence, choosing the scale \(\alpha = \frac{\delta }{8\sqrt{2}(\gamma +\delta /2)}\) with \(\gamma = \sqrt{8}\beta \), this implies that for any \(0\le \beta <1/\sqrt{2}\),

By formula (4.2) and the definition of \(\beta \)-thick points, we have conditionally on \(\mathscr {A}\), for any \(z\in \mathbb {D}_{\epsilon _0}\),

where we used that \( \phi _\epsilon (x-z) \le \mathrm {c} \epsilon ^{-2} \mathbb {1}_{|x-z| \le \epsilon /4}\) at the last step. Now, let us tile the disk \(\mathbb {D}_{\epsilon _0}\) with squares of area \(\epsilon ^{2}\). To be specific, let \(M = \lceil \epsilon ^{-1} \rceil \) and \(\square _{i,j} = [i\epsilon , (i+1)\epsilon ] \times [j\epsilon , (j+1)\epsilon ]\) for all integers \(i,j \in [-M,M]\). Note that since \(z\mapsto \mathrm {X}\left( \psi _\epsilon (\cdot -z)\right) \) is a continuous process, for any \(i,j \in \mathbb {Z} \cap [-M,M]\), we can choose

The point of this construction is that we have the deterministic bound

Moreover if \(z_{i,j} \in \Upsilon _N^\beta \), (4.4) shows that conditionally on \(\mathscr {A}\),

By (4.5), this implies that

Since the squares \(\square _{i,j}\) are disjoint (except for their sides) and \(z_{i,j} \in \square _{i,j}\), we further have the deterministic bound

Hence, we conclude that conditionally on \(\mathscr {A}\), for \(0\le \beta <1/\sqrt{2}\) and \(\delta >0\) sufficiently small (but independent of N),

Finally, according to Proposition 3.1, we have \(\mathbb {P}_N[\mathscr {A}] \rightarrow 1\) as \(N\rightarrow +\infty \), so that by combining the previous estimate with (4.3), this completes the proof. \(\square \)
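The tiling used in the previous proof can be sketched in a few lines. The display that selects the points \(z_{i,j}\) is not reproduced here, so the code below assumes that \(z_{i,j}\) is (an approximation of) a maximiser of the field on the square \(\square _{i,j}\); this assumption, the toy field and all function names are ours.

```python
import math
from typing import Callable, Dict, List, Tuple

Square = Tuple[int, int]


def squares_meeting_disk(eps: float, radius: float) -> List[Square]:
    """Indices (i, j), |i|, |j| <= M = ceil(1/eps), of the squares
    [i*eps, (i+1)*eps] x [j*eps, (j+1)*eps] that intersect the disk |z| <= radius."""
    m = math.ceil(1.0 / eps)
    out = []
    for i in range(-m, m + 1):
        for j in range(-m, m + 1):
            # Point of the square closest to the origin (clamp 0 to each side).
            cx = max(i * eps, min(0.0, (i + 1) * eps))
            cy = max(j * eps, min(0.0, (j + 1) * eps))
            if cx * cx + cy * cy <= radius * radius:
                out.append((i, j))
    return out


def representatives(field: Callable[[complex], float], eps: float, radius: float,
                    samples: int = 4) -> Dict[Square, complex]:
    """Pick in each square the sample point where `field` is largest (assumed role of z_{i,j})."""
    reps = {}
    for (i, j) in squares_meeting_disk(eps, radius):
        pts = [complex((i + a / samples) * eps, (j + b / samples) * eps)
               for a in range(samples + 1) for b in range(samples + 1)]
        reps[(i, j)] = max(pts, key=field)
    return reps


def toy_field(z: complex) -> float:
    """Hypothetical continuous field, used only to exercise the construction."""
    return -abs(z - 0.1)


if __name__ == "__main__":
    eps, radius = 0.05, 0.25
    reps = representatives(toy_field, eps, radius)
    # The selected squares cover the disk, so their total area is at least pi * radius^2.
    print(len(reps), len(reps) * eps ** 2, math.pi * radius ** 2)
```

Since each selected point lies in its own square and the squares overlap only along their sides, counting the points \(z_{i,j}\) with a given property controls the area of the union of the corresponding squares, which is how the tiling enters the deterministic bounds above.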

5 Gaussian Approximation

In this section, we turn to the proof of our main asymptotic result: Proposition 2.3. Its proof relies on the so-called Ward’s identity or loop equation which has already been used in [4] as well as [8, 9] to study the fluctuations of linear statistics of eigenvalues of random normal matrices and two-dimensional Coulomb gases respectively. For completeness, we provide a detailed proof of the loop equation that we use in Sect. 5.2. Then, to show that the error terms in this equation are small, we rely on the determinantal structure of the ensemble obtained after making a small perturbation of the potential Q and on a local approximation of its correlation kernel (see Proposition 5.3 below). This approximation is justified in Sect. 6 based on the method from [4] and we use it to prove that the error terms are indeed negligible as \(N\rightarrow +\infty \) in Sects. 5.4–5.7. Finally, we finish the Proof of Proposition 2.3 in Sect. 5.8 by using a classical argument introduced by Johansson [36] to prove a CLT for linear statistics of \(\beta \)-ensembles on \(\mathbb {R}\). Before starting our analysis, we need to introduce further notation.

5.1 Notation

For any \(N\in \mathbb {N}\), we let

Let us recall that by Cauchy’s formula, if f is smooth and compactly supported inside \(\mathbb {D}\), we have

where \(\sigma (\mathrm {d}x) = \frac{1}{\pi } \mathbb {1}_{\mathbb {D}} \mathrm {d}^2 x\) denotes the circular law. Throughout Sect. 5, we fix \(n\in \mathbb {N}\), \(\mathbf {\gamma } \in [-R,R]^n\), \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\) and we let \(g_N = g_N^{\mathbf {\gamma }, \mathbf {z}}\) be as in formula (2.9). We recall that as \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\) varies, the functions \(x\mapsto g_N^{\mathbf {\gamma }, \mathbf {z}}(x)\) remain smooth and compactly supported inside \(\mathbb {D}_{2\epsilon _0}\) for all \(N\in \mathbb {N}\). Let us define for \(t>0\),

The biased measure \(\mathbb {P}_N^*\) corresponds to an ensemble of the type (1.1) with a perturbed potential \(Q^* := Q- \frac{tg_N}{2N}\). Therefore, under \(\mathbb {P}_N^*\), \(\lambda =(\lambda _1, \dots \lambda _N)\) also forms a determinantal point process on \(\mathbb {C}\) with a correlation kernel:

where \((p_0^*, \dots , p_{N-1}^*)\) is an orthonormal basis of \(\mathscr {P}_N\) with respect to the inner product inherited from \(L^2(e^{-2N Q^*})\) such that \({\text {deg}}(p_k^*) = k\) for \(k=0,\dots , N-1\). We denote

and we define the perturbed one-point function: \(u_N^*(x) : = K_N^*(x,x) \ge 0 \). By definition, we record that for any \(N\in \mathbb {N}\) and all \(x\in \mathbb {C}\),

Finally, we set \(\widetilde{u}_N^* : = u_N^* - \sigma \), so that for any smooth function \(f:\mathbb {C}\rightarrow \mathbb {C}\), we have

Conventions 5.1

As in Proposition 2.3, we fix a scale \(0<\alpha <1/2\) and let \(\epsilon =\epsilon (N) = N^{-1/2+\alpha }\). We also fix \(\beta >1\) and let \(\delta = \delta (N) = \sqrt{(\log N)^\beta /N}\) as in Proposition 5.3 below. Throughout Sect. 5, we assume that the dimension \(N\in \mathbb {N}\) is sufficiently large so that \( \delta /\epsilon \le 1/4\) and \(( \delta /\epsilon )^\ell \le N^{-1}\) for a fixed \(\ell \in \mathbb {N}\); e.g. we can pick \(\ell = \lfloor 2/\alpha \rfloor \). Moreover, \(C, N_0 >0\) are positive constants which may change from line to line and depend only on the mollifier \(\phi \), the parameters \(R,\alpha ,\beta , \epsilon _0>0\), \(n, \ell \in \mathbb {N}\) and \(t\in [0,1]\) above. Then, we write \(A_N=\mathcal {O}(B_N)\) if there exists such a constant \(C>0\) such that \(0\le A_N \le C B_N\).
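To get a feeling for how large N must be in Conventions 5.1, one can evaluate the two conditions directly. With \(\epsilon = N^{-1/2+\alpha }\) and \(\delta = \sqrt{(\log N)^\beta /N}\), we have \(\log (\delta /\epsilon ) = \tfrac{\beta }{2} \log \log N - \alpha \log N\). The sketch below works with \(\log N\) as the variable to avoid overflow; the function name and the sample parameters are ours.

```python
import math


def conventions_hold(log_n: float, alpha: float, beta: float) -> bool:
    """Check delta/eps <= 1/4 and (delta/eps)**ell <= 1/N with ell = floor(2/alpha).

    Uses log(delta/eps) = (beta/2) * log(log N) - alpha * log N.
    """
    ell = math.floor(2.0 / alpha)
    log_eta = 0.5 * beta * math.log(log_n) - alpha * log_n
    return log_eta <= math.log(0.25) and ell * log_eta <= -log_n


if __name__ == "__main__":
    alpha, beta = 0.25, 2.0  # sample values with 0 < alpha < 1/2 and beta > 1
    for log_n in (10.0, 20.0, 25.0, 30.0, 40.0):
        print(log_n, conventions_hold(log_n, alpha, beta))
```

For these sample values both conditions only hold once \(\log N\) is fairly large, which illustrates that the conventions are genuinely asymptotic requirements.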

5.2 Ward’s identity

Formula (5.8) below is usually called Ward’s equation or loop equation and the terms \(\mathfrak {T}^k_N\) for \(k=1,2,3\) should be treated as corrections because of the factor 1/N in front of them.

This equation is the key input of a method pioneered by Johansson [36] to establish that linear statistics of \(\beta \)-ensembles are asymptotically Gaussian. In the following, we follow the approach of Ameur–Hedenmalm–Makarov [4, Section 2] who applied Johansson’s method to study the fluctuations of the eigenvalues of random normal matrices, including the Ginibre ensemble.

Proposition 5.2

If \(g\in \mathscr {C}^2_c(\mathbb {D})\), we have for any \(N\in \mathbb {N}\) and \(t\in (0,1]\),

where \(\Sigma (\cdot ;\cdot )\) denotes the quadratic form associated with (1.8),

and

Proof

An integration by parts gives for any \(h\in \mathscr {C}^1(\mathbb {C})\) with compact support:

Observe that with \(h = \overline{\partial }g\), by (5.7) and (5.2), it holds

On the one hand, using the determinantal formula for the second correlation function of the ensemble \(\mathbb {P}_N^*\), we have

where the second term is equal to \(\mathfrak {T}^3_N(g)\) and the first term on the RHS satisfies

On the other hand, by (1.5), \(\partial Q (x) = \partial \varphi (x) = \frac{1}{2} \int \frac{1}{x-z} \sigma (\mathrm {d}z) \) for all \(x\in \mathbb {D}\) and, as \({\text {supp}}(h) \subset \mathbb {D}\), we also have

Combining formulae (5.11), (5.12) and (5.13), we obtain

By formula (5.10), this implies that

Combining formulae (5.9) and (5.14) with \(h = \overline{\partial }g\), this shows that

where we used that \(\partial \overline{\partial }g = \frac{1}{4} \Delta g\). Finally using that \(g\in \mathscr {C}^2_c(\mathbb {D})\), \(\displaystyle \int \Delta g(x) \sigma (\mathrm {d}x) =0 \) and by (1.8), we conclude that

Combining formulae (5.15) and (5.16), this completes the proof. \(\square \)
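The identity \(\partial \overline{\partial }g = \frac{1}{4} \Delta g\) used in the previous proof is a consequence of the conventions \(\partial = \frac{1}{2}(\partial _x - \mathrm {i}\partial _y)\) and \(\overline{\partial } = \frac{1}{2}(\partial _x + \mathrm {i}\partial _y)\). As a sanity check, the following sketch compares both sides by central finite differences for a smooth test function; the test function, the step size and the function names are hypothetical choices of ours.

```python
import math
from typing import Callable


def g(z: complex) -> float:
    """Hypothetical smooth test function (not taken from the paper)."""
    return math.exp(-abs(z) ** 2) * (1.0 + z.real * z.imag)


def d_bar(f: Callable[[complex], complex], z: complex, h: float) -> complex:
    """Central-difference approximation of (d/dx + i d/dy) / 2."""
    fx = (f(z + h) - f(z - h)) / (2 * h)
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)
    return 0.5 * (fx + 1j * fy)


def d(f: Callable[[complex], complex], z: complex, h: float) -> complex:
    """Central-difference approximation of (d/dx - i d/dy) / 2."""
    fx = (f(z + h) - f(z - h)) / (2 * h)
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)
    return 0.5 * (fx - 1j * fy)


def laplacian(f: Callable[[complex], complex], z: complex, h: float) -> complex:
    """Five-point stencil for the Laplacian."""
    return (f(z + h) + f(z - h) + f(z + 1j * h) + f(z - 1j * h) - 4 * f(z)) / h ** 2


if __name__ == "__main__":
    z0, h = 0.3 + 0.2j, 1e-3
    lhs = d(lambda w: d_bar(g, w, h), z0, h)  # approximates d d-bar g at z0
    rhs = laplacian(g, z0, h) / 4             # approximates Laplacian(g) / 4 at z0
    print(lhs, rhs, abs(lhs - rhs))
```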

5.3 Kernel approximation

Recall that the probability measure \(\mathbb {P}_N^*\) induces a determinantal process on \(\mathbb {C}^N\) with correlation kernel \(K_N^*\), (5.5). In order to control the RHS of (5.8), we need the asymptotics of this kernel as the dimension \(N\rightarrow +\infty \). In general, this is a challenging problem; however, it is expected that \(K_N^*\) decays quickly off the diagonal and that its asymptotics near the diagonal are universal in the sense that they are similar to those of the Ginibre correlation kernel \(K_N\). In Sect. 6, using the method from Ameur–Hedenmalm–Makarov [2, 4] which relies on Hörmander’s inequality and the properties of reproducing kernels, we compute the asymptotics of \(K_N^*\) near the diagonal. Recall that \(g_N = g_N^{\mathbf {\gamma }, \mathbf {z}}\) as in (2.9) and our Conventions 5.1. Let us also define the approximate Bergman kernel:

where \(\Upsilon _N^w(u) : = \sum _{i=0}^{\ell } \frac{u^i}{i!} \partial ^i g_N(w)\). We also let

Let us state our main approximation result for the perturbed kernels which corresponds to [3, Lemma A.1] in the case where the test function \(g_N\) depends on \(N\in \mathbb {N}\) and develops logarithmic singularities as \(N\rightarrow +\infty \). Because of these significant differences, we adapt the proof in Sect. 6.3.

Proposition 5.3

Let \(\vartheta _N : = \mathbb {1}_\mathbb {D}+ \sum _{k=1}^n \epsilon _k^{-2} \mathbb {1}_{\mathbb {D}(z_k, \epsilon _k)}\) and \(\delta = \delta (N) : = \sqrt{(\log N)^\beta /N}\) for \(\beta >1\). There exist constants \(L, N_0>0\) such that for all \(N\ge N_0\), we have for any \(z\in \mathbb {D}_{1-2\delta }\) and all \(w\in \mathbb {D}(z,\delta )\),

Remark 5.4

We emphasize again that the constants \(L, N_0>0\) do not depend on \(\mathbf {\gamma }\in [-R,R]^n\), \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\), nor \(t\in [0,1]\). Consequently, the estimates of Sects. 5.4–5.7 bear the same uniformity even though this will not be emphasized to lighten the presentation. In fact, since the parameter \(t\in (0,1]\) is not relevant for our analysis, we will also assume that \(t=1\) to simplify notation — this amounts to changing the parameters \(\mathbf {\gamma }\) to \(t\mathbf {\gamma }\). \(\blacksquare \)

In the remainder of this section and in Sect. 5.4, we discuss some consequences of the approximation of Proposition 5.3. Then, in Sects. 5.5–5.7, we control the error terms \(\mathfrak {T}^k_N(g_N)\) for \(k=1,2,3\) in order to complete the Proof of Proposition 2.3 in Sect. 5.8.

By definition, with \(t=1\), we have for any \(z\in \mathbb {C}\),

Then according to (5.7) and by taking \(w=z\) in Proposition 5.3, this implies that for any \(z\in \mathbb {D}_{1-2\delta }\),

where we used that the circular density \(\sigma (z) = 1/\pi \) if \(z\in \mathbb {D}\).

Lemma 5.5

It holds as \(N\rightarrow +\infty \),

Proof

First, let us observe that since \(\sigma \) is a probability measure supported on \(\overline{\mathbb {D}}\), we have by (5.6),

Moreover, by (5.19) and using that \(\displaystyle \int _\mathbb {D}\vartheta _N(x) \mathrm {d}^2x = (n+1)\pi \), we also have

Since \(\widetilde{u}_N^* = u_N^* - \sigma \) and \(\displaystyle \int _{|x| \le 1-2\delta } \sigma (\mathrm {d}x) = (1-2\delta )^2 \), the previous estimate shows that

Combining (5.22) with formula (5.20), we conclude that as \(N\rightarrow +\infty \),

Moreover, using the uniform bound from Lemma 6.2 below, there exists \(C>0\) such that \( | \widetilde{u}_N^*(x) | \le C N\) for all \(x\in \mathbb {C}\) which implies that

Combining the estimates (5.21), (5.23) and (5.24), this completes the proof. \(\square \)

5.4 Technical estimates

We denote the Gaussian density with variance 2/N by \(\Phi _N(u) : = \tfrac{N}{\pi } e^{-N|u|^2}\). Since for any \(x,z\in \mathbb {C}\),

we deduce from formulae (5.17)–(5.18) with \(t=1\) that

We should view the last factor of (5.26) as a correction. Indeed on small scales, i.e. if \(|x-z| \le \delta \), then \( e^{g_N(z)-g_N(x) -2 \mathfrak {R}\left\{ \sum _{i=1}^\ell \frac{(z-x)^i}{i!} \partial ^i g_N(x) \right\} } = 1+\mathcal {O}(\eta )\) where \(\eta = \delta /\epsilon \) goes to 0 as \(N\rightarrow +\infty \). In particular, this implies that for N sufficiently large, it holds for all \(x,z\in \mathbb {C}\) such that \(|x-z| \le \delta \),

Actually, formula (5.26) shows that on microscopic scales, \(| K^\#_N(z,x) |^2\) is well approximated by the Gaussian kernel \(\Phi _N(x-z)\). As in [4, Lemma 3.3], we use this fact to prove the following Lemma.

Lemma 5.6

It holds uniformly for all \(x\in \mathbb {D}\), as \(N\rightarrow +\infty \),

where \(\vartheta _N\) is as in Proposition 5.3.

Proof

Throughout this proof, let us fix \(x\in \mathbb {D}\). Since \(g_N\) is a smooth function, by Taylor’s Theorem up to order \(2\ell \), there exists a matrix \(\mathrm {M} \in \mathbb {R}^{\ell \times \ell }\) (with positive entries) such that for all \(u \in \mathbb {D}_{\delta }\),

Let \( \mathrm {Y}^1_x (u): = \sum _{i=1}^{\ell -1} \frac{ \mathrm {M}_{i,i} }{4} |u|^{2i} \Delta ^i g_N(x) \) and \( \mathrm {A}^1_x (u): = \underset{0<i+j < 2\ell , i\ne j}{\textstyle \sum _{i,j=1}^{2\ell -1} } \mathrm {M}_{i,j} u^i \overline{u}^j \partial ^{i} \overline{\partial }^j g_N(x) -2 \mathfrak {R}\left\{ {\textstyle \sum _{i=1}^\ell } \tfrac{u^i}{i!} \partial ^i g_N(x) \right\} \) for \(u\in \mathbb {C}\). Recall that by assumptions, \(\Vert \nabla ^k g_N \Vert _{\infty } \le C\epsilon ^{-k}\) for all integer \(k\in [1, 2 \ell ]\) and \(\eta = \delta /\epsilon \), so that with the previous notation:

Using the condition \(\eta ^\ell \le N^{-1}\), by (5.26), the previous expansion shows that for all \(z\in \mathbb {D}(x,\delta )\),

Importantly, note that for \(|u|\le \delta \),

and that both \(\Phi _N\) and \(\mathrm {Y}^1_x\) are radial functions, so that it holds for any \( k \in \mathbb {N}\),

Hence, using (5.28)–(5.30), this implies that for any \(x\in \mathbb {D}\),

where we used that \(\Phi _N\) is a probability measure. Moreover, we verify by (2.9) and (2.1) that \(|\Delta ^{k+1} g_N(x)| \le C \epsilon ^{-2k} \vartheta _N(x) \) for all integer \(k\in [0, \ell ]\), so we can bound \(e^{\mathrm {Y}^1_x (u)} = 1+ \mathcal {O}\big (|u|^2 \vartheta _N(x) \big )\) uniformly for all \(|u|\le \delta \). Since for any integer \(j\ge 0\),

we conclude that for all \(x\in \mathbb {D}\),

with uniform errors. Since \(\sigma (x)= 1/\pi \) for \(x\in \mathbb {D}\), this completes the proof. \(\square \)
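The previous proof relies on three elementary properties of the Gaussian density \(\Phi _N(u) = \tfrac{N}{\pi } e^{-N|u|^2}\). Several displays are not reproduced here, so the sketch below only checks standard facts which we believe are the relevant ones: the radial moments \(\int |u|^{2j} \Phi _N(u) \mathrm {d}^2u = j!/N^j\), the vanishing of the angular average of \(u^a \overline{u}^b\) for \(a\ne b\), and the mass \(e^{-N\delta ^2} = e^{-(\log N)^\beta }\) of \(\Phi _N\) outside the disk \(\mathbb {D}_\delta \). The function names are ours.

```python
import cmath
import math


def radial_moment(n: float, j: int, steps: int = 20000) -> float:
    """Midpoint rule for int_0^inf s^j * n * exp(-n*s) ds (s = |u|^2), truncated at s = 60/n."""
    h = (60.0 / n) / steps
    total = 0.0
    for k in range(steps):
        s = (k + 0.5) * h
        total += s ** j * n * math.exp(-n * s)
    return total * h


def angular_average(a: int, b: int, points: int = 64) -> complex:
    """Average of u^a * conj(u)^b over equally spaced points of the unit circle."""
    return sum(cmath.exp(1j * (a - b) * 2 * math.pi * k / points)
               for k in range(points)) / points


def mass_outside_disk(log_n: float, beta: float) -> float:
    """Mass of Phi_N outside |u| <= delta, where N * delta^2 = (log N)^beta."""
    return math.exp(-(log_n ** beta))


if __name__ == "__main__":
    n = 100.0
    for j in range(4):
        print(j, radial_moment(n, j), math.factorial(j) / n ** j)
    print(abs(angular_average(2, 1)), abs(angular_average(3, 3)))
    print(mass_outside_disk(math.log(1e6), 2.0))
```

Since \(\beta >1\), the mass outside \(\mathbb {D}_\delta \) decays faster than any power of N, which is why restricting the integrals to \(|u|\le \delta \) only produces negligible errors.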

We can use Proposition 5.3 and Lemma 5.6 to estimate a similar integral for the correlation kernel \( K_N^*\). This corresponds to the counterpart of [4, Corollary 3.4].

Lemma 5.7

It holds for any \(x\in \mathbb {D}_{1-2\delta }\), as \(N\rightarrow +\infty \)

Proof

First of all, let us bound

According to Proposition 5.3, it holds for any \(x\in \mathbb {D}_{1-2\delta }\),

Similarly, using the estimate (5.27),

As \(\vartheta _N \le (n+1) \epsilon ^{-2} \le N\), this shows that for any \(x\in \mathbb {D}_{1-2\delta }\),

Using the reproducing property (5.6) and Lemma 5.6, we conclude that for any \(x\in \mathbb {D}_{1-2\delta }\),

Using the estimate \(| \widetilde{u}_N^*(x) | \le L \vartheta _N(x)\), see (5.19), this yields the claim. \(\square \)

Finally, we need a last Lemma which relies on the anisotropy of the approximate Bergman kernel \(K_N^{\#}\) that we can already see from formula (5.26).

Lemma 5.8

It holds as \(N\rightarrow +\infty \),

Proof

The proof is analogous to that of Lemma 5.6. Since \(g_N\) is a smooth function, by Taylor’s Theorem up to order \(2\ell \in \mathbb {N}\), it holds for any \(x\in \mathbb {D}_{1/2}\) and \(z\in \mathbb {D}(x,\delta )\),

where \(u = (z-x) \ne 0\). Let \( \mathrm {Y}^2_x (u): = \sum _{j=0}^{\ell -1} \frac{ \mathrm {M}_{j+1,j} }{4} |u|^{2j} \Delta ^{j+1} g_N(x) \) and \( \mathrm {A}^2_x (u): = \underset{0<i+j\le 2\ell , i\ne j+1}{\textstyle \sum _{i,j=0}^{2\ell } } \mathrm {M}_{i,j} u^{i-1} \overline{u}^j \partial ^{i+1} \overline{\partial }^{j+1} g_N(x) \) for \(u\in \mathbb {C}\). Since \(\Vert \nabla ^{2(\ell +1)} g_N\Vert _\infty \delta ^{2\ell } \le C \eta ^{2\ell } \epsilon ^{-2} \le CN^{-1}\) because we choose \(\ell \in \mathbb {N}\) in such a way \(\eta ^\ell \le N^{-1}\) with \(\eta = \delta /\epsilon \), this shows that uniformly for all \(x\in \mathbb {D}_{1/2}\) and \(z\in \mathbb {D}(x,\delta )\),

By Lemma 5.6, we immediately see that \(\displaystyle \iint _{\begin{array}{c} |x| \le 1/2 \\ |z-x| \le \delta \end{array}} |K_N^{\#}(x,z)|^2 \mathrm {d}^2z \mathrm {d}^2x = \frac{N}{4}+ \mathcal {O}(1)\) and the previous expansion implies that

Using formula (5.28), (5.29) and the estimates \(|\mathrm {Y}^1_x(u)| , |\mathrm {A}^1_x(u)| = \mathcal {O}(\epsilon ^{-2})\) which are uniform for \(x,u\in \mathbb {C}\), we obtain by a change of variable,

The error term will be negligible. If we proceed exactly as in the Proof of Lemma 5.6, see (5.30), then only the radial part contributes:

Moreover, using that \(|\Delta ^{k+1} g_N(x)| \le C \epsilon ^{-2k} \vartheta _N(x) \) for all integer \(k\in [0, \ell ]\), we can develop for all \(|u| \le \delta \), \( \mathrm {Y}^2_x(u) \exp \left( \mathrm {Y}^1_x(u)\right) = \Delta g_N(x)+ \mathcal {O}(\vartheta _N(x)|u|^2 )\) uniformly for all \(x\in \mathbb {D}_{1/2}\) — here we used again that the parameter \(\eta \le 1/4\) to control the error term. Hence, by (5.31), we conclude that

Since the first integral on the RHS vanishes and the second integral is O(1), this completes the proof. \(\square \)

5.5 Error of type \(\mathfrak {T}^1_N\)

In Sects. 5.5–5.7, we use the estimates from Sects. 5.3 and 5.4 to bound the error terms when we apply Proposition 5.2 to the function \(g_N = g_N^{\mathbf {\gamma }, \mathbf {z}}\) given by (2.9). Let us abbreviate

Proposition 5.9

We have \(\left| \mathfrak {T}^1_N(g_N) \right| = \mathcal {O}\left( \Sigma ^2 \epsilon ^{-2} \right) \), uniformly for all \(t\in (0,1]\), as \(N\rightarrow +\infty \).

Proof

A trivial consequence of the estimate (5.19) is that \(|\widetilde{u}_N^*(x)| \le C \epsilon ^{-2}\) for all \(|x| \le 1/2\). Since \({\text {supp}}(g_N) \subseteq \mathbb {D}_{1/2}\), this implies that

where we used that \(\Delta g_N(x) = {\textstyle \sum _{k=1}^n} \gamma _k \left( \phi _{\epsilon _k}(x-z_k) - \phi (x-z_k) \right) \) so that \(\displaystyle \int |\Delta g_N(x)| \mathrm {d}^2x \le 2 {\textstyle \sum _{k=1}^n} |\gamma _k|\) since \(\phi \) is a probability density function on \(\mathbb {C}\). Similarly, we have

since \(\overline{\partial }g_N= \overline{\partial g_N} \) so that \(\overline{\partial }g_N(x) \partial g_N(x) \ge 0\) for all \(x\in \mathbb {C}\) and the previous integral is equal to \(\pi \Sigma ^2\). By definition of \( \mathfrak {T}^1_N\) — see Proposition 5.2 — this proves the claim. \(\square \)

5.6 Error of type \(\mathfrak {T}^2_N\)

Proposition 5.10

Recall that \(\eta = \delta /\epsilon \). It holds as \(N\rightarrow +\infty \), \(| \mathfrak {T}^2_N(g_N)| = \mathcal {O}\left( \Sigma N \eta \right) \).

Proof

Fix a small parameter \(0< \kappa \le 1/4\) independent of \(N\in \mathbb {N}\) and let us split

where

Since \({\text {supp}}(g_N) \subseteq \mathbb {D}_{1/2}\), by Lemma 5.5, the second term on the RHS of (5.35) satisfies

Moreover, using the Cauchy–Schwarz inequality and (5.19), this implies that

According to the notation of Proposition 5.3, we verify \({ \displaystyle \int _{|x|\le 1/2} } \vartheta ^2_N(x) \mathrm {d}^2x \le \tfrac{\pi }{2}+ 2\pi \sum _{j,k=1}^n \epsilon _k^{-2} \epsilon _j^{-2}(\epsilon _k^2+\epsilon _j^{2}) \le C\epsilon ^{-2}\), so that by (5.34),

The estimates (5.37) and (5.38) show that with \(\eta = \delta /\epsilon \),

Let \(\mathscr {S}_N :=\bigcup _{k=1}^n \mathbb {D}(z_k,\epsilon _k)\). In order to control the integral (5.36), we split it into \(n+1\) parts and use (5.19) which is valid for all \(x\in {\text {supp}}(g_N)\), then we obtain

On the one hand, it follows from (5.19) that for any \(x\in \mathbb {D}(z_k,\epsilon _k)\),

On the other hand, as \(|\widetilde{u}_N^*(z)| \le nL \epsilon ^{-2}\) for all \(|z| \le 3/4\), it also holds for all \(x\in \mathbb {D}_{1/2}\),

Combining these two bounds with (5.40), we conclude that

By the Cauchy–Schwarz inequality and (5.34), this implies that

Since our parameters \(\epsilon _1, \dots , \epsilon _n \ge \epsilon \), we have \( \sum _{k, j =1}^n \epsilon _k^{-1} \epsilon _j^{-2} (\epsilon _j + \epsilon _k) \le 2n^2 \epsilon ^{-2}\). Hence, we have proved that

Since \(\epsilon \ge \delta \ge N^{-1/2}\), by combining the estimates (5.39) and (5.41) with (5.35), this completes the proof. \(\square \)

5.7 Error of type \(\mathfrak {T}^3_N\)

Proposition 5.11

We have \(\left| \mathfrak {T}^3_N(g_N) \right| = \mathcal {O}(N\eta )\) as \(N\rightarrow +\infty \).

Proof

First, let us observe that by Lemma 5.7,

Since \(\Vert \nabla g_N\Vert _\infty = \mathcal {O}(\epsilon ^{-1})\) and \(\displaystyle \int _{|x|\le 1/2} \vartheta _N(x) \mathrm {d}^2 x \le (n+1)\pi \), this shows that

Second, since \(\displaystyle \left| \frac{\overline{\partial }g_N(x)- \overline{\partial }g_N(z)}{x-z} \right| \le \Vert \nabla ^2 g_N\Vert _\infty = \mathcal {O}(\epsilon ^{-2})\) for all \(x,z\in \mathbb {C}\), we have

If we integrate the estimate (5.32), respectively (5.33), over the set \(|x| \le 1/2\), we obtain

and

Here we used again that \(\displaystyle \int _{|x|\le 1/2} \vartheta _N(x) \mathrm {d}^2 x \le (n+1)\pi \). These bounds imply that

By symmetry, since \({\text {supp}}(g_N) \subseteq \mathbb {D}_{1/2}\),

Then, using the estimate (5.43) and Lemma 5.8, we obtain

Finally, it remains to combine the estimates (5.42) and (5.44) to complete the proof. \(\square \)

5.8 Proof of Proposition 2.3

We are now ready to give the Proof of Proposition 2.3. Recall that we use the notation of Sect. 5.1. When we combine Propositions 5.9, 5.10 and 5.11, we obtain that as \(N\rightarrow +\infty \),

where, by Remark 5.4, the error term is uniform for all \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\), all \(t\in (0,1]\) and all \(\mathbf {\gamma } \in [-R,R]^{n}\) for a fixed \(R>0\). Since \(\Sigma ^2(g_N) = \mathcal {O}(\log N)\) according to the asymptotics (2.8) and \(\eta = \delta /\epsilon = (\log N)^{\beta /2} N^{-\alpha }\), this implies that as \(N\rightarrow +\infty \)

The main idea of the proof, which originates from [36], is to observe that for any \(t>0\),

Hence, by Proposition 5.2 applied to the function \(g_N = g_N^{\mathbf {\gamma }, \mathbf {z}}\), using the estimate (5.45), we conclude that

where the error term is uniform for all \(t\in [0,1]\), all \(\mathbf {\gamma }\) in compact subsets of \(\mathbb {R}^n\) and all \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\). Then, integrating the asymptotics (5.46) over \(t\in [0,1]\), we obtain (2.10).

6 Kernel Asymptotics

In this section, we obtain the asymptotics for the correlation kernel induced by the biased measure (5.3) that we need in Sect. 5 in order to control the error term in Ward’s equation. Let us introduce

and similarly for the norm \(\Vert \cdot \Vert _{Q^*}\). Recall that \(Q(x) = |x|^2/2\) is the Ginibre potential and \(Q^* = Q- \frac{g_N}{2N}\) is a potential which is perturbed by the function \(g_N = g_N^{\mathbf {\gamma }, \mathbf {z}} \in \mathscr {C}_c^\infty (\mathbb {D}_{1/2})\) given by (2.9) with \(\mathbf {z} \in \mathbb {D}_{\epsilon _0}^{\times n}\) and \(\mathbf {\gamma } \in [-R,R]^n\) for some fixed \(n\in \mathbb {N}\) and \(R>0\). We rely on Conventions 5.1 and we choose \(N_0 \in \mathbb {N}\) sufficiently large so that \(\eta \le 1/4\) and \(\Vert \Delta g_N\Vert _\infty \le N\) for all \(N\ge N_0\).

6.1 Uniform estimates for the 1-point function

In this section, we collect some simple estimates on the 1-point function \(u_N^*\) which we need. We skip the details since the argument is the same as in [2, Section 3], only adapted to our situation.

Lemma 6.1

There exists a universal constant \(C>0\) such that if \(N\ge N_0\), for any function f which is analytic in \(\mathbb {D}(z; 2/\sqrt{N})\) for some \(z\in \mathbb {C}\),

Proof

If \(N\ge N_0\), we have \(\Delta Q^* \le 3\) and by [2, Lemma 3.1], we obtain

This immediately yields the claim. \(\square \)

Lemma 6.2

With the same \(C>0\) as in Lemma 6.1, it holds for all \(N\ge N_0\) and all \(z\in \mathbb {C}\),

Proof

Fix \(z\in \mathbb {C}\). Applying Lemma 6.1 to the polynomial \(k^*_N(\cdot ,z)\), we obtain

since \(\Vert k^*_N(\cdot ,z)\Vert _{Q^*}^2 = k^*_N(z,z)\) because of the reproducing property of the kernel \(k^*_N\). Taking \(w=z\) in the previous bound and using that \(u_N^*(z) =k^*_N(z,z) e^{-2N Q^*(z)}\), we obtain the claim. \(\square \)
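For the reader's convenience, here is a sketch of how the two ingredients of this proof fit together. It rests on two assumptions about displayed formulas that are not reproduced here: that the norm is \(\Vert f\Vert _{Q^*}^2 = \int _{\mathbb {C}} |f(x)|^2 e^{-2NQ^*(x)} \mathrm {d}^2x\), and that Lemma 6.1 yields a bound of the form \(|f(w)|^2 e^{-2NQ^*(w)} \le C N \Vert f\Vert _{Q^*}^2\). Under these assumptions, the reproducing property \(p(w) = \int p(x) \overline{k^*_N(x,w)} e^{-2NQ^*(x)} \mathrm {d}^2x\) for \(p\in \mathscr {P}_N\) gives:

```latex
\[
\begin{aligned}
\Vert k^*_N(\cdot,z)\Vert_{Q^*}^2
  &= \int_{\mathbb{C}} k^*_N(x,z)\,\overline{k^*_N(x,z)}\, e^{-2NQ^*(x)}\,\mathrm{d}^2x
   = k^*_N(z,z), \\
|k^*_N(w,z)|^2 e^{-2NQ^*(w)}
  &\le C N\, k^*_N(z,z)
  \qquad\text{(assumed form of Lemma 6.1)}, \\
k^*_N(z,z)^2 e^{-2NQ^*(z)}
  &\le C N\, k^*_N(z,z)
  \quad\Longrightarrow\quad
  u^*_N(z) = k^*_N(z,z)\, e^{-2NQ^*(z)} \le C N .
\end{aligned}
\]
```

The last implication is trivial when \(k^*_N(z,z)=0\) and otherwise follows by dividing by \(k^*_N(z,z)>0\).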

6.2 Preliminary lemmas

Recall that we let \(\Upsilon _N^w(u) = \sum _{i=0}^{\ell } \frac{u^i}{i!} \partial ^i g_N(w) \) and that we defined the approximate Bergman kernel \(k^\#\) by

We note that this kernel is not Hermitian but it is analytic in \(x\in \mathbb {C}\) and we define the corresponding operator:

for any \(f\in L^2(e^{-2N Q^*})\). According to (5.4), we make a similar definition for \(K^*_N[f] \). Our next Lemma is the counterpart of [4, Lemma A.2] and it relies on the analytic properties of the function \(\Upsilon _N^w\). Since the test function \(g_N\) develops logarithmic singularities for large N, we need to adapt the proof accordingly.

Assumption 6.3

Let \(\chi \in \mathscr {C}^\infty _c\big (\mathbb {D}_{2\delta }\big )\) be a radial function such that \(0\le \chi \le 1\), \(\chi =1\) on \(\mathbb {D}_{\delta }\), and \(\Vert \nabla \chi \Vert _\infty \le C \delta ^{-1}\) for a \(C>0\) independent of \(N\in \mathbb {N}\). In the following for any \(z\in \mathbb {C}\), we let \(\chi _z = \chi (\cdot -z)\).

Lemma 6.4

There exists a constant \(C>0\) (which depends only on \(R>0\), the mollifier \(\phi \) and \(n, \ell \in \mathbb {N}\)) such that for any \(z\in \mathbb {C}\) and any function \(f \in L^2(e^{-2N Q^*})\) which is analytic in \(\mathbb {D}(z, 2 \delta )\),

where \(\vartheta _N\) is as in Proposition 5.3.

Proof

We fix \(z\in \mathbb {C}\) and by definitions,

By formula (5.2), since \(\chi _z(z) =1\) and \(\Upsilon _N^z(0) =g_N(z) \in \mathbb {R}\), we obtain

Since f is analytic in \(\mathbb {D}(z,2 \delta ) = {\text {supp}}(\chi _z)\), this implies that

where we let

By Assumption 6.3, the second term on the RHS of (6.3) satisfies

Recall that \(\Vert \nabla ^k g_N\Vert _\infty \le C \epsilon ^{-k}\) for \(k=1, \dots , \ell \) and that we assume \(\eta = \delta /\epsilon \le 1/4\). Then, by Taylor’s formula, if \(|x-z| \le 2\delta \),

This shows that on the RHS of (6.5), \(|e^{ g_N(x)-\Upsilon _N^z(x-z) }| \le C e^{ g_N(x)/2 - g_N(z)/2} \). Moreover, by rearranging (5.25),

which shows that

By the Cauchy–Schwarz inequality and (6.1), we conclude that the second term on the RHS of (6.3) is bounded by

Here we used that \(\delta ^{-2} \le N\) and that for any \(r>0\),

The RHS of (6.8) will be negligible and it remains to control \(\mathfrak {Z}_N\). Using again formulae (6.6)–(6.7) and taking the \(|\cdot |\) inside the integral (6.4), we obtain

where we used that \(\Vert \chi _z\Vert _\infty \le 1\). Since the function \(g_N\) is smooth, by Taylor’s Theorem up to order \(2\ell \), it holds for any \(|u| \le 2\delta \),

where the coefficients \( \mathrm {M}_{i,j} >0\). Let us recall that \(\Vert \nabla ^{k} g_N\Vert _\infty \le C \epsilon ^{-k}\) for any integer \(k\in [1,2\ell ]\), \(\eta =\delta /\epsilon \) is small and we fixed \(\ell \in \mathbb {N}\) in such a way that the parameter \(\eta ^\ell \le N^{-1}\). In particular, we have constructed \(\Upsilon _N^z\) in such a way that we deduce from the previous expansion that for any \(x\in \mathbb {D}(z,2\delta )\),

and we have used that \(\partial \overline{\partial }g_N = \frac{1}{4} \Delta g_N\). Using (2.9), (2.1) and the definition of \(\vartheta _N\), it is straightforward to verify that for any integer \(k\in [0,2\ell ]\) and uniformly for all \(z\in \mathbb {C}\), \(| \nabla ^k (\Delta g_N)(z)| \le C \epsilon ^{-k} \vartheta _N(z) \). Hence, these estimates imply that uniformly for all \(x\in \mathbb {D}(z,2\delta )\),

Note that the error term is 0 if \(z\notin \mathbb {D}\) since \(g_N\) has compact support in \(\mathbb {D}_{\epsilon _0}\). Therefore, by combining (6.10) and (6.11), we conclude that

where we have used the Cauchy–Schwarz inequality and (6.9) with \(r=0\). Finally, combining the estimates (6.8) and (6.12) with formula (6.3) completes the proof. \(\square \)
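The Taylor-type control of \(\Upsilon _N^z\) used in the proof above comes down to a short computation. As a sketch, it uses only \(\Upsilon _N^z(0) = g_N(z)\), the bounds \(|\partial ^i g_N| \le C\epsilon ^{-i}\) for \(i=1,\dots ,\ell \) (with a constant \(C\) that may absorb combinatorial factors), \(|u|\le 2\delta \) and \(\eta = \delta /\epsilon \le 1/4\):

```latex
\[
\bigl|\Upsilon_N^z(u) - g_N(z)\bigr|
  \le \sum_{i=1}^{\ell} \frac{|u|^i}{i!}\,\bigl|\partial^i g_N(z)\bigr|
  \le C \sum_{i=1}^{\ell} \frac{(2\eta)^i}{i!}
  \le C\bigl(e^{2\eta}-1\bigr)
  \le 2C e^{1/2}\,\eta .
\]
```

The last step uses \(e^{s}-1 \le s e^{s}\) for \(s\ge 0\) with \(s = 2\eta \le 1/2\), so the polynomial \(\Upsilon _N^z\) stays within \(\mathcal {O}(\eta )\) of \(g_N(z)\) on the disk \(\mathbb {D}(z,2\delta )\).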

Our next Lemma is the counterpart of [4, (4.12)]. The proof needs again to be carefully adapted but the general strategy remains the same as in [4] and relies on Hörmander’s inequality and the fact that (5.4) is the reproducing kernel of the Hilbert space \(\mathscr {P}_N \cap L^2(e^{-2NQ^*})\).

Lemma 6.5

For any integer \(\kappa \ge 0\), there exists \(N_\kappa \in \mathbb {N}\) (which depends only on \(R>0\), the mollifier \(\phi \) and \(n, \ell \in \mathbb {N}\)) such that if \(N \ge N_\kappa \), we have for all \(z\in \mathbb {D}_{1-2\delta }\) and all \(w\in \mathbb {D}(z,\delta )\),

Proof

In this proof, we fix N and \(z\in \mathbb {D}_{1-2\delta }\). We let \(f := \chi _z k^\#_N(\cdot ,z)\) where \(\chi _z\) is as in Assumption 6.3, and \(W(x) := N \big (\varphi (x) +1/2\big ) + \log \sqrt{1+|x|^2}\) where \(\varphi \) is as in equations (1.4)–(1.5). Let V be the minimal solution in \(L^2(e^{-2W})\) of the problem \(\overline{\partial }V= \overline{\partial }f\), and recall that Hörmander’s inequality for the \(\overline{\partial }\)-equation, e.g. [2, formula (4.5)], states that

Here we used that W is strictly subharmonic (Footnote 14). By (1.5), since \(W(x) \ge N Q(x) \) and \(\Delta W(x) \ge N \Delta Q(x) = 2N\) for all \(x\in \mathbb {D}\), this implies that

Moreover, by (1.4), there exists a universal constant \(c>0\) such that \(W(x) \le N Q(x) + c \). Therefore, we obtain

Recall that \(Q^*= Q -\frac{g_N}{2N}\) where the perturbation \(g_N\) is given by (2.9) and satisfies \(\Vert g_N\Vert _\infty \le C \log \epsilon ^{-1}\). This implies that \(L^2(e^{-2NQ^*}) \cong L^2(e^{-2NQ})\) and that, for any function \(h\in L^2(e^{-2NQ})\),

By (6.13), this equivalence of norms shows that if \(N\in \mathbb {N}\) is sufficiently large,

Observe that by (1.4), \(W(x) = (N+1) \log |x| +o(1)\) as \(|x| \rightarrow +\infty \), hence the Bergman space \(A^2(e^{-2W})\) coincides with \(\mathscr {P}_N\) and we must have \(V- f \in \mathscr {P}_N\), see (5.1).
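The identification of the Bergman space with \(\mathscr {P}_N\) reduces to an integrability count at infinity. As a sketch, we assume that \(\mathscr {P}_N\) denotes the polynomials of degree at most \(N-1\) (the definition (5.1) is not reproduced here), and we use only that \(e^{-2W(x)}\) is comparable to \(|x|^{-2(N+1)}\) for \(|x|\ge 1\), up to a constant which may depend on N. For a monomial \(x^k\), passing to polar coordinates gives:

```latex
\[
\int_{|x|\ge 1} |x|^{2k}\,|x|^{-2(N+1)}\,\mathrm{d}^2x
  = 2\pi \int_1^{\infty} r^{\,2k-2N-1}\,\mathrm{d}r
  < \infty
  \quad\Longleftrightarrow\quad
  2k-2N-1 < -1
  \quad\Longleftrightarrow\quad
  k \le N-1 .
\]
```

Thus \(x^k \in L^2(e^{-2W})\) exactly when \(k<N\). That an entire function in this space must be a polynomial of such degree then follows from standard Cauchy estimates on its Taylor coefficients.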

Now, we let U be the minimal solution in \(L^2(e^{-2NQ^*})\) of the problem \(U-f \in \mathscr {P}_N\). Since U has minimal norm, (6.14) implies that

Since \(k^\#_N(\cdot ,z)\) is analytic, see (5.17), \(\overline{\partial }f = k^\#_N(\cdot ,z) \overline{\partial }\chi _z\) and according to Assumption 6.3,

Recall that \(\eta = \delta /\epsilon \le 1/4\) and \(\Vert \nabla ^i g_N\Vert _\infty \le C \epsilon ^{-i} \) for all \(i=1,\dots , \ell \). Then by (5.17) with \(t=1\), it holds for all \(z \in \mathbb {C}\) and \(|x-z| \le 2\delta \), \(\Upsilon _N^z(x-z) = g_N(z) + \mathcal {O}(\eta )\) so that

By (6.7) and using that \(|g_N(x)-g_N(z)| =\mathcal {O}(\eta )\), this shows that

Then by (6.9) and using that \(\delta ^{-2} \le N\), we obtain

Combining the previous estimate with (6.15), we conclude that

We may now turn (6.16) into a pointwise estimate using Lemma 6.1. Note that both f and U are analytic in \(\mathbb {D}(z;\delta )\) (Footnote 15); this implies that for any \(w\in \mathbb {D}(z;\delta )\),

Since \(k^*_N\) is the reproducing kernel of the Hilbert space \(\mathscr {P}_N \cap L^2(e^{-2NQ^*})\), see (5.4), it is well known that the minimal solution U is given by

Consequently, as \(f = \chi _z k^\#_N(\cdot ,z)\) and \(\chi _z =1\) on \(\mathbb {D}(z;\delta )\), by (6.17), we conclude that for any \(w\in \mathbb {D}(z;\delta )\),

Since \(e^{N \delta ^2}\) grows faster than any power of \(N\in \mathbb {N}\), this completes the proof. \(\square \)
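To see why the exponential factor beats every power of N, here is a sketch under an explicit assumption: we take \(\delta = (\log N)^{\beta /2} N^{-1/2}\) with \(\beta >1\). This is our reading of Conventions 5.1, which are not reproduced here; it is consistent with \(\eta = \delta /\epsilon = (\log N)^{\beta /2}N^{-\alpha }\) if \(\epsilon = N^{-1/2+\alpha }\). Under this assumption, for any fixed \(\kappa \ge 0\):

```latex
\[
e^{-N\delta^2}
  = \exp\bigl(-(\log N)^{\beta}\bigr)
  = N^{-(\log N)^{\beta-1}}
  \le N^{-\kappa}
  \qquad\text{as soon as } (\log N)^{\beta-1} \ge \kappa .
\]
```

Since \(\beta >1\), the exponent \((\log N)^{\beta -1}\) tends to \(+\infty \), so the condition holds for all sufficiently large N; this is where a threshold depending on \(\kappa \) would enter.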

We are now ready to give the proof of our main approximation for the correlation kernel \(K^*_N\), see (5.5).

6.3 Proof of Proposition 5.3

We apply Lemma 6.4 to the function \(f(x) = k^*_N(x,w)\) which is analytic for \(x\in \mathbb {C}\) with norm

by the reproducing property. Hence, we obtain for any \(z\in \mathbb {D}\) and \(w\in \mathbb {C}\),

By Lemma 6.2, this shows that

Recall that by (6.2), we have

so that

Then, since the kernel \(k^*_N\) is Hermitian, it follows from the bound (6.18) that for any \(z, w\in \mathbb {D}\),

Finally, by Lemma 6.5, we conclude that for any \(z\in \mathbb {D}_{1-2\delta }\) and all \(w\in \mathbb {D}(z,\delta )\),

Notes

We briefly review the definition of the GFF in Sect. 2.1.

A random \(N\times N\) matrix sampled from the Haar measure on the unitary group.

A random \(N\times N\) Hermitian matrix with independent Gaussian entries suitably normalized.

They obtain tightness of the appropriately centered maximum for both real and imaginary part of the logarithm of the characteristic polynomial. See also [42] for the asymptotics of the measures of thick points by a different approach.

See Theorem 1.4 below.

The concept of thick points is crucial to describe the geometric properties of log-correlated fields. Informally, these points correspond to the extremal values of the field.

This means that \(\phi (x)\) is a smooth probability density function which only depends on |x| with compact support in the disk \(\mathbb {D}_{\epsilon _0}\). Note that we can work with any such mollifier.

This follows from the fact that since the mollifier \(\phi \) is radial and compactly supported, \(\psi _\epsilon (z) = \log |z|\) for all \(|z| \ge \epsilon \) and for any \(\epsilon >0\).

We chose this unusual normalization in order to match with formula (1.10).

This formula for the limiting covariance in the Rider–Virág CLT holds for test functions which are harmonic outside of \(\mathbb {D}\) [53]. In particular, it can be applied to (2.1) if \(z\in \mathbb {D}\) and \(\epsilon >0\) is small enough. Then, by linearity, one deduces that the counterpart of (2.7)–(2.8) holds for the field (2.3), which is supported in \(\mathbb {D}_{2\epsilon _0}\).

Note that our normalization does not match with the standard convention for log-correlated fields used in [22, Section 3]. Actually, we apply [22, Theorem 3.4] to the field \(\mathrm {X}(z)= \sqrt{2} \mathrm {X}(g_N^z)\); this explains why the critical value is \(\gamma ^*= \sqrt{8}\) as well as the factor \(\frac{\gamma ^*}{2}\) in (2.11).

Note that our approximations are more precise than in [4].

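Note that, since \(\Delta = 4\partial \overline{\partial }\), we have \(\Delta \left( \log \sqrt{1+|x|^2} \right) = 2 \partial \overline{\partial }\log (1+|x|^2) = \frac{2}{(1+|x|^2)^2} >0\) for \(x\in \mathbb {C}\).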

Here we used that \(\chi _z=1\) on \(\mathbb {D}(z;\delta )\) so that \(\overline{\partial }f=0\) and that \(\overline{\partial }f = \overline{\partial }U\) since \(U-f \in \mathscr {P}_N\) by definition of U.

References

Akemann, G., Vernizzi, G.: Characteristic polynomials of complex random matrix models. Nuclear Phys. B 660(3), 532–556 (2003)

Ameur, Y., Hedenmalm, H., Makarov, N.: Berezin transform in polynomial Bergman spaces. Commun. Pure Appl. Math. 63(12), 1533–1584 (2010)

Ameur, Y., Hedenmalm, H., Makarov, N.: Fluctuations of eigenvalues of random normal matrices. Duke Math. J. 159(1), 31–81 (2011)

Ameur, Y., Hedenmalm, H., Makarov, N.: Random normal matrices and Ward identities. Ann. Probab. 43(3), 1157–1201 (2015)

Arguin, L.-P., Belius, D., Bourgade, P.: Maximum of the characteristic polynomial of random unitary matrices. Commun. Math. Phys. 349(2), 703–751 (2017)

Arguin, L.-P., Belius, D., Bourgade, P., Radziwiłł, M., Soundararajan, K.: Maximum of the Riemann zeta function on a short interval of the critical line. Commun. Pure Appl. Math. 72(3), 500–535 (2019)