Abstract

This work nests the Agent-Based macroeconomic perspective into the earlier history of macroeconomics. We discuss how the discipline in the 70’s took a perverse path, relying on models grounded on a fictitious rational representative agent in a pathetic attempt to circumvent aggregation and coordination problems. The Great Recession was a natural experiment for macroeconomics, showing the inadequacy of the predominant theoretical framework grounded on DSGE models. After discussing the pathological fallacies of the DSGE-based approach, we claim that macroeconomics should consider the economy as a complex evolving system, i.e. as an ecology populated by heterogeneous agents, whose far-from-equilibrium interactions continuously change the structure of the system. This in turn implies that more is different: macroeconomics cannot be shrunk to representative-agent micro, but agents’ complex interactions lead to the emergence of new phenomena and hierarchical structures at the macro level. This is what is taken into account by agent-based models, which provide a novel way to model complex economies from the bottom up, with sound empirically-based microfoundations. We present the foundations of Agent-Based macroeconomics and we discuss how the contributions of this special issue push its frontier forward. Finally, we conclude by discussing the ways ahead for the full acknowledgement of agent-based models as the standard way of theorizing in macroeconomics.


Psychology is not applied biology, nor is biology applied chemistry. P. W. Anderson (1972), “More is different”, Science

1 Introduction

Basically all scientific disciplines, natural and social ones (with the notable exception of a good deal of contemporary economics), distinguish between “lower”, more micro, levels of description of whatever phenomenon, and “higher” levels regarding collective outcomes, which are typically not isomorphic to the former.Footnote 1 So, in physics, thermodynamics is not postulated on the kinetic properties of some “representative” or “average” molecule! And even more so in biology, ethology or medicine. This is a fundamental point repeatedly emphasized by Kirman (2016) and, outside our discipline, by Anderson (1972) and Prigogine (1980), among a few outstanding others. The basic epistemological notion is that the aggregate of interacting entities yields emergent properties, which cannot be mapped down to the (conscious or unconscious) behaviors of some identifiable underlying components.Footnote 2 This is so obvious to natural scientists that it would be an insult to remind them that the dynamics of a beehive cannot be summarized by the dynamic adjustment of a “representative bee” (the example is discussed, again, in Kirman 2016). The relation between “micro” and “macro” has been acutely at the centre of all social sciences since their inception. Think of one of the everlasting questions, namely the relationship between agency and structure, which is at the core of most interpretations of social phenomena. Or, nearer to our concerns here, consider the (often misunderstood) notion of Adam Smith’s invisible hand: this is basically a proposition about the lack of isomorphism between the greediness of individual butchers and bakers, on the one hand, and the relatively orderly delivery of meat and bread across markets, on the other.
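A well-known economic illustration of emergence in the spirit of the beehive is Kirman's (1993) recruitment ("ants") model: identical agents with no intrinsic preference between two options switch either idiosyncratically (probability eps) or by being recruited by a randomly met agent holding the other option (probability 1 - delta). The sketch below is our own minimal version of that birth-death process, with illustrative parameter values. It simulates the population share and computes its exact stationary distribution, which is U-shaped (long spells of herding near one extreme) when eps is small relative to (1 - delta)/(n - 1), and hump-shaped otherwise, although no individual behaves differently across the two cases.

```python
import random


def transition_probs(k, n, eps, delta):
    """Probabilities that the number of agents on option A goes from k to k+1 or k-1.

    A randomly drawn agent switches on its own with probability eps, or is
    recruited with probability (1 - delta) by a randomly met agent who holds
    the other option.
    """
    up = (1 - k / n) * (eps + (1 - delta) * k / (n - 1))
    down = (k / n) * (eps + (1 - delta) * (n - k) / (n - 1))
    return up, down


def simulate_shares(n=100, eps=0.002, delta=0.01, steps=100_000, seed=0):
    """Simulate the share of agents on option A, starting from an even split."""
    rng = random.Random(seed)
    k = n // 2
    shares = []
    for _ in range(steps):
        up, down = transition_probs(k, n, eps, delta)
        u = rng.random()
        if u < up:
            k += 1
        elif u < up + down:
            k -= 1
        shares.append(k / n)
    return shares


def stationary_distribution(n=100, eps=0.002, delta=0.01):
    """Exact stationary distribution over k = 0..n (detailed balance of the birth-death chain)."""
    weights = [1.0]
    for k in range(n):
        up, _ = transition_probs(k, n, eps, delta)
        _, down_next = transition_probs(k + 1, n, eps, delta)
        weights.append(weights[-1] * up / down_next)
    total = sum(weights)
    return [w / total for w in weights]


herding = stationary_distribution(eps=0.002)  # eps well below (1 - delta) / (n - 1)
mixing = stationary_distribution(eps=0.5)     # eps well above it
print(herding[0] > herding[50], mixing[50] > mixing[0])  # True True
```

The aggregate regime (herding or mixing) is a property of the interaction structure, not of any "representative ant".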

1.1 The happy childhood of macroeconomics

But let us focus here on the status of “macroeconomics” in the economic discipline (see also Haldane and Turrell 2019, in this special issue). Roughly speaking, macroeconomics sees the light with Keynes. For sure, enlightening analyses came before, including Wicksell’s, but the distinctiveness of the macro level of interpretation came with him. Indeed, up to the 70’s, there were basically two “macros”.

The first consisted of equilibrium growth theories. While it is the case that e.g. models à la Solow invoked maximizing behaviors in order to establish equilibrium input intensities, no claim was made that such allocations were the work of any “representative agent”, in turn taken to be the “synthetic” (??) version of some underlying General Equilibrium (GE). By the same token, the distinction between positive (that is, purportedly descriptive) and normative models was, before Lucas and companions, absolutely clear to practitioners. Hence, the prescriptive side was kept distinctly separate. Ramsey (1928) type models, asking what a benevolent Central Planner would do, were reasonably kept apart from any question on the “laws of motion” of capitalism, à la Harrod (1939), Domar (1946), Kaldor (1957), and indeed Solow (1956). Finally, for better or for worse, technological change was kept separate from the mechanisms of resource allocation: the famous “Solow residual” was, as is well known, the statistical counterpart of the drift in growth models with exogenous technological change.
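The growth-accounting logic behind the “Solow residual” can be sketched in a few lines. This is a minimal illustration assuming a Cobb-Douglas aggregate production function Y = A·K^α·L^(1−α) with a constant capital share α; the function name and the synthetic series are hypothetical, chosen only to show that the residual recovers the exogenous drift in A.

```python
import math


def solow_residual(output, capital, labor, alpha):
    """Growth-accounting residual between consecutive periods.

    Assumes Y = A * K**alpha * L**(1 - alpha), so that
    dlog A = dlog Y - alpha * dlog K - (1 - alpha) * dlog L.
    """
    residuals = []
    for t in range(1, len(output)):
        dlog_y = math.log(output[t] / output[t - 1])
        dlog_k = math.log(capital[t] / capital[t - 1])
        dlog_l = math.log(labor[t] / labor[t - 1])
        residuals.append(dlog_y - alpha * dlog_k - (1 - alpha) * dlog_l)
    return residuals


# Hypothetical data: TFP grows 2% per period, capital 3%, labor 1%.
alpha = 0.3
periods = range(10)
tfp = [1.02 ** t for t in periods]
capital = [100 * 1.03 ** t for t in periods]
labor = [50 * 1.01 ** t for t in periods]
output = [a * k ** alpha * l ** (1 - alpha) for a, k, l in zip(tfp, capital, labor)]

print(solow_residual(output, capital, labor, alpha)[:3])  # each close to log(1.02)
```

With actual data the residual also absorbs measurement error and any departure from the assumed technology, so it is a drift term by construction rather than a direct observation of technology.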

Second, in some land between purported GE “microfoundations” and equilibrium growth theories, there lived for at least three decades a macroeconomics sufficiently “Keynesian” in spirit and quite neoclassical in terms of tools. It was the early “neo-Keynesianism” (also known as the Neoclassical Synthesis), pioneered by Hicks (1937) and shortly thereafter by Modigliani, Patinkin and a few other American “Keynesians”, whom Joan Robinson contemptuously called “bastard Keynesians”. It is the short-term macro which students used to learn up to the ’80s, with IS-LM curves (meant to capture the aggregate relations between money supply and money demand, interest rates, savings and investments), Phillips curves on the labour market, and a few other curves. In fact, the “curves” were (and are) a precarious compromise between the notion that the economy is supposed to be in some sort of equilibrium, albeit of a short-term nature, and the notion of a more “fundamental” equilibrium path to which the economy is bound to tend in the longer run.

That was some sort of mainstream, especially on the other side of the Atlantic. There was also another camp, whose members we could call (and who called themselves) genuine Keynesians. They were predominantly in Europe, especially in the U.K. and in Italy: see Pasinetti (1974, 1983) and Harcourt (2007) for an overview.Footnote 3 The focus was only on the basic laws of motion of capitalist dynamics. They include the drivers of aggregate demand; the multiplier, leading from the “autonomous” components of demand, such as government expenditures and exports, to aggregate income; the accelerator, linking aggregate investment to past variations in aggregate income itself; and the relation between unemployment, wage/profit shares and investments.Footnote 4 Indeed, such a stream of research is alive and progressing, refining the modeling of the “laws of motion” and their supporting empirical evidence: cf. Lavoie (2009), Lavoie and Stockhammer (2013), Storm and Naastepad (2012a, 2012b) among quite a few others.
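The interplay of the multiplier and the accelerator described above can be illustrated with a textbook multiplier-accelerator recursion in the spirit of Samuelson (1939). This toy specification, its parameter names and its values are our illustrative assumptions, not a model from the contributions cited: consumption depends on lagged income (propensity c), investment on the past change in income (accelerator v), and autonomous demand G is held constant.

```python
def multiplier_accelerator(c, v, autonomous, periods, y_init=(20.0, 20.0)):
    """Simulate Y_t = c*Y_{t-1} + v*(Y_{t-1} - Y_{t-2}) + G.

    c: marginal propensity to consume out of lagged income (multiplier)
    v: accelerator coefficient on the past change in income
    autonomous: constant autonomous demand G (e.g. government spending, exports)
    Returns the whole income path, starting with the two initial values.
    """
    income = list(y_init)
    for _ in range(periods):
        consumption = c * income[-1]
        investment = v * (income[-1] - income[-2])
        income.append(consumption + investment + autonomous)
    return income


path = multiplier_accelerator(c=0.6, v=0.5, autonomous=10.0, periods=200)
# The steady state is G / (1 - c) = 25; with these values income
# overshoots it and converges through damped oscillations.
print(round(path[-1], 6))
```

Raising the accelerator above 1 (e.g. v = 1.2 with c = 0.6) turns the damped oscillations into explosive ones: demand-side “laws of motion” alone can generate endogenous fluctuations.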

Indeed, a common characteristic of the variegated contributions from “genuine Keynesianism”, often also known as post-Keynesian, is skepticism about any microfoundation, to its own merit and also to its own peril. Part of the denial comes from a healthy rejection of methodological individualism and its axiomatization as the ultimate primitive of economic analysis. Part of it, in our view, comes from the misleading notion that microfoundations necessarily mean methodological individualism. As if the interpretation of the working of a beehive had necessarily to build upon the knowledge of “what individual bees think and do”. On the contrary, microfoundations might well mean how the macro structure of the beehive influences the distribution of the behaviors of the bees: a sort of macrofoundation of the micro. All this entails a major terrain of dialogue between the foregoing stream of Keynesian models and agent-based ones. We shall discuss this challenge below.

Now, back to the roots of modern macroeconomics. The opposite extreme to “bastard Keynesianism” was not Keynesian at all, even if it sometimes took up the IS-LM-Phillips discourse. The best concise synthesis is Friedman (1968). Historically it went under the heading of monetarism, but it basically was the pre-Keynesian view that the economy, left to itself, travels on a unique equilibrium path in the long and in the short run. Indeed, in a barter, pre-industrial economy where Say’s law and the quantity theory of money hold, monetary policy cannot influence the interest rate and fiscal policy completely crowds out private consumption and investment: “there is a natural rate of unemployment which policies cannot influence”; see the deep discussion, as usual, in Solow (2018). Milton Friedman was the obvious ancestor of Lucas et al., but he was still far from their subsequent axiomatics and still willing to submit his propositions to empirical checks.Footnote 5

1.2 “New Classical (??)” talibanism and beyond

What happened next? Starting from the beginning of the 70’s, we think that everything which could get worse got worse and more: in that we agree with Krugman (2011) and Romer (2016) that macroeconomics plunged into a Dark Age.Footnote 6

First, “new classical economics” (even if the reference to the Classics could not be further from the truth) fully abolished the distinction between the normative and positive domains, that is, between models à la Ramsey and models à la Harrod-Domar, Solow, etc. (notwithstanding the differences amongst the latter). In fact, the striking paradox for theorists who are in good part market talibans is that one starts with a model which is essentially that of a benign, forward-looking central planner, and only at the end, by way of an abundant dose of hand-waving, one claims that the solution of whatever intertemporal optimization problem is in fact supported by a decentralized market equilibrium.

Things would be much easier for this approach if one could build a genuine “general equilibrium” model (that is, with many agents, heterogeneous at least in their endowments and preferences). However, this is not possible because of the well-known, but ignored, Sonnenschein-Mantel-Debreu theorems (Sonnenschein 1972; Mantel 1974; Debreu 1974; more in Kirman 1989). Assuming by construction that the coordination problem is solved, by resorting to the “representative agent” fiction, is simply a pathetic shortcut which does not have any theoretical legitimacy (Kirman 1992).

However, the “New Classical” restoration went so far as to wash away the distinction between “long-term” and “short-term”, the latter being the locus where all “frictions”, “liquidity traps”, Phillips curves, some (temporary!) real effects of fiscal and monetary policies, etc. had precariously survived before. Why would a representative agent endowed with “rational” expectations, able to solve sophisticated inter-temporal optimization problems from here to infinity, display any friction or distortion in the short run, if competitive markets always clear? We all know the outrageously silly propositions, sold as major discoveries, associated with the infamous “rational expectations revolution”, concerning the ineffectiveness of fiscal and monetary policies and the general property of markets of yielding Pareto first-best allocations [in this respect, of course, it is easier for that to happen if “the market” is one representative agent: coordination and allocation failures would involve serious episodes of schizophrenia by that agent itself!].

While Lucas and Sargent (1978) wrote an obituary of Keynesian macroeconomics, we think that in other times, nearly the entire profession would have reacted to such a “revolution” as Bob Solow once did when asked by Klamer (1984) why he did not take the “new Classics” seriously:

Suppose someone sits down where you are sitting right now and announces to me he is Napoleon Bonaparte. The last thing I want to do with him is to get involved in a technical discussion of cavalry tactics at the battle of Austerlitz. If I do that, I am tacitly drawn in the game that he is Napoleon. Now, Bob Lucas and Tom Sargent like nothing better than to get drawn in technical discussions, because then you have tacitly gone along with their fundamental assumptions; your attention is attracted away from the basic weakness of the whole story. Since I find that fundamental framework ludicrous, I respond by treating it as ludicrous — that is, by laughing at it — so as not to fall in the trap of taking it seriously and passing on matters of technique. (Solow in Klamer 1984 p. 146)

The reasons why the profession and, even worse, the world at large took these “Napoleons” seriously have basically to do, we think, with a Zeitgeist where the hegemonic politics was the one epitomized by Ronald Reagan and Margaret Thatcher, with their system of beliefs on the “magic of the market place” et similia. And, crucially, this became largely politically bipartisan, leading to financial deregulation, massive waves of privatization, tax cuts, surging inequality, etc. Or think of the disasters produced for decades around the world by the IMF-inspired Washington Consensus, or by the latest waves of austerity and structural-reform policies in the European Union, itself another creed on the magic of markets, the evil of governments and the miraculous effects of blood, sweat and tears (not surprisingly, Fitoussi and Saraceno 2013 wrote about a Berlin-Washington consensus). The point we want to make is that the changes in the hegemonic (macro) theory should be primarily interpreted in terms of the political economy of power relations among social and political groups, with little to write home about in terms of “advancements” in the theory itself... On the contrary!

The Mariana Trench of fanaticism was reached with Real Business Cycle (RBC) models (Kydland and Prescott 1982), positing Pareto-optimal business cycles driven by economy-wide technological shocks (sic). The natural question immediately arising concerns the nature of such shocks. What are they? Are recessions driven by episodes of technological regress (e.g. people going back to washing clothes in rivers or using candles for a few quarters)? The candid answer provided by one of the founding fathers of RBC theory is: “They’re that traffic out there” (“there” refers to a congested bridge, as cited in Romer 2016 p. 5). Needless to say, the RBC propositions were (and are) not supported by any empirical evidence. But the price paid by macroeconomics for this sort of intellectual trolling was, and still is, huge!

1.3 New Keynesians, new monetarists and the new neoclassical synthesis

Since the 80’s, “New Keynesian” economists, instead of following Solow’s adviceFootnote 7, basically accepted the New Classical and RBC framework and worked on the edges of auxiliary assumptions. So, they introduced monopolistic competition and a plethora of nominal and real rigidities into models with representative-agent cum rational-expectations microfoundations. New Keynesian models restored some basic results which had been undisputed before the New Classical Middle Age, such as the non-neutrality of money. However, the price paid for discussing cavalry tactics at Austerlitz with “New Classical” and RBC Napoleons was high. The methodological infection was so deep that Mankiw and Romer (1991) claimed that New Keynesian macroeconomics should be renamed New Monetarist macroeconomics, and De Long (2000) discussed “the triumph of monetarism”. “New Keynesianism” of different vintages represents what we could call homeopathic Keynesianism: the minimum quantities to be added to the standard model, sufficient to mitigate the most outrageous claims of the latter.

Indeed, the widespread sepsis came with the appearance of a new New Neoclassical Synthesis (Goodfriend 2007), grounded upon Dynamic Stochastic General Equilibrium (DSGE) models (Clarida et al. 1999; Woodford 2003; Galí and Gertler 2007). In a nutshell, such models have an RBC core supplemented with monopolistic competition, nominal imperfections and a monetary policy rule, and they can accommodate various forms of “imperfections”, “frictions” and “inertias”. In many respects, DSGE models are simply the late-Ptolemaic phase of the theory: add epicycles at full steam, without any theoretical or empirical discipline, in order to better match the data. Of course, in the epicycle frenzy one is never touched by the sense of the ridiculous in assuming that the mythical representative agent is at the same time extremely sophisticated when thinking about future allocations, but falls into backward-looking habits when deciding about consumption, or is entangled by sticky prices when having to change them! (Caballero 2010 offers a thorough picture of this surreal state of affairs.)

“New Keynesianism”, however, is a misnomer, in that it is sometimes meant to cover also simple but quite powerful models whereby Keynesian system properties are obtained out of otherwise standard microfoundations, just by taking “imperfections” seriously as structural, long-term characteristics of the economy. Pervasive asymmetric information requires some genuine heterogeneity and interactions among agents (see Akerlof and Yellen 1985; Akerlof 2002, 2007; Greenwald and Stiglitz 1993a, b, among others), yielding Keynesian properties as ubiquitous outcomes of coordination failures (for more detailed discussions of Stiglitz’s contributions, see Dosi and Virgillito 2017).Footnote 8 All that, still without considering the long-term changes in the so-called fundamentals, namely technical progress...

1.4 What about innovation dynamics and long-run growth?

We have argued that even the coordination issue has been written out of the agenda by assuming it to be basically solved by construction. But what about change? What about the Unbound Prometheus (Landes 1969) of capitalist search, discovery and indeed destruction?

In Solow (1956) and subsequent contributions, technical progress appears by default but it does so in a powerful way as the fundamental driver of long-run growth, to be explained outside the sheer allocation mechanism.Footnote 9 On the contrary, in the DSGE workhorse, there is no Prometheus: “innovations” come as exogenous technology shocks upon the aggregate production function, with the same mythical agent optimally adjusting its consumption and investment plans. And the macroeconomic time series generated by the models are usually detrended to focus on their business cycle dynamics.Footnote 10 End of the story.
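Detrending is routinely done with the Hodrick-Prescott (HP) filter, one common choice among several (the text does not say which filter particular studies use). The sketch below, assuming the conventional smoothing parameter λ = 1600 for quarterly data, solves the HP minimization problem directly with NumPy; the input series is hypothetical. A dense solve is adequate for short series, while long series would call for sparse matrices.

```python
import numpy as np


def hp_filter(series, lamb=1600.0):
    """Hodrick-Prescott decomposition of a series into trend and cycle.

    The trend tau minimizes sum((y - tau)**2) + lamb * sum((D tau)**2),
    where D is the second-difference operator; the first-order condition
    is (I + lamb * D'D) tau = y.
    """
    y = np.asarray(series, dtype=float)
    n = y.size
    d = np.zeros((n - 2, n))
    for i in range(n - 2):
        d[i, i:i + 3] = [1.0, -2.0, 1.0]
    trend = np.linalg.solve(np.eye(n) + lamb * d.T @ d, y)
    return trend, y - trend


# Hypothetical log-output series: linear growth plus a 20-quarter cycle.
t = np.arange(80)
log_output = 0.005 * t + 0.02 * np.sin(2 * np.pi * t / 20)
trend, cycle = hp_filter(log_output)
```

For cross-checking, statsmodels ships a ready-made `hpfilter` function in `statsmodels.tsa.filters.hp_filter`. Whatever the filter, the growth component is removed by construction, which is exactly why the business-cycle and growth dynamics end up being studied separately.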

The last thirty years have also seen the emergence of new growth theories (see e.g. Romer 1990; Aghion and Howitt 1992), bringing, as compared to the original Solow model, some significant advancements and, in our view, equally significant drawbacks. The big plus is the endogenization of technological change: innovation becomes part of economic dynamics. But it is modeled either as a learning externality or as the outcome of purposeful, expensive efforts by profit-maximizing agents. In the latter case, the endogenization comes at what we consider a major price (although many colleagues would deem it a major achievement): reducing innovative activities to an equilibrium outcome of optimal intertemporal allocation of resources, with or without (probabilizable) uncertainty. By doing that, one also loses the genuine Schumpeterian notion of innovation as a disequilibrium phenomenon (at least as a transient!).

Moreover, endogenous growth theories do not account for business cycle fluctuations. This is really unfortunate, as Bob Solow (2005) puts it:

Neoclassical growth theory is about the evolution of potential output. In other words, the model takes it for granted that aggregate output is limited on the supply side, not by shortages (or excesses) of effective demand. Short-run macroeconomics, on the other hand, is mostly about the gap between potential and actual output. (...) Some sort of endogenous knitting-together of the fluctuations and growth contexts is needed, and not only for the sake of neatness: the short run and its uncertainties affect the long run through the volume of investment and research expenditure, for instance, and the growth forces in the economy probably influence the frequency and amplitude of short-run fluctuations. (...) To put it differently, it would be a good thing if there were a unified macroeconomics capable of dealing with trend and fluctuations, rather than a short-run branch and a long-run branch operating under quite different rules. My quarrel with the real business cycle and related schools is not about that; it is about whether they have chosen an utterly implausible unifying device. (Solow 2005, pp. 5-6)

We shall argue below that evolutionary agent-based models entail such an alternative unification. However, these have not been dominant spectacles for the interpretation of what happened over the last few decades.

1.5 From the “Great Moderation” to the Great Recession

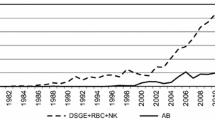

At the beginning of this century, under the new consensus reached by the New Neoclassical Synthesis (NNS), Lucas (2003) declared that: “The central problem of depression prevention had been solved”. Moreover, a large number of NNS contributions claimed that economic policy was finally becoming more of a science (?!?, Mishkin 2007; Galí and Gertler 2007; Goodfriend 2007; Taylor 2007).Footnote 11 This was made possible by the ubiquitous presence of DSGE models in academia and by a universe of politicians and opinion-makers under the “free market” / “free Wall Street” globalization spell,Footnote 12 and was helped by the “divine coincidence”, whereby inflation targeting, performed under some form of Taylor rule, appeared to be a sufficient condition for stabilizing the whole economy. During this Panglossian period, some economists went so far as to claim that the “scientific approach” to macroeconomic policy incarnated in DSGE models was the ultimate cause of the so-called Great Moderation, i.e. the fall in GDP volatility experienced by most developed economies since the beginning of the 80’s, and that only minor refinements to the consensus workhorse model were needed.
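For concreteness, a Taylor rule maps inflation and the output gap into a policy interest rate. The minimal sketch below uses the coefficients of Taylor's (1993) original formulation (a 2% equilibrium real rate, a 2% inflation target and weights of 0.5 on the inflation and output gaps) as illustrative defaults; rules embedded in actual DSGE models vary.

```python
def taylor_rule(inflation, output_gap, r_star=2.0, pi_target=2.0,
                weight_pi=0.5, weight_gap=0.5):
    """Nominal policy rate (percent) prescribed by a Taylor-type rule.

    i = r* + pi + weight_pi * (pi - pi*) + weight_gap * gap
    All arguments are in percentage points.
    """
    return (r_star + inflation
            + weight_pi * (inflation - pi_target)
            + weight_gap * output_gap)


print(taylor_rule(inflation=2.0, output_gap=0.0))   # 4.0
print(taylor_rule(inflation=3.0, output_gap=-1.0))  # 5.0
```

Because the rate rises by 1.5 points for each point of inflation, the rule satisfies the so-called Taylor principle; under the “divine coincidence”, stabilizing inflation in this way is supposed to close the output gap as well.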

Unfortunately, as happened with the famous statement by Francis Fukuyama (1992) about an alleged “end of history”,Footnote 13 these positions have been proven substantially wrong by subsequent events. Indeed, a relatively small “micro” event, the bankruptcy of Lehman Brothers in 2008, triggered a major financial crisis which caused the Great Recession, the deepest downturn experienced by developed economies since 1929.

In that respect, the Great Recession turned out to be a “natural experiment” for economic analysis, showing the inadequacy of the predominant theoretical frameworks. Indeed, as Krugman (2011) points out, not only did DSGE models fail to forecast the crisis, but they did not even admit the possibility of such an event and, even worse, they did not provide any useful advice to policy makers on how to put the economy back on a steady growth path (see also Turner 2010; Stiglitz 2011, 2015; Bookstaber 2017; Haldane and Turrell 2019; Caverzasi and Russo 2018).

DSGE scholars have reacted to such a failure by trying to amend their models with a new legion of epicycles, e.g. financial frictions, homeopathic doses of agent heterogeneity and exogenous fat-tailed shocks (see e.g. Lindé and Wouters 2016 and the discussion in Section 3). At the same time, an increasing number of economists have claimed that the 2008 “economic crisis is a crisis for economic theory” (Kirman 2010b, 2016; Colander et al. 2009; Krugman 2009, 2011; Farmer and Foley 2009; Caballero 2010; Stiglitz 2011, 2015, 2017; Kay 2011; Dosi 2012b; Romer 2016). Indeed, history is the smoking gun against the basic assumptions of mainstream DSGE macroeconomics, e.g. rational expectations and representative agents: they are deeply flawed and prevent by construction the understanding of the emergence of deep downturns together with standard fluctuations (Stiglitz 2015) and, more generally, of the very dynamics of economies. Indeed, resorting to representative-agent microfoundations, how can one understand central phenomena such as rising inequality, bankruptcy cascades and systemic risks, innovation, structural change and their co-evolution with climate dynamics?

1.6 Towards a complexity macroeconomics

In an alternative paradigm, macroeconomics should consider the economy as a complex evolving system, an ecology populated by heterogeneous agents (e.g. firms, workers, banks) whose far-from-equilibrium local interactions yield some collective order, even if the structure of the system continuously changes (more on that in Farmer and Foley 2009; Kirman 2010b, 2016; Dosi 2012b; Dosi and Virgillito 2017; Rosser 2011). In such a framework, first, more is different (Anderson 1972): there is no isomorphism between the micro- and macroeconomic levels, and higher levels of aggregation can lead to the emergence of new phenomena (e.g. business cycles and self-sustained growth), new statistical regularities (e.g. the Phillips curve) and completely new structures (e.g. markets, institutions).

Second, the economic system exhibits self-organized criticality: imbalances can build up over time, leading to the emergence of tipping points which can be triggered by apparently innocuous shocks (this is straightforward in climate change, cf. Steffen et al. 2018; for other fields of economics, see Bak et al. 1992 and Battiston et al. 2016).

Third, in a complex world, deep uncertainty (Knight 1921, Keynes 1921, 1936) is so pervasive that agents cannot build the “right” model of the economy, and, even less so, share it among them as well as with the modeler (Kirman 2014).

Rather, fourth, they must rely on heuristics (Simon 1955, 1959; Cyert and March 1992), which turn out to be robust tools for inference and action (Gigerenzer and Brighton 2009; Haldane 2012; Dosi et al. 2017a).

Of course, fifth, local interactions among purposeful agents do not generally lead to efficient outcomes or optimal equilibria.

Finally, from a normative point of view, when complexity is involved, policy makers ought to aim at resilient systems, which often require redundancy and degeneracy (Edelman and Gally 2001). To put it in a provocative way: would anyone fly on a plane designed by a New Classical macroeconomist who, like early aerodynamics congresses, conclusively argues that in equilibrium airplanes cannot fly?
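The self-organized criticality invoked in the second point above has a canonical toy model in physics, the Bak-Tang-Wiesenfeld sandpile, which Bak et al. (1992) adapted to production and inventory dynamics. In the minimal sketch below (grid size, number of drops and seed are illustrative assumptions), single grains, i.e. tiny identical shocks, are dropped at random sites; a site holding four grains topples, passing one grain to each neighbour, and grains crossing the edge are lost. Most drops trigger nothing, but some trigger avalanches that sweep across the grid.

```python
import random

THRESHOLD = 4  # a site topples when it holds 4 grains, one for each neighbour


def sandpile_avalanches(size=10, grains=5000, seed=1):
    """Bak-Tang-Wiesenfeld sandpile on a size x size grid with open edges.

    Drops `grains` single grains at random sites and returns, for each drop,
    the avalanche size measured as the number of topplings it triggered.
    """
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanche_sizes = []
    for _ in range(grains):
        i, j = rng.randrange(size), rng.randrange(size)
        grid[i][j] += 1  # the small, "innocuous" shock
        topplings = 0
        unstable = [(i, j)]
        while unstable:
            x, y = unstable.pop()
            if grid[x][y] < THRESHOLD:
                continue  # already relaxed by an earlier toppling
            grid[x][y] -= THRESHOLD
            topplings += 1
            if grid[x][y] >= THRESHOLD:
                unstable.append((x, y))
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if 0 <= nx < size and 0 <= ny < size:  # grains crossing the edge are lost
                    grid[nx][ny] += 1
                    if grid[nx][ny] >= THRESHOLD:
                        unstable.append((nx, ny))
        avalanche_sizes.append(topplings)
    return avalanche_sizes


sizes = sandpile_avalanches()
quiet = sum(1 for s in sizes if s == 0)
print(f"{quiet} of {len(sizes)} drops caused no toppling; "
      f"largest avalanche: {max(sizes)} topplings")
```

Nothing distinguishes the shock that triggers a large avalanche from the many that trigger none: the fragility lies in the state the system has organized itself into.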

Once complexity is seriously taken into account in macroeconomics, one of course has to rule out DSGE models. A natural alternative candidate is agent-based computational economics (ACE; Tesfatsion 2006; LeBaron and Tesfatsion 2008; Fagiolo and Roventini 2017; Dawid and Delli Gatti 2018; Caverzasi and Russo 2018), which straightforwardly embeds heterogeneity, bounded rationality, endogenous out-of-equilibrium dynamics, and direct interactions among economic agents. In that, ACE provides an alternative way to build macroeconomic models with genuine microfoundations, which take the problem of aggregation seriously and are able to jointly account for the emergence of self-sustained growth and business cycles punctuated by major crises. Furthermore, on the normative side, due to the extreme flexibility of the set of assumptions regarding agent behaviors and interactions, ACE models represent an exceptional laboratory to design policies and test their effects on macroeconomic dynamics.

As argued by Haldane and Turrell (2019) in this special issue, the first prototype of an agent-based model (ABM) was developed by Enrico Fermi in the 30’s in order to study the movement of neutrons (of course the Nobel laureate Fermi, a physicist, never thought of building a model sporting a representative neutron!). With the adoption of Monte Carlo methods, ABMs flourished in many disciplines, ranging from physics, biology, ecology and epidemiology all the way to the military (more on that in Turrell 2016). Recent years have also seen a surge of agent-based models in macroeconomics (see Fagiolo and Roventini 2012, 2017 and Dawid and Delli Gatti 2018 for surveys): an increasing number of papers involving macroeconomic ABMs have addressed fiscal policy, monetary policy, macroprudential policy, labor market policy, and climate change. Moreover, ABMs have been increasingly developed by policy makers in Central Banks and other institutions as a complement to older macroeconomic models (Haldane and Turrell 2019).Footnote 14

Along this vein, the papers in this Special Issue enrich Agent-Based macroeconomics from different perspectives (see also Section 5). Dosi et al. (2019) contribute to the debate on the granular origin of business cycles. Fluctuations are also studied by Rengs and Scholz-Wäckerle (2019), who consider the role of consumption. Some papers explore the complex interactions between financial markets and real dynamics (Assenza and Delli Gatti 2019; Meijers et al. 2019), focusing on learning and agents’ adaptation capabilities (Seppecher et al. 2019; Arifovic 2019), and studying the implications for monetary policy (Salle et al. 2019) and macroprudential regulation (Raberto et al. 2019). The links between inequality, innovation and growth are studied in Caiani et al. (2019) and Ciarli et al. (2019). The adoption of a production network framework allows Gualdi and Mandel (2019) to analyze the relationship between the sectoral composition of the economy, innovation and growth.

Naturally, there are still open issues that must be addressed by Agent-Based macroeconomics. First, transparency, reproducibility and replication of the results generated by ABMs should be improved. Dawid et al. (2019), in this special issue, is a welcome advancement in this respect. However, let us first consider in greater detail the reasons why, in our view, DSGEs are a dead end.

2 The emperor is still naked: the intrinsic limits of DSGE models

In line with the RBC tradition, the backbone of DSGE models (Clarida et al. 1999; Woodford 2003; Galí and Gertler 2007) is a standard stochastic equilibrium model with variable labor supply: the economy is populated by an infinitely-lived representative household and by a representative firm, whose homogeneous production technology is hit by exogenous shocks.Footnote 15 All agents form their expectations rationally (Muth 1961). The New Keynesian flavor of the model is provided by money, monopolistic competition and sticky prices. Money usually serves only as a unit of account, and the nominal rigidities incarnated in sticky prices allow monetary policy to affect real variables in the short run. The RBC scaffold of the model allows the computation of the “natural” level of output and real interest rate, that is, the equilibrium values of the two variables under perfectly flexible prices. In line with the Wicksellian tradition, the “natural” output and interest rate constitute a benchmark for monetary policy: the Central Bank cannot persistently push output and the interest rate away from their “natural” values without creating inflation or deflation. Finally, imperfect competition and possibly other real rigidities might imply that the “natural” level of output is not socially efficient.
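In log-linearized form, the core just described is commonly summarized by three equations, reported here in standard textbook notation (the symbols are our choice): a forward-looking IS curve derived from the representative household's Euler equation, a New Keynesian Phillips curve derived from staggered price setting, and an interest-rate rule. Here x_t is the output gap relative to the “natural” level, π_t inflation, i_t the nominal interest rate, r^n_t the natural real rate, σ the inverse intertemporal elasticity of substitution, β the discount factor, ρ = −log β, κ > 0 a slope that shrinks as prices become stickier, φ_π and φ_x policy coefficients, and v_t a monetary policy shock.

```latex
\begin{aligned}
x_t   &= \mathbb{E}_t x_{t+1} - \frac{1}{\sigma}\left(i_t - \mathbb{E}_t \pi_{t+1} - r^n_t\right) \\
\pi_t &= \beta\, \mathbb{E}_t \pi_{t+1} + \kappa\, x_t \\
i_t   &= \rho + \phi_\pi \pi_t + \phi_x x_t + v_t
\end{aligned}
```

With fully flexible prices the gap is zero (x_t = 0) and the first equation forces the real rate to equal r^n_t: this is the sense in which the RBC scaffold pins down the “natural” benchmark for monetary policy.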

DSGE models are commonly represented by means of vector auto-regression (VAR) models, usually estimated employing full-information Bayesian techniques (see e.g. Smets and Wouters 2003, 2007). Different types of shocks are usually added to improve the estimation. Moreover, as the assumption of forward-looking agents prevents DSGE models from matching the econometric evidence on the co-movements of nominal and real variables (e.g., the response of output and inflation to a monetary policy shock is too fast to match the gradual adjustment shown by the corresponding empirical impulse-response functions), a legion of “frictions”, generally not justified on theoretical grounds, is introduced: predetermined price and spending decisions, indexation of prices and wages to past inflation, sticky wages, habit formation in preferences for consumption, adjustment costs in investment, variable capital utilization, etc. Once the parameters of the model are estimated and the structural shocks are identified, policy-analysis exercises are carried out assuming that the DSGE model is the “true” data generating process of the available time series.
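Once a linearized DSGE model is solved, its reduced form is a VAR(1)-type state-space system s_t = A s_{t−1} + B e_t, and impulse-response functions are simply the sequence B, AB, A²B, and so on. The NumPy sketch below computes them; the matrices are made-up numbers used purely for illustration, not estimates from any model discussed here.

```python
import numpy as np


def impulse_responses(a, b, horizon):
    """Impulse responses of s_t = A s_{t-1} + B e_t.

    Returns an array of shape (horizon + 1, n_states, n_shocks) whose h-th
    slice is A^h B: the response of each state h periods after a unit shock.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    responses = [b]
    for _ in range(horizon):
        responses.append(a @ responses[-1])
    return np.stack(responses)


# Hypothetical two-state system (e.g. an output gap and inflation) hit by one shock.
a = np.array([[0.8, 0.1],
              [0.2, 0.5]])
b = np.array([[1.0],
              [0.0]])
irf = impulse_responses(a, b, horizon=12)
print(irf[:4, 1, 0])  # second state: starts at zero, builds up, then decays
```

The shape of these responses is entirely governed by A; adding “frictions” is a way of reshaping A until the model's responses become as gradual as the empirical ones.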

The usefulness of DSGE models is undermined by theoretical, empirical and political-economy problems. Let us discuss each of them in turn (for a more detailed analysis see Fagiolo and Roventini 2017).

Theoretical issues

As already mentioned above, DSGE models suffer from the same well-known problems as Arrow-Debreu general-equilibrium models (see Kirman 1989 for a classical reference), and more. More specifically, the well-known Sonnenschein-Mantel-Debreu theorems (Sonnenschein 1972; Mantel 1974; Debreu 1974) show that neither the uniqueness nor, even less so, the stability of the general equilibrium can be guaranteed, even when one places stringent and unrealistic assumptions on agents and imposes amazing information requirements. Indeed, Saari and Simon (1978) show that an infinite amount of information is required to reach the equilibrium for any initial price vector.

The representative agent (RA) shortcut has been taken to circumvent any aggregation problem. The RA assumption implies that there is an isomorphism between micro- and macroeconomics, with the latter shrunk to the former. This, of course, is far from innocent: there are (at least) four reasons why it cannot be defended (Kirman 1992).Footnote 16 First, individual rationality does not imply "aggregate rationality": one cannot provide any formal justification for the assumption that the macro level behaves as a maximizing individual. Second, even setting that aside, one cannot safely perform policy analyses with RA macro models, because the reactions of the representative agent to shocks or parameter changes may not coincide with the aggregate reactions of the represented agents. Third, the lack of micro/macro isomorphism is revealed by the fact that, even if the representative agent appears to prefer a, all the "represented" individuals might well prefer b. Finally, the RA assumption also induces additional difficulties on testing grounds, because whenever one tests a proposition delivered by an RA model, one is also jointly testing the very RA hypothesis. Hence, a rejection of the specific model proposition cannot be disentangled from a rejection of the RA hypothesis itself (more on that in Forni and Lippi 1997, 1999; Pesaran and Chudik 2011).
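The second point can be illustrated with a deliberately simple example. Assume, purely for illustration, that every household consumes the square root of its wealth (a hypothetical concave rule, not a claim about actual behavior). The average of individual consumption then differs from the consumption of a "representative" household holding average wealth, and a redistribution that leaves mean wealth unchanged moves aggregate consumption while leaving the representative agent's choice exactly where it was.

```python
import math


def consumption(wealth):
    """Hypothetical concave individual rule: consume the square root of wealth."""
    return math.sqrt(wealth)


def average(values):
    return sum(values) / len(values)


wealth = [1.0, 4.0, 9.0, 16.0, 100.0]     # hypothetical heterogeneous households
aggregate_c = average([consumption(w) for w in wealth])    # mean of individual choices
representative_c = consumption(average(wealth))            # choice of the "average" household

# Mean-preserving redistribution: 40 units move from the richest to the poorest household.
after = [41.0, 4.0, 9.0, 16.0, 60.0]
aggregate_c_after = average([consumption(w) for w in after])
representative_c_after = consumption(average(after))

print(f"before: aggregate {aggregate_c:.3f} vs representative agent {representative_c:.3f}")
print(f"after:  aggregate {aggregate_c_after:.3f} vs representative agent {representative_c_after:.3f}")
```

Running it shows aggregate consumption rising from 4.000 to about 4.630, while the representative agent's consumption stays at about 5.099: the RA model predicts no effect of a policy that, in the heterogeneous economy, does have one.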

Finally, rational-expectations equilibria of DSGE models may not be determinate. The existence of multiple equilibria at the global level (see e.g. Schmitt-Grohé and Uribe 2000; Benhabib et al. 2001; Ascari and Ropele 2009) forces modelers to rely on the implicit "small" shock assumption (Woodford 2003), which is required to compute impulse-response functions and to perform policy analysis exercises.

Empirical issues

The estimation and testing of DSGE models are usually performed assuming that they represent the "true" data generating process (DGP) of the observed data (Canova 2008). This implies that the ensuing inference and policy experiments are valid only if the DSGE model mimics the unknown DGP of the data.Footnote 17 Notice that such an epistemology, widespread in economics but unique to it (and to theology!), assumes that the world is "transparent" and thus that the "model" faithfully reflects it. All other scientific disciplines are basically there to conjecture, verify and falsify models of the world. Not our own! So, in the case of DSGE models too, econometric performance is assessed along the identification, estimation and evaluation dimensions (Fukac and Pagan 2006).

Given the large number of non-linearities present in the structural parameters, DSGE models are hard to identify (Canova 2008). This leads to a large number of identification problems, which can affect the parameter space either at the local or at the global level.Footnote 18 Identification problems lead to biased and fragile estimates of some structural parameters and prevent a correct evaluation of the significance of the estimated parameters with standard asymptotic theory. This opens a gap between the real and the DSGE DGPs, depriving parameter estimates of any economic meaning and making policy analysis exercises useless (Canova 2008).

Such identification problems also affect the estimation of DSGE models. Bayesian methods apparently address the estimation (and identification) issues by adding a prior function to the (log) likelihood function in order to increase the curvature of the latter and obtain a smoother function. However, this choice is not harmless: if the likelihood function is flat (and thus conveys little information about the structural parameters), the shape of the posterior distribution resembles that of the prior, reducing estimation to a more sophisticated calibration procedure carried out on an interval instead of at a point (see Canova 2008; Fukac and Pagan 2006). Indeed, the likelihood functions produced by most DSGE models are quite flat (see e.g. the exercises performed by Fukac and Pagan 2006). In this case, informal calibration is a more honest and consistent strategy for policy analysis experiments (Canova 2008).
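The point about flat likelihoods can be seen in the simplest conjugate case. The sketch below assumes a single parameter with a Normal prior and Normal observations of known noise, a textbook stand-in far simpler than a DSGE likelihood; the data and prior values are hypothetical. The posterior mean is a precision-weighted average of the prior mean and the sample mean, so when the data carry little information (few or very noisy observations, i.e. a flat likelihood) the posterior essentially returns the prior.

```python
def normal_posterior(prior_mean, prior_sd, data, noise_sd):
    """Posterior (mean, sd) of a Normal mean with a Normal prior and known noise_sd."""
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = len(data) / noise_sd ** 2    # curvature of the log-likelihood
    posterior_precision = prior_precision + data_precision
    sample_mean = sum(data) / len(data)
    posterior_mean = (prior_precision * prior_mean
                      + data_precision * sample_mean) / posterior_precision
    return posterior_mean, posterior_precision ** -0.5


data = [0.7, 0.8, 0.9]    # hypothetical observations, sample mean 0.8
prior = (0.5, 0.1)        # hypothetical prior: mean 0.5, sd 0.1

informative = normal_posterior(*prior, data, noise_sd=0.05)   # sharply curved likelihood
flat = normal_posterior(*prior, data, noise_sd=5.0)           # nearly flat likelihood

print("informative likelihood: mean %.3f, sd %.3f" % informative)
print("flat likelihood:        mean %.3f, sd %.3f" % flat)
```

With the flat likelihood the posterior (mean about 0.500, sd about 0.100) is practically the prior, whatever the data say; with the informative one it moves most of the way to the sample mean (about 0.777).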

Evaluating a DSGE model, as well as any other model, implies assessing its capability to reproduce a large set of stylized facts, in our case macroeconomic ones (microeconomic regularities cannot be matched by construction, given the representative-agent assumption). Fukac and Pagan (2006) perform such exercises on a popular DSGE model with disappointing results. Moreover, DSGE models might seem to do well in "normal" times, but they cannot account, even in principle, for crises and deep downturns (Stiglitz 2015), even when fat-tailed shocks are assumed (Ascari et al. 2015).

The results just described seem to support Favero (2007) in claiming that modern DSGE models are exposed to the same criticisms advanced against the old-fashioned macroeconometric models belonging to the Cowles Commission tradition: they pay too much attention to the identification of the structural model (with all the problems described above) without testing the potential misspecification of the underlying statistical model (see also Johansen 2006; Juselius and Franchi 2007). If the statistical model is misspecified, policy analysis exercises lose significance, because they are carried out in a "virtual" world whose DGP is different from the one underlying observed time-series data.

More generally, the typical assertion made by DSGE modelers that their theoretical frameworks are able to replicate real-world evidence is at odds with a careful scrutiny of how the empirical evaluation of DSGE models is actually done. DSGE modelers, indeed, typically select ex ante the set of empirical criteria that their models should satisfy, in such a way as to be sure that these restrictions are met. However, they usually refrain from confronting their models with the wealth of fundamental features of capitalist growth and business cycles, which DSGE models are structurally unable to replicate.

Political-economy issues

Given the theoretical problems and the puny empirical performance, the assumptions of DSGE models can no longer be defended by invoking arguments such as parsimonious modeling or data matching. This opens a Pandora's box on the links between the legion of assumptions of DSGE models and their policy conclusions. But behind all that, the crucial issue concerns the relationship between the information the representative agent is able to access, the "model of the world" she has, and her ensuing behaviors.

DSGE models assume a very peculiar framework, whereby representative agents are endowed with a sort of "Olympic" rationality and have free access to the whole information set.Footnote 19 Rational expectations are the common shortcut employed by DSGE models to deal with uncertainty. Such strong assumptions raise more questions than they answer.

First, even assuming individual rationality, how does it carry over through aggregation, yielding rational expectations (RE) at the system level? Individual rationality is certainly not a sufficient condition for the system to converge to the RE fixed-point equilibrium (Howitt 2012). Relatedly, it is unreasonable to assume that agents possess all the information required to attain the equilibrium of the whole economy (Caballero 2010), and even more so in periods of strong structural transformation, like the Great Recession, that require policies never tried before (e.g. quantitative easing, see Stiglitz 2011, 2015).

Second, agents can also have the "wrong" model of the economy, and yet available data may corroborate it (see the seminal contribution of Woodford 1990 among the rich literature on sunspots).

Third, as Hendry and Mizon (2010) point out, when "structural breaks" affect the underlying stochastic process that governs the evolution of the economy, the learning process of agents introduces further non-stationarity into the system, preventing the economy from reaching an equilibrium state, if there is one. More generally, in the presence of genuine uncertainty (Knight 1921; Keynes 1936), "rational" agents should follow heuristics, as they always outperform more complex expectation formation rules (Gigerenzer and Brighton 2009). But if this is so, modelers should assume that agents behave as the psychological and sociological evidence suggests they actually behave (Akerlof 2002; Akerlof and Shiller 2009). Given such premises, it is no wonder that empirical tests usually reject the full-information, rational expectations hypothesis (see e.g. Coibion and Gorodnichenko 2015; Gennaioli et al. 2015).

The representative-agent (RA) assumption prevents DSGE models from addressing any distributional issue, even though such issues are intertwined with the major causes of the Great Recession and, more generally, are fundamental for studying the effects of policies. Indeed, increasing income (Atkinson et al. 2011) and wealth (Piketty and Zucman 2014) inequalities helped induce households to hold more and more debt, paving the way to the subprime mortgage crisis (Fitoussi and Saraceno 2010; Stiglitz 2011). Redistribution matters, and different policies have a different impact on the economy according to the groups of people they are designed for (e.g. unemployment benefits have larger multipliers than tax cuts for high-income individuals, see Stiglitz 2011). The study of redistributive policies therefore requires models with heterogeneous agents.

The RA assumption, coupled with the implicit presence of a Walrasian auctioneer that sets prices before exchanges take place, rules out almost by definition the possibility of interactions carried out by heterogeneous individuals. This also prevents DSGE models from accurately studying the dynamics of credit and financial markets. Indeed, the assumption that the representative agent always satisfies the transversality condition removes default risk from the models (Goodhart 2009). As a consequence, agents face the same interest rate (no risk premia), and all transactions can be undertaken in capital markets without the need for banks. Abstracting from default risk does not allow DSGE models to contemplate the conflict between price and financial stability faced by Central Banks (Howitt 2012). As they do not consider the huge costs of financial crises, they deceptively appear to work fine in normal times (Stiglitz 2011, 2015).

In the same vein, DSGE models are not able to account for involuntary unemployment. Indeed, even though they are developed to study the welfare effects of macroeconomic policies, unemployment is either absent or, when present, stems only from frictions in the labor market or wage rigidities. Such explanations are especially hard to believe during deep downturns like the Great Recession. In DSGE models, the lack of heterogeneous, interacting firms and workers/consumers prevents the study of possible massive coordination failures (Leijonhufvud 1968, 2000; Cooper and John 1988), which could lead to an insufficient level of aggregate demand and to involuntary unemployment.

In fact, the macroeconomics of DSGE models does not appear to be genuinely grounded on any microeconomics (Stiglitz 2011, 2015): the models do not even take into account the micro and macro implications of imperfect information, while the behavior of agents is often described with arbitrary specifications of functional forms (e.g. Dixit-Stiglitz utility function, Cobb-Douglas production function, etc.). More generally, DSGE models suffer from a sort of internal contradiction. On the one hand, strong assumptions such as rational expectations, perfect information and complete financial markets are introduced ex ante to provide a rigorous and formal mathematical treatment and to allow for policy recommendations. On the other hand, many imperfections (e.g., sticky prices, rule-of-thumb consumers) are introduced ex post, without any theoretical justification, only to allow DSGE models to match the data (see also below). Along these lines, Chari et al. (2009) argue that the high level of arbitrariness of DSGE models in the specification of structural shocks may leave them exposed to the Lucas critique, preventing them from being usefully employed for policy analysis.

DSGE models assume that business cycles stem from a plethora of exogenous shocks. As a consequence, DSGE models do not explain business cycles, preferring instead to generate them with a sort of deus-ex-machina mechanism. This could explain why even in normal times DSGE models are not able to match many business cycle stylized facts or have to assume serially correlated shocks to produce fluctuations resembling the ones observed in reality (cf. Zarnowitz 1985; Zarnowitz 1997; Cogley and Nason 1993; Fukac and Pagan 2006). Even worse, the subprime mortgage crisis clearly shows how bubbles and, more generally, endogenously generated shocks are far more important for understanding economic fluctuations (Stiglitz 2011, 2015).

Moving to the normative side, one supposed advantage of the DSGE approach is the possibility of deriving optimal policy rules. However, when the "true" model of the economy is not known, rule-of-thumb policy rules can perform better than optimal policy rules (Brock et al. 2007; Orphanides and Williams 2008). Indeed, in complex worlds with pervasive uncertainty (e.g. in financial markets), policy rules should be simple (Haldane 2012), while "redundancy" and "degeneracy" (Edelman and Gally 2001) are required to achieve resilience.

3 Post-crisis DSGE models: some fig leaves are not a cloth

The failure of DSGE models to account for the Great Recession sparked a search for refinements, which were also partly trying to address the critiques discussed in the previous Section. More specifically, researchers in the DSGE camp have tried to add a financial sector to the bare-bones model, to consider some forms of agents' heterogeneity and bounded rationality, and to explore the impact of rare exogenous shocks on the performance of DSGE models. Let us provide a bird's eye view of such recent developments (another overview is Caverzasi and Russo 2018).

The new generation of DSGE models with financial frictions is mostly grounded on the so-called financial accelerator framework (Bernanke et al. 1999), which provides a straightforward explanation of why credit and financial markets can affect real economic activity. The presence of imperfect information between borrowers and lenders introduces a wedge between the cost of credit and that of internal finance. In turn, the balance sheets of lenders and borrowers can affect the real sector via the supply of credit and the spread on loan interest rates (see Gertler and Kiyotaki 2010 for a survey). For instance, Curdia and Woodford (2010) introduce patient and impatient consumers to justify the existence of a stylized financial intermediary, which copes with default risk by charging a spread on its loans subject to exogenous, stochastic disturbances. On the policy side, they conclude that the Central Bank should keep controlling the short-term interest rate (see also Curdia and Woodford 2015). In the model of Gertler and Karadi (2011), households can randomly become workers or bankers. In the latter case, they provide credit to firms, but as they are constrained by deposits and by the resources they can raise in the interbank market, a spread emerges between loan and deposit interest rates (see also Christiano et al. 2013). They find that during (exogenous) recessions, unconventional monetary policy (i.e. the Central Bank providing credit intermediation) is welfare enhancing (see also Curdia and Woodford 2011; and Gertler and Kiyotaki 2010 for other types of credit policies).

The foregoing papers allow for some form of mild heterogeneity among agents. Some DSGE models consider two classes of agents in order to explore issues such as debt deflation or inequality. For instance, Eggertsson and Krugman (2012) introduce patient and impatient agents and expose the latter to exogenous debt-limit shocks, which force them to deleverage. In such a framework, there can be debt deflations and liquidity traps, and fiscal policy can be effective. Kumhof et al. (2015) try to study the link between rising inequality and financial crises employing a DSGE model where an exogenously imposed income distribution guarantees that top-earner households (the top 5% of the income distribution) lend to the bottom ones (the remaining 95%). Exogenous shocks induce low-income households to increase their indebtedness, raising their rational willingness to default and, in turn, the probability of a financial crisis. More recent works consider a continuum of heterogeneous households in an incomplete-market framework. For instance, in the Heterogeneous Agent New Keynesian (HANK) model developed by Kaplan et al. (2018), the assumptions of uninsurable income shocks and of multiple assets with different degrees of liquidity and returns lead to wealth distributions and marginal propensities to consume more in tune with the empirical evidence. In this framework, monetary policy is effective only if it provokes a general-equilibrium response of labor demand and household income, and, as Ricardian equivalence breaks down, its impact is intertwined with fiscal policy.

An increasing number of DSGE models allow for various forms of bounded rationality (see Dilaver et al. 2018 for a survey). In one stream of literature, agents know the form of the equilibrium of the economy and form their expectations as if they were econometricians, using the available observations to compute their parameter estimates via ordinary least squares (the seminal contribution is Evans and Honkapohja 2001). Other recent contributions have relaxed the rational expectations assumption while preserving maximization (Woodford 2013). For instance, an increasing number of papers assume rational inattention, i.e. optimizing agents rationally decide not to use all the available information because they have finite processing capacity (Sims 2010). Along this line, Gabaix (2014) models bounded rationality assuming that agents have a simplified model of the world, but can nonetheless jointly maximize their utility and their inattention. Drawing inspiration from state-of-the-art artificial intelligence programs, Woodford (2018) develops a DSGE model where rational agents can forecast up to k steps ahead. Finally, building on Brock and Hommes (1997), in an increasing number of DSGE models (see e.g. Branch and McGough, 2011; De Grauwe, 2012; Anufriev et al., 2013; Massaro, 2013) agents can form their expectations using an ecology of different learning rules (usually fundamentalist vs. extrapolative rules). As the fractions of agents following different expectation rules change over time, "small" shocks can give rise to persistent and asymmetric fluctuations, and endogenous business cycles may arise.
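The heuristic-switching mechanism mentioned last can be sketched in a few lines. The version below is a simplified toy in the spirit of Brock and Hommes, not a reproduction of any cited model: a fundamentalist rule predicts no deviation from the fundamental, an extrapolative rule predicts g times the last deviation, and the fractions of agents using each rule follow a multinomial-logit (discrete choice) function of recent forecasting performance, with intensity of choice beta. Using squared forecast errors as fitness (instead of realized profits) and all parameter values are assumptions; g < R is chosen so that the toy stays bounded.

```python
import math
import random


def logit_shares(fitness, beta):
    """Multinomial-logit fractions of agents adopting each forecasting rule."""
    top = max(fitness)  # subtracting the max avoids overflow in exp
    weights = [math.exp(beta * (u - top)) for u in fitness]
    total = sum(weights)
    return [w / total for w in weights]


def simulate(periods=200, g=1.05, R=1.1, beta=50.0, sigma=0.1, seed=3):
    """x_t is the deviation of a price from its fundamental value.
    Rule 0 (fundamentalist) predicts 0; rule 1 (extrapolative) predicts g * x_{t-1}."""
    rng = random.Random(seed)
    x_prev2, x_prev = 0.0, 0.0
    xs, shares_path = [], []
    for _ in range(periods):
        # Simplified fitness: minus the squared error each rule would have made
        # predicting the latest observation from the one before it.
        fitness = [-(x_prev - 0.0) ** 2, -(x_prev - g * x_prev2) ** 2]
        shares = logit_shares(fitness, beta)
        mean_forecast = shares[0] * 0.0 + shares[1] * g * x_prev
        x = mean_forecast / R + rng.gauss(0.0, sigma)   # temporary equilibrium plus noise
        x_prev2, x_prev = x_prev, x
        xs.append(x)
        shares_path.append(shares)
    return xs, shares_path


xs, shares_path = simulate()
extrap = [s[1] for s in shares_path]
print(f"share of extrapolators ranges from {min(extrap):.2f} to {max(extrap):.2f}")
```

The point of the sketch is only that the ecology of rules is itself a state variable: the composition of the population shifts with relative performance, which in turn shifts the aggregate dynamics. The richer outcomes described above come from the full models in the cited literature, not from this toy.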

Finally, a new generation of DSGE models tries to account for deep downturns and disasters. Curdia et al. (2014) estimate the Smets and Wouters (2007) model assuming Student's t-distributed shocks. They find that the fit of the model improves and that rare deep downturns become more relevant (see also Fernandez-Villaverde and Levintal, 2016 for a DSGE model with exogenous time-varying rare disaster risk). A similar strategy is employed by Canzoneri et al. (2016) to allow the effects of fiscal policies to change over time and to obtain state-dependent fiscal multipliers higher than one in recessions.

Taking stock of new DSGE developments

The new generation of DSGE models tries to address some of the problems mentioned in the previous Section. But do post-crisis DSGE models go beyond the intrinsic limits of such an approach and provide a satisfactory account of macroeconomic dynamics? We maintain that the answer is definitely negative.

The major advance of the new class of models is the recognition that agents can be heterogeneous in terms of their rationality, consumption preferences (patient vs. impatient), incomes, etc. However, DSGE models 2.0 can handle only rough forms of heterogeneity, and they do not contemplate direct interactions among agents. Without interactions, they just scratch the surface of the impact of credit and finance on real economic dynamics, without explicitly modeling the behavior of banks (e.g. endogenous risk-taking), network dynamics, financial contagion, the emergence of bankruptcy chains, or the implications of endogenous money. A complex machinery is built just to introduce into the model a new epicycle: exogenous credit shocks.

Similar remarks apply to the other directions of "advancement". So, bounded rationality is introduced in homeopathic quantities in order to get quasi-rational-expectations equilibrium models (Caverzasi and Russo 2018) with just marginally improved empirical performance. But one can't be a little bit pregnant! The impact of bounded rationality (à la Simon 1959, at least!) on macroeconomic dynamics can be pervasive, well beyond what can be accounted for by DSGE models (see below).

Similarly, DSGE models superficially appear able to face both mild and deep downturns, but they only assume them, increasing the degrees of freedom of the models. Indeed, business cycles are still triggered by exogenous shocks, which are either drawn from an ad hoc fat-tailed distribution or simply assumed to have massive negative effects.Footnote 20 More generally, no DSGE model has ever tried to jointly account for the endogenous emergence of long-run economic growth and business cycles punctuated by deep downturns. In that respect, the plea of Solow (2005) is still unanswered.

Summing up, we suggest that the recent developments in the DSGE camp are just patches added to torn clothes. But how many patches can one add before throwing the clothes away? For instance, Lindé and Wouters (2016), after having expanded the benchmark DSGE model to account for the zero lower bound, non-Gaussian shocks, and the financial accelerator, conclude that such extensions "do not suffice to address some of the major policy challenges associated with the use of non-standard monetary policy and macroprudential policies."Footnote 21 More radically, we do think that the emperor is naked:Footnote 22 DSGE models are simply post-real (Romer 2016), and additional patches are a waste of intellectual (and economic) resources that pushes macroeconomics deeper and deeper into a "Fantasyland" (Caballero 2010). Macroeconomics should then be built on very different grounds, rooted in complexity science. In that, evolutionary economics (Nelson and Winter 1982), agent-based computational economics (ACE, Tesfatsion and Judd, 2006; LeBaron and Tesfatsion, 2008; Farmer and Foley, 2009) and, more generally, complexity sciences represent valuable tools. We present such an alternative paradigm in the next section.

4 Macroeconomic agent-based models

Agent-based computational economics (ACE) can be defined as the computational study of economies thought of as complex evolving systems (Tesfatsion 2006; but in fact, Nelson and Winter 1982 have been the genuine contemporary root of evolutionary ACE, before anyone called it that).

Contrary to DSGE models, and indeed to many other models in economics, ACE provides an alternative methodology to build macroeconomic models from the bottom up, with sound microfoundations based on realistic assumptions as far as agent behaviors and interactions are concerned, where realistic here means rooted in the actual empirical micro-economic evidence (Simon 1977; Kirman 2016). The state of the economics discipline nowadays is such that even the view that, in modeling exercises, agents should have the same information as the economists modeling the economy is already subversive.

Needless to say, such an epistemological prescription is a progressive step vis-à-vis the idea that theorists, irrespective of the information they have, must know as much as God (give or take some stochastic noise), and agents must know as much as the theorists (or, better, the theologians and God). However, such a methodology is not enough. First, it is bizarre to think of any isomorphism between the knowledge embodied in the observer and that embodied in the object of observation: it is like saying that ants or bees must know as much as the student of anthills and beehives! Second, in actual fact, human agents behave according to rules, routines and heuristics which have little to do with either "Olympic rationality" or even the "bounded" one (see Gigerenzer 2007; Gigerenzer and Brighton 2009; Dosi et al. 2018a). The big challenge here, largely still unexplored, concerns the regularities in what people, and especially organizations, do concerning e.g. pricing, investment rules, R&D, hiring and firing... Half a century ago, we knew much more about mark-up pricing, scrapping and expansionary investment and more, because there were micro inquiries asking firms "what do you actually do ..." This is mostly over, because at least since the 80's the conflict between evidence and theory was definitely resolved: theory is right, evidence must be wrong (or at least well massaged)! With that, for example, no responsible advisor would suggest that a PhD student undertake case studies, and no research grant would be asked for on the subject. However, for ABMs all this evidence is the crucial micro behavioral foundation, compared to which current "calibration exercises"Footnote 23 look frankly pathetic.

All this regarding behaviors. Another crucial tenet concerns interactions.

The evolutionary ABM methodology aims to build whatever macro edifice, whenever possible, upon actual micro interactions. These concern what happens within organizations (a subject beyond the scope of this introduction) and across organizations and individuals, that is, the blurred set of markets and networks. Admittedly, one is very far from any comprehensive understanding of "how markets work", basically for the same (bogus) reasons as above: if one can prove the existence of some market fixed point, why should one bother to show how particular market mechanisms lead there? And, again here, ABMs badly need evidence on the specific institutional architecture of interactions and their outcomes. Kirman and Vriend (2001) and Kirman (2010a) offer vivid illustrations of the importance of particular institutional set-ups. Fully-fledged ABMs also require fully-fledged markets, as explored for the labor market in Dosi et al. (2017b, 2017c). One should hopefully expect the exercise to be extended to other markets and networks.

Short of that, much more concise (and more black-boxed) representations come from network theory (e.g., Albert and Barabasi 2002) and social interactions (e.g., Brock and Durlauf 2001), which nonetheless allow for non-trivial interaction patterns.

Together with the evidence on the persistent heterogeneity and turbulence characterizing markets and economies, this focuses the investigation on out-of-equilibrium dynamics endogenously fueled by the interactions among heterogeneous agents.

All those building blocks are more than sufficient to yield the properties of complex environments. But what about evolution? Basically, evolution means the emergence of novelty, that is, new technologies, new products, new organizational forms, new behaviors,Footnote 24 etc., emerging at some point along the arrow of time, which were not there from the start. Formally, all this may well be captured by endogenous dynamics of the "fundamentals" of the economy or, better still, by an ever-expanding dimensionality of the state space and its dynamics (more in Dosi and Winter 2002 and Dosi and Virgillito 2017).

The basics

Every macroeconomic ABM typically possesses the following structure. There is a population (or a set of populations) of agents (e.g., consumers, firms, banks, etc.), possibly hierarchically organized, whose size may or may not change over time. The evolution of the system is observed in discrete time steps, t = 1,2,…. Time steps may be days, quarters, years, etc. At each t, every agent i is characterized by a finite number of micro-economic variables \(\underline {x}_{i,t}\) which may change across time (e.g. production, consumption, wealth, etc.) and by a vector of micro-economic parameters \(\underline {\theta }_{i}\) (e.g. mark-ups, propensity to consume, etc.). In turn, the economy may be characterized by some macroeconomic (fixed) parameters Θ (possibly mimicking policy instruments such as tax rates, the Basel capital requirements, etc.).

Given some initial conditions \(\underline {x}_{i,0}\) (e.g. wealth, technology, etc.) and a choice of micro and macro parameters, at each time step one or more agents are chosen to update their micro-economic variables. This may happen randomly or can be triggered by the state of the system itself. Agents picked to perform the updating stage may (or may not) collect the available information about the current and past state (i.e., micro-economic variables) of a subset of other agents, typically those they directly interact with. They may feed their knowledge about their own history, their local environment, as well as, possibly, the (limited) information they can gather about the state of the whole economy, into heuristics, routines, and other algorithmic behavioral rules. In truly evolutionary environments, technologies, organizations, behaviors and market links change as well.

After the updating round has taken place, a new set of micro-economic variables is fed into the economy for the next-step iteration: aggregate variables \(\underline {X}_{t}\) are computed by simply summing up or averaging individual characteristics. Once again, the definitions of aggregate variables closely follow those of statistical aggregates (i.e., GDP, unemployment, etc.).
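The structure just described can be condensed into a short simulation loop. The sketch below is a hypothetical toy, not a model from the literature: agents are firms whose only micro-economic variable is output, each with a fixed adjustment speed as its micro-economic parameter; the macro parameters Θ are a tax rate and a level of public spending; in each period a random subset of firms revises output using only the previous-period output of its two neighbours on a ring; and aggregate output is a plain sum. The behavioral rule is an illustrative assumption; when all firms produce the same amount it has a fixed point at public_spending / tax_rate per firm.

```python
import random
from dataclasses import dataclass


@dataclass
class Firm:
    output: float        # micro-economic variable x_{i,t}
    adjust_speed: float  # micro-economic parameter theta_i, fixed over time


def run(n_agents=100, periods=200, tax_rate=0.2, public_spending=20.0,
        activation_prob=0.5, seed=1):
    """Simulate the toy economy and return aggregate output X_t for t = 1..periods."""
    rng = random.Random(seed)
    # Heterogeneous initial conditions x_{i,0} and parameters theta_i.
    firms = [Firm(rng.uniform(50.0, 150.0), rng.uniform(0.1, 0.5))
             for _ in range(n_agents)]
    # Local interaction structure: each firm observes its two neighbours on a ring.
    neighbours = [((i - 1) % n_agents, (i + 1) % n_agents) for i in range(n_agents)]
    aggregates = []
    for _ in range(periods):
        previous = [f.output for f in firms]   # state at t-1, seen by every updating agent
        for i, firm in enumerate(firms):
            if rng.random() >= activation_prob:
                continue                       # this firm does not revise in period t
            left, right = neighbours[i]
            local_avg = (previous[left] + previous[right]) / 2
            # Heuristic: move part of the way toward a demand estimate built from
            # local information and the macro parameters Theta, plus idiosyncratic noise.
            target = (1.0 - tax_rate) * local_avg + public_spending + rng.gauss(0.0, 1.0)
            firm.output = max(0.0, previous[i] + firm.adjust_speed * (target - previous[i]))
        aggregates.append(sum(f.output for f in firms))   # X_t as a simple sum
    return aggregates


X = run()
print(f"aggregate output: first period {X[0]:.1f}, last period {X[-1]:.1f}")
```

With the default values, aggregate output settles close to n_agents * public_spending / tax_rate = 10,000, and calling run(public_spending=30.0) moves it toward 15,000. Changing a macro parameter thus shifts the aggregate only through the revised micro behaviors.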

Emergence and validation

The stochastic components possibly present in decision rules, expectations, and interactions will in turn imply that the dynamics of micro and macro variables can be described by some stochastic processes. However, non-linearities which are typically present in the dynamics of AB systems make it hard to analytically derive laws of motion. This suggests that the researcher must often resort to computer simulations in order to analyze the behavior of the ABM at hand. And even there, the detection of such “laws of motion” is no simple matter.
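Because such laws of motion are rarely available in closed form, results are typically read off many independent runs that differ only in their random seeds. The sketch below applies this Monte Carlo logic to a hypothetical one-variable nonlinear system with two stable states near -1 and +1, used only as a stand-in for an ABM. The ensemble of final states, not any single run, is the object of analysis, and the ensemble mean turns out to lie in a region that individual runs visit less often than the two stable regions.

```python
import random
import statistics


def double_well(seed, periods=500, a=0.1, noise=0.25, x0=0.0):
    """Hypothetical nonlinear stochastic system with two stable states near -1 and +1."""
    rng = random.Random(seed)
    x = x0
    for _ in range(periods):
        # Nonlinear drift pulls x toward +1 or -1; noise occasionally tips it across 0.
        x = x + a * (x - x ** 3) + rng.gauss(0.0, noise)
    return x


def monte_carlo(n_runs=400, **kwargs):
    """Final states of independent runs that differ only in their random seed."""
    return [double_well(seed, **kwargs) for seed in range(n_runs)]


finals = monte_carlo()
share_high = sum(x > 0 for x in finals) / len(finals)
near_wells = sum(0.5 < abs(x) < 1.5 for x in finals) / len(finals)
near_middle = sum(abs(x) <= 0.5 for x in finals) / len(finals)
print(f"mean final state {statistics.fmean(finals):+.3f}")
print(f"share of runs in the high regime {share_high:.2f}")
print(f"share near the two wells {near_wells:.2f} vs near the middle {near_middle:.2f}")
```

The same structure (a model function taking a seed, a loop over seeds, summary statistics over the ensemble) carries over unchanged when the toy is replaced by a full agent-based model.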

Simulation exercises allow one to study long-run statistical distributions, patterns and emergent properties of micro and macro dynamics. For instance, a macro agent-based model can endogenously generate apparently ordered patterns of growth together with business cycles and deep crises. In that, ABMs provide a direct answer to Solow's and Stiglitz's plea for macroeconomic models that can jointly account for long-run trajectories, short-run fluctuations and deep downturns (see Section 1). At the same time, such models can deliver emergent properties ranging from macroeconomic relationships such as the Okun and Phillips curves to microeconomic distributions of e.g. firm growth rates, income inequality, etc. Moreover, like every complex system, after crossing some critical threshold or tipping point the economy can self-organize along a different statistical path. This makes it straightforward to study phenomena like hysteresis in unemployment and GDP, systemic risk in credit and financial markets, etc.

More generally, the very structure of ABMs naturally allows one to take the model to the data and validate it against real-world observations. Most ABMs follow what has been called an indirect calibration approach (Windrum et al. 2007; Fagiolo et al. 2017), which also represents a powerful form of validation: the models are required to match a possibly wide set of micro and macro empirical regularities. Non-exhaustive lists of micro and macro stylized facts jointly accounted for by macro agent-based models are reported in Haldane and Turrell (2019) in this special issue and in Dosi et al. (2016a, 2018b). For instance, at the macro level, ABMs are able to match the relative volatility and comovements of GDP and other macroeconomic variables, and the interactions among real, credit and financial aggregates, including the emergence of deep banking crises. At the micro level, macro agent-based models are able to reproduce firm size and growth rate distributions, heterogeneity and persistent differentials in firm productivity, lumpy investment patterns, etc.
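Indirect calibration amounts to checking, run by run, whether simulated series reproduce a set of stylized facts. The sketch below assumes three illustrative business-cycle facts (investment more volatile than GDP, consumption less volatile, investment procyclical) measured on log growth rates; the function names and the use of growth rates rather than filtered cycles are simplifying assumptions of this sketch.

```python
import math
import statistics


def growth_rates(series):
    """Log-differences of a strictly positive time series."""
    return [math.log(b / a) for a, b in zip(series, series[1:])]


def correlation(x, y):
    """Pearson correlation coefficient (written out to avoid version-specific helpers)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def check_stylized_facts(gdp, consumption, investment):
    """Illustrative checklist of business-cycle facts for one simulated run."""
    g_y, g_c, g_i = (growth_rates(s) for s in (gdp, consumption, investment))
    sd_y = statistics.stdev(g_y)
    return {
        # Investment fluctuates more than GDP...
        "investment_more_volatile": statistics.stdev(g_i) / sd_y > 1.0,
        # ...while consumption fluctuates less
        "consumption_smoother": statistics.stdev(g_c) / sd_y < 1.0,
        # Investment comoves positively with GDP (procyclicality)
        "investment_procyclical": correlation(g_i, g_y) > 0.0,
    }
```

In an indirect calibration exercise, such checks would be run over Monte Carlo replications, retaining only the parameter configurations for which the facts hold in most runs.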

Many approaches to the validation of ABMs can in principle be taken, and the research frontier is expanding fast. A detailed survey of the most recent developments is provided in Fagiolo et al. (2017). First, on the input side, i.e. in order to bring modeling assumptions about individual behaviors and interactions more in tune with observed ones, ABMs rely on laboratory experiments (Hommes 2013; Anufriev et al. 2016), business practices (Dawid et al. 2019, in this special issue) and microeconomic empirical evidence (Dosi et al. 2016a).Footnote 25 ABMs can also be validated on the output side, by restricting the space of parameters and initial conditions to the range of values which allow the model to replicate the stylized facts of interest. For instance, Barde (2016) and Lamperti (2018) validate the output of agent-based models according to their replication of time-series dynamics, while Guerini and Moneta (2017) assess how well a macro ABM matches the causal relations in the data. Finally, some works estimate ABMs via indirect inference (see e.g., Alfarano et al. 2005; Winker et al. 2007; Grazzini et al. 2013; Grazzini and Richiardi 2015) or Bayesian methods (Grazzini et al. 2017).
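Output-side validation and simulation-based estimation share a common logic: choose parameters so that moments of simulated data come close to those of observed data. A minimal simulated minimum-distance sketch, a close relative of indirect inference, assuming a hypothetical AR(1) process as a stand-in for an ABM, two moments (variance and first-order autocorrelation), an identity weighting matrix and a grid search; it is not the estimator used in any of the cited papers.

```python
import random
import statistics


def simulate_ar1(rho, periods, seed):
    """Hypothetical stand-in for an ABM output series: x_t = rho * x_{t-1} + e_t."""
    rng = random.Random(seed)
    x, path = 0.0, []
    for _ in range(periods):
        x = rho * x + rng.gauss(0.0, 1.0)
        path.append(x)
    return path


def moments(series):
    """Summary statistics to match: variance and first-order autocorrelation."""
    m = statistics.mean(series)
    dev = [v - m for v in series]
    var = sum(d * d for d in dev) / len(dev)
    acf1 = sum(a * b for a, b in zip(dev, dev[1:])) / (len(dev) * var)
    return (var, acf1)


def estimate_rho(observed, grid, n_runs=10, periods=500):
    """Pick the grid value whose average simulated moments are closest to the observed ones."""
    target = moments(observed)
    best, best_loss = None, float("inf")
    for rho in grid:
        # Same seeds for every candidate value (common random numbers)
        sims = [moments(simulate_ar1(rho, periods, seed)) for seed in range(n_runs)]
        avg = [statistics.mean(col) for col in zip(*sims)]
        # Identity weighting for simplicity; real applications would weight the moments
        loss = sum((a - t) ** 2 for a, t in zip(avg, target))
        if loss < best_loss:
            best, best_loss = rho, loss
    return best
```

Reusing the same seeds for every candidate value keeps simulation noise from masquerading as differences between parameter values.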

Whatever the empirical validation procedure actually employed, an important domain of analysis concerns the sensitivity of the model to parameter changes, studied through different methods including Kriging meta-modeling (Salle and Yıldızoğlu 2014; Bargigli et al. 2016; Dosi et al. 2017c, 2017d, 2018c) and machine-learning surrogates (Lamperti et al. 2018c). Such methodologies provide detailed sensitivity analyses of macro ABMs, allowing one to gain a fairly deep descriptive knowledge of the behavior of the system.
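Kriging meta-models fit a Gaussian-process surrogate to model outputs evaluated at sampled parameter points. As a much simpler stand-in for that technique, the sketch below samples the parameter space uniformly, runs a hypothetical `toy_model` at each point, and ranks parameters by the absolute Pearson correlation between each input and the output. Unlike Kriging, this crude global screening only detects roughly monotonic effects; parameter names, bounds and the response function are illustrative assumptions.

```python
import math
import random
import statistics


def toy_model(params, seed):
    """Hypothetical stand-in for an ABM run: returns a scalar output statistic."""
    rng = random.Random(seed)
    # "market_size" is deliberately irrelevant, to show how screening flags it
    return 3.0 * params["innovation"] + 1.5 * params["imitation"] ** 2 + rng.gauss(0.0, 0.1)


def pearson(x, y):
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def screen_parameters(bounds, n_samples=200, seed=0):
    """Sample parameters uniformly within bounds, run the model, rank inputs by |correlation|."""
    rng = random.Random(seed)
    names = list(bounds)
    design, outputs = [], []
    for k in range(n_samples):
        point = {p: rng.uniform(*bounds[p]) for p in names}
        design.append(point)
        outputs.append(toy_model(point, seed=k))
    scores = {p: pearson([d[p] for d in design], outputs) for p in names}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)
```

A Kriging or machine-learning surrogate would replace the correlation step with a fitted response surface, which can also capture interactions and non-monotonic effects.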

Policy analysis

Once empirically validated, agent-based models represent a very powerful device for addressing policy questions under more realistic, flexible and modular set-ups. Indeed, they do not impose any strong theoretical consistency requirements (e.g., equilibrium, representative individual assumptions, rational expectations), and assumptions can be replaced in a modular way without impairing the analysis of the model.Footnote 26 Micro and macro parameters can be designed in such a way as to mimic real-world key policy variables like tax rates, subsidies, etc. Moreover, initial conditions might play a relevant role (somewhat equivalent to initial endowments in standard models) and describe different distributional setups concerning, e.g., incomes or technologies. In addition, the interaction and behavioral rules employed by economic agents can easily be devised so as to represent alternative institutional, market or industry setups. Since all these elements can be freely interchanged, one can investigate a huge number of alternative policy experiments and rules, whose consequences can be assessed either qualitatively or quantitatively. For example, one might statistically test whether, and by how much, a percentage change in a given consumption tax rate alters the moments (average, etc.) of the individual consumption distribution. Most importantly, all this can be done while preserving the ability of the model to replicate existing macroeconomic stylized facts, as well as microeconomic empirical regularities, which by construction cannot be matched by DSGE models. In their surveys, Fagiolo and Roventini (2012, 2017) and Dawid and Delli Gatti (2018) discuss the increasing number of ABMs developed to address fiscal policy, monetary policy, macroprudential policy, labor market governance, regional convergence and cohesion policies, and climate change.
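The consumption-tax example can be sketched as a Monte Carlo policy experiment: run the model many times under a baseline and an alternative tax rate, then test whether mean consumption differs. A minimal sketch, assuming a hypothetical household sector with log-normal incomes, a fixed propensity to consume, and independent seeds across scenarios so that Welch's t statistic is appropriate.

```python
import random
import statistics


def mean_consumption(tax_rate, seed, n_households=500, propensity=0.8):
    """Hypothetical toy run: average real consumption across heterogeneous households."""
    rng = random.Random(seed)
    incomes = [rng.lognormvariate(0.0, 0.5) for _ in range(n_households)]
    # A consumption tax raises the consumer price, so real consumption falls for given spending
    return statistics.mean(propensity * y / (1.0 + tax_rate) for y in incomes)


def policy_experiment(base_rate, new_rate, n_runs=100):
    """Monte Carlo comparison of two scenarios with Welch's t statistic on run-level means."""
    base = [mean_consumption(base_rate, seed) for seed in range(n_runs)]
    # Independent seeds for the alternative scenario
    new = [mean_consumption(new_rate, seed + n_runs) for seed in range(n_runs)]
    diff = statistics.mean(new) - statistics.mean(base)
    se = (statistics.variance(base) / n_runs + statistics.variance(new) / n_runs) ** 0.5
    return {
        "pct_change": 100.0 * diff / statistics.mean(base),
        "t_stat": diff / se,
    }
```

With the defaults, `policy_experiment(0.20, 0.22)` compares a 20% and a 22% consumption tax; a richer model would plug in its own run function and could test higher moments of the consumption distribution as well.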

5 Pushing forward the frontier: the contributions of the special issue

The papers contained in this special issue of the Journal of Evolutionary Economics contribute to enriching Agent-Based macroeconomics from different perspectives.

The already mentioned work of Haldane and Turrell (2019) is intimately connected to this introduction. Given the intrinsic inability of DSGE models to account for deep downturns and to reproduce many relevant empirical regularities, they argue in favor of ABMs as a tool for researchers and policy makers dealing with macroeconomic modeling, especially when addressing questions where complexity, heterogeneity, networks, and heuristics play an important role. Indeed, ABMs have been employed for years on precisely the topics that are bound to be taken on board by mainstream macroeconomic models, namely granularity and networks, heterogeneity of firms and households, financial intermediation, and policy interdependence (Ghironi 2018). The works in this special issue further contribute to these lines of research.Footnote 27

So, Dosi et al. (2019) employ the Schumpeter meeting Keynes (K+S) agent-based modelFootnote 28 to shed light on the granular origins of business cycles (Gabaix 2011). After showing that Gabaix’s “supply granularity” hypothesis (proxied by productivity growth shocks) is not robust at the empirical level, they find both empirical and theoretical evidence supporting “demand granularity”, grounded on investment growth shocks. The K+S model leads in turn to the identification of a sort of microfounded Keynesian multiplier impacting on both short- and long-run dynamics.

Relatedly, the interplay among firms’ production networks, technological change and macroeconomic dynamics is explored in Gualdi and Mandel (2019). More specifically, they develop an agent-based model where firms can perform both product and process innovation, interacting along the input-output structure determined by a time-varying network. The short-run dynamics of the economy is constrained by the production network, while the evolution of the system reflects the long-term impacts of competition and innovation. They find that the interplay between process and product innovations generates complex technological dynamics in which phases of process and product innovation successively dominate, affecting in turn the structure of the economy, productivity and output growth.

Ciarli et al. (2019) also consider structural change, but relate it to income distribution and growth in two alternative institutional regimes, “Fordism” vs. “Post-Fordism”, characterized by different labour relations, competition and consumption patterns (for earlier antecedents see Ciarli et al. 2010; Lorentz et al. 2016). Simulating a multi-sector ABM, they find that the Fordist regime yields lower unemployment and inequality and higher productivity and output growth than the Post-Fordist one, in tune with the findings of Dosi et al. (2017b, 2017c). The better performance of the Fordist regime stems from the coevolution of lower wage differences and bonuses, lower market concentration, and higher effective demand across sectors.

Heterogeneity of workers and firms is fundamental in the work of Caiani et al. (2019), which studies the emergence of inequality and its impact on long-run growth. They extend the model of Caiani et al. (2016) by adding endogenous technical change stemming from firms’ R&D investment, and heterogeneous classes of workers competing in segmented and decentralized labor markets. Simulation results show that emerging inequality has a negative impact on growth, as it reduces aggregate demand and, indirectly, slows down R&D investment. On the policy side, progressive taxation, interventions to sustain the wages of low- and middle-level workers, and lower wage flexibility can successfully tackle inequality, improving the short- and long-run performance of the economy.

Rengs and Scholz-Wäckerle (2019) study the impact of heterogeneous consumer behaviors on macroeconomic dynamics by developing a model with endogenously changing social classes. They find that bandwagon, Veblen and snob effects are emergent properties of the model, in which the co-evolution of consumption and firm specialization affects employment, prices and other macroeconomic variables.

Almost all the remaining contributions of the special issue focus on the complex relationship between financial intermediation and macroeconomic dynamics. Assenza and Delli Gatti (2019) build a hybrid ABM in which aggregate investment depends on the moments of the distribution of firms’ net worth (see also Assenza and Delli Gatti 2013). The impact of fiscal, monetary and financial shocks can be decomposed into a first-round effect, where the moments of the net worth distribution are kept constant, and a second-round one, where the moments can change, thus capturing the financial transmission mechanism. The second-round effect can in turn be decomposed into a representative-agent component and a heterogeneous-agent one. The first-round effect accounts for the bulk of the variation of the output gap, but the second-round one is negative, with a relevant heterogeneous-agent component.

The complex links between firms’ financial decisions, financial market instability and real dynamics are studied by Seppecher et al. (2019) and Meijers et al. (2019). The former extends the JAMEL model (Seppecher 2012; Seppecher and Salle 2015) and studies how decentralized markets select among firms that adaptively choose their debt strategy in order to finance their investment plans. In such a framework, downturns are recurrently deep, as markets are not able to select those firms with “robust” debt strategies, whose features endogenously change as the very result of the distribution of such strategies. Using a different model, Meijers et al. (2019) find that bankruptcies emerge as debts endogenously accumulate. In turn, the dynamics of financial assets has an impact on the real economy and affects the nature of business cycles.

In the presence of changing strategies, learning does not always stabilize the system. Indeed, as shown by Arifovic (2019) in an agent-based version of the Diamond-Dybvig model, when heterogeneous agents adapt according to individual evolutionary algorithms, higher levels of strategic uncertainty can lead to the emergence of sunspot bank-run equilibria. The simulated results generated by the ABM are well in tune with experimental evidence on the interactions among human subjects. In uncertain environments, learning also has relevant implications for monetary policy, possibly undermining the relationship between Central Bank transparency and credibility on inflation targeting rules, as found by Salle et al. (2019). In particular, their results show that transparency seems to work well in apparently stable periods akin to the “Great Moderation”, but may well undermine the Central Bank’s credibility in more uncertain environments.

The macroprudential framework is considered in Raberto et al. (2019), who analyze how different banking and regulatory policies can affect the likelihood of green financing. Using the Eurace model (Cincotti et al. 2010; Raberto et al. 2012; Teglio et al. 2017; Ponta et al. 2018), extended to account for capital goods with different degrees of energy efficiency, they study the impact of different capital adequacy ratios on the financial allocations of banks. They find that when the policy framework penalizes speculative lending relative to credit for firms’ investment, the energy efficiency of firms increases, supporting the transition to more sustainable growth.

Finally, Dawid et al. (2019) discuss how to improve the transparency, reproducibility and replication of the results generated by ABMs, employing the Eurace@Unibi model (Dawid et al. 2012, 2014, 2018a, 2018b; van der Hoog and Dawid 2017). They describe in detail all decision rules, interaction protocols and balance sheet structures used in the model, and provide a virtual appliance that allows simulation results to be reproduced. This is a very relevant contribution for the whole ABM community.

6 Ways ahead

In a visionary note, Frank Hahn (1991), one of the fathers of General Equilibrium theory, was very pessimistic about the economic theorizing of his time:

Thus we have seen economists abandoning attempts to understand the central question of our subject, namely: how do decentralized choices interact and perhaps get coordinated in favor of a theory according to which an economy is to be understood as the outcome of the maximization of a representative agent’s utility over an infinite future? Apart from purely theoretical objections it is clear that this sort of thing heralds the decadence of endeavor just as clearly as Trajan’s column heralded the decadence of Rome. It is the last twitch and gasp of a dying method. It rescues rational choice by ignoring every one of the questions pressing for attention. (p. 47-48 Hahn 1991)

But he also indicated a route for economics over the next hundred years:

Instead of theorems we shall need simulations, instead of simple transparent axioms there looms the likelihood of psychological, sociological and historical postulates. (...) In this respect the signs are that the subject will return to its Marshallian affinities to biology. Evolutionary theories are beginning to flourish, and they are not the sort of theories we have had hitherto. (...) But wildly complex systems need simulating. (p. 49 Hahn 1991)