Abstract

This paper aims to offer a testing framework for the structural properties of the Brownian motion of the underlying stochastic process of a time series. In particular, the test can be applied to financial time-series data and discriminate among the lognormal random walk used in the Black-Scholes-Merton model, the Gaussian random walk used in the Ornstein-Uhlenbeck stochastic process, and the square-root random walk used in the Cox, Ingersoll and Ross process. Alpha-level hypothesis testing is provided. This testing framework is helpful for selecting the best stochastic processes for pricing contingent claims and risk management.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

One approach to testing for lognormality of financial time series is to analyze the distribution of the returns. If a time series follows a lognormal random walk, then the continuously compounded returns \(\ln (S_{t}/S_{t-1})\) where \(S_t\) is the price process must be normally distributed. This approach has been used by Becker (1991) for interest rate changes. There are many ways to test the normality of a distribution such as the Kolmogorov-Smirnov test and the Anderson-Darling test (Lehmann 2010). Such tests may be used to accept or reject the hypothesis of normally distributed returns given a certain significance level.

Inferring lognormality from a normality test of the distribution of returns has some weaknesses. For example, in many cases a Gaussian random walk or square-root random walk may exhibit close to normally distributed returns. Accordingly, testing for normality of returns is not enough to infer lognormality of a time series. In addition, returns of financial time series exhibit departures from normality such as anomalies of the skewness and kurtosis, outliers and, for equity and market indexes, fat tails (Rachev et al. 2005, 2007). Therefore, a normality test may give a negative result at the same time that we accept that the underlying process is lognormal overall. These weaknesses motivate the development of tests that do not rely on the hypothesis that returns are normally distributed.

The present paper contributes to the literature by providing a testing framework for the structural properties of the Brownian motion of the underlying stochastic process of a time series. Stochastic processes are used in probability theory to model the evolution of price processes over time and for option valuation. The assumption used in the Black-Scholes-Merton model for option pricing is that the underlying price process follows a lognormal random walk. The lognormal random walk, which is expressed as \(dS_t = \mu S_t dt + \sigma S_t dW_t\), has Brownian motion of the form \( \sigma S_t dW_t\), where \(\mu \) and \(\sigma \) are model parameter, and \(W_t\) is the Wiener process. Another example is the Ornstein-Uhlenbeck stochastic process, which is expressed as \(dS_t=\lambda \left( \mu - S_t \right) dt + \sigma dW_t\), where \(\lambda \), \(\mu \), and \(\sigma \) are model parameters. In this stochastic differential equation, the Brownian motion is a Gaussian random walk of the form \(\sigma dW_t\). In the Cox, Ingersoll and Ross process, which is expressed as \(dS_t = \lambda \left( \mu - S_t\right) dt + \sigma \sqrt{S_t} dW_t\), the Brownian motion is a square-root random walk of the form \(\sigma \sqrt{S_t}dW_t\). For the general form of stochastic processes, \(dS_t = \mu (S_t,t) dt + \sigma _k S_t^k dW_t\), the present study proposes a method to estimate k which is the order of the Brownian motion.

Section 2 introduces the modeling assumptions and the statistics that are used for the present study’s testing framework. In this section, we discuss the no-drift assumption used to analyze financial time series, and introduce volatility estimators as the test is based on these measures. The linear model with intercept that we use in our testing framework is presented, and a proof of convergence of the estimator of the order of the Brownian motion is provided. In Sect. 3, we present the hypothesis-testing method used to accept or reject the null hypothesis (lognormal random walk) and the alternative hypotheses (Gaussian and square-root random walks). In Sect. 4, we run some Monte Carlo simulations to compare the usual method for testing lognormality based on the normality of the returns and the present study’s testing framework. In this section, we also assess the power of the testing framework (i.e. the rate of type I and type II errors). In Sect. 5, we apply the test to the S&P 500 and VIX indices and discuss the results. In this section, we also compare the linear model with intercept and without intercept. In Sect. 6, we offer our conclusion.

2 Model

In our analysis, because daily time increments are generally used for the purpose of analyzing time-series data, the drift term of the stochastic differential equation is considered to be negligible. Under risk-neutral measure, the share price is equal to the discounted expectation of the share price. Option valuation is based on an equivalent martingale measure where, in order to prevent market arbitrage, the drift is equal to the risk-free rate. If we assume a risk-free rate of \(5\,\%\) annual with 250 trading days per year, the daily drift would be two basis points, which is very small compared to daily price variations. Also, markets which are tested positive to mean reversion generally exhibit trends that persist over longer periods of several weeks or months. Such a mean-reversion effect may be considered negligible compared to price variations on a daily basis. Hence, for a lognormal random walk, the stochastic differential equation without drift is as follows:

where \(S_t\) is the asset price, \(\sigma \) is the volatility, and \(dW_t\) (the Brownian term) is a \(\mathcal {N} (0,1)\) variable.

Let us consider the expected value of the absolute value of \(dS_t/S_t\) in Eq. (1). We get:

To evaluate \(\mathbb {E}\left( \left| dW_t\right| \right) \), we need to integrate the density function of \(\mathcal {N} (0,1)\) multiplied by the absolute value of the integration variable between \(-\infty \) and \(\infty \), which is equal to twice the integral of the density function multiplied by the integration variable between 0 and \(\infty \):

Hence, the expected value of the absolute value of \(dW_t\) is as follows:

To develop a test for the structural properties of the Brownian motion of the underlying stochastic process of a time series, we need to introduce volatility estimators. The following estimator of the volatility is obtained from Eqs. (2) and (4):

where \(\hat{\sigma _1}\) is the volatility estimator for n returns \( r_i = \ln \frac{S_i}{S_{i-1}}\).

Equation (5) estimates volatility based on the average value of absolute returns assuming that the returns are normally distributed. In contrast, the usual approach to computing volatility calculates a standard deviation from the sum of squared returns:

Equation (6) makes no assumptions about return distribution.

Because volatility is a measure of the variation of the price and infinitesimal price variations are scaled by the price of the process for a lognormal random walk (Eq. 1), let us do a linear regression of the absolute value of the differences \(\left| dS_t\right| =\left| S_t-S_{t-1}\right| \) versus \(S_t\). The regression model is \(\left| S_i-S_{i-1}\right| = \alpha + \beta S_i + \epsilon _i \), where \(\alpha \) is the intercept, \(\beta \) the slope, and \(\epsilon _i\) is the error term with zero mean and finite variance that is assumed to be Gaussian. Then let us compute the following parameter:

where \(\beta \) is the slope of the linear regression.

The estimator \(\hat{\sigma _3}= \sqrt{\frac{\pi }{2}} \; \hat{\beta }\) is also an estimator of the volatility for a lognormal random walk. The estimator of the slope of the linear regression with intercept (Kenney 1947)is as follows:

For a lognormal random walk, \(\sigma _3\) will converge toward the volatility \(\sigma \) of the time series (estimators \(\hat{\sigma _1}\) or \(\hat{\sigma _2}\)); whereas for a Gaussian random walk of the form \(dS_t=\sigma _{Gauss} dW_t\),Footnote 1 \(\sigma _3\) will converge toward 0. For a square-root random walk of the form \(dS_t= \sigma _{sqrt} \sqrt{S_t} dW_t\),Footnote 2 \(\sigma _3\) will converge toward half of \(\sigma \). The proof for convergence is provided below.

Let us show that for the general form of stochastic processes \(dS_t = \sigma _k S_t^k dW_t\), the ratio \(\sigma _3/\sigma \), where \(\sigma \) is the volatility of the time series, converges toward k. Let us set \(y=\left| dS\right| \); hence, the slope of |dS| versus S for arbitrary t is as follows:

From the scaling relationship:

we get:

Hence:

Finally, the expected value of \(\beta _k\) is as follows:

Hence, \(\mathbb {E} (\sigma _3)= k {\sigma }\) and the ratio \(\sigma _3/\sigma \) converges toward k, which is equal to one for the lognormal random walk, \(\frac{1}{2}\) for the square-root random walk, and zero for the Gaussian random walk.

3 Hypothesis testing

The parametric test of lognormality for a given time series and the alternatives (Gaussian and square-root random walks) is based on the Student’s t-test for the slope of a linear regression (Casella and Berger 2002). The null hypothesis \(H_0\) is that the underlying stochastic process of the time series is lognormal. The alternative hypothesis \(H_1\) is that the underlying stochastic process is a square-root random walk. The alternative hypothesis \(H_2\) is that the underlying stochastic process is a Gaussian random walk. Let us assume that the error terms of the linear regression of \(|dS_t|\) versus \(S_t\) used for the estimation of \(\hat{\sigma _3}\) are Gaussian, centered in zero, and of finite variance. If a time series is lognormal, then the slope of the linear regression \(\beta \) must converge toward \(\sqrt{\frac{2}{\pi }} \sigma \). For a square-root random walk, the slope of the linear regression \(\beta \) must converge toward \(\frac{1}{2} \sqrt{\frac{2}{\pi }} \sigma \); and, for a Gausian random walk, the slope converges toward zero (see Table 1).

Hence, for each hypothesis, we compute the value of the test t-statistic as follows:

where the standard error of the slope of the linear regression \(\beta \) is as follows:

where \(\bar{S}\) is the average of the \(S_i\) observations over the sample n, and MSE is the mean square error of the linear regression, which is the sum of square errors divided by \(n-2\):

where \(\epsilon _i\) are the error terms of the linear regression \(y_i= \alpha + \beta x_i + \epsilon _i\), with n observations.

To compute \(\beta _0\) in Eq. (14), we shall use \(\hat{\sigma _2}\) as this is the standard estimator to compute the volatility of a time series. The p-value is determined by referring to a Student’s t-distribution with \(t_{n-2}\) degrees of freedom. If the p-value is smaller than the significance level \(\alpha \), we reject the corresponding hypothesis; if it is larger than \(\alpha \), we accept the corresponding hypothesis at the significance level \(\alpha \).

4 Simulations

To assess the present test, let us run some Monte Carlo simulations with different stochastic processes, respectively the lognormal random walk, the Gaussian random walk, and the square-root random walk. For comparability, we need to scale the \(\sigma _{Gauss}\) and \(\sigma _{sqrt}\) of the Gaussian and square-root random walk. Let us take \(\sigma _{Gauss}=S_0 \sigma \), and \(\sigma _{sqrt}=\sqrt{S_0} \sigma \), where \(S_0\) is the initial asset price of the simulation. For the Monte Carlo simulation, let us take \(S_0=100\), \(\sigma =0.15\), over a time horizon T of 2 years with daily time increments.

In the simulations of Table 2, the volatility estimators have been scaled to annual basis using the \(\sqrt{dt}\) scaling factor assuming 250 trading days in a year. The simulation shows that the parameter \(\sigma _3\) converges toward its expected value in all three scenarios. Estimators \(\hat{\sigma _1}\) and \(\hat{\sigma _2}\) converge toward the volatility in all three scenarios. Estimator \(\hat{\sigma _2}\) is a consistent estimator, and \(\hat{\sigma _1}\) appears to converge toward the volatility for the Gaussian and square-root random walks due to the law of large numbers. Estimator \(\hat{\sigma _3}\) converges toward the volatility for the lognormal random walk only; however, it is less efficient than \(\hat{\sigma _1}\) and \(\hat{\sigma _2}\) as it requires a larger sample size in order to converge. For the purpose of running the simulations over longer time horizons (e.g. TFootnote 3 of 20 years), we must add a constraint on the minimum value that S can reach; otherwise, the estimators \(\hat{\sigma _1}\) and \(\hat{\sigma _2}\) become unstable when S is close to zero for the Gaussian and square-root random walk. A floor price of 1 unit when \(S_0\) is equal to 100 would be adequate.

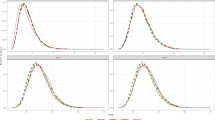

To show that testing for normality of the returns is not sufficient to make inferences about the lognormality of a time series, let us run some Monte Carlo simulations under different scenarios and compute the percentage rejection of the null hypothesis (i.e. normally distributed returns) using the Kolmogorov-Smirnov test at a significance level of 5\(\,\%\) over 10,000 simulation paths with daily time increments (see Table 3).

For the simulations with the 2-year horizon, the test fails to reject the normally distributed-return hypothesis for the Gaussian and square-root random walks; whereas, for the 20-year horizon, the rejection rate is 9.9\(\,\%\) for the square-root random walk and 42.5\(\,\%\) for the Gaussian random walk. What accounts for this result is that, for the 2-year horizon, the variations of S are small. Hence, returns from the stochastic differential equation are almost normally distributed for both the Gaussian and square-root random walks. For the 20-year horizon, the variations of S are larger (S spans a larger range of values); therefore, we start to observe some deviations from normality for the returns.

To evaluate the power of the proposed hypothesis-testing framework, let us run some Monte Carlo simulations under the different scenarios and compute the percentage of type IFootnote 4 and type IIFootnote 5 errors for \(H_0\), \(H_1\) and \(H_2\) with the 5-year and 20-year horizons at a significance level of 5\(\,\%\) over 10,000 simulation paths with daily time increments (see Tables 4, 5). For these simulations, we take \(S_0=100\), \(\sigma =0.15\), and use the linear model with intercept introduced in the Sect. 2. When applying the test to financial data, we also considered the linear model without intercept (see Sect. 5).

For the simulation with the 5-year horizon, type I errors are in a range of 5.0 to 5.8\(\,\%\) for the three stochastic processes. Type I error is the highest for the square-root random walk with the 20-year horizon where it is 20.2\(\,\%\). Type II errors are quite important for the simulations with the 5-year horizon. In 26.6\(\,\%\) of the cases in the simulation, the test failed to reject \(H_1\) for the lognormal random walk. In case of the Gaussian random walk, that happened 27.1\(\,\%\) of the time. For the square-root random walk, the simulation failed to reject \(H_0\) 26.7\(\,\%\) of the time and \(H_2\) 25.8\(\,\%\) of the time. Type II errors are always below 0.2\(\,\%\) for the simulations with the 20-year horizon. We can see that the power of the test is greatly improved when compared to the usual approach based on the testing of the normality of the returns. The power of the test is high enough in order to say one can accept \(H_0\) at the given significance level provided the sample size is large, whereas in the usual test the power of the test is not good enough to reject \(H_0\) in many cases.

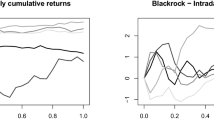

5 Analysis with actual data on the S&P 500 and the VIX indices

Now, let us apply the present study’s hypothesis-testing framework to financial data for the Standard & Poor’s 500 (S&P 500) and VIX indices. The S&P 500 is a US stock index of 500 large companies having common stock listed on the NYSE or NASDAQ. The stocks are selected by the S&P Index Committee based on market capitalization, liquidity and industry grouping (among other factors), and are weighted according to the total market value of the outstanding shares.

The VIX is the trademark for the Chicago Board Options Exchange Market Volatility Index which is a measure of the implied volatility of S&P 500 index options. The VIX index was developed for hedging changes in volatility (Brenner and Galai 1989). It is calculated by the Chicago Board Options Exchange using at-the-money and out-of-the-money put and call options for the front month and second month of expiration. The VIX aims to measure the 30-day implied volatility of the S&P 500 index.

For our purpose, let us compare the results with two distinct models for the linear regression of \(|dS_i|\) versus \(S_i\), respectively: the linear model with intercept \(|S_i - S_{i-1}| = \alpha +\beta S_i + \epsilon _i\); and the linear model without intercept \(|S_i - S_{i-1}|= \beta S_i+ \epsilon _i\), where \(\alpha \) is the intercept, \(\beta \) the slope and \(\epsilon _i\) the error term which has zero mean and finite variance. Note that the linear model without intercept is not appropriate to test the Gaussian random walk hypothesis, because for a Gaussian random walk the slope \(\beta \) should converge toward zero and the absolute price differences are always lying above the x-axis.

For the analysis of the S&P 500, we used daily time series for the period of 01/02/1980 to 12/31/2004. We excluded the data after 2004 as the present study’s test shows some departure from lognormality for the last decade. Taking the full dataset up until the present time would lead to rejection of \(H_0\) at 5 % significance level for the S&P 500 index; whereas the test accepts \(H_0\) for the linear model without intercept for the period considered. For the analysis of the VIX index, we used daily time series for the period of 01/02/1990 to 01/16/2015. The main statistical parameters for the linear regression, volatility measures and the order of the Brownian motion are displayed in Table 6 for the S&P 500 index and in Table 7 for the VIX index.

The order k of the Brownian motion (see Tables 6 and 7) is close to one for both the S&P 500 and VIX indices with the linear model without intercept which does suggest a lognormal random walk as the underlying stochastic process. The t-statistic for the Student’s t-test of the slope of the linear regresion is calculated for \(H_0\), \(H_1\) and \(H_2\) for the S&P 500 index in Table 8 and for the VIX index in Table 9.

The t-critical statistic for the t-Student’s distribution for the large sample size considered (n \(=\) 6510 for the S&P 500 index test and n \(=\) 6311 for the VIX index test) at 5 % significance level is 1.960 for both sets. Hence, the test rejects all three hypotheses considered (\(H_0\), \(H_1\) and \(H_2\)) for both the S&P 500 and the VIX index with the linear model with intercept. For the linear model without intercept, the null hypothesis \(H_0\) (i.e. lognormal random walk ) is accepted (\( t^* < \) t-critical) for both the S&P 500 and VIX indices at 5 % significance level; whereas, the alternative hypotheses \(H_1\) and \(H_2\) are rejected.

6 Conclusion

The present study presents a testing framework for the structural properties of the underlying stochastic process of a time series. This test aims to discriminate among stochastic processes, in particular, among the lognormal random walk used in the Black-Scholes-Merton model, the Gaussian random walk used in the Ornstein-Uhlenbeck stochastic process, and the square-root random walk used in the Cox, Ingersoll and Ross process. The test is based on the statistical parameters \(\sigma _3\) (or equivalently the slope \(\beta \) of the linear model) and the volatility \(\sigma \) of the time series estimated by \(\hat{\sigma _1}\) and \(\hat{\sigma _2}\). For a lognormal random walk, \(\sigma _3\) converges toward \(\sigma \). For a Gaussian random walk, \(\sigma _3\) converges toward zero; and, for a square-root random walk, \(\sigma _3\) converges toward half of \(\sigma \). Finally, an \(\alpha \)-level hypothesis test is provided to test for the lognormality of a time series versus the alternatives (Gaussian and square-root random walks). We analyzed the S&P 500 and VIX indices. The test accepted the lognormality hypothesis at 5 % significance level for both indices when using the linear model without intercept, while it rejected the alternative hypotheses. When using the linear model with intercept, the test rejected all three hypotheses (lognormal, square-root and Gausian random walks) at 5 % significance level. Non-stationary effect and volatility spikes may be responsible for the negative results with the linear model with intercept. For the purpose of analyzing financial time series which do not follow a Gaussian random walk, we suggest using the linear model without intercept. In conclusion, practitioners may find the present test useful for selecting which stochastic processes they use for contigent-claim valuation and risk management. The test can be applied to any asset class so long as we have an observable, as shown for an equity and a volatility index.

Notes

\(\sigma _{Gauss}\) is the volatility parameter of the Gaussian random walk which has been scaled for a Brownian motion of order zero with Eq. (11).

\(\sigma _{sqrt}\) is the volatility parameter of the square-root random walk which has been scaled for a Brownian motion of order \(\frac{1}{2}\) with Eq. (11).

T is the time horizon in years, and consists of 250 trading days per year for the sample size.

A type I error is the incorrect rejection of a true hypothesis.

A type II error is a failure to reject a false hypothesis.

References

Becker DN (1991) Statistical tests of the lognormal distribution as a basis for interest rate changes. Trans Soc Actuar 43:7–72

Brenner M, Galai D (1989) New financial instruments for hedging changes in volatility. Financ Anal J 45(4):61–65

Casella G, Berger RL (2002) Statistical inference. Duxbury advanced series, 2nd edn. Cengage learning India Private Limited, Delhi, p 557

Kenney JF (1947) Mathematics of statistics (part one), 3rd edn. D. Van Nostrand Company, Inc, Toronto, New York, p 143 [Reprinted in 1952]

Lehmann E, Romano J (2010) Testing statistical hypotheses. Springer, New York

Rachev ST, Stoyanov SV, Biglova A, Fabozzi FJ (2005) Data analysis and decision support. springer, Berlin

Rachev ST, Stoyanov SV, Wu C, Fabozzi FJ (2007) Empirical analyses of industry stock index return distributions for taiwan stock exchange. Ann Econ Finance 8:21–31

Acknowledgments

The author is grateful to Professor Anthony Davison from the Institute of Mathematics at the EPFL, Professor Thomas Mikosch from the Department of Mathematics at the University of Copenhagen and anonymous referees for their helpful comments.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Heymann, Y. A test of financial time-series data to discriminate among lognormal, Gaussian and square-root random walks. Comput Stat 31, 1373–1383 (2016). https://doi.org/10.1007/s00180-015-0630-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-015-0630-6