Abstract

The goal of this paper is to introduce local length scale control in an explicit level set method for topology optimization. The level set function is parametrized explicitly by filtering a set of nodal optimization variables. The extended finite element method (XFEM) is used to represent the non-conforming material interface on a fixed mesh of the design domain. In this framework, a minimum length scale is imposed by adopting geometric constraints that have recently been proposed for density-based topology optimization with projection filters. Besides providing local length scale control, the modified constraints have two advantages. First, they provide a computationally inexpensive remedy for the instabilities that often appear in level set XFEM topology optimization. Second, using the same geometric constraints in both the density-based and the level set optimization enables a more unbiased comparison between the two methods. These features are illustrated in a number of well-known benchmark problems for topology optimization.


1 Introduction

Topology optimization has become a popular technique for design optimization due to its capability to provide highly efficient and innovative solutions during the early stages of the design process. This has led to the development of different strategies for topology optimization in the last two decades. A number of review papers (Deaton and Grandhi 2013; Sigmund and Maute 2013; van Dijk et al. 2013) present an excellent overview of these developments. The work in this paper focuses on the two most prominent methods: the density-based SIMP method (Bendsøe and Sigmund 2004) and level set methods (Sethian and Wiegmann 2000; Wang et al. 2003; Allaire et al. 2004).

The manufacturability of the optimized design remains an important challenge in topology optimization. Incorporating aspects of the manufacturing process in the optimization can be valuable in this respect (Jansen et al. 2013; Zhou et al. 2014). On the other hand, the ability to control the minimum and maximum length scales in the design is also crucial for obtaining a manufacturable design (Lazarov et al. 2016). Moreover, it has been recognized that a minimum length scale can improve the robustness of the design with respect to geometric imperfections (Wang et al. 2011; Schevenels et al. 2011). Geometric limitations are also useful for avoiding numerical instabilities such as checkerboard patterns in the finite element approximation (Sigmund and Petersson 1998).

In the framework of level set methods, a number of authors (Luo et al. 2008; Chen et al. 2008) have proposed geometric constraints based on energy functionals in order to impose a minimum length scale in the design. These energy functionals are typically added as a penalty term to the objective function. More recently, Guo et al. (2014) developed a method for feature size control based on the concept of the structural skeleton which is defined by the signed distance function of the material domain. Allaire et al. (2016) also use the properties of the signed distance function to impose thickness constraints in the level set method. These approaches provide accurate size control, but the sensitivity analysis of functionals including distance functions becomes quite involved.

The minimum length scale issue has received more attention in the density-based approach to topology optimization. Petersson and Sigmund (1998) proposed the so-called MOLE constraint to impose a minimum member thickness. Later, filter-based solutions became popular, starting with the original density filters (Bourdin 2001; Bruns and Tortorelli 2001), which were followed by nonlinear projection filters (Guest et al. 2004; Xu et al. 2010). An overview of different filter-based solutions was presented by Sigmund (2007). In general, these filters are only capable of introducing a minimum feature size in a single phase, either material or void. Robust projection filters (Wang et al. 2011; Schevenels et al. 2011) are capable of imposing a minimum length scale in both material and void simultaneously. However, the computational cost of these filters is significant as they require multiple finite element analyses per design iteration. Zhou et al. (2015) proposed a set of computationally inexpensive geometric constraints that essentially achieve the same geometric effect as the robust filters. The principal idea behind these constraints is that a minimum length scale can be ensured if the topology of the design does not change when the interface between material and void is perturbed within specified limits.

The constraints proposed by Zhou et al. (2015) have several favorable features, most notably simplicity, low computational cost, and decent convergence properties. This paper therefore presents an adaptation of these constraints for an explicit level set extended finite element method (LS XFEM) for topology optimization. An immediate advantage of adapting the density-based formulation to the level set framework is that it enables a comparative analysis of both methods with equivalent geometric constraints. A number of quantitative comparisons of the LS XFEM and the density-based SIMP method have been presented in the literature, most notably by Wei et al. (2010) and Villanueva and Maute (2014), but these works relied on different regularization techniques for the two methods.

Note that a similar adaptation of the density-based constraints was recently proposed by Dunning (2018). However, there are two important differences between the constraints in this paper and the formulation of Dunning (2018). First, the constraints proposed in this work are defined on the level set domains rather than using regularized indicator functions for the material and void domains. This leads to a formulation and sensitivity analysis that remains closer to the nature of the level set method. Second, in our explicit method, the level set is given as a function of the optimization variables by means of a filtering operation similar to density filtering, and the constraints are applied directly on this filtered level set instead of on a newly introduced density field. As a result, the proposed constraints do not require any additional filtering compared to the original level set formulation. Furthermore, this improves the similarity with the original density-based formulation and simplifies the adjustments of the original formulation as the same values for the constraint parameters can be retained.

The paper is organized as follows. The following section briefly summarizes the main aspects of topology optimization. As already mentioned, the developments in this paper allow the explicit level set method to be compared with the density-based method. Although the main developments of this work are related to the former, both methods for topology optimization are discussed in order to keep the paper self-contained. Afterwards, the adaptation of the original geometric constraints by Zhou et al. (2015) to the level set method is presented. Finally, the application of the constraints is demonstrated in some benchmark problems. These examples also serve as a comparison of the level set and density methods.

2 Topology optimization

Topology optimization performs structural optimization with almost complete design freedom by optimizing the distribution of a limited amount of material in a given design domain D. In general, the material distribution can be represented by the indicator function \(\rho (\mathbf {x})\) defined on the domain D:

\[\rho (\mathbf {x}) = \begin{cases} 1 & \text {if } \mathbf {x} \in {\Omega } \\ 0 & \text {if } \mathbf {x} \in D \setminus {\Omega } \end{cases} \tag{1}\]

where the open set \({\Omega }\) represents the material domain.

In topology optimization of linear elastic problems, the state variables \(\mathbf {u}\), i.e., the displacements of the structure, are found as the solution of the Navier-Cauchy equations. The equivalent weak form can be expressed on the complete design domain D as follows:

\[\int _{D} \boldsymbol {\epsilon }(\mathbf {v}) : \mathbf {C}(\rho ) : \boldsymbol {\epsilon }(\mathbf {u}) \, \mathrm {d}{\Omega } = \int _{{\Gamma }_{\mathrm {n}}} \mathbf {v} \cdot \bar {\mathbf {t}} \, \mathrm {d}{\Gamma } \quad \forall \mathbf {v} \in \mathcal {U} \tag{2}\]

where \(\boldsymbol {\epsilon }\) is the linear strain operator and \(\mathcal {U}\) is the set of admissible displacements satisfying the homogeneous Dirichlet boundary conditions. Furthermore, to simplify the presentation, it is assumed that there are no body loads and that the non-homogeneous Neumann boundary \({\Gamma }_{\mathrm {n}}\) with external tractions \(\bar {\mathbf {t}}\) is fixed in the optimization. When optimizing a structure consisting of a single material embedded in the void, the constitutive tensor \(\mathbf {C}\) is expressed as a function of \(\rho \) as follows:

\[\mathbf {C}(\rho ) = \rho \, \mathbf {C}^{0} \tag{3}\]

where \(\mathbf {C}^{0}\) is the Hookean tensor of the material.

This work is concerned with the solution of the following optimization problem:

where the constraint \(G_{\text {vf}}\) limits the volume of solid material to a fraction \(\epsilon _{\text {vf}} \in (0,1)\) of the volume \(V_{\mathrm {D}}\) of the design domain D. The total volume of material is defined as follows:

\[V = \int _{D} \rho \, \mathrm {d}{\Omega } \tag{5}\]

Furthermore, in the examples, the following specific type of objective functions is considered:

\[F = \int _{{\Gamma }_{\mathrm {f}}} \mathbf {f} \cdot \mathbf {u} \, \mathrm {d}{\Gamma } \tag{6}\]

where the boundary \({\Gamma }_{\mathrm {f}} \subset {\Gamma }\) is again fixed during the optimization. The minimum compliance problem is retrieved by using the non-homogeneous Neumann boundary \({\Gamma }_{\mathrm {n}}\) as \({\Gamma }_{\mathrm {f}}\) and setting \(\mathbf {f}\) equal to the external load \(\bar {\mathbf {t}}\) in the objective function (6).

With the binary-valued \(\rho \) as the independent optimization variables, the problem (4) represents a combinatorial optimization problem which becomes very hard to solve, even at modest problem sizes. This issue is often considered to be the main challenge in topology optimization. In this paper, two of the most prominent solution strategies are considered: an explicit level set XFEM and the density-based SIMP method. The former method is often regarded as a geometric view on the problem in which the structural topology is optimized by considering variations of the shape of the material domain, while the latter method transforms the optimization problem into a sizing problem. Although both methods are based on different concepts, ultimately they lead to quite similar optimization problems (Sigmund and Maute 2013). Both methods are briefly summarized in the following. The level set method is considered first as the main developments in this paper are related to this approach.

2.1 Level set XFEM

2.1.1 Level set parametrization

In level set methods, the material domain \({\Omega }\) is characterized by means of the level set of a scalar function \(\phi \) defined on the design domain D as follows:

In a parametric level set approach (van Dijk et al. 2013), the level set \(\phi \) is expressed as an explicit function of the vector of independent optimization variables \(\mathbf {p}\). On the other hand, it is now straightforward to express the indicator function \(\rho \) as a function of the level set \(\phi \), and hence the optimization variables \(\mathbf {p}\), by means of the Heaviside function H:

In this work, the level set function is discretized on the same finite element mesh of the domain D that is used to solve the elasticity problem (2) during the optimization. In the framework of the XFEM (see Section 2.1.2), it is common to use the regular first-order finite element shape functions \(N_{i}\) in this discretization:

\[\phi (\mathbf {x}) = \sum _{i = 1}^{n} N_{i}(\mathbf {x}) \, \phi _{i} \tag{9}\]

where n is the total number of nodes in the mesh of the design domain D. Similarly, an optimization variable \(p_{i}\) is attached to each node of the mesh which leads to n independent optimization variables.
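
As a minimal sketch of the discretization (9), the following Python snippet evaluates the level set inside a single four-node quadrilateral from its nodal values using first-order (bilinear) shape functions. The reference coordinates `xi` and `eta`, the node ordering, and the function names are illustrative assumptions and are not taken from the original formulation.

```python
import numpy as np

def shape_functions_q4(xi, eta):
    """Bilinear shape functions of a 4-node quadrilateral on [-1, 1]^2.

    Assumed node order: (-1,-1), (1,-1), (1,1), (-1,1).
    """
    return 0.25 * np.array([
        (1 - xi) * (1 - eta),
        (1 + xi) * (1 - eta),
        (1 + xi) * (1 + eta),
        (1 - xi) * (1 + eta),
    ])

def interpolate_level_set(phi_nodes, xi, eta):
    """Evaluate phi = sum_i N_i * phi_i at a point of the reference element."""
    return float(shape_functions_q4(xi, eta) @ np.asarray(phi_nodes, dtype=float))

# Nodal values that change sign across the element: the interface phi = 0
# passes through the element center in this example.
phi_nodes = [-0.5, -0.5, 0.5, 0.5]
center_value = interpolate_level_set(phi_nodes, 0.0, 0.0)
```

Because the shape functions sum to one and are linear along each edge, the zero level set inside an element is fully determined by the four nodal values, which is what allows one optimization variable per node.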

The nodal level set values \(\phi _{i}\) are expressed in terms of the optimization variables \(\mathbf {p}\) by means of a filter operation (Kreissl and Maute 2012):

where the nodal weights \(v_{j}\) and the filter coefficients \(\kappa _{ij}\) are computed as follows:

where \(\mathbf {x}_{i}\) are the coordinates of node i. The filter operation (10) is equivalent to the density filter used in density-based topology optimization (see Section 2.2). It is useful for smoothing the level set function and improving the overall convergence of the optimization algorithm by diffusing the parametric shape sensitivities (Villanueva and Maute 2014). Note, however, that in contrast to the density filters used in the SIMP method, the filter operation (10) does not provide any significant regularization of the solid domain \({\Omega }\).
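
The filter operation can be sketched as a row-normalized weighted average of the nodal variables. The snippet below is only an illustration under stated assumptions: it uses the linearly decaying (cone) kernel \(\kappa _{ij} = \max (0, R - \Vert \mathbf {x}_{i} - \mathbf {x}_{j}\Vert )\) that is common for density filters, unit nodal weights, and a dense matrix that is practical only for small meshes. The function name `filter_matrix` is hypothetical.

```python
import numpy as np

def filter_matrix(coords, weights, radius):
    """Row-normalized linear filter W such that phi = W @ p.

    Assumption: cone kernel kappa_ij = max(0, R - |x_i - x_j|).
    coords has shape (n, dim), weights has shape (n,).
    """
    coords = np.asarray(coords, dtype=float)
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    kappa = np.maximum(0.0, radius - dist)
    kv = kappa * np.asarray(weights, dtype=float)[None, :]  # kappa_ij * v_j
    return kv / kv.sum(axis=1, keepdims=True)

# One-dimensional example: 21 nodes with spacing 0.5 and a sharp jump in p.
x = np.linspace(0.0, 10.0, 21)[:, None]
v = np.ones(len(x))
W = filter_matrix(x, v, radius=1.75)
p = np.where(x[:, 0] < 5.0, -1.0, 1.0)
phi = W @ p  # smoothed level set, still bounded by the range of p
```

Since each row of `W` is a convex combination, the filtered values stay inside the bounds of the optimization variables, and the steepest attainable slope of the level set is limited by the filter radius.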

2.1.2 Extended finite element method

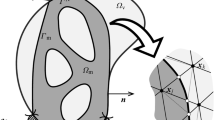

The level set parametrization (7) provides a flexible representation of the material distribution in the design domain. Furthermore, in combination with the XFEM it is possible to accurately track the interface \({\Gamma }\) of the material domain on a fixed non-conformal mesh of the design domain D.

The XFEM was originally developed for modeling discontinuities due to cracks on non-conformal finite element meshes by enriching the finite element shape functions (Moës et al. 1999). Later, the potential of the method was also recognized for arbitrary interface problems, multi-material problems, fluid-structure interaction etc. (See the review paper by Fries and Belytschko (2010) for an overview of different applications of the XFEM.) The method is also frequently used in topology optimization for its capability to track non-conformal material domains on a fixed finite element mesh of the design domain (Wei et al. 2010; Van Miegroet 2012; Abdi et al. 2014; Kreissl and Maute 2012).

This work uses the XFEM for material-void interfaces (Daux et al. 2000; Belytschko et al. 2003). The weak form as used in the formulation by Belytschko et al. (2003) is retrieved by inserting the parametrization of the indicator function (8) in the weak form (2):

The finite element discretization is based on a mesh of the design domain D. The displacement field \(\mathbf {u}\) is discretized on the complete design domain using standard finite element shape functions \(N_{i}\) in this basic extended FE formulation:

\[\mathbf {u}(\mathbf {x}) = \sum _{i = 1}^{n} N_{i}(\mathbf {x}) \, \mathbf {u}_{i} \tag{14}\]

where \(\mathbf {u}_{i}\) are the nodal displacements. Inserting the displacement discretization into the weak form (13) leads to the linear finite element system:

\[\mathbf {K} \mathbf {u} = \mathbf {f} \tag{15}\]

where \(\mathbf {f}\) is the external load vector and \(\mathbf {u}\) is the displacement vector. The XFEM stiffness matrix \(\mathbf {K}\) is assembled as follows:

where \(n_{\mathrm {e}}\) is the total number of elements in the finite element mesh and \(\mathbf {B}\) is the linear strain matrix. The assembly (16) follows exactly the same steps as in a regular finite element analysis. Only the elements cut by the interface (\(\phi = 0\)) require special attention. In order to take into account the Heaviside function in these cut elements, the Gaussian integration scheme is modified. A modified integration rule is constructed by splitting the element into sub-elements along the interface and then using regular Gaussian integration for each of the sub-elements belonging to the material phase. Figure 1 illustrates this process for different configurations of a quadrilateral element.
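
The modified integration rule can be illustrated with a one-dimensional analogue. The sketch below assumes a two-node element with a linear level set and takes the material phase to be the region where \(\phi < 0\), a sign convention that is inferred from the threshold values used in Section 5. The element is split at the zero crossing and a regular Gauss-Legendre rule is applied to the material sub-element only. The function name is hypothetical.

```python
import numpy as np

def integrate_material_part(x0, x1, phi0, phi1, f, n_gauss=2):
    """Integrate f over the part of [x0, x1] where the linear level set is negative.

    Assumption: material corresponds to phi < 0.
    """
    if phi0 >= 0.0 and phi1 >= 0.0:   # element entirely in the void
        return 0.0
    if phi0 < 0.0 and phi1 < 0.0:     # uncut solid element
        a, b = x0, x1
    else:                             # cut element: locate the interface phi = 0
        xc = x0 + (x1 - x0) * phi0 / (phi0 - phi1)
        a, b = (x0, xc) if phi0 < 0.0 else (xc, x1)
    # Regular Gauss-Legendre rule mapped to the material sub-element [a, b].
    pts, wts = np.polynomial.legendre.leggauss(n_gauss)
    xg = 0.5 * (b - a) * pts + 0.5 * (a + b)
    return float(0.5 * (b - a) * np.sum(wts * f(xg)))

# Material length of a unit element cut in the middle and at one quarter.
half = integrate_material_part(0.0, 1.0, -1.0, 1.0, np.ones_like)
quarter = integrate_material_part(0.0, 1.0, -1.0, 3.0, np.ones_like)
```

In two dimensions the same idea applies, except that the cut quadrilateral is divided into triangular or quadrilateral sub-elements along the interface before the regular rule is used.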

2.1.3 Optimization problem

The parametrization of the level set (10) and the numerical resolution of the elasticity problem using the XFEM are combined to recast the optimization problem (4) in the following nonlinear programming form:

Note that the bounds on the optimization variables \(p_{i}\) are chosen rather arbitrarily and do not represent any physical limitation. However, in the framework of the geometric constraints based on threshold values (see Section 3), the range of allowed values does play an important role since it determines the maximum attainable slope of the level set function.

2.1.4 Numerical instabilities

The level set method as presented above is known to be susceptible to numerical instabilities in the XFEM approximation. These difficulties have been described in detail by Makhija and Maute (2014).

The instabilities arise in particular when the material domain is cut by a hole that is smaller than twice the element size. An example is shown in Fig. 2. The basic XFEM scheme described in Section 2.1.2 erroneously adds stiffness contributions to the node B in the void region shared by the elements 1 and 2. As a result, the nodes A and C in the material phase will be artificially connected across the void region. These numerical instabilities are therefore quite similar to the intermediate densities seen in the density-based methods. The optimization algorithm tends to exploit these inaccuracies in the XFEM scheme which results in the creation of crack-like features in the optimized design.

Makhija and Maute (2014) proposed a generalized enrichment strategy to eliminate these artificial connections between disconnected nodes. Although effective, the enrichment strategy is rather complicated to implement as it deviates considerably from more standard XFEM enrichment strategies. Furthermore, the computational cost of the method might be significant as the enrichment strategy increases the number of degrees of freedom in the finite element system. For these reasons, this work follows an alternative strategy to alleviate these numerical artifacts based on the geometric constraints presented in Section 3. The idea is simply to avoid artificial connections across the void region by introducing a minimum length scale in the void region that is larger than the finite element size. The effectiveness of the geometric constraints at eliminating the numerical instabilities is illustrated in the example in Section 5.1.

2.2 Density-based topology optimization

2.2.1 Three-field parametrization

In density-based methods, a continuous nonlinear optimization problem is obtained by relaxing the binary condition (1): the design variables \(\rho \) are identified as volume densities which take values in the interval \([0,1]\). The well-known Solid Isotropic Material with Penalization (SIMP) method (Bendsøe 1989; Rozvany et al. 1992) is used in order to obtain optimized designs that consist almost entirely of material (\(\rho = 1\)) and void (\(\rho = 0\)). The SIMP method penalizes intermediate densities by modifying the density/stiffness interpolation (3) as follows:

where the parameter \(\epsilon _{\mathrm {c}} \ll 1\) is used to attribute a small non-zero stiffness to the void phase. Intermediate densities are made inefficient by using a penalization parameter \(p>1\).
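
A minimal sketch of such an interpolation is given below. It assumes the widely used modified SIMP form, in which the stiffness is scaled by \(\epsilon _{\mathrm {c}} + (1 - \epsilon _{\mathrm {c}}) \rho ^{p}\). The exact expression used here may differ, and the function name is hypothetical. The default values \(p = 3\) and \(\epsilon _{\mathrm {c}} = 10^{-9}\) are the ones reported in Section 5.

```python
def simp_stiffness_factor(rho, p=3.0, eps_c=1e-9):
    """Scalar factor multiplying the solid constitutive tensor C^0.

    Assumption: modified SIMP form eps_c + (1 - eps_c) * rho**p.
    """
    return eps_c + (1.0 - eps_c) * rho ** p

solid = simp_stiffness_factor(1.0)   # full stiffness
void = simp_stiffness_factor(0.0)    # small residual stiffness eps_c
gray = simp_stiffness_factor(0.5)    # roughly 0.125: half the material, one eighth of the stiffness
```

The last value shows why intermediate densities are inefficient for \(p > 1\): the stiffness gained per unit of material is lower than for a fully solid element.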

In the framework of the SIMP method, the physical densities \(\rho \) are discretized by assigning a constant value \(\rho _{e}\) to each element of the finite element mesh of the design domain. The vector \(\boldsymbol {\rho }\in \mathbb {R}^{n_{\mathrm {e}}}\) contains all the element densities.

More recently, the SIMP method has frequently been combined with nonlinear projection filters (Guest et al. 2004; Xu et al. 2010; Wang et al. 2011). These filters serve to regularize the optimized design while simultaneously improving the convergence to \(0/1\) solutions. The filters typically use three fields of design variables: in addition to the physical densities \(\rho \), a field of independent optimization variables \(\bar {\rho }\) and a field of intermediate densities \(\tilde {\rho }\) are introduced. Both fields are discretized to a constant value per finite element, which leads to the vector of independent design variables \(\bar {\boldsymbol {\rho }}\in \mathbb {R}^{n_{\mathrm {e}}}\) and the intermediate variables \(\tilde {\boldsymbol {\rho }}\in \mathbb {R}^{n_{\mathrm {e}}}\). First, the design variables \(\bar {\boldsymbol {\rho }}\) are smoothed by a density filter (Bourdin 2001; Bruns and Tortorelli 2001) to obtain the intermediate densities \(\tilde {\rho }_{e}\):

It is clear that the density filter is equivalent to the filter operation (10) in the level set method. The only difference is that the density filter (19) filters quantities associated with the elements rather than the nodes; for example, the weights \(v_{i}\) and coefficients \(\kappa _{ei}\) are computed as:

where \(\mathbf {x}_{e}\) represents the coordinates of the center of element e.

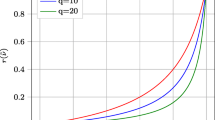

Second, in order to remove the gray transition zones between material and void phases, the actual material densities \(\rho \) are obtained by projecting the intermediate variables \(\tilde {\rho }\) to \(0/1\) using a regularized Heaviside function (Wang et al. 2011):

where the parameter \(\beta \) determines the steepness of the function and \(\eta \in [0,1]\) is the threshold value of the projection. In combination with the geometric constraints (see Section 3), this work always assumes a projection threshold \(\eta = 0.5\). However, it is well known that the filter as such does not impose a minimum length scale in either the material or the void phase (Wang et al. 2011).
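
For illustration, the regularized Heaviside projection of the cited reference (Wang et al. 2011) can be sketched with the hyperbolic tangent form below. The function name is hypothetical, and the value \(\beta = 32\) is the maximum used in the continuation scheme of Section 5.

```python
import numpy as np

def heaviside_projection(rho_tilde, beta, eta=0.5):
    """Smoothed threshold projection based on tanh.

    rho = (tanh(beta*eta) + tanh(beta*(rho_tilde - eta)))
          / (tanh(beta*eta) + tanh(beta*(1 - eta)))
    """
    rho_tilde = np.asarray(rho_tilde, dtype=float)
    num = np.tanh(beta * eta) + np.tanh(beta * (rho_tilde - eta))
    den = np.tanh(beta * eta) + np.tanh(beta * (1.0 - eta))
    return num / den

rho_tilde = np.array([0.0, 0.4, 0.5, 0.6, 1.0])
rho_soft = heaviside_projection(rho_tilde, beta=1.0)    # close to the identity map
rho_sharp = heaviside_projection(rho_tilde, beta=32.0)  # close to a step at eta
```

The projection maps 0 to 0 and 1 to 1 for any \(\beta \), while values just above or below the threshold are pushed toward 1 or 0 as \(\beta \) grows.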

2.2.2 Optimization problem

Using the two-step parametrization in Eqs. 19 and 22, the material densities \(\boldsymbol {\rho }\) are expressed as a function of the optimization variables \(\bar {\boldsymbol {\rho }}\). The original optimization problem (4) is then recast in the following form:

3 Geometric constraints

As discussed in the previous sections, the filter operations (10) and (19) in the level set method and the three-field density method, respectively, do not suffice to control the local length scale of the optimized design.

The geometric constraints proposed by Zhou et al. (2015) are based on considering specific perturbations of the interface between the material and void phases, which are related to a variation of the projection threshold \(\eta \) in the projection filter (22). Increasing the threshold to \(\eta ^{\mathrm {e}} > \eta \) leads to an erosion or thinning of the material phase, while decreasing the threshold to \(\eta ^{\mathrm {d}} < \eta \) corresponds to a dilation or thickening of the material phase. The principal idea behind the geometric constraints is that a minimum length scale is ensured in the material and void phases if the erosion and dilation operations do not modify the topology of the design. Transforming this requirement into a mathematical form is usually based on a one-dimensional representation of the problem. This is illustrated in Fig. 3a, where an example of a smoothed intermediate field \(\tilde {\rho }\) is shown. The corresponding material densities \(\rho \) obtained after projection with the threshold \(\eta \) are indicated by the gray regions. It is easy to see that the topology will not change if the intermediate densities \(\tilde {\rho }\) locally exceed the erosion threshold \(\eta ^{\mathrm {e}}\) in the material phase and fall below the dilation threshold \(\eta ^{\mathrm {d}}\) in the void phase. To verify these conditions, it suffices to consider the local extrema of the intermediate field \(\tilde {\rho }\), i.e., the points where the gradient of \(\tilde {\rho }\) vanishes, which are referred to as inflection points in this context. The crosses + in Fig. 3a indicate two examples of inflection points which do not meet these criteria.

Fig. 3 Illustration of the geometric constraints for (a) the density-based formulation and (b) the level set formulation. The material domain is represented by the gray areas. The crosses + indicate the critical inflection points in the intermediate density field \(\tilde {\rho }\) and the level set \(\phi \) that do not satisfy the constraints
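
The one-dimensional reasoning can be reproduced numerically. The following sketch uses an invented smooth field with one strong and one weak member and counts the number of solid intervals obtained with the nominal, eroded, and dilated thresholds. The field, the grid, and the helper name `count_solid_intervals` are illustrative assumptions. The threshold values are those of the example in Section 5.

```python
import numpy as np

def count_solid_intervals(field, threshold):
    """Number of connected intervals where the field exceeds the threshold."""
    solid = np.asarray(field) > threshold
    # An interval starts wherever 'solid' switches from False to True.
    starts = solid & ~np.concatenate(([False], solid[:-1]))
    return int(starts.sum())

x = np.linspace(0.0, 1.0, 401)
# Invented intermediate field: the second peak (0.55) lies between eta and eta_e.
rho_tilde = (0.9 * np.exp(-((x - 0.3) / 0.08) ** 2)
             + 0.55 * np.exp(-((x - 0.7) / 0.08) ** 2))

eta, eta_e, eta_d = 0.5, 0.6, 0.4
n_nominal = count_solid_intervals(rho_tilde, eta)    # two members
n_eroded = count_solid_intervals(rho_tilde, eta_e)   # the weak member disappears
n_dilated = count_solid_intervals(rho_tilde, eta_d)  # members stay separated
```

In this example the erosion changes the number of members from two to one, so the local maximum of the weak member is a critical point of the kind marked by a cross in Fig. 3a.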

The geometric constraints penalize the inflection regions that do not satisfy the above-mentioned requirements. To simplify the comparison with the level set formulation, the original constraints are presented in a continuous form based on the intermediate density field \(\tilde {\rho }\):

where \(\left [\cdot \right ]_{+} = \max \left [\cdot ,0\right ]\) and the continuous indicator function \(I_{\rho }\) for the inflection regions is defined as:

The constraint relaxation parameter \(\epsilon _{\rho }\) is added to accommodate numerical errors and the approximate nature of the indicator function \(I_{\rho }\). The parameter \(c_{\rho }\) in the indicator function and the relaxation parameter \(\epsilon _{\rho }\) are determined as a function of the filter radius R as described by Zhou et al. (2015). The actual length scale that is imposed by these constraints can be estimated based on a similar one-dimensional reasoning; see Wang et al. (2011) for the details of this procedure.

Due to the similarities in the parameterization of the material domain (represented by the function \(\rho \)), it is straightforward to transform the constraints to the level set formulation. This adaptation to the level set framework is illustrated in Fig. 3b by depicting the same configuration as for the density-based formulation in Fig. 3a. The material densities \(\rho \) are replaced by the Heaviside representation (8) while the level set function \(\phi \) replaces the intermediate densities \(\tilde {\rho }\). As a result, the constraints can be cast in the following form:

where \(\phi ^{\mathrm {e}}\) and \(\phi ^{\mathrm {d}}\) represent the level set thresholds for erosion and dilation, respectively, and \(I_{\phi }\) corresponds to Eq. 26 with \(c_{\rho }\) replaced by \(c_{\phi }\). A minor difference between both formulations is that the densities \(\bar {\rho }\) take values in the interval \([0,1]\), while the variables \(\mathbf {p}\) are allowed to vary in the interval \([-1,1]\). In order to achieve the same length scale as in the density formulation, the threshold values are therefore selected as follows:

\[\phi ^{\mathrm {e}} = 1 - 2 \eta ^{\mathrm {e}} \tag{29}\]

\[\phi ^{\mathrm {d}} = 1 - 2 \eta ^{\mathrm {d}} \tag{30}\]

Likewise, a similar scaling is applied to the constraint parameters: it is easy to verify that the equivalent parameters should be chosen as \(c_{\phi }=c_{\rho }/4\) and \(\epsilon _{\phi }= 2\epsilon _{\rho }\).
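
The scaling can be checked with a short numerical sketch. It assumes the linear map \(\tilde {\rho } = (1 - \phi )/2\) between the two fields, which is consistent with the threshold values quoted in Section 5, and an exponential indicator of the form \(\exp (-c \Vert \nabla \cdot \Vert ^{2})\) as used by Zhou et al. (2015). The value of `c_rho` and the gradient are arbitrary illustrative numbers.

```python
import math

def density_threshold(phi_threshold):
    """Map a level set threshold in [-1, 1] to the equivalent density threshold in [0, 1].

    Assumption: rho_tilde = (1 - phi) / 2, i.e. material corresponds to phi < 0.
    """
    return 0.5 * (1.0 - phi_threshold)

def indicator(grad, c):
    """Assumed exponential indicator for regions with a vanishing gradient."""
    return math.exp(-c * grad ** 2)

eta_e = density_threshold(-0.2)   # erosion threshold, approximately 0.6
eta_d = density_threshold(0.2)    # dilation threshold, approximately 0.4

c_rho = 10.0                      # arbitrary illustrative value
c_phi = c_rho / 4.0               # scaling stated in the text
grad_phi = 0.3                    # arbitrary level set gradient
grad_rho = -0.5 * grad_phi        # gradient of rho_tilde = (1 - phi) / 2

same_indicator = math.isclose(indicator(grad_rho, c_rho), indicator(grad_phi, c_phi))
```

Because the gradient of \(\tilde {\rho }\) is half that of \(\phi \) in magnitude, its square is four times smaller, which is why dividing the parameter by four leaves the indicator unchanged.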

In order to make the implementation of the constraints applicable to unstructured grids, Gaussian integration is used to integrate the constraints (27)–(28) while the evaluation of the level set \(\phi \) and its gradient is based on the finite element interpolation of \(\phi \). However, since the first-order Lagrange shape functions are only \(C^{0}\) continuous, potential inflection zones of the function \(\phi \) located at the interfaces between elements could be missed by the numerical integration scheme. Therefore, in the evaluation of the indicator function \(I_{\phi }\), a continuous gradient field is first constructed by a simple nodal averaging strategy, as is common in finite element analysis for the reconstruction of continuous gradient fields (Cook et al. 2002). Similarly, in the SIMP implementation, the gradient of the density field \(\tilde {\rho }\) is computed based on the nodal averaged values of the element densities \(\tilde {\rho }_{e}\). Despite these modifications in the numerical evaluation of the constraints, the original values for the parameters \(c_{\rho }\) and \(\epsilon _{\rho }\) as proposed by Zhou et al. (2015) have been used in the examples and lead to satisfactory results.

4 Optimization algorithm and sensitivity analysis

An important advantage of the explicit level set method is that the problem can be formulated as a nonlinear programming problem that can be solved by general-purpose optimization algorithms. In this work, the level set problem (17) and the density-based problem (23) are both solved with either the Method of Moving Asymptotes (MMA) (Svanberg 1987) or the Globally Convergent MMA (GCMMA) (Svanberg 2002).

These gradient-based optimization algorithms require the first-order design sensitivities of the objective and constraint functions. The sensitivity analysis for the three-field density methods is well documented in the literature and will not be repeated here. Likewise, the sensitivity analysis for the explicit level set method based on parametric shape derivatives can be found in the literature (see for example the review paper by van Dijk et al. (2013)). Nevertheless, the sensitivity analysis of the new geometric constraints (27)–(28) is briefly discussed here.

As an example, the design sensitivities of the constraint on the solid domain are considered. The sensitivities of the functional \(G_{\mathrm {S}}\) with respect to the nodal level set values can be expressed as follows:

The design sensitivities with respect to the nodal level set values consist of two contributions. The first part represents the parametric shape derivative which is related to the dependence of the integration domain \({\Omega }\) on the design variables. The second part is due to the direct dependence of the integrand on the level set \(\phi \).

The second term in this expression is found by simple differentiation of the integrand \(g_{\mathrm {S}}\) in Eq. 27:

The sensitivities with respect to the optimization variables \(\mathbf {p}\) are determined by applying the chain rule of differentiation to Eq. 32 and the linear filter operation (10):

where:
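
Because the filter is linear, this chain rule reduces to a multiplication with the transposed filter matrix. The sketch below assumes a row-normalized filter with a cone kernel and unit nodal weights, and uses a toy quadratic functional in place of the actual constraint. All names are hypothetical. A central finite difference is used to check one component of the gradient.

```python
import numpy as np

def filter_matrix(x, v, radius):
    """Row-normalized 1D filter, assuming kappa_ij = max(0, R - |x_i - x_j|)."""
    kappa = np.maximum(0.0, radius - np.abs(x[:, None] - x[None, :]))
    kv = kappa * v[None, :]
    return kv / kv.sum(axis=1, keepdims=True)

def chain_rule(dG_dphi, W):
    """dG/dp_j = sum_i dG/dphi_i * dphi_i/dp_j, with dphi_i/dp_j = W_ij."""
    return W.T @ dG_dphi

x = np.linspace(0.0, 5.0, 11)
W = filter_matrix(x, np.ones_like(x), radius=1.75)
rng = np.random.default_rng(0)
p = rng.uniform(-1.0, 1.0, size=x.size)

def G(q):
    """Toy functional of the filtered field, standing in for a constraint."""
    return 0.5 * np.sum((W @ q) ** 2)

dG_dp = chain_rule(W @ p, W)   # for this toy functional, dG/dphi = phi

# Central finite-difference check of one component.
h = 1e-6
step = np.zeros_like(p)
step[3] = h
fd = (G(p + step) - G(p - step)) / (2.0 * h)
```

In a sparse implementation the same product is evaluated with the stored filter coefficients, so the cost of this step is negligible compared with the finite element analysis.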

5 Examples

The geometric constraints are applied in a number of benchmark problems for topology optimization. Throughout the examples, the properties are presented without explicitly specifying the units, as is common in topology optimization. Nonetheless, it is assumed that consistent units are being adopted. The material properties of the elastic solid are a Young’s modulus \(E_{0} = 1\) and a Poisson’s ratio \(\nu = 0.3\). The density-based results are based on a fixed penalization \(p = 3\) in the SIMP method (18). A common value \(\epsilon _{\mathrm {c}}= 10^{-9}\) is used for the stiffness of the void phase in the compliance minimization problems. On the other hand, for the compliant mechanism problems, we follow the advice of Jouve and Mechkour (2008) and use a value \(\epsilon _{\mathrm {c}}= 10^{-3}\). In our experience, a larger value for \(\epsilon _{\mathrm {c}}\) can help to avoid trivial solutions with zero output displacement in the level set method.

If the threshold values \(\phi ^{\mathrm {e}}\) and \(\phi ^{\mathrm {d}}\) are given as input parameters for the LS XFEM in the examples, the equivalent thresholds \(\eta ^{\mathrm {e}}\) and \(\eta ^{\mathrm {d}}\) determined by Eqs. 29–30 are used in the density-based results. For example, if \(\phi ^{\mathrm {e}}=-0.2\) and \(\phi ^{\mathrm {d}}= 0.2\), then \(\eta ^{\mathrm {e}}= 0.6\) and \(\eta ^{\mathrm {d}}= 0.4\). Minimum length scale constraints restrict the geometrical changes during the optimization and might cause the optimizer to converge to a sub-optimal solution. A common solution is to activate the constraints only after a certain number of iterations. In the LS XFEM, the constraints are activated after 100 iterations. In the density-based implementation, the projection filter uses the standard continuation scheme described in Sigmund (2007) with a maximum \(\beta = 32\). As a result, the geometric constraints are activated after 175 iterations. The convergence criterion in topology optimization is often based on the maximum design variable change. However, when nonlinear projection filters are included in the density-based formulation, this criterion tends to perform poorly once the projection becomes highly nonlinear. For this reason, a fixed number of 300 MMA iterations is used as a simple but objective convergence criterion for both the density-based and the level set method.

With respect to the presented results it should be noted that the reported performance values (i.e., compliance or displacement) correspond to objective values at the end of the optimization. This implies that these results are computed using the corresponding finite element routine for each method; i.e., XFEM for the level set method and regular FEM for the density method. Gray and white circles are used in the figures of the optimized designs to indicate the theoretical minimum length scales imposed by the geometric constraints in the material and void phase, respectively. Finally, the finite element meshes and the post-processing views were all generated using Gmsh (Geuzaine and Remacle 2009). In the following figures, the color scheme for the XFEM topologies is based on the displacement magnitude. These colors were only added for illustrative reasons without including a color legend. The relevant objective values of the designs are provided in the figures’ captions.

5.1 MBB beam

The first example of the MBB beam illustrates the effectiveness of the geometric constraints at resolving the numerical instabilities occurring in the LS XFEM scheme. The design domain and boundary conditions for the problem are shown in Fig. 4. A unit load P is applied in the top left corner. The design domain with length \(L = 60\) is meshed with \(120 \times 40\) square elements. The optimization uses an allowed volume fraction \(\epsilon _{\text {vf}} = 0.5\) and a filter radius \(R = 1.75\).

Figure 5 shows the results of the LS XFEM optimization. The optimization is initialized from the level set displayed in Fig. 5a. The XFEM representation of the corresponding material domain (Fig. 5b) only contains four holes.

In a first step, the design is optimized by solving the regular minimum compliance problem (17) without geometric constraints. The optimized level set and XFEM material domain are shown in Fig. 5c–d. The optimized design clearly resembles the typical solutions found in the literature for this problem. Nevertheless, the XFEM domain in Fig. 5d contains several crack-like features in the corners of the design. These features are due to the numerical instabilities described in Section 2.1.4.

In a second step, the geometric constraints (27)–(28) with threshold values \(\phi ^{\mathrm {e}}=-0.2\) and \(\phi ^{\mathrm {d}}= 0.2\) are included in the optimization. The optimized level set and XFEM material domain for this problem are shown in Fig. 5e–f. The theoretical minimum length scales that are imposed by the constraints in the material and void phase are indicated by the gray and white circles in Fig. 5f, respectively. It can be seen that the crack-like features have been removed by introducing a minimum length scale in the void phase that is larger than the element size. Furthermore, including the minimum length scale constraints in this example has only a minor effect on the performance of the optimized design: the design with constraints has a compliance \(F = 187.68\), while the compliance for the design without constraints is \(F = 186.81\).
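To put the minor effect in numbers, the relative increase in compliance follows directly from the two values quoted above. The subscripts "con" and "unc" (with and without geometric constraints) are labels introduced here for illustration only:

\[
\frac{F_{\text{con}} - F_{\text{unc}}}{F_{\text{unc}}} = \frac{187.68 - 186.81}{186.81} = \frac{0.87}{186.81} \approx 4.7 \times 10^{-3},
\]

i.e., the constrained design is roughly 0.5% more compliant than the unconstrained one.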

5.2 Cantilever beam

The design of a cantilever beam is considered as the second minimum compliance example. The design domain and boundary conditions for the cantilever beam are shown in Fig. 6: a unit load P is applied in the middle of the right edge of the domain while the left edge is clamped. The design domain with length \(L = 20\) is meshed with \(200 \times 100\) square elements. The optimization uses an allowed volume fraction \(\epsilon _{\text {vf}} = 0.5\) and a filter radius \(R = 0.5\).

Figure 7 shows the different results for this problem. First, the regular minimum compliance problem is solved by means of the LS XFEM. The initial design for the optimization is shown in Fig. 7a, while Fig. 7b displays the optimized design. Similar to the previous example, some instabilities can be seen in the corners of the design.

Fig. 7 Results for the cantilever beam. The top row shows the results for the LS XFEM optimization without geometric constraints with (a) the initial XFEM material domain and (b) the optimized design. The bottom row shows the optimized design including geometric constraints for (c) the LS XFEM and (d) the density method

Next, the geometric constraints with threshold values \(\phi ^{\mathrm {e}}=-0.4\) and \(\phi ^{\mathrm {d}}= 0.4\) are included in the optimization. The problem is solved using both the LS XFEM and the density-based method. The LS XFEM optimization is again initialized from the design in Fig. 7a, while the density-based method starts from a uniform material distribution as usual.

The optimized designs obtained by the LS XFEM and the density method are displayed in Fig. 7c and d, respectively. A visual inspection of these figures shows that the optimized designs are almost identical with very similar compliance values. Moreover, the same minimum length scale in the void region is achieved by both methods. Comparing Fig. 7c and d also illustrates the difference in the representation of slanted material/void interfaces by both methods: while the SIMP method with piecewise constant densities is limited to staircase-like interfaces, the LS XFEM leads to a smooth interface due to the continuous representation of the level set and the corresponding XFEM partitioning of the mesh.

Finally, with respect to the computational cost of both methods for this problem, it can be noted that the main differences lie in the assembly of the finite element system (15). As discussed in Section 2.1.2, the XFEM requires a modified integration scheme for the elements that are cut by the iso-zero level set. Running on a single 3.50 GHz Intel Xeon CPU, the CPU time for the LS XFEM optimization was \(319~\mathrm {s}\), while the SIMP optimization took \(282~\mathrm {s}\). The relevance of these timings is, however, limited, since the current implementation is part of a larger finite element codebase where the XFEM routines contain a number of additional steps that are not strictly necessary for the topology optimization algorithm. As discussed in the introduction of Section 5, both methods use a fixed number of 300 MMA iterations to generate an objective comparison. However, in this particular example, a smaller number of iterations would also suffice to obtain suitable results. An alternative convergence criterion based on the change in objective value could be used to reduce the total number of iterations. For example, if a criterion \(\| {\Delta } F\| < 10^{-6} F\) were adopted, the LS XFEM strategy would require only 180 iterations with an objective value of \(F = 61.26\), while the SIMP method would need 285 iterations with a final performance \(F = 62.03\). However, this comparison is not entirely fair since the current continuation scheme used in the projection filter of the SIMP method could be further optimized to reduce the required number of iterations.
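The alternative stopping rule mentioned above can be sketched as follows. The function name `first_converged_iteration` and the objective history used in the demonstration are hypothetical; the history is synthetic data made up for illustration, not output of the optimizations reported in this paper. The sketch interprets \({\Delta } F\) as the difference between two consecutive objective values, which is an assumption.

```python
from typing import Optional, Sequence


def first_converged_iteration(
    history: Sequence[float], rel_tol: float = 1e-6
) -> Optional[int]:
    """Return the first 1-based iteration k with |F_k - F_(k-1)| < rel_tol * |F_k|.

    `history[0]` is the objective value of iteration 1. None is returned when
    the criterion is never met, in which case a fixed iteration budget
    (300 in the text) would act as the fallback.
    """
    for k in range(1, len(history)):
        change = abs(history[k] - history[k - 1])
        if change < rel_tol * abs(history[k]):
            return k + 1  # convert the 0-based list index to a 1-based iteration
    return None


if __name__ == "__main__":
    # Synthetic objective values, for illustration only.
    synthetic_history = [100.0, 80.0, 70.0, 70.00000001, 70.00000001]
    print(first_converged_iteration(synthetic_history))
```

A relative tolerance is used so that the criterion does not depend on the scaling of the objective, which differs between the compliance and the mechanism examples.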

5.3 Compliant force inverter

The design of compliant mechanisms forms a challenging test case for minimum length scale strategies in topology optimization. The deformable parts of the mechanism typically consist of very thin hinges which are often connected by a single finite element node. A minimum length scale has to be imposed in both the material and void phase in order to fully resolve these critical parts in the design (Wang et al. 2011).

The well-known problem of the inverter mechanism (Fig. 8) is considered in this example. The goal of the optimization is to maximize the output displacement \(u_{\text {out}}\) when the input force \(f_{\text {in}}\) is applied to the mechanism. The design domain with length \(L = 100\) is meshed with \(200 \times 100\) square elements. A unit input force is applied while the stiffness coefficients of the in- and output springs are \(k_{\text {in}}= 1\) and \(k_{\text {out}}= 0.001\). The remaining parameters in the optimization problem are an allowed volume fraction \(\epsilon _{\text {vf}} = 0.3\) and a filter radius \(R = 2.8\).

Similar to the previous examples, the optimization problem without geometric constraints is solved by means of the LS XFEM. The initial design corresponds to the hole pattern in Fig. 9a, while the optimized design is shown in Fig. 9b. It can be seen that the optimized design contains very thin hinges which are typical for solutions obtained without local length scale control.

Fig. 9 Results for the inverter mechanism. The top row shows the results for the LS XFEM optimization without geometric constraints with (a) the initial XFEM material domain and (b) the optimized design. The bottom row shows the optimized design including geometric constraints for (c) the LS XFEM and (d) the density method

Figure 9c shows the design obtained with the addition of the geometric constraints (with thresholds \(\phi ^{\mathrm {e}}=-0.2\) and \(\phi ^{\mathrm {d}}= 0.2\)) in the LS XFEM optimization. The hinges in this design are properly connected and the imposed minimum length scale is respected. As a comparison, the solution found by the density method for the equivalent problem is displayed in Fig. 9d. This design also respects the same minimum length scale constraint. Finally, comparing the output displacements reported at the bottom of Fig. 9 for both designs, the density-based method was able to find a slightly better solution for this problem.

5.4 Gripper mechanism

The gripper mechanism (Fig. 10) is considered as the last example. This problem has been considered by several authors in the framework of density-based topology optimization (Schevenels et al. 2011; Lazarov et al. 2012; Zhou et al. 2015). The goal is again to maximize the output displacement \(u_{\text {out}}\) when the input force \(f_{\text {in}}\) is applied.

The design domain has a length \(L = 200\) and is meshed with \(200\times 100\) square elements. The boundary conditions consist of an input force \(f_{\text {in}}= 0.5\) applied in the top left corner of the domain and stiffness coefficients \(k_{\text {in}}= 0.1\) and \(k_{\text {out}}= 0.005\). Similar to the previous examples, the gripper is optimized using both the LS XFEM and density method. The optimization problem uses a filter radius \(R = 6.0\) and an allowed volume fraction \(\epsilon _{\text {vf}} = 0.3\). The initial design for the LS XFEM is shown in Fig. 11a.
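For reference, the mesh and filter parameters of the four examples can be compared in terms of element widths. The short script below is a sketch under the assumption that \(L\) denotes the horizontal extent of the design domain and that the first number in the mesh size is the number of elements along that direction; the class `Benchmark` and its attribute names are hypothetical and introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Benchmark:
    """Mesh and filter data of one example, as quoted in Section 5."""

    name: str
    length: float  # L, assumed to be the horizontal extent of the domain
    nx: int        # number of elements along the length (assumption)
    ny: int        # number of elements along the height (assumption)
    radius: float  # filter radius R

    @property
    def element_size(self) -> float:
        # Square elements, so a single edge length describes the mesh.
        return self.length / self.nx

    @property
    def radius_in_elements(self) -> float:
        # Filter radius expressed as a multiple of the element edge length.
        return self.radius / self.element_size


BENCHMARKS = (
    Benchmark("MBB beam", 60.0, 120, 40, 1.75),
    Benchmark("Cantilever beam", 20.0, 200, 100, 0.5),
    Benchmark("Force inverter", 100.0, 200, 100, 2.8),
    Benchmark("Gripper", 200.0, 200, 100, 6.0),
)

if __name__ == "__main__":
    for b in BENCHMARKS:
        print(f"{b.name:16s} h = {b.element_size:4.2f}  R/h = {b.radius_in_elements:4.2f}")
```

Under these assumptions, the filter radius spans about 3.5, 5, 5.6 and 6 element widths in the four examples, so the filter always covers several elements.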

Fig. 11 Results for the gripper mechanism. The top row shows the results for the LS XFEM optimization without geometric constraints with (a) the initial XFEM material domain and (b) the optimized design. The bottom row shows the optimized design including geometric constraints for (c) the LS XFEM and (d) the density method

As a reference, Fig. 11b shows the design obtained by the LS XFEM without geometric constraints. Again, the design contains very thin hinges that are typical for an optimized mechanism without length scale control. Figure 11c–d display the designs obtained by the LS XFEM and the density method when the equivalent geometric constraints (with thresholds \(\phi ^{\mathrm {e}}=-0.4\) and \(\phi ^{\mathrm {d}}= 0.4\)) are included in the optimization problem. Both designs respect the minimum length scales imposed by the constraints. Similar to the inverter problem, the density method was able to find a solution with a slightly better performance than the LS XFEM.

6 Conclusions

This paper presented a strategy for imposing a minimum length scale in an explicit level set method for topology optimization. The ability to control the feature size is crucial for the manufacturability of the optimized design. Furthermore, the proposed method provides a computationally inexpensive solution for avoiding the numerical instabilities that often occur in the LS XFEM by enforcing a minimum length scale that is larger than the finite element size.

The method is based on geometric constraints that have been proposed in the framework of density-based topology optimization with projection filters (Zhou et al. 2015). Adopting the same geometric constraints in both the density-based and level set method makes it possible to formulate equivalent optimization problems for both methods. In fact, the presentation in Section 2 showed that although both methods use a different parametrization of the material distribution \(\rho \) by the independent optimization variables, the final optimization problems are very similar. The main remaining differences between both methods are the design sensitivity analysis and the finite element method used in the optimization.

Finally, let us reiterate that the explicit level set strategy combined with parametric shape sensitivity information makes it possible to express the optimization problem as a nonlinear programming problem. In this general format the optimization problem can be solved by well-established optimization algorithms. The current implementation uses the sequential convex programming approach MMA, but other methods such as sequential quadratic programming or interior point methods could be used as well. Since these methods are capable of dealing with an arbitrary number of constraints, it is straightforward to incorporate the proposed geometric constraints in the optimization problem. Furthermore, although this is not covered by the examples in this paper, it should be possible to combine the geometric constraints with other manufacturing constraints or mechanical constraints such as limitations on the maximal stress or displacement.

A number of benchmark topology optimization problems were considered as examples. The results of these test cases highlighted that the constraints are capable of imposing a minimum length scale in both the material and void phase. In this way, the numerical artifacts due to the XFEM were also removed from the optimized design. Finally, the level set and the density-based methods were compared while using the equivalent geometric constraints in both methods.

References

Abdi M, Ashcroft I, Wildman R (2014) High resolution topology design with Iso-XFEM. In: Proceedings of the 25th annual international solid freeform fabrication symposium, pp 1288–1303

Allaire G, Jouve F, Toader A (2004) Structural optimization using sensitivity analysis and a level-set method. J Comput Phys 194(1):363–393

Allaire G, Jouve F, Michailidis G (2016) Thickness control in structural optimization via a level set method. Struct Multidiscip Optim 53(6):1349–1382

Belytschko T, Parimi C, Moës N, Sukumar N, Usui S (2003) Structured extended finite element methods for solids defined by implicit surfaces. Int J Numer Methods Eng 56(4):609–635

Bendsøe M (1989) Optimal shape design as a material distribution problem. Struct Multidiscip Optim 1:193–202

Bendsøe M, Sigmund O (2004) Topology optimization: theory, methods and applications, 2nd edn. Springer, Berlin

Bourdin B (2001) Filters in topology optimization. Int J Numer Methods Eng 50(9):2143–2158

Bruns T, Tortorelli D (2001) Topology optimization of non-linear elastic structures and compliant mechanisms. Comput Methods Appl Mech Eng 190(26–27):3443–3459

Chen S, Wang M, Liu A (2008) Shape feature control in structural topology optimization. Comput Aided Des 40(9):951–962

Cook R, Malkus D, Plesha M, Witt R (2002) Concepts and applications of finite element analysis, 4th edn. Wiley, New York

Daux C, Moës N, Dolbow J, Sukumar N, Belytschko T (2000) Arbitrary branched and intersecting cracks with the extended finite element method. Int J Numer Methods Eng 48(12):1741–1760

Deaton J, Grandhi R (2013) A survey of structural and multidisciplinary continuum topology optimization: post 2000. Struct Multidiscip Optim 49(1):1–38

Dunning P (2018) Minimum length-scale constraints for parameterized implicit function based topology optimization. Struct Multidiscip Optim 58(1):155–169

Fries TP, Belytschko T (2010) The extended/generalized finite element method: An overview of the method and its applications. Int J Numer Methods Eng 84(3):253–304

Geuzaine C, Remacle JF (2009) Gmsh: a 3-D finite element mesh generator with built-in pre- and post-processing facilities. Int J Numer Methods Eng 79(11):1309–1331

Guest J, Prevost J, Belytschko T (2004) Achieving minimum length scale in topology optimization using nodal design variables and projection functions. Int J Numer Methods Eng 61(2):238–254

Guo X, Zhang W, Zhong W (2014) Explicit feature control in structural topology optimization via level set method. Comput Methods Appl Mech Eng 272:354–378

Jansen M, Lazarov B, Schevenels M, Sigmund O (2013) On the similarities between micro/nano lithography and topology optimization projection methods. Struct Multidiscip Optim 48(4):717–730

Jouve F, Mechkour H (2008) Level set based method for design of compliant mechanisms. Eur J Comput Mech 17(5–7):957–968

Kreissl S, Maute K (2012) Levelset based fluid topology optimization using the extended finite element method. Struct Multidiscip Optim 46(3):311–326

Lazarov B, Schevenels M, Sigmund O (2012) Topology optimization considering material and geometric uncertainties using stochastic collocation methods. Struct Multidiscip Optim 46(4):597–612

Lazarov B, Wang F, Sigmund O (2016) Length scale and manufacturability in density-based topology optimization. Arch Appl Mech 86(1–2):189–218

Luo J, Luo Z, Chen S, Tong L, Wang M (2008) A new level set method for systematic design of hinge-free compliant mechanisms. Comput Methods Appl Mech Eng 198(2):318–331

Makhija D, Maute K (2014) Numerical instabilities in level set topology optimization with the extended finite element method. Struct Multidiscip Optim 49(2):185–197

Moës N, Dolbow J, Belytschko T (1999) A finite element method for crack growth without remeshing. Int J Numer Methods Eng 46(1):131–150

Petersson J, Sigmund O (1998) Slope constrained topology optimization. Int J Numer Methods Eng 41(8):1417–1434

Rozvany G, Zhou M, Birker T (1992) Generalized shape optimization without homogenization. Struct Multidiscip Optim 4:250–252

Schevenels M, Lazarov B, Sigmund O (2011) Robust topology optimization accounting for spatially varying manufacturing errors. Comput Methods Appl Mech Eng 200(49–52):3613–3627

Sethian J, Wiegmann A (2000) Structural boundary design via level set and immersed interface methods. J Comput Phys 163:489–528

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidiscip Optim 33(4–5):401–424

Sigmund O, Maute K (2013) Topology optimization approaches. Struct Multidiscip Optim 48(6):1031–1055

Sigmund O, Petersson J (1998) Numerical instabilities in topology optimization: a survey on procedures dealing with checkerboards, mesh-dependencies and local minima. Struct Multidiscip Optim 16:68–75

Svanberg K (1987) The method of moving asymptotes—a new method for structural optimization. Int J Numer Methods Eng 24:359–373

Svanberg K (2002) A class of globally convergent optimization methods based on conservative convex separable approximation. SIAM J Optim 12:555–573

van Dijk N, Maute K, Langelaar M, van Keulen F (2013) Level-set methods for structural topology optimization: a review. Struct Multidiscip Optim 48(3):437–472

Van Miegroet L (2012) Generalized shape optimization using XFEM and level set description. PhD thesis, University of Liege, Aerospace and Mechanical Engineering Department

Villanueva C, Maute K (2014) Density and level set-XFEM schemes for topology optimization of 3-D structures. Comput Mech 54(1):133–150

Wang M, Wang X, Guo D (2003) A level set method for structural topology optimization. Comput Methods Appl Mech Eng 192(1–2):227–246

Wang F, Lazarov B, Sigmund O (2011) On projection methods, convergence and robust formulations in topology optimization. Struct Multidiscip Optim 43:767–784

Wei P, Wang M, Xing X (2010) A study on X-FEM in continuum structural optimization using a level set model. Comput Aided Des 42(8):708–719

Xu S, Cai Y, Cheng G (2010) Volume preserving nonlinear density filter based on Heaviside functions. Struct Multidiscip Optim 41:495–505

Zhou M, Lazarov B, Sigmund O (2014) Topology optimization for optical projection lithography with manufacturing uncertainties. Appl Opt 53(12):2720–2729

Zhou M, Lazarov B, Wang F, Sigmund O (2015) Minimum length scale in topology optimization by geometric constraints. Comput Methods Appl Mech Eng 293:266–282

Funding

The work presented in this paper was performed in the framework of the Any-Shape 4.0 project supported by the Walloon Region (grant number 151066).

Additional information

Responsible Editor: Jose Herskovits

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Jansen, M. Explicit level set and density methods for topology optimization with equivalent minimum length scale constraints. Struct Multidisc Optim 59, 1775–1788 (2019). https://doi.org/10.1007/s00158-018-2162-5

DOI: https://doi.org/10.1007/s00158-018-2162-5