Abstract

People’s comfort with, and acceptance of, artificial intelligence (AI) instantiations is a topic that has received little systematic study. This is surprising given the topic’s relevance to the design, deployment, and even regulation of AI systems. To help fill this gap, we conducted a mixed-methods analysis based on a survey of a representative sample of the US population (N = 2254). Results show that there are two distinct social dimensions to comfort with AI: as a peer and as a superior. For both dimensions, general and technological efficacy traits (locus of control, communication apprehension, robot phobia, and perceived technology competence) are strongly associated with acceptance of AI in various roles. Female and older respondents were also less comfortable with the idea of AI agents in various roles. A qualitative analysis of comments collected from respondents complemented our statistical approach. We conclude by exploring the implications of our research for AI acceptability in society.

1 Introduction

Society is currently facing another major technological change, driven by robotization and the integration of artificial intelligence (AI), which has the same dramatic potential to transform people's lives as the computer/Internet revolution. Depending on whom you ask, robots and AI herald a third or fourth industrial revolution (Rifkin 2011; Floridi 2014; Schwab 2017), one that has its roots not only in the Internet revolution and the concomitant digital transformation of society via information and communication technologies (ICTs), but also further back in the twentieth century’s shift to industrial automation (Bassett and Roberts 2019). As automation reaches beyond industry into the social and personal spheres of our lives, integrated robotic and AI technology becomes the culmination of these transformations.

Broadly speaking, AI systems have a wide range of public and private implementations, in sectors like finance, health care, and criminal justice, as well as in the home with “personal assistant” technologies (West and Allen 2018). Much attention has been paid to the economic and civil implications of AI systems’ proliferation (West and Allen 2018). However, AI is also increasingly being incorporated into people’s everyday lives and their interpersonal communication (Hancock et al. 2020). While some researchers and stakeholders hail the ways in which AI will augment and expand human capabilities, there is likewise concern that increased dependency on AI will erode people’s abilities to think for themselves and that more AI-enabled automated decision-making may encroach on people’s independence and control of their own lives (Anderson et al. 2018; Sundar 2020).

When transformative technologies are rolled out, they present new pitfalls for those who adopt them; people must learn new protocols and systems to navigate the new tools. If a technology becomes widespread, not adopting it might adversely affect one’s life, as happened with the Internet and its ancillary platforms. Moreover, people may have to relinquish their competence in one technological arena (e.g., faxing) to master the new technology (e.g., e-mail). One source of resistance to new technologies is this loss of competence: people fear losing expertise that they have carefully earned.

Further, AI in particular may challenge not just people’s sense of competence but also their agency (Sundar 2020); as AI-enabled technology becomes more agentic and widespread, it may surface new power dynamics between people and technology (Guzman and Lewis 2020). Presently, users see AI-supported “smart” devices as mere tools rather than as a means of connecting and interacting with others, which is how the Internet is perceived. As a result, people do not yet associate AI with its potential both to enhance existing technologies and to create new ones (Purington et al. 2017).

Right now, people “communicate” with AI most obviously through voice-activated digital assistants like Alexa or Siri. However, AI systems span numerous personal technological domains. A recent Pegasystems (2018) study found that 70% of people do not recognize basic AI applications in their daily lives. We can imagine a time soon when these virtual assistants manifest as physically embodied “devices.” Thus, some have introduced the idea of AI-enabled social robots not just as mediators of communication and basic assistant tools, but as communication partners themselves (Höflich 2013; Guzman 2018; Guzman and Lewis 2020; Spence 2018; Sundar 2020). Social science research about AI has focused on market disruption, economic growth possibilities, and civil implications, as well as threats to privacy, human agency, and national security (West and Allen 2018; Anderson et al. 2018; Brundage et al. 2018), but offers less evidence on how non-expert users (and non-users) actually perceive AI and robotic technologies. Given these potential developments, it is useful to understand how people’s traits, such as personality, efficacy, and sense of control, may interplay with perceptions and use of these emergent technologies. To our knowledge, little research has explored broad perceptions of AI or examined which individual factors might influence the extent to which people feel comfortable with AI in their lives; we seek to address this gap in our study.

2 Literature review

Like the previous industrial and digital revolutions, the upcoming AI revolution is being met first with human apprehension. Fears related to past revolutions’ impact on the workforce and the potential deskilling of the population are at the forefront of AI risk perception in society (Bassett and Roberts 2019). And while human apprehension of new technologies is not a novel concept, the AI revolution is expected to disrupt vast areas of our lives: for example, AI is predicted to substitute and amplify “practically all” tasks and jobs that humans currently have (Makridakis 2017). With AI systems already in place in some major sectors like health care and national security, people may already be starting to see the capabilities of such technologies. However, unlike other technological phenomena such as the mobile phone, a concrete device that a user wields and controls, AI is an amorphous program, something that runs autonomously in the background of wide-ranging devices and systems. Though devised and programmed by humans, it is not a technology that a human visibly controls in real time.

Given this, existing theoretical models for studying user perceptions of technology like the “Technology Acceptance Model” (TAM) are ill-equipped to address AI perceptions. The “use” of AI is far removed from one’s direct control and thus its application goes beyond a single human-tool use case in which one can more clearly perceive how easy to use and useful the tool may be in their life. As such, in the pivot “from computer culture to robotic culture” (Guzman 2017, p. 69), we are faced with new considerations for our communication processes.

The ICTs we are presently accustomed to (computers, mobile phones, and other Internet-enabled applications, to name a few) serve as information exchange and communication interfaces with other people. In contrast, robots and AI-enabled devices can act as communicative partners in their own right (Guzman 2018; Guzman and Lewis 2020). Human–machine communication (HMC) has emerged as a subfield within the communication discipline to capture this shift from technologically mediated communication to communication with technology (Guzman 2020; Guzman and Lewis 2020). The impetus for carving out a new space with HMC was to emphasize the relational (rather than functional) aspect of communication and its implications (Guzman 2018). HMC also draws in metaphysical considerations (how machines are perceived ontologically) and considers the ways in which these three aspects of functionality, sociality, and metaphysicality might interrelate and influence human–machine relationships (Guzman and Lewis 2020). The relational dimension encompasses both individual reactions to technology and the larger milieu in which these interactions occur. The scope of inquiry therefore spans both micro- and macro-level considerations.

The present study situates itself within HMC because of its focus on AI as a socially embedded technology that can be interacted with on an individual level. Importantly, given AI’s vast proliferation, people have little choice about the presence of AI in their lives, regardless of whether they intend or want to adopt the technology. AI is also employed in roles with varying degrees of power: some types of AI are assistive, as in digital voice assistants, while others have more decision-making capabilities, as in algorithms that make criminal sentencing recommendations. Therefore, this study considers a range of potential scenarios in which AI could be employed that vary in their power distance from the individual (Kim and Mutlu 2014). It also explores antecedent individual traits that might influence comfort with AI technology.

2.1 Ontologically perceiving AI

First, we wish to clarify our grouping of AI and robots to describe the emerging phenomenon of agentic and autonomous technology. Though they may share technological characteristics, AI and robots are conceptually distinct. AI programs are designed to “learn” from their surrounding environment and to adapt and act as needed. In this way, they have seemingly human-like intelligence and can operate on their own without direct human commands (Oberoi 2019). Importantly, AI is not necessarily physically embodied; indeed, it already exists in familiar devices like smartphones, computers, and online chatbots. Similarly, robots do not necessarily include AI: some extant robots operate only by pre-existing programming or direct human commands (Oberoi 2019). Despite these differences, however, AI and social robots generally share the capacity for autonomy and agency. Because of this similarity, we review research on both AI and social robots to inform our research questions.

AI’s gradual adoption into society has mostly taken the form of administrative tasks like language translation or simple command recognition. However, AI’s potential goes far beyond such objectives: on an individual level, digital assistants like Alexa are becoming increasingly sophisticated in generating socio-emotional conversations with users (Purington et al. 2017). While the general notion of sharing an emotional connection with a machine is still met with resistance, personal robots are closer than ever to partly fulfilling humans’ need for affection (Sullins 2012).

In this way, AI spans sectors and roles, and its consequences may loom large in people’s minds, especially when people do not have a clear picture of how AI actually works. Therefore, how people ontologically perceive AI may affect their approach towards it. Sundar’s (2008) “machine heuristic” offers an example: it contends that in interactions with machines rather than humans, people attribute machine-like qualities such as objectivity and neutrality to the interaction, which in turn shapes their expectations of its outcome. This heuristic affects the extent to which people disclose information online; people who believe in the machine heuristic are more likely to share private information with a machine than with a human (Sundar and Kim 2019). The machine heuristic has also been observed in news, a context where bias is of particular import: early research showed that people perceived news selected by a machine as more credible than news curated by a human editor (Sundar and Nass 2001).

More recent studies on machine intervention in the news have found more mixed evidence on the machine heuristic. One study found that people perceived news written by a machine or a human similarly (Clerwall 2014). Another showed the inverse of the machine heuristic, finding that machine-written news was viewed as less credible than human-written news (Waddell 2018). Anthropocentric bias was offered as an explanation for this difference: people still consider news writing a fundamentally human task, so when a machine performs it instead, their normative expectations are violated. In yet another context, customer service, automation was less preferred than interactions with humans through any channel, whether in person, over the phone, or computer-mediated (Mays et al. 2020). Qualitative work exploring this preference revealed that in customer service, people want human foibles because they anticipate that bias, nuance, and persuadability will help them circumvent the system and achieve more favorable personal outcomes (Walsh et al. 2018). Importantly, people’s sense that they could better manipulate a human–human customer service interaction gave them a feeling not only of competence but also of control and agency. Additionally, the social nature of such interactions, though often constrained to a narrow range, is also a source of positive user affect. It therefore appears that both conceptions of what technology is and its context or use case are important, and so we ask an exploratory question about qualitative judgments of AI:

RQ1: How do people conceive of and perceive an “AI agent”?

2.2 Influencing technological comfort: AI roles and individual traits

2.2.1 AI roles

As mentioned, the current focus tends to be trained on AI’s future workplace implications and uncertain consequences. The social and relational aspects have been less studied but are an important next step for examining AI manifestations such as social robots. Media equation research has shown time and again that people’s interactions with technology are fundamentally and reflexively social (Reeves and Nass 1996; Nass and Moon 2000). Given this tendency and the technology’s capabilities, Gunkel (2018) has suggested that we are at the “third wave” of human–computer interaction research, which “is concerned not with the capabilities or operations of the two interacting components—the human user and the computational artifact—but with the phenomenon of the relationship that is situated between them” (p. 3). This “relational turn” suggests that the positioning of the AI or robot relative to the user will influence perceptions and comfort.

Social distance is a useful concept for exploring people’s relational orientation to machines (Banks and Edwards 2019). Originally formulated to describe human–human interactions, social distance captures intimacy with others, inter-group perceptions, and identification (Kim and Mutlu 2014). This notion has also been examined in human–robot interactions in a workplace context, in the form of social structural distance, which encompasses both power and task distance theories. Perhaps not surprisingly, people have been more accepting of robots in assistive or menial-labor roles (Katz and Halpern 2014). Kim and Mutlu (2014) found that physical distance and power distance (whether the robot was presented as a subordinate or a supervisor) affected how positively someone viewed the robot, with people preferring the higher-status robot to be close and the lower-status robot to be farther away. Supervisory robots have also been viewed more critically when relied upon to complete a task (Hinds et al. 2004).

There is less research on the effect of a robot’s status in a non-work context. The findings so far on in-group/out-group biases towards robots (Eyssel and Kuchenbrandt 2012; Edwards et al. 2019), as well as Ferrari et al.’s (2016) threat to human distinctiveness hypothesis, suggest that robots in a superior position would be disliked more than those positioned as peers or subordinates. Previous research on people's perceptions of robots taking various roles in their lives indicates that human acceptance of robots is contingent on the congruency between the role the robot plays and its nonverbal conduct (Kim and Mutlu 2014), essentially identifying the technological traits that would make emerging technologies more acceptable to society.

A potential through-line in the above research is people’s desire to manipulate technology for their personal benefit. Consequently, apprehension towards AI may come from an innate human desire to control one’s surroundings, a more uncertain prospect given AI’s capacity for agency. Additionally, people want to look proficient and capable in front of their peers and do not want to risk embarrassment by lacking competence in new technologies (Campbell 2006). In this second aspect, AI is no different from its technological predecessors that were met with the same user resistance.

Little research has examined the human traits that affect perceptions of AI systems. The Internet’s development has shown that it is not a monolithic force in everyone’s lives; for example, people with varying personality traits and dispositions use social media differently (Correa et al. 2010). Of particular interest here are efficacy and personality traits that relate to both technology and social interaction, given the autonomous and interactive nature of AI technology. Thus, this study considers the efficacy-related beliefs of communication apprehension, locus of control, perceived technology competence, and technology anxiety, in addition to the social personality traits of extraversion and neuroticism.

2.2.2 Efficacy traits: Communication apprehension, locus of control, and technology competence

Since the concept’s origin in the late twentieth century, communication apprehension has been widely studied in relation to Internet use and interpersonal interaction. However, to our knowledge, little research ties it to the newest technological developments, such as AI. Communication apprehension was initially conceptualized as a trait-oriented anxiety about real or anticipated communication with another person or multiple people (McCroskey 1984). Subsequent research found communication apprehension to be a persistent predictor of attitudes towards technology, particularly computer anxiety (Brown et al. 2004). In fact, it was posited in the early 2000s that computer anxiety and communication apprehension were behind one-third of households rejecting Internet access (Rockwell and Singleton 2002). At that time, conventional computer anxiety was thought to account for only some of the psychological resistance to adoption, because the Internet was predicted to become available through other media (e.g., televisions and personal devices).

Importantly, the negative influence of communication apprehension has been found to diminish with more experience and interaction with the subject of apprehension (Campbell 2006). In this way, communication apprehension may be both a trait-like disposition and a situational state that is lessened or amplified depending on the context. According to McCroskey, causes of situational communication apprehension include novelty, formality, unfamiliarity, dissimilarity, and degree of attention from others, among other factors (McCroskey 1984). In this study we explore how comfort with AI may be related to communication apprehension across contexts (e.g., person-to-person, meetings, small groups, and public speaking), as AI can be present in a range of functions.

The notion of control has been incorporated in a number of theories related to intention and behavior, including the Theory of Planned Behavior and the Technology Acceptance Model (TAM) (Venkatesh 2000). The locus of control scale (Rotter 1966) was developed in the mid-1950s to measure the extent to which people felt that they could control their outcomes (Lida and Chaparro 2002). Rotter (1966) found differences in participants’ behavior when they perceived outcomes as contingent on their own behavior (internal locus of control) compared to those who thought outcomes were contingent on outside factors like chance (external locus of control).

With regard to technology use, locus of control has been found to predict more goal-oriented online activity and to be related to earlier computer adoption and usage practices (Lida and Chaparro 2002). It is also a reliable predictor of how technology is perceived in terms of ease of use and usefulness, the cornerstones of TAM (Venkatesh 2000; Hsia et al. 2014; Hsia 2016). This may be in part because locus of control shapes risk perception, and people with a high internal locus of control are “more likely to accept the risk in using new technology” (Fong et al. 2017). However, findings are mixed on the extent to which locus of control informs attitudes towards technology. Studies have found both that an internal locus of control corresponded with more positive perceptions of computers (Coovert and Goldstein 1980) and that locus of control was not related to computer anxiety; rather, variables like exposure and cognitive appraisal informed attitudes towards the technology (Crable et al. 1994).

It follows that technology anxiety may be related not only to internal self-efficacy beliefs but also to people’s direct experiences with technology. Research has shown that experience with robots reduces uncertainty and anxiety towards them (Nomura et al. 2006), and that higher levels of comfort with mobile phones moderate their positive effects. However, people who perceive themselves as having more technological competence were also more wary of robots’ society-wide effects, perhaps because they have a greater awareness of the technology’s potential shortcomings (Katz and Halpern 2014).

2.2.3 Social traits: extraversion and neuroticism

AI is a departure from existing technology in a few key respects, namely its capacity for autonomy and the resulting enhanced interactivity. Given this latter component in particular, social personality traits are potentially important. To that end, extraversion/introversion (the extent to which someone seeks out and enjoys social interaction or prefers being alone) is the most commonly studied personality trait with regard to an AI-enabled technology like social robots (Robert 2018). People who are more extraverted tend to humanize robots more and are also more comfortable with them (Salem et al. 2015, as cited in Robert 2018). At least among older people, higher extraversion was related to more openness toward social robots (Damholdt et al. 2015). In the same vein, neuroticism (i.e., less emotional stability) was related to less comfort with robots and a lower propensity to humanize them (Robert 2018). Research has also examined the influence of personality traits manifested by robots and shows that people tend to prefer extraverted, friendly robots over introverted ones, though these preferences tend to be related to task alignment (Joosse et al. 2013; Robert 2018). It is unclear whether these same patterns would hold for perceptions of a robot-adjacent and more vaguely defined technology like AI.

2.3 Comfort with AI

Drawing together these various threads, we explore the influence of both the AI’s and individuals’ traits on people’s comfort with the technology. Presenting respondents with an “AI agent” in various roles that range in structural power, we examine how the AI’s position may influence perceptions of it. Then, we examine the extent to which efficacy-related traits (communication apprehension, locus of control, perceived technology competence, and technology anxiety) alongside personality traits (extraversion and neuroticism) relate to people’s comfort with AI. Early research on people’s dispositions toward the Internet indicated that personal characteristics can contribute not only to how quickly people adopt a new medium but also to how they use it once it becomes mainstream. We may expect similar dynamics to be at play with the coming technological shift towards AI and robots. As such, it is important to understand the barriers to entry that may be in place for individual use of this technology, starting with the technological and personal characteristics that might influence perceptions of and attitudes towards AI systems.

RQ2: Does an “AI agent’s” role influence how comfortable people are with AI?

RQ3a–b: To what extent, if any, do a) general efficacy and technological efficacy and b) social personality traits influence comfort with AI?

3 Method

3.1 Design and participants

We conducted a survey through an online questionnaire administered via the professional survey company Qualtrics from February to March 2019. The variables used in this analysis are from a section of the larger survey and were determined at the outset of data collection, save for one variable (robot phobia) that was added post hoc to capture technology anxiety. The survey drew from a national sample (N = 2254), with specified quotas on gender (52.6% female, n = 1185), age (M = 46.5, SD = 16.44), race (63.2% White/Caucasian, n = 1424), income (61.7% made $75,000 or less), and education (44.8% had some college or less), to obtain as nationally representative a sample as possible. We included two attention checks to filter for valid responses.

3.2 Measurement

3.2.1 Comfort with AI

For our dependent measure of people’s comfort with AI, we asked respondents to imagine an AI agent in a range of roles and indicate how comfortable they would feel with these scenarios, for example, “An AI agent as the leader of your country.” We adapted this index from Ericsson research (Ericsson 2017) that asked about willingness to interact with an AI agent in various contexts, from a company CEO to a work advisor. We pre-tested these items and removed one, “upload your mind and become an AI yourself,” because it made respondents question the survey’s seriousness. We also added a few more roles to create an 8-item index for comfort with AI in the following roles: country leader, town mayor, company leader, work manager, work advisor, co-worker, personal assistant, and therapist. Responses were given on a five-point Likert-type scale, from “not comfortable at all” to “very comfortable.” These items were preceded by the following definition of AI: “Artificially Intelligent (AI) agents are smart computers that put into action decisions that they make by themselves.” Because this is a newly developed scale, an exploratory factor analysis (detailed in the results) was conducted to evaluate whether this construct of “AI Comfort” was uni- or multi-dimensional.

3.2.2 Efficacy-related traits

Efficacy beliefs included both general beliefs (locus of control and communication apprehension) and technology-specific beliefs (perceived technology competence and technology anxiety, operationalized as “robot phobia”).

We adapted Rotter’s (1966) 13-item locus of control scale and reduced it to 6 items (α = 0.764), again measured on a five-point Likert-type scale (“strongly disagree” to “strongly agree”). Higher values corresponded to a more internal locus of control, with statements such as “When I make plans, I am almost certain I can make them work” and “I do not have enough control over the direction my life is taking” (reverse-coded) (M = 3.54, SD = 0.71).
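Scoring a scale like this involves two routine steps: reverse-coding negatively keyed items and checking internal consistency with Cronbach's alpha. The sketch below illustrates both; it is not the authors' SPSS procedure. It assumes responses coded 1 to 5, and the three-item response matrix is hypothetical toy data, not survey data.

```python
import numpy as np


def reverse_code(x, scale_min=1, scale_max=5):
    """Reverse-code Likert responses (on a 1-5 scale: 1<->5, 2<->4, 3 stays 3)."""
    return (scale_min + scale_max) - np.asarray(x)


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) array of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)      # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)  # sample variance of the summed scale
    return (k / (k - 1)) * (1 - item_vars.sum() / total_var)


# Hypothetical toy responses: 4 respondents x 3 items; the second item is
# reverse-keyed (like "I do not have enough control over ...").
raw = np.array([
    [4, 2, 5],
    [3, 3, 3],
    [5, 1, 4],
    [2, 4, 2],
])

scored = raw.copy()
scored[:, 1] = reverse_code(raw[:, 1])  # higher now means more internal control

alpha = cronbach_alpha(scored)
scale_means = scored.mean(axis=1)  # one scale score per respondent
print(f"alpha = {alpha:.3f}")
print("scale means:", scale_means.round(2))
```

Reverse-coding comes first because an unreversed negatively keyed item correlates negatively with the rest of the scale and pulls alpha down.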

Communication apprehension was adapted from McCroskey’s (1982) “Personal Report of Communication Apprehension (PRCA)” scale. Following Levine et al. (2006), a confirmatory factor analysis (CFA) was run on the 24-item scale. After removing items with a primary factor loading of less than 0.6, the CFA showed that the four-factor model validated in prior studies was a reasonably good fit. Each 3-item subscale measured apprehension in a distinct context: a group setting, a meeting, a dyad (i.e., interpersonal conversation), and giving a speech. Communication apprehension in a group includes statements like “I dislike participating in group discussions” (α = 0.82, M = 2.75, SD = 1.05). Communication apprehension in a meeting includes statements like “Generally, I am nervous when I have to participate in a meeting” (α = 0.90, M = 2.74, SD = 0.99). Interpersonal communication apprehension includes statements like “Ordinarily I am very tense and nervous in conversations” (α = 0.88, M = 2.54, SD = 0.89). Finally, communication apprehension when giving a speech includes statements like “Certain parts of my body feel very tense and rigid while I am giving a speech” (α = 0.89, M = 2.94, SD = 1.03). Higher values corresponded to higher communication apprehension.
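The item-retention rule described above (drop items whose primary loading is below 0.6) amounts to a simple filter. In this sketch the item names and loadings are hypothetical placeholders for one six-item PRCA-24 context, not values from this study; in practice the CFA would be refit after items are removed.

```python
# Hypothetical primary-factor loadings for the six "group" PRCA-24 items
# (illustrative values only, not the study's estimates).
group_loadings = {
    "grp1": 0.78, "grp2": 0.55, "grp3": 0.81,
    "grp4": 0.42, "grp5": 0.74, "grp6": 0.58,
}

LOADING_CUTOFF = 0.6  # retention threshold described in the text


def retain_items(loadings, cutoff=LOADING_CUTOFF):
    """Keep items whose primary loading is at least `cutoff`, strongest first."""
    kept = [item for item, lam in loadings.items() if lam >= cutoff]
    return sorted(kept, key=lambda item: loadings[item], reverse=True)


print(retain_items(group_loadings))  # ['grp3', 'grp1', 'grp5']
```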

Perceived technology competence (PTC) was adapted from Katz and Halpern (2014). PTC is a 7-item, five-point Likert-type scale (“strongly disagree” to “strongly agree”) that includes statements like “I feel technology, in general, is easy to operate” and “It is easy for me to use my computer to communicate with others” (α = 0.87, M = 3.59, SD = 0.83). To account for technology anxiety, we adapted an index of “robot phobia” from Katz and Halpern (2014). As mentioned above, this measure was added to the analysis post hoc; while not a direct measure of the AI agent described, it captured the agentic aspect of the technology in a way that PTC did not (α = 0.78, M = 2.96, SD = 0.88).

3.2.3 Personality traits

Indices for extraversion and neuroticism were adapted from Eysenck et al. (1985) and measured on a five-point Likert-type scale from “strongly disagree” to “strongly agree.” The 7-item extraversion scale asked respondents how much they agreed with statements like “I enjoy meeting new people” and “I tend to keep in the background on social occasions” (reverse-coded) (α = 0.91, M = 3.23, SD = 0.87). The 12-item neuroticism scale included statements like “I would call myself tense or ‘highly strung’” and “I worry too long after an embarrassing experience” (α = 0.94, M = 2.69, SD = 0.95).

3.3 Analysis

We took a two-pronged approach to better understand people’s comfort with AI. The first was a quantitative analysis that examined relationships between the variables of interest (described above) and the extent to which they helped explain respondents’ AI comfort. An exploratory factor analysis was also conducted to identify any latent constructs within the “AI comfort” scale. All quantitative analyses were conducted using IBM SPSS Statistics. The second was a qualitative content analysis of the open-ended responses received from survey respondents about their general thoughts on AI. Immediately after the AI-related questions, we provided the following prompt: “OPTIONAL: Hearing your opinions is very important. Please use this space to tell us anything you would like to share about the topic just presented. To protect anonymity, do not include any personal identifying information, if you choose to respond.” From this optional prompt, we received 842 responses (37% of the sample). After removing off-topic or nonsensical responses, 482 remained for the analysis.

The qualitative content analysis followed the quantitative analysis. We took a conventional approach, in that codes were derived inductively from the text (Hsieh and Shannon 2005). Responses were reviewed and analyzed in multiple rounds using a combination of coding methods put forth by Saldaña (2021). In the initial round, responses were coded holistically and descriptively to capture the breadth of content covered; subsequent rounds used structural and values coding to explore and draw connections between participants’ ontological, normative, and affective attitudes about AI technology. Given the one-shot and open-ended nature of the prompt (that is, we could not ask structured follow-up questions), this analysis primarily serves to illustrate and expand on the quantitative findings.

4 Results

Overall, respondents were uncomfortable with AI in most of the roles presented (see Fig. 1). For ease of descriptive presentation, we collapsed “not comfortable at all” and “somewhat uncomfortable” into “uncomfortable,” and “somewhat comfortable” and “very comfortable” into “comfortable.” There is a clear inverse relationship between respondents’ AI comfort and the amount of power an AI agent would wield in a given role. At least 70% of participants were uncomfortable with an AI as a country leader, mayor, company leader, or manager. Discomfort drops considerably when the hierarchy levels off or inverts: only 58% and 47% of participants would be uncomfortable with an AI as a work advisor or co-worker, respectively. And the proportion of participants comfortable with AI as an assistant (39%) slightly exceeds the proportion uncomfortable with AI in that subordinate role (36%).
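Collapsing the scale is a recode followed by percentages. The sketch below assumes the comfort items were coded 1 to 5 with a neutral midpoint, which the text does not state; the mapping, the “neither” label, and the ten ratings are hypothetical. Any responses outside the two collapsed categories are one reason the “comfortable” and “uncomfortable” shares for a role need not sum to 100%.

```python
from collections import Counter

# Assumed coding: 1-5 with a neutral midpoint. The source names only the four outer
# labels, so the value 3 and its "neither" label are hypothetical.
COLLAPSE = {
    1: "uncomfortable",  # "not comfortable at all"
    2: "uncomfortable",  # "somewhat uncomfortable"
    3: "neither",        # hypothetical midpoint
    4: "comfortable",    # "somewhat comfortable"
    5: "comfortable",    # "very comfortable"
}


def collapsed_shares(responses: list[int]) -> dict[str, float]:
    """Percentage of responses falling in each collapsed category."""
    counts = Counter(COLLAPSE[r] for r in responses)
    n = len(responses)
    return {label: 100 * counts[label] / n for label in ("uncomfortable", "neither", "comfortable")}


# Hypothetical ratings of one role from ten respondents
print(collapsed_shares([1, 1, 2, 2, 2, 3, 3, 4, 5, 5]))
# {'uncomfortable': 50.0, 'neither': 20.0, 'comfortable': 30.0}
```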

4.1 Construct of AI comfort

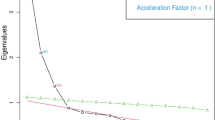

An exploratory factor analysis (EFA) was first conducted to determine whether “AI comfort” was a uni- or multidimensional construct. The eight items were considered factorable because each item correlated above 0.30 with the others; additionally, the Kaiser–Meyer–Olkin measure of sampling adequacy was 0.92 (well above the recommended 0.70), and Bartlett’s test of sphericity was significant, χ²(28) = 19,494.54, p < 0.001. Because our goal was to uncover latent constructs, we used principal axis factoring (PAF) extraction with an oblimin rotation and Kaiser normalization.
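Bartlett’s test asks whether the item correlation matrix R departs from an identity matrix, and its degrees of freedom depend only on the number of items p. The following is a minimal pure-Python sketch, assuming the usual form χ² = −[(n − 1) − (2p + 5)/6] · ln|R| with df = p(p − 1)/2. The function names are hypothetical and no p-value is computed.

```python
import math


def determinant(matrix: list[list[float]]) -> float:
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr: list[list[float]], n_obs: int) -> tuple[float, int]:
    """Bartlett's test that a correlation matrix is an identity matrix.

    chi2 = -[(n - 1) - (2p + 5) / 6] * ln|R|, with df = p(p - 1) / 2
    """
    p = len(corr)
    chi2 = -((n_obs - 1) - (2 * p + 5) / 6) * math.log(determinant(corr))
    return chi2, p * (p - 1) // 2


# Two hypothetical items correlated at 0.5, answered by 101 respondents
print(bartlett_sphericity([[1.0, 0.5], [0.5, 1.0]], 101))
```

For eight items, df = 8 × 7 / 2 = 28, matching the χ²(28) reported above. A p-value would come from the chi-square survival function, for example `scipy.stats.chi2.sf(chi2, df)` in SciPy. The logarithm is undefined if R is singular (determinant zero).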

The PAF indicated that two factors could be extracted: the first factor’s initial eigenvalue was 5.77, explaining 72.15% of the variance, and the second factor’s initial eigenvalue was 1.04, explaining an additional 12.96%. The resulting pattern matrix reveals one very clear factor of AI in positions of power: leader of the country (0.95; correlates with first factor 0.91), mayor of the town (0.97; correlates with first factor 0.95), leader of the company (0.95; correlates with first factor 0.96), and manager (0.86; correlates with first factor 0.93). The second factor is best interpreted as AI as a peer: a co-worker (0.84; correlates with second factor 0.89) and a personal assistant (0.88; correlates with second factor 0.81). Two items in the original eight-item index are harder to place cleanly in either factor. They fit more closely with the second factor, but their loadings are weak: AI as a therapist (0.47; correlates with second factor 0.75) and as a work advisor (0.57; correlates with second factor 0.81). These two roles could exert some power over people, but less clearly than the other power contexts. However, the structure matrix suggests that one of these items, AI as a work advisor, fits well in the second factor: it correlates 0.81 with the AI-as-peer factor, similar to the co-worker (0.89) and personal assistant (0.81) items. Therefore, for the sake of a clean analysis, the AI therapist item was excluded from both factors. This also suggests the need for further scale development that accounts for nuances of power distance in various roles: an “AI comfort” construct may have three dimensions rather than the two we uncovered.
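Two quantities in this paragraph follow from simple formulas. With standardized items, total variance equals the number of items, so a factor’s share of variance is its eigenvalue divided by eight. Under an oblique rotation such as oblimin, the structure matrix equals the pattern matrix multiplied by the factor correlation matrix Φ, which is how an item can load weakly (pattern) yet correlate strongly (structure) with a factor. The sketch below assumes both relationships; the factor correlation of 0.60 and the example item’s cross-loading are invented, because this section does not report them.

```python
def percent_variance(eigenvalue: float, n_items: int) -> float:
    """Share of total variance for one factor; standardized items sum to n_items."""
    return 100 * eigenvalue / n_items


def structure_from_pattern(pattern: list[list[float]], phi: list[list[float]]) -> list[list[float]]:
    """Structure matrix S = P * Phi for an oblique solution.

    pattern: item-by-factor loadings; phi: factor-by-factor correlation matrix.
    """
    m = len(phi)
    return [[sum(row[k] * phi[k][j] for k in range(m)) for j in range(m)] for row in pattern]


print(round(percent_variance(5.77, 8), 1))  # 72.1
# Hypothetical item: pattern loadings 0.30 and 0.47, factors correlated at 0.60
print(structure_from_pattern([[0.30, 0.47]], [[1.0, 0.6], [0.6, 1.0]]))  # approximately [[0.582, 0.65]]
```

The rounded eigenvalue 5.77 gives about 72.1%, so the reported 72.15% presumably reflects the unrounded value. When the factors are uncorrelated (Φ is the identity), structure and pattern coincide.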

4.2 Explaining variance in AI comfort

Given the results of the EFA above, two “AI comfort” variables were created: “AI power” (α = 0.97) and “AI peer” (α = 0.87). To explore how individual differences might contribute to people’s comfort with AI, we ran two OLS regressions with “AI power” and “AI peer” as the dependent variables, each with predictors entered in four blocks: (1) demographic traits (age, gender, education, income); (2) general efficacy traits (locus of control, communication apprehension); (3) domain efficacy traits (perceived technology competence and robot phobia); and (4) personality traits (extraversion and neuroticism).
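Because each model enters predictors in blocks, a block’s contribution is the increase in R² when it is added to the blocks before it. The authors used SPSS; the following is only a minimal pure-Python sketch of that logic (ordinary least squares via the normal equations), with hypothetical function names and toy data. It reports cumulative R² and R² change, not standardized β or per-block F tests.

```python
def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def r_squared(columns: list[list[float]], y: list[float]) -> float:
    """R^2 of an OLS fit of y on an intercept plus the given predictor columns."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(p)] for a in range(p)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(p)]
    beta = _solve(xtx, xty)  # normal equations (X'X) b = X'y
    mean_y = sum(y) / n
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    ss_res = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    return 1 - ss_res / ss_tot


def hierarchical_r2(blocks: list[list[list[float]]], y: list[float]) -> list[tuple[float, float]]:
    """Enter predictor blocks cumulatively; return (cumulative R^2, R^2 change) per step."""
    entered: list[list[float]] = []
    previous, steps = 0.0, []
    for block in blocks:
        entered.extend(block)
        r2 = r_squared(entered, y)
        steps.append((r2, r2 - previous))
        previous = r2
    return steps


# Hypothetical data: block 1 holds x1, block 2 adds x2; here y happens to equal x2
x1 = [1, 2, 3, 4, 5, 6]
x2 = [2, 1, 4, 3, 6, 5]
y = [2, 1, 4, 3, 6, 5]
for step, (r2, change) in enumerate(hierarchical_r2([[x1], [x2]], y), start=1):
    print(f"block {step}: R^2 = {r2:.3f}, change = {change:.3f}")
# block 1: R^2 = 0.687, change = 0.687
# block 2: R^2 = 1.000, change = 0.313
```

Because the blocks are cumulative, the order of entry matters: a block entered later is credited only with variance the earlier blocks did not already explain.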

4.2.1 Contributors to comfort with AI in power

All four blocks in the model (see Table 1) were significant at p < 0.001 (1: F(4,2249) = 25.61, 2: F(9,2244) = 62.41, 3: F(11,2242) = 61.80, 4: F(13, 2240) = 58.38), and overall the model explained 24.90% of the variance in comfort with an AI in power.
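The degrees of freedom reported for each block follow from the overall F test for a model with k predictors and an intercept, F = (R²/k) / ((1 − R²)/(N − k − 1)), with N = 2254. Below is a minimal sketch with hypothetical function names. The cumulative predictor counts (4, 9, 11, 13) are inferred from the reported df; they imply that block 2 adds five predictors, apparently locus of control plus communication apprehension in the four contexts discussed below.

```python
def model_f(r2: float, n_obs: int, k: int) -> tuple[float, int, int]:
    """Overall F test for an OLS model with k predictors plus an intercept."""
    df1, df2 = k, n_obs - k - 1
    return (r2 / df1) / ((1 - r2) / df2), df1, df2


def adjusted_r2(r2: float, n_obs: int, k: int) -> float:
    """R^2 penalized for the number of predictors."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - k - 1)


N = 2254  # respondents in the regression models
# The df depend only on N and k; the R^2 passed here (0.25) does not affect them
print([model_f(0.25, N, k)[1:] for k in (4, 9, 11, 13)])
# [(4, 2249), (9, 2244), (11, 2242), (13, 2240)]
print(round(adjusted_r2(0.253, N, 13), 4))  # 0.2487
```

Summing the block contributions reported below gives 4.4 + 15.7 + 3.2 + 2.0 = 25.3%, slightly above the 24.90% total. With R² = 0.253 and k = 13, the adjusted R² is about 0.249, so the reported total may be an adjusted R²; the source does not say which statistic it reports.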

As can be seen in Table 1, general efficacy traits contributed the most explanatory power (15.7%). The more internal someone’s locus of control, the less comfortable they were with AI in power (β = − 0.29, p < 0.001). Except in the group context, communication apprehension was positively related to comfort with a powerful AI: those who were more apprehensive about communicating in meetings (β = 0.12, p < 0.01), interpersonally (β = 0.23, p < 0.001), and when giving a speech (β = 0.26, p < 0.001) were more comfortable with AI in power.

Domain-specific efficacy (3.2%), personality (2.0%), and demographic (4.4%) traits contributed much less explanatory power than general efficacy. Respondents who perceived themselves as more technologically competent (β = 0.07, p < 0.05) and who were less robot phobic (β = − 0.15, p < 0.001) were more comfortable with AI in power. Those who were more extraverted (β = 0.15, p < 0.001) and less neurotic (β = − 0.15, p < 0.001) were also more comfortable with an AI in power. Age was the only significant demographic predictor, with younger respondents more receptive to a powerful AI (β = − 0.06, p < 0.01).

4.2.2 Contributors to comfort with an AI peer

All four blocks in this model (see Table 2) were also significant at p < 0.001 (1: F(4,2249) = 27.39, 2: F(9,2244) = 24.40, 3: F(11,2242) = 49.47, 4: F(13,2240) = 49.55), and overall the model explained 19.6% of the variance in comfort with AI as a peer. In contrast to the “AI power” model, technological efficacy traits contributed the most explanatory power (10.3%), while general efficacy (4.6%), personality (0.6%), and demographic (4.6%) traits contributed much less.

This model (Table 2) showed relationships between the independent and dependent variables similar to those in the “AI power” model, although some predictors of comfort with AI as a peer differed in relative strength. In particular, locus of control remained significant but was less strongly predictive (β = − 0.14, p < 0.001). Higher communication apprehension in interpersonal (β = 0.17, p < 0.001) and speech (β = 0.16, p < 0.001) contexts was associated with more comfort with an AI peer.

In contrast to comfort with a powerful AI, respondents’ technological efficacy traits were more influential in their comfort with an AI peer: those who perceived themselves as more technologically competent (β = 0.16, p < 0.001) and who were less robot phobic (β = − 0.27, p < 0.001) were more comfortable. More extraverted (β = 0.09, p < 0.001) and less neurotic (β = − 0.06, p < 0.05) respondents were more comfortable with an AI peer. Finally, men (β = − 0.08, p < 0.001) and younger (β = − 0.05, p < 0.05) respondents were more comfortable with AI as a peer in this model.

4.3 Qualitative analysis

To answer the first research question about how people conceive of and perceive AI in different roles, we qualitatively explored and interpreted the open-ended responses. Because the prompt was open-ended, the comments were understandably wide-ranging. This approach had the virtue of allowing respondents to tell us what was on their minds concerning AI, and thus provided a rich source of data. Some respondents framed their comments affectively (how AI made them feel), while others offered normative appraisals of AI (how AI should or should not be used). The latter type of response more directly addressed our research question, though the affective responses also shed light on how AI technology in everyday life may be received emotionally, which has implications for its overall acceptance. Consequently, we first synthesize respondents’ conceptions of AI and how these shape judgments about AI in various roles, and then summarize respondents’ emotional reactions to AI.

4.3.1 Conceptions of AI

Respondents’ notions about what AI is, and therefore what it is suited for, fall into three broad categories: what AI lacks, what AI excels in, and what is uncertain about AI. Put simply, what AI lacks are the human qualities that respondents deemed essential for roles that humans currently occupy. The list of qualities respondents cited is extensive. Some focused on the cognitive complexity needed in various situations and noted that AI lacked the requisite nuance, human judgment, common sense, morals, values, beliefs, instinct, creativity, critical thinking, and wisdom. Others pointed out that an AI cannot accrue life or personal experience as humans can, and that this inability to have a “human touch,” sustain personal contact, “go in-depth and personal,” experience camaraderie, have a soul, “practice grace,” or be sentient made them skeptical of an AI taking on human roles. Emotions were the other set of qualities cited: AI cannot have feelings, empathy, compassion, or care for those with whom it interacts. In some responses, this dearth of “soft skills” elicited normative judgments about what AI is best suited for or, more frequently, not suited for: in particular, leadership, therapist, and mentorship roles, as illustrated in this comment:

I do not like the idea of AI having any authority or controlling any part of business, school, or government counsel. They do not have the ability to feel the same emotions as humans can which would not make them good leaders of others.

Other respondents focused on characteristics of AI that are optimal, and perhaps an improvement over humans, for certain tasks and roles. Not surprisingly, and somewhat in line with the “machine heuristic” (Sundar 2008), the traits cited were computational in nature: “algorithmic thinking,” calculations, processing information, time management, language translation, organization, objectivity, and deduction. Given these traits, respondents supported using AI for “routine tasks,” “non-feeling” jobs, manufacturing, manual labor, and assisting. A few responses expressed enthusiasm for AI taking over certain domains (military, police, and government) because the respondents distrusted what people are currently doing in those spheres. More often, however, respondents acknowledged that AI’s skills with data processing and algorithms could support even the roles for which AI itself would be unsuited. In this line of thinking, responses advocated a balance of human-AI collaboration. As one response noted: “AI should only be used as an advisory not the final decision maker. It should present options and probabilities of the success of those same options.” This thinking, that AI should not be the sole arbiter of decisions in human domains but could contribute in circumscribed and human-controlled ways, was typical of comments that did not reject the idea of an AI agent wholesale. A few offered a cautionary note: humans should control what we create, and AI should remain a “servant to mankind.”

Between these two normative buckets (what AI should and should not do, or is and is not capable of) was a third set of comments that spoke primarily to respondents’ uncertainty about AI. Some asserted that the technology was simply not yet sophisticated enough to take on many of the roles asked about in the survey, but that if it advanced as predicted, they would be more open to an AI engaged in their everyday lives. Other respondents said they needed more time to adjust to the idea of AI and become comfortable interacting with it. Finally, some respondents expressed uncertainty about the creation and implementation of AI, the “human input factor,” as one respondent described it. This “human input factor” concerned both who was designing the AI (e.g., fear of programming) and who might manipulate the AI once it is deployed (e.g., fear of hacking). These concerns were grounded in people’s fallibility and malicious intent rather than in the AI technology itself. In this vein, respondents referred to programmers’ biases that could be built into AI, profit motives that may pervert AI and its uses, and bad actors who may exploit it. Accordingly, some comments advocated AI “parameters,” “safeguards,” and regulation that would restrict AI to roles in which its malfunctioning could not harm humans.

4.3.2 Emotions about AI

As noted above, some respondents rejected wholesale the idea of an autonomous AI agent that could make decisions and take on social roles. These were primarily affective, fear-based responses that framed AI technology as an existential threat to humans (e.g., “AI is highly dangerous to the future of humanity”). Specifically, respondents asserted that such an AI agent threatens human contact, connection, productivity, thriving and progress, free will, and uniqueness. As one respondent noted:

One, it would seem that putting AI’s in positions of power just makes all the sci-fi horror stories come true. Two, we all share general characteristics as human beings, which you can program AI’s for, but we are all also unique, and putting an AI in any of those positions would tend to deny the uniqueness of human beings and eventually try to eliminate it.

Some comments asserted that any replacement of humans by AI was unacceptable because people should have priority for jobs. One respondent shared that they refused to use self-checkout at grocery stores because it had replaced a person’s job. Thus, the fears expressed about AI taking on human roles reflected either a generalized attitude about humanity or a more specific concern about an impending employment crisis if AI replaced people at work.

5 Discussion

This study explored factors that may affect people’s comfort with AI, an emerging technology that is already part of our daily lives and that also presents unknown possibilities for future manifestations. Indeed, AI researchers predict that within roughly the next three decades, AI will become advanced enough to match or outperform humans in activities like translating languages, writing essays, working retail, driving trucks, and even performing surgery (Grace et al. 2018). Given these possibilities, the study asked about forward-looking instantiations of AI as an autonomous agent occupying various roles in human life. Our aim was to provide a baseline understanding of present-day AI perceptions and to contribute to the HMC research agenda, which in part calls for a focus on how AI is situated in people’s social worlds (Guzman and Lewis 2020). We also explored the individual differences that influence comfort with AI in order to understand potential barriers to use.

First and foremost, we found that the particular role an AI takes is an important factor in people’s comfort with the technology. An “AI agent” positioned as a superior is viewed differently from one in a peer or subordinate position. The open-ended responses provided more insight into what drives discomfort with a non-assistive AI. In general, people place AI in a different ontological category from humans, which aligns with Guzman’s (2020) findings on the “ontological divides” in people’s perceptions of AI. Further, this categorical distinction renders AI unsuitable for certain roles and life domains. Drilling down, respondents’ reasons for ontologically distinguishing AI varied. Some focused on particular traits AI did or did not possess, consistent with research showing that people’s opinions and behaviors during interactions with communicative AI technologies are influenced by how they conceive of and categorize AI as a communicator (Dautenhahn et al. 2005; Sundar 2008). Other respondents spoke more broadly to the immutability of human and AI categories. This latter attitude aligns with research on “identity threat” and the “human distinctiveness hypothesis,” which argues that the extent to which people feel a robot threatens their unique human status relates to negative attitudes about robots (Ferrari et al. 2016; Yogeeswaran et al. 2016).

Some of the resistance to AI did not address ontological perceptions but instead focused on the practical threat AI technology posed to people’s jobs and work. The main concern here was not whether AI can or should take on human roles because it is more or less capable than people, but whether AI should take on human roles at the risk of thwarting human thriving. In this, we see echoes of the “automation hysteria” (Terborgh 1965) that stemmed from the cybernetics movement in the mid-twentieth century. In short, there were widespread concerns that, just as the human arm had been replaced by industrial machines, the next industrial revolution would see the human brain replaced by “technologies of communication and control” (Wiener 1948; in Bassett and Roberts 2019).

Considered together with the quantitative results, these findings suggest that AI perceptions have multifaceted origins related to traits of both the individual and the AI. In terms of individual differences, efficacy-related traits contributed the most to AI attitudes. Interestingly, general efficacy traits were more influential in comfort with powerful AI, while technological efficacy traits were more influential in comfort with peer AI. In particular, for AI in power, locus of control (the degree to which one believes one’s actions can determine outcomes) was significantly negatively associated with AI comfort. This diverges somewhat from past research showing that a more internal locus of control corresponded with more positive attitudes toward computers. The divergence may be explained by AI’s unique nature as an autonomous technology that is perceived as harder to control, as some of the open-ended responses illustrated. Therefore, those with a stronger internal locus of control may feel more threatened by AI because they perceive it, structurally, as a technology outside their control.

The role of communication apprehension also diverged from past research showing that apprehension promotes computer anxiety. On the contrary, we found that those with higher communication apprehension were more comfortable with both powerful and peer AI. This again may result from AI being conceived as a non-human interlocutor. With other technologies that mediated interactions between people, the novel and unfamiliar channel may have heightened existing anxiety about communicating with people (McCroskey 1984). Our inverse finding may (tentatively) suggest that an AI entity is not a human stand-in and that different communicative processes are at play in human-AI interactions (Gambino et al. 2020). Further research on these dynamics is warranted, particularly on the downstream effects of perceived ontological differences of the machine communicator on HMC interactions (Guzman and Lewis 2020).

Technological competence also departed from prior findings: the more technologically competent respondents perceived themselves to be, the more comfortable they were with AI. Given previous findings that technological competence corresponded with more negative views of robots in society (Katz and Halpern 2014), we might have expected a similar trend with AI. It may be, however, that those who are more competent with technology better understand how AI operates and therefore rely less on negative cultural tropes about AI-related technology. They may also be more aware of AI’s presence in the technologies they currently use and therefore find it less intimidating. This aligns with our finding that “robot phobia” was negatively related to AI comfort, particularly with AI as a peer. Again, the divergence between generalized and domain-specific efficacy reinforces the notion that AI is distinct from our extant technology “tools” (e.g., Sandry 2018; Guzman and Lewis 2020).

As this technology proliferates, it will remain important to understand individual barriers to comfort and use; over the last few decades, the digital divide in Internet access has produced unequal opportunities across many sectors (van Dijk 2006). We should be wary of a commensurate automation divide that could leave behind groups who are more avoidant of or resistant to this emerging technology. Our findings point to possible divides along demographic lines, with younger people and men more open to AI, as well as divides that are harder to pin down and relate to individual feelings of power, control, and competence.

As mentioned above, anxiety about AI is not a new phenomenon (Bassett and Roberts 2019). The “automation hysteria” of the 1950s and 1960s was followed by neither mass unemployment nor permanent stasis. As new technology was introduced to workplaces, job losses from mechanization were outpaced by the employment generated by new technological developments (Terborgh 1965). In this light, technological disruption is folded into existing processes: the question is not an either-or of humans versus machines but one of collaboration to reach an equilibrium between the two.

To that point, Sandry (2018) has argued that people should resist trying to control agentic machines as they do their other tools. Rather, we should leverage the nonhuman advantages this technology provides (Sandry 2018), allowing people to focus their efforts on more creative tasks (Spence et al. 2018). To do this effectively, though, Sandry (2018) argues that people must acknowledge the machine’s agency and alterity. This point about effective collaboration underscores the importance of understanding how people conceive of AI and the individual differences that drive these perceptions. This study was an early step in examining how people may react to AI agents in their lives, and it suggests factors that could influence people’s acceptance of this emerging technology.

5.1 Limitations and future directions

Our study was subject to the usual shortcomings of survey methodology and attitude measurement. Additionally, the AI roles were presented in sequence and within subjects, which may have introduced anchoring and order effects. It would be worthwhile to examine differences in perceptions using a between-subjects design. Respondents were recruited online through a professional survey company. While demographic quotas were set to improve national representativeness, the online, paid sampling strategy means that other respondent characteristics might limit the sample’s representativeness and thus the generalizability of our findings. Finally, AI is a complex technology that manifests in a vast array of applications. We provided a simple definition of AI to ensure a baseline understanding accessible to all respondents, but we did not measure AI knowledge or awareness. Indeed, in some open-ended comments, respondents asserted that they knew too little about AI, or that the survey did not provide enough information about AI, to form fuller opinions about the technology. Guzman (2020) has found that people have divergent understandings of what AI is; to account for this, a survey could ask respondents to provide their own definition of AI, and these qualitative differences could be coded and included in the analysis.

Future research should further explore the dynamics of power and control in interactions with, and perceptions of, agentic technologies: specifically, what drives the extent to which people feel threatened by AI and the technologies it supports, such as social robots. Ontological perceptions may be critical, but larger structural forces, such as one’s employment status or industry, could also drive these attitudes. It will be important to drill down into the various domains in which AI may wield power and determine, first, which areas are viewed as more suitable for AI decision making and, second, whether people differ meaningfully in their comfort with specific kinds of AI decision making. Such work would contribute to the discussion of ethical AI from the “user” standpoint by discerning who is positioned to benefit from, or be marginalized by, the proliferation of AI systems throughout our work and personal lives.

Availability of data and material

Requests for data can be sent to the corresponding author.

Code availability

Not applicable.

References

Anderson J, Rainie L, Luchsinger A (2018) Artificial intelligence and the future of humans, Pew Research Center. https://www.pewinternet.org/2018/12/10/artificial-intelligence-and-the-future-of-humans/

Banks J, Edwards A (2019) A common social distance scale for robots and humans. In: 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), pp 1–6. IEEE. https://doi.org/10.1109/RO-MAN46459.2019.8956316

Bassett C, Roberts B (2019) Automation now and then: automation fevers, anxieties and utopias. New Formations 98:9–28. https://doi.org/10.3898/NEWF:98.02.2019

Brown SA, Fuller RM, Vician C (2004) Who’s afraid of the virtual world? Anxiety and computer-mediated communication. J Assoc Inform Syst 5(2):79–107. https://doi.org/10.17705/1jais.00046

Brundage M, Avin S, Clark J, Toner H, Eckersley P, Garfinkel B, Amodei D (2018) The malicious use of artificial intelligence: forecasting, prevention, and mitigation, arXiv preprint, https://arxiv.org/ftp/arxiv/papers/1802/1802.07228.pdf

Campbell J (2006) Media richness, communication apprehension and participation in group videoconferencing. J Inform Inform Technol Organ 1:87–96. https://doi.org/10.28945/149

Clerwall C (2014) Enter the robot journalist: users’ perceptions of automated content. J Pract 8(5):519–531. https://doi.org/10.1080/17512786.2014.883116

Coovert MD, Goldstein M (1980) Locus of control as a predictor of users’ attitude toward computers. Psychol Rep 47(3 suppl):1167–1173. https://doi.org/10.2466/pr0.1980.47.3f.1167

Correa T, Hinsley AW, De Zuniga HG (2010) Who interacts on the web? The intersection of users’ personality and social media use. Comput Hum Behav 26(2):247–253. https://doi.org/10.1016/j.chb.2009.09.003

Crable EA, Brodzinski JD, Scherer RF, Jones PD (1994) The impact of cognitive appraisal, locus of control, and level of exposure on the computer anxiety of novice computer users. J Educ Comput Res 10(4):329–340. https://doi.org/10.2190/K2YH-MMJV-GBBL-YTTU

Damholdt MF, Nørskov M, Yamazaki R, Hakli R, Hansen CV, Vestergaard C, Seibt J (2015) Attitudinal change in elderly citizens toward social robots: the role of personality traits and beliefs about robot functionality. Front Psychol 6:1701. https://doi.org/10.3389/fpsyg.2015.01701

Dautenhahn K, Woods S, Kaouri C, Walters ML, Koay KL, Werry I (2005) What is a robot companion - friend, assistant or butler? In: 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp 1192–1197. IEEE

Edwards C, Edwards A, Stoll B, Lin X, Massey N (2019) Evaluations of an artificial intelligence instructor’s voice: social identity theory in human-robot interactions. Comput Hum Behav 90:357–362. https://doi.org/10.1016/j.chb.2018.08.027

Ericsson (2017) 10 hot consumer trends 2017. https://www.ericsson.com/en/trends-and-insights/consumerlab/consumer-insights/reports/10-hot-consumer-trends-2017#trend1aieverywhere

Eyssel F, Kuchenbrandt D (2012) Social categorization of social robots: anthropomorphism as a function of robot group membership. Br J Soc Psychol 51(4):724–731. https://doi.org/10.1111/j.2044-8309.2011.02082.x

Eysenck SBG, Eysenck HJ, Barrett P (1985) A revised version of the psychoticism scale. Personal Individ Differ 6(1):21–29. https://doi.org/10.1016/0191-8869(85)90026-1

Ferrari F, Paladino MP, Jetten J (2016) Blurring human–machine distinctions: anthropomorphic appearance in social robots as a threat to human distinctiveness. Int J Soc Robot 8(2):287–302. https://doi.org/10.1007/s12369-016-0338-y

Floridi L (2014) The fourth revolution: how the infosphere is reshaping human reality. OUP, Oxford

Fong LHN, Lam LW, Law R (2017) How locus of control shapes intention to reuse mobile apps for making hotel reservations: evidence from Chinese consumers. Tour Manag 61:331–342. https://doi.org/10.1016/j.tourman.2017.03.002

Gambino A, Fox J, Ratan RA (2020) Building a stronger CASA: extending the computers are social actors paradigm. Hum Mach Commun 1(1):5. https://doi.org/10.30658/hmc.1.5

Grace K, Salvatier J, Dafoe A, Zhang B, Evans O (2018) When will AI exceed human performance? Evidence from AI experts. J Artif Intell Res 62:729–754. https://doi.org/10.1613/jair.1.11222

Gunkel DJ (2018) The relational turn: third wave HCI and phenomenology. In: Filimowicz M, Tzankova V (eds) New directions in third wave human-computer interaction: Volume 1—technologies human–computer interaction series. Springer, Cham. https://doi.org/10.1007/978-3-319-73356-2_2

Guzman AL (2017) Making AI safe for humans: a conversation with Siri. In: Gehl RW, Bakardjieva M (eds) Socialbots and their friends: digital media and the automation of sociality. Routledge, New York, pp 69–85

Guzman AL (2018) What is human-machine communication, anyway? In: Guzman AL (ed) Human-machine communication: rethinking communication, technology, and ourselves. Peter Lang, New York, pp 1–28

Guzman AL, Lewis SC (2020) Artificial intelligence and communication: a human-machine communication research agenda. New Media Soc 22(1):70–86. https://doi.org/10.1177/1461444819858691

Guzman AL (2020) Ontological boundaries between humans and computers and the implications for human-machine communication. Hum-Mach Commun 1(1):37–54. https://doi.org/10.30658/hmc.1.3

Hancock JT, Naaman M, Levy K (2020) AI-mediated communication: definition, research agenda, and ethical considerations. J Comput-Mediat Commun 25(1):89–100. https://doi.org/10.1093/jcmc/zmz022

Hinds P, Roberts T, Jones H (2004) Whose job is it anyway? A study of human–robot interaction in a collaborative task. Hum-Comput Interact 19(1–2):151–181. https://doi.org/10.1080/07370024.2004.9667343

Höflich JR (2013) Relationships to social robots: towards a triadic analysis of media-oriented behavior. Intervalla Platf Intell Exch 1(1):35–48

Hsia JW, Chang CC, Tseng AH (2014) Effects of individuals’ locus of control and computer self-efficacy on their e-learning acceptance in high-tech companies. Behav Inform Technol 33(1):51–64. https://doi.org/10.1080/0144929X.2012.702284

Hsia JW (2016) The effects of locus of control on university students’ mobile learning adoption. J Comput High Educ 28(1):1–17. https://doi.org/10.1007/s12528-015-9103-8

Hsieh HF, Shannon SE (2005) Three approaches to qualitative content analysis. Qual Health Res 15(9):1277–1288. https://doi.org/10.1177/1049732305276687

Joosse M, Lohse M, Pérez JG, Evers V (2013) What you do is who you are: the role of task context in perceived social robot personality. In: 2013 IEEE International Conference on Robotics and Automation, pp 2134–2139. IEEE. https://doi.org/10.1109/ICRA.2013.6630863

Katz J, Halpern D (2014) Attitudes towards robot’s suitability for various jobs as affected robot appearance. Behav Inform Technol 33(9):941–953. https://doi.org/10.1080/0144929X.2013.783115

Kim Y, Mutlu B (2014) How social distance shapes human–robot interaction. Int J Hum Comput Stud 72(12):783–795. https://doi.org/10.1016/j.ijhcs.2014.05.005

Levine T, Hullett CR, Turner MM, Lapinski MK (2006) The desirability of using confirmatory factor analysis on published scales. Commun Res Rep 23(4):309–314. https://doi.org/10.1080/08824090600962698

Lida BL, Chaparro BS (2002) Using the locus of control personality dimension as a predictor of online behavior. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 46(14):1286–1290. https://doi.org/10.1177/154193120204601410

McCroskey JC (1982) An introduction to rhetorical communication, 4th edn. Prentice-Hall, Englewood Cliffs

McCroskey JC (1984) The communication apprehension perspective. In: Daly JA, McCroskey JC (eds) Avoiding communication: shyness, reticence, and communication apprehension. Hampton Press, New York, pp 13–38

Makridakis S (2017) The forthcoming Artificial Intelligence (AI) revolution: its impact on society and firms. Futures 90:46–60. https://doi.org/10.1016/j.futures.2017.03.006

Mays KK, Katz J, Groshek J (2020) Mediated communication and customer service experiences: psychological and demographic predictors of user evaluations in the United States. In: Proceedings of the 53rd Hawaii International Conference on System Sciences. https://doi.org/10.24251/HICSS.2020.337

Nass C, Moon Y (2000) Machines and mindlessness: social responses to computers. J Soc Issues 56(1):81–103. https://doi.org/10.1111/0022-4537.00153

Nomura T, Suzuki T, Kanda T, Kato K (2006) Measurement of anxiety toward robots. In: ROMAN 2006: the 15th IEEE International Symposium on Robot and Human Interactive Communication. Institute of Electrical and Electronics Engineers, Hatfield, United Kingdom, pp 372–377. https://doi.org/10.1109/ROMAN.2006.314462

Oberoi E (2019) Differences between robotics and artificial intelligence. SkyfiLabs.com. https://www.skyfilabs.com/blog/difference-between-robotics-and-artificial-intelligence

Pegasystems (2018) What do consumers really think about AI: a global study [PowerPoint slides]. https://www.pega.com/ai-survey

Purington A, Taft JG, Sannon S, Bazarova NN, Taylor SH (2017) Alexa is my new BFF: social roles, user satisfaction, and personification of the Amazon Echo. In: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems, Association for Computing Machinery, Denver, Colorado, pp 2853–2859. https://doi.org/10.1145/3027063.3053246

Reeves B, Nass CI (1996) The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press

Rifkin J (2011) The third industrial revolution: how lateral power is transforming energy, the economy, and the world. Macmillan

Robert LP (2018) Personality in the human robot interaction literature: a review and brief critique. In: Proceedings of the 24th Americas Conference on Information Systems, 16–18 August 2018

Rockwell S, Singleton L (2002) The effects of computer anxiety and communication apprehension on the adoption and utilization of the internet. Electron J Commun 12(1):437–454

Rotter JB (1966) Generalized expectancies for internal versus external control of reinforcement. Psychol Monogr Gen Appl 80(1):1–28. https://doi.org/10.1037/h0092976

Saldaña J (2021) The coding manual for qualitative researchers, 2nd edn. Sage

Salem M, Lakatos G, Amirabdollahian F, Dautenhahn K (2015) Would you trust a (faulty) robot? Effects of error, task type and personality on human–robot cooperation and trust. In: 2015 10th ACM/IEEE International Conference on Human–Robot Interaction (HRI), pp 1–8. IEEE. https://doi.org/10.1145/2696454.2696497

Sandry E (2018) Aliveness and the off-switch in human–robot relations. In: Guzman A (ed) Human–machine communication: rethinking communication, technology, and ourselves. Peter Lang, New York, pp 51–66

Schwab K (2017) The fourth industrial revolution. Currency

Spence PR, Westerman D, Lin X (2018) A robot will take your job. How does that make you feel? In: Guzman A (ed) Human–machine communication: rethinking communication, technology, and ourselves. Peter Lang, New York, pp 185–200

Sullins JP (2012) Robots, love, and sex: the ethics of building a love machine. IEEE Trans Affect Comput 3(4):398–409. https://doi.org/10.1109/T-AFFC.2012.31

Sundar SS, Nass C (2001) Conceptualizing sources in online news. J Commun 51(1):52–72

Sundar SS (2008) The MAIN model: a heuristic approach to understanding technology effects on credibility. In: Metzger MJ, Flanagin AJ (eds) Digital media, youth, and credibility. The John D. and Catherine T. MacArthur Foundation Series on Digital Media and Learning. The MIT Press, Cambridge, pp 73–100. https://doi.org/10.1162/dmal.9780262562324.073

Sundar SS (2020) Rise of machine agency: a framework for studying the psychology of human–AI interaction (HAII). J Comput-Mediat Commun 25(1):74–88. https://doi.org/10.1093/jcmc/zmz026

Sundar SS, Kim J (2019) Machine heuristic: when we trust computers more than humans with our personal information. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, pp 1–9. https://doi.org/10.1145/3290605.3300768

Terborgh G (1965) The automation hysteria (No. 376). WW Norton & Company Incorporated

Van Dijk JA (2006) Digital divide research, achievements and shortcomings. Poetics 34(4–5):221–235. https://doi.org/10.1016/j.poetic.2006.05.004

Venkatesh V (2000) Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Inf Syst Res 11(4):342–365. https://doi.org/10.1287/isre.11.4.342.11872

Waddell TF (2018) A robot wrote this? How perceived machine authorship affects news credibility. Digit J 6(2):236–255. https://doi.org/10.1080/21670811.2017.1384319

Walsh J, Katz JE, Andersen BL, Groshek J (2018) Personal power and agency when dealing with interactive voice response systems and alternative modalities. Media Commun 6(3):60–68

West D, Allen J (2018) How Artificial Intelligence is transforming the world. Brookings Institution report. https://www.brookings.edu/research/how-artificial-intelligence-is-transforming-the-world/

Wiener N (1948) Cybernetics, or control and communication in the animal and the machine. John Wiley, New York

Yogeeswaran K, Złotowski J, Livingstone M, Bartneck C, Sumioka H, Ishiguro H (2016) The interactive effects of robot anthropomorphism and robot ability on perceived threat and support for robotics research. J Hum Rob Interact 5(2):29–47. https://doi.org/10.5898/JHRI.5.2.Yogeeswaran

Funding

Not applicable.

Ethics declarations

Conflicts of interest

Not applicable.

Ethics approval

The study was reviewed and approved as exempt by the Boston University Charles River Campus IRB.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

About this article

Cite this article

Mays, K.K., Lei, Y., Giovanetti, R. et al. AI as a boss? A national US survey of predispositions governing comfort with expanded AI roles in society. AI & Soc 37, 1587–1600 (2022). https://doi.org/10.1007/s00146-021-01253-6

DOI: https://doi.org/10.1007/s00146-021-01253-6