Abstract

Social robots are gradually entering children’s lives in a period when children learn about social relationships and exercise prosocial behaviors with parents, peers, and teachers. Designed for long-term emotional engagement and to take the roles of friends, teachers, and babysitters, such robots have the potential to influence how children develop empathy. This article presents a review of the literature (2010–2020) in the fields of human–robot interaction (HRI), psychology, neuropsychology, and roboethics, discussing the potential impact of communication with social robots on children’s social and emotional development. The critical analysis of evidence behind these discussions shows that, although robots theoretically have high chances of influencing the development of empathy in children, depending on their design, intensity, and context of use, there is no certainty about the kind of effect they might have. Most of the analyzed studies, which showed the ability of robots to improve empathy levels in children, were not longitudinal, while the studies observing and arguing for the negative effect of robots on children’s empathy were either purely theoretical or dependent on the specific design of the robot and the situation. Therefore, there is a need for studies investigating the effects on children’s social and emotional development of long-term regular and consistent communication with robots of various designs and in different situations.


1 Introduction

Modern research in social robotics has delivered robotic teachers, nannies, companions for children with Autism Spectrum Condition (ASC), and socially interactive toys (Breazeal 2002; Sharkey and Sharkey 2010; Sharkey 2016; Turkle 2017). In the past decade, much research has focused on improving the interaction between people and robots (Mutlu et al. 2016), as well as building stronger relationships between them (Mitsunaga et al. 2006; de Graaf et al. 2015; Kory-Westlund et al. 2018). The latest tendency in social robotics research has been developing social robots for specific purposes, such as educational robots Saya, Robovie and Rubi (Sharkey 2016: 284), care robot Paro (Kang et al. 2020), therapy robot Kaspar (Wood et al. 2019) and assistant robot Jibo (Breazeal 2017). Some smart and connected toys from the Internet of toys fall under the category of “social robots” (Peter et al. 2019). Such technologies are meant for long-term emotional relationships with their users, and they are often designed in a humanlike form to be used in human roles. It looks like we might soon see robots among children’s early social contacts, together with parents, teachers, and peers.

Early childhood (3–8 years old) is the age when children rapidly develop their social and emotional intelligence. Their socialization starts with communication with their parents or caregivers and later continues with teachers and peers. Such projects as robotic nannies, tutors, and socially interactive toys seem to aim at occupying some time children normally would spend socializing with people. One of the most important social skills that children develop in early childhood is empathy, which contributes to prosocial behavior (Spinrad and Eisenberg 2017: 1). The development of empathy is highly influenced by the children’s early social circle. Despite the significant (25–30%) genetic component (Knafo and Uzefovsky 2013), even the lower levels of empathy are thought to be learned by children in specific social conditions, such as consistent and repetitive behavior, resulting in strong associations (Heyes 2018a: 17). The parental (or main caregiver’s) role is, therefore, crucial (Decety et al. 2018: 9).

The latest understanding of empathy that most researchers agree on (Cuff et al. 2016) includes affective and cognitive components. Parental practices are crucial for the development of affective empathy in children (Waller and Hyde 2018), while cognitive empathy starts developing through the process of role-taking from approximately the age of four (Decety et al. 2018: 6). Children form hypotheses and test them against a database of human behavior (Heyes 2018b: 147). This way, they learn which emotional reactions people have to which actions and gradually better understand the mental states of others. Collaborative play with peers is especially important at this stage for the development of cognitive empathy, as children feel the need to understand the emotional behavior of other children and the reasons behind it to achieve their communication goals (Brownell et al. 2002: 28). Therefore, although the specific psychological mechanisms of transmitting prosocial behaviors from one person to another remain unknown (Decety et al. 2018: 9), the quality of children’s social connections in early childhood influences how they develop empathy.

The intention to design robots for long-term emotional engagement with children, as well as making them play the roles of tutors, babysitters, and friends, raises the question about the quality of emotional communication that these robots can offer. It is well-established that humans tend to relate socially and emotionally to information and communication technologies (ICTs), even though they are aware that they are communicating with machines (Reeves and Nass 1996). Children engage in imaginary play with toys, which seems to contribute positively to their development. How do robots differ from other ICTs and toys, and why is there a question of their potential influence on children’s social and emotional development?

First of all, social robots, unlike other technologies, are designed to have agency in communication. Turkle (2017) writes in her book Alone Together that children partially lose control over play with socially interactive toys because such toys act independently from the children’s imagination. Second, designers build social robots to communicate naturally with users. The robot’s body allows for expressing non-verbal behavior: establishing and maintaining eye contact with the help of the face recognition technique (Hashimoto et al. 2002), expressing understanding, encouragement and curiosity through paralinguistic utterances (Fujie et al. 2004), and hiding their imperfections, such as the need to repeat the sentence when speech recognition fails, through facial expressions (confusion), head nods, and bodily motion (Breazeal et al. 2016: 1947). Third, robots are meant to evolve with time and adjust to the needs of a particular user, which requires memory to learn from previous interactions. Long-term interactions with robots, however, are still challenging for robot designers (Leite et al. 2013; Kory-Westlund et al. 2018). Finally, robots are increasingly designed for empathic interactions with children. They are built to recognize people and acknowledge their presence (Turkle 2017), simulate a Theory of Mind (Breazeal et al. 2016: 1941), and recognize simple emotions and mimic them (Tapus and Mataric 2007). Projects from developmental robotics are working on models of gradual development of empathy in robots, similar to the development of children, where robots are trained to recognize emotions and differentiate between themselves and others (Lim and Okuno 2015; Asada 2015).

Despite the fascinating achievements in the field of social robotics, currently, social robots cannot communicate on the human level. Thus, there is a need to address the question of the potential influence of using social robots with pre-school children on children’s social and emotional development. Unlike adults, children lack knowledge about the norms of social interactions because they have yet to learn them by socializing among other humans. Therefore, the present article aims to answer the question: Can communication with social robots in early childhood influence how children develop empathy?

In this article, I present a review of literature from the past decade (2010–2020), discussing the potential effects of communication with social robots on children’s social and emotional development. I am interested in bringing perspectives from different fields on this subject. While the fields of human–robot interaction (HRI) and child–robot interaction (CRI) are occupied with certain types of problems, psychologists, neuropsychologists, and ethicists pay attention to other kinds of important issues, which are often neglected by robot designers.

The article is organized as follows. First, I summarize the results of the previous relevant research and identify a knowledge gap. Second, I describe the protocol of this literature review. Third, I briefly outline the main results of the review and summarize them in a table. In the discussion section, I critically evaluate the arguments and evidence they are based on in the reviewed publications, synthesize the best current knowledge on the issue, and suggest directions for further research. I conclude with a summary of the main findings and topics discussed in the article.

2 Previous research

Critical studies of robotic communication produced by ethicists and psychologists have recently become abundant. Such fields as robophilosophy have emerged, aiming to integrate the humanities and human sciences (psychology, cognitive science, linguistics, etc.) into social robotics and study the ethical aspects of social robots (Coeckelbergh 2012; Ess 2016). Although there is very little direct discussion about the potential influence of social robots on children’s development of empathy, researchers involved in relevant projects (Borenstein and Arkin 2017; Turkle 2017) have raised this question. Turkle (2017) expressed concerns about the parasocial nature of relationships with machines and the potential difficulty for children to develop self–other differentiation (the core component of empathy) because robots are more of an extension of the self of the user than a separate social entity (Turkle 2017: 55–56, 117). Borenstein and Arkin (2017) expressed uncertainty about the ethical aspects of robots “nudging” children toward prosocial behavior.

Meanwhile, several researchers are working on developing and testing educational and therapeutic programs involving robots, which aim to help children develop social skills. Zuckerman and Hoffman (2015) have developed a prototype of an ambient robot, which reacts to the users’ emotional behavior, thus reminding people to be kinder toward each other. Ihamäki and Heljakka (2020) studied the potential influence of communication with a robotic toy dog on the development of social, emotional, and empathy skills in children. Hurst et al. (2020) developed the empathic companion robot Moxie for providing playful social and emotional learning. In their preliminary experiment, a group of children with ASC showed improvement “both in subjectively assessed skill categories (i.e., emotion regulation, self-esteem, conversation skills, and friendship skills) as well as quantitatively assessed behaviors such as increased engagement, eye contact, contribution to the interaction, social and relational language” (p. 12). Marino et al. (2020), as a result of an intervention with the human-assisted social robot NAO and children with ASC experiencing deficits in cognitive empathy, showed that these children scored significantly higher on the scales of the Test of Emotional Comprehension and Emotional Lexicon Test than children who were treated only by the therapist.

There is, however, a lack of research discussing the effects of interactions with social robots on the development of empathy in typically developing children. The purpose of this study was to systematize the best current evidence of the potential outcomes of the socialization of children with social robots and to evaluate the strength of the existing arguments in the literature.

3 Methods

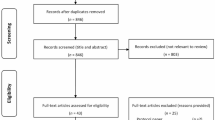

This article presents a systematic search and review (see the review protocol Table 2 in “Appendix”), which combines a comprehensive search process with a critical review (Grant and Booth 2009: 102). This kind of review allows for including several types of publications with different types of methods, and the aim is to systematize the best current evidence to identify the knowledge gaps.

Because it is an interdisciplinary inquiry, I consulted Web of Science, a comprehensive database of academic literature, to aggregate relevant studies. I used the keywords extracted from the research question: “children,” “robots,” and “empathy.” I searched for publications in the past 10 years (2010–2020). I included the following types of publications: journal articles and conference proceedings. The proceedings were included because studies from the fields of HRI and CRI are often published in this format (Shamir 2010). The search string (child* AND robot* AND empath*) gave 62 results, of which 50 were excluded and 12 included (see the list of reviewed studies Table 3 in “Appendix”). First, I filtered the publications by relevance in abstracts and conclusions. If the relevance was unclear, I read the discussion section to make sure that the relevant arguments were present in the study. My main research question primarily concerned typically developing children. However, because robots developed for social skills therapy for children with ASC have shown relatively good results, and the improvement of social skills is directly relevant to my research question, I also included a selection of studies with children with ASC in the review.
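The logic of the search string can be illustrated with a minimal Python sketch. It is only an approximation for illustration: it assumes that a trailing asterisk stands for any word ending and that AND requires every term to be present, and it does not reproduce how Web of Science itself indexes or matches records. The function names and the two example titles are hypothetical and are not taken from the search results.

```python
import re

def truncation_to_regex(term: str) -> re.Pattern:
    """Turn a truncation term such as 'child*' into a whole-word regex."""
    stem = re.escape(term.rstrip("*"))
    # A trailing '*' is assumed to allow any continuation of the word.
    suffix = r"\w*" if term.endswith("*") else ""
    return re.compile(rf"\b{stem}{suffix}\b", re.IGNORECASE)

# The three terms of the search string reported in the text.
TERMS = ["child*", "robot*", "empath*"]
PATTERNS = [truncation_to_regex(t) for t in TERMS]

def matches_query(text: str) -> bool:
    """Boolean AND: the text must contain a match for every term."""
    return all(p.search(text) is not None for p in PATTERNS)

# Hypothetical titles, used only to show the matching behavior.
examples = [
    "Empathic robots for long-term interaction with children",
    "Robotic arms in industrial assembly",
]
hits = [matches_query(e) for e in examples]
print(hits)
```

The first title matches all three stems ("Empathic", "robots", "children"), while the second matches only "robot*", so it would not be returned by an AND query.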

Materials were excluded for the following reasons:

1. The robots the researchers used were not social (not meant for communication);
2. There was no discussion about the development of empathy in children or arguments indirectly contributing to this discussion;
3. The questions investigated in the study were too ASC-specific, which could not be relevant for typically developing children;
4. The studies investigated the effect of empathy in robots on children’s perception, enjoyment, information retention, etc., and not specifically developing empathy or learning social skills in children;
5. The studies suggested new models of empathy for social robots meant for children without a test or an evaluation with children.

The biggest challenge was to filter the studies testing different aspects of empathic robots in social interactions with children because they often indirectly indicated how such robots could influence the development of empathy but did not discuss it directly. When I suspected such studies, I had to read the full text in order to filter them by relevance.

The materials included in the review are predominantly experiments and observations of social interactions between children and robots, both individually and in groups, except for one literature review.

I analyzed the included studies by (1) extracting the argument with the evidence it is based on from the full text, (2) sorting the arguments into “no” and “yes” categories regarding the possibility of social robots to influence the development of empathy in children, and (3) sorting “yes” arguments into “positive” and “negative influence” categories. After that, I (4) evaluated the strength of the evidence of all the arguments and (5) systematized the knowledge on the topic. Finally, I (6) pointed out the uncertainty of knowledge and the gaps that need to be filled with further research.

4 Results

Table 1 below shows a short summary of the main results of the literature review. All 12 studies either directly or indirectly indicated the potential of social robots to influence the development of empathy in children. One of them avoided pointing out any direction this influence might have. Seven studies were optimistic about this influence, while two were rather pessimistic. The two remaining publications, whose authors worked on the robots supposed to help children develop empathy, underlined a number of problems that needed to be addressed, as these can reverse the positive influence of robots on children’s emotional development. Based on the reviewed materials, I also identified three main categories of factors, which are decisive when it comes to the effect of robots on the development of empathy in children: the robot’s design, the context of use, and the duration of the interaction.
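As a quick consistency check, the counts reported in this paragraph and in the Methods section can be tallied in a few lines of Python. This is a sketch that uses only the numbers stated in the text. The category labels are shorthand introduced here for illustration, and no individual study is assigned to a category.

```python
from collections import Counter

# Screening numbers as reported in the Methods section.
search_results = 62
excluded = 50
included = search_results - excluded

# Stance counts as reported in the paragraph above (labels are shorthand).
stance_counts = Counter({
    "no direction stated": 1,
    "optimistic": 7,
    "pessimistic": 2,
    "optimistic with caveats": 2,
})

total = sum(stance_counts.values())

# Share of each stance among the reviewed studies, in percent.
shares = {label: round(100 * n / total, 1) for label, n in stance_counts.items()}

print(included, total)
print(shares)
```

The four stance groups add up to the 12 included studies, so the breakdown is exhaustive and the groups do not overlap.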

Among the reviewed publications, not many studies directly discussed the potential effect of communication with robots in early childhood on the development of empathy in children. All studies have different designs in terms of the type of robot, the robot’s programming, and the context. My goal was to collect the arguments for and against the potential influence of social robots on children’s development of empathy, but none of the studies in this selection questioned whether this influence was possible at all or tested it.

Severson and Carlson (2010) discussed from the simulation-theory perspective (imagining the internal states of other people through mirror neurons) whether children genuinely attribute mental states to robots, or whether this is part of their imaginary play. As an outcome of their review of empirical studies of interactions between children and robots, they concluded that robots represent a new ontological category: children, although accepting that robots are not alive in a biological sense, continue until quite an advanced age (11 years old) to attribute “perception, intelligence, feelings, volition, and moral standing” (p. 1101) to robots. Since robots are designed to have a certain agency and personality, the fact that children imagine the internal states of robots not as a pretense may have an effect on the development of their cognitive empathy. The authors, however, did not offer an opinion on what kind of effect communication with robots might cause.

4.1 Negative influence

The studies that looked at interactions between children and robots in real-life environments tended to notice problematic aspects of these interactions. Nomura et al. (2016), observing the behavior of 23 children (5–9 y.o.) toward a robot in a shopping mall in Japan for two weeks, learned from interviews with children who abused the robot that half of them, despite believing that the robot could “feel” pain and stress, continued to abuse it. Black (2019) argues that even if humans relate emotionally to technologies and create parasocial relationships with them, empathy similar to the kind people experience toward human beings is possible toward robots only if there is a “flawless illusion of faciality” (on the other side of the “uncanny valley”) (p. 11). However, in the unlikely case of robots having perfectly humanlike faces in the future, the absence of affect behind this face might lead to the detachment of personhood. Black asks: “How differently would this process play out if a child grew up interacting with domestic helpers or teachers whose faces she knew to be disconnected from any claim to genuine subjectivity?” (p. 21).

4.2 Positive influence

The publications I sorted into the category of positive influence are mostly those that either build robots meant to promote the development of empathy in children or suggest educational scenarios in which children can learn social and emotional skills with the assistance of robots. A separate category consists of works that test social robots for social skills therapy with children with ASC.

Björling et al. (2020) indirectly indicated the potential positive influence of social robots on children’s emotion regulation (to handle stress), if the robot demonstrates authenticity in communication, human flaws, and active listening. Rafique et al. (2020) argue that it is possible for robots to influence the development of empathy in children positively through learning to recognize robot emotions and special activities promoting self-awareness, social awareness, and perspective-taking. Leite et al. (2017) suggest building emotional intelligence skills through storytelling activities with robots, using the RULER framework for promoting emotional literacy (including the skills of recognizing, understanding, labeling, expressing, and regulating emotions). Antle et al. (2019) suggested, but have not yet tested, an educational model for practicing emotion regulation, empathy expression, and compassionate actions with peers, pets, and a robotic dog. Fosch-Villaronga et al. (2016) critically analyzed two models of cognitive rehabilitation therapies. Their conclusions were that personalization and emotional adaptation (EA) in a robot contribute to stronger emotional bonds, which are useful for cognitive therapy. However, the emotional dependence evoked by the deception of the robot showing “feelings” can be harmful and unhealthy for the developing child.

Hood et al. (2015) explored the “learning by teaching” approach in groups of children with a robot to stimulate perspective-taking. Javed and Park (2019), focusing on improving emotion regulation (ER) in children with ASC, suggested the design of an animated character with a set of 14 simple emotional expressions on an iPod-based robotic platform. The experiment showed success in ER for 8 out of 11 typically developing children and three out of six children with ASC. Mazzei et al. (2010) and Costa et al. (2014) tested humanoid robots in emotion recognition therapy with children with ASC.

5 Discussion

The goal of the literature review was to answer the question of whether social robots can influence the development of empathy in children. Before we critically evaluate their potential influence on children’s social and emotional development, we first need to know whether it is possible for robots to have such a lasting influence on children.

In the reviewed publications from the last decade, I found no clear answer to this question. The researchers express either their concerns about the potential negative consequences of communication with robots for children or hope that we can design robots that can help children develop their social skills. None of the studies questioned whether this influence was at all possible or tested it reliably. This could be because it seems too early to do such studies, as there are currently very few robots available on the market. This means there is no immediate danger of children growing up with robotic babysitters or teachers. Moreover, the technology in robots is very limited and not robust enough to be used in real-life situations for long periods of time. Additionally, the majority of reviewed studies address in their limitations the difficulty of eliminating the novelty effect of interactions with robots, since very few children had previously had an experience of communicating with a social robot. Longitudinal studies, which are more suitable for measuring the lasting effect of communication with a social robot on children’s development, are generally quite rare.

Leite et al. (2013), in their survey of 24 long-term studies of interactions with social robots, discuss the challenges of conducting longitudinal studies. They mention the cost (including time and effort) of collecting and analyzing large amounts of data, the need to adapt the methods for the analysis of long-term interactions, and ethical considerations, such as attachment to technological devices with lifelike qualities and the yet unknown potential influences on the well-being of the subjects involved in the study (pp. 305–306). It may also be challenging to attract participants for a longer study.

5.1 The promise of affective and cognitive empathy with robots

However, among their hopes and concerns, the researchers make interesting arguments for the potential of social robots to influence the way children may develop empathy in the future. We know that children describe robots as humanlike and attribute mental states to them. However, Severson and Carlson (2010) became curious about whether such attribution was genuine or a part of imaginary play. Having analyzed a number of empirical studies from the simulation theory perspective, they came to the conclusion that even older children, who previously had experience with robots, think that robots possess brains and intelligence. Children cannot conceive of the emotional states of robots independently from the context of their interaction because robots appear to have their own personalities and inner lives. That is why they seem to trigger real cognitive empathy in children, and interactions with robots may help develop this part of human empathy.

Affective empathy, however, appears to be much harder to develop with robots, largely due to the deceptive character of emotions expressed by robots. Black (2019) makes a case against developing empathy with robots, arguing that no matter what kind of face we equip the robots with, people cannot experience the kind of affect toward robots that we direct at humans. The variety of emotional expressions that human faces can produce, and the human ability to read even the smallest nuances in these expressions, make any robot face so inferior to a human face that we can never treat robots with empathy for a long time. People might react empathically toward robots unconsciously, but they cannot be tricked by this illusion for long.

In the theoretical case of equipping robots with a face so close to human that we do not notice any difference, the absence of affect might gradually train people to dissociate facial expressions from experienced feelings, which can potentially lead to psychopathy. Mazzei et al. (2010) discussed the development and use of a humanoid robot, FACE, with artificial skin from Hanson Robotics, for helping children with ASC navigate the emotional complexity of human communication. They acknowledge the big challenge of avoiding what they call the “Joker Effect”: “the emphatic misalignment typical of sociopaths who are not able to regulate their behaviour to the social context” (p. 795). When the robot demonstrates emotional expressions unsuitable for the context, children may become uneasy and scared. Nomura et al. (2016), observing young children abusing a robot at a Japanese mall, concluded from the interviews with these children that robots currently fail to trigger affective empathy even in those children who think that robots can feel the abuse.

Hortensius et al. (2018) came to the same conclusion in their review of studies on the human perception of emotions in artificial agents (p. 859). Therefore, even if robots appear intelligent and capable of having mental states, it seems that their affective expressions, almost no matter the design, are not convincing enough for children to trigger empathy. Whether social robots are capable of invoking empathy in children at all (and what kind of empathy) needs more scholarly attention. The study done by Cross et al. (2019) with adults who socialized with the Cozmo robot for a week showed no significant effect on the levels of empathy toward robots. However, the researchers admit the possibility that a long-term relationship with a robot could change the empathic attitude toward robots, especially in children, who have not been as exposed to socialization with other humans.

5.2 Promoting empathy

There is much optimism among the researchers working on the design of robots and their testing for educational and therapeutic purposes. However, they rarely suggest all-purpose robots embedded with empathic behaviors, but rather focus on promoting and developing specific social and emotional skills related to empathy mechanisms, while limiting their sessions with robots to between 15 min and an hour, sometimes repeating the sessions for a couple of weeks. Some of these projects are meant for individual use with children (mainly for children with ASC), while robots meant for education and use in schools are often tailored for group interactions in public places and tested in small groups of children. Such projects show how fully controlled design, context, and activities can indeed help develop children’s social and emotional skills with robots.

In the educational context, arguably the best results can be achieved when robots are teleoperated and reply with pre-written scripts, and when children are involved in programming the software. As Björling et al. (2020) demonstrated, teenagers wanted the robot to listen to them rather than give them advice, and simply to behave in as human a manner as possible, including human flaws in communication. Fosch-Villaronga et al. (2016) describe a dilemma of using robots for cognitive therapy: the higher the level of personalization provided by a robot, the better the emotional connection with the child, which is positive for rehabilitation purposes (p. 197), but it creates an emotional dependency on the robot, especially considering the one-sided character of such relationships (p. 200).

5.2.1 Emotion recognition

The educational design suggested by Rafique et al. (2020) was also limited to specific game scenarios. Their scenarios did not really engage children in communication with Cozmo, as the robot was used as a playful tool in educational tasks promoting self-awareness, social awareness, and perspective-taking between humans. However, the emotion recognition exercise with a robot deserves some attention. Cozmo is known for its relatively rich emotional palette for a commercially available robot. The authors argued that children improved their emotion recognition skills by learning to recognize the emotional expressions of Cozmo. At the same time, the authors described only two emotional states that Cozmo could have in the game scenarios: success (“joyful expressions and exciting sounds”) and failure (“a sad expression and worrying sounds”) (p. 149624). The test they used to measure emotion recognition in children, however, consists of 20 different emotional expressions on human faces, which include variations not only in the eyes (which Cozmo uses to express emotions), but also the mouth, eyebrows, and head tilt. Cozmo could not possibly help children learn to recognize such a variety of human emotional expressions.

Moreover, Barrett et al. (2019) argue that human emotional expressions are not directly and universally connected to relevant internal states: “how people communicate anger, disgust, fear, happiness, sadness, and surprise varies substantially across cultures, situations, and even across people within a single situation” (p. 1). What children can learn from the emotional expression of robots is to recognize the emotional expressions of robots, not humans, even though the models of emotions are inspired by stereotypical human emotions. Costa et al. (2014) used a humanoid robot ZECA to teach children to recognize five “basic” emotions in imitation and storytelling activities. The experiment tested whether children correctly labeled emotional expressions and could reproduce them, as well as whether they could imagine from the described empathic situation what the robots must be feeling (perspective-taking). This method can be used as a test of children’s emotion recognition, but there was not enough discussion about its efficiency as a learning practice.

5.2.2 Perspective/role-taking

Leite et al. (2017) aimed to improve children’s emotional literacy by having them take the robot’s perspective in a storytelling exercise. The robot in the exercise described an empathy-triggering situation and asked children to choose one out of three reactions. When children recalled the narrative later in an interview, they attributed mental and emotional states to story characters and explained their emotional behavior. This activity, which helps promote self–other differentiation, labeling, and discussing the emotional states of others, is a part of the RULER educational framework for promoting emotional literacy. Their study, however, did not measure children’s emotional understanding before the session. All the sessions were of different difficulty levels, which makes it impossible to trace the children’s progress. The idea of developing children’s empathy through taking the perspective of a robotic character is clear, but the design of the study made it impossible to judge whether social interaction with a robot contributed to higher levels of emotional intelligence in children.

“Learning by teaching” is another approach researchers have used in the educational context to practice perspective-taking. In the experiment by Hood et al. (2015), children gave feedback to the NAO robot about its handwriting. Making children play the role of a teacher proves quite useful, as children feel responsibility and try to help the robot, thus trying to imagine its mental states. This arrangement also eliminates the problem of robots being in a position of authority. However, the problem of deception remains: children project mental states they attribute to humans onto the robot, but the robot cannot “forget” how to write its name correctly unless specifically programmed to do so (p. 89); the errors the robot makes may simply result from bugs in its programming. Thus, taking on the perspective of a robot may be the wrong way to teach children empathy because the robots, at least those in the reviewed studies, do not have any perspective: they lack affect, intentions, preferences, beliefs, personality, memory, and lived experience.

5.2.3 Emotion regulation

To teach children emotion regulation, Antle et al. (2019) used an idea similar to that of Zuckerman and Hoffman (2015): a non-verbal robot that gently indicates its discomfort when people around it express higher levels of what it considers negative emotions. In projects of this kind, it is crucial that the robot recognizes the situation correctly. The cultural and individual variations in emotional expressions suggest there might not be a general rule for when the robot should get involved. More discussion and testing are needed to assess the potential outcomes of such robotic mediation in human relationships and to evaluate the usefulness of this approach to promoting empathic behavior.

Javed and Park (2019), in the more restricted context of a game, used an iPod-based robotic platform with a virtual character that, through an interaction model, tried to guide children toward a chosen emotional state. Effectiveness was assessed based on the variation between the children’s and the robot’s emotional states by the end. The success rate, however, was close to chance (50%) for both typically developing children and children with ASC. The game suggested by the researchers, in which children needed to choose their current emotional state from 14 options, nevertheless helps children reflect on their emotional states: by labeling their emotional expressions, they create connections between feelings and emotions and increase their self-awareness for better emotional control.

The studies I have reviewed show slight improvements in specific social skills after a few sessions with robots. However, all these robots are designed for long-term use. Therefore, there is a need for studies that measure the changes in children’s social and emotional behaviors with robots that (1) children are familiar with, (2) children interact with consistently over the course of several weeks or, if the technology allows, months, and (3) vary in design.

5.3 The effect of communication with social robots in groups of children

As Leite et al. (2017) point out, in a group context, it is impossible to personalize a robot to a particular user. From the point of view of empathy development, this can be beneficial, since robots need to simulate having a stable personality with preferences, goals, beliefs, and intentions. A robot that constantly adapts to the needs of the user cannot be perceived as a separate “self”; neither can it disappoint (Turkle 2017). Researchers developing robots for social interaction with children often face the question of whether children will feel less need for human contact as robots (like other ICT devices) become more interactive and engaging. Björling et al. (2020) argued that the interaction of a group of teens with an empathic robot encouraged social referencing among them, thus promoting human–human interaction. In the experiment by Hood et al. (2015), where children taught a NAO robot to write, children soon started teaching each other.

There can also be negative consequences of interaction with robots in groups of children. Nomura et al. (2016) report that four children said in their interviews that they abused the robot because they saw others doing the same. Such observations mostly come as unintended consequences of experiments with different goals. However, if social robots are going to be used in real-life situations, they are more likely to interact with groups of children than with individual children. The potential effect of communication with robots on children’s social learning and empathy development in a group deserves more scholarly attention.

5.4 Limitations

The scope of the review is rather small due to the relative unpopularity of the topic, which results in the current lack of critical studies investigating the effects of interactions with social robots on children’s development. Studies in the field of HRI rarely approach the question of building empathy and emotional behavior into robots from a critical perspective. Although researchers evaluate these interactions, they often have different foci. The therapeutic interventions for children with ASC were harder to evaluate within the scope of this article because they are created for the specific needs of non-typically developing children. I therefore assumed that these interventions were effective and looked for specific methods of improving the levels of cognitive empathy in children.

5.5 Suggestions for further research

The review inspired the following research questions: can social robots really trigger empathy in children, and what kind of empathy? How do we design emotional expressions in robots, considering the variance across populations, cultures, and situations, and within one person? How do we design social robots that have a “perspective”, with personality, intentions, beliefs, goals, etc.? How should robots be designed to promote the development of empathy without “nudging”? How should robots be designed for group interactions? Should robots be designed for empathic communication at all?

6 Conclusion

Since the field of robotics for children has only recently started to attract the attention of scholars in other disciplines, the review includes disproportionately more studies from HRI and CRI. HRI researchers tend to be more positive about the potential outcomes, since the design of robots is flexible, and many of the problems with robots we face today can in theory be solved in the future. There is currently a lack of longitudinal studies on typically developing children and their interaction with robots, largely because there are no robots designed for consistent and regular interaction lasting longer than several days. Most current studies find it hard to overcome the novelty effect and often list it as a limitation. This matters because a stable relationship is one of the requirements for the development of empathy, and stable relationships are not yet possible with social robots. Additionally, the robot’s personality needs to develop through the memory of past interactions and learning new information in order for the development of self–other differentiation to be possible.

The review showed that robots have a good chance of influencing the development of empathy in children, but mostly theoretically. Robots are unlikely to influence the development of affective empathy in children because the caring practices of parents and primary caregivers play a crucial role in it, in addition to the genetic component. Moreover, robots do not have affect, the capacity to experience feelings, which is needed for the development of affective empathy in children. However, robots in theory are capable of influencing the development of cognitive empathy in children: the ability to recognize emotional states and understand their external and internal reasons, as well as regulate one’s own emotions. The kind of influence robots have on empathy development may depend on the robot’s design and the situation of interaction. Studies that focused on investigating child–robot interaction in real life with commercial robots tended to negatively evaluate the potential effect that communication with robots might have on empathy development in children. At the same time, a number of studies suggest specific designs and scenarios where robots can help children develop their emotional skills, such as in the spheres of education and social skills therapy (with children with ASC). The findings suggest that social robots as a technology, by their design, can have a certain influence on the development of empathy in children, but that it is also possible to design the robot and situation to direct the development of empathy in a positive way. There is, however, a danger of manipulation or “nudging” when the robot is specifically designed to promote empathy and prosocial behaviors in children (Borenstein and Arkin 2017).

We need to understand how putting robots in the roles of early social contacts for children will impact their social and emotional development in the long run, because these robots are being developed not to be played with for a couple of days, but as substitutes for people (at least where they are lacking). The importance of empathy for children’s development and success later in life, and the necessity of social human contact to develop the elements of which empathy consists, are good reasons to investigate the potential of robots to provide satisfactory empathic communication if they are to be used with children at all.

Availability of data and material (data transparency)

The materials for the literature review were all acquired from public sources (published in academic books, journals, and conference proceedings).

References

Antle AN, Sadka O, Radu I, Gong B, Cheung V, Baishya U (2019) EmotoTent: Reducing school violence through embodied empathy games. In: Proceedings of the 18th ACM International Conference on Interaction Design and Children, June 12, 2019:755–760. https://doi.org/10.1145/3311927.3326596

Asada M (2015) Towards artificial empathy. How can artificial empathy follow the developmental pathway of natural empathy? Int J Soc Robot 7:19–33. https://doi.org/10.1007/s12369-014-0253-z

Barrett LF, Adolphs R, Marsella S, Martinez AM, Pollak SD (2019) Emotional expressions reconsidered: challenges to inferring emotion from human facial movements. Psychol Sci Public Interest 20(1):1–68. https://doi.org/10.1177/1529100619832930

Björling EA, Cakmak M, Thomas K, Rose EJ (2020) Exploring teens as robot operators, users and witnesses in the wild. Front Robot AI 7(5):1–15. https://doi.org/10.3389/frobt.2020.00005

Black D (2019) Machines with faces: robot bodies and the problem of cruelty. Body Soc 25(2):3–27. https://doi.org/10.1177/1357034X19839122

Borenstein J, Arkin RC (2017) Nudging for good: robots and the ethical appropriateness of nurturing empathy and charitable behavior. AI Soc 32(4):499–507. https://doi.org/10.1007/s00146-016-0684-1

Breazeal C (2002) Designing sociable robots. MIT Press

Breazeal C (2017) Social robots: From research to commercialization. In: Proceedings of the 2017 ACM/IEEE International Conference on Human–Robot Interaction (HRI '17). ACM, New York:1–1. https://doi.org/10.1145/2909824.3020258

Breazeal C, Dautenhahn K, Kanda T (2016) Social robotics. In: Siciliano B, Khatib O (eds) Springer handbook of robotics. Springer handbooks, Springer, Cham, pp 1935–1971

Brownell CA, Zerwas S, Balaraman G (2002) Peers, cooperative play, and the development of empathy in children. Behav Brain Sci 25(1):28–29. https://doi.org/10.1017/s0140525x02300013

Coeckelbergh M (2012) “How I learned to love the robot”: capabilities, information technologies, and elderly care. In: Oosterlaken I, van den Hoven J (eds) The capability approach, technology and design. Philosophy of Engineering and Technology 5. Springer, Dordrecht, pp 77–86

Costa S, Soares F, Pereira AP, Santos C, Hiolle A (2014) A pilot study using imitation and storytelling scenarios as activities for labelling emotions by children with autism using a humanoid robot. In: 4th International Conference on Development and Learning and on Epigenetic Robotics, Genoa, Italy, October 13–16, 2014:299–304. https://doi.org/10.1109/DEVLRN.2014.6982997

Cross ES, Riddoch KA, Pratts J, Titone S, Chaudhury B, Hortensius R (2019) A neurocognitive investigation of the impact of socializing with a robot on empathy for pain. Philos Trans R Soc B 374:1–13. https://doi.org/10.1098/rstb.2018.0034

Cuff BMP, Brown SJ, Taylor L, Howat DJ (2016) Empathy: a review of the concept. Emot Rev 8(2):144–153. https://doi.org/10.1177/1754073914558466

de Graaf MMA, Ben Allouch S, Klamer T (2015) Sharing a life with Harvey: exploring the acceptance of and relationship-building with a social robot. Comput Hum Behav 43:1–14. https://doi.org/10.1016/j.chb.2014.10.030

Decety J, Meidenbauer KL, Cowell JM (2018) The development of cognitive empathy and concern in preschool children: a behavioral neuroscience investigation. Dev Sci 21(3):1–12. https://doi.org/10.1111/desc.12570

Ess C (2016) What’s love got to do with it? Robots, sexuality, and the arts of being human. In: Nørskov M (ed) Social robots: Boundaries, potential, challenges. Routledge. https://doi.org/10.4324/9781315563084

Fosch-Villaronga E, Barco A, Özcan B, Shukla J (2016) An interdisciplinary approach to improving cognitive human–robot interaction: A novel emotion-based model. In: Seibt J, Nørskov M, Andersen SS (eds) What social robots can and should do. Frontiers in artificial intelligence and applications, 290, IOS Press, pp 195–205. https://doi.org/10.3233/978-1-61499-708-5-195

Fujie S, Ejiri Y, Nakajima K, Matsusaka Y, Kobayashi T (2004) A conversation robot using head gesture recognition as paralinguistic information. In: Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication, Kurashiki, Okayama, Japan, September 20–22, 2004:158–164. https://doi.org/10.1109/roman.2004.1374748

Grant MJ, Booth A (2009) A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J 26(2):91–108. https://doi.org/10.1111/j.1471-1842.2009.00848.x

Hashimoto S, Narita S, Kasahara H et al (2002) Humanoid robots in Waseda University—Hadaly-2 and WABIAN. Auton Robot 12:25–38. https://doi.org/10.1023/A:1013202723953

Heyes C (2018a) Empathy is not in our genes. Neurosci Biobehav Rev 95:499–507. https://doi.org/10.1016/j.neubiorev.2018.11.001

Heyes C (2018b) Cognitive gadgets. The cultural evolution of thinking. The Belknap Press of Harvard University Press, Cambridge

Hood D, Lemaignan S, Dillenbourg P (2015) When children teach a robot to write: An autonomous teachable humanoid which uses simulated handwriting. In: Proceedings of the Tenth Annual ACM/IEEE International Conference on Human–Robot Interaction, March 2, 2015:83–90. https://doi.org/10.1145/2696454.2696479

Hortensius R, Hekele F, Cross ES (2018) The perception of emotion in artificial agents. IEEE Trans Cogn Develop Syst 10(4):852–864. https://doi.org/10.1109/TCDS.2018.2826921

Hurst N, Clabaugh C, Baynes R, Cohn J, Mitroff D, Scherer S (2020) Social and emotional skills training with embodied Moxie. arXiv preprint arXiv:2004.12962

Ihamäki P, Heljakka K (2020) Social and emotional learning with a robot dog: technology, empathy and playful learning in kindergarten. In: 9th annual arts, humanities, social sciences and education conference, January 6–8, 2020, Honolulu, Hawaii

Javed H, Park CH (2019) Interactions with an empathetic agent: regulating emotions and improving engagement in autism. IEEE Robot Autom Mag 26(2):40–48. https://doi.org/10.1109/MRA.2019.2904638

Kang HS, Makimoto K, Konno R, Koh IS (2020) Review of outcome measures in PARO robot intervention studies for dementia care. Geriatr Nurs 41(3):207–214. https://doi.org/10.1016/j.gerinurse.2019.09.003

Knafo A, Uzefovsky F (2013) Variation in empathy: the interplay of genetic and environmental factors. In: Legerstee M, Haley DW, Bornstein MH (eds) The infant mind: origins of the social brain. Guilford Press, New York, pp 97–122

Kory-Westlund JM, Won Park H, Williams R, Breazeal C (2018) Measuring young children’s long-term relationships with social robots. In: Proceedings of the 17th ACM Conference on Interaction Design and Children (IDC ’18). ACM, New York:207–218. https://doi.org/10.1145/3202185.3202732

Leite I, Martinho C, Paiva A (2013) Social robots for long-term interaction: a survey. Int J Soc Robot 5:291–308. https://doi.org/10.1007/s12369-013-0178-y

Leite I, McCoy M, Lohani M, Ullman D, Salomons N, Stokes C, Rivers S, Scassellati B (2017) Narratives with robots: the impact of interaction context and individual differences on story recall and emotional understanding. Front Robot AI 4(29):1–14. https://doi.org/10.3389/frobt.2017.00029

Lim A, Okuno HG (2015) A recipe for empathy. Int J Soc Robot 7(1):35–49. https://doi.org/10.1007/s12369-014-0262-y

Marino F, Chilà P, Sfrazzetto ST, Carrozza C, Crimi I, Failla C, Busà M, Bernava G, Tartarisco G, Vagni D, Ruta L, Pioggia G (2020) Outcomes of a robot-assisted social–emotional understanding intervention for young children with autism spectrum disorders. J Autism Dev Disord 50:1973–1987. https://doi.org/10.1007/s10803-019-03953-x

Mazzei D, Billeci L, Armato A, Lazzeri N, Cisternino A, Pioggia G, Igliozzi R, Muratori F, Ahluwalia A, De Rossi D (2010) The face of autism. In: 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, September 13–15, 2010:791–796. https://doi.org/10.1109/ROMAN.2010.5598683

Mitsunaga N, Miyashita T, Ishiguro H, Kogure K, Hagita N (2006) Robovie-IV: A communication robot interacting with people daily in an office. In: Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems IROS, Beijing:5066–5072. https://doi.org/10.1109/IROS.2006.282594

Mutlu B, Roy N, Šabanović S (2016) Cognitive human–robot interaction. In: Siciliano B, Khatib O (eds) Springer handbook of robotics. Springer handbooks, Springer, Cham, pp 1907–1933

Nomura T, Kanda T, Kidokoro H, Suehiro Y, Yamada S (2016) Why do children abuse robots? Interact Stud 17(3):347–369. https://doi.org/10.1075/is.17.3.02nom

Peter J, Kühne R, Barco A, de Jong C, van Straten CL (2019) Asking today the crucial questions of tomorrow: Social robots and the Internet of toys. In: Mascheroni G, Holloway D (eds) The Internet of toys. Palgrave Macmillan, pp 25–46

Rafique M, Hassan MA, Jaleel A, Khalid H, Bano G (2020) A computation model for learning programming and emotional intelligence. IEEE Access 8:149616–149629. https://doi.org/10.1109/ACCESS.2020.3015533

Reeves B, Nass C (1996) The media equation: how people treat computers, television, and new media like real people and places. Cambridge University Press

Severson RL, Carlson SM (2010) Behaving as or behaving as if? Children’s conceptions of personified robots and the emergence of a new ontological category. Neural Netw 23(8–9):1099–1103. https://doi.org/10.1016/j.neunet.2010.08.014

Shamir L (2010) The effect of conference proceedings on the scholarly communication in computer science and engineering. Scholarly Res Commun 1(2):1–7. https://doi.org/10.22230/src.2010v1n2a25

Sharkey A (2016) Should we welcome robot teachers? Ethics Inf Technol 18(4):283–297. https://doi.org/10.1007/s10676-016-9387-z

Sharkey N, Sharkey A (2010) The crying shame of robot nannies: an ethical appraisal. Interact Stud 11(2):161–190. https://doi.org/10.1075/is.11.2.01sha

Spinrad TL, Eisenberg N (2017) Compassion in children. In: Seppälä EM, Simon-Thomas E, Brown SL, Worline MC, Cameron CD, Doty JR (eds) The Oxford handbook of compassion science. https://doi.org/10.1093/oxfordhb/9780190464684.013.5

Tapus A, Mataric MJ (2007) Emulating empathy in socially assistive robotics. In: AAAI Spring Symposium on Multidisciplinary Collaboration for Socially Assistive Robotics, Stanford, USA, pp 93–96

Turkle S (2017) Alone together, Revised and expanded. Basic Books, NY

Waller R, Hyde LW (2018) Callous–unemotional behaviors in early childhood: the development of empathy and prosociality gone awry. Curr Opin Psychol 20:11–16. https://doi.org/10.1016/j.copsyc.2017.07.037

Wood LJ, Zaraki A, Robins B, Dautenhahn K (2019) Developing Kaspar: a humanoid robot for children with autism. Int J Soc Robot. https://doi.org/10.1007/s12369-019-00563-6

Zuckerman O, Hoffman G (2015) Empathy objects: Robotic devices as conversation companions. In: Proceedings of the Ninth International Conference on Tangible, Embedded, and Embodied Interaction (TEI’15), pp 593–598. https://doi.org/10.1145/2677199.2688805

Funding

Open access funding provided by University of Oslo (incl Oslo University Hospital). This research was conducted as part of a PhD scholarship funded by the Faculty of Humanities at the University of Oslo.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pashevich, E. Can communication with social robots influence how children develop empathy? Best-evidence synthesis. AI & Soc 37, 579–589 (2022). https://doi.org/10.1007/s00146-021-01214-z

DOI: https://doi.org/10.1007/s00146-021-01214-z